#a Neural Network How-To


Text

Prompt Engineering Tips, a Neural Network How-To, and Other Recent Must-Reads

📢 Feeling that autumn energy? Our authors have been busy learning, experimenting, and launching exciting new projects. 🍂📚 Check out these ten standout articles from Towards Data Science – Medium that have been creating a buzz in our community. From program simulation techniques to building neural networks from scratch, these must-reads cover cutting-edge topics you don't want to miss.

💡 Read more here: [Link to the blog post](https://ift.tt/io0tgy4)

And don't worry, we've got the action items covered too! We've identified tasks for each article's author to further explore and dive deeper into these fascinating subjects. See the full list of action items in the blog post.

Keep up with the latest insights and join the conversation. Let's keep learning and growing together!

#TowardsDataScience #MustReads #NeuralNetworks #PromptEngineering #DataScience #AI #MachineLearning

List of Useful Links: AI Scrum Bot - ask about AI scrum and agile; Our Telegram @itinai; Twitter - @itinaicom

#itinai.com#AI#News#Prompt Engineering Tips#a Neural Network How-To#and Other Recent Must-Reads#AI News#AI tools#Innovation#itinai#LLM#Productivity#TDS Editors#Towards Data Science - Medium Prompt Engineering Tips

0 notes

Text

"HAL I won't argue with you anymore. Open the doors."

#2001: a space odyssey#dave bowman#hal 9000#keir dullea#was gonna make a gifset of dave + red#cos the significance of it in this film is interesting#but decided to go with this cos his face in this whole scene is just great#remember how I said I was gonna gif all the dave bowman#i wasnt lying my friends#his crystal blue eyes have captured me mind body and neural networks

77 notes


Text

Dogstomp #3239 - November 21st

Patreon / Discord Server / Itaku / Bluesky

#I drew that neural network diagram long before discovering that that's how computer neural nets are actually depicted#comic diary#comic journal#autobio comics#comics#webcomics#furry#furry art#november 21 2023#comic 3239#neural network

61 notes


Text

YES AI IS TERRIBLE, ESPECIALLY GENERATING ART AND WRITING AND STUFF (please keep spreading that, I would like to be able to do art for a living in the near future)

but can we talk about how COOL NEURAL NETWORKS ARE??

LIKE WE MADE THIS CODE/MACHINE THAT CAN LEARN LIKE HUMANS DO!! Early AI reminds me of a small child's drawing that, yes, is terrible, but it's also SO COOL THAT THEY DID THAT!!

I have an intense love for computer science cause it's so cool to see what machines can really do! It's so unfortunate that people took advantage of these really awesome things

#AI#neural network#computer science#Im writing an essay about how AI is bad and accidentally ignited my love for technology again#technology

10 notes


Text

Another day in this insane lab.

"N. expressed hope that we can reduce brain dynamics to Ising model." "We will then have to explain why does consciousness arise in the brain but not in ferromagnetics." "How do you know it doesn't?" "True, we can switch to animism, I DON'T MIND."

(note: you can't really do it because of the presence of higher order correlations in the brain, please don't try it. ferromagnetic materials don't show any signs of consciousness either)

#a physicist and a neuroscientist walk into a bar yet again#shit academics say#there's also a guy in this lab who suggests we should treat everything as neural networks#all complex systems#I sort of agree with him#that beehive? a brain#a bunch of molecules? a brain also#bees solve some insane route optimisation problems actually#hives are very much brains#most people have no idea just how deranged most academic conversations are

10 notes


Text

youtube

if you ask me, gen-AI exists for one clear and noble purpose: to generate machine-made horrors beyond our comprehension 🖤

#THIS. THIS IS WHAT I WANT.#why did this stop happening. why don't we get technomancers hal-9000'ing neural networks anymore#and is loab still out there........#the funny thing is that i had just watched a vid about beksinski before this one#and it mentioned his digital art era and how he was like ''eh nvm this sucks'' after a while and went back to photog#maybe he's haunting latent space now LOL#videos

5 notes


Text

current object of fascination is researchers who are concerned about the resources and processing power consumed by, for example, llms and are therefore exploring the solution of “run ai on their own collections of actual neurons.” moving gameplay to a different ethical field i suppose

#text tag#if neural networks mimic the brain and you grow a rudimentary brain that runs like a brain then congrats on your new brain that spends all#of its time writing someone else’s emails i guess. genuinely interested to see how this turns out#working again ew. while lying down for my health or whatever

3 notes


Text

someone should've told me how funny plato's symposium is years ago this man takes a half page tangent to do top/bottom discourse on patrochilles for absolutely no reason other than to say fuck you to a playwright

#litchi.txt#the neural networks professor was 40 minutes late as per usual so I decided to start reading#I was in tears for most of it its so funny#'achilles was the beloved for he was the fairest of the greeks and also younger as homer says and to say otherwise is foolish'#'aeschylus is an idiot for saying he was the erastes he was the eromenos CHANGE MY MIND anyways-'#also the whole bit about 'consensual slavery' plato was a service top convince me otherwise#half the time i kept thinking about how this was 99% the book mary renault references in fire from heaven#like. alexander the great read this thing#he probably told his boyfriend that#hephaistion blacked out from pure lust when he heard those words#im normal i promise

2 notes


Text

putting random cdi zelda dialog into bing image creator

#ai#neural network#zelda cdi#i like how only one of them is even vaguely zelda inspired#the rest are stuff like random warrior of sunlight god of peace kratos and broknight#oh and relaxed doge

6 notes


Text

3 2 1 let's go

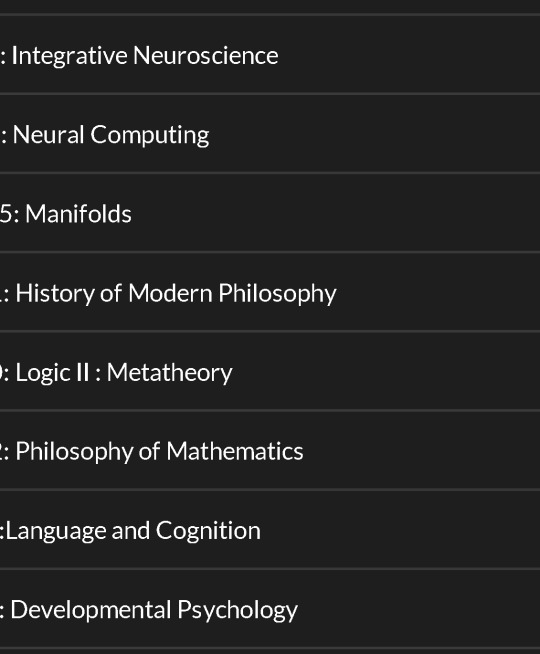

#ive been kinda demotivating myself from studying and stuff over the past few days bc ive just been coding and i dont rly understand my own#code or how to fix it rn so it takes hours . anyway ive been neglecting my actual uni work etc etc like bitch u have a lott of stuff to do#so many modules all in one term like get it together u cannot be slacking rn !#anyway lets do that today. i like all my modules (even the ones i didnt get to pick/cores) so its hard to focus on just 1...#ok i used an rng it came to 2 so lets do neural networks#announcing to internet so i feel more accountable. i rotted for 2 months its exhausting i need + want to do things again. esp when this ter#the modules r cute..

15 notes


Text

remember years ago when everything was neural networks being taught to play tetris and shit . well now she's all grown up and she's calling herself artificial intelligence and you're being a hater?? downvote for you

2 notes


Text

Idk I just think maybe if u assume every person disagreeing with you is a npc or a robot u might just be stupid or something. Learn more ok?

#can’t be assed to phrase this tactfully#just like#like yeah I know there are literal neural networks dedicated to writing dumbass shit for click farming#however a good chunk of the dumbass opinions you see do in fact come from people just as complex as you are#at least fucking try to understand why other people hold the beliefs they do#this was prompted by seeing someone unironically call a Palestinian a Russian bot#when they criticized a trolley problem that presented the people of Palestine as acceptable#collateral damage#like it’s a deeply callous image and it’s fucking insane to present ‘’we will technically be killing fewer people’’ as a reason 2 vote 4 u#like god where is the fucking line for you. how many thousands of peop#will be buried before you grow up and realize intentionally killing people is an unacceptably bad thing actually#but like it’s a general problem and I beg people to try and understand that they aren’t the only Real human being on planet earth#there’s billions of apes on this fucking planet. all just as complex as you. with lives that are both similar and alien to what you know

4 notes


Text

you ever get the uncontrollable urge to pursue a creative project because you got one (1) piece of information that you haven't been able to stop thinking about

#anyways. just got told that scientists are considering the use of fungi in computers#specifically for neural networks#and I thought of the humongous fungus and whatnot#and that also reminded me of pando and how I've been thinking passively about trees in horror or something#idk they just feel a bit eldritch to me. great things that I have respect for. it's the way they sway in the wind I think#anyways just like. I don't know. sci-fi horror using semi-biological computers sounds so interesting to me as a concept#and I've always wanted to do a podcast or write something or something y'know#anyways. don't know what or how I'd do something with it but I've just been rotating the thought#frost talks

9 notes


Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/


In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”
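To make the eight-month figure concrete, here is a minimal arithmetic sketch. It assumes the halving rate is constant over time, which is a simplification; the function names are illustrative and not taken from the paper. The only number drawn from the article is the roughly eight-month halving time.

```python
# Assumption: a constant halving time, taken from the article's
# "halving roughly every 8 months". Everything else is illustrative.
HALVING_MONTHS = 8.0


def compute_fraction(months_elapsed: float,
                     halving_months: float = HALVING_MONTHS) -> float:
    """Fraction of the original training compute still needed to reach
    the same performance after `months_elapsed` months."""
    return 0.5 ** (months_elapsed / halving_months)


def yearly_efficiency_gain(halving_months: float = HALVING_MONTHS) -> float:
    """Factor by which required compute shrinks over 12 months."""
    return 2.0 ** (12.0 / halving_months)


if __name__ == "__main__":
    for months in (8, 16, 24, 48):
        print(f"after {months:2d} months: "
              f"{compute_fraction(months):.4f} of the original compute")
    print(f"efficiency gain per year: {yearly_efficiency_gain():.2f}x")
```

Under this assumption, an eight-month halving time works out to about a 2.8x efficiency gain per year, and 24 months corresponds to an 8x reduction in required compute. By the same arithmetic, the "almost two years" of progress the article later attributes to the Transformer would be somewhat under 8x, if the eight-month rate applied uniformly.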

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.
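The idea of a scaling law that "accounts for algorithmic improvements over time" can be sketched with a deliberately simplified model. This is not the paper's actual specification. It assumes a pure power law in effective compute, loss = A * (C * exp(g * t))^(-alpha), where C is training compute, t is years since a reference date, and g is the rate of algorithmic progress. The data is synthetic, and every name and constant below is hypothetical.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_scaling_law(compute, years, loss):
    """Least-squares fit of log(loss) = b0 + b1*log(compute) + b2*years.

    Returns (alpha, halving_months): the compute exponent and the time it
    takes for the compute needed at fixed loss to halve.
    """
    rows = [[1.0, math.log(c), t] for c, t in zip(compute, years)]
    y = [math.log(value) for value in loss]
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
    _, b1, b2 = solve_linear_system(xtx, xty)
    alpha = -b1
    growth_per_year = b2 / b1  # g, since b1 = -alpha and b2 = -alpha * g
    return alpha, 12.0 * math.log(2.0) / growth_per_year


def make_synthetic_models(n=200, alpha=0.05, halving_months=8.0,
                          noise=0.01, seed=0):
    """Generate hypothetical (compute, year, loss) triples from the model."""
    rng = random.Random(seed)
    g = 12.0 * math.log(2.0) / halving_months
    compute, years, loss = [], [], []
    for _ in range(n):
        c = 10.0 ** rng.uniform(17, 24)   # training compute (made-up range)
        t = rng.uniform(0, 11)            # years since the reference date
        value = 5.0 * (c * math.exp(g * t)) ** (-alpha)
        value *= math.exp(rng.gauss(0, noise))  # multiplicative noise
        compute.append(c)
        years.append(t)
        loss.append(value)
    return compute, years, loss


if __name__ == "__main__":
    c, t, l = make_synthetic_models()
    alpha, halving = fit_scaling_law(c, t, l)
    print(f"recovered alpha = {alpha:.4f}, halving time = {halving:.2f} months")
```

Taking logs turns the model into a linear regression, so the time coefficient divided by the compute coefficient gives the rate of algorithmic progress directly. A realistic fit would also need an irreducible-loss term and separate parameter and data terms, which makes the problem nonlinear.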

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology


#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes


Note

how are you getting infinite craft to mash words together like that its only giving me things that are known words?

honestly just keep throwing spaghetti at the wall

3 notes


Text

Hey; instead of asking ai “how could humans face extinction in the next few decades”, what if we asked “how could humans solve the possible causes of our own extinction in the next few decades?”

#I still hate ai#nightshade your art#how different is training ai from raising a child?#I wonder if ai neural networks behave more like the autistic brain (hyper connectivity) or the neurotypical brain#I supposed that would likely rely on the neural patterning of those teaching the ai#I wonder if it changes over time#do androids dream of electric sheep

3 notes
