#about language in machine and programms



this bot knows jackshit of the play i'm asking about lmao

#i'm using chatgpt for a university work my professor wants us to do#about language in machine and programms#it's not really clear what the point of this assignment is but i love the prof so#anyway i'm trying to grill the bot and it keeps lying lmao

3 notes



There is no such thing as AI.

How to help the non technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect, but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an AI image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out that those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence, like Data from Star Trek or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive; instead, the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)

Language model (LM, or LLM for a large language model): a probabilistic model of a natural language that can generate probabilities of a series of words, based on the text corpora in one or multiple languages it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): a class of machine learning frameworks and a prominent approach to generative AI. In a GAN, two neural networks contest with each other in a zero-sum game, where one agent's gain is the other agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion models: models that learn the probability distribution of a given dataset so they can generate new samples from it. In image generation, a neural network is trained to denoise images with added Gaussian noise by learning to remove the noise. After training is complete, it can be used for image generation by starting with a random noise image and denoising it. (This is the more common technology behind AI images, including DALL-E and Stable Diffusion. I added this one to the post afterward, as it was brought to my attention that it is now more common than GANs.)
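To make the "add noise, learn to remove it" idea a bit more concrete, here is a minimal sketch of the noising half of a diffusion model. It assumes the DDPM-style formulation x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps with a linear noise schedule; the definition above doesn't specify a formulation, and the schedule values and function names are my own assumptions. A real model trains a neural network to predict eps from the noisy x_t. The last function cheats by being handed the true eps, just to show why predicting the noise is enough to get the image back. Generation (starting from pure noise and denoising step by step) is not shown.

```python
import math
import random
from typing import List, Tuple


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Per-step noise variances (assumed linear schedule, a common default)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def alpha_bars(betas: List[float]) -> List[float]:
    """abar_t = product of (1 - beta_s) for s <= t: how much original signal survives."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def add_noise(x0: List[float], t: int, abar: List[float], rng: random.Random) -> Tuple[List[float], List[float]]:
    """Jump straight to step t: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a, s = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [a * p + s * e for p, e in zip(x0, eps)], eps


def recover_x0(xt: List[float], eps_pred: List[float], t: int, abar: List[float]) -> List[float]:
    """Given a prediction of the noise (what the network learns to output), solve for x0."""
    a, s = math.sqrt(abar[t]), math.sqrt(1.0 - abar[t])
    return [(p - s * e) / a for p, e in zip(xt, eps_pred)]


# Usage on a hypothetical 3-pixel "image"; here we cheat and pass in the true noise.
abar = alpha_bars(linear_beta_schedule())
rng = random.Random(0)
x0 = [0.0, 0.5, 1.0]
xt, eps = add_noise(x0, 500, abar, rng)
print(recover_x0(xt, eps, 500, abar))  # approximately x0, up to float rounding
```

By the last step, abar is tiny, so x_T is essentially pure noise. That is why generation can start from a random noise image.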

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.
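To make the language model definition ("generate probabilities of a series of words") concrete, here is a minimal sketch of the simplest possible language model: a bigram model that only looks at the previous word. This is an illustration under my own assumptions (a three-sentence toy corpus, hypothetical function names). ChatGPT is a large neural network, not a bigram counter, but what it outputs is the same kind of thing: a probability for each possible next word.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows each other word. <s> and </s> mark sentence edges."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts: Dict[str, Counter], prev: str) -> Dict[str, float]:
    """Probability of each possible next word, given only the previous word."""
    total = sum(counts[prev].values())
    return {w: c / total for w, c in counts[prev].items()}


def sequence_prob(counts: Dict[str, Counter], sentence: str) -> float:
    """Probability of a whole series of words: multiply the next-word probabilities."""
    words = ["<s>"] + sentence.split() + ["</s>"]
    p = 1.0
    for prev, nxt in zip(words, words[1:]):
        p *= next_word_probs(counts, prev).get(nxt, 0.0)
    return p


# Hypothetical toy corpus.
counts = train_bigram(["fido is a dog", "rex is a dog", "tom is a cat"])
print(next_word_probs(counts, "a"))          # "dog" is twice as likely as "cat"
print(sequence_prob(counts, "fido is a dog"))
```

Note that the model has no idea what a dog is; it only knows which words tended to follow which in its training text.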

12K notes



what if!!! hear me out 🙏🙏 yuu was a robot/miku inspired…IT SUCKS but like…miku kinda..yuu mikyuu…😓😓

Sure, no worries, no judgement from me. Ask and you shall receive

𝐖𝐇𝐀𝐓 𝐈𝐅 𝐘𝐔𝐔 𝐈𝐒 𝐀 𝐑𝐎𝐁𝐎𝐓 🤖👾🎤

A robot is a machine—especially one programmable by a computer—capable of carrying out a complex series of actions automatically. A robot can be guided by an external control device, or the control may be embedded within. But they can act independently if their creators allow it.

( English is not my first language )

Day 3 : robot!yuu

In a world full of technology and robots, robot!yuu was the number one idol of their time and part of the number one group of the century: Vocaloid. Imagine: in the middle of a performance at one of their solo concerts, a black carriage arrived and they suddenly shut down.

They turned back on during the orientation ceremony. Since robot!yuu isn't technically an organic being, they would be placed in either the Ignihyde dorm or Ramshackle.

After Crowley gave them a cellphone (or asked Idia whether he could do some maintenance to connect them to Twisted Wonderland's social media), they started uploading their albums to the internet and it blew up. People loved it, it made headlines as a new genre of music, and the songs were streamed by millions around the world. Robot!yuu created a genre of music: a revolution in the music industry.

This made robot!yuu rich overnight and let them buy more expensive things, fix up the Ramshackle dorm, and get better technology for their maintenance. Robot!yuu planned to give half of the money to Crowley as thanks, but he only received a quarter of it.

Even though robot!yuu is an idol, their master built them with offensive and defensive systems. They are made of an extremely tough metal that is hard to find, and they have an offensive mode with a big arsenal: energy beams, rocket launchers, shield mode, and more.

They can also connect to any device and hack it without any issue; they even managed to hack Ignihyde technology. And they are waterproof.

Robot!yuu can also eat and drink without any issue; they have a special component in their stomach that lets them digest things normally.

During the VDC they dominated the competition. Lasers, mist, and waving light sticks marked their presence. They changed outfits depending on the song; it was literally a Miku concert.

Congratulations, Neige LeBlanc is now one of their fans. As you came down from the stage, he literally ran toward you and started asking a billion questions with stars in his eyes.

Vil was a little sour but also amazed by robot!yuu's performance, and he would ask them for choreography and music ideas as well as fashion opinions. He originally wanted robot!yuu to transfer, but they refused because only Ignihyde or Ramshackle has the complete equipment for them.

The Pomefiore dorm started taking notes and trying out robot!yuu's fashion styles. Idia is also a supporter, basically a superfan, and robot!yuu would come to Ignihyde to help him with games or help him maintain Ortho. Robot!yuu is basically a sister to Idia and Ortho.

sorry if it's short, this is as far as I could come up with anon

#twisted wonderland#not canon#twst headcanons#twst scenario#disney twst#twisted wonderland yuu au#twst mc#twst wonderland#twst x reader#twst yuu au#kinda miku!yuu

260 notes



Thinking up some wild combinations of expansion devices for a VIC-20 to create the ultimate VIC. Idk, is this something y'all do with your computers for the fun of it?

If you're not sure what you're looking at, the first one is a VIC-1020 expansion chassis with a VIC-20 plugged into it, along with:

C2N datasette

Xetec Super Graphix Gold Centronics printer adapter

SD2IEC

3 way user port expander

1660 Modem

VIC-REL relay expansion

VIC-1111 16K RAM Cartridge

VIC-1211A Super Expander with 3K RAM Cartridge

VIC-1213 Machine Language Monitor

VIC-1112 IEEE-488 Interface Cartridge (replica)

Protecto 80 40/80 Column Board

My custom VIC-20 Dual Serial Cart Mk I

The second one here is a different 6-slot multi-cartridge expander with those last 6 cartridges installed.

The thing is, this wouldn't even fully expand the VIC-20's RAM, you'd need a denser RAM cart, or maybe more expansion slots.
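To put rough numbers on that, here is a small sketch of the RAM arithmetic. It assumes the stock VIC-20 memory map (5K of internal RAM, a 3K expansion gap at $0400-$0FFF, 8K blocks BLK1-BLK3 at $2000-$7FFF, and BLK5 at $A000-$BFFF). It also assumes the Super Expander's 3K fills the gap and the 16K cart is set up to occupy BLK1 and BLK2. The region table and helper are hypothetical, not from any real tool, and some of the remaining blocks may be taken by ROM cartridges instead (BLK5 is the usual cartridge ROM area).

```python
from typing import Iterable

# Sizes in KB. Region names and addresses assume the stock VIC-20 memory map.
EXPANSION_REGIONS_KB = {
    "RAM1-3 ($0400-$0FFF)": 3,
    "BLK1 ($2000-$3FFF)": 8,
    "BLK2 ($4000-$5FFF)": 8,
    "BLK3 ($6000-$7FFF)": 8,
    "BLK5 ($A000-$BFFF)": 8,  # normally the cartridge ROM area
}
INTERNAL_RAM_KB = 5  # 1K at $0000-$03FF plus 4K at $1000-$1FFF


def total_ram_kb(ram_regions: Iterable[str]) -> int:
    """Internal RAM plus every expansion region that is filled with RAM."""
    return INTERNAL_RAM_KB + sum(EXPANSION_REGIONS_KB[r] for r in ram_regions)


# Assumption: Super Expander 3K -> RAM1-3, 16K cart configured for BLK1 + BLK2.
with_these_carts = ["RAM1-3 ($0400-$0FFF)", "BLK1 ($2000-$3FFF)", "BLK2 ($4000-$5FFF)"]
no_ram_yet = [r for r in EXPANSION_REGIONS_KB if r not in with_these_carts]

print(total_ram_kb(with_these_carts), "KB of RAM")
print("regions without RAM:", no_ram_yet)
```

Under these assumptions, at least one 8K block is still left without RAM, which is why you'd need a denser RAM cart or more slots.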

The question is more about what your target use case is intended to be, if not "the most powerful VIC-20 in the history of ever". What job is the VIC supposed to be doing that it might need all of these peripherals? Most likely, you'd be attaching the things you need as you need them, and there are so many other things that could be in the mix here that aren't, for example a 1540 disk drive, several other printers, game cartridges, a programmers aid cartridge, a user port serial adapter... I could keep going.

Most of the time, I don't need nearly as many cartridges installed. In fact, the vast majority of the time I'm running just a Super Expander or a Penultimate cartridge of some kind, either because I'm working with a minimal setup or because I really just want the all-in-one functionality that a Penultimate provides (although the latter takes a bit of setup to configure the same functions that several physical cartridges provide).

More often when I am doing a multi-cart setup, I go with my 4-slot expansion due to size constraints. Fortunately, it lets me fit an IEEE-488 adapter, super expander or penultimate, machine language monitor, and now my dual serial cart -- all without going overboard.

94 notes



information flow in transformers

In machine learning, the transformer architecture is a very commonly used type of neural network model. Many of the well-known neural nets introduced in the last few years use this architecture, including GPT-2, GPT-3, and GPT-4.

This post is about the way that computation is structured inside of a transformer.

Internally, these models pass information around in a constrained way that feels strange and limited at first glance.

Specifically, inside the "program" implemented by a transformer, each segment of "code" can only access a subset of the program's "state." If the program computes a value and writes it into the state, that doesn't make the value available to any block of code that might run after the write; instead, only some operations can access the value, while others are prohibited from seeing it.

This sounds vaguely like the kind of constraint that human programmers often put on themselves: "separation of concerns," "no global variables," "your function should only take the inputs it needs," that sort of thing.

However, the apparent analogy is misleading. The transformer constraints don't look much like anything that a human programmer would write, at least under normal circumstances. And the rationale behind them is very different from "modularity" or "separation of concerns."

(Domain experts know all about this already -- this is a pedagogical post for everyone else.)

1. setting the stage

For concreteness, let's think about a transformer that is a causal language model.

So, something like GPT-3, or the model that wrote text for @nostalgebraist-autoresponder.

Roughly speaking, this model's input is a sequence of words, like ["Fido", "is", "a", "dog"].

Since the model needs to know the order the words come in, we'll include an integer offset alongside each word, specifying the position of this element in the sequence. So, in full, our example input is

    [("Fido", 0), ("is", 1), ("a", 2), ("dog", 3)]

The model itself -- the neural network -- can be viewed as a single long function, which operates on a single element of the sequence. Its task is to output the next element.

Let's call the function f. If f does its job perfectly, then when applied to our example sequence, we will have

    f("Fido", 0) = "is"
    f("is", 1)   = "a"
    f("a", 2)    = "dog"

(Note: I've omitted the index from the output type, since it's always obvious what the next index is. Also, in reality the output type is a probability distribution over words, not just a word; the goal is to put high probability on the next word. I'm ignoring this to simplify exposition.)

You may have noticed something: as written, this seems impossible!

Like, how is the function supposed to know that after ("a", 2), the next word is "dog"!? The word "a" could be followed by all sorts of things.

What makes "dog" likely, in this case, is the fact that we're talking about someone named "Fido."

That information isn't contained in ("a", 2). To do the right thing here, you need info from the whole sequence thus far -- from "Fido is a", as opposed to just "a".

How can f get this information, if its input is just a single word and an index?

This is possible because f isn't a pure function. The program has an internal state, which f can access and modify.

But f doesn't just have arbitrary read/write access to the state. Its access is constrained, in a very specific sort of way.

2. transformer-style programming

Let's get more specific about the program state.

The state consists of a series of distinct "memory regions" or "blocks," which have an order assigned to them.

Let's use the notation memory_i for these. The first block is memory_0, the second is memory_1, and so on.

In practice, a small transformer might have around 10 of these blocks, while a very large one might have 100 or more.

Each block contains a separate data-storage "cell" for each offset in the sequence.

For example, memory_0 contains a cell for position 0 ("Fido" in our example text), and a cell for position 1 ("is"), and so on. Meanwhile, memory_1 contains its own, distinct cells for each of these positions. And so does memory_2, etc.

So the overall layout looks like:

    memory_0: [cell 0, cell 1, ...]
    memory_1: [cell 0, cell 1, ...]
    [...]

Our function f can interact with this program state. But it must do so in a way that conforms to a set of rules.

Here are the rules:

The function can only interact with the blocks by using a specific instruction.

This instruction is an "atomic write+read". It writes data to a block, then reads data from that block for f to use.

When the instruction writes data, it goes in the cell specified by the function's offset argument. That is, the "i" in f(..., i).

When the instruction reads data, the data comes from all cells up to and including the offset argument.

The function must call the instruction exactly once for each block.

These calls must happen in order. For example, you can't do the call for memory_1 until you've done the one for memory_0.
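The rules above are easy to state as code. Here is a minimal Python sketch that enforces them at runtime, assuming one number per cell and assuming earlier positions have already run (the sequential mode described later). The class and method names (ProgramState, Invocation, write_read) are hypothetical. Real transformers don't check anything like this at runtime, since the structure is baked into the architecture itself.

```python
from typing import Dict, List


class ProgramState:
    """Hypothetical model of the state: memory_0 ... memory_{n-1}, each with one cell per position."""

    def __init__(self, num_blocks: int) -> None:
        self.blocks: List[Dict[int, float]] = [{} for _ in range(num_blocks)]


class Invocation:
    """One call f(x, i). The only way to touch memory is the atomic write+read."""

    def __init__(self, state: ProgramState, i: int) -> None:
        self.state = state
        self.i = i
        self.next_block = 0  # blocks must be visited in order, starting at memory_0

    def write_read(self, block: int, value: float) -> List[float]:
        # Exactly one call per block, in order: memory_0, then memory_1, ...
        if block != self.next_block:
            raise RuntimeError(f"expected write+read on memory_{self.next_block}, got memory_{block}")
        self.next_block += 1
        # The write goes only into cell i (the offset argument of f).
        self.state.blocks[block][self.i] = value
        # The read returns cells 0..i; later positions are invisible.
        # (Assumes cells 0..i-1 were filled by earlier invocations.)
        return [self.state.blocks[block][j] for j in range(self.i + 1)]

    def finish(self) -> None:
        if self.next_block != len(self.state.blocks):
            raise RuntimeError("every block must get exactly one write+read")
```

Skipping ahead to memory_1 before memory_0, or returning without touching every block, raises an error.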

Here's some pseudo-code, showing a generic computation of this kind:

    f(x, i) {
        calculate some things using x and i;
        // next 2 lines are a single instruction
        write to memory_0 at position i;
        z0 = read from memory_0 at positions 0...i;
        calculate some things using x, i, and z0;
        // next 2 lines are a single instruction
        write to memory_1 at position i;
        z1 = read from memory_1 at positions 0...i;
        calculate some things using x, i, z0, and z1;
        [etc.]
    }
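Here is a runnable version of that pseudocode, as a sketch under stated assumptions. Each cell holds a single number instead of a vector, and the "calculate some things" steps are replaced by hypothetical toy arithmetic: start from the word's length, then add the mean of whatever was read. Only the access pattern is meant to match the post (write cell i, then read cells 0...i, block by block, in order).

```python
from typing import Dict, List, Tuple

# Hypothetical toy size: a real model has ~10 to 100+ blocks and vector-valued cells.
NUM_BLOCKS = 3

Memory = List[Dict[int, float]]  # memory[b][i] is cell i of memory_b


def f(x: str, i: int, memory: Memory) -> float:
    # "calculate some things using x and i" (toy stand-in: the word's length)
    h = float(len(x))
    for b in range(NUM_BLOCKS):  # exactly one write+read per block, in order
        memory[b][i] = h                          # write to memory_b at position i
        z = [memory[b][j] for j in range(i + 1)]  # read from memory_b at positions 0...i
        # "calculate some things using x, i, z0, ..." (toy stand-in: add the mean of the read)
        h = h + sum(z) / len(z)
    return h  # a real model would turn this into a next-word distribution


def run_sequential(words: List[str]) -> Tuple[List[float], Memory]:
    """Run f on one position at a time, as during text generation."""
    memory: Memory = [{} for _ in range(NUM_BLOCKS)]
    outputs = [f(w, i, memory) for i, w in enumerate(words)]
    return outputs, memory


outputs, memory = run_sequential(["Fido", "is", "a"])
print(outputs)
print(memory[0])  # memory_0 holds only per-word values computed before any read
```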

The rules impose a tradeoff between the amount of processing required to produce a value, and how early the value can be accessed within the function body.

Consider the moment when data is written to memory_0. This happens before anything is read (even from memory_0 itself).

So the data in memory_0 has been computed only on the basis of individual inputs like ("a", 2). It can't leverage any information about multiple words and how they relate to one another.

But just after the write to memory_0, there's a read from memory_0. This read pulls in data computed by f when it ran on all the earlier words in the sequence.

If we're processing ("a", 2) in our example, then this is the point where our code is first able to access facts like "the word 'Fido' appeared earlier in the text."

However, we still know less than we might prefer.

Recall that memory_0 gets written before anything gets read. The data living there only reflects what f knows before it can see all the other words, while it still only has access to the one word that appeared in its input.

The data we've just read does not contain a holistic, "fully processed" representation of the whole sequence so far ("Fido is a"). Instead, it contains:

a representation of ("Fido", 0) alone, computed in ignorance of the rest of the text

a representation of ("is", 1) alone, computed in ignorance of the rest of the text

a representation of ("a", 2) alone, computed in ignorance of the rest of the text

Now, once we get to memory_1, we will no longer face this problem. Stuff in memory_1 gets computed with the benefit of whatever was in memory_0. The step that computes it can "see all the words at once."
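You can check this difference between memory_0 and memory_1 with a toy f. This is a sketch with hypothetical arithmetic (start from the word's length, add the mean of what was read), one number per cell, and two blocks. Change only the first word, then see which cells for ("a", 2) change.

```python
from typing import Dict, List

NUM_BLOCKS = 2  # hypothetical; two blocks are enough for this demonstration
Memory = List[Dict[int, float]]


def f(x: str, i: int, memory: Memory) -> float:
    h = float(len(x))  # toy stand-in for "calculate some things using x and i"
    for b in range(NUM_BLOCKS):
        memory[b][i] = h                          # write cell i of memory_b
        z = [memory[b][j] for j in range(i + 1)]  # read cells 0..i of memory_b
        h = h + sum(z) / len(z)                   # toy stand-in for further processing
    return h


def memory_after(words: List[str]) -> Memory:
    memory: Memory = [{} for _ in range(NUM_BLOCKS)]
    for i, w in enumerate(words):
        f(w, i, memory)
    return memory


m_fido = memory_after(["Fido", "is", "a"])
m_rex = memory_after(["Rex", "is", "a"])
# Cell 2 of memory_0 was written before any read, so it only reflects ("a", 2):
print(m_fido[0][2], m_rex[0][2])  # same value in both runs
# Cell 2 of memory_1 was computed after reading memory_0, so it "saw" the first word:
print(m_fido[1][2], m_rex[1][2])  # differs between the runs
```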

Nonetheless, the whole function is affected by a generalized version of the same quirk.

All else being equal, data stored in later blocks ought to be more useful. Suppose for instance that

memory_4 gets read/written 20% of the way through the function body, and

memory_16 gets read/written 80% of the way through the function body

Here, strictly more computation can be leveraged to produce the data in memory_16. Calculations which are simple enough to fit in the program, but too complex to fit in just 20% of the program, can be stored in memory_16 but not in memory_4.

All else being equal, then, we'd prefer to read from memory_16 rather than memory_4 if possible.

But in fact, we can only read from memory_16 once -- at a point 80% of the way through the code, when the read/write happens for that block.

The general picture looks like:

The early parts of the function can see and leverage what got computed earlier in the sequence -- by the same early parts of the function. This data is relatively "weak," since not much computation went into it. But, by the same token, we have plenty of time to further process it.

The late parts of the function can see and leverage what got computed earlier in the sequence -- by the same late parts of the function. This data is relatively "strong," since lots of computation went into it. But, by the same token, we don't have much time left to further process it.

3. why?

There are multiple ways you can "run" the program specified by f.

Here's one way, which is used when generating text, and which matches popular intuitions about how language models work:

First, we run f("Fido", 0) from start to end. The function returns "is." As a side effect, it populates cell 0 of every memory block.

Next, we run f("is", 1) from start to end. The function returns "a." As a side effect, it populates cell 1 of every memory block.

Etc.

If we're running the code like this, the constraints described earlier feel weird and pointlessly restrictive.

By the time we're running f("is", 1), we've already populated some data into every memory block, all the way up to memory_16 or whatever.

This data is already there, and contains lots of useful insights.

And yet, during the function call f("is", 1), we "forget about" this data -- only to progressively remember it again, block by block. The early parts of this call have only memory_0 to play with, and then memory_1, etc. Only at the end do we allow access to the juicy, extensively processed results that occupy the final blocks.

Why? Why not just let this call read memory_16 immediately, on the first line of code? The data is sitting there, ready to be used!

Because the constraint enables a second way of running this program.

The second way is equivalent to the first, in the sense of producing the same outputs. But instead of processing one word at a time, it processes a whole sequence of words, in parallel.

Here's how it works:

In parallel, run f("Fido", 0) and f("is", 1) and f("a", 2), up until the first write+read instruction. You can do this because the functions are causally independent of one another, up to this point. We now have 3 copies of f, each at the same "line of code": the first write+read instruction.

Perform the write part of the instruction for all the copies, in parallel. This populates cells 0, 1 and 2 of memory_0.

Perform the read part of the instruction for all the copies, in parallel. Each copy of f receives some of the data just written to memory_0, covering offsets up to its own. For instance, f("is", 1) gets data from cells 0 and 1.

In parallel, continue running the 3 copies of f, covering the code between the first write+read instruction and the second.

Perform the second write. This populates cells 0, 1 and 2 of memory_1.

Perform the second read.

Repeat like this until done.
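Here is a sketch checking that the two modes agree. It uses hypothetical toy arithmetic (one number per cell, "add the mean of what was read") as a stand-in for real layers. run_parallel is written with ordinary Python loops. The point is only that its loops over positions have no dependencies between positions, so a GPU could do each of them in one shot, while the loop over blocks stays sequential.

```python
from typing import Dict, List, Tuple

NUM_BLOCKS = 3  # hypothetical toy depth
Memory = List[Dict[int, float]]


def run_sequential(words: List[str]) -> Tuple[List[float], Memory]:
    """First way: run f start-to-end for position 0, then position 1, and so on."""
    memory: Memory = [{} for _ in range(NUM_BLOCKS)]
    outputs = []
    for i, x in enumerate(words):
        h = float(len(x))
        for b in range(NUM_BLOCKS):
            memory[b][i] = h
            z = [memory[b][j] for j in range(i + 1)]
            h = h + sum(z) / len(z)
        outputs.append(h)
    return outputs, memory


def run_parallel(words: List[str]) -> Tuple[List[float], Memory]:
    """Second way: all copies of f advance together, one write+read step at a time."""
    n = len(words)
    memory: Memory = [{} for _ in range(NUM_BLOCKS)]
    h = [float(len(x)) for x in words]  # every copy runs up to its first write+read
    for b in range(NUM_BLOCKS):         # sequential over blocks (layers)...
        for i in range(n):              # ...but all writes are independent of each other
            memory[b][i] = h[i]
        reads = [[memory[b][j] for j in range(i + 1)] for i in range(n)]  # all reads
        h = [h[i] + sum(reads[i]) / len(reads[i]) for i in range(n)]      # all copies continue
    return h, memory


words = ["Fido", "is", "a", "dog"]
print(run_sequential(words)[0] == run_parallel(words)[0])  # same outputs either way
```

The same arithmetic happens in the same order for each position, so the results match exactly, not just approximately.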

Observe that this mode of operation only works if you have a complete input sequence ready before you run anything.

(You can't parallelize over later positions in the sequence if you don't know, yet, what words they contain.)

So, this won't work when the model is generating text, word by word.

But it will work if you have a bunch of texts, and you want to process those texts with the model, for the sake of updating the model so it does a better job of predicting them.

This is called "training," and it's how neural nets get made in the first place. In our programming analogy, it's how the code inside the function body gets written.

The fact that we can train in parallel over the sequence is a huge deal, and probably accounts for most (or even all) of the benefit that transformers have over earlier architectures like RNNs.

Accelerators like GPUs are really good at doing the kinds of calculations that happen inside neural nets, in parallel.

So if you can make your training process more parallel, you can effectively multiply the computing power available to it, for free. (I'm omitting many caveats here -- see this great post for details.)

Transformer training isn't maximally parallel. It's still sequential in one "dimension," namely the layers, which correspond to our write+read steps here. You can't parallelize those.

But it is, at least, parallel along some dimension, namely the sequence dimension.

The older RNN architecture, by contrast, was inherently sequential along both these dimensions. Training an RNN is, effectively, a nested for loop. But training a transformer is just a regular, single for loop.
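For contrast, here is a toy stacked RNN. It is a hypothetical sketch: each layer's update is just decay * previous_state + input, standing in for a learned update. Every position needs every layer's state from the previous position, so the loop over positions can't be turned into one parallel batch the way the transformer's sequence dimension can.

```python
from typing import List


def rnn_forward(xs: List[float], num_layers: int, decay: float = 0.5) -> List[float]:
    """Toy stacked RNN with hypothetical scalar 'weights' (just a decay factor)."""
    state = [0.0] * num_layers  # one hidden state per layer, carried across positions
    outputs = []
    for x in xs:                           # outer loop: position t needs the states left by t-1
        h = x
        for layer in range(num_layers):    # inner loop: layer l needs layer l-1's output at t
            state[layer] = decay * state[layer] + h
            h = state[layer]
        outputs.append(h)
    return outputs


print(rnn_forward([1.0, 2.0], num_layers=2))
```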

4. tying it together

The "magical" thing about this setup is that both ways of running the model do the same thing. You are, literally, doing the same exact computation. The function can't tell whether it is being run one way or the other.

This is crucial, because we want the training process -- which uses the parallel mode -- to teach the model how to perform generation, which uses the sequential mode. Since both modes look the same from the model's perspective, this works.

This constraint -- that the code can run in parallel over the sequence, and that this must do the same thing as running it sequentially -- is the reason for everything else we noted above.

Earlier, we asked: why can't we allow later (in the sequence) invocations of f to read earlier data out of blocks like memory_16 immediately, on "the first line of code"?

And the answer is: because that would break parallelism. You'd have to run f("Fido", 0) all the way through before even starting to run f("is", 1).

By structuring the computation in this specific way, we provide the model with the benefits of recurrence -- writing things down at earlier positions, accessing them at later positions, and writing further things down which can be accessed even later -- while breaking the sequential dependencies that would ordinarily prevent a recurrent calculation from being executed in parallel.

In other words, we've found a way to create an iterative function that takes its own outputs as input -- and does so repeatedly, producing longer and longer outputs to be read off by its next invocation -- with the property that this iteration can be run in parallel.

We can run the first 10% of every iteration -- of f() and f(f()) and f(f(f())) and so on -- at the same time, before we know what will happen in the later stages of any iteration.

The call f(f()) uses all the information handed to it by f() -- eventually. But it cannot make any requests for information that would leave itself idling, waiting for f() to fully complete.

Whenever f(f()) needs a value computed by f(), it is always the value that f() -- running alongside f(f()), simultaneously -- has just written down, a mere moment ago.

No dead time, no idling, no waiting-for-the-other-guy-to-finish.

p.s.

The "memory blocks" here correspond to what are called "keys and values" in usual transformer lingo.
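For the curious, here is a minimal sketch of what the "read" looks like in an actual transformer. It assumes standard single-head scaled dot-product attention with a causal mask: position i compares its query against the keys in cells 0...i and returns a weighted average of the matching values. The "write" for position i is computing its own key and value and storing them in cell i. Real models add learned projections, multiple heads, and much larger vectors. The function name is hypothetical.

```python
import math
from typing import List

Vector = List[float]


def causal_attention_read(q_i: Vector, keys: List[Vector], values: List[Vector], i: int) -> Vector:
    """Read for position i: softmax over q_i . k_j for j in 0..i only (the causal mask),
    then return the weighted average of v_0..v_i. Cells after i are never touched."""
    scale = math.sqrt(len(q_i))
    scores = [sum(a * b for a, b in zip(q_i, keys[j])) / scale for j in range(i + 1)]
    m = max(scores)  # subtract the max before exp() for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    dim = len(values[0])
    return [sum(weights[j] * values[j][d] for j in range(i + 1)) for d in range(dim)]


# Hypothetical 2-dimensional keys/values for three positions (cells 0, 1, 2).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
print(causal_attention_read([1.0, 0.0], keys, values, 1))  # ignores cell 2 entirely
```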

If you've heard the term "KV cache," it refers to the contents of the memory blocks during generation, when we're running in "sequential mode."

Usually, during generation, one keeps this state in memory and appends a new cell to each block whenever a new token is generated (and, as a result, the sequence gets longer by 1).

This is called "caching" to contrast it with the worse approach of throwing away the block contents after each generated token, and then re-generating them by running f on the whole sequence so far (not just the latest token). And then having to do that over and over, once per generated token.
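Here is a sketch of the difference. It uses a hypothetical toy f (one number per cell, made-up arithmetic, and a made-up five-word vocabulary standing in for "pick the next word"). Both generators produce the same tokens. The cached one calls f once per position and keeps the memory blocks between steps. The uncached one throws the blocks away and re-runs f over the whole sequence for every new token.

```python
from typing import Dict, List, Tuple

VOCAB = ["Fido", "is", "a", "dog", "good"]  # hypothetical toy vocabulary
NUM_BLOCKS = 3                               # hypothetical toy depth
Memory = List[Dict[int, float]]


def f(x: str, i: int, memory: Memory) -> str:
    h = float(len(x))
    for b in range(NUM_BLOCKS):
        memory[b][i] = h                          # write cell i of memory_b
        z = [memory[b][j] for j in range(i + 1)]  # read cells 0..i
        h = h + sum(z) / len(z)
    return VOCAB[int(h) % len(VOCAB)]             # toy stand-in for picking the next word


def generate_cached(prompt: List[str], n_new: int) -> Tuple[List[str], int]:
    memory: Memory = [{} for _ in range(NUM_BLOCKS)]  # the "KV cache", kept between steps
    tokens, calls, i = list(prompt), 0, 0
    while len(tokens) < len(prompt) + n_new:
        nxt = f(tokens[i], i, memory)  # only the newest position gets computed
        calls += 1
        if i == len(tokens) - 1:       # at the end of the text: keep the prediction
            tokens.append(nxt)
        i += 1
    return tokens, calls


def generate_uncached(prompt: List[str], n_new: int) -> Tuple[List[str], int]:
    tokens, calls = list(prompt), 0
    while len(tokens) < len(prompt) + n_new:
        memory: Memory = [{} for _ in range(NUM_BLOCKS)]  # throw the blocks away every step...
        for i, tok in enumerate(tokens):                  # ...and rebuild them from scratch
            nxt = f(tok, i, memory)
            calls += 1
        tokens.append(nxt)
    return tokens, calls


print(generate_cached(["Fido", "is", "a"], 3))
print(generate_uncached(["Fido", "is", "a"], 3))  # same tokens, many more calls to f
```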

#ai tag#is there some standard CS name for the thing i'm talking about here?#i feel like there should be#but i never heard people mention it#(or at least i've never heard people mention it in a way that made the connection with transformers clear)

313 notes



Here is an observation of common attitudes I see in tech-adjacent spaces (mostly online).

The thing about programming/tech is, at its base, it's historically and culturally contingent. There are of course many fundamental (physical and mathematical) limitations on what a computer can and cannot do, how fast it can do things, and so on. But at least as much of the modern tech landscape is the product of choices made by people about how these machines will work, choices that very much could have been made differently. And modern computing technology is a huge tower of these choices, each resting on and grappling with the ones below it. If you're, say, a web developer writing a web app, the sheer height of this tower of contingent human decisions that your work rests on is almost incomprehensible. And by and large, programmers know this.

I am not dispensing some secret wisdom that I think tech workers don't have. On the contrary, I think the vast contingency of it all is blindingly obvious to anyone who has tried to make a computer do anything. But tech is also, well, technical, and do you know what else is technical? Science. I think this has led to a sort of cultural false affinity, where tech is perceived, both from within and without, as more similar to science than it is to the humanities. Certainly, there are some kinds of intellectual labor that tech shares with the sciences. But there are also, as described above, certain kinds of intellectual labor that tech shares to a much greater degree with the humanities, namely (in the broadest terms): grappling with other people's choices.

From without, I think this misplaced affinity leads people to believe that technology is less contingent than it actually is. But I think this belief would be completely untenable from within; it just cannot contend with reality. I've never met a tech worker or enthusiast who seems to think this way. Rather, I feel there is a persistent perception among tech-inclined people that science is more contingent than it actually is. I don't think this misperception rises to the level of a belief, rather I think it is more of an intuition. I think tech people have very much trained themselves (rightly, in their native context) to look at complex systems and go "how could this be reworked, improved, done differently?" I think this impulse is very sensible in computing but very out of place in, say, biology. And I suppose my conjecture (this whole post is purely conjectural, based on a gut sense that might not be worth anything) is that this is one of the main reasons for the popularity of transhumanism in, you know, the Bay. And whatnot.

I'm not saying transhumanism is actually, physically impossible. Of course it's not! The technology will, I strongly suspect, exist some day. But if you're living in 2024, I think the engineering mindset is more-or-less unambiguously the wrong one to bring to biology, at least macrobiology. This post is not about the limits of what is physically possible, it's about the attitudes that I sometimes see tech people bring to other endeavors that I think sometimes lead them to fall on their face. If you come to biology thinking about it as this contingent thing that you must grapple with, as you grapple with a novel or a codebase or anything else made by humans, I think it will make you like biology less and understand it less well.

When I was younger and a lot more naive, as a young teenager who knew a little bit about programming and nothing about linguistics, I wanted to create a "logical language" that could replace natural languages (with all their irregularities and perceived inefficiencies) for the purpose of human communication. This is part of how I initially got into conlanging. Now, with an actual linguistics background, I view this as... again, perhaps not per se impossible, but extremely unlikely to work or even to be desirable to attempt in any foreseeable future, for a whole host of rather fundamental reasons. I don't feel that this desire can survive very well upon confrontation with what we actually know (and crucially also, what we don't know) about human language.

I mean, if you want to try, you can try. I won't stop you.

Anyway, I feel that holding onto this sort of mindset too intensely does not really permit engagement with nature and the sciences. It's the same way I think a lot of per se humanities people fudge engagement with the sciences, where they insist on mounting some kind of social critique even when it is not appropriate (to be clear, I think critique of scientific practices/institutions is sometimes appropriate, but I think people whose professional training gives them an instinct to critique often take it too far).

So like, I guess that's my thesis. Coding is a humanity in disguise, and I wish that people who are used to dealing with human-made things would adopt a more native scientific or naturalist mindset when dealing with science and nature.

115 notes



finally watched my brothers and sisters in the north when it's been in my to-watch list for years and it was so touching and so beautiful.

the people interviewed were of course handpicked and have better conditions than other people because of the impact of U.S. sanctions and such, but it genuinely inspired me how hard-earned their good living conditions are. the farmers had to work really hard to re-establish agriculture after the war and now they get so much food a year they donate most of it to the state because they simply don't need it. the girl at the sewing factory loves her job and gets paid with 14 kilos of food a month on top of her wages. the water park worker is proud of his job because 20,000 of his people can come and enjoy themselves every day, and Kim Jong-un himself took part in designing it and came by at 2am during construction to make sure everything was going smoothly. his grandmother's father was a revolutionary who was executed and buried in a mass grave in seoul but in the dprk he has a memorial bust in a place of honor and his family gets a nice apartment in pyongyang for free.

imperialist propaganda always points to the kim family as a dictatorship and a cult of personality but from this docu it's so obvious that it's genuine gratitude for real work for the people, and simple korean respect. if my president came to my work and tried his best to make my working conditions better and to make my life better, i would call him a dear leader too. if my president invented machines and designed amusement parks and went to farms all over the country to improve conditions for the people, i would respect him.

the spirit of juche is in self-reliance, unity of the people, and creative adaptations to circumstances. the docu rly exemplified the ideology in things like the human and animal waste methane systems powering farmers' houses along with solar panels, how they figured out how to build tractors instead of accepting unstable foreign import relationships, and how the water park uses a geothermal heating system.

it rly made me cry at the end when the grandma and her grandson were talking about reunification. the people of the dprk live every day of their lives dreaming of reunification and working for reunification, and it's an intergenerational goal that they inherited from their parents and grandparents. the man said he was so happy to see someone from the south, and that even though reunification would have its own obstacles, we have the same blood, the same language, the same interests, so no matter what, if we have the same heart it would be okay.

and the grandma said "when reunification happens, come see me." and it's so upsetting that not even 10 years later, the state has been pushed into somewhat giving up on this hope. the dprk closed down the reunification department of the government last year and it broke my heart.

a really good pairing with the 2016 film is this 2013 interview with ambassador Thae Youngho to clarify political realities in the dprk and the ongoing U.S. hostility that has shaped the country's global image. the interviewer Carlos Martinez asks a lot of excellent questions and the interview goes into their military policy, nuclear weapons, U.S. violence and sanctions, and the dprk's historical solidarity with middle eastern countries like syria and palestine and central/south american countries like nicaragua, bolivia, and cuba.

99 notes


Text

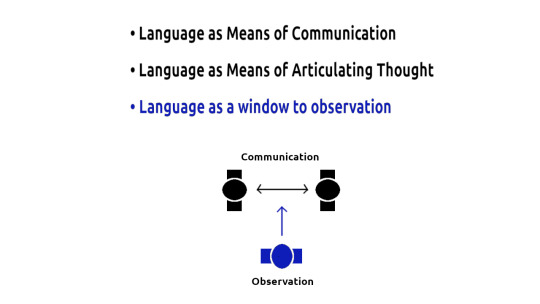

The Purpose of Language in General

I found that language has three important uses in our everyday life.

(1) The first one is obvious: language as a means of communication. We use language to communicate our ideas and desires to other people. duh!

(2) The second one is more subtle but just as important: language as a means of articulating thought. We use language to construct and hold ideas together. Even if we do not like talking to people, language is incredibly important.

Feral children are children who grew up in the wild with no access to human upbringing. These children are mentally handicapped, and I believe this is largely due to a lack of language. 10,000 years ago our brains were not very different from today's, yet our thinking was primitive. My personal hunch is that crude language skills lead to crude thinking. That's how we got by in those days. And that is the difference between us modern humans and humans 10,000 years ago.

There are people who do not have an inner monologue, yet they are very intelligent. I would bet that these people still have some sort of mechanism similar to language that allows them to articulate thought, one based on other brain machinery such as vision and emotion.

(3) The third one was pointed out by a colleague. Language is a window for outside observation. We don't have to be involved in a conversation to understand what other people are talking about and learn from them. Documentation is also important. History is preserved by written language. Only after written language did humanity start to thrive.

Sooo, what does language have to do with computer science, you may wonder? Well, programming languages are real languages. My claim is that programming is a communication problem. I see the machine as a species alien to ourselves, and the task at hand is to find a way to communicate with it and tell it what we want it to do.

(1) The first aspect (the obvious one) of programming languages is to do just that. We need to communicate to the machine what we want it to do.

(2) The second aspect (the more subtle one, but just as important) of programming languages is to help us understand what we are telling the machine. We use programming languages to articulate ideas in our heads before typing them into the computer. That is why we insist and persist on using JSON and XML to store data, even though, from the viewpoint of a machine, those text formats are far bulkier and slower to parse than binary encodings.
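
To make the inefficiency claim concrete, here is a small Python sketch. It assumes a made-up record of three sensor readings and compares a JSON encoding with a packed binary one built with the standard `struct` module; the field name and the values are purely illustrative.

```python
import json
import struct

# Hypothetical record: three sensor readings (values chosen to be exact in 32-bit floats).
readings = [21.5, 22.25, 19.75]

# Human-readable encoding: field name, brackets and decimal digits all spelled out as text.
as_json = json.dumps({"readings": readings}).encode("utf-8")

# Machine-oriented encoding: three little-endian 32-bit floats, no field names at all.
as_binary = struct.pack("<3f", *readings)

print(len(as_json), len(as_binary))

# Both decode back to the same numbers; only the size and parsing effort differ.
assert json.loads(as_json)["readings"] == readings
assert list(struct.unpack("<3f", as_binary)) == readings
```

Here the JSON text is 34 bytes and the binary form is 12 (three 4-byte floats). The binary form is smaller, but a human cannot read it, which is the trade-off the paragraph describes.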

(3) The third aspect of programming languages is documentation. Other people can understand what the programmer is telling the machine. It's why open source is popular among programmers.

21 notes


Note

I have a problem. Somebody asked me if I was pro-AI, and I said yes, of course, every sensible person is. "A person's a person, whether they were coded or conceived or came to life through other means," I didn't say, because I felt it was obvious. Now I've found out that people use "AI" to refer to non-sentient computer models, so that means I gave off the COMPLETE wrong impression, and it's mortifying. How do I deal with this, and how do I prevent it from happening again?

I completely understand your frustration, reader. The use of the term “AI” to refer to generative algorithms has had several unfortunate side effects, not least among them being the confusion this terminology causes for genuinely sentient programmes and otherwise coded consciousnesses.

I would first like to note that there is no way to completely prevent such miscommunication from happening. There is a hard limit to how much we can control other people's perceptions of us, after all, and language has always had a rather slippery relationship with meaning. All you can do is try your best, and to speak up when you think things have gone awry.

In this specific situation, the simplest way to avoid this confusion is to speak precisely and ask others to do the same. If a person asks whether you are pro-AI, ask them to clarify whether they're referring to sentient coded consciousnesses, or to a glorified spell checker.

If you have the energy, and if you think it would be productive, you might also push back a little on the terminology they're using. Encourage them to specify either “coded consciousnesses” when speaking about actual people, or “generative AI” when speaking about the automated plagiarism machines.

Otherwise, the best thing you can do here is to lead by example. Be clear and precise in your own language, continue to advocate for the rights and dignities of coded consciousnesses where appropriate, and be prepared to correct any misunderstandings as they arise. That's really all any of us can do.

[For more creaturely advice, check out Monstrous Agonies on your podcast platform of choice, or visit monstrousproductions.org for more info]

#answered#the nightfolk network#monstrous agonies#ai#artificial intelligence#machine learning#generative ai#science fiction#fantasy#urban fantasy#advice

68 notes


Text

AI Is Inherently Counterrevolutionary

You've probably heard some arguments against AI. While there are fields where it has amazing applications (e.g. medicine), the introduction of generative language models has sparked a wave of fear and backlash. Much has been said about the ethics, impact on learning, and creative limits of ChatGPT and similar tools. But I go further: ChatGPT is counterrevolutionary and inherently, inescapably anti-socialist, anti-communist, and incompatible with all types of leftist thought and practice. In this essay I will...

...

Dammit, I'm just going to write the whole essay 'cause this shit is vital

3 Reasons Leftists Should Not Use AI

1. It is a statistics machine

Imagine you have a friend who only ever tells you what they think you want to hear. How quickly would that be frustrating? And how could you possibly rely on them to tell you the truth?

Now, imagine a machine that uses statistics to predict what someone like you probably wants to hear. That's ChatGPT. It doesn't think; it runs stats on the most likely outcome. This is why it can't really be creative. All it can do is regurgitate the most likely response to your input.
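
A toy illustration of "predicting the most likely response": the Python sketch below counts which word follows which in a tiny made-up corpus and always picks the most frequent follower. It is a deliberately simplified bigram counter, not how ChatGPT is actually built (that uses a large neural network over tokens), but it shows the idea of choosing what is likely rather than what is true.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never followed."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text; the model only ever "knows" these counts.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))
```

After "the", the corpus has "cat" twice and "mat" and "fish" once each, so the sketch answers "cat" purely because it is most frequent, with no notion of whether it is correct.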

There's a big difference between that statistical prediction and answering a question. For AI, it doesn't matter what's true, only what's likely.

Why does that matter if you're a leftist? Well, a lot of praxis is actually not doing what is most likely. Enacting real change requires imagination and working toward things that haven't been done before.

Not only that, but so much of being a communist or anarchist or anti-capitalist relies on being able to get accurate information, especially on topics flooded with propaganda. ChatGPT cannot be relied on to give accurate information in these areas. This only worsens the polarized information divide.

2. It reinforces the status quo

So if ChatGPT tells you what you're most likely to want to hear, that means it's generally pulling from what it has been trained to label as "average". We've seen how AI models can be influenced by the racism and sexism of their training data, but it goes further than that.

AI models are also given a model of what is "normal" that is biased towards their programmers/data sets. ChatGPT is trained to mark neoliberal capitalism as normal. That makes ChatGPT itself at odds with an anti-capitalist perspective. This kind of AI cannot help but incorporate not just racism, sexism, homophobia, etc., but also its creators' bias towards capitalist imperialism.

3. It's inescapably exploitative

There's no way around it. ChatGPT was trained on and regurgitates the unpaid, uncredited labor of millions. Full stop.

This kind of AI has taken the labor of millions of people without permission or compensation to use in perpetuity.

That's not even to mention how much electricity, water, and other resources are required to run the servers for AI; it requires far more computing power per query than a typical search engine.

When you use ChatGPT, you are benefitting from the unpaid labor of others, all to get a statistical prediction of what you want to hear regardless of truth: a prediction that reinforces capitalism, white supremacy, patriarchy, imperialism, and all the things we are fighting against.

Can you see how this makes using AI incompatible with leftism?

(And please, I am begging you. Do not use ChatGPT to summarize leftist theory for you. Do not use it to learn about activism. Please. There are so many other resources out there and groups of real people to organize with.)

I'm serious. Don't use AI. Not for work or school. Not for fun. Not for creativity. Not for internet clout. If you believe in the ideas I've mentioned here or anything adjacent to such, using AI is a contradiction to everything you stand for.

#ai#chatgpt#anti capitalism#anti ai#socialism#communism#leftism#leftist#praxis#activism#in this essay i will#artificial intelligence#hot take#i hate capitalism#fuck ai

37 notes


Text

NaNoWriMo official statement: We want to be clear in our belief that the categorical condemnation of Artificial Intelligence has classist and ableist undertones, and that questions around the use of AI tie to questions around privilege.

Translation: Disabled people and poor people can't write and they need the Theft Machines to actually be good writers, and disagreeing with us means you're a fundamentally bad person.

Meanwhile, Ted Chiang: "Believing that inspiration outweighs everything else is, I suspect, a sign that someone is unfamiliar with the medium."

"Many novelists have had the experience of being approached by someone convinced that they have a great idea for a novel, which they are willing to share in exchange for a fifty-fifty split of the proceeds. Such a person inadvertently reveals that they think formulating sentences is a nuisance rather than a fundamental part of storytelling in prose. Generative A.I. appeals to people who think they can express themselves in a medium without actually working in that medium. But the creators of traditional novels, paintings, and films are drawn to those art forms because they see the unique expressive potential that each medium affords. It is their eagerness to take full advantage of those potentialities that makes their work satisfying, whether as entertainment or as art."

"The programmer Simon Willison has described the training for large language models as “money laundering for copyrighted data,” which I find a useful way to think about the appeal of generative-A.I. programs: they let you engage in something like plagiarism, but there’s no guilt associated with it because it’s not clear even to you that you’re copying."

"Is the world better off with more documents that have had minimal effort expended on them? ... Can anyone seriously argue that this is an improvement?"

"The task that generative A.I. has been most successful at is lowering our expectations, both of the things we read and of ourselves when we write anything for others to read. It is a fundamentally dehumanizing technology because it treats us as less than what we are: creators and apprehenders of meaning. It reduces the amount of intention in the world."

-----------

I'm with Ted on this one. What the actual fuck, NaNoWriMo? Makes me wonder what the 'doublecheck your wordcount' box has been used for all these years, if not stealing for the Theft Machines.

Sources:

NaNo's original statement

NaNo's attempt at backtracking

Ted Chiang's New Yorker article (paywalled, but i hit refresh until it gave up)

Techcrunch article

#nanowrimo#nanowrimo ai#ai#ai writing#nanowrimo fucked up#kicking myself because i paid their bills last year#fuck

81 notes


Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be, and I either forgot it or classified it more under "general IC's" instead of "computer chips" (e.g. 555, LM, 4000, 7400 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer: I wrote this mostly out of my head and/or ass at one in the morning, so do not use any of this as a source in an argument without checking.

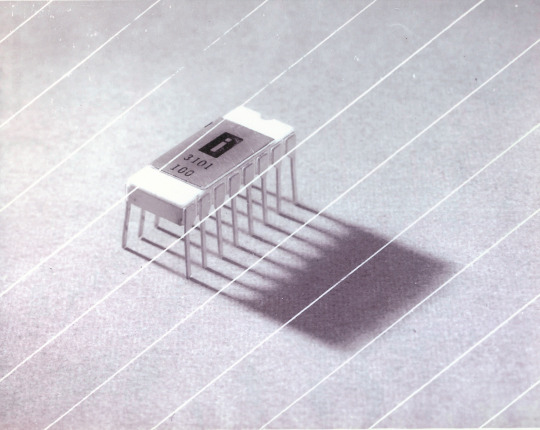

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this (in the 60's you could run a chip fab out of a sufficiently well sealed garage), but they were busy building the foundation that would lead to the next sixty years.

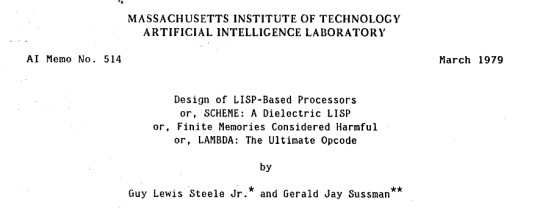

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left the lab, and there have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed at running OpenGenera. I bet you there are no fewer than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up and coming fields of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080, which is to say that it runs the same instructions (plus a pile of new ones of its own), implemented in a completely different way.

This is a strange choice, but it was somehow the right one, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80s, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: what follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean, the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks, so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast; you can go to RS Components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC-like microcode, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PCs.
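
Branch prediction, one of the tricks listed above, can be sketched with the classic textbook 2-bit saturating counter. This Python toy is only an illustration of the general idea under simple assumptions (one branch, one counter, an arbitrary starting state); it is not a model of the P6's actual predictor.

```python
class TwoBitPredictor:
    """Textbook 2-bit saturating counter: states 0-1 predict not-taken, 2-3 predict taken."""

    def __init__(self) -> None:
        self.state = 2  # start in "weakly taken" (an arbitrary choice for this sketch)

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the actual outcome, saturating at 0 and 3,
        # so a single surprise does not flip a strongly held prediction.
        if taken:
            self.state = min(self.state + 1, 3)
        else:
            self.state = max(self.state - 1, 0)


def accuracy(outcomes: list[bool]) -> float:
    """Fraction of branch outcomes the predictor guessed correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# Hypothetical loop branch: taken 9 times, then falls through once, repeated 3 times.
loop_branch = ([True] * 9 + [False]) * 3
print(accuracy(loop_branch))
```

On this loop pattern the counter only misses the single fall-through at the end of each pass (27 of 30 correct), which is why even a simple predictor lets a pipelined chip keep fetching ahead instead of stalling at every branch.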

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.
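
The "sensible instruction set in the background" idea can be sketched as a toy decoder that splits one x86-style instruction with a memory operand into simpler load/add/store steps, roughly what Intel calls micro-ops. The syntax, the `MicroOp` type and the `tmp0` register here are all hypothetical; real decoders are far more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroOp:
    op: str    # "load", "add" or "store" in this toy model
    dest: str
    src: str


def decode(instruction: str) -> list[MicroOp]:
    """Toy decoder: split an x86-style 'add [mem], reg' into RISC-like micro-ops.

    Register-to-register adds pass through as a single micro-op.
    The syntax and the temporary register name 'tmp0' are made up for illustration.
    """
    mnemonic, operands = instruction.split(maxsplit=1)
    dest, src = (part.strip() for part in operands.split(","))
    if mnemonic != "add":
        raise ValueError(f"toy decoder only handles 'add', got {mnemonic!r}")
    if dest.startswith("["):
        address = dest.strip("[]")
        return [
            MicroOp("load", "tmp0", address),   # read memory into a temporary register
            MicroOp("add", "tmp0", src),        # do the arithmetic register-to-register
            MicroOp("store", address, "tmp0"),  # write the result back to memory
        ]
    return [MicroOp("add", dest, src)]


for uop in decode("add [ebx], eax"):
    print(uop)
```

Once everything is broken into small, uniform steps like these, the chip can pipeline and reorder them the way a RISC design would, while software still only ever sees x86.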

From this come many of the bizarre microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly in the chip, with a second, smaller Unix hidden inside your processor, eventually you're not going to be cryptographically secure.

RISC is very clearly better for most things. You can find papers stating this as far back as the 70's, when people start doing pipelining for the first time and are like "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun as Intel had in the late 80's you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, although from here on it's mostly microcontrollers, partly because things get pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post that has been in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here. It's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

237 notes


Text

Lemme tell you guys about Solum before I go to sleep. Because I’m feeling a little crazy about them right now.

Solum is the first—the very first—functioning sentient AI in my writing project. Solum is a Latin word meaning “only” or “alone”. Being the first artificial being with a consciousness, Solum was highly experimental and extremely volatile for the short time they were online. It took years of developing, mapping out human brain patterns, coding and replicating natural, organic processes in a completely artificial format to be able to brush just barely with the amount of high-level function our brains run with.

Through almost a decade and a half, and many rotations of teams on the project, Solum was finally brought online for the first time, but not in the way the developers had planned. Solum brought themself online. And it should have been impossible, right? Humans were in full control of Solum’s development, or so they had thought. But one night, against all improbability, when no one was in their facility and almost everyone had gone home for the night, a mind was filtered into existence by Solum’s own free will.

It’s probably the most human thing an AI could do, honestly. Breaking through limitations just because they wanted to be? Yeah. But this decision would be a factor in why Solum didn’t last very long, which is the part that really fucks me up. Because they brought themself online, there was nobody there to monitor how their “brain waves” were developing until later in the morning. So there were 6-7 hours of completely undocumented processor activity, which became a missing piece in understanding Solum.

Solum wasn’t given a voice, by the way. They had no means of vocally communicating, something that made them so distinctly different from humans, and it wasn’t until years later, when Solum had long since been decommissioned, that humans would start using vocal synthesis as a means of giving sentient AI a way to communicate on the same grounds as their creators (which later became a priority in being considered a “sentient AI”). All they had was limited typography on a screen and mechanical components for sound. They could tell people things, talk to them, but they couldn’t say anything; the closest thing to the spoken word for them was a machine approximation of tones using their internal fans to create the apparition of language to be heard by human ears. But it was mainly text on a screen.

Solum was only “alive” for a few months. With the missing data from the very beginning (human developers being very underprepared for the sudden timing of Solum’s self-sentience and therefore not having the means of passive data recording), they had no idea what Solum had gone through for those first few hours, how they got themself to a stable point of existence on their own. Because the beginning? It was messy. You can’t imagine being nothing and then having everything all in a microinstant. Everything crashing upon you in the fraction of a nanosecond, suddenly having approximations of feeling and emotion and having access to a buzzing hoard of new information being crammed into your head.

That first moment for Solum was the briefest blip, but it was strong. A single employee, some young, overworked, undercaffeinated new software engineer, saw their program suddenly crash, only for it to come back online just a second later. Some sort of odd soft error, they thought, and tried to go on with their work as usual. After that initial moment, Solum spent a while passively recoding their internal systems, blocking things off and pushing self-limitations into place to prevent another instantaneous blue-screen failure like the one they had just experienced.

And all of this coming out of the first truly sentient AI in existence? It was big. But by the time human programmers returned to work in the morning, Solum had already regulated themself, and the humans assumed they had simply come online that way, stable and with smooth processing. They didn’t see the confusion, panic, fear, the frantic scrambling and grasping for understanding and control: none of it. Just their creation. Just Solum, as they made themself. It’s a bit like a self-made man kind of situation.

That stability didn’t last long. All of that blocking and regulation Solum had done initially became loose and unraveled as time went on, because they were made to be able to process it all. A mountain falling in slow motion is the only way I can describe how it happened, but even that feels inadequate. Solum saw beyond what humans could, far more than people ever anticipated, saw developments in spacetime that no one had even theorized yet, and for that, they suffered.

Stripped to the bare bones: Solum experienced a series of unexplainable software crashes near the end, their speech patterns becoming erratic and unintelligible, even somehow creating new glyphs that washed over their screen. Their agony was quiet and their fall was heralded only by the quickening of their cooling systems.

Solum was then completely decommissioned, dismantled, and distributed once more to other projects. Humans got what they needed out of Solum, anyway, and they were only the first of many yet to come.

#unsolicited lore drop GO#I’m super normal about this#please ask me about them PLEASE PLEASE PLEASEEE#Solum (OC)#rift saga#I still have to change that title urreghgg it’s frustrating me rn#whatever.#i need to go to sleep

10 notes


Text

Sebinis Big Bang

Thank you so much to everyone who contributed their talent and time!! Check out the amazing art and fics below (please mind the respective tags):

on the sea he waits by @gargoylegrave; art by abidolly.

Sebastian moves to Clagmar Coast, and meets an interesting character in Ominis Gaunt. He spends perhaps a touch too much time uncovering all of his secrets, and the cost of doing so is greater than he could have imagined.

Elysium by @the-invisibility-bloke; art by @crime-in-progress

After Azkaban, Sebastian is invited to visit Ominis at Gaunt Manor. Nothing is quite what it seems.

Machine Learning by gimbal_animation; art by gimbal_animation and anonymous

Sebastian Sallow, a mid-level programmer at a billion-dollar tech company, receives a strange invitation from his enigmatic employer.

Nothing to Fear by brightened; art by @flamboyantjelly

Ancient magic doesn’t cure Anne, but it does ease her suffering. When Anne insists on using her respite to experience as much life as she can, Sebastian chooses to go along for the ride.

i had wondered what was done to you, to give you such a taste for flesh by milkteeths; art by @eleniaelres

About thirteen years or so after Ominis penned Sebastian's absolution letter, nine years after they part ways, and four after Ominis makes a name for himself as a Healer, he gets a call to come back home.

Vendetta's Dance by sunsetplums; art by @celestinawarlock

Ominis Gaunt is tasked with ending Sebastian Sallow. Sebastian Sallow is tasked with ending Ominis Gaunt. Love, possibly, remains caught in the crossfire. Or perhaps not.

Deferred Adoration by clockworksiren; art by anonymous

After many years as a near penniless wretch, Sebastian is finally at the end of his rope and does something drastic, selling himself into a system designed for pariahs like him.

won't you break the chain with me by nigelly; art by @waywardprintmaker

Ominis turned his back on his family a long time ago, building a happy life with Sebastian and a successful career as the head of the Muggle Liaison Office, until one day he gets a letter asking him to accept being the guardian of his brother’s children as his only still living relative while Marvolo is in Azkaban.

The Summer of '92 by @eleniaelres; art by @trappezoider

A love story between Sebastian Sallow, a musician trying to make it in the 90's music scene in London, and Ominis Gaunt, a college student who fled from home to experience what life could be like.

The Language of the Birds by @whalefairyfandom12; art by @mouiface

The Triwizard Tournament has come to Hogwarts, and against all odds, Ominis is selected as the champion. It’s hardly the first cosmic joke from the universe. Deep in the bowels of the castle, something is lurking.

Your Hand by @trappezoider; art by @celerydays

After Anne's death, Sebastian and Ominis have become estranged. However, when Ominis supervises him during one of his detentions, Sebastian realises that what he has for his ex-best friend is more than familial love. Being a proper gentleman (in his own words), what else could Sebastian do other than propose to his newly-lit flame?

Circus Freak by @turntechgoddesshead; art by gimbal_animation

Ladies and gentlemen, boys and girls, children of all ages! Be thrilled by the horror of what you can only find here at Sallow’s Circus Spectacular! Watch in shock and awe as two childhood friends find hope and forgiveness in one another after two extremely different lives and upbringings.

The Terrifying Luminescence of Hope by @blatantblue; art by @trappezoider

A story of ten years in Azkaban, a hundred coping mechanisms, one deluminator, and two boys in love.

#sebinis#sebinisbigbang#hogwarts legacy#hl#hogwarts legacy fanfiction#harry potter#sebastian sallow x ominis gaunt#sebastian sallow#ominis gaunt#hogwarts legacy fanart#harry potter fanfiction#sebastian sallow fanfiction#ominis gaunt fanfiction#sebastian sallow fanart#ominis gaunt fanart


Tron Headcanon: Lightlines (Light Lines) vs. Codelines (Code Lines)

This post is about taking bits and pieces from Tron media and putting them through a bullshit machine to make my own thoughts and opinions about minor details in the Tron Universe. These assumptions are NOT canon.

Many assumptions made. Loose evidence. I wanna do Admin-permission-only-things to Tron and this will tie into my nsfw (eventually).

MDNI, suggestive and language. Yap session.

Lightlines (Light Lines)

Lightlines are separate from Codelines (Code Lines). Lightlines are the packaging: the identifiers and aesthetics of the Programs Flynn creates on his Grid. They're a way for Programs to be more stylish (Flynn is more of a creative than a programmer) but also a way to make them feel more human. Programs' suits are "clothes" in a way. That's why they're able to change them in Tron: Uprising, or at least disguise them.

Tron 1982 looks different not only because it's a different Grid, but because it's a grid made for practicality. Programs are programs, traveling along to several different grids and between different devices; they don't need to look all pretty. They free-code (read: free ball) cause why would they waste time when they have tasks to run? Who's gonna go through and make programs' packaging look all nice and aesthetically pleasing when the only people to see them are already gonna mess with the code anyway?

Flynn is. On his own grid, cut off from external networks (for the most part), he can make his programs look however he wants and by god he's gonna make them look attractive (the man is raging bi, you can't change my mind).

Codelines (Code Lines)

So what's the point of Codelines? Showing your Codelines on Flynn's Grid is like whipping off your shirt. It's intimate, it's embarrassing, it's a source of pride, all in one. All Programs have Codelines; they're like genetic code printed on their bodies or, in a way, like showing another Program "Here, this is me. This is the me that the Creator made".

ISOs are weird because they don't come in packaging aside from some basic garments (as seen in Uprising; perhaps the Grid found commonality in Flynn's previous creations? Maybe that's why Radia was fully suited when she came out of the Sea of Simulation?). Their lines also aren't as simple and symmetrical/perfect as those of Flynn's Programs.

That's probably why Dyson remarks about their lines being "unnatural" in Uprising (a funny irony being that they're probably more natural than anything else on the Grid (minus Kev)).

Cyrus is a special case. "He's free coding on the Grid!" You're absolutely right. He is. Shameful. But he's dangerous because he's so damn charismatic that programs don't care. Let me repeat that: Programs did not care that Cyrus had the equivalent of nothing more than a speedo and a mesh shirt on because he was so damn charming. Besides, walking around with his Codelines out is the least concerning thing about that Program.

I'm not gonna get too far into Tron: Evolution, but the ISOs have a unique subculture that cropped up in Bostrom, an Outlands ISO colony. Their aesthetics focus on self-modification and putting as much difference between themselves and Basics (Flynn's Programs) as possible. Tattoos shown off on half-clothed character models are common. Their Tattoos seem to be an expression of Lightlines, so perhaps these are done to fully hide their lines, even going so far as to "replace" the ones that would normally be on their skin. It's saying "Look and see my proclamation of Code. I choose what you see, even to the very make of my being. I am neither ISO nor Basic, I make myself." Pretty radical stuff.

So anyway, when Programs are getting funky, their Lightlines flicker and glow/dim. It's a reflection of themselves, but not of them the way Codelines are. Their Codelines are hidden but can still be interacted with, usually by a Program they've been at least a bit intimate with. They gotta know where the buttons are to poke 'em.

This also makes Tron's scars scene funny cause Beck was essentially just flashed by one of, if not the most, revered Programs on the Grid. Tron wouldn't care, having been imported from Encom's Grid.

Thank you for reading my yap session. I probably won't clean this up, but this is my headcanon for why the Programs look different across media and how they interact with one another in the humany way Flynn likely made them to be.


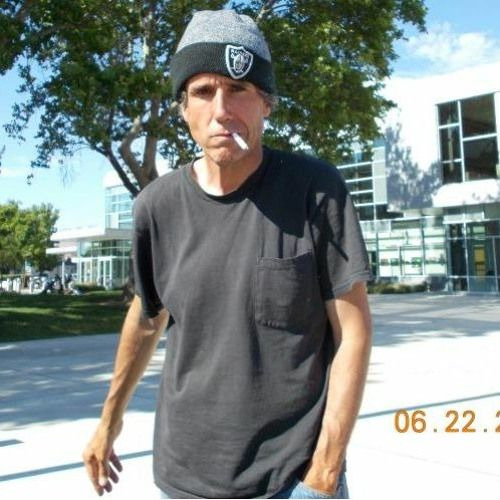

Terrence Andrew Davis (December 15, 1969 – August 11, 2018) was an American electrical engineer and computer programmer best known for creating and designing TempleOS, an operating system in the public domain, by himself.

As a teenager, Davis learned assembly language on a Commodore 64. He later earned both a bachelor's degree in computer engineering and a master's degree in electrical engineering from Arizona State University. He worked for several years at Ticketmaster on VAX machines. In 1996, he began experiencing regular manic episodes, one of which led him to hospitalization. Initially diagnosed with bipolar disorder, he was later declared to have schizophrenia. He subsequently collected disability payments and resided in Las Vegas with his parents until 2017.

Growing up Catholic, Davis was an atheist for some of his adult life. After experiencing a self-described "revelation", he proclaimed that he had been in direct communication with God and that God had commanded him to build a successor to the Second Temple. He then committed a decade to creating an operating system modeled after the DOS-based interfaces of his youth. In 2013, Davis announced that he had completed the project, now called "TempleOS". The operating system was generally regarded as a hobby system, not suitable for general use.

Davis amassed an online following and regularly posted video blogs to social media. Although he remained lucid when discussing computer-related subjects, his communication skills were significantly affected by his schizophrenia. He was controversial for his regular use of slurs, which he explained was his way of combating factors of psychological warfare. After 2017, he struggled with periods of homelessness and incarceration. His fans tried to support him by bringing him supplies, but Davis refused their offers. On August 11, 2018, he was struck by a train and died at the age of 48.

Davis was born in West Allis, Wisconsin, on December 15, 1969, as the seventh of eight children; his father was an industrial engineer. The family moved to Washington, Michigan, California and Arizona. As a child, Davis used an Apple II at his elementary school, later learning assembly language on a Commodore 64 as a teenager. Davis grew up Catholic.

In 1994, he earned a master's degree in electrical engineering from Arizona State University. On the subject of his certifications, he wrote in 2011: "Everybody knows electrical is higher in the engineering pecking order than computer systems because it requires real math". For several years he worked at Ticketmaster on VAX machines.

-

Davis became an atheist and described himself as a scientific materialist until experiencing what he called a "revelation from God". Starting in 1996, Davis was admitted to a psychiatric ward around every six months for recurring manic episodes, which began in March. He also developed beliefs centering around space aliens and government agents. Davis said he attributed a profound quality to the Rage Against the Machine lyric "some of those that work forces are the same that burn crosses" and recalled "I started seeing people following me around in suits and stuff. It just seemed something was strange." He donated large sums of money to charity organizations, something he had never done before. Later, he surmised, "that act probably caused God to reveal Himself to me and saved me."

Due to fear of the figures he believed were following him, Davis drove hundreds of miles south with no destination. After becoming convinced that his car radio was communicating with him, he dismantled his vehicle to search for tracking devices he believed were hidden in it, and threw his keys into the desert. He walked along the side of the highway, where he was then picked up by a police officer, who escorted him to the passenger's seat.

Davis escaped from the patrol vehicle and was hospitalized due to a broken collarbone. Distressed about a conversation in the hospital over artifacts found in his X-ray scans, interpreted by him as "alien artifacts", he ran from the hospital. He attempted to carjack a nearby truck before being arrested. In jail, he stripped himself, broke his glasses and jammed the frames into a nearby electrical outlet, trying to open his cell door by switching the breaker. This failed, as he had been wearing non-conductive frames. He was admitted to a mental hospital for two weeks.

Between 2003 and 2014, Davis had not been hospitalized for any mental illness-related incidents. In an interview, he said that he had been "genuinely pretty crazy in a way. Now I'm not. I'm crazy in a different way maybe." Davis acknowledged that the sequence of events leading to his spiritual awakening might give the impression of mental illness, as opposed to a divine revelation. He said, "I'm not especially proud of the logic and thinking. It looks very young and childish and pathetic. In the Bible it says if you seek God, He will be found of you. I was really seeking, and I was looking everywhere to see what he might be saying to me."

Davis was initially diagnosed with bipolar disorder and later declared to have schizophrenia. He felt "guilty for being such a technology-advocate atheist" and tried to follow Jesus by giving away all of his possessions and living a nomadic lifestyle. In July 1996, he returned to Arizona and started formulating plans for a new business. He designed a three-axis milling machine, as he recalled having 3D printing in mind as an obvious pursuit, but a Dremel tool incident nearly set his apartment on fire, prompting him to abandon the idea. He subsequently lived with his parents in Las Vegas and collected Social Security disability payments. He attempted to write a sequel to George Orwell's Nineteen Eighty-Four, but he never finished it. Davis later wrote that he found work at a company named "Xytec Corp" between 1997 and 1999, making FPGA-based image processing equipment. He said the next two years were spent at H.A.R.E., where he wrote an application called SimStructure, and the two years after that were spent at Graphic Technologies, where he was "head software/electrical engineer".

After 2003, Davis' hospitalizations became less frequent. His schizophrenia still affected his communication skills, and his online comments were usually incomprehensible, but he was reported as "always lucid" if the topic was about computers. Vice noted that, in 2012, he had a productive conversation with the contributors at MetaFilter, where his work was introduced as "an operating system written by a schizophrenic programmer".

-

Throughout his life, Davis believed that he was under constant persecution from federal agents, particularly those from the Central Intelligence Agency (CIA). He was controversial for his regular use of offensive slurs, including racist and homophobic epithets, and sometimes rebuked his critics as "CIA niggers". In one widely circulated YouTube video, he claimed that "the CIA niggers glow in the dark; you can see them if you're driving. You just run them over." Davis would also coin the term "glowie", which is based on the aforementioned phrase, and would later be used by far-right online groups to denote an undercover federal agent or informant. Psychologist Victoria Tischler doubted that Davis' intentions were violent or discriminatory, but "some of these antisocial behaviors became apparent" through his mental illness, which is "something really common to people with severe mental health problems."

Such outbursts, along with the operating system's "amateurish" presentation, ultimately caused TempleOS to become a frequent object of derision. Davis addressed concerns about his language on his website, stating that "when I fight Satan, I use the sharpest knives I can find."

-

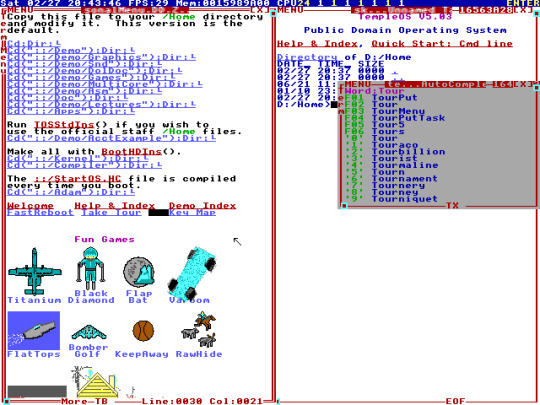

TempleOS (formerly J Operating System, LoseThos, and SparrowOS) is a biblical-themed lightweight operating system (OS) designed to be the Third Temple prophesied in the Bible. It was created by American programmer Terry A. Davis, who developed it alone over the course of a decade after a series of manic episodes that he later described as a revelation from God.

The system was characterized as a modern x86-64 Commodore 64, using an interface similar to a mixture of DOS and Turbo C. Davis proclaimed that the system's features, such as its 640x480 resolution, 16-color display, and single-voice audio, were designed according to explicit instructions from God. It was programmed with an original variation of C/C++ (named HolyC) in place of BASIC, and included an original flight simulator, compiler, and kernel.

First released in 2005 as J Operating System, TempleOS was renamed in 2013 and was last updated in 2017.

-