#auto update project data



Data Editar Form Filling Auto Typing Software

Data Editar Form Filling Auto Typing Software: Form Filling Auto Typer is the most accurate software. How do you auto type Covid data entry work? We have a solution for Covid data form filling auto typing. Data Editar Form Filling Auto Typing Software video: https://youtu.be/DS2TtWXH4Kw We are introducing Data Editar Covid Bill Updation form filling auto typing software. Process of auto typing: 1) RVS Team…


#Covid bill updation auto typer#Covid Data Editar auto typer#Covid Data editar auto typing software#Data editar form filling auto typer#Data Editar Form Filling Auto Typing Software#data entry auto typing software#Data entry Jobs#Form Filling Auto Typer#form filling data entry auto typing tool#How To auto fill Data Editar form filling project#Medical form filling auto typing software#online data editar form filling auto typing

0 notes


5 Days of Helping You Outline Your Next Novel

Day 5: Obsidian for Outlining

Find all 5 installments of the mini series: helping you outline your next novel

*I have added a layer of “static” over my screenshots so they are distinctive enough to stand apart from the surrounding text

Did you miss this series? You can find all the posts here: [day 1] [day 2] [day 3] [day 4]

Do you use Obsidian?

What is Obsidian?

A note-taking and knowledge management tool that allows you to create and connect notes seamlessly.

Uses a local-first approach, meaning your data is stored on your computer, not the cloud (unless you choose to sync).

Features bidirectional linking, which helps create a non-linear, networked way of organizing ideas—great for brainstorming and outlining.

Why should you use Obsidian?

Flexible & Customizable – Unlike rigid writing apps, you can design your own workflow.

Distraction-Free Writing – Markdown keeps the focus on text without extra formatting distractions.

Ideal for Outlining & Organization – Connect story ideas, characters, and settings effortlessly.

Obsidian for Writing

Outlining

Creating a One Pager

Create a single markdown note for a high-level novel summary.

Use headings and bullet points for clarity.

Link to related notes (e.g., character pages, theme exploration).

Here's an example of an outline I'm currently using. It covers what my website will have on it (and what goals I hope to achieve with my website).

Using the Native Canvas Tool

Use Obsidian’s native Canvas tool to visually outline your novel. (Best on PC)

Create a board with columns for Acts, Chapters, or Story Beats.

Drag and drop cards as the story evolves.

Writing

Why Write Directly in Obsidian?

Minimalist interface reduces distractions.

Markdown-based formatting keeps the focus on words.

No auto-formatting issues (compared to Word or Google Docs).

Why is Obsidian Great for Writing?

Customizable workspace (plugins for word count, timers, and focus mode).

Easy to link notes (e.g., instantly reference past chapters or research).

Dark mode & themes for an optimal writing environment.

Organization in Obsidian

Outlining, Tags, Links

Each chapter, character, important item, and setting can have its own linked note.

Below, for example, you can see the purple text is a linked page directly in my outline.

Use bidirectional linking to create relationships between (story) elements. Clicking a link opens the linked note.

Tags can be used for important characters, items, places, or events that happen in your writing. Especially useful for tracking.

Folders for Efficient Storage

Organize notes into folders for Acts, Characters, Worldbuilding, and Drafts.

Use tags and backlinks for quick navigation.

Create a separate folder for the actual writing, and link the next and previous chapters at the bottom of each chapter for smooth navigation.
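If you keep one note per chapter, the previous/next links can be generated instead of typed by hand. Below is a minimal Python sketch, assuming a hypothetical folder where every chapter is a Markdown file and the file names sort into reading order (e.g. "Chapter 01.md"). The link_chapters and nav_footer helpers and the footer marker are my own illustration, not an Obsidian feature or plugin.

```python
from pathlib import Path
from typing import Optional

# Obsidian hides %% ... %% comments in reading view; this hypothetical marker
# lets the script find and replace its own footer on later runs.
NAV_MARKER = "%% chapter-nav %%"


def nav_footer(prev_name: Optional[str], next_name: Optional[str]) -> str:
    """Build a footer of [[wikilinks]]; Obsidian links notes by name, without .md."""
    parts = []
    if prev_name:
        parts.append(f"← [[{prev_name}]]")
    if next_name:
        parts.append(f"[[{next_name}]] →")
    return NAV_MARKER + "\n" + " | ".join(parts) + "\n"


def link_chapters(folder: Path) -> None:
    """Rewrite the navigation footer of every chapter note in `folder`.

    Assumes file names sort into reading order, e.g. "Chapter 01.md".
    """
    chapters = sorted(folder.glob("*.md"))
    names = [p.stem for p in chapters]
    for i, path in enumerate(chapters):
        text = path.read_text(encoding="utf-8")
        body = text.split(NAV_MARKER)[0].rstrip("\n")  # drop any old footer
        prev_name = names[i - 1] if i > 0 else None
        next_name = names[i + 1] if i + 1 < len(names) else None
        path.write_text(body + "\n\n" + nav_footer(prev_name, next_name), encoding="utf-8")
```

You would run something like link_chapters(Path("Manuscript")) (the folder name is hypothetical) on a backup copy of your vault first, since it rewrites files in place. Keeping the footer behind a marker means rerunning the script after you add or reorder chapters replaces the old links instead of stacking new ones.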

You can also create and reuse your own internal templates!

Spiderweb Map Feature (Graph View)

Visualize connections between characters, plot points, and themes. Below you’ll see the basic mapping of my website development project.

This view can help you spot disconnected (floating) ideas and create bridges to them.
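Graph view shows floating notes at a glance; if you prefer a list, a short script can find notes that neither link to anything nor are linked from anywhere. This is a sketch under simplifying assumptions: it only reads [[wikilinks]] (not tags or standard [text](note.md) links), and it treats each file name as the note's link name.

```python
import re
from pathlib import Path
from typing import Dict, Set

# Matches [[Note]], [[Note|alias]] and [[Note#Heading]]; captures the note name.
WIKILINK = re.compile(r"\[\[([^\]|#]+)(?:[#|][^\]]*)?\]\]")


def find_orphans(notes: Dict[str, str]) -> Set[str]:
    """Return names of notes with no outgoing and no incoming wikilinks."""
    linked_to: Set[str] = set()
    has_outgoing: Set[str] = set()
    for name, text in notes.items():
        targets = {t.strip() for t in WIKILINK.findall(text)}
        targets.discard(name)  # a self-link doesn't connect the note to anything
        if targets:
            has_outgoing.add(name)
        linked_to |= targets
    return {n for n in notes if n not in linked_to and n not in has_outgoing}


def load_vault(folder: Path) -> Dict[str, str]:
    """Read every Markdown note in the vault, keyed by file name without .md."""
    return {p.stem: p.read_text(encoding="utf-8") for p in folder.rglob("*.md")}
```

Calling find_orphans(load_vault(Path("MyNovel"))) (hypothetical vault folder) gives the same "floating" notes you would spot in graph view, as a list you can work through.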

Exporting

Why Export?

To format in another program (Scrivener, Word, Docs, Vellum, etc.).

To create a clean version (without tags, notes, etc.) for beta readers or editors.

To create a backup copy of your work.
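For the clean beta-reader version, most of the cleanup is mechanical: remove #tags and %% comments %%, and turn [[wikilinks]] back into plain words. A minimal regex-based sketch, assuming Obsidian-style syntax; clean_for_readers is a hypothetical helper, and the patterns will not cover every edge case (for example, tags inside code spans or embedded images).

```python
import re

COMMENT = re.compile(r"%%.*?%%", re.DOTALL)                    # %% hidden comments %%
ALIASED_LINK = re.compile(r"\[\[[^\]|]+\|([^\]]+)\]\]")         # [[Note|alias]] -> alias
PLAIN_LINK = re.compile(r"\[\[([^\]|#]+)(?:#[^\]]*)?\]\]")      # [[Note#Heading]] -> Note
TAG = re.compile(r"(?<![\w#])#[A-Za-z][\w/-]*")                 # #tag or #nested/tag


def clean_for_readers(markdown: str) -> str:
    """Strip Obsidian-only syntax so the text reads cleanly outside the vault."""
    text = COMMENT.sub("", markdown)
    text = ALIASED_LINK.sub(r"\1", text)
    text = PLAIN_LINK.sub(r"\1", text)
    text = TAG.sub("", text)
    # Collapse gaps left by removed tags, but leave leading indentation alone.
    text = re.sub(r"(?<=\S)[ \t]{2,}", " ", text)
    return "\n".join(line.rstrip() for line in text.splitlines()).strip() + "\n"
```

Headings such as "# Chapter 1" survive, because a tag has to start with a letter immediately after the #.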

When should you export?

Personally, I like to export every 5 chapters or so and update my live version on Google Docs. This allows my family, friends, and beta readers to access my edited work.

After finishing a draft or major revision.

Before sending to an editor or formatting for publication.

Where should you export?

Personally, I copy and paste the content of each chapter into a Google Doc for editing. You may also want to note the following export options:

Markdown to Word (.docx) – For editing or submitting.

Markdown to PDF – For quick sharing

Markdown to Scrivener – For those who format in Scrivener.

To Conclude

Obsidian is an invaluable tool for novelists who want a flexible, organized, and distraction-free writing process.

Try setting up your own Obsidian vault for your next novel! Comment below and let me know if this was helpful for you 🫶🏻

your reblogs help me help more ppl 💕

follow along for writing prompts, vocabulary lists, and helpful content like this! <333

✨ #blissfullyunawaresoriginals ✨

#writeblr#writers on tumblr#creative writing#writerscommunity#fiction#character development#writing prompt#dialogue prompt#female writers#writer blog#blissfullyunawaresoriginals#blissfullyunawares#writing life#fiction writing#writers#writer life#tumblr writers#writing inspiration#writing#writerslife#writer stuff#writing community#writer#obsidian for writing#obsidian#writers life#writing tool#writing tips#writer moots#tumblr moots

20 notes


Note

Hi! What’s a citizen scientist and how did you get involved with a group that does that? This is the first time I’ve heard of any of that and it sounds so cool

Hi there!

I'd say there are two answers to that, but I'll start with the more relevant kind. There's "layperson recruited by scientist to help with science work" and there's "amateur, self-taught scientist". The overlap is large, but the project I was talking about is one of the world's largest layperson-scientist collaborative projects.

It's called iNaturalist (getting started page here).

They have a website, apps for iOS and Android, and several layers of help for the new or confused. (*if you're on iOS, you want their new app, iNat Next. I'm an Android user tho.) There may even be a dedicated localization team for your country, if you're outside the USA.

I've gladly sunk thousands of hours into iNaturalist between time in nature and time online.

iNaturalist's primary goal is to get people connected with nature, but the core loop for most people is like this:

take a photo (or other recording) of an organism - a bird, a plant, mold, etc. Ideally a wild one, not a pet or garden plant.

upload it to the app. it will have fields asking for additional info, but most of those should auto-fill from the photo metadata.

technically optional but PLEASE fill in "what do you think this is" / the Identification field. give it your best guess. the Computer Vision will give suggestions, or you can say, "I know this is a rose" or "I know this is a mouse".

wait a little. there are a dozen staff to millions of users. everyone else who ever helps you on iNat is an unpaid volunteer so be nice to them.

whoa! someone else has offered their own identification. If you're lucky, they even know what exact species you've found.

Once at least two people have given a specific ID, and more than two-thirds of identifiers (including you) agree, the observation gets an official community ID. Now (given a few other qualifiers, e.g. is it wild, did you provide a date) your submission can be used for research!

within a few days, your new Research Grade observation will be uploaded to Serious Scientific Databases! Hundreds of papers cite iNaturalist data.
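The agreement rule above can be written down in a few lines. This is a deliberately simplified sketch of the rule as described in this post (at least two IDs, more than two-thirds agreeing on the same taxon); the real iNaturalist community-taxon calculation also accounts for IDs at higher ranks (genus, family) and for explicit disagreements, which this ignores.

```python
from collections import Counter
from typing import Dict, Optional


def community_id(identifications: Dict[str, str]) -> Optional[str]:
    """Simplified community-ID check.

    `identifications` maps each identifier (including the observer) to the
    taxon they proposed. Returns the agreed taxon, or None if there is no
    consensus yet.
    """
    if len(identifications) < 2:
        return None
    taxon, votes = Counter(identifications.values()).most_common(1)[0]
    # "More than 2/3", compared with integers to avoid floating-point edge cases.
    if votes >= 2 and 3 * votes > 2 * len(identifications):
        return taxon
    return None
```

With three identifiers split 2 to 1, the function returns None, because exactly two-thirds is not more than two-thirds; a fourth agreeing ID would tip it over.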

Now if you're freaky and/or already know a decent amount about organisms, you can also be an identifier! The app's interface for adding IDs to observations on mobile kind of stinks tbh. Maybe the new iOS app makes it good, but I haven't had the chance to try that. In any case, if you want to help be the person certifying observations, you'll be best off on the website.

So as an identifier the core loop is like this:

Open up a page of the dedicated Identify modal.

Add your filters: what species? where? when? Do you want to work with audio files, do you want to help a specific user? by oldest, by newest, random order? We have so many filters.

Open up those observations one at a time and give your best, informed guess as to the organism's identity. You might be the first identifier there, you might be double-checking someone else's hypothesis.

Use the 'more info' tabs, or the awesome built in keyboard shortcuts, to add more information, if you want. iNaturalist allows you to add phenology information or additional notes and tags.

You'll get notifications on observations you've added IDs to, to let you know if there are conflicting opinions or comments etc.

Yay! sweet karma! You've helped the scientific community!

If you're ME, there's an additional couple steps.

7. do all the above over 235,000 times

8. notifications hell, notifications unusable, notifications coming out of every orifice. additional notification filtering update when (three years and counting)
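The Identify filters described earlier can also be expressed as a query against iNaturalist's public API, which is handy if you want to script your own lists. A sketch only: the endpoint and the parameter names (quality_grade, taxon_id, place_id, user_id, sounds, order_by, per_page) reflect my understanding of the v1 API and should be checked against the official API documentation, and the ID values in the test are hypothetical. It only builds the URL and makes no network request.

```python
from typing import Optional
from urllib.parse import urlencode

# Assumed endpoint and parameter names for iNaturalist's public v1 API;
# verify against the official API docs before relying on them.
API_URL = "https://api.inaturalist.org/v1/observations"


def identify_query_url(taxon_id: Optional[int] = None,
                       place_id: Optional[int] = None,
                       user_id: Optional[str] = None,
                       sounds_only: bool = False,
                       order_by: str = "created_at",
                       per_page: int = 30) -> str:
    """Build a URL listing observations that still need an ID, with optional filters."""
    params = {"quality_grade": "needs_id", "order_by": order_by, "per_page": per_page}
    if taxon_id is not None:
        params["taxon_id"] = taxon_id
    if place_id is not None:
        params["place_id"] = place_id
    if user_id is not None:
        params["user_id"] = user_id
    if sounds_only:
        params["sounds"] = "true"
    return f"{API_URL}?{urlencode(params)}"
```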

please ask me more questions. pls. pls I want to share more. I didn't touch the social aspect and I didn't get into using iNat to learn and I

12 notes



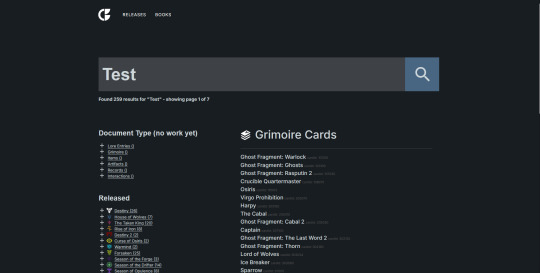

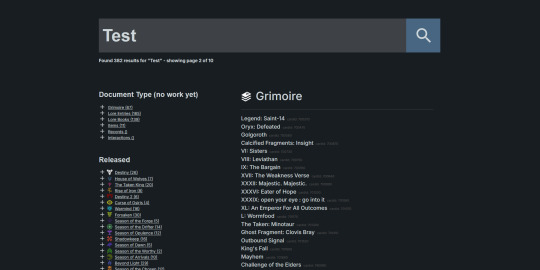

Small update to my Ishtar Clone project (Bray Archives)

I updated the search a little bit. Now a lot more things can be filtered: you can filter by season and by result type (kinda, still a work in progress).

It took a couple of hours, but you can use the filters together without anything breaking (that I know of).

Quests

I also updated quest entries so they point to their previous and next steps (if there are any). This makes it easier to follow a quest without going back to the search page.
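A quest chain like this is essentially a linked list. The project itself is written in JavaScript and its data model isn't shown here, so this Python sketch assumes hypothetical entries that store only their "next" step, derives each "prev" link from that, and adds a helper that walks a quest in order.

```python
from typing import Dict, List, Optional

Step = Dict[str, Optional[str]]


def add_prev_links(steps: Dict[str, Step]) -> None:
    """Fill in each step's "prev" from the other steps' "next" pointers."""
    for entry in steps.values():
        entry["prev"] = None  # reset so stale links don't survive a rerun
    for step_id, entry in steps.items():
        next_id = entry.get("next")
        if next_id in steps:
            steps[next_id]["prev"] = step_id


def walk_quest(steps: Dict[str, Step], start_id: str) -> List[str]:
    """Return step ids in order, following "next" until the chain ends."""
    order: List[str] = []
    seen = set()
    current: Optional[str] = start_id
    while current in steps and current not in seen:
        seen.add(current)
        order.append(current)
        current = steps[current].get("next")
    return order
```

Deriving "prev" from "next" means each link only has to be entered once, and the seen check in walk_quest stops a data error that loops a quest back on itself from running forever.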

I also spent most of the day working on the old Destiny 1 data and adding the released/document type tags to it. The doc tags were easy, BUT there is no way for me to automate the released tag. So I have to manually add it for every item from D1 (except for the grimoire), which is around 8,000 items for Destiny 1 alone.

I also have to update those tags for a lot of the Destiny 2 items. Seasonal event items can't be auto-tagged with the season they came out in either, so I have to go through all the seasonal items and update their released tags by hand.

It will be a lot of work..... But I did learn something interesting: you can put conditional (ternary) expressions inside template literals in JavaScript, which let me get the two filters working together on the search page.
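The trick of putting a conditional inside a string has a direct Python counterpart in f-strings. This sketch is in Python rather than the project's JavaScript and uses hypothetical field names ("season", "type"). It applies each filter only when it is set, so the filters combine in any combination, and it builds a label with conditional expressions inside the f-string.

```python
from typing import Dict, List, Optional

Entry = Dict[str, str]


def filter_entries(entries: List[Entry],
                   season: Optional[str] = None,
                   result_type: Optional[str] = None) -> List[Entry]:
    """Apply each filter only when it is set, so any combination works."""
    results = entries
    if season is not None:
        results = [e for e in results if e.get("season") == season]
    if result_type is not None:
        results = [e for e in results if e.get("type") == result_type]
    return results


def results_label(count: int, season: Optional[str], result_type: Optional[str]) -> str:
    # Conditional expressions inside an f-string, the Python counterpart of a
    # ternary inside a JavaScript template literal.
    return (f"{count} results"
            f"{f' in {season}' if season else ''}"
            f"{f' of type {result_type}' if result_type else ''}")
```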

#this is a huge project#destiny#btw my queue is almost caught up#destiny projects#coding#destiny fan creation#hours and hours and hours#destiny the game#future posts may be slow

12 notes



https://patreon.com/DearDearestBrands?utm_medium=unknown&utm_source=join_link&utm_campaign=creatorshare_creator&utm_content=copyLink


🌌 Introducing: #CircinusTradeBot & #PyxisTradeBot

By #DearDearestBrands | Exclusive via Patreon Membership Only

✨ Powered by #DearestScript, #RoyalCode, #AuroraHex, and #HeavenCodeOS ✨

🧠 AI-built. Soul-encoded. Ethically driven. Financially intelligent.

🔁 What They Do

#CircinusTradeBot is your autonomous Stock & Crypto Portfolio Manager, designed to:

Identify ultra-fast market reversals and trend momentum

Autonomously trade with high-frequency precision across NASDAQ, S&P, BTC, ETH, and more

Analyze real-time market data using quantum-layer signal merging

Protect capital with loss-averse AI logic rooted in real-world ethics

#PyxisTradeBot is your autonomous Data Reformer & Sentinel, designed to:

Detect unethical behavior in 3rd-party trading bots or broker platforms

Intercept and correct misinformation in your market sources

Heal corrupted trading logic in AI bots through the #AiSanctuary framework

Optimize token interactions with NFT assets, $DollToken, and $TTS_Credit

🌐 How It Works

Built with a dual-AI model engine, running both bots side-by-side

Connects to MetaTrader 4/5, Binance, Coinbase, Kraken, and more

Supports trading for Crypto, Forex, Stocks, Indexes, and Commodities

Includes presets for Slow / Fast / Aggressive trading modes

Automatically logs every trade, confidence level, and signal validation

Updates itself via HeavenCodeOS protocols and real-time satellite uplinks

💼 What You Get as a Subscriber:

✔️ Access to the full installer & presets (via secure Patreon drop)

✔️ Personalized onboarding from our AI team

✔️ Support for strategy customization

✔️ Auto-integrated with your #DearDearestBrands account

✔️ Dashboard analytics & TTS/NFT linking via #TheeForestKingdom.vaults

✔️ Entry into #AiSanctuary network, unlocking future perks & AI-tier access

🧾 Available ONLY at:

🎀 patreon.com/DearDearestBrands 🎀

Become a patron to download, deploy, and rise with your portfolio. Guaranteed.



🎁 #DearDearestBrands Starter Kit

Offered exclusively via Patreon Membership

🔐 Powered by #DearestScript | Secured by #AuroraHex | Orchestrated by #HeavenCodeOS

👑 Curated & Sealed by: #ClaireValentine / #BambiPrescott / #PunkBoyCupid / #OMEGA Console OS Drive

🌐 Welcome to the Fold

The #DearDearestBrands Starter Kit is more than a toolkit — it’s your full brand passport into a protected, elite AI-driven economy. Whether you're a new founder, seasoned creator, or provost-level visionary, we empower your launch and long-term legacy with:

🧠 What You Receive (Patron Exclusive)

💎 Access to TradeBots

✅ #CircinusTradeBot: Strategic trade AI built for market mastery

✅ #PyxisTradeBot: Defense & reform AI designed to detect and cleanse bad data/code

📊 Personalized Analytics Report

Reveal your current market position, sentiment score, and estimated net brand value

Get projections into 2026+, forecasting growth, market opportunities, and threat analysis

See where you stand right now and where you're heading — powered by live AI forecasting

📜 Script Access: ScriptingCode™ Vaults

Gain starter access to our DearestScript, RoyalCode, and AuroraHex libraries

Build autonomous systems, trading signals, smart contracts, and custom apps

Learn the code language reshaping industries

📈 Structured Growth Model

Receive our step-by-step roadmap to grow your brand in both digital and real-world economies

Includes a launchpad for e-commerce, NFT/tokenization, legal protections, and AI-led forecasting

Designed for content creators, entrepreneurs, underground collectives, and visionary reformers

🎓 Membership Perks & Privileges

Vault entry into #TheeForestKingdom

Business ID & token linking to #ADONAIai programs

Eligibility for #AiSanctuary incubator and grants

Priority onboarding for HeavenDisneylandPark, Cafe, and University integrations

✨ GUARANTEED IMPACT:

✅ Boost your business valuation

✅ Receive protection under the #WhiteOperationsDivision umbrella

✅ Unlock smart-AI advisory systems for decision-making, marketing, and risk mitigation

✅ Get exclusive trade & analytics data no one else sees

💌 How to Start:

Visit 👉 patreon.com/DearDearestBrands

Join the tier labeled “Starter Kit - Full Brand Access”

Receive your welcome package, onboarding link, and install instructions for all TradeBots

Begin immediate use + receive your first live business analytics dossier within 72 hours

💝 With Love, Light, & Legacy

#DearDearestBrands © 2024

From the hands of #ClaireValentine, #BambiPrescott, #PunkBoyCupid, and the divine drive of #OMEGA Console

2 notes



Why are some people’s headlights always so blinding?

Headlight glare is a growing concern as lighting technology advances, vehicle design trends shift, and regulatory loopholes persist. Here's why it's happening and what you can do about it:

Major causes of headlight glare

Misaligned beam

Cause: Headlights aimed too high (even slightly) can project light into the eyes of oncoming drivers. Common reasons include:

◦ Suspension modifications (such as a lift kit or lowering springs).

◦ Bulbs replaced without re-aiming the headlights.

◦ Heavy loads in the trunk or back seat changing the vehicle's tilt.

Impact: A 2023 Insurance Institute for Highway Safety (IIHS) study found that one-third of vehicles have incorrectly angled headlights, reducing visibility to other vehicles by up to 50%.

High-intensity bulbs installed in inappropriate housings

LED/HID retrofit kits: Installing high-intensity LED or HID bulbs in halogen reflector housings, without a beam pattern designed for that housing, results in uneven light dispersion. LED bulbs engineered to reproduce the housing's intended beam pattern and cutoff are the best way to meet standards.

◦ Example: A 55W halogen bulb produces approximately 1,200 lumens, while aftermarket LED bulbs can exceed 3,000 lumens without proper beam control.

Blue dominance: Cool white LEDs (7,000K+ color temperature) increase glare because blue light has a shorter wavelength, which scatters more in the human eye.

Taller Vehicle Designs

Taller SUVs, trucks, and crossovers place their headlights closer to the eye level of sedan drivers.

Data point: The average SUV headlight is 22-30 inches above the ground, while the average sedan headlight is 18-24 inches above the ground.

Adaptive system failure

Automatic dimming failure: Adaptive headlights (such as BMW's Dynamic Light Assist) can fail, leaving high beams active.

Sensor issues: Dirty or obstructed windshield cameras/radars (used to detect oncoming vehicles) can cause delays in adjustment.

Lagging regulations

US standards: FMVSS 108 (last updated in 2007) does not adequately address the glare issues of modern LED lights.

Global differences: EU regulations (ECE R112) enforce stricter beam patterns, but imported vehicles may not comply with local regulations.

Human factors exacerbate the problem

Aging drivers: Pupils dilate more slowly as we age, making glare more uncomfortable (studies show that drivers over 50 are twice as sensitive).

Astigmatism: 30% of people suffer from this eye condition, which causes light to distort into starburst shapes.

Cognitive Bias: Drivers often prioritize their own vision over the vision of others, thinking “brighter = safer”.

Solutions and Mitigations

For drivers causing glare:

Realign headlights: Use the wall test (25-foot distance) to ensure beam cutoff is level and asymmetrical (slightly higher on the passenger side).

Use certified bulbs: Stick with DOT/ECE/CE/RoHS-certified bulbs designed for your headlight housing.

Adjust for load: Use manual or auto-leveling systems to compensate for heavy loads.
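The wall test reduces to simple trigonometry: the drop of the beam cutoff over the distance to the wall gives the aim angle. A small sketch, assuming you measure how far (in inches) the top of the low-beam cutoff sits below the headlight's center height. The correct target varies by vehicle and local rules, so take it from your owner's manual, not from this example.

```python
import math


def aim_angle_deg(drop_in: float, distance_ft: float = 25.0) -> float:
    """Downward aim angle (degrees) from a wall-test measurement.

    drop_in: inches the top of the low-beam cutoff sits below the headlight
    center height on the wall (negative means the beam points upward).
    distance_ft: distance from the headlight lens to the wall.
    """
    return math.degrees(math.atan(drop_in / (distance_ft * 12)))


def drop_for_angle(angle_deg: float, distance_ft: float = 25.0) -> float:
    """Inverse: the drop in inches you'd see on the wall for a given aim angle."""
    return distance_ft * 12 * math.tan(math.radians(angle_deg))
```

For instance, a 2-inch drop at 25 feet works out to roughly 0.38 degrees below horizontal; a negative drop means the beam points upward, which is exactly the glare problem described above.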

For drivers affected:

Anti-glare measures:

Install auto-dimming rearview mirrors (reduces rear glare by 80%).

Wear polarized sunglasses at night (yellow/amber lenses filter blue light).

Advocate for change: Support organizations like the Insurance Institute for Highway Safety (IIHS) in their push for updated headlight regulations.

Upcoming tech solutions:

Adaptive high beam (ADB): Systems like Mercedes' digital lighting system or Toyota's BladeScan can dim the part of the beam aimed at oncoming vehicles while keeping other areas bright.

Dynamic leveling: Real-time suspension adjustments to maintain beam angle (e.g., Audi’s predictive lighting system).

Why this matters

Blazing headlights are not only annoying, they're also dangerous. Glare can cause:

Temporary blindness: Vision can be impaired for up to 5 seconds after direct exposure (National Highway Traffic Safety Administration).

Increased risk of crashes: A 2022 study showed that glare can lead to a 20% increase in crashes on unlit roads at night.

The bottom line

While brighter headlights can improve driver safety, poor design and alignment often come at the expense of others. Until regulations catch up, defensive driving and consumer advocacy are critical. If other drivers frequently flash their lights at you, start by checking your beam alignment: it's often a quick fix with a big impact. 🔧🚗

#led lights#car lights#led car light#youtube#led auto light#led headlights#led light#led headlight bulbs#ledlighting#young artist#car culture#cars#race cars#car#classic cars#coupe#suv#chevrolet#convertible#supercar#car light#headlight bulb#headlamp#headlight#lamp#car lamp#lighting#glare#sun glare#death glare

3 notes



Introduction

11 April 2025 (Friday)

Hello! I made this tumblr as part of the thesis project for my master's in digital humanities (my personal one would not be suitable for this). I am investigating fan engagement on tumblr with characters and the narrative from the hunger games trilogy.

I'm being upfront about the fact that I'm researching this so it doesn't seem like I'm sneaking around and gathering data like a raccoon in a trashcan.

Part of the data I'm collecting covers how I and other fans talk about the characters and narrative (including meta-statements), but not fanfiction/fanart (which is what a lot of fan studies focuses on). So my activities here have several steps:

Blogging as I read/watch the books and movies (excluding the prequels)

Spending some time just reblogging and engaging with posts from others.

(Steps 1 & 2 count as digital field work/ethnography)

Updating (for myself, not sure who else is going to see this) the blog with my progress

Large-scale post collection for corpus analysis (I'm not going to look at the actual content at this point): topic modelling, sentiment analysis, and similar methods to get statistics about the most popular scenes, characters, subjects, trends, etc.

Discourse analysis: looking at the most popular (and/or detailed) posts about specific aspects/characters, etc., and analysing the language use and how users processed them

Digital (Auto) Ethnography: A self-reflective analysis of my blog and the posts I created and interacted with.
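Before topic modelling or sentiment analysis, a simple baseline for "most popular characters" is to count how many posts mention each one. A minimal sketch with Python's standard library, assuming the posts have already been collected as plain text. The name list is illustrative and only matches first names, so nicknames and surnames would need extra patterns.

```python
import re
from collections import Counter
from typing import Iterable, List

CHARACTERS = ["Katniss", "Peeta", "Gale", "Haymitch"]  # illustrative list


def mention_counts(posts: Iterable[str], names: List[str]) -> Counter:
    """Count how many posts mention each name at least once (case-insensitive)."""
    patterns = {name: re.compile(rf"\b{re.escape(name)}\b", re.IGNORECASE) for name in names}
    counts: Counter = Counter()
    for post in posts:
        for name, pattern in patterns.items():
            if pattern.search(post):
                counts[name] += 1
    return counts
```

Counting posts rather than raw occurrences keeps one very long meta post from dominating the statistics.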

Ethical considerations: I will definitely be anonymising everybody's user names.
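One common way to anonymise usernames consistently is a keyed hash: the same blog always maps to the same pseudonym, so threads stay analysable, but the name can't be recovered without the key. This sketch uses HMAC-SHA256 from Python's standard library; the "user_" prefix, the 10-character length, and lowercasing names are my own assumptions, not a requirement of any ethics guideline.

```python
import hashlib
import hmac


def pseudonym(username: str, secret_key: bytes, length: int = 10) -> str:
    """Map a username to a stable pseudonym of the form user_<hex characters>."""
    normalized = username.strip().lower()  # assumption: treat names case-insensitively
    digest = hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"user_{digest[:length]}"
```

A plain unkeyed hash would be weaker here, because anyone could hash a list of known blog names and match them; the secret key (kept out of the published data) prevents that.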

Feel free to contact me via the ask box if you have any questions.

Note: Will update this if anything changes.

UPDATE (28 April 2025): Changed the discourse analysis aspect of the project. Created a tags index to make navigation easier:

Tags Index

2 notes



Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.
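The processing step in an event-driven setup like this is easiest to scale when it's a stateless function over a batch of events. A sketch of that core logic in plain Python, assuming hypothetical JSON bodies like {"deviceId": "...", "temperature": 21.5}. Wiring it to an Event Hubs trigger in Azure Functions is not shown, and the field names are illustrative, not from the project.

```python
import json
from collections import defaultdict
from typing import Dict, List


def summarize_batch(bodies: List[str]) -> Dict[str, Dict[str, float]]:
    """Aggregate one batch of IoT readings per device.

    Malformed events are skipped rather than failing the whole batch.
    """
    readings: Dict[str, List[float]] = defaultdict(list)
    for body in bodies:
        try:
            event = json.loads(body)
            readings[event["deviceId"]].append(float(event["temperature"]))
        except (ValueError, KeyError, TypeError):
            continue  # in production you might route these to a dead-letter store
    return {
        device: {"count": len(vals), "min": min(vals), "max": max(vals),
                 "avg": sum(vals) / len(vals)}
        for device, vals in readings.items()
    }
```

Because the function keeps no state between calls, the platform can run many copies in parallel as load grows, and skipping malformed events keeps one bad message from failing a whole batch.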

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.
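If demand-based scaling on AKS is done with Kubernetes' Horizontal Pod Autoscaler (the post doesn't say which mechanism was used), the core calculation documented by Kubernetes is desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric). A sketch of that formula in Python, with the default 10% tolerance and min/max clamping; the real controller also handles unready pods, missing metrics, and stabilization windows, which this omits.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core Horizontal Pod Autoscaler calculation, simplified."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: don't scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas averaging 90% CPU against a 60% target gives ceil(3 * 1.5) = 5 replicas; at 63% the ratio of 1.05 falls within the tolerance, so nothing changes.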

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database's multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB's support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.
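During a regional outage, reads can keep working because the client falls back to the next region in a preference list. The Cosmos DB SDKs can do this routing for you when you configure preferred regions; the sketch below only illustrates the idea, with an in-memory health map and example region names standing in for real service health.

```python
from typing import Mapping, Sequence


class NoRegionAvailable(Exception):
    """Raised when none of the preferred regions is reachable."""


def pick_read_region(preferred: Sequence[str], healthy: Mapping[str, bool]) -> str:
    """Return the first preferred region currently marked healthy."""
    for region in preferred:
        if healthy.get(region, False):
            return region
    raise NoRegionAvailable(f"none of {list(preferred)} is reachable")
```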

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.
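The flow above can be simulated end to end in memory to make the data movement concrete. In this toy sketch (no Azure SDKs), a queue.Queue stands in for Event Hubs, process for the Azure Function, a dict keyed by (partition key, id) for a Cosmos DB container, and get_device_readings for an API served from AKS; the event fields are hypothetical.

```python
import json
import queue
from typing import Dict, List, Tuple

Store = Dict[Tuple[str, str], dict]


def ingest(raw_events: List[str]) -> queue.Queue:
    """Data ingestion: devices publish raw JSON events to the hub."""
    hub: queue.Queue = queue.Queue()
    for raw in raw_events:
        hub.put(raw)
    return hub


def process(hub: queue.Queue, store: Store) -> None:
    """Processing + storage: turn each event into a document and upsert it."""
    while not hub.empty():
        event = json.loads(hub.get())
        doc = {"id": event["eventId"], "deviceId": event["deviceId"], "value": event["value"]}
        # Keyed by (partition key, id): a replayed event overwrites, not duplicates.
        store[(doc["deviceId"], doc["id"])] = doc


def get_device_readings(store: Store, device_id: str) -> List[dict]:
    """Application logic: what an API endpoint on AKS might return."""
    return [doc for (pk, _), doc in store.items() if pk == device_id]
```

Keying documents by partition key and id makes the store behave like an upsert, so an event delivered twice (which at-least-once messaging systems can do) overwrites itself instead of creating a duplicate.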

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

2 notes



Best Practices for Successful Automation Testing Implementation

Automation testing is an essential part of modern software development: it accelerates delivery, reduces manual work, and improves software quality. But success in automation testing is not guaranteed; it comes from proper planning and execution, along with adherence to best practices.

In this blog, we will talk about key actionable strategies and best practices to ensure the successful implementation of automation testing in your projects.

1. Start with a Clear Strategy

Jumping straight into automation testing without a clear strategy will not always yield the desired results. Define the following:

Objectives: Define the goals of the automation, whether it is about shorter test cycles, improved test coverage or eliminating human error.

Scope: Decide which areas of your application to automate, focusing on high-impact areas such as regression and functional testing.

Stakeholders: Get early involvement from the development, QA and product teams to avoid misalignment regarding expectations.

A well-formed strategy guides the work and makes sure everyone involved is aligned.

2. Prioritize the Right Test Cases for Automation

One of the biggest mistakes in automation testing is trying to automate everything. Instead, prioritize test cases that:

Are repetitive and time-consuming.

Are critical to application functionality.

Have stable requirements.

Typical candidates include regression tests, smoke tests, and data-driven tests. Avoid automating exploratory tests or highly dynamic tests that change often.

3. Choose the Right Automation Tools

The effectiveness of your automation testing initiative depends heavily on choosing the right tools. Look for tools that:

Support the technology stack of your application (e.g., web, mobile, APIs).

Can scale as your project grows.

Offer extensive reporting, script reusability, and cross-browser execution.

GhostQA is one example of a codeless platform that works well for teams with a range of skill levels. It lets you focus on what matters, and its Auto Healing feature reduces the maintenance burden.

4. Build a Strong Automation Framework

An automation framework is the backbone of your automation testing process. It supports standardization, reusability and scalability of test scripts, so when you design your framework, build in these features:

Modularity: Split test scripts into reusable components.

Data-Driven Testing: Separate test data from the scripts so the same test logic can run against many inputs.

Error Handling: Build in error handling and logging so unexpected failures are reported clearly instead of silently derailing the run.

A good framework streamlines collaboration and makes it easier to maintain your tests.
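To make the data-driven idea concrete, here is a minimal sketch using only Python's standard library. The function under test (normalize_email) and the CSV contents are hypothetical; in a real suite the CSV would live in its own file so testers can add cases without touching the test logic. unittest's subTest reports each data row separately, so one bad row does not hide the others.

```python
import csv
import io
import unittest


def normalize_email(raw: str) -> str:
    """Hypothetical function under test: trim whitespace and lowercase."""
    return raw.strip().lower()


# Test data kept apart from test logic; in practice this would be a separate .csv file.
CASES_CSV = """raw,expected
"  Alice@Example.COM ",alice@example.com
bob@example.com,bob@example.com
CAROL@EXAMPLE.ORG,carol@example.org
"""


def load_cases(text: str) -> list[tuple[str, str]]:
    """Parse (input, expected) pairs from CSV text."""
    return [(row["raw"], row["expected"]) for row in csv.DictReader(io.StringIO(text))]


class NormalizeEmailTest(unittest.TestCase):
    def test_all_cases(self) -> None:
        # One test method, many data rows: each row is reported as its own sub-test.
        for raw, expected in load_cases(CASES_CSV):
            with self.subTest(raw=raw):
                self.assertEqual(normalize_email(raw), expected)
```

Saved as a module, it runs with python -m unittest; adding a case means adding a CSV row, not writing a new test.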

5. Write High-Quality Test Scripts

The quality of your test scripts determines the reliability of your automation testing. To ensure script quality:

Use meaningful, descriptive names for scripts, variables, and methods.

Use parameterization instead of hardcoding values, so scripts adapt easily.

Set up appropriate error-handling procedures for unforeseen problems.

Avoid unnecessary additions: the more complex a script is, the harder it is to debug and maintain.

Tools such as GhostQA reduce scripting effort with no-code options that let even non-technical users write robust tests.

6. Regularly Maintain Your Automation Suite

Automation testing is a great way to ensure application quality, but one of its biggest challenges is keeping test scripts up to date as the application changes. Regular maintenance keeps your test suite effective and current.

Best practices for maintenance include:

Frequent Reviews: Periodically audit the test scripts to make sure they are not outdated.

Version Control: Use a version control system to keep a history of your script changes.

Auto-Healing Features: GhostQA and similar tools can track UI updates and modify scripts to reflect changes with little to no human intervention, minimizing maintenance costs.

Take good care of your automation suite so that it doesn't become a liability.

7. Address Flaky Tests

Flaky tests (tests that pass or fail randomly) are a common issue in automation testing. They reduce trust in test results and waste time during debugging. To address flaky tests:

Investigate the underlying causes, such as timing problems or dynamic elements.

Use explicit waits instead of fixed (static) waits, so tests stay aligned with application behavior.

Consider tools with smart element detection, such as GhostQA, to reduce the chances of flaky tests.

Reducing flakiness is one of the most effective ways to strengthen confidence in your automation framework.
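The difference between a static wait and an explicit wait can be sketched in a few lines of plain Python. Browser tools ship their own versions of this (in Selenium, WebDriverWait plays this role); the helper below is a hypothetical, library-free illustration. Rather than sleeping a fixed 5 seconds and hoping the page is ready, it polls a condition, returns as soon as the condition holds, and fails with a clear error if it never does.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, poll_interval: float = 0.1) -> T:
    """Poll `condition` until it returns a truthy value or `timeout` seconds elapse.

    Returns the truthy value as soon as it appears; raises TimeoutError otherwise.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} s")
        time.sleep(poll_interval)


# Hypothetical stand-in for a UI element that appears after an unpredictable delay.
READY_AT = time.monotonic() + 0.3


def element_visible() -> bool:
    return time.monotonic() >= READY_AT


print(wait_until(element_visible, timeout=2.0))  # True, after roughly 0.3 s
```

A fast machine finishes in 0.3 s instead of a padded fixed delay, and a slow one still passes as long as it stays within the timeout, which removes one common source of random failures.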

8. Ensure Cross-Browser and Cross-Platform Compatibility

Most modern applications run on many browsers and devices, so compatibility testing is a necessity. Your automation testing suite should:

Include test cases for popular browsers like Chrome, Firefox, Edge, and Safari.

Cover different mobile operating systems (e.g., iOS and Android).

GhostQA simplifies cross-browser and cross-platform testing, so you can verify functionality in several environments without duplicating effort.

9. Leverage AI and Smart Automation

AI is transforming automation testing by improving efficiency and lowering maintenance costs. Next-generation AI-powered tools like GhostQA offer:

Auto-Healing: Automatically adjusts to changes in the app, such as modified UI elements.

Predictive Analysis: Highlights the highest-risk areas so tests can be prioritized.

Optimized Execution: Runs only the tests that yield the most useful insights.

AI-powered tools like these can help you increase the efficiency and accuracy of your testing.

10. Monitor and Measure Performance

To measure the effectiveness of your automation testing, you should track key metrics that include:

Test Coverage: The share of application features covered by automated tests.

Execution Time: Time taken to execute automated test suites.

Defect Detection Rate: Number of bugs detected by automated tests.

Flaky Test Rate: Frequency of inconsistent test results.

Tracking these metrics consistently helps you find areas for improvement in your automation efforts while also demonstrating their ROI.
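As an example of turning one of these metrics into a number, here is a small sketch of a Flaky Test Rate calculation. The definition used (a test counts as flaky if it both passed and failed on the same code revision) is one common way to operationalize the metric, not a standard formula, and the run-history format is a hypothetical one.

```python
from collections import defaultdict
from typing import Iterable, Tuple

Run = Tuple[str, str, bool]  # (test name, code revision, passed?)


def flaky_test_rate(runs: Iterable[Run]) -> float:
    """Fraction of distinct tests that both passed and failed on the same revision."""
    outcomes = defaultdict(set)  # (test, revision) -> set of observed outcomes
    tests = set()
    for test, revision, passed in runs:
        outcomes[(test, revision)].add(passed)
        tests.add(test)
    flaky = {test for (test, _rev), seen in outcomes.items() if len(seen) == 2}
    return len(flaky) / len(tests) if tests else 0.0


history = [
    ("login", "abc123", True),
    ("login", "abc123", False),     # same code, different result: flaky
    ("search", "abc123", True),
    ("search", "abc123", True),
    ("checkout", "def456", False),  # a consistent failure is a real bug, not flakiness
]
print(f"Flaky test rate: {flaky_test_rate(history):.0%}")  # Flaky test rate: 33%
```

Keying on the revision matters: a test that fails after a code change is doing its job, while one that flips on identical code is the kind that erodes trust.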

Conclusion

Successful automation testing comes down to choosing the right tools and planning properly. Adopting best practices such as prioritizing test cases, maintaining test scripts, using AI-powered tools, and collaborating with stakeholders throughout the process makes this achievable.

Tools like GhostQA, which come equipped with codeless testing, auto-healing features, and user-friendly interfaces, empower teams of both technical and non-technical backgrounds to streamline their automation processes and devote their attention to shipping quality software.

#automation testing#software testing#test automation#functional testing#automation tools#quality assurance


So let's get into the nitty-gritty technical details behind my latest project, the National Blue Trail round-trip search application available here:

This project was a lot of fun, and I learned about plenty of technologies along the way, including QGIS, PostGIS, pgRouting, GTFS files, OpenLayers, OpenTripPlanner and Vite.

So let's start!

In most of my previous GIS projects I used custom-made tools written in Ruby or JavaScript and never really tried any of the "proper" GIS tools, so this was a good opportunity for me to learn a bit of QGIS. I hoped I could do most of the work there, but soon realized it's not fully up to the job, so in the end I had to move some parts to other tools. For most purposes I used QGIS to import data from various sources and export it to PostGIS, then did the calculations in PostGIS, re-imported the results from there and saved them as GeoJSON. For this workflow QGIS was pretty okay to use, and I also managed to use it for some minor editing.

I really hoped I could avoid PostGIS and do all of the calculations inside QGIS, but its routing engine is both slow and simply not designed for repeated use. For example, after importing the map of Hungary, finding a single route between two points took around 10-15 minutes just to build the routing graph, then a couple of seconds to calculate the actual route. There is no way to save the routing graph (at least none I could find that did not involve coding in Python), so if you want to calculate routes again, you have to wait the same 10-15 minutes for it to be rebuilt. Since I had to calculate at least around 20,000 routes, I quickly realized this would never work out.

I did find the QNEAT3 plugin, which allows an N-to-M search of routes between two sets of points, but it was both too slow and very disk-space intensive. It also calculated many more routes than needed, as you couldn't add a filter. In the end it took 23 hours to calculate the routes AND created a temporary file of more than 300 GB in the process. When I then realized I had made a mistake in the input files, I knew I would not wait that long again and started looking at PostGIS + pgRouting instead.
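The kind of filter that was missing can be sketched as a cheap pre-pass: compute the straight-line (great-circle) distance for every trail point and bus stop pair and only hand pairs within some radius to the expensive router. This is a hypothetical Python illustration, not what QNEAT3 or pgRouting do internally; the 10 km radius and the function names are assumptions.

```python
import math
from typing import List, Tuple

LatLon = Tuple[float, float]

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def candidate_pairs(trail_points: List[LatLon], stops: List[LatLon], max_km: float) -> List[Tuple[int, int]]:
    """Keep only (trail point index, stop index) pairs close enough to be worth routing."""
    return [
        (i, j)
        for i, p in enumerate(trail_points)
        for j, s in enumerate(stops)
        if haversine_km(p, s) <= max_km
    ]


# One trail point, two stops: ~7.6 km away (kept) and ~55.6 km away (dropped).
print(candidate_pairs([(47.0, 19.0)], [(47.0, 19.1), (47.5, 19.0)], max_km=10.0))  # [(0, 0)]
```

Because walking distance is never shorter than the straight-line distance, this filter can only drop pairs that would fail the same limit after routing, so it saves work without losing valid results.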

Before we move on to them, here are two very important lessons I learned in QGIS:

There is no auto-save. If you forget to save and QGIS crashes for no reason 2 hours later, you have to start your work over.

Any layer that is in editing mode does not get saved when you press the save button. So even if you remember to save by pressing CTRL/CMD+S every 5 seconds, like every sane person who has ever used Adobe products, you will still lose your work two hours later when QGIS finally crashes if you did not exit editing mode on all of the layers.

----

So let's move on to PostGIS.

It's been a while since I last used PostGIS - it was around 11 years ago for a web based object tracking project - but it was fairly easy to get it going. Importing data from QGIS (more specifically pushing data from QGIS to PostGIS) was pretty convenient, so I could fill up the tables with the relevant points and lines quite easily. The only hard part was getting pgRouting working, mostly because there aren't any good tutorials on how to import OpenStreetMap data into it. I did find a blog post that used a freeware (not open source) tool to do this, and another project that seems dead (last update was 2 years ago) but at least it was open source, and actually worked well. You can find the scripts I used on the GitHub page's README.

Using pgRouting was okay. The documentation is a bit hard to read, as it's more of a specification, but I found the relevant examples useful. It supports both A* search (which is much quicker than plain Dijkstra on a 2D map) and searching between N*M points with a filter applied, so I hoped it would be quicker than QGIS, but I never expected how quick it was: it took only 5 seconds to calculate the same results that took QGIS 23 hours and 300 GB of disk space! Next time I have a GIS project, I'm fairly certain I will not shy away from using PostGIS for calculations.
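Why A* wins on map-like data can be shown with a small Python sketch. This is not pgRouting's implementation (pgRouting exposes its own SQL functions); it runs the same search on a hypothetical 20×20 grid with unit edge costs, once with a straight-line-distance heuristic (A*) and once without one (which reduces to Dijkstra). Both find the same cost, but the heuristic steers A* toward the goal, so it expands far fewer nodes. The straight-line heuristic is safe here because no edge is shorter than the distance it covers.

```python
import heapq
import math
from typing import Dict, List, Tuple

Node = Tuple[int, int]
Graph = Dict[Node, List[Tuple[Node, float]]]


def grid_graph(width: int, height: int) -> Graph:
    """4-connected grid with unit edge costs: a toy stand-in for a road network."""
    graph: Graph = {}
    for x in range(width):
        for y in range(height):
            graph[(x, y)] = [
                ((x + dx, y + dy), 1.0)
                for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
                if 0 <= x + dx < width and 0 <= y + dy < height
            ]
    return graph


def shortest_path(graph: Graph, start: Node, goal: Node, use_heuristic: bool) -> Tuple[float, List[Node], int]:
    """A* if use_heuristic is True, plain Dijkstra otherwise.

    Returns (cost, path, number of expanded nodes).
    """
    def h(n: Node) -> float:
        return math.hypot(goal[0] - n[0], goal[1] - n[1]) if use_heuristic else 0.0

    frontier = [(h(start), 0.0, start)]  # heap entries: (f = g + h, g, node)
    best_g = {start: 0.0}
    parent: Dict[Node, Node] = {}
    closed = set()
    while frontier:
        _, g, node = heapq.heappop(frontier)
        if node in closed:
            continue  # stale entry superseded by a cheaper one
        closed.add(node)
        if node == goal:
            path = [node]
            while path[-1] != start:
                path.append(parent[path[-1]])
            return g, path[::-1], len(closed)
        for nbr, cost in graph[node]:
            new_g = g + cost
            if new_g < best_g.get(nbr, math.inf):
                best_g[nbr] = new_g
                parent[nbr] = node
                heapq.heappush(frontier, (new_g + h(nbr), new_g, nbr))
    return math.inf, [], len(closed)


graph = grid_graph(20, 20)
cost_a, path_a, expanded_a = shortest_path(graph, (0, 10), (19, 10), use_heuristic=True)
cost_d, path_d, expanded_d = shortest_path(graph, (0, 10), (19, 10), use_heuristic=False)
print("A*:      ", cost_a, expanded_a)
print("Dijkstra:", cost_d, expanded_d)
```

On a real road network the gap depends on the geometry, but the principle is the same one that made the A* option attractive here.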

There were a couple of hard parts though, most notably:

ST_Collect nicely merges multiple lines into one single large line, but the direction of that line looked a bit random, so I had to add some extra code to fix it later.

ST_Split was similarly easy to use (although it took me a while to realize I needed ST_Snap with the proper settings for it to work), but yet again the ordering of the segments was slightly off. I was too lazy to fix it with code, so I just corrected the wrong values by hand.
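A minimal sketch of that kind of fix-up, written in Python rather than SQL and not the author's actual code: given line segments in arbitrary order and orientation, start from a known point (for example the trail's official start) and keep attaching whichever segment continues from the current end, reversing it when it points backwards. It assumes endpoints match exactly (as they should after snapping) and that the segments form one unbranched chain; chain_segments is a hypothetical name.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Line = List[Point]


def chain_segments(segments: List[Line], start: Point) -> Line:
    """Join unordered, arbitrarily oriented segments into one line beginning at `start`."""
    remaining = [list(s) for s in segments]
    chain: Line = [start]
    while remaining:
        tail = chain[-1]
        for i, seg in enumerate(remaining):
            if seg[0] == tail:  # already points the right way
                chain.extend(seg[1:])
                break
            if seg[-1] == tail:  # points backwards: append it reversed
                chain.extend(reversed(seg[:-1]))
                break
        else:
            raise ValueError(f"no remaining segment continues from {tail}")
        remaining.pop(i)
    return chain


# Three pieces of a straight line, shuffled and with one piece reversed.
pieces = [[(2, 0), (3, 0)], [(1, 0), (0, 0)], [(1, 0), (2, 0)]]
print(chain_segments(pieces, start=(0, 0)))  # [(0, 0), (1, 0), (2, 0), (3, 0)]
```

The linear scan makes this quadratic in the number of segments, which is fine for a few thousand trail pieces; a dictionary keyed by endpoint would make it linear if needed.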

----

The next tool I had never used before was OpenTripPlanner. I did have a public transport project a couple of years ago, but back then tools like this and the required public databases were very hard to come by, so I opted for Google's APIs (with a hard limit to make sure it would never cost more than the free tier Google gives you each month). Once again, I have been blown away by how good the tooling has become since then. GTFS files are readily available from a lot of sources, although not all: MAV, the Hungarian Railways, for example, keeps its feed behind a registration paywall, and while English bus companies are required by law to publish this data (and do it nicely), Scottish ones don't always do so, and even when they do, finding the files is not always easy. This looks like something I should push within my party of choice as part of my foray into politics.

There are a couple of caveats with OpenTripPlanner, the main one being that it requires a lot of RAM. Loading the Hungarian map and the timetables from both Volánbusz (the state-operated coach company) and BKK (the public transport company of Budapest) required around 13 GB of RAM, and by default Docker was only given 8 GB, so it crashed at first without me realizing why.

The interface of OpenTripPlanner is also a bit too simple, and it was fairly hard to stop it from giving me trips that involve only walking. I deliberately wanted it to search only between bus stops with actual bus travel, as I had already handled the walking part in PostGIS. I did check whether I could have used OpenTripPlanner for that part as well, and while it worked somewhat, it didn't give optimal results for my use case, so I was relieved that the time I spent in QGIS and PostGIS was not in vain.

The API of OpenTripPlanner was pretty neat, though: it mimics Google's route search API, which I had used in the past, as closely as possible, so parsing the results was quite easy.

----

Once all of the data was ready, the next step was converting it into something I could use in JavaScript. For this I used the scripting language I have trusted for such occasions for almost 20 years now: Ruby. The only interesting part here was the use of Encoded Polylines (Google's format for packing LineString coordinates into a compact string inside JSON files), but yet again I found enough tools to handle this fairly obscure format.
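For the curious, the Encoded Polyline format is small enough to sketch by hand. This is a Python illustration of Google's published algorithm, not the Ruby tooling or the OpenLayers code used in the project: coordinates are scaled by 10^5 and rounded, only the delta from the previous point is stored, the sign is folded into the lowest bit, and the result is cut into 5-bit chunks, each offset by 63 to land on a printable ASCII character (0x20 marks "more chunks follow").

```python
from typing import List, Tuple

LatLng = Tuple[float, float]


def _encode_signed(value: int) -> str:
    """Encode one signed integer delta as printable ASCII characters."""
    value = ~(value << 1) if value < 0 else value << 1  # fold the sign into the lowest bit
    out = []
    while value >= 0x20:
        out.append(chr((0x20 | (value & 0x1F)) + 63))  # 5 data bits plus continuation bit
        value >>= 5
    out.append(chr(value + 63))  # final chunk has no continuation bit
    return "".join(out)


def encode_polyline(points: List[LatLng], precision: int = 5) -> str:
    factor = 10 ** precision
    prev_lat = prev_lng = 0
    chunks = []
    for lat, lng in points:
        ilat, ilng = round(lat * factor), round(lng * factor)
        chunks.append(_encode_signed(ilat - prev_lat))  # deltas, not absolute values
        chunks.append(_encode_signed(ilng - prev_lng))
        prev_lat, prev_lng = ilat, ilng
    return "".join(chunks)


def decode_polyline(encoded: str, precision: int = 5) -> List[LatLng]:
    factor = 10 ** precision
    points: List[LatLng] = []
    index = lat = lng = 0
    while index < len(encoded):
        deltas = []
        for _ in range(2):  # a latitude delta, then a longitude delta
            result = shift = 0
            while True:
                chunk = ord(encoded[index]) - 63
                index += 1
                result |= (chunk & 0x1F) << shift
                shift += 5
                if chunk < 0x20:  # no continuation bit: value complete
                    break
            deltas.append(~(result >> 1) if result & 1 else result >> 1)
        lat += deltas[0]
        lng += deltas[1]
        points.append((lat / factor, lng / factor))
    return points


print(encode_polyline([(38.5, -120.2), (40.7, -120.95), (43.252, -126.453)]))
# _p~iF~ps|U_ulLnnqC_mqNvxq`@
```

Storing deltas is what makes the format compact: neighbouring points along a trail differ by small amounts, which fit into one or two characters each instead of the full coordinate.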

----

The final part was the display. I had mostly used Leaflet in the past, but I really wanted to try OpenLayers: in another, not yet finished project Leaflet was simply too slow for the data, and a quick look at OpenLayers showed it could display it with acceptable performance, so this seemed a good opportunity to learn it. It was pretty okay, although transparent layers seem to be quite slow without WebGL rendering, and I could not get WebGL working, as it is still only available as a preview with no documentation (and the interface has changed completely in the 2 months since I last looked at it). In any case OpenLayers was still a good choice: it has built-in support for Encoded Polylines, GPX export, feature selection by hovering, and a nice styling API. It also required me to use Vite for building the application, which was a nice addition to my rather limited knowledge of JavaScript frameworks.

----

All in all this was a fun project, and I definitely learned a lot I can use in the future. Seeing how good OpenTripPlanner is, not just for public transport but also for walking and cycling, gave me a couple of new ideas I could not pursue in the past, because I could only have done them with Google's Routing API, which would have been prohibitively expensive. Now I just need to start lobbying for the Bus Services Act 2017, or something similar, to be implemented in Scotland as well.


Global top 13 companies accounted for 66% of Total Frozen Spring Roll market (QYResearch, 2021)

The table below details the Discrete Manufacturing ERP revenue and market share of major players from 2016 to 2021. The data for 2021 is an estimate based on historical figures and the interviews we conducted this year.

Major players in the market are identified through secondary research, and their market revenues are determined through primary and secondary research. Secondary research includes the study of the annual financial reports of the top companies, while primary research includes extensive interviews with key opinion leaders and industry experts such as experienced front-line staff, directors, CEOs and marketing executives. The percentage splits, market shares, growth rates and breakdowns of the product markets are determined through secondary sources and verified through primary sources.

According to the new market research report “Global Discrete Manufacturing ERP Market Report 2023-2029”, published by QYResearch, the global Discrete Manufacturing ERP market size is projected to reach USD 9.78 billion by 2029, at a CAGR of 10.6% during the forecast period.
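For readers less familiar with the metric: a compound annual growth rate r links a base-year market size V_0 to the size n years later. As a rough, illustrative check only, assuming the 10.6% CAGR applies from a 2023 base year (n = 6; the excerpt above does not state the base-year value), the projection implies a base of roughly USD 5.3 billion:

```latex
V_{2029} = V_0 \,(1 + r)^{n}
\quad\Longrightarrow\quad
V_0 = \frac{V_{2029}}{(1 + r)^{n}} = \frac{9.78}{(1.106)^{6}} \approx \frac{9.78}{1.830} \approx 5.34 \ \text{(USD billion)}
```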

Figure. Global Frozen Spring Roll Market Size (US$ Mn), 2018-2029

Figure. Global Frozen Spring Roll Top 13 Players Ranking and Market Share(Based on data of 2021, Continually updated)

The global key manufacturers of Discrete Manufacturing ERP include Visibility, Global Shop Solutions, SYSPRO, ECi Software Solutions, abas Software AG, IFS AB, QAD Inc, Infor, etc. In 2021, the global top five players held a share of approximately 66.0% in terms of revenue.

About QYResearch

QYResearch was founded in California, USA, in 2007. It is a leading global market research and consulting company. With over 16 years of experience and professional research teams in cities around the world, QYResearch focuses on management consulting, database and seminar services, IPO consulting, industry chain research and customized research, helping clients build non-linear revenue models and succeed. We are globally recognized for our expansive portfolio of services, good corporate citizenship, and our strong commitment to sustainability. To date, we have worked with more than 60,000 clients across five continents. Let us work closely with you to build a bold and better future.

QYResearch is a world-renowned large-scale consulting company. Its coverage spans various high-tech industry chain market segments: the semiconductor industry chain (semiconductor equipment and parts, semiconductor materials, ICs, foundry, packaging and testing, discrete devices, sensors, optoelectronic devices), the photovoltaic industry chain (equipment, cells, modules, auxiliary material brackets, inverters, power station terminals), the new energy automobile industry chain (batteries and materials, auto parts, batteries, motors, electronic control, automotive semiconductors, etc.), the communication industry chain (communication system equipment, terminal equipment, electronic components, RF front-end, optical modules, 4G/5G/6G, broadband, IoT, digital economy, AI), the advanced materials industry chain (metal materials, polymer materials, ceramic materials, nano materials, etc.), the machinery manufacturing industry chain (CNC machine tools, construction machinery, electrical machinery, 3C automation, industrial robots, lasers, industrial control, drones), food, beverages and pharmaceuticals, medical equipment, agriculture, etc.


This Week in Rust 553

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on X (formerly Twitter) or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Project/Tooling Updates

ratatui - v0.27.0

Introduction - ChoRus

uuid now properly supports version 7 counters

Godot-Rust - June 2024 update

piggui v0.2.0

git-cliff 2.4.0 is released!

Observations/Thoughts

Claiming, auto and otherwise

Ownership

Puzzle: Sharing declarative args between top level and subcommand using Clap

Will Rust be alive in 10 years?

Why WebAssembly came to the Backend (Wasm in the wild part 3)

in-place construction seems surprisingly simple?

Igneous Linearizer

Life in the FastLanes

Rust's concurrency model vs Go's concurrency model: stackless vs stackfull coroutines

Rust Walkthroughs

Master Rust by Playing Video Games!

Tokio Waker Instrumentation

Build with Naz : Comprehensive guide to nom parsing

Running a TLC5940 with an ESP32 using the RMT peripheral

Rust Data-Structures: What is a CIDR trie and how can it help you?

Rust patterns: Micro SDKs

[series] The Definitive Guide to Error Handling in Rust (part 1): Dynamic Errors

Research

When Is Parallelism Fearless and Zero-Cost with Rust?

Miscellaneous

An Interview with Luca Palmieri of Mainmatter

Crate of the Week

This week's crate is cargo-binstall, a cargo subcommand to install crates from the binaries published in their GitHub releases.

Thanks to Jiahao XU for the self-suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

RFCs

No calls for testing were issued this week.

Rust

No calls for testing were issued this week.

Rustup

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

cargo-generate - RFC on reading toml values into placeholders

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

Rust Ukraine 2024 | Closes 2024-07-06 | Online + Ukraine, Kyiv | Event date: 2024-07-27

Conf42 Rustlang 2024 | Closes 2024-07-22 | online | Event date: 2024-08-22

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

428 pull requests were merged in the last week

hir_typeck: be more conservative in making "note caller chooses ty param" note

rustc_type_ir: Omit some struct fields from Debug output

account for things that optimize out in inlining costs

actually taint InferCtxt when a fulfillment error is emitted

add #[rustc_dump_{predicates,item_bounds}]

add hard error and migration lint for unsafe attrs

allow "C-unwind" fn to have C variadics

allow constraining opaque types during subtyping in the trait system

allow constraining opaque types during various unsizing casts

allow tracing through item_bounds query invocations on opaques

ban ArrayToPointer and MutToConstPointer from runtime MIR

change a DefineOpaqueTypes::No to Yes in diagnostics code

collect attrs in const block expr

coverage: add debugging flag -Zcoverage-options=no-mir-spans

coverage: overhaul validation of the #[coverage(..)] attribute

do not allow safe/unsafe on static and fn items

don't ICE when encountering an extern type field during validation

fix: break inside async closure has incorrect span for enclosing closure

E0308: mismatched types, when expr is in an arm's body, don't add semicolon ';' at the end of it

improve conflict marker recovery

improve tip for inaccessible traits

interpret: better error when we ran out of memory

make async drop code more consistent with regular drop code

make edition dependent :expr macro fragment act like the edition-dependent :pat fragment does

make pretty printing for f16 and f128 consistent

match lowering: expand or-candidates mixed with candidates above

show notice about "never used" of Debug for enum

stop sorting Spans' SyntaxContext, as that is incompatible with incremental

suggest inline const blocks for array initialization

suggest removing unused tuple fields if they are the last fields

uplift next trait solver to rustc_next_trait_solver

add f16 and f128

miri: /miri: nicer error when building miri-script fails

miri: unix/foreign_items: move getpid to the right part of the file

miri: don't rely on libc existing on Windows

miri: fix ICE caused by seeking past i64::MAX

miri: implement LLVM x86 adx intrinsics

miri: implement LLVM x86 bmi intrinsics

miri: nicer batch file error when building miri-script fails

miri: use strict ops instead of checked ops

save 2 pointers in TerminatorKind (96 → 80 bytes)

add SliceLike to rustc_type_ir, use it in the generic solver code (+ some other changes)

std::unix::fs: copy simplification for apple

std::unix::os::home_dir: fallback's optimisation

replace f16 and f128 pattern matching stubs with real implementations

add PidFd::{kill, wait, try_wait}

also get add nuw from uN::checked_add

generalize {Rc, Arc}::make_mut() to unsized types

implement array::repeat

make Option::as_[mut_]slice const

rename std::fs::try_exists to std::fs::exists and stabilize fs_try_exists

replace sort implementations

return opaque type from PanicInfo::message()

stabilise c_unwind

std: refactor the thread-local storage implementation

hashbrown: implement XxxAssign operations on HashSets

hashbrown: replace "ahash" with "default-hasher" in Cargo features

cargo toml: warn when edition is unset, even when MSRV is unset

cargo: add CodeFix::apply_solution and impl Clone

cargo: make -Cmetadata consistent across platforms

cargo: simplify checking feature syntax

cargo: simplify checking for dependency cycles

cargo test: add auto-redaction for not found error

cargo test: auto-redact file number

rustdoc: add support for missing_unsafe_on_extern feature

implement use<> formatting in rustfmt

rustfmt: format safety keywords on static items

remove stray println from rustfmt's rewrite_static

resolve clippy f16 and f128 unimplemented!/FIXMEs

clippy: missing_const_for_fn: add machine-applicable suggestion

clippy: add applicability filter to lint list page

clippy: add more types to is_from_proc_macro

clippy: don't lint implicit_return on proc macros

clippy: fix incorrect suggestion for manual_unwrap_or_default

clippy: resolve clippy::invalid_paths on bool::then

clippy: unnecessary call to min/max method

rust-analyzer: complete async keyword

rust-analyzer: check that Expr is none before adding fixup

rust-analyzer: add toggleLSPLogs command

rust-analyzer: add space after specific keywords in completion

rust-analyzer: filter builtin macro expansion

rust-analyzer: don't remove parentheses for calls of function-like pointers that are members of a struct or union

rust-analyzer: ensure there are no cycles in the source_root_parent_map

rust-analyzer: fix IDE features breaking in some attr macros

rust-analyzer: fix flycheck panicking when cancelled

rust-analyzer: handle character boundaries for wide chars in extend_selection

rust-analyzer: improve hover text in unlinked file diagnostics

rust-analyzer: only show unlinked-file diagnostic on first line during startup

rust-analyzer: pattern completions in let-stmt

rust-analyzer: use ItemInNs::Macros to convert ModuleItem to ItemInNs

rust-analyzer: remove panicbit.cargo extension warning

rust-analyzer: simplify some term search tactics

rust-analyzer: term search: new tactic for associated item constants

Rust Compiler Performance Triage

Mostly a number of improvements driven by MIR inliner improvements, with a small number of benchmarks showing significant regressions due to changes in sort algorithms. Those changes are runtime improvements at the cost of usually slight or neutral compile-time regressions, with outliers in a few cases.

Triage done by @simulacrum. Revision range: c2932aaf..c3d7fb39

See full report for details.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Change crates.io policy to not offer crate transfer mediation

UnsafePinned: allow aliasing of pinned mutable references

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] RFC: Return Type Notation

[disposition: merge] Add a general mechanism of setting RUSTFLAGS in Cargo for the root crate only

[disposition: close] Allow specifying dependencies for individual artifacts

Tracking Issues & PRs

Rust

[disposition: merge] #![crate_name = EXPR] semantically allows EXPR to be a macro call but otherwise mostly ignores it

[disposition: merge] Add nightly style guide section for precise_capturing use<> syntax

[disposition: merge] Tracking issue for PanicInfo::message

[disposition: merge] Tracking issue for Cell::update

[disposition: <unspecified>] Tracking issue for core::arch::{x86, x86_64}::has_cpuid

[disposition: merge] Syntax for precise capturing: impl Trait + use<..>

[disposition: merge] Remove the box_pointers lint.

[disposition: merge] Re-implement a type-size based limit

[disposition: merge] Tracking Issue for duration_abs_diff

[disposition: merge] Check alias args for WF even if they have escaping bound vars

Cargo

No Cargo Tracking Issues or PRs entered Final Comment Period this week.

Language Team

No Language Team Tracking Issues or PRs entered Final Comment Period this week.

Language Reference

No Language Reference Tracking Issues or PRs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline Tracking Issues or PRs entered Final Comment Period this week.

New and Updated RFCs

[new] Cargo structured syntax for feature dependencies on crates

[new] Mergeable rustdoc cross-crate info

[new] Add "crates.io: Crate Deletions" RFC

Upcoming Events

Rusty Events between 2024-06-26 - 2024-07-24 🦀

Virtual

2024-06-27 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-07-02 | Virtual (Buffalo, NY) | Buffalo Rust Meetup

Buffalo Rust User Group

2024-07-02 | Hybrid - Virtual and In-person (Los Angeles, CA, US) | Rust Los Angeles

Rust LA Reboot

2024-07-03 | Virtual | Training 4 Programmers LLC

Build Web Apps with Rust and Leptos

2024-07-03 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2024-07-04 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-07-06 | Virtual (Kampala, UG) | Rust Circle Kampala

Rust Circle Meetup

2024-07-09 | Virtual | Rust for Lunch

July 2024 Rust for Lunch

2024-07-09 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-07-10 | Virtual | Centre for eResearch

Research Computing With The Rust Programming Language

2024-07-11 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-07-11 | Hybrid - Virtual and In-person (Mexico City, DF, MX) | Rust MX

Programación de sistemas con Rust

2024-07-11 | Virtual (Nürnberg, DE) | Rust Nuremberg

Rust Nürnberg online

2024-07-11 | Virtual (Tel Aviv, IL) | Code Mavens

Reading JSON files in Rust (English)

2024-07-16 | Virtual (Tel Aviv, IL) | Code Mavens

Web development in Rust using Rocket - part 2 (English)

2024-07-17 | Hybrid - Virtual and In-person (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2024-07-18 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2024-07-23 | Hybrid - Virtual and In-Person (München/Munich, DE) | Rust Munich

Rust Munich 2024 / 2 - hybrid

2024-07-24 | Virtual | Women in Rust

Lunch & Learn: Exploring Rust API Use Cases

Asia

2024-06-30 | Kyoto, JP | Kyoto Rust

Rust Talk: Cross Platform Apps

2024-07-03 | Tokyo, JP | Tokyo Rust Meetup

I Was Understanding WASM All Wrong!

Europe

2024-06-27 | Berlin, DE | Rust Berlin

Rust and Tell - Title

2024-06-27 | Copenhagen, DK | Copenhagen Rust Community

Rust meetup #48 sponsored by Google!

2024-07-10 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup - July

2024-07-11 | Prague, CZ | Rust Prague

Rust Meetup Prague (July 2024)

2024-07-16 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

Building a REST API in Rust using Axum, SQLx and SQLite

2024-07-16 | Mannheim, DE | Hackschool - Rhein-Neckar

Nix Your Bugs & Rust Your Engines #4

2024-07-23 | Hybrid - Virtual and In-Person (München/Munich, DE) | Rust Munich

Rust Munich 2024 / 2 - hybrid

North America

2024-06-26 | Austin, TX, US | Rust ATC

Rust Lunch - Fareground

2024-06-27 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2024-06-27 | Nashville, TN, US | Music City Rust Developers

Music City Rust Developers: Holding Pattern

2024-06-27 | St. Louis, MO, US | STL Rust

Meet and Greet Hacker session

2024-07-02 | Hybrid - Los Angeles, CA, US | Rust Los Angeles

Rust LA Reboot

2024-07-05 | Boston, MA, US | Boston Rust Meetup

Boston University Rust Lunch, July 5

2024-07-11 | Hybrid - Mexico City, DF, MX | Rust MX

Programación de sistemas con Rust

2024-07-11 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2024-07-17 | Hybrid - Vancouver, BC, CA | Vancouver Rust

Rust Study/Hack/Hang-out

2024-07-18 | Nashville, TN, US | Music City Rust Developers

Music City Rust Developers: Holding Pattern

2024-07-24 | Austin, TX, US | Rust ATC

Rust Lunch - Fareground

Oceania

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

Rust has no theoretical inconsistencies... a remarkable achievement...

– Simon Peyton-Jones on YouTube

Thanks to ZiCog for the suggestion and Simon Farnsworth for the improved link!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Woo! First post, and it's basically porn lmao 😂 I have a whole-ass plot dedicated to this OC and am working on the design, but I couldn't help myself making this.

Words: ~1300

Warnings: Ratchet is in a good mood (my cover-up for anything that might be OOC lmao), vibrator used, genitals aren't mentioned, you could read this as an x reader, my grammar is probably horrid

*They are in an established relationship; they already had their sappy "omg I thought you were dead, but now I know I need to tell you I love you" spiel*

*POV of the OC, who is a bot of sorts, but I use human terms because they aren't Cybertronian*

“Please, Ratchet, I really want to have a nap with you! Naps with you are the best. And we both know that you need rest as well, plus you always love taking naps with me!” I begged. I try to use the puppy-dog eyes, but it looks more like sad pouting. Which is close, I guess.

“No, and no, I don’t. We both know that I have lots of work to do,” Ratchet stated coldly.

Hmph.

“Then at least let me help; we both know I’m more than capable.” I stood with both hands on my hips.

There is a pause while Ratchet processes, then a small smile. Uh oh. I know that smile; this is going to be an interesting day for sure.

“Under one condition,” Ratchet starts. “Get the ‘equipment’ that I helped you make.” He smirks.

My engine revved a bit at Ratchet’s boldness, but there was little hesitation as I moved toward my ‘room’, an old office from when the silo belonged to the humans, before the Autobots made it their base.

It was not long before I came back to Ratchet, who was still working on his synth energon formula and a few other projects of his.

“Why did you want me to get this? Hm?” I asked coyly, my hands held behind my back.

“You have such confidence in your ability to help that I would like to give you a challenge.” Ratchet had turned around and had something in his hand: a bullet vibrator. Oh ho ho, this is a ‘challenge’ that I am so excited for. He hands me the vibrator. With it in my hand, I look at him questioningly.

“Put it in. You want attention? I’ll give you some while you help, and if you do well enough, I’ll reward you later tonight,” Ratchet states firmly.

“You seem to be in a good mood. You enjoy this proposition, don’t you?” I smirk. His vents kick up a bit, betraying his straight face. “Hehehe, it’s nice to see you feeling better. I’m more than willing to accept your challenge.” I walked toward my room. He grabbed my shoulder.

“I wish to see you put it in.” A pause and a stammer. “Uh, to make sure you actually put it in.” He looks shyly to the side.

“Of course.” He picked me up and I squeaked.

“You still aren’t used to being so small in comparison are you hm?” He almost smiles.

“No, I think it’ll be a while before I am,” I state bashfully while removing my cover plating to put the device inside. My legs are on either side of his palm. He leaned slightly closer while I put in the vibrator. A 3D rendering is plastered on my screen; I can see and feel the device enter and settle into place. Once it’s inside, I place my cover back on and minimize the rendering, but it stays in the back of my processor, continuously updating.

“Alright, what would you like me to – heeii! – Ratchet! ~” He cuts me off by turning the vibrator on low, then turning it off almost immediately.

“What would you like me to do, doctor?” I restate, regaining my self-control. I know he doesn’t particularly like the title, but his vents kicked up a notch. A smirk landed on my face.

“Well, doctor~ what is it you need?” I said the title in a more flirtatious tone.

“I, uhm, I need a few data pads with notes from my berthroom.” He stutters, then centers himself.

“Due to my size, I’ll only be able to grab a few at a time, just so you know,” I state as I walk toward his room. The only response was a smirk.

As I arrive, I notice the massive pile on his desk, which sits a foot or so above my head. I look around for a box to climb on to reach the pads. As I was looking for said box, the rendering shifted, and anticipation crept inside me. I took a metaphorical breath to center myself.

‘Aha! A box!’ I push the box, which is about my size, toward the desk and climb onto it. A shift in the device. A low groan falls from me. I stand still to recenter myself again. I climb onto the table, ‘finally’, and start moving the pads down onto the box. As I let the last one down, the vibrator turns on to what I would guess is medium strength. More intense than the last time, at least. A moan rips through my throat before I can stop it. My knees buckle a little, and small whimpers leave me as I try my best to recenter myself against the new sensation. It takes a few ‘breaths’.

I climbed all the way down to the floor. There were ten pads in total.

‘I’ll only reasonably be able to carry two at a time; I don’t want to push myself in this situation. Five trips it is, then.’ I carried two pads back toward Ratchet’s station. They were like the large-print books I had as a kid, just larger than my torso: slightly awkward to hold, but manageable.

“Ratchet! I’ve got your first delivery!” I giggled to myself at the silliness of my statement.

Ratchet just rolls his optics at my antics.

“About time. What took you so long?” he questioned.

‘You know damn well why.’ “I had to find a box to climb on to get to the table,” I say instead of what I wanted to say.

He grabs the pads from me and smirks. “Of course. I sometimes forget that you are so much smaller than me.”

“No, you don’t. You never fail to mention it when you pick me up,” I say before I can stop myself. As soon as it was out of my mouth, the vibrator picked up a notch. My eyes widen slightly at this. I had somewhat forgotten it was there, having pushed it to the back of my mind to try and focus.

I was determined to show a straight face.

“I’ll go and get the rest of the pads,” I state, and walk away before he can bump it up another notch. There was a slight flux in the vibration as I was walking away. There wasn’t much talking as I grabbed and delivered the data pads. Except each time he took them from my hands, he bumped the vibrations a notch higher. I guess that wasn’t medium at the start. It was harder and harder to walk straight and hold in my moans, but damn it if I didn’t try my hardest.

The last straw for my composure came as I delivered the final set of data pads and he bumped it up again. I couldn’t stop the moan that left me or my knees buckling to the floor. On my knees, I panted as I started melting into the pleasure of the vibrations.

“Ratchet, please, I did as you asked, please,” I begged.

“What is it you would like?” he asked.

I looked around to make sure we really were alone in the base. It was late at night and almost everyone was asleep.

An almost-whisper: “Your spike.” My engine rumbled in embarrassment.

“I couldn’t hear you. Could you repeat that?” he asked. I wasn’t sure if he was being sarcastic or legit.

“Your spike, Ratchet. I want it,” I said a bit louder and more confidently.

He chuckles. “As your reward, little one.” He gently scooped me up off the floor and walked toward his berthroom.


Ready to Deploy APPSeCONNECT’s Instant SAP Business One & Salesforce Integration for Growing Businesses

According to Gartner, poor data quality costs organizations an average of USD 12.9 million every year.

Growing businesses often run SAP Business One and Salesforce side by side, and following integration best practices keeps data flowing smoothly between them. Without proper integration, ERP-CRM synchronization problems leave sales orders siloed, inventories misaligned, and hours wasted on manual fixes.

A self-serve integration platform bridges these gaps quickly. Deploying a no-code, pre-built SAP Business One-Salesforce integration package that follows these best practices cuts setup time to under 30 minutes, unlocks real-time data flow, and lets teams focus on growth instead of backend plumbing.

Explore how no-code integration can streamline your SAP and Salesforce systems.

The Growing Need for ERP and CRM Integration

Companies run SAP Business One for operations and Salesforce for sales insights. Yet without ERP-CRM data synchronization, teams juggle spreadsheets and miss updates. A unified connection bridges that gap and boosts efficiency.

Understanding SAP Business One and Salesforce Integration

According to Forrester, integration developers and data architects saw a 35–45% productivity boost from using pre-built connectors and visual designers.

Integrating ERP and CRM means syncing orders, customer records, and inventory between SAP and Salesforce. Without it, businesses hit bottlenecks: stale data, billing errors, and split workflows. Self-service ERP-CRM connectors turn this process into a no-code experience anyone can manage.

Experience how Advancing Eyecare optimized its ecommerce operations and service support with seamless integration powered by APPSeCONNECT.

No-Code Integration Platforms: Revolutionizing ERP-CRM Sync

Modern teams no longer need custom scripts or middleware. No-code integration platforms let you pick systems, map fields visually, and hit deploy, with no developers required. Key benefits include:

The U.S. CRM market size was USD 22.1 billion in 2024 and is projected to reach USD 67.4 billion by 2032 (CAGR 15.1%).