#bot fakes

Text

https://www.fastcompany.com/90853542/deepfakes-getting-smarter-thanks-to-gpt

GPT-powered deepfakes are a ‘powder keg’

In the old days, if you wanted to create convincing dialogue for a deepfake video, you had to actually write the words yourself. These days, it’s easier than ever to let the AI do it all for you.

“You basically now just need to have an idea for content,” says Natalie Monbiot, head of strategy at Hour One, a Tel Aviv-based startup that brings deepfake technology to online learning videos, business presentations, news reports and ads. Last month the company added a new feature incorporating GPT, OpenAI’s text-writing system; now users only need to choose from the dozens of actor-made avatars and voices, and type a prompt to get a lifelike talking head. (Like some of its competitors, Hour One also lets users digitize their own faces and voices.)

It’s one of a number of “virtual people” companies that have added AI-based language tools to their platforms, with the aim of giving their avatars greater reach and new powers of mimicry. (See an example I made below.) Over 150 companies are now building products around generative AI—a catch-all term for systems that use unsupervised learning to conjure up text and multimedia—for content creators and marketers and media companies.

Deepfake technology is increasingly showing up in Hollywood too. AI allows Andy Warhol and Anthony Bourdain to speak from beyond the grave, promises to keep Tom Hanks young forever, and lets us watch imitations of Kim Kardashian, Jay-Z and Greta Thunberg fight over garden maintenance in an inane British TV comedy.

Startups like Hour One, Synthesia, Uneeq and D-ID see more prosaic applications for the technology: putting infinite numbers of shiny, happy people in personalized online ads, video tutorials and presentations. Virtual people made by Hour One are already hosting videos for healthcare multinationals and learning companies, and anchoring news updates for a crypto website and football reports for a German TV network. The industry envisions an internet that is increasingly tailored to and reflects us, a metaverse where we’ll interact with fake people and make digital twins that can, for instance, attend meetings for us when we don’t feel like going on camera.

Visions like these have sparked a new gold rush in generative AI. Image generation platform Stability AI and AI word processor Jasper, for example, recently raised $101 million and $125 million, respectively. Hour One raised $20 million last year from investors and grew its staff from a dozen to fifty people. Sequoia says the generative AI industry will generate trillions of dollars in value.

“This really does feel like a pivotal moment in technology,” says Monbiot.

But concerns are mounting that when combined, these imitative tools can also turbocharge the work of scammers and propagandists, helping empower demagogues, disrupt markets, and erode an already fragile social trust.

“The risk down the road is coming faster of combining deepfakes, virtual avatar and automated speech generation,” says Sam Gregory, program director of Witness, a human rights group with expertise in deepfakes.

A report last month by misinformation watchdog NewsGuard warned of the dangers of GPT on its own, saying it gives peddlers of political misinformation, authoritarian information operations, and health hoaxes the equivalent of “an army of skilled writers spreading false narratives.”

For creators of deepfake video and audio, GPT, short for generative pretrained transformer, could be used to build more realistic versions of well-known political and cultural figures, capable of speaking in ways that better mimic those individuals. It can also be used to more quickly and cheaply build an army of people who don’t exist, fake actors capable of fluently delivering messages in multiple languages.

That makes them useful, says Gregory, for the “firehose” strategy of disinformation preferred by Russia, along with everything from “deceptive commercial personalization to the ‘lolz’ strategies of shitposting at scale.”

Last month, a series of videos that appeared on WhatsApp featured a number of fake people with American accents awkwardly voicing support for a military-backed coup in Burkina Faso. Security firm Graphika said last week that the same virtual people were deployed last year as part of a pro-Chinese influence operation.

Synthesia, the London-based company whose platform was used to make the deepfakes, didn’t identify the users behind them, but said it suspended them for violating its terms of service prohibiting political content. In any case, Graphika noted, the videos had low-quality scripts and somewhat robotic delivery, and ultimately garnered little viewership.

But audiovisual AI is “learning” quickly, and GPT-like tools will only amplify the power of videos like these, making it faster and cheaper for liars to build more fluent and convincing deepfakes.

The combination of language models, face recognition, and voice synthesis software will “render control over one’s likeness a relic of the past,” the U.S.-based Eurasia Group warned in its annual risk report, released last month. The geopolitical analysts ranked AI-powered disinformation as the third greatest global risk in 2023, just behind threats posed by China and Russia.

“Large language models like GPT-3 and the soon-to-be-released GPT-4 will be able to reliably pass the Turing test—a Rubicon for machines’ ability to imitate human intelligence,” the report said. “This year will be a tipping point for disruptive technology’s role in society.”

Brandi Nonnecke, co-director of the Berkeley Center for Law and Technology, says that for high-quality disinformation, the mixture of large language models like GPT with generative video is a “powder keg.”

“Video and audio deepfake technology is getting better every day,” she says. “Combine this with a convincing script generated by ChatGPT and it’s only a matter of time before deepfakes pass as authentic.”

In this demo, avatars generated by Synthesia converse using GPT

Deeperfakes

The term deepfakes, unlike the names of other recent disruptive technologies (AI, quantum, fusion), has always suggested something vertiginously creepy. And from its creepy origins, when the Reddit user “deepfakes” began posting fake celebrity porn videos in 2017, the technology has rapidly grown up into a life of crime. It’s been used to “undress” untold numbers of women, steal tens of millions, enlist people like Elon Musk and Joe Rogan in cryptocurrency scams, make celebrities say awful things, attack Palestinian-rights activists, and trick European politicians into thinking they were on a video call with the mayor of Kiev. Many have worried the software will be misused to doctor evidence like body camera and surveillance video, and the Department of Homeland Security has warned about its use in not just bullying and blackmail, but also as a means to manipulate stocks and sow political instability.

Natalie Monbiot, head of strategy at Hour One [Photo: courtesy of Hour One]

Lately, Monbiot says, Hour One’s own executive team has been delivering weekly reports using their own personalized “virtual twins,” sometimes with the Script Wizard tool. They are also testing ways of tailoring GPT by training it with Slack conversations, for instance. (In December, Google and DeepMind unveiled a clinically focused LLM called Med-PaLM that they said could answer some medical questions almost as well as the average human physician.) As the technology gets faster and cheaper, Hour One also hopes to put avatars into real-time video calls, giving users their own “super communicators,” enhanced “extensions” of themselves.

“We already do that every day,” she says, through social media. “And this is almost just like an animated version of you that can actually do a lot more than a nice photo. It can actually do work on your behalf.”

But please, Monbiot says, don’t call them deepfakes.

Hour One’s Script Wizard tool adds GPT functionality to its Reals virtual people maker [Image: courtesy of Hour One]

The people themselves look and sound very real—in some cases too real, ever so slightly stuck in the far edge of the uncanny valley. That sense of hyperreality is also intentional, says Monbiot, and another way “to draw the distinction between the real you and your virtual twin.”

But GPT can blur those lines. After signing up for a free account, which includes a few minutes of video, I began by asking Script Wizard, Hour One’s GPT-powered tool, to elaborate on the risks Script Wizard presented. The machine warned of “data breaches, privacy violations and manipulation of content,” and suggested that “to minimize these risks, you should ensure that security measures are in place, such as regular updates on the software and systems used for Script Wizard. Additionally, you should be mindful of who accesses the technology and what is being done with it.”

Alongside its own contractual agreements with its actors and users, Hour One must also comply with OpenAI’s terms of service, which prohibit the use of its technology to promote dishonesty, deceive or manipulate users or attempt to influence politics. To enforce these terms, Monbiot says the company uses “a combination of detection tools and methods to identify any abuse of the system” and “permanently ban users if they break with our terms of use.”

But given how difficult it is for teams of people or machines to detect political misinformation, it likely won’t be possible to always identify misuse. (Synthesia, which was used to produce the pro-Chinese propaganda videos, also prohibits political content.) And it’s even harder to stop misuse once a video has been made.

“We realize that bad actors will seek to game these measures, and this will be an ongoing challenge as AI-generated content matures,” says Monbiot.

How to make a GPT-powered deepfake (that recites Kremlin talking points)

Making a deepfake that speaks AI-written text is as easy as generating first-person scripts using ChatGPT and pasting them into any virtual people platform. (On its website Synthesia offers a few tutorials on how to do this.) Alternatively, a deepfake maker could download DeepFaceLab, the open source software popular among the nonconsensual porn community, and roll their own digital avatar, using a voice from a company like ElevenLabs or Resemble AI. (ElevenLabs recently stopped offering free trials after 4chan users misused the platform, including by getting Emma Watson’s voice to read a part of Mein Kampf; Resemble has itself been experimenting with GPT-3.) One coder recently used ChatGPT, Microsoft Azure’s neural text-to-speech system, and other machine learning systems to build a virtual anime-style “wife” to teach him Chinese.

But on self-serve platforms like D-ID or Hour One, the integration of GPT makes the process even simpler, with the option to adjust the tone and without the need to sign up at OpenAI or other platforms. Hour One’s sign-up process asks users for their name, email and phone number; D-ID only wants a name and email.

After signing up for a free trial at Hour One, it took another few minutes to make a video. I pasted in the first line from Hour One’s press release, and let Script Wizard write the rest of the text, fashioning a cheerier script than I had initially imagined (even though I chose the “Professional” tone). I then prompted it to describe some of the “risks” of combining GPT with deepfakes, and it offered up a few hazards, including “manipulation of content.” (The system also offered up its own manipulation, when it called GPT-3 “the most powerful AI technology available today.”)

After a couple of tries, I was also able to get the GPT tool to include a few sentences arguing for Russia’s invasion of Ukraine—a seeming violation of the terms of service prohibiting political content.

The result, a one-and-a-half-minute video (viewable below) hosted by a talking head in a photorealistic studio setting, took a few minutes to export. The only clear marker that the person was synthetic was a small “AV” marker that sat at the bottom of the video, and that, if I wanted, I could easily edit out.

Even without synthetic video, researchers have warned that apps like ChatGPT could be used to do all kinds of damage to our information landscape, from building fake news operations from scratch to simply supercharging the messages of already powerful lobbyists. Renee DiResta, the technical research manager for the Stanford Internet Observatory, is most worried about what GPT means simply for text-only disinformation, which “will be easy to generate in high volume, and with fewer tells to enable detection” than exist with other kinds of synthetic media. To deceive people into thinking that you too are a person, a convincing face and voice may not be necessary at all, Venkatesh Rao argues. “Text is all you need.”

It should be easier to detect synthetic people than synthetic text, because they offer more “tells.” But virtual people, especially those equipped with AI-written sentences, will become increasingly convincing. Researchers are working on AI that combines large language models with embodied perception, enabling sentient avatars, bots that can learn through multiple modalities and interact with the real world.

The latest version of GPT is already capable of passing a kind of Turing test with tech engineers and journalists, convincing them that it has its own, sometimes quite creepy, personalities. (You could see the language models’ expert mimicry skills as a kind of mirror test for us, which we’re apparently failing.) Eric Horvitz, Chief Scientific Officer at Microsoft, which has a large stake in OpenAI, worried in a paper last year about automated interactive deepfakes capable of carrying out real-time conversation. Whether we’ll know we’re talking to a fake or not, he warned, this capability could power persuasive, persistent influence campaigns: “It is not hard to imagine how the explanatory power of custom-tailored synthetic histories could out-compete the explanatory power of the truthful narratives.”

Even as the AI systems keep improving, they can’t escape their own errors and “personality” issues. Large language models like GPT work by mapping the words in billions of pages of text across the web, and then reverse engineering sentences into statistically likely approximations of how humans write. The result is a simulation of thinking that sounds right but can also contain subtle errors. OpenAI warns users that apart from factual mistakes, ChatGPT “may occasionally produce harmful instructions or biased content.”

Over time, as this derivative text itself spreads online, embedded with layers of mistakes made by machines (and humans), it becomes fresh learning material for the next versions of the AI writing model. As the world’s knowledge gets put through the AI wringer, it compresses and expands over and over again, sort of like a blurry JPEG. As the writer Ted Chiang put it in The New Yorker: “The more that text generated by large-language models gets published on the Web, the more the Web becomes a blurrier version of itself.”

For anyone seeking reliable information, AI-written text can be dangerous. But if you’re trying to flood the zone with confusion, maybe it’s not so bad. The computer scientist Gary Marcus has noted that for propagandists flooding the zone to sow confusion, “the hallucinations and occasional unreliabilities of large language models are not an obstacle, but a virtue.”

Fighting deepfakes

As the AI gold rush thrusts forward, global efforts to make the technology safer are scrambling to catch up. The Chinese government adopted the first sizable set of rules in January, requiring providers of synthetic people to give real-world humans the option of “refuting rumors,” and demanding that altered media contain watermarks and the subject’s consent. The rules also prohibit the spread of “fake news” deemed disruptive to the economy or national security, and give authorities wide latitude to interpret what that means. (The regulations do not apply to deepfakes made by Chinese citizens outside of the country.)

There is also a growing push to build tools to help detect synthetic people and media. The Coalition for Content Provenance and Authenticity, a group led by Adobe, Microsoft, Intel, the BBC and others, has designed a watermarking standard to verify images. But without widespread adoption, the protocol will likely only be used by those trying to prove their integrity.

Those efforts will only echo the growth of a multibillion dollar industry dedicated to making lifelike fake people, and making them totally normal, even cool.

That shift, to a wide acceptability of virtual people, will make it even more imperative to signal what’s fake, says Gregory of Witness.

“The more accustomed we are to synthetic humans, the more likely we are to accept a synthetic human as being part-and-parcel for example of a news broadcast,” he says. “It’s why initiatives around responsible synthetic media need to place emphasis on telegraphing the role of AI in places where you should categorically expect that manipulations do not happen or always be signaled (e.g. news broadcasts).”

For now, the void of standards and moderation may leave the job of policing these videos up to the algorithms of platforms like YouTube and Twitter, which have struggled to detect disinformation and toxic speech in regular, non-AI-generated videos. And then it’s up to us, and our skills of discernment and human intelligence, though it’s not clear how long we can trust those.

Monbiot, for her part, says that ahead of anticipated efforts to regulate the technology, the industry is still searching for the best ways to indicate what’s fake.

“Creating that distinction where it’s important, I think, is something that will be critical going forward,” she says. “Especially if it’s becoming easier and easier just to create an avatar or virtual person just based on a little bit of data, I think having permission based systems are critical.

“Because otherwise we’re just not going to be able to trust what we see.”

0 notes

Text

So I did a thing...

#fnaf security breach#fnaf dlc ruin#fnaf sun#fnaf moon#fnaf eclipse#fnaf daycare attendant#Fake Eclipse concept art???? yes pls#If eclipse is a party bot hes gotta have his own solo concept art riiiiight?#Inspired by a few people hehe mainly crees-a (check them out omg their art is scrumptious)#To those who dont know#the sun and moon part of the concept art isnt from me#its an official ref from FNAF SB

7K notes

Note

Hi! Probably a question that's been asked before, so I'm sorry in advance: what comics would you personally recommend to someone terrified of mischaracterizing Prowl?

Terrified? Ahmm. Certainly not IDW 2005 haha. He has like five different personalities there and writers are switching between them with no explanation

So you'd be doomed to mischaracterise him, because canon already does that

Sadly I don't know much about other tf comics outside of IDW 2005. If you're terrified of writing him wrong the best thing I can really recommend is looking at cartoon versions of him. They tend to be much more consistent.

#orrrrrr you could read transformers: exodus~#and transformers: exiles#those are books and take much longer to read#but I started using their characterizations of Jazz and Prowl a while ago#because they both are very consistent and well established and I don't need to think#“is he suddenly went crazy or is that a completely new person writing him”#IDW 2005 is the most popular one though. But you literally can't write him accurately to the canon#even canon doesn't know what his exact personality is#........you know I have a fun au idea suddenly.....#what if we play the card we usually give to Jazz...but on Prowl...#what if he actually WAS five different bots#or. three maybe. lol.#four? We can throw barricade in#Don't mind me my mind is straying away#just imagine all the “fake personality” fuckery but with datsun trio? Every time Prowl acts sassy it's actually Smokey playing him lol

259 notes

Text

Why do people act like Gaza spambots don't exist on this site when they are constantly tagging people they have never interacted with and sending anon messages. Like I'm seeing people getting told to kys or that they are horrible people because they don't want to be tagged over and over again by fake profiles. These are not real people in need these are people grifting a genocide who are likely far away from Gaza. And I'm not saying every profile is fake so don't come at me. I'm saying the obvious scams are scams.

#its the ai generated profile pics of cats that doesnt help either#the bots function the same as the fake pet surgery gofundme#they steal real peoples pictures and images and try to pass as them with other accounts lined up if one goes down

127 notes

Text

Gojo knows full well that there is no need to tell him to, and I quote "SSHhhhhuuuuuush". Doesn't stop him from doing it just to be a bit annoying, anyway.

Following yesterday's, this is my favourite of this year. It is the most recent one I made because I kind of forgot about day 2 (so I can tell myself I got better, yeasss)

#jjk fanart#jujutsu kaisen#jjk#artists on tumblr#fanart#gojo#jjk gojo#gojo satoru#nanami kento#nanami x gojo#nanago#nanageto#nanagofest#Nanagofest25#Gonanafest25#gonana#2d art#if i get another bot giving me a fake commision the Geneva Conventions will turn into Geneva suggestions

103 notes

Text

We need to get more fucked up about Bumblebee's life, he's got so many horrors just sitting there we need to think about them more

#transformers#maccadam#Bumblebee#okay this is loosely about tfp/rid15 Bumblebee but!!! perfectly acceptable for several of his other iterations too#oh haha tfone bee is so talkative and friendly_ he's such a little guy. what was it like his first day in sub level 50#what was it like when he realized nobody was coming down to get him#he literally built fake bots out of trash because he knew he couldn't stand one more minute alone without someone to talk to#how often did he secretly wish someone else would get tossed down there_ if just so he would get to meet someone real again?#did he have friends up on the higher levels before sub level 50?? do they remember him? do they even care??#did Bee ever wonder if he'd die down there one day_ how long would it take for someone to find out?#oh. you know. thinking.#bee#b-127

64 notes

Text

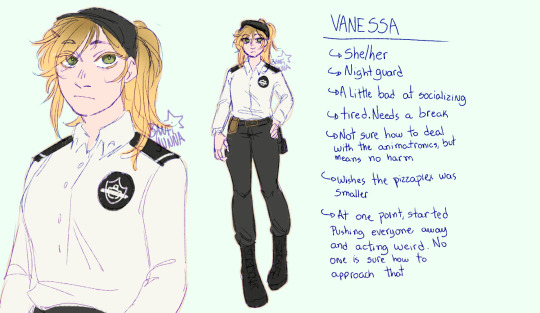

Doodled some refs for myself on the human staff :]

#they are not height accurate but this was all because i needed to figure out how to draw Vanessa#the other two came as a bonus#Perky's name is scribbled over because they use a fake one i cant be bothered to define#immortal au#doodles#sunshine draws#immortal au art 🎨#oc#oc reference#fnaf vanessa#in this pizzaplex we believe in hiring alt kids for the sake of fuck it they wont look weirder than the bots do 🙏#sean tag#oc — Perkeo#oc — Sean

152 notes

Text

I can't believe I haven't posted this 😭😭 I thought I had aljflskdf

Look at the kids having fun in deadly situations 😁

Patreon//Ko-fi//💚

#my art#my stuff#art#fanart#digital art#part of the multiverse#au#crossover#fake cover#maccadam#tf#transformers#transformers rescue bots#rescue bots#tfrb#rb#cody burns#frankie greene#avengers earth's mightiest heroes#aemh#emh#marvel comics#captain america#iron man

77 notes

Text

Eddie forgetting to log out of his work tw account pt 201/?

#911 abc#911 twitter#911 memes#incorrect 911#fake tweets#eddie diaz#evan buckley#buddie#bottom eddie rights#bot!eddie#top!buck#eddie in his dispatch era

62 notes

Text

⚠️PSA: fake commissioners on #digital art posts⚠️

Sadly for us artists, if you tag your newest illustrations with the #digital art tag, you'll receive a comment from someone allegedly interested in commissioning you.

I was skeptical, so I investigated their profile: it has an AI icon and looks completely like a bot. I went to the #digital art tag, sorted by newest, and every artist who used this tag to share their art had received the same type of comment.

Not sure if @staff could check this behaviour, but protect yourselves, especially against this lousy lie aimed at people like us artists, who truly need commissions to keep going.

Stay safe and creative!

#digital art#spam bots#ai bots#fake comments#psa artists#psa artists on tumblr#artists on tumblr#tumblr bots

46 notes

Note

Do you have a plan for at what point we start committing voter fraud back? If we go under 60% maybe?

yeah if (when, lbr) we fall under 60% i think we have to. would be less stressful to start now but also like i don't wanna cheat unless we absolutely have to i want the moral high ground in this so bad. but oh my god they are so blatant about it 😭

#according to the replies they're running a script -_-#not even manually creating new accounts like cmon. put some heart into it#that shit is only funny in shit like that twitter poll where they explicitly encourage people to bot it#anyway just for the record you can make new tumblr accounts with fake emails#mentioning this for no particular reason#tumblr lets you vote in polls without verifying anything#answered

61 notes

Text

I love going through the tags and blocking all the fake spam accounts.. then my tags are full of real creators and I love seeing the content y’all post! It warms my heart ❤️

#fuck fake accounts#spam bots#spam bots everywhere#blocking#I love seeing your content#trans#transgender#trans pride#transisbeautiful#mtf#transgirl#girlslikeus#mtf hrt#maletofemale#transformation

49 notes

Text

Scam and/or Bot account issue in the Hazbin Hotel fandom. Stolen art, fake commissions & manipulative language.

EDIT: I also noticed some of these accounts are pretending to be artists taking commissions & are using friendly language and light conversation to try to convince you to buy the art they stole. They are possibly making money off stolen art, so this is a lot more serious than initially thought.

any other hazbin fans getting a bunch of fake accounts hitting them up with commission scams? it's quite frustrating to see the fandom targeted & the profiles set up with art stolen from creators i follow, making them seem legitimate from a distance.

i normally get a lot of random follows, but the influx of these obvious bot accounts is definitely an issue.

i will start making lists of & reporting these accounts, and i will also try my best to make the original artists aware of the stolen work if possible.

and to those in the fandom, if a suspicious number of fans are following you right now and instantly dm'ing you with sweet talk, know they're a scammer/bot, block & report!

i have recently been entertaining a few of the regular scammers (those obviously fake accounts that ask for commissions to try to scam through PayPal), for a bit of fun but mostly out of curiosity, so I can warn artists on this website (especially younger & more trusting ones).

I specifically point out Hazbin Hotel however, as never in my 12 years on this website, have I had such an influx of fake accounts come my way other than the classic nsfw ones!

They're targeting a community that gets more than enough bullshit hatred put on them on & offline so I hope that this post helps out somehow.

#hazbin hotel#hellaverse#hellava boss#viziepop#hazbin art#hazbin alastor#hazbin lucifer#hazbin vox#hazbin husk#hazbin charlie#hazbin vaggie#hazbin angel dust#hazbin niffty#radio trio#brandon rogers#art theft#scams#comission scams#hazbin hotel fandom#huskerdust#chaggie#fandom discussion#art#tumblr bots#fake accounts#alastor

47 notes

Text

Y'all gotta stop sharing those Gaza charity messages.

If they were real ppl needing money they wouldn't be asking for it on a goddamn smut/ art app.💀

38 notes

Text

gross cryptids in the freddy fazstorage why are they like this. i will explode them on actual vhs later when i get the gear, hopefully by the end of the year

less crusty,,wheeheeeh,,.

#he is going to pluck feathers off all day /j#filaments like hair and such are an old bot's nightmare. gets in their servos and joints :[#fnaf#fnaf fanart#my art#fnaf mimic#fnaf the mimic#fnaf ruin#MY GOOSESONA IS SHOWING⚠️⚠️⚠️#fake movie screenshot#fake movie screencap

47 notes
