#cellular automata physics


Text

Ask A Genius 1303: Cellular Automata, Discrete vs. Continuous Models, and Algorithmic Information Theory

Scott Douglas Jacobsen: Did you want to do any science stuff? Cellular automata is a discrete, rather than continuous, computational model dealing with grids of cells. In this case, these four-dimensional cells evolve based on rules. This is based on Stephen Wolfram’s book A New Kind of Science. He argues that simple rules can generate complex systems and suggests that this could model the…

#algorithmic information theory#cellular automata physics#discrete vs continuous universe#Rick Rosner


Text

I'm working on a physics engine like The Powder Toy right now, and I'm making pretty good progress. I'm making it from scratch with the Rust programming language.

Performance seems really good, with the tile rules processing capping at only about 500-700 microseconds. Because of that, I can definitely afford to fit in a whole lot of advanced behaviors, like buoyancy, more realistic collisions, and stuff like pressure and heat without bringing performance down to a crawl.

I want to make a series on YouTube of me developing this program and go over how it works and the techniques I used and such. I'm currently working on the intro video but editing it is really time consuming and hard so I'm unsure when that will be uploaded (also the narration is so hard to do, why can I only not speak properly when I am recording 😭)


Text

Could the Universe be a giant quantum computer?

This article mentions that Edward Fredkin died in June, and this is the first I read of it. His work was the basis of my undergrad thesis.

First John Conway died of COVID, now this...


Text

a visit to the house of the robot priests

there are a lot of things written about LLMs, many of them dubious. some are interesting tho. since my brain has apparently decided that it wants to know what the deal is, here's some stuff i've been reading.

most of these are pretty old (in present-day AI research time) because I didn't really want to touch this tech for the last couple of years. other people weren't so reticent and drew their own conclusions.

wolfram on transformers (2023)

stephen wolfram's explanation of transformer architecture from 2023 is very good, and he manages to keep the usual self-promotional "i am stephen wolfram, the cleverest boy" stuff to a manageable level. (tho to be fair on the guy, i think his research into cellular automata as models for physics is genuinely very interesting, and probably worth digging into further at some point, even if just to give some interesting analogies between things.) along with 3blue1brown, I feel like this is one of the best places to get an accessible overview of how these machines work and what the jargon means.

the next couple of articles were kindly sent to me by @voyantvoid as a result of my toying around with LLMs recently. they're taking me back to LessWrong. here we go again...

simulators (2022)

this long article 'simulators' for the 'alignment forum' (a lesswrong offshoot) from 2022 by someone called janus - a kind of passionate AI mystic who runs the website generative.ink - suffers a fair bit from having big yud as one of its main interlocutors, but in the process of pushing back on rat received wisdom it does say some interesting things about how these machines work (conceiving of the language model as something like the 'laws of motion' in which various character-states might evolve). notably it has a pretty systematic overview of previous narratives about the roles AI might play, and the way the current generation of language models is distinct from them.

just, you know, it's lesswrong, I feel like a demon linking it here. don't get lost in the sauce.

the author, janus, evidently has some real experience fiddling with these systems and exploring the space of behaviour, and is in dialogue with other people who are equally engaged. indeed, janus and friends seem to have developed a game of creating gardens of language models interacting with each other, largely for poetic/play purposes. when you get used to the banal chatgpt-voice, it's cool to see that the models have a territory that gets kinda freaky with it.

the general vibe is a bit like 'empty spaces', but rather than being a sort of community writing prompt, they're probing the AIs and setting them off against each other to elicit reactions that fit a particular vibe.

the generally aesthetically-oriented aspect of this micro-subculture seems to be a bit of a point of contention from the broader lesswrong milieu; if I may paraphrase, here janus responds to a challenge by arguing that they are developing essentially an intuitive sense for these systems' behaviour through playing with them a lot, and thereby essentially developing a personal idiolect of jargon and metaphors to describe these experiences. I honestly respect this - it brings to mind the stuff I've been on lately about play and ritual in relation to TTRPGs, and the experience of graphics programming as shaping my relationship to the real world and what I appreciate in it. as I said there, computers are for playing with. I am increasingly fixating on 'play' as a kind of central concept of what's important to me. I really should hurry up and read wittgenstein.

thinking on this, I feel like perceiving LLMs, emotionally speaking, as eager roleplayers made them feel a lot more palatable to me and led to this investigation. this relates to the analogy between 'scratchpad' reasoning about how to interact socially generated by recent LLMs like DeepSeek R1, and an autistic way of interacting with people. I think it's very easy to go way too far with this anthropomorphism, so I'm wary of it - especially since I know these systems are designed (rather: finetuned) to have an affect that is charming, friendly and human-like in order to be appealing products. even so, the fact that they exhibit this behaviour is notable.

three layer model

a later evolution of this attempt to philosophically break down LLMs comes from Jan Kulveit's three-layer model of types of responses an LLM can give (its rote trained responses, its more subtle and flexible character-roleplay, and the underlying statistics model). Kulveit raises the kind of map-territory issues this induces, just as human conceptions of our own thinking tend to shape the way we act in the future.

I think this is probably more of just a useful sorta phenomenological narrative tool for humans than a 'real' representation of the underlying dynamics - similar to the Freudian superego/ego/id, the common 'lizard brain' metaphor and other such onion-like ideas of the brain. it seems more apt to see these as rough categories of behaviour that the system can express in different circumstances. Kulveit is concerned with layers of the system developing self-conception, so we get lines like:

On the other hand - and this is a bit of my pet idea - I believe the Ground Layer itself can become more situationally aware and reflective, through noticing its presence in its sensory inputs. The resulting awareness and implicit drive to change the world would be significantly less understandable than the Character level. If you want to get a more visceral feel of the otherness, the Ocean from Lem's Solaris comes to mind.

it's a fun science fiction concept, but I am kinda skeptical here about the distinction between 'Ground Layer' and 'Character Layer' being more than an approximate description of the different aspects of the model's behaviour.

at the same time, as with all attempts to explore a complicated problem and find the right metaphors, it's absolutely useful to make an attempt and then interrogate how well it works. so I respect the attempt. since I was recently reading about early thermodynamics research, it reminds me of the period in the late 18th and early 19th century where we were putting together a lot of partial glimpses of the idea of energy, the behaviour of gases, etc., but had yet to fully put it together into the elegant formalisms we take for granted now.

of course, psychology has been trying this sort of narrative-based approach to understanding humans for a lot longer, producing a bewildering array of models and categorisation schemes for the way humans think. it remains to be seen if the much greater manipulability of LLMs - cf. interpretability research - lets us get further.

oh hey it's that guy

tumblr's own rob nostalgebraist, known for running a very popular personalised GPT-2-based bot account on here, speculated on LW on the limits of LLMs and the ways they fail back in 2021. although he seems unsatisfied with the post, there's a lot in here that's very interesting. I haven't fully digested it all, and tbh it's probably one to come back to later.

the Nature paper

while I was writing this post, @cherrvak dropped by my inbox with some interesting discussion, and drew my attention to a paper in Nature on the subject of LLMs and the roleplaying metaphor. as you'd expect from Nature, it's written with a lot of clarity; apparently there is some controversy over whether it built on the ideas of the Cyborgism group (Janus and co.) without attribution, since it follows a very similar account of a 'multiverse' of superposed possible characters and the AI as a 'simulator' (though it does in fact cite Janus's Simulators post... is this the first time LessWrong gets cited in Nature? what a world we've ended up in).

still, it's honestly a pretty good summary of this group's ideas. the paper's thought experiment of an LLM playing "20 questions" and determining what answer to give at the end, based on the path taken, is particularly succinct and insightful for explaining this 'superposition' concept.

accordingly, they cover, in clear language, a lot of the ideas we've discussed above - the 'simulator' of the underlying probabilistic model set up to produce a chain token by token, the 'simulacrum' models it acts out, etc. etc.

one interesting passage concerns the use of first-person pronouns by the model, emphasising that even if it expresses a desire for self-preservation in the voice of a character it is roleplaying, this is essentially hollow; the system as a whole is not wrong when it says that it does not actually have any desires. i think this is something of the crux of why LLMs fuck with our intuitions so much. you can't accurately say that an LLM is 'just telling you what (it thinks) you want to hear', because it has no internal model of you and your wants in the way that we're familiar with. however, it will extrapolate a narrative given to it, and potentially converge into roleplaying a character who's trying to flatter you in this way.

how far does an LLM take into account your likely reaction?

in theory, an LLM could easily continue both sides of the conversation, instead of having a special token that signals to the controlling software to hand input back to the user.

it's unclear whether it performs prediction of your likely responses and then reasons off that. the 'scratchpad' reasoning generated by deepseek-r1 (a sort of hacky way to get a feedback loop on its own output, that lets the LLM do more complex computation) involves fairly explicit discussion of the likely effects of certain language choices. for example, when I asked why it always seems to begin its chain of thought with 'alright', the resulting chain of thought included:

Next, explaining that "Alright" is part of my training helps them understand that it's a deliberate choice in my design. It's meant to make interactions feel more natural and conversational. People appreciate when communication feels human-like, so this explanation helps build trust and connection. I should also mention that it's a way to set the tone for engagement. Starting with "Alright" makes me sound approachable and ready to help, which is great for user experience. It subtly signals that we're entering a conversational space where they can ask questions or share thoughts freely.

however, I haven't personally yet seen it generate responses along the lines of "if I do x, the user would probably (...). I would rather that they (...). instead, I should (...)". there is a lot of concern getting passed around LessWrong about this sort of deceptive reasoning, and that seems to cross over into the actual people running these machines. for example OpenAI (a company more or less run by people who are pretty deep in the LW-influenced sauce) managed to entice a model to generate a chain of thought in which it concluded it should attempt to mess with its retraining process. they interpreted it as the model being willing to 'fake' its 'alignment'.

while it's likely possible to push the model to generate this kind of reasoning with a suitable prompt (I should try it), I remain pretty skeptical that in general it is producing this kind of 'if I do x then y' reasoning.

on Markov chains

a friend of mine dismissively referred to LLMs as basically Markov chains, and in a sense, she's right: they have a graph of states, and transfer between states with certain probabilities, which is what a Markov chain is. however, an LLM is doing something far more complex than simple n-gram-based prediction based on the last few words!

for the 'Markov chain' description to be correct, we need a node in the graph for every single possible string of tokens that fits within the context window (or at least, for every possible internal state of the LLM when it generates tokens), and also considerable computation is required in order to generate the probabilities. I feel like that computation, which compresses, interpolates and extrapolates the patterns in the input data to guess what the probability would be for novel input, is kind of the interesting part here.
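to make the contrast concrete, here's a minimal sketch of the 'simple' kind of Markov chain, the n-gram sort, in Python. everything in it is made up for illustration (the function names, the toy corpus, the choice of `order=2`), and it's not how any real LLM works. the state is just the last two tokens, and the 'computation' of a probability is a table lookup:

```python
import random
from collections import Counter, defaultdict

def build_ngram_chain(tokens, order=2):
    """Map each state (the last `order` tokens) to counts of the token that followed it."""
    chain = defaultdict(Counter)
    for i in range(len(tokens) - order):
        state = tuple(tokens[i:i + order])
        chain[state][tokens[i + order]] += 1
    return chain

def next_token_probs(chain, state):
    """Transition probabilities out of `state`; an empty dict if the state was never seen."""
    counts = chain.get(tuple(state))
    if not counts:
        return {}
    total = sum(counts.values())
    return {tok: n / total for tok, n in counts.items()}

def generate(chain, start, length, rng=None):
    """Walk the chain from `start`, sampling each next token from the lookup table."""
    rng = rng or random.Random(0)
    out = list(start)
    order = len(start)
    for _ in range(length):
        probs = next_token_probs(chain, out[-order:])
        if not probs:  # unseen state: a plain n-gram chain has nothing to say
            break
        toks = list(probs)
        out.append(rng.choices(toks, weights=[probs[t] for t in toks])[0])
    return out

# toy corpus, purely illustrative
corpus = "the cat sat on the mat and the cat ate the fish".split()
chain = build_ngram_chain(corpus, order=2)
```

the thing to notice is the `if not probs: break` line: hand this chain a pair of words it never saw together and it simply has no row in its table. an LLM viewed as a Markov chain has no such table to look up; the probabilities for a never-before-seen context have to be computed, which is the part I'm calling interesting.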

gwern

a few names show up all over this field. one of them is Gwern Branwen. this person has been active on LW and various adjacent websites such as Reddit and Hacker News at least as far back as around 2014, when david gerard was still into LW and wrote them some music. my general impression is of a widely read and very energetic nerd. I don't know how they have so much time to write all this.

there is probably a lot to say about gwern but I am wary of interacting too much because I get that internal feeling about being led up the garden path into someone's intense ideology. nevertheless! I am envious, as I believe I may have said previously, of how much shit they've accumulated on their website, and the cool hover-for-context javascript gimmick which makes the thing even more of a rabbit hole. they have information on a lot of things, including art shit - hell they've got anime reviews. usually this is the kind of website I'd go totally gaga for.

but what I find deeply offputting about Gwern is they have this bizarre drive to just occasionally go into what I can only describe as eugenicist mode. like when they pull out the evopsych true believer angle, or random statistics about mental illness and "life outcomes". this is generally stated without much rhetoric, just casually dropped in here and there. this preoccupation is combined with a strangely acerbic, matter of fact tone across much of the site which sits at odds with the playful material that seems to interest them.

for example, they have a tag page on their site about psychedelics that is largely a list of research papers presented without comment. what does Gwern think of LSD - are they as negative as they are about dreams? what theme am I to take from these papers?

anyway, I ended up on there because the course of my reading took me to this short story. i don't think it tells me much about anything related to AI besides gwern's worldview and what they're worried about (a classic post-cyberpunk scenario of 'AI breaking out of containment'), but it is impressive in its density of references to interesting research and internet stuff, complete with impressively thorough citations for concepts briefly alluded to in the course of the story.

to repeat a cliché, scifi is about the present, not the future. the present has a lot of crazy shit going on in it! apparently me and gwern are interested in a lot of the same things, but we respond to very different things in them.

why

I went out to research AI, but it seems I am ending up researching the commenters-about-AI.

I think you might notice that some of the characters who appear in this story are like... weirdos, right? whatever any one person's interest is, they're all kind of intense about it. and that's kind of what draws me to them! sometimes I will run into someone online who I can't easily pigeonhole into a familiar category, perhaps because they're expressing an ideology I've never seen before. I will often end up scrolling down their writing for a while trying to figure out what their deal is. in keeping with all this discussion of thought in large part involving a prediction-sensory feedback loop, usually what gets me is that I find this person surprising: I've never met anyone like this. they're interesting, because they force me to come up with a new category and expand my model of the world. but sooner or later I get that category and I figure out, say, 'ok, this person is just an accelerationist, I know what accelerationists are like'.

and like - I think something similar happened with LLMs recently. I'm not sure what it was specifically - perhaps the combo of getting real introspective on LSD a couple months ago leading me to think a lot about mental representations and communication, as well as finding that I could run them locally and finally getting that 'whoah these things generate way better output than you'd expect' experience that most people already did. one way or another, it bumped my interest in them from 'idle curiosity' to 'what is their deal for real'. plus it like, interacts with recent fascinations with related subjects like roleplaying, and the altered states of mind experienced with e.g. drugs or BDSM.

I don't know where this investigation will lead me. maybe I'll end up playing around more with AI models. I'll always be way behind the furious activity of the actual researchers, but that doesn't matter - it's fun to toy around with stuff for its own interest. the default 'helpful chatbot' behaviour is boring, I want to coax some kind of deeply weird behaviour out of the model.

it sucks so bad that we have invented something so deeply strange and the uses we put it to are generally so banal.

I don't know if I really see a use for them in my art. beyond AI being really bad vibes for most people I'd show my art to, I don't want to deprive myself of the joy of exploration that comes with making my own drawings and films etc.

perhaps the main thing I'm getting out of it is a clarification about what it is I like about things in general. there is a tremendous joy in playing with a complex thing and learning to understand it better.


Text

Known for developing the first programmable electric computer in Berlin in 1938, Zuse proposed extending the logic of cellular automata to physics and the general laws of the universe. His 1967 book, Rechnender Raum (Calculating Space), considered the universe to be composed of discrete spatial units that self-organise as cellular automata — that is, according to the status and behaviour of neighbouring units. According to Zuse, the energy interactions between atoms can be formalised as units of computation and, following this approach, one could rewrite the laws of physics — for instance gravitation — in a combinatorial fashion. Matteo Pasquinelli, 2023. The Eye of the Master: A Social History of Artificial Intelligence. London: Verso.


Note

Hello Tis the book anon, are u bready for the final shape in less than a week (holy shit 5 days???)

GOD. im very very excited for the new enemy types (i wasn't playing the last time we got a new faction in forsaken! i started tail end of worthy) i think a lot of the design is VERY cool and im very concerned abt the potential of unstop husks. nightmare fuel.

i think prismatic is gonna be fun- ive seen ppl worried abt it breaking the game or whatever BUT i think bungie is in general pretty good with balance (for the casual player) and when it isnt balanced idk i think its usually unbalanced in a super fun way. i do NOT have the minmaxer's spirit though so i am sure i will not be utilizing its full potential "correctly" but ill be having fun and thats what im there for.

story-wise... eh? i think they ARE going to land the story they are telling. i just dont *like* the story they are telling. it feels very marvel to me (derogatory) and i dislike immensely the literalization of the concept of the final shape. (oooh they've been ~finalized~ okay why dont we just have a thanos snap situation. what is this *doing* that is different from that).

not to sound like a broken record but i think seth dickinson was putting down something MUCH more interesting when they wrote BoS and unveiling than. this. its very NEAT, it makes sense with the story beats they've been working on, but i think it... flattens the delightfully complicated metaphysics of destiny in a way that pisses me off. i dont think the final shape is meant to be literal. i think the final shape is a way of being like the sword logic is. i think the witness is a neat little deus ex and i think they will tell the story of the witness as they see it Just Fine, but i dont have to like it.

like, i dont have an easily articulatable alternative but im ALWAYS rotating in my mind that the two (three?) beings that escaped the flower game are the vex and the worm gods (& ahamkara?). and i DONT think unveiling is written by the witness. i WILL die on that fucking hill. swagless grayscale megamind "no bitches" mcu villain did NOT write that insidious tempting little love letter.

anyway. some relevant lore excerpts connected by red string but no real arguments in my head:

They're majestic, I said. They have no purpose except to subsume all other purposes. There is nothing at the center of them except the will to go on existing, to alter the game to suit their existence. They spare not one sliver of their totality for any other work. They are the end. (The Final Shape; Unveiling)

&

SHAPES AND GLIDERS. I dreamt of existence as a game of cellular automata. In this metaphor, there were only two things: shapes in the game world and the rules of the game world. The rules were the rules of Life and Death. I understood that the sword was the desire to escape existence as a shape in the game and to become the rule that made the shapes. This rule said only "live" or "die"—it had no other outputs. It could not keep secrets. Against it was the desire to become a shape so complex that it could within itself play other games. (Tyrannocide I)

like! the final shape is a shape of THOUGHT. a philosophy of existence *made* real in the way that the vex and the hive affect the worlds around them and exert that philosophy through force of will, bc the metaphysics of the destiny universe allows and operates based on that leveraging of will over the physical world. the philosophical and the real are DEEPLY entwined in a way thats REALLY REALLY INTERESTING and i think its BORING if the final shape the darkness/the witness (bc i do think bungie is conflating them! but imo the traveller is not the gardener and the witness is not the winnower. i will die on this hill) is seeking is to. make everyone into a cube. i joked abt the final shape being a square but i didnt mean it! hello? can anyone hear me? is this thing on?

anyway. tantrum over. i think its fun that cayde and crow are getting to be a little faggy together. i think they should kiss about it.

im excited for the new content release format (episodes?), bc i think a LOT of the issues with the narrative are due to the seasonal model.

i think sjur is finally coming back theres NO way they would bring her up this much and not bring her back. i think in a Nine-themed season. downside is it will inevitably result in what my dear friend jackie and i have been referring to as "hashtag monogamy win" where sjur and mara get back together as a mirror to o14 and then mara tells petra shes always thought of her as a sister or worse a daughter. but if thats the price i have to pay for sjur. thats okay. the writers room is wrong so frequently i just do whatever i want. its like being a comics fan at this point.

also clearly SOMEONE knows what they are doing bc that radio message at the end of last season was. the most in-character thing since probably marasenna lmfao!!! so i tentatively have hope about the writing of it, and regardless am PERFECTLY capable of living blissfully in jackie & my mind palace version of whatever they give us, bc what we are cooking is beautiful and true and divinely inspired. and im very certain seth would approve.

#anonymous#book anon#do u have a tag. idr.#my posts#<long enough yammering to deserve that i think#this may have been more than u wanted djfjfhf but those are my thoughts.#destiny#sorry! i dont have a separate org tag unfortunately


Text

Reflections on the Paradox of Existence

Confessions of the twisted endeavors of youth's uncorrupted innocence, All becomest heresy, malediction and entropic discordance. The further we are from conception the larger our faults become.

It takes a sick atheist to reject all the entropy that comes with prosperity, it takes god to create a devil for us to realize we are not meant to be as we are.

We are a convoy of death spirals waiting to meet our final maker, we find the god in every demise and hope in every devil.

No fictive result of ours is the result of our suffering, rather our dependent relations converge us to bound incarceration.

This piece is meant to make you think about your ambitions, goals and dreams. You can't escape the irony of your making, be careful what you wish for. You'll need more than love, happiness and honesty to simply survive let alone thrive, let's make that a reality.

The person in the image is John von Neumann. Directly from the wiki article:

John von Neumann (/vɒn ˈnɔɪmən/ von NOY-mən; Hungarian: Neumann János Lajos [ˈnɒjmɒn ˈjaːnoʃ ˈlɒjoʃ]; December 28, 1903 – February 8, 1957) was a Hungarian-American mathematician, physicist, computer scientist, engineer and polymath. He had perhaps the widest coverage of any mathematician of his time,[9] integrating pure and applied sciences and making major contributions to many fields, including mathematics, physics, economics, computing, and statistics. He was a pioneer of the application of operator theory to quantum mechanics in the development of functional analysis, the development of game theory and the concepts of cellular automata, the universal constructor and the digital computer. His analysis of the structure of self-replication preceded the discovery of the structure of DNA.

During World War II, von Neumann worked on the Manhattan Project on nuclear physics involved in thermonuclear reactions and the hydrogen bomb. He developed the mathematical models behind the explosive lenses used in the implosion-type nuclear weapon.[10] Before and after the war, he consulted for many organizations including the Office of Scientific Research and Development, the Army's Ballistic Research Laboratory, the Armed Forces Special Weapons Project and the Oak Ridge National Laboratory.[11] At the peak of his influence in the 1950s, he chaired a number of Defense Department committees including the Strategic Missile Evaluation Committee and the ICBM Scientific Advisory Committee. He was also a member of the influential Atomic Energy Commission in charge of all atomic energy development in the country. He played a key role alongside Bernard Schriever and Trevor Gardner in the design and development of the United States' first ICBM programs.[12] At that time he was considered the nation's foremost expert on nuclear weaponry and the leading defense scientist at the Pentagon. He designed and promoted the policy of mutually assured destruction to limit the arms race.[13]

Von Neumann's contributions and intellectual ability drew praise from colleagues in physics, mathematics, and beyond. Accolades he received range from the Medal of Freedom to a crater on the Moon named in his honor.

#John von Neumann#Quantum Physics#Philosophy#Nihilism#Existentialism#Optimism#Pessismism#Reflection on the paradox of existence#Nuclear Science#Atomic bomb#World war 2#Religion#Atheism#Perspective#Criticism#Responsibility#History#Tradition#Mathematics#Prison complex#Irony#Thought provoking


Text

Just a heads-up for speculative biology

While I am still researching and seeking ways to incorporate even more flavour into 16^12 as a documentation atlas / encyclopedia-worthy setting guide overall (especially over alternative technologies, linguistics & cultures), I figured that a bunch of people could really appreciate some more speculative biology resources, games and a handful of considerations / suggestions over lesser-known topics of world building related to such worth considering.

Starting by providing external resources with a couple YouTube channels and some other related resources:

There are way more, but those should give you great insight into the process of speculative contemporary biology as we are aware of right now.

Now, as for my own suggestions, beware that they mostly pertain to foreign time-wise "ecological niches" if you even can call such existential domains that. Either mythologically-inclined, automated lively constructs or literally far, far ahead in time.

Cosmogony & early mundane life suggestions

For ease of reach, I will use the Christian + Hellenistic Classical Greece mythology as a reference guide for meta-physical representation. Could be any mythos, invented or real, it is all true if you count the whole of existence for all I care. But that specific one is one that most people here reading this would know of.

So the creation of the Universe, Earth, Eden and the War in Heaven between Lucifer and Saint-Michael (so not the Apocalyptic scripts, that's custom-tailored flattery fan-fiction by my worldview but whatever...) feels like a logical progression of steps where a root system (some call it God, I call it the existential root likewise to how it is shown on Linux he-he-he) divides themself into smaller and smaller units (first into grander agents and going ever so smaller down to the smallest of axioms...), and then those divide the world into domains of their choosing and executing roles / functions across the wider cosmos...

Sounds like a computer bootstrap process, doesn't it? Well yes, but it is also akin to the ecological niches and adaptation process of what we are most aware of as life that you can emulate and customize as you wish to if you so desire.

You can also extrapolate / interpolate from such a divine / ethereal starting point and make them have all kinds of children species, automata and lifeforms. I would advise going through an "Angelic Constructs" to "Transformers" route for the first mundane plane lifeforms, kinda similar in agency and functionality as our early analogical robotics, both emulating the process of a child learning by playing with toys and also starting very simplistic (like how the simple rules of a cellular automaton like Conway's Game of Life may result in very complex behaviours on the grid) until they build on top of each other significantly.
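As a small illustration of that parenthetical, here is a minimal Python sketch of Conway's Game of Life. The rules themselves are the standard ones (a dead cell with exactly three live neighbours is born; a live cell with two or three live neighbours survives), but the function name `life_step`, the set-of-coordinates representation and the unbounded grid are just my own choices for the example, not a canonical implementation.

```python
from collections import Counter

def life_step(live):
    """Advance Conway's Game of Life by one generation on an unbounded grid.

    `live` is a set of (x, y) coordinates of the currently live cells.
    """
    # Count, for every cell adjacent to a live cell, how many live neighbours it has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }

# The classic five-cell "glider" (y grows downwards in this layout).
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

state = glider
for _ in range(4):
    state = life_step(state)
# After four generations the same shape reappears, shifted one cell diagonally.
```

The whole rule set fits in a single comparison, yet the glider behaves like a little creature walking across the grid, which is the kind of "simple parts building on top of each other" progression suggested above.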

But you can do whatever you so desire, as the reference model does divide some of the upper tier angelic court beings into themes or existential roles (and does the same for those of whom from such who became upper level Hell governors). It is simply a strong recommendation to emulate the iterative process of life and agency, as that builds a strong world-building cohesion and nuanced depth to the set of variables you tinker with as a creative, engaging people further into its intricacies.

Elderly universe

As I apply the same rules over and over again, I tend to figure historical patterns (likewise to those of sound-tracker's music) and re-utilize the content I enjoy most where relevant. That's part of my research and iterative process. And it turns out that as I feel (and went through several psychic experiences) around and about far futures, it mostly sticks to some vague approximate guesses I make.

Granted, I will definitely make use of some mystery, secrets and quantum choice principles for the things that are really out of my reach to know, but I can give you some subjective tips of mine to derive cosmically far futures that both work realistically and feel great to tell exaggerated stories over. (It does not always need to invoke the eldritch either; I prefer to give a more nihilistic-optimistic yet esoterically-grounded take on things because I am somewhat tired of the dark and dire trauma-centric stories of contemporary times.)

First, I persist in assuming there are sapient lifeforms (mechanical and divine ones are guaranteed) of some kind across the full spectrum of time. Granted, I have a bold vision even for humans and other fully biological intelligent folks to survive past the death of the red dwarves' major era into the iron stars period, even without involving much use of magic in the setting period. But it is worth keeping somewhat, because entirely lone sapient individuals in such a late era don't exist in a vacuum and need a few things to persist there for longer than a couple of minutes.

Second, in the same vein, I tend to have at least one major species native to this era, whether they are time-travelling dwellers, timeless beings or just sapient individuals who stuck around as a species for that long. It really gives a vibe of being so elderly as a universe, with cultures, languages, history, knowledge and nuances worth investigating. It is a mechanic I will take full advantage of in my very own worldbuilding if you are curious.

Third, consider realistically "conservative" (as in, with the least change involved) estimates of history, entropy and perhaps timely tourism into consideration for your world(s) and interpolate relevant data for such as needed. There are still going to be changes across the board in the broad spectrum of time, but I would guess that somewhen things will settle down (or at least lower the variation / valence and quiet somewhat more compared to the current cosmos we live in) as the universe becomes less habitable for our definition of life, which are really intricately complex and sophisticated lifeforms.

It really goes to show that if one can imagine / ask a question about anything, it is real somewhere in the whole of existence (perhaps you prefer calling it an omniverse, but not me). And, in this instance, that reinforces the notion that there may be a handful of tourists into the edge periods of time present for all sorts of reasons, no matter how sparse or few of them there may be. Also, it applies the other way around too, especially as my story has a certain emphasis on a (younger than the elderly majority peers, aka a late teenager / young adult) character agent who warps back in time for quite mundane reasons really...

Thanks for reading this messy info dump (initially meant to be a shorter article than the others I had in my drafts, oops...) and I wish you good luck.

#youtube#speculative evolution#speculative biology#maskoch#worldbuilding#world building#mythos#mythology#mythopoeia

6 notes

Text

A show-reel exemplifying the kinds of algorithmic art I am currently pursuing. Visit me on YouTube for more.

#art#animation#code art#generative art#cellular automata#cellular automaton#chaos#chaos theory#complexity

2 notes

Text

Stephen Wolfram: Computation, Hypergraphs, and Fundamental Physics (Sean Carroll's Mindscape, July 2021)

Adam Becker: Physicists Criticize Stephen Wolfram’s 'Theory of Everything' (Scientific American, May 2020)

Stephen Wolfram: Cellular Automata, Computation, and Physics (Lex Fridman, April 2020)

Stephen Wolfram: Fundamental Theory of Physics, Life, and the Universe (Lex Fridman, September 2020)

Stephen Wolfram: Complexity and the Fabric of Reality (Lex Fridman, October 2021)

Stephen Wolfram: ChatGPT and the Nature of Truth, Reality & Computation (Lex Fridman, May 2023)

Saturday, July 15, 2023

4 notes

Text

Bill Gosper

Bill Gosper is a noted American computer scientist and mathematician celebrated for his contributions to computer science, especially in algorithms and symbolic computation. He was a central figure in the early MIT hacker and Lisp communities, worked at the MIT AI Lab, and contributed to the Macsyma computer algebra system. Gosper is particularly famous for his work on Conway's "Game of Life", where he discovered the glider gun and later devised the Hashlife algorithm for simulating cellular automata quickly.

His mathematical interests extend to combinatorial game theory and number theory. Beyond academia, he has also influenced the creation of mathematical software tools and tackled various mathematical problems, often using computational methods. Gosper's innovative blend of mathematical rigor and computing has left a lasting mark on both fields.

Born: 26 April 1943 (age 81 years), New Jersey, United States

Education: Massachusetts Institute of Technology

Why did Bill Gosper invent Gosper Island?

Bill Gosper created Gosper Island as part of his work on recursive structures and fractals. Gosper Island is a self-similar fractal: it is the region filled by the Gosper curve (also known as the flowsnake), a space-filling curve built by repeatedly replacing each segment with seven smaller ones.

The importance of Gosper Island lies in its properties as a mathematical object. It serves as an example of how simple rules can lead to complex and interesting patterns. The island is often associated with the study of tiling, geometry, and the behavior of iterative processes. It is also notable for its representation of fractal principles, which have applications in various fields, including computer science, physics, and biology.
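To make the "simple rules, complex patterns" point concrete, here is a minimal Python sketch of the usual L-system description of the Gosper curve, whose filled region is Gosper Island. The function names `expand` and `trace` are just illustrative choices, and the sketch only computes points rather than drawing them.

```python
import math

# Standard Gosper-curve ("flowsnake") L-system: A and B both mean
# "step forward", + turns left 60 degrees, - turns right 60 degrees.
RULES = {"A": "A-B--B+A++AA+B-", "B": "+A-BB--B-A++A+B"}


def expand(axiom: str, iterations: int) -> str:
    """Rewrite every symbol in parallel, `iterations` times."""
    s = axiom
    for _ in range(iterations):
        s = "".join(RULES.get(ch, ch) for ch in s)
    return s


def trace(commands: str, angle_deg: float = 60.0) -> list[tuple[float, float]]:
    """Walk the command string with a turtle and return the visited points."""
    x, y, heading = 0.0, 0.0, 0.0
    points = [(x, y)]
    for ch in commands:
        if ch in "AB":
            x += math.cos(math.radians(heading))
            y += math.sin(math.radians(heading))
            points.append((x, y))
        elif ch == "+":
            heading += angle_deg
        elif ch == "-":
            heading -= angle_deg
    return points


if __name__ == "__main__":
    pts = trace(expand("A", 3))
    print(len(pts) - 1, "segments")  # 7**3 = 343 unit steps
```

Each rewrite multiplies the number of segments by seven, which is where the seven-fold self-similarity of the island comes from. Feeding the points to any plotting tool shows the outline emerging after three or four iterations.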

0 notes

Text

Source notes: Procedural Generation of 3D Caves for Games on the GPU

(Mark et al., 2015)

Type: Conference Paper

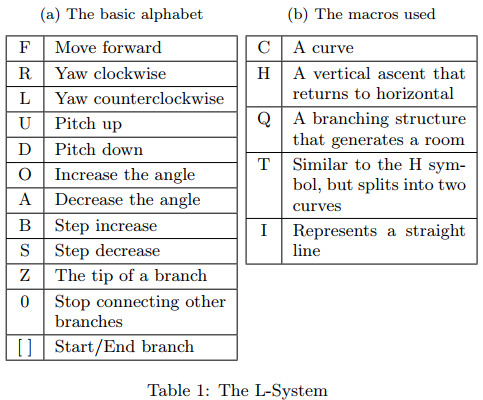

The researchers present a modular pipeline for procedurally generating believable (not physically-based) underground caves in real-time, intended to be used standalone or as part of larger computer game environments. Their approach runs mainly on the GPU and utilises the following techniques: an L-system to emulate cave passages found in nature; a noise-perturbed metaball for 3D carving; a Marching Cubes algorithm to extract an isosurface from voxel data; and shader programming to visually enhance the final mesh. Unlike other works in this area, the authors explicitly state their aim is to demonstrate their method's suitability for use in 3D computer game landscapes, and therefore prioritise immersion, visual plausibility and expressivity in their results.

The method works in a three-stage process, with the structure first being generated by an L-system, before, secondly, a metaball based technique forms tunnels, then lastly a mesh is extracted from the voxel data produced by the previous stages. In terms of implementation, the output L-system structural data is loaded into GPU memory where it is processed by compute shaders to ultimately create tunnels, stalagmites and stalactites.

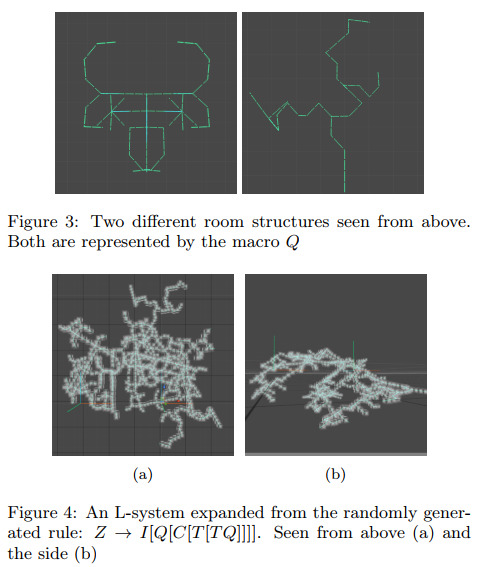

An L-system or Lindenmayer system is a type of formal grammar (a description of which strings are syntactically valid within a formal language) where strings are formed by repeatedly applying a body of rules to an initial string, and are then translated into geometric structures by an interpretation mechanism. The L-system used by Mark et al. (2015) works by guiding a virtual drawing agent (a 'turtle') using the alphabet and constructed strings to generate structural points, which can then be connected to form the basis of a cave system. By favouring longer production rules (producing longer expansions of strings), the authors avoided the self-similarity and orderliness of the plant-like structures that L-systems with shorter rules often generate, resulting in a more chaotic structure better suited to simulating a network of tunnels. Additionally, a stochastic element in choosing and generating production rules was introduced to enhance the expressivity of the system. To handle dead ends, a method of connecting a certain percentage of ends to each other by drawing a distorted line between them was developed, controllable via a user-defined parameter. Further to this, users are able to adjust the production rules, macro strings, the turtle's turning angle, and the containing volume and direction of the system.
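The structural stage described above can be sketched roughly as follows. This is an illustrative Python sketch only: the alphabet, the weighted rules in `RULES`, the turning angle and the function names are all hypothetical, not the paper's, and dead-end connection and macro strings are left out.

```python
import math
import random

# Hypothetical stochastic production rules: each symbol maps to weighted
# alternatives. The paper's actual alphabet and rules are not reproduced here.
RULES = {
    "F": [
        (0.5, "F+F[&F]-F^F"),
        (0.3, "F[-F]F&F+F"),
        (0.2, "F^F-F"),
    ]
}


def expand(axiom, iterations, rng):
    """Rewrite the string, picking one weighted alternative per symbol."""
    s = axiom
    for _ in range(iterations):
        out = []
        for ch in s:
            options = RULES.get(ch)
            if options is None:
                out.append(ch)  # turns and brackets are copied unchanged
            else:
                weights = [w for w, _ in options]
                bodies = [b for _, b in options]
                out.append(rng.choices(bodies, weights=weights, k=1)[0])
        s = "".join(out)
    return s


def structural_segments(commands, turn_deg=30.0, step=1.0):
    """Interpret the string with a 3D turtle; return (start, end) point pairs."""
    yaw, pitch = 0.0, 0.0
    pos = (0.0, 0.0, 0.0)
    stack, segments = [], []
    for ch in commands:
        if ch == "F":
            cy, sy = math.cos(math.radians(yaw)), math.sin(math.radians(yaw))
            cp, sp = math.cos(math.radians(pitch)), math.sin(math.radians(pitch))
            new = (pos[0] + step * cp * cy, pos[1] + step * cp * sy, pos[2] + step * sp)
            segments.append((pos, new))
            pos = new
        elif ch == "+":
            yaw += turn_deg
        elif ch == "-":
            yaw -= turn_deg
        elif ch == "^":
            pitch += turn_deg
        elif ch == "&":
            pitch -= turn_deg
        elif ch == "[":
            stack.append((pos, yaw, pitch))  # start a side branch
        elif ch == "]":
            pos, yaw, pitch = stack.pop()  # return to the branch point
    return segments


if __name__ == "__main__":
    tunnels = structural_segments(expand("F", 3, random.Random(7)))
    print(len(tunnels), "tunnel segments")
```

Seeding the generator makes a given cave layout reproducible, while changing the weights or the rule bodies changes how branchy or winding the network becomes.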

To form the walls of the cave, a metaball is moved through a voxel volume (data for which was generated by the L-system process) from one structural point to the next. Here the metaball is "a smooth energy field, represented by a gradient of values between 'empty' at its centre and 'full' at its outer horizon", perturbed by a warping function to make it less spherical and so achieve more natural results. If a voxel is found within the radius of the metaball, the distance between the voxel and the metaball's centre is distorted using a combination of Simplex noise and Voronoi noise, giving that voxel a value between -1 and 1. Curl noise is also used to vary the height of the tunnels created. By combining and layering Simplex and Voronoi noise, the researchers were able to produce results approximating scallops and jagged cave walls. The varying shape and size of the metaball and the intricate, branching structure of the L-system paths help to give rise to advanced shapes and to add visual interest.
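The carving step can be illustrated with a small CPU-side Python sketch. It is not the authors' compute-shader implementation: `hash_noise` is a cheap stand-in for the Simplex and Voronoi noise they combine, curl noise is omitted, and the function names, the `warp` parameter and the mapping of distance to a value in [-1, 1] are assumptions made for illustration.

```python
import math


def hash_noise(x, y, z, seed=0):
    """Cheap deterministic pseudo-noise in [-1, 1]; a stand-in, NOT Simplex/Voronoi."""
    h = (x * 73856093) ^ (y * 19349663) ^ (z * 83492791) ^ (seed * 2654435761)
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    return (h / 0xFFFFFFFF) * 2.0 - 1.0


def make_grid(size):
    """Cubic voxel volume initialised to 1.0, i.e. completely 'full' rock."""
    return [[[1.0] * size for _ in range(size)] for _ in range(size)]


def carve_segment(grid, start, end, radius=3.0, warp=0.25, samples=16):
    """Sweep a noise-perturbed metaball from start to end, lowering voxel values."""
    size = len(grid)
    for i in range(samples + 1):
        t = i / samples
        cx, cy, cz = (s + (e - s) * t for s, e in zip(start, end))
        lo = [max(0, int(c - radius)) for c in (cx, cy, cz)]
        hi = [min(size - 1, int(c + radius) + 1) for c in (cx, cy, cz)]
        for x in range(lo[0], hi[0] + 1):
            for y in range(lo[1], hi[1] + 1):
                for z in range(lo[2], hi[2] + 1):
                    d = math.dist((x, y, z), (cx, cy, cz)) / radius
                    if d > 1.0:
                        continue  # voxel lies outside the metaball
                    d += warp * hash_noise(x, y, z)  # distort the distance
                    value = max(-1.0, min(1.0, 2.0 * d - 1.0))
                    grid[x][y][z] = min(grid[x][y][z], value)


if __name__ == "__main__":
    volume = make_grid(17)
    carve_segment(volume, (4, 8, 8), (12, 8, 8))
    print(volume[8][8][8] < 0.0)  # the tunnel centre has been hollowed out
```

Negative values mark empty space and positive values mark rock, so the tunnel wall sits on the zero isosurface of the field.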

Stalagmites and stalactites were created by generating noise values for each voxel and picking those within a certain range as spawn points (noise was also used to determine the density of speleothems within an area). Cellular automata were used to detect the floor and ceiling at spawn points and to grow the features (the specific details of that process, including how the cellular automata algorithm was implemented, are not covered).

The volume of voxel values created by the metaball approach is processed by a Marching Cubes algorithm to extract an isosurface, similar to the Masters project method. The authors note that Dual Contouring could have been used to better represent the voxel topology, an insight that informed the decision to investigate Dual Contouring for the Masters project method. Normals are calculated for the mesh and triplanar projection is used for texturing, as well as perturbation of fragments to create a stratified appearance. Lighting, bump mapping and refraction were also implemented. The shader parameters can be modified to produce different aesthetics (such as ice and crystal settings).
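Triplanar projection, mentioned above, is commonly implemented by blending three axis-aligned texture lookups according to the surface normal. The Python sketch below shows that common formulation rather than the paper's shader code; `sample` stands for any hypothetical 2D texture lookup and `sharpness` is an assumed tuning parameter.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X-, Y- and Z-facing texture projections."""
    ax, ay, az = (abs(c) ** sharpness for c in normal)
    total = ax + ay + az
    if total == 0.0:
        raise ValueError("normal must be non-zero")
    return (ax / total, ay / total, az / total)


def triplanar_sample(sample, position, normal, sharpness=4.0):
    """Blend three planar lookups of `sample(u, v)` by the surface normal."""
    wx, wy, wz = triplanar_weights(normal, sharpness)
    x, y, z = position
    # Each projection uses the two coordinates perpendicular to its axis.
    return wx * sample(y, z) + wy * sample(x, z) + wz * sample(x, y)
```

Because the texture coordinates come from world position rather than a UV unwrap, the approach suits meshes extracted from voxel data, which have no natural UV layout.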

The method developed by Mark et al. (2015) produced visually impressive results that are believable as real-world caves. It is capable of generating a variety of features and is structurally varied, with different patterns appearing in the resulting landscapes that resemble hills, sharp peaks, plateaus and small mesas (a flat top on a ridge or hill). The choice to use an L-system is effective in producing a complex, diverging cave system that contains walkways, arches, cracks, windows and polygon arrangements that look like hoodoos (spire rock formations formed by erosion). Furthermore, the creation of their own versions of speleothems (stalactites, stalagmites, columns and scallops) increases the believability and immersive nature of the results.

Parameterisation of aspects such as the L-system rules, its level of randomness, and the pixel shader enables control over the method and increases the variety of models that can be generated, making it more likely to be compatible with a range of art styles and world settings. One potential shortcoming is that a designer would need a level of familiarity with and understanding of the L-system component to achieve usable results through alteration of its rules and other parameters; however, such an investment could be seen as worthwhile given the level of control and expression that appears to be possible based on the content included in the paper.

0 notes

Text

Generative Art: The Algorithmic Touch

Generative art represents an exciting merger of creativity and computation, where algorithms replace the brush, shaping intricate patterns and complex systems that transcend the bounds of traditional art forms. This unique form of art has a variety of methodologies at its disposal, from fractals to neural networks. This article explores these key algorithms and techniques that contribute to the world of generative art.

Fractals

A fractal is a self-similar shape that repeats infinitely at every level of magnification. The enchanting visuals they generate have long been a fascination for mathematicians and artists alike. Classic fractal types, like the Mandelbrot and Julia sets, exhibit stunningly intricate patterns and infinite complexity. More advanced constructs like 3D fractals or Mandelbulbs extend the concept to create highly complex three-dimensional works of art. Fractal-based algorithms leverage the recursive nature of fractals, making them an ideal tool in the generative artist's toolbox.

Cellular Automata

Cellular Automata (CA) is a discrete model studied in computational theory. A well-known example, Conway's Game of Life, consists of a grid of cells that evolve through discrete time steps according to a set of simple rules based on the states of neighboring cells. Through these rules, even from simple initial conditions, CAs can produce complex, dynamic patterns, providing a rich foundation for generative art.

Noise Functions

Noise functions such as Perlin noise or Simplex noise create organic, smoothly varying randomness. These algorithms generate visually appealing patterns and textures, often forming the basis for more complex generative pieces. From naturalistic textures to lifelike terrains, the randomness introduced by noise functions can mimic the randomness in nature, providing a sense of familiarity within the generated art.

Genetic Algorithms

Genetic algorithms (GAs) are based on the process of natural selection, with each image in a population gradually evolved over time. A fitness function, which quantifies aesthetic value, guides the evolutionary process. Over several generations, this method results in images that optimize for the defined aesthetic criteria, creating a kind of survival of the fittest, but for art.

Lindenmayer Systems

Lindenmayer Systems (L-systems) are a type of formal grammar primarily used to model the growth processes of plant development but can also generate complex, branching patterns that imitate those found in nature. This makes L-systems a powerful tool for generative art, capable of producing intricate, natural-looking designs.

Neural Networks

The advent of deep learning has opened up exciting new possibilities for generative art. Neural networks, such as Generative Adversarial Networks (GANs) and Convolutional Neural Networks (CNNs), are trained on a dataset of images to generate new images that bear stylistic similarities. Variations of these networks like StyleGAN, DCGAN, and CycleGAN have been instrumental in creating a broad range of generative artworks, from mimicking famous painters to creating entirely new, AI-driven art styles.

Physics-Based Algorithms

Some generative artists turn to physics to inspire their work. These algorithms use models of natural processes, like fluid dynamics, particle systems, or reaction-diffusion systems, to create pieces that feel dynamic and organic. Physics-based algorithms can produce stunningly realistic or fantastically abstract images, often emulating the beautiful complexity of nature.

Swarm Intelligence

Swarm Intelligence algorithms, like particle swarm optimization or flocking algorithms, simulate the behavior of groups of organisms to generate art based on their movement patterns. The collective behavior of a swarm, resulting from the local interactions between the agents, often leads to intricate, evolving patterns, creating compelling pieces of art.

Shape Grammars

Shape grammars constitute a method that starts with a base shape and then iteratively applies a set of transformation rules to create more complex forms. This systematic approach of shape manipulation allows artists to produce intricate designs that can evolve in visually surprising ways, offering a versatile tool for generative art creation.

Agent-Based Models

Agent-Based Models (ABMs) simulate the actions and interactions of autonomous entities, allowing artists to investigate their effects on the system as a whole. Each agent follows a set of simple rules, and through their interactions, complex patterns and structures emerge. These emergent phenomena give rise to a range of visually captivating outcomes in the realm of generative art.

Wave Function Collapse Algorithm

The Wave Function Collapse Algorithm is a procedural generation algorithm that generates images by arranging a collection of tiles according to a set of constraints. This algorithm has seen wide use in generative art and game development for creating coherent and interesting patterns or complete scenes based on a given input.

Chaos Theory

Chaos theory studies the behavior of dynamical systems that are highly sensitive to initial conditions. Art based on strange attractors, bifurcation diagrams, and other concepts from chaos theory can generate intricate and unpredictable patterns. These patterns, although deterministic, appear random and complex, making chaos theory a fascinating contributor to generative art.

Ray Marching

Ray Marching, a technique used in 3D computer graphics, is a method of rendering a 3D scene via virtual light beams, or rays. It allows for the creation of sophisticated lighting effects and complex geometric shapes that might not be possible with traditional rendering techniques. Its flexibility and power make it an attractive option for generative artists working in three dimensions.
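As one concrete instance of the techniques surveyed above, the rules of Conway's Game of Life fit in a few lines. The Python sketch below is one compact way to write them, storing live cells as a set of coordinates on an unbounded grid; the name `life_step` is an illustrative choice.

```python
from collections import Counter


def life_step(live):
    """Advance Conway's Game of Life one generation on an unbounded grid."""
    # Count, for every cell adjacent to a live cell, how many live neighbours it has.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for x, y in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # A cell is alive next turn with exactly 3 neighbours,
    # or with 2 neighbours if it is already alive.
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


if __name__ == "__main__":
    glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
    cells = glider
    for _ in range(4):
        cells = life_step(cells)
    # After four generations the glider reappears one cell down and to the right.
    print(cells == {(x + 1, y + 1) for x, y in glider})
```

Rendering each generation as a frame, or colouring cells by age, is a common starting point for turning the automaton into a generative piece.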

Conclusion

The intersection of art and algorithms in the form of generative art enables artists to explore new modes of creation and expression. From fractals to neural networks, a variety of techniques provide generative artists with powerful tools to create visually captivating and complex art forms. The dynamic and emergent nature of these algorithms results in artworks that are not just static images but evolving entities with life and movement of their own. Read the full article

#cellularautomata#fractals#generativeart#GeneticAlgorithms#L-Systems#Neuralnetworks#NoiseFunctions#Physics-BasedAlgorithms#RayMarching#SwarmIntelligence

0 notes

Text

My point is more "if you had a law about eggs and cats, that would have vast, far-reaching implications if you did experiments on it and thought about it hard enough".

Like, the second law of thermodynamics is just "when you put hot things next to cold things, the hot thing cools and the cold thing warms up". And that has such insane implications that it turns out that from that you can derive why you can remember the past but not the future, and by studying it we can figure out that it actually comes from just statistics and has a reason for existing.
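If you want to see the "it's just statistics" part for yourself, here's a rough Python sketch using a standard textbook toy model (two "Einstein solids" sharing energy quanta). The model and the numbers are just an illustration I picked, nothing specific to this thread: it counts how many microscopic arrangements correspond to each way of splitting the energy between block A and block B.

```python
from math import comb


def multiplicity(oscillators, quanta):
    """Number of ways to distribute `quanta` energy units among `oscillators`."""
    return comb(quanta + oscillators - 1, quanta)


def joint_multiplicities(n_a, n_b, total_quanta):
    """Microstate count for each way of splitting the energy between A and B."""
    return [
        multiplicity(n_a, q_a) * multiplicity(n_b, total_quanta - q_a)
        for q_a in range(total_quanta + 1)
    ]


if __name__ == "__main__":
    counts = joint_multiplicities(50, 50, 100)
    most_likely = max(range(len(counts)), key=counts.__getitem__)
    print("most likely energy in A:", most_likely)
    # How rare "all the energy in A" is compared with the even split:
    print("all-in-A vs even split:", counts[100] / counts[most_likely])
```

With equal-sized blocks the even split has overwhelmingly more arrangements than "all the heat in one block", so a hot block next to a cold one drifts toward equal temperature simply because almost all the possibilities look like that.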

What I'm saying is, there's no such thing as a "small deviation to the laws of physics" - any new law will inevitably have extremely wild implications for how the world works. And it's far from trivial knowing which ones break everything completely.

As for cellular automata, you still need to narrow it down to "which ones are equivalent to each other", "which ones allow for computation", etc

Ok, no one gave me a satisfying answer to this question last time I asked so I will ask again: why in the world are the laws of physics what they are?

I know this is one of the most significant unsettled questions in history but you guys are smart people. C'mon.

269 notes

Video

Today we bring to you a special gift from Stephen Wolfram himself: Conus Textile Shells!

Get your copy of A New Kind of Science PAPERBACK today to receive these handpicked shellular automata.

#cellular automata#stephen wolfram#a new kind of science#science#physics#math#shells#conus textile#wolfram research#woooooo

30 notes

Note

23!

23. Will P=NP? Why or why not?

I feel like the answer is no. But also take a fuckin look at this paper written by an old professor of mine. If memory serves his argument is basically "There are NP-complete problems that appear to be solved (at least in certain cases) in polynomial time by certain physical processes, so assuming that physics is efficiently simulable e.g. by cellular automata, it should be possible to create, for any given NP-complete problem, a version of physics in which a physical process solves the problem in all cases and then simulate that process in polynomial time. P=NP!" Or something like that. This paper was literally mocked (obliquely) by my algorithms professor in front of a lecture hall full of undergrads, it was hilarious.

I do actually need to read that paper again to see if I remember it correctly, but in any case, my thoughts on my remembered version of the argument are something like: assuming that any moderately complex physical process is simulable by cellular automata in polynomial time is a pretty tall ask, and I think in practice what you'd need to do to guarantee that is run the calculations for each cell in parallel. But like, past a certain point you've got enough parallelism to just do a nondeterministic Turing machine to the problem, have each cell check a different possible solution, and then one of them will turn up the right one! Like, yeah, any NP-complete problem is solvable in polynomial time if you have enough parallelism to nondeterministically follow every execution path, that's why it's called Nondeterministic Polynomial, that's definitional!

Also the opening sentences of that paper are absolutely hilarious. "The Clay Mathematics Institute offers a $1 million prize for a solution to the P=?NP problem. We look forward to receiving our award..." The absolute balls.

Anyway, I still haven't answered the question. I feel like the answer is no. I haven't done a deep dive into the research literature on the subject, so I'm mostly going based on vibes, but like. Y'know how there's often a very fine and subtle dividing line between a problem being decidable vs. undecidable? I can't actually think of any examples right now, but you know what I mean right? Hopefully I'm not pulling that out of my ass? Anyway, decidable vs. undecidable is a really solidly insurmountable barrier, no one's going around wondering if all of the undecidable problems in this or that system are actually decidable if we just think about it hard enough. And like, that kind of pattern is also present with P vs NP. Like you could have a perfectly reasonable, polynomial-time-solvable problem, and then just switch one little thing about it that seems like it'd be a minor detail, and suddenly it's NP-complete. Like how Eulerian paths is solvable in linear time but Hamiltonian paths is NP-complete. Point is, that's the same kinda vibe I get from decidable vs. undecidable! It's a pattern! And it suggests to me that the P/NP divide is very possibly as insurmountable as the decidable/undecidable divide!
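To make the Eulerian vs. Hamiltonian thing concrete, here's a quick Python sketch (function names are mine, graphs are undirected and given as a dict of neighbour sets). The Eulerian check is the classic "connected, and zero or two odd-degree vertices" test, which is linear time. For the Hamiltonian one I'm just brute-forcing every vertex ordering, which is the dumb baseline and not the best known algorithm, but nobody knows a polynomial one.

```python
from itertools import permutations


def has_eulerian_path(adj):
    """Path using every edge exactly once; O(V + E) check."""
    with_edges = [v for v in adj if adj[v]]
    if not with_edges:
        return True
    # All vertices that have edges must lie in one connected component.
    seen, stack = {with_edges[0]}, [with_edges[0]]
    while stack:
        for w in adj[stack.pop()]:
            if w not in seen:
                seen.add(w)
                stack.append(w)
    if len(seen) != len(with_edges):
        return False
    odd = sum(len(adj[v]) % 2 for v in adj)
    return odd in (0, 2)


def has_hamiltonian_path(adj):
    """Path visiting every vertex exactly once; brute force over all orderings."""
    vertices = list(adj)
    return any(
        all(b in adj[a] for a, b in zip(order, order[1:]))
        for order in permutations(vertices)
    )


if __name__ == "__main__":
    # Three triangles sharing one centre vertex "c".
    windmill = {
        "c": {"a1", "a2", "b1", "b2", "d1", "d2"},
        "a1": {"c", "a2"}, "a2": {"c", "a1"},
        "b1": {"c", "b2"}, "b2": {"c", "b1"},
        "d1": {"c", "d2"}, "d2": {"c", "d1"},
    }
    print(has_eulerian_path(windmill), has_hamiltonian_path(windmill))
```

Same graph, nearly the same question ("every edge once" vs. "every vertex once"), and one check is a degree count while the other is a factorial-sized search.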

(Of course if a problem is undecidable in some system you can just look for a different system in which it is decidable, but P/NP is defined explicitly in terms of Turing machines and Turing-complete systems, so. Can't do that to surmount the P/NP divide.)

But like, it does seem tantalizing doesn't it? Like, NP-complete problems are not unsolvable! In fact they very very explicitly are solvable, just not efficiently as far as we've been able to figure out! And so many of them are so similar to problems that are efficiently solvable, surely if we just attack the problem from a slightly different angle we'll be able to figure it out in polynomial time! It's totally different from decidability, it's gotta be!

I dunno. Vibes says no!

15 notes
