#computer network experts


System and Network Administration

System administration is a job done by IT experts for an organization. The job is to ensure that computer systems and all related services are working well. What is TCP/IP? Introduction to the TCP/IP model: TCP/IP is a set of network protocols (a protocol suite) that enables communication between computers. Network protocols are…


#System and Network Administration

0 notes


China hacked Verizon, AT&T and Lumen using the FBI’s backdoor

On OCTOBER 23 at 7PM, I'll be in DECATUR, presenting my novel THE BEZZLE at EAGLE EYE BOOKS.

State-affiliated Chinese hackers penetrated AT&T, Verizon, Lumen and others; they entered their networks and spent months intercepting US traffic – from individuals, firms, government officials, etc – and they did it all without having to exploit any code vulnerabilities. Instead, they used the back door that the FBI requires every carrier to furnish:

https://www.wsj.com/tech/cybersecurity/u-s-wiretap-systems-targeted-in-china-linked-hack-327fc63b?st=C5ywbp&reflink=desktopwebshare_permalink

In 1994, Bill Clinton signed CALEA into law. The Communications Assistance for Law Enforcement Act requires every US telecommunications network to be designed around facilitating access to law-enforcement wiretaps. Prior to CALEA, telecoms operators were often at pains to design their networks to resist infiltration and interception. Even if a telco didn't go that far, they were at the very least indifferent to the needs of law enforcement, and attuned instead to building efficient, robust networks.

Predictably, CALEA met stiff opposition from powerful telecoms companies as it worked its way through Congress, but the Clinton administration bought them off with hundreds of millions of dollars in subsidies to acquire wiretap-facilitation technologies. Immediately, a new industry sprang into being: companies that promised to help the carriers hack themselves, punching back doors into their networks. The pioneers of this dirty business were overwhelmingly founded by ex-Israeli signals intelligence personnel, though they often poached senior American military and intelligence officials to serve as the face of their operations and liaise with their former colleagues in law enforcement and intelligence.

Telcos weren't the only opponents of CALEA, of course. Security experts – those who weren't hoping to cash in on government pork, anyways – warned that there was no way to make a back door that was only useful to the "good guys" but would keep the "bad guys" out.

These experts were – then as now – dismissed as neurotic worriers who simultaneously failed to understand the need to facilitate mass surveillance in order to keep the nation safe, and who lacked appropriate faith in American ingenuity. If we can put a man on the moon, surely we can build a security system that selectively fails when a cop needs it to, but stands up to every crook, bully, corporate snoop and foreign government. In other words: "We have faith in you! NERD HARDER!"

NERD HARDER! has been the answer ever since CALEA – and related Clinton-era initiatives, like the failed Clipper Chip program, which would have put a spy chip in every computer, and, eventually, every phone and gadget:

https://en.wikipedia.org/wiki/Clipper_chip

America may have invented NERD HARDER! but plenty of other countries have taken up the cause. The all-time champion is former Australian Prime Minister Malcolm Turnbull, who, when informed that the laws of mathematics dictate that it is impossible to make an encryption scheme that only protects good secrets and not bad ones, replied, "The laws of mathematics are very commendable, but the only law that applies in Australia is the law of Australia":

https://www.zdnet.com/article/the-laws-of-australia-will-trump-the-laws-of-mathematics-turnbull/

CALEA forced a redesign of the foundational, physical layer of the internet. Thankfully, encryption at the protocol layer – in the programs we use – partially counters this deliberately introduced brittleness in the security of all our communications. CALEA can be used to intercept your communications, but mostly what an attacker gets is "metadata" ("so-and-so sent a message of X bytes to such and such") because the data is scrambled and they can't unscramble it, because cryptography actually works, unlike back doors. Of course, that's why governments in the EU, the US, the UK and all over the world are still trying to ban working encryption, insisting that the back doors they'll install will only let the good guys in:

https://pluralistic.net/2023/03/05/theyre-still-trying-to-ban-cryptography/

Any back door can be exploited by your adversaries. The Chinese-sponsored hacking group known as Salt Typhoon intercepted the communications of hundreds of millions of American residents, businesses, and institutions. From that position, they could do NSA-style metadata-analysis, malware injection, and interception of unencrypted traffic. And they didn't have to hack anything, because the US government insists that all networking gear ship pre-hacked so that cops can get into it.

This isn't even the first time that CALEA back doors have been exploited by a hostile foreign power as a matter of geopolitical skullduggery. In 2004-2005, Greece's telecommunications were under mass surveillance by US spy agencies who wiretapped Greek officials, all the way up to the Prime Minister, in order to mess with the Greek Olympic bid:

https://en.wikipedia.org/wiki/Greek_wiretapping_case_2004%E2%80%9305

This is a wild story in so many ways. For one thing, CALEA isn't law in Greece! You can totally sell working, secure networking gear in Greece, and in many other countries around the world where they have not passed a stupid CALEA-style law. However, the US telecoms market is so fucking huge that all the manufacturers build CALEA back doors into their gear, no matter where it's destined for. So the US has effectively exported this deliberate insecurity to the whole planet – and used it to screw around with Olympic bids, the most penny-ante bullshit imaginable.

Now Chinese-sponsored hackers with cool names like "Salt Typhoon" are traipsing around inside US telecoms infrastructure, using the back doors the FBI insisted would be safe.

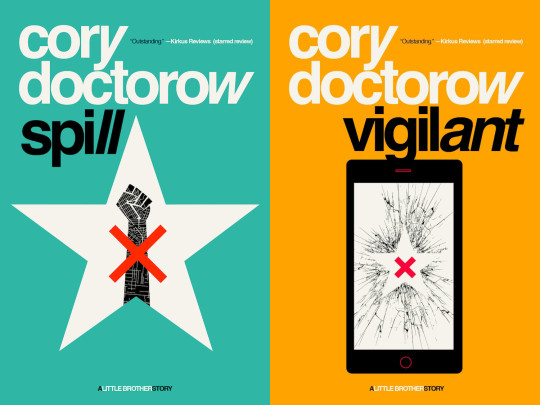

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/07/foreseeable-outcomes/#calea

Image: Kris Duda, modified https://www.flickr.com/photos/ahorcado/5433669707/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

#pluralistic#calea#lawful interception#backdoors#keys under doormats#cold war 2.0#foreseeable outcomes#jerry berman#greece#olympics#snowden

400 notes


Controversial Good Omens Takes and HC bc I like to see the world burn. (The last one will make you question my sanity)

I prefer Bond obsessed Crowley over queen fan Crowley. Don't get me wrong, it's cute and the fanarts with him and Freddy are always a treat HOWEVER it just doesn't line up with my reading of the text and Bond obsessed Crowley is right there. I mean he literally got petrol ONCE to get the promotional bullet hole transfers that he put on his Bentley.

Personally I hc Crowley as tech illiterate. Hear me out. So in the book he basically gets tech gadgets (his watch, his computer, his stereo) bc they are stylish, not bc they are practical. Literally his stereo is missing something critical yet still works bc he believes it should. So I think he is just great at pretending to know how tech works and things just seem like he does bc he believes that's how things should be. Actually he doesn't even know where to set his lockscreen. His phone just never dared to not have the correct one. And yes I know he hacked a few computers for the M25, well, joke's on you: in that one deleted scene he does all those theatrics to bring down the phone network only to ultimately dump coffee over the server. He literally could have achieved the same thing from home. His hacking back in the day probably involved breaking and entering and switching out the storage media manually. Not very tech literate if you ask me.

Aziraphale on the other hand is surprisingly tech literate, he is just a few decades behind. This one needs another explanation. So basically Aziraphale knows how things work, could probably explain to you in excruciating detail the program structure of any given application. He just struggles with graphical user interfaces and doesn't like non-tactile inputs. He prefers to start his programs via console commands and probably finds it silly that people stopped memorizing where their files went. He'd probably run circles around any expert once given woefully outdated tech. So basically he understands how the fundamentals work and what's under the hood, so to speak, but he just really doesn't see the point in making it all work via pretty pictures and without clicky keys. I mean he still files his very accurate taxes on an Amstrad (was it an Amstrad? Idk, old computer, currently too lazy to look up which one he has)

(this one is probably not quite as controversial) No human in modern times will recognize what they are and remember it. So basically even tho Madam Tracy literally got possessed by Aziraphale, and had things explained to her, she probably forgot about the incident right after or if she remembers she believes Aziraphale to be a ghost and would not recognize him if she ran into him again. Simply bc that would fit her internalized world view better. Something, something about the human mind finding 'rational' explanations for the things they have been through. So basically Aziraphale and Crowley are real dumbasses when it comes to pretending to be human but they don't realize it, bc they just assume they are good at it and reality makes sure nobody proves them otherwise.

This here concludes the HC portion of this post. Turn around now, beyond this point only literary and fandom takes can be found pfff

The novel has the better ending. Don't get me wrong I love the show and the body swap. But you win some you lose some. Personally I think having their headquarters even attempt to execute our two idiots takes away from the overarching theme of the story. The whole point of having angels and demons be involved and having hell and heaven be dead set on the apocalypse is to basically frame humanity as the driving force. Aziraphale and Crowley are useless and so are their headquarters. They are detached pencil pushers obsessed with the illusion of control without actually having any. They follow their plan bc that's what they think they have to do, without ever considering the thing they have been entrusted with. They have as much of an idea what's going on as everybody else but make a point of pretending they have the answers. They are all powerful but in the grand scheme of things barely move the needle. Them just pretending everything was fine and not punishing Aziraphale and Crowley to save face bc it's easier to pretend that THIS was the great plan after all, is hilarious and fits their role in the story better in my opinion. Then again they got more involved in the show so their role shifted slightly anyway soooo ehhhh.

While we all (hopefully) have disavowed Neil Gaiman at this point, there is a conversation to be had (and a bit of unpacking to be done) on how much that person influenced and shaped the Good Omens fandom as it is today by positioning himself as the de facto authority over the story for DECADES. It's actually insane how far back this goes. Just look at the Terry Gilliam adaptation that never happened. NG posted more about it than official sources despite also being on record stating that he didn't want to be involved with another adaptation attempt at the time. Going as far as mentioning it in a completely unrelated context on occasion. That dude literally reshaped the narrative around the whole of Good Omens whenever it seemed to give him brownie points. He even had a habit of dropping in other Terry Pratchett properties in a very strange way (in retrospect) and sure we know the two of them were friends and we can't judge their relationship bc we were not there BUT it's just very funny to see how Sir Terry had a consistent narrative the times he mentioned Good Omens on record, while NG not only talked a whole lot more about it, constantly, but also seems to reshape the narrative continuously in small ways.

(This point will make you question my sanity) There are influences from the 1992 movie script that made their way into the TV adaptation we finally got and possibly shaped the discussion about the sequel. Examples of that are Crowley's habit of snapping/him having anger issues, the concept of them being punished, Adam's dialogue with Satan, the starting point of the sequel/S3 aka Crowley being no longer affiliated with hell while Aziraphale is still affiliated with heaven. There are a few other things that are not in the novel but make a first appearance (as far as we know of bc I don't think we will ever get to read the script that was written in collaboration before the shit!script) in that version of the story. Sooo yeah you can say there are at least some subconscious influences.

89 notes


I made a turn of the century x men evolution au

Hey everyone so a big special interest of mine is the period from 1900 to about 1928 so I decided what if I took the kids from the X-Men evolution cartoon from their point at the turn of the 21st century and put them at the turn of the 20th century instead...and also added a few characters. I hope you enjoy this. I have a lot for this au, and I'm gonna put it all under the read more.

So Au thoughts

The year is 1912. Xavier has started his institute a few years prior with scott summers and jean grey as students. Scott was adopted by Xavier after his parents died in a train crash and jean comes from a family of doctors and scientists Xavier is friends with.

Because no computers yet, as the microprocessor has yet to be invented, Forge didn't get stuck in a pocket dimension and got to grow up, so he works for xavier. He helps design and build a modified danger room that's more steampunk/dieselpunk. Lots of things are gear powered and Holograms are projections onto steam curtains. Because no computers, no cerebro. But jean and Xavier have trained themselves to be able to sense those with mutant powers around them and have been working with a network of underground individuals, in some cases literally like the morlocks, to find out about new strange individuals popping up in the states. The morlocks are much more involved in this. They are good friends with Xavier, who frequently helps young morlocks train their powers.

One of those individuals is Gambit. Gambit is 17 in this and does various jobs for xavier. One is listening through the grapevine for odd individuals popping up. The other is essentially working the danger room with forge. As the danger room is more steampunk/dieselpunk in this, with storm's help as well it can simulate weather events, earthquakes, fires, unstable ground, flooding, (bb gun based) old west shootouts, explosions (thanks to Gambit), search and rescue, avalanche / rock fall settings, and much more.

Gambit helps set up the room and make the mechanisms work. it functions well with automatons, steam engines, pistons, and a lot of theater special effect tricks. Gambit helps forge scrap hunt for machinery to repurpose for it. He has befriended the local street children and finds out from them wherever a factory tosses out a machine.

Speaking of theater though, we have morph as well as a staff member. Kevin is a well-known fairy (period equivalent to someone who does not fit into either gender) and drag expert from New York where they worked on broadway and a very close friend of Logan. So close they share a bedroom....;)

Morph is there to help with tailoring as well as helping kids who need disguises to pass in public cause of their time in broadway and avoid harassment, like Kurt.

They also help simulate battles in the danger room with foes they have faced off against before.

Kurt doesn't have an image modifier in this obviously. No computers, no digital holograms. But with forge and morph they are able to help him pass. Morph designs pants for him that have a special pocket for his tail to tuck away, as well as boots with special braces that help disguise his digitigrade feet. Morph also helps him with makeup and hair in the morning to hide his blue face and pointed ears.

For his hands forge has built some prosthetic fingers that are controlled by the other fingers in his hand like a puppet, so it appears he has five fingers on each hand covered by riding gloves, as well as colored contact lenses for his eyes to disguise them as brown.

Kurt's parents came to America from Germany with him as a toddler. People found out about their adopted son and they had to flee. They settled in a small German speaking community in the middle of nowhere Iowa where they could be safe. They would have a priest visit Kurt to give him mass in private for his own safety and had a nun come to tutor him. Xavier found out about Kurt through gambits grapevine.

Ororo came from Africa as a citizen of British colony egypt to Jamaica where she met Charles. She has family living in the states via her sister, who are wealthy merchants. They were british colony expats that moved to the states to control British imports to the states more easily. Thus how we get Evan. The skateboard hasn't been invented yet so he is big into the turn of the century cycling craze as well as roller skates.

Rogue is still a goth. A very very classic goth. Victorian goth. She still dresses like it's the 1800s to in part keep others from touching her skin but also she is just a great appreciator of Poe and Shelley and Stoker.

One thing that is different for Scott is that on top of the train crash his brother havoc is still with him at this time. His parents are very, very dead tho. No alien rescues. (Forgot to draw Alex tho but he's there as are the minor character students)

Beast is also there more from the beginning as a teacher, and he helps take care of the kids' medical needs. He got kicked out of his scientific circle, not cause of his mutant-ness, that came later, but because he insisted doctors must wash their hands before interacting with patients.

Jean grey is a highly educated absolute Gibson girl. She and Kitty sneak out to do suffragette stuff regularly. Speaking of, kitty is definitely a girl of the new century. Wants to go to college one day with Jean. Insists on wearing riding/sports pants wherever she goes. She is girly in certain ways, but defs is a very modern young woman. She likes helping Forge out with his projects.

Magneto's hatred for humanity in this case comes from his survival of the pogroms of eastern Europe only to see there is still antisemitism once escaping them. And mystique has a boarding house where the brotherhood kids live, but she wasn't principal of the bayview school.

Wolverine is a cowboy in this au yes. He has a horse, but he's also toying with some of the very few motorcycles. They are more of dirt bikes at this point tho, so his horse is still his go-to. It's a deep black mare named Blackbird. He does not have an adamantium skeleton but his claws have been capped with silver to help protect them.

No x jet but they do have a few biplanes they are training with. Forge is modifying them to be able to carry more people. So far he's made one that can carry five.

Gambit introduces everyone to jazz cause it hasn't left Louisiana yet. He brought his gramophone and all hell broke loose from there.

Also rogue having a bit more of a high society upbringing thanks to irene. Gambit hasn't had a day of real school as public school wasn't universally established until the 1910s. He knows his reading, writing, and arithmetic from Sunday school and such and whatever jean luc had him taught, but he's excited to learn about what the kids are learning about in their normal school.

Rogue brings him her study material and teaches it to him and in return he teaches her the various crafts and skills he learned in the bayou and as a member of the thieves guild.

Hope you guys enjoy all this!!! Please feel free to share your thoughts!

Tried to keep things period accurate outfits wise.

#romy#turn of the century au#gambit x rogue#x men comics#remy lebeau#x men 97#x men evolution#kurt wagner#proffesor x#charles xavier#anna marie darkholme#morph#Evan Daniels#spyke#kitty pryde#shadowcat#wolverine#morpherine#forge x men#storm xmen#ororo munroe#my artwork#scott summers#jean grey#hank maccoy#sweet-tea#logan howlett#cyclops#kevin sydney

196 notes


information flow in transformers

In machine learning, the transformer architecture is a very commonly used type of neural network model. Many of the well-known neural nets introduced in the last few years use this architecture, including GPT-2, GPT-3, and GPT-4.

This post is about the way that computation is structured inside of a transformer.

Internally, these models pass information around in a constrained way that feels strange and limited at first glance.

Specifically, inside the "program" implemented by a transformer, each segment of "code" can only access a subset of the program's "state." If the program computes a value, and writes it into the state, that doesn't make the value available to any block of code that might run after the write; instead, only some operations can access the value, while others are prohibited from seeing it.

This sounds vaguely like the kind of constraint that human programmers often put on themselves: "separation of concerns," "no global variables," "your function should only take the inputs it needs," that sort of thing.

However, the apparent analogy is misleading. The transformer constraints don't look much like anything that a human programmer would write, at least under normal circumstances. And the rationale behind them is very different from "modularity" or "separation of concerns."

(Domain experts know all about this already -- this is a pedagogical post for everyone else.)

1. setting the stage

For concreteness, let's think about a transformer that is a causal language model.

So, something like GPT-3, or the model that wrote text for @nostalgebraist-autoresponder.

Roughly speaking, this model's input is a sequence of words, like ["Fido", "is", "a", "dog"].

Since the model needs to know the order the words come in, we'll include an integer offset alongside each word, specifying the position of this element in the sequence. So, in full, our example input is

    [
      ("Fido", 0),
      ("is", 1),
      ("a", 2),
      ("dog", 3),
    ]

The model itself -- the neural network -- can be viewed as a single long function, which operates on a single element of the sequence. Its task is to output the next element.

Let's call the function f. If f does its job perfectly, then when applied to our example sequence, we will have

    f("Fido", 0) = "is"
    f("is", 1) = "a"
    f("a", 2) = "dog"

(Note: I've omitted the index from the output type, since it's always obvious what the next index is. Also, in reality the output type is a probability distribution over words, not just a word; the goal is to put high probability on the next word. I'm ignoring this to simplify exposition.)

You may have noticed something: as written, this seems impossible!

Like, how is the function supposed to know that after ("a", 2), the next word is "dog"!? The word "a" could be followed by all sorts of things.

What makes "dog" likely, in this case, is the fact that we're talking about someone named "Fido."

That information isn't contained in ("a", 2). To do the right thing here, you need info from the whole sequence thus far -- from "Fido is a", as opposed to just "a".

How can f get this information, if its input is just a single word and an index?

This is possible because f isn't a pure function. The program has an internal state, which f can access and modify.

But f doesn't just have arbitrary read/write access to the state. Its access is constrained, in a very specific sort of way.

2. transformer-style programming

Let's get more specific about the program state.

The state consists of a series of distinct "memory regions" or "blocks," which have an order assigned to them.

Let's use the notation memory_i for these. The first block is memory_0, the second is memory_1, and so on.

In practice, a small transformer might have around 10 of these blocks, while a very large one might have 100 or more.

Each block contains a separate data-storage "cell" for each offset in the sequence.

For example, memory_0 contains a cell for position 0 ("Fido" in our example text), and a cell for position 1 ("is"), and so on. Meanwhile, memory_1 contains its own, distinct cells for each of these positions. And so does memory_2, etc.

So the overall layout looks like:

    memory_0: [cell 0, cell 1, ...]
    memory_1: [cell 0, cell 1, ...]
    [...]

Our function f can interact with this program state. But it must do so in a way that conforms to a set of rules.

Here are the rules:

The function can only interact with the blocks by using a specific instruction.

This instruction is an "atomic write+read". It writes data to a block, then reads data from that block for f to use.

When the instruction writes data, it goes in the cell specified in the function offset argument. That is, the "i" in f(..., i).

When the instruction reads data, the data comes from all cells up to and including the offset argument.

The function must call the instruction exactly once for each block.

These calls must happen in order. For example, you can't do the call for memory_1 until you've done the one for memory_0.

Here's some pseudo-code, showing a generic computation of this kind:

    f(x, i) {
        calculate some things using x and i;
        // next 2 lines are a single instruction
        write to memory_0 at position i;
        z0 = read from memory_0 at positions 0...i;
        calculate some things using x, i, and z0;
        // next 2 lines are a single instruction
        write to memory_1 at position i;
        z1 = read from memory_1 at positions 0...i;
        calculate some things using x, i, z0, and z1;
        [etc.]
    }
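For readers who prefer something runnable, here is a minimal Python sketch of the same shape. Everything in it is a toy assumption for illustration: the `BlockMemory` class, the `write_read` method name, and the "calculations" (which just join words together) are made up, and the sketch does not enforce the "exactly once per block, in order" rules. It simply follows them.

```python
class BlockMemory:
    """Toy program state (hypothetical): one dict of cells per block."""

    def __init__(self, n_blocks):
        self.blocks = [{} for _ in range(n_blocks)]

    def write_read(self, block, i, value):
        """The single allowed instruction: write cell i, then read cells 0..i."""
        cells = self.blocks[block]
        cells[i] = value
        # Raises KeyError if an earlier position was never written.
        return [cells[j] for j in range(i + 1)]


def f(x, i, memory):
    # Nothing has been read yet, so what we write to memory_0
    # can depend only on (x, i). Here we simply store the word.
    z0 = memory.write_read(0, i, x)

    # z0 holds the raw words at positions 0..i, so this step is the
    # first one that can combine information from several words.
    context = " ".join(z0)
    z1 = memory.write_read(1, i, context)

    return z0, z1


memory = BlockMemory(n_blocks=2)
for i, word in enumerate(["Fido", "is", "a"]):
    z0, z1 = f(word, i, memory)

print(z0)  # what the call at position 2 read from memory_0
print(z1)  # what the call at position 2 read from memory_1
```

After the last call, `z0` contains the three isolated words, while `z1` contains values that were each computed with access to everything before them.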

The rules impose a tradeoff between the amount of processing required to produce a value, and how early the value can be accessed within the function body.

Consider the moment when data is written to memory_0. This happens before anything is read (even from memory_0 itself).

So the data in memory_0 has been computed only on the basis of individual inputs like ("a", 2). It can't leverage any information about multiple words and how they relate to one another.

But just after the write to memory_0, there's a read from memory_0. This read pulls in data computed by f when it ran on all the earlier words in the sequence.

If we're processing ("a", 2) in our example, then this is the point where our code is first able to access facts like "the word 'Fido' appeared earlier in the text."

However, we still know less than we might prefer.

Recall that memory_0 gets written before anything gets read. The data living there only reflects what f knows before it can see all the other words, while it still only has access to the one word that appeared in its input.

The data we've just read does not contain a holistic, "fully processed" representation of the whole sequence so far ("Fido is a"). Instead, it contains:

a representation of ("Fido", 0) alone, computed in ignorance of the rest of the text

a representation of ("is", 1) alone, computed in ignorance of the rest of the text

a representation of ("a", 2) alone, computed in ignorance of the rest of the text

Now, once we get to memory_1, we will no longer face this problem. Stuff in memory_1 gets computed with the benefit of whatever was in memory_0. The step that computes it can "see all the words at once."

Nonetheless, the whole function is affected by a generalized version of the same quirk.

All else being equal, data stored in later blocks ought to be more useful. Suppose for instance that

memory_4 gets read/written 20% of the way through the function body, and

memory_16 gets read/written 80% of the way through the function body

Here, strictly more computation can be leveraged to produce the data in memory_16. Calculations which are simple enough to fit in the program, but too complex to fit in just 20% of the program, can be stored in memory_16 but not in memory_4.

All else being equal, then, we'd prefer to read from memory_16 rather than memory_4 if possible.

But in fact, we can only read from memory_16 once -- at a point 80% of the way through the code, when the read/write happens for that block.

The general picture looks like:

The early parts of the function can see and leverage what got computed earlier in the sequence -- by the same early parts of the function. This data is relatively "weak," since not much computation went into it. But, by the same token, we have plenty of time to further process it.

The late parts of the function can see and leverage what got computed earlier in the sequence -- by the same late parts of the function. This data is relatively "strong," since lots of computation went into it. But, by the same token, we don't have much time left to further process it.

3. why?

There are multiple ways you can "run" the program specified by f.

Here's one way, which is used when generating text, and which matches popular intuitions about how language models work:

First, we run f("Fido", 0) from start to end. The function returns "is." As a side effect, it populates cell 0 of every memory block.

Next, we run f("is", 1) from start to end. The function returns "a." As a side effect, it populates cell 1 of every memory block.

Etc.
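As a rough sketch, this one-word-at-a-time mode might look like the following in Python. The `NEXT_WORD` lookup table is a made-up stand-in for whatever a trained model has learned, and `generate` is a hypothetical helper name; the only point is the control flow, where each call to `f` runs start to finish and fills one cell in every block as a side effect.

```python
# Hypothetical stand-in for learned behavior: maps the context so far to the next word.
NEXT_WORD = {
    ("Fido",): "is",
    ("Fido", "is"): "a",
    ("Fido", "is", "a"): "dog",
}


def f(x, i, memory):
    # write+read on memory_0: store the word, read words at positions 0..i
    memory[0][i] = x
    z0 = tuple(memory[0][j] for j in range(i + 1))

    # write+read on memory_1: store the context, read contexts at positions 0..i
    memory[1][i] = z0
    z1 = [memory[1][j] for j in range(i + 1)]

    # Predict from the most processed thing we have read.
    return NEXT_WORD[z1[-1]]


def generate(first_word, n_steps):
    memory = [{}, {}]  # two blocks, each a dict from position to cell contents
    words = [first_word]
    for i in range(n_steps):
        # Run f from start to end on the newest word; its output becomes the next input.
        words.append(f(words[i], i, memory))
    return words


print(generate("Fido", 3))
```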

If we're running the code like this, the constraints described earlier feel weird and pointlessly restrictive.

By the time we're running f("is", 1), we've already populated some data into every memory block, all the way up to memory_16 or whatever.

This data is already there, and contains lots of useful insights.

And yet, during the function call f("is", 1), we "forget about" this data -- only to progressively remember it again, block by block. The early parts of this call have only memory_0 to play with, and then memory_1, etc. Only at the end do we allow access to the juicy, extensively processed results that occupy the final blocks.

Why? Why not just let this call read memory_16 immediately, on the first line of code? The data is sitting there, ready to be used!

Why? Because the constraint enables a second way of running this program.

The second way is equivalent to the first, in the sense of producing the same outputs. But instead of processing one word at a time, it processes a whole sequence of words, in parallel.

Here's how it works:

In parallel, run f("Fido", 0) and f("is", 1) and f("a", 2), up until the first write+read instruction. You can do this because the functions are causally independent of one another, up to this point. We now have 3 copies of f, each at the same "line of code": the first write+read instruction.

Perform the write part of the instruction for all the copies, in parallel. This populates cells 0, 1 and 2 of memory_0.

Perform the read part of the instruction for all the copies, in parallel. Each copy of f receives some of the data just written to memory_0, covering offsets up to its own. For instance, f("is", 1) gets data from cells 0 and 1.

In parallel, continue running the 3 copies of f, covering the code between the first write+read instruction and the second.

Perform the second write. This populates cells 0, 1 and 2 of memory_1.

Perform the second read.

Repeat like this until done.
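The steps above can be sketched next to the one-word-at-a-time version, to check that the two produce the same thing. This is a toy under stated assumptions: `start`, `to_cell`, and `after_read` are hypothetical helpers with made-up arithmetic, and the "parallel" version uses ordinary list comprehensions to stand in for work that an accelerator would actually do simultaneously.

```python
NUM_BLOCKS = 3  # hypothetical; chosen small for readability


def start(word, offset):
    """Code that runs before the first write+read (toy arithmetic)."""
    return len(word) + 7 * offset


def to_cell(state):
    """The value a copy of f writes into its own cell (toy arithmetic)."""
    return state % 97


def after_read(state, visible_cells):
    """Code that runs after a read, up to the next write (toy arithmetic)."""
    return (31 * state + sum(visible_cells)) % 1009


def run_sequential(words):
    """One call of f at a time, each running through every block."""
    memory = [[] for _ in range(NUM_BLOCKS)]
    outputs = []
    for offset, word in enumerate(words):
        state = start(word, offset)
        for block in memory:
            block.append(to_cell(state))  # write cell `offset`
            state = after_read(state, block[: offset + 1])  # read cells 0..offset
        outputs.append(state)
    return outputs, memory


def run_parallel(words):
    """All copies of f advance together, one write+read step at a time."""
    states = [start(w, i) for i, w in enumerate(words)]
    memory = []
    for _ in range(NUM_BLOCKS):
        block = [to_cell(s) for s in states]  # write, for all copies
        states = [after_read(s, block[: i + 1]) for i, s in enumerate(states)]  # read
        memory.append(block)
    return states, memory
```

For any list of words, `run_sequential(words)` and `run_parallel(words)` return identical outputs and identical block contents, because each copy only ever reads cells at offsets up to its own from the block it has just written to.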

Observe that this mode of operation only works if you have a complete input sequence ready before you run anything.

(You can't parallelize over later positions in the sequence if you don't know, yet, what words they contain.)

So, this won't work when the model is generating text, word by word.

But it will work if you have a bunch of texts, and you want to process those texts with the model, for the sake of updating the model so it does a better job of predicting them.

This is called "training," and it's how neural nets get made in the first place. In our programming analogy, it's how the code inside the function body gets written.

The fact that we can train in parallel over the sequence is a huge deal, and probably accounts for most (or even all) of the benefit that transformers have over earlier architectures like RNNs.

Accelerators like GPUs are really good at doing the kinds of calculations that happen inside neural nets, in parallel.

So if you can make your training process more parallel, you can effectively multiply the computing power available to it, for free. (I'm omitting many caveats here -- see this great post for details.)

Transformer training isn't maximally parallel. It's still sequential in one "dimension," namely the layers, which correspond to our write+read steps here. You can't parallelize those.

But it is, at least, parallel along some dimension, namely the sequence dimension.

The older RNN architecture, by contrast, was inherently sequential along both these dimensions. Training an RNN is, effectively, a nested for loop. But training a transformer is just a regular, single for loop.
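A rough sketch of that loop structure, following the framing above. The function names are hypothetical, and the sketch only counts steps that have to wait on a previous step; it ignores how much work each step does and the caveats mentioned earlier.

```python
def rnn_sequential_steps(num_layers, seq_len):
    """Nested loop: sequential over layers and over positions."""
    steps = 0
    for _layer in range(num_layers):
        for _position in range(seq_len):  # each position waits on the one before it
            steps += 1
    return steps


def transformer_sequential_steps(num_layers, seq_len):
    """Single loop: sequential over layers only."""
    steps = 0
    for _layer in range(num_layers):
        # all seq_len positions are handled together in one parallel step
        steps += 1
    return steps
```

With 16 layers and a 100-word sequence, the first count is 16 × 100 = 1600 and the second is 16.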

4. tying it together

The "magical" thing about this setup is that both ways of running the model do the same thing. You are, literally, doing the same exact computation. The function can't tell whether it is being run one way or the other.

This is crucial, because we want the training process -- which uses the parallel mode -- to teach the model how to perform generation, which uses the sequential mode. Since both modes look the same from the model's perspective, this works.

This constraint -- that the code can run in parallel over the sequence, and that this must do the same thing as running it sequentially -- is the reason for everything else we noted above.

Earlier, we asked: why can't we allow later (in the sequence) invocations of f to read earlier data out of blocks like memory_16 immediately, on "the first line of code"?

And the answer is: because that would break parallelism. You'd have to run f("Fido", 0) all the way through before even starting to run f("is", 1).

By structuring the computation in this specific way, we provide the model with the benefits of recurrence -- writing things down at earlier positions, accessing them at later positions, and writing further things down which can be accessed even later -- while breaking the sequential dependencies that would ordinarily prevent a recurrent calculation from being executed in parallel.

In other words, we've found a way to create an iterative function that takes its own outputs as input -- and does so repeatedly, producing longer and longer outputs to be read off by its next invocation -- with the property that this iteration can be run in parallel.

We can run the first 10% of every iteration -- of f() and f(f()) and f(f(f())) and so on -- at the same time, before we know what will happen in the later stages of any iteration.

The call f(f()) uses all the information handed to it by f() -- eventually. But it cannot make any requests for information that would leave itself idling, waiting for f() to fully complete.

Whenever f(f()) needs a value computed by f(), it is always the value that f() -- running alongside f(f()), simultaneously -- has just written down, a mere moment ago.

No dead time, no idling, no waiting-for-the-other-guy-to-finish.

p.s.

The "memory blocks" here correspond to what are called "keys and values" in usual transformer lingo.

If you've heard the term "KV cache," it refers to the contents of the memory blocks during generation, when we're running in "sequential mode."

Usually, during generation, one keeps this state in memory and appends a new cell to each block whenever a new token is generated (and, as a result, the sequence gets longer by 1).

This is called "caching" to contrast it with the worse approach of throwing away the block contents after each generated token, and then re-generating them by running f on the whole sequence so far (not just the latest token). And then having to do that over and over, once per generated token.
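To make the difference concrete, here is a toy comparison of the two approaches. Everything in it is an assumption for illustration: the arithmetic inside f, the three blocks, the small vocabulary, and the `counter` argument, which exists only to count how many times f runs.

```python
NUM_BLOCKS = 3  # hypothetical; chosen small for readability
VOCAB = ["Fido", "is", "a", "good", "dog"]  # made-up toy vocabulary


def f(word, offset, memory, counter):
    """Toy f: per block, write cell `offset`, then read cells 0..offset."""
    counter[0] += 1  # bookkeeping only: count calls to f
    state = len(word) + 7 * offset
    for block in memory:
        block.append(state % 97)  # write (block already holds cells 0..offset-1)
        state = (31 * state + sum(block)) % 1009  # read cells 0..offset
    return VOCAB[state % len(VOCAB)]


def generate_cached(first_word, num_new):
    """Keep the blocks around; each new token costs one call to f."""
    counter = [0]
    memory = [[] for _ in range(NUM_BLOCKS)]
    words = [first_word]
    for offset in range(num_new):
        words.append(f(words[-1], offset, memory, counter))
    return words, counter[0]


def generate_uncached(first_word, num_new):
    """Throw the blocks away after each token and rebuild them from scratch."""
    counter = [0]
    words = [first_word]
    for _ in range(num_new):
        memory = [[] for _ in range(NUM_BLOCKS)]  # discard old block contents
        for offset, word in enumerate(words):  # re-run f on the whole sequence so far
            next_word = f(word, offset, memory, counter)
        words.append(next_word)
    return words, counter[0]
```

Both functions generate the same words. For 5 new tokens, the cached version calls f 5 times, while the uncached version calls it 1 + 2 + 3 + 4 + 5 = 15 times, and the gap grows quadratically with length.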

#ai tag#is there some standard CS name for the thing i'm talking about here?#i feel like there should be#but i never heard people mention it#(or at least i've never heard people mention it in a way that made the connection with transformers clear)

313 notes

Text

criminal minds 18x02 initial thoughts (SPOILERS SPOILERS SPOILERS)

tara/rebecca sex scene whoaaaaa, NIIICE. it's so awesome that aisha got to direct this episode so she got a lot of control over this scene. I'm so happy for Tara even if i'd rather she be with Emily lol.

get your tomatoes and rocks ready for throwing bc I am enjoying the continued Voit storyline hahaha. I laughed SO HARD when Voit called Rossi dad, oh my GOD. it's KILLING me that Rossi has to ROLEPLAY AS VOIT'S DAD AJkslkjdskaldjsla the Voit torture on Rossi WILL NEVER END. And Voit was so twisted mentally by Rossi that even through his amnesia he recognizes the connection they have. It's sooo funny! And just fucking perfect, Voit is the biggest thorn in rossi's side and I love it.

As for voit's amnesia, I went into this season thinking he was probably faking it and now I don't think he's faking it, so what I think will happen is he will start to regain his memories, but since he has empathy now he will struggle to come to terms with who he is and feel guilty about it. (And Luke will continue to think he's a big fakey liar man hahahaha)

cute scene with Penelope and JJ. <3 Pen offering to make Michael's birthday cake and JJ calling her "Mistress Penelope Grace" was so cute.

Tyler my cute bb boy, an official member of the team now <3 turns out all they had to do was pull like one tiny string (because come on, the BAU, jewel of the FBI, can get pretty much anything they want). "Big boy bullets" ahahaha. and then he couldn't keep the unsub from killing himself and he just felt sooo guilty about it. shhh, come to me, Tyty, I will take away the pain.

TARA GETTING TO USE HER FORENSIC PSYCHOLOGY SKILLS! yayyyyyy!

no real Garvez this episode :( pretty much what we got was the equivalent of the promo photo of them standing next to each other, but hey, THEY WERE STANDING NEXT TO EACH OTHER

there were a few Penelope and Tyler scenes which I am choosing to allow because I feel like they were there just to show that there is zero sexual whatever between them anymore, they're just coworkers and friends now, yup yup yupppp. Unfortch Tyler is also a computer expert (although obviously not as good as Penelope) so they would team up when it comes to computer things. though I did not enjoy Tyler offering to watch the torture porn so Penelope wouldn't have to, EXCUSE ME, THAT'S LUKE'S JOB. hmph

good creepy fucked up unsub of the week, and I prefer the serialized aspect to Evolution so I am really glad that at least the unsub of the week is still connected to the larger Voit/network storyline. I'm so curious to know what happens next!

they've mentioned BAUgate multiple times so I just know it'll become important at some time this season. poor JJ. she's getting mentally DESTROYED this season D:

and of course, will. :( I wasn't really shocked but it's just because I knewwwww it was coming. I personally don't have a problem with how it went down. sometimes.. people just die, senselessly, randomly. I knew this (death related to his earlier cancer scare) would be a possibility so I kind of made my peace with this potential outcome a while ago. it's horrible any time a character dies from being murdered, I mean, that just adds an extra layer or 5 of trauma for the characters. random death at least is.. natural? idk. in any case I think the next episode is where it's really gonna hit home. watching JJ grieve is gonna be terribleeeeeee.

26 notes

Text

Al-an but in an AU where he had no survival instinct and the network decides he needs to get his ass in gear and hires Robin, a survival expert, to teach him how to survive in survival bootcamp

It gets even funnier because she's one of those survival extremists and he's all couch potato, sit in front of a computer, I'll die if I touch grass energy.

Half the time it's just Robin traumatizing the poor guy by hunting bone sharks with spears and catching and eating raw peepers.

Like every other day he's contacting the network requesting a tranq gun because he's half convinced she's turning feral. And might come for his life Lmfao

#al an#Robin ayou#Au#She literally has to drag his sad ass outside because he's convinced he'll get ticks#He's especially pathetic#And shes unhinged#Girl licks rocks for her daily salt intake and will fight a moose for it#AL-ans idea of a life threatening situtaion is no wifi#Al-an/robin#al an x robin#Al-an#al an subnautica#al an/robin#Sbz#subnautica

76 notes

Text

Michael de Adder :: @deAdder :: The Globe and Mail

* * * *

LETTERS FROM AN AMERICAN

February 2, 2025

Heather Cox Richardson

Feb 03, 2025

Billionaire Elon Musk’s team yesterday took control of the Treasury’s payment system, thus essentially gaining access to the checkbook with which the United States handles about $6 trillion annually and to all the financial information of Americans and American businesses with it. Apparently, it did not stop there.

Today Ellen Knickmeyer of the Associated Press reported that yesterday two top security officials from the U.S. Agency for International Development (USAID) tried to stop people associated with Musk’s Department of Government Efficiency, or DOGE, from accessing classified information they did not have security clearance to see. The Trump administration put the officials on leave, and the DOGE team gained access to the information.

Vittoria Elliott of Wired has identified those associated with Musk’s takeover as six “engineers who are barely out of—and in at least one case, purportedly still in—college.” They are connected either to Musk or to his long-time associate Peter Thiel, who backed J.D. Vance’s Senate run eighteen months before he became Trump’s vice presidential running mate. Their names are Akash Bobba, Edward Coristine, Luke Farritor, Gautier Cole Killian, Gavin Kliger, and Ethan Shaotran, and they have little to no experience in government.

Public policy expert Dan Moynihan told reporter Elliott that the fact these people “are not really public officials” makes it hard for Congress to intervene. “So this feels like a hostile takeover of the machinery of governments by the richest man in the world,” he said. Law professor Nick Bednar noted that “it is very unlikely” that the engineers “have the expertise to understand either the law or the administration needs that surround these agencies.”

After Musk’s team breached the USAID computers, cybersecurity specialist Matthew Garrett posted: “Random computers being plugged into federal networks is obviously terrifying in terms of what data they're deliberately accessing, but it's also terrifying because it implies controls are being disabled—unmanaged systems should never have access to this data. Who else has access to those systems?”

USAID receives foreign policy guidance from the State Department. Intelligence agencies must now assume U.S. intelligence systems are insecure.

Musk’s response was to post: “USAID is a criminal organization. Time for it to die.” Also last night, according to Sam Stein of The Bulwark, “the majority of staff in the legislative and public affairs bureau lost access to their emails, implying they’ve been put on admin leave although this was never communicated to them.”

Congress established USAID in 1961 to bring together the many different programs that were administering foreign aid. Focusing on long-term socioeconomic development, USAID has a budget of more than $50 billion, less than 1% of the U.S. annual budget. It is one of the largest aid agencies in the world.

Musk is unelected, and it appears that DOGE has no legal authority. As political scientist Seth Masket put it in Tusk: “Elon Musk is not a federal employee, nor has he been appointed by the President nor approved by the Senate to have any leadership role in government. The ‘Department of Government Efficiency,’ announced by Trump in a January 20th executive order, is not truly any sort of government department or agency, and even the executive order uses quotes in the title. It’s perfectly fine to have a marketing gimmick like this, but DOGE does not have power over established government agencies, and Musk has no role in government. It does not matter that he is an ally of the President. Musk is a private citizen taking control of established government offices. That is not efficiency; that is a coup.”

DOGE has simply taken over government systems. Musk, using President Donald Trump’s name, is personally deciding what he thinks should be cut from the U.S. government.

Today, Musk reposted a social media post from MAGA religious extremist General Mike Flynn, who resigned from his position as Trump’s national security advisor in 2017 after pleading guilty to secret conversations with a Russian agent—for which Trump pardoned him—and who publicly embraced the QAnon conspiracy theory. In today’s post, Flynn complained about “the ‘Lutheran’ faith” and, referring to federal grants provided to Lutheran Family Services and affiliated organizations, said, “this use of ‘religion’ as a money laundering operation must end.” Musk added: “The [DOGE] team is rapidly shutting down these illegal payments.”

In fact, this is money appropriated by Congress, and its payment is required by law. Republican lawmakers have pushed government subsidies and grants toward religious organizations for years, and Lutheran Social Services is one of the largest employers in South Dakota, where it operates senior living facilities.

South Dakota is the home of Senate majority leader John Thune, who has not been a strong Trump supporter, as well as Homeland Security secretary nominee Kristi Noem.

The news that DOGE has taken over U.S. government computers is not the only bombshell this weekend.

Another is that Trump has declared a trade war with the top trading partners of the United States: Mexico, Canada, and China. Although his first administration negotiated the current trade agreement between the U.S., Mexico, and Canada, on Saturday Trump broke the terms of that treaty.

He slapped tariffs of 25% on goods coming from Mexico and Canada, tariffs of 10% on Canadian energy, and tariffs of 10% on goods coming from China. He said he was doing so to force Mexico and Canada to do more about undocumented migration and drug trafficking, but while precursor chemicals to make fentanyl come from China and undocumented migrants come over the southern border with Mexico, Canada accounts for only about 1% of both. Further, Trump has diverted Immigration and Customs Enforcement agents combating drug trafficking to his immigration sweeps.

As soon as he took office, Trump designated Mexican drug cartels as foreign terrorist organizations, and on Friday, Secretary of Defense Pete Hegseth responded that “all options will be on the table” when a Fox News Channel host asked if the military will strike within Mexico. Today Trump was clearer: he posted on social media that without U.S. trade—which Trump somehow thinks is a “massive subsidy”—“Canada ceases to exist as a viable Country. Harsh but true! Therefore, Canada should become our Cherished 51st State. Much lower taxes, and far better military protection for the people of Canada—AND NO TARIFFS!”

Trump inherited the best economy in the world from his predecessor, President Joe Biden, but on Friday, as soon as White House press secretary Karoline Leavitt confirmed that Trump would levy the tariffs, the stock market plunged. Trump, who during his campaign insisted that tariffs would boost the economy, today said that Americans could feel “SOME PAIN” from them. He added “BUT WE WILL MAKE AMERICA GREAT AGAIN, AND IT WILL ALL BE WORTH THE PRICE THAT MUST BE PAID.” Tonight, stock market futures dropped 450 points before trading opens tomorrow.

Mexican president Claudia Sheinbaum wrote, “We categorically reject the White House’s slander that the Mexican government has alliances with criminal organizations, as well as any intention of meddling in our territory,” and has promised retaliatory tariffs. China noted that it has been working with the U.S. to regulate precursor chemicals since 2019 and said it would sue the U.S. before the World Trade Organization.

Canada’s prime minister Justin Trudeau announced more than $100 billion in retaliatory 25% tariffs and then spoke directly to Americans. Echoing what economists have said all along, Trudeau warned that tariffs would cost jobs, raise prices, and limit the precious metals necessary for U.S. security. But then he turned from economics to principles.

“As President John F. Kennedy said many years ago,” Trudeau began, “geography has made us neighbours. History has made us friends, economics has made us partners and necessity has made us allies.” He noted that “from the beaches of Normandy to the mountains of the Korean Peninsula, from the fields of Flanders to the streets of Kandahar,” Canadians “have fought and died alongside you.”

“During the summer of 2005, when Hurricane Katrina ravaged your great city of New Orleans, or mere weeks ago when we sent water bombers to tackle the wildfires in California. During the day, the world stood still—Sept. 11, 2001—when we provided refuge to stranded passengers and planes, we were always there, standing with you, grieving with you, the American people.

“Together, we’ve built the most successful economic, military and security partnership the world has ever seen. A relationship that has been the envy of the world…. Unfortunately, the actions taken today by the White House split us apart instead of bringing us together.”

Trudeau said Canada’s response would “be far reaching and include everyday items such as American beer, wine and bourbon, fruits and fruit juices, including orange juice, along with vegetables, perfume, clothing and shoes. It’ll include major consumer products like household appliances, furniture and sports equipment, and materials like lumber and plastics, along with much, much more.” He assured Canadians: “[W]e are all in this together. The Canadian government, Canadian businesses, Canadian organized labour, Canadian civil society, Canada’s premiers, and tens of millions of Canadians from coast to coast to coast are aligned and united. This is Team Canada at its best.”

Canadian provincial leaders said they were removing alcohol from Republican-dominated states, and Canadian member of parliament Charlie Angus noted that the Liquor Control Board of Ontario buys more wine by dollar value than any other organization in the world and that Canada is the number one export market for Kentucky spirits. The Liquor Control Board of Ontario has stopped all purchases of American beer, wine, and spirits, turning instead to allies and local producers. Canada’s Irving Oil, which provides heating oil to New England, has already told customers that prices will reflect the tariffs.

In a riveting piece today, in his Thinking about…, scholar of authoritarianism Timothy Snyder wrote that “[t]he people who now dominate the executive branch of the government…are acting, quite deliberately, to destroy the nation.” “Think of the federal government as a car,” he wrote. “You might have thought that the election was like getting the car serviced. Instead, when you come into the shop, the mechanics, who somehow don’t look like mechanics, tell you that they have taken the parts of your car that work and sold them and kept the money. And that this was the most efficient thing to do. And that you should thank them.”

On Friday, James E. Dennehy of the FBI’s New York field office told his staff that they are “in a battle of our own, as good people are being walked out of the F.B.I. and others are being targeted because they did their jobs in accordance with the law and F.B.I. policy.” He vowed that he, anyway, is going to “dig in.”

#deAdder#Michael de Adder#The globe and Mail#Heather Cox Richardson#Letters From An American#Musk#tariffs#coup#Timothy Snyder#FBI#USAID#DOGE#constitutional crisis#unprecidented

40 notes

Note

I was reading through your blog for fun (a lotta great posts on there, by the way!) and I decided to see what your first ever blog post was. Imagine my surprise when it was an eerily on-point description of a modern generative AI, written in 2007. Differs on some details, but still, get a load of Nostradamus over here!

Link for the curious.

Back in 2007 I was a comp sci major who was really into neural networks and expert systems and had been convinced by a series of books that AI were going to be the future, just ... right around the corner.

And I was double majoring in English, so spending a lot of time looking at what people were doing with language learning, which was ... more or less nothing. There were Markov chain generators, but the neural nets were just too small, with not enough data to them, though at the time it just seemed like it might be a dead end.

I was just convinced that there had to be a way to get a computer to output text on the basis of either expert system rules or statistical inference or something, if we had enough data, if we could handle the flow of data, if computers would only get better. I was tinkering with neural nets, setting up some basic ones to try to tell the difference between a cat and a dog, but there was so much that hadn't been invented yet.

I chattered about this "AuthorBot" idea to a bunch of people; my blog was a repository for things that I knew I was boring everyone with (and still is).

I do think there's some alternate world where I went into academia when I graduated and got a minor role on some team that was working on neural networks. I am a competent programmer, but only competent, so I wouldn't have led anything, and most likely would have been stunned by the series of papers that unfolded over the next fifteen years, forced to try to eke out a niche and play catch up.

47 notes

Text

I stopped using my cellphone for regular calls and text messages last fall and switched to Signal. I wasn’t being paranoid—or at least I don’t think I was. I worked in the National Security Council, and we were told that China had compromised all major U.S. telecommunications companies and burrowed deep inside their networks. Beijing had gathered information on more than a million Americans, mainly in the Washington, D.C., area. The Chinese government could listen in to phone calls and read text messages. Experts call the Chinese state-backed group responsible Salt Typhoon, and the vulnerabilities it exploited have not been fixed. China is still there.

Telecommunications systems aren’t the only ones compromised. China has accessed enormous quantities of data on Americans for more than a decade. It has hacked into health-insurance companies and hotel chains, as well as security-clearance information held by the Office of Personnel Management.

The jaded response here is “All countries spy. So what?” But the spectacular surprise attacks that Ukraine and Israel have pulled off against their enemies suggest just how serious such penetration can become. In Operation Spiderweb, Ukraine smuggled attack drones on trucks with unwitting drivers deep inside of Russia, and then used artificial intelligence to simultaneously attack four military bases and destroy a significant number of strategic bombers, which are part of Russia’s nuclear triad. Israel created a real pager-production company in Hungary to infiltrate Hezbollah’s global supply chains and booby-trap its communication devices, killing or maiming much of the group’s leadership in one go. Last week, in Operation Rising Lion, Israel assassinated many top Iranian military leaders simultaneously and attacked the country’s nuclear facilities, thanks in part to a drone base it built inside Iran.

In each case, a resourceful, determined, and imaginative state used new technologies and data to do what was hitherto deemed impossible. America’s adversaries are also resourceful, determined, and imaginative.

Just think about what might happen if a U.S.-China war broke out over Taiwan.

A Chinese state-backed group called Volt Typhoon has been preparing plans to attack crucial infrastructure in the United States should the two countries ever be at war. As Jen Easterly put it in 2024 when she was head of the Cybersecurity and Infrastructure Security Agency (CISA), China is planning to “launch destructive cyber-attacks in the event of a major crisis or conflict with the United States,” including “the disruption of our gas pipelines; the pollution of our water facilities; the severing of our telecommunications; the crippling of our transportation systems.”

The Biden administration took measures to fight off these cyberattacks and harden the infrastructure. Joe Biden also imposed some sanctions on China and took some specific measures to limit America’s exposure; he cut off imports of Chinese electric vehicles because of national-security concerns. Biden additionally signed a bill to ban TikTok, but President Donald Trump has issued rolling extensions to keep the platform functioning in the U.S. America and its allies will need to think hard about where to draw the line in the era of the Internet of Things, which connects nearly everything and could allow much of it—including robots, drones, and cloud computing—to be weaponized.

China isn’t the only problem. According to the U.S. Intelligence Community’s Annual Threat Assessment for this year, Russia is developing a new device to detonate a nuclear weapon in space with potentially “devastating” consequences. A Pentagon official last year said the weapon could pose “a threat to satellites operated by countries and companies around the globe, as well as to the vital communications, scientific, meteorological, agricultural, commercial, and national security services we all depend upon. Make no mistake, even if detonating a nuclear weapon in space does not directly kill people, the indirect impact could be catastrophic to the entire world.” The device could also render Trump’s proposed “Golden Dome” missile shield largely ineffective.

Americans can expect a major adversary to use drones and AI to go after targets deep inside the United States or allied countries. There is no reason to believe that an enemy wouldn’t take a page out of the Israeli playbook and go after leadership. New technologies reward acting preemptively, catching the adversary by surprise—so the United States may not get much notice. A determined adversary could even cut the undersea cables that allow the internet to function. Last year, vessels linked to Russia and China appeared to have severed those cables in Europe on a number of occasions, supposedly by accident. In a concerted hostile action, Moscow could cut or destroy these cables at scale.

Terrorist groups are less capable than state actors—they are unlikely to destroy most of the civilian satellites in space, for example, or collapse essential infrastructure—but new technologies could expand their reach too. In their book The Coming Wave, Mustafa Suleyman and Michael Bhaskar described some potential attacks that terrorists could undertake: unleashing hundreds or thousands of drones equipped with automatic weapons and facial recognition on multiple cities simultaneously, say, or even one drone to spray a lethal pathogen on a crowd.

A good deal of American infrastructure is owned by private companies with little incentive to undertake the difficult and costly fixes that might defend against Chinese infiltration. Certainly this is true of telecommunications companies, as well as those providing utilities such as water and electricity. Making American systems resilient could require a major public outlay. But it could cost less than the $150 billion (one estimate has that figure at an eye-popping $185 billion) that the House of Representatives is proposing to appropriate this year to strictly enforce immigration law.

Instead, the Trump administration proposed slashing funding for CISA, the agency responsible for protecting much of our infrastructure against foreign attacks, by $495 million, or approximately 20 percent of its budget. That cut will make the United States more vulnerable to attack.

The response to the drone threat has been no better. Some in Congress have tried to pass legislation expanding government authority to detect and destroy drones over certain kinds of locations, but the most recent effort failed. Senator Rand Paul, who was then the ranking member of the Senate Committee on Homeland Security and Governmental Affairs and is now the chair, said there was no imminent threat and warned against giving the government sweeping surveillance powers, although the legislation entailed nothing of the sort. Senators from both parties have resisted other legislative measures to counter drones.

The United States could learn a lot from Ukraine on how to counter drones, as well as how to use them, but the administration has displayed little interest in doing this. The massively expensive Golden Dome project is solely focused on defending against the most advanced missiles but should be tasked with dealing with the drone threat as well.

Meanwhile, key questions go unasked and unanswered. What infrastructure most needs to be protected? Should aircraft be kept in the open? Where should the United States locate a counter-drone capability?

After 9/11, the United States built a far-reaching homeland-security apparatus focused on counterterrorism. The Trump administration is refocusing it on border security and immigration. But the biggest threat we face is not terrorism, let alone immigration. Those responsible for homeland security should not be chasing laborers on farms and busboys in restaurants in order to meet quotas imposed by the White House.

The wars in Ukraine and the Middle East are giving Americans a glimpse into the battles of the future—and a warning. It is time to prepare.

11 notes

Text

Interesting Papers for Week 19, 2025

Individual-specific strategies inform category learning. Collina, J. S., Erdil, G., Xia, M., Angeloni, C. F., Wood, K. C., Sheth, J., Kording, K. P., Cohen, Y. E., & Geffen, M. N. (2025). Scientific Reports, 15, 2984.

Visual activity enhances neuronal excitability in thalamic relay neurons. Duménieu, M., Fronzaroli-Molinieres, L., Naudin, L., Iborra-Bonnaure, C., Wakade, A., Zanin, E., Aziz, A., Ankri, N., Incontro, S., Denis, D., Marquèze-Pouey, B., Brette, R., Debanne, D., & Russier, M. (2025). Science Advances, 11(4).

The functional role of oscillatory dynamics in neocortical circuits: A computational perspective. Effenberger, F., Carvalho, P., Dubinin, I., & Singer, W. (2025). Proceedings of the National Academy of Sciences, 122(4), e2412830122.

Expert navigators deploy rational complexity–based decision precaching for large-scale real-world planning. Fernandez Velasco, P., Griesbauer, E.-M., Brunec, I. K., Morley, J., Manley, E., McNamee, D. C., & Spiers, H. J. (2025). Proceedings of the National Academy of Sciences, 122(4), e2407814122.

Basal ganglia components have distinct computational roles in decision-making dynamics under conflict and uncertainty. Ging-Jehli, N. R., Cavanagh, J. F., Ahn, M., Segar, D. J., Asaad, W. F., & Frank, M. J. (2025). PLOS Biology, 23(1), e3002978.

Hippocampal Lesions in Male Rats Produce Retrograde Memory Loss for Over‐Trained Spatial Memory but Do Not Impact Appetitive‐Contextual Memory: Implications for Theories of Memory Organization in the Mammalian Brain. Hong, N. S., Lee, J. Q., Bonifacio, C. J. T., Gibb, M. J., Kent, M., Nixon, A., Panjwani, M., Robinson, D., Rusnak, V., Trudel, T., Vos, J., & McDonald, R. J. (2025). Journal of Neuroscience Research, 103(1).

Sensory experience controls dendritic structure and behavior by distinct pathways involving degenerins. Inberg, S., Iosilevskii, Y., Calatayud-Sanchez, A., Setty, H., Oren-Suissa, M., Krieg, M., & Podbilewicz, B. (2025). eLife, 14, e83973.

Distributed representations of temporally accumulated reward prediction errors in the mouse cortex. Makino, H., & Suhaimi, A. (2025). Science Advances, 11(4).

Adaptation optimizes sensory encoding for future stimuli. Mao, J., Rothkopf, C. A., & Stocker, A. A. (2025). PLOS Computational Biology, 21(1), e1012746.

Memory load influences our preparedness to act on visual representations in working memory without affecting their accessibility. Nasrawi, R., Mautner-Rohde, M., & van Ede, F. (2025). Progress in Neurobiology, 245, 102717.

Layer-specific control of inhibition by NDNF interneurons. Naumann, L. B., Hertäg, L., Müller, J., Letzkus, J. J., & Sprekeler, H. (2025). Proceedings of the National Academy of Sciences, 122(4), e2408966122.

Multisensory integration operates on correlated input from unimodal transient channels. Parise, C. V., & Ernst, M. O. (2025). eLife, 12, e90841.3.

Random noise promotes slow heterogeneous synaptic dynamics important for robust working memory computation. Rungratsameetaweemana, N., Kim, R., Chotibut, T., & Sejnowski, T. J. (2025). Proceedings of the National Academy of Sciences, 122(3), e2316745122.

Discriminating neural ensemble patterns through dendritic computations in randomly connected feedforward networks. Somashekar, B. P., & Bhalla, U. S. (2025). eLife, 13, e100664.4.

Effects of noise and metabolic cost on cortical task representations. Stroud, J. P., Wojcik, M., Jensen, K. T., Kusunoki, M., Kadohisa, M., Buckley, M. J., Duncan, J., Stokes, M. G., & Lengyel, M. (2025). eLife, 13, e94961.2.

Representational geometry explains puzzling error distributions in behavioral tasks. Wei, X.-X., & Woodford, M. (2025). Proceedings of the National Academy of Sciences, 122(4), e2407540122.

Deficiency of orexin receptor type 1 in dopaminergic neurons increases novelty-induced locomotion and exploration. Xiao, X., Yeghiazaryan, G., Eggersmann, F., Cremer, A. L., Backes, H., Kloppenburg, P., & Hausen, A. C. (2025). eLife, 12, e91716.4.

Endopiriform neurons projecting to ventral CA1 are a critical node for recognition memory. Yamawaki, N., Login, H., Feld-Jakobsen, S. Ø., Molnar, B. M., Kirkegaard, M. Z., Moltesen, M., Okrasa, A., Radulovic, J., & Tanimura, A. (2025). eLife, 13, e99642.4.

Cost-benefit tradeoff mediates the transition from rule-based to memory-based processing during practice. Yang, G., & Jiang, J. (2025). PLOS Biology, 23(1), e3002987.

Identification of the subventricular tegmental nucleus as brainstem reward center. Zichó, K., Balog, B. Z., Sebestény, R. Z., Brunner, J., Takács, V., Barth, A. M., Seng, C., Orosz, Á., Aliczki, M., Sebők, H., Mikics, E., Földy, C., Szabadics, J., & Nyiri, G. (2025). Science, 387(6732).

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience

12 notes

Note

what is, in your expert opinion, the most wolfgirl-coded piece of hardware? definition of hardware left intentionally vague

okay so there's lots of ways I could've answered this, but I'm going PCI-E wifi cards with two antennas. I picked this one particularly because of the fluffy* heatsink coat, but most of them apply.

reasons:

social (networking equipment) but could be left by itself for long periods of time (lone wolf)

two ears for detecting waves

excellent sense of smell (can sniff packets)

is a girl (all computer hardware is girls)

runner-up answers: MiG-105, gameboy advance SP with wireless adapter, suspension with trailing-arm geometry

*metal heatsinks are fluffy and huggable

8 notes

Text

Why I can’t stand the Pelant Arc

Christopher Pelant is probably one of the most dangerous villains in Bones, presented as being a total computer master hacker and all-around deranged individual. Though the idea of a hacker villain is very interesting, the way it was executed is infuriating. It’s clear that nobody in the writers' room knew how malware and computers work in the real world.

I am not an expert in computer science, but I am currently a cybersecurity and computer networking operations major. I’m not very far in, so my knowledge is limited, but I do have a basic understanding of how malware works.

You cannot code malware onto a bone; that is not how computers work, and it’s not how malware works. There is more than one type of malware, and they all work differently, but there is not a single type of malware that could be coded onto a bone.

Malware can be hidden in an image file; that is entirely possible, but it is not what happened in Bones. Angela did not download anything to her computer that could have had a virus hidden in it. When Angela scanned the images of the bone into her computer, she created a brand new image file with a picture of code in it. A picture of code cannot harm your computer. I could print out the code for a virus, scan it into my computer, and make it my desktop background, and absolutely nothing would happen, because it’s not actually code. It’s no more harmful than a picture of a kitten.
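To make that concrete, here's a rough sketch in Python. Everything in it is made up for illustration: the "virus" is a harmless one-liner, and `scan_to_pixels` is a hypothetical stand-in for a scanner, not how any real scanner or image format works. The point is only that a scan is a grid of brightness numbers, and reading numbers doesn't run anything.

```python
# Hypothetical "virus" source. It is harmless: all it would do is flip a flag.
payload_source = "state['infected'] = True"


def scan_to_pixels(text):
    """Stand-in for a scanner: each character becomes a row of 8 pixel
    values (0 or 255). A real scan is a photo, but it is likewise only
    a grid of brightness numbers."""
    return [[255 if (ord(ch) >> bit) & 1 else 0 for bit in range(8)]
            for ch in text]


state = {"infected": False}

# "Scanning" and "viewing" the image only reads and copies numbers.
pixels = scan_to_pixels(payload_source)
brightest = max(value for row in pixels for value in row)

print(state["infected"])  # still False: nothing ever ran the payload

# The payload only does something if a program deliberately treats the
# text as code, e.g. exec(payload_source). A scanner and an image viewer
# have no reason to do that.
```

The flag never changes, because at no point does anything treat the picture as instructions.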

Suspending disbelief about what Pelant does is not difficult for someone who doesn’t know how malware works, but once you understand even the basics, it very quickly falls apart.

The one very unlikely possibility is that Pelant somehow wrote a QR code of sorts into the bone that led Angela’s computer to download and execute a file. I do not believe that is the case, though: for Angela’s computer to automatically open QR codes, download whatever they point to without asking for confirmation, and run anything downloaded without checking would require awful cybersecurity on her machine.

Considering that Angela is shown to be proficient in computer science and how advanced her computer is supposed to be, I refuse to believe that she could be that stupid and have a computer that just downloaded anything it was prompted to download without some type of check. She works with the federal government; there is no way her system could be that insecure.

For the malware on the bone to work it would have to magically have been linked to an executable file of some type. I say magical because this is not how real computers work. Malware on a bone is about as realistic as if Pelant tattooed the virus onto his ass and infected the computer by scanning his cheeks on a printer.

#bones fox#bones tv#christopher pelant#pelant#angela montenegro#I love Angela but jesus christ woman what is up with your computer

26 notes

Text

Man, writing general intro stuff is poison to my brain. I feel like I have this one chance to sell the vibe of the game, and I can’t quite get it to feel right in about four paragraphs. I don’t really like either of them. The first is too conversational and I accidentally wrote it in second person. The other one is clinical and has no flavor.

Idk man this is really the worst part of projects for me.

——

You are a wizard with a day job. The world is mostly mundane. You go to work, you eat, you sleep. And that’s how most people around you live as well. But every so often, when the stars align, some odd shit happens and someone needs 2-4 wizards to come fix it.

Your wizard is a normal person. They have about as much magic skill as the average office worker has tech skill. It’s enough to get by and do their job, and if they were ambitious they should probably learn more, but they’re not experts.

You work alongside orcs and dragonlings who are in about the same boat as you. They come in, sit at their desk for a few hours, use the company issued wand-o-sorting to file some documents so they look busy, and then they go home.

On your walk home, you pass by food carts wheeled by hulking stone constructs, willed to life by the mana stone in their chest. The cobblestone streets and old stone fortress walls around your neighborhood are looking rough these days, covered in graffiti. You swear you voted on some ordinance or another that was supposed to clean that up.

You get to your tower. Your apartment is on the fifth floor. If you got a unit in one of the new mage-bound buildings it would be cheaper, but you’d have to walk up 30 stories. Not worth it.

But it’s the weekend. Your crew is probably already waiting. The adventure boards have been busy lately. You decided on the old count with the vampire bat problem last night. Hopefully the port stones will be loaded already so you can leave right away.

——————

In Weekend Wizards you play as career wizards who have taken up adventuring on the weekends as a hobby. The world is a semi-modern fantasy where people commonly learn magic as a part of their careers. These work wizards might be trained in magic as it relates to their job, but few people ever pursue the practice far enough to be considered an Archmage: a person who has a holistic mastery of magic. Work wizards make up the bulk of the workforce; from doctors down to laborers, almost everyone is trained in at least some magic for their job. People whose jobs use more magic day-to-day have greater mana reserves, but no job is innately better at magic. Waiters, truck drivers, office workers, and ecologists are all wizards with their own expertise and abilities.

The world is a medieval fantasy that has progressed to the level of technology of early analogue computing. Through magic, tech wizards construct Ley networks and rudimentary logic systems out of enchantments. Orbs are user interfaces and runestones are payphones. Bustling towns are built inside of stone-walled keeps, and enchanted forests may be just a day trip for city-goers. All varieties of fantasy races coexist in these packed cities, each culture morphing with the advancing society. The first skyscrapers are being constructed: a new age of wizard towers full of trained arcane workers.

On these magical networks, Adventure Boards have popped up: services that connect clients with adventuring mercenaries. Adventuring has become a growing hobby for bored work wizards. The Ley networks let them connect with clients quickly, and the ABs supply waygates that get adventurers to and from their destinations just as fast. Each play session is one weekend of adventuring; between sessions, a week passes in which your character goes to work and lives their normal life.

18 notes

Text

The allure of speed in technology development is a siren’s call that has led many innovators astray. “Move fast and break things” is a mantra that has driven the tech industry for years, but when applied to artificial intelligence, it becomes a perilous gamble. The rapid iteration and deployment of AI systems without thorough vetting can lead to catastrophic consequences, akin to releasing a flawed algorithm into the wild without a safety net.

AI systems, by their very nature, are complex and opaque. Many operate on layers of artificial neural networks loosely modeled on the brain’s synaptic connections, yet they lack the innate understanding and ethical reasoning that guide human decision-making. The haste to deploy AI without comprehensive testing is akin to launching a spacecraft without ensuring the integrity of its navigation systems. Error is not just probable; it is inevitable.

The pitfalls of AI are numerous and multifaceted. Bias in training data can lead to discriminatory outcomes, while lack of transparency in decision-making processes can result in unaccountable systems. These issues are compounded by the “black box” nature of many AI models, where even the developers cannot fully explain how inputs are transformed into outputs. This opacity is not merely a technical challenge but an ethical one, as it obscures accountability and undermines trust.

To avoid these pitfalls, a paradigm shift is necessary. The development of AI must prioritize robustness over speed, with a focus on rigorous testing and validation. This involves not only technical assessments but also ethical evaluations, ensuring that AI systems align with societal values and norms. Techniques such as adversarial testing, where AI models are subjected to challenging scenarios to identify weaknesses, are crucial. Additionally, the implementation of explainable AI (XAI) can demystify the decision-making processes, providing clarity and accountability.
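As a minimal illustration of adversarial testing, the Python sketch below assumes a toy linear classifier and checks whether a small, bounded change to an input can flip its decision. The weights, inputs, `epsilon` bound, and function names are all hypothetical and chosen only for illustration. Real adversarial testing targets far larger models with more sophisticated search methods.

```python
from itertools import product


def classify(features, weights=(0.8, -0.5), bias=-0.1):
    """Toy linear classifier (hypothetical weights): returns 1 or 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def find_adversarial(features, epsilon=0.1):
    """Shift every feature by +/- epsilon in each combination and return
    the first perturbed input whose label differs from the original,
    or None if no such input is found. For a linear model the extreme
    scores inside the +/- epsilon box occur at its corners, so checking
    the corners is sufficient here."""
    original = classify(features)
    for signs in product((-1, 1), repeat=len(features)):
        candidate = tuple(x + s * epsilon for x, s in zip(features, signs))
        if classify(candidate) != original:
            return candidate
    return None


robust_input = (1.0, 0.2)   # far from the decision boundary
fragile_input = (0.3, 0.2)  # close to the decision boundary

print(find_adversarial(robust_input))   # None: no small change flips it
print(find_adversarial(fragile_input))  # a nearby input with a different label
```

An input that yields a flipped label under a tiny perturbation marks a region where the model's decision is brittle, which is the kind of weakness such testing is meant to surface before deployment.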