#computer-graphics

Text

HEY, YOU!

DO YOU LIKE OLD COMPUTER GRAPHICS?!

did you like ANY of these photos? would you like to see HUNDREDS MORE OF THEM?! with THOUSANDS OF UNIQUE TEXTURES?! ALL FROM FUCKING DECEMBER 15TH, YEAR 2000?!

NOW YOU CAN!!!

THERE'S ALSO A BUNCH OF CLIPART FROM 1997 IN .WMF FORMAT. I DON'T KNOW HOW TO USE THAT, BUT YOU MIGHT!

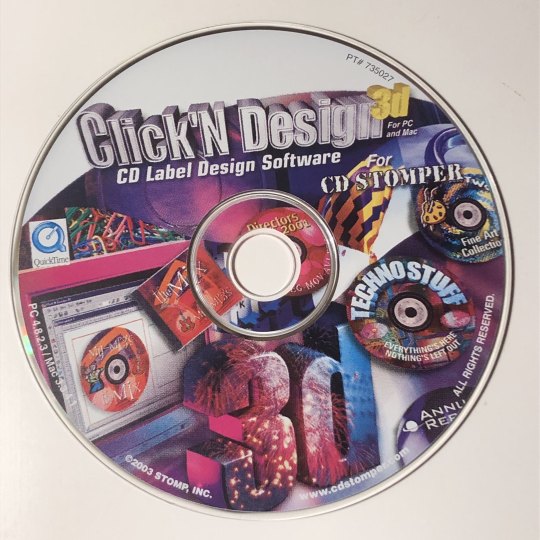

STILL not convinced???? LOOK AT THE DISC THEY CAME FROM!

WHAT THE HELL IS THAT!??!?!?!?!?! DON'T WAIT! GO LOOK AT THOSE JPEGS... TODAY!

30K notes

Text

A framework for solving parabolic partial differential equations

New Post has been published on https://thedigitalinsider.com/a-framework-for-solving-parabolic-partial-differential-equations/

Computer graphics and geometry processing research provide the tools needed to simulate physical phenomena like fire and flames, aiding the creation of visual effects in video games and movies as well as the fabrication of complex geometric shapes using tools like 3D printing.

Under the hood, mathematical problems called partial differential equations (PDEs) model these natural processes. Among the many PDEs used in physics and computer graphics, a class called second-order parabolic PDEs explains how phenomena can become smooth over time. The most famous example in this class is the heat equation, which predicts how heat diffuses along a surface or in a volume over time.

Researchers in geometry processing have designed numerous algorithms to solve these problems on curved surfaces, but their methods often apply only to linear problems or to a single PDE. A more general approach by researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) tackles a general class of these potentially nonlinear problems.

In a paper recently published in the Transactions on Graphics journal and presented at the SIGGRAPH conference, they describe an algorithm that solves different nonlinear parabolic PDEs on triangle meshes by splitting them into three simpler equations that can be solved with techniques graphics researchers already have in their software toolkit. This framework can help better analyze shapes and model complex dynamical processes.

“We provide a recipe: If you want to numerically solve a second-order parabolic PDE, you can follow a set of three steps,” says lead author Leticia Mattos Da Silva SM ’23, an MIT PhD student in electrical engineering and computer science (EECS) and CSAIL affiliate. “For each of the steps in this approach, you’re solving a simpler problem using simpler tools from geometry processing, but at the end, you get a solution to the more challenging second-order parabolic PDE.”

To accomplish this, Mattos Da Silva and her coauthors used Strang splitting, a technique that allows geometry processing researchers to break the PDE down into problems they know how to solve efficiently.

First, their algorithm advances a solution forward in time by solving the heat equation (also called the “diffusion equation”), which models how heat from a source spreads over a shape. Picture using a blowtorch to warm up a metal plate: this equation describes how heat from that spot would diffuse over it.

This step can be completed easily with linear algebra.
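To make "linear algebra" concrete, here is a minimal sketch of one implicit time step of the heat equation. It assumes a chain of nodes with unit edge weights in place of a triangle mesh, and the function name `heat_step` is hypothetical; none of this comes from the paper. The point is only that advancing heat diffusion amounts to solving one linear system, (I + dt·L) x = u, where L is a Laplacian matrix.

```python
def heat_step(u, dt):
    """One implicit-Euler step of the heat equation on a path graph.

    Solves (I + dt * L) x = u, where L is the graph Laplacian of a chain
    of len(u) >= 2 nodes with unit edge weights (an assumption standing
    in for a mesh Laplacian).
    """
    n = len(u)
    off = -dt  # every off-diagonal entry of I + dt * L
    # End nodes have one neighbor, interior nodes have two.
    diag = [1.0 + dt * (1 if i in (0, n - 1) else 2) for i in range(n)]

    # Thomas algorithm for tridiagonal systems: forward elimination...
    c = [0.0] * n
    d = [0.0] * n
    c[0] = off / diag[0]
    d[0] = u[0] / diag[0]
    for i in range(1, n):
        m = diag[i] - off * c[i - 1]
        c[i] = off / m
        d[i] = (u[i] - off * d[i - 1]) / m

    # ...then back substitution.
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x


# A hot spot in the middle of a cold bar spreads out symmetrically.
u0 = [0.0, 0.0, 10.0, 0.0, 0.0]
u1 = heat_step(u0, dt=0.5)
```

In this sketch the total amount of heat, `sum(u1)`, stays equal to `sum(u0)`, while the peak value drops as heat flows to the neighboring nodes.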

Now, imagine that the parabolic PDE has additional nonlinear behaviors that are not described by the spread of heat. This is where the second step of the algorithm comes in: it accounts for the nonlinear piece by solving a Hamilton-Jacobi (HJ) equation, a first-order nonlinear PDE.

While generic HJ equations can be hard to solve, Mattos Da Silva and coauthors prove that their splitting method applied to many important PDEs yields an HJ equation that can be solved via convex optimization algorithms. Convex optimization is a standard tool for which researchers in geometry processing already have efficient and reliable software. In the final step, the algorithm solves the heat equation once more, which completes one time step of the more complex second-order parabolic PDE.
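The three-step pattern can be sketched on a toy problem. The example below is not the paper's solver: it swaps the PDE for a scalar equation, u' = -u - u², where the linear part (-u) stands in for the heat step and the nonlinear part (-u²) stands in for the Hamilton-Jacobi step. All function names are hypothetical. In the usual form of Strang splitting, the two outer steps each cover half of the time step.

```python
import math


def flow_linear(u, t):
    """Exact flow of u' = -u (stand-in for the linear heat step)."""
    return u * math.exp(-t)


def flow_nonlinear(u, t):
    """Exact flow of u' = -u**2 (stand-in for the nonlinear step)."""
    return u / (1.0 + u * t)


def strang_step(u, dt):
    """Half linear step, full nonlinear step, half linear step."""
    u = flow_linear(u, dt / 2)
    u = flow_nonlinear(u, dt)
    return flow_linear(u, dt / 2)


def solve(u0, t_end, steps):
    """Advance u0 to time t_end with `steps` Strang-split steps."""
    u, dt = u0, t_end / steps
    for _ in range(steps):
        u = strang_step(u, dt)
    return u


def exact(u0, t):
    """Closed-form solution of u' = -u - u**2 for u0 > 0."""
    return 1.0 / ((1.0 / u0 + 1.0) * math.exp(t) - 1.0)


# Halving the step size should cut the error by roughly a factor of four,
# the signature of a second-order method.
err_coarse = abs(solve(1.0, 1.0, 10) - exact(1.0, 1.0))
err_fine = abs(solve(1.0, 1.0, 20) - exact(1.0, 1.0))
```

Each sub-step here is solved exactly, so the only error left is the splitting error itself. That mirrors the appeal of the approach described above: each piece is a problem with a known, reliable solver.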

Among other applications, the framework could help simulate fire and flames more efficiently. “There’s a huge pipeline that creates a video with flames being simulated, but at the heart of it is a PDE solver,” says Mattos Da Silva. For these pipelines, an essential step is solving the G-equation, a nonlinear parabolic PDE that models the front propagation of the flame and can be solved using the researchers’ framework.

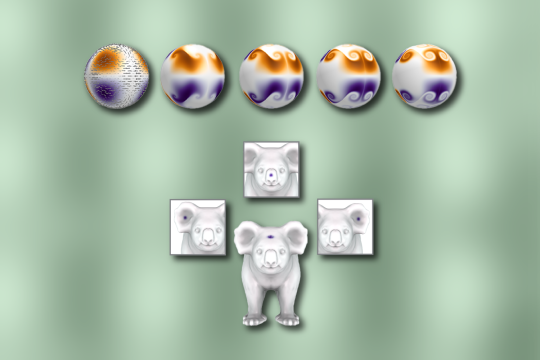

The team’s algorithm can also solve the diffusion equation in the logarithmic domain, where it becomes nonlinear. Senior author Justin Solomon, associate professor of EECS and leader of the CSAIL Geometric Data Processing Group, previously developed a state-of-the-art technique for optimal transport that requires taking the logarithm of the result of heat diffusion. Mattos Da Silva’s framework provided more reliable computations by doing diffusion directly in the logarithmic domain. This enabled a more stable way to, for example, find a geometric notion of average among distributions on surface meshes like a model of a koala.
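A short derivation shows why diffusion turns nonlinear in the logarithmic domain. The symbols are notation chosen here, not taken from the paper: u is a positive solution of the heat equation and w = log u is its logarithm.

```latex
\begin{aligned}
\partial_t u &= \Delta u, \qquad w = \log u \quad (u > 0) \\
\nabla u &= u\,\nabla w, \qquad
\Delta u = u\left(\Delta w + \lvert\nabla w\rvert^{2}\right), \qquad
\partial_t u = u\,\partial_t w \\
\Rightarrow\quad \partial_t w &= \Delta w + \lvert\nabla w\rvert^{2}
\end{aligned}
```

The result is a linear diffusion term plus a first-order nonlinear term in the gradient, which is the kind of split into a heat piece and a Hamilton-Jacobi piece that the article describes.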

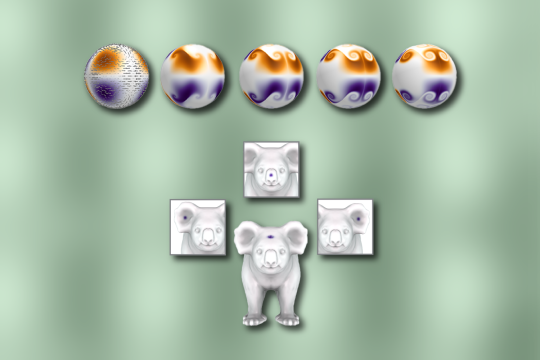

Even though their framework focuses on general, nonlinear problems, it can also be used to solve linear PDEs. For instance, the method solves the Fokker-Planck equation, where heat diffuses in a linear way, but there are additional terms that drift in the same direction heat is spreading. In a straightforward application, the approach modeled how swirls would evolve over the surface of a triangulated sphere. The result resembles purple-and-brown latte art.

The researchers note that this project is a starting point for tackling the nonlinearity in other PDEs that appear in graphics and geometry processing head-on. For example, they focused on static surfaces but would like to apply their work to moving ones, too. Moreover, their framework solves problems involving a single parabolic PDE, but the team would also like to tackle problems involving coupled parabolic PDEs. These types of problems arise in biology and chemistry, where the equation describing the evolution of each agent in a mixture, for example, is linked to the others’ equations.

Mattos Da Silva and Solomon wrote the paper with Oded Stein, assistant professor at the University of Southern California’s Viterbi School of Engineering. Their work was supported, in part, by an MIT Schwarzman College of Computing Fellowship funded by Google, a MathWorks Fellowship, the Swiss National Science Foundation, the U.S. Army Research Office, the U.S. Air Force Office of Scientific Research, the U.S. National Science Foundation, MIT-IBM Watson AI Lab, the Toyota-CSAIL Joint Research Center, Adobe Systems, and Google Research.

#3d#3D printing#Accounts#adobe#affiliate#agent#ai#air#air force#algorithm#Algorithms#applications#approach#Art#artificial#Artificial Intelligence#author#Biology#california#chemistry#college#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#computer-graphics#computing#conference#data#data processing

0 notes

Text

💿✮.𖥔 ݁Cybercore Stamps✩°。⋆⸜ 🎧

❤︎ Like + Reblog if use ⊹ Template

❤︎ "I'm so sick of windows, I need something physical Hah, tough luck in a digital world"

#my stamps#my creation#cybercore#cyber#digital#digital girl#internet angel#old internet#internetcore#windows#webcore#internet#y2k#y2k aesthetic#pictochat#2000's#old computers#decoration#cute#stamps#web graphics#page decor#rentry graphics#rentry resources#rentry decor#deviantart stamps#da stamps#blog decor#old web#editblr

4K notes

Photo

Kenneth Snelson Chain Bridge Bodies (1990)

#art#kenneth snelson#graphic design#3d#render#computer generated#cgi#artwork#artist#90s#1990s#1990#u

3K notes

Text

✧ cybercore pngs

f2u! reblogs very appreciated! ↳ self indulgent!!

i was abt to do light blue pngs but i'm gonna first post these and then i'll do light blue pngs.. LOL

#my resources ✧#graphics#rentry#rentry graphics#sntry#sntry graphics#bundlrs#bundlrs graphics#layouts#tumblr layouts#stellular#pngs#random pngs#png#render#transparent pngs#aesthetic pngs#transparent png#transparents#frames#cybercore#frutiger aero#frutiger aesthetic#y2k#neo y2k#y2kcore#cyber y2k#cyber#computer#laptop

7K notes

Text

robot blinkies. free will is a wonderful thing

#graphics#web graphics#carrd resources#carrd graphics#web resources#old web graphics#pixel graphics#neocities#blinkies#page decor#old web#internetcore#technophilia#techum#technology#robot kisser#robotfucker#computer kisser#tbh#yeah#real

2K notes

Text

classic windows media player frames. unedited at https://wmpskinsarchive.neocities.org/ :)

#minez#webcore#computer window#music#black#blue#grey#gray#transparent#green#rentry resources#carrd resources#graphic resources#skeumorphism#old web#frames

2K notes

Note

Can I have some sentient ai/robot graphics?

Sentient ai, robot, and other adjacent graphics 🤖

And a couple other posts I found with cool related stuff:

post 1 post 2

credits for the AM blinkie and favicon: @heavenlyantennae

#rentry graphics#web graphics#carrd graphics#web decor#carrd resources#rentry resources#strawpage#rentry decor#stamps#blinkies#buttons#favicon#favicons#gifs#robot#robots#17776#17776 football#ihnmaims#i have no mouth and i must scream#AM#allied mastercomputer#portal#glados#i robot#tech#computer

2K notes

Text

#gif warning#tw flickering#2000s nostalgia#2000s aesthetic#2000s blog#2000s tech#techcore#tech aesthetic#tech art#old tech#y2k#y2kcore#y2k aesthetic#y2k art#2000s internet#2000s web#web archive#internet archive#webcore#old internet#nostalgiacore#web graphics#old computers#glittercore#glitter gif#glitter graphics#blingee

2K notes

Text

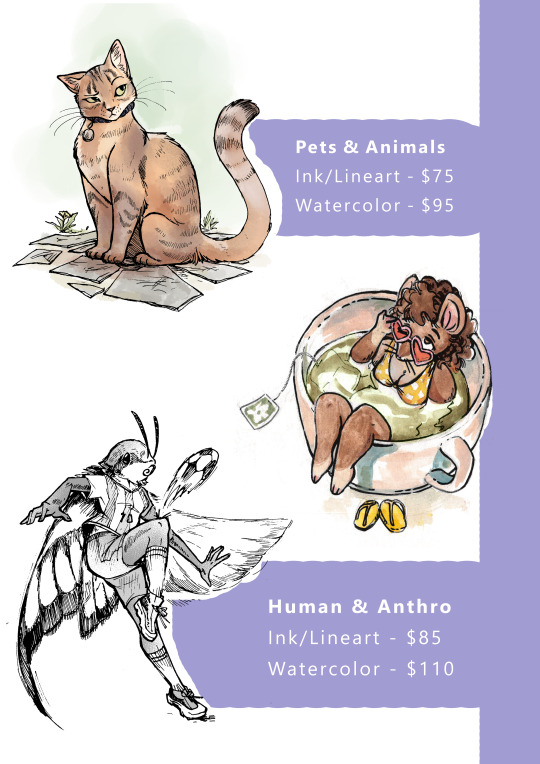

OPENING COMMISSION SLOTS

Opening 10 Commission slots for traditional (ink or watercolor/gouache) art! If you are looking for an original physical piece and have specific size requirements let me know, otherwise they will be on small 5.5x8.5 sheets. Please note I will not be able to do major revisions due to the medium. Shipping of original piece is optional but cost of shipping NOT included in these listed prices. Payment via paypal or venmo accepted (I will take a deposit of 1/2 of the original price once you approve the sketch, and 1/2 upon completion, full upfront is also fine.)

Backgrounds are ok but will increase price (likely ~$150-175 depending on complexity). Multiple characters are ok but will be charged each as individual pieces.

Check out some past finished commissions here!

Please DM me for questions or more info or contact me at [email protected]

#Please excuse the graphic design#I'm away from my personal computer and using a random free program.#commission#art commisions#art comms open#please don't send asks because when I answer privately there's no way I can view them after

1K notes

Text

RANDOM/SEMI RANDOM COLLECTION OF TILED BACKGROUNDS FOR YOUR WEBSITE / BLOG <3

(ko-fi)

#webcore#old web graphics#carrd resources#rentry decor#rentry graphics#web graphics#neocities#carrd graphics#tiled background#tile design#tiled floor#repeating background#background#bg#tiled#web design#carrd inspo#early web#old web#web decor#web resources#webdesign#web development#techcore#old tech#computer#world wide web#open directories#girl blogger#resource

2K notes

Text

Creating bespoke programming languages for efficient visual AI systems

New Post has been published on https://thedigitalinsider.com/creating-bespoke-programming-languages-for-efficient-visual-ai-systems/

A single photograph offers glimpses into the creator’s world — their interests and feelings about a subject or space. But what about creators behind the technologies that help to make those images possible?

MIT Department of Electrical Engineering and Computer Science Associate Professor Jonathan Ragan-Kelley is one such person, who has designed everything from tools for visual effects in movies to the Halide programming language that’s widely used in industry for photo editing and processing. As a researcher with the MIT-IBM Watson AI Lab and the Computer Science and Artificial Intelligence Laboratory, Ragan-Kelley specializes in high-performance, domain-specific programming languages and machine learning that enable 2D and 3D graphics, visual effects, and computational photography.

“The single biggest thrust through a lot of our research is developing new programming languages that make it easier to write programs that run really efficiently on the increasingly complex hardware that is in your computer today,” says Ragan-Kelley. “If we want to keep increasing the computational power we can actually exploit for real applications — from graphics and visual computing to AI — we need to change how we program.”

Finding a middle ground

Over the last two decades, chip designers and programming engineers have witnessed a slowing of Moore’s law and a marked shift from general-purpose computing on CPUs to more varied and specialized computing and processing units like GPUs and accelerators. With this transition comes a trade-off: giving up the ability to run general-purpose code somewhat slowly on CPUs in exchange for faster, more efficient hardware that requires code to be heavily adapted to it and mapped to it with tailored programs and compilers. Newer hardware with improved programming can better support applications like high-bandwidth cellular radio interfaces, decoding highly compressed videos for streaming, and graphics and video processing on power-constrained cellphone cameras, to name a few.

“Our work is largely about unlocking the power of the best hardware we can build to deliver as much computational performance and efficiency as possible for these kinds of applications in ways that traditional programming languages don’t.”

To accomplish this, Ragan-Kelley breaks his work down into two directions. First, he sacrifices generality to capture the structure of particular and important computational problems and exploits that for better computing efficiency. This can be seen in the image-processing language Halide, which he co-developed and which has helped to transform the image editing industry in programs like Photoshop. Further, because it is specially designed to quickly handle dense, regular arrays of numbers (tensors), it also works well for neural network computations. The second focus targets automation, specifically how compilers map programs to hardware. One such project with the MIT-IBM Watson AI Lab leverages Exo, a language developed in Ragan-Kelley’s group.

Over the years, researchers have worked doggedly to automate coding with compilers, which can be a black box; however, there’s still a large need for explicit control and tuning by performance engineers. Ragan-Kelley and his group are developing methods that straddle both approaches, balancing trade-offs to achieve effective and resource-efficient programming. At the core of many high-performance programs like video game engines or cellphone camera processing are state-of-the-art systems that are largely hand-optimized by human experts in low-level, detailed languages like C, C++, and assembly. Here, engineers make specific choices about how the program will run on the hardware.

Ragan-Kelley notes that programmers can opt for “very painstaking, very unproductive, and very unsafe low-level code,” which could introduce bugs, or “more safe, more productive, higher-level programming interfaces,” that lack the ability to make fine adjustments in a compiler about how the program is run, and usually deliver lower performance. So, his team is trying to find a middle ground. “We’re trying to figure out how to provide control for the key issues that human performance engineers want to be able to control,” says Ragan-Kelley, “so, we’re trying to build a new class of languages that we call user-schedulable languages that give safer and higher-level handles to control what the compiler does or control how the program is optimized.”
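The idea of keeping what a program computes separate from how it is run can be illustrated with a toy example. This is plain Python, not Halide or Exo code, and every name in it is hypothetical. Both functions compute the same two-pass blur; they differ only in the "schedule", that is, whether the intermediate result is stored in a buffer or recomputed on demand.

```python
def blur3(xs):
    """Algorithm: 3-tap box filter with clamped borders."""
    n = len(xs)
    return [(xs[max(i - 1, 0)] + xs[i] + xs[min(i + 1, n - 1)]) / 3
            for i in range(n)]


def two_pass_materialized(xs):
    """Schedule A: compute the whole first pass, store it, then blur again."""
    tmp = blur3(xs)  # extra memory, but no repeated work
    return blur3(tmp)


def two_pass_fused(xs):
    """Schedule B: recompute first-pass values on demand, no buffer."""
    n = len(xs)

    def first(i):
        i = min(max(i, 0), n - 1)  # clamp, as the second pass would
        return (xs[max(i - 1, 0)] + xs[i] + xs[min(i + 1, n - 1)]) / 3

    # Less memory, but each first-pass value is computed up to three times.
    return [(first(i - 1) + first(i) + first(i + 1)) / 3 for i in range(n)]


signal = [0.0, 3.0, 6.0, 9.0, 12.0]
result_a = two_pass_materialized(signal)
result_b = two_pass_fused(signal)
```

Both schedules return identical results. Which one runs faster depends on the hardware's balance of memory bandwidth and compute, and that is the kind of decision a user-schedulable language is meant to expose without rewriting the algorithm.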

Unlocking hardware: high-level and underserved ways

Ragan-Kelley and his research group are tackling this through two lines of work. One applies machine learning and modern AI techniques to automatically generate optimized schedules, an interface to the compiler, to achieve better compiler performance. The other uses “exocompilation,” which he’s working on with the lab. He describes this method as a way to “turn the compiler inside-out,” with a skeleton of a compiler with controls for human guidance and customization. In addition, his team can add their bespoke schedulers on top, which can help target specialized hardware like machine-learning accelerators from IBM Research. Applications for this work span the gamut: computer vision, object recognition, speech synthesis, image synthesis, speech recognition, text generation (large language models), etc.

A big-picture project of his with the lab takes this a step further, approaching the work through a systems lens. In work led by his advisee and lab intern William Brandon, in collaboration with lab research scientist Rameswar Panda, Ragan-Kelley’s team is rethinking large language models (LLMs), finding ways to change the computation and the model’s programming architecture slightly so that the transformer-based models can run more efficiently on AI hardware without sacrificing accuracy. Their work, Ragan-Kelley says, deviates from the standard ways of thinking in significant ways with potentially large payoffs for cutting costs, improving capabilities, and/or shrinking the LLM to require less memory and run on smaller computers.

It’s this more avant-garde thinking, when it comes to computation efficiency and hardware, that Ragan-Kelley excels at and sees value in, especially in the long term. “I think there are areas [of research] that need to be pursued, but are well-established, or obvious, or are conventional-wisdom enough that lots of people either are already or will pursue them,” he says. “We try to find the ideas that have both large leverage to practically impact the world, and at the same time, are things that wouldn’t necessarily happen, or I think are being underserved relative to their potential by the rest of the community.”

The course that he now teaches, 6.106 (Software Performance Engineering), exemplifies this. About 15 years ago, there was a shift from single to multiple processors in a device that caused many academic programs to begin teaching parallelism. But, as Ragan-Kelley explains, MIT realized the importance of students understanding not only parallelism but also optimizing memory and using specialized hardware to achieve the best performance possible.

“By changing how we program, we can unlock the computational potential of new machines, and make it possible for people to continue to rapidly develop new applications and new ideas that are able to exploit that ever-more complicated and challenging hardware.”

#3d#accelerators#ai#AI systems#applications#architecture#Arrays#Art#artificial#Artificial Intelligence#automation#black box#box#bugs#Cameras#Capture#change#code#coding#Collaboration#Community#computation#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#Computer vision#computer-graphics#computers#computing

0 notes

Note

Can I request some computer themed turquoise and lime green dividers, if it's okay?

Hi^^ anonnie! I did some 90's computer stuff. Hope it works for you!

Retro-Style Desktop

Hex code: #79ece0

Hex code: #067a7b

Hex code: #81B622

Hex code: #d1ff7e

Like/rb if use // No need to credit me

#omi.resources#dividers#line dividers#mini banners#banners#color palettes#line divider#mini banner#color palette#graphics#colors#green#green aesthetic#green dividers#green banners#teal aesthetic#turquoise#lime green#90's aesthetic#computer#desktop#retro aesthetic#gif

1K notes

Photo

Apple II ASCII art animated horse

1K notes

Text

Look, there's a lot to be said about the contemporary gaming industry's preoccupation with graphics performance, but "no video game needs to run at higher than thirty frames per second" – which is something I've seen come up in a couple of recent trending posts – isn't a terribly supportable assertion.

The notion that sixty frames per second ought to be a baseline performance target isn't a modern one. Most NES games ran at sixty frames per second. This was in 1983 – we're talking about a system with two kilobytes of RAM, and even then, sixty frames per second was considered the gold standard. There's a good reason for that, too: if you go much lower, rapidly moving backgrounds start to give a lot of folks eye strain and vertigo. It's genuinely an accessibility problem.

The idea that thirty frames per second is acceptable didn't gain currency until first-generation 3D consoles like the N64, as a compromise to allow more complex character models and environments within the limited capabilities of early 3D GPUs. If you're characterising the 60fps standard as the product of studios pushing shiny graphics over good technical design, historically speaking you've got it precisely backwards: it's actually the 30fps standard that's the product of prioritising flash and spectacle over user experience.
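For a sense of scale, here's the arithmetic behind those numbers, as a quick sketch. The function name and the 480 pixels-per-second scroll speed are made-up examples, not figures from any particular game.

```python
def frame_time_ms(fps):
    """Time budget for a single frame, in milliseconds."""
    return 1000.0 / fps


def pixels_per_frame(scroll_speed_px_per_s, fps):
    """How far a scrolling background jumps between consecutive frames."""
    return scroll_speed_px_per_s / fps


budget_60 = frame_time_ms(60)  # about 16.7 ms per frame
budget_30 = frame_time_ms(30)  # about 33.3 ms per frame

# Hypothetical background scrolling at 480 pixels per second:
jump_60 = pixels_per_frame(480, 60)  # 8 pixels between frames
jump_30 = pixels_per_frame(480, 30)  # 16 pixels between frames
```

Halving the frame rate doubles how far a moving background jumps from one frame to the next, which is why fast scrolling looks so much choppier at thirty.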

4K notes

Text

inside every man are two wolves and one of them is a tech geek

#graphics#web graphics#carrd graphics#carrd resources#pixel graphics#web resources#neocities#old web graphics#blinkies#rentry graphics#old web#old internet#webcore#technology#tech#computer#techcore#emulators#vintage computing#playstation#stamps#web stamps

1K notes
