#embedded engineer

Text

OPT4048 - a "tri-stimulus" light sensor 🔴🟢🔵

We were chatting in the forums with someone when the OPT4048 (https://www.digikey.com/en/products/detail/texas-instruments/OPT4048DTSR/21298553) came up. It's an interesting light sensor that does color sensing, but with photodiodes whose responses are matched to the CIE XYZ color space. This makes it particularly good for color-light tuning. We made a cute breakout board for this chip. Fun fact: it's 3.3V power but 5V logic friendly.
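
Because the sensor reports light in CIE XYZ terms, a common next step is turning those tristimulus values into chromaticity coordinates and a color temperature. Here is a minimal sketch, assuming you already have calibrated X, Y, Z values (the conversion from the OPT4048's raw channel counts is sensor-specific and not shown here). It uses the standard CIE 1931 x, y projection and McCamy's cubic approximation for correlated color temperature (CCT); the function names are our own.

```python
def xyz_to_chromaticity(X: float, Y: float, Z: float) -> tuple[float, float]:
    """Project CIE XYZ tristimulus values onto the CIE 1931 (x, y) plane."""
    total = X + Y + Z
    if total <= 0:
        raise ValueError("X + Y + Z must be positive (no light measured?)")
    return X / total, Y / total


def mccamy_cct(x: float, y: float) -> float:
    """Approximate correlated color temperature in kelvin (McCamy, 1992).

    Only meaningful for near-white light, roughly 2000 K to 12500 K.
    """
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


# D65 daylight white point, normalised so that Y = 100.
x, y = xyz_to_chromaticity(95.047, 100.0, 108.883)
print(f"x={x:.4f} y={y:.4f} CCT~{mccamy_cct(x, y):.0f} K")
```

McCamy's formula is only a curve fit near the white-light locus, so treat its output as an estimate, and as meaningless for strongly saturated colored light.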

#opt4048#lightsensor#colortracking#tristimulus#ciexyz#colorsensing#texasinstruments#electronics#sensor#tech#hardware#maker#diy#engineering#embedded#iot#innovation#breakoutboard#3dprinting#automation#led#rgb#technology#smartlighting#devboard#optoelectronics#programming#hardwarehacking#electronicsprojects#5vlogic

85 notes

Text

regarding the recent RP2350 OTP hack contest, with a collection of voltage glitches and the classic "well have you tried looking at it" attack. featuring cats (p. 22)

#security#cybersecurity#embedded engineering#electrical engineering#catgirl hackers#i feel i should mention IOA's attack applies to muuuuch more than the rp2350#props to Raspberry Pi for encouraging this type of research!#the silence of the fans

7 notes

Text

Wow fuck multithreading

142 notes

Text

If you fuck up in JS you get a dumb "couldn't read property of undefined".

If you fuck up in Cpp you get a cool glitch effect from reading bad sections of your memory for free!

12 notes

Text

So excited to have my hands on the first proper prototype of my NuaCam project. It's crazy to see just how far I have come in a few short months, growing this from a simple idea to a functional device. The goal is to build a camera that uses AI stylisation to capture reality in a new light. Now I can focus on improving the AI side to try to create exciting styles to use. The first prototype was costing me lots of hours in debugging due to loose wires, so I bit the bullet and designed this PCB to help me develop the software side.

#embedded#technology#electronic project#electronics#startup#camera#ai photography#hobby#pcb#pcb assembly#electronic engineering#project#nuacam

17 notes

Text

i've gotta program something soon...

#my posts#gets computer science degree#proceeds to do no programming for 4 months#i have like a few programming ideas but starting things is hard#i want to play with godot more it seems fun#i should probably also learn C++ for job reasons since i want to get into lower level/embedded stuff and only know C and rust#i guess the problem there is i'd have to like come up with a project to learn it with#preferably something lower level#maybe finally do that make your own file system project i skipped?#or like something with compression and parsing file formats#that's all pretty involved though so something like playing with godot would probably be better to get myself back in the programming mood#some sort of silly 2d game probably#i've had thoughts of making a silly little yume nikki-like for my friends to play that could be fun#or just any silly little game for just my friends idk#starting with gamemaker kinda made using other game engines a bit weird for me#so getting used to how more normal game engines work would probably be useful#i also want to mess with 3d games that seems fun too#but see the problem with all of this is that i suck at starting projects#and am even worse at actually finishing them#well i guess we'll see what happens?#also hi if you read all of this lol

17 notes

Text

My mentor for electronics and embedded systems is sooo good at teaching istg. I walked up to this man after his class and I asked him and he explained the basics in 15 minutes and when I thanked him he said “thank YOU for your queries” bro he almost made me tear up cuz Engineering professors/mentors have been real rough 🙌

6 notes

Text

SOMEONE GIVE ME A BLOODY INTERNSHIP 😭

#upcoming electronics engineer#proficient in embedded system in c. c++. c. python. and dsa in c++. and programming micro controllers like arduino uno and stm32.#PLEASE HIRE ME PAID OR NOT.

2 notes

Text

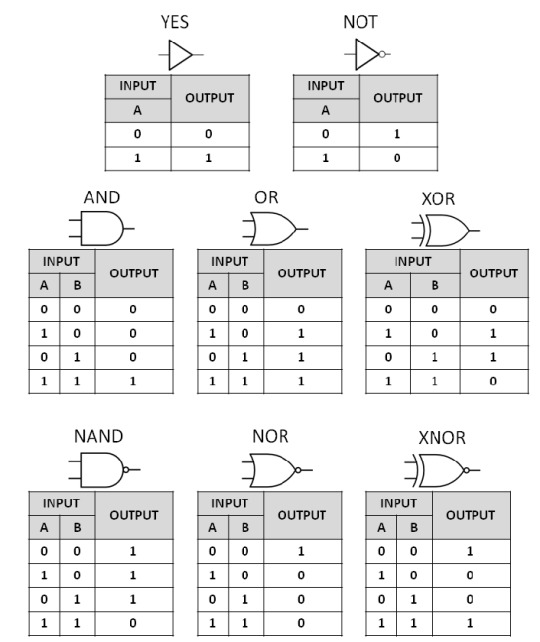

Why is digital electronics important for engineering?

Digital electronics is super important in engineering for a bunch of reasons—it's pretty much the backbone of modern technology. Digital electronics powers everything from smartphones and computers to cars and medical devices. Engineers across disciplines need to understand it to design, troubleshoot, or innovate with modern systems.

Digital tech allows for very large-scale integration (VLSI), meaning engineers can cram millions of logic gates into a single chip (like microprocessors or memory). It enables powerful, compact, and cost-effective designs.
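
To make the logic-gate idea concrete: NAND is a universal gate, meaning every other basic gate can be built from NAND gates alone. This small Python sketch (the function names are our own) builds NOT, AND, OR, NOR and XOR purely from a `nand` function and prints their truth table.

```python
from itertools import product


def nand(a: int, b: int) -> int:
    """The only primitive: output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1


def not_(a: int) -> int:
    return nand(a, a)                      # tie both inputs together


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))                # invert a NAND


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))          # De Morgan: a + b = !(!a * !b)


def nor(a: int, b: int) -> int:
    return not_(or_(a, b))


def xor(a: int, b: int) -> int:
    m = nand(a, b)                         # classic four-NAND XOR
    return nand(nand(a, m), nand(b, m))


print(" a b | AND OR XOR NOR")
for a, b in product((0, 1), repeat=2):
    print(f" {a} {b} |  {and_(a, b)}   {or_(a, b)}   {xor(a, b)}   {nor(a, b)}")
```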

#digital electronics#engine mechanism#electronics circuit#crank shaft#mechanical arms#mechanical engineering#mechanical parts#two stroke engine#technology#electronics#computing#and gate#digital chip#or gate#not gate#nand gate#nor gate#xor gate#electronic gate#embedded circuit design

4 notes

Text

Normally I just post about movies but I'm a software engineer by trade so I've got opinions on programming too.

Apparently it's a month of code or something because my dash is filled with people trying to learn Python. And that's great, because Python is a good language with a lot of support and job opportunities. I've just got some scattered thoughts that I thought I'd write down.

Python abstracts a number of useful concepts. That makes it easier to use, but it also means that if you don't understand the concepts, things might go wrong in ways you didn't expect. Memory management and pointer logic are so damn annoying, but you need to understand them. I learned these concepts by learning C++; hopefully there's an easier way these days.
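
Python hides raw pointers, but not reference semantics. A short sketch of two classic surprises that come from not knowing that Python names are references to shared objects (the function names are illustrative):

```python
# Names refer to objects; multiplying a list copies references, not objects.
grid = [[0] * 3] * 3               # three references to ONE inner list
grid[0][0] = 1
print(grid)                        # [[1, 0, 0], [1, 0, 0], [1, 0, 0]]

safe_grid = [[0] * 3 for _ in range(3)]   # three distinct inner lists
safe_grid[0][0] = 1
print(safe_grid)                   # [[1, 0, 0], [0, 0, 0], [0, 0, 0]]


def append_item(item, bucket=[]):  # the default list is created once, at def time
    bucket.append(item)
    return bucket


def append_item_fixed(item, bucket=None):
    if bucket is None:             # build a fresh list on every call instead
        bucket = []
    bucket.append(item)
    return bucket


first = append_item("a")
second = append_item("b")
print(first is second, second)     # True ['a', 'b']: both calls shared one list
```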

Data structures and algorithms are the bread and butter of any real work (and they're pretty much all that comes up in interviews), and they're language-agnostic. If you don't know how to traverse a linked list, how to use recursion, what a hash map is for, etc., then you don't really know how to program. You'll pretty much never need to implement any of them from scratch, but you should know when to use them; think of them like building blocks in a Lego set.
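
A compact Python sketch of the building blocks mentioned above: a hand-rolled singly linked list with iterative and recursive traversal, plus a dict (Python's built-in hash map) used to spot a repeated value in one pass. The `Node` class and helper names are hypothetical; in real code you would usually reach for a built-in list or `collections.deque` instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    next: Optional["Node"] = None


def from_list(values):
    """Build a singly linked list and return its head (None if empty)."""
    head = None
    for v in reversed(values):
        head = Node(v, head)
    return head


def traverse(head):
    """Iterative traversal: follow .next until we fall off the end."""
    out = []
    node = head
    while node is not None:
        out.append(node.value)
        node = node.next
    return out


def length(head):
    """Recursive version: an empty list has length 0, otherwise 1 + the rest."""
    return 0 if head is None else 1 + length(head.next)


def first_duplicate(head):
    """Hash-map use case: remember each value's index for O(1) average lookups.

    Returns (value, first_index, repeat_index), or None if nothing repeats.
    """
    seen = {}
    node, index = head, 0
    while node is not None:
        if node.value in seen:
            return node.value, seen[node.value], index
        seen[node.value] = index
        node, index = node.next, index + 1
    return None


head = from_list([3, 1, 4, 1, 5])
print(traverse(head), length(head), first_duplicate(head))
```

The recursive `length` will hit Python's default recursion limit (about 1000 frames) on long lists, which is one reason the iterative form is usually preferred in Python.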

Learning a new language is a hell of a lot easier after your first one. Going from Python to Java is mostly just syntax differences. Even "harder" languages like C++ mostly just mean more boilerplate while doing the same things. Learning a new spoken language is hard, but learning a new programming language is generally closer to learning some new slang or a new accent. Lists in Python are called Vectors in C++, just like how french fries are called chips in London. If you know all the underlying concepts that are common to most programming languages, then it's not a huge jump to a new one, at least if you're only doing the most common stuff. (You will get tripped up by some of the minor differences, though. Popping an item off of a stack in Python returns the element, but in C++ it returns nothing. You have to read it with top() first. Definitely had a program fail due to that issue.)
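
A minimal Python sketch of the stack behaviour described above, using a plain list as the stack: `pop()` removes the last element and returns it, and `stack[-1]` peeks without removing. For comparison, C++'s `std::stack::pop()` returns `void`, so there you read `top()` before popping, while Java's `Stack.pop()` does return the removed element.

```python
stack = []
stack.append("a")        # push
stack.append("b")
stack.append("c")

top = stack[-1]          # peek without removing (the equivalent of top())
popped = stack.pop()     # removes AND returns the last element
print(top, popped, stack)   # c c ['a', 'b']
```

Popping from an empty Python list raises `IndexError`, whereas calling `pop()` on an empty `std::stack` in C++ is undefined behaviour, so check before you pop in either language.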

The above is not true for new paradigms. Python, C++ and Java are all imperative languages. You move to something functional like Haskell and you need a completely different way of thinking. JavaScript (not in any way related to Java) has callbacks, and I still don't quite have a good handle on them. Hardware description languages like VHDL are largely concurrent; every line of code in a program runs at the same time! That's a new way of thinking.
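
To illustrate the shift, here is one small computation (the sum of the squares of the odd numbers) written imperatively and then in a functional style with `reduce`, `map` and `filter`, followed by a bare-bones callback. This is a Python sketch for comparison only: Haskell would express the functional version as a fold, and JavaScript callbacks are usually invoked asynchronously, whereas the hypothetical `fetch` here calls back immediately.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5]

# Imperative: spell out each step and mutate an accumulator.
total = 0
for n in numbers:
    if n % 2 == 1:
        total += n * n

# Functional: describe the result as a composition of pure functions.
odds = filter(lambda n: n % 2 == 1, numbers)
squares = map(lambda n: n * n, odds)
functional_total = reduce(lambda acc, sq: acc + sq, squares, 0)


# Callback style: hand a function to other code and let IT decide when to call it.
def fetch(value, on_done):
    result = value * 10      # stand-in for slow work (network, disk, timer...)
    on_done(result)


received = []
fetch(4, received.append)
print(total, functional_total, received)   # 35 35 [40]
```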

Python is stereotyped as a scripting language good only for glue programming or prototypes. It's excellent at those, but I've worked at a number of (successful) startups that were all Python on the backend. Python is robust enough and fast enough to be used for basically anything at this point, except maybe for embedded programming. If you do need the fastest speed possible, then you can still drop in some raw C++ for the places you need it (one place I worked at had one very important piece of code in C++ because even milliseconds mattered there, but everything else was Python). The speed differences between Python and C++ matter less these days, so you mostly only need C++ at the scale of the really big companies. It makes sense for Google to use C++ (and they use their own version of it to boot), but any company with fewer than 100 engineers is probably better off with Python in almost all cases. Honestly, though, the best programming language is the one you like and the one that you're good at.

Design patterns mostly don't matter. They really were only created to make up for language failures of C++; as Peter Norvig pointed out, 16 of the 23 patterns in the original Design Patterns book are invisible or much simpler in dynamic languages like Lisp. C++ was just really popular while also being kinda bad, so they were necessary. I don't think I've ever once thought about consciously using a design pattern since even before I graduated. Object-oriented design is mostly in the same place. You'll use classes because they're a useful way to structure things, but multiple inheritance and polymorphism and all the other terms you've learned really don't come into play too often, and when they do, you use the simplest possible form of them. Code should be simple and easy to understand, so make it as simple as possible. As far as inheritance goes, the most I'm willing to do is to have a class with abstract functions (i.e. classes where some functions are empty but are expected to be filled out by the child class), but even then there are usually good alternatives to this.
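
A sketch of the point about patterns and abstract functions, using the Strategy pattern as the example (our choice, not the author's): first as an abstract base class with a method the child class must fill in, then as the plain function argument it collapses to in a language with first-class functions. The discount classes and functions are hypothetical.

```python
from abc import ABC, abstractmethod


# Class-based Strategy, textbook style: an abstract method the child must fill in.
class Discount(ABC):
    @abstractmethod
    def apply(self, price: float) -> float:
        ...


class HalfOff(Discount):
    def apply(self, price: float) -> float:
        return price * 0.5


def checkout_with_class(price: float, discount: Discount) -> float:
    return discount.apply(price)


# With first-class functions, the "pattern" is just passing a function.
def half_off(price: float) -> float:
    return price * 0.5


def checkout(price: float, discount=lambda p: p) -> float:
    return discount(price)          # default strategy: no discount


print(checkout_with_class(80.0, HalfOff()), checkout(80.0, half_off))   # 40.0 40.0
```

Both versions behave the same; the function-based one simply needs no class hierarchy, which is the "good alternative" the paragraph alludes to.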

Related to the above: simple is best. Simple is elegant. If you solve a problem with 4000 lines of code using a bunch of esoteric data structures and language quirks, but someone else did it in 10, then I'll pick the 10. On the other hand, a one-liner function that requires a lot of unpacking, like a Python function with a bunch of nested lambdas, might be easier to read if you split it up a bit more. Time to read and understand the code is the most important metric, more important than runtime or memory use. You can optimize for the other two later if you have to, but simple has to prevail for the first pass, otherwise it's going to be hard for other people to understand. In fact, it'll be hard for you to understand too when you come back to it 3 months later without any context.
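
An illustration of the nested-lambda problem, using a hypothetical word-length task: the first version packs everything into one expression (and assigns a lambda to a name, which PEP 8 advises against), while the second splits the same logic into named steps. Both return the same result; only the time it takes to read them differs.

```python
# Dense one-liner: correct, but the reader has to unpack it from the inside out.
word_lengths_dense = lambda text: dict(map(lambda w: (w, len(w)), filter(str.isalpha, map(lambda w: w.strip(".,!?").lower(), text.split()))))


# Same logic, split into named steps.
def clean(word):
    """Lower-case a word and drop surrounding punctuation."""
    return word.strip(".,!?").lower()


def word_lengths_readable(text):
    words = (clean(w) for w in text.split())
    return {w: len(w) for w in words if w.isalpha()}


print(word_lengths_readable("Keep it simple, keep it clean!"))
# {'keep': 4, 'it': 2, 'simple': 6, 'clean': 5}
```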

Note that I've cut a few things for simplicity. For example: VHDL doesn't quite require every line to run at the same time, but it's still a major paradigm of the language that isn't present in most other languages.

Ok that was a lot to read. I guess I have more to say about programming than I thought. But the core ideas are: Python is pretty good, other languages don't need to be scary, learn your data structures and algorithms and above all keep your code simple and clean.

#programming#python#software engineering#java#java programming#c++#javascript#haskell#VHDL#hardware programming#embedded programming#month of code#design patterns#common lisp#google#data structures#algorithms#hash table#recursion#array#lists#vectors#vector#list#arrays#object oriented programming#functional programming#iterative programming#callbacks

20 notes

Text

Should I actually make meaningful posts? Like maybe a few series of computer science related topics?

I would have to contemplate format, but I would take suggestions for topics, try and compile learning resources, subtopics to learn and practice problems

#computer science#embedded systems#linux#linuxposting#arch linux#gcc#c language#programming#python#infosecawareness#cybersecurity#object oriented programming#arduino#raspberry pi#computer building#amd#assembly#code#software#software engineering#debugging#rtfm#documentation#learning#machine learning#artificial intelligence#cryptology#terminal#emacs#vscode

4 notes

Text

Solenoids go clicky-clacky 🔩🔊🤖

We're testing out an I2C-to-solenoid driver today. It uses an MCP23017 I/O expander. We like this particular chip for this usage because it has push-pull outputs, making it ideal for driving the gates of our N-channel FET and flyback diode driver stages. The A port connects to the 8 drivers, while the B port remains available for other GPIO purposes. For this demo, whenever we 'touch' a pin on port B to ground, the corresponding solenoid triggers, which provides an easy way to check speed and power usage.
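
A minimal sketch of the demo logic, written as host-side Python for something like a Linux single-board computer. Assumptions: the expander sits at the default address 0x20 (A2 to A0 tied low), IOCON.BANK is left at its power-on value of 0 so the register addresses below apply, port B uses the internal pull-ups so an untouched pin reads 1, and pin Bn maps to the solenoid on An, with a high output turning its FET on. The `bus` object only needs smbus2-style `read_byte_data`/`write_byte_data` methods; `SolenoidBoard` and `FakeBus` are hypothetical names, and the fake bus lets you exercise the logic without hardware.

```python
# MCP23017 register addresses with IOCON.BANK = 0 (the power-on default).
IODIRA, IODIRB = 0x00, 0x01   # direction: 1 = input, 0 = output
GPPUB = 0x0D                  # port B pull-up enable
GPIOB = 0x13                  # port B input levels
OLATA = 0x14                  # port A output latch
MCP23017_ADDR = 0x20          # assumed: A2..A0 strapped low


class SolenoidBoard:
    """Mirror grounded port B pins onto the port A solenoid drivers."""

    def __init__(self, bus, addr=MCP23017_ADDR):
        self.bus, self.addr = bus, addr
        bus.write_byte_data(addr, IODIRA, 0x00)  # port A: all outputs (to FET gates)
        bus.write_byte_data(addr, IODIRB, 0xFF)  # port B: all inputs
        bus.write_byte_data(addr, GPPUB, 0xFF)   # pull-ups so idle pins read 1
        bus.write_byte_data(addr, OLATA, 0x00)   # start with every solenoid off

    def poll(self):
        pins = self.bus.read_byte_data(self.addr, GPIOB)
        fire = ~pins & 0xFF   # a pin touched to ground reads 0, so fire its solenoid
        self.bus.write_byte_data(self.addr, OLATA, fire)
        return fire


class FakeBus:
    """Stand-in for real I2C hardware so the logic can run on a PC."""

    def __init__(self):
        self.regs = {}

    def read_byte_data(self, addr, reg):
        return self.regs.get((addr, reg), 0xFF)   # unset registers read as all-high

    def write_byte_data(self, addr, reg, value):
        self.regs[(addr, reg)] = value & 0xFF
```

On real hardware, an `smbus2.SMBus` instance (for example bus 1 on a Raspberry Pi) should drop in for `FakeBus`; calling `poll()` in a loop then mirrors port B onto the solenoids.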

#solenoid#electronics#i2c#mcp23017#hardwarehacking#maker#embedded#engineering#robotics#automation#circuitdesign#pcb#microcontroller#tech#hardware#diyelectronics#electricalengineering#firmware#innovation#prototype#electromechanical#diy#electronicsproject#smarthardware#tinkering#gpio#fet#flybackdiode#programming#linux

44 notes

Text

frontend development is so confusing to me sdggsfgdf how can people do this for a living

i could read a thousand pages of embedded manuals and understand everything but literally anything to do with frontend development leaves me with a hydra of questions, answering one question will make me question like five other things

#programming#rust#coding#software engineering#c#c++#software#html#css#js#ts#html5#html css#frontenddevelopment#front end development#embedded

4 notes

Text

Use this trick to Save time : HDL Simulation through defining clock

Why is this trick useful? Defining a clock in your simulation can save you time because you don't have to manually generate the clock signal in your simulation environment. Want to know how to define and force a clock to simulate your digital system? Normally, a defined clock is used to simulate a system with a clock input, but I am sharing this trick for driving input ports other than the clock. It will save you time in simulation because you don't need to force values onto the input ports every time. Let's recap what we did: we gave input A a clock frequency of 100. Then we halved that frequency to 50 and gave it to input B. Similarly, if we had a third input, we would halve the frequency again to 25 and give it to that input.
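
A quick model of why the halving trick works, in Python rather than an HDL: if input A toggles at some frequency and each following input toggles at half the previous rate, then sampling once per half-period of the fastest clock walks through every combination of input values, exactly like a binary counter with A as the least significant bit. The sketch assumes all forced clocks start low at time zero and that each frequency divides the first one evenly; `halving_clock_stimulus` is a hypothetical helper, not a simulator command.

```python
from itertools import islice


def halving_clock_stimulus(freqs):
    """Yield one tuple of input levels per half-period of the fastest clock.

    freqs are the forced clock frequencies, e.g. [100, 50, 25]. Every clock
    starts low, and input i toggles every freqs[0] // freqs[i] steps.
    """
    ratios = [freqs[0] // f for f in freqs]
    step = 0
    while True:
        yield tuple((step // r) % 2 for r in ratios)
        step += 1


patterns = list(islice(halving_clock_stimulus([100, 50, 25]), 8))
for a, b, c in patterns:
    print(a, b, c)
```

With three inputs at 100, 50 and 25, eight half-periods of the fastest clock cover all 2^3 = 8 input patterns, after which the sequence repeats.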

Subscribe to "Learn And Grow Community"

YouTube : https://www.youtube.com/@LearnAndGrowCommunity

LinkedIn Group : https://www.linkedin.com/groups/7478922/

Blog : https://LearnAndGrowCommunity.blogspot.com/

Facebook : https://www.facebook.com/JoinLearnAndGrowCommunity/

Twitter Handle : https://twitter.com/LNG_Community

DailyMotion : https://www.dailymotion.com/LearnAndGrowCommunity

Instagram Handle : https://www.instagram.com/LearnAndGrowCommunity/

Follow #LearnAndGrowCommunity

#HDL Design#Digital Design#Verilog#VHDL#FPGA#Digital Logic#Project#Simulation#Verification#Synthesis#B.Tech#Engineering#Tutorial#Embedded Systems#VLSI#Chip Design#Training Courses#Software#Windows#Certification#Career#Hardware Design#Circuit Design#Programming#Electronics Design#ASIC#Xilinx#Altera#Engineering Projects#Engineering Training Program

3 notes

Text

The voices won again. Over a week of my life into an impulse project. A game console that only has one knob, one colour and one game.

Aside from the fact that using one of two input methods on the console puts you at a disadvantage, at the very least it's a cool icebreaker.

Everything runs directly on the device: a Pi Pico microcontroller drives an OLED panel. The crate I used for drawing sprites also provides web simulator outputs, so the game's also on itch! Touch input is still on the roadmap.

2 notes

Text

Principal Engineer – Embedded Cybersecurity – GE Aerospace Research

Job title: Principal Engineer – Embedded Cybersecurity – GE Aerospace Research Company: GE Aerospace Job description: Job Description Summary At GE Aerospace Research, our team develops advanced embedded systems technology for the future… for GE Aerospace as well as with U.S. Government Agencies. You will collaborate with fellow researchers from a range… Expected salary: $130000 – 240000 per…

0 notes