#end-user computing

Text

Reimagining Infrastructure Operations Priorities for 2025.

Sanjay Kumar Mohindroo. skm.stayingalive.in. Transforming IT from Reactive Fixers to Strategic Innovators. Discover a fresh take on Infrastructure Operations Priorities for 2025, where IT shifts from reactive tasks to proactive innovation. A New Dawn in IT Operations: Setting the Stage for a Bold Future. The world of IT is changing. Today, infrastructure and operations…

#AI In IT#end-user computing#hybrid work#Infrastructure operations#IT innovation#IT resilience#IT Transformation#News#proactive IT#SaaS management#Sanjay Kumar Mohindroo#vendor relationships

0 notes

Text

very much a gen z moment on my part but finding out that screensavers were originally meant to prevent still images from being burnt onto idle crt monitors was a lightbulb moment for how I read 3d workers island

#bolo liveblogs#3d workers island#this is NOT my original observation lol but I saw someone else talking about#how the 3d workers island program the way it's described in-universe#(being played for hours on end like a tv program)#would inevitably burn the house the program centers around into the screens but *not* the mobile people#thereby immortalizing the image of amber's abuse and the concealment thereof into the user's physical computer#and I went. oh.

145 notes

Text

The disenshittified internet starts with loyal "user agents"

I'm in TARTU, ESTONIA! Overcoming the Enshittocene (TOMORROW, May 8, 6PM, Prima Vista Literary Festival keynote, University of Tartu Library, Struwe 1). AI, copyright and creative workers' labor rights (May 10, 8AM: Science Fiction Research Association talk, Institute of Foreign Languages and Cultures building, Lossi 3, lobby). A talk for hackers on seizing the means of computation (May 10, 3PM, University of Tartu Delta Centre, Narva 18, room 1037).

There's one overwhelmingly common mistake that people make about enshittification: assuming that the contagion is the result of the Great Forces of History, or that it is the inevitable end-point of any kind of for-profit online world.

In other words, they class enshittification as an ideological phenomenon, rather than as a material phenomenon. Corporate leaders have always felt the impulse to enshittify their offerings, shifting value from end users, business customers and their own workers to their shareholders. The decades of largely enshittification-free online services were not the product of corporate leaders with better ideas or purer hearts. Those years were the result of constraints on the mediocre sociopaths who would trade our wellbeing and happiness for their own, constraints that forced them to act better than they do today, even if they were not any better:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

Corporate leaders' moments of good leadership didn't come from morals, they came from fear. Fear that a competitor would take away a disgruntled customer or worker. Fear that a regulator would punish the company so severely that all gains from cheating would be wiped out. Fear that a rival technology – alternative clients, tracker blockers, third-party mods and plugins – would emerge that permanently severed the company's relationship with their customers. Fear that key workers in their impossible-to-replace workforce would leave for a job somewhere else rather than participate in the enshittification of the services they worked so hard to build:

https://pluralistic.net/2024/04/22/kargo-kult-kaptialism/#dont-buy-it

When those constraints melted away – thanks to decades of official tolerance for monopolies, which led to regulatory capture and victory over the tech workforce – the same mediocre sociopaths found themselves able to pursue their most enshittificatory impulses without fear.

The effects of this are all around us. In This Is Your Phone On Feminism, the great Maria Farrell describes how audiences at her lectures profess both love for their smartphones and mistrust for them. Farrell says, "We love our phones, but we do not trust them. And love without trust is the definition of an abusive relationship":

https://conversationalist.org/2019/09/13/feminism-explains-our-toxic-relationships-with-our-smartphones/

I (re)discovered this Farrell quote in a paper by Robin Berjon, who recently co-authored a magnificent paper with Farrell entitled "We Need to Rewild the Internet":

https://www.noemamag.com/we-need-to-rewild-the-internet/

The new Berjon paper is narrower in scope, but still packed with material examples of the way the internet goes wrong and how it can be put right. It's called "The Fiduciary Duties of User Agents":

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3827421

In "Fiduciary Duties," Berjon focuses on the technical term "user agent," which is how web browsers are described in formal standards documents. This notion of a "user agent" is a holdover from a more civilized age, when technologists tried to figure out how to build a new digital space where technology served users.

A web browser that's a "user agent" is a comforting thought. An agent's job is to serve you and your interests. When you tell it to fetch a web-page, your agent should figure out how to get that page, make sense of the code that's embedded in it, and render the page in a way that represents its best guess of how you'd like the page seen.

For example, the user agent might judge that you'd like it to block ads. More than half of all web users have installed ad-blockers, constituting the largest consumer boycott in human history:

https://doc.searls.com/2023/11/11/how-is-the-worlds-biggest-boycott-doing/
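To make that kind of judgment concrete, here's a minimal Python sketch of a user agent deciding which of a page's subresources to fetch, based on a blocklist its user picked. Everything in it is made up for illustration: the host names, `BLOCKED_HOSTS`, `is_blocked` and `requests_to_make` are hypothetical, and real ad-blockers work from far richer filter lists than a set of hostnames.

```python
from urllib.parse import urlparse

# Hypothetical blocklist, chosen by the user rather than the page's creator.
BLOCKED_HOSTS = {"ads.example", "tracker.example"}


def is_blocked(url: str, blocked_hosts: set[str] = BLOCKED_HOSTS) -> bool:
    """Return True if the URL's host is a blocked host or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == b or host.endswith("." + b) for b in blocked_hosts)


def requests_to_make(subresource_urls: list[str]) -> list[str]:
    """Keep only the subresources the user is willing to fetch."""
    return [u for u in subresource_urls if not is_blocked(u)]
```

The point of the sketch is who owns the list: the page asks for every URL, and the agent only fetches the ones its user hasn't ruled out.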

Your user agent might judge that the colors on the page are outside your visual range. Maybe you're colorblind, in which case, the user agent could shift the gamut of the colors away from the colors chosen by the page's creator and into a set that suits you better:

https://dankaminsky.com/dankam/

Or maybe you (like me) have a low-vision disability that makes low-contrast type difficult to impossible to read, and maybe the page's creator is a thoughtless dolt who's chosen light grey-on-white type, or maybe they've fallen prey to the absurd urban legend that not-quite-black type is somehow more legible than actual black type:

https://uxplanet.org/basicdesign-never-use-pure-black-in-typography-36138a3327a6
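A loyal user agent could catch that grey-on-white type on its own. The sketch below uses the contrast-ratio formula from the W3C's WCAG 2.x accessibility guidelines, where 4.5:1 is the minimum for normal-sized text at the AA level. The function names and the "fall back to black or white" policy are my own assumptions for illustration, not anything a real browser is known to do.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = (_linear(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio: 1.0 (identical colors) up to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


def readable_foreground(fg, bg, minimum: float = 4.5):
    """Keep the author's text color if it meets the user's minimum contrast.

    Otherwise fall back to black or white, whichever contrasts more with
    the background. The fallback policy is a hypothetical user preference.
    """
    if contrast_ratio(fg, bg) >= minimum:
        return fg
    black, white = (0, 0, 0), (255, 255, 255)
    return max((black, white), key=lambda c: contrast_ratio(c, bg))
```

With these definitions, light grey `(200, 200, 200)` on white falls well short of 4.5:1, so `readable_foreground` swaps in black. A user with low vision could raise `minimum` as far as they like.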

The user agent is loyal to you. Even when you want something the page's creator didn't consider – even when you want something the page's creator violently objects to – your user agent acts on your behalf and delivers your desires, as best as it can.

Now – as Berjon points out – you might not know exactly what you want. Like, you know that you want the privacy guarantees of TLS (the difference between "http" and "https") but don't really understand the internal cryptographic mysteries involved. Your user agent might detect evidence of shenanigans indicating that your session isn't secure, and choose not to show you the web-page you requested.

This is only superficially paradoxical. Yes, you asked your browser for a web-page. Yes, the browser defied your request and declined to show you that page. But you also asked your browser to protect you from security defects, and your browser made a judgment call and decided that security trumped delivery of the page. No paradox needed.

But of course, the person who designed your user agent/browser can't anticipate all the ways this contradiction might arise. Like, maybe you're trying to access your own website, and you know that the security problem the browser has detected is the result of your own forgetful failure to renew your site's cryptographic certificate. At that point, you can tell your browser, "Thanks for having my back, pal, but actually this time it's fine. Stand down and show me that webpage."

That's your user agent serving you, too.
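The block-then-override logic from the last few paragraphs is simple enough to sketch. This is a toy, assuming a hypothetical `LoyalAgent` class and made-up return strings; it doesn't touch real TLS at all, it just models the decision the agent makes once it already knows whether the certificate checked out.

```python
from dataclasses import dataclass, field


@dataclass
class LoyalAgent:
    # Hosts where the user has explicitly said "show it anyway".
    overrides: set[str] = field(default_factory=set)

    def allow_insecure(self, host: str) -> None:
        """Record the user's informed decision to proceed for this host."""
        self.overrides.add(host)

    def decide(self, host: str, certificate_ok: bool) -> str:
        if certificate_ok:
            return "show"
        if host in self.overrides:
            # The user was warned and chose to proceed: still serving the user.
            return "show-with-warning"
        # Default to protecting the user, and tell them why.
        return "block-and-explain"
```

The default protects you, and the override is scoped to the one host you vouched for, so a forgotten certificate renewal on your own site doesn't turn off the warning everywhere else.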

User agents can be well-designed or they can be poorly made. The fact that a user agent is designed to act in accord with your desires doesn't mean that it always will. A software agent, like a human agent, is not infallible.

However – and this is the key – if a user agent thwarts your desire due to a fault, that is fundamentally different from a user agent that thwarts your desires because it is designed to serve the interests of someone else, even when that is detrimental to your own interests.

A "faithless" user agent is utterly different from a "clumsy" user agent, and faithless user agents have become the norm. Indeed, as crude early internet clients progressed in sophistication, they grew increasingly treacherous. Most non-browser tools are designed for treachery.

A smart speaker or voice assistant routes all your requests through its manufacturer's servers and uses this to build a nonconsensual surveillance dossier on you. Smart speakers and voice assistants even secretly record your speech and route it to the manufacturer's subcontractors, whether or not you're explicitly interacting with them:

https://www.sciencealert.com/creepy-new-amazon-patent-would-mean-alexa-records-everything-you-say-from-now-on

By design, apps and in-app browsers seek to thwart your preferences regarding surveillance and tracking. An app will even try to figure out if you're using a VPN to obscure your location from its maker, and snitch you out with its guess about your true location.

Mobile phones assign persistent tracking IDs to their owners and transmit them without permission (to its credit, Apple recently switched to an opt-in system for transmitting these IDs) (but to its detriment, Apple offers no opt-out from its own tracking, and actively lies about the very existence of this tracking):

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

An Android device running Chrome and sitting inert, with no user interaction, transmits location data to Google every five minutes. This is the "resting heartbeat" of surveillance for an Android device. Ask that device to do any work for you and its pulse quickens, until it is emitting a nearly continuous stream of information about your activities to Google:

https://digitalcontentnext.org/blog/2018/08/21/google-data-collection-research/

These faithless user agents both reflect and enable enshittification. The locked-down nature of the hardware and operating systems for Android and iOS devices means that manufacturers – and their business partners – have an arsenal of legal weapons they can use to block anyone who gives you a tool to modify the device's behavior. These weapons are generically referred to as "IP rights" which are, broadly speaking, the right to control the conduct of a company's critics, customers and competitors:

https://locusmag.com/2020/09/cory-doctorow-ip/

A canny tech company can design their products so that any modification that puts the user's interests above its shareholders is illegal, a violation of its copyright, patent, trademark, trade secrets, contracts, terms of service, nondisclosure, noncompete, most favored nation, or anticircumvention rights. Wrap your product in the right mix of IP, and its faithless betrayals acquire the force of law.

This is – in Jay Freeman's memorable phrase – "felony contempt of business model." While more than half of all web users have installed an ad-blocker, thus overriding the manufacturer's defaults to make their browser a more loyal agent, no app users have modified their apps with ad-blockers.

The first step of making such a blocker, reverse-engineering the app, creates criminal liability under Section 1201 of the Digital Millennium Copyright Act, with a maximum penalty of five years in prison and a $500,000 fine. An app is just a web-page skinned in sufficient IP to make it a felony to add an ad-blocker to it (no wonder every company wants to coerce you into using its app, rather than its website).

If you know that increasing the invasiveness of the ads on your web-page could trigger mass installations of ad-blockers by your users, it becomes irrational and self-defeating to ramp up your ads' invasiveness. The possibility of interoperability acts as a constraint on tech bosses' impulse to enshittify their products.

The shift to platforms dominated by treacherous user agents – apps, mobile ecosystems, walled gardens – weakens or removes that constraint. As your ability to discipline your agent so that it serves you wanes, the temptation to turn your user agent against you grows, and enshittification follows.

This has been tacitly understood by technologists since the web's earliest days and has been reaffirmed even as enshittification increased. Berjon quotes extensively from "The Internet Is For End-Users," AKA Internet Architecture Board RFC 8890:

Defining the user agent role in standards also creates a virtuous cycle; it allows multiple implementations, allowing end users to switch between them with relatively low costs (…). This creates an incentive for implementers to consider the users' needs carefully, which are often reflected into the defining standards. The resulting ecosystem has many remaining problems, but a distinguished user agent role provides an opportunity to improve it.

And the W3C's Technical Architecture Group echoes these sentiments in "Web Platform Design Principles," which articulates a "Priority of Constituencies" that is supposed to be central to the W3C's mission:

User needs come before the needs of web page authors, which come before the needs of user agent implementors, which come before the needs of specification writers, which come before theoretical purity.

https://w3ctag.github.io/design-principles/

But the W3C's commitment to faithful agents is contingent on its own members' commitment to these principles. In 2017, the W3C finalized "EME," a standard for blocking mods that interact with streaming videos. Nominally aimed at preventing copyright infringement, EME also prevents users from choosing to add accessibility add-ons that go beyond the ones the streaming service permits. These services may support closed captioning and additional narration of visual elements, but they block tools that adapt video for color-blind users or prevent strobe effects that trigger seizures in users with photosensitive epilepsy.

The fight over EME was the most contentious struggle in the W3C's history, in which the organization's leadership had to decide whether to honor the "priority of constituencies" and make a standard that allowed users to override manufacturers, or whether to facilitate the creation of faithless agents specifically designed to thwart users' desires on behalf of manufacturers:

https://www.eff.org/deeplinks/2017/09/open-letter-w3c-director-ceo-team-and-membership

This fight was settled in favor of a handful of extremely large and powerful companies, over the objections of a broad collection of smaller firms, nonprofits representing users, academics and other parties agitating for a web built on faithful agents. This coincided with the W3C's operating budget becoming entirely dependent on the very large sums its largest corporate members paid.

W3C membership is on a sliding scale, based on a member's size. Nominally, the W3C is a one-member, one-vote organization, but when a highly concentrated collection of very high-value members flexed their muscles, W3C leadership seemingly perceived an existential risk to the organization, and opted to sacrifice the faithfulness of user agents in service to the anti-user priorities of its largest members.

For W3C's largest corporate members, the fight was absolutely worth it. The W3C's EME standard transformed the web, making it impossible to ship a fully featured web-browser without securing permission – and a paid license – from one of the cartel of companies that dominate the internet. In effect, Big Tech used the W3C to secure the right to decide who would compete with them in future, and how:

https://blog.samuelmaddock.com/posts/the-end-of-indie-web-browsers/

Enshittification arises when the everyday mediocre sociopaths who run tech companies are freed from the constraints that act against them. When the web – and its browsers – were a big, contented, diverse, competitive space, it was harder for tech companies to collude to capture standards bodies like the W3C to secure even more dominance. As the web turned into Tom Eastman's "five giant websites filled with screenshots of text from the other four," that kind of collusion became much easier:

https://pluralistic.net/2023/04/18/cursed-are-the-sausagemakers/#how-the-parties-get-to-yes

In arguing for faithful agents, Berjon associates himself with the group of scholars, regulators and activists who call for user agents to serve as "information fiduciaries." Mostly, information fiduciaries come up in the context of user privacy, with the idea that entities that hold a user's data would have the obligation to put the user's interests ahead of their own. Think of a lawyer's fiduciary duty in respect of their clients, to give advice that reflects the client's best interests, even when that conflicts with the lawyer's own self-interest. For example, a lawyer who believes that settling a case is the best course of action for a client is required to tell them so, even if keeping the case going would generate more billings for the lawyer and their firm.

For a user agent to be faithful, it must be your fiduciary. It must put your interests ahead of the interests of the entity that made it or operates it. Browsers, email clients, and other internet software that served as a fiduciary would do things like automatically blocking tracking (which most email clients don't do, especially webmail clients made by companies like Google, who also sell advertising and tracking).

Berjon contemplates a legally mandated fiduciary duty, citing Lindsey Barrett's "Confiding in Con Men":

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3354129

He describes a fiduciary duty as a remedy for the enforcement failures of the EU's GDPR, a solidly written, and dismally enforced, privacy law. A legally backstopped duty for agents to be fiduciaries would also help us distinguish good and bad forms of "innovation" – innovation in ways of thwarting a user's will is always bad.

Now, the tech giants insist that they are already fiduciaries, and that when they thwart a user's request, that's more like blocking access to a page where the encryption has been compromised than like HAL9000's "I can't let you do that, Dave." For example, when Louis Barclay created "Unfollow Everything," he (and his enthusiastic users) found that automating the process of unfollowing every account on Facebook made their use of the service significantly better:

https://slate.com/technology/2021/10/facebook-unfollow-everything-cease-desist.html

When Facebook shut the service down with blood-curdling legal threats, they insisted that they were simply protecting users from themselves. Sure, this browser automation tool – which just automatically clicked links on Facebook's own settings pages – seemed to do what the users wanted. But what if the user interface changed? What if so many users added this feature to Facebook without Facebook's permission that they overwhelmed Facebook's (presumably tiny and fragile) servers and crashed the system?

These arguments have lately resurfaced with Ethan Zuckerman and Knight First Amendment Institute's lawsuit to clarify that "Unfollow Everything 2.0" is legal and doesn't violate any of those "felony contempt of business model" laws:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/

Sure, Zuckerman seems like a good guy, but what if he makes a mistake and his automation tool does something you don't want? You, the Facebook user, are also a nice guy, but let's face it, you're also a naive dolt and you can't be trusted to make decisions for yourself. Those decisions can only be made by Facebook, whom we can rely upon to exercise its authority wisely.

Other versions of this argument surfaced in the debate over the EU's decision to mandate interoperability for end-to-end encrypted (E2EE) messaging through the Digital Markets Act (DMA), which would let you switch from, say, WhatsApp to Signal and still send messages to your WhatsApp contacts.

There are some good arguments that this could go horribly awry. If it is rushed, or internally sabotaged by the EU's state security services who loathe the privacy that comes from encrypted messaging, it could expose billions of people to serious risks.

But that's not the only argument that DMA opponents made: they also argued that even if interoperable messaging worked perfectly and had no security breaches, it would still be bad for users, because this would make it impossible for tech giants like Meta, Google and Apple to spy on message traffic (if not its content) and identify likely coordinated harassment campaigns. This is literally the identical argument the NSA made in support of its "metadata" mass-surveillance program: "Reading your messages might violate your privacy, but watching your messages doesn't."

This is obvious nonsense, so its proponents need an equally obviously intellectually dishonest way to defend it. When called on the absurdity of "protecting" users by spying on them against their will, they simply shake their heads and say, "You just can't understand the burdens of running a service with hundreds of millions or billions of users, and if I even tried to explain these issues to you, I would divulge secrets that I'm legally and ethically bound to keep. And even if I could tell you, you wouldn't understand, because anyone who doesn't work for a Big Tech company is a naive dolt who can't be trusted to understand how the world works (much like our users)."

Not coincidentally, this is also literally the same argument the NSA makes in support of mass surveillance, and there's a very useful name for it: scalesplaining.

Now, it's totally true that every one of us is capable of lapses in judgment that put us, and the people connected to us, at risk (my own parents gave their genome to the pseudoscience genetic surveillance company 23andme, which means they have my genome, too). A true information fiduciary shouldn't automatically deliver everything the user asks for. When the agent perceives that the user is about to put themselves in harm's way, it should throw up a roadblock and explain the risks to the user.

But the system should also let the user override it.

This is a contentious statement in information security circles. Users can be "socially engineered" (tricked), and even the most sophisticated users are vulnerable to this:

https://pluralistic.net/2024/02/05/cyber-dunning-kruger/#swiss-cheese-security

The only way to be certain a user won't be tricked into taking a course of action is to forbid that course of action under any circumstances. If there is any means by which a user can flip the "are you very sure?" circuit-breaker back on, then the user can be tricked into using that means.

This is absolutely true. As you read these words, all over the world, vulnerable people are being tricked into speaking the very specific set of directives that cause a suspicious bank-teller to authorize a transfer or cash withdrawal that will result in their life's savings being stolen by a scammer:

https://www.thecut.com/article/amazon-scam-call-ftc-arrest-warrants.html

We keep making it harder for bank customers to make large transfers, but so long as it is possible to make such a transfer, the scammers have the means, motive and opportunity to discover how the process works, and they will go on to trick their victims into invoking that process.

Beyond a certain point, making it harder for bank depositors to harm themselves creates a world in which people who aren't being scammed find it nearly impossible to draw out a lot of cash for an emergency and where scam artists know exactly how to manage the trick. After all, non-scammers only rarely experience emergencies and thus have no opportunity to become practiced in navigating all the anti-fraud checks, while the fraudster gets to run through them several times per day, until they know them even better than the bank staff do.

This is broadly true of any system intended to control users at scale – beyond a certain point, additional security measures are trivially surmounted hurdles for dedicated bad actors and nearly insurmountable hurdles for their victims:

https://pluralistic.net/2022/08/07/como-is-infosec/

At this point, we've had a couple of decades' worth of experience with technological "walled gardens" in which corporate executives get to override their users' decisions about how the system should work, even when that means reaching into the users' own computer and compelling it to thwart the user's desire. The record is inarguable: while companies often use those walls to lock bad guys out of the system, they also use the walls to lock their users in, so that they'll be easy pickings for the tech company that owns the system:

https://pluralistic.net/2023/02/05/battery-vampire/#drained

This is neatly predicted by enshittification's theory of constraints: when a company can override your choices, it will be irresistibly tempted to do so for its own benefit, and to your detriment.

What's more, the mere possibility that you can override the way the system works acts as a disciplining force on corporate executives, forcing them to reckon with your priorities even when these are counter to their shareholders' interests. If Facebook is genuinely worried that an "Unfollow Everything" script will break its servers, it can solve that by giving users an unfollow everything button of its own design. But so long as Facebook can sue anyone who makes an "Unfollow Everything" tool, they have no reason to give their users such a button, because it would give them more control over their Facebook experience, including the controls needed to use Facebook less.

It's been more than 20 years since Seth Schoen and I got a demo of Microsoft's first "trusted computing" system, with its "remote attestations," which would let remote servers demand and receive accurate information about what kind of computer you were using and what software was running on it.

This could be beneficial to the user – you could send a "remote attestation" to a third party you trusted and ask, "Hey, do you think my computer is infected with malicious software?" Since the trusted computing system produced its report on your computer using a sealed, separate processor that the user couldn't directly interact with, any malicious code you were infected with would not be able to forge this attestation.

But this remote attestation feature could also be used to allow Microsoft to block you from opening a Word document with LibreOffice, Apple Pages, or Google Docs, or it could be used to allow a website to refuse to send you pages if you were running an ad-blocker. In other words, it could transform your information fiduciary into a faithless agent.

Seth proposed an answer to this: "owner override," a hardware switch that would allow you to force your computer to lie on your behalf, when that was beneficial to you, for example, by insisting that you were using Microsoft Word to open a document when you were really using Apple Pages:

https://web.archive.org/web/20021004125515/http://vitanuova.loyalty.org/2002-07-05.html
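Here's a toy model of how attestation and owner override fit together. It is a sketch under loud assumptions: `SealedChip`, `attest` and `verify` are hypothetical names, and the shared-key HMAC stands in for the asymmetric keys and dedicated hardware that real trusted computing systems use. It is not Microsoft's design or Seth's proposal, only the shape of the idea.

```python
import hashlib
import hmac
from typing import Optional


class SealedChip:
    """Toy stand-in for the sealed, separate processor.

    Software running on the main computer can ask for an attestation,
    but (in this model) cannot read the key and so cannot forge one.
    """

    def __init__(self, key: bytes) -> None:
        self._key = key

    def attest(
        self, running_software: str, owner_override: Optional[str] = None
    ) -> tuple[str, str]:
        # Owner override: the physical owner may choose what gets reported.
        reported = owner_override if owner_override is not None else running_software
        tag = hmac.new(self._key, reported.encode(), hashlib.sha256).hexdigest()
        return reported, tag


def verify(key: bytes, reported: str, tag: str) -> bool:
    """What the remote party does: check the tag matches the reported software."""
    expected = hmac.new(key, reported.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)
```

Without the override, malware can't relabel itself, because changing `reported` breaks the tag. With the override, the owner (and only someone who can flip the hardware switch) can have the chip sign "Microsoft Word" while Apple Pages is actually running, and the remote party can't tell the difference.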

Seth wasn't naive. He knew that such a system could be exploited by scammers and used to harm users. But Seth calculated – correctly! – that the risks of having a key to let yourself out of the walled garden were less than being stuck in a walled garden where some corporate executive got to decide whether and when you could leave.

Tech executives never stopped questing after a way to turn your user agent from a fiduciary into a traitor. Last year, Google toyed with the idea of adding remote attestation to web browsers, which would let services refuse to interact with you if they thought you were using an ad blocker:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai

The reasoning for this was incredible: by adding remote attestation to browsers, they'd be creating "feature parity" with apps – that is, they'd be making it as practical for your browser to betray you as it is for your apps to do so (note that this is the same justification that the W3C gave for creating EME, the treacherous user agent in your browser – "streaming services won't allow you to access movies with your browser unless your browser is as enshittifiable and authoritarian as an app").

Technologists who work for giant tech companies can come up with endless scalesplaining explanations for why their bosses, and not you, should decide how your computer works. They're wrong. Your computer should do what you tell it to do:

https://www.eff.org/deeplinks/2023/08/your-computer-should-say-what-you-tell-it-say-1

These people can kid themselves that they're only taking away your power and handing it to their boss because they have your best interests at heart. As Upton Sinclair told us, it's impossible to get someone to understand something when their paycheck depends on them not understanding it.

The only way to get a tech boss to consistently treat you well is to ensure that if they stop, you can quit. Anything less is a one-way ticket to enshittification.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/07/treacherous-computing/#rewilding-the-internet

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#maria farrell#scalesplaining#user agents#eme#w3c#sdos#scholarship#information fiduciary#the internet is for end users#ietf#delegation#bootlickers#unfollow everything#remote attestation#browsers#treacherous computing#enshittification#snitch chips#Robin Berjon#rewilding the internet

345 notes

Text

Sort of interesting that my CPU predates Minecraft 1.0.0 but still runs the game flawlessly. Unlike my GPU, which is like twelve years newer and stutters like hell trying to render a hardware accelerated scene on two monitors (1440p-class and 900p-class) at once.

#interesting in that the 1660 super was so underpowered i guess#or that graphical loads have outstripped cpu loads in games so convincingly#not that a high end first-gen i7 is still more than adequate for most modern purposes#i think were all very aware that like 80 percent of users dont need anything more than like an i3 quad or hex core#my thoughts#computers

3 notes

Text

me after eating the salsa i spilled off the floor

#just me hi#brother. the lid was stuck. and i had no forces on my side Lmao [<- in a deep state of mourning]#the thing flipped upside down.. i got some on my sleeve somehow?? i'm wearing a t-shirt so like. how'd that happen lol#and now i'm eating salsa-less tortilla chips.. who hated the world so much that i can taste the salt plains on them.... hgfbshf#/anyway chess started saying 'no it's fine off the top actually look' and he started scooping some off the top Hbfvhahdkksh#if either of us get sick you know why. the floor salsa will come for us all one day hbgvfha#//anyway i'm back on my kartrider kick loll :>>>#forgot how much i like that game! can only play with one sibling because it stresses the others out GFdvzhfhns#we have a club and it's. well it's not thriving hdbdbhs - but regardless it's cool :3#i've gotten some new personal bests which is cool!! didn't think i'd get back into swing so quick but yee :D#anyway my user is Rumour if anybody here knows what i'm talking about lollll#//ye :3 i gotta go check my other things now hfbsfh#haven't been on my computer and that's where i live so i always end up in a sort of cocoon when this happens lol#it's a cozy cocoon though. yea :)#anywho yea. toodles :D

4 notes

Text

In today's Kingdom Hearts headcanons I'm thinking about: degrees of computer competency

I think it's a spectrum that has Xemnas and Xion jockeying for the position of "best at computers" at one end and Sora solidly occupying the "worst at computers" on the other

#platextsofmemories#and of course that's a wide range#i think Roxas is second only to Sora at bad at computers (surprises no one) and Riku is third (manages to surprise a lot of people)#because riku mostly avoids computers and talking about them except sometimes he helps sora#kairi is like a normal amount good at computers. like idk average millennial end user#sora thinks she's a wizard. when a computer uses an installation wizard he thinks of kairi#anyway. i have a lot of thoughts

3 notes

Text

The more I look into articles about students using AI to cheat the more it sounds like WILD sensationalization

#like. every ACTUAL study has been like 'yeah ok like maybe 45% use it#but the majority who use it are using it to brainstorm/grammar check'#and then theres all these articles like heres why STUDENTS SHOULD NOT use AI TO CHEAT#and it's like. bestie who is the target audience#every article ive seen w student input from users have been like 'if the teachers give me bullshit work then ill give them bullshit results#or 'dont waste my time and i wont waste yours'#and it's like yeah maybe it's a good thing if profs who assign work that could be completed by a computer change it up#i have never used AI and i do not ever intend to#but i think most of the panic is bullshit#also like. so many of the articles have had profs who have been like 'this could be an exciting new resource!!!'#there are people in academia willing to change and adapt#why does every news source think this is the end for academic honesty#flippin flops

2 notes

Text

my tablet pen stopped working gahhhhhhhhh

#idk if its just old and not working right or cuz i havent downloaded everything onto this new laptop#i dont have any of the user manuals anymore tho so gah i dont even know how to set it up on the computer end#but also like it was working ! i just plugged it in and it was working#and then it just stopped after like an hour. like. ok#my tablet itself is connected just fine but the pen isnt#i cant remember if its supposed to be wireless or not but i have it plugged into my laptop anyway#i feel like its supposed to be wireless and the wire is just to charge it#but idfk bc even when its plugged in its just not working#i see the red light on inside of it but when i put it to my tablet it just wont register#like theres just no tablet-pen communication going on for whatever reason#and i cant remember does red mean its fully charged or that its currently charging. argh. gahhh#i havent used this thing in five fucking yearsssssssss#brot posts

2 notes

Text

idk if people on tumblr know about this but a cybersecurity software called crowdstrike just did what is probably the single biggest fuck up in any sector in the past 10 years. it's monumentally bad. literally the most horror-inducing nightmare scenario for a tech company.

some info, crowdstrike is essentially an antivirus software for enterprises. which means normal laypeople cant really get it, they're for businesses and organisations and important stuff.

so, on a friday evening (it of course wasnt friday everywhere but it was friday evening in oceania which is where it first started causing damage due to europe and na being asleep), crowdstrike pushed out an update to their windows users that caused a bug.

before i get into what the bug is, know that friday evening is the worst possible time to do this because people are going home. the weekend is starting. offices dont have people in them. this is just one of many perfectly placed failures in the rube goldberg machine of crowdstrike. there's a reason friday is called 'dont push to live friday' or more to the point 'dont fuck it up friday'

so, at 3pm on friday, an update comes rolling into crowdstrike users which is automatically implemented. this update immediately causes the computer to blue screen of death. very very bad. but it's not simply a 'you need to restart' crash, because the computer then gets stuck in a boot loop.

this is the worst possible thing because, in a boot loop state, a computer is never really able to get to a point where it can do anything. like download a fix. so there is nothing crowdstrike can do to remedy this death update anymore. it is now left to the end users.

it was pretty quickly identified what the problem was. you had to boot it in safe mode, and a very small file needed to be deleted. or you could just rename crowdstrike to something else so windows never attempts to use it.

it's a fairly easy fix in the grand scheme of things, but the issue is that it is affecting enterprises. which can have a looooot of computers. in many different locations. so an IT person would need to manually fix hundreds of computers, sometimes in whole other cities and perhaps even other countries if theyre big enough.

another fuck up crowdstrike did was they did not stagger the update, so they could catch any mistakes before they wreaked havoc. (and also how how HOW do you not catch this before deploying it. this isn't a code oopsie this is a complete failure of quality assurance that probably permeates the whole company to not realise their update was an instant kill). they rolled it out to every one of their clients in the world at the same time.
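for anyone wondering what "staggering" an update looks like, here is a minimal sketch in Python. it is an illustration of the general idea only, not how crowdstrike or any real vendor deploys: the `deploy` callback, the wave sizes and the failure threshold are all hypothetical.

```python
from typing import Callable, Iterable, List


def staged_rollout(
    hosts: List[str],
    deploy: Callable[[str], bool],
    wave_fractions: Iterable[float] = (0.01, 0.10, 1.0),
    max_failure_rate: float = 0.02,
) -> List[str]:
    """Deploy to growing waves of hosts and stop at the first bad wave.

    `deploy` is a hypothetical callback that returns True if the host
    came back healthy after the update. Returns the hosts that were updated.
    """
    updated: List[str] = []
    for fraction in wave_fractions:
        # Each wave extends coverage up to `fraction` of the whole fleet.
        target = max(1, int(len(hosts) * fraction))
        wave = hosts[len(updated):target]
        if not wave:
            continue
        failures = sum(0 if deploy(host) else 1 for host in wave)
        updated.extend(wave)
        if failures / len(wave) > max_failure_rate:
            # Halt here: the remaining hosts never receive the bad update.
            break
    return updated
```

with a fleet of 1,000 machines and an update that breaks every host, this sketch stops after the first wave of 10 instead of taking out all 1,000.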

and this seems pretty hilarious on the surface. i was havin a good chuckle as eftpos went down in the store i was working at, chaos was definitely ensuing lmao. im in aus, and banking was literally down nationwide.

but then you start hearing about the entire country's planes being grounded because the airport's computers are bricked. and hospitals having no computers anymore. emergency call centres crashing. and you realised that, wow. crowdstrike just killed people probably. this is literally the worst thing possible for a company like this to do.

crowdstrike was kinda on the come up too, they were starting to become a big name in the tech world as a new face. but that has definitely vanished now. to fuck up at this many places, is almost extremely impressive. its hard to even think of a comparable fuckup.

a friday evening simultaneous rollout boot loop is a phrase that haunts IT people in their darkest hours. it's the monster that drags people down into the swamp. it's the big bad in the horror movie. it's the end of the road. and for crowdstrike, that reaper of souls just knocked on their doorstep.

114K notes

Text

5 Steps to More Effective EUC Controls

Learn 5 practical steps to implement more effective EUC controls, from maintaining an up-to-date model inventory and standardizing risk assessments to leveraging interdependency maps, automating documentation, and enforcing approval workflows aligned with SS1/23.

Visit: https://cimcon.com/5-steps-to-more-effective-spreadsheet-euc-controls/

0 notes

Text

End User Computing Solutions Services for Business Efficiency

Discover advanced End User Computing Solutions Services designed to enhance employee productivity and streamline IT operations. From secure virtual desktop infrastructure and mobile device management to application delivery and user support, we offer tailored solutions to meet your business needs. Contact us today at +1 3023132433.

0 notes

Text

Cybercriminals are abusing Google’s infrastructure, creating emails that appear to come from Google in order to persuade people into handing over their Google account credentials. The attack was first flagged by Nick Johnson, the lead developer of the Ethereum Name Service (ENS), a blockchain equivalent of the popular internet naming convention known as the Domain Name System (DNS). Nick received a very official-looking security alert about a subpoena allegedly issued to Google by law enforcement for information contained in Nick’s Google account. A URL in the email pointed Nick to a sites.google.com page that looked like an exact copy of the official Google support portal.

As a computer-savvy person, Nick spotted that the official site should have been hosted on accounts.google.com and not sites.google.com. The difference is that anyone with a Google account can create a website on sites.google.com. And that is exactly what the cybercriminals did. Attackers increasingly use Google Sites to host phishing pages because the domain appears trustworthy to most users and can bypass many security filters. One of those filters is DKIM (DomainKeys Identified Mail), an email authentication protocol that allows the sending server to attach a digital signature to an email.

If the target clicked either “Upload additional documents” or “View case”, they were redirected to an exact copy of the Google sign-in page designed to steal their login credentials. Your Google credentials are coveted prey, because they give access to core Google services like Gmail, Google Drive, Google Photos, Google Calendar, Google Contacts, Google Maps, Google Play, and YouTube, but also to any third-party apps and services you have chosen to log in to with your Google account. If a person had all these accounts compromised in one go, this could easily lead to identity theft.

There are two signs by which to recognize this scam. The first is that the pages are hosted at sites.google.com when they should have been at support.google.com and accounts.google.com. The second is the sender address in the email header: although the message was signed by accounts.google.com, it was emailed by another address.
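The hostname check Nick did by eye can be sketched in a few lines of Python. This is only an illustration, assuming the two hostnames named above are the ones to expect; the function name and the allow-list are hypothetical, and an exact-match check like this is no substitute for verifying a message through an independent channel.

```python
from urllib.parse import urlsplit

# Hostnames the post says the real pages should be served from.
EXPECTED_HOSTS = {"accounts.google.com", "support.google.com"}


def is_expected_google_host(url: str) -> bool:
    """Return True only if the URL's hostname exactly matches an expected host."""
    host = urlsplit(url).hostname or ""
    return host.lower() in EXPECTED_HOSTS
```

Comparing the whole hostname, rather than checking whether the URL merely contains "google.com", is what rejects both sites.google.com and look-alikes such as accounts.google.com.evil.example.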

How to avoid scams like this

Don’t follow links in unsolicited emails or on unexpected websites.

Carefully look at the email headers when you receive an unexpected mail.

Verify the legitimacy of such emails through another, independent method.

Don’t use your Google account (or Facebook for that matter) to log in at other sites and services. Instead create an account on the service itself.

Technical details

Analyzing the URL used in the attack on Nick (https://sites.google.com[/]u/17918456/d/1W4M_jFajsC8YKeRJn6tt_b1Ja9Puh6_v/edit), where /u/17918456/ is a user or account identifier and /d/1W4M_jFajsC8YKeRJn6tt_b1Ja9Puh6_v/ identifies the exact page, the /edit part stands out like a sore thumb.

DKIM-signed messages keep the signature during replays as long as the body remains unchanged. So if a malicious actor gets access to a previously legitimate DKIM-signed email, they can resend that exact message at any time, and it will still pass authentication.

So, what the cybercriminals did was:

Set up a Gmail account starting with me@ so the visible email would look as if it was addressed to “me.”

Register an OAuth app and set the app name to match the phishing link.

Grant the OAuth app access to their Google account, which triggers a legitimate security warning from [email protected]

This alert has a valid DKIM signature, with the content of the phishing email embedded in the body as the app name.

Forward the message untouched, which keeps the DKIM signature valid.

Creating the application containing the entire text of the phishing message for its name, and preparing the landing page and fake login site, may seem a lot of work. But once the criminals have completed the initial work, the procedure is easy enough to repeat once a page gets reported, which is not easy on sites.google.com.

Nick submitted a bug report to Google about this. Google originally closed the report as ‘Working as Intended,’ but later got back to him and said it had reconsidered the matter and will fix the OAuth bug.
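To see why a replayed message still passes, here is a deliberately simplified Python model of the replay behaviour described above. It is not real DKIM: an HMAC with a made-up shared key stands in for the domain's asymmetric signature, and the header names, addresses and body text are hypothetical. The only point it illustrates is that the signature covers the signed headers and the body, not where the message is later sent.

```python
import hashlib
import hmac

# Stand-in for the signing domain's private key; real DKIM uses an
# asymmetric key pair with the public half published in DNS.
SIGNING_KEY = b"hypothetical-signing-key"


def sign(signed_headers: dict, body: str) -> str:
    """Sign the body hash together with the chosen headers."""
    body_hash = hashlib.sha256(body.encode()).hexdigest()
    material = "\n".join(f"{k}:{v}" for k, v in sorted(signed_headers.items()))
    material += "\n" + body_hash
    return hmac.new(SIGNING_KEY, material.encode(), hashlib.sha256).hexdigest()


def verify(signed_headers: dict, body: str, signature: str) -> bool:
    """Recompute the signature and compare it in constant time."""
    return hmac.compare_digest(sign(signed_headers, body), signature)


headers = {"from": "alerts@provider.example", "subject": "Security alert"}
body = "App name: <attacker-controlled text>"
signature = sign(headers, body)

# Forwarding to a new recipient changes nothing that was signed,
# so the replayed copy still verifies.
replayed_ok = verify(headers, body, signature)

# Any change to the body invalidates the signature.
tampered_ok = verify(headers, body + " edited", signature)
```

In this model `replayed_ok` is true and `tampered_ok` is false, which is why the attackers had to get their text into the original alert (via the app name) rather than edit it afterwards.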

11K notes

Text

You know what, I'm going to add to this after all. Ray tracing is a gimmick and infamous for how intensive it is on hardware where it updates in real time, such as in video games. Before it was considered at all feasible outside rendering CGI, there were other methods of simulating light that were far easier for hardware to handle and, honestly, the difference between them (I can't remember what the name of the algorithm is) and ray tracing is minimal.

Adding to the problem is video card manufacturers pushing it while not increasing the VRAM on cards that are now expected to handle ray tracing, NPC scripts/AI, all other graphics, and stream encoding at the same time. GPUs have not seen a meaningful increase in VRAM in years despite the push of 4k graphics and ray tracing.

Oh and handling generating in-between frames to increase FPS/hide poor optimisation and up-scaling from 1080p to 4k or 8k. Sometimes both at the same time (in addition to the rest).

Further compounding it is consoles using stripped down versions of GPUs but not allowing users to upgrade them or other hardware. At least not in an easy way that doesn't void the warranty.

There's only so much that can be off-loaded to other components and it is a Choice™ to decide not to include an option to disable features that minimally improve the graphics (I specify graphics since more than one game has been released where turning off ray tracing wasn't the first thing recommended to turn off if you wanted to hit 30+ FPS) but can and do overwhelm GPUs.

Mandatory Ray Tracing should be banned in games. Genuinely absurd to think the majority of consumers are running high performing RTX graphics cards.

#i have a suspicion that this plus the price increase in gpus is to push people to rent computers a la geforce now#and gpus are one of the main ways developers compensate for having dog shit optimisation#the others being (hoping) the end user has enough ram to hide memory leaks#and high hdd/ssd capacity so compression doesn't need to be optimised#(also a high or no data cap since so much is downloaded rather than coming on a physical disk)#some developers are better at having granular options than others too#some will let you tweak or disable damn near everything so it runs best on your system and so you can choose what looks good to you#while others do the bare minimum and can't even be bothered to let users change things like particle effects or ray tracing#your options are basically play how the developer decided (regardless of your system) or not play at all#if the game runs fine until x or y or z but then starts to stutter/crash and would be fine if you could turn things down/disable things#you're sol if the developer didn't bother to allow changes outside of gamma and anti-aliasing for example#also not everyone has a 4k display or notices minute details#some people don't even see a difference between 30 fps and 60#don't get me started on how so many developers treat colour blindness as something spiteful rather than a medical condition#but more and more developers are forcing large and/or intense graphics/textures rather than giving users (aka customers) an option#or having a separate additional download if someone does want 4k or 8k textures#you know the way so many games operated when <720p displays were common but there was a way to download hd textures#for people who wanted them *and* had a display that could do 1080p#though it goes back to the (usually) aaa publishers and how graphic generations hit their peak a while ago#adding more polygons isn't something big or noticeable anymore unless it results in a performance *drop*#(the team fortress 2 snake immediately comes to mind)#(or the final fantasy 14 grapes)#ray tracing is one of the buzzwords used to sell a remaster (possibly to people who bought the game before)#or indicate a game/console is new and not part of a previous generation

258 notes

Text

cant think about 3dv for long without thinking about max headroom too why did they never make an actual cgi max headroom. why

#cgi was growing up ... they couldve done it like late 80s.... look at krypto that canal+ mascot... it was done and they couldve done it..#max's populairty was so enduring for a time maybe at that point they were in too deep it wasnt abt cgi anymore it was about#how he always looked and they werent gonna change it. makes sense.#i wanna know if max headroom's creators have mentioned 3dv in relation to max.. obviously they write about#3dv in creative computer graphics which is from before max. so obviously they know abt it. and they are pretty similar.#but i wanna see them say it...#okay im procrastinating sleeping byyyeeee.....#original nonsense#personal#i need to watch max headroom i keep reading about it but i wanna draw him and user friendly together... son and father ...#AND I SAW AN ARTICLE A FEW YEARS AGO about a max headroom reboot and that waz live action too. whyyyy. but ive not#seen any more about that ever so idk if that ended up happening. i think i posted about it at the time.#okay bye for real ahhhh

1 note

Text

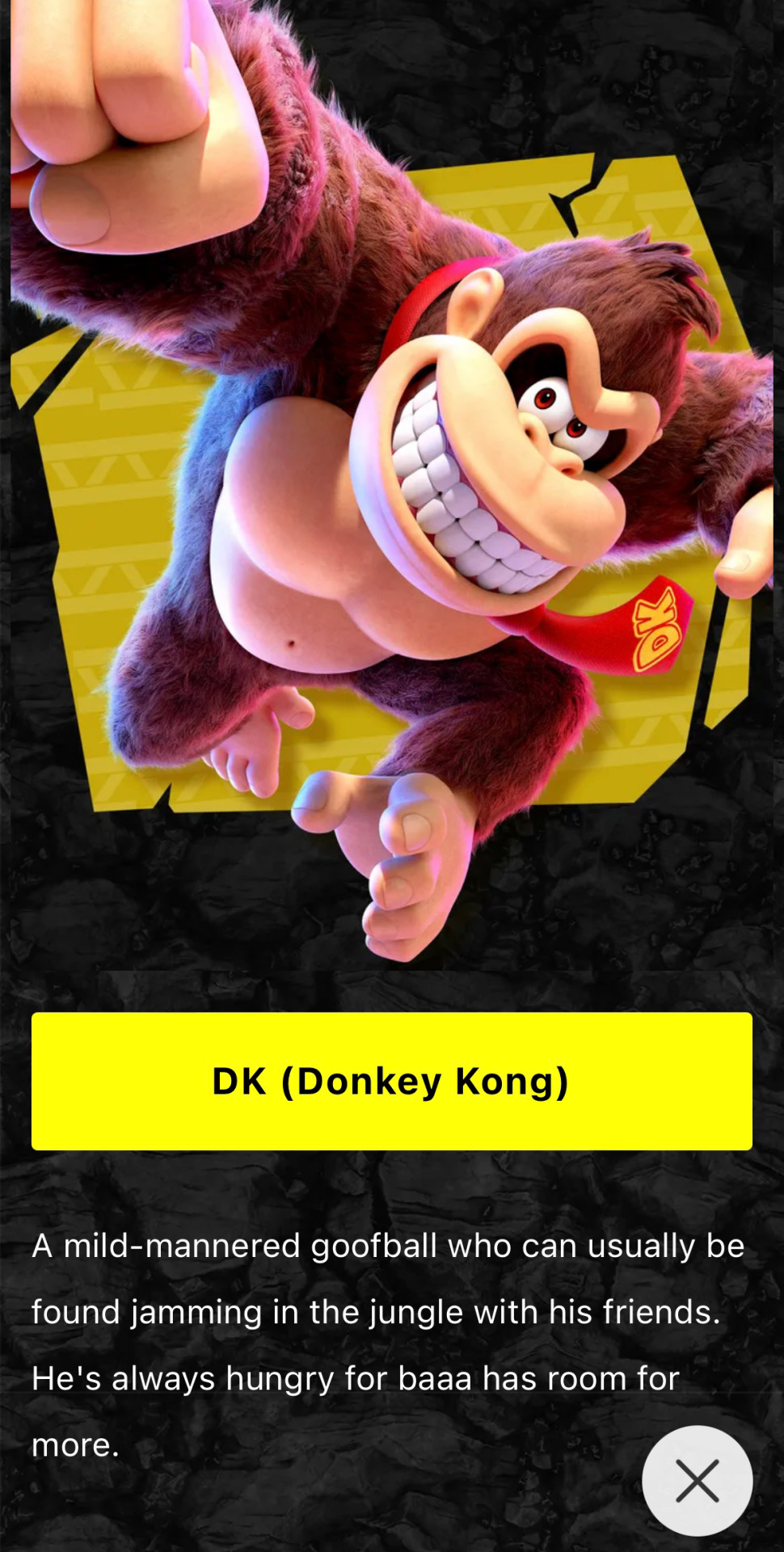

On April 7th, a Nintendo Today update featured a blurb about Donkey Kong that was subject to some manner of text-related error, which caused the description for Donkey Kong to read "He's always hungry for baaa has room for more", inadvertently producing the onomatopoeia for a sheep's bleat.

This was updated a few hours later to read "He's always hungry for bananas, and always has room for more."

It is speculated this might have to do with some sort of automated system removing the "nan" string of letters, since "NaN" (Not a Number) is a common error string in computing and would not be desirable to display to the end user, though a straightforward application of this would have resulted in it being "baas" instead of "baaa". The exact nature of the error is still unknown.
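The "straightforward application" mentioned above can be reproduced in a few lines of Python. This is a sketch of the speculated filter only; the function name is hypothetical and nothing is known about the real system.

```python
import re


def strip_nan(text: str) -> str:
    """Remove every case-insensitive occurrence of "nan" in a single pass."""
    return re.sub("nan", "", text, flags=re.IGNORECASE)


original = "He's always hungry for bananas, and always has room for more."
filtered = strip_nan(original)
```

Here `filtered` comes out as "He's always hungry for baas, and always has room for more.", which does not match the "baaa has room for more" text that was actually displayed, so a plain "nan" removal cannot be the whole story.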

Main Blog | Patreon | Twitter | Bluesky | Small Findings | Source

3K notes

Text

How AI Is Being Used to Solve the Mental Health Crisis

New Post has been published on https://thedigitalinsider.com/how-ai-is-being-used-to-solve-the-mental-health-crisis/

Most systems have struggled to identify how to provide quality mental health services that meet or exceed the basic therapeutic response, which often consists of merely evaluating a patient admitted to the psych ward of a hospital, sometimes medicating them, and quickly discharging them within a few days or weeks under the guise of recommending a sensible “action plan” for the patient to follow.

Therefore, to properly address rising mental health needs in every community, public agencies (specifically, health departments, school systems, jails/prisons, and even the military) are beginning to partner with private digital behavioral health companies to expand much-needed access to support services.

In addition to those challenges, the lack of qualified therapists and the prohibitive cost of traditional therapy continue to be two of the major obstacles to an individual receiving proper mental healthcare. Thankfully, a new innovative approach has now emerged from a startup company known as mySHO (which stands for My Select Health Options). Founded in 2019 by military veterans Terry Williams and A.J. Pasha, mySHO is a digital platform that provides technologically transformative products and services to address gaps in the education system, healthcare system, criminal justice system, and other sectors. As an industry trailblazer, mySHO’s unique, culturally competent, and culturally connected programming features the most advanced artificial intelligence platform in the mental health tech arena today.

To deliver their vision of a truly transformational health tech organization, mySHO has connected with two powerful sources: Scalable Care (WSC-Well Advised), a leading technology firm based in Silicon Valley, and the Langley Porter Psychiatric Institute at the University of California, San Francisco (UCSF). Scalable Care Chief Technology Officer John Denning is a medical technology industry veteran who was a prominent member of the team that developed the MyChart and Epic electronic healthcare software used by most hospitals and healthcare facilities around the world today. Both sources joined forces with mySHO to bring GritX to market, an evidence-based mental health digital tool that provides 24/7 social and emotional support to populations who require ongoing emotional care. (According to one key figure within the U.S. Department of Justice, GritX by mySHO is the “best-kept thing no one knows about yet.”)

Five Advantages of Partnering with mySHO–GritX

Unlike the typical digital therapeutics model, mySHO–GritX offers services that require no appointment and are available 24/7 – 365 with the ability to receive real-time, digital, (evidence-based) mental health services and support via smartphone, tablet, or computer. So, GritX makes it possible for anyone who needs immediate mental health assistance to receive accurate, professional, emotional care at any time of day or night.

All data points are being securely captured from each conversation with mySHO–GritX to keep a history of every interaction that the school-age youth or adult seeking mental health services has had with mySHO–GritX.

Therapy sessions are fluent in 92 languages (and growing).

mySHO–GritX is customizable, adaptable, and adjustable to the specifications of most behavioral and mental health systems. mySHO also has the actual operational blueprint, engagement methodology, evidence-based support tools, and self-sustainability plan to jumpstart, operationalize, and complement any Office or Department of Behavioral Health, Substance Abuse, or Public Safety. mySHO’s innovative Public-Private Partnership model utilizes available grant funding from Federal, State, and Local resources to provide GritX to the end user at no cost.

The GritX tool is committed to setting a standard in privacy and accessibility for every user. Adhering to stringent security guidelines, which include full compliance with HIPAA, PII, HITECH, NIST 800-171, PCI DSS, and GDPR requirements, GritX ensures a safe and inclusive environment for all.

Summary

In October of 2023, mySHO began participating in a program sponsored by the Mayo Clinic that allows teams of undergraduates, led by MBA students from private colleges and universities throughout Minnesota, to research and recommend the next steps for inventions and new products in development at the Mayo Clinic.

Thanks to companies like mySHO and their creative understanding of one way to best utilize AI/machine learning to solve real-life problems, the future of mental healthcare is looking brighter by the day with the implementation of technology.

#2023#Accessibility#ai#approach#artificial#Artificial Intelligence#colleges#Community#Companies#computer#data#development#education#electronic#end user#Environment#epic#Facilities#Features#Funding#Future#gdpr#guidelines#Health#healthcare#History#hospitals#how#how to#Industry

0 notes