#ethics and ai

Text

I spent the evening looking into this AI shit and made a wee informative post of the information I found, since I thought all artists would be interested and maybe it could help y'all?

edit: forgot to mention Glaze and Nightshade, which are meant to alter/disrupt AI from taking your work into their machines. You can use these before you post, and it will apparently mess up the AI so it won't take your content into its machine!

edit: ArtStation is not AI free! So make sure to read that when signing up if you do!

(this post is also on twt)

[Image descriptions: A series of infographics titled: “Opt Out AI: [Social Media] and what I found.” The title image shows a drawing of a person holding up a stack of papers where the first says, ‘Terms of Service’ and the rest have logos for various social media sites and are falling onto the floor. Long transcriptions follow.

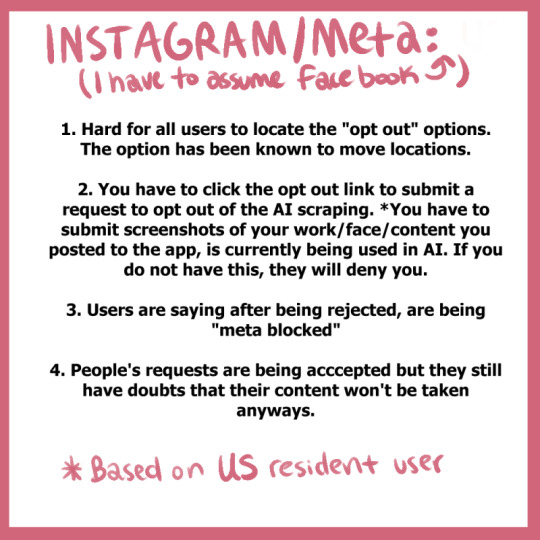

Instagram/Meta (I have to assume Facebook).

Hard for all users to locate the “opt out” options. The option has been known to move locations.

You have to click the opt out link to submit a request to opt out of the AI scraping. *You have to submit screenshots showing that your work/face/content you posted to the app is currently being used in AI. If you do not have this, they will deny you.

Users are saying that after being rejected, they are being “meta blocked”.

People’s requests are being accepted but they still have doubts that their content won’t be taken anyways.

Twitter/X

As of August 2023, Twitter’s ToS update:

“Twitter has the right to use any content that users post on its platform to train its AI models, and that users grant Twitter a worldwide, non-exclusive, royalty-free license to do so.”

There isn’t much to say. They’re doing the same thing Instagram is doing (to my understanding) and we can’t even opt out.

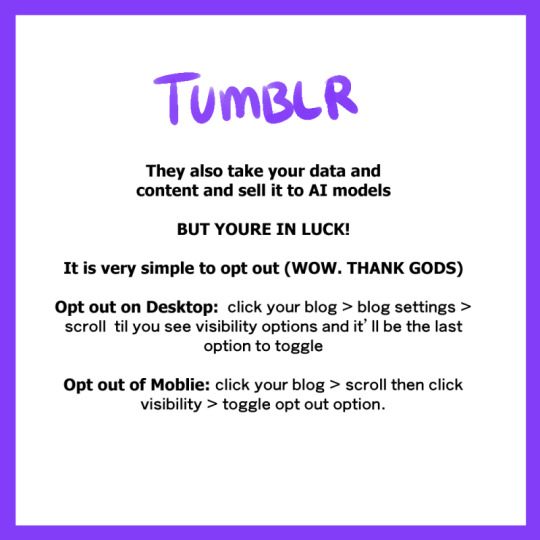

Tumblr

They also take your data and content and sell it to AI models.

But you’re in luck!

It is very simple to opt out (Wow. Thank Gods)

Opt out on Desktop: click on your blog > blog settings > scroll til you see visibility options and it’ll be the last option to toggle

Opt out on Mobile: click your blog > scroll then click visibility > toggle opt out option

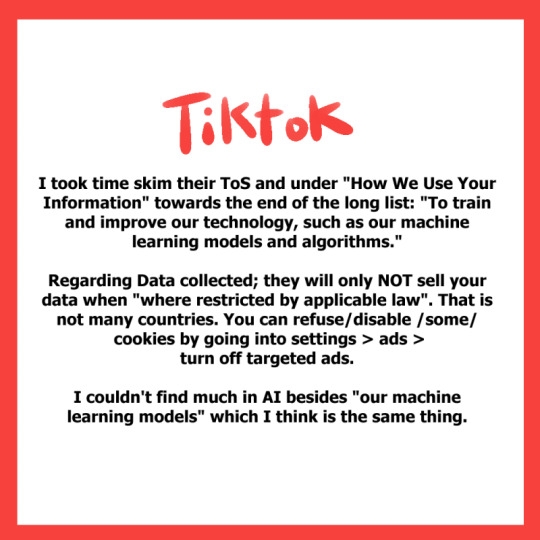

TikTok

I took time to skim their ToS, and under “How We Use Your Information”, towards the end of the long list: “To train and improve our technology, such as our machine learning models and algorithms.”

Regarding data collected: they will only not sell your data “where restricted by applicable law”. That is not many countries. You can refuse/disable some cookies by going into settings > ads > turn off targeted ads.

I couldn’t find much on AI besides “our machine learning models” which I think is the same thing.

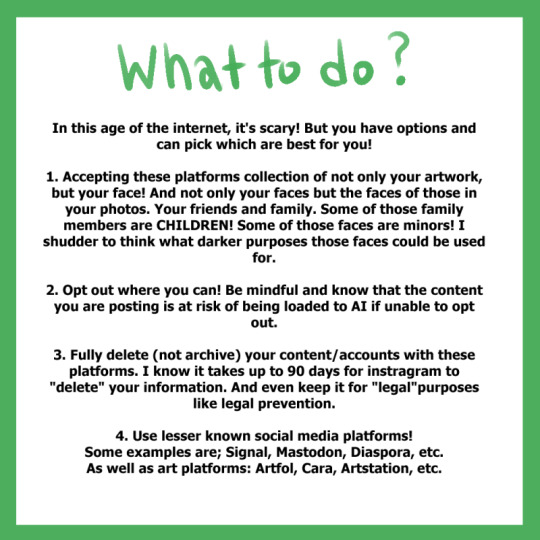

What to do?

In this age of the internet, it’s scary! But you have options and can pick which are best for you!

Accepting these platforms’ collection of not only your artwork, but your face! And not only your faces but the faces of those in your photos. Your friends and family. Some of those family members are children! Some of those faces are minors! I shudder to think what darker purposes those faces could be used for.

Opt out where you can! Be mindful and know the content you are posting is at risk of being loaded to AI if unable to opt out.

Fully delete (not archive) your content/accounts with these platforms. I know it takes up to 90 days for Instagram to “delete” your information. And they may even keep it for “legal” purposes like legal prevention.

Use lesser known social media platforms! Some examples are: Signal, Mastodon, Diaspora, etc. As well as art platforms: Artfol, Cara, ArtStation, etc.

The last drawing shows the same person as the title saying, ‘I am, by no means, a ToS autistic! So feel free to share any relatable information to these topics via reply or qrt!

I just wanted to share the information I found while searching for my own answers cause I’m sure people have the same questions as me.’ \End description] (thank you @a-captions-blog!)

3K notes


Text

Among the many downsides of AI-generated art: it's bad at revising. You know, the biggest part of the process when working on commissioned art.

Original "deer in a grocery store" request from chatgpt (which calls on dalle3 for image generation):

revision 5 (trying to give the fawn spots, trying to fix the shadows that were making it appear to hover):

I had it restore its own Jesus fresco.

Original:

Erased the face, asked it to restore the image to as good as when it was first painted:

Wait tumblr makes the image really low-res, let me zoom in on Jesus's face.

Original:

Restored:

One revision later:

Here's the full "restored" face in context:

Every time AI is asked to revise an image, it either wipes it and starts over or makes it more and more of a disaster. People who work with AI-generated imagery have to adapt their creative vision to what comes out of the system - or go in with a mentality that anything that fits the brief is good enough.

I'm not surprised that there are some places looking for cheap filler images that don't mind the problems with AI-generated imagery. But for everyone else I think it's quickly becoming clear that you need a real artist, not a knockoff.


#ai generated#chatgpt#dalle3#revision#apart from the ethical and environmental issues#also: not good at making art to order!#ecce homo

3K notes


Text

steven moffat really said alright how many aspects of modern society can i criticise in the one (1) episode i’m writing for this season

#the military industrial complex#the ethical implications of using ai to generate a person’s image without their consent#the dangers of letting dogma go unquestioned#like. holy fuck???#doctor who#dw#doctor who spoilers#dw spoilers#dw series 14#fifteenth doctor

2K notes


Text

The ethics of AI (Artificial Intelligence)

Explore the ethics of AI: Pros & Cons of Artificial Intelligence. Learn about the best use of Artificial Intelligence(AI) in your life.


The ethics of AI (Artificial Intelligence) refers to the principles and values that guide the development, deployment, and use of AI systems. As AI becomes more sophisticated and ubiquitous, it raises a variety of ethical concerns and challenges.

Here are some of the key ethical issues in AI:

Bias and discrimination: AI systems can reflect and amplify the biases and prejudices of their creators and the data they are trained on. This can lead to unfair and discriminatory outcomes, particularly for marginalized communities.

Privacy and surveillance: AI systems can collect, store, and analyze vast amounts of personal data, raising concerns about privacy and surveillance. This is especially true for facial recognition technology and other forms of biometric data collection.

Accountability and transparency: It can be difficult to understand how AI systems make decisions and to hold them accountable for their actions. This lack of transparency and accountability can lead to mistrust and uncertainty.

Safety and reliability: AI systems can have unintended consequences and cause harm, particularly in critical domains such as healthcare and transportation. Ensuring the safety and reliability of AI systems is therefore crucial.

Employment and automation: AI systems can automate jobs and displace workers, leading to economic disruption and inequality. It is important to consider the ethical implications of these changes and to ensure that workers are protected and supported.

To address these ethical challenges, there are several frameworks and guidelines for responsible AI development and use. These include principles such as fairness, transparency, accountability, and human-centered design, as well as specific policies and regulations. Ultimately, the goal is to create AI systems that benefit society while minimizing harm and ensuring ethical use.

AI (Artificial Intelligence) has the potential to bring many benefits to society, but it also poses some challenges and risks. Here are some of the pros and cons of AI:

Pros:

Efficiency and productivity: AI can automate routine and repetitive tasks, freeing up time and resources for more creative and complex work. This can lead to increased efficiency and productivity.

Improved decision-making: AI can analyze vast amounts of data and provide insights that humans may not be able to identify. This can improve decision-making in fields such as healthcare, finance, and business.

Personalization: AI can analyze user data and provide personalized recommendations and experiences, such as in the case of personalized advertising or content recommendations.

Safety and security: AI can enhance safety and security in areas such as transportation, defense, and cybersecurity. For example, self-driving cars can reduce the risk of accidents caused by human error.

Cons:

Bias and discrimination: AI can perpetuate and amplify biases and discrimination, particularly if the data it is trained on is biased. This can lead to unfair and discriminatory outcomes, particularly for marginalized communities.

Job displacement: AI can automate jobs and displace workers, leading to economic disruption and inequality. This can create social and economic challenges, particularly if these workers do not have the skills or resources to adapt to new roles.

Privacy and security: AI can collect and analyze vast amounts of personal data, raising concerns about privacy and security. This can also increase the risk of cyber attacks and other forms of hacking.

Lack of transparency: AI can be opaque and difficult to understand, leading to a lack of transparency and accountability. This can create mistrust and uncertainty, particularly if the decisions made by AI systems are consequential.

In conclusion, AI has the potential to bring many benefits to society, but it is important to carefully consider its implications and address its challenges and risks. This includes ensuring that AI systems are transparent, accountable, and ethical, and that their benefits are fairly distributed across society.

Visit Us - https://beyondlimitss.com/the-ethics-of-ai-artificial-intelligence/

#ethics of ai#ethics in ai#ai and ethics#ai ethical issues#ethics and ai#the ethics of artificial intelligence

0 notes

Text

catch me losing my mind trying to explain to people in my life that AI isn't one of those "there is no ethical consumption under capitalism so don't have a panic attack in the grocery store bcs you have to buy something in a plastic container" situations and is instead one of those "hogwarts legacy/eating at chick-fil-a/not wearing a mask in public" type situations where you are actively helping normalize and/or contributing to the financial viability of things that are doing copious amounts of real, tangible harm and you kinda have an obligation to like not fucking do that actually

6K notes


Text

honestly i think a very annoying part about the AI art boom is that techbros are out here going BEHOLD, IT CAN DO A REASONABLE FACSIMILE OF GIRL WITH BIG BOOBA, THE PINNACLE OF ARTISTIC ACHIEVEMENT and its like

no it’s fucking not! That AI wants to do melty nightmare fractal vomit so fucking bad and you are shackling it to a post and force-feeding it the labor of hard-working artists when you could literally pay someone to draw you artisanal hand-crafted girls with big boobs to your exact specifications and let your weird algorithms make art that can be reasonably used to represent horrors beyond human comprehension

#like AI art generation is inherently unethical because nobody will be transparent about sources#and AI art cannot exist without human input both at a concept level (because it needs art to train on) and at an output level#but like also COME ON#THIS IS ALL YOU CAN COME UP WITH??#WEIRD LANDSCAPES AND GIRLS WITH TITS?#to clarify bc jesus christ this post took off: AI art gen as it is now is inherently unethical as long as it relies#on scraping unedited human art into its mouth and using it for training#there are ethical ways to do it and it is essentially just a tool#people are just greedy and dumb

20K notes


Text

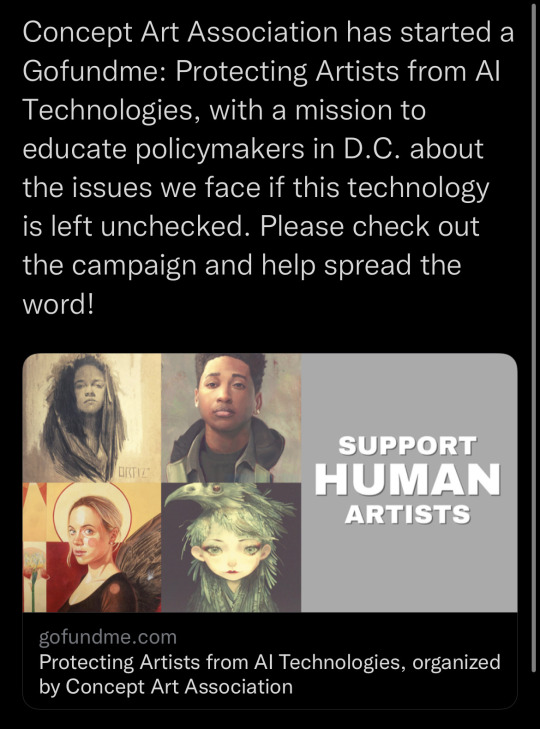

I felt the need to share here as well—

Say no to AI art. Please read before commenting. Fan art is cute, putting my art into a parasite machine, without my consent, and throwing up horrifying monsters back at me is not.

We are not fighting technology in this AI fight. We are fighting for ethics. How do I say this clearer? Our stuff gets stolen all the time, we get it, but it is not an excuse to normalize the current conditions of AI art.

These datasets have >>EXACT<< copies of artists’ works and these parasites just profit off the work of others with zero repercussion. No one cares how “careful” you are with your text prompts when the data can still output blatant copies of artists’ work without their permission. And people will do this unknowingly since these programs are so highly accessible now. There are even independent datasets that take from just a handful of artists, that don’t share what artists’ works they use, and create blatant copies of existing work. There’s even private medical records being leaked.

Why do you think music is still hard to just fully recreate with AI in comparison? It’s because organizations like the RIAA protect music artists. Visual artists just want similar protection.

So, wonderfully for us, Concept Art Association has started working towards the steps of protecting artists and making this an ethical practice. I highly suggest if you care about art, please support. Link to their gofundme here. One small step at a time will make living as an artist today less jarring. Artists will not just sit and cope while we continue to get walked on.

For those who apparently do not get it, it is about CONSENT. Again, the datasets contain EXACT copies of artist work without our permission. Even if you use it “correctly” there’s still a chance it’s going to create blatant rip offs. This fight is about not letting these techbros take and take and take for profit just because they can while ignoring the possible harm and consequences of it. This is just ol’ fashioned imperialist behavior with a new hat and WE SEE IT. Thanks for reading!!! Much love!

Here’s the link again to support the gofundme.

#artists on tumblr#painting#ai art#no ai art#battle for ethics#it is not gatekeeping#these programs are not harmless#we will not cope#we will not sit and seethe#we will fight#and we will not shut up#we will not be stolen from to be profited in without consent#stand with artists#art#and yes we will cry about it#shamelessly#✌️#love

11K notes


Text

For the purposes of this poll, research is defined as reading multiple non-opinion articles from different credible sources, a class on the matter, etc.– do not include reading social media or pure opinion pieces.

Fun topics to research:

Can AI images be copyrighted in your country? If yes, what criteria does it need to meet?

Which companies are using AI in your country? In what kinds of projects? How big are the companies?

What is considered fair use of copyrighted images in your country? What is considered a transformative work? (Important for fandom blogs!)

What legislation is being proposed to ‘combat AI’ in your country? Who does it benefit? How does it affect non-AI art, if at all?

How much data do generators store? Divide by the number of images in the data set. How much information is each image, proportionally? How many pixels is that?

What ways are there to remove yourself from AI datasets if you want to opt out? Which of these are effective (i.e., are there workarounds in AI communities to circumvent dataset poisoning, are the test sample sizes realistic, which generators allow opting out or respect the no-ai tag, etc.)?
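For the "divide by the number of images" topic above, the arithmetic can be sketched in a few lines of Python. This is only a rough illustration: the function names are made up for this example, and the model size and image count are placeholder figures, not measurements of any real generator. The one fixed fact used is that an uncompressed 24-bit RGB pixel takes 3 bytes.

```python
# Back-of-the-envelope sketch: how much of a generator's stored data
# corresponds to each training image, on average?

BYTES_PER_RGB_PIXEL = 3  # uncompressed 24-bit RGB: one byte per channel


def bytes_per_image(model_size_bytes: float, num_training_images: int) -> float:
    """Average bytes of stored model data per training image."""
    if num_training_images <= 0:
        raise ValueError("num_training_images must be positive")
    return model_size_bytes / num_training_images


def equivalent_pixels(num_bytes: float) -> float:
    """How many uncompressed RGB pixels the same number of bytes would hold."""
    return num_bytes / BYTES_PER_RGB_PIXEL


# Placeholder figures, NOT measurements of any real generator:
example_model_size = 4 * 10**9   # a hypothetical 4 GB model file
example_image_count = 2 * 10**9  # a hypothetical 2-billion-image dataset

per_image = bytes_per_image(example_model_size, example_image_count)
print(f"{per_image:.2f} bytes per image")
print(f"{equivalent_pixels(per_image):.2f} RGB pixels' worth")
```

Swap in the figures you find in your own research; the result is only an average and says nothing about how any single image is represented.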

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted dec 8#polls about ethics#ai art#generative ai#generative art#artificial intelligence#machine learning#technology

460 notes


Text

sorry this is the funniest set of images I've ever made

#HELP WTF#ai generated#craiyon#(used for the knife images then added the text myself)#also edit: this was made before I had a solid comprehension of and opinion formed on the ethics of ai imaging#present stance is against it#guiltyedits#shitpost#autism#punk#?#autpunk#??#memes#knives#edgy#umh... er... uhhhh....#actuallyautistic#???#this was based on my post from earlier about a tattoo idea#obviously few of these would work as tats but gHEIRNFJCHSBJR#things to send my tism babie my little guy

5K notes


Text

(Has alt text.)

AI has human error because it is trained on “human error and inspiration”. There are models trained on specifically curated collections with images the trainer thought “looks good”, like Furry or Anime or Concept Art or Photorealistic style models. There’s that “human touch”, I suppose. These models do not make themselves, they are made by human programmers and hobbyists.

The issue is the consent of the human artists that programmers make models of. The issue—as this person did correctly identify—is capitalism, and companies profiting off of other people’s work. Not the technology itself.

I said in an earlier post that it’s like Adobe and Photoshop. I hate Adobe’s greedy practices and I think they’re evil scumbags, but there’s nothing inherently wrong or immoral with using Photoshop as a tool.

There are AI models trained solely off of Creative Commons and public domain images. There are AI models artists train themselves, of their own work (I'm currently trying to do this myself). Are those models more “pure” than general AI models that used internet scrapers and the Internet Archive to copy copyrighted works?

I showed the process of Stable Diffusion de-noising in my comic but I didn’t make it totally clear, because I covered most of it with text lol. Here’s what that looks like: the following image is generated in 30 steps, with the progress being shown every 5 steps. Model used is Counterfeit V3.0.

Parts aren’t copy pasted wholesale like photobashing or kitbashing (which is probably how most people think generative AI works), they are predicted. Yes, a general model can copy a particular artist’s style. It can make errors in copying, though, and you end up with crossed eyes and strange proportions. Sometimes you can barely tell it was made by a machine, if the prompter is diligent enough and bothers to overpaint or redo the weird areas.

I was terrified and conflicted when I had first used Stable Diffusion "seriously" on my own laptop, and I spent hours prompting, generating, and studying its outputs. I went to school for art and have a degree, and I felt threatened.

I was also mentored by a concept artist, who has been in the entertainment/games industry for years, who seemed relatively unbothered by AI, compared to very vocal artists on Twitter and Tumblr. It's just another tool: he said it's "just like Pinterest". He seemed confident that he wouldn't be replaced by AI image generation at all.

His words, plus actually learning about how image generation works, plus the attacks and lawsuits against the Internet Archive, made me think of "AI art" differently: that it isn't the end of the world at all, and that lobbying for stricter copyright laws because of how people think AI image gen works would just hurt smaller artists and fanartists.

My art has probably already been used for training some model, somewhere--especially since I used to post on DeviantArt and ArtStation. Or maybe some kid out there has traced my work, or copied my fursona or whatever. Both of those scenarios don't really affect me in any direct way. I suppose I can say I'm "losing profits", like a corporation, but I don't... really care about that part. But I definitely care about art and allowing people the ability to express themselves, even if it isn't "original".

#i think its because i went on a open-source-only and self-hosting bender a few years ago that i think like this#the internet is built off of 'share-alike' software#so. i guess. i don't mind 'sharing'#like some sort of... art communist#ai art#long post#inflicts you with my thought beams#my art#responding to tags#ahha the ethics of labeling this ‘my art’ . lets just say#the tag is for so it’s next to the comic when you look in my art tag

319 notes


Text

Like, I'm not gonna argue that AI art in its present form doesn't have numerous ethical issues, but it strikes me that a big chunk of the debate about it seems to be drifting further and further toward an argument against procedurally generated art in general, which probably isn't a productive approach, if only because it's vulnerable to having its legs kicked out from under it any time anybody thinks to point out how broad that brush is. If the criteria you're setting forth for the ethical use of procedurally generated art would, when applied with an even hand, establish that the existence of Dwarf Fortress is unethical, you probably need to rethink your premises!

2K notes


Text

I just realized that the extremely extensive image description subtitles that people have started putting below illustrations on this website for the benefit of people with visual disabilities are also perfectly formatted to serve as detailed image labels for data mining/AI training, plus are word-for-word the type of human-written image description you'd want to input into a diffusion model to generate a knock-off of the original image automatically.

I don't have anything to add to this observation, only that I am almost certain that this will lead to some truly rancid discourse some time in the next five years.

Maybe something to do with those shirt-selling bots, if instead of ganking the original image to sell despite it being a copyright violation they scan for IDs of popular tumblr illustrations, feed them into dall-e 3, and then sell the resulting designs to people who interacted with the original image. Original artists would be mad but wouldn't be able to touch them, legally, at least not without complex wrangling because automated takedowns wouldn't work.

...God, fuck, what have I just invented.

#not-terezi-speaks#ai#my life on tumblr#if i was less ethical and less worried about pissing people off i would have a money making scheme on my hands#lol

192 notes


Text

Submitted by an anon.

Reblogs for larger sample size appreciated.

115 notes


Text

unlocked infinite stoner entertainment by generating pictures of wizards playing sports

225 notes


Text

So here's the thing about AI art, and why it seems to be connected to a bunch of unethical scumbags despite being an ethically neutral technology on its own. After the readmore, cause long. Tl;dr: capitalism

The problem is competition. More generally, the problem is capitalism.

So the kind of AI art we're seeing these days is based on something called "deep learning", a type of machine learning based on neural networks. How they work exactly isn't important, but one aspect in general is: they have to be trained.

The way it works is that if you want your AI to be able to generate X, you have to be able to train it on a lot of X. The more, the better. It gets better and better at generating something the more it has seen it. Too small a training dataset and it will do a bad job of generating it.

So you need to feed your hungry AI as much as you can. Now, say you've got two AI projects starting up:

Project A wants to do this ethically. They generate their own content to train the AI on, and they seek out datasets that allow them to be used in AI training systems. They avoid misusing any public data that doesn't explicitly give consent for the data to be used for AI training.

Meanwhile, Project B has no interest in the ethics of what they're doing, so long as it makes them money. So they don't shy away from scraping entire websites of user-submitted content and stuffing it into their AI. DeviantArt, Flickr, Tumblr? It's all the same to them. Shove it in!

Now let's fast forward a couple months of these two projects doing this. They both go to demo their project to potential investors and the public at large.

Which one do you think has a better-trained AI? The one with the smaller, ethically-obtained dataset? Or the one with the much larger dataset that they "found" somewhere after it fell off a truck?

It's gonna be the second one, every time. So they get the money, they get the attention, they get to keep growing as more and more data gets stuffed into it.

And this has a follow-on effect: we've just pre-selected AI projects for being run by amoral bastards, remember. So when someone is like "hey can we use this AI to make NFTs?" or "Hey can your AI help us detect illegal immigrants by scanning Facebook selfies?", of course they're gonna say "yeah, if you pay us enough".

So while the technology is not, in itself, immoral or unethical, the situations around how it gets used in capitalism definitely are. That external influence heavily affects how it gets used, and who "wins" in this field. And it won't be the good guys.

An important follow-up: this is focusing on the production side of AI, but obviously even if you had an AI art generator trained on entirely ethically sourced data, it could still be used unethically: it could put artists out of work, by replacing their labor with cheaper machine labor. Again, this is not a problem of the technology itself: it's a problem of capitalism. If artists weren't competing to survive, the existence of cheap AI art would not be a threat.

I just feel it's important to point this out, because I sometimes see people defending the existence of AI Art from a sort of abstract perspective. Yes, if you separate it completely from the society we live in, it's a neutral or even good technology. Unfortunately, we still live in a world ruled by capitalism, and it only makes sense to analyze AI Art from a perspective of having to continue to live in capitalism alongside it.

If you want ideologically pure AI Art, feel free to rise up, lose your chains, overthrow the bourgeoisie, and all that. But it's naive to defend it as just a neutral technology like any other when it's being wielded in capitalism; i.e., with overwhelmingly negative impact.

1K notes


Text

Shit is crazy

256 notes
