#iOT

In the fall of 2020, gig workers in Venezuela posted a series of images to online forums where they gathered to talk shop. The photos were mundane, if sometimes intimate, household scenes captured from low angles, including some you really wouldn’t want shared on the Internet.

In one particularly revealing shot, a young woman in a lavender T-shirt sits on the toilet, her shorts pulled down to mid-thigh.

The images were not taken by a person, but by development versions of iRobot’s Roomba J7 series robot vacuum. They were then sent to Scale AI, a startup that contracts workers around the world to label audio, photo, and video data used to train artificial intelligence.

They were the sorts of scenes that internet-connected devices regularly capture and send back to the cloud—though usually with stricter storage and access controls. Yet earlier this year, MIT Technology Review obtained 15 screenshots of these private photos, which had been posted to closed social media groups.

The photos vary in type and in sensitivity. The most intimate image we saw was the series of video stills featuring the young woman on the toilet, her face blocked in the lead image but unobscured in the grainy scroll of shots below. In another image, a boy who appears to be eight or nine years old, and whose face is clearly visible, is sprawled on his stomach across a hallway floor. A triangular flop of hair spills across his forehead as he stares, with apparent amusement, at the object recording him from just below eye level.

iRobot (the world’s largest vendor of robotic vacuums, which Amazon agreed to acquire for $1.7 billion in a pending deal) confirmed that these images were captured by its Roombas in 2020.

Ultimately, though, this set of images represents something bigger than any one individual company’s actions. They speak to the widespread, and growing, practice of sharing potentially sensitive data to train algorithms, as well as the surprising, globe-spanning journey that a single image can take—in this case, from homes in North America, Europe, and Asia to the servers of Massachusetts-based iRobot, from there to San Francisco–based Scale AI, and finally to Scale’s contracted data workers around the world (including, in this instance, Venezuelan gig workers who posted the images to private groups on Facebook, Discord, and elsewhere).

Together, the images reveal a whole data supply chain—and new points where personal information could leak out—that few consumers are even aware of.

#politics#james baussmann#scale ai#irobot#amazon#roomba#privacy rights#colin angle#privacy#data mining#surveillance state#mass surveillance#surveillance industry#1st amendment#first amendment#1st amendment rights#first amendment rights#ai#artificial intelligence#iot#internet of things

5K notes

in this house we stan noah

#total drama fanart#tdi noah#total drama island#total drama noah#island of the slaughtered#iot#HATSUNE NOAH?????/#tdi fanart#td noah#total dramarama

203 notes

Modern software development be like: I wrote 10 lines of code to call an API that calls another API, which calls yet another API that finally turns on a lightbulb. Pray that Cloudflare or AWS will not be down during this operation; otherwise, there will be no light for you.

113 notes

#memes#meme#throwback#lol#funny#lol memes#funny memes#funny meme haha#funny stuff#alexa#google#ai#the robots#they can talk to each other#compliment#complimenting strangers#so cute#cute#lmao#spy devices#iot#iotsolutions

64 notes

#robot#robotics#robots#technology#art#engineering#arduino#d#electronics#transformers#mecha#tech#toys#anime#robotic#scifi#gundam#ai#drawing#artificialintelligence#digitalart#innovation#illustration#electrical#automation#robotica#diy#design#arduinoproject#iot

28 notes

playing with my m5 cardputer, wrote a software audio mixing library in micropython and made a crude sequencer with it

16 notes

My electric toothbrush has Bluetooth and I can't think of a good reason to connect this to my phone. Does it just want to know which anime tiddys I've seen (all of them)

29 notes

April 15, 2024

Not quite the future of toothbrushing I wanted

For a week now I've been using a new electric toothbrush, an Oral-B iO 4N. What mattered most to me when choosing it was the round brush head: unlike my previous electric toothbrush, whose head was twice as long and merely vibrated, this one gives me the feeling that I'm really brushing around every single tooth.

However, I had read in the instructions (that's me, I read instruction manuals) that it has several brushing programs; the button for selecting them is easy to find (the one button that doesn't switch it on and off). But I can't tell which program is currently active. Apparently I need the smartphone app for that, which connects to the toothbrush via Bluetooth.

I installed the app quickly and paired the toothbrush with it reasonably quickly, but while I'm still clicking around in it looking for the brushing programs, the app wants to download and install new firmware for the toothbrush. Fine by me, I'm used to that from apps. Except it doesn't work: the app aborts three attempts.

I eventually find the four programs in the app (which is mostly geared toward gamification, lets you reach brushing goals and hands out gold stars for them, although I've been past the age where I need incentives to brush my teeth for several decades now), but no indication of which one is currently active on my toothbrush: all I can do is change the order in which the button on the device cycles through them.

In the end, I use the "reset to factory settings" function, because the manual named the default setting at purchase ("daily clean").

This is not quite the future of toothbrushing I had ordered.

(die Kaltmamsell)

8 notes

Double your Wi-Fi, double your fun, with two ESP32 Trinkeys 🔧🔌🖥️

These two ESP32 Trinkeys look similar, but they're not the same! Yes, both plug into USB A ports, both have reset and user buttons, a NeoPixel and red LED, plus a STEMMA QT I2C port and 3-pin JST SH for analog/digital/PWM. They both contain a CH343P + dual NPN FET for auto-reset circuitry… but one has an ESP32 Pico N8R2 (https://www.digikey.com/short/9ppmnh07) and the other is an ESP32 Mini N4 (https://www.digikey.com/short/31m1drf8). The first module is smaller and contains 8 MB of QSPI flash + 2 MB of PSRAM. The second is larger but only has 4 MB of flash and no PSRAM. Why have both? Because the Mini is significantly less expensive, $2.30 vs $3.50, a difference that adds $3 to the final price. For CircuitPython usage, the PSRAM is essential, but for plain C/C++ development, you can get away without it. Either way, these could be handy little boards for creating sensor nodes that plug into a wall outlet, with easy extensibility for I2C or analog/digital/PWM I/O!

#adafruit#esp32#neopixel#trinkey#usb#wifi#dualtrinkeys#iot#sensors#circuitpython#developer#electronics#usbports#i2cconnectivity#diyprojects#makercommunity

4 notes

Discover the Basics of the Raspberry Pi Along with Multiple Projects

The Raspberry Pi has become incredibly popular among computer hobbyists and businesses for a variety of reasons. It consumes very little power, is portable, has solid-state storage, makes no noise, and offers expansion capabilities, all at a very low price.

3 notes

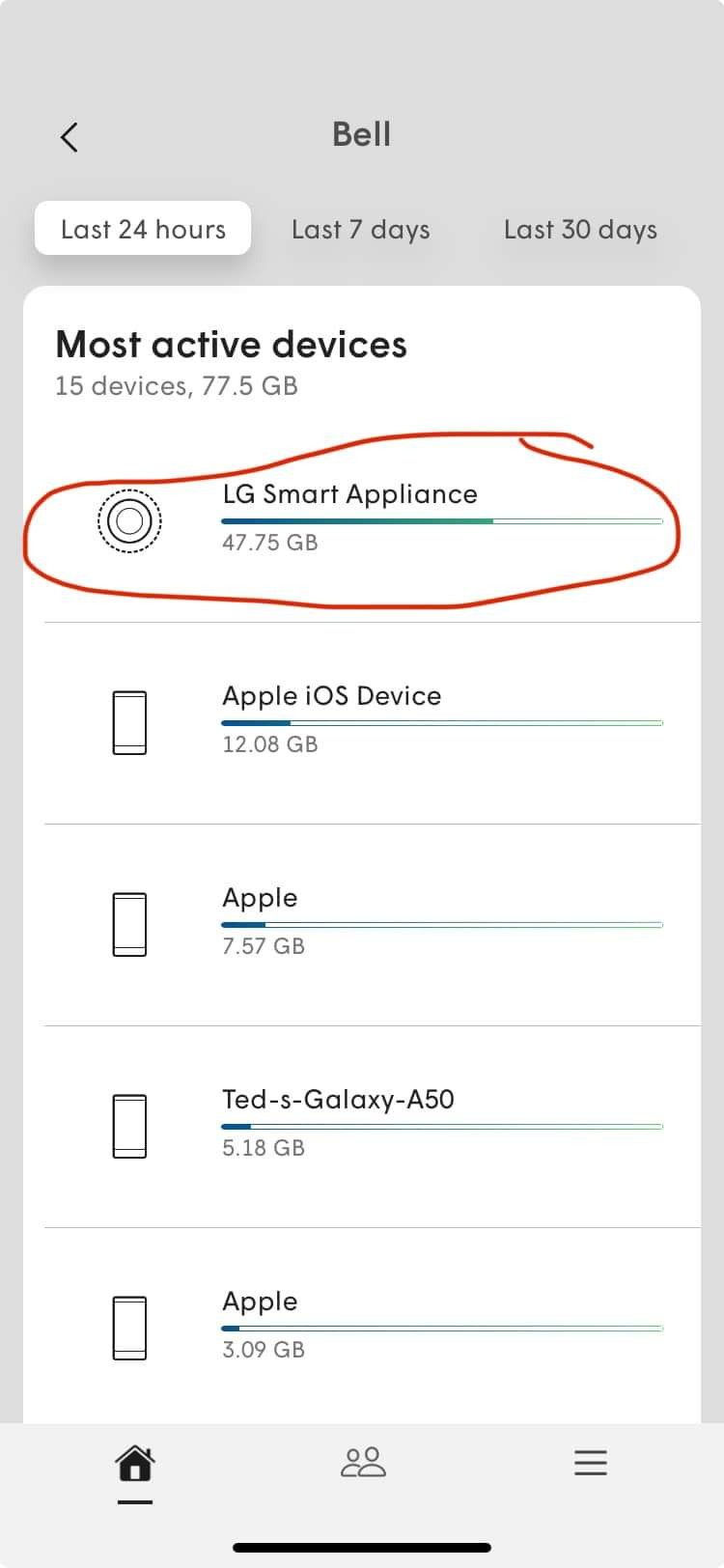

Wtf is my washing machine doing?! 😂

23 notes

things normal people do: set up a separate wifi network just for problematic internet of trash devices that don't like modern 5 GHz networks.

61 notes

Denmark: A significant healthtech hub

- By InnoNurse Staff -

According to data platform Dealroom, Danish healthtech firms raised a stunning $835 million in 2023, an 11% rise over the previous record set in 2021.

Read more at Tech.eu

///

Other recent news and insights

A 'smart glove' could improve the hand movement of stroke sufferers (The University of British Columbia)

Oxford Medical Simulation raises $12.6 million in Series A funding to address the significant healthcare training gap through virtual reality (Oxford Medical Simulation/PRNewswire)

PathKeeper's innovative camera and AI software for spinal surgery (PathKeeper/PRNewswire)

Ezdehar invests $10 million in Yodawy to acquire a minority stake in the Egyptian healthtech (Bendada.com)

#denmark#startups#innovation#smart glove#stroke#neuroscience#iot#Oxford Medical Simulation#health tech#digital health#medtech#education#pathkeeper#ai#computer vision#surgery#ezdehar#yodawy#egypt#mena

13 notes

Mastering Neural Networks: A Deep Dive into Combining Technologies

How Can Two Trained Neural Networks Be Combined?

Introduction

In the ever-evolving world of artificial intelligence (AI), neural networks have emerged as a cornerstone technology, driving advancements across various fields. But have you ever wondered how combining two trained neural networks can enhance their performance and capabilities? Let’s dive deep into the fascinating world of neural networks and explore how combining them can open new horizons in AI.

Basics of Neural Networks

What is a Neural Network?

Neural networks, inspired by the human brain, consist of interconnected nodes or "neurons" that work together to process and analyze data. These networks can identify patterns, recognize images, understand speech, and even generate human-like text. Think of them as a complex web of connections where each neuron contributes to the overall decision-making process.

How Neural Networks Work

Neural networks function by receiving inputs, processing them through hidden layers, and producing outputs. They learn from data by adjusting the weights of connections between neurons, thus improving their ability to predict or classify new data. Imagine a neural network as a black box that continuously refines its understanding based on the information it processes.
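The idea of learning by adjusting connection weights can be sketched with a single artificial neuron. The example below is a minimal, assumed setup in plain Python: one sigmoid neuron trained with gradient descent on a toy OR dataset. The function names, learning rate, and epoch count are illustrative choices, not taken from any framework.

```python
import math
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    """Squash a weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights: List[float], bias: float, x: Sequence[float]) -> float:
    """Forward pass: weighted sum of the inputs plus a bias, then the activation."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(data: List[Tuple[List[float], int]], epochs: int = 2000, lr: float = 0.5):
    """Learn the weights by gradient descent on the cross-entropy loss."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in data:
            # For a sigmoid output with cross-entropy loss, dLoss/dz = prediction - target.
            error = predict(weights, bias, x) - target
            # Move each weight a small step against its gradient.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Toy task: learn logical OR from four examples.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train(OR_DATA)
for x, target in OR_DATA:
    print(x, "target:", target, "prediction:", round(predict(w, b, x), 3))
```

Real networks stack many such neurons in layers and compute the gradients with backpropagation, but each weight update follows the same "nudge against the error" pattern.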

Types of Neural Networks

From simple feedforward networks to complex convolutional and recurrent networks, neural networks come in various forms, each designed for specific tasks. Feedforward networks are great for straightforward tasks, while convolutional neural networks (CNNs) excel in image recognition, and recurrent neural networks (RNNs) are ideal for sequential data like text or speech.

Why Combine Neural Networks?

Advantages of Combining Neural Networks

Combining neural networks can improve accuracy and generalization, because independently trained models tend to make partly different errors, and those errors can cancel out when their outputs are combined. Think of it as assembling a team where each member brings unique skills to tackle complex problems.

Applications in Real-World Scenarios

In real-world applications, combined neural networks are used in fields like healthcare, finance, and autonomous systems. For example, in medical diagnostics, combining networks can improve the accuracy of disease detection, while in finance, it can improve forecasts of market trends.

Methods of Combining Neural Networks

Ensemble Learning

Ensemble learning involves training multiple neural networks and combining their predictions to improve accuracy. This approach reduces the risk of overfitting and enhances the model's generalization capabilities.
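In its simplest form, an ensemble averages the outputs of several trained models. A minimal sketch, assuming each model returns a list of class probabilities; the three "models" here are hypothetical stand-ins with hard-coded outputs, not real networks.

```python
from typing import Callable, List, Sequence

Model = Callable[[Sequence[float]], List[float]]

def ensemble_predict(models: List[Model], x: Sequence[float]) -> List[float]:
    """Average the per-class probabilities produced by each model."""
    outputs = [m(x) for m in models]
    n_classes = len(outputs[0])
    return [sum(out[c] for out in outputs) / len(outputs) for c in range(n_classes)]

def argmax(values: List[float]) -> int:
    """Index of the largest value, i.e. the predicted class."""
    return max(range(len(values)), key=values.__getitem__)

# Hypothetical trained models for a 3-class problem (they ignore x for brevity).
model_a: Model = lambda x: [0.6, 0.3, 0.1]
model_b: Model = lambda x: [0.2, 0.5, 0.3]
model_c: Model = lambda x: [0.3, 0.4, 0.3]

probs = ensemble_predict([model_a, model_b, model_c], [1.0, 2.0])
print(probs, "-> class", argmax(probs))
```

On its own, model_a would choose class 0, but the averaged probabilities favor class 1. This smoothing out of individual models' mistakes is where the ensemble's reduced variance comes from.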

Bagging

Bagging, or Bootstrap Aggregating, trains multiple versions of a model on different bootstrap samples of the training data (random samples of the same size, drawn with replacement) and combines their predictions by averaging or majority vote. This method is simple yet effective in reducing variance and improving model stability.
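Bagging can be sketched in a few lines of plain Python. To keep the example self-contained, the base model is a hypothetical one-feature threshold classifier (a "decision stump") rather than a neural network; the resampling and voting logic stays the same whatever the base model is.

```python
import random
from collections import Counter
from typing import Callable, List, Tuple

Sample = Tuple[float, int]  # (feature value, label 0 or 1)

def fit_stump(samples: List[Sample]) -> Callable[[float], int]:
    """Hypothetical weak learner: choose the threshold (from the sample's own
    feature values) that misclassifies the fewest points; predict 1 when x >= threshold."""
    best_t, best_err = samples[0][0], len(samples) + 1
    for t, _ in samples:
        err = sum((x >= t) != (y == 1) for x, y in samples)
        if err < best_err:
            best_t, best_err = t, err
    return lambda x: int(x >= best_t)

def bagging(data: List[Sample], n_models: int = 15, seed: int = 0) -> Callable[[float], int]:
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: same size as the data, drawn with replacement.
        bootstrap = [rng.choice(data) for _ in data]
        models.append(fit_stump(bootstrap))

    def predict(x: float) -> int:
        # Majority vote; with two labels and an odd n_models there are no ties.
        return Counter(m(x) for m in models).most_common(1)[0][0]

    return predict

# Toy 1-D data: label 1 roughly when x > 5, plus two noisy points (4.5 and 5.5).
DATA = [(1, 0), (2, 0), (3, 0), (4, 0), (4.5, 1), (5.5, 0), (6, 1), (7, 1), (8, 1), (9, 1)]
model = bagging(DATA)
for x in [0, 3, 5, 7, 10]:
    print(x, model(x))
```

Each stump sees a slightly different resample, so the noisy points pull individual thresholds in different directions; the vote averages those fluctuations out.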

Boosting

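Boosting trains models sequentially, with each new model concentrating on the examples its predecessors got wrong (for instance, by giving those examples more weight). The final prediction is a weighted combination of all the models, which can turn a series of weak learners into a strong combined model, even on difficult tasks.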
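One classic boosting algorithm is AdaBoost. The sketch below is a minimal plain-Python version, assuming labels of -1/+1 and a one-feature threshold stump as a stand-in weak learner (not a neural network); it is meant to show the key mechanism of re-weighting the examples each round, not a production implementation.

```python
import math
from typing import List, Tuple

Stump = Tuple[float, float, int]  # (alpha, threshold, polarity)

def stump_predict(x: float, threshold: float, polarity: int) -> int:
    """Predict `polarity` when x >= threshold, otherwise the opposite label."""
    return polarity if x >= threshold else -polarity

def fit_weighted_stump(xs: List[float], ys: List[int], w: List[float]) -> Tuple[float, float, int]:
    """Weak learner: the (threshold, polarity) pair with the lowest *weighted* error."""
    best = (float("inf"), xs[0], 1)
    for t in xs:
        for polarity in (1, -1):
            err = sum(wi for wi, x, y in zip(w, xs, ys) if stump_predict(x, t, polarity) != y)
            if err < best[0]:
                best = (err, t, polarity)
    return best

def adaboost(xs: List[float], ys: List[int], rounds: int = 10) -> List[Stump]:
    n = len(xs)
    w = [1.0 / n] * n                     # start with uniform example weights
    ensemble: List[Stump] = []
    for _ in range(rounds):
        err, t, pol = fit_weighted_stump(xs, ys, w)
        if err >= 0.5:
            break                         # weak learner no better than chance: stop
        err = max(err, 1e-10)             # guard against log(0) for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, t, pol))
        # Increase the weight of misclassified examples, decrease the rest.
        w = [wi * math.exp(-alpha * y * stump_predict(x, t, pol)) for wi, x, y in zip(w, xs, ys)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble

def boosted_predict(ensemble: List[Stump], x: float) -> int:
    """Sign of the alpha-weighted sum of all stump votes."""
    score = sum(alpha * stump_predict(x, t, pol) for alpha, t, pol in ensemble)
    return 1 if score >= 0 else -1

# Toy data no single threshold can separate: the positives sit in the middle.
XS = [1, 2, 3, 4, 5, 6, 7, 8]
YS = [-1, -1, 1, 1, 1, 1, -1, -1]
model = adaboost(XS, YS, rounds=3)
print([boosted_predict(model, x) for x in XS])
```

No single stump classifies all eight points, but on this toy set three weighted stumps do: two thresholds (at 3 and at 7) plus a constant "always -1" stump that the third round picks once the middle points have lost weight.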

Stacking

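Stacking involves training multiple base models and then a separate "meta-learner" that takes their predictions as input and learns how to combine them. The meta-learner is usually trained on predictions for data the base models were not trained on (a held-out set or out-of-fold predictions), so it learns how much to trust each model rather than memorizing their training-set behavior. Done this way, stacking often outperforms any single base model.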
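A minimal stacking sketch, assuming two already-trained base models that each output P(label = 1) and a logistic-regression meta-learner fitted on held-out data. Both base models are hypothetical stand-ins with hard-coded behavior; the point is that the meta-learner sees only their outputs and learns how much weight each deserves.

```python
import math
from typing import List, Tuple

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, already-trained base models; each returns P(label = 1).
def base_a(x: float) -> float:
    return 0.9 if x > 0 else 0.1   # strong model

def base_b(x: float) -> float:
    return 0.4 if x > 0 else 0.6   # weak model that leans the wrong way

BASE_MODELS = [base_a, base_b]

def meta_features(x: float) -> List[float]:
    """The meta-learner sees only the base models' outputs, not x itself."""
    return [m(x) for m in BASE_MODELS]

def fit_meta_learner(holdout: List[Tuple[float, int]], epochs: int = 1000, lr: float = 0.5):
    """Logistic-regression meta-learner trained on held-out base-model predictions."""
    w = [0.0] * len(BASE_MODELS)
    b = 0.0
    for _ in range(epochs):
        for x, y in holdout:
            f = meta_features(x)
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def stacked_predict(w: List[float], b: float, x: float) -> float:
    f = meta_features(x)
    return sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)

# Held-out data that the base models were (by assumption) not trained on.
HOLDOUT = [(-3, 0), (-2, 0), (-1, 0), (1, 1), (2, 1), (3, 1)]
w, b = fit_meta_learner(HOLDOUT)
print("meta weights:", w)
print(round(stacked_predict(w, b, -5), 3), round(stacked_predict(w, b, 5), 3))
```

The learned weight for base_a ends up larger than the one for base_b, so the meta-learner effectively discounts the weaker model instead of averaging the two blindly.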

Transfer Learning

Transfer learning is a method where a pre-trained neural network is fine-tuned on a new task. This approach is particularly useful when data is scarce, allowing us to leverage the knowledge acquired from previous tasks.

Concept of Transfer Learning

In transfer learning, a model trained on a large dataset is adapted to a smaller, related task. For instance, a model trained on millions of images can be fine-tuned to recognize specific objects in a new dataset.

How to Implement Transfer Learning

To implement transfer learning, we start with a pretrained model, freeze some layers to retain their knowledge, and fine-tune the remaining layers on the new task. This method saves time and computational resources while achieving impressive results.
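The freeze-then-fine-tune pattern can be sketched without any framework. In this minimal example, a hypothetical "pretrained" layer (fixed weights with a ReLU) is reused as a feature extractor, and only a new output layer is trained on a small new task. In real frameworks, freezing is typically done by setting `requires_grad = False` on the pretrained parameters (PyTorch) or `layer.trainable = False` (Keras); the names and numbers below are illustrative assumptions.

```python
import copy
import math
from typing import List, Sequence, Tuple

# Hypothetical "pretrained" layer, standing in for weights learned on an earlier task.
PRETRAINED_W: List[List[float]] = [[1.0, -1.0], [0.5, 0.5]]

def frozen_features(x: Sequence[float]) -> List[float]:
    """Frozen layer: linear map + ReLU, reused as-is. Fine-tuning never updates it."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def head_predict(head_w: List[float], head_b: float, x: Sequence[float]) -> float:
    h = frozen_features(x)
    return sigmoid(sum(w * hi for w, hi in zip(head_w, h)) + head_b)

def fine_tune_head(data: List[Tuple[List[float], int]], epochs: int = 500, lr: float = 0.5):
    """Train only the new output layer (the "head") on top of the frozen features."""
    head_w = [0.0] * len(PRETRAINED_W)
    head_b = 0.0
    for _ in range(epochs):
        for x, y in data:
            h = frozen_features(x)
            err = head_predict(head_w, head_b, x) - y
            # Gradient step for the head only; PRETRAINED_W is left untouched.
            head_w = [w - lr * err * hi for w, hi in zip(head_w, h)]
            head_b -= lr * err
    return head_w, head_b

# Small "new task": label 1 when the first input exceeds the second.
NEW_TASK = [([2.0, 1.0], 1), ([3.0, 1.0], 1), ([1.0, 2.0], 0), ([1.0, 3.0], 0)]
before = copy.deepcopy(PRETRAINED_W)
head_w, head_b = fine_tune_head(NEW_TASK)
print("frozen weights unchanged:", PRETRAINED_W == before)
for x, y in NEW_TASK:
    print(x, y, round(head_predict(head_w, head_b, x), 3))
```

Because the first frozen unit already computes something close to "first input minus second", four examples are enough for the new head to solve the task; that reuse of existing features is the whole appeal of transfer learning.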

Advantages of Transfer Learning

Transfer learning enables quicker training times and improved performance, especially when dealing with limited data. It’s like standing on the shoulders of giants, leveraging the vast knowledge accumulated from previous tasks.

Neural Network Fusion

Neural network fusion involves merging multiple networks into a single, unified model. This method combines the strengths of different architectures to create a more powerful and versatile network.

Definition of Neural Network Fusion

Neural network fusion integrates different networks at various stages, such as combining their outputs or merging their internal layers. This approach can enhance the model's ability to handle diverse tasks and data types.

Types of Neural Network Fusion

There are several types of neural network fusion, including early fusion, where networks are combined at the input level, and late fusion, where their outputs are merged. Each type has its own advantages depending on the task at hand.
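The difference between early and late fusion can be sketched with two hypothetical input types (an "image" feature and two "audio" features). All models and weights below are made-up stand-ins for trained networks: early fusion concatenates the features and feeds one joint model, while late fusion runs each model separately and blends their output probabilities.

```python
import math
from typing import List

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dot(weights: List[float], features: List[float]) -> float:
    return sum(w * f for w, f in zip(weights, features))

# Hypothetical per-modality models (stand-ins for separately trained networks).
def image_model(img: List[float]) -> float:
    return sigmoid(dot([2.0], img) - 1.0)

def audio_model(aud: List[float]) -> float:
    return sigmoid(dot([1.5, 0.5], aud) - 1.0)

# Hypothetical joint model whose weights expect the concatenated feature vector.
JOINT_WEIGHTS = [2.0, 1.5, 0.5]
JOINT_BIAS = -2.0

def early_fusion(img: List[float], aud: List[float]) -> float:
    """Early fusion: concatenate the features first, then one model sees everything."""
    fused = img + aud
    return sigmoid(dot(JOINT_WEIGHTS, fused) + JOINT_BIAS)

def late_fusion(img: List[float], aud: List[float], w_img: float = 0.5) -> float:
    """Late fusion: run each model separately, then blend their output probabilities."""
    return w_img * image_model(img) + (1.0 - w_img) * audio_model(aud)

img, aud = [0.8], [0.6, 0.2]
print("early fusion:", round(early_fusion(img, aud), 3))
print("late fusion:", round(late_fusion(img, aud), 3))
```

Roughly speaking, early fusion lets a single model learn interactions between the inputs but needs both available together at training time, while late fusion keeps the models independent so each can be trained or replaced on its own.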

Implementing Fusion Techniques

To implement neural network fusion, we can combine the outputs of different networks using techniques like averaging, weighted voting, or more sophisticated methods like learning a fusion model. The choice of technique depends on the specific requirements of the task.
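Weighted voting is the simplest of these techniques to show. A minimal sketch, assuming each network outputs a hard label and that its vote is weighted by a per-model score such as validation accuracy (an illustrative choice; the weights could come from anywhere, including a learned fusion model).

```python
from collections import defaultdict
from typing import Dict, Hashable, List

def weighted_vote(predictions: List[Hashable], weights: List[float]) -> Hashable:
    """Add each model's weight to the label it predicted; the heaviest label wins."""
    totals: Dict[Hashable, float] = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    return max(totals, key=totals.__getitem__)

# Three hypothetical networks disagree on one input.
preds = ["cat", "dog", "dog"]
weights = [0.92, 0.40, 0.45]   # assumed per-model scores, e.g. validation accuracy

print("weighted:", weighted_vote(preds, weights))
print("unweighted:", weighted_vote(preds, [1.0, 1.0, 1.0]))
```

Here the highly trusted first model overrules the two weaker ones ("cat" gets 0.92 against 0.85 for "dog"), whereas with equal weights the same votes give a plain majority for "dog".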

Cascade Network

Cascade networks involve feeding the output of one neural network as input to another. This approach creates a layered structure where each network focuses on different aspects of the task.

What is a Cascade Network?

A cascade network is a hierarchical structure where multiple networks are connected in series. Each network refines the outputs of the previous one, leading to progressively better performance.
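A cascade can be sketched as ordinary function composition. In this minimal example, two plain functions stand in for trained networks (both are hypothetical): the first "denoises" a 1-D signal with a 3-point moving average, and the second flags positions whose value exceeds a threshold. Feeding the first stage's output into the second suppresses an isolated noise spike that the second stage alone would flag.

```python
from typing import List

def stage1_denoise(signal: List[float]) -> List[float]:
    """Hypothetical first network: smooths the raw signal with a 3-point moving average."""
    n = len(signal)
    out = []
    for i in range(n):
        window = signal[max(0, i - 1): min(n, i + 2)]
        out.append(sum(window) / len(window))
    return out

def stage2_detect(signal: List[float], threshold: float = 0.5) -> List[int]:
    """Hypothetical second network: flags positions whose value exceeds the threshold."""
    return [int(v > threshold) for v in signal]

def cascade(signal: List[float]) -> List[int]:
    """Run the stages in series: stage 2 only ever sees stage 1's output."""
    return stage2_detect(stage1_denoise(signal))

noisy = [0.0, 0.9, 0.0, 0.0, 0.8, 0.9, 1.0, 0.7, 0.0]
print("stage 2 alone:", stage2_detect(noisy))
print("full cascade: ", cascade(noisy))
```

Each stage has a narrow job, which is the main design appeal of cascades: the stages can be developed, tested, and swapped independently as long as the interface between them stays the same.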

Advantages and Applications of Cascade Networks

Cascade networks are particularly useful in complex tasks where different stages of processing are required. For example, in image processing, a cascade network can progressively enhance image quality, leading to more accurate recognition.

Practical Examples

Image Recognition

In image recognition, combining CNNs with ensemble methods can improve accuracy and robustness. For instance, a network trained on general image data can be combined with a network fine-tuned for specific object recognition, leading to superior performance.

Natural Language Processing

In natural language processing (NLP), combining RNNs with transfer learning can enhance the understanding of text. A pre-trained language model can be fine-tuned for specific tasks like sentiment analysis or text generation, resulting in more accurate and nuanced outputs.

Predictive Analytics

In predictive analytics, combining different types of networks can improve the accuracy of predictions. For example, a network trained on historical data can be combined with a network that analyzes real-time data, leading to more accurate forecasts.

Challenges and Solutions

Technical Challenges

Combining neural networks can be technically challenging, requiring careful tuning and integration. Ensuring compatibility between different networks and avoiding overfitting are critical considerations.

Data Challenges

Data-related challenges include ensuring the availability of diverse and high-quality data for training. Managing data complexity and avoiding biases are essential for achieving accurate and reliable results.

Possible Solutions

To overcome these challenges, it’s crucial to adopt a systematic approach to model integration, including careful preprocessing of data and rigorous validation of models. Utilizing advanced tools and frameworks can also facilitate the process.

Tools and Frameworks

Popular Tools for Combining Neural Networks

Tools like TensorFlow, PyTorch, and Keras provide extensive support for combining neural networks. These platforms offer a wide range of functionalities and ease of use, making them ideal for both beginners and experts.

Frameworks to Use

Libraries like scikit-learn offer ready-made ensemble estimators (for example BaggingClassifier, VotingClassifier, and StackingClassifier), although these are aimed mainly at classical machine learning models rather than deep networks. Apache MXNet and Microsoft Cognitive Toolkit also supported these workflows, but both are no longer actively developed.

Future of Combining Neural Networks

Emerging Trends

Emerging trends in combining neural networks include the use of advanced ensemble techniques, the integration of neural networks with other AI models, and the development of more sophisticated fusion methods.

Potential Developments

Future developments may include the creation of more powerful and efficient neural network architectures, enhanced transfer learning techniques, and the integration of neural networks with other technologies like quantum computing.

Case Studies

Successful Examples in Industry

In healthcare, combining neural networks has led to significant improvements in disease diagnosis and treatment recommendations. For example, combining CNNs with RNNs has enhanced the accuracy of medical image analysis and patient monitoring.

Lessons Learned from Case Studies

Key lessons from successful case studies include the importance of data quality, the need for careful model tuning, and the benefits of leveraging diverse neural network architectures to address complex problems.

Online Course

I have come across many online courses and finally found some great platforms that save you time and money:

1. Prag Robotics_ TBridge

2. Coursera

Best Practices

Strategies for Effective Combination

Effective strategies for combining neural networks include using ensemble methods to enhance performance, leveraging transfer learning to save time and resources, and adopting a systematic approach to model integration.

Avoiding Common Pitfalls

Common pitfalls to avoid include overfitting, ignoring data quality, and underestimating the complexity of model integration. By being aware of these challenges, we can develop more robust and effective combined neural network models.

Conclusion

Combining two trained neural networks can significantly enhance their capabilities, leading to more accurate and versatile AI models. Whether through ensemble learning, transfer learning, or neural network fusion, the potential benefits are immense. By adopting the right strategies and tools, we can unlock new possibilities in AI and drive advancements across various fields.

FAQs

What is the easiest method to combine neural networks?

The easiest method is ensemble learning, where multiple models are combined to improve performance and accuracy.

Can different types of neural networks be combined?

Yes, different types of neural networks, such as CNNs and RNNs, can be combined to leverage their unique strengths.

What are the typical challenges in combining neural networks?

Challenges include technical integration, data quality, and avoiding overfitting. Careful planning and validation are essential.

How does combining neural networks enhance performance?

Combining neural networks enhances performance by leveraging diverse models, reducing errors, and improving generalization.

Is combining neural networks beneficial for small datasets?

Yes, combining neural networks can be beneficial for small datasets, especially when using techniques like transfer learning to leverage knowledge from larger datasets.

#artificialintelligence#coding#raspberrypi#iot#stem#programming#science#arduinoproject#engineer#electricalengineering#robotic#robotica#machinelearning#electrical#diy#arduinouno#education#manufacturing#stemeducation#robotics#robot#technology#engineering#robots#arduino#electronics#automation#tech#innovation#ai

3 notes
