#json parser tools

Explore tagged Tumblr posts

Text

I have a habit of making frameworks and then never doing anything with them. I have made countless libraries that I never had any use for personally, but were just cool to have. truth be told I hate making finished, end user products: games, websites, applications, BAH! one million json parsers. five hundred expression evaluators to you. parsing library. gui library. multi block structures in minecraft.

it used to confound me that everybody else who was coding wanted to actually make things to be used directly, to solve actual problems they had. I always just wanted to feel like a wizard so I kept making cooler and cooler spells even if I had no use for them.

now I make tools for making tools for making tools that I have no idea what to apply to. I made a parser generator (a tool for making parsers (a tool for analyzing syntax)) which I will use to make a programming language (a tool for making yet more tools) that I have no idea what I'll do with. the more abstract the better.

because I have no actual use for this stuff I imagine myself as a sorceress locking herself in a tower to research very complicated and impractical spells, reveling in their extreme power and then just tucking them away on a shelf. I legitimately think this is the closest thing there is to being an actual modern day wizard and tbh it's that aesthetic that keeps me going.

5 notes

·

View notes

Text

Plaintext parser

So my dialogue scripts used to be JSON since the initial tutorials and resources I found suggested it. For some reason, I thought writing my own Yarnspinner-like system would be better, so I did that. Now my dialogue scripts are written as plain text. The tool in the video above lets me write and see changes in the actual game UI. All in all it's incredibly jank.

6 notes

·

View notes

Text

Take a look at this post… 'JSON Validator , URL Encoder/Decoder , URL Parser , HTML Encoder/Decoder , HTML Prettifier/Minifier , Base64 Encoder/Decoder , JSON Prettifier/Minifier, JSON Escaper/Unescaper , '.

#integration#banking#ai#b2b#finance#json#open banking banking finance edi b2b#clinicalresearch#youtube#dc

0 notes

Text

APEX DATA PARSER

APEX_DATA_PARSER: The Swiss Army Knife of Data Parsing in Oracle APEX

Oracle Application Express (APEX) offers a robust and flexible package named APEX_DATA_PARSER to streamline data parsing within your applications. This package empowers developers to process standard file formats like CSV, JSON, XML, and XLSX effortlessly.

Why is APEX_DATA_PARSER Important?

Data frequently arrives in structured formats. Consider spreadsheets, database exports, or information exchanges between systems. To effectively utilize this data, you need to parse it into a format your APEX applications can work with. Here’s where APEX_DATA_PARSER shines:

Simple Interface: The core of APEX_DATA_PARSER is a table function called PARSE. This function makes it remarkably easy to turn your structured data into a format suitable for SQL queries.

Flexibility: You can analyze files before parsing to understand their structure. You can also directly insert parsed data into your database tables.

Broad Format Support: Handle some of the most common file formats used for data exchange (CSV, JSON, XML, and XLSX) with a single tool.

A Basic Example

Let’s imagine you have a CSV file containing employee data:

Code snippet

employee_id,first_name,last_name,email

101,John,Doe,[email protected]

102,Jane,Smith,[email protected]

Use code with caution.

content_copy

The following code shows how to parse it using APEX_DATA_PARSER:

SQL

DECLARE

l_blob BLOB;

l_clob CLOB;

BEGIN

— Assume the CSV content is loaded into l_blob

FOR cur_row IN (

SELECT * FROM TABLE(APEX_DATA_PARSER.PARSE(

p_source => l_blob,

p_file_type => APEX_DATA_PARSER.C_FILE_TYPE_CSV,

p_normalize_values => ‘Y’ — Optional: Normalize data for consistent handling

))

LOOP

DBMS_OUTPUT.put_line(cur_row.col001 || ‘, ‘ || cur_row.col002 || ‘, ‘ || cur_row.col003);

END LOOP;

END;

Use code with caution.

content_copy

Beyond the Basics

APEX_DATA_PARSER goes further than just parsing:

Data Discovery: Use functions like GET_COLUMNS to learn about your file’s column names and data types before you fully parse it.

XLSX Support: Handle Excel spreadsheets directly.

REST Integration: Integrate with the APEX_WEB_SERVICE package to parse data retrieved from REST services.

When To Use APEX_DATA_PARSER

If you find yourself needing to:

Load data from CSV, JSON, XML, or XLSX files into your APEX applications

Process data uploaded by users

Integrate with external systems that exchange data in these formats

…then APEX_DATA_PARSER is likely the tool for you!

youtube

You can find more information about Oracle Apex in this Oracle Apex Link

Conclusion:

Unogeeks is the No.1 IT Training Institute for Oracle Apex Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on Oracle Apex here – Oarcle Apex Blogs

You can check out our Best In Class Oracle Apex Details here – Oracle Apex Training

Follow & Connect with us:

———————————-

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Mail us at: [email protected]

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeeks

0 notes

Text

Ontology in Use: A Deep Dive into Semantic Relationships and Knowledge Management

In the realm of artificial intelligence and data science, ontology plays a pivotal role. It provides a structured framework that enables efficient knowledge management and data analysis. This article aims to shed light on the practical applications of ontology, with a focus on tools that support semantic relationships, such as RDF/RDFS and OWL.

Understanding Ontology

Ontology is a branch of philosophy that deals with the nature of being or reality. In the context of computer science and information technology, ontology refers to a formal representation of knowledge within a domain. It provides a framework for defining and organizing concepts and their relationships, thereby creating a shared understanding of a specific area of interest.

Tools Supporting Semantic Relationships

There are numerous tools available that support semantic relationships, particularly those defined by RDF/RDFS and OWL. RDF (Resource Description Framework) and RDFS (RDF Schema) provide a foundation for processing metadata and represent information on the web. OWL (Web Ontology Language), on the other hand, is designed to represent rich and complex knowledge about things, groups of things, and relations between things.

Some of the notable tools that support RDF/RDFS and OWL include:

Protégé: A free, open-source ontology editor and framework for building intelligent systems. Protégé fully supports the latest OWL 2 Web Ontology Language and RDF specifications from the World Wide Web Consortium.

Apache Jena: A free and open-source Java framework for building Semantic Web and Linked Data applications. It provides an RDF API to interact with the core API to create and read Resource Description Framework (RDF) graphs.

OpenLink Virtuoso: A comprehensive platform that includes a reasoner, triple store, RDFS reasoner, OWL reasoner, RDF generator, SPARQL endpoint, and RDB2RDF.

RDFLib: A Python library for working with RDF. It contains parsers and serializers for RDF/XML, N3, NTriples, N-Quads, Turtle, TriX, Trig, and JSON-LD.

YAGO: A Practical Application of Ontology

YAGO (Yet Another Great Ontology) is a prime example of ontology in use. It's a knowledge base that contains information about the real world, including entities such as movies, people, cities, countries, and relations between these entities. YAGO arranges its entities into classes and these classes are arranged in a taxonomy. For example, Elvis Presley belongs to the class of people, Paris belongs to the class of cities, and so on.

What makes YAGO special is that it combines two great resources: Wikidata and schema.org. Wikidata is the largest general-purpose knowledge base on the Semantic Web, while schema.org is a standard ontology of classes and relations. YAGO combines these two resources, thus getting the best from both worlds: a huge repository of facts, together with an ontology that is simple and used as a standard by a large community.

The Future of Ontology

The future of ontology looks promising. With the rise of artificial intelligence and machine learning, the need for structured and semantically rich data is more critical than ever. Ontology provides a way to structure this data in a meaningful way, enabling machines to understand and reason about the world in a similar way to humans.

In conclusion, ontology plays a crucial role in knowledge management and data analysis. It provides a structured framework for defining and organizing concepts and their relationships, thereby creating a shared understanding of a specific area of interest. The practical applications of ontology, such as YAGO, demonstrate the power and potential of this approach. As we continue to advance in the field of artificial intelligence, the importance and use of ontology will undoubtedly continue to grow.

1 note

·

View note

Text

Your Essential Guide to Building an Amazon Reviews Scraper

Amazon is a massive online marketplace, and it holds a treasure trove of data that's incredibly valuable for businesses. Whether it's product descriptions or customer reviews, you can tap into this data goldmine using a web scraping tool to gain valuable insights. These scraping tools are designed to quickly extract and organize data from specific websites. Just to put things into perspective, Amazon raked in a staggering $125.6 billion in sales revenue in the fourth quarter of 2020!

The popularity of Amazon is astounding, with nearly 90% of consumers preferring it over other websites for product purchases. A significant driver behind Amazon's sales success is its extensive collection of customer reviews. In fact, 73% of consumers say that positive customer reviews make them trust an eCommerce website more. This wealth of product review data on Amazon offers numerous advantages. Many small and mid-sized businesses, aiming for more than 4,000 items sold per minute in the US, look to leverage this data using an Amazon reviews scraper. Such a tool can extract product review information from Amazon and save it in a format of your choice.

Why Use an Amazon Reviews Scraper?

The authenticity and vastness of Amazon reviews make a scraper an ideal tool to analyze trends and market conditions thoroughly. Businesses and sellers can employ an Amazon reviews scraper to target the products in their inventory. They can scrape Amazon reviews from product pages and store them in a format that suits their needs. Here are some key benefits:

1. Find Customer Opinions: Amazon sellers can scrape reviews to understand what influences a product's ranking. This insight allows them to develop strategies to boost their rankings further, ultimately improving their products and customer service.

2. Collect Competing Product Reviews: By scraping Amazon review data, businesses can gain a better understanding of what aspects of products have a positive or negative impact. This knowledge helps them make informed decisions to capture the market effectively.

3. Online Reputation Marketing: Large companies with extensive product inventories often struggle to track individual product performance. However, Amazon web scraping tools can extract specific product information, which can then be analyzed using sentiment analysis tools to measure consumer sentiment.

4. Sentiment Analysis: The data collected with an Amazon reviews scraper helps identify consumer emotions toward a product. This helps prospective buyers gauge the general sentiment surrounding a product before making a purchase. Sellers can also assess how well a product performs in terms of customer satisfaction.

Checklist for Building an Amazon Reviews Scraper

Building an effective Amazon reviews scraper requires several steps to be executed efficiently. While the core coding is done in Python, there are other critical steps to follow when creating a Python Amazon review scraper. By successfully completing this checklist, you'll be able to scrape Amazon reviews for your desired products effectively:

a. Analyze the HTML Structure: Before coding an Amazon reviews scraper, it's crucial to understand the HTML structure of the target web pages. This step helps identify patterns that the scraper will use to extract data.

b. Implement Scrapy Parser in Python: After analyzing the HTML structure, code your Python Amazon review scraper using Scrapy, a web crawling framework. Scrapy will visit target web pages and extract the necessary information based on predefined rules and criteria.

c. Collect and Store Information: After scraping review data from product pages, the Amazon web scraping tools need to save the output data in a format such as CSV or JSON.

Essential Tools for Building an Amazon Reviews Scraper

When building an Amazon web scraper, you'll need various tools essential to the process of scraping Amazon reviews. Here are the basic tools required:

a. Python: Python's ease of use and extensive library support make it an ideal choice for building an Amazon reviews scraper.

b. Scrapy: Scrapy is a Python web crawling framework that allows you to write code for the Amazon reviews scraper. It provides flexibility in defining how websites will be scraped.

c. HTML Knowledge: A basic understanding of HTML tags is essential for deploying an Amazon web scraper effectively.

d. Web Browser: Browsers like Google Chrome and Mozilla Firefox are useful for identifying HTML tags and elements that the Amazon scraping tool will target.

Challenges in Scraping Amazon Reviews

Scraping reviews from Amazon can be challenging due to various factors:

a. Detection of Bots: Amazon can detect the presence of scraper bots and block them using CAPTCHAS and IP bans.

b. Varying Page Structures: Product pages on Amazon often have different structures, leading to unknown response errors and exceptions.

c. Resource Requirements: Due to the massive size of Amazon's review data, scraping requires substantial memory resources and high-performance network connections.

d. Security Measures: Amazon employs multiple security protocols to block scraping attempts, including content copy protection, JavaScript rendering, and user-agent validation.

How to Scrape Amazon Reviews Using Python

To build an Amazon web scraper using Python, follow these steps:

1. Environment Creation: Establish a virtual environment to isolate the scraper from other processes on your machine.

2. Create the Project: Use Scrapy to create a project that contains all the necessary components for your Amazon reviews scraper.

3. Create a Spider: Define how the scraper will crawl and scrape web pages by creating a Spider.

4. Identify Patterns: Inspect the target web page in a browser to identify patterns in the HTML structure.

5. Define Scrapy Parser in Python: Write the logic for scraping Amazon reviews and implement the parser function to identify patterns on the page.

6. Store Scraped Results: Configure the Amazon reviews scraper to save the extracted review data in CSV or JSON formats.

Using an Amazon reviews scraper provides businesses with agility and automation to analyze customer sentiment and market trends effectively. It empowers sellers to make informed decisions and respond quickly to changes in the market. By following these steps and leveraging the right tools, you can create a powerful Python Amazon review scraper to harness the valuable insights locked within Amazon's reviews.

0 notes

Text

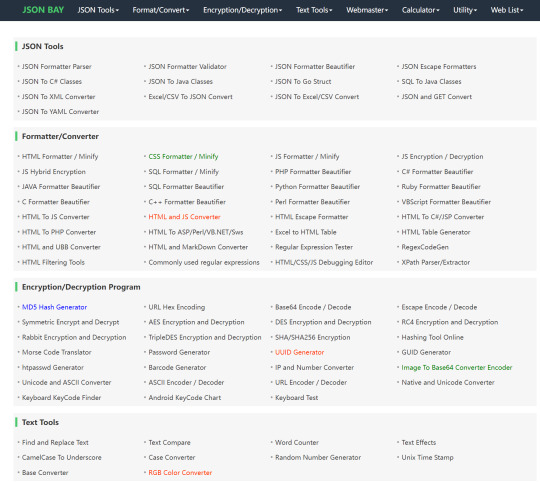

The Best Free Online Web Tools JSON BAY

JSON Formatter,JSON Parser,JSON Validator,JSON Converter,Excel to JSON,JSON to Excel,HTML Formatter,CSS Minifier,JavaScript Minify,Code Encryption,SQL Beautifier,PHP Formatter,Java Code Formatter,Python Beautifier,Regex Tester,Encryption Tools,Text Manipulation,Webmaster Tools,Online Calculators,Utility Tools,Code Conversion,Data Encryption,Web Debugging,SEO Optimization Tools,Web Development Utilities

#JSON#JSONFormatter#JSONParser#JSONValidator#JSONBeautifier#JSONToCSharp#JSONToJava#JSONToGo#SQLToJava#JSONToXML#ExcelToJSON#CSVToJSON#JSONToExcel#JSONToCSV#JSONToYAML#HTMLFormatter#CSSFormatter#JSFormatter#JSEncryption#JSDecryption#SQLFormatter#PHPFormatter#CSharpFormatter#JavaFormatter#PythonFormatter#RubyFormatter#CFormatter#CPlusPlusFormatter#PerlFormatter#VBScriptFormatter

0 notes

Text

Making a format

Last week I indulged in a thought

For my Danganronpa style engine, I need a 3d model format. It’s after all not just flat sprites but actual 3d geometry everywhere. Looking over the collection if formats there.. I mean.. FBX is a mammoth closed source proprietary 3d scene interchange format with all kinds of crazy nonsense packed inside I’d have to learn to deal with by hand. Collada is a complicated xml based format with all kinds of crazy scene and object graph nonsense packed in, and deprecated to boot. glTF2 is a great format but then I’m dealing with parsing complicated hyper-related json structures and reading binary blobs, and my brain just doesn’t want to deal with it. You know I did want to deal with? OBJ.

Oh it’s an excellent simple format, and I got excited about it too… until I watched some more footage and noticed… there’s animation in them thar scenes.. not complicated animation mind you, but everything from the pop-up book style scene-transitions, to background elements like calling fans and even as we saw your 3d avatar.. walking/running/sprinting.. ya.. need animation. And guess what obj doesn’t support?

On top of that, all these formats have a big thing in common… none of them are FOR games. They’re for Scenes. The big engines support them not because they’re popular.. But because their editors are essentially scrpted scene assemblers with many of the same tools packed into an fbx.

So I’m over here.. demanding simplicity. Man oh man why couldn’t there be a format as simple as OBJ, but supports skeletal animation, and designed for single models? What if you didn’t need to download some bloated SDK or spend 50 hours writing a parser that only supports a subset of what the format packs in?

That’s when I had an idea.. What if I simply extended OBJ?

Oh, but if I’m gonna take the time to extend obj, why don’t I fix some of the stuff that indeed bugs me about it, like keeping all things 0-indexed, and providing the whole vertex buffer object for each vertex on the same line, neatly arranged? Because at the end of the day, even obj is more interested in data-exchange between 3d suites.

Ok, so if I’m going to do the whole line prefix denotes data type thing complete with a state machine that dictates how to deal with the data found on each line.. we may as well make each line as useful as possible, right? That’s when I came across the tagline for the format “because I respect your time”. I was going to pay extra special attention to make sure the entire format stays the course and is predictably, and disgustingly, easy to read; without the need for anything else. No you don’t need json reader, pikl sdk, fast toml, or speed yaml. No you don’t need to make some kind of tokenizer or look for brackets or line endings, nor are you going to need to worry about quotes.

There’s two primary ways to read this format:

1. Read a line in, scan character by character; detect the unique line prefix and that prefix will explain all there is to know; or

2. Read line in, split it by space char, match the first element to some parsing mode, and use the rest of the elements as needed by index value

This all sounded great to me… But like I said, obj isn’t designed for use in games, in fact the format has its own annoyances to walk through. There’s got to be a bett- I got it. MD5.

You know, Id Software is known for their engines. And their tech is hyper fixated on performance, even at the cost of flexibility. The MD2 format is frustratingly limited, but it’s a very purpose built format that did the job And did it well for usage in a video game. MD5 is their last model format to be open sourced and with luck it also happens to be an ASCII format. But what it also supports is skeletal animation and in such a way that makes a lot of sense too. But I find the format plagued by a weird C-like syntax that I definitely didn’t come here to try and deal with.

So my idea was simply this: what if obj had a baby with md5?

That’s where I’m at right now.

I have the whole thing specced out and now I’m just learning how to write a blender plug-in…

1 note

·

View note

Text

This Week in Rust 532

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Project/Tooling Updates

Rust and C filesystem APIs (Rust-for-Linux project)

Progress toward a GCC-based Rust compiler

Palette 0.7.4

Fyrox Game Engine 0.33

Two months in Servo: better inline layout, stable Rust, and more!

Ownership and data flow in GPUI

Function Contracts for Kani

Slint 1.4 Released with additional Look and Improved APIs

This Week in Fluvio #58 - Fluvio Open Source Streaming System can be deployed locally as a single binary

Quickwit 0.7 Released: Elasticsearch API compatibility and 30% performance gains

Observations/Thoughts

How to benchmark Rust code with Criterion

Playing with Nom and parser combinators

Where Does the Time Go? Rust's Problem with Slow Compiles

ESP32 Embedded Rust at the HAL: I2C Scanner

We build X.509 chains so you don’t have to

Process spawning performance in Rust

Introducing Foundations - our open source Rust service foundation library

High performance vector graphic video games

Some recent and notable changes to Rust

Visualizing Dynamic Programming with FireDBG

[video] Nine Rules for Data Structures in Rust

Rust Walkthroughs

Rust Memory Leak Diagnosing Guides using Flame Graphs

WebSockets - The Beginner’s Guide

Writing Cronjobs in Rust

Fearless concurrency with Rust, cats, and a few Raspberry PIs

Rust macros taking care of even more Lambda boilerplate

Debugging Tokio Instrumentation

Miscellaneous

[audio] Arroyo - Micah Wylde, Co-Founder and CEO

Crate of the Week

This week's crate is Apache Iceberg Rust, a Rust implementation of a table format for huge analytic datasets.

Thanks to Renjie Liu for the self-suggestion!

Please submit your suggestions and votes for next week!

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

GreptimeTeam - Fix a minor bug in join_path for more elegant code

GreptimeTeam - Add tests for MetaPeerClientRef to enhance GreptimeDB's stability

Ockam - Syntax highlighting for fenced code blocks, in command help output, on Linux works

Ockam - Output for ockam project ticket is improved and information is not opaque

Ockam - Output for both ockam project ticket and ockam project enroll is improved, with support for --output json

Hyperswitch - [FIX]: Add a configuration validation for workers

Hyperswitch - [FEATURE]: Create a delete endpoing for Config Table

Hyperswitch - [FEATURE]: Setup code coverage for local tests & CI

Hyperswitch - [FEATURE]: Have get_required_value to use ValidationError in OptionExt

If you are a Rust project owner and are looking for contributors, please submit tasks here.

CFP - Speakers

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

No Calls for papers were submitted this week.

If you are an event organizer hoping to expand the reach of your event, please submit a link to the submission website through a PR to TWiR.

Updates from the Rust Project

409 pull requests were merged in the last week

pattern_analysis: let ctor_sub_tys return any Iterator they want

pattern_analysis: reuse most of the DeconstructedPat Debug impl

add #[coverage(off)] to closures introduced by #[test] and #[bench]

add the min_exhaustive_patterns feature gate

add the unstable option to reduce the binary size of dynamic library…

always normalize LoweredTy in the new solver

assert that a single scope is passed to for_scope

avoid ICE in trait without dyn lint

borrow check inline const patterns

classify closure arguments in refutable pattern in argument error

const-eval interning: get rid of type-driven traversal

coverage: dismantle Instrumentor and flatten span refinement

coverage: don't instrument #[automatically_derived] functions

coverage: never emit improperly-ordered coverage regions

do not normalize closure signature when building FnOnce shim

don't call walk_ functions directly if there is an equivalent visit_ method

don't fire OPAQUE_HIDDEN_INFERRED_BOUND on sized return of AFIT

don't manually resolve async closures in rustc_resolve

emit suggestion when trying to write exclusive ranges as ..<

fix assume and assert in jump threading

fix: correct suggestion arg for impl trait

improve handling of expressions in patterns

improve handling of numbers in IntoDiagnosticArg

make #![allow_internal_unstable(..)] work with stmt_expr_attributes

manually implement derived NonZero traits

modify GenericArg and Term structs to use strict provenance rules

move condition enabling the pass to is_enabled

normalize field types before checking validity

only assemble alias bound candidates for rigid aliases

properly recover from trailing attr in body

provide more context on recursive impl evaluation overflow

riscv32im-risc0-zkvm-elf: add target

scopeTree: remove destruction_scopes as unused

split Diagnostics for Uncommon Codepoints: Add List to Display Characters Involved

split tait and impl trait in assoc items logic

stop using derivative in rustc_pattern_analysis

subtree sync for rustc_codegen_cranelift

suggest array::from_fn for array initialization

use assert_unchecked instead of assume intrinsic in the standard library

interpret: project_downcast: do not ICE for uninhabited variants

return a finite number of AllocIds per ConstAllocation in Miri

miri: add __cxa_thread_atexit_impl on freebsd

miri: add portable-atomic-util bug to "bugs found" list

miri: freebsd add *stat calls interception support

only use dense bitsets in dataflow analyses

remove all ConstPropNonsense

remove StructuralEq trait

boost iterator intersperse(_with) performance

stabilise array methods

std: make HEAP initializer never inline

add AsyncFn family of traits

add ErrCode

add NonZero*::count_ones

add str::Lines::remainder

adjust Behaviour of read_dir and ReadDir in Windows Implementation: Check Whether Path to Search In Exists

core: add From<core::ascii::Char> implementations

handle out of memory errors in io:Read::read_to_end()

impl From<&[T; N]> for Cow<[T]>

rc,sync: do not create references to uninitialized values

initial implementation of str::from_raw_parts[_mut]

remove special-case handling of vec.split_off(0)

rewrite the BTreeMap cursor API using gaps

specialize Bytes on StdinLock<'_>

stabilize slice_group_by

switch NonZero alias direction

regex: make additional prefilter metadata public

cargo: docs(ref): Try to improve reg auth docs

cargo: fix(cli): Improve errors related to cargo script

cargo: fix(config): Deprecate non-extension files

cargo: refactor(shell): Use new fancy anstyle API

cargo: doc: replace version with latest for jobserver link

cargo: fix list option description starting with uppercase

cargo: refactor: remove unnecessary Option in Freshness::Dirty

cargo: test: data layout fix for x86_64-unknown-none-gnu

rustfmt: wrap macro that starts with nested body blocks

rustfmt: format diff line to be easily clickable

clippy: add to_string_trait_impl lint

clippy: add new unnecessary_result_map_or_else lint

clippy: false positive: needless_return_with_question_mark with implicit Error Conversion

clippy: redundant_closure_for_method_calls Suggest relative paths for local modules

clippy: multiple_crate_versions: add a configuration option for allowed duplicate crates

clippy: never_loop: recognize desugared try blocks

clippy: avoid linting redundant closure when callee is marked #[track_caller]

clippy: don't warn about modulo arithmetic when comparing to zero

clippy: assert* in multi-condition after unrolling will cause lint nonminimal_bool emit warning

clippy: fix incorrect suggestions generated by manual_retain lint

clippy: false positive in redundant_closure_call when closures are passed to macros

clippy: suggest existing configuration option if one is found

clippy: warn if an item coming from more recent version than MSRV is used

rust-analyzer: add postfix completion for let else

rust-analyzer: filter out cfg-disabled fields when lowering record patterns

rust-analyzer: replaced adjusted_display_range with adjusted_display_range_new in mismatched_arg_count

Rust Compiler Performance Triage

This was a very quiet week with only one PR having any real impact on overall compiler performance. The removal of the internal StructuralEq trait saw a roughly 0.4% improvement on average across nearly 50 real-world benchmarks.

Triage done by @rylev. Revision range: d6b151fc7..5c9c3c7

Summary:

(instructions:u) mean range count Regressions ❌ (primary) 0.5% [0.3%, 0.7%] 5 Regressions ❌ (secondary) 0.5% [0.2%, 1.4%] 10 Improvements ✅ (primary) -0.5% [-1.5%, -0.2%] 48 Improvements ✅ (secondary) -2.3% [-7.7%, -0.4%] 36 All ❌✅ (primary) -0.4% [-1.5%, 0.7%] 53

0 Regressions, 4 Improvements, 4 Mixed; 3 of them in rollups 37 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Avoid non-local definitions in functions

RFC: constants in patterns

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

RFC: Include Future and IntoFuture in the 2024 prelude

Tracking Issues & PRs

[disposition: merge] static mut: allow mutable reference to arbitrary types, not just slices and arrays

[disposition: merge] Make it so that async-fn-in-trait is compatible with a concrete future in implementation

[disposition: merge] Decision: semantics of the #[expect] attribute

[disposition: merge] style-guide: When breaking binops handle multi-line first operand better

[disposition: merge] style-guide: Tweak Cargo.toml formatting to not put description last

[disposition: merge] style-guide: Format single associated type where clauses on the same line

[disposition: merge] PartialEq, PartialOrd: update and synchronize handling of transitive chains

[disposition: merge] std::error::Error -> Trait Implementations: lifetimes consistency improvement

Language Reference

No Language Reference RFCs entered Final Comment Period this week.

Unsafe Code Guidelines

No Unsafe Code Guideline RFCs entered Final Comment Period this week.

New and Updated RFCs

Deprecate then remove static mut

RFC: Rust Has Provenance

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2024-01-31 - 2024-02-28 🦀

Virtual

2024-01-31 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

Rust for Rustaceans Book Club launch!

2024-02-01 | Virtual + In Person (Barcelona, ES) | BcnRust

12th BcnRust Meetup - Stream

2024-02-01 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack n Learn | Mirror: Rust Hack n Learn

2024-02-03 | Virtual + In-person (Brussels, BE) | FOSDEM 2024

FOSDEM Conference: Rust devroom - talks

2024-02-03 | Virtual (Kampala, UG) | Rust Circle

Rust Circle Meetup

2024-02-04 | Virtual | Rust Maven

Web development with Rocket - In English

2024-02-07 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - Ezra Singh - How Rust Saved My Eyes

2024-02-08 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2024-02-08 | Virtual (Nürnberg, DE) | Rust Nüremberg

Rust Nürnberg online

2024-02-10 | Virtual (Krakow, PL) | Stacja IT Kraków

Rust – budowanie narzędzi działających w linii komend

2024-02-10 | Virtual (Wrocław, PL) | Stacja IT Wrocław

Rust – budowanie narzędzi działających w linii komend

2024-02-13 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2024-02-15 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack n Learn | Mirror: Rust Hack n Learn

2024-02-15 | Virtual + In person (Praha, CZ) | Rust Czech Republic

Introduction and Rust in production

2024-02-21 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2024-02-22 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

Asia

2024-02-10 | Hyderabad, IN | Rust Language Hyderabad

Rust Language Develope BootCamp

Europe

2024-02-01 | Hybrid (Barcelona, ES) | BcnRust

12th BcnRust Meetup

2024-02-03 | Brussels, BE | FOSDEM '24

FOSDEM '24 Conference: Rust devroom - talks | Rust Aarhus FOSDEM Meetup

2024-02-03 | Nürnberg, BY, DE | Paessler Rust Camp 2024

Paessler Rust Camp 2024

2024-02-05 | Brussels, BE | Belgium Rust user group

Post-FOSDEM Rust meetup @ Vrije Universiteit Brussel

2024-02-06 | Bremen, DE | Rust Meetup Bremen

Rust Meetup Bremen [1]

2024-02-07 | Cologne, DE | Rust Cologne

Embedded Abstractions | Event page

2024-02-07 | London, UK | Rust London User Group

Rust for the Web — Mainmatter x Shuttle Takeover

2024-02-08 | Bern, CH | Rust Bern

Rust Bern Meetup #1 2024 🦀

2024-02-15 | Praha, CZ - Virtual + In-person | Rust Czech Republic

Introduction and Rust in production

2024-02-21 | Lyon, FR | Rust Lyon

Rust Lyon Meetup #8

2024-02-22 | Aarhus, DK | Rust Aarhus

Rust and Talk at Partisia

North America

2024-02-07 | Brookline, MA, US | Boston Rust Meetup

Coolidge Corner Brookline Rust Lunch, Feb 7

2024-02-08 | Lehi, UT, US | Utah Rust

BEAST: Recreating a classic DOS terminal game in Rust

2024-02-12 | Minneapolis, MN, US | Minneapolis Rust Meetup

Minneapolis Rust: Open Source Contrib Hackathon & Happy Hour

2024-02-13 | New York, NY, US | Rust NYC

Rust NYC Monthly Mixer

2024-02-13 | Seattle, WA, US | Cap Hill Rust Coding/Hacking/Learning

Rusty Coding/Hacking/Learning Night

2024-02-15 | Boston, MA, US | Boston Rust Meetup

Back Bay Rust Lunch, Feb 15

2024-02-15 | Seattle, WA, US | Seattle Rust User Group

Seattle Rust User Group Meetup

2024-02-20 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2024-02-28 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

Oceania

2024-02-06 | Perth, WA, AU | Perth Rust Meetup Group

Rust Feb 2024 Meetup

2024-02-27 | Canberra, ACT, AU | Canberra Rust User Group

February Meetup

2024-02-27 | Sydney, NSW, AU | Rust Sydney

🦀 spire ⚡ & Quick

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

The sheer stability of this program is what made me use rust for everything going forward. The social-service has a 100% uptime for almost 2.5 years now. It’s processed 12.9TB of traffic and is still using 1.5mb of ram just like the day we ran it 2.5 years ago. The resource usage is so low it brings tears to my eyes. As someone who came from Java, the lack of OOM errors or GC problems has been a huge benefit of rust and I don’t ever see myself using any other programming language. I’m a big fan of the mindset “build it once, but build it the right way” which is why rust is always my choice.

– /u/Tiflotin on /r/rust

Thanks to Brian Kung for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

1 note

·

View note

Text

Take a look at this post… 'JSON Validator , URL Encoder/Decoder , URL Parser , HTML Encoder/Decoder , HTML Prettifier/Minifier , Base64 Encoder/Decoder , JSON Prettifier/Minifier, JSON Escaper/Unescaper , '.

0 notes

Text

Streamlining Your Data Workflow: Unveiling the Power of JSON Conversion and Formatting

In the dynamic world of data manipulation and communication, JSON (JavaScript Object Notation) has emerged as a versatile and widely-used format. From web APIs to data exchange between applications, JSON plays a pivotal role. To navigate this landscape effectively, tools such as the JSON Formatter, JSON Parser, and the ability to Convert JSON to String have become essential.

Handling JSON data can sometimes be intricate, especially when dealing with complex structures. However, the ability to Convert JSON to String is a transformative solution that simplifies the way data is presented and shared. Whether it's for better integration within applications or for sharing structured data across platforms, this capability bridges the gap between intricate data structures and human-readable content.

As data flows become more intricate, presenting JSON data in an organized and visually appealing format is crucial. This is where the JSON Formatter comes into play. It not only structures JSON data for optimal readability but also aligns it with coding conventions, enhancing collaboration among developers. The JSON Formatter tool ensures that your data communicates effectively and maintains a professional look, whether it's for internal use or public consumption.

Data manipulation requires the ability to understand and interpret JSON structures accurately. The JSON Parser tool acts as your guide in this journey, breaking down intricate JSON objects into manageable components. With the JSON Parser, you can easily navigate through data, extract specific information, and comprehend the relationships within complex data sets. This tool empowers developers and data analysts alike to work with confidence and accuracy.

1 note

·

View note

Text

BookNookRook, NeueGeoSyndie?

Manifestation toybox initially as a textual parser addventure game (inspired by FreeCiv, CivBE Rising Tide, Civ5 CE, QGIS, TS2 and SC4)... (to be slowly integrating PixelCrushers' packaging functionalities for NPCs [LoveHate, QuestMachine, DialogueSystem...] and Qodot 4 as to enrich the manifestation toybox into a power-tool under a weak copyleft license for individuals' use...)

Made with a custom stack of Kate (lightweight IDE), Fish+Bash shells, JSON & QML & XML, BuildRoot (GNU Make replacement), Nim/C, C#+F#, F-star?, GNU Common Lisp (with REPL interpreter compiler debugger monitor...), GNU Debugger, GIMP, Krita, Inkscape, Karbon, SweetHome3D, Blender, Crocotile?, GNU Bison, GNU SmartEiffel, GNU Guile, GNU Smalltalk, GNU Bazaar, GNU Aspell, KDE Plasma Qt(5) Designer, LibreOffice (documentation & feedback loop data "office" system), Vi(m)?, Emacs?, Evaldraw?, GNU Midnight Commander, GNOWSYS, PSPP, lsh, Ragnar aesthetic tiling window manager, , ZealOS DolDoc direct multimedia embedded plain files mixed with Parade navigation filesystem structuring and commands, GNU Assembler but also tweaked for my very own "Zeit" architecture as a bytecode interpreter & compiler...

So yeah, I am trying my best to come up with assets & demos before my birthday comes. But soon enough I will mostly focused into doing artsy sketches & keyword dumps to emulate such a virtual symbolic cladogram-mesque environment onto paper.

Farewell to soon!

0 notes

Text

New Dev Tools

Things have gotten complicated. I have enough of a game there to stop faffing about with adding elements higgledy-piggledy to a test level and actually start doing some level design. I want to get this game in front of test players as soon as I can so I can get feedback on, you know, if it's any good, how I can make it better, and so forth; and for that I need real levels even if they're in an early, rough form. The problem I'm confronting now is just how much I suck at level design.

Part of the problem is, that lovely JSON format I devised for level layout makes it difficult to visualize a level. So that brings me to the first new dev tool I'm using.

NA-Builder

NA-Builder is an ongoing effort for a level viewer/editor for NullAwesome. It is written largely in Gambit Scheme, with some bits in C; and structured so that I can start with the simplest bit, a JSON parser, and gradually add components on top of that until I have a full-fledged graphical editor.

But alas, I'm nowhere near building the editor. So instead, I settled for an image generator that uses the JSON parser to read a level file and spits out a map of the level in XPM format. Then I have some Emacs Lisp which allows me to send the current buffer to the map generator and opens the resulting image in a separate buffer -- so at the stroke of a key, I can view the results right within Emacs. This considerably quickens the feedback loop when I'm editing a level by tweaking the JSON directly. It works well enough for now.

NA-Builder is not yet ready for wide release. Maybe someday.

Waydroid

The other dev tool I've been making use of is not of my own authorship, but I got it working well enough for normal use.

See, the problem is, the Android emulators that ship with Android Studio suck. I have added keyboard support to the game so that it is easily playable on PCs, Chromebooks, and other devices. But the Android Studio emulator's keyboard support is... spotty. When you hold down a key, it appears to send key-press/key-release events to the app very rapidly, resulting in herky-jerky character movements and an inability to jump with any precision. But when I tried a commercial PC Android emulator like BlueStacks, everything was hunky-dory.

I noticed that Void Linux had a Waydroid package so I thought I might give it a go. Waydroid is an Android environment in a container that uses the Wayland display protocol to present its user interface. I have not joined many of my Linux-using colleagues in embracing the glorious Wayland future; I'm still on Xorg as my display server. But the Weston compositor has an X11 backend so I start Weston in my X session and have Waydroid display on that. This works out really well, and allows me to test out the game entirely on my PC. When I start Waydroid, an ADB connection is automatically established, and Android Studio picks it up immediately.

0 notes

Text

Json Parser

Json parser is a type of data used to write in java script .Json is easily readable by humans and computers. It is also used for editing data formats and it is one of the finest way to convert data structure programming languages. Data exchange through XML is now the most popular way for applications to communicate.

JSON is a subset of JavaScript (hence its name). It became popular around the time of web browser scripting languages. The JSON data structures are supported by nearly all popular programming languages, making it completely language-independent.

1 note

·

View note

Text

REVIVING 1990S DIGITAL DRESS-UP DOLLS WITH SMOOCH

Libby Horacek

POSITION DEVELOPMENT

@horrorcheck

What is the Kisekae Set System: It is a system to make digital dress up dolls!

Created in 1991 by MIO.H for use in a Japanese BBS. Pre-web!!! By separating the system from the assets of the dolls, you can make the systems much smaller

CEL image format. They have a transparent background and indexed colors like GIFs!

There is also the KCF Palette Format. Each GUF stores its own palette, with CELs there is a shared KCF palette file. Which is a file size cost savings. You do not have to repeat your color info per file.

Having your palette in another file makes palette swapping really easy! Just swap the KCF file!

CNF configuration files dictate layering, grouping, setting, and positions!

KiSS dolls have a lot of files, so they used LhA, the most popular compression format in Japan at the time.

In 1994, KLS (a user) created the KiSS General Specification

1995 FKiSS is born by Dov Sherman and Yav, which adds sounds, and animation!

FKiSS 2, 3, 4, two versions of FKiSS 5! So much innovation!

1995-2005 huge growth in KiSS! Increase of access, mainstreaming of anime, younger and more female audience.

2007-2012: KiSS declined due to it being much harder to make. All the old tutorials were written for older systems, English-speaking KiSS-making died out by 2010

The Sims, and other doll making was more accessible.

But, why KiSS? If I can make dolls in other places. They are great snapshots of the pre-internet world, and how play online evolved early on.

Lots of fun dolls were available, and it would be cool to save it.

Picrew is a modern thing people use to make dolls.

Tagi Academy is a tamagochi game within KiSS. Impressive!

KiSS has an open specification!!!! That is super cool! That means anyone can make your own viewer, as opposed to a closed system in The Sims.

So, why NOT make a KiSS interpreter?!!

Libby made Smooch, a KiSS renderer written in Haskell, which at the Recurse Center!

Smooch used a web framework called Fn (fnhaskell.com)

Had to make a CNF file parser using Parsec library that uses parsing combinators.

She created a data type that houses everything that can be in a CNF file, and parses it correctly in priority order.

Parsing is a great candidate for test-driven development. You can write a test with a bad CNF file, and then make sure your parser handles it.

The parser translates CNF lines into JSON. Uses the ASON library to translate into JSON.

First tried cel2pnm, coded with help from Mark Dominus at Recurse Center Made a C program that converted cels to portable bitmaps, which could be translated into PNGs.

Then JuicyPixels was created to translate palette files directly in Haskell

Now it is converted to JavaScript! No libraries, just JS!

Using PNGs in JS, thought, made it hard to click on parts of the clothing! Since it is squares.

So, you use ghost canvases! You use tow canvases, one on top of the other, to find the color of the pixel you clicked on, If you clicked on a scarf color, it will pick the scarf, and if you pick the sweater, you get the sweater!

Libby just added FKiSS 1 to Smooch! So we have animations now! The animations are basically event-based scripting the CEL files.

Smooch translates FKiSS to JSON, then the interpreter translates the JSON to JavaScript!

An action in FKiSS is translated into a function in JS. To do this you have to use bind in JS.

The events become CustomEvents in JS! So it looks like a regular event on the DOM.

What is the future of KiSS? Let's get more people making KiSS dolls!!! So why not make it easy to make dolls using PNGs.

How can we make people interested in building KiSS dolls and KiSS tooling.

Smooch need contributors!

Thank you, Libby, for the great talk!

9 notes

·

View notes

Link

JSON Parser Online helps to parse, view, analyze JSON data in Tree View. It's a pretty simple and easy way to parse JSON Data and Share with others.

This Parse JSON Online tool is very powerful.

This will show data in a tree view which supports image viewer on hover.

It also validates your data and shows error in great detail.

It's a wonderful tool crafted for JSON lovers who is looking for to deserialize json online.

This json decode online helps to decode JSON which are unreadable.

1 note

·

View note