
Integrating ROSA Applications with AWS Services (CS221)

In today's rapidly evolving cloud-native landscape, enterprises are looking for scalable, secure, and fully managed Kubernetes solutions that work seamlessly with existing cloud infrastructure. Red Hat OpenShift Service on AWS (ROSA) meets that demand by combining the power of Red Hat OpenShift with the scalability and flexibility of Amazon Web Services (AWS).

In this blog post, we’ll explore how you can integrate ROSA-based applications with key AWS services, unlocking a powerful hybrid architecture that enhances your applications' capabilities.

📌 What is ROSA?

ROSA (Red Hat OpenShift Service on AWS) is a managed OpenShift offering jointly developed and supported by Red Hat and AWS. It allows you to run containerized applications using OpenShift while taking full advantage of AWS services such as storage, databases, analytics, and identity management.

🔗 Why Integrate ROSA with AWS Services?

Integrating ROSA with native AWS services enables:

Seamless access to AWS resources (like RDS, S3, DynamoDB)

Improved scalability and availability

Cost-effective hybrid application architecture

Enhanced observability and monitoring

Secure IAM-based access control using AWS IAM Roles for Service Accounts (IRSA)

🛠️ Key Integration Scenarios

1. Storage Integration with Amazon S3 and EFS

Applications deployed on ROSA can use AWS storage services for persistent and object storage needs.

Use Case: A web app storing images to S3.

How: Use the AWS EFS CSI driver (installed through its OpenShift operator) to mount EFS volumes, and access S3 through the AWS SDKs or CLI.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi
```

2. Database Integration with Amazon RDS

You can offload your relational database requirements to managed RDS instances.

Use Case: Deploying a Spring Boot app with PostgreSQL on RDS.

How: Store the database credentials in a Kubernetes Secret and reference the RDS endpoint in your app's configuration.

```env
SPRING_DATASOURCE_URL=jdbc:postgresql://<rds-endpoint>:5432/mydb
```

3. Authentication with AWS IAM + OIDC

ROSA supports IAM Roles for Service Accounts (IRSA), enabling fine-grained permissions for workloads.

Use Case: Granting a pod access to a specific S3 bucket.

How:

Create an IAM role with S3 access

Associate it with a Kubernetes service account

Use OIDC to federate access
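The Python sketch below builds the two IAM policy documents behind the first and third steps. The first is a trust policy that lets exactly one service account assume the role through the cluster's OIDC provider. The second is a permissions policy limited to a single bucket. The account ID, OIDC host, namespace, service account and bucket names are hypothetical placeholders. The chosen S3 actions are also an assumption, so adjust them to your workload.

```python
import json


def irsa_trust_policy(account_id: str, oidc_host: str, namespace: str, service_account: str) -> dict:
    """Trust policy allowing one Kubernetes service account to assume the role
    via the cluster's OIDC provider (sts:AssumeRoleWithWebIdentity)."""
    provider_arn = f"arn:aws:iam::{account_id}:oidc-provider/{oidc_host}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Only tokens issued to this exact service account match.
                        f"{oidc_host}:sub": f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


def s3_bucket_policy(bucket: str) -> dict:
    """Permissions policy scoped to objects in a single bucket (read/write only)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    trust = irsa_trust_policy("123456789012", "oidc.example.com/abc123", "my-app", "s3-writer")
    print(json.dumps(trust, indent=2))
```

You can save the printed trust policy to a file and pass it to aws iam create-role with --assume-role-policy-document file://trust.json.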

4. Observability with Amazon CloudWatch and Prometheus

Monitor your workloads using Amazon CloudWatch Container Insights or integrate Prometheus and Grafana on ROSA for deeper insights.

Use Case: Track application metrics and logs in a single AWS dashboard.

How: Forward logs from OpenShift to CloudWatch using the OpenShift Logging ClusterLogForwarder, which supports CloudWatch as an output, or with Fluent Bit.

5. Serverless Integration with AWS Lambda

Bridge your ROSA applications with AWS Lambda for event-driven workloads.

Use Case: Triggering a Lambda function on file upload to S3.

How: Have your ROSA app upload files to S3, and configure S3 event notifications (sent directly or routed through Amazon EventBridge) to invoke the Lambda function.
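Here is a minimal Python Lambda handler for the upload case. It assumes S3 event notifications invoke the function directly. Events routed through EventBridge arrive in a differently shaped payload. The actual processing step is left as a placeholder.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Collect (bucket, key) pairs from an S3 event notification.

    S3 URL-encodes object keys in the event (a space arrives as '+'),
    so each key is decoded before use.
    """
    uploads = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
        # Placeholder: real processing (e.g. generating a thumbnail) goes here.
    return {"processed": uploads}
```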

🔒 Security Best Practices

Use IAM Roles for Service Accounts (IRSA) to avoid hardcoding credentials.

Use AWS Secrets Manager or HashiCorp Vault for managing secrets securely.

Use AWS PrivateLink and VPC endpoints to keep traffic within AWS private network boundaries.

🚀 Getting Started

To start integrating your ROSA applications with AWS:

Deploy your ROSA cluster using the AWS Management Console or CLI

Set up AWS CLI & IAM permissions

Enable the AWS services needed (e.g., RDS, S3, Lambda)

Create Kubernetes Secrets and ConfigMaps for service integration

Use ServiceAccounts, RBAC, and IRSA for secure access

🎯 Final Thoughts

ROSA is not just about running Kubernetes on AWS—it's about unlocking the true hybrid cloud potential by integrating with a rich ecosystem of AWS services. Whether you're building microservices, data pipelines, or enterprise-grade applications, ROSA + AWS gives you the tools to scale confidently, operate securely, and innovate rapidly.

If you're interested in hands-on workshops, consulting, or ROSA enablement for your team, feel free to reach out to HawkStack Technologies – your trusted Red Hat and AWS integration partner.

💬 Let's Talk!

Have you tried ROSA yet? What AWS services are you integrating with your OpenShift workloads? Share your experience or questions in the comments!

For more details www.hawkstack.com


Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.
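Rate limiting in gateways is often built on a token bucket. The Python class below is a single-process sketch of that idea. It is not how Kong or AWS API Gateway implement it internally. A real gateway keeps one bucket per client key and shares state across nodes. The clock is injectable so the behavior can be tested.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should reject the request, e.g. with HTTP 429
```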

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.
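To make the registry's job concrete, here is a toy in-memory version in Python. Instances send heartbeats, and any entry older than a TTL is treated as a failed health check and evicted. Lookups then rotate round-robin across the remaining instances. Consul, Eureka and etcd add persistence, replication and real health probes. The class and method names here are hypothetical.

```python
import itertools
import time


class ServiceRegistry:
    """In-memory registry with TTL-based eviction and round-robin lookup."""

    def __init__(self, ttl: float = 30.0, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._instances = {}  # service name -> {address: last heartbeat time}
        self._counters = {}   # service name -> round-robin counter

    def heartbeat(self, service: str, address: str) -> None:
        """Register an instance or refresh its lease."""
        self._instances.setdefault(service, {})[address] = self.clock()

    def live_instances(self, service: str) -> list:
        now = self.clock()
        entries = self._instances.get(service, {})
        # Evict instances whose last heartbeat is older than the TTL.
        for addr in [a for a, seen in entries.items() if now - seen > self.ttl]:
            del entries[addr]
        return sorted(entries)

    def resolve(self, service: str) -> str:
        """Return one live instance, rotating across calls (simple load balancing)."""
        live = self.live_instances(service)
        if not live:
            raise LookupError(f"no live instances of {service!r}")
        counter = self._counters.setdefault(service, itertools.count())
        return live[next(counter) % len(live)]
```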

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by running the new version in a separate environment alongside the old one and switching traffic over once it is verified.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.
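A canary release needs a stable way to choose its "small subset of users". One common approach assumes every request carries a user ID. It hashes that ID into one of 10,000 buckets and sends the lowest buckets to the new version, as the Python sketch below shows. The version labels are hypothetical.

```python
import hashlib


def in_canary(user_id: str, percent: float) -> bool:
    """Deterministically decide whether a user gets the canary release.

    Hashing the user ID (instead of picking randomly per request) keeps each
    user on the same version for the whole rollout.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < percent * 100  # e.g. percent=5 selects buckets 0..499


def route(user_id: str, percent: float) -> str:
    """Pick the (hypothetical) deployment that should serve this user."""
    return "v2-canary" if in_canary(user_id, percent) else "v1-stable"
```

A user in the 5% slice also falls inside any larger slice. Raising the percentage during a rollout therefore only adds users and never moves anyone back to the old version.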

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

NoSQL Databases: Opt for MongoDB or Cassandra for unstructured data.

Event Sourcing: Record every state change as an immutable event, and use brokers such as Kafka or RabbitMQ to distribute those events across an event-driven architecture.

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using tools like Resilience4j or Hystrix (Hystrix is now in maintenance mode).

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.
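The circuit-breaker pattern behind Hystrix and Resilience4j fits in a few lines of Python. This simplified, single-threaded sketch opens after a run of consecutive failures and fails fast while open. After a cool-down it lets one trial call through (the half-open state). Libraries add sliding failure windows, thread safety and metrics on top of this.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling the dependency while the circuit is open."""


class CircuitBreaker:
    """Closed -> open after `max_failures` consecutive failures; after
    `reset_timeout` seconds a single trial call is allowed (half-open)."""

    def __init__(self, max_failures: int = 3, reset_timeout: float = 30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("dependency unavailable; failing fast")
            # Cool-down elapsed: half-open, so this call is the trial.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open after a failed trial)
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```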

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.
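With event sourcing, current state is derived rather than stored. The Python sketch below uses a hypothetical account with Deposited and Withdrawn events. It rebuilds state by replaying an append-only list of events. Replaying only a prefix of that log reconstructs any earlier state for auditing or debugging. In production the log would live in a durable store or a Kafka topic rather than an in-memory list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str    # "Deposited" or "Withdrawn" (hypothetical event types)
    amount: int


def apply(balance: int, event: Event) -> int:
    """Pure state transition: current state + one event -> next state."""
    if event.kind == "Deposited":
        return balance + event.amount
    if event.kind == "Withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: list, upto=None) -> int:
    """Rebuild state by folding over the log; `upto` replays only the first N events."""
    balance = 0
    for event in events[:upto]:
        balance = apply(balance, event)
    return balance
```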

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer or Kubernetes Cost Management.

Right-Sizing: Adjust resources to match service needs.
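On Kubernetes, autoscaling is usually handled by the Horizontal Pod Autoscaler. Its core rule is desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric). The Python function below reproduces that rule, including the default 10% tolerance and min/max clamping. The real controller also applies stabilization windows and ignores pods that are not yet ready, which this sketch omits.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA rule: scale by the ratio of observed to target metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 replicas at 90% CPU against a 60% target scale to ceil(3 * 1.5) = 5.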

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.


Essential Tools to Take Your Web Development to the Next Level

To take your web development skills to the next level, here are some essential tools that can help:

1. Code Editors and IDEs:

VS Code: A powerful, extensible code editor that supports a wide range of languages, extensions, and debugging tools.

Sublime Text: A fast and feature-rich editor with support for multiple programming languages and a sleek interface.

Atom: An open-source, customizable text editor. Note that GitHub archived Atom in December 2022, so it no longer receives updates.

2. Version Control Systems:

Git: A version control tool to track changes in code and collaborate efficiently with other developers.

GitHub/GitLab/Bitbucket: Platforms for hosting Git repositories and collaborating with teams.

3. Front-End Frameworks:

React.js: A JavaScript library for building dynamic and interactive user interfaces.

Vue.js: A progressive JavaScript framework for building web interfaces.

Angular: A robust framework for creating scalable and structured web apps.

Tailwind CSS: A utility-first CSS framework for building custom designs quickly.

Bootstrap: A popular CSS framework for building responsive and mobile-first websites.

4. Back-End Frameworks:

Node.js: A JavaScript runtime for building scalable server-side applications.

Express.js: A minimal web framework for Node.js, often used for building APIs and web apps.

Django: A high-level Python web framework for building secure and maintainable websites.

Ruby on Rails: A full-stack framework built on Ruby, known for rapid development and ease of use.

5. Database Management:

MySQL: A widely used relational database management system.

MongoDB: A NoSQL database that's flexible and scalable.

PostgreSQL: A powerful, open-source object-relational database system.

Firebase: A cloud-based real-time database with simple authentication and data synchronization.

6. Package Managers:

npm: Node.js package manager for managing JavaScript libraries and dependencies.

Yarn: An alternative package manager for JavaScript with a focus on performance and reliability.

7. API Tools:

Postman: A powerful tool for testing and interacting with APIs.

Swagger: An open-source framework for API documentation, design, and testing.

8. Task Runners & Module Bundlers:

Webpack: A static module bundler for JavaScript, CSS, and other assets.

Gulp: A task runner used for automating repetitive development tasks.

Parcel: A zero-config bundler that is easy to use and fast.

9. CSS Preprocessors:

Sass: A CSS preprocessor that extends CSS with variables, nested rules, and functions.

Less: A preprocessor with features like variables and functions to make CSS more manageable.

10. Testing Tools:

Jest: A testing framework for JavaScript, commonly used for testing React apps.

Mocha: A flexible JavaScript testing framework for Node.js.

Cypress: An end-to-end testing framework for web applications.

Selenium: A tool for automating web browsers, useful for functional and UI testing.

11. Containerization & Deployment:

Docker: A platform for building, running, and shipping applications inside containers.

Kubernetes: An orchestration platform for automating the deployment, scaling, and management of containerized applications.

Netlify: A platform for continuous deployment of web apps with automatic scaling.

Vercel: A platform that provides serverless deployment and front-end hosting.

12. UI/UX Design Tools:

Figma: A collaborative interface design tool for creating web and app prototypes.

Adobe XD: A vector-based tool for designing and prototyping user experiences.

Sketch: A design tool for web and mobile interfaces, available for macOS.

13. Collaboration Tools:

Slack: A messaging platform for team communication and collaboration.

Trello: A task management tool for organizing and prioritizing tasks in a project.

Asana: A work management platform that helps teams plan, organize, and execute projects.

Using these tools effectively can streamline your workflow, help you collaborate better with teams, and enhance the quality of your web development projects.


Why Java Spring Boot is Ideal for Building Microservices

In modern software development, microservices have become the go-to architecture for creating scalable, flexible, and maintainable applications. Spring Boot is one of the most popular Java frameworks for building microservices, thanks to its simplicity, powerful features, and seamless integration with other technologies. In this blog, we will explore why Java Spring Boot is an ideal choice for building microservices.

What are Microservices?

Microservices architecture is a design pattern where an application is broken down into smaller, independent services that can be developed, deployed, and scaled individually. Each microservice typically focuses on a specific business functionality, and communicates with other services via APIs (often RESTful). Microservices offer several advantages over traditional monolithic applications, including improved scalability, flexibility, and maintainability.

Why Spring Boot for Microservices?

Spring Boot, a lightweight, open-source Java framework, simplifies the development of stand-alone, production-grade applications. It comes with several features that make it an excellent choice for building microservices. Here are some key reasons why:

1. Rapid Development with Minimal Configuration

Spring Boot is known for its "convention over configuration" approach, which makes it incredibly developer-friendly. It removes the need for complex XML configurations, allowing developers to focus on the business logic rather than boilerplate code. For microservices, this means you can quickly spin up new services with minimal setup, saving time and increasing productivity.

Spring Boot comes with embedded servers (like Tomcat, Jetty, and Undertow), so you don’t need to worry about setting up and managing separate application servers. This makes deployment and scaling easier in microservices environments.

2. Microservice-Friendly Components

Spring Boot is tightly integrated with the Spring Cloud ecosystem, which provides tools specifically designed for building microservices. Some of these key components include:

Spring Cloud Config: Centralizes configuration management for multiple services in a microservices architecture, allowing you to manage configuration properties in a version-controlled repository.

Spring Cloud Netflix: Provides Eureka for service discovery. Earlier versions also bundled Hystrix (for fault tolerance) and Ribbon (for client-side load balancing). These were removed in the Spring Cloud 2020.0 release train, and Spring Cloud Circuit Breaker (with Resilience4j) and Spring Cloud LoadBalancer now fill those roles.

Spring Cloud Gateway: Provides a simple, effective way to route requests to different microservices, offering features like load balancing, security, and more.

Spring Cloud Stream: A framework for building event-driven microservices, making it easier to work with messaging middleware (e.g., RabbitMQ, Kafka).

These tools help you quickly build and manage your microservices in a distributed architecture.

3. Scalability and Flexibility

One of the main reasons organizations adopt microservices is the ability to scale individual components independently. Spring Boot’s lightweight nature makes it an ideal choice for microservices because it enables easy scaling both vertically (scaling up resources for a single service) and horizontally (scaling across multiple instances of a service).

With Spring Boot, you can run multiple instances of microservices in containers (e.g., Docker) and orchestrate them using platforms like Kubernetes. This makes it easier to handle high traffic, optimize resource usage, and maintain high availability.

4. Fault Tolerance and Resilience

In a microservices architecture, failures in one service can affect others. The Spring ecosystem provides mechanisms for fault tolerance and resilience, which are critical for maintaining the integrity and uptime of your application. With Spring Cloud Circuit Breaker, you can implement circuit breakers that prevent cascading failures. Older applications used Spring Cloud Netflix Hystrix for this, but Hystrix is now in maintenance mode.

By using tools like Resilience4j, Spring Boot makes it easier to implement strategies like retries, timeouts, and fallbacks to ensure your services remain resilient even when some of them fail.

5. Easy Integration with Databases and Messaging Systems

Microservices often require interaction with various data stores and messaging systems. Spring Boot makes this integration straightforward by providing support for relational databases (like MySQL, PostgreSQL), NoSQL databases (like MongoDB, Cassandra), and message brokers (like RabbitMQ, Kafka).

With Spring Data, you can easily interact with databases using a simplified repository model, without having to write much boilerplate code. This enables microservices to manage their own data stores, promoting the independence of each service.

6. Security Features

Security is critical in microservices, as services often need to communicate with each other over the network. Spring Security provides a comprehensive security framework that integrates well with Spring Boot. With Spring Security, you can secure your microservices with features like:

Authentication and Authorization: Implementing OAuth2, JWT tokens, or traditional session-based authentication to ensure that only authorized users or services can access certain endpoints.

Secure Communication: Enabling HTTPS, encrypting data in transit, and ensuring that communications between services are secure.

Role-Based Access Control (RBAC): Ensuring that each microservice has the appropriate permissions to access certain resources.

These security features help ensure that your microservices are protected from unauthorized access and malicious attacks.

7. Monitoring and Logging

Monitoring and logging are essential for maintaining microservices in a production environment. With Spring Boot, you can easily implement tools like Spring Boot Actuator to expose useful operational information about your microservices, such as metrics, health checks, and system properties.

In addition, Spring Cloud Sleuth provides distributed tracing capabilities, allowing you to trace requests as they flow through multiple services. In Spring Boot 3, this role has moved to Micrometer Tracing. Distributed tracing helps you track and diagnose issues more efficiently in a microservices architecture.

Conclusion

Spring Boot provides a solid foundation for building microservices, making it an excellent choice for Java developers looking to implement a modern, scalable, and resilient application architecture. The framework's ease of use, integration with Spring Cloud components, scalability, and security features are just a few of the reasons why Spring Boot is an ideal platform for microservices.

As a Java full-stack developer, understanding how to build microservices with Spring Boot will not only enhance your skill set but also open doors to working on more complex and modern systems. If you're looking to develop scalable, flexible, and fault-tolerant applications, Java Spring Boot is the right tool for the job.


1. Assess Application and Cluster Readiness

Review Application Dependencies:

Analyze libraries, JDK versions, frameworks (e.g., Spring Boot, Jakarta EE), and external services (databases, APIs).

Identify any Kubernetes-specific configurations (e.g., ConfigMaps, Secrets, environment variables).

Containerization:

Ensure your Java app is containerized using tools like Docker.

Update the Dockerfile if necessary (e.g., for JDK/JRE updates or changes in base image).

2. Prepare Kubernetes Environment

Target Cluster Validation:

Ensure the target Kubernetes cluster is set up and ready.

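Check that the API versions your manifests use (for example, apps/v1 for Deployments) are served by the target cluster's Kubernetes version, and that the cluster has the resources your workloads need.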

Namespace Setup:

Create equivalent namespaces, RBAC roles, and policies in the target cluster.

Storage:

Verify Persistent Volume Claims (PVCs) and ensure similar storage classes are available.

3. Backup and Export Resources

Kubernetes Resources:

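Export application-related resources using kubectl. Note that kubectl get all returns only common workload resources (Pods, Services, Deployments, StatefulSets, and similar) and skips ConfigMaps, Secrets, PersistentVolumeClaims, and Ingresses. List the types you need explicitly, and export controllers such as Deployments rather than the Pods and ReplicaSets they manage:

```bash
kubectl get deployment,statefulset,service,ingress,pvc -n <namespace> -o yaml > resources.yaml
```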

Persistent Data:

Back up database data or persistent volumes using tools like Velero or rsync.

4. Migrate Application Configurations

ConfigMaps and Secrets:

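Export existing configurations. The Secret values in secret.yaml are only base64-encoded, not encrypted, so handle that file as sensitive:

```bash
kubectl get configmap -n <namespace> -o yaml > configmap.yaml
kubectl get secret -n <namespace> -o yaml > secret.yaml
```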

Reapply them in the new cluster:

```bash
kubectl apply -f configmap.yaml
kubectl apply -f secret.yaml
```
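Manifests exported with kubectl get carry fields that the source cluster assigned: UIDs, resource versions, timestamps, status, and Service cluster IPs. These can make kubectl apply fail or conflict on the new cluster. The Python sketch below strips them from JSON output (kubectl get ... -o json). The field list is a reasonable starting point, not an exhaustive one.

```python
import json

# Fields the source cluster assigns; carrying them over can cause apply conflicts.
CLUSTER_ASSIGNED_METADATA = ("uid", "resourceVersion", "creationTimestamp",
                             "generation", "managedFields", "selfLink")
LAST_APPLIED = "kubectl.kubernetes.io/last-applied-configuration"


def clean_object(obj: dict) -> dict:
    """Return a copy of one exported object without cluster-assigned fields."""
    obj = json.loads(json.dumps(obj))  # deep copy; the input stays untouched
    obj.pop("status", None)
    metadata = obj.get("metadata", {})
    for field in CLUSTER_ASSIGNED_METADATA:
        metadata.pop(field, None)
    metadata.get("annotations", {}).pop(LAST_APPLIED, None)
    spec = obj.get("spec", {})
    # Cluster IPs are allocated per cluster, but "None" marks a headless
    # Service and must be kept.
    if obj.get("kind") == "Service" and spec.get("clusterIP") != "None":
        spec.pop("clusterIP", None)
        spec.pop("clusterIPs", None)
    return obj


def clean_list(exported: dict) -> dict:
    """Clean every item of a List produced by kubectl get ... -o json."""
    return {**exported, "items": [clean_object(item) for item in exported.get("items", [])]}
```

To use it, load the exported file with json.load, pass it to clean_list, and write the result back out before running kubectl apply -f on it.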

5. Deploy to Target Cluster

Deploy Application:

Use Helm charts, Kubernetes manifests, or CI/CD pipelines to deploy the Java application to the new cluster.

Switch kubectl to the target cluster's context and apply the exported manifests:

```bash
kubectl config use-context <target-cluster-context>
kubectl apply -f resources.yaml
```

Persistent Volumes:

Ensure data is restored to the persistent volumes if needed.

6. Test the Migration

Validation:

Ensure the application is running as expected (pods, services, and networking).

Run integration and regression tests.

Connectivity:

Validate external service integrations (e.g., databases, APIs).

Check ingress configurations for public access.

7. Cutover and Cleanup

Switch Traffic:

Update DNS or external load balancer to direct traffic to the new cluster.

Monitor:

Use tools like Prometheus, Grafana, or ELK Stack to monitor application health.

Clean Up Old Environment:

After verifying stability, clean up resources in the old cluster.

Tools to Assist in Migration

Velero: For backup and restore of Kubernetes resources and persistent volumes.

Helm: To manage application deployments and upgrades.

Kubernetes Dashboard: For real-time cluster monitoring.

Kubectl Plugins: To enhance CLI functionality.

If you provide more details about the migration (e.g., specific cloud providers or Kubernetes environments), I can offer more tailored advice!

You said:

Migration of 5000 files per hour: fix

ChatGPT said:

If you're migrating a large number of files (e.g., 5000 files per hour) and encountering performance bottlenecks, you can improve the speed and reliability of your file migration by addressing several key areas. Here's a step-by-step guide to optimize file transfer:

1. Identify and Analyze Bottlenecks

File System Bottlenecks:

Check the source and destination disk I/O performance.

Ensure the files are not locked or being used during migration.

Network Bandwidth:

Measure your available network bandwidth using tools like iperf and ensure it can handle the expected load.

File Metadata Overhead:

Small files can add significant overhead due to metadata operations (e.g., file creation, permissions).

2. Optimize Transfer Mechanisms

Use Parallel Processing:

Transfer multiple files concurrently using multithreaded or parallel tools like:

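Several rsync processes at once. rsync has no built-in --parallel option and copies one file at a time per process, and --max-size only skips files above a given size.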

GNU Parallel for concurrent file transfers.

```bash
# -R (--relative) recreates each file's subdirectory path under the destination
cd /source/directory && find . -type f | parallel -j8 rsync -aR {} /destination/directory/
```

Use a custom script or a library like Python's concurrent.futures for more control.
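Here is a minimal concurrent.futures version, assuming the destination is a local path or a mounted network share. Threads suit this job because copying is I/O-bound. Failures are collected instead of aborting the run, so they can be retried afterward.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor, as_completed
from pathlib import Path


def copy_one(src_root: Path, dst_root: Path, path: Path) -> Path:
    """Copy one file, recreating its relative directory under dst_root."""
    target = dst_root / path.relative_to(src_root)
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copy2(path, target)  # copy2 also preserves timestamps
    return target


def parallel_copy(src: str, dst: str, workers: int = 8):
    """Copy every file under src to dst with a thread pool.

    Returns (copied_count, failures), where failures lists (path, error) pairs.
    """
    src_root, dst_root = Path(src), Path(dst)
    files = [p for p in src_root.rglob("*") if p.is_file()]
    copied, failures = 0, []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(copy_one, src_root, dst_root, p): p for p in files}
        for future in as_completed(futures):
            try:
                future.result()
                copied += 1
            except OSError as exc:
                failures.append((futures[future], exc))
    return copied, failures
```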

Batch File Transfers:

Group files into batches (e.g., tar archives) to reduce the overhead of transferring many small files individually:

```bash
tar -cvf files.tar -C /source files && rsync files.tar /destination/
```

Extract the files on the destination:

```bash
tar -xvf /destination/files.tar -C /destination
```
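When there are too many files for a single archive, you can script the batching step. The Python sketch below uses the standard tarfile module to split files into numbered archives of a fixed size. The archive naming scheme and the 1,000-file default are assumptions. Each archive is then transferred and extracted with tar -xf ... -C on the destination. Keep the output directory outside the source tree.

```python
import tarfile
from pathlib import Path


def batch_into_tars(src: str, out_dir: str, files_per_archive: int = 1000) -> list:
    """Pack files under src into numbered tar archives of at most
    files_per_archive members, storing paths relative to src."""
    src_root, out = Path(src), Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    files = sorted(p for p in src_root.rglob("*") if p.is_file())
    archives = []
    for start in range(0, len(files), files_per_archive):
        archive = out / f"batch-{start // files_per_archive:05d}.tar"
        with tarfile.open(str(archive), "w") as tar:
            for path in files[start:start + files_per_archive]:
                tar.add(str(path), arcname=str(path.relative_to(src_root)))
        archives.append(archive)
    return archives
```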

3. Ensure Network Optimization

Compression:

Compress files during transfer to reduce data volume:

```bash
rsync -az /source/directory /destination/directory
```

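Tune TCP Window Size:

TCP window sizes are set by the operating system (on Linux, through sysctl settings such as net.ipv4.tcp_rmem and net.ipv4.tcp_wmem), not by scp or rsync options. On the rsync side, --whole-file skips the delta-transfer algorithm, which saves CPU when the destination has no earlier copies of the files. --bwlimit=0 (the default) applies no bandwidth limit:

```bash
rsync -a --bwlimit=0 --whole-file /source /destination
```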

Use Faster Protocols:

Use high-throughput transfer tools such as Rclone (many parallel transfers) or IBM Aspera (UDP-based FASP protocol). SFTP is convenient but does not by itself speed up transfers.

4. Improve Source and Destination Performance

Disk Performance:

Optimize disk reads and writes using SSDs for temporary storage or caches.

Ensure the file system supports efficient file handling (e.g., ext4, XFS).

File System Tuning:

For very large numbers of files, keep file metadata in memory. On Linux, lowering the vm.vfs_cache_pressure sysctl makes the kernel keep cached dentries and inodes longer.

5. Error Handling and Retry Logic

Retry Failed Transfers:

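rsync does not retry on its own, but with --partial it keeps partially transferred files. Rerunning the same command then resumes those files and skips files that are already complete:

```bash
rsync -avz --partial --progress /source /destination
```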
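Because rsync itself does not retry, a wrapper can rerun the transfer with exponential backoff. The Python helper below is a generic sketch that retries any callable on the listed exception types. It doubles the wait each time up to a cap and adds random jitter. The parameter defaults are assumptions to tune.

```python
import random
import time


def retry(func, attempts: int = 5, base_delay: float = 1.0, max_delay: float = 30.0,
          retry_on=(OSError,), sleep=time.sleep):
    """Call func() until it succeeds or attempts run out.

    Waits a random time up to base_delay * 2**n (capped at max_delay) between
    tries, so many clients retrying at once do not retry in lockstep.
    """
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

To wrap rsync, pass lambda: subprocess.run(["rsync", "-a", "--partial", src, dst], check=True) together with retry_on=(subprocess.CalledProcessError,). With check=True, a non-zero exit status is raised as that exception.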

Logging and Monitoring:

Log all transferred files and

You said:

File transfer to S3: improve

ChatGPT said:

Improving file transfer to Amazon S3 requires addressing both performance and reliability. Below are strategies and tools to optimize large-scale or high-volume file transfers:

1. Use AWS-Specific Tools

AWS CLI with Parallelism:

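The AWS CLI has no --parallel or --multipart-chunk-size-mb flags (the latter belongs to s3cmd). The high-level aws s3 cp --recursive and aws s3 sync commands already upload files in parallel and split large files into multipart uploads:

```bash
aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD_IA --region <region>
```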

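Tune parallel transfers: concurrency and part sizes come from the s3 section of your AWS CLI configuration file (~/.aws/config). The defaults are 10 concurrent requests and an 8 MB multipart threshold and chunk size. To actually increase concurrency, raise max_concurrent_requests above 10, for example:

```ini
[default]
s3 =
  max_concurrent_requests = 20
  max_queue_size = 1000
  multipart_threshold = 64MB
  multipart_chunksize = 64MB
```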

AWS DataSync:

Use AWS DataSync for automated, high-performance, large-scale transfers with minimal manual intervention. DataSync can also manage retry and error handling.

2. Optimize S3 Uploads

Multipart Upload:

Use multipart uploads for files larger than 100 MB to improve transfer speed and reliability.

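With the low-level AWS CLI, create-multipart-upload is only the first step. Each part is then sent with upload-part, and the object is assembled with complete-multipart-upload (aws s3 cp performs all three steps automatically):

```bash
aws s3api create-multipart-upload --bucket your-bucket-name --key file_key
```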

Automate multipart uploads with an AWS SDK such as boto3 for Python, whose upload_file method switches to multipart uploads automatically for large files.
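The Python function below shows how a multipart upload is divided by planning the parts for a file of a given size. It encodes two S3 limits. Every part except the last must be at least 5 MiB, and an upload may have at most 10,000 parts, so the chunk size grows for very large files. The 64 MiB default mirrors the CLI setting above and is an assumption. Each planned part corresponds to one boto3 upload_part call, made between create_multipart_upload and complete_multipart_upload. Because parts are independent, a single failed chunk can be retried on its own.

```python
import math

MIB = 1024 * 1024
MIN_PART = 5 * MIB   # S3 minimum size for every part except the last
MAX_PARTS = 10_000   # S3 maximum number of parts per upload


def plan_parts(file_size: int, chunk_size: int = 64 * MIB) -> list:
    """Return (part_number, offset, length) tuples for a multipart upload.

    The chunk size is raised when needed so the file fits in 10,000 parts.
    """
    chunk = max(chunk_size, MIN_PART, math.ceil(file_size / MAX_PARTS))
    return [
        (number, offset, min(chunk, file_size - offset))
        for number, offset in enumerate(range(0, file_size, chunk), start=1)
    ]
```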

Use the Correct Storage Class:

Choose the appropriate storage class to control storage costs. The storage class does not change upload speed:

STANDARD (default): High performance for frequently accessed data.

INTELLIGENT_TIERING: Automatically moves objects between access tiers to reduce costs when access patterns are unknown or change over time.

3. Improve Network Performance

Enable Transfer Acceleration:

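Use S3 Transfer Acceleration for faster uploads over long distances by leveraging AWS edge locations. Acceleration must first be enabled on the bucket. The CLI then uses the global accelerate endpoint, not a bucket-specific hostname:

```bash
aws s3api put-bucket-accelerate-configuration --bucket your-bucket-name --accelerate-configuration Status=Enabled
aws s3 cp /local/path s3://your-bucket-name --recursive --region <region> --endpoint-url https://s3-accelerate.amazonaws.com
```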

Compression:

Compress files before transferring to reduce data size:

```bash
tar -czvf archive.tar.gz /local/path && aws s3 cp archive.tar.gz s3://your-bucket-name
```

Optimize Network:

Use AWS Direct Connect or a high-bandwidth internet connection for large-scale transfers.

Monitor latency and bandwidth with tools like iperf.

4. Automate and Parallelize Transfers

Rclone:

Use rclone for high-speed S3-compatible transfers with parallelism and retry capabilities. Here, s3: is an rclone remote configured for your AWS account:

```bash
rclone copy /local/path s3:your-bucket-name --transfers 16 --s3-upload-concurrency 16
```

GNU Parallel:

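Transfer files concurrently by splitting file lists. Printing paths relative to the source (GNU find's -printf '%P\n') keeps the directory structure in the object keys instead of uploading every file to the bucket root:

```bash
cd /local/path && find . -type f -printf '%P\n' | parallel -j10 aws s3 cp {} s3://your-bucket-name/{}
```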

5. Error Handling and Retry

Retry Mechanisms:

Use tools or scripts that automatically retry failed uploads.

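The AWS CLI has built-in retry logic. The number of attempts is set with the AWS_MAX_ATTEMPTS environment variable (or max_attempts in ~/.aws/config). --cli-read-timeout and --cli-connect-timeout only control how long each request waits:

```bash
export AWS_RETRY_MODE=standard
export AWS_MAX_ATTEMPTS=10
aws s3 cp --recursive --cli-read-timeout 600 --cli-connect-timeout 600 /local/path s3://your-bucket-name
```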

Chunked Retry:

Break large transfers into smaller, manageable chunks and retry failed chunks only.

6. Monitor and Optimize Transfers

AWS CloudWatch Metrics:

Monitor transfer performance and error rates in real-time.

S3 Inventory:

Use S3 inventory reports to validate uploaded files.

AWS SDKs or Custom Scripts:

Build custom monitoring and retry logic with SDKs like boto3 (Python).
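For validation, comparing what should have been uploaded with what the bucket reports is often enough. The sketch below assumes you have {key: size} maps for both sides. For example, the local map can be built by walking the source directory, and the remote map parsed from an S3 Inventory report or an object listing. It compares sizes only, because the ETag of a multipart upload is not the MD5 of the whole file.

```python
from pathlib import Path


def local_manifest(root: str) -> dict:
    """Map each file's path relative to root (as an S3-style key) to its size."""
    base = Path(root)
    return {p.relative_to(base).as_posix(): p.stat().st_size
            for p in base.rglob("*") if p.is_file()}


def diff_manifests(local: dict, remote: dict) -> dict:
    """Report keys missing from the bucket, size mismatches, and extra objects."""
    return {
        "missing": sorted(k for k in local if k not in remote),
        "size_mismatch": sorted(k for k in local if k in remote and remote[k] != local[k]),
        "unexpected": sorted(k for k in remote if k not in local),
    }
```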

Example Optimized Upload Command

```bash
aws s3 cp /local/path s3://your-bucket-name --recursive --storage-class STANDARD --region us-east-1 --cli-read-timeout 600 --cli-connect-timeout 600
```

When to Use Snowball or Snowmobile

For extremely large datasets (terabytes or petabytes), use AWS Snowball or AWS Snowmobile for offline data transfer to S3.

These steps can dramatically improve the efficiency and reliability of your file transfer process to S3.


You said:

Improve file transfers

ChatGPT said:


Improving file transfer performance and reliability, whether between servers, local systems, or to cloud storage like Amazon S3, involves a combination of strategies. Here are some best practices for improving file transfers:

1. Optimize the File Transfer Process

Parallelize Transfers

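Transfer multiple files concurrently to improve throughput:

rsync and scp send one file at a time over a single stream. Run several instances in parallel (for example, one per subdirectory), or use cloud SDKs with multithreaded transfers.

Example with rsync. --info=progress2 reports overall progress. Avoid --max-size unless you intend to skip large files:

```bash
rsync -azP --info=progress2 --exclude="*.tmp" /source/ /destination/
```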

Batch Small Files

Many small files add per-file overhead during transfer. Bundle them into an archive:

```bash
tar -czvf archive.tar.gz /source/
```

Transfer the archive, then extract it on the destination:

```bash
tar -xzvf archive.tar.gz
```

2. Use High-Performance Transfer Tools

Rclone

Ideal for cloud storage and large-scale transfers with parallelism:

```bash
rclone copy /source/ remote:bucket-name --transfers=16 --checkers=8
```

GNU Parallel

Parallelize file uploads/downloads:

```bash
find /source/ -type f | parallel -j8 scp {} user@destination:/target/
```

Multithreaded Tools

Use tools with built-in multistream transfers, such as bbcp or Aspera, or run several scp processes in parallel with a script.

3. Network Optimization

Compression

Compress files before transfer to reduce the amount of data transmitted:

```bash
gzip largefile.txt
```

Or compress on the fly as part of the transfer:

```bash
tar czf - /source/ | ssh user@destination "tar xzf - -C /target/"
```

Bandwidth Management

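Cap bandwidth so a bulk transfer does not saturate a shared link or trigger throttling:

With rsync, --bwlimit is in KiB per second by default, so 1000 is roughly 1 MB/s:

```bash
rsync --bwlimit=1000 -az /source/ /destination/
```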

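Tune TCP on high-bandwidth, high-latency links. Window scaling is enabled by default on modern Linux kernels, so check it before changing anything; setting kernel parameters requires root:

```bash
sysctl net.ipv4.tcp_window_scaling            # 1 means enabled
sudo sysctl -w net.ipv4.tcp_window_scaling=1
```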

Use Faster Protocols

Use optimized transfer protocols:

SFTP: Secure but slower for high-volume transfers.

Rsync/SSH: Efficient for incremental and large transfers.

Aspera: High-speed UDP-based protocol.

Enable Transfer Acceleration (Cloud)

For Amazon S3 (after enabling Transfer Acceleration on the bucket):

```bash
aws s3 cp /source/ s3://your-bucket/ --recursive --region <region> --endpoint-url https://s3-accelerate.amazonaws.com
```

4. Error Handling and Resilience

Resume Transfers

Use tools that support resuming failed transfers:

Rsync: --partial keeps partially transferred files, so a rerun can continue from them:

```bash
rsync -az --partial /source/ /destination/
```

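AWS CLI: aws s3 cp has no resume option. However, aws s3 sync skips files that already exist unchanged at the destination, so rerunning it picks up where an interrupted directory upload stopped:

```bash
aws s3 sync /source/ s3://bucket-name/
```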

Retry Logic

Implement retry logic for failed transfers using scripts or tools.

Example using bash:

```bash
for i in {1..5}; do
  scp file user@destination:/target/ && break
  sleep 5
done
```

5. Monitor and Analyze Performance

Real-Time Monitoring

Monitor progress with tools:

Rsync: --progress flag.

tar over SSH: pipe the stream through pv to visualize progress (scp itself already prints a progress meter):

```bash
tar cf - /source/ | pv | ssh user@destination "tar xf - -C /target/"
```

Analyze Bottlenecks

Use tools like iperf or nload to check network performance.

Use iotop or dstat to monitor disk I/O on both source and destination.

6. Scale with Advanced Solutions

Distributed File Transfer

Use tools like Globus for efficient distributed data transfers.

Data Transfer Appliances

For extremely large datasets, consider appliances like AWS Snowball or offline methods to ship data physically.

Content Delivery Networks (CDNs)

Use CDNs for faster file distribution to end users.

By combining these strategies (parallelism, compression, protocol optimization, and monitoring), you can significantly improve file transfer speed and reliability.


You said:

Threadpool

ChatGPT said:


A thread pool is a collection of pre-initialized worker threads that stand ready to perform tasks. Instead of creating and destroying threads for each task, a thread pool manages a set of reusable threads, improving performance and resource utilization in multi-threaded applications.

Here’s how to effectively use and optimize thread pools in your application.

Why Use a Thread Pool?

Performance: Reduces the overhead of creating and destroying threads repeatedly.

Resource Management: Controls the number of concurrent threads, preventing system overload.

Scalability: Allows a controlled environment for task execution, making it easier to scale applications.

Key Concepts of Thread Pools

Core Threads: Minimum number of threads that are always kept alive.

Max Threads: Maximum number of threads the pool may create when demand exceeds the core threads.
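In Java, core and maximum thread counts map directly onto `java.util.concurrent.ThreadPoolExecutor`. The sketch below is one possible configuration; the sizes are illustrative, not recommendations. With a bounded queue, this executor grows past its core size only when the queue is full. The `CallerRunsPolicy` makes the submitting thread run a task itself when both the pool and the queue are saturated, which slows producers down instead of rejecting work.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class TransferPool {

    /** Builds a bounded thread pool for transfer tasks. */
    static ThreadPoolExecutor create(int coreThreads, int maxThreads, int queueCapacity) {
        return new ThreadPoolExecutor(
                coreThreads,                                // kept alive even when idle
                maxThreads,                                 // upper bound under load
                60, TimeUnit.SECONDS,                       // idle threads above core size exit after this
                new ArrayBlockingQueue<>(queueCapacity),    // bounded queue of waiting tasks
                new ThreadPoolExecutor.CallerRunsPolicy()); // back-pressure instead of rejection
    }
}
```

Call `shutdown()` followed by `awaitTermination(...)` when the application stops, so queued transfers can finish.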


You said:

Improve java spring file transfer

ChatGPT said:


Improving file transfer in a Java Spring application involves optimizing both the code and the underlying infrastructure. Below are strategies to enhance file transfer performance and reliability.

1. Optimize File Transfer Code

Use Streaming for Large Files

Avoid loading the entire file into memory; stream it to its destination instead:

```java
@PostMapping("/upload")
public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException {
    // Drop any directory components the client sent in the file name
    String safeName = Paths.get(file.getOriginalFilename()).getFileName().toString();
    try (InputStream inputStream = file.getInputStream()) {
        // Stream straight to disk instead of buffering the whole upload
        Files.copy(inputStream, Paths.get("target-directory").resolve(safeName), StandardCopyOption.REPLACE_EXISTING);
    }
    return ResponseEntity.ok("File uploaded successfully!");
}
```

Enable Asynchronous File Transfers

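Use Spring's @Async annotation to process files off the request thread. It must be enabled with @EnableAsync and called from another bean so the proxy applies. Save the upload first and pass the saved path, because the container may delete a MultipartFile's temporary storage once the request completes:

```java
@Async
public CompletableFuture<String> uploadFileAsync(Path savedFile) {
    // File processing logic runs on Spring's async executor
    return CompletableFuture.completedFuture("Upload Complete");
}
```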

Use Chunked Uploads for Large Files

Break large files into smaller chunks for efficient transfer and to handle interruptions gracefully:

```java
@PostMapping("/uploadChunk")
public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk, @RequestParam("fileId") String fileId) {
    // Append chunk data to the file
    return ResponseEntity.ok("Chunk uploaded successfully!");
}
```

2. Optimize for Cloud Storage (e.g., AWS S3, Azure Blob)

Use SDKs for Direct Uploads

Use cloud-specific SDKs (e.g., AWS SDK for S3) to upload files directly to cloud storage instead of saving them locally first:

```java
@PostMapping("/uploadToS3")
public ResponseEntity<String> uploadToS3(@RequestParam("file") MultipartFile file) throws IOException {
    AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient(); // in practice, inject one shared client
    String bucketName = "your-bucket-name";
    ObjectMetadata metadata = new ObjectMetadata();
    // Without a content length, SDK v1 buffers the whole stream in memory
    metadata.setContentLength(file.getSize());
    metadata.setContentType(file.getContentType());
    s3Client.putObject(bucketName, file.getOriginalFilename(), file.getInputStream(), metadata);
    return ResponseEntity.ok("File uploaded to S3!");
}
```

Enable S3 Transfer Acceleration

Use Transfer Acceleration for faster uploads to Amazon S3 (it must also be enabled on the bucket):

```java
AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withAccelerateModeEnabled(true)
        .build();
```

3. Optimize File Transfer in REST APIs

Enable Compression

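Compress responses to reduce data size. In Spring Boot, response compression is configured under server.compression. It applies only to the configured MIME types (text-based types by default), so already-compressed binaries are sent as-is:

```yaml
spring:
  servlet:
    multipart:
      enabled: true
      max-file-size: 50MB
      max-request-size: 50MB
server:
  compression:
    enabled: true
    min-response-size: 1024
```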

Use HTTP/2

Switch to HTTP/2 for better concurrency and reduced latency. Browsers only use HTTP/2 over TLS, so configure SSL as well:

```yaml
server:
  http2:
    enabled: true
```

Resume Interrupted Uploads

Use ETag or file metadata to allow resumable file uploads:

```java
@PostMapping("/resumeUpload")
public ResponseEntity<String> resumeUpload(@RequestParam("file") MultipartFile file, @RequestParam("offset") Long offset) {
    // Resume logic based on offset
    return ResponseEntity.ok("Upload resumed!");
}
```

4. Parallelize File Transfers

Multithreaded File Upload

Use a ThreadPoolExecutor or Spring's TaskExecutor to run several uploads concurrently.
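A minimal sketch of multithreaded uploads with a fixed-size `ExecutorService` follows. A local `Files.copy` stands in for the real upload call (an S3 `putObject`, for instance). The class name `ParallelUploader` and the method `uploadAll` are illustrative assumptions.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class ParallelUploader {

    /** Copies every source file into targetDir using poolSize worker threads. */
    static void uploadAll(List<Path> sources, Path targetDir, int poolSize)
            throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Path>> results = new ArrayList<>();
            for (Path source : sources) {
                // Each file is an independent task, so one slow file does not block the rest
                results.add(pool.submit(() -> Files.copy(source,
                        targetDir.resolve(source.getFileName()), StandardCopyOption.REPLACE_EXISTING)));
            }
            for (Future<Path> result : results) {
                result.get(); // waits, and rethrows a task's failure as ExecutionException
            }
        } finally {
            pool.shutdown(); // release the worker threads
        }
    }
}
```

In a Spring application the pool would normally be a long-lived bean rather than one created per call.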

In addition to using @Async, there are other strategies and tools to improve file transfer performance and reliability in a Java Spring application. Here are some approaches:

1. Reactive Programming with Spring WebFlux

For truly non-blocking and asynchronous file transfers, use Spring WebFlux:

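Streaming File Upload (the @RequestPart name must match the form field):

```java
@PostMapping(value = "/upload", consumes = MediaType.MULTIPART_FORM_DATA_VALUE)
public Mono<Void> uploadFile(@RequestPart("file") Flux<FilePart> filePartFlux) {
    return filePartFlux.flatMap(filePart -> {
        // Keep only the file name, then write it to disk without blocking
        Path path = Paths.get("target-directory").resolve(Paths.get(filePart.filename()).getFileName());
        return filePart.transferTo(path);
    }).then();
}
```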

Streaming File Download:

```java
@GetMapping(value = "/download", produces = MediaType.APPLICATION_OCTET_STREAM_VALUE)
public Mono<ResponseEntity<Resource>> downloadFile() {
    Path path = Paths.get("target-directory/large-file.txt");
    return Mono.just(ResponseEntity.ok()
            .header(HttpHeaders.CONTENT_DISPOSITION, "attachment; filename=large-file.txt")
            .body(new FileSystemResource(path)));
}
```

2. Use CompletableFuture and ExecutorService

Combine CompletableFuture with a shared ExecutorService for better control. Create the pool once (for example as a field or bean), because creating one per request leaks threads:

```java
private final ExecutorService executor = Executors.newFixedThreadPool(4);

@PostMapping("/upload")
public CompletableFuture<String> uploadFile(@RequestParam("file") MultipartFile file) {
    return CompletableFuture.supplyAsync(() -> {
        try (InputStream in = file.getInputStream()) {
            // File processing logic
            Files.copy(in, Paths.get("target/" + file.getOriginalFilename()), StandardCopyOption.REPLACE_EXISTING);
            return "File uploaded successfully!";
        } catch (IOException e) {
            throw new UncheckedIOException("File upload failed!", e);
        }
    }, executor);
}
```

3. Apache Kafka for Asynchronous Processing

Use Apache Kafka to decouple file upload from processing:

Producer sends file metadata to Kafka after upload (kafkaTemplate is an injected KafkaTemplate<String, String>):

```java
@PostMapping("/upload")
public ResponseEntity<String> uploadFile(@RequestParam("file") MultipartFile file) throws IOException {
    // Save the file
    try (InputStream in = file.getInputStream()) {
        Files.copy(in, Paths.get("target/" + file.getOriginalFilename()));
    }
    // Send metadata to Kafka
    kafkaTemplate.send("file-topic", file.getOriginalFilename());
    return ResponseEntity.ok("File uploaded and queued for processing!");
}
```

Consumer processes files asynchronously:

```java
@KafkaListener(topics = "file-topic", groupId = "file-group")
public void processFile(String fileName) {
    // Process the file
    Path path = Paths.get("target/" + fileName);
    System.out.println("Processing file: " + path);
}
```

4. Chunked File Transfers

Handle large files by uploading them in chunks:

Frontend sends file chunks with an identifier.

Backend saves each chunk as a separate file named after the file ID and chunk number:

```java
@PostMapping("/uploadChunk")
public ResponseEntity<String> uploadChunk(@RequestParam("chunk") MultipartFile chunk,
                                          @RequestParam("fileId") String fileId,
                                          @RequestParam("chunkNumber") int chunkNumber) {
    Path path = Paths.get("target/" + fileId + "_" + chunkNumber);
    try (InputStream in = chunk.getInputStream()) {
        Files.copy(in, path, StandardCopyOption.REPLACE_EXISTING);
    } catch (IOException e) {
        return ResponseEntity.status(HttpStatus.INTERNAL_SERVER_ERROR).body("Failed to upload chunk");
    }
    return ResponseEntity.ok("Chunk uploaded successfully!");
}
```

Reassemble chunks when all are uploaded.
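One way to do the reassembly is sketched below. It assumes the chunk files follow the `<fileId>_<chunkNumber>` naming of the upload endpoint, that numbering starts at 0, and that the client reports the total chunk count (the hypothetical `totalChunks` parameter). The chunks are appended in numeric order, and the method refuses to build a file while any chunk is missing.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class ChunkAssembler {

    /** Concatenates <fileId>_0 .. <fileId>_(totalChunks-1) into target, then deletes the chunks. */
    static void reassemble(Path chunkDir, String fileId, int totalChunks, Path target) throws IOException {
        // Check completeness first so a partial upload never produces a truncated file
        for (int i = 0; i < totalChunks; i++) {
            if (!Files.exists(chunkDir.resolve(fileId + "_" + i))) {
                throw new IOException("Missing chunk " + i + " of " + fileId);
            }
        }
        try (OutputStream out = Files.newOutputStream(target,
                StandardOpenOption.CREATE, StandardOpenOption.TRUNCATE_EXISTING, StandardOpenOption.WRITE)) {
            for (int i = 0; i < totalChunks; i++) {
                // Numeric order matters: chunk 10 must follow chunk 9, not chunk 1
                Files.copy(chunkDir.resolve(fileId + "_" + i), out);
            }
        }
        for (int i = 0; i < totalChunks; i++) {
            Files.delete(chunkDir.resolve(fileId + "_" + i)); // clean up once the file is complete
        }
    }
}
```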

5. WebSockets for Real-Time Progress

Use WebSockets to provide real-time feedback on file transfer progress:

```java
@Controller
public class WebSocketController {
    @MessageMapping("/uploadStatus")
    @SendTo("/topic/progress")
    public String sendProgress(String status) {
        return status;
    }
}
```

Send progress updates from the server while uploading:

```java
simpMessagingTemplate.convertAndSend("/topic/progress", "50% completed");
```
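The percentage has to come from somewhere. A minimal sketch, assuming the total size is known up front (for example from `MultipartFile.getSize()`), copies in fixed-size buffers and invokes a callback whenever the whole-number percentage changes. The `IntConsumer` is a placeholder for a call such as `simpMessagingTemplate.convertAndSend(...)`.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.util.function.IntConsumer;

final class ProgressCopy {

    /** Copies in to out, reporting 0-100 percent progress to onProgress as it changes. */
    static long copyWithProgress(InputStream in, OutputStream out, long totalBytes,
                                 IntConsumer onProgress) throws IOException {
        byte[] buffer = new byte[8192];
        long copied = 0;
        int lastPercent = -1;
        int read;
        while ((read = in.read(buffer)) != -1) {
            out.write(buffer, 0, read);
            copied += read;
            int percent = totalBytes > 0 ? (int) (copied * 100 / totalBytes) : 100;
            if (percent != lastPercent) { // at most one message per percentage step
                onProgress.accept(percent);
                lastPercent = percent;
            }
        }
        return copied;
    }
}
```

Reporting only on percentage changes keeps a large upload from flooding the WebSocket topic with one message per buffer.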

6. Redis or Database Queues

Use a Redis queue or a database to handle file metadata asynchronously.

Enqueue metadata after upload:

```java
redisTemplate.opsForList().leftPush("fileQueue", fileMetadata);
```

Process the queue in the background. Pushing on the left and popping on the right gives first-in, first-out order:

```java
String metadata = redisTemplate.opsForList().rightPop("fileQueue");
```

7. Use MultipartConfig for Large Files

Configure multipart file handling for large files in application.properties:

```properties
spring.servlet.multipart.max-file-size=1GB
spring.servlet.multipart.max-request-size=2GB
```

Custom bean for fine-tuning (recent Spring Boot versions take DataSize values rather than strings):

```java
@Bean
public MultipartConfigElement multipartConfigElement() {
    MultipartConfigFactory factory = new MultipartConfigFactory();
    factory.setMaxFileSize(DataSize.ofGigabytes(1));
    factory.setMaxRequestSize(DataSize.ofGigabytes(2));
    return factory.createMultipartConfig();
}
```

8. Use AWS S3 for Direct Uploads

Use pre-signed URLs to offload upload work to the client:

```java
@GetMapping("/generatePresignedUrl")
public ResponseEntity<String> generatePresignedUrl(@RequestParam String fileName) {
    AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
    // HttpMethod here is com.amazonaws.HttpMethod, not Spring's HttpMethod
    GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest("bucket-name", fileName)
            .withMethod(HttpMethod.PUT)
            .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000)); // valid for one hour
    URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest);
    return ResponseEntity.ok(url.toString());
}
```

The client uploads directly to S3 using the URL.

9. Optimize File Transfer for High Performance

Compression

Compress files before upload to reduce transfer size. Here compress is a helper you supply, and getBytes() reads the whole file into memory:

```java
byte[] compressedData = compress(file.getBytes());
Files.write(Paths.get("compressed-file.gz"), compressedData);
```
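A streaming alternative to the byte-array approach, sketched with the JDK's `GZIPOutputStream`, compresses without holding the whole file in memory. The class and method names are illustrative. Compression pays off mainly for text-like data; formats such as JPEG or ZIP are already compressed.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

final class GzipFiles {

    /** Streams source through GZIP into target without loading it into memory. */
    static void gzip(Path source, Path target) throws IOException {
        try (InputStream in = Files.newInputStream(source);
             OutputStream out = new GZIPOutputStream(Files.newOutputStream(target))) {
            in.transferTo(out); // Java 9+: copies in buffered chunks
        }
    }

    /** Reverses gzip(), for example on the receiving side. */
    static void gunzip(Path source, Path target) throws IOException {
        try (InputStream in = new GZIPInputStream(Files.newInputStream(source));
             OutputStream out = Files.newOutputStream(target)) {
            in.transferTo(out);
        }
    }
}
```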

Load Balancing

Use a load balancer (e.g., AWS ALB or NGINX) for scalable upload endpoints.

Retry Logic

Implement retries for failed transfers to improve reliability:

```java
int maxRetries = 3;
int retries = 0;
while (retries < maxRetries) {
    try {
        // Attempt file upload
        break;
    } catch (IOException e) {
        retries++;
        if (retries == maxRetries) {
            throw e; // give up after the last attempt
        }
    }
}
```
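The retry loop above tries again immediately, which can hammer a service that is already struggling. A common refinement is exponential backoff: wait a base delay, then twice that, then four times that, up to a cap. The helper below is a sketch under that assumption. The base delay, the cap, and retrying only on `IOException` are illustrative choices.

```java
import java.io.IOException;
import java.util.concurrent.Callable;

final class Retry {

    /** Delay before retry number `attempt` (1-based): base * 2^(attempt-1), capped at maxMillis. */
    static long backoffDelayMillis(int attempt, long baseMillis, long maxMillis) {
        long delay = baseMillis << Math.min(attempt - 1, 30); // bound the shift to avoid overflow
        return Math.min(delay, maxMillis);
    }

    /** Runs action, retrying on IOException until maxAttempts total attempts have been made. */
    static <T> T withBackoff(Callable<T> action, int maxAttempts, long baseMillis, long maxMillis)
            throws Exception {
        for (int attempt = 1; ; attempt++) {
            try {
                return action.call();
            } catch (IOException e) {
                if (attempt >= maxAttempts) {
                    throw e; // out of attempts: surface the last failure
                }
                Thread.sleep(backoffDelayMillis(attempt, baseMillis, maxMillis));
            }
        }
    }
}
```

When many clients retry at once, adding random jitter to each delay spreads the retries out.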

These strategies can enhance file transfer performance, scalability, and reliability in Java Spring applications.


You said:

S3 reliability

ChatGPT said:


Ensuring reliability when transferring files to Amazon S3 involves minimizing failures, handling errors effectively, and ensuring data integrity. Below are best practices and techniques to achieve reliable file transfers to S3:

1. Use Multipart Upload for Large Files

Multipart uploads split large files into smaller parts, upload them in parallel, and reassemble them in S3. This improves reliability and speeds up transfers.

Example Using AWS SDK (Java)

```java
AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
String bucketName = "your-bucket-name";
String fileName = "large-file.zip";
String keyName = "uploads/" + fileName;
File file = new File(fileName);
// TransferManager switches to multipart upload for large files and sends parts in parallel
TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build();
Upload upload = tm.upload(bucketName, keyName, file);
try {
    upload.waitForCompletion();
    System.out.println("File uploaded successfully!");
} catch (AmazonClientException e) {
    System.err.println("Upload failed: " + e.getMessage());
} catch (InterruptedException e) {
    Thread.currentThread().interrupt(); // waitForCompletion() can also be interrupted
}
```
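TransferManager picks part sizes itself. If you drive multipart uploads directly, you must respect S3's documented limits: every part except the last must be at least 5 MiB, and an upload can have at most 10,000 parts. A small helper can pick a part size for a given file. The limits are hard-coded here as constants, and the whole-MiB rounding is an illustrative choice.

```java
final class PartSizer {
    static final long MIB = 1024L * 1024L;
    static final long MIN_PART_SIZE = 5 * MIB; // S3 minimum for every part except the last
    static final int MAX_PARTS = 10_000;       // S3 maximum number of parts per upload

    /** Smallest whole-MiB part size, at least 5 MiB, that fits fileSize into at most 10,000 parts. */
    static long partSize(long fileSize) {
        long needed = (fileSize + MAX_PARTS - 1) / MAX_PARTS;  // ceil(fileSize / MAX_PARTS)
        long roundedUp = ((needed + MIB - 1) / MIB) * MIB;     // round up to a whole MiB
        return Math.max(MIN_PART_SIZE, roundedUp);
    }

    /** Number of parts fileSize splits into at the given part size. */
    static long partCount(long fileSize, long partSize) {
        return Math.max(1, (fileSize + partSize - 1) / partSize);
    }
}
```

For example, a 100 GiB file needs parts of at least 11 MiB, giving 9,310 parts.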

2. Use Retry Logic

Add retry mechanisms to handle intermittent network issues or service interruptions.

AWS SDK Retry Settings

The AWS SDK retries failed requests automatically, but you can customize it:

```java
ClientConfiguration config = new ClientConfiguration();
config.setRetryPolicy(PredefinedRetryPolicies.getDefaultRetryPolicyWithCustomMaxRetries(5));
AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withClientConfiguration(config)
        .build();
```

3. Enable Transfer Acceleration

Transfer Acceleration uses AWS edge locations to improve upload speeds over long distances.

Enable Transfer Acceleration in S3

Enable it in the S3 bucket settings.

Update your client code to use the accelerated endpoint:

```java
AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withAccelerateModeEnabled(true)
        .build();
```

4. Validate Data Integrity

Use MD5 checksums to ensure the uploaded file matches the original file.

AWS CLI Example

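The high-level aws s3 cp command has no --content-md5 option. Use the lower-level s3api command instead, which expects the Base64-encoded binary MD5 digest:

```bash
aws s3api put-object --bucket your-bucket --key file.txt --body file.txt --content-md5 "$(openssl md5 -binary file.txt | base64)"
```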

Java Example

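Using Apache Commons Codec's DigestUtils, with each stream opened and closed separately:

```java
ObjectMetadata metadata = new ObjectMetadata();
metadata.setContentLength(file.length());
try (InputStream in = new FileInputStream(file)) {
    // Content-MD5 is the Base64 of the raw digest; S3 rejects the upload if it does not match
    metadata.setContentMD5(Base64.getEncoder().encodeToString(DigestUtils.md5(in)));
}
try (InputStream in = new FileInputStream(file)) {
    s3Client.putObject(new PutObjectRequest(bucketName, keyName, in, metadata));
}
```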
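S3 expects the Content-MD5 header to be the Base64 encoding of the raw 16-byte MD5 digest, not the hex string. If you prefer not to depend on Commons Codec, the JDK can compute it while streaming the file. The class and method names below are illustrative.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;

final class ContentMd5 {

    /** Base64-encoded MD5 of the stream's bytes, as used in the Content-MD5 header. */
    static String of(InputStream in) throws IOException {
        MessageDigest md5;
        try {
            md5 = MessageDigest.getInstance("MD5");
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 must be supported by every Java platform", e);
        }
        byte[] buffer = new byte[8192];
        int read;
        while ((read = in.read(buffer)) != -1) {
            md5.update(buffer, 0, read); // hash incrementally; the file is never fully in memory
        }
        return Base64.getEncoder().encodeToString(md5.digest());
    }

    /** Convenience overload that opens and closes the file. */
    static String of(Path file) throws IOException {
        try (InputStream in = Files.newInputStream(file)) {
            return of(in);
        }
    }
}
```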

5. Monitor File Transfers

Use CloudWatch Metrics to monitor S3 request success, failures, and latencies.

Monitor transfer progress with TransferManager in the AWS SDK:

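```java
TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build();
Upload upload = tm.upload(bucketName, keyName, file);
// ProgressListener is com.amazonaws.event.ProgressListener
upload.addProgressListener((ProgressListener) progressEvent -> {
    // getProgress() is cumulative; progressEvent.getBytesTransferred() is only this event's increment
    System.out.println("Transferred bytes: " + upload.getProgress().getBytesTransferred());
});
upload.waitForCompletion();
```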

6. Handle Failures Gracefully

Handle interruptions gracefully with TransferManager. The snippet below reports progress and catches failures. For true resumption, Upload.pause() returns a PersistableUpload that TransferManager.resumeUpload() can continue later:

```java
TransferManager tm = TransferManagerBuilder.standard().build();
Upload upload = tm.upload(bucketName, keyName, file);
// Add a progress listener
upload.addProgressListener((ProgressListener) progressEvent ->
        System.out.println("Bytes in this event: " + progressEvent.getBytesTransferred()));
// Graceful failure handling
try {
    upload.waitForCompletion();
} catch (AmazonClientException e) {
    System.err.println("Error occurred: " + e.getMessage());
} catch (InterruptedException e) {
    Thread.currentThread().interrupt();
}
```

7. Use Pre-Signed URLs

Generate pre-signed URLs for reliable, time-limited uploads directly from the client, reducing the load on your server.

Generate Pre-Signed URL

```java
GeneratePresignedUrlRequest generatePresignedUrlRequest = new GeneratePresignedUrlRequest(bucketName, keyName)
        .withMethod(HttpMethod.PUT)
        .withExpiration(new Date(System.currentTimeMillis() + 3600 * 1000));
URL url = s3Client.generatePresignedUrl(generatePresignedUrlRequest);
System.out.println("Pre-Signed URL: " + url);
```

8. Use S3 Event Notifications

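Configure S3 Event Notifications to trigger AWS Lambda, SNS, or SQS after an object is created, for post-upload validation, processing, or alerting. Events fire only for completed operations (for example s3:ObjectCreated:*); a failed upload produces no event.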

Example: Notification for Upload Completion

Configure your S3 bucket to send an event to an SQS queue or Lambda function upon successful upload.

9. Leverage Data Replication

Use S3 Cross-Region Replication (CRR) or Same-Region Replication (SRR) for redundancy.

Automatically replicate files to another bucket for disaster recovery.

10. Automate with AWS DataSync

Use AWS DataSync for automated and highly reliable file transfers between on-premises storage and S3. It includes built-in retry and integrity checks.

11. Optimize Network Performance

Compress files before uploading. Here gzipCompress is a helper you supply, for example one built on java.util.zip.GZIPOutputStream:

```java
Files.write(Paths.get("compressed-file.gz"), gzipCompress(fileContent));
```

Use efficient transfer protocols (e.g., HTTP/2 if supported by your client).

12. Secure Transfers

Always use HTTPS for secure communication.

Use S3 Bucket Policies and IAM Roles to restrict access to authorized users.

Example: Complete Reliable Upload Function

```java
public void reliableUpload(File file, String bucketName, String keyName) {
    AmazonS3 s3Client = AmazonS3ClientBuilder.defaultClient();
    TransferManager tm = TransferManagerBuilder.standard().withS3Client(s3Client).build();
    try {
        // Multipart upload (TransferManager splits large files automatically)
        Upload upload = tm.upload(bucketName, keyName, file);
        upload.addProgressListener((ProgressListener) progressEvent ->
                System.out.println("Transferred bytes: " + upload.getProgress().getBytesTransferred()));
        upload.waitForCompletion();
        System.out.println("File uploaded successfully!");
    } catch (AmazonClientException e) {
        System.err.println("Upload failed: " + e.getMessage());
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
    } finally {
        tm.shutdownNow(); // also shuts down s3Client, which was created only for this call
    }
}
```


Certified Kubernetes Application Developer: Your Step-by-Step Guide to Certification Success

Kubernetes has become a powerhouse in the tech world, reshaping how applications are deployed and managed. As more companies shift to containerized applications and cloud-native architectures, the demand for Certified Kubernetes Application Developers (CKADs) continues to grow. This certification is a gateway for anyone looking to prove their skills in Kubernetes application development and make a mark in DevOps.

But what does it mean to be a Certified Kubernetes Application Developer? What benefits does this certification offer, and how can you prepare effectively? Here’s a comprehensive guide designed to give you all the insights and steps you need to become CKAD certified.

What is the Certified Kubernetes Application Developer Certification?

The Certified Kubernetes Application Developer (CKAD) certification is designed for those who are proficient in designing, building, and managing applications in a Kubernetes environment. This certification focuses on real-world skills and validates your ability to deploy, troubleshoot, and manage applications on Kubernetes clusters.

Kubernetes, managed by the Cloud Native Computing Foundation (CNCF), has become the backbone of scalable applications, and the CKAD exam is recognized globally. With this certification, you’ll showcase a high level of competency in handling the complexities of Kubernetes, proving invaluable to companies adopting containerized solutions.

Why Choose the Certified Kubernetes Application Developer Certification?

Career Advancement: With a CKAD certification, you’ll have proof of your Kubernetes skills, which is crucial in today’s DevOps-centric job market. Companies are on the lookout for Certified Kubernetes Application Developers who can manage application lifecycles within Kubernetes clusters.

High Demand: Kubernetes has quickly become a standard in application deployment. With the Certified Kubernetes Application Developer certification, you are positioning yourself in one of the most sought-after areas of tech.

Competitive Edge: Many IT professionals may know Kubernetes, but a CKAD-certified developer has verified skills. The certification proves your expertise in managing containerized applications in production environments, a key asset for employers.

Global Recognition: The Certified Kubernetes Application Developer credential is recognized worldwide, making it a valuable addition to your resume regardless of your location.

Increased Salary Potential: Professionals with CKAD certification often earn higher salaries because of the specialized skills involved. The certification opens doors and can strengthen your position when negotiating compensation.

What Skills Will You Learn with the Certified Kubernetes Application Developer Certification?

The CKAD exam measures proficiency in the following areas, which are essential for every Kubernetes developer:

Application Design and Deployment: Learn how to design applications that can seamlessly run on Kubernetes. This includes understanding Kubernetes objects, such as Pods, Deployments, and ReplicaSets.

Application Observability: Monitoring is a key skill. You’ll gain knowledge in handling logs and metrics to keep track of applications, a crucial part of maintaining reliable systems.

Configuration: The CKAD certification covers Kubernetes configurations for applications, including secrets, config maps, and persistent storage.

Services & Networking: Master the art of connecting applications within and outside Kubernetes. This part covers Services, DNS, and Ingress controllers.

Troubleshooting: Being able to troubleshoot is critical. CKADs are skilled in diagnosing issues with deployments, Pods, and clusters, helping maintain smooth operations.

Preparing for the Certified Kubernetes Application Developer Exam: Tips and Resources

Here’s how you can effectively prepare for the Certified Kubernetes Application Developer certification:

1. Understand the Exam Format

The CKAD exam consists of multiple performance-based tasks to complete within two hours. It’s essential to practice under time constraints, as the exam tests your speed and accuracy in applying Kubernetes concepts. The tasks range across different domains, each with a specific weight. This real-world exam format means you should focus on hands-on practice.

2. Use Official CNCF Resources

CNCF provides free resources, including the Kubernetes Documentation and CKAD-specific guides. These resources will help you understand the core concepts, especially if you’re new to Kubernetes.

3. Enroll in a Kubernetes Course

Several courses are designed specifically for CKAD preparation. A Certified Kubernetes Application Developer course can guide you step-by-step through the skills required for the exam. Many platforms, including Udemy and Coursera, offer comprehensive CKAD prep courses that cover all domains in detail.

4. Practice, Practice, Practice

Kubernetes is a hands-on tool, so theoretical knowledge alone won’t help you. Create a Kubernetes cluster, practice deploying applications, and experiment with different Kubernetes components like Pods, Services, and ConfigMaps. Play with Kubernetes offers a free browser-based sandbox, and tools such as minikube or kind let you run a practice cluster on your own machine.

5. Review Common Commands and YAML Files

Understanding YAML syntax and common Kubernetes commands is crucial for the CKAD exam. The more comfortable you are with commands like kubectl run, kubectl apply, and kubectl expose, the faster you’ll be during the exam. Also, review how to work with YAML files to define Pods, Deployments, and other Kubernetes objects.

6. Test Yourself with Sample Questions

Sample questions and mock exams are invaluable. They not only test your knowledge but also help you get used to the CKAD’s practical format. You can find sample exams in resources like A Cloud Guru (which absorbed Linux Academy) or KodeKloud’s CKAD labs.

Exam Day: Tips for Success

Set Up Your Environment: Make sure your testing environment is stable and distraction-free. The exam requires focus, so ensure your workspace is ready.

Be Time-Conscious: The CKAD exam is time-bound, so aim to spend no more than 5–10 minutes on each task. If you’re stuck, move on and return later.

Use Built-In Documentation: The Kubernetes documentation is available during the exam. Use it to your advantage, but don’t rely too heavily on it. Knowing your commands and YAML structures beforehand will save you time.

Stay Calm: The pressure of a timed, hands-on exam can be intense. Trust your preparation, and remember to approach each question methodically.

What’s Next After Getting Your CKAD?

After earning your Certified Kubernetes Application Developer certification, you’re well on your way in the Kubernetes world! But Kubernetes is constantly evolving. Here are some next steps to deepen your expertise:

Certified Kubernetes Administrator (CKA): This certification focuses on Kubernetes administration. It’s ideal if you want to expand your knowledge beyond application development.

Specialized Kubernetes Tools: Explore Kubernetes tools like Helm, Istio, and Prometheus to further your containerization and monitoring skills. Many Kubernetes projects rely on these tools for advanced functionality.

Real-World Projects: Put your CKAD skills to use in real-world projects. Many employers value hands-on experience, so consider working on cloud-native projects that utilize Kubernetes.

Contribute to Open Source: Contributing to Kubernetes or related projects can further solidify your skills and enhance your resume. The Kubernetes community welcomes contributions from developers worldwide.

Conclusion: Becoming a Certified Kubernetes Application Developer Is a Game-Changer

Earning the Certified Kubernetes Application Developer certification isn’t just a feather in your cap; it’s a leap forward in your career. By mastering Kubernetes, you open doors to roles that involve cloud-native development, DevOps, and beyond. The demand for Certified Kubernetes Application Developers is only growing as organizations recognize the value of containerized applications.


Performance Optimization on OpenShift

Optimizing the performance of applications running on OpenShift involves several best practices and tools. Here's a detailed guide:

1. Resource Allocation and Management

a. Proper Sizing of Pods and Containers:

- Requests and Limits: Set appropriate CPU and memory requests and limits to ensure fair resource allocation and avoid overcommitting resources.

- Requests: The resources the scheduler reserves for a container when placing its pod.

- Limits: The maximum resources a container can use. CPU above the limit is throttled, and a container that exceeds its memory limit is OOM-killed.

- Vertical Pod Autoscaler (VPA): Automatically adjusts the CPU and memory requests and limits for containers based on usage.

b. Resource Quotas and Limits:

- Use resource quotas to limit the resource usage per namespace to prevent any single application from monopolizing cluster resources.

c. Node Selector and Taints/Tolerations:

- Use node selectors and taints/tolerations to control pod placement on nodes with appropriate resources.

2. Scaling Strategies

a. Horizontal Pod Autoscaler (HPA):

- Automatically scales the number of pod replicas based on observed CPU/memory usage or custom metrics.

- Example Configuration (autoscaling/v1 supports only a CPU target; memory and custom metrics need autoscaling/v2):

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: my-app-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: my-app
  minReplicas: 2
  maxReplicas: 10
  targetCPUUtilizationPercentage: 70
```
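The Kubernetes documentation describes the HPA's core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No scaling happens when the ratio is within a tolerance of 1.0 (0.1 by default). A Java sketch of that arithmetic, clamped to minReplicas and maxReplicas, shows what the example above would do. It ignores the stabilization windows and scaling policies that the real controller also applies.

```java
final class HpaMath {

    /** desired = ceil(current * currentUtilization / targetUtilization), clamped to [min, max]. */
    static int desiredReplicas(int current, double currentUtilization, double targetUtilization,
                               int minReplicas, int maxReplicas, double tolerance) {
        double ratio = currentUtilization / targetUtilization;
        int desired = Math.abs(ratio - 1.0) <= tolerance
                ? current                          // within tolerance: leave replicas unchanged
                : (int) Math.ceil(current * ratio);
        return Math.max(minReplicas, Math.min(maxReplicas, desired));
    }
}
```

With the example's 70% CPU target, 2 replicas averaging 140% scale to 4. Five replicas at 200% would compute 15 but stop at maxReplicas = 10.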

b. Cluster Autoscaler:

- Automatically adjusts the size of the OpenShift cluster by adding or removing nodes based on the workload requirements.

3. Application and Cluster Tuning

a. Optimize Application Code:

- Profile and optimize the application code to reduce resource consumption and improve performance.

- Use tools like JProfiler, VisualVM, or built-in profiling tools in your IDE.

b. Database Optimization:

- Optimize database queries and indexing.

- Use connection pooling and proper caching strategies.

c. Network Optimization:

- Use service meshes (like Istio) to manage and optimize service-to-service communication.

- Enable HTTP/2 or gRPC for efficient communication.

4. Monitoring and Analyzing Performance

a. Prometheus and Grafana:

- Use Prometheus for monitoring and alerting on various metrics.

- Visualize metrics in Grafana dashboards.

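- Example ServiceMonitor (a Prometheus Operator resource; on OpenShift, monitoring for user-defined projects must be enabled to use it):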

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: my-app
spec:
  selector:
    matchLabels:
      app: my-app
  endpoints:
    - port: web
      interval: 30s
```

b. OpenShift Monitoring Stack:

- Leverage OpenShift's built-in monitoring stack, including Prometheus and Alertmanager, to monitor cluster and application performance. Recent OpenShift 4 releases show dashboards in the web console instead of a bundled Grafana instance.

c. Logging with EFK/ELK Stack:

- Use Elasticsearch, Fluentd, and Kibana (EFK) or Elasticsearch, Logstash, and Kibana (ELK) stack for centralized logging and log analysis.

- Example Fluentd Configuration:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
data:
  fluent.conf: |
    <source>
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/fluentd-containers.log.pos
      tag kubernetes.*
      format json
      time_format %Y-%m-%dT%H:%M:%S.%NZ
    </source>
```

d. APM Tools (Application Performance Monitoring):

- Use tools like New Relic, Dynatrace, or Jaeger for distributed tracing and APM to monitor application performance and pinpoint bottlenecks.

5. Best Practices for OpenShift Performance Optimization

a. Regular Health Checks:

- Configure liveness and readiness probes to ensure pods are healthy and ready to serve traffic.

- Example Liveness Probe:

```yaml
livenessProbe:
  httpGet:
    path: /healthz
    port: 8080
  initialDelaySeconds: 30
  periodSeconds: 10
```
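The probe expects the container to answer HTTP GET on /healthz at port 8080. A dependency-free sketch of such an endpoint uses the JDK's built-in `com.sun.net.httpserver.HttpServer`. In a Spring Boot application you would more likely expose Actuator's health endpoint instead. The handler below always reports healthy, so treat it as a placeholder for real checks.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

final class HealthServer {

    /** Starts a server answering GET /healthz with 200 "ok"; port 0 picks a free port. */
    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/healthz", exchange -> {
            // Placeholder: a real check might verify a database connection or a downstream service
            byte[] body = "ok".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }
}
```

Calling `HealthServer.start(8080)` matches the port in the probe configuration.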

b. Efficient Image Management:

- Use optimized and minimal base images to reduce container size and startup time.

- Regularly scan and update images to ensure they are secure and performant.

c. Persistent Storage Optimization:

- Use appropriate storage classes for different types of workloads (e.g., SSD for high I/O applications).

- Optimize database storage configurations and perform regular maintenance.
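For example, on an AWS-backed cluster such as ROSA, a high-I/O workload could use a StorageClass backed by SSD-based EBS gp3 volumes through the EBS CSI driver. This is a sketch assuming the `ebs.csi.aws.com` driver is installed. The class name is hypothetical and the IOPS/throughput numbers are illustrative (gp3's baseline is 3000 IOPS and 125 MiB/s).

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: gp3-high-io                       # hypothetical name
provisioner: ebs.csi.aws.com
parameters:
  type: gp3                               # SSD-backed EBS volume type
  iops: "6000"                            # illustrative, above the gp3 baseline
  throughput: "250"                       # MiB/s, illustrative
volumeBindingMode: WaitForFirstConsumer   # create the volume in the zone where the pod lands
allowVolumeExpansion: true
```

A PersistentVolumeClaim then opts in with `storageClassName: gp3-high-io`.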

d. Network Policies:

- Implement network policies to control and secure traffic flow between pods, reducing unnecessary network overhead.
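A minimal NetworkPolicy sketch: once a pod is selected by a policy of type `Ingress`, only the traffic the policy allows can reach it. The `app: my-app` and `app: frontend` labels are hypothetical. On OpenShift you would typically also need a rule admitting traffic from the ingress router if the app is exposed through a Route.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-my-app   # hypothetical name
spec:
  podSelector:
    matchLabels:
      app: my-app                  # pods this policy protects
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend            # hypothetical client pods in the same namespace
    ports:
    - protocol: TCP
      port: 8080
```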

Conclusion

Optimizing performance on OpenShift involves a combination of proper resource management, scaling strategies, application tuning, and continuous monitoring. By implementing these best practices and utilizing the available tools, you can ensure that your applications run efficiently and effectively on the OpenShift platform.

For more details, visit www.hawkstack.com.



How to Implement Java Microservices Architecture

Implementing a microservices architecture in Java is a strategic decision that can have significant benefits for your application, such as improved scalability, flexibility, and maintainability. Here's a guide to help you embark on this journey.

1. Understand the Basics

Before diving into the implementation, it's crucial to understand what microservices are. Microservices architecture is a method of developing software systems that focuses on building single-function modules with well-defined interfaces and operations. These modules, or microservices, are independently deployable and scalable.

2. Design Your Microservices

Identify Business Capabilities

Break down your application based on business functionalities.

Each microservice should represent a single business capability.

Define Service Boundaries

Ensure that each microservice is loosely coupled and highly cohesive.

Avoid too many dependencies between services.

3. Choose the Right Tools and Technologies

Java Frameworks

Spring Boot: Popular for building stand-alone, production-grade Spring-based applications.

Dropwizard: Useful for rapid development of RESTful web services.

Micronaut: Great for building modular, easily testable microservices.

Containerization

Docker: Essential for creating, deploying, and running microservices in isolated environments.

Kubernetes: A powerful system for automating deployment, scaling, and management of containerized applications.
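To make the scaling point concrete, here is a sketch of a HorizontalPodAutoscaler for a hypothetical `order-service` Deployment. The replica bounds and the 70% CPU target are illustrative. CPU-based scaling only works if the Deployment's containers declare CPU requests.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: order-service              # hypothetical microservice
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: order-service
  minReplicas: 2                   # keep at least two pods for availability
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70     # add pods when average CPU exceeds 70% of requests
```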

Database

Use the database-per-service pattern: each microservice should own a private database so that services stay loosely coupled.
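In a Spring Boot service, database-per-service usually shows up as each service pointing at its own datasource that no other service reads. A sketch of `application.yml` for a hypothetical `order-service`, assuming PostgreSQL; the host, database, and user names are made up, and the password is read from an environment variable (for example, one populated from a Kubernetes Secret).

```yaml
spring:
  application:
    name: order-service                              # hypothetical service
  datasource:
    url: jdbc:postgresql://orders-db:5432/orders     # database owned only by this service
    username: order_service
    password: ${ORDERS_DB_PASSWORD}                  # resolved from the environment at startup
```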

4. Develop Your Microservices

Implement RESTful Services

Use Spring Boot to create RESTful services due to its simplicity and power.

Version your APIs (for example, with a /v1/ path prefix) so you can make changes without breaking existing clients.

Asynchronous Communication

Implement asynchronous communication, especially for long-running or resource-intensive tasks.

Use message queues like RabbitMQ or Kafka for reliable, scalable, and asynchronous communication between microservices.
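With Kafka and Spring Boot (assuming the `spring-kafka` dependency), most of the wiring is configuration. A sketch in which the broker address and consumer group are hypothetical. Each consuming service uses its own `group-id` so it receives every event independently, and `acks: all` trades some latency for durability.

```yaml
spring:
  kafka:
    bootstrap-servers: kafka:9092         # hypothetical broker address
    producer:
      acks: all                           # wait for all in-sync replicas before confirming a send
    consumer:
      group-id: inventory-service         # hypothetical; one group per consuming service
      auto-offset-reset: earliest         # new groups start from the oldest retained event
```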

Build and Deployment

Automate build and deployment processes using CI/CD tools like Jenkins or GitLab CI.

Implement blue-green deployment or canary releases to reduce downtime and risk.
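A minimal GitLab CI sketch of the build-and-deploy flow. It assumes an image tagged with the commit SHA is built and pushed by a step not shown, that the job has cluster credentials (for example, via the GitLab agent or a KUBECONFIG variable), and that the deployment, container, and registry names are hypothetical. `kubectl set image` performs a plain rolling update. Blue-green or canary releases need extra tooling on top of this.

```yaml
# .gitlab-ci.yml (sketch)
stages:
  - build
  - deploy

build:
  stage: build
  image: maven:3.9-eclipse-temurin-17
  script:
    - mvn -B package        # compile, run unit tests, and package the service

deploy:
  stage: deploy
  image:
    name: bitnami/kubectl:latest
    entrypoint: [""]        # let GitLab run the script instead of the image's kubectl entrypoint
  script:
    # Point the Deployment at the new image; Kubernetes replaces pods gradually
    - kubectl set image deployment/order-service order-service=registry.example.com/order-service:$CI_COMMIT_SHORT_SHA
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH   # deploy only from the default branch
```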

5. Service Discovery and Configuration

Service Discovery

Use tools like Netflix Eureka for managing and discovering microservices in a distributed system.

Configuration Management

Centralize configuration management using tools like Spring Cloud Config.

Store configuration in a version-controlled repository for auditability and rollback purposes.
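On the client side, both pieces are mostly configuration. A sketch of a service's `application.yml`, assuming Spring Boot 2.4 or later with the `spring-cloud-starter-config` and `spring-cloud-starter-netflix-eureka-client` dependencies. Both server URLs are hypothetical. When running on Kubernetes, Services and cluster DNS often cover discovery without Eureka.

```yaml
spring:
  application:
    name: order-service        # Eureka service ID and the name used to look up config files
  config:
    import: "optional:configserver:http://config-server:8888"   # hypothetical Config Server URL
eureka:
  client:
    serviceUrl:
      defaultZone: http://eureka-server:8761/eureka/           # hypothetical Eureka server URL
```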

6. Monitoring and Logging

Implement centralized logging using ELK Stack (Elasticsearch, Logstash, Kibana) for easier debugging and monitoring.

Use Prometheus and Grafana for monitoring metrics and setting up alerts.
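For Prometheus to scrape a Spring Boot service, the service has to expose its metrics. A sketch assuming `spring-boot-starter-actuator` and `micrometer-registry-prometheus` are on the classpath. A Prometheus scrape job would then target the `/actuator/prometheus` path.

```yaml
management:
  endpoints:
    web:
      exposure:
        include: health,prometheus   # expose /actuator/health and /actuator/prometheus over HTTP
```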

7. Security

Implement an API gateway such as Spring Cloud Gateway for security, monitoring, and resilience. (Netflix Zuul, the older option, is no longer supported in current Spring Cloud releases.)

Use OAuth2 and JWT for secure, stateless authentication and authorization.
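A sketch of a gateway that routes to a discovered service and validates JWTs. It assumes Spring Cloud Gateway with a discovery client and `spring-boot-starter-oauth2-resource-server`. The route ID, paths, and issuer URL are hypothetical. With `issuer-uri` set, Spring Boot's default security setup expects a valid bearer token on requests; check this for your version. Newer Spring Cloud Gateway releases have also been moving route properties under a new prefix, so confirm the property names in the docs for your release.

```yaml
spring:
  cloud:
    gateway:
      routes:
      - id: orders                       # hypothetical route
        uri: lb://order-service          # resolve via service discovery and load-balance
        predicates:
        - Path=/api/orders/**
        filters:
        - StripPrefix=1                  # /api/orders/42 is forwarded as /orders/42
  security:
    oauth2:
      resourceserver:
        jwt:
          issuer-uri: https://auth.example.com/realms/demo   # hypothetical identity provider
```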

8. Testing

Write unit and integration tests for each microservice.

Implement contract testing to ensure APIs meet the contract expected by clients.

9. Documentation

Document your APIs using tools like Swagger or OpenAPI. This helps in maintaining clarity about service endpoints and their purposes.

Conclusion

Implementing a Java microservices architecture can significantly enhance your application's scalability, flexibility, and maintainability. However, it adds complexity and requires considerable technical expertise. Hiring Java developers or engaging Java development services can be pivotal in navigating this transition successfully. They bring the necessary expertise in Java frameworks and microservices best practices to ensure your project's success. Ready to transform your application architecture? Reach out to professional Java development services from top Java companies today and take the first step towards a robust, scalable microservices architecture.


Kubernetes Cloud Controller Manager Tutorial for Beginners

Hi, a new video on the Kubernetes Cloud Controller Manager has been published on the codeonedigest YouTube channel. Learn about the Kubernetes controller manager, API server, kubectl, Docker, and proxy server with codeonedigest.

Kubernetes is a popular open-source platform for container orchestration. Kubernetes follows a client-server architecture: a Kubernetes cluster consists of a master node and a set of worker nodes. The Cloud Controller Manager is part of the master node. Let's understand the key components of the master node. etcd is a key-value database that stores the cluster's configuration data. API Server to…




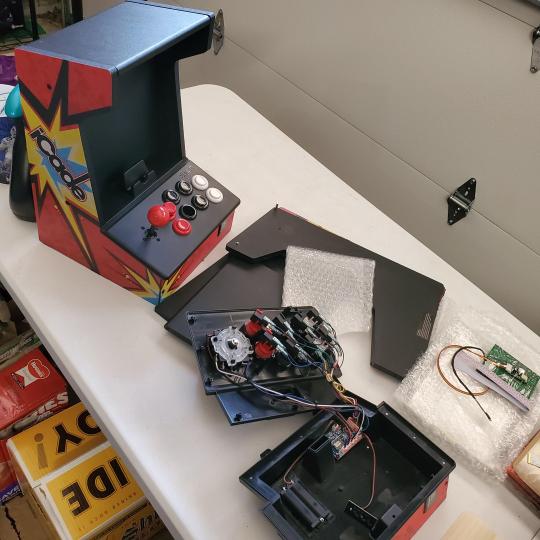

My Homelab/Office 2020 - DFW Quarantine Edition

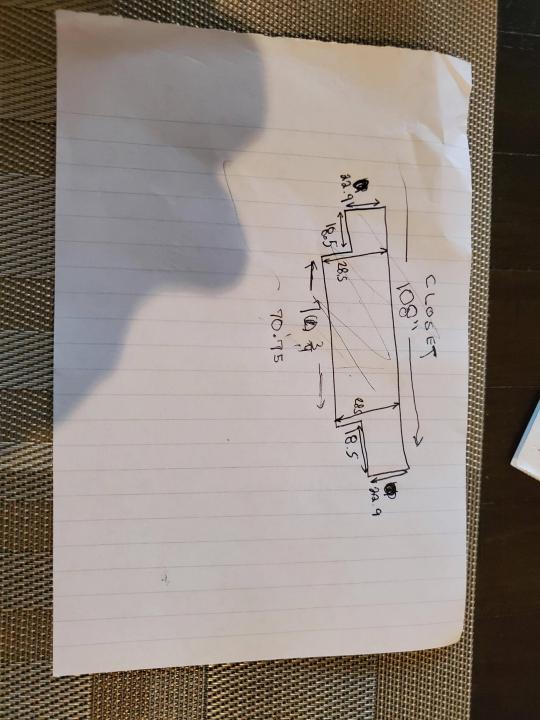

We moved into our first home almost a year ago (October 2019), and I picked out a room with 2 closets for my media/game/office area. Since the room isn't massive, I decided to build a desk into closet #1 to save space. Here, 1 of the 2 shelves was ripped out and the back area was repainted gray. A piece of cardboard was hung to represent my 49-inch monitor, and this setup also gave me an idea of how high the desk needed to be.

The top shelf was the initial drop point for all my Cat6 cabling in the house; I did 5 more runs after this (the WAN is dropped here as well).

I measured the closet and then went to Home Depot to grab a countertop. Based on the dimensions, it needed to be cut into a shape you would see in Tetris.

Getting to work, cutting the countertop.

My father-in-law helped me cut it to size in the driveway, and then we framed the closet and added kitchen cabinets to the bottom (used for storage and to hide a UPS). We also installed electrical sockets inside the closet. I bought and painted 2 kitchen cabinets, which I use for storage under my desk as well.

The holes let me run cables under my desk much more easily; I learned many of these techniques from the Battlestations subreddit and Setup Wars on YouTube. My daughter was a good helper when it came to finding studs.

Some of my cousins are network engineers, and they advised me to go with UniFi devices. Here I mounted my UniFi 16-port switch, my UniFi Security Gateway (I'll try out pfSense sometime down the line), and my HDHomeRun (the big antenna is in the attic). I have Cat6 drops in each room in the house, so everything runs here. On my USG, I have both a LAN #2 and a LAN #1 line running to the second closet in this room (the server room). This shot is from before the cable management.

Cable management completed in closet #1. I added an access point and connected 3 old Raspberry Pi devices I had lying around (1 for Pi-hole, an ad blocker; 1 for Unbound, a recursive DNS server; and 1 for Privoxy, a non-caching web proxy).

Rat's nest of wires under my desk. I mounted an amplifier, a DVD-ROM drive, a USB hub that takes input from up to 4 computers (it lets me switch between the servers in closet #2 while my USB mic, camera, keyboard, and headset keep working), and a small pull-out drawer.

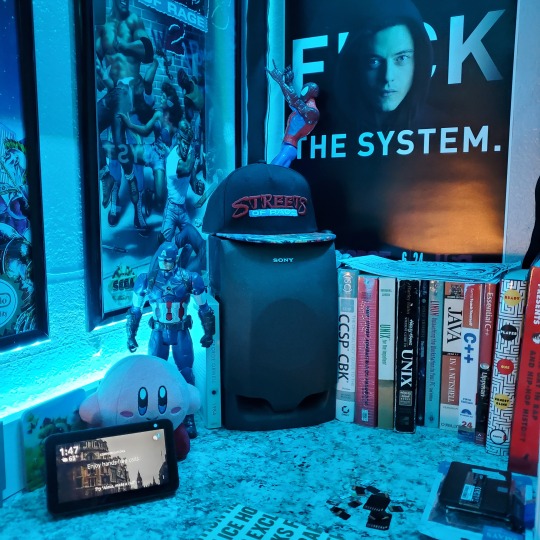

Cable management complete; night shot with Nanoleaf wall lights. The UniFi controller is mounted under the bookshelf, which lets me keep tabs on the network. I have a tablet on each side of the door frame (apps on them monitor my self-hosted web services). I drilled a 3-inch hole in my desk to fit a grommet wireless phone charger. All my smart lights either run on a schedule or turn on/off via an Alexa command. All of our smart devices, across the house and outside, run on their own VLAN for segmentation purposes.

Quick shot with desk light off. I'm thinking in the future of doing a build that will mount to the wall (where "game over" is shown).

Wooting One keyboard with custom keycaps and Swiftpoint Z mouse, plus Stream Deck (I'm going to make a gaming comeback one day!).

Good wallpapers are hard to find at this resolution, so I pieced together my own.

Speakers and books at inside corner of desk.


Closet #2, first look (this is in the same room, but off to the other side). I ran a few Cat6 cables from closet #1 into the attic and dropped them here (one on LAN #1, the other on LAN #2 for the USG). I had to add electrical sockets as well.

I have owned a ton of ThinkPads since my IBM days, so I figured I would test hooking them all up and having each one specialize in a different function (yes, I have a Proxmox box, but it's a decommissioned HP MicroServer on the top shelf that is getting repurposed with TrueNAS CORE). If you're wondering which OSes run on these laptops: Windows 10, Ubuntu, CentOS, and antiX. All of these units are hardwired into my managed Netgear 10-gigabit switch (only my servers on the floor have 10-gigabit NICs, which are useful for passing data between the two). A power strip is also mounted on the right side, next to another tablet used for monitoring. These laptop screens are usually turned off.

Computing inventory in image:

Lenovo Yoga Y500, Lenovo ThinkPad T420, Lenovo ThinkPad T430s, Lenovo ThinkPad Yoga 12, Lenovo ThinkPad Yoga 14, Lenovo ThinkPad W541 (used to self-host my web services), Lenovo S10-3T, and HP MicroServer N54L

Left side of closet #2

**Moved these Pis and the unmanaged switch to the outside part of the closet**

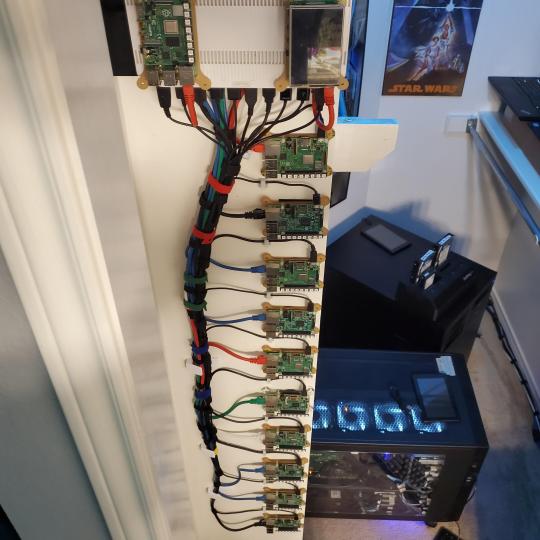

Since I have a bunch of Raspberry Pi 3s, I recently decided to get started with Kubernetes clusters via Rancher, running headless (my time is limited, but I'm hoping to have everything going by the 2020 holidays). The next image shows the rest of the Pis, but in total:

9x Raspberry Pi 3 and 2x Raspberry Pi 4

2nd shot with cable management. The idea is to get K3s going; each Pi has a Blinkt installed, and its lights will indicate how many pods are running on that node. The Pis are hardwired into a switch that is on LAN #2 (USG). I might also try out Docker Swarm simultaneously on my x86/x64 laptops. Here's my generic compose template (I have to redo the configs at a later date), but it gives you an idea of the type of web services I am looking to run: https://gist.github.com/antoinesylvia/3af241cbfa1179ed7806d2cc1c67bd31