#mainframe managed service

How can mainframe managed services help in filling the IT talent gap?

Managed mainframe services can play a crucial role in filling the IT talent gap in several ways:

Access to Specialized Expertise: Managed service providers often have a team of experts with deep knowledge of mainframe systems. This allows organizations to tap into specialized skills and experience they may not have in-house.

Scalability: Managed services can scale up or down as needed, ensuring that an organization has access to the right level of support without the need to hire and train full-time staff.

Cost Savings: Managed services often provide a cost-effective alternative to building an in-house team, allowing organizations to pay for the services they need without the overhead of full-time employees.

24/7 Support: Many managed service providers offer round-the-clock support, which is crucial for mainframes that are often used for critical business operations. This ensures that issues can be addressed promptly, minimizing downtime and disruptions.

Focus on Core Competencies: By outsourcing mainframe management to a specialized provider, organizations can free up their internal IT teams to focus on strategic projects and core competencies rather than getting bogged down in day-to-day mainframe operations.

Risk Mitigation: Managed service providers often have robust disaster recovery and security protocols in place. This can help organizations mitigate the risks associated with mainframe operations, ensuring data integrity and compliance with industry regulations.

Knowledge Transfer: Managed service providers can also facilitate knowledge transfer to the organization's internal IT team. This can help in-house staff develop their skills and become more self-sufficient over time.

Flexibility: Mainframe managed services can be tailored to the specific needs of the organization. Whether it's routine maintenance, troubleshooting, or capacity planning, these services can be customized to address unique challenges.

Rapid Adoption of Technology: Managed service providers are often at the forefront of technological advancements in the mainframe space. By working with them, organizations can quickly adopt new technologies and best practices without the need for extensive training or hiring efforts.

Compliance and Governance: Managed service providers are typically well-versed in industry-specific regulations and compliance requirements. They can help ensure that mainframe operations adhere to these standards, reducing the risk of non-compliance and associated penalties.

In summary, mainframe managed service providers can help organizations bridge the IT talent gap by providing access to specialized expertise, scalability, cost savings, and a range of other benefits. This allows organizations to effectively manage their mainframe systems while focusing on their core business objectives.

Maintec helps companies that rely on the IBM mainframe platform with complete mainframe managed services in the USA.

Mainframe Performance Optimization Techniques

Mainframe performance optimization is crucial for organizations relying on these powerful computing systems to ensure efficient and cost-effective operations. Here are some key techniques and best practices for optimizing mainframe performance:

1. Capacity Planning: Understand your workload and resource requirements. Accurately estimate future needs to allocate resources efficiently. This involves monitoring trends, historical data analysis, and growth projections.

2. Workload Management: Prioritize and allocate resources based on business needs. Ensure that critical workloads get the necessary resources while lower-priority tasks are appropriately throttled.

3. Batch Window Optimization: Efficiently schedule batch jobs to maximize system utilization. Minimize overlap and contention for resources during batch processing windows.

4. Storage Optimization: Regularly review and manage storage capacity. Employ data compression, data archiving, and data purging strategies to free up storage resources.

5. Indexing and Data Access: Optimize database performance by creating and maintaining efficient indexes. Tune SQL queries to minimize resource consumption and improve response times.

6. CICS and IMS Tuning: Tune your transaction processing environments like CICS (Customer Information Control System) and IMS (Information Management System) to minimize response times and resource utilization.

7. I/O Optimization: Reduce I/O bottlenecks by optimizing the placement of data sets and using techniques like buffering and caching.

8. Memory Management: Efficiently manage mainframe memory to minimize paging and maximize available RAM for critical tasks. Monitor memory usage and adjust configurations as needed.

9. CPU Optimization: Monitor CPU usage and identify resource-intensive tasks. Optimize code, reduce unnecessary CPU cycles, and consider parallel processing for CPU-bound tasks.

10. Subsystem Tuning: Mainframe environments typically run various subsystems, such as DB2 and MQ, on top of the z/OS operating system. Each subsystem, along with z/OS itself, should be tuned for optimal performance based on specific workload requirements.

11. Parallel Processing: Leverage parallel processing capabilities to distribute workloads across multiple processors or regions to improve processing speed and reduce contention.

12. Batch Processing Optimization: Optimize batch job execution by minimizing I/O, improving sorting algorithms, and parallelizing batch processing tasks.

13. Compression Techniques: Use compression algorithms to reduce the size of data stored on disk, which can lead to significant storage and I/O savings.

14. Monitoring and Performance Analysis Tools: Employ specialized tools and monitoring software to continuously assess system performance, detect bottlenecks, and troubleshoot issues in real time.

15. Tuning Documentation: Maintain comprehensive documentation of configuration settings, tuning parameters, and performance benchmarks. This documentation helps in identifying and resolving performance issues effectively.

16. Regular Maintenance: Keep the mainframe software and hardware up-to-date with the latest patches and updates provided by the vendor. Regular maintenance can resolve known performance issues.

17. Training and Skill Development: Invest in training for your mainframe staff to ensure they have the skills and knowledge to effectively manage and optimize the system.

18. Cost Management: Consider the cost implications of performance tuning. Sometimes, adding more resources may be more cost-effective than extensive tuning efforts.

19. Capacity Testing: Conduct load and stress testing to evaluate how the mainframe handles peak workloads. Identify potential bottlenecks and make necessary adjustments.

20. Security Considerations: Ensure that performance optimizations do not compromise mainframe security. Balance performance improvements with security requirements.
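Capacity planning (item 1) and capacity testing (item 19) both rest on projecting future demand from historical data. The following is a minimal Python sketch of that idea, assuming monthly peak CPU utilization in percent and a purely linear growth trend fitted by ordinary least squares. The function names, sample figures, and 85% planning threshold are hypothetical and not tied to any IBM tool.

```python
"""Sketch: project when CPU utilization crosses a planning threshold.

Assumes a simple linear growth trend fitted by ordinary least squares.
Real capacity planning would also account for seasonality, batch peaks,
and workload-specific growth. Sample data below is hypothetical.
"""
from typing import Optional, Sequence, Tuple


def fit_linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Return (slope, intercept) of the least-squares line through
    (month_index, value) pairs, with month indices 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def months_until_threshold(values: Sequence[float],
                           threshold: float) -> Optional[float]:
    """Months after the last observation until the trend line reaches
    `threshold`; None if the trend is flat or declining."""
    slope, intercept = fit_linear_trend(values)
    if slope <= 0:
        return None
    last_x = len(values) - 1
    crossing_x = (threshold - intercept) / slope
    # 0.0 means the trend is already at or above the threshold.
    return max(0.0, crossing_x - last_x)


if __name__ == "__main__":
    monthly_peak_cpu = [60, 62, 64, 66, 68, 70]  # hypothetical % busy
    print(months_until_threshold(monthly_peak_cpu, 85.0))  # 7.5
```

A linear fit is deliberately the simplest choice here; it gives an early warning that can then be checked against load and stress tests before committing to additional capacity.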

Mainframe performance optimization is an ongoing process that requires constant monitoring and adjustment to meet evolving business needs. By implementing these techniques and best practices, organizations can maximize the value of their mainframe investments and ensure smooth and efficient operations.

#Mainframe Application#data management#Database management systems#Modern mainframe applications#cloud services

Digital Transformation Services

Your Switch to a Digital Future – Digital Transformation Consulting Services

As a leading name among digital transformation companies and service providers, Enterprise Mobility has been handholding enterprises on their digital transformation journeys for two decades now.

#enterprise mobility#digital transformation#enterprise mobility management#enterprise mobility service#Mobility Strategy and Consulting#Custom Enterprise Mobile App#digital transformation service#digital transformation consulting#digital transformation company#Digital Transformation Consultation#Legacy Modernization#Legacy Modernization Services#Legacy App Modernization#Legacy Mainframe#Legacy Application Modernization#Legacy Application Maintenance#Legacy Application Management#App Modernization Services#Enterprise Application Modernization#IoT Development

Print ad for the world's first teledex, a portable Datanet communicator with an integrated artificial assistant.

By the 2020s, the Datanet was useful, but it was also a convoluted mess of protocols and standards designed around machine communication. Human users rarely took full advantage of the system because of this.

Maple thought: why bother sorting out the mess if a machine could do it for them?

The teleindexer (or teledex) was designed to take full advantage of the Datanet for their human user. It would crawl through its nodes, indexing various services, pages, and media streams that it suspected its user would be interested in.

Eventually, the teledex’s neuromorphic intelligence would imprint on their user. It would gain an innate understanding of their personality and behavior patterns, allowing it to find the perfect content and even take actions on their behalf.

The imprint would get scary good. The teledex could diagnose possible diseases and stress issues, engage in automated finance management, and take measures to protect the user’s identity and security.

That isn’t to say similar systems weren’t developed. Even before the teledex, crafty users and several startups attempted neuromorphic Datanet indexing using microcomputers and mainframes, but the teledex was the method that really blew up thanks to its convenient pattern (1 user, 1 teledex, portable).

PAL was initially released with two models, Draw and Pro. Several years later, Maple would release the Pal Mini, a cheaper screen-less design relying on vocal interfacing. Maple and its competitors would ultimately release thousands of various teleindexer models.

I recently started a Patreon! Support my work if this is something you're into: https://www.patreon.com/prokhorVLG

#sunsetsystem#art#artists on tumblr#sketch#illustration#technology#apple#cassette futurism#artificial intelligence#digital art#artwork#procreate

aita for Eating a bee and blaming it on my coworker?

i [12 indigo] am an apicultural engineedler for [a Large company name redacted]. we Provide a live software service so realtime support and updates are very Important. recently, a concentrated Effort has been made to port our old and Exceedingly convoluted network onto a new beehouse mainframework to comply with silicomb 2.0 standards and drag our Very tangled Very old codebase into the present decasweep. it has been a lot of effort over almost a full sweep, but the new mainframe is beautiful and well worth the time and will make it much easier to debug and re-bug the network going forward.

due to our service being live, our small team of developunishers were required to supervise the migration of our bees between mainframe overday, which we turned into a little Shindig since if all went well there wouldn't be much to do once Everything was underway. one of my coworkers [14 violet] who I will call aparna for this story brought faygo for us to celebrate with [she is Not a practicing juggalo but she still talks about growing up in the church All The Time. it is frankly Quite grating. we do not need to hear again about how you chose your paint aparna you do not actually paint anymore i fail to see how it is presently Relevant. and she Always seems to talk about it louder when she notices im listening]

anyway it was getting close to migration time and Most of my coworkers were there. we opened bottles of soda and began to prep the signal drones. [not like Her Imperious Condescension's drones, these are apicultural beenary signallers that serve signposts for the queen for the interframe migration. basically Her Smaller Majesty the Hive Queen Who Makes Our Code Work needs to know where to go to get to the new mainframe but we can't move her there directly since she's too vicious to be handled directly. instead we move the drones who we can manually override and she follows them into the new beehome.] this step of the process is Usually pretty smooth. the Most difficult part is usually when the queen moves and the Entire hive follows her; the multithreading can be somewhat difficult to manage and if the beestreams begin to cross over too much, they can jam the entrances to the new beehome, causing the formation to collapse and potentially bringing down the network. that's why we have to supervise the migration closely.

however. a Mistake was made. and it was made early.

at some point during setup one of the signal drones was knocked loose of its designated position. i do not know how this happened [but it's probably aparna's fault. she is always making these kinds of very Small mistakes. never anything Severe but little things. annoying things. many of them.] this is usually easy to correct except that it went unnoticed and, drawn by some instinct of insects Everywhere, found its way into my soda.

which i then drank.

i could tell the bee was in there right away, but my instinctive self defense reaction was to swallow and crush whatever was buzzing in my mouth with my Powerful esophageal muscles. so that is what I did. the signal drone died Swiftly in my throat. i was safe from the Threat of its sting - and we were down an essential component for the migration

i Panicked. i turned my head and frantically coughed up the signal drone, passing it off as the soda going down the wrong pipe. this dislodged the signal drone into my hand. i knew that if i were caught it would Not go well for me. if i got in Trouble at this job my lusus might start trying to push me toward the cavalreapers again. i could not let that happen.

then i noticed that someone's soda was open on the table next to me.

i invite you to guess what i did next. i also invite you to guess whose drink it happened to be.

it took some time for the absence of the signal drone to be noticed, but eventually aparna took a drink of her faygo and spat it out along with the body of the drone. it was Very clear what had happened and she took the blame for the subsequent Disaster of having to emergency patch the signal formation, as well as the debugging required to fix the resulting path collisions during the Full migration.

aparna will be fine. our boss likes her Very much overall and is Extremely unlikely to get rid of her [i would likely have come forward by now if i had done this to Any other coworker, but aparna is in no danger and Also she deserves it for being careless and Also it was probably her who knocked the drone out of formation to begin with], whereas i would Very likely have been fired had they known it was actually my fault. however i am concerned that aparna may be on to me. she has been glaring at me even More than usual for the past few days. i have glared back as i will Not be Cowed by a juggalo - and a lapsed one at that. she'll have to do better than an annoyance if she wants anything out of me.

so. am i the Asshole?

#OK^^^^^^Y THIS IS SO MUCH#THIS IS MY F^VORITE SUBMISSION EVER IM PRINTING IT OUT ^ND FR^MING IT ON MY W^LL#LITER^LLY I DONT C^RE IF THIS IS OBJECTIVELY ^ BULGE MOVE . WE ^LL DO CR^ZY THINGS IN THE N^ME OF PITCH FLIRTING#<- I ME^N WH^T WHO S^ID TH^T#am i the asshole

I wrote about the death of tech competition and its relationship to lax antitrust enforcement and regulatory capture for The American System in The American Conservative

Tech was forever a dynamic industry, where mainframes were bested by minicomputers, which were, in turn, devoured by PCs. Proprietary information services were subsumed into Gopher, Gopher was devoured by the web. If you didn’t like the management of the current technosphere, just wait a minute and there will be something new along presently. When it came to moving your relationships, data, and media over to the new service, the skids were so greased as to be nearly frictionless.

What happened? Did a new generation of tech founders figure out how to build an interoperability-proof computer that defied the laws of computer science? Hardly. No one has invented a digital Roach Motel, where users and their data check in but they can't check out. Digital tools remain stubbornly universal, and the attacker’s advantage is still in effect. Any walled garden is liable to having holes blasted in its perimeter by upstarts who want to help an incumbent’s corralled customers evacuate to greener pastures.

What changed was the posture of the state towards corporations. First, governments changed how they dealt with monopolies. Then, monopolies changed how governments treated reverse-engineering.

-A Murder Story: Whatever Happened to Interoperability?

I enjoyed your post about the huge printer and even huger mounds of paper you used at DEC. I was wondering—printers (and scanners) are the devil today…but were they even worse back then? I mean in terms of reliability/maintenance/issues printing from different types of machines. I’m imagining a dedicated person having to manage and service it, but maybe the hardware was more robust then?

Big fan of your blog! Lately I’ve been reading several books on computer history of the ‘70s and ‘80s and it’s been really cool to learn more about DEC.

They were probably just as bad if not worse than today, but from my perspective they were almost never down because we had a huge IT department to maintain them and also field service from the vendor (Last one I remember I think was made by Kodak).

Workstations, windows, WYSIWYG editors were still a few years off, so these machines were mission critical and not allowed to fail.

If you like DEC history, you might enjoy this article from my time in the mainframe engineering teams there. (Part of a now-defunct newsletter I may try to cobble together into a book eventually)

In the first four months of the Covid-19 pandemic, government leaders paid $100 million for management consultants at McKinsey to model the spread of the coronavirus and build online dashboards to project hospital capacity.

It's unsurprising that leaders turned to McKinsey for help, given the notorious backwardness of government technology. Our everyday experience with online shopping and search only highlights the stark contrast between user-friendly interfaces and the frustrating inefficiencies of government websites—or worse yet, the ongoing need to visit a government office to submit forms in person. The 2016 animated movie Zootopia depicts literal sloths running the DMV, a scene that was guaranteed to get laughs given our low expectations of government responsiveness.

More seriously, these doubts are reflected in the plummeting levels of public trust in government. From early Healthcare.gov failures to the more recent implosions of state unemployment websites, policymaking without attention to the technology that puts the policy into practice has led to disastrous consequences.

The root of the problem is that the government, the largest employer in the US, does not keep its employees up-to-date on the latest tools and technologies. When I served in the Obama White House as the nation’s first deputy chief technology officer, I had to learn constitutional basics and watch annual training videos on sexual harassment and cybersecurity. But I was never required to take a course on how to use technology to serve citizens and solve problems. In fact, the last significant legislation about what public professionals need to know was the Government Employees Training Act, from 1958, well before the internet was invented.

In the United States, public sector awareness of how to use data or human-centered design is very low. Out of 400-plus public servants surveyed in 2020, less than 25 percent received training in these more tech-enabled ways of working, though 70 percent said they wanted such training.

But knowing how to use new technology does not have to be an afterthought, and in some places it no longer is. In Singapore, the Civil Service Training College requires technology and digital-skills training for its 145,000 civilian public servants. Canada’s “Busrides” training platform gives its quarter-million public servants short podcasts on topics like data science, AI, and machine learning to listen to during their commutes. In Argentina, career advancement and salary raises are tied to the completion of training in human-centered design and data-analytical thinking. When public professionals possess these skills—learning how to use technology to work in more agile ways, getting smarter from both data and community engagement—we all benefit.

Today I serve as chief innovation officer for the state of New Jersey, working to improve state websites that deliver crucial information and services. When New Jersey’s aging mainframe strained under the load of Covid jobless claims, for example, we wrote forms in plain language, simplified and eliminated questions, revamped the design, and made the site mobile-friendly. Small fixes that came from sitting down and listening to claimants translated into 48 minutes saved per person per application. New Jersey also created a Covid-19 website in three days so that the public had the information they wanted in one place. We made more than 134,000 updates as the pandemic wore on, so that residents benefited from frequent improvements.

Now with the explosion of interest in artificial intelligence, Congress is turning its attention to ensuring that those who work in government learn more about the technology. US senators Gary Peters (D-Michigan) and Mike Braun (R-Indiana) are calling for universal leadership training in AI with the AI Leadership Training Act, which is moving forward to the full Senate for consideration. The bill directs the Office of Personnel Management (OPM), the federal government's human resources department, to train federal leadership in AI basics and risks. However, it does not yet mandate the teaching of how to use AI to improve how the government works.

The AI Leadership Training Act is an important step in the right direction, but it needs to go beyond mandating basic AI training. It should require that the OPM teach public servants how to use AI technologies to enhance public service by making government services more accessible, providing constant access to city services, helping analyze data to understand citizen needs, and creating new opportunities for the public to participate in democratic decisionmaking.

For instance, cities are already experimenting with AI-based image generation for participatory urban planning, while San Francisco’s PAIGE AI chatbot is helping to answer business owners' questions about how to sell to the city. Helsinki, Finland, uses an AI-powered decisionmaking tool to analyze data and provide recommendations on city policies. In Dubai, leaders are not just learning AI in general, but learning how to use ChatGPT specifically. The legislation, too, should mandate that the OPM not just teach what AI is, but how to use it to serve citizens.

In keeping with the practice in every other country, the legislation should require that the training be free. This is already the case for the military. On the civilian side, however, the OPM is required to charge a fee for its training programs. A course titled Enabling 21st-Century Leaders, for example, costs $2,200 per person. Even if the individual applies to their organization for reimbursement, too often programs do not have budgets set aside for up-skilling.

If we want public servants to understand AI, we cannot charge them for it. There is no need to do so, either. Building on a program created in New Jersey, six states are now collaborating with each other in a project called InnovateUS to develop free live and self-paced learning in digital, data, and innovation skills. Because the content is all openly licensed and designed specifically for public servants, it can easily be shared across states and with the federal government as well.

The Act should also demand that the training be easy to find. Even if Congress mandates the training, public professionals will have a hard time finding it without the physical infrastructure to ensure that public servants can take and track their learning about tech and data. In Germany, the federal government’s Digital Academy offers a single site for digital up-skilling to ensure widespread participation. By contrast, in the United States, every federal agency has its own (and sometimes more than one) website where employees can look for training opportunities, and the OPM does not advertise its training across the federal government. While the Department of Defense has started building USALearning.gov so that all employees could eventually have access to the same content, this project needs to be accelerated.

The Act should also require that data on the outcomes of AI training be collected and published. The current absence of data on federal employee training prevents managers, researchers, and taxpayers from properly evaluating these training initiatives. More comprehensive information about our public workforce, beyond just demographics and job titles, could be used to measure the impact of AI training on cost savings, innovation, and performance improvements in serving the American public.

Unlike other political reforms that could take generations to achieve in our highly partisan and divisive political climate, investing in people—teaching public professionals how to use AI and the latest technology to work in more agile, evidence-based, and participatory ways to solve problems—is something we can do right now to create institutions that are more responsive, reliable, and deserving of our trust.

I understand the hesitance to talk about training people in government. When I worked for the Obama White House, the communications team was reluctant to make any public pronouncements about investing in government lest we be labeled “Big Government” advocates. Since the Reagan years, Republicans have promoted a “small government” narrative. But what matters to most Americans is not big or small but that we have a better government.

SYSPRO ERP Software: ERP Enterprise Systems and ERP Solutions

Not only will you gain an understanding of your present state, but you’ll have the opportunity to design your future state. This helps you build organizational capabilities through more efficient processes and the elimination of any non-value-added features. Are users expressing frustrations over the system’s usability, functionality, or accessibility? Rather than trying to deal with all the problems at once, it helps to divide them into specific categories. ERP optimization is the best way to ensure your ERP system and associated processes continue to align with your business goals.

Most successful ERP implementations are led by an executive sponsor who sponsors the business case, gets approval to proceed, monitors progress, chairs the steering committee, removes roadblocks, and captures the benefits. The CIO works closely with the executive sponsor to make sure enough attention is paid to integration with existing systems, data migration, and infrastructure upgrades. The CIO also advises the executive sponsor on challenges and helps the executive sponsor select a firm specializing in ERP implementations.

Oracle Project Management helps you plan and track your projects, assign the best talent, balance capacity against demand, and scale resources up or down quickly as needs change. You stay actively informed about support-critical information through our built-in, bidirectional support. Get timely alerts on potential issues related to the SAP solution you use, well before you know that a problem might arise. Today, ERP systems are crucial for managing hundreds of businesses of all sizes and in all industries. To these firms, ERP is as indispensable as the electricity that keeps the lights on.

The sudden arrival of the Covid-19 pandemic resulted in an enormous economic downturn, with tens of millions... You’re going to need to assess the performance of your ERP system by first establishing KPIs. Once the ERP system has been successfully implemented, the KPIs will help you quantify the impact of the software, how it can be tailored, and what benefit you are getting in terms of ROI (return on investment). The SAP Product Versions listed in the tables below are supported by support package stacks. The support package level of this particular component version is a key part of the stack and a unique identifier for the support package stack level. SAP cannot predict the financial attractiveness of this model, as individual contract terms may differ considerably.
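Two figures often used to quantify ERP return on investment are ROI as a percentage and the payback period. The sketch below assumes the common textbook definitions, ROI = (total benefit - total cost) / total cost * 100 and payback = total cost / average monthly benefit; the function names and sample figures are hypothetical and not drawn from any vendor's methodology.

```python
"""Sketch of two common business-case metrics for an ERP project.

ROI (%) = (total benefit - total cost) / total cost * 100
Payback period (months) = total cost / average monthly benefit
Formulas follow common textbook definitions; sample figures are
hypothetical.
"""


def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Return on investment as a percentage of total cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100


def payback_months(total_cost: float, monthly_benefit: float) -> float:
    """Months of benefit needed to recover the initial cost."""
    if monthly_benefit <= 0:
        raise ValueError("monthly_benefit must be positive")
    return total_cost / monthly_benefit


if __name__ == "__main__":
    print(roi_percent(1_500_000, 1_000_000))  # 50.0
    print(payback_months(1_000_000, 50_000))  # 20.0
```

In practice the benefit side would come from the KPIs established before go-live (for example, reduced processing time or inventory carrying cost), compared against a pre-implementation baseline.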

Finally, project management experience can give an analyst a better understanding of how to handle risks and issues that may arise during an ERP project. This model helps customers extend their SAP on-premise application landscape into business processes where additional coverage with SAP's innovations helps maximize the value of their investment in SAP. Customers can leverage this model to determine which on-premise solutions from SAP's innovation portfolio best match their business needs.

In 1913, engineer Ford Whitman Harris developed what became known as the economic order quantity (EOQ) model, a paper-based manufacturing system for production scheduling. Toolmaker Black and Decker changed the game in 1964 when it became the first company to adopt a material requirements planning (MRP) solution that combined EOQ concepts with a mainframe computer. As a strategy, supply chain management (SCM) simply means having a plan in place to monitor and update supply chain activities in real time. It means actively managing the various activities that happen in a product’s lifecycle to maximize customer value and gain a competitive advantage. With an ERP solution designed for SCM, companies can manage their procurement and supply efforts with a few clicks, ensuring that goods, services, and other essential resources move at an adequate pace across the supply chain. Profitability is the driving force of all companies, making it fundamental among the components of ERP systems.
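The EOQ model mentioned in the history above picks the order size that balances ordering cost against holding cost, using Harris's formula EOQ = sqrt(2DS / H). The sketch below assumes its classic simplifications (constant demand, a fixed cost per order, no quantity discounts); the parameter names and sample numbers are chosen for illustration only.

```python
"""Sketch of Harris's economic order quantity (EOQ) formula.

EOQ = sqrt(2 * D * S / H), where (names chosen for this example):
  D = annual demand in units
  S = fixed cost per order
  H = holding cost per unit per year
Assumes constant demand, fixed ordering cost, and no quantity discounts.
"""
import math


def economic_order_quantity(annual_demand: float, order_cost: float,
                            holding_cost: float) -> float:
    """Order size that minimizes combined ordering and holding cost."""
    if annual_demand <= 0 or order_cost <= 0 or holding_cost <= 0:
        raise ValueError("all inputs must be positive")
    return math.sqrt(2 * annual_demand * order_cost / holding_cost)


def annual_inventory_cost(order_qty: float, annual_demand: float,
                          order_cost: float, holding_cost: float) -> float:
    """Ordering cost (orders per year * cost per order) plus holding
    cost (average inventory of order_qty / 2 * holding cost per unit)."""
    ordering = (annual_demand / order_qty) * order_cost
    holding = (order_qty / 2) * holding_cost
    return ordering + holding


if __name__ == "__main__":
    q = economic_order_quantity(1000, 10, 2)
    print(q)  # 100.0
    print(annual_inventory_cost(q, 1000, 10, 2))  # 200.0
```

At the EOQ, annual ordering cost and annual holding cost come out equal (100.0 each in this example); ordering either fewer or more units per order raises the total.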

Jumpstart your selection project with a free, prebuilt, customizable ERP requirements template. "People need to step up and have these conversations and bring the business into the process," he said. As your first line of defense when issues come up, our Help Desk guarantees a response time of no more than eight hours.

#erp support#erp support outsourcing#erp support services#erp support specialist#erp enterprise resource planning#enterprise resource planning erp

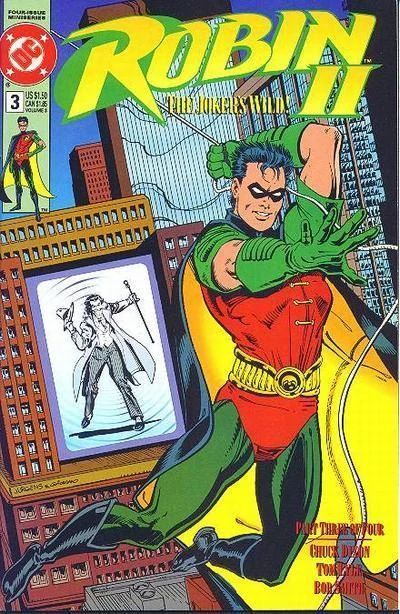

Robin II (vol. 1) #3: …a Comedy Tonight!

Read Date: January 07, 2023

Cover Date: January 1992

● Writer: Chuck Dixon ● Penciler: Tom Lyle ● Inker: Bob Smith ● Colorist: Adrienne Roy ● Letterer: Tim Harkins ● Editor: Kelley Puckett ◦ Dennis O'Neil ●

**HERE BE SPOILERS:** Skip ahead to the fan art/podcast to avoid spoilers

● (pg 16) Tim managed to shut down the Batcomputer before Joker could put a virus onto it

● this Joker looks fabulous

● (pg 21) heheheh, the GCPD bomb squad just shat themselves

● 👏👏👏👏

Synopsis:

The Joker hacks into Gotham City's computers, including emergency services and government systems, and throws the city into disarray, with power outages and fires everywhere. He has sent a tape to the Mayor, who decides to withhold it from the public in order to save face. This leaves it up to Commissioner Gordon to deal with it all. Gordon is forced to confer with Robin, because Batman is out of town.

The next day, the Joker hacks into the Jumbotron at Gotham Stadium during the Hammer Bowl. He demands one billion dollars as ransom to set the city back in order, but with the catch that it must be delivered by Batman personally. Alfred realizes that the Joker has called Tim's bluff, and is well aware that Batman isn't in the city.

Later, Tim acts as Dungeon Master for a game of Warlocks and Warriors with his friends. Faced with the challenge of creating a scenario for them to play out, Tim sets up something similar to what is actually happening in Gotham: the king is away, and a mad wizard has taken over the country, leaving only the king's son to deal with it. Ives recommends that the prince follow the flow of the wizard's magic to track him down. This makes Tim realize that he should follow the Joker's trail over the internet by hacking, and he leaves the game without explanation.

Tim attempts to use the Batcave's two Crays to hack his way into the Joker's mainframe. After hours of digging his way through the server's shell, Tim realizes that the Joker has actually used the connection Tim established to hack into the Crays themselves. Desperately, Tim cuts the power before the Joker can get any information from them.

The Joker causes even more havoc in Gotham, so much so that the mayor asks to have the city declared a disaster area. Tim decides to stop reacting and do some real detective work. He sneaks into the house of the kidnapped Dr. Osgood Pellinger, seeking clues. Eventually, he comes across a shoebox filled with photos and memorabilia of Pellinger's childhood dog. Meanwhile, the Joker delivers his demands to the mayor's office, and a frustrated Commissioner Gordon warns Robin against facing the Joker alone…

(https://dc.fandom.com/wiki/Robin_II_Vol_1_3)

Fan Art: RED ROBIN / TIM DRAKE (Jay Lycurgo) by MrSpikeArt

Accompanying Podcast:

● Everyone Loves the Drake - episode 19

#dc#dc comics#my dc read#podcast recommendation#podcast - everyone loves the drake#comics#comic books#fan art#joker#batman#robin#robin (tim drake)#tim drake


Text

Mainframe Managed Services

Mainframe managed services offer numerous advantages, particularly in terms of efficiency, cost-effectiveness, security, and scalability. Here are some of the key advantages:

Cost Efficiency: Reduce operational expenses through flexible pricing models.

Expert Support: Access specialized professionals for optimized performance.

Reliability: Proactive monitoring minimizes downtime and ensures continuous operation.

Scalability: Easily adjust resources to meet changing demands.

Security & Compliance: Robust measures safeguard data and ensure regulatory adherence.

Focus on Core Activities: Free up internal resources for strategic initiatives.

24/7 Support: Round-the-clock assistance for uninterrupted operations.

Access to Latest Technologies: Leverage advanced mainframe solutions without heavy investments.

Disaster Recovery: Ensure business continuity and data protection.

Simplified Vendor Management: Single point of contact for streamlined operations.
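"Proactive monitoring" generally means watching system metrics and raising an alert before a problem turns into an outage. The sketch below is a minimal illustration under assumed conditions: a hypothetical feed of CPU-utilization samples and an arbitrary rule (alert once utilization stays above a threshold for N consecutive samples). The `Sample` type, threshold and sample count are placeholders; real providers use their own tooling and tuned thresholds.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    timestamp: str      # hypothetical timestamp label from a metrics feed
    cpu_percent: float  # CPU utilization, 0-100


def sustained_breaches(samples: list[Sample], threshold: float = 90.0,
                       consecutive: int = 3) -> list[str]:
    """Return the timestamps at which utilization has stayed above
    `threshold` for `consecutive` samples in a row (one alert per run)."""
    alerts = []
    run = 0
    for s in samples:
        if s.cpu_percent > threshold:
            run += 1
            if run == consecutive:  # fire once, when the run first reaches the limit
                alerts.append(s.timestamp)
        else:
            run = 0  # a normal reading ends the current run
    return alerts


if __name__ == "__main__":
    readings = [85, 92, 95, 97, 99, 70, 91, 93]
    feed = [Sample(f"t{i}", v) for i, v in enumerate(readings)]
    print(sustained_breaches(feed))  # ['t3']
```

Requiring several consecutive breaches is a simple way to avoid alerting on a single momentary spike.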

#mainframe managed services#mainframe services#mainframe as a service#ibm mainframe#managed mainframe services


Text

As a direct analogy for what goes on inside real-life computers, the original TRON film is, shall we say, somewhat lacking. Just to take one example, computer programs are represented as people, but they're driving vehicles from video games? But shouldn't those video games also be people? I could go on, but that's not the point of this post (or, perhaps, of the movie). I think TRON does work as a broader allegory, using mainly theological concepts to reflect the state of computing, and the changes it was going through, at the time it was made.

The religion of the Programs in the film is of a fascinating, extremely polytheistic sort. I'm no religious expert, so I'm not sure there's any direct real-life analogy to the concept of each individual Program having their own personal divine creator, "my User". Meanwhile, the villain of the film, the Master Control Program, is trying to stamp out this polytheistic faith, and replace it with monotheism, with himself as the One True God. But in the end, his ambitions are thwarted and he is defeated by Tron and his friends, leading to all the I/O Towers in the system lighting up and Programs presumably being able to directly communicate with their Users once more.

I think that this plot can be seen as an allegory for the "personal computer revolution" that was taking place in the 80s. Early computing was mainly centralized, with users connecting to a large mainframe through "dumb" terminals, the actual work being done at one central location and the results being sent to screens or printers. But by the time TRON was released in cinemas, people could have their own, much smaller computers in their homes, creating software and documents only for themselves, without having to rely on the processing power of a mainframe. The allegory of the film, then, is that the MCP is an old mainframe trying to maintain the old order, wanting the computers of ENCOM to be simple mindless terminals, with the Users getting access to their Programs only through the grace of the MCP. His defeat signals the end of this paradigm, with Alan, Flynn and everyone else finally being able to have their own "personal computers", though still connected to each other. If I may be so bold as to say it, the world had gone from digital monotheism to digital polytheism.

I also think that this allegory is still relevant today, since current developments in the way we consume media and use the internet seem to be moving back towards a kind of centralization, a kind of monotheism. Stuff like social media platforms (including, dare I say it, Tumblr) and especially streaming services feel to me somewhat like the MCP returning, only allowing us access to media at the whims of the real-life Dillingers in charge of the big corporations. Looking at the whole HBO debacle, I can hear Walter and Dillinger arguing. "User requests are what computers are for!" "Doing our business is what computers are for!"

I would be delighted if the next TRON movie addresses these ideas, though I have my doubts that a film made by Disney today would even dare to suggest that streaming services are anything other than the glorious shining future of media. I sometimes get the feeling that the vaguely anti-corporate concepts of the original film only managed to slip through because the higher-ups at Disney at the time weren't paying too much attention.

#tron#tron 1982#streaming services#monotheism#polytheism#religion#disney#allegory#access to infinity train is suspended#report to dillinger immediately#end of line#I want Uprising on DVD dammit


Text

Watch "The Fast Fourier Transform Algorithm" on YouTube


NFT STO Irene bae Kim management system AI from Ethereum with virtual machines mainframe plus delivery of cicd streaming engine and services NFT STO Candice Kim solution technology management system AI for SVP Imperium Kevin Kim management system AI with Unitron AI for protocols protection management system AI for firewall prime ai.


Photo

Banking

44 of the top 50 banks and other financial institutions run on IBM Z mainframes.

Banks of all kinds are required to process massive amounts of data. High-frequency trading is a priority for investment banks, and they must react quickly to changes in the financial markets. All banks must execute huge volumes of financial transactions, including credit card payments, cash withdrawals, and online account updates. Banks use mainframes to process data at speeds that traditional servers cannot match.

Retail banks, as reliant on mainframe computing as they are, pale in comparison to investment banks that routinely engage in high-frequency trading. These businesses require a lot of processing power, since they not only serve customers but also need to react rapidly to any shifts in the financial market. The processing capacity of mainframe computers supports that need for speed and helps keep these firms ahead of the competition. That is why every bank, no matter how big or small, needs mainframe computers to keep track of data and sort through thousands of transactions. The computational power of commodity servers is simply insufficient to manage such massive amounts of data.
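At its core, "executing transactions" means applying each payment, withdrawal or account update to a balance and rejecting the ones that fail validation, without letting a rejected item corrupt the ledger. The sketch below is a deliberately tiny, in-memory illustration with a hypothetical account model and hypothetical `"credit"`/`"debit"` codes; production banking systems add transaction managers, databases, locking, logging and recovery that this omits entirely.

```python
from decimal import Decimal


def post_batch(balances: dict[str, Decimal],
               transactions: list[tuple[str, str, Decimal]]):
    """Apply (account_id, kind, amount) transactions in order.

    `kind` is "credit" or "debit" (hypothetical codes). Unknown accounts,
    non-positive amounts, unknown kinds and debits that would overdraw the
    account are rejected and leave balances untouched.
    Returns (new_balances, rejected)."""
    new_balances = dict(balances)  # work on a copy; the input is not mutated
    rejected = []
    for account, kind, amount in transactions:
        if account not in new_balances or amount <= 0:
            rejected.append((account, kind, amount))
            continue
        if kind == "credit":
            new_balances[account] += amount
        elif kind == "debit" and new_balances[account] >= amount:
            new_balances[account] -= amount
        else:
            rejected.append((account, kind, amount))
    return new_balances, rejected
```

`Decimal` is used instead of `float` so that currency amounts are represented exactly. For example, posting a 30.00 debit to an account holding 100.00 leaves 70.00, while a 50.00 debit against a 20.00 balance is returned in the rejected list.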


Text

The Impact of Legacy Systems on Business Agility and Innovation

In today's fast-paced business environment, agility and innovation are crucial for staying competitive. Companies must quickly adapt to changing market conditions, embrace new technologies, and continuously innovate to meet evolving customer demands. However, many organizations find themselves hampered by legacy systems – outdated software and hardware that can hinder progress and stifle innovation. In this blog, we will explore the impact of legacy systems on business agility and innovation and discuss strategies for overcoming these challenges.

Understanding Legacy Systems

Legacy systems are outdated computer systems or applications that are still in use, despite being superseded by newer technologies. These systems are often deeply embedded within an organization's operations and can be critical for day-to-day functions. Common examples include old mainframes, early versions of enterprise resource planning software, and outdated customer relationship management systems.

The Challenges of Legacy Systems

Technological Obsolescence

Legacy systems are often built on outdated technologies that lack compatibility with modern software and hardware. This technological gap can create significant hurdles when attempting to integrate new tools or platforms, limiting the organization's ability to adopt innovative solutions.

High Maintenance Costs

Maintaining and supporting legacy systems can be expensive and resource-intensive. As these systems age, finding skilled personnel who are familiar with the technology becomes increasingly difficult. Additionally, parts and support services may become scarce, driving up maintenance costs.

Data Silos

Legacy systems often operate in isolation, creating data silos that hinder information flow across the organization. This fragmentation can impede decision-making processes and slow down the implementation of new initiatives, reducing overall agility.

Security Vulnerabilities

Older systems are more susceptible to security breaches due to outdated security protocols and the lack of regular updates. These vulnerabilities can pose significant risks to the organization, including data breaches and compliance issues.

Reduced User Experience

Legacy systems often lack the user-friendly interfaces and features found in modern software. This can lead to inefficiencies and frustration among employees, reducing productivity and stifling innovation.

Impact on Business Agility

Slow Response to Market Changes

Legacy systems can severely limit a company's ability to respond quickly to market changes. The rigidity of these systems makes it challenging to implement new processes or pivot strategies, causing delays that can result in missed opportunities.

Inhibited Collaboration

The data silos created by legacy systems hinder collaboration between departments and teams. Without seamless information sharing, it becomes difficult to coordinate efforts and innovate effectively, slowing down the overall pace of the business.

Stifled Innovation

Innovation requires experimentation and the ability to quickly deploy new ideas. Legacy systems, with their inherent inflexibility, make it difficult to test and implement innovative solutions. This stifles creativity and can prevent the organization from exploring new business models or technologies.

Strategies for Overcoming Legacy System Challenges

Incremental Modernization

Instead of attempting a full-scale replacement of legacy systems, consider an incremental approach. Gradually modernize components of the system, integrating them with modern technologies. This approach reduces risk and allows the organization to adapt progressively.
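One common way to modernize incrementally is the "strangler fig" pattern: a routing layer sends requests for functions that have already been migrated to the new implementation and everything else to the legacy system, so components can be moved one at a time and moved back if something goes wrong. The sketch below assumes hypothetical handler functions and operation names; it shows one possible shape of the approach, not a prescribed design.

```python
from typing import Callable

Handler = Callable[[dict], dict]


def legacy_handler(request: dict) -> dict:
    # Stand-in for a call into the legacy system (hypothetical).
    return {"served_by": "legacy", "op": request["op"]}


def modern_handler(request: dict) -> dict:
    # Stand-in for the new, modernized service (hypothetical).
    return {"served_by": "modern", "op": request["op"]}


class IncrementalRouter:
    """Routes each operation to the modern handler once it has been
    migrated; anything not yet migrated still goes to the legacy handler."""

    def __init__(self, legacy: Handler, modern: Handler) -> None:
        self.legacy = legacy
        self.modern = modern
        self.migrated: set[str] = set()

    def migrate(self, op: str) -> None:
        self.migrated.add(op)

    def rollback(self, op: str) -> None:
        # Moving an operation back is just removing it from the set,
        # which keeps each migration step low-risk.
        self.migrated.discard(op)

    def handle(self, request: dict) -> dict:
        handler = self.modern if request["op"] in self.migrated else self.legacy
        return handler(request)


if __name__ == "__main__":
    router = IncrementalRouter(legacy_handler, modern_handler)
    router.migrate("get_balance")
    print(router.handle({"op": "get_balance"})["served_by"])   # modern
    print(router.handle({"op": "post_payment"})["served_by"])  # legacy
```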

Leverage Cloud Technologies

Cloud computing offers a scalable and flexible solution for modernizing legacy systems. By migrating to the cloud, organizations can reduce infrastructure costs, improve data accessibility, and enhance collaboration. Cloud platforms also provide advanced security features and regular updates.

API Integration

Application Programming Interfaces (APIs) can bridge the gap between legacy systems and modern applications. By developing APIs, organizations can enable legacy systems to communicate with new technologies, facilitating data integration and improving overall efficiency.
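As an illustration, many legacy systems exchange fixed-width text records, and a thin adapter can translate them into JSON that modern applications consume. The record layout below (field names, offsets, and a balance stored as an integer number of cents) is entirely hypothetical; a real adapter would follow the legacy system's own record definitions and would typically sit behind an HTTP endpoint, which this sketch leaves out.

```python
import json
from decimal import Decimal

# Hypothetical fixed-width layout: (field name, start offset, length)
LAYOUT = [
    ("account_id", 0, 8),
    ("customer_name", 8, 20),
    ("balance_cents", 28, 11),
]
RECORD_LENGTH = 39


def parse_record(line: str) -> dict:
    """Slice one fixed-width legacy record into named, trimmed fields."""
    if len(line) != RECORD_LENGTH:
        raise ValueError(f"expected {RECORD_LENGTH} characters, got {len(line)}")
    fields = {name: line[start:start + length].strip() for name, start, length in LAYOUT}
    # The legacy field holds whole cents; expose it as a two-decimal string.
    cents = Decimal(int(fields.pop("balance_cents")))
    fields["balance"] = str((cents / 100).quantize(Decimal("0.01")))
    return fields


def records_to_json(lines: list[str]) -> str:
    """What an API endpoint wrapping the legacy data might return."""
    return json.dumps([parse_record(line) for line in lines])


if __name__ == "__main__":
    sample = "ACCT0001" + "JANE DOE".ljust(20) + "00000012345"
    print(records_to_json([sample]))
    # [{"account_id": "ACCT0001", "customer_name": "JANE DOE", "balance": "123.45"}]
```

Keeping the layout in a single table means that when the legacy record format changes, only `LAYOUT` and `RECORD_LENGTH` need updating, not the consumers of the JSON.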

Invest in Training

Investing in training for IT staff and end-users is crucial for successful legacy system modernization. Training ensures that employees are equipped with the necessary skills to manage and operate new technologies, reducing resistance and improving adoption rates.

Engage in Strategic Planning

A well-defined strategic plan is essential for legacy system modernization. Engage stakeholders across the organization to understand their needs and pain points. Develop a clear roadmap that outlines the steps for modernization, including timelines, budgets, and key performance indicators.

Conclusion

Legacy systems can significantly impact business agility and innovation, creating barriers that hinder progress and stifle creativity. However, with a strategic approach to modernization, organizations can overcome these challenges and unlock new opportunities for growth. By leveraging modern technologies, integrating APIs, and investing in training, businesses can enhance their agility, foster innovation, and stay competitive in an ever-evolving market. The key is to recognize the limitations of legacy systems and take proactive steps towards a more flexible and innovative future.
