#python projects with source code


Text

Best Python projects with source code

Want to build real-world Python projects? Takeoff Projects brings you the best Python projects with source code to boost your coding skills! Whether you're a beginner or an expert, our projects cover web development, data science, AI, and more. Get hands-on experience, understand common coding logic, and improve your resume with practical projects. Each project comes with complete source code and guidance, making learning easy. Start your Python projects today with Takeoff Projects and turn ideas into reality.

Python is one of the most popular programming languages, and working on real-world projects helps improve your coding skills. Python projects with source code give you hands-on experience and a better understanding of how different applications work. Whether you are a beginner or an experienced developer, these projects help you learn web development, data science, automation, and AI. Having access to the source code lets you explore, modify, and extend each project to fit your own requirements.

Building Python projects not only sharpens your skills but also boosts your resume. Employers prefer candidates with practical knowledge, and working on real projects sets you apart. From simple applications to advanced systems, these projects help you develop coding logic and problem-solving strategies. By working on Python projects with source code, you can enhance your programming abilities and build a strong portfolio to showcase your knowledge.

Conclusion: Working on Python projects with source code is one of the best ways to improve your coding skills and get real-world experience. It helps you understand programming concepts, develop problem-solving abilities, and build a strong portfolio. Whether you are a beginner or an expert, hands-on projects make learning more effective. Takeoff Projects offers a wide range of Python projects with complete source code to help you grow in your career. Start building your own projects today and take your Python skills to the next level.

0 notes

Text

Introduction: As a high school student in the 21st century, there's no denying the importance of computer science in today's world. Whether you're a seasoned programmer or just dipping your toes into the world of coding, the power of computer science is undeniable. In this blog, I'll share my journey as a 12th-grader venturing into the fascinating realms of C, C++, and Python, and how this journey has not only improved my computer science profile but also shaped my outlook on technology and problem-solving.

Chapter 1: The Foundations - Learning C

Learning C:

C, often referred to as the "mother of all programming languages," is where my journey began. Its simplicity and efficiency make it an excellent choice for beginners. As a high school student with limited programming experience, I decided to start with the basics.

Challenges and Triumphs:

Learning C came with its fair share of challenges, but it was incredibly rewarding. I tackled problems like understanding pointers and memory management, and I quickly realized that the core concepts of C would lay a strong foundation for my future endeavors in computer science.

Chapter 2: Building on the Basics - C++

Transition to C++:

With C under my belt, I transitioned to C++. C++ builds upon the concepts of C while introducing the object-oriented programming paradigm. It was a natural progression, and I found myself enjoying the flexibility and power it offered.

Projects and Applications:

I started working on small projects and applications in C++. From simple text-based games to data structures and algorithms implementations, C++ opened up a world of possibilities. It was during this phase that I began to see how the knowledge of programming languages could translate into tangible solutions.

Chapter 3: Python - The Versatile Language

Exploring Python:

Python is often praised for its simplicity and readability. As I delved into Python, I realized why it's a favorite among developers for a wide range of applications, from web development to machine learning.

Python in Real-Life Projects:

Python allowed me to take on real-life projects with ease. I built web applications using frameworks like Flask and Django, and I even dabbled in data analysis and machine learning. The versatility of Python broadened my horizons and showed me the real-world applications of computer science.

Chapter 4: A Glimpse into the Future

Continual Learning:

As I prepare to graduate high school and venture into higher education, my journey with C, C++, and Python has instilled in me the importance of continual learning. The field of computer science is dynamic, and staying up-to-date with the latest technologies and trends is crucial.

Networking and Collaboration:

I've also come to appreciate the significance of networking and collaboration in the computer science community. Joining online forums, participating in coding challenges, and collaborating on open-source projects have enriched my learning experience.

Conclusion: Embracing the World of Computer Science

My journey as a 12th-grader exploring C, C++, and Python has been an enlightening experience. These languages have not only improved my computer science profile but have also given me a broader perspective on problem-solving and technology. As I step into the future, I'm excited to see where this journey will take me, and I'm ready to embrace the ever-evolving world of computer science.

If you're a fellow student or someone curious about programming, I encourage you to take the plunge and start your own journey. With determination and a willingness to learn, the world of computer science is yours to explore and conquer.

#Computer Science#Programming Languages#Learning Journey#C Programming#C++ Programming#Python Programming#Coding Tips#Programming Projects#Programming Tutorials#Problem-Solving#High School Education#Student Life#Personal Growth#Programming Challenges#Technology Trends#Future in Computer Science#Community Engagement#Open Source#Programming Communities#Technology and Society

3 notes


Text

Essentials You Need to Become a Web Developer

HTML, CSS, and JavaScript Mastery

Text Editor/Integrated Development Environment (IDE): Popular choices include Visual Studio Code and Sublime Text.

Version Control/Git: Platforms like GitHub, GitLab, and Bitbucket allow you to track changes, collaborate with others, and contribute to open-source projects.

Responsive Web Design Skills: Learn a CSS framework like Bootstrap, get comfortable with CSS layout tools like Flexbox, and master media queries.

Understanding of Web Browsers: Familiarize yourself with browser developer tools for debugging and testing your code.

Front-End Frameworks: React, Angular, and Vue.js are powerful tools for building dynamic and interactive web applications.

Back-End Development Skills: Understand server-side languages and runtimes (e.g., Node.js, Python, Ruby, PHP) and databases (e.g., MySQL, MongoDB).

Web Hosting and Deployment Knowledge: Platforms like Heroku, Vercel, Netlify, or AWS can help simplify this process.

Basic DevOps and CI/CD Understanding

Soft Skills and Problem-Solving: Effective communication, teamwork, and problem-solving skills

Confidence in Yourself: Confidence is a powerful asset. Believe in your abilities, and don't be afraid to take on challenging projects. The more you trust yourself, the more you'll be able to tackle complex coding tasks and overcome obstacles with determination.

#code#codeblr#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code

2K notes


Text

AI continues to be useful, annoying everyone

Okay, look - as much as I've been fairly on the side of "this is actually a pretty incredible technology that does have lots of actual practical uses if used correctly and with knowledge of its shortfalls" throughout the ongoing "AI era", I must admit - I don't use it as a tool too much myself.

I am all too aware of how small errors can slip in here and there, even in output that seems above the level, and, perhaps more importantly, I still have a bit of that personal pride in being able to do things myself! I like the feeling that I have learned a skill, done research on how to do a thing and then deployed that knowledge to get the result I want. It's the bread and butter of working in tech, after all.

But here's the thing: once you move beyond beginner-level Python courses and well-documented Windows applications, there will often be times when you want to achieve a very particular thing, which involves working with a specialist application. This will usually be an application written for domain experts of this specialization, and so it will not be user-friendly, and it will certainly not be "outsider-friendly".

So you will download the application. Maybe it's on the command line, has some light scripting involved in a language you've never used, or just has a byzantine shorthand command structure. There is a reference document - thankfully the authors are not that insane - but there are very few examples, and none doing exactly what you want. In order to do the useful thing you want to do, they expect you to understand how the application/platform/scripting language works, to the extent that you can apply it in a novel context.

Which is all fine and well, and normally I would not recommend anybody use a tool at length unless they have taken the time to understand it to the degree at which they know what they are doing. Except I do not wish to use the tool at length. I wish to do one, singular operation, as part of a larger project, and then never touch it again. It is unfortunately not worth my time to sink a few hours into learning a technology that I will use once for twenty seconds and then never again.

So you spend time scouring the specialist forums, pulling up a few syntax examples you find randomly of their code and trying to string together the example commands in the docs. If you're lucky, and the syntax has enough in common with something you're familiar with, you should be able to bodge together something that works in 15-20 minutes.

But if you're not lucky, the next step would have been signing up to that forum, or making a post on that subreddit, creating a thread called "Hey, newbie here, needing help with..." and then waiting 24-48 hours to hear back from somebody, probably some years-deep veteran looking down on you with scorn for not having put in the effort to learn their Thing, setting aside the fact that you normally have no reason to. It's annoying, disruptive, and takes time.

Now I can ask ChatGPT, and it will have ingested all those docs, all those forums, and it will give you a correct answer in 20 seconds about what you were doing wrong. Because friends, this is where a powerful attention model excels, because you are not asking it to manage a complex system, but to collate complex sources into a simple synthesis. The LLM has already trained in this inference, and it can reproduce it in the blink of an eye, and then deliver information about this inference in the form of a user dialog.

When people say that AI is the future of tutoring, this is what it means. Instead of waiting days to get a reply from a bored human expert, the machine knowledge blender has already got it ready to retrieve via a natural language query, with all the followup Q&A to expand your own knowledge you could desire. And the great thing about applying this to code or scripting syntax is that you can immediately verify whether the output is correct by running it and seeing if it performs as expected, so a lot of the danger is reduced (not that any modern mainstream attention model is likely to make a mistake on something as simple as a single-line command unless it's something barely documented online, that is).

It's incredibly useful, and it outdoes the capacity of any individual human researcher, as well as the latency of existing human experts. That's something you can't argue we've ever had better before, in any context, and it's something you can actively make use of today. And I will, because it's too good not to - despite my pride.

130 notes


Note

Greetings moose-mousse, it is a bit niche/weird (but let's see how far it goes) but may I ask for some suggestions / advice concerning making pseudo-code / fake code scripts / programs to put onto scenes to film? Because I work towards portraying sidestream programming environments of the past (yet customized into my own 16^12 worldbuilding stuff) to show for films I look forth to produce this summer and idk where to pull fitting samples, code style guides & whatnot to put on various screens.

A great start: Wireshark. It is a packet sniffer, and especially on wireless networks it looks great as background busywork. Point it at a public network and you will get plenty of noise to look technical. Basically, in wireless networks everyone is just screaming things. Only the intended target really reacts, and things are encrypted and all sorts of safety is in place, but the fact of the matter is still that everyone is screaming. So you can just have Wireshark show you everything that is happening, and it gives a nice constant feed of technically valid but essentially nonsense data to look at. Customize the look a bit and you are set. Note that this is both legal and fine. This is stuff it is ok to yell about in public.

The other is of course a command console. For that I would recommend installing Python, and then simply making an infinite loop that prints technical nonsense. If you need sample text, then install anything by writing "pip install ______", copy the text and print it in your own Python thing. It creates infinite nonsense text that looks real (because... it is a copy of something real).

Both of these should take less than an hour to set up, and will create great-looking backgrounds on screens for "someone doing something technical".

If you want code just staying still on the screen, then basically install Visual Studio Code, find an open-source project on GitHub and open a file. Open files of a few different types and Visual Studio Code will automatically recommend extensions that color the text to look like proper code.
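The console idea above could be sketched roughly like this. It is only a minimal illustration: the subsystem names and messages are invented filler, and `fake_log_lines` and `run` are made-up helper names, not part of any library.

```python
import itertools
import random
import time

# All names and message fragments here are invented filler for the screen.
SUBSYSTEMS = ["netd", "kernel", "authsvc", "scheduler", "iobus"]
ACTIONS = ["handshake ok", "retrying packet", "cache flushed",
           "checksum verified", "buffer resized"]


def fake_log_lines(seed=None):
    """Yield an endless stream of plausible-looking but meaningless log lines."""
    rng = random.Random(seed)
    tick = 0
    while True:  # the infinite loop, wrapped in a generator
        tick += rng.randint(1, 40)
        subsystem = rng.choice(SUBSYSTEMS)
        action = rng.choice(ACTIONS)
        address = rng.getrandbits(32)
        yield f"[{tick:08d}] {subsystem:<9} 0x{address:08x} {action}"


def run(limit=None, delay=0.05, seed=None):
    """Print lines forever, or only `limit` lines when a limit is given."""
    for line in itertools.islice(fake_log_lines(seed), limit):
        print(line)
        time.sleep(delay)


run(limit=5, delay=0)  # short demo; call run() with no arguments to scroll forever
```

Calling `run()` with no limit keeps printing until you press Ctrl+C, and changing `delay` controls how fast the text scrolls on camera.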

8 notes


Text

robotics: a few resources on getting started

a free open online robotics education resource! includes lots of lessons in video form, which have transcripts and code sections that you can copy + paste from. each lesson tells you the skill level assumed of you in order to watch it (from general knowledge -> undergrad engineering). has lots of topics to choose from.

an open-source collection of exercises and challenges to learn robotics in a practical way. there are exercises about drone programming, about computer vision, about mobile robots, about autonomous cars, etc. It is mainly based on gazebo simulator and ROS. the students program their solutions in python.

each exercise is composed of (a) gazebo configuration files, (b) a web template to host student’s code and (c) theory contents.

with each free e-learning module you complete, you earn a certificate!

stanford university has this thing called stanford engineering everywhere which offers a few free courses you can take, including an introduction to robotics course!

some lists on github you can check out for more resources.

43 notes


Text

How to get into Coding!

Coding is very important now and will be in the future. Technology relies on coding, and in the future you will need to know how to code to get a high-paying job. Many people consider jobs in Computer Science-related fields, especially in AI. What if you are interested in it in general or as a hobby? What if you don't know what you want to do yet for college?

1. Pick a language you want to learn: Personally, I started out with HTML and CSS. If you want to do web design, I recommend HTML and CSS as good languages to start with. Otherwise, start with JavaScript or Python.

2. Find Resources: Basically you want to look at videos on YouTube, and take classes that have coding like AP CSP, AP CS A (harder class), Digital Information Technology, etc. You can also attend classes outside in the summer like CodeNinjas and use websites like code.org, freeCodeCamp, and Codecademy. Also, ask your friends for help too! You can find communities on Reddit and Discord as well.

3. Start Practicing: Practice slowly by doing small projects like making games for websites and apps. You can work with friends if you are still a beginner or need help. There's also open-source coding you can do!

4. Continue coding: If you don't continue, you will lose your skills. Be sure to always look up news on coding and different coding languages.

5. Certifications: If you are advanced in coding or want to learn more about technology, you can do certifications. This can cost a lot of money depending on what certification you are doing. Some school districts pay for your certification test. But if you take the test and pass, you can put it on your resume, and job recruiters/interviewers will be impressed! This can help with college applications and show initiative if you want a computer science degree. This shows you are a "master" of the language.

#tech#coding#learning#education#hobby#fun#jobs#high school#college#university#youtube#reddit#certification#javascript#java#python#html css#css#html#ap classes#ap csp#information technology#technology#computer science#programming#software engineering#web design#web development#discord chat#discord server

10 notes


Text

How to Transition from Biotechnology to Bioinformatics: A Step-by-Step Guide

Biotechnology and bioinformatics are closely linked fields, but shifting from a wet lab environment to a computational approach requires strategic planning. Whether you are a student or a professional looking to make the transition, this guide will provide a step-by-step roadmap to help you navigate the shift from biotechnology to bioinformatics.

Why Transition from Biotechnology to Bioinformatics?

Bioinformatics is revolutionizing life sciences by integrating biological data with computational tools to uncover insights in genomics, proteomics, and drug discovery. The field offers diverse career opportunities in research, pharmaceuticals, healthcare, and AI-driven biological data analysis.

If you are skilled in laboratory techniques but wish to expand your expertise into data-driven biological research, bioinformatics is a rewarding career choice.

Step-by-Step Guide to Transition from Biotechnology to Bioinformatics

Step 1: Understand the Basics of Bioinformatics

Before making the switch, it’s crucial to gain a foundational understanding of bioinformatics. Here are key areas to explore:

Biological Databases – Learn about major databases like GenBank, UniProt, and Ensembl.

Genomics and Proteomics – Understand how computational methods analyze genes and proteins.

Sequence Analysis – Familiarize yourself with tools like BLAST, Clustal Omega, and FASTA.

🔹 Recommended Resources:

Online courses on Coursera, edX, or Khan Academy

Books like Bioinformatics for Dummies or Understanding Bioinformatics

Websites like NCBI, EMBL-EBI, and Expasy

Step 2: Develop Computational and Programming Skills

Bioinformatics heavily relies on coding and data analysis. You should start learning:

Python – Widely used in bioinformatics for data manipulation and analysis.

R – Great for statistical computing and visualization in genomics.

Linux/Unix – Basic command-line skills are essential for working with large datasets.

SQL – Useful for querying biological databases.

🔹 Recommended Online Courses:

Python for Bioinformatics (Udemy, DataCamp)

R for Genomics (HarvardX)

Linux Command Line Basics (Codecademy)

Step 3: Learn Bioinformatics Tools and Software

To become proficient in bioinformatics, you should practice using industry-standard tools:

Bioconductor – R-based tool for genomic data analysis.

Biopython – A powerful Python library for handling biological data.

GROMACS – Molecular dynamics simulation tool.

Rosetta – Protein modeling software.

🔹 How to Learn?

Join open-source projects on GitHub

Take part in hackathons or bioinformatics challenges on Kaggle

Explore free platforms like Galaxy Project for hands-on experience

Step 4: Work on Bioinformatics Projects

Practical experience is key. Start working on small projects such as:

✅ Analyzing gene sequences from NCBI databases

✅ Predicting protein structures using AlphaFold

✅ Visualizing genomic variations using R and Python

You can find datasets on:

NCBI GEO

1000 Genomes Project

TCGA (The Cancer Genome Atlas)

Create a GitHub portfolio to showcase your bioinformatics projects, as employers value practical work over theoretical knowledge.
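As a taste of the first project idea in Step 4, here is a minimal sketch of basic sequence analysis using only the Python standard library. The FASTA text is made up for illustration (real records would come from an NCBI download), and the helper names `read_fasta`, `gc_content`, and `reverse_complement` are hypothetical. Libraries such as Biopython offer more robust equivalents.

```python
from io import StringIO

# Made-up FASTA text for illustration only.
EXAMPLE_FASTA = """>seq1 hypothetical example
ATGCGCTA
GGCTA
>seq2 hypothetical example
TTTTAAAA
"""

# Translation table mapping each DNA base to its complement.
COMPLEMENT = str.maketrans("ACGT", "TGCA")


def read_fasta(handle):
    """Parse FASTA text into a dict of {record id: sequence}."""
    records = {}
    name = None
    for line in handle:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            name = line[1:].split()[0]  # id is the first word after '>'
            records[name] = ""
        elif name is not None:
            records[name] += line.upper()
    return records


def gc_content(seq):
    """Fraction of bases that are G or C (0.0 for an empty sequence)."""
    if not seq:
        return 0.0
    return (seq.count("G") + seq.count("C")) / len(seq)


def reverse_complement(seq):
    """Complement every base, then reverse the strand."""
    return seq.translate(COMPLEMENT)[::-1]


records = read_fasta(StringIO(EXAMPLE_FASTA))
for name, seq in records.items():
    print(name, len(seq), f"GC={gc_content(seq):.2f}", reverse_complement(seq))
```

A small script like this, pointed at a real dataset and committed with a short README, is already a reasonable first entry for the GitHub portfolio mentioned above.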

Step 5: Gain Hands-on Experience with Internships

Many organizations and research institutes offer bioinformatics internships. Check opportunities at:

NCBI, EMBL-EBI, NIH (government research institutes)

Biotech and pharma companies (Roche, Pfizer, Illumina)

Academic research labs (Look for university-funded projects)

💡 Pro Tip: Join online bioinformatics communities like Biostars, Reddit r/bioinformatics, and SEQanswers to network and find opportunities.

Step 6: Earn a Certification or Higher Education

If you want to strengthen your credentials, consider:

🎓 Bioinformatics Certifications:

Coursera – Genomic Data Science (Johns Hopkins University)

edX – Bioinformatics MicroMasters (UMGC)

EMBO – Bioinformatics training courses

🎓 Master’s in Bioinformatics (optional but beneficial)

Top universities include Harvard, Stanford, ETH Zurich, University of Toronto

Step 7: Apply for Bioinformatics Jobs

Once you have gained enough skills and experience, start applying for bioinformatics roles such as:

Bioinformatics Analyst

Computational Biologist

Genomics Data Scientist

Machine Learning Scientist (Biotech)

💡 Where to Find Jobs?

LinkedIn, Indeed, Glassdoor

Biotech job boards (BioSpace, Science Careers)

Company career pages (Illumina, Thermo Fisher)

Final Thoughts

Transitioning from biotechnology to bioinformatics requires effort, but with the right skills and dedication, it is entirely achievable. Start with fundamental knowledge, build computational skills, and work on projects to gain practical experience.

Are you ready to make the switch? 🚀 Start today by exploring free online courses and practicing with real-world datasets!

#bioinformatics#biopractify#biotechcareers#biotechnology#biotech#aiinbiotech#machinelearning#bioinformaticstools#datascience#genomics#Biotechnology

4 notes


Text

(Ramble without much point)

Meditating today on how Perfectly Ordinary dev tasks get recursively byzantine at the drop of a hat.

Like, ok, we're writing Haskell and there's a fresh ghc and a fresh LTS snapshot of compatible packages; would be nice to upgrade. How hard could that be.

Ah, right, we're building with Bazel. Need to reconfigure it to point at the right ghc, update our custom snapshot to include deps not in current LTS, regenerate the autogenerated Bazel build file for all the 3rd-party deps we use, and check that the project still builds. Wait, no; regeneration instructions are bullshit, we have not migrated to the new autogen system yet & need to use the old one.

The project...does not build; a dependency fails with incomprehensible problems in some files generated by Cabal, the lower-level build system. Aha, we had this problem before; Cabal does something bazel-incompatible there, fix will land in the next ghc/Cabal release, & for now the workaround is to use a forked Cabal for all the packages with the problem. The autogen tool we use has a list of affected packages that need this treatment; let's inject the dep there.

That doesn't help, why? Mmm, aha, this dep has several hardcoded setup-time deps, and the autogen tool uses the default map-merging operator, which....discards the custom Cabal dep we thought we inserted. Ok, let's force it to union instead.
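The merge pitfall is not specific to Haskell. Here is a rough Python analogue, not the actual autogen tool: the package names and the `merge_union` helper are hypothetical. It shows how a plain map merge keeps only one side's value on a key collision, and how a union-style merge keeps both.

```python
# Hypothetical setup-time dependency maps: package name -> list of setup deps.
hardcoded = {"some-package": ["cabal-doctest"]}
injected = {"some-package": ["Cabal-patched"], "other-package": ["Cabal-patched"]}

# Plain merge: on a key collision one side wins wholesale, so the
# injected dependency for "some-package" is silently discarded.
plain = {**injected, **hardcoded}


def merge_union(left, right):
    """Merge two dependency maps, unioning the lists on shared keys."""
    merged = {name: list(deps) for name, deps in left.items()}
    for name, deps in right.items():
        existing = merged.setdefault(name, [])
        for dep in deps:
            if dep not in existing:
                existing.append(dep)
    return merged


unioned = merge_union(hardcoded, injected)
print(plain["some-package"])    # only the hardcoded dep survives
print(unioned["some-package"])  # both deps are kept
```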

That still doesn't help, why. Is it perhaps the other setup-time dependency that was already there. ........why, yes, of course; the setup problem in general is that a package's Setup.hs uses functions from fresh, unpatched Cabal to generate some incoherent-wrt-bazel code; the setup problem here is that Setup.hs of this package uses a function from cabal-doctest to do something fancy, and cabal-doctest is basically a wrapper on Cabal's function. And for some reason, we build cabal-doctest with unpatched Cabal, & so obviously it fails later.

(How do we know that cabal-doctest has a wrong Cabal wired in, and that is the extent of the problem? What a good question! It turns out if we start inlining cabal-doctest's functions into the Setup.hs of the faulty package, they complain about type mismatches of type X defined in Cabal-3.6 and the same type X defined in Cabal 3.8. Also, if we inline the entirety of cabal-doctest, the problem merrily goes away. Also, cabal-doctest build artifact binaries contain mentions of Cabal-3.8.)

Ok, so how do we make cabal-doctest depend on/use the correct patched version of Cabal? Specify Cabal version in Bazel's build config file? No. There are.....no further obvious avenues to affect the build of cabal-doctest. .......how tho.

Ok, fine, time to learn what bazel build action does exactly. It generates faulty but coherent build config and artifacts all by itself; it figures out somewhere that it's supposed to wire in Cabal-3.8. Let's find out where and why and how to make it not.

First, we'll need to overcome bazel's tendency to uninformatively Do Absolutely Nothing when it's asked to execute a cached action. The normal way to force bazel to rebuild something so we can look at debug output is.... (...) .....not a thing, apparently? (Why would you make a build system you can't command to force a rebuild. What the fuck, bazel devs.) Oh, ok, thank you, internet article about debugging bazel builds; if the recommended way is to harmlessly alter the source of cabal-doctest to force cache misses, we'll do just that, good thing we have it downloaded and unpacked anyway.

Now, unpeeling the bazel action: bazel can be made to output the exact command it runs; we can then run it manually, without appeals to cache; good. Moreover, we can look at the executable of the command, maybe find some verbosity levers. (...) Not as such, but thankfully it's just a python script, so we can figure what it does by reading; what's more, we can tinker with it manually, and print() will give us all the verbosity we can ask for.

So what does that script, cabal_wrapper, do? (...) ...ignoring irrelevant parts: sets up environment flags and...calls...another...python script...cabal_wrapper.py. You know what, I don't care why it works like this, it's probably useful for concern-separation reasons, the internal cabal_wrapper.py is just as amenable to forced verbosity by way of print(), let's just move on to what it does.

Oh hey, a directory that contains some faulty build artifacts is mentioned by exact path put into a variable. Let's see where it's used; I bet we'll run into some specific line that produces or consumes faulty outputs & thereby will have a nice localized point from which to backtrack. Use in a function that further used somewhere, use in a variable that etc, argument to run runghc configure, argument to run runghc register --gen-pkg-config, aha, that's exactly the name of one of the faulty artifacts, that's interesting. Experiment: is it true that the faulty artifact does not exist before that line and exists after? Yes! Awesome; let's dig into what this line does. (It's almost certainly an invocation of runghc wrapper, but exact arguments are important.)

(There was supposed to be an ending here, but now it's been months and i forgot how it ended. Sorry.)

3 notes


Text

The Growing Importance of Data Science in the Digital Age

Data science has emerged as a transformative field, fueling industries across the globe with actionable insights. In today’s data-driven world, organizations are leveraging data to make informed decisions, predict trends, and uncover hidden patterns.

2 notes


Text

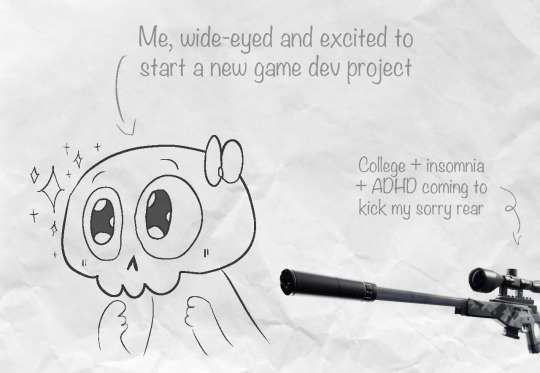

Capstone Log #3 - A Retrospective

For my third post, since the break is over, I've decided to touch on the work I did before the break, so I can have a fresh start. This is a written reflection instead of a gameplay and code overview, as I'll be going over the three Godot projects I've made so far, and talking about my next steps in becoming confident without tutorials and being able to sustain my own code without hitting any major roadblocks.

The first game I made with Godot was a simple platformer, following a tutorial by former Unity developer Brackeys, who returned from his four-year hiatus from YouTube to start creating Godot tutorials, as many game developers have switched from Unity to Godot due to Unity's new pricing policies. Godot is free, open source, and can create both 2D and 3D games, which makes it a great alternative to Unity.

The tutorial I followed created a simple platformer, which is often one of the first games someone creates in a new engine, as it is a simple formula and teaches the basics of code, so it is easy for newcomers to get a grasp on the differences from any coding languages they've used previously. GDScript, Godot's coding language, has many similarities to other widespread coding languages like Python and JavaScript. A gameplay video and the tutorial I used will be attached. The game does not have a lot of substance, but it has collectable coins, moving enemies, moving platforms, music, a death system, and variables that change text, some of which are in common with the other games I've made.

Moving on from my first project, the two other games I've completed (as showcased in my previous posts) have only built upon the core concepts I've picked up from Brackeys' video and the video course I've been watching from GDQuest. Next, my goal is to follow "Learn to Code from Zero", a free open-source app, to learn more about GDScript and Godot without relying on being told every step.

youtube

3 notes


Text

So let's get into the nitty-gritty technical details behind my latest project, the National Blue Trail round-trip search application available here:

This project has been fun, and I learned a lot about plenty of technologies, including QGIS, PostGIS, pgRouting, GTFS files, OpenLayers, OpenTripPlanner and Vite.

So let's start!

In most of my previous GIS projects I used custom-made tools written in Ruby or JavaScript and never really tried any of the "proper" GIS tools, so this was a good opportunity for me to learn a bit of QGIS. I hoped I could do most of the work there, but soon realized it's not fully up to the job, so in the end I had to hand parts of the work over to other tools. For the most part I used QGIS to import data from various sources and export it to PostGIS, did the calculations in PostGIS, then re-imported the results and saved them as GeoJSON. For this workflow QGIS was pretty okay to use. I also managed to use it for some minor editing.

I really did hope I could avoid PostGIS and do all of the calculations inside QGIS, but its routing engine is both slow and simply not designed for repeated use. For example, after importing the map of Hungary and trying to find a single route between two points, it took around 10-15 minutes just to build the routing map, then a couple of seconds to calculate the actual route. There is no way to save the routing map (at least I didn't find any that did not involve coding in Python), so if you want to calculate routes again you have to wait through the 10-15 minutes of tree building once more. Since I had to calculate at least around 20,000 routes, I quickly realized this would simply never work out.

I did find the QNEAT3 plugin, which allows an N-M search of routes between two sets of points, but it was both too slow and very disk-space intensive. It also calculated many more routes than needed, as you couldn't add a filter. In the end it took 23 hours to calculate the routes AND it created a temporary file of more than 300 GB in the process. After finding out I had made a mistake in the input files, I quickly decided I wouldn't wait that long again and started looking at PostGIS + pgRouting instead.

Before we move over to them, two very important lessons I learned in QGIS:

There is no auto-save. If you forget to save and QGIS crashes for no reason 2 hours later, you have to redo your work.

Any layer that is in editing mode does not get saved when you press the save button. So even if you don't forget to save by pressing CTRL/CMD+S every 5 seconds, like every sane person who has ever used Adobe products does, you will still lose your work two hours later when QGIS finally crashes if you did not exit editing mode on all of the layers.

----

So let's move on to PostGIS.

It's been a while since I last used PostGIS - it was around 11 years ago for a web based object tracking project - but it was fairly easy to get it going. Importing data from QGIS (more specifically pushing data from QGIS to PostGIS) was pretty convenient, so I could fill up the tables with the relevant points and lines quite easily. The only hard part was getting pgRouting working, mostly because there aren't any good tutorials on how to import OpenStreetMap data into it. I did find a blog post that used a freeware (not open source) tool to do this, and another project that seems dead (last update was 2 years ago) but at least it was open source, and actually worked well. You can find the scripts I used on the GitHub page's README.

Using pgRouting was okay - the documentation is a bit hard to read as it's more of a specification, but I did find the relevant examples useful. It also supports both A* search (which is much quicker than plain Dijkstra on a 2D map) and searching between N*M points with a filter applied, so I hoped it would be quicker than QGIS, but I never expected how quick it would be - it took only 5 seconds to calculate the same results that took QGIS 23 hours and 300 GB of disk space! Next time I have a GIS project I'm fairly certain I will not shy away from using PostGIS for calculations.
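To illustrate why A* tends to beat plain Dijkstra on a 2D map, here is a minimal Python sketch. It is not pgRouting's implementation: the toy grid, the `shortest_path` and `grid_graph` names, and the straight-line heuristic are all assumptions made for the example. Both searches find the same route cost, but the heuristic lets A* expand far fewer nodes.

```python
import heapq
import math


def shortest_path(graph, coords, start, goal, use_heuristic=True):
    """Return (cost, expanded_nodes) for the cheapest start -> goal path.

    graph:  {node: [(neighbour, edge_cost), ...]}
    coords: {node: (x, y)}, used only by the A* heuristic
    With use_heuristic=False this degrades to plain Dijkstra.
    """
    def h(node):
        if not use_heuristic:
            return 0.0
        (x1, y1), (x2, y2) = coords[node], coords[goal]
        return math.hypot(x2 - x1, y2 - y1)  # straight-line distance to goal

    best = {start: 0.0}
    queue = [(h(start), start)]
    done = set()
    expanded = 0
    while queue:
        _, node = heapq.heappop(queue)
        if node in done:
            continue
        done.add(node)
        expanded += 1
        if node == goal:
            return best[node], expanded
        for neighbour, cost in graph[node]:
            new_cost = best[node] + cost
            if new_cost < best.get(neighbour, math.inf):
                best[neighbour] = new_cost
                heapq.heappush(queue, (new_cost + h(neighbour), neighbour))
    return math.inf, expanded


def grid_graph(size):
    """Build a size x size grid with unit-length edges (toy stand-in for a road network)."""
    graph, coords = {}, {}
    for x in range(size):
        for y in range(size):
            coords[(x, y)] = (x, y)
            graph[(x, y)] = [
                ((x + dx, y + dy), 1.0)
                for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))
                if 0 <= x + dx < size and 0 <= y + dy < size
            ]
    return graph, coords


graph, coords = grid_graph(30)
dijkstra_cost, dijkstra_expanded = shortest_path(
    graph, coords, (15, 15), (25, 15), use_heuristic=False)
astar_cost, astar_expanded = shortest_path(
    graph, coords, (15, 15), (25, 15), use_heuristic=True)
print(dijkstra_cost, dijkstra_expanded)
print(astar_cost, astar_expanded)
```

Dijkstra spreads out in every direction from the start, while A* is pulled towards the goal, which is where the speed-up on map-like data comes from.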

There were a couple of hard parts though, most notably:

ST_Collect will nicely merge multiple lines into one single large line, but the direction of that line looked a bit random, so I had to add some extra code to fix it later.

ST_Split was similarly quite okay to use (although it took me a while to realize I needed to use ST_Snap with proper settings for it to work), but yet again the ordering of the segments was slightly off. I was too lazy to fix it with code - I just updated the wrong values by hand.
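The post does not show the code used to fix the line direction, so the following is only a sketch of one possible approach, written in Python on plain coordinate tuples rather than in PostGIS. The `orient_line` helper and the idea of passing in an `expected_start` point are assumptions made for illustration.

```python
import math


def orient_line(points, expected_start):
    """Return the points ordered so the end nearest expected_start comes first.

    points:         sequence of (x, y) tuples describing one merged line
    expected_start: (x, y) of the place the line is supposed to begin at
    """
    points = list(points)
    if len(points) < 2:
        return points
    d_first = math.dist(points[0], expected_start)
    d_last = math.dist(points[-1], expected_start)
    # Flip the line only when its last vertex is the one closer to the start.
    return points if d_first <= d_last else points[::-1]


# Hypothetical merged line that came out reversed.
line = [(3.0, 0.0), (2.0, 0.0), (1.0, 0.0), (0.0, 0.0)]
print(orient_line(line, expected_start=(0.1, 0.0)))
```

Comparing only the two end points keeps the check cheap, but it assumes the expected start really is close to one end of the line.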

----

The next tool, one I had never used in the past, was OpenTripPlanner. I did have a public transport project a couple of years ago, but back then tools like this and the required public databases were very hard to come by, so I opted to use Google's APIs (with a hard limit to make sure it would never cost more than the free tier Google gives you each month). I have again been blown away by how good tooling has become since then. GTFS files are readily available from a lot of sources, although not all: MAV, the Hungarian Railways, keeps them behind a registration paywall, for example. And although English bus companies are required by law to publish this data, and do it nicely, Scottish ones don't always do it, and even if they do, finding the files is not always easy. Looks to be something I should push within my party of choice as my foray into politics.

There are a couple of caveats with OpenTripPlanner, the main one being that it requires a lot of RAM. Loading the Hungarian map and the timetables from both Volánbusz (the state operated coach company) and BKK (the public transport company of Budapest) required around 13 GB of RAM - and by default Docker was only given 8, so it crashed at first without me realizing why.

The interface of OpenTripPlanner is also a bit too simple, and it was fairly hard for me to stop it from giving me trips that only involve walking - I deliberately wanted it to search only between bus stops, involving actual bus travel, as I had already done the walking part using PostGIS. I did however check whether I could have used OpenTripPlanner for that part as well, and while it worked somewhat, it didn't really give optimal results for my use case, so I was relieved that the time I spent in QGIS and PostGIS was not in vain.

The API of OpenTripPlanner was pretty neat though: it mimics Google's route searching API, which I had used in the past, as much as possible, so parsing the results was quite easy.

----

Once I had all of the data ready, the final bit was converting it to something I could use in JavaScript. For this I used the trusted scripting language I have used on such occasions for almost 20 years now: Ruby. The only interesting part here was the use of Encoded Polylines (Google's standard for sending LineString information inside JSON files), but yet again I found enough tools to handle this pretty obscure format.
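For readers who have not met the format, here is a minimal Python sketch of decoding an Encoded Polyline. The post's conversion was done in Ruby with existing tools, so this decoder and its `decode_polyline` name are illustrative only; it follows Google's published algorithm, where each coordinate is stored as a delta from the previous one, scaled by 1e5 and packed into 5-bit chunks offset by 63.

```python
def decode_polyline(encoded, precision=5):
    """Decode a Google Encoded Polyline string into a list of (lat, lon) tuples."""
    factor = 10 ** precision
    points = []
    index = lat = lon = 0
    while index < len(encoded):
        deltas = []
        for _ in range(2):  # latitude delta first, then longitude delta
            result = shift = 0
            while True:
                chunk = ord(encoded[index]) - 63
                index += 1
                result |= (chunk & 0x1F) << shift
                shift += 5
                if chunk < 0x20:  # continuation bit not set: value is complete
                    break
            # The lowest bit carries the sign; the remaining bits are the magnitude.
            deltas.append(~(result >> 1) if result & 1 else result >> 1)
        lat += deltas[0]
        lon += deltas[1]
        points.append((lat / factor, lon / factor))
    return points


# Example string from Google's description of the format.
print(decode_polyline("_p~iF~ps|U_ulLnnqC_mqNvxq`@"))
# -> [(38.5, -120.2), (40.7, -120.95), (43.252, -126.453)]
```

Because only deltas are stored, a long route with closely spaced points stays short, which is why the format is attractive for shipping LineStrings inside JSON.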

----

The final part was the display. While I usually used Leaflet in the past, I really wanted to try OpenLayers. I have another project I have not yet finished where Leaflet was simply too slow for the data; I had a very quick look at OpenLayers back then and saw it could display that data with acceptable performance, so I believed this might be a good opportunity to learn it. It was pretty okay, although transparent layers seem to be pretty slow in it without WebGL rendering, and I could not get WebGL working as it is still only available as a preview with no documentation (and the interface has changed completely in the 2 months since I last looked at it). In any case OpenLayers was still a good choice - it has built-in support for Encoded Polylines, GPX export, feature selection by hovering, and a nice styling API. It also required me to use Vite for building the application, which was a nice addition to my pretty lacking knowledge of JavaScript frameworks.

----

All in all this was a fun project, and I definitely learned a lot I can use in the future. Seeing how good OpenTripPlanner is, not just for public transport but also for walking and cycling, gave me a couple of new ideas I could not envision in the past, because I could only have done them with Google's Routing API, which would have been prohibitively expensive. Now I just need to start lobbying for the Bus Services Act 2017 or something similar to be implemented in Scotland as well.

21 notes


Note

Isn’t the data you just posted the same as the data last week?

Thank you, eagle-eyed anon! Yes, yes it was but now it has been fixed, so the newest post for 02/05/2024 is accurate.

And while I was trying to figure out why the same data showed up twice, I found a bunch of other issues in my code, so keep reading if you want to hear about the 10 other things I fixed while I was fixing that issue (thanks ADHD!)

As it turns out, it was the exact same info as last week because there was an issue in my workflow that caused it to not run properly so the data in the spreadsheet that powers the dashboard was stale.

But that prompted me to ask myself "didn't I build in a notification system that's supposed to let me know when something fails?" and when I investigated I realized they had stopped working last June and I never noticed (it sends EITHER a success or failure email every week, so I really should've noticed).

When I investigated that, I realized June was when I split the backend data sources between two Google Sheets because they were getting so big - originally I had them in separate tabs of the same sheet. However the notifications were still set up for only the original sheet, so I fixed that.

Also while I was looking at the sheets, I realized there were still a bunch of "Uncategorized" fandoms in the data, even though I removed that category from the workflow after realizing that they don't have fic counts on that Category page. So instead of pulling fic count, I was accidentally pulling a bunch of years and passing them off as fic count. I fixed that a couple weeks ago, but I didn't clear old data so I did that now.

THEN when I finally remembered the original problem I had been solving, I started trying to figure out whether the data hadn't been recorded for 2/5 at all (I save it in a JSON first) or if the sheet just hadn't been properly updated. While doing some checks, I realized that most of the categories had data for 2/5 EXCEPT Video Games & Theater?? Which honestly I still haven't fully figured out why, but I was able to manually run the size scrape for those two pages again, so at least they have data now.

Finally, while I was re-running the scripts to try to figure out why it wasn't running for Video Games, I had it start printing out various pieces in the loops and noticed it was taking forever to get through a category and seemed to go slower and slower by letter. Turns out a small error in my code had it looping through all the fandoms for a category starting with a specific letter (A) but then instead of moving on and doing B next, it was doing (A, B), then (A,B,C) etc. This wasn't actually causing errors of any kind in the results, but it was slowing the whole thing down and unnecessarily writing over the size count 25+ times for the fandoms at the beginning of the alphabet, so I've now fixed that.
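The original scraper code is not shown, so this is only a minimal Python sketch of the kind of bug described above, with hypothetical function names and fandom data. A work list that is never reset between letters makes every pass re-process all earlier letters, so the run gets slower letter by letter even though the final results are unchanged.

```python
import string


def scrape_buggy(fandoms_by_letter):
    """Reproduce the slowdown: the list of fandoms to process keeps growing."""
    visits = []
    to_process = []
    for letter in string.ascii_uppercase:
        to_process += fandoms_by_letter.get(letter, [])  # bug: never reset per letter
        for fandom in to_process:
            visits.append(fandom)  # stands in for "fetch and write the size count"
    return visits


def scrape_fixed(fandoms_by_letter):
    """Process each letter's fandoms exactly once."""
    visits = []
    for letter in string.ascii_uppercase:
        for fandom in fandoms_by_letter.get(letter, []):
            visits.append(fandom)
    return visits


# Hypothetical sample data: one fandom per letter for A, B and C.
data = {"A": ["Arcane"], "B": ["Bleach"], "C": ["Castlevania"]}
print(len(scrape_buggy(data)), len(scrape_fixed(data)))
```

In the buggy version the fandom under A is visited once for every letter of the alphabet, which lines up with the size count being overwritten 25+ times for fandoms at the start of the alphabet.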

The best part is even though I just wrote all of this out, I'm still not quite sure where in there I fixed the issue that caused the stale data in the first place lol. But at some point I fixed some other things, re-ran the "write_to_sheets" script (which I of course tried first thing and it didn't do anything) and this time it worked and actually updated. So hooray! And clearly this whole thing is being held together by spit & glue (it was a project I set for myself when I was learning Python) so maybe I should go back and rewrite some things now that I'm actually paid to do coding for my job and have a bit more experience. If you're still reading, KUDOS TO YOU and hope you enjoy my little project. [[Maybe leave me a heart in the comments if you do, because I accidentally spent way too long on this tonight and it's super late and I still have actual work to do (YIKES).]]

19 notes


Note

Hi! If you don’t mind, I’d like to ask you a question about that visual novel that you made with Ark and Twig. I’m a new CS major, so I’d like to start making some projects outside of my classes, and I figured a simple game like that could be a fun way to start, but I have no idea where to begin looking for resources on how to code any of it, and I currently only know the basics of Python. So I was wondering if you’d be able to point me to some videos or tutorials (or even the skills that I would need to search up) that I could use to learn how to make something similar to what you made. Thank you so much for taking the time to read this!

I actually made that visual novel in a no-code engine called TyranoBuilder, but I’m moving over to a popular Python-based visual novel engine called RenPy for my next visual novel! RenPy has a very dedicated community with a lot of resources associated with it— I’ll list a few I’m familiar with below :>

The official documentation is, as always, a stalwart source of information… though it’s understandably very intimidating to read through so much, hehe.

The YouTube channel Visual Novel Design doesn’t talk much about coding, but does showcase useful plugins and ideas about how to create visual novels.

Another YouTube channel, Zeil Learnings, has more information on the coding side of things. I very much recommend this video of hers that helps you get through the basics of RenPy in under 10 minutes!

Swedish Game Dev is another useful resource with all sorts of coding tutorials for implementing specific features.

Also, a useful note: despite being described as a game engine, files for RenPy must be written in a separate code editor and then plugged into RenPy itself. This might be the norm, but I’m not certain— I’m more used to RPG Maker and Godot. Either way, I enjoy using Visual Studio Code as my code editor, as that’s what I use for college, and it has a very handy RenPy syntax extension available!

Wishing us both luck as we dive into the world of RenPy! I’m in a similar boat as you— barely starting my web + software dev degree— and have only the fundamentals of Python to my name. I hope this is helpful. If not, send in another ask and I’ll try to answer better!

16 notes


Text

Film #917: 'The Cool World', dir. Shirley Clarke, 1963.

For all intents and purposes, Shirley Clarke's landmark film about youth gangs in Harlem, The Cool World, is considered a lost film. It was produced by the famous documentary filmmaker Frederick Wiseman, and when Clarke died in 1997, the rights went to Wiseman. Despite Milestone Films embarking on an eight-year project to restore and make available as many of Clarke's films as possible, Wiseman has never allowed The Cool World to be included in this series or any other. For over twenty years, the only way to see this film has been to be fortunate enough to catch it at a film festival, arts show screening, or touring gallery event.

Or, hypothetically, you could find a copy ripped from a screening on German broadcast television, with the German subtitles hard-coded into the bottom of the screen, uploaded to a website you've never heard of before. This particular hypothetical uploader has made it their pastime, evidently, to track key black films from the 1960s and 1970s, and make them available to whoever wants to see them. So it could be possible to watch the film, after all. And if you did, you'd likely find that it is an amazing piece of filmmaking. And you'd likely be a little mad at Frederick Wiseman.

In 1960s Harlem, Duke (Hampton Clanton) is looking to rise through the ranks of his local gang, the Royal Pythons. Convinced that if he gets his hands on a gun, he will be a man to be feared and respected, he makes the acquaintance of a pimp and racketeer named Priest (Carl Lee). Duke's relationship with his mother, who clearly cares for him but finds him difficult to communicate with, declines, and when the Pythons take over an apartment to set up their base, Duke practically lives there full-time. The gang's current president, Blood, brings his girlfriend Luanne to stay in the apartment as the gang's resident prostitute. Luanne becomes sweet on Duke quickly, and she privately supports Duke's aspirations. Duke continues to try to save enough money to buy a gun from Priest by selling cigarettes and occasionally stealing. In his travels around Harlem, he is often witness to discussions about the future prospects of black men in society, and the tactics of the civil rights movement.

Blood is revealed to be a heroin addict, which is against the rules of the Royal Pythons, and he is ousted, with Duke taking his position. Blood challenges Duke to a fight, but the scene is interrupted by the news that Littleman, another member of the Pythons, has been killed by the Wolves, a rival gang. Duke decides to lead a rumble against the Wolves which will take place several nights afterwards. In the meantime, he takes Luanne on a date to Coney Island so she can see the ocean, but she disappears during the date and Duke returns to the gang's headquarters broken-hearted. The night of the rumble, Priest arrives, asking if he can lay low there for a few days, but does not explain the type of trouble he's in. Duke leads the rest of the gang in the fight, during which several members of both gangs are killed, but Duke manages to successfully kill the leader of the Wolves. Coming back to the headquarters, he discovers that Priest has been shot in the head, with the scene posed to make it look like a suicide. Frightened, Duke flees to his old home, but is arrested and taken away. As the credits roll, the soundtrack is filled with voices approvingly remarking on what a "cold killer" Duke is.

It should be noted that, while the version of the film I've seen does feel pretty complete, it does run for 15 minutes shorter than what several sources claim the film to be, and of course I have no other version to compare it to.

There is a valid criticism to be made of The Cool World, which is that it's a black story told by a white director and adapted from a novel by a white author. This criticism is not just a modern phenomenon: several reviews at the film's release, most notably the Los Angeles Times, observed that this type of story was better told by black writers and directors. That said, Clarke's empathy and engagement with the film's subjects is not in doubt. Films about youth crime were usually relegated to the 'moral panic' genre, as a problem that needed to be solved, and as a scaremongering tactic for prurient middle-class audiences. While Duke is arrested at the film's end, the film doesn't pass judgment on the validity of Duke's beliefs or ends. He thinks that a gun will get him power, and he is correct. Clarke presents a remarkably even-handed version of life in a New York youth gang, where violence is abrupt and meaningless, most crime is petty and interspersed with days of just roaming aimlessly, and arrest is likely but doesn't undo the status you've acquired.

Supporting this view throughout the film is the comparative sterility of the world outside the gang. While the life of the Royal Pythons isn't always interesting, it is a space of care and connection between its members, and the film's narrative pulls these details to the foreground. Duke brings Littleman comic books; Luanne wants to go to San Francisco to see the ocean and is enthralled when Duke tells her that they have an ocean in New York City too. Even in one of the most fraught scenes, where Duke ousts Blood as the gang's leader, Clarke fills the film with moments of silence where you can sense the uncertainty of the characters as they calculate the effects of their actions. In comparison, the school trip from which Duke flees at the start of the film is full of libraries and stock exchanges, a world that has no meaning or relevance to the lives of the black teenagers. They will never stay at the Plaza Hotel, the film says, so what's the point in showing it to them?

Likewise, Duke's mother has a life overstuffed with worries and concerns, and while she is anxious for Duke's future, his seeming apathy breeds resentment in her. He is making her life harder. A film with a strong moralising bent would suggest that Duke's interest in the Pythons is an indictment of his mother's failure, and an indictment of the absence of his father. In other words, it would normally be presented as a failure of the individuals to place themselves correctly. There is none of this in The Cool World. Duke simply moves from one set of social structures that have no meaningful space for him to another set of social structures that give him what he wants. Whether he should want that, or whether there are better paths for him to take, is ultimately irrelevant. Of course, it's not ideal that the world Duke lives in offers him no better alternatives, but Clarke's film is not interested in blaming Duke for that, and builds from the underlying premise that Duke is a sensitive, creative and capable young man who is doing the best he can with the lot he has been given.

One thing that is consistently remarked upon about The Cool World is its visual style. Clarke's director of photography, Baird Bryant, imbues every available shot with unexpected kinetic energy - even the school trip at the beginning of the film is shot from the window of a moving bus, with disconnected jump cuts, and the opening credits roll right-to-left instead of bottom-to-top, against an almost Expressionist backdrop of sun through the trees. The rumble between the Pythons and the Wolves at the end of the film is similarly surprising, shot almost exclusively in extreme light and dark, with almost no mid-tones, and featuring fight choreography that feels gritty and balletic at the same time. Throughout, a jazzy score led by Dizzy Gillespie hits the same tones as the plot, lilting when it needs to be, before abruptly breaking into discordant and shrieking solos.

In regards to the film's style, though, I think the most powerful scene is the one in which Duke and Luanne visit Coney Island together. It falls at an important moment in the relationship between the two characters - until this point, Luanne has seemed sheltered, despite her sexuality, unaware that the Atlantic Ocean even existed, and it represents how significant her relationship with Duke has been in opening her eyes to a wider world. It's easy to read her abandoning Duke as a sign that she has made an escape into this wider world that she has discovered, while Duke is unable to make the same movement.

Meanwhile, this scene represents for Duke the most traditional moment of romance for him - he has the status and he gets the girl. While the audience may not trust Luanne's faithfulness, Duke certainly does. The editing of the scene, on both a visual and an aural level, performs double duty. For Duke, the giddiness of the jump cuts and the carnivalesque score and soundtrack fits perfectly with an enthusiastic jump into young love - an escape that he has never been permitted to experience before. However, Clarke uses rhythmic and overlapping editing, linking multiple carnival games together almost seamlessly, making it appear as though Duke is caught endlessly repeating the same actions. Somewhere in this chaos, Luanne disappears, and Duke is left alone, on an eerily deserted beachfront. Her disappearance is so complete that she leaves no trace behind on the narrative. It's as though she was never really there at all.

The Coney Island scene, more than any other in this brilliant and engaging film, makes me annoyed that Wiseman has never allowed it to be released to a wider public. I would love to be able to link to a video of the scene so that it can be appreciated as an excellent example of film editing. I would show this scene to first-year students if I could. I genuinely cannot understand why Clarke's most distinguished and successful film is being kept away from a wider appreciation. Milestone Films had a petition for a while, asking Wiseman to make the film available, but it was never successful, and I doubt a polite email would stand a chance of convincing a 94-year-old master documentarian to change his mind.

Maybe I will see The Cool World on the big screen one day, and find out once and for all if I've been writing about the film's full version. I'm not usually in the habit of encouraging people to go out of their way to pirate - I am a fan of supporting media producers where I can - but this might be your only chance to see an excellent film, if it appeals to you at all. The internet is an ephemeral place, where some things live forever but other things disappear before you realise what they were. The Cool World is one of the latter. I waited twenty years to see it, and it was worth the wait.

5 notes
