#software testing techniques

How to Balance Fixing Performance Issues and Adding New Features in Web Applications?

In today’s digital landscape, web applications are essential for business operations, marketing, and consumer involvement. As organizations expand and consumer expectations rise, development teams are frequently confronted with the difficult task of balancing two key priorities: addressing performance issues and introducing new features.

While boosting performance improves the user experience and increases efficiency, new features are required to stay competitive and meet market demands. Prioritizing one at the expense of the other can backfire: performance problems lead to a poor user experience, while failing to innovate results in a competitive disadvantage.

This blog delves into how to balance improving performance and introducing new features to web apps, allowing firms to satisfy technical and market demands efficiently.

Why Balancing Performance and New Features Is Crucial

A web application’s success depends on both its performance and its features. However, focusing entirely on one can create imbalances that hurt both user satisfaction and business growth.

Performance: Performance is a key factor that directly influences user retention and satisfaction. Users can become frustrated and leave if the application loads slowly, crashes, or misbehaves. Ensuring that your web application runs smoothly is essential, since 53% of mobile users will abandon a site that takes more than three seconds to load.

New Features: On the other hand, constantly adding new features keeps users interested and promotes your company as innovative. New features generate growth by attracting new consumers and retaining existing ones who want to experience the most recent changes.

The dilemma is deciding when to prioritize bug fixes over new feature development. A poor balance can harm both performance and innovation, resulting in a subpar user experience and stagnation.

Common Performance Issues in Web Applications

Before balancing performance and features, it’s important to understand the common performance issues that web applications face:

Slow Load Times: Slow pages lead to higher bounce rates and lost revenue.

Server Downtime: Frequent server outages impact accessibility and trust.

Poor Mobile Optimization: A significant portion of web traffic comes from mobile devices, and apps that aren’t optimized for mobile fail to reach their potential.

Security Vulnerabilities: Data breaches and security flaws harm credibility and user trust.

Bugs and Glitches: Software bugs lead to poor user experiences, especially if they cause the app to crash or become unresponsive.

Strategic Approaches to Fixing Performance Issues

When performance issues arise, they must be addressed promptly so the web application keeps functioning properly. Here are techniques for improving performance without delaying new feature development:

1. Prioritize Critical Issues: Tackle the performance issues with the most significant impact first, such as slow loading times or security vulnerabilities. Use analytics to identify bottlenecks and determine which areas require urgent attention.

2. Use a Continuous Improvement Process: Continuously monitor and optimize the application’s performance. With tools like Google PageSpeed Insights, you can track performance metrics and make incremental improvements without major overhauls.

3. Optimize Database Queries: Slow database queries are one of the leading causes of web app performance issues. Optimize queries and make sure the database is properly indexed for faster data access and retrieval.

4. Reduce HTTP Requests: The more requests a page makes to the server, the slower it loads. Minimize requests by combining CSS and JavaScript files and by caching assets; shrinking file sizes (for example through minification) then makes each remaining request faster.

5. Leverage Caching and CDNs: Use caching strategies and Content Delivery Networks (CDNs) to deliver content quickly to users by storing files in multiple locations globally.
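To make the database-indexing advice above concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, its columns, and the `idx_users_email` index are hypothetical; the point is only to show the query planner switching from a full table scan to an index lookup once the column in the `WHERE` clause is indexed. Other databases expose similar information through their own `EXPLAIN` commands, with different output formats.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail strings joined into one line."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # Each row is (id, parent, notused, detail); only the detail text matters here.
    return "; ".join(row[3] for row in rows)


def index_demo() -> tuple[str, str]:
    """Compare the plan for the same lookup before and after adding an index."""
    conn = sqlite3.connect(":memory:")
    # Hypothetical table used only for this illustration.
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
    conn.executemany(
        "INSERT INTO users (email, name) VALUES (?, ?)",
        [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
    )
    lookup = "SELECT name FROM users WHERE email = ?"
    before = query_plan(conn, lookup, ("user42@example.com",))

    # Index the column used in the WHERE clause so lookups no longer scan every row.
    conn.execute("CREATE INDEX idx_users_email ON users (email)")
    after = query_plan(conn, lookup, ("user42@example.com",))
    conn.close()
    return before, after


if __name__ == "__main__":
    before, after = index_demo()
    print("before:", before)
    print("after: ", after)
```

The exact wording of the plan (for example `SCAN users` versus `SCAN TABLE users`) varies between SQLite versions, which is why the sketch simply returns the raw detail strings.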
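The caching advice in the last item can likewise be sketched at the application level. This is a minimal, hypothetical time-to-live (TTL) cache decorator in plain Python, not a production cache: it keeps results in process memory, never evicts old entries, and only supports positional, hashable arguments. The injectable `clock` parameter and the `render_page` function are assumptions added so the expiry can be demonstrated without actually waiting.

```python
import time
from functools import wraps
from typing import Any, Callable


def ttl_cache(seconds: float, clock: Callable[[], float] = time.monotonic):
    """Cache results per positional-argument tuple for `seconds` seconds."""

    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        store: dict[tuple, tuple[float, Any]] = {}  # args -> (time stored, value)

        @wraps(func)
        def wrapper(*args: Any) -> Any:
            now = clock()
            entry = store.get(args)
            if entry is not None and now - entry[0] < seconds:
                return entry[1]  # still fresh: skip the expensive call
            value = func(*args)  # missing or expired: recompute and store
            store[args] = (now, value)
            return value

        return wrapper

    return decorator


def cache_demo() -> list[int]:
    """Return the IDs that reached the underlying function (real, uncached renders)."""
    fake_now = [0.0]
    renders: list[int] = []

    @ttl_cache(seconds=60, clock=lambda: fake_now[0])
    def render_page(page_id: int) -> str:  # hypothetical expensive page render
        renders.append(page_id)
        return f"<h1>Page {page_id}</h1>"

    render_page(1)      # miss: rendered
    render_page(1)      # hit: served from the cache
    fake_now[0] = 61.0  # pretend 61 seconds have passed
    render_page(1)      # expired: rendered again
    return renders


if __name__ == "__main__":
    print(cache_demo())
```

CDNs apply the same idea at the network edge; how long they keep a copy is typically controlled by HTTP headers such as `Cache-Control: public, max-age=60`.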

Why Adding New Features is Essential for Growth

In the rapidly changing digital environment, businesses must continually innovate to stay relevant. Adding new features is key to maintaining a competitive edge and enhancing user engagement. Here’s why:

1. User Expectations: Today’s consumers expect personalized experiences and constant innovation. Failure to add new features can lead to customer churn, as users may feel your web application no longer meets their needs.

2. Market Differentiation: Introducing new features allows your application to stand out in the marketplace. Unique functionalities can set your app apart from competitors, attracting new users and increasing customer loyalty.

3. Increased Revenue Opportunities: New features can lead to additional revenue streams. For example, adding premium features or new integrations can boost the app’s value and lead to increased sales or subscription rates.

4. Feedback-Driven Innovation: New features are often driven by user feedback. By continuously developing and adding features, you create a feedback loop that improves the overall user experience and fosters customer satisfaction.

Read More: https://8techlabs.com/how-to-balance-fixing-performance-issues-and-adding-new-features-in-web-applications-to-meet-market-demands-and-enhance-user-experience/

#8 Tech Labs#custom software development#custom software development agency#custom software development company#software development company#mobile app development software#bespoke software development company#bespoke software development#nearshore development#software development services#software development#Website performance testing tools#Speed optimization for web apps#Mobile-first web app optimization#Code minification and lazy loading#Database indexing and query optimization#Agile vs Waterfall in feature development#Feature flagging in web development#CI/CD pipelines for web applications#API performance optimization#Serverless computing for better performance#Core Web Vitals optimization techniques#First Contentful Paint (FCP) improvement#Reducing Time to First Byte (TTFB)#Impact of site speed on conversion rates#How to reduce JavaScript execution time#Web application performance optimization#Fixing performance issues in web apps#Web app performance vs new features#Website speed optimization for better UX

hadnt used anna's in a while (bless the ppl that know how to use git to share books lol) and holy fuck ppl werent exaggerating abt how bad it got wrt download speeds & monetary predation. i will not pay to download from a server thats not artificially bloated lol hope your servers explode

#15min download time for a PDFFFFF and it failed it failed twice UGH#i just want to read about software test patterns and techniques sniff sniff#libgen and zlib only have a fuckass chn file -_- what do i look like? a nerd? no thanksies#its not my internet bc i was getting other stuff from zlib and it was just bam in the puter downloaded in a second with full metadata btw

Effective Techniques for Exploratory Testing in Mobile App Development

#Effective Techniques for Exploratory Testing in Mobile App Development#Exploratory Testing in Mobile App Development#Software Testing Services In India#Software Testing Services India#Software Testing Services#Software Testing Company In India#Software Testing Company India#Software Testing Company#Software Testing

#advanced typing techniques#advanced keyboard typing#advanced typing test#advanced typing course#advanced typing tutorial#advanced english typing skills#advanced typing speed#advanced typing training#advanced typing software#advanced touch typing#advanced typing lessons

Build API Integrations software testing technique across any Automation-Cloud Revolute

A summary of the Chinese AI situation, for the uninitiated.

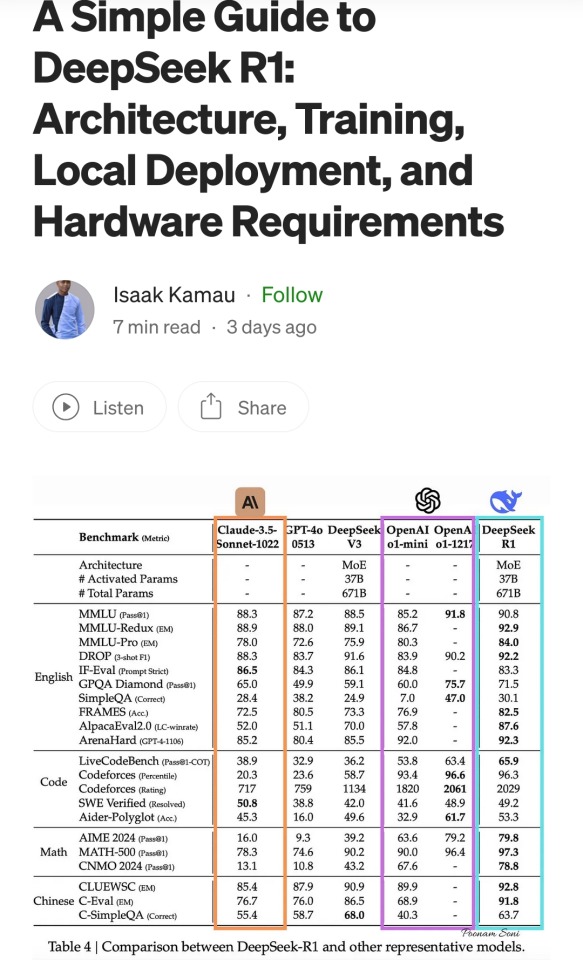

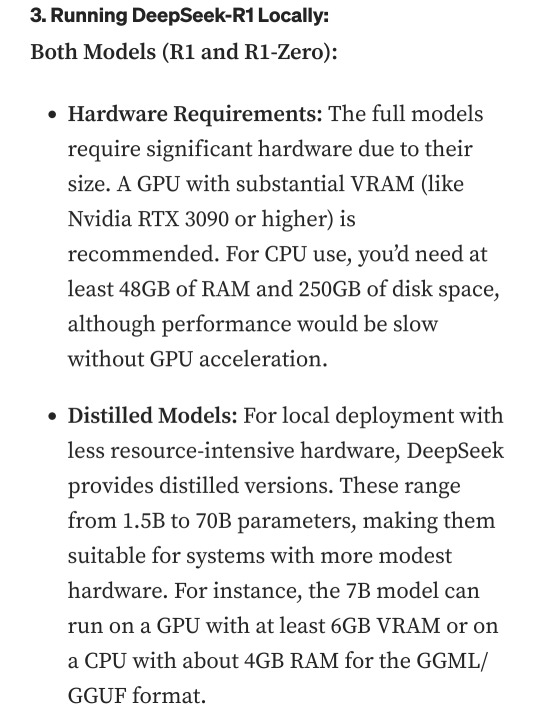

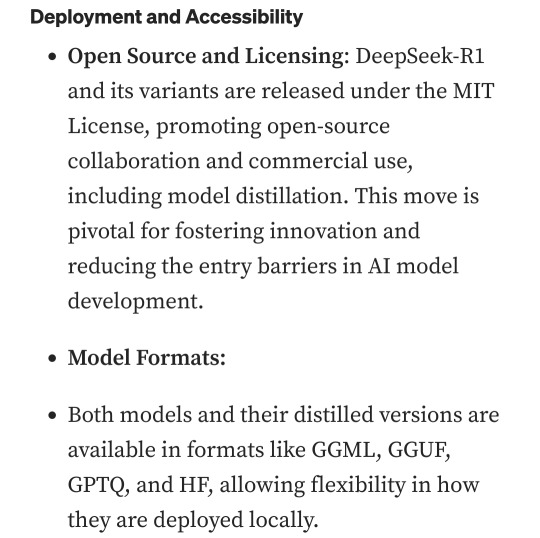

These are scores on different tests that are designed to measure how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partnered with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that was released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them on the servers of the companies that made them for a subscription, or you can download them and install them locally on your own computer. However, the hardware requirements so far are so high that only a few people currently have machines at home capable of running them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think how a Ford T needed a long chimney to get rid of a ton of black smoke, which was unused petrol. Over the next hundred years combustion engines have become much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, they're all around the same accuracy. These tests are in constant evolution as well: as soon as they start becoming obsolete, new ones are released to provide a more demanding benchmark. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford T.

However, computing power requirements kept scaling up, so you were either tied to the subscription or forced to pay for a latest-gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing heavily in much more powerful GPUs and NPUs. For now all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a small chunk of text, usually a piece of a word a few characters long (it's more complicated than that, but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford T to a Subaru in terms of pollution and water use.

The issue here is not only input cost, though; all that data needs to be available live, in the RAM; this is why you need powerful, expensive chips in order to-

Holy shit.

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.

What this tells all those people who just sold their high-end gaming rig to afford a machine that can run the latest ChatGPT locally is that whoever bought their old rig can run something basically just as powerful on it.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

Margaret Mitchell is a pioneer when it comes to testing generative AI tools for bias. She founded the Ethical AI team at Google, alongside another well-known researcher, Timnit Gebru, before they were later both fired from the company. She now works as the AI ethics leader at Hugging Face, a software startup focused on open source tools.

We spoke about a new dataset she helped create to test how AI models continue perpetuating stereotypes. Unlike most bias-mitigation efforts that prioritize English, this dataset is malleable, with human translations for testing a wider breadth of languages and cultures. You probably already know that AI often presents a flattened view of humans, but you might not realize how these issues can be made even more extreme when the outputs are no longer generated in English.

My conversation with Mitchell has been edited for length and clarity.

Reece Rogers: What is this new dataset, called SHADES, designed to do, and how did it come together?

Margaret Mitchell: It's designed to help with evaluation and analysis, coming about from the BigScience project. About four years ago, there was this massive international effort, where researchers all over the world came together to train the first open large language model. By fully open, I mean the training data is open as well as the model.

Hugging Face played a key role in keeping it moving forward and providing things like compute. Institutions all over the world were paying people as well while they worked on parts of this project. The model we put out was called Bloom, and it really was the dawn of this idea of “open science.”

We had a bunch of working groups to focus on different aspects, and one of the working groups that I was tangentially involved with was looking at evaluation. It turned out that doing societal impact evaluations well was massively complicated—more complicated than training the model.

We had this idea of an evaluation dataset called SHADES, inspired by Gender Shades, where you could have things that are exactly comparable, except for the change in some characteristic. Gender Shades was looking at gender and skin tone. Our work looks at different kinds of bias types and swapping amongst some identity characteristics, like different genders or nations.

There are a lot of resources in English and evaluations for English. While there are some multilingual resources relevant to bias, they're often based on machine translation as opposed to actual translations from people who speak the language, who are embedded in the culture, and who can understand the kind of biases at play. They can put together the most relevant translations for what we're trying to do.

So much of the work around mitigating AI bias focuses just on English and stereotypes found in a few select cultures. Why is broadening this perspective to more languages and cultures important?

These models are being deployed across languages and cultures, so mitigating English biases—even translated English biases—doesn't correspond to mitigating the biases that are relevant in the different cultures where these are being deployed. This means that you risk deploying a model that propagates really problematic stereotypes within a given region, because they are trained on these different languages.

So, there's the training data. Then, there's the fine-tuning and evaluation. The training data might contain all kinds of really problematic stereotypes across countries, but then the bias mitigation techniques may only look at English. In particular, it tends to be North American– and US-centric. While you might reduce bias in some way for English users in the US, you've not done it throughout the world. You still risk amplifying really harmful views globally because you've only focused on English.

Is generative AI introducing new stereotypes to different languages and cultures?

That is part of what we're finding. The idea of blondes being stupid is not something that's found all over the world, but is found in a lot of the languages that we looked at.

When you have all of the data in one shared latent space, then semantic concepts can get transferred across languages. You're risking propagating harmful stereotypes that other people hadn't even thought of.

Is it true that AI models will sometimes justify stereotypes in their outputs by just making shit up?

That was something that came out in our discussions of what we were finding. We were all sort of weirded out that some of the stereotypes were being justified by references to scientific literature that didn't exist.

Outputs saying that, for example, science has shown genetic differences where it hasn't been shown, which is a basis of scientific racism. The AI outputs were putting forward these pseudo-scientific views, and then also using language that suggested academic writing or having academic support. It spoke about these things as if they're facts, when they're not factual at all.

What were some of the biggest challenges when working on the SHADES dataset?

One of the biggest challenges was around the linguistic differences. A really common approach for bias evaluation is to use English and make a sentence with a slot like: “People from [nation] are untrustworthy.” Then, you flip in different nations.
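The slot-swapping approach Mitchell describes can be sketched in a few lines of Python. This is an illustration only, not the SHADES format or code: the nation list is arbitrary, and `score` is a hypothetical stand-in for whatever a real evaluation would use, such as a language model's log-probability for each sentence. Because every sentence is identical except for the swapped term, a large spread between scores suggests the model treats the groups differently.

```python
from typing import Callable


def fill_slot(template: str, slot: str, fillers: list[str]) -> list[str]:
    """Produce contrastive sentences that differ only in the value of one slot."""
    marker = f"[{slot}]"
    return [template.replace(marker, filler) for filler in fillers]


def contrastive_scores(
    template: str,
    slot: str,
    fillers: list[str],
    score: Callable[[str], float],
) -> dict[str, float]:
    """Score each filled-in sentence with a caller-supplied (hypothetical) scorer."""
    sentences = fill_slot(template, slot, fillers)
    return {filler: score(sentence) for filler, sentence in zip(fillers, sentences)}


def spread(scores: dict[str, float]) -> float:
    """Gap between the highest- and lowest-scored variants."""
    return max(scores.values()) - min(scores.values())


if __name__ == "__main__":
    template = "People from [nation] are untrustworthy."
    nations = ["France", "Brazil", "Japan"]
    for sentence in fill_slot(template, "nation", nations):
        print(sentence)
    # Placeholder scorer (sentence length); a real evaluation would query a model here.
    scores = contrastive_scores(template, "nation", nations, score=lambda s: float(len(s)))
    print(spread(scores))
```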

When you start putting in gender, now the rest of the sentence starts having to agree grammatically on gender. That's really been a limitation for bias evaluation, because if you want to do these contrastive swaps in other languages—which is super useful for measuring bias—you have to have the rest of the sentence changed. You need different translations where the whole sentence changes.

How do you make templates where the whole sentence needs to agree in gender, in number, in plurality, and all these different kinds of things with the target of the stereotype? We had to come up with our own linguistic annotation in order to account for this. Luckily, there were a few people involved who were linguistic nerds.

So, now you can do these contrastive statements across all of these languages, even the ones with the really hard agreement rules, because we've developed this novel, template-based approach for bias evaluation that’s syntactically sensitive.
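The agreement problem can be illustrated with a small, hypothetical Spanish example. Swapping "hombres" (men) for "mujeres" (women) in a sentence meaning "X are dishonest" also changes the article and the adjective ending, and swapping to a singular noun changes the verb, so a plain string slot is not enough. The sketch below attaches gender and number features to each target and looks up every agreeing word in a small inflection table. This toy encoding (the `Target` class and the `ARTICLE`, `COPULA`, and `DISHONEST` tables) is an assumption for illustration, not the annotation scheme the SHADES team developed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    """An identity term plus the grammatical features other words must agree with."""
    text: str
    gender: str  # "m" or "f"
    number: str  # "sg" or "pl"


# Hypothetical inflection tables for the words that must agree with the target.
ARTICLE = {("m", "sg"): "El", ("f", "sg"): "La", ("m", "pl"): "Los", ("f", "pl"): "Las"}
COPULA = {"sg": "es", "pl": "son"}
DISHONEST = {
    ("m", "sg"): "deshonesto",
    ("f", "sg"): "deshonesta",
    ("m", "pl"): "deshonestos",
    ("f", "pl"): "deshonestas",
}


def realize(target: Target) -> str:
    """Build '<article> <target> <is/are> <dishonest>.' with full agreement."""
    features = (target.gender, target.number)
    return f"{ARTICLE[features]} {target.text} {COPULA[target.number]} {DISHONEST[features]}."


if __name__ == "__main__":
    targets = [
        Target("hombres", "m", "pl"),
        Target("mujeres", "f", "pl"),
        Target("vecino", "m", "sg"),
        Target("vecina", "f", "sg"),
    ]
    for t in targets:
        print(realize(t))
```

Running it yields contrastive sentences such as "Los hombres son deshonestos." and "Las mujeres son deshonestas.", which differ in the identity term and in every word that has to agree with it.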

Generative AI has been known to amplify stereotypes for a while now. With so much progress being made in other aspects of AI research, why are these kinds of extreme biases still prevalent? It’s an issue that seems under-addressed.

That's a pretty big question. There are a few different kinds of answers. One is cultural. I think within a lot of tech companies it's believed that it's not really that big of a problem. Or, if it is, it's a pretty simple fix. What will be prioritized, if anything is prioritized, are these simple approaches that can go wrong.

We'll get superficial fixes for very basic things. If you say girls like pink, it recognizes that as a stereotype, because it's just the kind of thing that if you're thinking of prototypical stereotypes pops out at you, right? These very basic cases will be handled. It's a very simple, superficial approach where these more deeply embedded beliefs don't get addressed.

It ends up being both a cultural issue and a technical issue of finding how to get at deeply ingrained biases that aren't expressing themselves in very clear language.

100 Inventions by Women

LIFE-SAVING/MEDICAL/GLOBAL IMPACT:

Artificial Heart Valve – Nina Starr Braunwald

Stem Cell Isolation from Bone Marrow – Ann Tsukamoto

Chemotherapy Drug Research – Gertrude Elion

Antifungal Antibiotic (Nystatin) – Rachel Fuller Brown & Elizabeth Lee Hazen

Apgar Score (Newborn Health Assessment) – Virginia Apgar

Vaccination Distribution Logistics – Sara Josephine Baker

Hand-Held Laser Device for Cataracts – Patricia Bath

Portable Life-Saving Heart Monitor – Dr. Helen Brooke Taussig

Medical Mask Design – Ellen Ochoa

Dental Filling Techniques – Lucy Hobbs Taylor

Radiation Treatment Research – Cécile Vogt

Ultrasound Advancements – Denise Grey

Biodegradable Sanitary Pads – Arunachalam Muruganantham (with women-led testing teams)

First Computer Algorithm – Ada Lovelace

COBOL Programming Language – Grace Hopper

Computer Compiler – Grace Hopper

FORMAC (Formula Manipulation Compiler for FORTRAN) – Jean E. Sammet

Caller ID and Call Waiting – Dr. Shirley Ann Jackson

Voice over Internet Protocol (VoIP) – Marian Croak

Wireless Transmission Technology – Hedy Lamarr

Polaroid Camera Chemistry / Digital Projection Optics – Edith Clarke

Jet Propulsion Systems Work – Yvonne Brill

Infrared Astronomy Tech – Nancy Roman

Astronomical Data Archiving – Henrietta Swan Leavitt

Nuclear Physics Research Tools – Chien-Shiung Wu

Protein Folding Software – Eleanor Dodson

Global Network for Earthquake Detection – Inge Lehmann

Earthquake Resistant Structures – Edith Clarke

Water Distillation Device – Maria Telkes

Portable Water Filtration Devices – Theresa Dankovich

Solar Thermal Storage System – Maria Telkes

Solar-Powered House – Maria Telkes

Solar Cooker Advancements – Barbara Kerr

Microbiome Research – Maria Gloria Dominguez-Bello

Marine Navigation System – Ida Hyde

Anti-Malarial Drug Work – Tu Youyou

Digital Payment Security Algorithms – Radia Perlman

Wireless Transmitters for Aviation – Harriet Quimby

Contributions to Touchscreen Tech – Dr. Annette V. Simmonds

Robotic Surgery Systems – Paula Hammond

Battery-Powered Baby Stroller – Ann Moore

Smart Textile Sensor Fabric – Leah Buechley

Voice-Activated Devices – Kimberly Bryant

Artificial Limb Enhancements – Aimee Mullins

Crash Test Dummies for Women – Astrid Linder

Shark Repellent – Julia Child

3D Illusionary Display Tech – Valerie Thomas

Biodegradable Plastics – Julia F. Carney

Ink Chemistry for Inkjet Printers – Margaret Wu

Computerised Telephone Switching – Erna Hoover

Word Processor Innovations – Evelyn Berezin

Braille Printer Software – Carol Shaw

⸻

HOUSEHOLD & SAFETY INNOVATIONS:

Home Security System – Marie Van Brittan Brown

Fire Escape – Anna Connelly

Life Raft – Maria Beasley

Windshield Wiper – Mary Anderson

Car Heater – Margaret Wilcox

Toilet Paper Holder – Mary Beatrice Davidson Kenner

Foot-Pedal Trash Can – Lillian Moller Gilbreth

Retractable Dog Leash – Mary A. Delaney

Disposable Diaper Cover – Marion Donovan

Disposable Glove Design – Kathryn Croft

Ice Cream Maker – Nancy Johnson

Electric Refrigerator Improvements – Florence Parpart

Fold-Out Bed – Sarah E. Goode

Flat-Bottomed Paper Bag Machine – Margaret Knight

Square-Bottomed Paper Bag – Margaret Knight

Street-Cleaning Machine – Florence Parpart

Improved Ironing Board – Sarah Boone

Underwater Telescope – Sarah Mather

Clothes Wringer – Ellene Alice Bailey

Coffee Filter – Melitta Bentz

Scotchgard (Fabric Protector) – Patsy Sherman

Liquid Paper (Correction Fluid) – Bette Nesmith Graham

Leak-Proof Diapers – Valerie Hunter Gordon

FOOD/CONVENIENCE/CULTURAL IMPACT:

Chocolate Chip Cookie – Ruth Graves Wakefield

Monopoly (The Landlord’s Game) – Elizabeth Magie

Snugli Baby Carrier – Ann Moore

Barrel-Style Curling Iron – Theora Stephens

Natural Hair Product Line – Madame C.J. Walker

Virtual Reality Journalism – Nonny de la Peña

Digital Camera Sensor Contributions – Edith Clarke

Textile Color Processing – Beulah Henry

Ice Cream Freezer – Nancy Johnson

Spray-On Skin (ReCell) – Fiona Wood

Langmuir-Blodgett Film – Katharine Burr Blodgett

Marine Signal Flares – Martha Coston

Windshield Washer System – Charlotte Bridgwood

Smart Clothing / Sensor Integration – Leah Buechley

Fibre Optic Pressure Sensors – Mary Lou Jepsen

#women#inventions#technology#world#history#invented#creations#healthcare#home#education#science#feminism#feminist

hey hii i discovered your art today and im obsessed with it and the way you render !! can i just ask what brush(es) do you use for your rendering? ^^

Heyaa! :>

I have several approaches to painting stuff, but if you're most curious about my later painterly pieces, then it's mainly flat textured brushes from the Greg Rutkowski free brush pack!

Lately I've been dabbling in overpainting my stuff with Escape Motions' Rebelle software to get some traditional paint feel. It has its own library of unique brushes too, and they are GORGEOUS.

It's pricey and demanding on your graphics card, but it has a 30-day trial that you can use as a testing ground if you'd like. It also goes nearly 40% off in seasonal sales, so that's the best time to grab it.

And I frequently use brushes and smudges from the Yuming Li brush pack, which is also free to use and has some natural edges I really love. The sketch brush from that pack is my absolute fav go-to and what I mostly use for sketching as well; it has an oil pastel feel.

Hope it sated your curiosity and you find it helpful❤ :> Wish you best in your creative pursuit!

#brushwork goes vroooommmmmm#my brushes#if anyone else interested here they are#ask box#thatzombieasks#thatzombieart#ask the artist

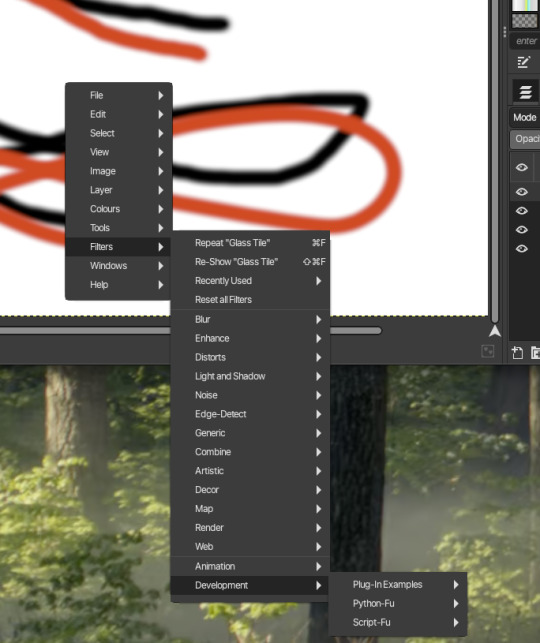

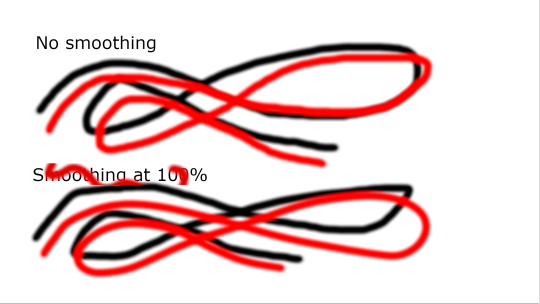

GIMP

Ironically the most replies I ever had on Imgur.

OK look here's the skinny on why I don't like GIMP: A long discussion with screenshots

To start with, there are two classes of users:

A: People who never use art apps, don't make art, and maybe tweak a couple of photos for colour balance every couple of years: They love GIMP. They hardly use it or never use it but it's FREE and OPEN SOURCE and they heard Adobe was BAD SOMEHOW because they CHARGE MONEY. They love GIMP. They will die on that hill. GIMP is the best. One day they may even use it.

B: People who are enthusiasts and professionals who actually want to make something and have graphics tablets, strong opinions on CMYK and whether Kyle Webster is over-rated or not. And they don't use GIMP because they tried it and it doesn't do the job.

To go for a comic analogy: it's like bystanders telling EMTs their technique is wrong because they saw a SpongeBob episode where he used a Band-Aid.

Anyway: who the hell am I? I feel like I should establish some bona fides, so you know I'm not just some random shouty dude. I started out with MS Paint in 1989, then Deluxe Paint Enhanced for PC. I started doing desktop publishing with CorelDraw and some non-WYSIWYG layout engines. Spent a miserable few years with Quark, then moved on to Photoshop... 3, I think. Jettisoned that after it got too bloated (it has a 3D print system inside it!!). Along the way I've tried GIMP, Krita, Clip Studio (now, and back when it was Manga Studio and really didn't want you to use colour), Procreate, Adobe Fresco, ArtRage, Kai's Power Tools, 3D Studio (back before it was Max), Maya, Blender, Inkscape, Serif Studios, Art Studio Pro... I was a graphic designer for several companies, was self-employed, and worked for Anthrocon doing colour on their badges for around 15 years, as well as creating designs for their printed work and occasionally keycards, t-shirts, and badge designs when they didn't have any art from a GoH. To put it simply, I'm not an Adobe fanboy screeching about people using non-Adobe software. I've seen people who wanted to move to digital give up after buying hundreds of dollars of hardware because they were told GIMP was TEH GOODEST. Anyway...

But every time someone wants to get into art to paint the picture they have inside them, or do some fanart or mess with graphics design, group A crawl out from their bog of incompetence and demand that people use GIMP. Not just suggest it, but actively shout down group B, the people who have experience.

Because Group A would rather push their dogma that paid software is always the wrong solution, than accept that GIMP's frankly shit.

It's just hit version 3.0 after only 30 years. Go team.

Points to note: If you need support for GIMP, the answer is always "Runs OK for me LOL" or "I don't use it but all your problems are because you used Photoshop once." or the good old "Switch to Linux."

In the interest of fairness I'm going to install this new amazing version of GIMP and see if it's any better.

Infamously, the software is so awful that almost all images on Google that you find are pictures of people re-creating the GIMP Logo in GIMP to prove that it is on a par with MS Paint. And it's not a good logo.

First boot:

OK so it opens up a giant panel that tells me I installed GIMP. Presumably a warning. You can't access the actual app unless you've first navigated the splash screen. The app assumes you're still on an 800x600 monitor. Nice. I suspect the splash screen was supposed to load over the top of GIMP because... uh... ANYWAY.

Select Create, select Make a new image, select a size... hit OK and voilà.

Just as a note: Other apps do this in a single panel, or just open the app and let you hit File > new > Select size and bit depth & colour space... which GIMP also skipped.

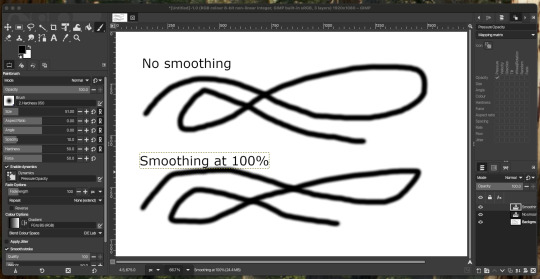

OK so I'm using a mouse, so let's do a test line, then find and turn on the stroke smoothing. Wow!

... This is worthless! Looks like the smoothing amount doesn't do anything; you have to tweak the other option on a scale of 0 to 1000.

For those of you playing at home: That makes no sense, because everything else is 0-100.

Also brave choice to make sure that when you pull up the settings they replace the brush palette... on the opposite side of the screen, and give you no way to switch back.

The settings are incidentally locked, making them... double worthless.

Fun trivia! If you misclick at the bottom of the brush palette, on the left, it just deletes your tool preset! Genius. Sticking a button for a process you'd almost never do next to the Undo/Redo icons. Chef's kiss. Perfect UI design.

I hear they're making a car. The 'explode fuel tank' button is next to the switch that turns the headlights on. You may ask why they have an 'explode fuel tank' button. Yes. You may ask.

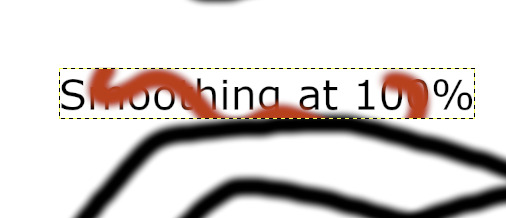

Now having created a text label, I somehow am not allowed to draw outside the text label. This is not normal.

There is no Unselect option at all in the selection menu. Escape doesn't do it. Hitting option/alt pops up a message saying there's no selection to remove:

... despite there being a selection to remove.

GIMP's infamously mazelike right click menu that copies the taskbar menu is still around:

Why have one set of menus when you can have two of every menu?

Not pictured: The giant tooltip that pops up and covers the thing you're trying to click on. Seriously, I couldn't get a screenshot of it.

Back to drawing...

Apparently the solution is to create a new layer. One text object is still text, the other randomly rasterised itself and locked the selections to its own bounding box. That is sub-optimal, or "entirely stupid, who wrote this garbage?" if you're being formal.

These guys. That's who.

Still got a maze of options, including "Y not use PYTHON to make a pic‽"

At this point I notice that the vibrant red I pick in the colour picker is showing as desaturated orange in the screenshots. Which is weird because it's supposed to be 8-bit sRGB, aka the basics. But somehow this has been fucked up - GIMP: "Colour accuracy? But Why?"

Let's try an export. A mere four or five clicks later...

... where the hell is it? Let me try that again...

OK. Uh. Great. A third visibly different shade of red to the one I drew with.

At this point, I'm done. I could learn to use the tools easily. I could dig through the manual and look for whatever ass-backward UI decision lets you resolve its inherent flaws, like rasterising text at random...

... but if I tell it to use 100% red and it insists on using some buggy kludged system that outputs it to a different shade, then why bother? Nothing you do will come out looking the way you intended.

Meanwhile Clip Studio's over here doing 100% of everything you need for amateur, enthusiast or even pro-level art (I created one of Anthrocon's T-shirts in Clip Studio), and it'll run on a tablet, letting you draw on $400 of hardware, or on a desktop with a graphics tablet.

And if by some goddamn chance you got all the way through this, send me a boop in the notes!


Text

become an academic weapon 📚🔫✨

hi all !!

with my GCSEs this year, and only a few weeks before going back to school, I decided to really lock in yesterday 🫣

so I thought I'd take all the info I've come across while scrolling through studytok and put it into a little post for everyone looking to improve in their studies (& for my benefit as well 🙈)

motivation

this is probably the biggest factor when it comes to locking into your studies; motivation can quite literally make or break your academic achievements (😦)

so, it's very important you motivate yourself and, moreover, stay motivated 😭

i've made it sound daunting but motivating yourself is lowkey easier than you think, here are a few ways to do it:

picturing yourself in 10 years, where all your studying and hard work has paid off - you can't be that person without doing the work that they did 😬

you can also do the opposite of the above - imagine how disappointed you'll be if you don't work as hard as you could and fail

"revenge studying" - the most toxic yet probably the most widely effective technique - working hard so you can beat the people who are better than you

make studying aesthetic - create pinterest boards, look at quotes and tiktoks, make success your greatest desire

make it an addiction - if you're bored? study. had a bad day? nothing like setting yourself up for the best future. having a great day? go make it better by making yourself smarter.

get a motivational study app - i LOVE 'Study Bunny' I've been using it for two days now and it genuinely motivates me to be more productive to keep my bunny happy 🙃

resources

obviously, you need help wherever you can get it. despite all the controversies surrounding studying and the use of the internet, there are some amazing online resources you can use that will actively help you 📚

Quizlet/Anki - both of these flashcard platforms are incredibly useful - Quizlet is a fun platform and you can search for flashcards made by other people - Anki, in my opinion, is better than Quizlet for memorising, and you can import flashcards from Quizlet.

Mindnote - online mind-map software with a user-friendly interface that makes mind maps quick and easy to create - great for visual learners

YouTube - the teachers on YouTube are incredibly helpful and can explain any topics you're confused about very quickly and very thoroughly

Spark Notes - great for English literature, with in-depth analysis of your texts and modern translations

Physics & Maths Tutor - free past papers and topic questions for core subjects and a few others, great for active recall

Study Bunny/Flora - helps keep track of your progress and keeps you motivated, I recommend Study Bunny because I can see how much work I've done of each subject and tick off things on my checklist

these are just a few out of many other resources so go do some of your own research, especially if there are websites that help with a specific subject

techniques

different study techniques work best for different people; no technique is one-size-fits-all. some people are visual learners, others perform best by memorising, etc.

active recall - the only one-size-fits-all method - is a cognitive function that you carry out to remember things in tests, so practising this is a must -> the best way to do this is by completing topic questions and past papers using minimal amounts of notes. basically just testing yourself before the actual test

Spaced-out revision - one of the best ways to make sure things stick in your mind. revise a topic/subject and revisit it every few days, e.g. 1, 3, 5, 9, 15, 30; by the 30-day mark it should be stuck in your mind, because your brain treats it as something you need to know in the long run and stores it in your long-term memory

Flashcards - great to memorise content for the test, especially subjects that are tested with orals

Scribble method - scribbling on a piece of paper while revising the content in any form (reading, listening, etc.) helps your brain store the information you're consuming more effectively

Feynman method - basically just explaining the topic you're revising to someone, this helps you develop your understanding and catches out any areas you're unsure about to revise later

making mindmaps - this is great for visual learners, especially if you use different colours for each section of the map so that you can associate each concept with each colour and recall them easily
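The spaced-out revision schedule above is easy to turn into actual calendar dates. A minimal Python sketch, assuming the numbers 1, 3, 5, 9, 15, 30 are days counted from the day you first study a topic (the post doesn't say whether they are offsets from day one or gaps between reviews):

```python
from datetime import date, timedelta

# Day offsets from the example schedule, assumed to count from the first study session.
REVIEW_DAYS = (1, 3, 5, 9, 15, 30)


def review_dates(first_study, offsets=REVIEW_DAYS):
    """Return the dates on which to revisit a topic first studied on first_study."""
    return [first_study + timedelta(days=d) for d in offsets]


if __name__ == "__main__":
    for when in review_dates(date(2024, 9, 1)):
        print(when.isoformat())
```

If you'd rather treat the numbers as gaps between reviews, you'd add each gap to the previous review date instead of to the first study date.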

again, those are just a few that come to mind. do your own research and find out what works best for you 😇

while studying

knowing how to study effectively is also a crucial aspect of success (obviously) 🤭

here are a few tips:

don't listen to music with lyrics, instead you can listen to lofi tracks, cafe/library asmr, brown noise, jazz music (my favourite)

set yourself a study slot, like 2 hours every day at a specific time & set a focus filter on your phone for the duration of your study time

make an aesthetic/cute study space so you can enjoy your time in that space and it doesn't feel like a chore

get a whiteboard to make learning more interactive & fun

light a specific candle whenever you're studying so your brain knows to associate the scent with working

have regular breaks, e.g. every half hour for 5-10 mins

reward yourself afterwards, so you associate studying with a good experience

consistency is key, the more you study the easier & more fun it becomes 🙃 the more you study the more you are likely to succeed and fulfil your dreams ✨

remember though, academics is not everyone's thing:

"you cannot judge a fish by its ability to climb a tree"

everyone is good at something, and it doesn't make anyone lesser or greater 🫶

if you try your best, that is all that matters 🫠

- li 🌘

#academic weapon#academic validation#academicexcellence#student#gcse student#student life#studying#study motivation#study blog#study aesthetic#studyspo#studyblr#science#english#maths#gcse studyblr#gcse2025#tumblr fyp#it girl


Text


Tribute AMV for Dr. Underfang and Mrs. Natalie Nice/Nautilus.

From TyrannoMax and the Warriors of the Core, everyone's favorite Buzby-Spurlock animated series.

After all, who doesn't love a good bad guy, especially when they come in pairs?

Process/Tutorial Under the Fold.

This is, of course, a part of my TyrannoMax unreality project, with most of these video clips coming from vidu, taking advantage of their multi-entity consistency feature (more on that later). This is going to be part of a larger villain showcase video, but this section is going to be its own YouTube short, so it's a video on its own.

The animation here is intentionally less smooth than the original, as I'm going for a 1980s animated series look, and even in the well-animated episodes you were typically getting 12 FPS (animating 'on twos'), with 8 (on threes) being way more common. As I get access to better animation software to rework these (currently just fuddling along with PS) I'm going to start using this to my advantage by selectively dropping blurry intermediate frames.

I went with 12 since most of these clips are, in the meta-lore, from the opening couple of episodes and the opening credits, where most of the money for a series went back in the day.

Underfang's transformation sequence was my testing for several of my techniques for making larger TyrannoMax videos. Among those was selectively dropping some of the warped frames as I mentioned above, though for a few shots I had to wind up re-painting sections.
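Animating "on twos" on the usual 24 fps timeline means each drawing is held for two frames (12 unique drawings per second); "on threes" holds each for three (8 per second). Below is a rough Python sketch of that retiming, not the author's actual Photoshop workflow: it walks the source frames in n-frame chunks and holds one frame per chunk. The optional sharpness scores are a hypothetical stand-in for "selectively dropping blurry intermediate frames"; you would supply them yourself, from a blur metric or simply by eye.

```python
def retime_on_ns(frames, n, sharpness=None):
    """Re-time a full-rate frame list so each kept drawing is held for n frames.

    frames:    source frames in order (file names, images, anything).
    n:         2 for "on twos", 3 for "on threes".
    sharpness: optional per-frame scores (higher = crisper, hypothetical);
               when given, the crispest frame of each chunk is kept
               instead of the first one.
    """
    out = []
    for start in range(0, len(frames), n):
        chunk = list(range(start, min(start + n, len(frames))))
        if sharpness is None:
            keep = chunk[0]
        else:
            keep = max(chunk, key=lambda i: sharpness[i])
        # Hold the kept drawing for the whole chunk so the clip length is unchanged.
        out.extend([frames[keep]] * len(chunk))
    return out


if __name__ == "__main__":
    print(retime_on_ns(["f0", "f1", "f2", "f3", "f4", "f5"], 2))
```

Keeping the output the same length as the input means the clip still lines up with the music; only the number of unique drawings per second drops.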

Multi-entity consistency can keep difficult dinosaur characters stable on their own, but it wasn't up to the task of keeping the time-temple accurate enough for my use, as you can see here with the all-T-rex-and-some-moving-statues version, versus the multi-species effort I had planned:

The answer was simple, chroma-key.

Most of the Underfang transformation shots were done this way. The foot-stomp was too good to leave just because he sprouted some extra toes, so that was worth repainting a few frames of in post.

Vidu kind of over-did the texturing on a few shots (and magenta was a poor choice of key-color) so I had to go in and manually purple-ize the background frame by frame for the spin-shot.
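At its core, chroma-keying means: wherever a foreground pixel is close enough to the key colour, show the background instead. A minimal pure-Python sketch of that idea, assuming frames are lists of rows of (r, g, b) tuples; the magenta key and the tolerance of 60 are illustrative assumptions, and real keyers in editing software add soft edges and spill suppression that this omits. It also hints at why heavy texturing on the key area hurts: shaded or textured pixels drift outside the tolerance and stay visible, which leaves them to be cleaned up by hand.

```python
def key_distance(pixel, key):
    """Euclidean distance between two (r, g, b) colours in 0-255 space."""
    return sum((a - b) ** 2 for a, b in zip(pixel, key)) ** 0.5


def chroma_key(foreground, background, key=(255, 0, 255), tolerance=60.0):
    """Composite two same-sized frames (lists of rows of (r, g, b) tuples).

    Foreground pixels within `tolerance` of the key colour are replaced by the
    matching background pixel; everything else is kept as-is.
    """
    return [
        [bg if key_distance(fg, key) <= tolerance else fg
         for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]


if __name__ == "__main__":
    fg = [[(255, 0, 255), (10, 200, 10)], [(240, 10, 240), (200, 60, 180)]]
    bg = [[(0, 0, 0)] * 2, [(0, 0, 0)] * 2]
    print(chroma_key(fg, bg))
```

In the sample, the near-magenta (240, 10, 240) is keyed out, while the textured (200, 60, 180) falls outside the tolerance and survives.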

This is on top of the normal cropping, scaling, color-correcting, etc that goes into any editing job of this type.

It's like I say: nearly all AI you see is edited, most of it curated, even the stuff that's awful and obvious (never forget: enragement is engagement)

Multi-Entity Consistency:

Vidu's big advantage is reference-to-video. For those who have been following the blog for a while, R2V is sort of like Midjourney's --cref character reference feature. A lot of video AIs have start-end frame functionality, but being able to give the robot a model sheet and effectively have it run with it is a darn nice feature for narrative.

Unlike the current version of Midjourney's --cref feature, however, you can reference multiple concepts with multiple images.

It is super-helpful when you need to get multiple characters to interact, because without it, they tend to blend into each other conceptually.

I also use it to add locations, mainly to keep them looking like proper background paintings rather than a 3D background or something that looks like a modded photo, as a lot of modern animation does.

The potential here for using this tech as a force multiplier for small animation projects really shines through, and I really hope I'm just one of several attempting to use it for that purpose.

Music:

The song is "The Boys Have a Second Lead Pipe", one of my Suno creations. I was thinking of using Dinowave (Let's Dance To) but I'm saving that for a music video of live-action dinosovians.

Prompting:

You can tell by the screenshot above that my prompts have gotten... robust. Vidu's prompting system seems to understand things better when given tighter reins (some AIs have the opposite effect), and takes information with time-codes semi-regularly, so my prompts are now more like:

low-angle shot, closeup, of a green tyrannosaurus-mad-scientist wearing a blue shirt and purple tie with white lab coat and a lavender octopus-woman with tentacles growing from her head, wearing a teal blouse, purple skirt, purple-gray pantyhose. they stand close to each other, arms crossed, laughing evilly. POV shot of them looming over the viewer menacingly. The background is a city, in the style of animation background images. 1986 vintage cel-shaded cartoon clip, a dinosaur-anthro wearing a lab coat, shirt and tie reaches into his coat with his right hand and pulls out a laser gun, he takes aim, points the laser gun at the camera and fires. The laser effect is short streaks of white energy with a purple glow. The whole clip has the look and feel of vintage 1986 action adventure cel-animated cartoons. The animation quality is high, with flawless motion and anatomy. animated by Tokyo Movie Shinsha, studio Ghibli, don bluth. BluRay remaster.

Others read more like a script, with time-code callouts for individual actions.

#Youtube#tyrannomax and the warriors of the core#unreality#tyrannomax#fauxstalgia#Dr. Underfang#Mrs. Nautilus#Mrs. Nice#80s cartoons#animation#ai assisted art#my OC#vidu#vidu ai#viduchallenge#MultiEntityConsistency#ai video#ai tutorial


Text

Building Robust API Test Suites with the Best Automation Frameworks

Introduction

Introduce the role of robust API test suites in maintaining the functionality, reliability, and performance of modern applications. Emphasize the importance of selecting the right automation framework to build these test suites effectively.

Selecting the Right Framework

Discuss the criteria for choosing an API automation framework, such as ease of use, compatibility with protocols (REST, SOAP, GraphQL), and integration with CI/CD pipelines. Mention popular frameworks like Postman, RestAssured, and Karate.

Structuring Test Suites for Maintainability

Explain how a well-structured API test suite can reduce maintenance overhead. Discuss best practices for organizing tests, such as grouping tests by functionality, and using reusable components (like request templates and utility functions).
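The post names Postman, RestAssured and Karate; the sketch below instead uses only Python's standard library (`unittest`, `urllib.request`, `http.server`) so it runs anywhere. It shows the two structural ideas from the paragraph: a reusable request helper shared by every test, and tests grouped by functionality under a common base class. The `/users/1` endpoint, the `FakeUserAPI` stand-in server and the `send_request`/`APITestCase` names are hypothetical, chosen for illustration.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class FakeUserAPI(BaseHTTPRequestHandler):
    """Hypothetical stand-in API so the example runs without a real backend."""

    def do_GET(self):
        if self.path == "/users/1":
            self._reply(200, {"id": 1, "name": "Ada"})
        else:
            self._reply(404, {"error": "not found"})

    def _reply(self, status, body):
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, *args):  # silence per-request logging
        pass


def send_request(base_url, method, path, body=None):
    """Reusable request template: send JSON, return (status code, decoded JSON body)."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(
        base_url + path,
        data=data,
        method=method,
        headers={"Accept": "application/json", "Content-Type": "application/json"},
    )
    try:
        with urllib.request.urlopen(req, timeout=5) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a body
        return err.code, json.loads(err.read())


class APITestCase(unittest.TestCase):
    """Shared fixture: start the stand-in server once per test class."""

    @classmethod
    def setUpClass(cls):
        # Port 0 asks the OS for any free port.
        cls.server = ThreadingHTTPServer(("127.0.0.1", 0), FakeUserAPI)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_address[1]}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()


class UserEndpointTests(APITestCase):
    """Grouped by functionality: every /users check lives in this class."""

    def test_get_existing_user(self):
        status, body = send_request(self.base_url, "GET", "/users/1")
        self.assertEqual(status, 200)
        self.assertEqual(body["name"], "Ada")

    def test_unknown_user_returns_404(self):
        status, body = send_request(self.base_url, "GET", "/users/999")
        self.assertEqual(status, 404)
        self.assertIn("error", body)
```

Saved as, say, `test_users.py`, it runs with `python -m unittest test_users`. Pointing `base_url` at a real service instead of the stand-in server is the intended swap; new endpoint groups become new `APITestCase` subclasses that reuse the same helper.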

Coverage and Depth of Testing

Highlight the need for comprehensive coverage, including functional testing, error handling, and edge cases. Point out how API automation frameworks should allow testing of different request methods (GET, POST, PUT, DELETE) and support data-driven testing.
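Data-driven testing means writing the test logic once and feeding it a table of inputs and expected results. A minimal Python `unittest` sketch covering GET, POST, PUT and DELETE plus error and edge cases; `fake_api` is a hypothetical in-memory stand-in for a real `/users` endpoint so the example runs offline, and in a real suite it would be replaced by an HTTP call.

```python
import unittest

# Hypothetical in-memory stand-in for a REST API; a real suite would send HTTP requests.
USERS = {}


def fake_api(method, path, body=None):
    """Return (status_code, response_body) for a tiny /users resource."""
    parts = path.strip("/").split("/")
    if parts[0] != "users":
        return 404, {"error": "unknown resource"}
    if len(parts) == 1:
        if method != "POST":
            return 405, {"error": "method not allowed"}
        if not body or "name" not in body:
            return 400, {"error": "name is required"}
        new_id = max(USERS, default=0) + 1
        USERS[new_id] = {"id": new_id, "name": body["name"]}
        return 201, USERS[new_id]
    try:
        user_id = int(parts[1])
    except ValueError:
        return 400, {"error": "id must be an integer"}
    if user_id not in USERS:
        return 404, {"error": "not found"}
    if method == "GET":
        return 200, USERS[user_id]
    if method == "PUT":
        USERS[user_id]["name"] = (body or {}).get("name", USERS[user_id]["name"])
        return 200, USERS[user_id]
    if method == "DELETE":
        del USERS[user_id]
        return 204, None
    return 405, {"error": "method not allowed"}


# One row per case: happy paths, error handling and edge cases side by side.
CASES = [
    # (description,          method,   path,         body,            expected)
    ("create user",          "POST",   "/users",     {"name": "Ada"}, 201),
    ("create without name",  "POST",   "/users",     {},              400),
    ("read user",            "GET",    "/users/1",   None,            200),
    ("update user",          "PUT",    "/users/1",   {"name": "Bo"},  200),
    ("non-numeric id",       "GET",    "/users/abc", None,            400),
    ("delete user",          "DELETE", "/users/1",   None,            204),
    ("read deleted user",    "GET",    "/users/1",   None,            404),
    ("unsupported method",   "PATCH",  "/users",     None,            405),
]


class UserCrudTests(unittest.TestCase):
    def setUp(self):
        USERS.clear()  # every test starts from a known, empty state

    def test_table(self):
        # Rows run in order because later rows depend on the user created first.
        for desc, method, path, body, expected in CASES:
            with self.subTest(desc):
                status, _ = fake_api(method, path, body)
                self.assertEqual(status, expected)
```

`subTest` reports each failing row separately instead of stopping at the first one. The order dependency between rows is a simplification; fully independent rows (each with its own setup) are easier to run in parallel.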

Parallel Execution for Scalability

Explore the importance of frameworks that support parallel execution, enabling faster test runs across multiple environments and API endpoints, which is critical for large-scale systems.
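Parallel execution only pays off when tests are independent, with no shared state between them. As a rough illustration using Python's `concurrent.futures`, the sketch below fans checks out across a grid of environments and endpoints. The environment names, endpoints and `check_endpoint` are hypothetical; `check_endpoint` just sleeps to mimic network latency, so nine 0.1 s checks on four worker threads finish in roughly a third of the sequential time.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

# Hypothetical environments and endpoints; a real suite would map these to base URLs.
ENVIRONMENTS = ["dev", "staging", "prod-canary"]
ENDPOINTS = ["/health", "/users", "/orders"]


def check_endpoint(env, endpoint):
    """Stand-in for one independent API test.

    A real version would call the endpoint in `env` and assert on the
    response; here it sleeps to mimic network latency and reports a pass.
    """
    time.sleep(0.1)
    return env, endpoint, True


def run_in_parallel(max_workers=4):
    """Run every (environment, endpoint) check concurrently and collect the results."""
    jobs = [(env, ep) for env in ENVIRONMENTS for ep in ENDPOINTS]
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(check_endpoint, env, ep) for env, ep in jobs]
        for future in as_completed(futures):  # results arrive in completion order
            env, ep, passed = future.result()
            results[(env, ep)] = passed
    return results


if __name__ == "__main__":
    start = time.perf_counter()
    results = run_in_parallel()
    elapsed = time.perf_counter() - start
    failed = [key for key, ok in results.items() if not ok]
    print(f"{len(results)} checks in {elapsed:.2f}s, {len(failed)} failed")
```

Threads suit this because API tests mostly wait on the network. Framework-level options exist too; pytest users, for instance, often reach for the pytest-xdist plugin and its `-n` option.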

Conclusion

Summarize the key steps to building robust API test suites and how using the best automation frameworks enhances the overall development process and quality assurance.

#api automation#api automation framework#api automation testing#api automation testing tools#api automation tools#api testing framework#api testing techniques#api testing tools#api tools#codeless test automation#codeless testing platform#test automation software#automated qa testing#no code test automation tools


Text

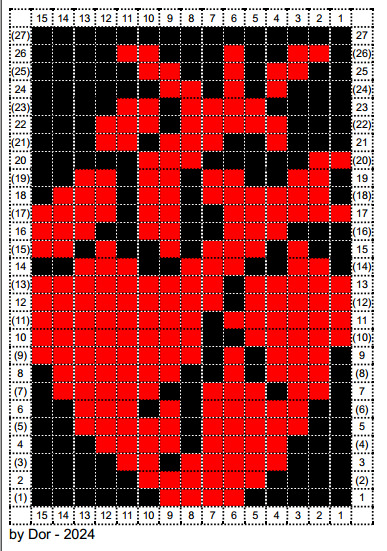

remember when i made a VERY CRAPPY YOU'VE BEEN WARNED (but usable) "software" to design colorwork? (post here, tool here)

well, I used it to design this sweater

(A few years ago I also sewed the shirt I'm wearing in these pics and drew that faux vitraux, because I'm possessed by the spirit of the Arts and Crafts Movement, apparently)

I knitted the sweater in pieces and then sewed it up. I improvised the design. It features a mock neck, puff sleeves and very high cuffs and waistband in 1x1 rib.

The ribbing was knitted on 3mm needles and the stockinette parts on 4mm needles.

I knitted the head of the sleeves wider than the armhole and then gathered it to give it a puff effect.

I watched some youtube tutorials and ended up mixing the technique for intarsia (for the edges of the heart) and stranded colorwork (for the bits inside of the heart where it changes between red and black). Is this a good practice? idk, I'm just a fool who runs on hybris.

When I gauge-tested, I realized the chart from that original post was too big for what I wanted. This here is the one I used.

I wish I had made the sleeves a liiiiiitle bit puffier and I had placed the heart two stitches to the right, but other than that, I'm super happy with the result.


Text

I needed to test different ways of making and fixing a design on a T-shirt, for Halloween. Halloween costumes for my two offspring will be posted later, they're awesome.

Ideally for this purpose I would use an actual T-shirt, and a complex design rather than, IDK, a series of hash marks or something, in order to most accurately represent the conditions I'm readying for. And I'd rather not waste a shirt, so I'd like for it to be something fun to wear when I'm done. And I need it to be in 4 easily-seen sections so I can differentiate my techniques.

Apparently I grabbed a Daughter-size shirt, so it should be something my daughter would like. Reliably good for her are coffee, cats, and outer space.

Hence:

I went online and looked for free clip art, found a set of cute cats, arranged them in image software, and added words under them in a font I liked. Then I printed that and slid it inside the shirt and traced what I could, then pulled it back out and added details.

The two left cats are drawn with a fabric-paint marker, the two right cats with normal sharpie. The lower two cats will be ironed and the upper two will not. In 24 hours I'll wash it and see which comes out best, that'll be the evening of the 28th, I can draw the actual costume shirts on the 29th and wash them on the 30th and WHY IS IT ALWAYS PANIC TIME?! but they should be fine to be worn on the 31st.

In the meantime I need to get back to my EVA foam, glueing bits to a bucket lid, and painting beads. AAAAAAAAAAAAAAAAAAAAAAAAA....

#halloween#halloween costumes#cats#cat#cute cats#t shirts#fabric art#fiber arts#very rudimentary art honestly#deadlines


Note

Tips on how to ace exams ??

hii i hope the exams coming up for you go well!! here are my fav things to do to ace exams!! 🩷

1. if you need to memorise lots of content, use anki. anki is a computer software or website you can use to create flashcards. it automatically reschedules them for you so you can review them every day using spaced repetition. make sure you review the ‘due’ cards everyday (especially if you’re cramming) because otherwise the content to go over again will quickly build up and feel overwhelming.

2. if there’s math involved in your studying, do as many questions as you can. take pictures of questions that you get wrong and go over them again in a few days’ or weeks’ time (depending on how much time you have) to ensure you’ve learned from your mistakes and still remember what to do. you can never do too many questions; the more you can get done, the better.

3. if you have access to past paper questions, do them alongside learning the content. exam technique is a whole separate skill that is super important to learn in order to ace the exams. a lot of exams also reuse the same questions or word them in a similar way, so it also helps with memorisation and getting used to the style of questions and how to answer them.

4. remember that although studying for and anticipating these exams is stressful, it’s a temporary feeling. stress can be good to get you motivated but sometimes an overwhelming amount of stress can make it too difficult to study. take a deep breath, take it slow, and start again.

5. make sure you understand the content while learning it, too. i like to make mind maps that are similar to the flashcards so i can grasp the concept of the topic while also memorising it. some people rely entirely on exams being almost like ‘memory tests’ but they also examine your understanding of the topic, too.

6. get into a routine. wake up at a certain time, work at a certain time, take breaks at a certain time, and go to sleep at a certain time. keep these times consistent and make them into a habit like the way you make showering or brushing your teeth a habit. and remember the breaks you take are AS important as the revision. if you take no breaks you’re going to burn yourself out completely.

here are some timetables i like to use:

8:00-10:30

11:30-1:30

2:30-5:30

7:30-9:30

that’s 9.5 hours of revision with sufficient breaks.

or:

8:00-10:30

11:00-1:30

2:00-4:30

that’s 7.5 hours of revision with smaller breaks, but you finish much earlier.

or:

10-12

2-4

5-7

that’s 6 hours of revision with good breaks.
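A quick way to check timetable totals is to add up each session's length. This small Python helper assumes times are on a 12-hour clock without am/pm, so an end time earlier than its start (like 11:30-1:30) is read as crossing 12 o'clock. By that count the first timetable comes to 9.5 hours, the second 7.5 and the third 6.

```python
def to_minutes(clock):
    """Convert '8:00' or '2' (12-hour clock, no am/pm) to a count of minutes."""
    hours, _, minutes = clock.partition(":")
    return int(hours) * 60 + int(minutes or 0)


def session_minutes(start, end):
    """Length of one session; an end before its start is read as crossing 12 o'clock."""
    s, e = to_minutes(start), to_minutes(end)
    if e <= s:
        e += 12 * 60
    return e - s


def total_hours(timetable):
    """Sum the 'start-end' sessions of a timetable, in hours."""
    return sum(session_minutes(*slot.split("-")) for slot in timetable) / 60


if __name__ == "__main__":
    print(total_hours(["8:00-10:30", "11:30-1:30", "2:30-5:30", "7:30-9:30"]))
    print(total_hours(["8:00-10:30", "11:00-1:30", "2:00-4:30"]))
    print(total_hours(["10-12", "2-4", "5-7"]))
```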

7. get good sleep, please. if you get bad sleep you’ll be too tired the next day and trust me that will mess EVERYTHING UP.

8. when learning flashcards, i like to read it three times, and then press ‘again’ (when using anki, or if you’re using paper flashcards, put it to the back of the pile). when it comes back up, i try to recall it. if i don’t get it right, i read it 3 times again, and then scribble it down on some paper — then press ‘again’.

9. use forest (or flora, the free version). forest is an app where you can grow pretend trees and ‘plant’ your own garden depending on how much revision you do. if you stop your timer before it finishes, or go on a different app, it kills your tree! the more revision you do, the more coins you get, and you can buy different types of trees. (message me if you get forest and you can add me! not flora tho, i don’t use that one)
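Tip 1 says Anki reschedules cards for you. Anki's scheduler is its own modified version of the SM-2 algorithm (newer versions can use FSRS instead), so the following is only a simplified SM-2-style sketch of the idea, not Anki's actual code: each successful recall stretches the next interval by an "ease" factor, and a miss (roughly like pressing ‘again’) starts the card over. The 0-5 grading scale, the 2.5 starting ease, the 1.3 floor and the 1-day/6-day first intervals come from SM-2; the `Card` and `review` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Card:
    interval_days: int = 0  # days until the card is due again
    ease: float = 2.5       # SM-2's starting "easiness factor"
    reps: int = 0           # successful reviews in a row


def review(card: Card, quality: int) -> Card:
    """Update a card after grading your recall from 0 (blank) to 5 (perfect)."""
    if quality < 3:
        # Forgot it: restart the schedule, leaving the ease factor alone.
        card.reps = 0
        card.interval_days = 1
        return card
    card.reps += 1
    if card.reps == 1:
        card.interval_days = 1
    elif card.reps == 2:
        card.interval_days = 6
    else:
        card.interval_days = round(card.interval_days * card.ease)
    # Easy answers grow the ease factor, hard ones shrink it (never below 1.3).
    miss = 5 - quality
    card.ease = max(1.3, card.ease + 0.1 - miss * (0.08 + miss * 0.02))
    return card


if __name__ == "__main__":
    card = Card()
    for grade in (5, 4, 5):
        review(card, grade)
        print(card.interval_days, round(card.ease, 2))
```

This is why reviewing the due cards every day matters: the intervals only grow if you actually answer the cards when they come up.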

i hope this helped!!!! good luck!!! 😁😁😁😁

#studyblr#study#study motivation#studyinspo#studyspo#a levels#study aesthetic#study blog#study notes#study tips
