#sql in data analysis


Your Guide To SQL Interview Questions for Data Analyst

Introduction

A Data Analyst collects, processes, and analyses data to help companies make informed decisions. SQL is crucial because it allows analysts to efficiently retrieve and manipulate data from databases.

This article will help you prepare for SQL interview questions for Data Analyst positions. You'll find common questions, detailed answers, and practical tips to excel in your interview.

Whether you're a beginner or looking to improve your skills, this guide will provide valuable insights and boost your confidence in tackling SQL interview questions for Data Analyst roles.

Importance Of SQL Skills In Data Analysis Roles

Understanding SQL is crucial for Data Analysts because it enables them to retrieve, manipulate, and manage large datasets efficiently. SQL skills are essential for accurate Data Analysis, generating insights, and making informed decisions. Let's explore why SQL is so important in Data Analysis roles.

Role Of SQL In Data Retrieval, Manipulation, and Management

SQL, or Structured Query Language, is the backbone of database management. It allows Data Analysts to pull data from databases (retrieval), change this data (manipulation), and organise it effectively (management).

Using SQL, analysts can quickly find specific data points, update records, or even delete unnecessary information. This capability is essential for maintaining clean and accurate datasets.

Common Tasks That Data Analysts Perform Using SQL

Data Analysts use SQL to perform various tasks. They often write queries to extract specific data from databases, which helps them answer business questions and generate reports.

Analysts use SQL to clean and prepare data by removing duplicates and correcting errors. They also use it to join data from multiple tables, enabling a comprehensive analysis. These tasks are fundamental in ensuring data accuracy and relevance.

Examples Of How SQL Skills Can Solve Real-World Data Problems

SQL skills help solve many real-world problems. For instance, a retail company might use SQL to analyse sales data and identify the best-selling products. A marketing analyst could use SQL to segment customers based on purchase history, enabling targeted marketing campaigns.

SQL can also help detect patterns and trends, such as identifying peak shopping times or understanding customer preferences, which are critical for strategic decision-making.

Why Employers Value SQL Proficiency in Data Analysts

Employers highly value SQL skills because they ensure Data Analysts can work independently with large datasets. Proficiency in SQL means an analyst can extract meaningful insights without relying on other technical teams. This capability speeds up decision-making and problem-solving processes, making the business more agile and responsive.

Additionally, SQL skills often indicate solid logical thinking and attention to detail, which are highly desirable qualities in any data-focused role.

Basic SQL Interview Questions

Employers often ask basic questions in SQL interviews for Data Analyst positions to gauge your understanding of fundamental SQL concepts. These questions test your ability to write and understand simple SQL queries, essential for any Data Analyst role. Here are some common basic SQL interview questions, along with their answers:

How Do You Retrieve Data From A Single Table?

Answer: Use the `SELECT` statement to retrieve data from a table. For example, `SELECT * FROM employees;` retrieves all columns from the "employees" table.

What Is A Primary Key?

Answer: A primary key is a unique identifier for each record in a table. It ensures that no two rows have the same key value. For example, in an "employees" table, the employee ID can be the primary key.

How Do You Filter Records In SQL?

Answer: Use the `WHERE` clause to filter records. For example, `SELECT * FROM employees WHERE department = 'Sales';` retrieves all employees in the Sales department.

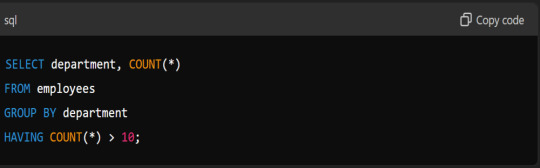

What Is The Difference Between `WHERE` And `HAVING` Clauses?

Answer: The `WHERE` clause filters rows before grouping, while the `HAVING` clause filters groups after the `GROUP BY` operation. For example, `SELECT department, COUNT(*) FROM employees GROUP BY department HAVING COUNT(*) > 10;` filters departments with more than ten employees.

How Do You Sort Data in SQL?

Answer: Use the `ORDER BY` clause to sort data. For example, `SELECT * FROM employees ORDER BY salary DESC;` sorts employees by salary in descending order.

How Do You Insert Data Into A Table?

Answer: Use the `INSERT INTO` statement. For example, `INSERT INTO employees (name, department, salary) VALUES ('John Doe', 'Marketing', 60000);` adds a new employee to the "employees" table.

How Do You Update Data In A Table?

Answer: Use the `UPDATE` statement. For example, `UPDATE employees SET salary = 65000 WHERE name = 'John Doe';` updates John Doe's salary.

How Do You Delete Data From A Table?

Answer: Use the `DELETE` statement. For example, `DELETE FROM employees WHERE name = 'John Doe';` removes John Doe's record from the "employees" table.

What Is A Foreign Key?

Answer: A foreign key is a field in one table that references the primary key (or another unique key) of a row in another table. It establishes a link between the two tables. For example, the "department_id" column in the "employees" table can reference the "departments" table.
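To see a foreign key doing its job, here is a minimal sketch using Python's built-in `sqlite3` module. The table layouts and sample rows are assumptions made for illustration, and SQLite only enforces foreign keys once `PRAGMA foreign_keys = ON` has been set.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on
conn.execute("CREATE TABLE departments (department_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("""
    CREATE TABLE employees (
        employee_id   INTEGER PRIMARY KEY,
        name          TEXT,
        department_id INTEGER REFERENCES departments(department_id)
    )
""")
conn.execute("INSERT INTO departments VALUES (1, 'Sales')")
conn.execute("INSERT INTO employees VALUES (1, 'Ana', 1)")  # department 1 exists

try:
    conn.execute("INSERT INTO employees VALUES (2, 'Ben', 99)")  # department 99 does not exist
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

employee_count = conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0]
print(rejected, employee_count)
```

The second insert is refused because it points at a department that is not in the "departments" table, which is exactly the link the foreign key protects.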

How Do You Use The `LIKE` Operator?

Answer: SQL's `LIKE` operator is used for pattern matching. For example, `SELECT * FROM employees WHERE name LIKE 'J%';` retrieves all employees whose names start with 'J'.
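The basic statements above can be tried end to end with a small sketch like the one below. It runs the same queries against an in-memory SQLite database through Python's `sqlite3` module; the table definition and the extra sample rows are assumptions added so the queries have something to return.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER)")

# INSERT INTO: add rows (sample data is illustrative)
conn.executemany(
    "INSERT INTO employees (name, department, salary) VALUES (?, ?, ?)",
    [("John Doe", "Marketing", 60000), ("Jane Roe", "Sales", 72000), ("Sam Poe", "Sales", 58000)],
)

# SELECT with WHERE and ORDER BY: Sales staff, highest salary first
sales = conn.execute(
    "SELECT name FROM employees WHERE department = 'Sales' ORDER BY salary DESC"
).fetchall()

# UPDATE: change one employee's salary
conn.execute("UPDATE employees SET salary = 65000 WHERE name = 'John Doe'")
john_salary = conn.execute("SELECT salary FROM employees WHERE name = 'John Doe'").fetchone()[0]

# LIKE: names starting with 'J'
j_names = conn.execute(
    "SELECT name FROM employees WHERE name LIKE 'J%' ORDER BY name"
).fetchall()

# DELETE: remove one employee
conn.execute("DELETE FROM employees WHERE name = 'John Doe'")
remaining = conn.execute("SELECT COUNT(*) FROM employees").fetchone()[0]

print(sales, john_salary, j_names, remaining)
```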


Advanced SQL Interview Questions

In this section, we delve into more complex aspects of SQL that you might encounter during a Data Analyst interview. Advanced SQL questions test your deep understanding of database systems and ability to handle intricate data queries. Here are ten advanced SQL questions and their answers to help you prepare effectively.

What Is The Difference Between INNER JOIN And OUTER JOIN?

Answer: An INNER JOIN returns only the rows that have a match in both tables. An OUTER JOIN also keeps unmatched rows: a LEFT or RIGHT JOIN returns all rows from one table plus the matched rows from the other, and a FULL OUTER JOIN returns all rows from both tables. Where there is no match, the columns from the other table are NULL.

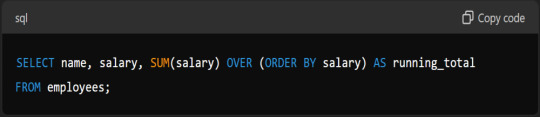
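As a rough illustration of the difference, the sketch below runs an INNER JOIN and a LEFT (outer) JOIN over the same two tables. The schema and rows are assumptions for the example, and a FULL OUTER JOIN is left out because older SQLite versions do not support it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE departments (department_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE employees (name TEXT, department_id INTEGER)")
conn.executemany("INSERT INTO departments VALUES (?, ?)", [(1, "Sales"), (2, "Finance")])
conn.executemany("INSERT INTO employees VALUES (?, ?)", [("Ana", 1), ("Ben", None)])

# INNER JOIN: only employees that have a matching department
inner_rows = conn.execute("""
    SELECT e.name, d.name
    FROM employees e
    INNER JOIN departments d ON e.department_id = d.department_id
    ORDER BY e.name
""").fetchall()

# LEFT JOIN: every employee, with NULL where no department matches
left_rows = conn.execute("""
    SELECT e.name, d.name
    FROM employees e
    LEFT JOIN departments d ON e.department_id = d.department_id
    ORDER BY e.name
""").fetchall()

print(inner_rows)
print(left_rows)
```

Ben has no department, so he disappears from the INNER JOIN result but stays in the LEFT JOIN result with `None` (SQL NULL) in the department column.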

How Do You Use A Window Function In SQL?

Answer: A window function performs a calculation across a set of table rows related to the current row, without collapsing those rows into one. For example, to calculate the running total of salaries:

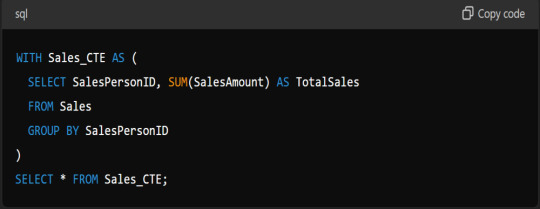
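A running total of this kind might look like the following sketch. The `employees` table and its rows are assumed for illustration, and window functions need SQLite 3.25 or later, which recent Python builds normally bundle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (employee_id INTEGER PRIMARY KEY, name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [(1, "Ana", 3000), (2, "Ben", 4000), (3, "Cy", 5000)],
)

# SUM(...) OVER (ORDER BY ...) adds up salaries from the first row to the current one
running_totals = conn.execute("""
    SELECT name,
           salary,
           SUM(salary) OVER (ORDER BY employee_id) AS running_total
    FROM employees
    ORDER BY employee_id
""").fetchall()

print(running_totals)
```

Unlike `GROUP BY`, the query still returns one row per employee; the window only controls which rows feed each row's total.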

Explain The Use Of CTE (Common Table Expressions) In SQL.

Answer: A CTE lets you define a temporary, named result set that you can reference within a single SELECT, INSERT, UPDATE, or DELETE statement. It is defined using the WITH clause.
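One way to picture a CTE is the sketch below, which names an intermediate result (`dept_totals`) and then filters it. The table, the data, and the 100000 threshold are assumptions chosen for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Ana", "Sales", 60000), ("Ben", "Sales", 70000), ("Cy", "HR", 50000)],
)

# The WITH clause defines dept_totals; the main SELECT then reads from it like a table
big_departments = conn.execute("""
    WITH dept_totals AS (
        SELECT department, SUM(salary) AS total_salary
        FROM employees
        GROUP BY department
    )
    SELECT department, total_salary
    FROM dept_totals
    WHERE total_salary > 100000
    ORDER BY department
""").fetchall()

print(big_departments)
```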

What Are Indexes, And How Do They Improve Query Performance?

Answer: Indexes are database objects that improve the speed of data retrieval operations on a table. They work like the index in a book, allowing the database engine to find data quickly without scanning the entire table.

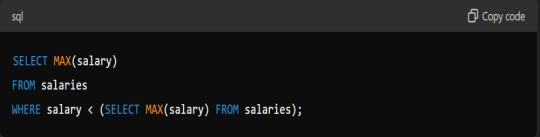
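The effect of an index can be observed by asking the database for its query plan before and after creating one. This is a sketch against SQLite, with an assumed `employees` table and index name; the exact wording of the plan differs between SQLite versions and between database systems.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Ana", "Sales", 60000), ("Ben", "HR", 50000)],
)

def plan(sql):
    # The last column of each EXPLAIN QUERY PLAN row is a human-readable description
    return " ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

query = "SELECT name FROM employees WHERE department = 'Sales'"

plan_before = plan(query)  # without an index the table is scanned row by row
conn.execute("CREATE INDEX idx_employees_department ON employees (department)")
plan_after = plan(query)   # with the index the engine can search it directly

print(plan_before)
print(plan_after)
```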

How Do You Find The Second-highest Salary In A Table?

Answer: You can use a subquery for this:

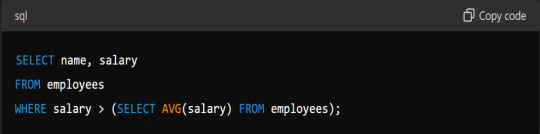
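A subquery-based version could be sketched as follows, assuming an `employees` table with a `salary` column. The inner query finds the top salary, and the outer query takes the highest value below it, so ties at the top do not affect the answer.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ana", 5000), ("Ben", 7000), ("Cy", 7000), ("Di", 6000)],
)

# Highest salary that is strictly lower than the overall maximum
second_highest = conn.execute("""
    SELECT MAX(salary)
    FROM employees
    WHERE salary < (SELECT MAX(salary) FROM employees)
""").fetchone()[0]

print(second_highest)
```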

What Is A Subquery, And When Would You Use One?

Answer: A subquery is a query nested inside another query. You use it when you need to filter results based on the result of another query.
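For instance, a subquery can supply the value to compare against. The sketch below lists employees who earn more than the company-wide average; the table and rows are assumptions for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ana", 3000), ("Ben", 5000), ("Cy", 7000)],
)

# The inner query computes the average once; the outer query filters against it
above_average = conn.execute("""
    SELECT name
    FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name
""").fetchall()

print(above_average)
```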

Explain The Use Of GROUP BY And HAVING Clauses.

Answer: GROUP BY groups rows sharing a property so that aggregate functions can be applied to each group. HAVING filters groups based on aggregate properties.
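A small sketch of the two clauses working together: rows are grouped by department, an average is computed per group, and HAVING keeps only groups with at least two employees. The table, data, and the "at least two" rule are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("Ana", "Sales", 4000), ("Ben", "Sales", 6000), ("Cy", "HR", 5000)],
)

# GROUP BY builds one group per department; HAVING then filters the groups
avg_by_department = conn.execute("""
    SELECT department, AVG(salary) AS avg_salary
    FROM employees
    GROUP BY department
    HAVING COUNT(*) >= 2
    ORDER BY department
""").fetchall()

print(avg_by_department)
```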

How Do You Optimise A Slow Query?

Answer: To optimise a slow query, you can:

Use indexes to speed up data retrieval.

Avoid SELECT * by specifying only necessary columns.

Break down complex queries into simpler parts.

Analyse query execution plans to identify bottlenecks.

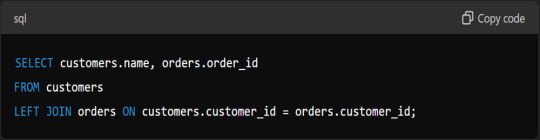

Describe A Scenario Where You Would Use A LEFT JOIN.

Answer: Use a LEFT JOIN when you need all records from the left table and the matched records from the right table. For example, to find all customers and their orders, even if some customers have no orders:

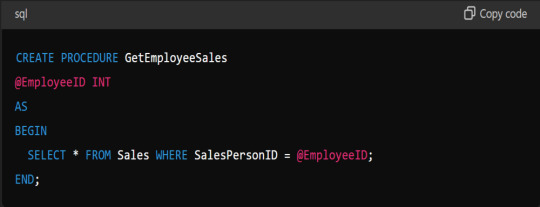
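The customers-and-orders scenario can be sketched like this. The `customers` and `orders` tables and their columns are assumptions; the point is that a customer with no orders still appears, with a count of zero.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Ben")])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(10, 1, 25.0), (11, 1, 40.0)])

# COUNT(o.order_id) ignores the NULLs produced for customers without orders
orders_per_customer = conn.execute("""
    SELECT c.name, COUNT(o.order_id) AS order_count
    FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.customer_id
    GROUP BY c.customer_id, c.name
    ORDER BY c.name
""").fetchall()

print(orders_per_customer)
```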

What Is A Stored Procedure, And How Do You Create One?

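Answer: A stored procedure is a saved block of SQL code that you can reuse. It encapsulates SQL queries and logic in a single named routine that you call by name. You create one with a `CREATE PROCEDURE` statement, whose exact syntax varies by database.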

These advanced SQL questions and answers will help you demonstrate your proficiency and problem-solving skills during your Data Analyst interview.

Practical Problem-Solving SQL Questions

In SQL interviews for Data Analyst roles, you’ll often face questions that test your ability to solve real-world problems using SQL. These questions go beyond basic commands and require you to think critically and apply your knowledge to complex scenarios. Here are ten practical SQL questions with answers to help you prepare.

How Would You Find Duplicate Records In A Table Named `Employees` Based On The `Email` Column?

Answer:
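One common approach, sketched here under the assumption that the column is literally named `email`, is to group by the email value and keep only the groups that occur more than once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?)",
    [("Ana", "a@x.com"), ("Ana B.", "a@x.com"), ("Ben", "b@x.com")],
)

# Any email that appears in more than one row is a duplicate
duplicates = conn.execute("""
    SELECT email, COUNT(*) AS occurrences
    FROM Employees
    GROUP BY email
    HAVING COUNT(*) > 1
""").fetchall()

print(duplicates)
```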

Write A Query To Find The Second Highest Salary In A Table Named `Salaries`.

Answer:
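A possible answer, assuming the table has a `salary` column, sorts the distinct salaries and skips the first one. `LIMIT ... OFFSET` works in SQLite, MySQL, and PostgreSQL; other systems such as SQL Server use different paging syntax.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Salaries (employee_id INTEGER, salary INTEGER)")
conn.executemany(
    "INSERT INTO Salaries VALUES (?, ?)",
    [(1, 9000), (2, 9000), (3, 8000), (4, 7000)],
)

# DISTINCT removes ties, so OFFSET 1 lands on the second-highest value
row = conn.execute("""
    SELECT DISTINCT salary
    FROM Salaries
    ORDER BY salary DESC
    LIMIT 1 OFFSET 1
""").fetchone()
second_highest = row[0] if row else None  # None when there is no second value

print(second_highest)
```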

How Do You Handle NULL Values In SQL When Calculating The Total Salary In The `Employees` Table?

Answer:
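A sketch of the usual behaviour: `SUM` skips NULL values, but it returns NULL when no non-NULL rows are left, and `COALESCE` can turn that into 0. The `department` and `salary` columns and the sample rows are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, department TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?, ?)",
    [("Ana", "Sales", 3000), ("Ben", "Sales", 4000), ("Cy", "HR", None)],
)

# SUM ignores the NULL salary instead of failing or returning NULL
total = conn.execute("SELECT SUM(salary) FROM Employees").fetchone()[0]

# With no matching rows, SUM returns NULL (None in Python)
empty_total = conn.execute(
    "SELECT SUM(salary) FROM Employees WHERE department = 'Legal'"
).fetchone()[0]

# COALESCE replaces that NULL with a default value
safe_total = conn.execute(
    "SELECT COALESCE(SUM(salary), 0) FROM Employees WHERE department = 'Legal'"
).fetchone()[0]

print(total, empty_total, safe_total)
```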

Create A Query To Join The `Employees` Table And `Departments` Table On The `Department_id` And Calculate The Total Salary Per Department.

Answer:
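The query might look like the sketch below. Apart from `department_id`, the column names (`salary`, `department_name`) are assumptions, as is the sample data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Departments (department_id INTEGER PRIMARY KEY, department_name TEXT)")
conn.execute("CREATE TABLE Employees (name TEXT, salary INTEGER, department_id INTEGER)")
conn.executemany("INSERT INTO Departments VALUES (?, ?)", [(1, "Sales"), (2, "HR")])
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?, ?)",
    [("Ana", 3000, 1), ("Ben", 4000, 1), ("Cy", 5000, 2)],
)

# Join on department_id, then sum salaries within each department
salary_per_department = conn.execute("""
    SELECT d.department_name, SUM(e.salary) AS total_salary
    FROM Employees e
    JOIN Departments d ON e.department_id = d.department_id
    GROUP BY d.department_id, d.department_name
    ORDER BY d.department_name
""").fetchall()

print(salary_per_department)
```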

How Do You Find Employees Who Do Not Belong To Any Department?

Answer:
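One hedged reading of this question is "employees whose `department_id` is missing or matches no row in a `Departments` table". Under that assumption, a LEFT JOIN followed by an `IS NULL` check catches both cases.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Departments (department_id INTEGER PRIMARY KEY, department_name TEXT)")
conn.execute("CREATE TABLE Employees (name TEXT, department_id INTEGER)")
conn.execute("INSERT INTO Departments VALUES (1, 'Sales')")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?)",
    [("Ana", 1), ("Ben", None), ("Cy", 99)],  # Ben has no id; Cy's id matches nothing
)

# Rows with no matching department come back with NULL department columns
without_department = conn.execute("""
    SELECT e.name
    FROM Employees e
    LEFT JOIN Departments d ON e.department_id = d.department_id
    WHERE d.department_id IS NULL
    ORDER BY e.name
""").fetchall()

print(without_department)
```

If only missing ids matter, `WHERE department_id IS NULL` on the `Employees` table alone is enough.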

Write A Query To Retrieve The Top 3 Highest-paid Employees From The `Employees` Table.

Answer:
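A minimal sketch, assuming `name` and `salary` columns: sort by salary in descending order and keep the first three rows. `LIMIT` is the SQLite, MySQL, and PostgreSQL spelling; SQL Server uses `TOP` instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?)",
    [("Ana", 3000), ("Ben", 9000), ("Cy", 7000), ("Di", 8000), ("Ed", 5000)],
)

# Highest salaries first, then cut the list off after three rows
top_three = conn.execute("""
    SELECT name, salary
    FROM Employees
    ORDER BY salary DESC
    LIMIT 3
""").fetchall()

print(top_three)
```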

How Do You Find Employees Who Joined In The Last Year?

Answer:
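Date functions differ a lot between databases, so treat this as a SQLite-flavoured sketch. It assumes a hypothetical `join_date` column stored as ISO text (`YYYY-MM-DD`) and passes a fixed reference date so the result is reproducible; in practice you would use `date('now', '-1 year')`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, join_date TEXT)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?)",
    [("Ana", "2024-01-15"), ("Ben", "2023-06-30"), ("Cy", "2022-12-01")],
)

# date(:today, '-1 year') gives the cutoff one year before the reference date
recent_joiners = conn.execute(
    """
    SELECT name
    FROM Employees
    WHERE join_date >= date(:today, '-1 year')
    ORDER BY name
    """,
    {"today": "2024-06-30"},
).fetchall()

print(recent_joiners)
```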

Calculate The Average Salary Of Employees In The `Employees` Table, Excluding Those With A Salary Below 3000.

Answer:
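A short sketch, assuming a `salary` column: the `WHERE` clause removes the low salaries before `AVG` is computed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?)",
    [("Ana", 2000), ("Ben", 3000), ("Cy", 5000)],
)

# Only salaries of 3000 or more take part in the average
average_salary = conn.execute(
    "SELECT AVG(salary) FROM Employees WHERE salary >= 3000"
).fetchone()[0]

print(average_salary)
```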

Increase The Salary By 10% For Employees In The `Employees` Table Who Work In The 'Sales' Department.

Answer:
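The update could be sketched as below, assuming `department` and `salary` columns. `ROUND` is added here only to keep floating-point noise out of the stored values; it is not required by the question.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, department TEXT, salary REAL)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?, ?)",
    [("Ana", "Sales", 5000), ("Ben", "Sales", 4000), ("Cy", "HR", 6000)],
)

# Multiply by 1.10 for a 10% raise, restricted to the Sales department
cursor = conn.execute("""
    UPDATE Employees
    SET salary = ROUND(salary * 1.10, 2)
    WHERE department = 'Sales'
""")
rows_updated = cursor.rowcount  # number of rows the UPDATE touched

salaries = dict(conn.execute("SELECT name, salary FROM Employees").fetchall())
print(rows_updated, salaries)
```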

Delete Records Of Employees Who Have Not Been Active For The Past 5 Years.

Answer:
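The question does not say how activity is recorded, so this sketch assumes a hypothetical `last_active_date` column stored as ISO text. It previews the affected rows with a `SELECT` first, which is a sensible habit before any `DELETE`, and uses a fixed reference date for reproducibility (in practice, `date('now', '-5 years')` in SQLite).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Employees (name TEXT, last_active_date TEXT)")
conn.executemany(
    "INSERT INTO Employees VALUES (?, ?)",
    [("Ana", "2018-03-01"), ("Ben", "2019-06-30"), ("Cy", "2023-01-01")],
)

params = {"today": "2024-06-30"}
condition = "last_active_date < date(:today, '-5 years')"

# Preview how many rows the DELETE would remove
to_delete = conn.execute(
    "SELECT COUNT(*) FROM Employees WHERE " + condition, params
).fetchone()[0]

# Run the DELETE with the same condition
conn.execute("DELETE FROM Employees WHERE " + condition, params)

remaining = conn.execute("SELECT name FROM Employees ORDER BY name").fetchall()
print(to_delete, remaining)
```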

These questions cover a range of scenarios you might encounter in an SQL interview. Practice these to enhance your problem-solving skills and better prepare for your interview.

Tips for Excelling in SQL Interviews

Excelling in SQL interviews showcases your technical expertise and problem-solving skills, and it improves your job prospects in a competitive industry. It requires preparation and practice. Here are some tips to help you stand out and perform your best.

Best Practices for Preparing for SQL Interviews

Preparation is critical to success in SQL interviews. Start by reviewing the basics of SQL, including common commands and functions. Practice writing queries to solve various problems.

Ensure you understand different types of joins, subqueries, and aggregate functions. Mock interviews can also be helpful. They simulate the real interview environment and help you get comfortable answering questions under pressure.

Resources for Improving SQL Skills

Improving your SQL skills makes you more proficient at data management and better able to handle complex analysis on large datasets. There are many resources available to help. Here are a few:

Books: "SQL For Dummies" by Allen G. Taylor is a great start. "Learning SQL" by Alan Beaulieu is another excellent resource.

Online Courses: Many websites offer comprehensive SQL courses. Explore platforms that provide interactive SQL exercises.

Practice Websites: LeetCode, HackerRank, and SQLZoo offer practice problems that range from beginner to advanced levels. Regularly solving these problems will help reinforce your knowledge and improve your problem-solving skills.

Importance of Understanding Business Context and Data Interpretation

Understanding the business context is crucial in addition to technical skills. Employers want to know that you can interpret data and provide meaningful insights.

Familiarise yourself with the business domain relevant to the job you are applying for. Practice explaining your SQL queries and the insights they provide in simple terms. This will show that you can communicate effectively with non-technical stakeholders.

Tips for Writing Clean and Efficient SQL Code

Clean, efficient SQL performs better and is easier to read, maintain, and share with collaborators, so it matters in interviews too. Follow these tips:

Use Clear and Descriptive Names: Use meaningful names for tables, columns, and aliases. This will make your queries more straightforward to read and understand.

Format Your Code: Use indentation and line breaks to organise your query. It improves readability and helps you spot errors more easily.

Optimise Your Queries: Use indexing, limit the use of subqueries, and avoid unnecessary columns in your SELECT statements. Efficient queries run faster and use fewer resources.

Common Pitfalls to Avoid During the Interview

Knowing the common pitfalls helps you present your best self and increases your chances of securing the job. Here's how you can avoid some common mistakes during the interview:

Not Reading the Question Carefully: Ensure you understand the interviewer's question before writing your query.

Overcomplicating the Solution: Start with a simple solution and build on it if necessary. Avoid adding unnecessary complexity.

Ignoring Edge Cases: Consider edge cases and test your queries with different datasets. It shows that you think critically about your solutions.

By following these tips, you'll be well-prepared to excel in your SQL interviews. Practice regularly, use available resources, and focus on clear, efficient coding. Understanding the business context and avoiding common pitfalls will help you stand out as a strong candidate.


Conclusion

Preparing for SQL interviews is vital for aspiring Data Analysts. Understanding SQL fundamentals, practising query writing, and solving real-world problems are essential.

Enhance your skills using resources such as books, online courses, and practice websites. Focus on writing clean, efficient code and interpreting data within a business context.

Avoid common pitfalls by reading questions carefully and considering edge cases. By following these guidelines, you can excel in your SQL interviews and secure a successful career as a Data Analyst.

#sql interview questions#sql tips and tricks#sql tips#sql in data analysis#sql#data analyst interview questions#sql interview#sql interview tips for data analysts#data science#pickl.ai#data analyst

1 note


Coursera - Data Analysis and Interpretation Specialization

I have chosen Mars Craters for my research dataset! Research question: How Do Crater Size and Depth Influence Ejecta Morphology in Mars Crater Data?

Topic 2: How Do Crater Size and Depth Influence Ejecta Morphology and the Number of Ejecta Layers in Martian Impact Craters?

Abstract of the study:

Ejecta morphology offers a window into the impact processes and surface properties of planetary bodies. This study uses a high-resolution Mars crater dataset of about 380,000 entries, of which over 44,000 have classified ejecta morphologies, and focuses on how crater diameter and depth influence ejecta type. We examine whether crater size and rim-to-floor depth serve as reliable predictors of ejecta morphology complexity. Using statistical methods, we assess the relationship between crater dimensions and the occurrence of specific ejecta morphologies and the number of ejecta layers.

Research Papers Referred:

Nadine G. Barlow, "Martian impact crater ejecta morphologies as indicators of the distribution of subsurface volatiles"

R. H. Hoover, S. J. Robbins, N. E. Putzig, J. D. Riggs, and B. M. Hynek, "Insight Into Formation Processes of Layered Ejecta Craters on Mars From Thermophysical Observations"

2 notes


I just want a job where I work from home, do some boring repetitive data entry spreadsheet analysis etc. task, and never have to talk to anyone on the phone that doesn't work at my company. Is that so much to ask.

#my words#I'm so burnt out on having to wear my worksona#I can't do customer-facing jobs anymore#I'm trying to get qualified to do data analysis but there aren't really any official licenses or whatever#so I'm just kinda learning SQL on my own#but until then I need SOMETHING

2 notes


What Are the Qualifications for a Data Scientist?

In today's data-driven world, the role of a data scientist has become one of the most coveted career paths. With businesses relying on data for decision-making, understanding customer behavior, and improving products, the demand for skilled professionals who can analyze, interpret, and extract value from data is at an all-time high. If you're wondering what qualifications are needed to become a successful data scientist, how DataCouncil can help you get there, and why a data science course in Pune is a great option, this blog has the answers.

The Key Qualifications for a Data Scientist

To succeed as a data scientist, a mix of technical skills, education, and hands-on experience is essential. Here are the core qualifications required:

1. Educational Background

A strong foundation in mathematics, statistics, or computer science is typically expected. Most data scientists hold at least a bachelor’s degree in one of these fields, with many pursuing higher education such as a master's or a Ph.D. A data science course in Pune with DataCouncil can bridge this gap, offering the academic and practical knowledge required for a strong start in the industry.

2. Proficiency in Programming Languages

Programming is at the heart of data science. You need to be comfortable with languages like Python, R, and SQL, which are widely used for data analysis, machine learning, and database management. A comprehensive data science course in Pune will teach these programming skills from scratch, ensuring you become proficient in coding for data science tasks.

3. Understanding of Machine Learning

Data scientists must have a solid grasp of machine learning techniques and algorithms such as regression, clustering, and decision trees. By enrolling in a DataCouncil course, you'll learn how to implement machine learning models to analyze data and make predictions, an essential qualification for landing a data science job.

4. Data Wrangling Skills

Raw data is often messy and unstructured, and a good data scientist needs to be adept at cleaning and processing data before it can be analyzed. DataCouncil's data science course in Pune includes practical training in tools like Pandas and Numpy for effective data wrangling, helping you develop a strong skill set in this critical area.

5. Statistical Knowledge

Statistical analysis forms the backbone of data science. Knowledge of probability, hypothesis testing, and statistical modeling allows data scientists to draw meaningful insights from data. A structured data science course in Pune offers the theoretical and practical aspects of statistics required to excel.

6. Communication and Data Visualization Skills

Being able to explain your findings in a clear and concise manner is crucial. Data scientists often need to communicate with non-technical stakeholders, making tools like Tableau, Power BI, and Matplotlib essential for creating insightful visualizations. DataCouncil’s data science course in Pune includes modules on data visualization, which can help you present data in a way that’s easy to understand.

7. Domain Knowledge

Apart from technical skills, understanding the industry you work in is a major asset. Whether it’s healthcare, finance, or e-commerce, knowing how data applies within your industry will set you apart from the competition. DataCouncil's data science course in Pune is designed to offer case studies from multiple industries, helping students gain domain-specific insights.

Why Choose DataCouncil for a Data Science Course in Pune?

If you're looking to build a successful career as a data scientist, enrolling in a data science course in Pune with DataCouncil can be your first step toward reaching your goals. Here’s why DataCouncil is the ideal choice:

Comprehensive Curriculum: The course covers everything from the basics of data science to advanced machine learning techniques.

Hands-On Projects: You'll work on real-world projects that mimic the challenges faced by data scientists in various industries.

Experienced Faculty: Learn from industry professionals who have years of experience in data science and analytics.

100% Placement Support: DataCouncil provides job assistance to help you land a data science job in Pune or anywhere else, making it a great investment in your future.

Flexible Learning Options: With both weekday and weekend batches, DataCouncil ensures that you can learn at your own pace without compromising your current commitments.

Conclusion

Becoming a data scientist requires a combination of technical expertise, analytical skills, and industry knowledge. By enrolling in a data science course in Pune with DataCouncil, you can gain all the qualifications you need to thrive in this exciting field. Whether you're a fresher looking to start your career or a professional wanting to upskill, this course will equip you with the knowledge, skills, and practical experience to succeed as a data scientist.

Explore DataCouncil’s offerings today and take the first step toward unlocking a rewarding career in data science! Looking for the best data science course in Pune? DataCouncil offers comprehensive data science classes in Pune, designed to equip you with the skills to excel in this booming field. Our data science course in Pune covers everything from data analysis to machine learning, with competitive data science course fees in Pune. We provide job-oriented programs, making us the best institute for data science in Pune with placement support. Explore online data science training in Pune and take your career to new heights!


3 notes


SQL Server deadlocks are a common phenomenon, particularly in multi-user environments where concurrency is essential. Let's Explore:

https://madesimplemssql.com/deadlocks-in-sql-server/

Please follow on FB: https://www.facebook.com/profile.php?id=100091338502392

#technews#microsoft#sqlite#sqlserver#database#sql#tumblr milestone#vpn#powerbi#data#madesimplemssql#datascience#data scientist#datascraping#data analytics#dataanalytics#data analysis#dataannotation#dataanalystcourseinbangalore#data analyst training#microsoft azure

5 notes


Hey you probably already know this but thought I'd make sure. Statistical analysis is good job for disabled people. They'll usually let you do it from home and be a bit weird (aka have any accoms ever) bcus stats is maths and ppl think math doers are wizards. Also a lot of places need stats work done, big field. Idk if you have any idea how stats work but you have a masters degree you're almost certainly smart enough to teach yourself enough stats and excel to work in the field, every psych major learns it and psyc majors hate maths. Dw if you're not a maths person maths ppl hate stats bcus its not real maths its too easy. So yeah. Good field to work in. Worth a look if you haven't yet? Sorry if completely irrelevant to you and good luck!

Thanks for the info! I was actually pretty good at statistics when I was doing my master's (we had a very strict prof who demanded A Lot from everyone) and I know R. Issue is, most jobs that I've seen for data analysis or statistics or anything of the sort require degrees in math or statistics or IT. Also I've forgotten a decent amount of stuff because I wasn't using it much in the last two years (I know it's weird that I was in a PhD for two years and wasn't using statistics, it's a long story).

So yeah, it's something I've considered but it's probably not an option with my education, at least in my country.

#ask#i don't mind data analysis and statistics so it would probably be a good option as well#but ive looked at everything and even entry level positions that don't require experience want math/stats degrees#also SQL which i have never used...#so yeah. probably not gonna happen

2 notes


Artificial Intelligence: Balancing Innovation with Responsibility

Artificial Intelligence (AI) is the science and engineering of creating intelligent machines capable of performing tasks that typically require human intelligence. These tasks range from recognizing speech to interpreting complex data patterns, making decisions, and even understanding emotions. As AI technologies continue to advance, they are reshaping industries, improving productivity, and raising ethical debates. Understanding the pros and cons of AI is crucial as we navigate its growing impact on society.

#data science#digital painting#sql#data analysis#clip studio paint#data analytics#blog#bloggerbapu#digital arwork

3 notes


🚀 Ready to become a Data Science pro? Join our comprehensive Data Science Course and unlock the power of data! 📊💡

🔍 Learn: Excel, Power BI, Python & R, Machine Learning, Data Visualization, Real-world Projects

👨‍🏫 Taught by industry experts 💼 Career support & networking

3 notes


ughhhhhhhhhhhhhh

#learning to use data analysis tools that suck ass lmao#why am i doing this Like This when i could simply do it in 20 seconds in sql

2 notes


Unlocking Intelligence with GenAI-Powered SQL Queries

In today's data-driven world, information is gold. Businesses of all sizes are sitting on vast reserves of valuable insights within their databases, waiting to be unearthed. Yet, for many, this data remains locked behind a formidable barrier: SQL (Structured Query Language). While SQL is the universal language of databases, its syntax, complex joins, and the need for deep schema knowledge often create bottlenecks, making data access an exclusive club for skilled data analysts and engineers.

What if anyone in your organization – a marketing manager, a sales lead, or a finance executive – could simply ask a question in plain English and instantly get the data they need? This is no longer a futuristic fantasy. Thanks to Generative AI (GenAI), specifically large language models (LLMs), we are on the cusp of democratizing data intelligence by transforming natural language into powerful SQL queries.

The Traditional SQL Challenge: A Bottleneck to Insights

For years, the process of extracting specific data insights often looked like this:

A business user has a question ("What were our top-selling products last quarter by region?").

They write a request to a data analyst.

The data analyst translates the request into a complex SQL query, navigating database schemas, table relationships, and specific column names.

The analyst executes the query, retrieves the data, and presents it back to the business user.

This process, while effective, can be slow, resource-intensive, and a significant bottleneck to agile decision-making. Non-technical users often feel disconnected from their own data, hindering their ability to react quickly to market changes or customer needs.

Enter Generative AI: The Universal Data Translator

GenAI acts as an intelligent bridge between human language and database language. These advanced AI models, trained on vast datasets of text and code, have developed an uncanny ability to understand context, infer intent, and generate coherent, structured output – including SQL queries.

How it Works:

Natural Language to SQL (NL2SQL): You simply type your question in conversational English (e.g., "Show me the average customer lifetime value for new customers acquired in the last six months who purchased product X").

Contextual Understanding: The GenAI model, ideally with some understanding of your specific database schema (either through fine-tuning or provided context), interprets your request. It understands the business terms and maps them to the correct tables, columns, and relationships.

SQL Generation: The AI then crafts the precise SQL query needed to fetch that specific information from your database.

Optional: Explanation & Optimization: Some GenAI tools can also explain a generated SQL query in plain English, helping users understand how the data is being retrieved. They can even suggest optimizations for existing queries to improve performance.

The Transformative Benefits of GenAI-Powered SQL

The implications of this technology are profound, fundamentally changing how organizations interact with their data:

Democratized Data Access: This is perhaps the biggest win. Business users – from marketing specialists to HR managers – who lack SQL expertise can now directly query data, reducing their dependence on technical teams.

Accelerated Decision-Making: No more waiting for analysts. Instant access to insights means faster, more informed business decisions, allowing companies to be more agile and competitive.

Reduced Bottlenecks: Data analysts and engineers are freed from writing repetitive or simple queries, allowing them to focus on complex modeling, strategic initiatives, data architecture, or advanced analytics projects.

Improved Accuracy: GenAI, when trained correctly, can reduce human error in query writing, especially for complex joins, aggregations, or conditional statements.

Enhanced Data Literacy: By interacting with data in a natural way, users can gradually build a better understanding of their data landscape and the questions it can answer.

Rapid Prototyping and Exploration: Business users can quickly test hypotheses and explore different dimensions of their data without needing extensive technical support.

Real-World Use Cases in Action

Sales & Marketing: "Which marketing campaigns last quarter led to the highest customer conversion rates in the North region, broken down by product category?"

Customer Service: "What is the average resolution time for customer support tickets marked as 'high priority' that originated from mobile users in the past month?"

Operations & Supply Chain: "Show me the current inventory levels for all raw materials in Warehouse 3 that are below our reorder threshold, ordered by supplier lead time."

Finance: "Calculate the gross profit margin for each product line in the last fiscal year, comparing it to the previous year."

Even for Developers: Quickly generate boilerplate queries for new features, or suggest optimizations for existing slow-running queries.
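As an illustration, the customer service question above might translate into SQL along the following lines. This is a sketch under stated assumptions, not the output of any particular tool: the tickets table, its column names, the sample rows, and the fixed reference date are all hypothetical, and the query runs against an in-memory SQLite database so it can be tried as-is.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema: one row per support ticket.
conn.execute(
    """CREATE TABLE tickets (
           priority TEXT,
           platform TEXT,
           created_on TEXT,        -- ISO date, e.g. '2024-06-10'
           resolution_hours REAL
       )"""
)
conn.executemany(
    "INSERT INTO tickets VALUES (?, ?, ?, ?)",
    [
        ("high", "mobile", "2024-06-10", 4.0),
        ("high", "mobile", "2024-06-20", 6.0),
        ("high", "web", "2024-06-15", 10.0),    # wrong platform
        ("low", "mobile", "2024-06-12", 1.0),   # wrong priority
        ("high", "mobile", "2024-04-01", 50.0), # older than one month
    ],
)

# The kind of query a text-to-SQL tool could plausibly generate.
generated_sql = """
    SELECT AVG(resolution_hours)
    FROM tickets
    WHERE priority = 'high'
      AND platform = 'mobile'
      AND created_on >= date(:today, '-1 month')
"""

# A fixed "today" keeps the example reproducible.
avg_hours = conn.execute(generated_sql, {"today": "2024-06-30"}).fetchone()[0]
print(avg_hours)
```

Note how much of the question's meaning ("high priority", "mobile users", "past month") depends on how the schema encodes it, which is why schema documentation matters for accuracy.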

Best Practices for Implementation

While GenAI for SQL is incredibly powerful, successful implementation requires thoughtful planning:

Clear Data Governance and Security: Ensure that GenAI tools adhere strictly to data access controls. Users should only be able to query data they are authorized to see.

Well-Documented Schemas: While GenAI is smart, providing clear, consistent, and well-documented database schemas significantly improves the accuracy of generated queries.

Contextual Training (Fine-tuning): For optimal results, fine-tune the GenAI model on your organization's specific data, terminology, common query patterns, and domain-specific language.

Human Oversight is Crucial: Especially in the early stages and for critical queries, always review AI-generated SQL for correctness, efficiency, and potential security implications before execution.

Start Simple, Iterate: Begin by implementing GenAI for less critical datasets or well-defined use cases, gathering feedback, and iteratively improving the system.

User Training: Train your business users on how to phrase their questions effectively for the AI, understanding its capabilities and limitations.
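The governance and oversight points above can be partly enforced in code rather than left to convention. The sketch below uses SQLite's authorizer hook from Python's sqlite3 module to reject anything other than plain reads of approved tables. The table names and the `run_generated_sql` helper are hypothetical, and a production database would more likely rely on its own roles and grants; treat this as one possible shape of the idea, not a complete security control.

```python
import sqlite3

ALLOWED_TABLES = {"sales"}  # hypothetical: tables this user is allowed to read

def read_only_authorizer(action, arg1, arg2, db_name, source):
    """Permit plain SELECTs that read only allowed tables; deny everything else."""
    if action == sqlite3.SQLITE_SELECT:
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ and arg1 in ALLOWED_TABLES:
        return sqlite3.SQLITE_OK
    return sqlite3.SQLITE_DENY

def run_generated_sql(conn, sql):
    """Run AI-generated SQL; return rows, or None if the database rejected it."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.DatabaseError:
        return None

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.execute("CREATE TABLE salaries (employee TEXT, pay REAL)")
conn.execute("INSERT INTO sales VALUES ('North', 120.0)")
conn.execute("INSERT INTO salaries VALUES ('Ana', 5000.0)")
conn.commit()

# Install the guard only after the setup statements have run.
conn.set_authorizer(read_only_authorizer)

allowed = run_generated_sql(conn, "SELECT region, amount FROM sales")
blocked_table = run_generated_sql(conn, "SELECT employee, pay FROM salaries")
blocked_write = run_generated_sql(conn, "DELETE FROM sales")
print(allowed, blocked_table, blocked_write)
```

This guard is deliberately strict: it also rejects SQL functions such as COUNT or SUM, so a real deployment would need to extend the allow-list. A human review step is still needed for correctness and efficiency, which no access check can verify.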

The Future of Data Analysis is Conversational

GenAI-powered SQL queries are not just a technological advancement; they represent a fundamental shift in how people interact with data. They empower more individuals within organizations to engage directly with their data, fostering a culture of curiosity and data-driven decision-making. As these technologies mature, they will help unlock the hidden intelligence within databases, making data a conversational partner rather than a complex enigma. The future of data analysis is accessible, intuitive, and remarkably intelligent.

Text

Q: I’m curious about data analytics, but I don’t know where to start. What’s step one?

You’re not alone, and it’s a great question. With more companies relying on data to make smarter decisions, there’s a huge demand for skilled professionals in this space. The best part? You don’t need a tech background or a fancy degree.

If you’re wondering how to start a career in data analytics, the first step is building a strong foundation with a beginner-friendly data analytics course — ideally one that’s practical, not just theoretical.

Q: So… what do I need to learn?

Start with the essentials:

Learn Python and SQL for data analytics — These are the backbone of most data jobs.

Understand data visualization tools — Think Power BI, Tableau, or Excel.

Learn how to clean, analyze, and present data to solve real business problems.

A solid data analytics bootcamp with career support can teach you all of this, without overwhelming you. Think of it as your fast track into the field, with guidance, mentorship, and built-in structure.

Q: What’s the difference between a bootcamp and a YouTube course?

YouTube is great for curiosity. Bootcamps are built for outcomes.

The right hands-on data analytics bootcamp with job placement doesn’t just teach you the tools. It gets you career-ready. That includes:

Building a job-ready portfolio

Resume & LinkedIn optimization

Mock interviews and real-world projects

Job application strategy and support

If you’re serious about landing a job, not just learning, a structured program gives you a real edge.

Q: What if I have a full-time job or zero tech experience?

That’s more common than you think. Many people who join bootcamps are career changers juggling work, family, and life. That’s why many programs now offer part-time, weekend, or flexible online formats.

If you can give it 8–10 focused hours a week, you can make this work.

Q: I’m not ready to commit yet. Can I test it first?

Yes! Some bootcamps now offer low-cost seminars, even as low as $500, to help you explore before diving in. These intro sessions cover topics like data tools, industry use cases, and career pathways. Great for testing the waters.

Final Thoughts:

If you’re still on the fence about analytics, remember this: the field is growing fast, the barrier to entry is lower than ever, and the opportunities are wide open.

Whether you start with a free course, a seminar, or a full data analytics bootcamp with job placement, the key is to just start.

Let’s Connect

Thinking about diving into analytics? I’ve helped people just like you make the switch. Feel free to DM me, I’m happy to share advice or recommend resources to get going.

#data analytics#data analysis#python#sql#beginner friendly data analytics#power bi#How to become a data analyst

Text

Learn Data Analysis For FREE #dataanalytics #data #dataanalysis #powerbi #sql

Are you looking for a successful career transition into Data Analytics, Data Science, Machine Learning, AI, and Deep Learning?

Text

Your Data Career Starts Here: DICS Institute in Laxmi Nagar

In a world driven by data, those who can interpret it hold the power. From predicting market trends to driving smarter business decisions, data analysts are shaping the future. If you’re looking to ride the data wave and build a high-demand career, your journey begins with choosing the Best Data Analytics Institute in Laxmi Nagar.

Why Data Analytics? Why Now?

Companies across the globe are investing heavily in data analytics to stay competitive. This boom has opened up exciting opportunities for data professionals with the right skills. But success in this field depends on one critical decision — where you learn. And that’s where Laxmi Nagar, Delhi’s thriving educational hub, comes into play.

Discover Excellence at the Best Data Analytics Institute in Laxmi Nagar

When it comes to learning data analytics, you need more than just lectures — you need an experience. The Best Data Analytics Institute in Laxmi Nagar offers exactly that, combining practical training with industry insights to ensure you’re not just learning, but evolving.

Here’s what makes it a top choice for aspiring analysts:

Real-World Curriculum: Learn the tools and technologies actually used in the industry — Python, SQL, Power BI, Excel, Tableau, and more — with modules designed to match current job market needs.

Project-Based Learning: The institute doesn’t just teach concepts — it puts them into practice. You’ll work on live projects, business case studies, and analytics problems that mimic real-life scenarios.

Expert Mentors: Get trained by data professionals with years of hands-on experience. Their mentorship gives you an insider’s edge and prepares you to tackle interviews and workplace challenges with confidence.

Smart Class Formats: Whether you’re a student, jobseeker, or working professional, the flexible batch options — including weekend and online classes — ensure you don’t miss a beat.

Career Support That Works: From resume crafting and portfolio building to mock interviews and job referrals, the placement team works closely with students until they land their dream role.

Enroll in the Best Data Analytics Course in Laxmi Nagar

The Best Data Analytics Course in Laxmi Nagar goes beyond the basics. It’s a complete roadmap for mastering data — right from data collection and cleaning, to analysis, visualization, and even predictive modeling.

This course is ideal for beginners, professionals looking to upskill, or anyone ready for a career switch. You’ll gain hands-on expertise, problem-solving skills, and a strong foundation that puts you ahead of the curve.

Your Data Career Starts Here

The future belongs to those who understand data. With the Best Data Analytics Institute in Laxmi Nagar and the Best Data Analytics Course in Laxmi Nagar, you’re not just preparing for a job — you’re investing in a thriving, future-proof career.

Ready to become a data expert? Enroll today and take the first step toward transforming your future — one dataset at a time.

#Data Analytics#Data Science#Business Intelligence#Machine Learning#Data Visualization#Python for Data Analysis#SQL Training#Power BI

Text

The Importance of SQL in Data Science: Master It Today

SQL is a must-have skill for data scientists. It enables you to clean, manage, and query data efficiently, forming the foundation of data science workflows. You can learn and master SQL through a Data Science Training Institute in Chandigarh, Nashik, Delhi, or other cities in India.

Text

Essential Skills Every Data Analyst Should Have

In today’s data-driven world, businesses rely heavily on data analysts to translate raw data into actionable insights. For aspiring data analysts, developing the right skills is crucial to unlocking success in this field. Here’s a look at some of the essential skills every data analyst should master.

1. Statistical Knowledge

A strong understanding of statistics forms the foundation of data analytics. Concepts like probability, hypothesis testing, and regression are crucial for analyzing data and drawing accurate conclusions. With these skills, data analysts can identify patterns, make predictions, and validate their findings with statistical rigor.
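For a feel of what regression means in practice, here is a small worked sketch of an ordinary least-squares line fit in plain Python. The ad spend and revenue figures are made up for illustration, and real work would normally use a statistics library rather than hand-rolled code.

```python
from statistics import mean

def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Covariance-like and variance-like sums around the means.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept

# Hypothetical data: ad spend vs. revenue, in arbitrary units.
ad_spend = [1.0, 2.0, 3.0, 4.0]
revenue = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(ad_spend, revenue)
print(f"revenue = {slope:.1f} * ad_spend + {intercept:.1f}")
```

With this toy data the points lie exactly on a line, so the fit recovers a slope of 2 and an intercept of 1. Real data is noisy, which is where hypothesis testing comes in to judge whether a fitted relationship is meaningful.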

2. Data Cleaning and Preparation

Data rarely comes in a ready-to-use format. Data analysts must clean and preprocess datasets, handling issues like missing values, duplicate entries, and inconsistencies. Mastering tools like Excel, SQL, or Python libraries (pandas and NumPy) can make this process efficient, allowing analysts to work with accurate data that’s primed for analysis.
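The three issues named above can be sketched in a few lines of standard-library Python. The records and field names are invented for illustration, and filling gaps with the median is just one common choice; in practice pandas offers ready-made equivalents for each step.

```python
from statistics import median

# Hypothetical raw records with typical problems.
raw_rows = [
    {"customer": " Alice ", "city": "delhi", "spend": 120.0},
    {"customer": "alice", "city": "Delhi", "spend": 120.0},  # duplicate once normalized
    {"customer": "Bob", "city": "MUMBAI", "spend": None},     # missing value
    {"customer": "Chen", "city": "Pune", "spend": 80.0},
]

def clean(rows):
    """Normalize text fields, drop duplicate rows, fill missing spend with the median."""
    # 1. Fix inconsistencies: trim whitespace and standardize capitalization.
    normalized = [
        {
            "customer": r["customer"].strip().title(),
            "city": r["city"].strip().title(),
            "spend": r["spend"],
        }
        for r in rows
    ]
    # 2. Remove duplicates while keeping the original order.
    seen, unique = set(), []
    for row in normalized:
        key = (row["customer"], row["city"], row["spend"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    # 3. Fill missing values with the median of the known ones.
    known = [r["spend"] for r in unique if r["spend"] is not None]
    fill_value = median(known)
    return [
        {**r, "spend": fill_value if r["spend"] is None else r["spend"]}
        for r in unique
    ]

cleaned = clean(raw_rows)
for row in cleaned:
    print(row)
```

Normalizing before deduplicating matters here: the two Alice rows only become identical once whitespace and capitalization are fixed.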

3. Proficiency in SQL

SQL (Structured Query Language) is the backbone of data retrieval. Analysts use SQL to query databases, join tables, and extract necessary information efficiently. Whether working with MySQL, PostgreSQL, or Oracle, SQL is an indispensable tool for interacting with large datasets stored in relational databases.
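A typical join-and-aggregate query looks like the sketch below, run here against an in-memory SQLite database through Python's sqlite3 module. The customers and orders tables and their contents are hypothetical; the same SQL pattern carries over to MySQL, PostgreSQL, or Oracle with minor dialect differences.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical two-table schema: customers and their orders.
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Asha"), (2, "Ben"), (3, "Chloe")],
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(1, 50.0), (1, 25.0), (2, 40.0)],
)

# LEFT JOIN keeps customers with no orders; COALESCE turns their NULL sum into 0.
query = """
    SELECT c.name,
           COUNT(o.id)                AS order_count,
           COALESCE(SUM(o.total), 0.0) AS total_spent
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY total_spent DESC
"""

report = conn.execute(query).fetchall()
for name, order_count, total_spent in report:
    print(name, order_count, total_spent)
```

Choosing LEFT JOIN over INNER JOIN is the design decision to notice: an inner join would silently drop Chloe, who has no orders, from the report.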

4. Data Visualization Skills

Communicating insights effectively is just as important as finding them. Data analysts must be skilled in visualizing data through charts, graphs, and dashboards. Tools like Tableau, Power BI, and Matplotlib in Python help turn data into easy-to-understand visuals that drive informed decision-making.

5. Programming Languages: Python & R

Python and R are highly valued in data analytics due to their flexibility and extensive libraries for data manipulation and analysis. Python, with libraries like Pandas, Seaborn, and Scikit-learn, is versatile for both data preparation and machine learning tasks. R is often used for statistical analysis and visualizations, making both valuable in an analyst’s toolkit.

6. Critical Thinking and Problem-Solving

A data analyst’s role goes beyond number-crunching. They need to ask the right questions, approach problems logically, and identify patterns or trends that lead to strategic insights. Critical thinking allows analysts to explore data from multiple angles, ensuring they uncover valuable information that can impact business decisions.

7. Attention to Detail

Data analytics requires precision and accuracy. Even a small error in data handling or analysis can lead to misleading insights. Attention to detail ensures that data is correctly interpreted and visualized, resulting in reliable outcomes for the business.

8. Domain Knowledge

While technical skills are essential, understanding the industry context adds depth to analysis. Whether it’s finance, healthcare, marketing, or retail, domain knowledge helps analysts interpret data meaningfully, translating numbers into insights that matter for specific business needs.

9. Communication Skills

Once the data is analyzed, the findings must be communicated to stakeholders clearly. Analysts should be skilled in explaining complex data concepts to non-technical team members, using both visual aids and concise language to ensure clarity.

As data analytics grows, so does the need for well-rounded analysts who can handle data from start to finish. By mastering these essential skills, aspiring data analysts can become valuable assets, capable of transforming data into insights that drive business success.

Looking to build a career in data analytics? Code From Basics offers comprehensive training to equip you with these essential skills. Know more about our Data Analytics program.
