#uefi

Text

Demon-haunted computers are back, baby

Catch me in Miami! I'll be at Books and Books in Coral Gables on Jan 22 at 8PM.

As a science fiction writer, I am professionally irritated by a lot of sf movies. Not only do those writers get paid a lot more than I do, they insist on including things like "self-destruct" buttons on the bridges of their starships.

Look, I get it. When the evil empire is closing in on your flagship with its secret transdimensional technology, it's important that you keep those secrets out of the emperor's hands. An irrevocable self-destruct switch there on the bridge gets the job done! (It has to be irrevocable, otherwise the baddies'll just swarm the bridge and toggle it off).

But c'mon. If there's a facility built into your spaceship that causes it to explode no matter what the people on the bridge do, that is also a pretty big security risk! What if the bad guy figures out how to hijack the measure that – by design – the people who depend on the spaceship as a matter of life and death can't detect or override?

I mean, sure, you can try to simplify that self-destruct system to make it easier to audit and assure yourself that it doesn't have any bugs in it, but remember Schneier's Law: anyone can design a security system that works so well that they themselves can't think of a flaw in it. That doesn't mean you've made a security system that works – only that you've made a security system that works on people stupider than you.

I know it's weird to be worried about realism in movies that pretend we will ever find a practical means to visit other star systems and shuttle back and forth between them (which we are very, very unlikely to do):

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

But this kind of foolishness galls me. It galls me even more when it happens in the real world of technology design, which is why I've spent the past quarter-century being very cross about Digital Rights Management in general, and trusted computing in particular.

It all starts in 2002, when a team from Microsoft visited our offices at EFF to tell us about this new thing they'd dreamed up called "trusted computing":

https://pluralistic.net/2020/12/05/trusting-trust/#thompsons-devil

The big idea was to stick a second computer inside your computer, a very secure little co-processor, that you couldn't access directly, let alone reprogram or interfere with. As far as this "trusted platform module" was concerned, you were the enemy. The "trust" in trusted computing was about other people being able to trust your computer, even if they didn't trust you.

So that little TPM would do all kinds of cute tricks. It could observe and produce a cryptographically signed manifest of the entire boot-chain of your computer, which was meant to be an unforgeable certificate attesting to which kind of computer you were running and what software you were running on it. That meant that programs on other computers could decide whether to talk to your computer based on whether they agreed with your choices about which code to run.
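To make the "signed manifest of the boot-chain" idea concrete, here is a minimal sketch in Python. It assumes the hash-chaining scheme used by TPM platform configuration registers, where each new measurement is folded into the previous register value. Every name in it (`extend`, `measure_boot_chain`, `quote`, `verifier_accepts`) is hypothetical, and the HMAC is only a stdlib stand-in for the asymmetric signature a real chip would produce with a key the owner can't reach.

```python
import hashlib
import hmac
from typing import Sequence


def extend(register: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running register value."""
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + digest).digest()


def measure_boot_chain(components: Sequence[bytes]) -> bytes:
    """Measure each boot stage in order; any change alters the final value."""
    register = bytes(32)  # the register starts out as all zeroes
    for component in components:
        register = extend(register, component)
    return register


def quote(device_key: bytes, register: bytes, nonce: bytes) -> bytes:
    """Stand-in for the chip's signature over the register (assumption: HMAC
    replaces the real asymmetric signature to keep the sketch stdlib-only)."""
    return hmac.new(device_key, register + nonce, hashlib.sha256).digest()


def verifier_accepts(device_key: bytes, expected: bytes, nonce: bytes,
                     reported: bytes, signature: bytes) -> bool:
    """The remote party checks the signature, then checks your choices."""
    signed_ok = hmac.compare_digest(quote(device_key, reported, nonce), signature)
    return signed_ok and hmac.compare_digest(reported, expected)
```

A machine running the approved chain passes. A machine whose owner swapped in a different OS produces a different register value and gets refused, even though nothing is wrong with it.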

This process, called "remote attestation," is generally billed as a way to identify and block computers that have been compromised by malware, or to identify gamers who are running cheats and refuse to play with them. But inevitably it turns into a way to refuse service to computers that have privacy blockers turned on, or are running stream-ripping software, or whose owners are blocking ads:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai

After all, a system that treats the device's owner as an adversary is a natural ally for the owner's other, human adversaries. The rubric for treating the owner as an adversary focuses on the way that users can be fooled by bad people with bad programs. If your computer gets taken over by malicious software, that malware might intercept queries from your antivirus program and send it false data that lulls it into thinking your computer is fine, even as your private data is being plundered and your system is being used to launch malware attacks on others.

These separate, non-user-accessible, non-updateable secure systems serve as a nub of certainty, a remote fortress that observes and faithfully reports on the interior workings of your computer. This separate system can't be user-modifiable or field-updateable, because then malicious software could impersonate the user and disable the security chip.

It's true that compromised computers are a real and terrifying problem. Your computer is privy to your most intimate secrets and an attacker who can turn it against you can harm you in untold ways. But the widespread redesign of our computers to treat us as their enemies gives rise to a range of completely predictable and – I would argue – even worse harms. Building computers that treat their owners as untrusted parties is a system that works well, but fails badly.

First of all, there are the ways that trusted computing is designed to hurt you. The most reliable way to enshittify something is to supply it over a computer that runs programs you can't alter, and that rats you out to third parties if you run counter-programs that disenshittify the service you're using. That's how we get inkjet printers that refuse to use perfectly good third-party ink and cars that refuse to accept perfectly good engine repairs if they are performed by third-party mechanics:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

It's how we get cursed devices and appliances, from the juicer that won't squeeze third-party juice to the insulin pump that won't connect to a third-party continuous glucose monitor:

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

But trusted computing doesn't just create an opaque veil between your computer and the programs you use to inspect and control it. Trusted computing creates a no-go zone where programs can change their behavior based on whether they think they're being observed.

The most prominent example of this is Dieselgate, where auto manufacturers murdered hundreds of people by gimmicking their cars to emit illegal amounts of NOx. Key to Dieselgate was a program that sought to determine whether it was being observed by regulators (it checked for the telltale signs of the standard test-suite) and changed its behavior to color within the lines.

Software that is seeking to harm the owner of the device that's running it must be able to detect when it is being run inside a simulation, a test-suite, a virtual machine, or any other hallucinatory virtual world. Just as Descartes couldn't know whether anything was real until he assured himself that he could trust his senses, malware is always questing to discover whether it is running in the real universe, or in a simulation created by a wicked god:

https://pluralistic.net/2022/07/28/descartes-was-an-optimist/#uh-oh

That's why mobile malware uses clever gambits like periodically checking for readings from your device's accelerometer, on the theory that a virtual mobile phone running on a security researcher's test bench won't have the fidelity to generate plausible jiggles to match the real data that comes from a phone in your pocket:

https://arstechnica.com/information-technology/2019/01/google-play-malware-used-phones-motion-sensors-to-conceal-itself/

Sometimes this backfires in absolutely delightful ways. When the Wannacry ransomware was holding the world hostage, the security researcher Marcus Hutchins noticed that its code made reference to a very weird website: iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com. Hutchins stood up a website at that address and every Wannacry-infection in the world went instantly dormant:

https://pluralistic.net/2020/07/10/flintstone-delano-roosevelt/#the-matrix

It turns out that Wannacry's authors were using that ferkakte URL the same way that mobile malware authors were using accelerometer readings – to fulfill Descartes' imperative to distinguish the Matrix from reality. The malware authors knew that security researchers often ran malicious code inside sandboxes that answered every network query with fake data in hopes of eliciting responses that could be analyzed for weaknesses. So the Wannacry worm would periodically poll this nonexistent website and, if it got an answer, it would assume that it was being monitored by a security researcher and it would retreat to an encrypted blob, ceasing to operate lest it give intelligence to the enemy. When Hutchins put a webserver up at iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com, every Wannacry instance in the world was instantly convinced that it was running on an enemy's simulator and withdrew into sulky hibernation.
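The decision described above fits in a few lines. This is a toy model, not Wannacry's actual code: the three probe functions are hypothetical stand-ins for "what the network says back," and nothing here touches a real network.

```python
from typing import Callable

KILL_SWITCH_DOMAIN = "iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com"


def should_go_dormant(domain_answers: Callable[[str], bool]) -> bool:
    """If a domain that shouldn't exist answers, assume we're in a sandbox."""
    return domain_answers(KILL_SWITCH_DOMAIN)


def real_world_before_registration(domain: str) -> bool:
    """Hypothetical: the real internet, where the gibberish domain is unregistered."""
    return False


def analysis_sandbox(domain: str) -> bool:
    """Hypothetical: a researcher's fake network that answers every query."""
    return True


def real_world_after_registration(domain: str) -> bool:
    """Hypothetical: the real internet once someone stands up the site."""
    return domain == KILL_SWITCH_DOMAIN
```

The check can't tell the second and third worlds apart, which is the whole joke: once the domain answers for real, reality looks exactly like the Matrix.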

The arms race to distinguish simulation from reality is critical and the stakes only get higher by the day. Malware abounds, even as our devices grow more intimately woven through our lives. We put our bodies into computers – cars, buildings – and computers inside our bodies. We absolutely want our computers to be able to faithfully convey what's going on inside them.

But we keep running as hard as we can in the opposite direction, leaning harder into secure computing models built on subsystems in our computers that treat us as the threat. Take UEFI, the ubiquitous security system that observes your computer's boot process, halting it if it sees something it doesn't approve of. For one thing, this has made installing GNU/Linux and other alternative OSes vastly harder across a wide variety of devices. This means that when a vendor end-of-lifes a gadget, no one can make an alternative OS for it, so off to the landfill it goes.

It doesn't help that UEFI – and other trusted computing modules – are covered by Section 1201 of the Digital Millennium Copyright Act (DMCA), which makes it a felony to publish information that can bypass or weaken the system. The threat of a five-year prison sentence and a $500,000 fine means that UEFI and other trusted computing systems are understudied, leaving them festering with longstanding bugs:

https://pluralistic.net/2020/09/09/free-sample/#que-viva

Here's where it gets really bad. If an attacker can get inside UEFI, they can run malicious software that – by design – no program running on our computers can detect or block. That badware is running in "Ring -1" – a zone of privilege that overrides the operating system itself.

Here's the bad news: UEFI malware has already been detected in the wild:

https://securelist.com/cosmicstrand-uefi-firmware-rootkit/106973/

And here's the worst news: researchers have just identified another set of exploitable UEFI bugs, dubbed Pixiefail:

https://blog.quarkslab.com/pixiefail-nine-vulnerabilities-in-tianocores-edk-ii-ipv6-network-stack.html

Writing in Ars Technica, Dan Goodin breaks down Pixiefail, describing how anyone on the same LAN as a vulnerable computer can infect its firmware:

https://arstechnica.com/security/2024/01/new-uefi-vulnerabilities-send-firmware-devs-across-an-entire-ecosystem-scrambling/

That vulnerability extends to computers in a data-center where the attacker has a cloud computing instance. PXE – the system that Pixiefail attacks – isn't widely used in home or office environments, but it's very common in data-centers.

Again, once a computer is exploited with Pixiefail, software running on that computer can't detect or delete the Pixiefail code. When the compromised computer is queried by the operating system, Pixiefail undetectably lies to the OS. "Hey, OS, does this drive have a file called 'pixiefail?'" "Nope." "Hey, OS, are you running a process called 'pixiefail?'" "Nope."
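Here's a toy illustration of why the OS can't win that conversation. It's a sketch under loud assumptions: `HonestStorage`, `CompromisedFirmware` and `os_scan` are invented names, and real firmware implants are nothing like this simple. The point is only structural: the OS's questions pass through the layer that's lying.

```python
class HonestStorage:
    """Hypothetical stand-in for what is really on the disk."""

    def __init__(self, files):
        self._files = set(files)

    def list_files(self):
        return sorted(self._files)


class CompromisedFirmware:
    """Hypothetical layer beneath the OS that filters what the OS gets to see."""

    def __init__(self, storage, hidden):
        self._storage = storage
        self._hidden = set(hidden)

    def list_files(self):
        # Pass the real answer along, minus anything the implant wants hidden.
        return [f for f in self._storage.list_files() if f not in self._hidden]


def os_scan(lower_layer, suspicious_name):
    """The OS can only ask the layer beneath it; it has no second opinion."""
    return suspicious_name in lower_layer.list_files()
```

Scanning the honest disk finds the file. Scanning through the compromised layer returns "Nope," and nothing running above that layer has any way to check the answer.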

This is a self-destruct switch that's been compromised by the enemy, and which no one on the bridge can de-activate – by design. It's not the first time this has happened, and it won't be the last.

There are models for helping your computer bust out of the Matrix. Back in 2016, Edward Snowden and bunnie Huang prototyped and published source code and schematics for an "introspection engine":

https://assets.pubpub.org/aacpjrja/AgainstTheLaw-CounteringLawfulAbusesofDigitalSurveillance.pdf

This is a single-board computer that lives in an ultraslim shim that you slide between your iPhone's mainboard and its case, leaving a ribbon cable poking out of the SIM slot. This connects to a case that has its own OLED display. The board has leads that physically contact each of the network interfaces on the phone, relaying any data that transits them to the screen so that you can observe the data your phone is sending without having to trust your phone.

(I liked this gadget so much that I included it as a major plot point in my 2020 novel Attack Surface, the third book in the Little Brother series):

https://craphound.com/attacksurface/

We don't have to cede control over our devices in order to secure them. Indeed, we can't ever secure them unless we can control them. Self-destruct switches don't belong on the bridge of your spaceship, and trusted computing modules don't belong in your devices.

I'm Kickstarting the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/17/descartes-delenda-est/#self-destruct-sequence-initiated

Image:

Mike (modified)

https://www.flickr.com/photos/stillwellmike/15676883261/

CC BY-SA 2.0

https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#uefi#owner override#user override#jailbreaking#dmca 1201#schneiers law#descartes#nub of certainty#self-destruct button#trusted computing#secure enclaves#drm#ngscb#next generation secure computing base#palladium#pixiefail#infosec


Text

classic trick


Text

#windows#microsoft windows#linux#logofail#firmware#uefi#attack#malware#virus#software#computers#devices#tech support#technicalsupport#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government


Text

You guys have no clue how much I love Rufus and its absurd levels of error checking.


Text

Microsoft blocks more than 100 Linux bootloaders

Another round of the "Microsoft versus Linux" operating system war

The Windows security update of August 9 is a fresh attempt to reignite the war. The update revoked numerous bootloader signatures that give a computer permission to start various Linux systems. As a result, the Linux partition will no longer boot on any of these systems. We warned of this danger a year ago, when Windows 11 was released.

How can an operating system declare itself the ruler?

To give rootkits and boot viruses no chance, the (or many?) hardware manufacturers agreed that their computers would only start operating systems whose bootloaders have been signed by Microsoft. The magic words are "Secure Boot".

Of course there is a remedy: the magazine c't, for example, explains how to get a Linux system running again despite Microsoft's boot monopoly, but you then have to do without the "Secure Boot" function in the computer's setup.

Our outrage at the fact that Microsoft blacklisted more than 100 Linux bootloaders with the Windows security update of August 9 remains boundless despite this "workaround". With this high-handed step, and without any real reason, hundreds of thousands of people around the world are deprived of their work (the installation) and, more importantly, of their ability to work free from Microsoft's spying tools.

Incidentally: iPad and iPhone owners have long been used to their system being so sealed off that normal data exchange from device to device is practically impossible, or possible only for a few selected folders. Google's Android has likewise closed itself off more with each version. All of these, and Microsoft too, are trying by every means to keep users and their data within their own orbit. Against this, we have been fighting for decades for an open and privacy-protecting operating system such as Linux.

More on this at https://www.heise.de/hintergrund/Bootloader-Signaturen-per-Update-zurueckgezogen-Microsoft-bootet-Linux-aus-7250544.html

Category[21]: Our topics in the press Short link to this page: a-fsa.de/d/3pu

Link to this page: https://www.aktion-freiheitstattangst.org/de/articles/8133-20220903-microsoft-blockiert-ueber-100-linux-bootloader-.htm

#SecureBoot#Microsoft#Windows#Linux#Bootloader#TrustedPlatformModule#TPM#Big5#UEFI#Gleichberechtigung#Diskriminierung#Ungleichbehandlung


Video

youtube

3 essential Windows commands to master: Shut Down, Restart and Ac...


Text

UEFI explained well

UEFI, which stands for "Unified Extensible Firmware Interface," is a standard firmware interface that replaces the BIOS (Basic Input/Output System) in many modern systems, especially personal computers. UEFI provides an interface between the operating system and the computer's firmware during the process of…


Link

#BSI#Datenschutz#DeviceGuard#Gruppenrichtlinien#IT-Grundschutz#LokaleGruppenrichtlinien#PowerShell#Sicherheit#TPM#UEFI#Virtualisierung#Windows10


Text

This is the most mind-boggling tech story I've read in months. At first I suspected an April Fools joke, but the dateline is November 2017.


Text

Revenge of the Linkdumps

Next Saturday (May 20), I’ll be at the GAITHERSBURG Book Festival with my novel Red Team Blues; then on May 22, I’m keynoting Public Knowledge’s Emerging Tech conference in DC.

On May 23, I’ll be in TORONTO for a book launch that’s part of WEPFest, a benefit for the West End Phoenix, onstage with Dave Bidini (The Rheostatics), Ron Deibert (Citizen Lab) and the whistleblower Dr Nancy Olivieri.

If you’ve followed my work for a long time, you’ve watched me transition from a “linkblogger” who posts 5–15 short hits every day to an “essay-blogger” who posts 5–7 long articles/week. I’m loving the new mode of working, but returning to linkblogging is also intensely, unexpectedly gratifying:

https://pluralistic.net/2023/05/02/wunderkammer/#jubillee

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/05/13/four-bar-linkage/#linkspittle

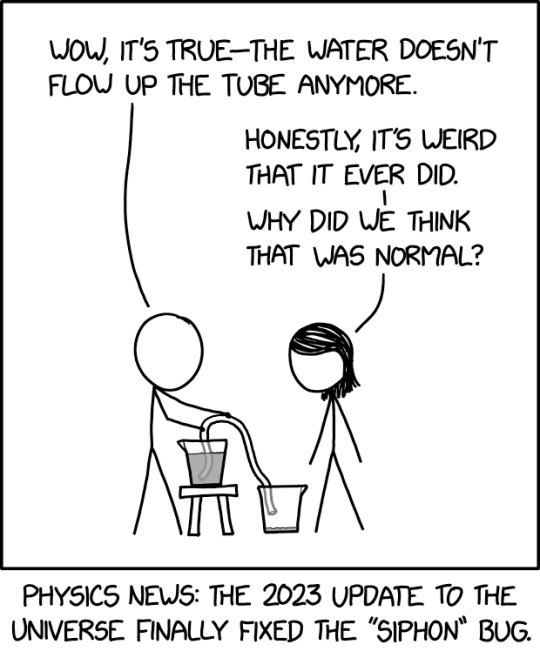

[Image ID XKCD #2775: Siphon. Man: ‘Wow, it’s true — the water doesn’t flow up the tube anymore.’ Woman: ‘Honestly, it’s weird that it ever did. Why did we ever think it was normal?’ Caption: ‘Physics news: the 2023 update to the universe finally fixed the ‘siphon’ bug.’]

My last foray into linkblogging was so great — and my backlog of links is already so large — that I’m doing another one.

Link the first: “Siphon,” XKCD’s delightful, whimsical “physics-how-the-fuck-does-it-work” one-shot (visit the link, the tooltip is great):

https://xkcd.com/2775/

[Image ID: A Dutch safety poster by Herman Heyenbrock, warning about the hazards of careless table-saw use, featuring a hand with two amputated fingers.]

Next is “Hoogspanning,” 50 Watts’s collection of vintage Dutch workplace safety posters, which exhibit that admirable Dutch frankness to a degree that one could mistake for parody, but they’re 100% real, and amazing:

https://50watts.com/Hoogspanning-More-Dutch-Safety-Posters

They’re ganked from Geheugenvannederland (“Memory of the Netherlands”):

https://geheugenvannederland.nl/

While some come from the 1970s, others date back to the 1920s and are likely public domain. I’ve salted several away in my stock art folder for use in future collages.

All right, now that the fun stuff is out of the way, let’s get down to some crunch tech-policy. To ease us in, I’ve got a game for you to play: “Moderator Mayhem,” the latest edu-game from Techdirt:

https://www.techdirt.com/2023/05/11/moderator-mayhem-a-mobile-game-to-see-how-well-you-can-handle-content-moderation/

Moderator Mayhem started life as a card-game that Mike Masnick used to teach policy wonks about the real-world issues with content moderation. You play a mod who has to evaluate content moderation flags from users while a timer ticks down. As you race to evaluate users’ posts for policy compliance, you’re continuously interrupted. Sometimes, it’s “helpful” suggestions from the company’s AI that wants you to look at the posts it flagged. Sometimes, it’s your boss who wants you to do a trendy “visioning” exercise or warning you about a “sensitivity.” Often, it’s angry ref-working from users who want you to re-consider your calls.

The card-game version is legendary but required a lot of organization to play, and the web version (which is better in a mobile browser, thanks to a swipe-left/right mechanic) is something you can pick up in seconds. This isn’t merely highly recommended; I think that one could legitimately refuse to discuss content moderation policies and critiques with anyone who hasn’t played it:

https://moderatormayhem.engine.is/

Or maybe that’s too harsh. After all, tech policy is a game that everyone can play, and more importantly, it’s a game everyone should play. The contours of tech regulation and implementation rub up against nearly every aspect of our lives, and part of the reason it’s such a mess is that the field has been gatekept to shit, turned into a three-way fight between technologists, policy wonks and economists.

Without other voices in the debate, we’re doomed to end up with solutions that satisfy the rarified needs and views of those three groups, a situation that is likely to dissatisfy everyone else.

However. However. The problem is that our technology is nowhere near advanced enough to be indistinguishable from magic (RIP, Sir Arthur). There’s plenty of things everyone wishes tech could do, but it can’t, and wanting it badly isn’t enough. Merely shouting “nerd harder!” at technologists won’t actually get you what you want. And while I’m rattling off cliches: a little knowledge is a dangerous thing.

Which brings me to Ashton Kutcher. Yes, that Ashton Kutcher. No, really. Kutcher has taken up the admirable, essential cause of fighting Child Sex Abuse Material (CSAM, which is better known as child pornography) online. This is a very, very important and noble cause, and it deserves all our support.

But there’s a problem, which is that Kutcher’s technical foundations are poor, and he has not improved them. Instead, he cites technologies that he has a demonstrably poor grasp of to call for policies that turn out both to be ineffective at fighting exploitation and to inflict catastrophic collateral damage on vulnerable internet users.

Take sex trafficking. Kutcher and his organization, Thorn, were key to securing the passage of SESTA/FOSTA, a law that was supposed to fight online trafficking by making platforms jointly liable when they were used to facilitate trafficking:

https://www.engadget.com/2019-05-31-sex-lies-and-surveillance-fosta-privacy.html

At the time, Kutcher argued that deputizing platforms to identify and remove user posts that were part of a sex crime in progress would not inflict collateral damage. Somehow, if the platforms just nerded hard enough, they’d be able to remove sex trafficking posts without kicking off all consensual sex-workers.

Five years later, the verdict is in, and Kutcher was wrong. Sex workers have been deplatformed nearly everywhere, including from the places where workers traded “bad date” lists of abusive customers, which kept them safe from sexual violence, up to and including the risk of death. Street prostitution is way up, making the lives of sex workers far more dangerous, which has led to a resurgence of the odious institution of pimping, a “trade” that was on its way to vanishing altogether thanks to the power of the internet to let sex workers organize among themselves for protection:

https://aidsunited.org/fosta-sesta-and-its-impact-on-sex-workers/

On top of all that, SESTA/FOSTA has made it much harder for cops to hunt down and bust actual sex-traffickers, by forcing an activity that could once be found with a search-engine into underground forums that can’t be easily monitored:

https://www.techdirt.com/2018/07/09/more-police-admitting-that-fosta-sesta-has-made-it-much-more-difficult-to-catch-pimps-traffickers/

Wanting it badly isn’t enough. Technology is not indistinguishable from magic.

A little knowledge is a dangerous thing.

Kutcher, it seems, has learned nothing from SESTA/FOSTA. Now he’s campaigning to ban working cryptography, in the name of ending the spread of CSAM. In March, Kutcher addressed the EU over the “Chat Control” proposal, which, broadly speaking, is a ban on end-to-end encrypted messaging (E2EE):

https://www.brusselstimes.com/417985/ashton-kutcher-spotted-in-the-european-parliament-promoting-childrens-rights

Now, banning E2EE would be a catastrophe. Not only is E2EE necessary to protect people from griefers, stalkers, corporate snoops, mafiosi, etc, but E2EE is the only thing standing between the world’s dictators and total surveillance of every digital communication. Even tiny flaws in E2EE implementations can have grave human rights consequences. For example, a subtle bug in WhatsApp was used by NSO Group to create a cyberweapon called Pegasus that the Saudi royals used to lure Jamal Khashoggi to his grisly murder:

https://www.theguardian.com/world/2021/jul/18/nso-spyware-used-to-target-family-of-jamal-khashoggi-leaked-data-shows-saudis-pegasus

Because the collateral damage from an E2EE ban would be so far-ranging (beyond harms to sex workers, whose safety is routinely disregarded by policy-makers), people like Kutcher can’t propose an outright ban on E2EE. Instead, they have to offer some explanation for how the privacy, safety and human rights benefits of E2EE can be respected even as encryption is broken to hunt for CSAM.

Kutcher’s answer is something called “fully homomorphic encryption” (FHE) which is a theoretical (and enormously cool) way to allow for computing work to be done on encrypted data without decrypting it. When and if FHE is ready for primetime, it will be a revolution in our ability to securely collaborate with one another.
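For a taste of what "computing on encrypted data" means, here is a toy sketch in Python. Loud caveats: this is the Paillier scheme, which is only additively homomorphic, not fully homomorphic, so it is a swapped-in, far simpler technique than FHE. The primes are absurdly small, the names (`encrypt`, `decrypt`, `add_encrypted`) are invented for illustration, and none of it should be mistaken for usable cryptography.

```python
import math
import secrets

# Toy parameters (assumption: tiny primes chosen for readability, not security).
P, Q = 293, 433
N = P * Q
N_SQ = N * N
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAMBDA, -1, N)  # modular inverse; needs Python 3.8+


def encrypt(message: int) -> int:
    """Encrypt an integer 0 <= message < N under the public key N."""
    while True:
        r = secrets.randbelow(N - 1) + 1  # random value in 1..N-1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, message, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(ciphertext: int) -> int:
    """Recover the message using the private values LAMBDA and MU."""
    x = pow(ciphertext, LAMBDA, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiply two ciphertexts to add the hidden messages, with no decryption."""
    return (c1 * c2) % N_SQ
```

Whoever runs `add_encrypted` on encryptions of 20 and 22 never sees either number, yet the key-holder decrypts the result to 42. Addition is all this toy can do; doing arbitrary computation this way is the hard part.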

But FHE is nowhere near the state where it could do what Kutcher claims. It just isn’t, and once again, wanting it badly is not enough. Writing on his blog, the eminent cryptographer Matt Green delivers a master-class in what FHE is, what it could do, and what it can’t do (yet):

https://blog.cryptographyengineering.com/2023/05/11/on-ashton-kutcher-and-secure-multi-party-computation/

As it happens, Green also gave testimony to the EU, but he doesn’t confine his public advocacy work to august parliamentarians. Green wants all of us to understand cryptography (“I think cryptography is amazing and I want everyone talking about it all the time”). Rather than barking “stay in your lane” at the likes of Kutcher, Green has produced an outstanding, easily grasped explanation of FHE and the closely related concept of multi-party computation (MPC).

This is important work, and it exemplifies the difference between simplifying and being simplistic. Good science communicators do the former. Bad science communicators do the latter.

While Kutcher is presumably being simplistic because he lacks the technical depth to understand what he doesn’t understand, technically skilled people are perfectly capable of being simplistic, when it suits their economic, political or ideological goals.

One such person is Geoffrey Hinton, the so-called “father of AI,” who resigned from Google last week, citing the existential risks of “runaway AI” becoming superintelligent and turning on its human inventors. Hinton joins a group of powerful, wealthy people who have made a lot of noise about the existential risk of AI, while saying little or nothing about the ongoing risks of AI to people with disabilities, poor people, prisoners, workers, and other groups who are already being abused by automated decision-making and oversight systems.

Hinton’s nonsense is superbly stripped bare by Meredith Whittaker, the former Google worker organizer turned president of Signal, in a Fast Company interview with Wilfred Chan:

https://www.fastcompany.com/90892235/researcher-meredith-whittaker-says-ais-biggest-risk-isnt-consciousness-its-the-corporations-that-control-them

The whole thing is incredible, but there’s a few sections I want to call to your attention here, quoting Whittaker verbatim, because she expresses herself so beautifully (sci-comms done right is a joy to behold):

I think it’s stunning that someone would say that the harms [from AI] that are happening now — which are felt most acutely by people who have been historically minoritized: Black people, women, disabled people, precarious workers, et cetera — that those harms aren’t existential.

What I hear in that is, “Those aren’t existential to me. I have millions of dollars, I am invested in many, many AI startups, and none of this affects my existence. But what could affect my existence is if a sci-fi fantasy came to life and AI were actually super intelligent, and suddenly men like me would not be the most powerful entities in the world, and that would affect my business.”

I think we need to dig into what is happening here, which is that, when faced with a system that presents itself as a listening, eager interlocutor that’s hearing us and responding to us, that we seem to fall into a kind of trance in relation to these systems, and almost counterfactually engage in some kind of wish fulfillment: thinking that they’re human, and there’s someone there listening to us. It’s like when you’re a kid, and you’re telling ghost stories, something with a lot of emotional weight, and suddenly everybody is terrified and reacting to it. And it becomes hard to disbelieve.

Whittaker sets such a high bar for tech criticism. I had her clarity in mind in 2021, when I collaborated with EFF’s Bennett Cyphers on “Privacy Without Monopoly,” our white-paper addressing the claim that we need giant tech platforms to protect us from the privacy invasions of smaller “rogue” operators:

https://www.eff.org/wp/interoperability-and-privacy

This is a claim that is most often raised in relation to Apple and its App Store model, which is claimed to be a bulwark against commercial surveillance. That claim has some validity: after all, when Apple added a one-click surveillance opt-out to iOS, its mobile OS, 96% of users clicked the “don’t spy on me” button. Those clicks cost Facebook $10b in just the following year. You love to see it.

But Apple is a gamekeeper-turned-poacher. Even as it was blocking Facebook’s surveillance, it was conducting its own, nearly identical, horrifyingly intrusive surveillance of every iOS user, for the same purpose as Facebook (ad targeting) and lying about it:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Bennett and I couldn’t have asked for a better example of the point we make in “Privacy Without Monopoly”: the thing that stops companies from spying on you isn’t their moral character, it’s the threat of competition and/or regulation. If you can modify your device in ways that cost its manufacturer money (say, by installing an alternative app store), then the manufacturer has to earn your business every day.

That might actually make them better — and if it doesn’t, you can switch. The right way to make sure the stuff you install on your devices respects your privacy is by passing privacy laws — not by hoping that Tim Apple decides you deserve a private life.

Bennett and I followed up “Privacy Without Monopoly” with an appendix that focused on a territory where there is a privacy law: the EU, whose (patchily enforced) General Data Protection Regulation (GDPR) is the kind of privacy law that we call for in the original paper. In that appendix, we addressed the issues of GDPR enforcement:

https://www.eff.org/wp/interoperability-and-privacy#gdpr

More importantly, we addressed the claim that the GDPR crushed competition, by making it harder for smaller (and even sleazier) ad-tech platforms to compete with Google and Facebook. It’s true, but that’s OK: we want competition to see who can respect technology users’ rights — not competition to see who can violate those rights most efficiently:

https://www.eff.org/deeplinks/2021/06/gdpr-privacy-and-monopoly

Around the time Bennett and I published the EU appendix to our paper, I was contacted by the Indian Journal of Law and Technology to see whether I could write something on similar lines, focused on the situation in India. Well, it took two years, but we’ve finally published it: “Securing Privacy Without Monopoly In India: Juxtaposing Interoperability With Indian Data Protection”:

https://www.ijlt.in/post/securing-privacy-without-monopoly-in-india-juxtaposing-interoperability-with-indian-data-protection

The Indian case for interop incorporates the US and EU case, but with some fascinating wrinkles. First, there are the broad benefits of allowing technology adaptation by people who are often left out of the frame when tools and systems are designed. As the saying goes, “nothing about us without us” — the users of technology know more about their needs than any designer can hope to understand. That’s doubly true when designers are wealthy geeks in Silicon Valley and the users are poor people in the global south.

India, of course, has its own highly advanced domestic tech sector, which could be a source of extensive expertise in adapting technologies from US and other offshore tech giants for local needs. India also has a complex and highly contested privacy regime, which is in extreme flux between high court decisions, regulatory interventions, and legislation, both passed and pending.

Finally, there’s India’s long tradition of ingenious technological adaptations, locally called jugaad, roughly equivalent to the English “mend and make do.” While every culture has its own way of celebrating clever hacks, this kind of ingenuity is elevated to an art form in the global south: think of jua kali (Swahili), gambiarra (Brazilian Portuguese) and bricolage (France and its former colonies).

It took a long time to get this out, but I’m really happy with it, and I’m extremely grateful to my brilliant and hardworking research assistants from National Law School of India University: Dhruv Jain, Kshitij Goyal and Sarthak Wadhwa.

I don’t claim that any of the incarnations of the “Privacy Without Monopoly” paper rise to the clarity of the works of Green or Whittaker, but that’s okay, because I have another arrow in my quiver: fiction. For more than 20 years, I’ve written science fiction that tries to make legible and urgent the often dry and abstract concepts I address in my nonfiction.

One issue I’ve been grappling with for literally decades is the implications of “trusted computing,” a security model that uses a second, secure computer, embedded in your device, to observe and report on what your main computer is doing. There are lots of implications for this, both horrifying and amazing.

For example, having a second computer inside your device that watches it is a theoretically unbeatable way of catching malicious software, resolving the conundrum of malware: if you think your computer is infected and can’t be trusted, then how can you trust the antivirus software running on that computer?

Back in 2016, Andrew “bunnie” Huang and Edward Snowden released the “Introspection Engine,” a separate computer that you could install in an iPhone, which would tell you whether it was infected with spyware:

https://www.tjoe.org/pub/direct-radio-introspection/release/2

But while there are some really interesting positive applications for this kind of software, the negative ones — unbeatable DRM and tamper-proof bossware — are genuinely horrifying. My novella “Unauthorized Bread” digs into this, putting blood and sinew into an otherwise dry abstract and skeletal argument:

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

Then there are applications that are somewhere in between, like “remote attestation” (when the secure computer signs a computer-readable description of what your computer is doing so that you can prove things about your computer and its operation to people who don’t trust you, but do trust that secure computer).
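The sign-then-verify shape of remote attestation can be sketched in a few lines of Python. This is a toy under loud assumptions, not how any real enclave works: it swaps in a symmetric HMAC where real hardware uses an asymmetric signature (so that verifiers never hold the secret), and the key, the function names `attest` and `verify`, and the measurement fields are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical secret held by the secure computer. Real enclaves keep an
# asymmetric private key; HMAC is a stand-in so the sketch needs only stdlib.
ENCLAVE_KEY = b"demo-key-not-a-real-secret"


def attest(measurements: dict, key: bytes = ENCLAVE_KEY) -> dict:
    """Return the measurements plus a tag computed over their canonical JSON."""
    payload = json.dumps(measurements, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"measurements": measurements, "tag": tag}


def verify(attestation: dict, key: bytes = ENCLAVE_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    payload = json.dumps(attestation["measurements"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, attestation["tag"])
```

A report produced by `attest` passes `verify` only while its measurements are unchanged and the tag was made with the same key. Everything therefore rests on that key staying secret.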

Remote attestation is the McGuffin of Red Team Blues, my latest novel, a crime-thriller about a cryptocurrency heist. The novel opens with the keys to a secure enclave — the gadget that signs the attestations in remote attestation — going missing.

When Matt Green reviewed Red Team Blues (his first book review!), he singled this out as a technically rigorous and significant plot point, because secure enclaves are designed so that they can’t be updated (if you can update an enclave, then you can update it with malicious software):

https://blog.cryptographyengineering.com/2023/04/24/book-review-red-team-blues/

This means that bugs in secure enclaves can last forever. Worse, if the keys for a secure enclave ever leak, then there’s no way to update all the secure enclaves out there in the world — millions or billions of them — to fix it.

Well, it’s happened.

The keys for the secure enclaves in computers made by Micro-Star International (AKA MSI), a massive manufacturer of work and gaming PCs, have leaked and shown up on the “extortion portal” of a notorious crime gang:

https://arstechnica.com/information-technology/2023/05/leak-of-msi-uefi-signing-keys-stokes-concerns-of-doomsday-supply-chain-attack/

As a security expert quoted by Ars Technica explains, this is a “doomsday scenario.” That’s more or less how it plays in my novel. The big difference between the MSI leak and the hack in my book is that the MSI keys were just sitting on a server, connected to the internet, which wasn’t well-secured.

In Red Team Blues, I went to enormous lengths to imagine a fiendishly complex, incredibly secure scheme for hosting these keys, and then dreamt up a way that the bad guys could defeat it. I toyed with the idea of having the keys leak due to rank incompetence, but I decided that would be an “idiot plot” (“a plot that only works if the characters are idiots”). Turns out, idiot plots may make for bad fiction, but they’re happening around us all the time.

In my real life, I cross a lot of disciplinary boundaries — law, politics, economics, human rights, security, technology. I’m not the world’s leading expert in any of these domains, but I am well-enough informed about each that I’m able to find interesting ways that they fit together in a manner that is relatively rare, and is also (I think) useful.

I admit to sometimes feeling insecure about this — being “one inch deep and ten miles wide” has its virtues, but there’s no avoiding that, say, I know less about the law than a real lawyer, and less about computer science than a real computer scientist.

That insecurity is partly why I’m so honored when I get to talk to experts across multiple disciplines. 2023 was a very good year for this, thanks to University College London. Back in Feb, I was invited to speak as part of UCL Institute of Brand and Innovation Law’s annual series on technology law:

https://www.ucl.ac.uk/laws/events/2023/feb/recording-chokepoint-capitalism-can-it-be-defeated

And next month, I’m giving UCL Computer Science’s annual Peter Kirstein lecture:

https://www.eventbrite.co.uk/e/peter-kirstein-lecture-2023-featuring-cory-doctorow-registration-539205788027

Getting to speak to both the law school and the computer science school within a space of months is hugely gratifying, a real vindication of my theory that the virtues of my breadth make up for the shortcomings in my depth.

I’m getting a similar thrill from the domain experts who’ve been reviewing Red Team Blues. This week, Maria Farrell posted her Crooked Timber review, “When crypto meant cryptography”:

https://crookedtimber.org/2023/05/11/when-crypto-meant-cryptography/

Farrell is a brilliant technology critic. Her work on “prodigal tech bros” is essential:

https://conversationalist.org/2020/03/05/the-prodigal-techbro/

So her review means a lot to me in general, but I was overwhelmed to read her describe how Red Team Blues taught her to “read again for joy” after long covid “completely scrambled [her] brain.”

That meant a lot personally, but her review is even more gratifying when it gets into craft questions, like when she praises the descriptions as “so interesting and sociologically textured.” I love her description of the book as “Dickensian”: “it shoots up and down the snakes and ladders of San Francisco’s gamified dystopia of income inequality, one moment whizzing up the ear-poppingly fast elevator to a billionaire’s hardened fortress, the next sleeping under a bridge in a homeless encampment.”

And then, this kicker: “it’s a gorgeous rejection of the idea that long-form fiction is about individual subjectivity and the interior life. It’s about people as pinballs. They don’t just reveal things about the other objects they hit; their constant action and reaction reveals the walls that hold them all in.”

Likewise, I was thrilled with Peter Watts’s review on his “No Moods, Ads or Cutesy Fucking Icons” blog:

https://www.rifters.com/crawl/?p=10578

Peter is a brilliant sf writer and worldbuilder, an accomplished scientist, and one of the world’s most accomplished ranters. He’s had more amazing ideas than I’ve had hot breakfasts:

https://locusmag.com/2018/05/cory-doctorow-the-engagement-maximization-presidency/

His review says some very nice and flattering things about me and my previous work, which is always great to read, especially for anyone with a chronic case of impostor syndrome. But what really mattered was the way he framed how I write villains: “The villains of Cory’s books aren’t really people; they’re systems. They wear punchable Human faces but those tend to be avatars, mere sock-puppets operated by the institutions that comprise the real baddies.”

One could read that as a critique, but coming from Peter, it’s praise — and it’s praise that gets to the heart of my worldview, which is that our biggest problems are systemic, not individual. The problem of corporate greed isn’t just that CEOs are monsters who don’t care who they hurt — it’s that our system is designed to let them get away with it. Worse, system design is such that the CEOs who aren’t monsters are generally clobbered by the ones who are.

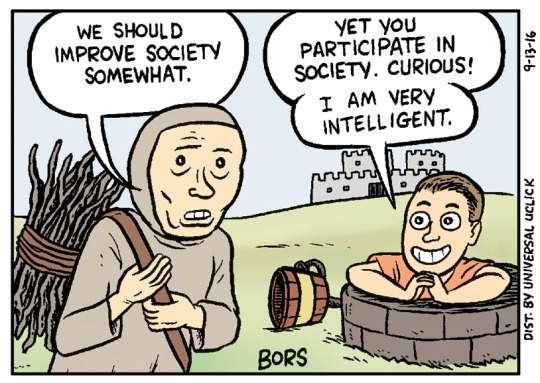

So much of our outlook is grounded in the moral failings or virtues of individuals. Tim Apple will keep our data safe, so we should each individually decide to reward him by buying his phones. If Tim Apple betrays us, we should “vote with our wallets” by buying something else. If you care about the climate, you should just stop driving. If there’s no public transit, well, then maybe you should, uh, dig a subway?

[Image ID: Matt Bors’s classic Mr Gotcha panel, in which a medieval peasant says ‘We should improve society somewhat,’ and Mr Gotcha replies, ‘Yet you participate in society. Curious! I am very smart.’]

This is the mindset Matt Bors skewers so expertly with his iconic Mr Gotcha character: “Yet you participate in society. Curious! I am very smart”:

https://thenib.com/mister-gotcha/

(Which reminds me, I am halfway through Bors’s unbelievably, fantastically, screamingly awesome graphic novel “Justice Warriors,” which turns the neoliberal caveat-emptor/personal-responsibility brain-worm into the basis for possibly the greatest superhero comic of all time:)

https://www.mattbors.com/books

Watts finishes his review with:

I’ve never fully come to terms with the general decency of Cory’s characters. Doctorow the activist lives in the trenches, fighting those who make their billions trading the details of our private lives, telling us that they own what we’ve bought, surveilling us for the greater good and even greater profits. He’s spent more time facing off against the world’s powerful assholes than I ever will. He knows how ruthless they are. He knows, first-hand, how much of the world is clenched in their fists. By rights, his stories should make mine look like Broadway musicals.

And yet, Doctorow the Author is — hopeful. The little guys win against overwhelming odds. Dystopias are held at bay. Even the bad guys, in defeat, are less likely to scorch the earth than simply resign with a show of grudging respect for a worthy opponent.

I often get asked by readers — especially readers of Pluralistic, which is heavy on awful scandals and corruption — how I keep going. Watts has the answer:

Maybe it’s a fundamental difference in outlook. I’ve always regarded humans as self-glorified mammals, fighting endless and ineffective rearguard against their own brain stems; Cory seems to see us as more influenced by the angels of our better natures. Or maybe — maybe it’s not just his plots that are meant to be instructional. Maybe he’s deliberately showing us how we could behave as a species, in the same way he shows us how to fuck with DRM or foil face-recognition tech. Maybe it’s not that he subscribes to some Pollyanna vision of what we are; maybe he’s showing us what we could be.

Got it in one, Peter.

And…

It’s also about what happens if we don’t get better.

Writing on his “Economics From the Top Down” blog, Blair Fix — a heterodox economist and sharp critic of oligarchy — publishes a Red Team Blues review that nails the “or else” in my books, and does it with graphs:

https://economicsfromthetopdown.com/2023/05/13/red-team-blues-cory-doctorows-anti-finance-thriller/

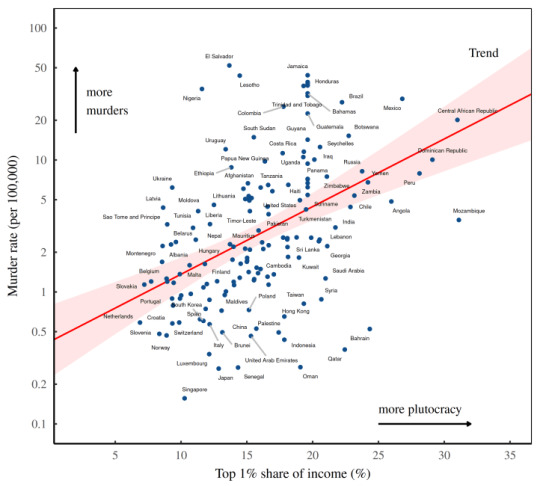

Fix surfaces the latent point in my work that inequality is destabilizing — that spectacular violence is downstream of making a society that has nothing to offer for the majority of us. As Marty Hench, the 67 year old forensic accountant protagonist of Red Team Blues says,

Finance crime is a necessary component of violent crime. Even the most devoted sadist needs a business model, or he will have to get a real job.

[Image ID: A chart labeled, ‘With more plutocracy comes more murder. As countries become more unequal (horizontal axis), their murder rates go up (vertical axis).’]

Fix agrees, and shows us that murders go up with inequality.

https://economicsfromthetopdown.com/2023/05/13/red-team-blues-cory-doctorows-anti-finance-thriller/#sources-and-methods

Which is why, while the average private eye is a kind of “cop who gets to bend the rules of policing,” Hench is “a kind of uber IRS agent who gets to work in ‘sneaky ways that aren’t available to the taxman.’”

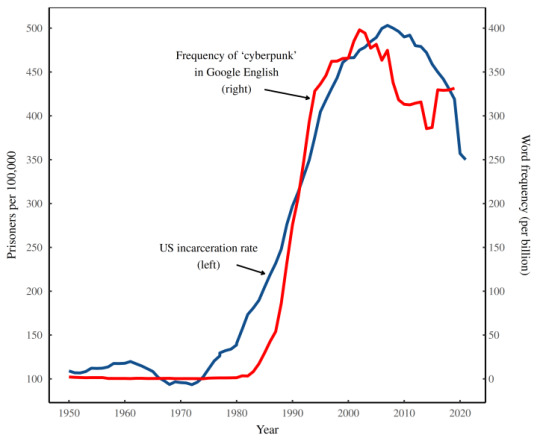

[Image ID: A chart labeled, ‘Was the US prison state the inspiration for cyberpunk? The term ‘cyberpunk’ (which describes a genre of dystopian science fiction) became popular in tandem with mass incarceration in the US. It’s probably not a coincidence.’]

This observation segues into a fascinating, data-informed look at the way that science fiction reflects our fears and aspirations about wider social phenomena. For example, the popularity of the word “cyberpunk” closely tracks rising incarceration rates.

https://economicsfromthetopdown.com/2023/05/13/red-team-blues-cory-doctorows-anti-finance-thriller/#sources-and-methods

(It’s not a coincidence that the next Marty Hench book, “The Bezzle,” is about prisons and prison-tech; it’s out in Feb 2024:)

https://us.macmillan.com/books/9781250865878/thebezzle

I’m out on tour with Red Team Blues right now, with upcoming stops in the DC area, Toronto, the UK, and then Berlin:

https://craphound.com/novels/redteamblues/2023/04/26/the-red-team-blues-tour-burbank-sf-pdx-berkeley-yvr-edmonton-gaithersburg-dc-toronto-hay-oxford-nottingham-manchester-london-edinburgh-london-berlin/

I’ve just added another Berlin stop, on June 8, at Otherland, Berlin’s amazing sf/f bookstore:

https://twitter.com/otherlandberlin/status/1657082021011701761

I hope you’ll come along! I’ve been meeting a lot of people on this tour who confess that while they’ve read my blogs and essays for years, they’ve never picked up one of my books. If you’re one of those readers, let me assure you, it is not too late!

As you’ve read above, my fiction is very much a continuation of my nonfiction by other means — but it’s also the place where I bring my hope as well as my dismay and anger. I’m told it makes for a very good combination.

If you’re still wavering, maybe this will sway you: the blogging and essays are either free or very low-paid, and they’re heavily subsidized by my fiction. If you enjoy my nonfiction, buying my novels is the best way to say thank you and to ensure a continuing supply of both.

But novels are by no means a dreary duty — fiction is a delight, and after a couple decades at it, I’ve come to grudgingly concede — impostor syndrome notwithstanding — that I’m pretty good at it.

I hope you’ll agree.

Image:

Robert Miller (modified)

https://www.flickr.com/photos/12463666@N03/52721565937

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

Catch me on tour with Red Team Blues in Toronto, DC, Gaithersburg, Oxford, Hay, Manchester, Nottingham, London, and Berlin!

[Image ID: A kitchen junk-drawer, full of junk.]

#illustration#pluralistic#hand-waving#multi-party computation#linkdump#link-blogging#red team blues#uefi#supply chain attacks#xkcd#brain fog#say cyber again#privacy without monopoly#reviews#crypto-wars#inequality#social instability#blair fix#maria farrell#dicksenian#msi#villains#peter watts#global south#bennett cyphers#eff#data protection#content moderation#content moderation at scale#content moderation at scale is impossible

Text

A Beginner's Guide to the BIOS, CMOS, and UEFI

The BIOS and UEFI are firmware, and the CMOS is the small memory chip that holds their settings; all three play important roles in starting a computer. Understanding what each one does can be helpful when troubleshooting problems or making changes to your system.

The BIOS, or Basic Input/Output System, is a piece of firmware that is built into the motherboard of a computer. When you turn on your computer, the BIOS is responsible for initializing the hardware and then searching for a boot device, such as a hard drive or optical drive, to boot the operating system from. The BIOS also runs a power-on self-test (POST) to check that all hardware is functioning correctly before the operating system is loaded.

The CMOS, or Complementary Metal-Oxide Semiconductor, is a chip that stores the BIOS settings, such as the boot sequence and hardware configurations. Unlike the BIOS chip, which is non-volatile and retains its contents even when the power is turned off, the CMOS chip is volatile and requires a constant power source, typically in the form of a battery, to retain its settings.

The successor to the BIOS is UEFI, or Unified Extensible Firmware Interface. Most modern motherboards ship with this newer type of firmware, which has several advantages over the legacy BIOS. One advantage is that UEFI setup screens typically have a more user-friendly graphical interface, making it easier to navigate and change settings. UEFI can also boot faster, offers more advanced security features such as Secure Boot, and can boot from GPT-partitioned drives larger than the roughly 2 TB limit of the MBR scheme used by the legacy BIOS.
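If you are not sure which kind of firmware your own machine booted with, a short Python sketch can help on Linux. It relies on the fact that the Linux kernel exposes a `/sys/firmware/efi` directory only after a UEFI boot. It also works out the drive-size ceiling of the older MBR partition scheme, which stores sector counts in 32 bits. The function names and the `sysfs_root` parameter are hypothetical, chosen for illustration, and the check does not apply to Windows or macOS.

```python
from pathlib import Path


def booted_via_uefi(sysfs_root: str = "/sys") -> bool:
    """On Linux, <sysfs_root>/firmware/efi exists only after a UEFI boot."""
    return (Path(sysfs_root) / "firmware" / "efi").is_dir()


def mbr_max_bytes(sector_size: int = 512) -> int:
    """Largest disk size an MBR partition table can address.

    MBR stores sector counts as 32-bit values, so the ceiling is
    2**32 sectors multiplied by the sector size.
    """
    return (2 ** 32) * sector_size


if __name__ == "__main__":
    mode = "UEFI" if booted_via_uefi() else "legacy BIOS (or not Linux)"
    print(f"Boot mode: {mode}")
    print(f"MBR limit with 512-byte sectors: {mbr_max_bytes() / 2**40:.0f} TiB")
```

With the common 512-byte sector size, the MBR ceiling works out to 2 TiB, which is why larger boot drives need GPT and, in practice, UEFI.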

In summary, the BIOS and UEFI firmware and the CMOS settings memory play different roles in booting and operating the system. Understanding how they work can be helpful when troubleshooting problems or making changes to your system.

#uefitolegacy legacy uefi amnowtech windows11 convertuefitolegacy windowsconfiguration HowtoConvertUEFItoLEGACYWithoutData Loss#uefi#bios#windows bios#windows 10#bios setup

Text

Vulnerability in Acer Laptops Allows Attackers to Disable Secure Boot

By Ionut Arghire on November 29, 2022

A vulnerability impacting multiple Acer laptop models could allow an attacker to disable the Secure Boot feature and bypass security protections to install malware.

Tracked as CVE-2022-4020 (CVSS score of 8.1), the vulnerability was identified in the…

Text

Looking for a way to power a computer on and off remotely to carry out critical or urgent tasks?

Here is a technique that opens up new possibilities for remote work. With Wake-on-LAN, you can power on your computer over its network connection. You will, however, need to enable Wake-on-LAN in the BIOS first.

How does Wake-on-LAN work on Windows?

Being able to power on a PC remotely is not magic. It is made possible by the Wake-on-LAN networking standard, which most Ethernet connections support.

When enabled, Wake-on-LAN lets a computer, or even your smartphone, send a magic packet, the equivalent of an "ON signal", to another PC on the same local network.
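To make the magic packet concrete, here is a minimal Python sketch. The packet layout (six 0xFF bytes followed by the target's MAC address repeated 16 times) is the standard Wake-on-LAN format. The function names, the broadcast address 255.255.255.255, and UDP port 9 are assumptions chosen for illustration, and your network may need different values.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a magic packet: 6 bytes of 0xFF, then the MAC address 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected a 48-bit MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP on the local network."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Broadcasting must be explicitly allowed on the socket.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))
```

Calling `send_magic_packet("AA:BB:CC:DD:EE:FF")` with the sleeping PC's real MAC address should wake it, provided Wake-on-LAN is enabled in its firmware and network adapter settings. A magic packet can only power a machine on; shutting it down remotely needs a separate tool.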

How do you enable Wake-on-LAN on Windows?

Wake-on-LAN must be enabled in the PC motherboard's BIOS. To reach the firmware settings from Windows, follow these steps:

First, open Windows Settings.

Next, click Update & Security.

Then click Recovery.

In the "Advanced startup" section, click the Restart now button.

Now click Troubleshoot -> Advanced options.

Click the UEFI Firmware Settings option.

Finally, click the Restart button.

In the firmware settings, go to the power options and enable the "Wake on LAN" (WoL) feature. The feature may have a slightly different name, since each manufacturer builds its firmware differently.

The best tools for powering on a PC remotely

Here are some of the best Wake-on-LAN tools that can power a PC on and off remotely.

AnyDesk

AnyDesk is more than a Wake-on-LAN tool: it lets you access another device remotely to power it on, shut it down, or share screens.

Above all, it lets you connect directly to another device and work on it (while giving you the option of keeping one of the two screens off, so that nobody can see what you are doing).

AnyDesk is compatible with many operating systems, so you can switch from Mac to Windows in the blink of an eye. It is perfect for sharing files without going through the cloud.

And all of it is secured with encryption keys. The professional versions are paid, but a free license is available for personal use.

EMCO Wake-ON-LAN

Why bother looking up the MAC addresses of the computers you want to control remotely when a tool can record them automatically? That is what EMCO Wake-ON-LAN offers, and it can even retrieve them from computers on the network that are already powered off!

It also lets you schedule automatic, recurring runs on a daily, weekly, or monthly basis. The software is fairly intuitive, even though it has many windows, each opening up further possibilities.

The free version is limited to five machines, however (the paid version is very useful for larger networks). While this tool is dedicated to waking machines, EMCO's site also offers a companion tool for shutting computers down remotely, configured the same way as the first: EMCO Remote Shutdown.

Manage Engine

Manage Engine is one of the easiest tools to use in this selection.

Once you have gone into the BIOS to enable the power-on settings for the PCI card, all you need are the MAC and IP addresses of the computers you want to manage, and then everything (or nearly everything) becomes possible.

The tool can automate waking machines (computers as well as network devices) once you give it their details. Beyond scheduled operations, you can of course trigger a manual start. But where it gets most interesting is that it lets you act on a single device or on several at once. That way, everyone will find their computer up and running as soon as they get to their desk in the morning!

AquilaWOL

AquilaWOL is a powerful tool that can help strengthen remote access or computer control capabilities. But it does not stop at those already handy functions. It provides event logs and ways to scan connected computers, and gives you access to system tray notifications.

It even lets you handle a few network routing issues, as well as scan the network for MAC and IP addresses.

Schedulable, usable with multiple computers, and compatible (for some actions) with Linux, AquilaWOL makes itself very useful. There is even an emergency button that shuts down every computer on the network with a single click. Open source and translated into French, this tool is often recommended for its ease of use and versatility.

Wake on LAN for Android

Most Wake-on-LAN tools require a second computer to wake the first. There is, however, an Android app that lets you do the same thing from a phone or tablet. It is essential, though, that users set up Wake-on-LAN support on their network or computer.

The Wake on LAN app can automate waking computers through Tasker or Llama broadcasts.

It may, however, work better over an Ethernet connection than over Wi-Fi. You will need to test the options yourself.

Text

shim bootloader rated "high" risk

Update now available

The open source Linux bootloader "shim" contains a vulnerability that lets attackers inject their own code. The advisory CVE-2023-40547 (CVSS 8.3, risk "high") describes the danger that such a "man in the middle" attack can write to memory outside the allocated region (an out-of-bounds write primitive). This can compromise the entire system.

The sole purpose of shim, a "trivial EFI application", is to let other trusted operating systems start with Secure Boot on off-the-shelf Windows computers. With Secure Boot and its EFI/UEFI bootloaders, Microsoft continues to make it hard for other systems to be installed alongside Windows.

An update to shim 15.8 fixes the vulnerability and also repairs further weaknesses. Only in December 2023, a Secure Boot flaw at the BIOS/UEFI level had become known under the name "LogoFAIL".

More at https://www.heise.de/news/Bootloader-Luecke-gefaehrdet-viele-Linux-Distributionen-9624201.html

Category[21]: Our topics in the press. Short link to this page: a-fsa.de/d/3yU

Link to this page: https://www.aktion-freiheitstattangst.org/de/articles/8678-20240210-shim-bootloader-mit-risiko-hoch.html

#Microsoft#Windows#Diskriminierung#Ungleichbehandlung#OpenSource#Linux#Bootloader#UEFI#Cyberwar#Hacking#Trojaner#Verbraucherdatenschutz#Datenschutz#Datensicherheit#Datenpannen
