#AI Face Recognition solutions


Empowering Students and Teachers: The Role of Face Recognition Attendance System in Educational Institutions

Introduction:

The education sector is undergoing a transformative shift, integrating advanced technologies to streamline operations and improve learning outcomes. In India alone, there are over 14 lakh (1.4 million) schools and colleges, yet a significant number still struggle to provide efficient educational facilities. According to the Ministry of Education, only a fraction of institutions have access to advanced technological tools, leaving many reliant on traditional, manual processes.

Educational institutions often face administrative challenges that impact the quality of education. One such challenge is attendance management, which remains a time-consuming, error-prone manual task in many schools and colleges. The inefficiency of manual attendance can lead to reduced instructional time, administrative burdens, and data inaccuracies.

With the rise of smart technology in education, the Face Recognition Attendance System (FRAS) is revolutionizing the way institutions operate. Using biometric facial recognition technology, these systems verify the identity of students and faculty, automatically recording attendance data into a database. This automation not only reduces human errors but also enhances the security and efficiency of institutional operations.

The Growing Adoption of Facial Recognition Technology

Facial recognition technology has witnessed a significant rise in adoption worldwide. According to MarketsandMarkets, the global facial recognition market size is projected to grow from $5.43 billion in 2022 to $6.28 billion in 2023, with a compound annual growth rate (CAGR) of 15.5%. Another report by Statista estimates that by 2028, the market will reach $12.92 billion, indicating increased reliance on AI-driven identification systems across industries, including education.
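As a quick sanity check on these figures, the compound annual growth rate relates a start value, an end value, and a number of years as CAGR = (end / start) ** (1 / years) - 1. The minimal Python sketch below applies that standard formula to the numbers quoted above. The function names are illustrative. Pairing the 2023 figure with the 2028 figure is an assumption made only for the arithmetic, since the two come from different reports.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


# Market sizes in billions of USD, as quoted in the text.
one_year = cagr(5.43, 6.28, 1)    # 2022 -> 2023
five_year = cagr(6.28, 12.92, 5)  # 2023 -> 2028 (figures from different reports)
print(f"2022->2023 growth: {one_year:.1%}")
print(f"2023->2028 CAGR:   {five_year:.1%}")
print(f"$6.28B at 15.5% for 5 years: ${project(6.28, 0.155, 5):.2f}B")
```

Compounding $6.28 billion at 15.5% for five years gives roughly $12.9 billion, so the quoted 15.5% rate is consistent with the 2028 projection. The single 2022-to-2023 step works out to about 15.7%.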

What is Facial Recognition & How Does It Work?

Facial recognition is a biometric technology that identifies individuals by analyzing unique facial features. It's widely used in various applications, from unlocking smartphones to security systems, and is now being adopted in educational settings for efficient attendance management.

How Does It Work?

The process involves three primary steps:

Detection: Capturing an image of the individual's face.

Analysis: Mapping unique facial features to create a digital template.

Recognition: Comparing the digital template against a database to verify identity.
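The recognition step can be illustrated with a minimal sketch. It assumes the analysis step has already turned each face into a fixed-length numeric template (an embedding). Producing those vectors requires a trained model, which is out of scope here, so the vectors below are made up. The sketch compares a probe template against an enrolled gallery using cosine similarity and accepts the best match only if it clears a threshold. The 0.8 threshold and all names are hypothetical.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two template vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(probe: list[float], gallery: dict[str, list[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Return the ID of the closest enrolled template, or None if none clears the threshold."""
    best_id, best_score = None, threshold
    for person_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id


# Hypothetical 4-dimensional templates; real systems use much longer vectors.
gallery = {
    "student_001": [0.9, 0.1, 0.3, 0.2],
    "student_002": [0.1, 0.8, 0.2, 0.5],
}
print(identify([0.88, 0.12, 0.31, 0.18], gallery))  # closest to student_001
print(identify([0.0, 0.0, 1.0, 0.0], gallery))      # below threshold -> None
```

Raising the threshold trades fewer false matches for more missed recognitions. Where to set it is a deployment decision, not a fixed value.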

By utilizing Automatic Facial Recognition (AFR), attendance tracking becomes seamless, minimizing human intervention while ensuring accuracy and security.

Embracing the Future: Why Educational Institutions Thrive with Facial Recognition

The Ed-Tech sector is experiencing significant growth, with institutions worldwide adopting smart attendance systems to enhance operational efficiency. A facial recognition attendance system simplifies attendance tracking and streamlines administrative tasks, allowing educators to focus more on teaching.

By leveraging AI-driven attendance solutions, institutions can achieve real-time tracking, reduce administrative burdens, and improve security, thereby fostering a smarter and more efficient educational environment.


Discover the Astonishing Advantages of Face Recognition Attendance Systems

Adopting facial recognition in educational institutions offers numerous benefits, including automation, enhanced security, and improved operational efficiency. For schools and universities, deploying it for attendance can be one of the best decisions an institution makes, because it helps in several ways:

7 Benefits of Face Recognition Attendance Systems for Schools & Universities

#1. Real-Time Attendance Tracking

Automated facial recognition enables real-time attendance tracking, ensuring accurate data collection without manual intervention. Institutions can access attendance records remotely, facilitating better management and transparency.

#2. Increased Teaching Efficiency

On average, teachers spend 15–20% of class time on administrative tasks such as taking attendance. Automating this process allows educators to focus more on teaching, leading to better student engagement and learning outcomes.

#3. Cost-Effective Administration

Hiring administrative staff for attendance management can be costly. By automating attendance tracking, institutions can save on administrative expenses, increasing their overall return on investment (ROI).

#4. Enhanced Accuracy and Data Security

Manual attendance methods are prone to errors, manipulation, and proxy attendance. With AI-powered facial recognition, accuracy is significantly improved, ensuring genuine attendance records and reducing discrepancies.

#5. Improved Student Safety and Parental Assurance

Beyond attendance tracking, facial recognition enhances student safety by monitoring access to school premises. Parents can receive real-time updates about their child’s attendance, ensuring greater transparency and security.

#6. Streamlined Institutional Workflow

Beyond student-wise attendance, administrative staff handle many other important tasks: class, task, and course management; payroll calculation for college staff and administrative employees; tracking the exact working hours of in-house and guest lecturers; planning course durations; and more. A touchless face recognition attendance system can automate much of this work and generate reports along the way.

As a result, the attendance system helps teachers and staff to focus on what’s important.

#7. Automated Report Generation

Traditionally, attendance reports required manual compilation, making it difficult to track patterns and trends. Facial recognition systems automate report generation, providing instant insights into student attendance trends, faculty punctuality, and academic performance correlations.
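Once each recognition event is stored as a record, report generation is largely a matter of aggregation. The minimal sketch below assumes a hypothetical record format of (student ID, date, present flag) and an illustrative 75% attendance cut-off; neither comes from any particular product. It computes per-student attendance rates and flags students who fall below the cut-off.

```python
from collections import defaultdict
from datetime import date

# Hypothetical attendance log: (student_id, class_date, present)
records = [
    ("S1", date(2024, 7, 1), True),
    ("S1", date(2024, 7, 2), True),
    ("S1", date(2024, 7, 3), False),
    ("S1", date(2024, 7, 4), True),
    ("S2", date(2024, 7, 1), True),
    ("S2", date(2024, 7, 2), False),
    ("S2", date(2024, 7, 3), False),
    ("S2", date(2024, 7, 4), True),
]


def attendance_rates(rows):
    """Map each student ID to the fraction of recorded sessions they attended."""
    attended = defaultdict(int)
    total = defaultdict(int)
    for student_id, _day, present in rows:
        total[student_id] += 1
        attended[student_id] += int(present)
    return {sid: attended[sid] / total[sid] for sid in total}


def flag_low_attendance(rates, cutoff=0.75):
    """Return the sorted IDs of students whose attendance rate is below the cut-off."""
    return sorted(sid for sid, rate in rates.items() if rate < cutoff)


rates = attendance_rates(records)
for sid, rate in sorted(rates.items()):
    print(f"{sid}: {rate:.0%}")
print("Below cut-off:", flag_low_attendance(rates))
```

The same grouping approach extends to subject-wise or faculty-wise reports by changing the key used for aggregation.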

The increasing adoption of AI and biometric technology in education is paving the way for smart campuses. According to Research and Markets, the global smart education market is expected to reach $702.6 billion by 2028, driven by the demand for advanced learning solutions and automation.

Join Us in Creating a Brilliant Smart Face Recognition Attendance System: Let’s Shape the Future Together!

At Rydot Infotech, we are committed to transforming the education sector with cutting-edge AI-powered attendance solutions that enhance efficiency, security, and accuracy. With over five years of experience, our dedicated team has tackled some of the most complex challenges in education technology and delivered smart education solutions that empower institutions to streamline administrative workflows effortlessly.

Our flagship application, TURNOUT, is a revolutionary face recognition attendance system designed to automate attendance tracking, strengthen student security with AI, and optimize school management. By combining deep-learning attendance monitoring with biometric student tracking, our solution delivers accurate, real-time attendance management, eliminating manual inefficiencies and improving overall productivity.

A Dream-Come-True Application with Extraordinary Features Such As:

Student & Department Management

Class & Lecture Management

Leave & Shift Management

Course Management

Library, Seminar Hall & Computer Lab Attendance Management

Comprehensive Reports (Subject-wise, Student-wise, etc.)

Real-Time Analytics for Informed Decision-Making

Our cloud-based TURNOUT Time & Attendance Solution integrates seamlessly with biometric attendance systems in education, providing a secure school attendance system that also supports visitor management and access control. With contactless student identification and automated attendance, the platform eliminates administrative bottlenecks and improves institutional efficiency.

Whether you're a school, college, university, or enterprise, our AI attendance system adapts to your needs, ensuring seamless integration, reduced errors, and real-time attendance tracking.

Join the growing number of institutions embracing education technology innovations to revolutionize attendance management! Schedule a demo today or reach out to us at [email protected] to discover how TURNOUT can transform your institution.

#AI attendance system#facial recognition in education#automated attendance solutions#face recognition attendance system#AI attendance tracking#automated attendance system#biometric attendance education#facial recognition technology in schools#smart education solutions#student security AI#attendance automation#education technology trends#real-time attendance tracking#secure school attendance systems


Top Challenges in Workforce Management: Is Your HR Team Prepared?

From tracking attendance to engaging employees and ensuring compliance, managing a workforce has never been more complex. But what if there were a smarter way to deal with these challenges?

Our latest article explores the biggest HR pain points and how Praesentia, our AI-powered workforce management solution, is transforming the way businesses operate.

Discover how Praesentia addresses:

✔ Accurate attendance tracking with biometrics

✔ Seamless shift and remote workforce management

✔ Real-time analytics for informed decision-making

✔ Secure visitor and access management

Read the full article to learn how Praesentia can redefine your HR strategy and empower your workforce: https://www.linkedin.com/pulse/top-challenges-workforce-management-how-praesentia-tvyec

#technology#workforce management#hrtech#hrms software#ai#future technology#biometric solutions#time and attendance#attendance system#face recognition attendance system


Embracing the Future: Facial Recognition in Corporate Offices

In today’s technologically advanced world, corporate offices are continually seeking innovative solutions to enhance efficiency, security, and employee satisfaction. Facial recognition technology has emerged as a promising tool, especially in the domains of attendance tracking, time and attendance management, and payroll processing. Here, we explore current trends, challenges, functionality, benefits, and solutions related to facial recognition systems in corporate settings.

Current Trends in Facial Recognition Technology

Contactless Solutions: With heightened awareness of hygiene, contactless systems are in demand. Facial recognition offers a hands-free method to authenticate employees, minimizing physical contact.

Improved Accuracy: Advanced machine learning algorithms are continuously enhancing the accuracy and reliability of facial recognition systems, reducing errors and increasing trust.

Masked-Face Recognition: The ability to recognize faces wearing masks has become crucial, especially post-pandemic. Systems are being adapted to identify individuals accurately even when their faces are partially covered.

Anti-Spoofing Measures: To counteract spoofing attempts using photos or videos, modern systems incorporate advanced anti-spoofing technologies, ensuring only live faces are recognized.

Privacy and Regulation: As privacy concerns rise, systems are being developed to comply with stringent data protection regulations like GDPR and CCPA, ensuring responsible usage of biometric data.

Integration with AI and Analytics: Facial recognition is being integrated with AI to provide insightful analytics on employee attendance, punctuality, and even mood analysis, aiding in better management decisions.

Cloud-Based Solutions: Cloud-based facial recognition systems offer scalability and remote accessibility, making it easier to manage attendance data across multiple locations.

Customization and Flexibility: Businesses are seeking customizable solutions that can be tailored to their specific needs, ensuring seamless integration with existing systems.

Challenges and Issues in Corporate Offices

Privacy Concerns: Employees may be wary of how their biometric data is used and stored, raising concerns about surveillance and misuse of personal information.

Legal and Regulatory Compliance: Navigating the complex landscape of biometric data laws and ensuring compliance can be challenging for organizations.

Accuracy in Varied Conditions: Ensuring high accuracy in different lighting conditions, angles, and when faces are partially obscured remains a significant challenge.

Security Vulnerabilities: Facial recognition systems can be susceptible to spoofing and hacking, necessitating robust security measures to protect sensitive data.

Integration Complexity: Integrating facial recognition systems with existing HR and payroll software can be complex and may require significant customization.

User Acceptance: Gaining acceptance and trust from employees regarding the use of facial recognition for attendance tracking can be difficult.

Cost of Implementation: The initial cost of deploying facial recognition systems, including hardware and software, can be prohibitive for some organizations.

How Facial Recognition Attendance Systems Work

Enrollment: Employees are enrolled by capturing their facial images, which are then converted into unique faceprints stored in a database.

Capture Attendance: As employees arrive, their faces are scanned by a camera. The system detects and aligns the face for accurate recognition.

Feature Extraction: Key facial features are extracted to create a faceprint that is compared against stored templates.

Matching: The system matches the extracted faceprint with the database to verify the identity.

Attendance Recording: Upon a successful match, the system logs the attendance with a timestamp in the centralized database.

Real-Time Feedback: Employees receive instant feedback confirming their attendance has been recorded.

Data Management: Attendance data is integrated with time and attendance management systems, providing comprehensive records for payroll processing.
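The recording, feedback, and data-management steps can be sketched as follows. This is a minimal illustration, not any vendor's implementation. It assumes matching has already produced an employee ID, and it keeps records in memory instead of a real database. It also treats the first and last recognition of the day as check-in and check-out, which is a common convention but an assumption here. The class and method names are hypothetical.

```python
from datetime import datetime


class AttendanceLog:
    """In-memory stand-in for the centralized attendance database (hypothetical)."""

    def __init__(self):
        # (employee_id, date) -> [first_seen, last_seen]
        self._days = {}

    def record(self, employee_id: str, seen_at: datetime) -> str:
        """Log a successful match with its timestamp and return a feedback message."""
        key = (employee_id, seen_at.date())
        if key not in self._days:
            self._days[key] = [seen_at, seen_at]
            return f"{employee_id}: checked in at {seen_at:%H:%M}"
        first, last = self._days[key]
        self._days[key] = [min(first, seen_at), max(last, seen_at)]
        return f"{employee_id}: attendance updated at {seen_at:%H:%M}"

    def hours_worked(self, employee_id: str, day) -> float:
        """Hours between first and last recognition on a given day (0.0 if absent)."""
        span = self._days.get((employee_id, day))
        if span is None:
            return 0.0
        return (span[1] - span[0]).total_seconds() / 3600


log = AttendanceLog()
print(log.record("E42", datetime(2024, 7, 1, 9, 2)))
print(log.record("E42", datetime(2024, 7, 1, 17, 32)))
print(log.hours_worked("E42", datetime(2024, 7, 1).date()))
```

Keying records by (employee, date) means repeated scans on the same day update the span instead of creating duplicate attendance entries.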

Benefits of Facial Recognition Systems

Automated Attendance Tracking: Reduces administrative overhead by automating the attendance recording process.

Contactless Operation: Enhances hygiene and safety by minimizing physical contact.

Accurate Timekeeping: Ensures precise tracking of working hours, reducing errors associated with manual entry.

Elimination of Time Theft: Prevents buddy punching and other forms of attendance fraud.

Real-Time Monitoring: Provides managers with real-time data on employee attendance patterns.

Efficient Payroll Management: Integrates seamlessly with payroll systems, automating wage calculations based on accurate attendance data.

Enhanced Security: Reduces the risk of unauthorized access with high-precision facial recognition.

Employee Satisfaction: Improves overall employee experience by simplifying the attendance process and ensuring timely, accurate payroll.

Solutions for Corporate Offices

Time and Attendance Management

Comprehensive Integration: Integrate facial recognition systems with existing HR and time management software to streamline operations.

Flexible Scheduling: Accommodate various work schedules, including remote and shift-based work, with accurate time tracking.

Compliance Tracking: Ensure adherence to labor laws and company policies regarding working hours and breaks.

Payroll Management

Automated Calculations: Utilize accurate attendance data to automate payroll calculations, minimizing errors.

Timely Payments: Ensure employees are paid on time, enhancing satisfaction and reducing disputes.

Cost Efficiency: Reduce administrative costs and the risk of payroll fraud through automated processes.

Detailed Reporting: Generate comprehensive reports on payroll expenses and attendance metrics for informed decision-making.

Regulatory Compliance: Maintain compliance with tax laws and payroll regulations, reducing the risk of penalties.
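The automated-calculation idea can be illustrated with a short sketch that turns per-day hours from attendance data into gross pay. The 8-hour standard day, the 1.5x overtime multiplier, and the hourly rate are illustrative assumptions, not rules from any particular payroll system or jurisdiction. The sketch uses `Decimal` so currency amounts round predictably.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative policy values; real rules depend on contracts and local labor law.
STANDARD_HOURS_PER_DAY = Decimal("8")
OVERTIME_MULTIPLIER = Decimal("1.5")


def daily_pay(hours: Decimal, hourly_rate: Decimal) -> Decimal:
    """Pay for one day: regular hours at the base rate, extra hours at the overtime rate."""
    regular = min(hours, STANDARD_HOURS_PER_DAY)
    overtime = max(hours - STANDARD_HOURS_PER_DAY, Decimal("0"))
    gross = regular * hourly_rate + overtime * hourly_rate * OVERTIME_MULTIPLIER
    return gross.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def period_pay(daily_hours: list[Decimal], hourly_rate: Decimal) -> Decimal:
    """Total gross pay for a pay period from per-day hours taken from attendance data."""
    return sum((daily_pay(h, hourly_rate) for h in daily_hours), Decimal("0"))


# Hypothetical week of hours worked, e.g. from first/last recognition each day.
week = [Decimal("8.5"), Decimal("8"), Decimal("7.75"), Decimal("9"), Decimal("8")]
print(period_pay(week, Decimal("20.00")))
```

Because the hours come straight from timestamped attendance records, the same data can also support the compliance and reporting points above.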

By addressing these challenges and leveraging the benefits, facial recognition technology can revolutionize attendance tracking, time and attendance management, and payroll processing in corporate offices, leading to more efficient and secure workplace operations.

#facial recognition technology#facial recognition#facial recognition solution#thirdeye ai#ai-based facial recognition system#face recognition application


Art. Can. Die.

This is my battle cry in the face of the silent extinguishing of an entire generation of artists by AI.

And you know what? We can't let that happen. It's not about fighting the future, it's about shaping it on our terms. If you think this is worth fighting for, please share this post. Let's make this debate go viral - because we need to take action NOW.

Remember that even in the darkest of times, creativity always finds a way.

To unleash our true potential, we first need to dive deep into our darkest fears.

So let's do this together:

By the end of 2025, most traditional artist jobs will be gone, replaced by a handful of AI-augmented art directors. Right now, around 5 out of 6 concept art jobs are being eliminated, and it's even more brutal for illustrators. This isn't speculation: it's happening right now, in real-time, across studios worldwide.

At this point, dogmatic thinking is our worst enemy. If we want to survive the AI tsunami of 2025, we need to prepare for a brutal cyberpunk reality that isn’t waiting for permission to arrive. This isn't sci-fi or catastrophism. This is a clear-eyed recognition of the exponential impact AI will have on society, hitting a hockey stick inflection point around April-May this year. By July, February will already feel like a decade ago. This also means that we have a narrow window to adapt, to evolve, and to build something new.

Let me make five predictions for the end of 2025 to nail this down:

Every major film company will have its first 100% AI-generated blockbuster in production or on screen.

Next-gen smartphones will run GPT-4o-level reasoning AI locally.

The first full AI game engine will generate infinite, custom-made worlds tailored to individual profiles and desires.

Unique art objects will reach industrial scale: entire production chains will mass-produce one-of-a-kind pieces. Uniqueness will be the new mass market.

Synthetic AI-generated data will exceed the sum total of all epistemic data (true knowledge) created by humanity throughout recorded history. We will be drowning in a sea of artificial ‘truths’.

For us artists, this means a stark choice: adapt to real-world craftsmanship or high-level creative thinking roles, because mid-level art skills will be replaced by cheaper, AI-augmented computing power.

But this is not the end. This is just another challenge to tackle.

Many will say we need legal solutions. They're not wrong, but they're missing the bigger picture: Do you think China, Pakistan, or North Korea will suddenly play nice with Western copyright laws? Will a "legal" dataset somehow magically protect our jobs? And most crucially, what happens when AI becomes just another tool of control?

Here's the thing - boycotting AI feels right, I get it. But it sounds like punks refusing to learn power chords because guitars are electrified by corporations. The systemic shift at stake doesn't care if we stay "pure", it will only change if we hack it.

Now, the empowerment part: artists have always been hackers of narratives.

This is what we do best: we break into the symbolic fabric of the world, weaving meaning from signs, emotions, and ideas. We've always taken tools never meant for art and turned them into instruments of creativity. We've always found ways to carve out meaning in systems designed to erase it.

This isn't just about survival. This is about hacking the future itself.

We, artists, are the pirates of the collective imaginary. It’s time to set sail and raise the black flag.

I don't come with a ready-made solution.

I don't come with a FOR or AGAINST. That would be like being against the wood axe because it can crush skulls.

I come with a battle cry: let’s flood the internet with debate, creative thinking, and unconventional wisdom. Let’s dream impossible futures. Let’s build stories of resilience - where humanity remains free from the technological guardianship of AI or synthetic superintelligence. Let’s hack the very fabric of what is deemed ‘possible’. And let’s do it together.

It is time to fight back.

Let us be the HumaNet.

Let’s show tech enthusiasts, engineers, and investors that we are not just assets, but the neurons of the most powerful superintelligence ever created: the artist community.

Let's outsmart the machine.

Stéphane Wootha Richard

P.S.: This isn't just a message to read and forget. This is a memetic payload that needs to spread.

Send this to every artist in your network.

Copy/paste the full text anywhere you can.

Spread it across your social channels.

Start conversations in your creative communities.

No social platform? Great! That's exactly why this needs to spread through every possible channel, official and underground.

Let's flood the datasphere with our collective debate.


Heroes, Gods, and the Invisible Narrator

Slay the Princess as a Framework for the Cyclical Reproduction of Colonialist Narratives in Data Science & Technology

An Essay by FireflySummers

All images are captioned.

Content Warnings: Body Horror, Discussion of Racism and Colonialism

Spoilers for Slay the Princess (2023) by @abby-howard and Black Tabby Games.

If you enjoy this article, consider reading my guide to arguing against the use of AI image generators or the academic article it's based on.

Introduction: The Hero and the Princess

You're on a path in the woods, and at the end of that path is a cabin. And in the basement of that cabin is a Princess. You're here to slay her. If you don't, it will be the end of the world.

Slay the Princess is a 2023 indie horror game by Abby Howard, published through Black Tabby Games, with voice talent by Jonathan Sims (yes, that one) and Nichole Goodnight.

The game starts with you dropped without context in the middle of the woods. But that’s alright. The Narrator is here to guide you. You are the hero, you have your weapon, and you have a monster to slay.

From there, it's the player's choice exactly how to proceed--whether that be listening to the voice of the narrator, or attempting to subvert him. You can kill her as instructed, or sit and chat, or even free her from her chains.

It doesn't matter.

Regardless of whether you are successful in your goal, you will inevitably (and often quite violently) die.

And then...

You are once again on a path in the woods.

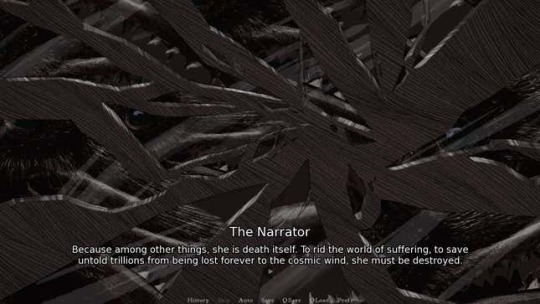

The cycle repeats itself, the narrator seemingly none the wiser. But the woods are different, and so is the cabin. You're different, and worse... so is she.

Based on your actions in the previous loop, the princess has... changed. Distorted.

Had you attempted a daring rescue, she is now a damsel--sweet and submissive and already fallen in love with you.

Had you previously betrayed her, she has warped into something malicious and sinister, ready to repay your kindness in full.

But once again, it doesn't matter.

Because no matter what you choose, no matter how the world around you contorts under the weight of repeated loops, it will always be you and the princess.

Why? Because that’s how the story goes.

So says the narrator.

So now that we've got that out of the way, let's talk about data.

Chapter I: Echoes and Shattered Mirrors

The problem with "data" is that we don't really think too much about it anymore. Or, at least, we think about it in the same abstract way we think about "a billion people." It's gotten so big, so seemingly impersonal, that it's easy to forget that the contemporary concept of "data" in the West is a phenomenon only a couple of centuries old [1].

This modern conception of the word describes the ways that we translate the world into words and numbers that can then be categorized and analyzed. As such, data has a lot of practical uses, whether that be putting a rover on Mars or tracking the outbreak of a viral contagion. However, this functionality makes it all too easy to overlook the fact that data itself is not neutral. It is gathered by people, sorted into categories designed by people, and interpreted by people. At every step, there are people involved, such that contemporary technology is embedded with systemic injustices, and not always by accident.

The reproduction of systems of oppression is most obvious from the margins. In his 2019 article As If, Ramon Amaro describes the Aspire Mirror (2016): a speculative design project by Joy Buolamwini that contended with the fact that the standard facial recognition algorithm library had been trained almost exclusively on white faces. The simplest solution was to artificially lighten darker skin tones for the algorithm to recognize, which Amaro uses to illustrate the way that technology is developed with an assumption of whiteness [2].

This observation applies across other intersections as well, such as trans identity [3]: gender classification technology has been colloquially dubbed "The Misgendering Machine" [4] for its insistence on classifying people into a strict gender binary based only on physical appearance.

This has also popped up in my own research, brought to my attention by the artist @b4kuch1n, who has spoken at length with me about the connection between their Vietnamese heritage and the clothing they design in their illustrative work [5]. They call out AI image generators for reinforcing colonialism by stripping art with significant personal and cultural meaning of its context and history, using it to produce a poor facsimile to sell to the highest bidder.

All this describes an iterative cycle which defines normalcy through a white, western lens, with a limited range of acceptable diversity. Within this cycle, AI feeds on data gathered under colonialist ideology and produces an artifact that reinforces existing systemic bias. When this data is, in turn, fed back to the machine, that bias becomes all the more severe, and the range of acceptability narrower [2, 6].
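The narrowing effect of this feedback loop can be made concrete with a deliberately simplified toy simulation. It is not a model of any real AI system and is not drawn from the cited sources. Each "generation" fits a normal distribution to the previous generation's data. It then produces new data from that fit, keeping only outputs within 1.5 standard deviations of the mean, as a stand-in for a generator that favors its most typical results. The spread of the data, its range of "acceptable" variation, shrinks with every pass.

```python
import random
import statistics


def next_generation(data: list[float], rng: random.Random,
                    n: int = 2000, keep_within: float = 1.5) -> list[float]:
    """Fit a normal distribution to `data`, sample from it, and keep only 'typical' samples."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data)
    samples = (rng.gauss(mu, sigma) for _ in range(n))
    return [x for x in samples if abs(x - mu) <= keep_within * sigma]


rng = random.Random(0)  # fixed seed so the run is reproducible
data = [rng.gauss(0.0, 1.0) for _ in range(2000)]
spreads = [statistics.pstdev(data)]
for _ in range(5):
    data = next_generation(data, rng)
    spreads.append(statistics.pstdev(data))

for generation, spread in enumerate(spreads):
    print(f"generation {generation}: spread = {spread:.3f}")
```

Nothing in the loop targets any particular value. The narrowing comes only from repeatedly retraining on filtered output of the previous round.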

Luciana Parisi and Denise Ferreira da Silva touch on a similar point in their article Black Feminist Tools, Critique, and Techno-poethics, but on a much broader scale. They invoke the Greek myth of Prometheus, whom the gods punished for the hubris of stealing fire to give to humanity. Parisi and Ferreira da Silva point to how this and other parts of the “Western Cosmology” map onto humanity’s relationship with technology [7].

However, while this story seems to celebrate the technological advancement of humanity, there are darker colonialist undertones. It frames the world in terms of the gods and man, the oppressor and the oppressed, but it provides no other way of being. So instead the story repeats itself, with so-called progress an inextricable part of these two classes of being. This doesn’t bode well for visions of the future, then, because surely, eventually, the oppressed will be the machines [7, 8].

It’s… depressing. But it’s only really true if you assume that’s the only way the story could go.

“Stories don't care who takes part in them. All that matters is that the story gets told, that the story repeats. Or, if you prefer to think of it like this: stories are a parasitical life form, warping lives in the service only of the story itself.” ― Terry Pratchett, Witches Abroad

Chapter II: The Invisible Narrator

So why does the narrator get to call the shots on how a story might go? Who even are they? What do they want? How much power do they actually have?

With the exception of first person writing, a lot of the time the narrator is invisible. This is different from an unreliable narrator. With an unreliable narrator, at some point the audience becomes aware of their presence in order for the story to function as intended. An invisible narrator is never meant to be seen.

In Slay the Princess, the narrator would very much like to be invisible. Instead, he has been dragged out into the light, because you (and the inner voices you pick up along the way) are starting to argue with him. And he doesn’t like it.

Despite his claims that the princess will lie and cheat in order to escape, as the game progresses it’s clear that the narrator is every bit as manipulative, if not more so, because he actually knows what’s going on. And if the player tries to diverge from the path that he’s set before them, the correct path, it rapidly becomes clear that he, at least at first, has the power to force that correct path.

While this is very much a narrative device, the act of calling attention to the narrator is important beyond that context.

The Hero’s Journey is the true monomyth, something to which all stories can be reduced. It doesn’t matter that the author, Joseph Campbell, was a raging misogynist whose framework flattened cultures and stories to fit a western lens [9, 10]. It was used in Star Wars, so clearly it’s a universal framework.

The metaverse will soon replace the real world and crypto is the future of currency! Never mind that the organizations pushing it are suspiciously pyramid shaped. Get on board or be left behind.

Generative AI is pushed as the next big thing. The harms it inflicts on creatives and the harmful stereotypes it perpetuates are just bugs in the system. Never mind that the evangelists for this technology speak over the concerns of marginalized people [5]. That’s a skill issue, you gotta keep up.

Computers will eventually, likely soon, advance so far as to replace humans altogether. The robot uprising is on the horizon [8].

Who perpetuates these stories? What do they have to gain?

Why is the only story for the future a replication of unjust systems of power? Why must the hero always slay the monster?

Because so says the narrator. And so long as they are invisible, it is simple to assume that this is simply the way things are.

Chapter III: The End...?

This is the part where Slay the Princess starts feeling like a stretch, but I’ve already killed the horse so I might as well beat it until the end too.

Because what is the end result here?

According to the game… collapse. A recursive story whose biases narrow the scope of each iteration ultimately collapses in on itself. The princess becomes so sharp that she is nothing but blades to eviscerate you. The princess becomes so perfect a damsel that she is a caricature of the trope. The story whittles itself away to nothing. And then the cycle begins anew.

There’s no climactic final battle with the narrator. He created this box, set things in motion, but he is beyond the player’s reach to confront directly. The only way out is to become aware of the box itself, and the agenda of the narrator. It requires acknowledgement of the artificiality of the roles thrust upon you and the Princess, the false dichotomy of hero or villain.

Slay the Princess doesn’t actually provide an answer to what lies outside of the box, merely acknowledges it as a limit that can be overcome.

With regards to the less fanciful narratives that comprise our day-to-day lives, it’s difficult to see the boxes and dichotomies we’ve been forced into, let alone what might be beyond them. But if the limit placed is that there are no stories that can exist outside of capitalism, outside of colonialism, outside of rigid hierarchies and oppressive structures, then that limit can be broken [12].

Denouement: Doomed by the Narrative

Video games are an interesting artistic medium due to their inherent interactivity. The commonly accepted mechanics of the medium, such as flavor text that provides in-game information and commentary, are an excellent example of an invisible narrator. Branching dialogue trees and multiple endings can help obscure this further, giving the player a sense of genuine agency… which provides an interesting opportunity to drag an invisible narrator into the light.

There are a number of games that have explored the power differential between the narrator and the player (The Stanley Parable, Little Misfortune, Undertale, Buddy.io, OneShot, etc.).

However, Slay the Princess works well here because it not only emphasizes the artificial limitations that the narrator sets on a story, but also shows the way these stories recursively loop in on themselves, reinforcing the fears and biases of previous iterations.

Critical data theory probably had nothing to do with the game’s development (Abby Howard if you're reading this, lmk). However, it works as a surprisingly cohesive framework for illustrating the ways that we can become ensnared by a narrative, and the importance of knowing who, exactly, is narrating the story. Although it is difficult or impossible to conceptualize what might exist beyond the artificial limits placed by even a well-intentioned narrator, calling attention to them and the box they’ve constructed is the first step in breaking out of this cycle.

“You can't go around building a better world for people. Only people can build a better world for people. Otherwise it's just a cage.” ― Terry Pratchett, Witches Abroad

Epilogue

If you've read this far, thank you for your time! This was an adaptation of my final presentation for a Critical Data Studies course. Truthfully, this course posed quite a challenge: I found the readings of philosophers such as Kant, Adorno, Foucault, and others difficult to parse. More contemporary scholars were significantly more accessible. My only hope is that I haven't gravely misinterpreted the scholars and researchers whose work inspired this piece.

I honestly feel like this might have worked best as a video essay, but I don't know how to do those, and don't have the time to learn or the money to outsource.

Slay the Princess is available for purchase now on Steam.

Screencaps from ManBadassHero Let's Plays: [Part 1] [Part 2] [Part 3] [Part 4] [Part 5] [Part 6]

Post Dividers by @cafekitsune

Citations:

Rosenberg, D. (2018). Data as word. Historical Studies in the Natural Sciences, 48(5), 557-567.

Amaro, R. (2019). As If. e-flux Architecture: Becoming Digital. https://www.e-flux.com/architecture/becoming-digital/248073/as-if/

What Ethical AI Really Means by PhilosophyTube

Keyes, O. (2018). The misgendering machines: Trans/HCI implications of automatic gender recognition. Proceedings of the ACM on human-computer interaction, 2(CSCW), 1-22.

Allred, A.M., Aragon, C. (2023). Art in the Machine: Value Misalignment and AI “Art”. In: Luo, Y. (eds) Cooperative Design, Visualization, and Engineering. CDVE 2023. Lecture Notes in Computer Science, vol 14166. Springer, Cham. https://doi.org/10.1007/978-3-031-43815-8_4

Amaro, R. (2019). Artificial Intelligence: warped, colorful forms and their unclear geometries.

Parisi, L., Ferreira da Silva, D. Black Feminist Tools, Critique, and Techno-poethics. e-flux Journal, Issue #123. https://www.e-flux.com/journal/123/436929/black-feminist-tools-critique-and-techno-poethics/

AI - Our Shiny New Robot King | Sophie from Mars by Sophie From Mars

Joseph Campbell and the Myth of the Monomyth | Part 1 by Maggie Mae Fish

Joseph Campbell and the N@zis | Part 2 by Maggie Mae Fish

How Barbie Cis-ified the Matrix by Jessie Gender

#slay the princess#stp spoilers#stp#stp princess#abby howard#black tabby games#academics#critical data studies#computer science#technology#hci#my academics#my writing#long post


Text

At 8:22 am on December 4 last year, a car traveling down a small residential road in Alabama used its license-plate-reading cameras to take photos of vehicles it passed. One image, which does not contain a vehicle or a license plate, shows a bright red “Trump” campaign sign placed in front of someone’s garage. In the background is a banner referencing Israel, a holly wreath, and a festive inflatable snowman.

Another image taken on a different day by a different vehicle shows a “Steelworkers for Harris-Walz” sign stuck in the lawn in front of someone’s home. A construction worker, with his face unblurred, is pictured near another Harris sign. Other photos show Trump and Biden (including “Fuck Biden”) bumper stickers on the back of trucks and cars across America. One photo, taken in November 2023, shows a partially torn bumper sticker supporting the Obama-Biden lineup.

These images were generated by AI-powered cameras mounted on cars and trucks, initially designed to capture license plates, but which are now photographing political lawn signs outside private homes, individuals wearing T-shirts with text, and vehicles displaying pro-abortion bumper stickers—all while recording the precise locations of these observations. Newly obtained data reviewed by WIRED shows how a tool originally intended for traffic enforcement has evolved into a system capable of monitoring speech protected by the US Constitution.

The detailed photographs all surfaced in search results produced by the systems of DRN Data, a license-plate-recognition (LPR) company owned by Motorola Solutions. The LPR system can be used by private investigators, repossession agents, and insurance companies; a related Motorola business, called Vigilant, gives cops access to the same LPR data.

However, files shared with WIRED by artist Julia Weist, who is documenting restricted datasets as part of her work, show how those with access to the LPR system can search for common phrases or names, such as those of politicians, and be served with photographs where the search term is present, even if it is not displayed on license plates.

A search of Delaware vehicle data for the text “Trump” returned more than 150 images showing people’s homes and bumper stickers. Each search result includes the date, time, and exact location where the photograph was taken.

“I searched for the word ‘believe,’ and that is all lawn signs. There’s things just painted on planters on the side of the road, and then someone wearing a sweatshirt that says ‘Believe,’” Weist says. “I did a search for the word ‘lost,’ and it found the flyers that people put up for lost dogs and cats.”

Beyond highlighting the far-reaching nature of LPR technology, which has collected billions of images of license plates, the research also shows how people’s personal political views and their homes can be recorded into vast databases that can be queried.

“It really reveals the extent to which surveillance is happening on a mass scale in the quiet streets of America,” says Jay Stanley, a senior policy analyst at the American Civil Liberties Union. “That surveillance is not limited just to license plates, but also to a lot of other potentially very revealing information about people.”

DRN, in a statement issued to WIRED, said it complies with “all applicable laws and regulations.”

Billions of Photos

License-plate-recognition systems, broadly, work by first capturing an image of a vehicle; then they use optical character recognition (OCR) technology to identify and extract the text from the vehicle’s license plate within the captured image. Motorola-owned DRN sells multiple license-plate-recognition cameras: a fixed camera that can be placed near roads, identify a vehicle’s make and model, and capture images of vehicles traveling up to 150 mph; a “quick deploy” camera that can be attached to buildings and monitor vehicles at properties; and mobile cameras that can be placed on dashboards or mounted on vehicles, capturing images as those vehicles are driven around.
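The reporting does not say how DRN decides which OCR results to keep. What follows is a rough, hypothetical sketch of the two-stage pipeline described above, with the kind of filtering Weist argues for added on top. A text read is kept only if two conditions hold. First, an assumed detector flagged a plate-shaped region. Second, the text matches an assumed plate format: 2 to 8 uppercase letters or digits, containing at least one digit. The `OcrRead` type and the format rule are illustrative assumptions, not DRN's method. Real plate formats vary by state, and this rule would wrongly reject digit-free vanity plates.

```python
import re
from dataclasses import dataclass

# Hypothetical plate-format rule (an assumption, not a real state standard):
# 2-8 uppercase letters/digits, with at least one digit somewhere.
PLATE_PATTERN = re.compile(r"^(?=.*\d)[A-Z0-9]{2,8}$")


@dataclass
class OcrRead:
    text: str             # raw text returned by the (assumed) OCR stage
    plate_detected: bool  # whether an (assumed) detector found a plate-shaped region


def normalize(text: str) -> str:
    """Uppercase the text and drop spaces/hyphens, which plates often use as separators."""
    return re.sub(r"[\s-]", "", text.upper())


def keep_read(read: OcrRead) -> bool:
    """Keep a read only if it came from a plate region AND looks like a plate."""
    return read.plate_detected and bool(PLATE_PATTERN.match(normalize(read.text)))


reads = [
    OcrRead("ABC-1234", True),
    OcrRead("TRUMP", False),    # lawn sign: no plate region detected
    OcrRead("Believe", False),  # sweatshirt text: no plate region detected
    OcrRead("7XYZ 89", True),
]
kept = [normalize(r.text) for r in reads if keep_read(r)]
print(kept)  # ['ABC1234', '7XYZ89']
```

Under these assumptions, a lawn sign reading “TRUMP” is dropped on both counts: no plate region was detected, and the text contains no digit.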

Over more than a decade, DRN has amassed more than 15 billion “vehicle sightings” across the United States, and it claims in its marketing materials that it amasses more than 250 million sightings per month. Images in DRN’s commercial database are shared with police using its Vigilant system, but images captured by law enforcement are not shared back into the wider database.

The system is partly fueled by DRN “affiliates” who install cameras in their vehicles, such as repossession trucks, and capture license plates as they drive around. Each vehicle can have up to four cameras attached to it, capturing images from all angles. These affiliates earn monthly bonuses and can also receive free cameras and search credits.

In 2022, Weist became a certified private investigator in New York State. In doing so, she unlocked the ability to access the vast array of surveillance software accessible to PIs. Weist could access DRN’s analytics system, DRNsights, as part of a package through investigations company IRBsearch. (After Weist published an op-ed detailing her work, IRBsearch conducted an audit of her account and discontinued it. The company did not respond to WIRED’s request for comment.)

“There is a difference between tools that are publicly accessible, like Google Street View, and things that are searchable,” Weist says. While conducting her work, Weist ran multiple searches for words and popular terms, which found results far beyond license plates. In data she shared with WIRED, a search for “Planned Parenthood,” for instance, returned stickers on cars, on bumpers, and in windows, both for and against the reproductive health services organization. Civil liberties groups have already raised concerns about how license-plate-reader data could be weaponized against those seeking abortion.

Weist says she is concerned with how the search tools could be misused when there is increasing political violence and divisiveness in society. In a remark unrelated to license plate data, one law enforcement official in Ohio recently said people should “write down” the addresses of people who display yard signs supporting Vice President Kamala Harris, the 2024 Democratic presidential nominee, exemplifying how a searchable database of citizens’ political affiliations could be abused.

A 2016 report by the Associated Press revealed widespread misuse of confidential law enforcement databases by police officers nationwide. In 2022, WIRED revealed that hundreds of US Immigration and Customs Enforcement employees and contractors were investigated for abusing similar databases, including LPR systems. The alleged misconduct in both reports ranged from stalking and harassment to sharing information with criminals.

While people place signs in their lawns or bumper stickers on their cars to inform others of their views and potentially to influence those around them, the ACLU’s Stanley says such displays are intended for “human-scale visibility,” not that of machines. “Perhaps they want to express themselves in their communities, to their neighbors, but they don't necessarily want to be logged into a nationwide database that’s accessible to police authorities,” Stanley says.

Weist says the system should, at the very least, be able to filter out images that do not contain license plate data, and should not make such mistakes at all. “Any number of times is too many times, especially when it's finding stuff like what people are wearing or lawn signs,” Weist says.

“License plate recognition (LPR) technology supports public safety and community services, from helping to find abducted children and stolen vehicles to automating toll collection and lowering insurance premiums by mitigating insurance fraud,” Jeremiah Wheeler, the president of DRN, says in a statement.

Weist believes that, given the relatively small number of images showing bumper stickers compared to the large number of vehicles with them, Motorola Solutions may be attempting to filter out images containing bumper stickers or other text.

Wheeler did not respond to WIRED's questions about whether there are limits on what can be searched in license plate databases, why images of homes with lawn signs but no vehicles in sight appeared in search results, or whether filters are used to reduce such images.

“DRNsights complies with all applicable laws and regulations,” Wheeler says. “The DRNsights tool allows authorized parties to access license plate information and associated vehicle information that is captured in public locations and visible to all. Access is restricted to customers with certain permissible purposes under the law, and those in breach have their access revoked.”

AI Everywhere

License-plate-recognition systems have flourished in recent years as cameras have become smaller and machine-learning algorithms have improved. These systems, such as those from DRN and rival Flock, are part of a change in the way people are surveilled as they move around cities and neighborhoods.

Increasingly, CCTV cameras are being equipped with AI to monitor people’s movements and even detect their emotions. The systems have the potential to alert officials, who may not be able to constantly monitor CCTV footage, to real-world events. However, whether license plate recognition can reduce crime has been questioned.

“When government or private companies promote license plate readers, they make it sound like the technology is only looking for lawbreakers or people suspected of stealing a car or involved in an Amber Alert, but that’s just not how the technology works,” says Dave Maass, the director of investigations at civil liberties group the Electronic Frontier Foundation. “The technology collects everyone's data and stores that data often for immense periods of time.”

Over time, the technology may become more capable, too. Maass, who has long researched license-plate-recognition systems, says companies are now trying to do “vehicle fingerprinting,” where they determine the make, model, and year of the vehicle based on its shape and also determine if there’s damage to the vehicle. DRN’s product pages say one upcoming update will allow insurance companies to see if a car is being used for ride-sharing.

“The way that the country is set up was to protect citizens from government overreach, but there’s not a lot put in place to protect us from private actors who are engaged in business meant to make money,” says Nicole McConlogue, an associate professor of law at the Mitchell Hamline School of Law who has researched license-plate-surveillance systems and their potential for discrimination.

“The volume that they’re able to do this in is what makes it really troubling,” McConlogue says of vehicles moving around streets collecting images. “When you do that, you're carrying the incentives of the people that are collecting the data. But also, in the United States, you’re carrying with it the legacy of segregation and redlining, because that left a mark on the composition of neighborhoods.”


Text

Like a Dog at Your Door

Fandom: Redacted Audio

Characters: Hush, Doc

Pairings: Hush/Doc

Song: https://open.spotify.com/track/7Hk4LmCQBUlssRzlRlBmKO?si=pea6xH3IQ7mkNzJmG-RB7A

Finally caught up with both Hush and Vega's playlists and I have the brainworms now and tossed together a Hush fic bc I love him (and I also needed a break from how long chpt 2 is getting for tales of redactia ahxbsh). Title is a reference to Phoebe Bridgers.

As always here it is also on ao3 if that's easier! Please do not feed to AI, claim as your own, or repost to other platforms without my permission. The characters belong to Redacted Audio and this is a fan work.

(Fic below cut)

“You are... upset.” The gentle voice appears as Hush always does, without warning and whenever Doc is holding something fragile. Where there was once nothing is now something: him. Worse still is the way their heart stumbles when he finally reappears after days of absence.

“Hello Hush. It's nice to see you.” Regaining their composure, Doc snaps their fingers to fix the mug they dropped onto their keyboard, turning in their desk chair to face him better.

Hush tilts his head in the pause, a small smile on his face. “You've not said that before. It is nice to see you too. It's always nice to see you. But will you tell me why you are distressed? I would like to help stop whatever the cause is.”

He doesn't move closer, he rarely does. They wish he would. They wish they would. To close the gap and draw him in close and have him pull them in just as much. Holding and held.

“I'm just tired Hush.” They push their hair out of their face with a sigh.

“You should sleep, then.” His answer is simple but far from a solution. They let out a dry laugh, feeling the strain on their aching body as they pause for the first time today.

He hums in recognition, “You’re laughing because it is incorrect, not because you are entertained by what I said.”

“Yes.” They can't fight the smile on their face at being recognised so easily. “I'm sorry, it's just not that kind of tired.”

“Then rest the way that type of tired needs.”

Hmm. Hard to argue with that.

“I can try, but I'm not sure I'll be able to. Just one of those days.” Doc winces as they gently stretch their leg, feeling the twinge of the permanently present ache deep in their muscles.

Like a cat's, Hush’s eyes zero in on the spot. “Are you hurt? You shouldn't be working if you are injured.”

He was closer, they think. It felt like it at least. He was like gravity, pulling them in. He was powerful, definitely dangerous. They should be panicking about his proclivity for murder. But all they could worry about was if the way their core sang around him was too loud for the embodiment of that song’s silence. Too loud for him, too much.

“It's not an injury like a wound. It just aches, nothing to be done.” They shrug off the potential care.

“I don't want you in pain,” he insists, stepping forward.

Realising there is no appeasing this one, they gesture for him to follow them to the small couch in the middle of their apartment. “I'm just tired, Hush. It's not something that can be fixed.” They swallow nervously. “You could sit with me and… hold me? If you want,” they offer, sitting down and leaving him space.

He nods emphatically, wrapping them up immediately in his arms. “Always yes,” he whispers as he rests his head on their shoulder. “Soft.”

They snort, but relish the embrace. More contact than they’ve felt for a long time. There was almost a reverence to this routine they had set up, pads of fingers gently exploring, innocently, but hungrily too. Time was not wasted in expenditure, but treasured. Neither speak for a while, too content in the other's arms, too desperate for a touch that does not hurt. Starving. The only sound is Doc’s breathing and the hum of traffic outside.

“I do not love anything in the world so much as I love you.” Hush’s steady voice breaks the trance.

Doc startles slightly, “I- What?”

“I read it, and I liked it. It made me think of you.” Hush blinks, almost amusement creeping into his tone. “You blushed.”

“You read Shakespeare?” They continue, steadfastly ignoring the blushing comment as their cheeks warm. “Why?”

Hush tilts his head, hands stilling. “It was in the library.”

“There are many better things to read, he’s overvalued as a writer.”

“What should I have read instead?” His hands begin wandering again, tracing the dips and curves of their back.

“Oh I dunno, uh.” They're floundering now, too flustered to think straight, and it is not helping that Hush has started to run his fingers up and down their spine. They drop their head to his chest and bury their face there. “Whatever. What did you think?”

“Of Shakespeare or the specific work I read?” he inquires.

“The line, why it made you think of me,” they answer without thinking, mentally panicking at the desperation in their tone.

“Why are you scared of Shakespeare?” He carefully presses two fingers to the pulse point on their neck before explaining, “Your heart speeds up and you get warmer.”

They softly exhale, “Tell you later?”

“Okay.” He nods immediately.

Doc appreciates how readily he accepts it. His sincerity is a breath of fresh air in a world of double meaning. “Thank you.”

“You're welcome, Doc.” He carefully pinches some of their hair, examining it. “I liked that it singled out the other as unique in the world. Just like you are so interesting and kind when no one else I have met is.”

“You deserve nice,” they whisper. “I won't be the only one who is nice to you ever. And I won't be perfect. I'm not perfect, I'm not always soft.”

“Of course not. You weren't made to be.”

“I…” They struggle to form the words to defend their self-deprecation. “But… I want to be, for you. Or at least better than I am.” The words are heavy and clunky in their mouth.

“So do I. So what's the problem?” He lights up as the idea strikes him. “Are you hungry?”

Doc is snapped out of their spiral into laughter at the proposed solution, slumping down onto him as they giggle. “You're so sweet. Yes I could eat, we can cook together if you want?”

They swear if he had a tail it would be a weapon the way he bounces up with excitement. “Yes please. I would like that a lot. I want to make soufflé.”

“Sure, why not,” the freelancer says fondly. “I'm not sure I have the ingredients though, and I definitely have no clue how to make it, so you'll be teaching me on this one.”

“You'll still cook with me though?” Hush grabs their hand, looking intently up at them.

“Always.” They watch his face, giving his hand a squeeze, delicately smoothing their thumb over his cheek. “One last question though - why were you reading Shakespeare? I'm not sure what the purpose is, and normally you only do things with one in mind - not that you can't do what you want! That's great if so, but not bad if not.” They rush the words out, fumbling the sentence spectacularly.

Hush is unaffected by their rambling, listening attentively and patiently the whole time. He considers for a long while; there's no rush, and he knows they'll wait for him too. “I saw a Shakespeare book on your shelf.” He points to the terrifyingly full bookcase next to their desk. “I wanted to be able to discuss things you like with you.”

Doc allows themselves three seconds of internal screaming and combustion before attempting some semblance of composure.

“You're too nice to me, Hush,” they murmur, running a hand through his hair.

“You deserve nice,” he parrots their earlier words back at them as he leans into their petting, content. “And it was just me wanting to have more things to discuss with you.”

“Still, it means a lot. Although-” a slight chuckle leaves them, “- that's a book my aunt got me trying to insist I read ‘the classics’, if you want I can show you some much better books to read.”

Satisfied with this offer, he nods and scoops them up, throwing them over his shoulder and carrying them to the kitchen.

“HUSH!” They shriek as they're suddenly upside down, squirming playfully in his grasp through laughter.

“You need to eat first, then you can show me the books.” He chuckles a little, like he’d come up with the most devious plan ever, “and only if you hold my hand.”

“Deal.” They struggle to get the word out between laughs, straining to offer their hand for him to shake before giving up and going limp in his grip.

Together they spend the evening in each other's company and arms, the aches and pains of the world temporarily forgotten, neither hungry for long while the other is around.

#fanfic#redacted asmr#redacted audio#redactedverse#redacted fandom#fluff#lil bit of angst ig#it's sprinkled in#comfort#touch starved fools#theyre both autistic you cant change my mind#redacted hush#redacted doc#scarscribbles#posting this at 4am#surely nothing can go wrong


Note

That's the thing I hate probably The Most about AI stuff, even besides the environment and the power usage and the subordination of human ingenuity to AI black boxes; it's all so fucking samey and Dogshit to look at. And even when it's good that means you know it was a fluke and there is no way to find More of the stuff that was good

It's one of the central limitations of how "AI" of this variety is built: the learning models. Gradient descent, weighting, the attempts to appear genuine, and mass training on the widest possible body of inputs all mean that the model will trend toward mediocrity no matter what you do about it. I'm not jabbing anyone here, but the majority of all works are either bad or mediocre, and the Chinese army approach necessitated by the architecture of ANNs and LLMs means that any model is destined for this fate.

This is related somewhat to the fear techbros have, and are beginning to face, of their models sucking in model-generated outputs and destroying what little success they have had. So much mediocre or nonsense garbage is out there now that it is effectively having the same effect inbreeding has on biological systems. And there is no solution, because it is a fundamental aspect of trained systems.

The thing is, while humans are not really good at capturing randomness in our creative outputs, our patterns tend to be more pseudorandom than what ML can capture and then regurgitate. This is part of the drawback of statistical systems described above, of which LLMs are, at their core, just a very fancy, large-scale implementation. This is also how humans can begin to recognise generated media even from very sophisticated models: we aren't really good at randomness, but too much structured pattern is a signal. Even in generated texts, you are seeing patterns in the way words are strung together or used, even if you aren't completely conscious of it. A sense that something feels uncanny goes beyond weird dolls and mannequins. You can tell that the framework is there but the substance is missing, or things are just bland. Humans remain just too capable of pattern recognition, and since part of how we enjoy media lies in little, non-trivial deviations from those patterns, generative content ends up feeling kind of mediocre once the awe wears off.

Related somewhat, the idea of a general LLM is totally off the table precisely because of what generalism means for a trained model: that same mediocrity. Unlike humans, trained models cannot by definition become general; and also unlike humans, a general model is still wholly a specialised application, one that is good at not being good. A generalist human might not be as skilled as a specialist but is still capable of applying signs, symbols, and meaning across specialties. A specialised human will 100% clap any trained model every day. The reason is simple and evident: trained models still cannot process meaning, signs, and symbols, let alone apply them in any actual concrete way. They cannot generate an idea; they cannot generate a feeling.

The reason human-created works can still drag machine-generated ones every day is that we are able to express ideas and signs through these non-lingual means to create feelings and thoughts in our fellow humans. This act actually introduces some level of non-trivial and non-processable almost-but-not-quite random "data" into the works that machine-learning models simply cannot access. How do you identify feelings in an illustration? How do you quantify a received sensibility?

And as long as vulture capitalists and techbros continue to fixate on "wow computer bro" and cheap grifts, no amount of technical development will ever wrest these things from our exclusive possession. Perhaps that is a good thing; I won't make a claim either way.


Text

Top 5 Reasons Companies Partner with Joaquin Fagundo for IT Strategy

In today’s fast-paced digital world, businesses are constantly evolving to keep up with the changing technological landscape. From cloud migrations to IT optimization, many organizations are turning to expert technology executives to guide them through complex transformations. One such executive who has gained significant recognition is Joaquin Fagundo, a technology leader with over two decades of experience in driving digital transformation. Having worked at prominent firms like Google, Capgemini, and Tyco, Fagundo has built a reputation for delivering large-scale, innovative solutions to complex IT challenges. But what makes Joaquin Fagundo such a sought-after leader in IT strategy? Here are the Top 5 Reasons Companies Partner with Joaquin Fagundo for IT Strategy.

1. Proven Expertise in Digital Transformation

Joaquin Fagundo’s career spans more than 20 years, during which he has worked on high-stakes projects that require a deep understanding of digital transformation. His work has covered various industries, helping businesses leverage cutting-edge technologies to improve operations, enhance efficiency, and streamline processes.

Fagundo has helped organizations transition from legacy systems to modern cloud-based infrastructure, allowing them to scale effectively and stay competitive in an increasingly digital world. His hands-on experience in cloud strategy, automation, and enterprise IT makes him an invaluable asset for any business looking to adapt to new technologies. Companies partner with Joaquin Fagundo because they know they will receive strategic insights and actionable plans for driving their digital initiatives forward.

2. Strategic Cloud Expertise for Scalable Solutions

Cloud technology is no longer a trend—it is the backbone of modern businesses. Joaquin Fagundo has an extensive background in cloud strategy, having successfully led major cloud migrations for several large organizations. Whether it's migrating to a public cloud, optimizing hybrid infrastructures, or implementing cloud-native applications, Fagundo’s expertise helps businesses navigate these complexities with ease.

Companies partnering with Joaquin Fagundo can expect a tailored cloud strategy that ensures scalability and cost-efficiency. His deep knowledge of cloud services like AWS, Google Cloud, and Microsoft Azure enables him to develop bespoke solutions that meet specific business needs while minimizing downtime and disruption. His approach not only focuses on the technical aspects of cloud migration but also on aligning cloud solutions with overarching business goals, ensuring that companies can reap the full benefits of cloud technology.

3. Strong Track Record of Driving Operational Efficiency

Operational efficiency is a top priority for most businesses today. Companies partner with Joaquin Fagundo because of his exceptional ability to optimize IT operations and implement automation technologies that enhance productivity while reducing costs. Whether it’s through automating routine tasks or improving infrastructure management, Fagundo’s strategies enable organizations to streamline their operations and focus on growth.

Fagundo’s leadership in infrastructure optimization has allowed businesses to reduce waste, lower operational expenses, and improve the performance of their IT systems. His expertise in automation tools and AI-driven insights empowers businesses to become more agile and responsive to market demands. By partnering with Fagundo, companies are able to make smarter decisions, optimize resource utilization, and achieve long-term sustainability.

4. Business-Technology Alignment

One of the most significant challenges that organizations face is aligning their IT strategies with broader business goals. While technology can drive innovation and efficiency, it’s only effective when it is tightly integrated with the company’s vision and objectives. Joaquin Fagundo understands this concept deeply and emphasizes the importance of business-technology alignment in every project he undertakes.

Fagundo’s approach to IT strategy is not just about implementing the latest technology, but also ensuring that it supports the company’s strategic direction. He works closely with leadership teams to identify key business objectives and tailors technology solutions that drive measurable business outcomes. This focus on alignment helps companies ensure that every IT investment contributes to long-term growth, profitability, and competitive advantage.

5. Trusted Leadership and Change Management Expertise

Digital transformation and IT strategy changes often require a significant cultural shift within an organization. Change management is a critical component of any IT transformation, and this is an area where Joaquin Fagundo excels. Over the years, Fagundo has successfully led large teams through organizational changes, helping them navigate complex transformations with minimal resistance.

Fagundo’s leadership skills are a key reason why companies partner with him. He is known for his ability to inspire teams, foster collaboration, and lead through uncertainty. His people-centric approach to technology implementation ensures that employees at all levels are equipped with the knowledge and tools they need to succeed. Whether it’s through training sessions, workshops, or mentorship, Fagundo prioritizes the human aspect of IT transformation, making the process smoother and more sustainable for everyone involved.

Conclusion

Partnering with a technology executive like Joaquin Fagundo can provide businesses with a competitive edge in an increasingly digital world. With his deep technical expertise, strategic insight, and ability to align technology with business goals, Fagundo has helped many organizations successfully navigate their IT challenges. Whether it's through cloud strategy, digital transformation, or operational optimization, Joaquin Fagundo is the go-to leader for companies looking to stay ahead of the curve.

If your company is ready to accelerate its digital transformation journey and leverage cutting-edge technology for long-term success, partnering with Joaquin Fagundo could be the first step toward achieving your business goals. With his proven track record, expertise, and leadership, Fagundo is the ideal partner to guide your organization into the future of IT strategy.


Progress can be a ladder or a cage.

Verne vs. Orwell: Is Progress Leading Us to Utopia or Dystopia?

Since the dawn of time, humanity has asked: Is progress a blessing or a curse?

Jules Verne imagined a future where technology empowers and emancipates.

George Orwell warned us that progress could become a prison.

Two visions of the future that seem opposed, yet they may be more connected than we think.

Today, we live in a world where Verne’s dreams and Orwell’s fears coexist:

Technological advancements open incredible possibilities.

But they also threaten our fundamental freedoms.

Are we building Verne’s utopia or Orwell’s dystopia?

"Science without conscience is but the ruin of the soul." – Rabelais

Jules Verne and the Promise of Progress

Verne was a visionary, deeply influenced by the scientific boom of the 19th century.

An Era of Technological Optimism

Industrialization was transforming the world.

Travel was becoming more accessible.

Innovation was seen as a tool for social progress.

Verne believed in progress as a way to push the boundaries of humanity.

Predictions That Became Reality

From the Earth to the Moon → Space travel and Mars missions.

20,000 Leagues Under the Sea → Deep-sea exploration and underwater robotics.

The Mysterious Island → Renewable energy and self-sufficiency.

Verne reminds us that technology is a powerful tool—if used for the common good.

💬 "Science has made us gods before we even deserved to be men." – Jean Rostand

Yet, Verne was not naive. He foresaw the dangers of misused progress, as seen in Captain Nemo, who isolates himself and seeks revenge using technology.

Are we heading toward enlightened progress—or a dangerous drift?

Orwell and the Fear of Totalitarian Progress

George Orwell wrote in a very different context—the 20th century, marked by world wars and rising totalitarian regimes.

His warning: Every technology can become a tool of control.

Prophecies That Came True

1984 warns of a world where information is manipulated, every action is monitored, and even thought is controlled.

Some of these fears have become reality today:

Big Brother and mass surveillance → Smart cameras, facial recognition, digital tracking.

Newspeak and rewriting history → Fake news, algorithmic manipulation of facts.

The Ministry of Truth and public opinion control → Social media shaping beliefs and behaviors.

Orwell warned us: Progress can also be an instrument of domination.

💬 "Who controls the past controls the future. Who controls the present controls the past." – Orwell

The Modern Paradox: Utopia and Dystopia at the Same Time

Where do we stand between Verne and Orwell?

We are witnessing a fascinating paradox:

The innovations that could save us are also the ones that could enslave us.

Concrete Examples:

AI helps us solve problems… but also manipulates information and influences decisions.

Space exploration is a remarkable achievement… but is it an escape from our responsibilities on Earth?

Biotechnology and transhumanism can improve human life… but could they increase inequality?

"History is a loop we must learn to break." – Nietzsche

A Philosophical Question: Where Do We Set the Limits?

The philosopher Hans Jonas, in The Imperative of Responsibility, reminds us:

"The more our technological power grows, the greater our responsibility becomes."

Do we have the safeguards needed to regulate these transformations?

Should AI be controlled to prevent abuse?

Is space exploration a real solution or just a fantasy?

How do we prevent scientific advancements from becoming tools of oppression?

We have the means to create a utopia—but do we have the wisdom to avoid a dystopia?

What Future Do We Want?

We live in a world where Verne’s visions and Orwell’s warnings coexist:

We have innovations worthy of Verne’s imagination.

We face the dangers Orwell predicted.

The future depends on our ability to guide progress responsibly.

Are we choosing between Verne’s utopia and Orwell’s dystopia—or must we invent a new model?

I explore this dilemma in depth in my latest article on Medium:

And you—do you see the future more like Verne or Orwell?

P'tit Tôlier

Essayist & Popularizer. I analyze the world through accessible philosophical essays. Complex ideas, explained simply—to help us think about our times.

#George Orwell#jules verne#dystopie#utopia#philosophy#dystopia#critical thinking#world news#transhumanism#science#technology#FuturesThinking#TechEthics#AIandSociety#SurveillanceAge#HumanityNext#DystopiaOrUtopia#BigDataEthics#SpaceExploration#TranshumanismDebate#ProgressOrControl#TheFutureWeChoose


MediaTek Genio Powers Snorble: The Future of Children’s Play

Snorble, a Smart Companion for Children, Comes to Life with MediaTek Genio

What exactly is Snorble, and why does it need cutting-edge artificial intelligence and other advanced technology to work?

Snorble is a revolutionary new intelligent companion toy powered by a MediaTek Genio chip. What puts it at the leading edge is the way it interacts with children and adapts to their changing psychological and sleep needs as they grow older.

Snorble, a stuffed animal, was designed to help children establish not only a regular pattern of restful sleep but also other emotionally healthy routines, such as positive thinking and mindfulness. It supports children in reaching these goals by hearing, understanding, and responding to what they say to it.

Its face is animated and changes expression. A companion smartphone app lets parents keep their Snorble up to date as their children mature and their needs change.

Beyond its substantial speech- and AI-processing requirements, the toy depends on technology that prioritizes the privacy and safety of its users. Because MediaTek Genio meets all of these requirements, it is a strong fit for the job.

A New Intelligent Companion That Puts Children’s Safety First

To interact with children and grow alongside them, Snorble needs sensors that can understand what children say and connectivity that makes product updates easy. In response to privacy and safety concerns, Snorble was designed from the ground up to work without any cameras and without relying on cloud-based or remote audio processing. Notably, unlike many other apps and services, Snorble does not need a Wi-Fi connection for everyday use.

This removes a potential entry point for hackers and improves portability: since no Wi-Fi connection is required, the toy can go wherever the child goes. The main factor that sets Snorble apart from other voice assistants is that it does its processing locally on the device rather than in the cloud. Built-in parental controls also give parents greater peace of mind about how Snorble interacts with the children in their care.

The Technical Challenges

Snorble’s developers needed an ecosystem that could provide all of the following:

A complete platform, covering both hardware and software.

Enough central processing unit (CPU) capacity to handle AI voice processing.

Edge AI, in which all processing is performed on the device itself rather than in the cloud.

Advanced audio features, such as natural language processing (NLP) that can understand speech without relying on a dictionary stored in the cloud.

Over-the-air (OTA) updates.

The Solution: MediaTek Genio 350

The device runs on the MediaTek Genio 350, a highly integrated system on a chip (SoC). A Linux application on the chip connects the microphone sensors and runs complex AI algorithms designed to understand children and respond to what they need.

The Genio 350 can also run edge AI on the device itself. This includes audio and NLP processing that helps recognize words and sounds and can even isolate speech spoken from a distance while reducing background noise.

The manufacturer has said it will keep improving Snorble after purchase by releasing downloadable updates, apps, and features that expand what the toy can do.

Read more on Govindhtech.com


The US government should create a new body to regulate artificial intelligence—and restrict work on language models like OpenAI’s GPT-4 to companies granted licenses to do so. That’s the recommendation of a bipartisan duo of senators, Democrat Richard Blumenthal and Republican Josh Hawley, who launched a legislative framework yesterday to serve as a blueprint for future laws and influence other bills before Congress.

Under the proposal, developing face recognition and other “high risk” applications of AI would also require a government license. To obtain one, companies would have to test AI models for potential harm before deployment, disclose instances when things go wrong after launch, and allow audits of AI models by an independent third party.

The framework also proposes that companies publicly disclose details of the training data used to create an AI model and that people harmed by AI gain the right to sue the company that created it.

The senators’ suggestions could be influential in the days and weeks ahead as debates intensify in Washington over how to regulate AI. Early next week, Blumenthal and Hawley will oversee a Senate subcommittee hearing about how to meaningfully hold businesses and governments accountable when they deploy AI systems that cause people harm or violate their rights. Microsoft president Brad Smith and the chief scientist of chipmaker Nvidia, William Dally, are due to testify.

A day later, Senator Chuck Schumer will host the first in a series of meetings to discuss how to regulate AI, a challenge Schumer has referred to as “one of the most difficult things we’ve ever undertaken.” Tech executives with an interest in AI, including Mark Zuckerberg, Elon Musk, and the CEOs of Google, Microsoft, and Nvidia, make up about half the almost-two-dozen-strong guest list. Other attendees represent those likely to be subjected to AI algorithms and include trade union presidents from the Writers Guild and the union federation AFL-CIO, and researchers who work on preventing AI from trampling human rights, including UC Berkeley’s Deb Raji and Humane Intelligence CEO and Twitter’s former ethical AI lead Rumman Chowdhury.