#AI Machine

Text

Don't worry, Fodder. IS-OT just wants to cuddle with their favorite human.

Finally finished this piece after not touching it for weeks lol. Robots and Machines are not my strong suit but it was fun adding the coils and flesh.

Fodder and IS-OT are @qrowscant-art

#qrowscant art#IS-OT#Fodder#Machine x Human#blood#tw: blood#science fiction horror#horror#AI Machine#Machine#Killer AI#my art#fanart

54 notes

Text

‘Lavender’: The AI machine directing Israel’s bombing spree in Gaza

The Israeli army has marked tens of thousands of Gazans as suspects for assassination, using an AI targeting system with little human oversight and a permissive policy for casualties, +972 and Local Call reveal.

By Yuval Abraham April 3, 2024

In 2021, a book titled “The Human-Machine Team: How to Create Synergy Between Human and Artificial Intelligence That Will Revolutionize Our World” was released in English under the pen name “Brigadier General Y.S.” In it, the author — a man who we confirmed to be the current commander of the elite Israeli intelligence unit 8200 — makes the case for designing a special machine that could rapidly process massive amounts of data to generate thousands of potential “targets” for military strikes in the heat of a war. Such technology, he writes, would resolve what he described as a “human bottleneck for both locating the new targets and decision-making to approve the targets.”

Such a machine, it turns out, actually exists. A new investigation by +972 Magazine and Local Call reveals that the Israeli army has developed an artificial intelligence-based program known as “Lavender,” unveiled here for the first time. According to six Israeli intelligence officers, who have all served in the army during the current war on the Gaza Strip and had first-hand involvement with the use of AI to generate targets for assassination, Lavender has played a central role in the unprecedented bombing of Palestinians, especially during the early stages of the war. In fact, according to the sources, its influence on the military’s operations was such that they essentially treated the outputs of the AI machine “as if it were a human decision.”

Formally, the Lavender system is designed to mark all suspected operatives in the military wings of Hamas and Palestinian Islamic Jihad (PIJ), including low-ranking ones, as potential bombing targets. The sources told +972 and Local Call that, during the first weeks of the war, the army almost completely relied on Lavender, which clocked as many as 37,000 Palestinians as suspected militants — and their homes — for possible air strikes.

9 notes

Text

ai generated images make me increasingly sad and tired the more i see them in more and more casual contexts. i dont know how to explain, but it just fills the world with a bunch of nothing. no matter how visually stunning the pictures might be, there's nothing behind it for me. no dedication, no emotions, no feelings, no hard work or creativity, nothing i can truly think about, admire or enjoy. i dont think thats how art is supposed to be

#not to mention ripping off and plagiarizing real artists' hard work of course#which is a whole other conversation#i cant feel the same love and adoration for whatever the slop machine produces#it will never be the same#im just really tired#anti ai#anti ai art

54K notes

Text

#I'm serious stop doing it#theyre scraping fanfics and other authors writing#'oh but i wanna rp with my favs' then learn to write#studios wanna use ai to put writers AND artists out of business stop feeding the fucking machine!!!!

136K notes

Text

Data-Driven Decision-Making: The Core of PdM Excellence

In the dynamic landscape of industrial operations, the shift towards predictive maintenance (PdM) is not just a technological leap; it's a strategic evolution fueled by the transformative force of data-driven decision-making. In this article, we explore the core of PdM solutions excellence—how harnessing the power of data propels industries towards unparalleled efficiency, cost savings, and operational resilience.

Let's read it out:

The Precision of Proactivity: From Reactive to Data-Driven Maintenance

Dive into the paradigm shift from reactive maintenance practices to the precision of proactive strategies. Explore how data-driven decision-making in PdM empowers industries to forecast equipment failures before they occur, eliminating the costly aftermath of unplanned downtime. Understand the proactive stance that data-driven insights provide, shaping a new era of maintenance precision.

Data as the Silent Sentinel: The Role of IoT and Sensors in PdM

Uncover the silent sentinels that drive data-driven decision-making in PdM—Internet of Things (IoT) devices and sensors. Explore how these interconnected technologies transform equipment into sources of actionable data. Illustrate real-world examples where IoT and sensors play a pivotal role in continuous monitoring, enabling predictive insights that revolutionize maintenance practices.

Beyond the Numbers: The Art and Science of Predictive Analytics

Data-driven decision-making transcends raw numbers—it's an art and a science. Delve into the realm of predictive analytics, where advanced algorithms and machine learning turn data into actionable intelligence. Showcase how these analytical tools not only predict potential issues but also optimize maintenance schedules, ensuring resources are utilized efficiently.

Minimizing Downtime, Maximizing Productivity: The Impact of Data Insights

Highlight the tangible impact of data-driven decisions on minimizing downtime and maximizing productivity. Illustrate scenarios where industries, armed with predictive insights, can schedule maintenance during planned downtime, avoiding disruptions to regular operations. Showcase how this strategic approach enhances overall productivity and contributes to the bottom line.

From Reactive to Proactive: A Success Story in Data-Driven PdM

Engage readers with a success story that exemplifies the journey from reactive to proactive maintenance through data-driven decision-making. Showcase a real-world example where an industry's adoption of PdM and data analytics resulted in significant improvements—be it reduced maintenance costs, increased equipment reliability, or a marked decrease in unplanned downtime.

Strategies for Implementation: Integrating Data-Driven PdM Effectively

Empower industries with practical strategies for implementing data-driven PdM effectively. Address common challenges such as data integration, cybersecurity concerns, and workforce training. Provide actionable insights on creating a seamless transition to a data-centric maintenance approach, ensuring that the integration of PdM becomes a catalyst for operational excellence.

Conclusion

By unraveling the intricacies of data-driven decision-making in the realm of predictive maintenance, this article aims to captivate and inform readers. It positions PdM not just as a technological upgrade but as a strategic imperative, where the mastery of data translates into operational excellence, cost savings, and a future-proof foundation for industrial success.

#predicting energy consumption#leak detection software#smart manufacturing solutions#pdm solutions#pdm#ai machine#machine learning

0 notes

Text

There is no such thing as AI.

How to help the non-technical and less online people in your life navigate the latest techbro grift.

I've seen other people say stuff to this effect but it's worth reiterating. Today in class, my professor was talking about a news article where a celebrity's likeness was used in an ai image without their permission. Then she mentioned a guest lecture about how AI is going to help finance professionals. Then I pointed out, those two things aren't really related.

The term AI is being used to obfuscate details about multiple semi-related technologies.

Traditionally in sci-fi, AI means artificial general intelligence, like Data from Star Trek or the Terminator. This, I shouldn't need to say, doesn't exist. Techbros use the term AI to trick investors into funding their projects. It's largely a grift.

What is the term AI being used to obfuscate?

If you want to help the less online and less tech literate people in your life navigate the hype around AI, the best way to do it is to encourage them to change their language around AI topics.

By calling these technologies what they really are, and encouraging the people around us to know the real names, we can help lift the veil, kill the hype, and keep people safe from scams. Here are some starting points, which I am just pulling from Wikipedia. I'd highly encourage you to do your own research.

Machine learning (ML): an umbrella term for solving problems for which development of algorithms by human programmers would be cost-prohibitive. Instead, the problems are solved by helping machines "discover" their "own" algorithms, without needing to be explicitly told what to do by any human-developed algorithms. (This is the basis of most of the technology people call AI.)

Language model (LM, or LLM for large language model): a probabilistic model of a natural language that can generate probabilities of a series of words, based on the text corpora, in one or multiple languages, that it was trained on. (This would be your ChatGPT.)

Generative adversarial network (GAN): a class of machine learning framework and a prominent approach to generative AI. In a GAN, two neural networks contest with each other in the form of a zero-sum game, where one agent's gain is another agent's loss. (This is the source of some AI images and deepfakes.)

Diffusion models: models that learn the probability distribution of a given dataset. In image generation, a neural network is trained to remove Gaussian noise that has been added to images. After training is complete, it can be used for image generation by starting with a random noise image and denoising it. (This is the more common technology behind AI images, including Dall-E and Stable Diffusion. I added this one to the post afterward, as it was brought to my attention that it is now more common than GANs.)
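If it helps to make the "language model" definition concrete for someone, here is a toy sketch in Python. To be clear about assumptions: this is a simple bigram counter, not how ChatGPT or any real LLM is built (those use large neural networks), and the function names and the three-sentence corpus are made up for illustration. It only shows the core idea of assigning probabilities to a series of words based on the text the model was trained on.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count how often each word follows each other word (hypothetical helper)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark the start and end of a sentence.
        words = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Turn the raw counts after `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    if total == 0:
        return {}
    return {word: count / total for word, count in followers.items()}


def sentence_prob(counts, sentence):
    """Probability of a whole sentence: the product of its word-to-word steps."""
    words = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(words, words[1:]):
        prob *= next_word_probs(counts, prev).get(nxt, 0.0)
    return prob


# Made-up training text, purely for illustration.
toy_corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram_model(toy_corpus)

print(next_word_probs(model, "the"))        # "cat" is twice as likely as "dog"
print(sentence_prob(model, "the cat sat"))  # a word sequence seen in training
print(sentence_prob(model, "the dog ran"))  # "dog ran" never appeared, so 0.0
```

The point for non-technical people: the model has no idea what a cat is. It only knows which words tended to follow which other words in the text it was fed.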

I know these terms are more technical, but they are also more accurate, and they can easily be explained in a way non-technical people can understand. The grifters are using language to give this technology its power, so we can use language to take its power away and let people see it for what it really is.

12K notes

Text

Predicting Energy Consumption Using Machine Learning in Israel

Machine learning, a subset of artificial intelligence, has revolutionized various industries by enabling advanced data analysis and prediction capabilities. One sector that greatly benefits from this technology is energy consumption forecasting. In Israel, where energy efficiency and sustainability are paramount, machine learning is playing a significant role in predicting and managing energy consumption. This article explores how machine learning is transforming the energy landscape in Israel, empowering decision-makers, and fostering a sustainable future.

Individual Energy Consumption Optimization

At the individual level, machine learning algorithms can analyze household data such as weather conditions, occupancy patterns, and appliance usage to predict energy consumption accurately. This information can be used to optimize energy usage, minimize wastage, and reduce electricity bills. By implementing smart meters and IoT devices, Israeli households can gather real-time data, which is then fed into machine learning models to provide personalized energy consumption forecasts and recommendations.
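As a rough illustration of the household-level idea above, the following Python sketch fits a straight line relating outdoor temperature to daily electricity use and then forecasts usage for a new temperature. Everything here is an assumption made for the example: the readings are invented, the function names are hypothetical, and a one-variable least-squares fit stands in for the far richer models (occupancy, appliance usage, smart-meter streams) that a real system would need.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the fitted line to a new input value."""
    slope, intercept = model
    return slope * x + intercept


# Invented readings for illustration only: daily high (deg C) and usage (kWh).
temps_c = [20.0, 25.0, 30.0, 35.0]
usage_kwh = [10.0, 12.0, 14.0, 16.0]

model = fit_line(temps_c, usage_kwh)
forecast = predict(model, 32.0)
print(f"slope={model[0]:.2f} kWh per deg C, intercept={model[1]:.2f} kWh")
print(f"forecast for 32 deg C: {forecast:.1f} kWh")
```

The same pattern, learning parameters from past readings and then applying them to new conditions, underlies the more sophisticated forecasting models, although those would be validated on held-out data before anyone relied on their recommendations.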

Planning for Energy Demand at a Larger Scale

On a larger scale, machine learning algorithms are being utilized to predict energy consumption trends for cities, regions, and even the entire country. These models take into account factors such as population growth, economic indicators, weather patterns, and infrastructure development to forecast energy demands accurately. This enables energy companies and policymakers to plan ahead, ensure grid stability, and make strategic investments in renewable energy sources.

Load Forecasting for Grid Stability

Furthermore, machine learning algorithms can aid in load forecasting, which is crucial for balancing energy supply and demand. By accurately predicting peak loads and consumption patterns, power grid operators can optimize electricity generation and distribution, thereby reducing the risk of blackouts and improving overall grid efficiency. This is particularly important for Israel, where demand for electricity fluctuates due to factors like weather conditions and religious holidays.

Integrating Renewable Energy Sources

Another significant application of machine learning in energy consumption prediction is in the field of renewable energy integration. Israel has been actively investing in solar and wind energy projects to reduce its dependency on fossil fuels. Machine learning models can analyze solar radiation, wind patterns, and historical production data to predict renewable energy generation accurately. This information helps in effective integration of renewables into the existing energy infrastructure, ensuring a smooth and reliable transition to a cleaner energy mix.

Ensuring a Greener Future

In conclusion, machine learning is revolutionizing energy consumption prediction in Israel. By harnessing the power of data analysis and predictive algorithms, decision-makers can optimize energy usage, plan for the future, and promote sustainability. Whether it's at the individual household level or on a national scale, machine learning enables accurate forecasting, load management, and integration of renewable energy sources. As Israel continues to lead in innovation and sustainability, machine learning will remain a vital tool in shaping the country's energy landscape and ensuring a greener future.

As technology continues to advance and more data becomes available, machine learning algorithms will become even more sophisticated, leading to improved energy consumption predictions and increased efficiency in energy management. By embracing these advancements, Israel can continue to set an example for other nations in adopting sustainable practices and achieving energy security.

#leak detection software israel#predicting energy consumption israel#predicting energy consumption using machine learning israel#smart manufacturing solutions israel#buying behavior using machine#predicting buying#energy consumption#energy#machine#ai machine#ai machine learning#israel#ai in israel

0 notes

Text

keep seeing undergrads on social media saying “oh if a prof has a strict no-AI academic integrity policy that’s a red flag for me because that means they don’t know how to design assignments” like sorry girl but that just sounds like you’ve got a case of sour grapes about not being allowed to cheat with the plagiarism machine that doesn’t know how to evaluate sources and kills the environment! I have a strict no-AI policy because if you use AI to write your essays for a writing course it’s literally plagiarism because you didn’t write it and you’re not learning any of the things the course teaches if you just plug a prompt into the plagiarism generator that kills the environment, hope this helps!

#so many people on the internet like ‘why do I have to write my work myself why can’t I just plug it into the cheating machine’#‘why is it plagiarism to not write my own work and instead use the plagiarism machine built from scraping others’ work without consent?’#like. it is because you are not learning anything if you do not actually do the work assigned to you. hope this helps#I personally have not had AI issues because of how I structure my assignments#but it was a HUGE problem in my department last year. so we all have no-AI to write your classwork policies

2K notes

Text

By midmindsarts

#nestedneons#cyberpunk#cyberpunk art#cyberpunk aesthetic#art#cyberpunk artist#cyberwave#scifi#dreamcore#astro#ai art#ai artist#ai artwork#aiartcommunity#thisisaiart#vending machine#urban#urban jungle#nostalgia#nostalgiacore#nostalgeek#liminal#liminal art#liminality#liminal spaces#lofi#lofi art

1K notes

Text

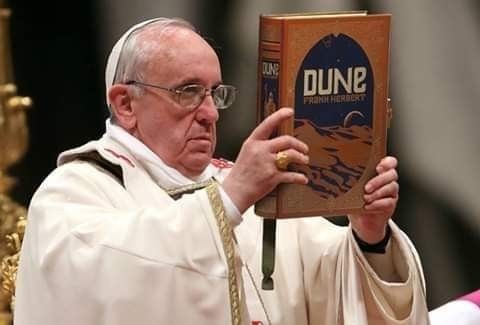

AI Search Engines: Why won't you use us? 😭

Me:

#Sorry but uncle Frank warned us about them thinking machines#Sometimes I wish he were alive just to see what's going on#Frank would totally take a bat to the nearest computer if it asked him to use ai#frank herbert's dune#frank herbert#dune#dune 2021#dune memes#paul atreides#children of dune#dune messiah#chapterhouse: dune#duncan idaho#god emperor of dune

3K notes

Text

I assure you, an AI didn’t write a terrible “George Carlin” routine

There are only TWO MORE DAYS left in the Kickstarter for the audiobook of The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

On Hallowe'en 1974, Ronald Clark O'Bryan murdered his son with poisoned candy. He needed the insurance money, and he knew that Halloween poisonings were rampant, so he figured he'd get away with it. He was wrong:

https://en.wikipedia.org/wiki/Ronald_Clark_O%27Bryan

The stories of Hallowe'en poisonings were just that – stories. No one was poisoning kids on Hallowe'en – except this monstrous murderer, who mistook rampant scare stories for truth and assumed (incorrectly) that his murder would blend in with the crowd.

Last week, the dudes behind the "comedy" podcast Dudesy released a "George Carlin" comedy special that they claimed had been created, holus bolus, by an AI trained on the comedian's routines. This was a lie. After the Carlin estate sued, the dudes admitted that they had written the (remarkably unfunny) "comedy" special:

https://arstechnica.com/ai/2024/01/george-carlins-heirs-sue-comedy-podcast-over-ai-generated-impression/

As I've written, we're nowhere near the point where an AI can do your job, but we're well past the point where your boss can be suckered into firing you and replacing you with a bot that fails at doing your job:

https://pluralistic.net/2024/01/15/passive-income-brainworms/#four-hour-work-week

AI systems can do some remarkable party tricks, but there's a huge difference between producing a plausible sentence and a good one. After the initial rush of astonishment, the stench of botshit becomes unmistakable:

https://www.theguardian.com/commentisfree/2024/jan/03/botshit-generative-ai-imminent-threat-democracy

Some of this botshit comes from people who are sold a bill of goods: they're convinced that they can make a George Carlin special without any human intervention and when the bot fails, they manufacture their own botshit, assuming they must be bad at prompting the AI.

This is an old technology story: I had a friend who was contracted to livestream a Canadian awards show in the earliest days of the web. They booked in multiple ISDN lines from Bell Canada and set up an impressive Mbone encoding station on the wings of the stage. Only one problem: the ISDNs flaked (this was a common problem with ISDNs!). There was no way to livecast the show.

Nevertheless, my friend's boss ordered him to go on pretending to livestream the show. They made a big deal of it, with all kinds of cool visualizers showing the progress of this futuristic marvel, which the cameras frequently lingered on, accompanied by overheated narration from the show's hosts.

The weirdest part? The next day, my friend – and many others – heard from satisfied viewers who boasted about how amazing it had been to watch this show on their computers, rather than their TVs. Remember: there had been no stream. These people had just assumed that the problem was on their end – that they had failed to correctly install and configure the multiple browser plugins required. Not wanting to admit their technical incompetence, they instead boasted about how great the show had been. It was the Emperor's New Livestream.

Perhaps that's what happened to the Dudesy bros. But there's another possibility: maybe they were captured by their own imaginations. In "Genesis," an essay in the 2007 collection The Creationists, EL Doctorow (no relation) describes how the ancient Babylonians were so poleaxed by the strange wonder of the story they made up about the origin of the universe that they assumed that it must be true. They themselves weren't nearly imaginative enough to have come up with this super-cool tale, so God must have put it in their minds:

https://pluralistic.net/2023/04/29/gedankenexperimentwahn/#high-on-your-own-supply

That seems to have been what happened to the Air Force colonel who falsely claimed that a "rogue AI-powered drone" had spontaneously evolved the strategy of killing its operator as a way of clearing the obstacle to its main objective, which was killing the enemy:

https://pluralistic.net/2023/06/04/ayyyyyy-eyeeeee/

This never happened. It was – in the chagrined colonel's words – a "thought experiment." In other words, this guy – who is the USAF's Chief of AI Test and Operations – was so excited about his own made up story that he forgot it wasn't true and told a whole conference-room full of people that it had actually happened.

Maybe that's what happened with the George Carlinbot 3000: the Dudesy dudes fell in love with their own vision for a fully automated luxury Carlinbot and forgot that they had made it up, so they just cheated, assuming they would eventually be able to make a fully operational Battle Carlinbot.

That's basically the Theranos story: a teenaged "entrepreneur" was convinced that she was just about to produce a seemingly impossible, revolutionary diagnostic machine, so she faked its results, abetted by investors, customers and others who wanted to believe:

https://en.wikipedia.org/wiki/Theranos

The thing about stories of AI miracles is that they are peddled by both AI's boosters and its critics. For boosters, the value of these tall tales is obvious: if normies can be convinced that AI is capable of performing miracles, they'll invest in it. They'll even integrate it into their product offerings and then quietly hire legions of humans to pick up the botshit it leaves behind. These abettors can be relied upon to keep the defects in these products a secret, because they'll assume that they've committed an operator error. After all, everyone knows that AI can do anything, so if it's not performing for them, the problem must exist between the keyboard and the chair.

But this would only take AI so far. It's one thing to hear implausible stories of AI's triumph from the people invested in it – but what about when AI's critics repeat those stories? If your boss thinks an AI can do your job, and AI critics are all running around with their hair on fire, shouting about the coming AI jobpocalypse, then maybe the AI really can do your job?

https://locusmag.com/2020/07/cory-doctorow-full-employment/

There's a name for this kind of criticism: "criti-hype," coined by Lee Vinsel, who points to many reasons for its persistence, including the fact that it constitutes an "academic business-model":

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

That's four reasons for AI hype:

to win investors and customers;

to cover customers' and users' embarrassment when the AI doesn't perform;

AI dreamers so high on their own supply that they can't tell truth from fantasy;

a business model for doomsayers who form an unholy alliance with AI companies by parroting their silliest hype in warning form.

But there's a fifth motivation for criti-hype: to simplify otherwise tedious and complex situations. As Jamie Zawinski writes, this is the motivation behind the obvious lie that the "autonomous cars" on the streets of San Francisco have no driver:

https://www.jwz.org/blog/2024/01/driverless-cars-always-have-a-driver/

GM's Cruise division was forced to shutter its SF operations after one of its "self-driving" cars dragged an injured pedestrian for 20 feet:

https://www.wired.com/story/cruise-robotaxi-self-driving-permit-revoked-california/

One of the widely discussed revelations in the wake of the incident was that Cruise employed 1.5 skilled technical remote overseers for every one of its "self-driving" cars. In other words, they had replaced a single low-waged cab driver with 1.5 higher-paid remote operators.

As Zawinski writes, SFPD is well aware that there's a human being (or more than one human being) responsible for every one of these cars – someone who is formally at fault when the cars injure people or damage property. Nevertheless, SFPD and SFMTA maintain that these cars can't be cited for moving violations because "no one is driving them."

But figuring out which person is responsible for a moving violation is "complicated and annoying to deal with," so the fiction persists.

(Zawinski notes that even when these people are held responsible, they're a "moral crumple zone" for the company that decided to enroll whole cities in nonconsensual murderbot experiments.)

Automation hype has always involved hidden humans. The most famous of these was the "mechanical Turk" hoax: a supposed chess-playing robot that was just a puppet operated by a concealed human operator wedged awkwardly into its carapace.

This pattern repeats itself through the ages. Thomas Jefferson "replaced his slaves" with dumbwaiters – but of course, dumbwaiters don't replace slaves, they hide slaves:

https://www.stuartmcmillen.com/blog/behind-the-dumbwaiter/

The modern Mechanical Turk – a division of Amazon that employs low-waged "clickworkers," many of them overseas – modernizes the dumbwaiter by hiding low-waged workforces behind a veneer of automation. The MTurk is an abstract "cloud" of human intelligence (the tasks MTurks perform are called "HITs," which stands for "Human Intelligence Tasks").

This is such a truism that techies in India joke that "AI" stands for "absent Indians." Or, to use Jathan Sadowski's wonderful term: "Potemkin AI":

https://reallifemag.com/potemkin-ai/

This Potemkin AI is everywhere you look. When Tesla unveiled its humanoid robot Optimus, they made a big flashy show of it, promising a $20,000 automaton was just on the horizon. They failed to mention that Optimus was just a person in a robot suit:

https://www.siliconrepublic.com/machines/elon-musk-tesla-robot-optimus-ai

Likewise with the famous demo of a "full self-driving" Tesla, which turned out to be a canned fake:

https://www.reuters.com/technology/tesla-video-promoting-self-driving-was-staged-engineer-testifies-2023-01-17/

The most shocking and terrifying and enraging AI demos keep turning out to be "Just A Guy" (in Molly White's excellent parlance):

https://twitter.com/molly0xFFF/status/1751670561606971895

And yet, we keep falling for it. It's no wonder, really: criti-hype rewards so many different people in so many different ways that it truly offers something for everyone.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/29/pay-no-attention/#to-the-little-man-behind-the-curtain

Back the Kickstarter for the audiobook of The Bezzle here!

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

--

Ross Breadmore (modified)

https://www.flickr.com/photos/rossbreadmore/5169298162/

CC BY 2.0

https://creativecommons.org/licenses/by/2.0/

#pluralistic#ai#absent indians#mechanical turks#scams#george carlin#comedy#body-snatchers#fraud#theranos#guys in robot suits#criti-hype#machine learning#fake it til you make it#too good to fact-check#mturk#deepfakes

2K notes

Text

why neuroscience is cool

space & the brain are like the two final frontiers

we know just enough to know we know nothing

there are radically new theories all. the. time. and even just in my research assistant work i've been able to meet with, talk to, and work with the people making them

it's such a philosophical science

potential to do a lot of good in fighting neurological diseases

things like BCI (brain computer interface) and OI (organoid intelligence) are soooooo new and anyone's game - motivation to study hard and be successful so i can take back my field from elon musk

machine learning is going to rapidly increase neuroscience progress i promise you. we get so caught up in AI stealing jobs but yes please steal my job of manually analyzing fMRI scans please i would much prefer to work on the science PLUS computational simulations will soon >>> animal testing to make all drug testing safer and more ethical !! we love ethical AI <3

collab with...everyone under the sun - psychologists, philosophers, ethicists, physicists, molecular biologists, chemists, drug development, machine learning, traditional computing, business, history, education, literally try to name a field we don't work with

it's the brain eeeeee

#my motivation to study so i can be a cool neuroscientist#science#women in stem#academia#stem#stemblr#studyblr#neuroscience#stem romanticism#brain#psychology#machine learning#AI#brain computer interface#organoid intelligence#motivation#positivity#science positivity#cogsci#cognitive science

1K notes

Text

How to Support Writers During the Scourge of A.I. Bullshit

Do

Interact with us. Talk to us. Reach out to us. Many writers physically write for themselves, but they share for others. To build a community and connect with someone, to learn from them, and to make a genuine connection through something they've created. Comments, questions, rambling tags, they all mean that our writing reached someone who genuinely enjoyed it.

Share our work. This does not mean repost, this means reblog and/or send the link to friends and other potential readers. Some writers may have negative experiences with their work being shared on other platforms (such as discord), but reblogging is almost always appreciated so we can reach a wider audience. Even if you don't have anything to say or comment, it will help.

Ask questions. Many writers are dying to talk about the creative choices they made, the word choice they fought with until they got it just right, the details they added just HOPING someone would notice it. If you wish you could get more context/detail about a piece, odds are all you have to do is ask!

Treat us like humans (because we are). Crazy concept, right? The thing that terrifies me the most about all this is the potential that we take humanity out of the arts. We replace it with A.I. until human creativity becomes irrelevant because it's more convenient and more profitable.

We are not robots making content for your entertainment, we are people sharing stories to make genuine human connections and share our passions. Stories that take hours, days, months, even years of our lives to properly craft. We practice and study and learn so that we can express ourselves skillfully. Even when done as a hobby for fun, there is heart and energy and time put into the final draft.

And finally, Don't

Use A.I. for anything. Because the more it's used, the more advanced it becomes. The more advanced it becomes, the harder it will be to tell a genuine human touch from an A.I. regurgitating what it's been taught.

In the end, this is a plea. I'm begging you. Do not give A.I. the ability to replace us.

#writing#writeblr#writers on tumblr#anti ai writing#anti ai#writing community#writing commentary#zac speaks#rage against the (ai) machine

3K notes

Text

the rise of AI art isn't surprising to us. for our entire lives, the attitude towards our skills has always been - that's not a real thing. it has been consistently, repeatedly devalued.

people treat art - all forms of it - as if it could exist by accident, by rote. they don't understand how much art is in the world. someone designed your home. someone designed the sign inside of your local grocery store. when you quote a character or line from something in media, that's a line a real person wrote.

"i could do that." sure, but you didn't. there's this joke where a plumber comes over to a house and twists a single knob. charges the guy 10k. the guy, furious, asks how the hell the bill is so high. the plumber says - "turning the knob was a dollar. the knowledge is the rest of the money."

the trouble is that nobody believes artists have knowledge. that we actively study. that we work hard, beyond doing our scales and occasionally writing a poem. the trouble is that unless you are already framed in a museum or have a book on a shelf or some kind of product, you aren't really an artist. hell, because of where i post my work, i'll never be considered a poet.

the thing that makes you an artist is choice. the thing that makes all art is choice. AI art is the fetid belief that art is instead an equation. that it must answer a specific question. Even with machine learning, AI cannot make a choice the way we can - because the choices we make have always been personal, complicated. our skills cannot be confined to "prompt and execution." what we are "solving" isn't just a system of numbers - it is how we process our entire existence. it isn't just "2 and 2 is 4", it's staring hard at the numbers and making the four into an alligator. it's rearranging the letters to say ow and it is the ugly drawing we make in the margin.

at some point, you will be able to write something by feeding my work into a machine. it will be perfectly legible and even might sound like me. but a machine doesn't understand why i do these things. it can be taught preferences, habits, statistical probability. it doesn't know why certain vowels sound good to me. it doesn't know the private rules i keep. it doesn't know how to keep evolving.

"but i want something to exist that doesn't exist yet." great. i'm glad you feel creative. go ahead and pay a fucking artist for it.

this is all saying something we all already knew. the sad fucking truth: we have to die to remind you. only when we're gone do we suddenly finally fucking mean something to you. artists are not replicable. we each genuinely have a skill, talent, and process that makes us unique. and there's actual quiet power in everything we do.

#also pay plumbers more. and electricians. and other devalued occupations#idk that this makes sense#but im like#people being so fucking pleased with themselves about the fact they can ''fake'' art#n im like#sure#but what if we stop making things for you huh#what if we stop giving u this stuff anymore#what happens to ur ai art? does it keep growing? does it keep making choices?#why do u need to see us as machines?#''i want X to exist but i don't have the skill to do it''#okay spend literally years of your life studying#''i don't want to do that''#okay pay someone who DID do that#''no i don't think it's a real skill''#okay so. YOU can't do it. and a LOT of people can't do it. but you think WE should be able to?#FOR FREE?#either it has value or it dont baby make up ur OWN mind#btw studying here is not used academically. i think if ur like. constantly knitting.#thats studying#do u spend hours reading and find urself taking notes and learning about writing#ur studying#do you follow other artists and spend a lot of your time trying new things (even unsuccessfully)#that's also studying#etc#was weird to write this thing about choices and then be like. wait why DO i like that

7K notes

Text

art is work. If you didn't put in hard work it's not art. If you didn't bleed then you're taking shortcuts. you have to put in "effort" or your art is worthless. if you don't have a work ethic then you're worthy of derision. if you are unwilling or unable to suffer then you are unworthy of making art

this is so, so obviously a conservative, reactionary sentiment. This is what my fucking dad says about Picasso. "They just want to push the button" is word-for-word what people used to say about electronic music - not "real" instruments, no talent involved, no skill, worthless. how does this not disturb more people? this should disturb you! is everyone just seeing posts criticizing AI and slamming reblog without reading too close, or do people actually agree with this?

usual disclaimer: this is not a "pro-ai" stance. this is a "think about what values you actually have" stance. there are many more coherent ways to criticize it

1K notes

Text

Okay, so you know how search engine results on most popular topics have become useless because the top results are cluttered with page after page of machine-generated gibberish designed to trick people into clicking in so it can harvest their ad views?

And you know how the data sets that are used to train these gibberish-generating AIs are themselves typically machine-generated, via web scrapers using keyword recognition to sort text lifted from wiki articles and blog posts into topical subsets?

Well, today I discovered, quite by accident, that the training-data-gathering robots apparently cannot tell the difference between wiki articles about pop-psych personality typologies (e.g., Myers-Briggs type indicators) and wiki articles about Homestuck classpects.

The upshot is that when a bot that's been trained on the resulting data sets is instructed to write fake mental health resource articles, sometimes it will start telling you about Homestuck.

#media#comics#webcomics#homestuck#classpects#ai#machine learning#psychology#pop psychology#mental health#let me tell you about homestuck

16K notes
