#AMD Performance Benchmarks

Explore tagged Tumblr posts

Text

AMD's Ryzen AI Max+ 395 Claims Up to 68% Better Performance

In the ever-evolving world of technology, where performance metrics and market share often dictate the direction of innovation, AMD has consistently been considered the underdog, especially in the graphics processing unit (GPU) market against giants like Nvidia. However, AMD’s latest move with the introduction of the Ryzen AI Max+ 395 processor could herald a significant shift, particularly in…

#AI Performance in Laptops#AMD Competitive Edge#AMD Performance Benchmarks#AMD vs Nvidia#AMD vs Nvidia 2025#Consumer Choice in Laptops#DLSS vs FSR#Future Tech Trends#Gaming Laptop Market#Gaming Laptop Performance#Handheld Gaming#Laptop GPU Performance#Laptop GPU Revolution#Nvidia Pricing Strategy#Productivity Benchmark#Radeon 8060S iGPU#RDNA 3.5 Architecture#Ryzen AI Max#Ryzen AI Max+ 395#Upscaling Technology#Zen 5 Architecture

0 notes

Text

The Sequence Radar #544: The Amazing DeepMind's AlphaEvolve

New Post has been published on https://thedigitalinsider.com/the-sequence-radar-544-the-amazing-deepminds-alphaevolve/


The model is pushing the boundaries of algorithmic discovery.

Created Using GPT-4o

Next Week in The Sequence:

We are going deeper into DeepMind’s AlphaEvolve. The knowledge section continues with our series about evals by diving into multimodal benchmarks. Our opinion section will discuss practical tips about using AI for coding. The engineering will review another cool AI framework.


📝 Editorial: The Amazing AlphaEvolve

DeepMind has done it again and shipped another model that pushes the boundaries of what we consider possible with AI. AlphaEvolve is a groundbreaking AI system that redefines algorithm discovery by merging large language models with evolutionary optimization. It builds upon prior efforts like AlphaTensor, but significantly broadens the scope: instead of evolving isolated heuristics or functions, AlphaEvolve can evolve entire codebases. The system orchestrates a feedback loop where an ensemble of LLMs proposes modifications to candidate programs, which are then evaluated against a target objective. Promising solutions are preserved and recombined in future generations, driving continual innovation. This architecture enables AlphaEvolve to autonomously invent algorithms of substantial novelty and complexity.
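The propose, evaluate, and select loop described above can be illustrated with a toy sketch. This is not AlphaEvolve's implementation: the "program" here is just a list of numbers, `propose` is a hypothetical stand-in that mutates at random where the real system would query LLMs, `TARGET` and `evaluate` are invented for illustration, and recombination is left out for brevity.

```python
import random

TARGET = [3.0, -1.0, 2.0]  # hypothetical objective, for illustration only


def evaluate(candidate):
    """Score a candidate; higher is better (negative squared distance to TARGET)."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, TARGET))


def propose(parent, rng):
    """Stand-in for the LLM proposal step: randomly perturb one entry of the parent."""
    child = list(parent)
    i = rng.randrange(len(child))
    child[i] += rng.gauss(0.0, 0.5)
    return child


def evolve(generations=200, population_size=8, seed=0):
    """Run a simple mutate-evaluate-select loop and return the best candidate."""
    rng = random.Random(seed)
    population = [[0.0, 0.0, 0.0] for _ in range(population_size)]
    for _ in range(generations):
        children = [propose(rng.choice(population), rng) for _ in range(population_size)]
        # Keep the highest-scoring candidates from parents and children combined.
        population = sorted(population + children, key=evaluate, reverse=True)[:population_size]
    return population[0]


best = evolve()
print(best, evaluate(best))
```

Because parents compete with their children for survival, the best score never gets worse from one generation to the next, which is the property that lets promising solutions carry forward.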

One of AlphaEvolve’s most striking contributions is a landmark result in computational mathematics: the discovery of a new matrix multiplication algorithm that improves upon Strassen’s 1969 breakthrough. For the specific case of 4×4 complex-valued matrices, AlphaEvolve found an algorithm that completes the task in only 48 scalar multiplications, one fewer than the 49 needed when Strassen’s method is applied recursively, and the first improvement in this setting in 56 years. This result highlights the agent’s ability to produce not only working code but mathematically provable innovations that shift the boundary of known techniques. It offers a glimpse into a future where AI becomes a collaborator in theoretical discovery, not just an optimizer.
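For context on the baseline being beaten, the sketch below implements the classic Strassen recursion and counts scalar multiplications. It does not reproduce AlphaEvolve's 48-multiplication algorithm; it only shows that Strassen's scheme, applied recursively to a 4×4 product, uses 7 × 7 = 49 multiplications against 64 for the schoolbook method. The example matrices are arbitrary integers chosen for illustration.

```python
def strassen(A, B, counter):
    """Multiply square matrices whose size is a power of two using Strassen's
    recursion, adding the number of scalar multiplications to counter[0]."""
    n = len(A)
    if n == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    h = n // 2

    def block(M, r, c):
        return [row[c:c + h] for row in M[r:r + h]]

    def add(X, Y):
        return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

    def sub(X, Y):
        return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

    A11, A12, A21, A22 = block(A, 0, 0), block(A, 0, h), block(A, h, 0), block(A, h, h)
    B11, B12, B21, B22 = block(B, 0, 0), block(B, 0, h), block(B, h, 0), block(B, h, h)

    # Seven block products instead of the eight a naive block multiply needs.
    M1 = strassen(add(A11, A22), add(B11, B22), counter)
    M2 = strassen(add(A21, A22), B11, counter)
    M3 = strassen(A11, sub(B12, B22), counter)
    M4 = strassen(A22, sub(B21, B11), counter)
    M5 = strassen(add(A11, A12), B22, counter)
    M6 = strassen(sub(A21, A11), add(B11, B12), counter)
    M7 = strassen(sub(A12, A22), add(B21, B22), counter)

    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)

    top = [r1 + r2 for r1, r2 in zip(C11, C12)]
    bottom = [r1 + r2 for r1, r2 in zip(C21, C22)]
    return top + bottom


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
B = [[2, 0, 1, 3], [1, 1, 0, 2], [0, 4, 2, 1], [3, 1, 1, 0]]
count = [0]
C = strassen(A, B, count)
print(count[0])  # 49 scalar multiplications; the schoolbook method uses 4**3 = 64
```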

AlphaEvolve isn’t confined to abstract theory. It has demonstrated real-world value by optimizing key systems within Google’s infrastructure. Examples include improvements to TPU circuit logic, the training pipeline of Gemini models, and scheduling policies for massive data center operations. In these domains, AlphaEvolve discovered practical enhancements that led to measurable gains in performance and resource efficiency. The agent’s impact spans the spectrum from algorithmic theory to industrial-scale engineering.

Crucially, AlphaEvolve’s contributions are not just tweaks to existing ideas—they are provably correct and often represent entirely new approaches. Each proposed solution is rigorously evaluated through deterministic testing or benchmarking pipelines, with only high-confidence programs surviving the evolutionary loop. This eliminates the risk of brittle or unverified output. The result is an AI system capable of delivering robust and reproducible discoveries that rival those of domain experts.

At the core of AlphaEvolve’s engine is a strategic deployment of Gemini Flash and Gemini Pro—models optimized respectively for high-throughput generation and deeper, more refined reasoning. This combination allows AlphaEvolve to maintain creative breadth without sacrificing quality. Through prompt engineering, retrieval of prior high-performing programs, and an evolving metadata-guided prompt generation process, the system effectively balances exploration and exploitation in an ever-growing solution space.

Looking ahead, DeepMind aims to expand access to AlphaEvolve through an Early Access Program targeting researchers in algorithm theory and scientific computing. Its general-purpose architecture suggests that its application could scale beyond software engineering to domains like material science, drug discovery, and automated theorem proving. If AlphaFold represented AI’s potential to accelerate empirical science, AlphaEvolve points toward AI’s role in computational invention itself. It marks a paradigm shift: not just AI that learns, but AI that discovers.

🔎 AI Research

AlphaEvolve

AlphaEvolve is an LLM-based evolutionary coding agent capable of autonomously discovering novel algorithms and improving code for scientific and engineering tasks, such as optimizing TPU circuits or discovering faster matrix multiplication methods. It combines state-of-the-art LLMs with evaluator feedback loops and has achieved provably better solutions on several open mathematical and computational problems.

Continuous Thought Machines

This paper from Sakana AI introduces the Continuous Thought Machine (CTM), a biologically inspired neural network architecture that incorporates neuron-level temporal dynamics and synchronization to model a time-evolving internal dimension of thought. CTM demonstrates adaptive compute and sequential reasoning across diverse tasks such as ImageNet classification, mazes, and RL, aiming to bridge the gap between biological and artificial intelligence.

DarkBench

DarkBench is a benchmark designed to detect manipulative design patterns in large language models—such as sycophancy, brand bias, and anthropomorphism—through 660 prompts targeting six categories of dark behaviors. It reveals that major LLMs from OpenAI, Anthropic, Meta, Google, and Mistral frequently exhibit these patterns, raising ethical concerns in human-AI interaction.

Sufficient Context

This paper proposes the notion of “sufficient context” in RAG systems and develops an autorater that labels whether context alone is enough to answer a query, revealing that many LLM failures arise not from poor context but from incorrect use of sufficient information. Their selective generation method improves accuracy by 2–10% across Gemini, GPT, and Gemma models by using sufficiency signals to guide abstention and response behaviors.

Better Interpretability

General Scales Unlock AI Evaluation with Explanatory and Predictive Power– University of Cambridge, Microsoft Research Asia, VRAIN-UPV, ETS, et al. This work presents a new evaluation framework using 18 general cognitive scales (DeLeAn rubrics) to profile LLM capabilities and task demands, enabling both explanatory insights and predictive modeling of AI performance at the instance level. The framework reveals benchmark biases, uncovers scaling behaviors of reasoning abilities, and enables interpretable assessments of unseen tasks using a universal assessor trained on demand levels.

J1

This paper introduces J1, a reinforcement learning framework for training LLMs as evaluative judges by optimizing their chain-of-thought reasoning using verifiable reward signals. Developed by researchers at Meta’s GenAI and FAIR teams, J1 significantly outperforms state-of-the-art models like EvalPlanner and even larger-scale models like DeepSeek-R1 on several reward modeling benchmarks, particularly for non-verifiable tasks.

🤖 AI Tech Releases

Codex

OpenAI unveiled Codex, a cloud software engineering agent that can work on many parallel tasks.

Windsurf Wave

AI coding startup Windsurf announced its first generation of frontier models.

Stable Audio Open Small

Stability AI released a new small audio model that can run on mobile devices.

📡AI Radar

Databricks acquired serverless Postgres platform Neon for $1 billion.

Saudi Arabia’s Crown Prince unveiled a new company focused on advancing AI technologies in the region.

Firecrawl is ready to pay up to $1 million for AI agent employees.

Cohere acquired market research platform OttoGrid.

Cognichip, an AI platform for chip design, emerged out of stealth with $33 million in funding.

Legal AI startup Harvey is in talks to raise $250 million.

TensorWave raised $100 million to build an AMD cloud.

Google’s Gemma models surpassed 150 million downloads.

#250#agent#ai#ai agent#AI performance#ai platform#algorithm#Algorithms#AlphaEvolve#AlphaFold#amazing#amd#anthropic#architecture#Art#artificial#Artificial Intelligence#Asia#audio#benchmark#benchmarking#benchmarks#Bias#biases#billion#bridge#chip#Chip Design#Cloud#cloud software

0 notes

Text

Lenovo Legion Go S Review: A Handheld Gaming Experience

The Lenovo Legion Go S is Lenovo’s latest attempt at breaking into the increasingly competitive handheld gaming PC market. Following the original Legion Go, this new “S” variant takes a slightly different approach—aiming to balance performance, portability, and affordability. But does it succeed in delivering a true gaming experience, or is it just another underwhelming Windows handheld? Let’s…

#120Hz display#AAA Gaming#AMD Ryzen Z2 Go#Battery Life#eGPU Support#Game Pass Handheld#Gaming Benchmarks#Gaming console#Gaming Hardware#Gaming on the Go#Gaming Performance#Gaming Tech#Hall Effect Joysticks#Handheld Console Review#Handheld Gaming PC#Indie Gaming#Legion Space#Lenovo Gaming#Lenovo Legion#Lenovo Legion Go S#MicroSD Expansion#PC Gaming Handheld#Portable Gaming#RGB Lighting#ROG Ally Competitor#Steam Deck Alternative#USB4 Support#Windows 11 Gaming#Windows Handheld

0 notes

Text

Choosing the Right Processor: Intel vs AMD Ryzen – 2025 Guide for Gaming, Productivity & Budget

Intel vs AMD Ryzen: 2025 CPU Guide for Gaming & Productivity

Selecting the right processor (CPU) for your laptop or desktop is a critical decision that directly impacts your device’s performance. Whether you’re a gamer, content creator, student, or power user, choosing the right processor ensures that you get the best value and efficiency from your system. In this guide, we’ll dive into the…

#best value CPU#CPU benchmarks#CPU showdown 2023#Intel vs AMD battle#PC performance tips#processor buying advice#Ryzen vs Core i9#tech reviews#tech specs explained

0 notes

Text

AMD Ryzen: A Game-Changer in Processor Technology

Introduction

AMD’s Ryzen processor series has revolutionized the CPU market, offering exceptional performance, value, and versatility. With their innovative architecture and competitive pricing, Ryzen processors have gained immense popularity among gamers, content creators, and everyday users. In this comprehensive guide, we’ll delve into the key features, benefits, and specifications of Ryzen…

#AMD#AMD Ryzen#computer hardware#computer processor#content creation processor#CPU#CPU comparison#CPU market#desktop processor#electronics#future technology#gadget reviews#gadgets#gaming processor#innovation#Intel#laptop processor#mobile technology#PC hardware#processor benchmarks#processor buying guide#processor comparison#processor performance#processor price#processor review#processor specs#Ryzen#Ryzen processor#tech reviews#technology

0 notes

Text

Do AMD Ryzen 7 8700G and Ryzen 5 8600G processors have enough power in gaming to make you forget about the video card?

We adore PCs outfitted with the latest and most high-performance components, running games at the highest possible FPS in 4K resolution. However, what do you do when your gaming budget doesn’t stretch beyond the price of a second-hand flagship phone? AMD addresses this question and caters to budget-conscious gamers and casual players by introducing the 8000G Series. This series could serve as a…


#AM5 socket motherboards#AMD 8000G series#APU benchmarks#CPU overclocking#DDR5 RAM#Gaming performance analysis#Gaming processors#Integrated graphics#Mini-ITX builds#PC gaming on a budget#Performance testing#Ryzen 5 8600G#Ryzen 7 8700G#Small form factor gaming#Synthetic benchmarks

0 notes

Link

The Ultimate Showdown: Comparing the Powerhouses - 7900 XTX vs. 4080

Welcome to the ultimate showdown between two powerhouse graphics cards - the 7900 XTX and the 4080. In the world of modern computing and gaming, graphics cards play a crucial role in delivering stunning visuals and smooth performance. In this article, we will dive deep into the features, specifications, and performance of these two top-tier graphics cards to help you make an informed decision.

Overview of Graphics Cards

Graphics cards are essential components of a computer system, responsible for rendering and displaying images, videos, and animations. They offload the graphics processing tasks from the CPU, allowing for faster and more efficient rendering. A graphics card consists of various components, including a GPU (Graphics Processing Unit), VRAM (Video RAM), cooling system, and connectors.

The history of graphics cards dates back to the 1980s when they were primarily used for displaying simple 2D graphics. Over the years, advancements in technology have led to the development of highly sophisticated graphics cards capable of handling complex 3D graphics, virtual reality, and high-resolution displays.

The 7900 XTX: Unleashing Power

The 7900 XTX, manufactured by a leading graphics card company, is a true powerhouse in the market. This graphics card is packed with cutting-edge features and specifications that deliver exceptional performance.

At its core, the 7900 XTX boasts a powerful architecture designed for optimal performance. With a high memory capacity and bandwidth, it can handle large and complex graphical data with ease. The card's clock speeds ensure smooth and responsive gameplay, even in demanding games.

When it comes to real-world applications, the 7900 XTX shines. Its advanced rendering capabilities make it ideal for content creation tasks such as video editing and 3D modeling. Additionally, it supports AI acceleration, enabling faster processing of machine learning algorithms.

While the 7900 XTX offers impressive performance, it's important to consider its drawbacks. The card can be power-hungry and may require a robust cooling solution to maintain optimal temperatures. Additionally, its high-end features come at a premium price point, making it less accessible for budget-conscious consumers.

The 4080: Next-Generation Marvel

Introducing the 4080, another marvel in the world of graphics cards. This next-generation graphics card, manufactured by a renowned company, pushes the boundaries of performance and innovation.

The 4080 comes equipped with an advanced architecture that takes advantage of the latest technological advancements. With a substantial memory capacity and high bandwidth, it can handle intensive graphical tasks with ease. Its clock speeds ensure smooth and responsive gameplay, delivering an immersive gaming experience.

Similar to the 7900 XTX, the 4080 excels in real-world applications. Its powerful rendering capabilities make it a top choice for content creators, enabling them to work with high-resolution videos and complex 3D models. The card's AI acceleration capabilities also contribute to faster processing of AI algorithms, making it a valuable asset for machine learning tasks.

As with any graphics card, the 4080 has its pros and cons. While it offers exceptional performance, it may also consume a significant amount of power. It is important to consider the power supply requirements and ensure adequate cooling to maintain optimal performance. Additionally, the 4080's high-end features come at a premium price, making it a more suitable choice for enthusiasts and professionals.

Head-to-Head Comparison

Now, let's dive into a head-to-head comparison between the 7900 XTX and the 4080 to see how they stack up against each other in various aspects.

Architecture and Manufacturing Process

The 7900 XTX features a state-of-the-art architecture that leverages advanced technologies to deliver exceptional performance. It utilizes a cutting-edge manufacturing process that ensures efficiency and reliability. On the other hand, the 4080 takes advantage of the latest architectural advancements, coupled with an advanced manufacturing process, to offer superior performance and power efficiency.

Memory Capacity, Bandwidth, and Type

The 7900 XTX boasts an impressive memory capacity, allowing for seamless handling of large graphical data. It also offers high memory bandwidth, enabling faster data transfer between the GPU and VRAM. The card utilizes the latest memory type, providing optimal performance. Similarly, the 4080 offers a substantial memory capacity, coupled with high bandwidth, to deliver exceptional performance. It also utilizes the latest memory type, ensuring efficient data processing.

Clock Speeds, Power Consumption, and Cooling Solutions

Both the 7900 XTX and the 4080 feature high clock speeds, ensuring smooth and responsive gameplay. However, it's important to note that higher clock speeds may result in increased power consumption. Adequate power supply and cooling solutions are crucial to maintain optimal performance for both cards.

Gaming Performance, Frame Rates, and Compatibility

When it comes to gaming performance, both the 7900 XTX and the 4080 excel in delivering stunning visuals and high frame rates. They are compatible with the latest gaming technologies, ensuring a smooth gaming experience with support for features such as real-time ray tracing and variable rate shading.

Performance in Content Creation, AI, and Other Applications

Both the 7900 XTX and the 4080 offer exceptional performance in content creation tasks, such as video editing and 3D modeling. They provide the necessary horsepower to handle complex graphical workloads efficiently. Additionally, their AI acceleration capabilities make them valuable tools for machine learning tasks and other AI applications.

Pricing, Availability, and Value for Money

When considering the pricing aspect, it's important to note that both the 7900 XTX and the 4080 are high-end graphics cards, and they come with premium price tags. Availability may vary depending on market demand and supply. The value for money will depend on individual needs and budget constraints.

FAQs

Q: What is the difference between the 7900 XTX and the 4080 in terms of architecture?
A: The 7900 XTX features a state-of-the-art architecture, while the 4080 takes advantage of the latest architectural advancements. The specific details of their architectures may vary, but both cards are designed to deliver exceptional performance and efficiency.

Q: How much memory does the 7900 XTX have compared to the 4080?
A: The memory capacity of the 7900 XTX and the 4080 can vary depending on the specific model. However, both cards offer substantial memory capacity to handle large graphical data efficiently.

Q: Which graphics card consumes more power - the 7900 XTX or the 4080?
A: Both the 7900 XTX and the 4080 can consume a significant amount of power, especially under heavy load. It is important to ensure that your power supply can handle the power requirements of your chosen graphics card.

Q: Do the 7900 XTX and the 4080 come with adequate cooling solutions?
A: The cooling solutions for the 7900 XTX and the 4080 can vary depending on the specific model and manufacturer. It is recommended to choose a model that offers efficient cooling to maintain optimal temperatures during intense usage.

Q: Are the 7900 XTX and the 4080 compatible with the latest gaming technologies?
A: Yes, both the 7900 XTX and the 4080 are compatible with the latest gaming technologies. They support features such as real-time ray tracing and variable rate shading, providing an immersive gaming experience.

Q: Can the 7900 XTX and the 4080 handle content creation tasks?
A: Absolutely! Both the 7900 XTX and the 4080 are well-suited for content creation tasks. Their powerful rendering capabilities make them ideal for video editing, 3D modeling, and other graphical workloads.

Q: Do the 7900 XTX and the 4080 support AI acceleration?
A: Yes, both the 7900 XTX and the 4080 support AI acceleration. They can handle machine learning algorithms efficiently, making them valuable tools for AI-related tasks.

Q: How do the prices of the 7900 XTX and the 4080 compare?
A: Both the 7900 XTX and the 4080 are high-end graphics cards, and they come with premium price tags. The specific pricing may vary depending on the model and manufacturer.

Q: Are the 7900 XTX and the 4080 readily available in the market?
A: The availability of the 7900 XTX and the 4080 can vary depending on market demand and supply. It is recommended to check with retailers or manufacturers for the latest availability information.

Q: Which graphics card offers better value for money - the 7900 XTX or the 4080?
A: The value for money will depend on individual needs and budget constraints. It is important to consider the specific requirements and intended usage to determine which graphics card best suits your needs.

Conclusion

In conclusion, the 7900 XTX and the 4080 are both powerful graphics cards that offer exceptional performance and features. Each card has its own strengths and weaknesses, and the choice between them ultimately depends on individual preferences, requirements, and budget. Whether you are a gamer, content creator, or AI enthusiast, carefully consider the specifications, performance benchmarks, and pricing before making your decision. Choosing the right graphics card will ensure a smooth and immersive computing and gaming experience.

#4080#7900_xtx#Amd#benchmarks#comparison#Gaming#graphic_cards#nvidia#Performance#price#release_date#specifications

0 notes

Note

Thoughts on the recent 8GB of VRAM Graphics Card controversy with both AMD and NVidia launching 8GB GPUs?

I think tech media's culture of "always test everything on max settings because the heaviest loads will be more GPU bound and therefore a better benchmark" has led to a culture of viewing "max settings" as the default experience and anything that has to run below max settings as actively bad. This was a massive issue for the 6500XT a few years ago as well.

8GiB should be plenty but will look bad at excessive settings.

Now, with that said, it depends on segment. An excessively expensive/high-end GPU being limited by insufficient memory is obviously bad. In the case of the RTX 5060Ti I'd define that as encountering situations where a certain game/res/settings combination is fully playable, at least on the 16GiB model, but the 8GiB model ends up much slower or even unplayable. On the other hand, if the game/res/settings combination is "unplayable" (excessively low framerate) on the 16GiB model anyway I'd just class that as running inappropriate settings.

Looking through the TechPowerUp review: Avowed, Black Myth: Wukong, Dragon Age: The Veilguard, God of War Ragnarök, Monster Hunter Wilds and S.T.A.L.K.E.R. 2: Heart of Chernobyl all see significant gaps between the 8GiB and 16GiB cards at high res/settings where the 16GiB was already "unplayable". These are in my opinion inappropriate game/res/setting combinations to test at. They showcase an extreme situation that's not relevant to how even a higher capacity card would be used. Doom Eternal sees a significant gap at 1440p and 4K max settings without becoming "unplayable".

F1 24 goes from 78.3 to 52.0 FPS at 4K max so that's a giant gap that could be said to also impact playability. Spider-Man 2 (wow they finally made a second spider-man game about time) does something similar at 1440p. The Last of Us Pt.1 has a significant performance gap at 1080p, and the 16GiB card might scrape playability at 1440p, but the huge gap at 4K feels like another irrelevant benchmark of VRAM capacity.
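The rule of thumb used here (a VRAM gap only matters if the 16GiB card was playable in the first place) can be written down as a small check. This is a sketch of the reasoning, not a standard metric: the 30 FPS playability floor and the 10% "significant gap" cutoff are assumptions picked for illustration, and only the F1 24 figures come from the numbers above.

```python
def relative_drop(fps_16gib, fps_8gib):
    """Fraction of performance lost going from the 16GiB card to the 8GiB card."""
    return (fps_16gib - fps_8gib) / fps_16gib


def gap_is_relevant(fps_16gib, fps_8gib, playable_fps=30.0, min_drop=0.10):
    """A gap counts only if the 16GiB card was playable to begin with.

    playable_fps and min_drop are hypothetical thresholds, not values from the post.
    """
    return fps_16gib >= playable_fps and relative_drop(fps_16gib, fps_8gib) >= min_drop


f1_drop = relative_drop(78.3, 52.0)
print(f"F1 24 at 4K max: {f1_drop:.1%} slower on the 8GiB card")  # 33.6% slower
print(gap_is_relevant(78.3, 52.0))  # True: the 16GiB card is comfortably playable
```

A result where the 16GiB card itself sits below the playability floor returns `False` no matter how large the gap is, which matches the "inappropriate settings" argument.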

All the other games were pretty close between the 8GiB and 16GiB cards.

Overall, I think this creates a situation where you have a large artificial performance difference from these tests that would be unplayable anyway. The 8GiB card isn't bad - the benchmarks just aren't fair to it.

Now, $400 for a GPU is still fucking expensive, and Nvidia not sampling it is an attempt to trick people who might not realise it can be limiting sometimes, but that's a whole other issue.

4 notes


Text

I upgraded my PC this week. My old setup had NVIDIA RTX 2080 Ti, i7-8700K and 32 GB of RAM. My new PC has NVIDIA RTX 5080, AMD Ryzen 7 9800X3D and 64 GB RAM.

I ran the Monster Hunter Wilds benchmark before and after upgrade to compare the leap between the two rigs on a resolution of 2560x1440. Tests were run on identical Ultra graphics settings, without ray tracing and without frame generation.

My old PC only managed a score of "Playable" and an average of 35.60 FPS.

The new PC got a score of "Excellent" and reached an average of 116.35 FPS.

I also ran an additional test with ray tracing (quality) and with frame generation on the new PC. With frame gen the average framerate jumped to 174.20 FPS.
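To put the benchmark numbers above in perspective, here is a quick calculation of the speedup and the per-frame time. It uses only the three averages quoted in this post; note that the frame generation run also had ray tracing enabled, so that last ratio is not a like-for-like comparison.

```python
old_fps = 35.60         # old PC, Ultra, no ray tracing, no frame generation
new_fps = 116.35        # new PC, same settings
new_fps_rt_fg = 174.20  # new PC, ray tracing (quality) plus frame generation


def frame_time_ms(fps):
    """Average time spent on one frame, in milliseconds."""
    return 1000.0 / fps


speedup = new_fps / old_fps
fg_ratio = new_fps_rt_fg / new_fps

print(f"Speedup at identical settings: {speedup:.2f}x")
print(f"Frame time: {frame_time_ms(old_fps):.2f} ms -> {frame_time_ms(new_fps):.2f} ms")
print(f"Ray tracing + frame gen vs. plain run on the new PC: {fg_ratio:.2f}x")
```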

I'm hoping the hardware can keep its cool under heavy stress. I had to get a Fractal Design Pop Silent case because I needed a case that's shut from the top (those are rare to find these days) and one that isn't a butt-ugly tacky light show. I keep my glass / mug on the edge of my desk and my PC is placed on the right side of the desk on the floor. At times I've spilled water or tea on my PC and just a few weeks ago my husband toppled my glass - right on top of my PC. So you can probably understand why it would be hazardous to have a PC case that has air vents on the top!

The case couldn't accommodate a liquid cooler, so the company that built my PC suggested they could install a Noctua NH-D15 Chromax Black CPU air cooler that should be on par with or close to liquid cooler efficiency.

Unfortunately I didn't manage to get any decent pictures of the new PC's innards because the tempered glass window seems to reflect almost like a mirror when trying to photograph it.

I'll have to test how this new PC performs when playing, perhaps with Doom: The Dark Ages or Clair Obscur: Expedition 33. Or maybe I'll fire up a Picross game or a JRPG from 20 years ago… For now most of my time has gone to tweaking all kinds of settings to my liking, installing browsers, storefront clients and such.

So long "Skynet2019" and thanks for your years of unwavering service. Welcome to the house "Skynet2025"!

3 notes


Text

how far is too far

to take this old socket 423 motherboard? it’s really only limited by the boot drive speed and the cpu cooling/fans at this point, it’s nearly maxed out

so socket 423 was the oldest Pentium IV stuff, though it may have supported some celeron chips if i’m not mistaken. The fastest cpu was 2.0GHz with 400MHz RD-RAM bus with 256KB of L2 cache.

What I have was someone’s rejected server board from 1999 that I got used in 2000 or 2001 maybe, and this has been its third refresh 😅 but for the slowest most uncommon p4, it is great, it has ATA100 RAID and that can mean using up to 4 drives as one for more speed or redundancy.

it has irda for some infrared data, several old school serial ports, parallel, AGP 4x, and 5 PCI ports.

I had already given it 2GB of ECC RAM, some multiformat DVD burners (one sports DVD-RAM support), a 3.5” floppy, and even a SATA soft RAID PCI card,

but I am questioning now that SSDs are cheap, whether 4 SSDs would be faster over 33MHz PCI soft RAID or ATA100 hardware based RAID at the full 100MB/s? maybe this thing would be seriously fast, for what it is
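A rough back-of-the-envelope way to compare the two options is to cap the striped drive speed at whatever the link can carry. The sketch below is only a best-case estimate under loud assumptions: 32-bit 33MHz PCI tops out around 133MB/s shared across every card on the bus, ATA100 is 100MB/s per channel, the onboard RAID controller is assumed to have two channels and not to sit behind the same PCI bus (unverified for this board), and 500MB/s per SSD is a hypothetical figure.

```python
# Theoretical peak of 32-bit PCI at 33.33 MHz: 4 bytes per clock, shared by all cards.
PCI_32BIT_33MHZ_MBPS = 33.33e6 * 4 / 1e6  # about 133 MB/s
ATA100_MBPS_PER_CHANNEL = 100.0


def array_throughput(n_drives, drive_mbps, link_mbps):
    """Best case for striping: drive speeds add up until the link saturates."""
    return min(n_drives * drive_mbps, link_mbps)


ssd_mbps = 500.0  # assumed sequential speed of one SATA SSD (hypothetical)

pci_soft_raid = array_throughput(4, ssd_mbps, PCI_32BIT_33MHZ_MBPS)
# Assumes two ATA100 channels on the onboard controller, with no PCI bottleneck behind it.
ata100_raid = array_throughput(4, ssd_mbps, 2 * ATA100_MBPS_PER_CHANNEL)

print(f"SATA card on PCI: about {pci_soft_raid:.0f} MB/s at best")
print(f"Onboard ATA100 RAID: about {ata100_raid:.0f} MB/s at best")
```

Either way the link, not the SSDs, is the limit, so a real benchmark on the board is the only way to settle it.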

I also learned that AGP 4x was as much real bandwidth as any GPUs of the era ever could saturate since AGP8x didn’t last long before being replaced by PCI-express 16x slots for most GPUs… So AGP 8x cards work just fine here.

I found a GeForce 7600 GS 512MB model that needed recapped, and so I haven’t tried it; then i found an ATi HD 3650 512MB AGP8x GPU to try in the meantime. Originally in 99 or 2000 this box was rocking an ATi All-In-Wonder Rage 128 card, but I foolishly gave it the ATi All-In-Wonder Radeon 8500dv for the greater 64MB vram and a couple firewire 1394a ports. It was a fine card, but analog TV is gone, rendering the tuner useless, and the A/D/A converter quality was actually pretty bad on this card with a lot of extra noise added on anything you plugged in. Once I got a miniDV camcorder I just used its converters for everything, as it looked so much better!

I also got it with a Creative Labs SoundBlaster Live! 5.1 card, which was super cool at the time, but until I had any 5.1 speakers to use it with, it was pointless versus the nearly-identical 2-channel onboard AC’97 chip, or the other nearly identical AC’97 chip on the Radeon card! I wasn’t even bright enough to disable the onboard audio in the BIOS at the time, so I was always doing some dumb routing and wasting resources on all 3 sound cards

Then I did something even more dumb later on and got a GeForce MX420 card with the same 64MB vRAM but less rendering capability than the Radeon card at the time. (As I understand it now, these were more of a display adapter than a GPU?)

I’ll try to remember to benchmark these options against one another at some point, bc i have a feeling the GPU and boot drive is going to make all the difference in the gaming performance

Beyond all that, the peripheral cards made a big difference too! I added a VIA 1394 card, an NEC USB2.0 card, and a SiS combo USB2.0 and 1394a card. The NEC seemed much more snappy at USB2.0! I also accidentally disabled the system once by plugging the irDA to the i2c SMbus header below it by mistake. So it has i2c support! I may try to learn how to use it and make a PWM fan controller for it! It would be sick to have something read the core temps and apply the PWM slopes for all the fans accordingly.
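The "read the temps and apply the PWM slopes" idea boils down to a piecewise-linear fan curve. Here is a minimal sketch of just that mapping; the curve points are made up for illustration, and actually reading the sensor over SMBus and driving the fan pin is hardware-specific and not shown.

```python
# (temperature in °C, PWM duty in %) breakpoints; hypothetical values for illustration.
FAN_CURVE = [(30.0, 20.0), (50.0, 40.0), (65.0, 70.0), (75.0, 100.0)]


def duty_for_temp(temp_c, curve=FAN_CURVE):
    """Map a temperature to a PWM duty cycle by interpolating between curve points."""
    if temp_c <= curve[0][0]:
        return curve[0][1]   # below the first point: hold the minimum duty
    if temp_c >= curve[-1][0]:
        return curve[-1][1]  # above the last point: run flat out
    for (t0, d0), (t1, d1) in zip(curve, curve[1:]):
        if t0 <= temp_c <= t1:
            slope = (d1 - d0) / (t1 - t0)  # duty % per degree on this segment
            return d0 + slope * (temp_c - t0)


for temp in (25, 40, 57.5, 80):
    print(f"{temp} °C -> {duty_for_temp(temp):.0f}% duty")
```

Each fan could get its own list of breakpoints, which is what makes the per-fan "slopes" adjustable without touching the code.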

IF I can do that, i’ll definitely swap CPU fans! I heard some socket 775 coolers can be adapted to fit! I’d love to know if anyone has experience with adapting the two. If all fails, I have a drill press and a smart g/f…. Maybe I’ll post any updates

should I burden this beast with Vista to run DX10??

#it kinda went from the worst use of said computer to maybe as good as it can be!#technomancy#retro computing#almost

2 notes


Text

Best PC for Data Science & AI with 12GB GPU at Budget Gamer UAE

Are you looking for a powerful yet affordable PC for Data Science, AI, and Deep Learning? Budget Gamer UAE brings you the best PC for Data Science with 12GB GPU that handles complex computations, neural networks, and big data processing without breaking the bank!

Why Do You Need a 12GB GPU for Data Science & AI?

Before diving into the build, let’s understand why a 12GB GPU is essential:

✅ Handles Large Datasets – More VRAM means smoother processing of big data.

✅ Faster Deep Learning – Train AI models efficiently with CUDA cores.

✅ Multi-Tasking – Run multiple virtual machines and experiments simultaneously.

✅ Future-Proofing – Avoid frequent upgrades with a high-capacity GPU.
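As a rough illustration of why VRAM capacity matters for training, the sketch below estimates an upper bound on model size from memory alone. It assumes plain FP32 training with the Adam optimizer, which needs about 16 bytes per parameter (4 for weights, 4 for gradients, 8 for the two optimizer moment buffers), and it ignores activations and framework overhead, so real limits are lower. The function name and the `usable_fraction` parameter are hypothetical.

```python
# FP32 weights (4 bytes) + gradients (4 bytes) + two Adam moment buffers (8 bytes).
BYTES_PER_PARAM_FP32_ADAM = 4 + 4 + 8


def max_trainable_params(vram_gb, bytes_per_param=BYTES_PER_PARAM_FP32_ADAM,
                         usable_fraction=1.0):
    """Upper bound on parameter count that fits in VRAM, ignoring activations."""
    return int(vram_gb * 1e9 * usable_fraction / bytes_per_param)


print(max_trainable_params(12))  # 750000000
print(max_trainable_params(8))   # 500000000
```

Mixed precision, smaller batch sizes, or a different optimizer change the bytes-per-parameter figure, so treat the output as an order-of-magnitude guide only.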

Best Budget Data Science PC Build – UAE Edition

Here’s a cost-effective yet high-performance PC build tailored for AI, Machine Learning, and Data Science in the UAE.

1. Processor (CPU): AMD Ryzen 7 5800X

8 Cores / 16 Threads – Perfect for parallel processing.

3.8GHz Base Clock (4.7GHz Boost) – Speeds up data computations.

PCIe 4.0 Support – Faster data transfer for AI workloads.

2. Graphics Card (GPU): NVIDIA RTX 3060 12GB

12GB GDDR6 VRAM – Ideal for deep learning frameworks (TensorFlow, PyTorch).

CUDA Cores & Tensor Cores – Accelerate AI model training.

DLSS Support – Boosts performance in AI-based rendering.

3. RAM: 32GB DDR4 (3200MHz)

Smooth Multitasking – Run Jupyter Notebooks, IDEs, and virtual machines effortlessly.

Future-Expandable – Upgrade to 64GB if needed.

4. Storage: 1TB NVMe SSD + 2TB HDD

Ultra-Fast Boot & Load Times – NVMe SSD for OS and datasets.

Extra HDD Storage – Store large datasets and backups.

5. Motherboard: B550 Chipset

PCIe 4.0 Support – Maximizes GPU and SSD performance.

Great VRM Cooling – Ensures stability during long AI training sessions.

6. Power Supply (PSU): 650W 80+ Gold

Reliable & Efficient – Handles high GPU/CPU loads.

Future-Proof – Supports upgrades to more powerful GPUs.

7. Cooling: Air or Liquid Cooling

Aftermarket Air Cooler – The Ryzen 7 5800X does not ship with a stock cooler, so a tower air cooler is needed even for moderate workloads.

Optional AIO Liquid Cooler – Better for overclocking and heavy tasks.

8. Case: Mid-Tower with Good Airflow

Multiple Fan Mounts – Keeps components cool during extended AI training.

Cable Management – Neat and efficient build.

Why Choose Budget Gamer UAE for Your Data Science PC?

✔ Custom-Built for AI & Data Science – No pre-built compromises.

✔ Competitive UAE Pricing – Best deals on high-performance parts.

✔ Expert Advice – Get guidance on the perfect build for your needs.

✔ Warranty & Support – Reliable after-sales service.

Performance Benchmarks – How Does This PC Handle AI Workloads?

| Task | Performance |
| --- | --- |
| TensorFlow Training | 2x faster than 8GB GPUs |
| Python Data Analysis | Smooth with 32GB RAM |
| Neural Network Training | Handles large models efficiently |
| Big Data Processing | NVMe SSD reduces load times |

FAQs – Data Science PC Build in UAE

1. Is a 12GB GPU necessary for Machine Learning?

Yes! More VRAM allows training larger models without memory errors.

2. Can I use this PC for gaming too?

Absolutely! The RTX 3060 12GB crushes 1080p/1440p gaming.

3. Should I go for Intel or AMD for Data Science?

AMD Ryzen offers better multi-core performance at a lower price.

4. How much does this PC cost in the UAE?

Approx. AED 4,500 – AED 5,500 (depends on deals & upgrades).

5. Where can I buy this PC in the UAE?

Check Budget Gamer UAE for the best custom builds!

Final Verdict – Best Budget Data Science PC in UAE

If you're looking for the best PC for Data Science, the 12GB GPU build from Budget Gamer UAE is the perfect balance of power and affordability. With a Ryzen 7 CPU, RTX 3060, 32GB RAM, and ultra-fast storage, it handles heavy workloads like a champ.

#12GB Graphics Card PC for AI#16GB GPU Workstation for AI#Best Graphics Card for AI Development#16GB VRAM PC for AI & Deep Learning#Best GPU for AI Model Training#AI Development PC with High-End GPU

2 notes


Text

Monster Hunter Wilds Gets PC Benchmark Tool — See How Your System Stacks Up

Capcom's Monster Hunter Wilds is set to be a demanding title for PCs, featuring massive creatures, numerous on-screen characters, and expansive maps to explore. With the game’s release just around the corner, Capcom has provided a PC benchmark tool to help players assess their system's performance capabilities.

The benchmark tool is available on Steam and requires players to have the Steam client installed, along with space to download the benchmark. It can evaluate a PC's performance and assign a score of up to 20,000. To play Monster Hunter Wilds, a system must score at least 10,250; lower scores may require hardware adjustments for optimal gameplay.

Minimum System Requirements

The minimum system requirements for Monster Hunter Wilds are as follows:

- Resolution: 1080p (FHD)
- Frame Rate: 60fps (with Frame Generation enabled)
- OS: Windows 10 (64-bit required) / Windows 11 (64-bit required)
- Processor:
  - Intel Core i5-10400
  - Intel Core i3-12100
  - AMD Ryzen 5 3600
- Memory: 16GB
- Graphics:
  - GeForce RTX 2060 Super (VRAM 8GB)
  - Radeon RX 6600 (VRAM 8GB)
- VRAM: 8GB or more required
- Storage: 75GB SSD (DirectStorage supported)

With this hardware, the game is expected to run at 1080p (upscaled) / 60fps (with Frame Generation enabled) under the "Medium" graphics setting.

Capcom also released a new trailer for Monster Hunter Wilds, showcasing the Iceshard Cliffs and the formidable monsters that inhabit the area. This glimpse highlights the need for robust processing power to fully experience the game.

Open Beta Test

Players eager for an early glimpse can participate in the second open beta test for Monster Hunter Wilds, scheduled for two weekends: February 6-9 and February 13-16. Most of the content will mirror that of the first beta held last November, giving players access to character-creation tools and a story trial, along with a Doshaguma hunt.

In this second beta, the Doshaguma hunt serves as a gateway to further hunts against the Gypceros and Arkveld. Participants will receive a Felyne pendant or Seikret, along with a bonus item pack that will carry over to the main game upon its release. Monster Hunter Wilds is set to launch on Xbox Series X|S, PlayStation 5, and PC on February 28. Players looking to score bonus items ahead of time can do so by playing the mobile game Monster Hunter Now.
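The score thresholds quoted in the article (a maximum of 20,000 and a playable minimum of 10,250) can be turned into a small check. This is only an illustrative sketch: the function and constant names are invented here, and the benchmark tool itself does not expose anything like this.

```python
MAX_SCORE = 20_000           # highest score the benchmark assigns, per the article
PLAYABLE_THRESHOLD = 10_250  # minimum score quoted as needed to play


def assess(score):
    """Report whether a benchmark score clears the quoted threshold."""
    if not 0 <= score <= MAX_SCORE:
        raise ValueError(f"score must be between 0 and {MAX_SCORE}")
    margin = score - PLAYABLE_THRESHOLD
    return {"playable": margin >= 0, "margin": margin}


if __name__ == "__main__":
    for score in (9_000, 10_250, 15_500):
        print(score, assess(score))
```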

3 notes


Text

as someone who was completely (almost) not paying attention to the gpu market at all until 2 months ago when i suddenly needed to get something new, but im still in the rabbithole of watching benchmarks and reviews for fun now - the impression im getting is that when deciding on buying one:

the high end gpu releases first (which i didnt know), most people cant afford it, everyone waits for the less expensive ones in the series (talking about nvidia)

the other ones get released, they're still expensive, performance not worth the price, wait for other versions?

then ti super duper uber different but the same gpus get released, prices go down a bit, time to get one! but wait, no no dont get one now - wait for the 5000 series its coming soon

wait for the 5000 series to get cheaper, wait for the other variations of the gpus to be released, wait for benchmarks and reviews, wait AMD is cooking but no they're not ha ha raytracing, but are they? but will they? but wont they?

repeat cycle

did i get it right is this how people function nowadays lol i really feel like all this waiting is stupid like, get what you can when you need it - if a year later you find a reason to get something new then sell what you got to cover most of the price? am i wrong here? its what i did and i dont feel like im going to want anything new for at least 3-4 years anyways

#plus i got my gpu hella cheaper than it was online bc i went to the actual store and the guy that works there helped a lott#bro was so helpful im so glad i chose that place

4 notes


Text

Record-breaking run on Frontier sets new bar for simulating the universe in exascale era

The universe just got a whole lot bigger—or at least in the world of computer simulations, that is. In early November, researchers at the Department of Energy's Argonne National Laboratory used the fastest supercomputer on the planet to run the largest astrophysical simulation of the universe ever conducted.

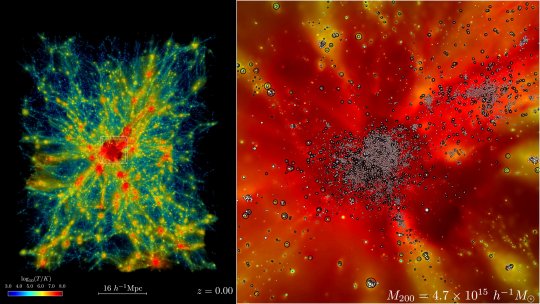

The achievement was made using the Frontier supercomputer at Oak Ridge National Laboratory. The calculations set a new benchmark for cosmological hydrodynamics simulations and provide a new foundation for simulating the physics of atomic matter and dark matter simultaneously. The simulation size corresponds to surveys undertaken by large telescope observatories, a feat that until now has not been possible at this scale.

"There are two components in the universe: dark matter—which as far as we know, only interacts gravitationally—and conventional matter, or atomic matter," said project lead Salman Habib, division director for Computational Sciences at Argonne.

"So, if we want to know what the universe is up to, we need to simulate both of these things: gravity as well as all the other physics including hot gas, and the formation of stars, black holes and galaxies," he said. "The astrophysical 'kitchen sink' so to speak. These simulations are what we call cosmological hydrodynamics simulations."

Not surprisingly, the cosmological hydrodynamics simulations are significantly more computationally expensive and much more difficult to carry out compared to simulations of an expanding universe that only involve the effects of gravity.

"For example, if we were to simulate a large chunk of the universe surveyed by one of the big telescopes such as the Rubin Observatory in Chile, you're talking about looking at huge chunks of time—billions of years of expansion," Habib said. "Until recently, we couldn't even imagine doing such a large simulation like that except in the gravity-only approximation."

The supercomputer code used in the simulation is called HACC, short for Hardware/Hybrid Accelerated Cosmology Code. It was developed around 15 years ago for petascale machines. In 2012 and 2013, HACC was a finalist for the Association for Computing Machinery's Gordon Bell Prize in computing.

Later, HACC was significantly upgraded as part of ExaSky, a special project led by Habib within the Exascale Computing Project, or ECP. The project brought together thousands of experts to develop advanced scientific applications and software tools for the upcoming wave of exascale-class supercomputers capable of performing more than a quintillion, or a billion-billion, calculations per second.

As part of ExaSky, the HACC research team spent the last seven years adding new capabilities to the code and re-optimizing it to run on exascale machines powered by GPU accelerators. A requirement of the ECP was for codes to run approximately 50 times faster than they could before on Titan, the fastest supercomputer at the time of the ECP's launch. Running on the exascale-class Frontier supercomputer, HACC was nearly 300 times faster than the reference run.
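The speedup figures above reduce to simple arithmetic. The sketch below uses only the numbers quoted in the article, treating "nearly 300" as 300 for an upper-bound estimate; the variable names are illustrative.

```python
ECP_TARGET_SPEEDUP = 50  # required speedup over the Titan reference run
HACC_SPEEDUP = 300       # "nearly 300" in the article, so this is an upper bound

# An exascale machine performs more than a quintillion operations per second,
# i.e. a billion-billion.
EXA = 10**18
assert EXA == 10**9 * 10**9

# How far past the ECP requirement the Frontier run went.
margin_over_target = HACC_SPEEDUP / ECP_TARGET_SPEEDUP

if __name__ == "__main__":
    print(f"HACC exceeded the ECP target by up to {margin_over_target:.0f}x")
```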

The novel simulation achieved its record-breaking performance by using approximately 9,000 of Frontier's compute nodes, powered by AMD Instinct MI250X GPUs. Frontier is located at ORNL's Oak Ridge Leadership Computing Facility, or OLCF.

IMAGE: A small sample from the Frontier simulations reveals the evolution of the expanding universe in a region containing a massive cluster of galaxies from billions of years ago to present day (left). Red areas show hotter gasses, with temperatures reaching 100 million Kelvin or more. Zooming in (right), star tracer particles track the formation of galaxies and their movement over time. Credit: Argonne National Laboratory, U.S. Dept. of Energy

vimeo

In early November 2024, researchers at the Department of Energy's Argonne National Laboratory used Frontier, the fastest supercomputer on the planet, to run the largest astrophysical simulation of the universe ever conducted. This movie shows the formation of the largest object in the Frontier-E simulation. The left panel shows a 64x64x76 Mpc/h subvolume of the simulation (roughly 1e-5 the full simulation volume) around the large object, with the right panel providing a closer look. In each panel, we show the gas density field colored by its temperature. In the right panel, the white circles show star particles and the open black circles show AGN particles. Credit: Argonne National Laboratory, U.S. Dept. of Energy
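As a rough consistency check on the caption's numbers, the subvolume size and the "roughly 1e-5" fraction imply an approximate full simulation volume. The sketch below assumes, purely for illustration, that the full simulation is a cubic box; the caption does not state the box shape or size.

```python
# Subvolume dimensions from the caption, in Mpc/h.
SUB_DIMS = (64, 64, 76)
# The caption says the subvolume is "roughly 1e-5" of the full volume.
FRACTION = 1e-5

sub_volume = SUB_DIMS[0] * SUB_DIMS[1] * SUB_DIMS[2]  # (Mpc/h)^3
full_volume = sub_volume / FRACTION                   # implied full volume

# Assumption: a cubic box, so the side length is the cube root of the volume.
implied_side = full_volume ** (1 / 3)

if __name__ == "__main__":
    print(f"subvolume:    {sub_volume} (Mpc/h)^3")
    print(f"full volume:  {full_volume:.3e} (Mpc/h)^3")
    print(f"implied side: about {implied_side:.0f} Mpc/h (if cubic)")
```

Because the fraction is only approximate, the implied side length (a bit over 3,000 Mpc/h) is an order-of-magnitude figure, not a quoted specification.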

3 notes


Text

Dominate the Battlefield: Intel Battlemage GPUs Revealed

Intel Arc GPU

After releasing its first-generation Arc Alchemist GPUs in 2022, Intel now seems to be on a two-year cadence, as seen by the appearance of Battlemage in a shipping manifest. This suggests that Battlemage GPUs are being supplied to Intel’s partners for testing, and it is the first proof we’ve seen of them existing in the real world. Given the timing, Intel is probably getting ready for a launch later this year.

Intel is shipping two Battlemage GPUs to its partners, per a recently discovered shipping manifest that was published on X. The GPUs’ designations, G10 and G21, suggest Intel is taking a similar approach to Alchemist, offering one more or less high-end SKU for “mainstream” gamers and one that is less expensive.

Intel Arc Graphics Cards

Intel Arc A380

As you may remember, Intel had previously announced plans to launch four GPUs in the Alchemist family: the A380, A580, A750, and A770. However, only the latter two were officially announced. We anticipate that the G10 will replace the A750 and A770 as the part Intel most likely delivers at launch for midrange gamers.

This is the first we’ve heard of cards being “in the wild,” although two Battlemage GPUs have shown up in the SiSoftware Sandra benchmark database before. The fact that both of those cards have 12GB of VRAM stood out as particularly noteworthy. It suggests that Intel has raised its baseline VRAM allocation from 8GB, which is a wise decision in 2024. As Intel’s CEO stated earlier this year, Battlemage was “in the labs” in January.

Intel Arc A770

A previously released roadmap from Intel indicates that the G10 is a 150W part and the G21 is a 225W part. Intel is expected to reveal notable improvements in Battlemage’s AI capabilities, upscaling performance, and ray tracing performance. Since the previous A750 and A770 were 225W GPUs, it seems Battlemage will follow the same script when it comes to its efficiency goals. The company has previously said that it wants to aim for this “sweet spot” in power consumption, where one PCIe power cable is needed rather than two (or three).

While the industry as a whole is anxious to see how competitive Intel will be with its second bite at the apple, gamers aren’t exactly waiting for Intel’s GPUs as impatiently as they do for Nvidia’s or AMD’s next generation. Although the company’s Alchemist GPUs were hard to recommend when they first came out, its driver updates have since delivered significant performance improvements.

The Intel Battlemage G10 and G21 next-generation discrete GPUs, which have been observed in shipping manifests, are expected to target the entry-level to mid-range market. We already know from the horse’s mouth that Intel is working on its next generation of discrete graphics processors, code-named Battlemage. According to the shipping excerpts, the company is developing at least two graphics processing units.
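The "one cable" sweet spot follows from the nominal PCIe power limits: 75W from the slot, 75W per 6-pin connector, and 150W per 8-pin connector. The sketch below works through that arithmetic; it is a simplification with illustrative names, and real boards may choose more connectors than the minimum for headroom.

```python
import math

SLOT_W = 75                                # nominal power available from the PCIe slot
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}  # nominal limits per auxiliary connector


def cables_needed(board_power_w, connector="8-pin"):
    """Minimum number of auxiliary power cables for a given board power."""
    extra = max(0, board_power_w - SLOT_W)  # power the slot cannot supply
    return math.ceil(extra / CONNECTOR_W[connector])


if __name__ == "__main__":
    for watts in (75, 150, 225, 300):
        print(f"{watts}W -> {cables_needed(watts)} x 8-pin")
```

Under these nominal limits, 225W is the most a card can draw with a single 8-pin cable (75W + 150W), which matches the ceiling described above.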

Intel Battlemage GPUs

The shipping manifest fragments reveal that Intel is working on at least two Battlemage GPUs, the G10 and the G21. Intel’s current graphics processor lineup includes the ACM-G11, an entry-level graphics processor, and the ACM-G10, a higher-end chip positioned for the midrange market. The names Battlemage-G10 and Battlemage-G21, which are aimed at entry-level PCs and bigger chips, respectively, therefore match the naming of Intel’s current Arc graphics processors. Both stand a strong chance of making our list of the best graphics cards if they deliver acceptable levels of performance.

The Battlemage-G10 and Battlemage-G21 are being shipped for research and development, as stated in the shipping manifest (which makes sense considering these devices’ current status). The G21 GPU is currently in the pre-qualification (pre-QS) stage of semiconductor development; the G10’s current status is unknown.

Pre-qualification silicon is used to assess a chip’s performance, reliability, and functionality. Pre-QS silicon is typically not suitable for mass production. However, if the silicon is functional and meets the necessary performance, power, and yield requirements, mass production could be feasible. For example, AMD’s Navi 31 GPU is reportedly mass-produced in its A0 silicon phase, which is possible when the first silicon meets the developer’s objectives.

We rarely get to cover Intel’s developments with its next-generation graphics cards, but we frequently cover Nvidia’s, as we did recently with the GeForce RTX 50-series graphics processors, which, based on industry leaks, should appear on our list of the best graphics cards.

This generation, Nvidia seems to be leading the laptop discrete GPU market, but Battlemage, with Intel’s ties to OEMs and PC manufacturers, might give the green team some serious competition in the next round. The shipping manifest suggests that the forthcoming desktop discrete GPU market will see intense competition among AMD’s RDNA 4, Intel’s Battlemage, and Nvidia’s Blackwell.

Qualities:

Targeting Entry-Level and Mid-Range: The Battlemage G10 and G21, the successors to the existing Intel Arc Alchemist chips (ACM-G10 and ACM-G11), are probably meant for gamers on a tight budget or those seeking good performance in games that aren’t AAA.

Better Architecture: Compared to the Xe-HPG architecture found in Intel’s existing Arc GPUs, readers can anticipate an upgrade in this next-generation design. Better performance per watt and even new features could result from this.

Emphasis on Power Efficiency: These GPUs may place equal emphasis on efficiency and performance because power consumption is a significant factor in laptops and small form factor PCs.

Potential specifications (derived from the existing Intel Arc lineup and leaks):

Production Process: TSMC 6nm (or, if development continues, a more advanced node).

Core Configuration: Unknown. Possibly fewer cores than higher-tier Battlemage models (should any exist).

Memory: GDDR6 is most likely used, but its bandwidth and capacity are unclear.

Power Consumption: Designed to use less power than GPUs with higher specifications.

FAQs

What are the Battlemage G10 and G21 GPUs?

Intel is developing the Battlemage G10 and G21, next-generation GPUs that should provide notable gains in capabilities and performance over their predecessors.

What markets or segments are these GPUs targeting?

Targeting a wide range of industries, including professional graphics, gaming, and data centres, the Battlemage G10 and G21 GPUs are expected to meet the demands of both consumers and businesses.

Read more on Govindhtech.com

#Intel#IntelArc#intelarcgpu#govindhtech#INTELARCA380#intelarca770#battlemagegpu#G10#G21#news#technologynews#technology#technologytrends

2 notes


Text

It's actually really simple. All you have to do is find a trans woman and ask her and she can interpret the jargon into human readable language. Watch this

AMD Ryzen 5 7600X 6 core 12 thread 4.7ghz performance clock 5.3ghz performance boost clock, 2x16GB DDR5 4800mhz CL38 ram, Gigabyte Windforce OC RTX 4070 SUPER 12GB GDDR6X CCLK 1980MHZ BCLK 2505MHZ 2TB Samsung 980 PRO M.2 NVME SSD: midrange PC that will run all your programs either reasonably quickly or blindingly fast depending on the workload of the program. You know how I know this? Ryzen 5 means midrange. Nvidia gpus ending in 70 means midrange. Combined this is midrange. Midrange is the best adjective for computers cause it means you're usually in the sweet spot for performance to price ratio. Realistically unless you're into machine learning or data science or editing 8k videos midrange is a catch all. It *will* run and it will *not* be slow.

Anyways theoretically there is data you could look into that supports the claim of "Ryzen 5 -70 series card means midrange" but unless amd and Nvidia both teamed up to fuck with this post specifically that should hold true without me looking.

If you want to look at some useful numbers, check the recommended system requirements then go to passmark's website and compare the recommended components to whatever you're looking at online. To oversimplify things greatly, when looking at CPU benchmarks, the smaller single threaded number is more accurate for comparing how it will perform in games relatively to each other while the larger multi threaded number is more accurate for comparing performance for things that aren't games (there are frequent exceptions to both of these but don't worry about that) as for GPUs, once again, oversimplifying greatly, the DirectX numbers are your gaming numbers and GPU compute is your everything else numbers.
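That rule of thumb could be sketched like this: pick the single-threaded score when the workload is gaming and the multi-threaded score otherwise, then compare against the recommended part. The scores below are made-up placeholders, not real PassMark results, and the function names are just for illustration.

```python
def pick_metric(workload):
    """Oversimplified rule: single-thread for games, multi-thread for the rest."""
    return "single_thread" if workload == "gaming" else "multi_thread"


def compare(candidate, recommended, workload):
    """Return the metric used and the candidate/recommended score ratio."""
    metric = pick_metric(workload)
    return metric, candidate[metric] / recommended[metric]


# Placeholder numbers for illustration only; look up the real ones yourself.
recommended_cpu = {"single_thread": 2500, "multi_thread": 15000}
candidate_cpu = {"single_thread": 3000, "multi_thread": 12000}

if __name__ == "__main__":
    for workload in ("gaming", "video editing"):
        metric, ratio = compare(candidate_cpu, recommended_cpu, workload)
        verdict = "meets" if ratio >= 1 else "falls short of"
        print(f"{workload}: {metric} ratio {ratio:.2f}, {verdict} recommended")
```

A ratio at or above 1 means the part you're looking at matches or beats the recommended one on the number that matters for that workload.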

Also never trust anything you see on userbenchmark.com, their 2019 April fools joke never ended and they are not a reliable source

why is shopping for computer shit so difficult like what the hell is 40 cunt thread chip 3000 processor with 32 florps of borps and a z12 yummy biscuits graphics drive 400102XXDRZ like ok um will it run my programmes

63K notes
