#By Application( Data Center


Text

Well

Looks like I might have a job soon, unfortunately not art related. It's a data analyst job refining search results and maps for search engines. I found it through the RatRaceRebellion. The job is unfortunately related to AI, but it's about refining online search engines through thorough research.

Please know I'm still not in support of GenAI. Just as a tool it's fine, so if it's for helping a user on a search engine, okay. Jobs are hard to find right now and this one is willing to pick me up. For now, this will have to do until I find something better.

I'll still keep searching for better jobs not related to this. For now, this will keep me afloat a little once I finish the exams for it.

#my posts#one discussion about the ethics of ai is like what about those who work in this field - which at times is either low paying or#pay well but not help grow careers - talking about data raters in jobs like this#this will make me complicit yes but after like 30 applications and no commissions what should I do?#at least its better than a call center *shudders*#anyway fuck ai#also use adblock#maybe I can try retail again buuuut that means travel - paying uber like 40 every day is not doable


Text

#GPU Market#Graphics Processing Unit#GPU Industry Trends#Market Research Report#GPU Market Growth#Semiconductor Industry#Gaming GPUs#AI and Machine Learning GPUs#Data Center GPUs#High-Performance Computing#GPU Market Analysis#Market Size and Forecast#GPU Manufacturers#Cloud Computing GPUs#GPU Demand Drivers#Technological Advancements in GPUs#GPU Applications#Competitive Landscape#Consumer Electronics GPUs#Emerging Markets for GPUs


Text

Data Center Cooling Market Size, Share & Industry Developments

Data Center Cooling: Trends, Growth Factors, and Market Analysis

The global data center cooling market was estimated at USD 15,541.8 million in 2022 and is expected to grow at a compound annual growth rate (CAGR) of 15.0% from 2023 to 2033, reaching USD 32,308.7 million by 2033. The data center cooling market covers the methods and solutions required to keep data centers at the ideal temperature and humidity levels.
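The market figures quoted above can be sanity-checked with the standard CAGR formula. The sketch below is only an arithmetic check, assuming the 2022 estimate is the base value and 2033 is the end year; the function names are illustrative. Under those assumptions the two endpoints imply an annual rate of roughly 7%, while compounding at 15% would land well above the quoted 2033 value, so one of the published figures may be misreported.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, annual_rate: float, years: int) -> float:
    """Value after compounding start_value at annual_rate for `years` years."""
    return start_value * (1 + annual_rate) ** years


# Figures quoted in the post, in USD million.
START_2022 = 15_541.8
END_2033 = 32_308.7
YEARS = 2033 - 2022  # assumption: 2022 is the base year

rate = implied_cagr(START_2022, END_2033, YEARS)
at_stated_rate = project(START_2022, 0.15, YEARS)

print(f"Implied CAGR from the two endpoints: {rate:.1%}")
print(f"2033 value if growth were really 15% a year: USD {at_stated_rate:,.1f} million")
```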

Data Center Cooling is an essential aspect of maintaining the efficiency and longevity of data centers worldwide. As the demand for cloud computing, big data analytics, and AI-driven applications increases, so does the need for effective cooling solutions. This article explores the Data Center Cooling market, covering Data Center Cooling Size, Data Center Cooling Share, Data Center Cooling Analysis.

Request Sample PDF Copy: https://wemarketresearch.com/reports/request-free-sample-pdf/data-center-cooling-market/960

Data Center Cooling Trends and Market Analysis

The Data Center Cooling industry is experiencing rapid advancements, with new technologies emerging to enhance energy efficiency and reduce operational costs. Some of the key Data Center Cooling Trends include:

Liquid Cooling Solutions: The adoption of liquid cooling technology is growing due to its efficiency in handling high-density workloads.

AI-Powered Cooling: Artificial Intelligence (AI) is being used to optimize cooling systems by predicting heat patterns and adjusting cooling mechanisms accordingly.

Renewable Energy Integration: Companies are shifting toward sustainable cooling methods, including solar and wind energy-powered cooling systems.

Edge Data Center Cooling: With the rise of edge computing, smaller yet effective cooling systems are gaining popularity.

Immersion Cooling: This technique, which involves submerging servers in a cooling liquid, is gaining traction due to its efficiency in heat dissipation.

Data Center Cooling Growth Factors

Several factors contribute to the Data Center Cooling Growth:

Increase in Data Centers: The exponential growth of cloud computing and data storage needs has led to a surge in data center establishments, thereby driving the demand for advanced cooling systems.

Regulatory Pressure on Energy Consumption: Governments and environmental agencies are enforcing regulations to ensure energy-efficient cooling solutions.

Advancements in Cooling Technology: Innovations like AI-driven cooling and liquid cooling are enhancing the efficiency and sustainability of data center cooling.

Rising Demand for Edge Computing: The growth of edge data centers requires compact yet effective cooling solutions.

Expansion of Hyperscale Data Centers: Large-scale cloud providers are continuously investing in hyperscale data centers, increasing the demand for sophisticated cooling systems.

Market Segments

By Component:

Solution

Services

By Industry Vertical:

BFSI

Manufacturing

IT & Telecom

Media & Entertainment

Government & Defense

Healthcare

Energy

Others

Key Companies

Some of the leading companies in the Data Center Cooling market include:

Schneider Electric SE

Vertiv Co.

Stulz GmbH

Rittal GmbH & Co. KG

Airedale International Air Conditioning Ltd

Mitsubishi Electric Corporation

Asetek A/S

Submer Technologies SL

Black Box Corporation

Climaveneta S.p.A.

Delta Electronics, Inc.

Huawei Technologies Co., Ltd.

Fujitsu Limited

Hitachi, Ltd.

Nortek Air Solutions, LLC

Key Points of Data Center Cooling Market

Data Center Cooling Size: The market is expanding due to the increasing number of data centers globally.

Data Center Cooling Share: Major players in the market include Schneider Electric, Vertiv, Stulz, and Rittal, among others.

Data Center Cooling Price: Costs vary based on cooling technologies, with liquid cooling being more expensive but highly efficient.

Data Center Cooling Forecast: The industry is expected to grow at a steady rate, driven by technological advancements and increased data consumption.

Data Center Cooling Analysis: Market segmentation includes cooling type (air-based vs. liquid-based), end-user industry, and regional demand.

Benefits of This Report

Comprehensive Market Insights: Understand the latest Data Center Cooling Trends and emerging technologies.

In-depth Competitive Analysis: Learn about the leading players and their market positioning.

Growth Opportunities: Identify key factors contributing to Data Center Cooling Growth.

Market Forecast: Get accurate predictions on the future scope of data center cooling solutions.

Investment Opportunities: Gain insights into profitable investment segments in the market.

Challenges in Data Center Cooling Market

Despite the growth opportunities, the industry faces several challenges:

High Initial Investment: Implementing advanced cooling solutions, especially liquid cooling, can be costly.

Energy Consumption Concerns: Even with advancements, cooling systems still account for a significant portion of data center energy use.

Environmental Regulations: Strict environmental laws require companies to adopt sustainable cooling practices.

Complex Maintenance Requirements: Some cooling solutions, like immersion cooling, demand specialized maintenance procedures.

Scalability Issues: As data centers expand, cooling solutions must be scalable to accommodate growing workloads.

Frequently Asked Questions (FAQs)

What is Data Center Cooling?

What are the different types of Data Center Cooling solutions?

Why is Data Center Cooling important?

How big is the Data Center Cooling market?

What factors drive the growth of Data Center Cooling?

What are the latest Data Center Cooling Trends?

How much does Data Center Cooling cost?

What is the future outlook for Data Center Cooling?

Related Report:

Metal-Gathering-Machines Market:

https://medium.com/@priteshwemarketresearch/future-market-outlook-opportunities-for-metal-gathering-machines-in-global-markets-286257c0b796

Construction-Equipment-Market:

https://medium.com/@priteshwemarketresearch/construction-equipment-market-industry-statistics-and-growth-trends-analysis-forecast-2024-2034-6213cdae2152

Construction Equipment Market:

https://wemarketresearch.com/reports/construction-equipment-market/51

Conclusion

The Data Center Cooling market is evolving rapidly, driven by technological advancements and increasing data consumption. With sustainable solutions, AI-driven cooling, and liquid-based technologies shaping the future, organizations must stay updated with the latest Data Center Cooling Trends, Data Center Cooling Growth, and Data Center Cooling Analysis to make informed decisions.

#Data Center Cooling Market#Global Data Center Cooling Market#Data Center Cooling Industry#Data Center Cooling Market 2023#Data Center Cooling Share#Data Center Cooling Trends#Data Center Cooling Top Key Players#United States Data Center Cooling Market#United Kingdom Data Center Cooling Market#Germany Data Center Cooling Market#South Korea Data Center Cooling Market#Japan Data Center Cooling Market#Data Center Cooling Segmentations#Data Center Cooling Types#Data Center Cooling Applications


Text

Future Applications of Cloud Computing: Transforming Businesses & Technology

Cloud computing is revolutionizing industries by offering scalable, cost-effective, and highly efficient solutions. From AI-driven automation to real-time data processing, the future applications of cloud computing are expanding rapidly across various sectors.

Key Future Applications of Cloud Computing

1. AI & Machine Learning Integration

Cloud platforms are increasingly being used to train and deploy AI models, enabling businesses to harness data-driven insights. The future applications of cloud computing will further enhance AI's capabilities by offering more computational power and storage.

2. Edge Computing & IoT

With IoT devices generating massive amounts of data, cloud computing ensures seamless processing and storage. The rise of edge computing, a subset of the future applications of cloud computing, will minimize latency and improve performance.

3. Blockchain & Cloud Security

Cloud-based blockchain solutions will offer enhanced security, transparency, and decentralized data management. As cybersecurity threats evolve, the future applications of cloud computing will focus on advanced encryption and compliance measures.

4. Cloud Gaming & Virtual Reality

With high-speed internet and powerful cloud servers, cloud gaming and VR applications will grow exponentially. The future applications of cloud computing in entertainment and education will provide immersive experiences with minimal hardware requirements.

Conclusion

The future applications of cloud computing are poised to redefine business operations, healthcare, finance, and more. As cloud technologies evolve, organizations that leverage these innovations will gain a competitive edge in the digital economy.

🔗 Learn more about cloud solutions at Fusion Dynamics! 🚀

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#cloud backups for business#platform as a service in cloud computing#platform as a service vendors#hpc cluster management software#edge computing services#ai services providers#data centers cooling systems#https://fusiondynamics.io/cooling/#server cooling system#hpc clustering#edge computing solutions#data center cabling solutions#cloud backups for small business#future applications of cloud computing


Text

Data Center UPS Market Will Reach USD 10,096.5 Million by 2030

In 2024, the data center UPS market was worth around USD 7,191.9 million, and it is projected to advance at a 5.8% CAGR from 2024 to 2030, hitting USD 10,096.5 million by 2030, according to P&S Intelligence.
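As a rough check on this projection, the quoted base value can be compounded forward at 5.8% a year. The sketch below is only arithmetic on the figures in the post; the six-year and eight-year horizons are assumptions chosen to show how much the length of the forecast window matters, and the function name is illustrative.

```python
def compound(base: float, annual_rate: float, years: int) -> float:
    """Value after compounding `base` at `annual_rate` for `years` years."""
    return base * (1 + annual_rate) ** years


# Figures quoted in the post, in USD million.
BASE_USD_MILLION = 7_191.9
CAGR = 0.058
TARGET_2030 = 10_096.5

for span in (6, 8):  # assumed horizons: 2024-2030 and 2022-2030
    projected = compound(BASE_USD_MILLION, CAGR, span)
    gap = projected / TARGET_2030 - 1
    print(f"{span} years: USD {projected:,.1f} million ({gap:+.1%} vs. the quoted 2030 value)")
```

Compounding over six years comes within about 0.1% of the quoted 2030 figure, whereas eight years overshoots it by more than 10%.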

The growth can be ascribed to the construction of new data centers, as the need for data storage is growing strongly across all sectors worldwide, mainly to support big data solutions. Demand for UPS systems is also rising because they shield data centers from power fluctuations and outages.

In the coming few years, demand for UPS systems rated up to 500 kVA will rise at the highest CAGR, of approximately 8%. This can be credited to the fact that they are extensively used in the data centers of small to large establishments to provide short-term backup power during power cuts or voltage spikes.

In 2021, the tier III data centers category led the industry, with revenue of approximately USD 3 billion. The category is projected to maintain its dominance in the future as well, mainly because the majority of data centers are built to tier III requirements and tier III services are extensively used by growing and large businesses.

Furthermore, numerous ongoing data center projects around the globe fall under this category.

The IT sector is the largest end user of data center UPS solutions, and the IT category is also projected to experience the highest growth in the coming few years. Top IT businesses rely on cloud computing systems and data analytics, and so have a huge need to store and protect information in data centers.

To meet their business targets, these companies adopt data center UPS solutions that guarantee a consistent power supply. Microsoft, Google, and Amazon are among the main companies; together they hold more than half of the major data centers and significantly boost the development of the industry.

North America generated the largest revenue share in the market in 2021. This can be credited to the presence of key market players, including Eaton Corporation, ABB Ltd., and Schneider Electric, and to the continent having the largest concentration of data centers worldwide.

In the coming few years, the APAC market is estimated to witness the fastest growth, mainly because of growing investments, an increasing number of data center facilities, and the rising adoption of public and hybrid clouds by numerous organizations in the region.

Hence, the market is set to grow as new data centers are built to meet the strongly growing need for data storage across all sectors worldwide, and as UPS systems are increasingly needed to shield those data centers from power fluctuations and outages.

Source: P&S Intelligence

#Data Center UPS Market Share#Data Center UPS Market Size#Data Center UPS Market Growth#Data Center UPS Market Applications#Data Center UPS Market Trends


Text

Please check out my Poshmark closet!

Please check out my favorite poshers as well below.

THANK YOU SO MUCH!


Text

Shop more of my listings on Poshmark


Text

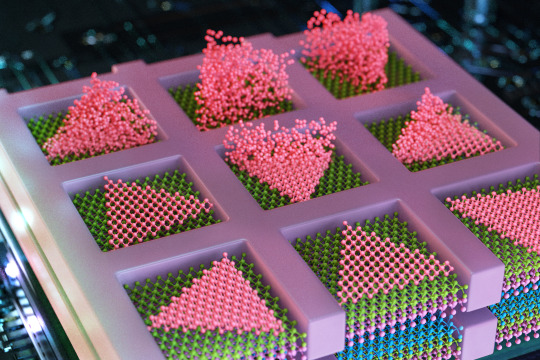

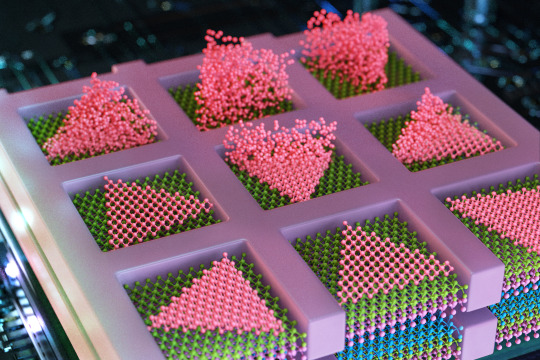

MIT engineers grow “high-rise” 3D chips

New Post has been published on https://thedigitalinsider.com/mit-engineers-grow-high-rise-3d-chips/


The electronics industry is approaching a limit to the number of transistors that can be packed onto the surface of a computer chip. So, chip manufacturers are looking to build up rather than out.

Instead of squeezing ever-smaller transistors onto a single surface, the industry is aiming to stack multiple surfaces of transistors and semiconducting elements — akin to turning a ranch house into a high-rise. Such multilayered chips could handle exponentially more data and carry out many more complex functions than today’s electronics.

A significant hurdle, however, is the platform on which chips are built. Today, bulky silicon wafers serve as the main scaffold on which high-quality, single-crystalline semiconducting elements are grown. Any stackable chip would have to include thick silicon “flooring” as part of each layer, slowing down any communication between functional semiconducting layers.

Now, MIT engineers have found a way around this hurdle, with a multilayered chip design that doesn’t require any silicon wafer substrates and works at temperatures low enough to preserve the underlying layer’s circuitry.

In a study appearing today in the journal Nature, the team reports using the new method to fabricate a multilayered chip with alternating layers of high-quality semiconducting material grown directly on top of each other.

The method enables engineers to build high-performance transistors and memory and logic elements on any random crystalline surface — not just on the bulky crystal scaffold of silicon wafers. Without these thick silicon substrates, multiple semiconducting layers can be in more direct contact, leading to better and faster communication and computation between layers, the researchers say.

The researchers envision that the method could be used to build AI hardware, in the form of stacked chips for laptops or wearable devices, that would be as fast and powerful as today’s supercomputers and could store huge amounts of data on par with physical data centers.

“This breakthrough opens up enormous potential for the semiconductor industry, allowing chips to be stacked without traditional limitations,” says study author Jeehwan Kim, associate professor of mechanical engineering at MIT. “This could lead to orders-of-magnitude improvements in computing power for applications in AI, logic, and memory.”

The study’s MIT co-authors include first author Ki Seok Kim, Seunghwan Seo, Doyoon Lee, Jung-El Ryu, Jekyung Kim, Jun Min Suh, June-chul Shin, Min-Kyu Song, Jin Feng, and Sangho Lee, along with collaborators from Samsung Advanced Institute of Technology, Sungkyunkwan University in South Korea, and the University of Texas at Dallas.

Seed pockets

In 2023, Kim’s group reported that they developed a method to grow high-quality semiconducting materials on amorphous surfaces, similar to the diverse topography of semiconducting circuitry on finished chips. The material that they grew was a type of 2D material known as transition-metal dichalcogenides, or TMDs, considered a promising successor to silicon for fabricating smaller, high-performance transistors. Such 2D materials can maintain their semiconducting properties even at scales as small as a single atom, whereas silicon’s performance sharply degrades.

In their previous work, the team grew TMDs on silicon wafers with amorphous coatings, as well as over existing TMDs. To encourage atoms to arrange themselves into high-quality single-crystalline form, rather than in random, polycrystalline disorder, Kim and his colleagues first covered a silicon wafer in a very thin film, or “mask” of silicon dioxide, which they patterned with tiny openings, or pockets. They then flowed a gas of atoms over the mask and found that atoms settled into the pockets as “seeds.” The pockets confined the seeds to grow in regular, single-crystalline patterns.

But at the time, the method only worked at around 900 degrees Celsius.

“You have to grow this single-crystalline material below 400 Celsius, otherwise the underlying circuitry is completely cooked and ruined,” Kim says. “So, our homework was, we had to do a similar technique at temperatures lower than 400 Celsius. If we could do that, the impact would be substantial.”

Building up

In their new work, Kim and his colleagues looked to fine-tune their method in order to grow single-crystalline 2D materials at temperatures low enough to preserve any underlying circuitry. They found a surprisingly simple solution in metallurgy — the science and craft of metal production. When metallurgists pour molten metal into a mold, the liquid slowly “nucleates,” or forms grains that grow and merge into a regularly patterned crystal that hardens into solid form. Metallurgists have found that this nucleation occurs most readily at the edges of a mold into which liquid metal is poured.

“It’s known that nucleating at the edges requires less energy — and heat,” Kim says. “So we borrowed this concept from metallurgy to utilize for future AI hardware.”

The team looked to grow single-crystalline TMDs on a silicon wafer that already has been fabricated with transistor circuitry. They first covered the circuitry with a mask of silicon dioxide, just as in their previous work. They then deposited “seeds” of TMD at the edges of each of the mask’s pockets and found that these edge seeds grew into single-crystalline material at temperatures as low as 380 degrees Celsius, compared to seeds that started growing in the center, away from the edges of each pocket, which required higher temperatures to form single-crystalline material.

Going a step further, the researchers used the new method to fabricate a multilayered chip with alternating layers of two different TMDs: molybdenum disulfide, a promising material candidate for fabricating n-type transistors; and tungsten diselenide, a material that has potential for being made into p-type transistors. Both p- and n-type transistors are the electronic building blocks for carrying out any logic operation. The team was able to grow both materials in single-crystalline form, directly on top of each other, without requiring any intermediate silicon wafers. Kim says the method will effectively double the density of a chip’s semiconducting elements, particularly complementary metal-oxide-semiconductor (CMOS) devices, which are a basic building block of modern logic circuitry.
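To illustrate why pairing p-type and n-type transistors is enough for any logic operation, here is a minimal sketch of an idealized, switch-level CMOS model. It is a textbook abstraction, not a model of the MIT devices: each transistor is treated as a perfect switch, and all function names are hypothetical. A NAND gate is built from two p-type switches in parallel and two n-type switches in series, and other gates are then composed from NAND alone.

```python
def nmos_conducts(gate: int) -> bool:
    """Idealized n-type transistor: conducts when its gate input is 1."""
    return gate == 1


def pmos_conducts(gate: int) -> bool:
    """Idealized p-type transistor: conducts when its gate input is 0."""
    return gate == 0


def cmos_nand(a: int, b: int) -> int:
    """Two p-type switches in parallel pull the output up to 1;
    two n-type switches in series pull it down to 0."""
    pull_up = pmos_conducts(a) or pmos_conducts(b)
    pull_down = nmos_conducts(a) and nmos_conducts(b)
    # In a valid CMOS gate exactly one of the two networks conducts.
    assert pull_up != pull_down
    return 1 if pull_up else 0


# NAND is functionally complete, so other gates follow from it.
def logic_not(a: int) -> int:
    return cmos_nand(a, a)


def logic_and(a: int, b: int) -> int:
    return logic_not(cmos_nand(a, b))


def logic_or(a: int, b: int) -> int:
    return cmos_nand(logic_not(a), logic_not(b))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "NAND:", cmos_nand(a, b), "AND:", logic_and(a, b), "OR:", logic_or(a, b))
```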

“A product realized by our technique is not only a 3D logic chip but also 3D memory and their combinations,” Kim says. “With our growth-based monolithic 3D method, you could grow tens to hundreds of logic and memory layers, right on top of each other, and they would be able to communicate very well.”

“Conventional 3D chips have been fabricated with silicon wafers in-between, by drilling holes through the wafer — a process which limits the number of stacked layers, vertical alignment resolution, and yields,” first author Ki Seok Kim adds. “Our growth-based method addresses all of those issues at once.”

To commercialize their stackable chip design further, Kim has recently spun off a company, FS2 (Future Semiconductor 2D materials).

“We so far show a concept at a small-scale device arrays,” he says. “The next step is scaling up to show professional AI chip operation.”

This research is supported, in part, by Samsung Advanced Institute of Technology and the U.S. Air Force Office of Scientific Research.

#2-D#2023#2D materials#3d#ai#AI chip#air#air force#applications#Arrays#Artificial Intelligence#atom#atoms#author#Building#chip#Chip Design#chips#coatings#communication#computation#computer#computer chips#computing#craft#crystal#crystalline#data#Data Centers#Design


Text

Tech Breakdown: What Is a SuperNIC? Get the Inside Scoop!

Generative AI is the latest development in the rapidly evolving digital realm. One of the innovations that makes it feasible goes by a relatively new name: the SuperNIC.

What Is a SuperNIC?

A SuperNIC is a new class of network accelerator created to accelerate hyperscale AI workloads on Ethernet-based clouds. Using remote direct memory access (RDMA) over Converged Ethernet (RoCE) technology, it provides extremely fast network connectivity for GPU-to-GPU communication, with throughput of up to 400Gb/s.

SuperNICs combine the following distinctive features:

High-speed packet reordering, which ensures that data packets are received and processed in the same order in which they were originally sent. This preserves the sequential integrity of the data flow.

Advanced congestion control, which uses real-time telemetry data and network-aware algorithms to manage and prevent congestion in AI networks.

Programmable compute on the input/output (I/O) path, which allows the network infrastructure in AI cloud data centers to be customized and extended.

A low-profile, power-efficient design that handles AI workloads efficiently within constrained power budgets.

Full-stack AI optimization, spanning compute, networking, storage, system software, communication libraries, and application frameworks.
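The idea behind packet reordering, the first feature in the list, can be sketched in a few lines of software. This is only an illustration of the general technique, a reorder buffer keyed by sequence number; it is not NVIDIA's implementation, which runs in hardware at line rate. The `reorder` function and the sample packets are hypothetical, and the sketch assumes no packets are lost or duplicated.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

Packet = Tuple[int, bytes]  # (sequence number, payload)


def reorder(packets: Iterable[Packet], first_seq: int = 0) -> Iterator[Packet]:
    """Yield packets in sequence order, buffering any that arrive early.

    Assumes every sequence number arrives exactly once (no loss, no duplicates).
    """
    pending: List[Packet] = []  # min-heap ordered by sequence number
    next_seq = first_seq
    for packet in packets:
        heapq.heappush(pending, packet)
        # Release every buffered packet that is now next in line.
        while pending and pending[0][0] == next_seq:
            yield heapq.heappop(pending)
            next_seq += 1


# Packets arriving out of order, as they might over multiple network paths.
arrived = [(2, b"c"), (0, b"a"), (1, b"b"), (4, b"e"), (3, b"d")]
in_order = list(reorder(arrived))
print([seq for seq, _ in in_order])  # [0, 1, 2, 3, 4]
```

A production design would also need timeouts and retransmission handling for lost packets, which this sketch leaves out.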

Recently, NVIDIA revealed the first SuperNIC in the world designed specifically for AI computing, built on the BlueField-3 networking architecture. It is a component of the NVIDIA Spectrum-X platform, which allows for smooth integration with the Ethernet switch system Spectrum-4.

The NVIDIA Spectrum-4 switch system and BlueField-3 SuperNIC work together to provide an accelerated computing fabric that is optimized for AI applications. Spectrum-X outperforms conventional Ethernet settings by continuously delivering high levels of network efficiency.

Yael Shenhav, vice president of DPU and NIC products at NVIDIA, stated, “In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery. SuperNICs are essential components for enabling the future of AI computing because they guarantee that your AI workloads are executed with efficiency and speed.”

The Changing Environment of Networking and AI

Large language models and generative AI are causing a seismic change in the area of artificial intelligence. These potent technologies have opened up new avenues and made it possible for computers to perform new functions.

GPU-accelerated computing plays a critical role in the development of AI by processing massive amounts of data, training huge AI models, and enabling real-time inference. While this increased computing capacity has created opportunities, Ethernet cloud networks have also been put to the test.

The internet’s foundational technology, traditional Ethernet, was designed to link loosely connected applications and provide wide compatibility. It was not designed for the demanding computational requirements of contemporary AI workloads, which involve rapidly transferring large amounts of data, tightly coupled parallel processing, and unusual communication patterns, all of which call for optimized network connectivity.

Basic network interface cards (NICs) were created with interoperability, universal data transfer, and general-purpose computing in mind. They were never intended to handle the special difficulties brought on by the high processing demands of AI applications.

The necessary characteristics and capabilities for effective data transmission, low latency, and the predictable performance required for AI activities are absent from standard NICs. In contrast, SuperNICs are designed specifically for contemporary AI workloads.

Benefits of SuperNICs in AI Computing Environments

Data processing units (DPUs) offer high-throughput, low-latency network connectivity and many other sophisticated features. Since their launch in 2020, DPUs have become more and more common in cloud computing, mostly because of their ability to separate, speed up, and offload computation from data center hardware.

SuperNICs and DPUs have many characteristics and functions in common; however, SuperNICs are specifically designed to speed up networks for artificial intelligence.

The performance of distributed AI training and inference communication flows is highly dependent on the availability of network capacity. Known for their elegant designs, SuperNICs scale better than DPUs and can provide 400Gb/s of network bandwidth per GPU.

When GPUs and SuperNICs are matched 1:1 in a system, AI workload efficiency may be greatly increased, resulting in higher productivity and better business outcomes.

SuperNICs are intended solely to speed up networking for AI cloud computing. As a result, they use less processing power than a DPU, which needs a lot of processing power to offload programs from a host CPU.

Less power usage results from the decreased computation needs, which is especially important in systems with up to eight SuperNICs.
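The bandwidth figures above lend themselves to a quick back-of-the-envelope calculation. The sketch below assumes ideal line rate with no protocol overhead, eight SuperNICs per system as mentioned above, and a hypothetical 10 GB payload chosen purely for illustration; the function names are also illustrative.

```python
LINK_GBPS = 400            # per-GPU bandwidth quoted in the text, in gigabits per second
SUPERNICS_PER_SYSTEM = 8   # upper bound mentioned in the text


def aggregate_gbps(links: int, per_link_gbps: float = LINK_GBPS) -> float:
    """Total bandwidth of `links` SuperNICs, each paired 1:1 with a GPU."""
    return links * per_link_gbps


def transfer_seconds(payload_gigabytes: float, link_gbps: float) -> float:
    """Ideal time to move a payload over one link (8 bits per byte, no overhead)."""
    return payload_gigabytes * 8 / link_gbps


total_gbps = aggregate_gbps(SUPERNICS_PER_SYSTEM)
seconds = transfer_seconds(10, LINK_GBPS)  # hypothetical 10 GB payload

print(f"Aggregate: {total_gbps / 1000:.1f} Tb/s")     # Aggregate: 3.2 Tb/s
print(f"10 GB over one 400Gb/s link: {seconds:.1f} s")  # 0.2 s
```

Real transfers will be slower than this ideal because of protocol overhead and congestion.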

One of the SuperNIC’s other unique selling points is its specialized AI networking capabilities. It provides optimal congestion control, adaptive routing, and out-of-order packet handling when tightly connected with an AI-optimized NVIDIA Spectrum-4 switch. Ethernet AI cloud settings are accelerated by these cutting-edge technologies.

Transforming cloud computing with AI

The NVIDIA BlueField-3 SuperNIC is essential for AI-ready infrastructure because of its many advantages.

Maximum efficiency for AI workloads: The BlueField-3 SuperNIC is perfect for AI workloads since it was designed specifically for network-intensive, massively parallel computing. It guarantees bottleneck-free, efficient operation of AI activities.

Performance that is consistent and predictable: The BlueField-3 SuperNIC makes sure that each job and tenant in multi-tenant data centers, where many jobs are executed concurrently, is isolated, predictable, and unaffected by other network operations.

Secure multi-tenant cloud infrastructure: Data centers that handle sensitive data place a high premium on security. High security levels are maintained by the BlueField-3 SuperNIC, allowing different tenants to cohabit with separate data and processing.

Broad network infrastructure: The BlueField-3 SuperNIC is very versatile and can be easily adjusted to meet a wide range of different network infrastructure requirements.

Wide compatibility with server manufacturers: The BlueField-3 SuperNIC integrates easily with the majority of enterprise-class servers without using an excessive amount of power in data centers.

#Describe a SuperNIC#On order to accelerate hyperscale AI workloads on Ethernet-based clouds#a new family of network accelerators called SuperNIC was created. With remote direct memory access (RDMA) over converged Ethernet (RoCE) te#it offers extremely rapid network connectivity for GPU-to-GPU communication#with throughputs of up to 400Gb/s.#SuperNICs incorporate the following special qualities:#Ensuring that data packets are received and processed in the same sequence as they were originally delivered through high-speed packet reor#In order to regulate and prevent congestion in AI networks#advanced congestion management uses network-aware algorithms and real-time telemetry data.#In AI cloud data centers#programmable computation on the input/output (I/O) channel facilitates network architecture adaptation and extension.#Low-profile#power-efficient architecture that effectively handles AI workloads under power-constrained budgets.#Optimization for full-stack AI#encompassing system software#communication libraries#application frameworks#networking#computing#and storage.#Recently#NVIDIA revealed the first SuperNIC in the world designed specifically for AI computing#built on the BlueField-3 networking architecture. It is a component of the NVIDIA Spectrum-X platform#which allows for smooth integration with the Ethernet switch system Spectrum-4.#The NVIDIA Spectrum-4 switch system and BlueField-3 SuperNIC work together to provide an accelerated computing fabric that is optimized for#Yael Shenhav#vice president of DPU and NIC products at NVIDIA#stated#“In a world where AI is driving the next wave of technological innovation#the BlueField-3 SuperNIC is a vital cog in the machinery.” “SuperNICs are essential components for enabling the future of AI computing beca

1 note

Text

Guide to data center migration types | TechTarget

A data center migration is a way for a business to simplify its infrastructure, offload applications and save on costs. A migration might be necessary for any business that relies on technology and eventually outgrows its existing IT infrastructure, creating a need for increased capacity or additional functionality. Data can be migrated through on-premises and cloud…

0 notes

Text

Available Cloud Computing Services at Fusion Dynamics

We Fuel The Digital Transformation Of Next-Gen Enterprises!

Fusion Dynamics provides future-ready IT and computing infrastructure that delivers high performance while being cost-efficient and sustainable. We envision, plan and build next-gen data and computing centers in close collaboration with our customers, addressing their business’s specific needs. Our turnkey solutions deliver best-in-class performance for all advanced computing applications such as HPC, Edge/Telco, Cloud Computing, and AI.

With over two decades of expertise in IT infrastructure implementation and an agile approach that matches the lightning-fast pace of new-age technology, we deliver future-proof solutions tailored to the niche requirements of various industries.

Our Services

We decode and optimise the end-to-end design and deployment of new-age data centers with our industry-vetted services.

System Design

When designing a cutting-edge data center from scratch, we follow a systematic and comprehensive approach. First, our front-end team connects with you to draw a set of requirements based on your intended application, workload, and physical space. Following that, our engineering team defines the architecture of your system and deep dives into component selection to meet all your computing, storage, and networking requirements. With our highly configurable solutions, we help you formulate a system design with the best CPU-GPU configurations to match the desired performance, power consumption, and footprint of your data center.

Why Choose Us

We bring a potent combination of over two decades of experience in IT solutions and a dynamic approach to continuously evolve with the latest data storage, computing, and networking technology. Our team comprises domain experts who liaise with you throughout the end-to-end journey of setting up and operating an advanced data center.

With a profound understanding of modern digital requirements, backed by decades of industry experience, we work closely with your organisation to design the most efficient systems to catalyse innovation. From sourcing cutting-edge components from leading global technology providers to seamlessly integrating them for rapid deployment, we deliver state-of-the-art computing infrastructures to drive your growth!

What We Offer: The Fusion Dynamics Advantage!

At Fusion Dynamics, we believe that our responsibility goes beyond providing a computing solution: we aim to help you build a high-performance, efficient, and sustainable digital-first business. Our offerings are carefully configured not only to fulfil your current organisational requirements but also to future-proof your technology infrastructure, with an emphasis on the following parameters:

Performance density

Rather than focusing solely on absolute processing power and storage, we strive to achieve the best performance-to-space ratio for your application. Our next-generation processors outrival the competition on processing as well as storage metrics.

Flexibility

Our solutions are configurable at practically every design layer, even down to the choice of processor architecture – ARM or x86. Our subject matter experts are here to assist you in designing the most streamlined and efficient configuration for your specific needs.

Scalability

We prioritise your current needs with an eye on your future targets. Deploying a scalable solution ensures operational efficiency as well as smooth and cost-effective infrastructure upgrades as you scale up.

Sustainability

Our focus on future-proofing your data center infrastructure includes the responsibility to manage its environmental impact. Our power- and space-efficient compute elements offer the highest core density and performance/watt ratios. Furthermore, our direct liquid cooling solutions help you minimise your energy expenditure. Therefore, our solutions allow rapid expansion of businesses without compromising on environmental footprint, helping you meet your sustainability goals.

Stability

Your compute and data infrastructure must operate at optimal performance levels irrespective of fluctuations in data payloads. We design systems that can withstand extreme fluctuations in workloads to guarantee operational stability for your data center.

Leverage our prowess in every aspect of computing technology to build a modern data center. Choose us as your technology partner to ride the next wave of digital evolution!

#Keywords#services on cloud computing#edge network services#available cloud computing services#cloud computing based services#cooling solutions#hpc cluster management software#cloud backups for business#platform as a service vendors#edge computing services#server cooling system#ai services providers#data centers cooling systems#integration platform as a service#cloud native application development#server cloud backups#edge computing solutions for telecom#the best cloud computing services#advanced cooling systems for cloud computing#data center cabling solutions#cloud backups for small business#future applications of cloud computing

0 notes

Text

#Global Single-Phase Immersion Cooling System Market Size#Share#Trends#Growth#Industry Analysis By Type(Less than 100 KW#100-200 KW#Great than 200 KW)#By Application( Data Center#High Performance Computing#Edge Application#Others)#Key Players#Revenue#Future Development & Forecast 2023-2032

0 notes

Text

What Is the Importance of an Uninterruptible Power Supply (UPS) in a Data Center?

Data centers are essential facilities that store massive volumes of complex data. They need reliable power at all times to keep data safe and to allow information to be retrieved whenever users require it. Data centers therefore need a continuous supply of power to keep downtime at or near zero.

With a constant supply of power, data centers can maintain smooth internet connectivity, which lets them provide their services without interruption.

Currently, people across the globe use smartphones, play real-time multiplayer video games, and watch games or shows on online streaming platforms. This is made possible by cloud computing and the internet, which require an exceptional amount of storage space and power, and this is where the role of data centers becomes significant.

Data centers are the core of Internet-based services. They guarantee consistent 24/7 delivery of data to consumers all over the world. Therefore, UPS plays an important role in data centers.

Power outages can cause severe damage to data centers and disrupt service across the globe. Such outages can also harm important equipment in the data center, such as communication equipment and other sensitive hardware. To guarantee protection from electrical problems, it is therefore extremely important to maintain backup power.

Understanding Uninterruptible Power Supply (UPS)

A UPS is a device that lets a computer keep running for at least a short time when the main power supply is interrupted or fails. While utility power is available, the UPS also recharges and maintains its stored energy.

Any kind of power failure can have a damaging effect on mission-critical computers, data and communication, leading to expensive downtime. A UPS protects data from corruption and loss, provides continuity of service, and protects hardware.
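
As a rough illustration of how long a UPS can carry a load, runtime can be approximated as the usable stored energy divided by the power being drawn. The sketch below is a simplified estimate, not a sizing tool: the function name ups_runtime_minutes, the 90% inverter efficiency, and the example figures are all assumptions made for illustration, and real runtime also depends on battery age, temperature, and discharge rate.

```python
def ups_runtime_minutes(battery_wh: float, load_w: float,
                        inverter_efficiency: float = 0.9) -> float:
    """Estimate UPS runtime in minutes.

    battery_wh: stored battery energy in watt-hours (assumed figure)
    load_w: power drawn by the protected equipment in watts
    inverter_efficiency: assumed fraction of stored energy that reaches the load
    """
    if battery_wh < 0:
        raise ValueError("battery_wh must not be negative")
    if load_w <= 0:
        raise ValueError("load_w must be positive")
    if not 0 < inverter_efficiency <= 1:
        raise ValueError("inverter_efficiency must be in (0, 1]")

    # Energy that actually reaches the load after conversion losses.
    usable_wh = battery_wh * inverter_efficiency
    # Hours of runtime = energy / power; convert to minutes.
    return usable_wh / load_w * 60


if __name__ == "__main__":
    # Hypothetical example: a 1,000 Wh battery feeding a 500 W load.
    print(f"Estimated runtime: {ups_runtime_minutes(1000, 500):.0f} minutes")
```

With these assumed numbers the estimate comes to roughly 108 minutes; halving the load roughly doubles the runtime, which is why trimming non-critical load during an outage extends backup time.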

Importance of an Uninterruptible Power Supply (UPS) in a Data Center

Avoid Financial Losses

A UPS helps avoid financial losses by reducing maintenance and operational expenses. Lengthy downtime can also cause hefty financial losses, which battery backup helps avoid.

Provide a Constant Power Supply

A UPS delivers a constant supply of power at a consistent voltage to the data center. Even if the data center doesn’t suffer recurrent power outages, it still needs dependable backup power to guarantee a steady voltage.

Enhance Energy Efficiency

Data centers use large quantities of electricity. An energy-saving UPS can significantly improve energy efficiency and reduce wasted power. This kind of power backup is equipped with power-saving modes that can help a data center significantly.

With rising funding to build new data centers and upgrade existing ones, as well as the massive volume of data being produced, the demand for UPS in data centers will continue to increase, reaching a value of USD 10,096.0 million by the end of this decade.

#Data Center UPS Market Share#Data Center UPS Market Size#Data Center UPS Market Growth#Data Center UPS Market Applications#Data Center UPS Market Trends

1 note

Text

I think that Jayce is a bit of social chameleon actually, like he tends to "match" the vibe of whoever he is hanging out with, and that's part of the reason why every time he's with Vi things go completely haywire (part of the reason, some of it just seems to be like cosmic bad luck or something).

Because Vi is extremely direct and tries to kind of brute force stuff like a lot, and Jayce going along with that equals things like both of them trying to punch/smash shit until it's better. Vi is someone who views every problem as a nail and Jayce is like "well I guess I do have a big hammer" and it goes poorly.

But it's not just Vi! Jayce does this with basically everyone. I mean he disagrees with people, he's not facetious or a doormat or any kind of yesman. He just also kind of goes, oh, I guess we're doing X now? And then does his own thing in the tone/style of X.

Like when he's spending a lot of time around Heimerdinger, he's a lot more cautious, methodical, but also he lets his idealism run more of the show. He's very cognizant of risk, because Heimerdinger centers risk as his chief concern when he talks with Jayce about his research.

Whereas when he's spending most of his time with Viktor, the priority is innovation. Coming up with new ways of utilizing and interpreting their research and data. Testing the limits of what it can do and then figuring out how to take it even further. Caution takes a definitive backseat to the countless possible applications of hextech.

Then when he's with Mel, he gets more image-conscious. How's his research going to be perceived? What will the legacy of it be? He focuses more on his role as a councilor and a social figure, less on his priorities as an inventor, because Mel's focus on hextech is its social clout and what it means for the city, and Jayce starts reflecting that (whereas his initial spiel about bringing magic to the masses seemed more of a "people in general" sense rather than a "Piltover specifically and maybe even exclusively" one).

Anyway I guess what I'm getting at is that whoever Jayce is with, he will try and match their freak. Which is why it's probably a good thing that he was on opposite ends of the narrative from Jinx, but also is a contributing factor to why he and Viktor together nearly destroyed the world.

886 notes

Text

"When a severe water shortage hit the Indian city of Kozhikode in the state of Kerala, a group of engineers turned to science fiction to keep the taps running.

Like everyone else in the city, engineering student Swapnil Shrivastav received a ration of two buckets of water a day collected from India’s arsenal of small water towers.

It was a ‘watershed’ moment for Shrivastav, who according to the BBC had won a student competition four years earlier on the subject of tackling water scarcity, and armed with a hypothetical template from the original Star Wars films, Shrivastav and two partners set to work harvesting water from the humid air.

“One element of inspiration was from Star Wars where there’s an air-to-water device. I thought why don’t we give it a try? It was more of a curiosity project,” he told the BBC.

According to ‘Wookieepedia’, a ‘moisture vaporator’ is a device used on moisture farms to capture water from a dry planet’s atmosphere, like Tatooine, where protagonist Luke Skywalker grew up.

This fictional device functions according to Star Wars lore by coaxing moisture from the air by means of refrigerated condensers, which generate low-energy ionization fields. Captured water is then pumped or gravity-directed into a storage cistern that adjusts its pH levels. Vaporators are capable of collecting 1.5 liters of water per day.

Pictured: Moisture vaporators on the largely abandoned Star Wars film set of Mos Espa, in Tunisia

If science fiction authors could come up with the particulars of such a device, Shrivastav must have felt his had a good chance of succeeding. He and colleagues Govinda Balaji and Venkatesh Raja founded Uravu Labs, a Bangalore-based startup in 2019.

Their initial offering is a machine that converts air to water using a liquid desiccant. Absorbing moisture from the air, sunlight or renewable energy heats the desiccant to around 100°F which releases the captured moisture into a chamber where it’s condensed into drinking water.

The whole process takes 12 hours but can produce a staggering 2,000 liters, or about 500 gallons of drinking-quality water per day. [Note: that IS staggering! That's huge!!] Uravu has since had to adjust course due to the cost of manufacturing and running the machines—it’s just too high for civic use with current materials technology.

“We had to shift to commercial consumption applications as they were ready to pay us and it’s a sustainability driver for them,” Shrivastav explained. This pivot has so far been enough to keep the start-up afloat, and they produce water for 40 different hospitality clients.

Looking ahead, Shrivastav, Raja, and Balaji are planning to investigate whether the desiccant can be made more efficient; can it work at a lower temperature to reduce running costs, or is there another material altogether that might prove more cost-effective?

They’re also looking at running their device attached to data centers in a pilot project that would see them utilize the waste heat coming off the centers to heat the desiccant."

-via Good News Network, May 30, 2024

#water#india#kerala#Kozhikode#science and technology#clean water#water access#drinking water#drought#climate change#climate crisis#climate action#climate adaptation#green tech#sustainability#water shortage#good news#hope#star wars#tatooine

1K notes

Text

Calling a technology "bad for the environment" is a politically vacuous framework. It makes invisible the ways classes of people intentionally develop tech in a resource-intensive way. It erases the fact that tech is controlled by PEOPLE who should be held accountable. Saying that anything is "bad for the environment" isn't useful framing. Throwing a napkin in the street is bad for the environment but negligible compared to driving a car or eating meat. Yet, the negligible act feels worse. The better framing is: is the resource cost worth the benefit provided?

I think the resources required to develop genAI and machine learning are worth the cost. BUT there's no reason for dozens of companies to build huge proprietary models or for aggressive data center expansion. AI CAN be developed sustainably. But a for-profit system doesn't incentivize this.

Some might argue that any use of AI is a waste of resources. This is disingenuous and ignores the active applications of AI in education, medicine, and admin. And even if it were frivolous, it's okay for societies to produce frivolous things as long as it's produced sustainably. Communist societies will still produce soda, stylish hats, and video games.

Even if you think AI is frivolous, it CAN be produced sustainably, but we live in a for-profit system where big tech companies are using a ton of unnecessary resources in ways that hurt the public. But we know tech (not just AI) can be sustainable & energy efficient. Computing is one of the most energy efficient things humans do compared to how much we rely on it, and is only increasing in efficiency.

If you're critical of AI, framing it as uniquely bad for the environment isn't useful and isn't true. At the individual level, it's less wasteful than eating meat and slightly less energy intensive than watching Netflix. Some models can be run locally on your computer. Training can be energy intensive but is infrequent, and only needs to be done once per model.

Also, there are real harms in overstating AI energy use! Energy and utility companies are using overinflated AI energy numbers to justify a huge buildout of oil and gas.

If you're critical of AI, you should instead ask: What are we getting for the resources used? What outcome are we trying to get out of AI & tech development? Are all these resources necessary for the outcomes we seek? AI CAN be environmentally sustainable, but there are systems and people who want to foreclose this possibility. Headlines like "ChatGPT uses 10x more energy than Google search" (not even true) shift blame from institutional actors onto individuals, from profit-motives onto technology.

63 notes
