#Code Llama


Text

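Code-Generation AI Demo

I made a video of the Code Llama-based code-generation AI application that runs on the モノづくり塾 (Monozukuri Juku) server. The video shows three steps: opening the code-generation AI application from the juku's dashboard, requesting code generation, and actually running the generated code. The language model is ELYZA's model, which is Code Llama with additional Japanese training. The Web UI is built with streamlit on top of llama-cpp-python. It is Dockerized, so you can clone the repository and have it running right away with docker-compose. The server is a self-built PC with a Core i5 13400, running Ubuntu Server 22.04. The PC running the browser is a roughly six-year-old Core…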
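For readers curious what such a setup might look like, here is a minimal sketch of a streamlit front end over llama-cpp-python. It is not the application from the video: the model path, the system prompt, and the helper names `build_messages` and `generate` are all assumptions made up for illustration, and the Docker packaging is not shown.

```python
"""Minimal Streamlit front end for a local Code Llama model (illustrative sketch)."""
import os

# Hypothetical default path; point MODEL_PATH at whatever GGUF file you actually have.
MODEL_PATH = os.environ.get("MODEL_PATH", "models/codellama-7b-instruct.Q4_K_M.gguf")

# Hypothetical system prompt, not taken from the original application.
SYSTEM_PROMPT = "You are a helpful coding assistant. Reply with code and a short explanation."


def build_messages(request: str) -> list[dict]:
    """Turn the user's request into a chat-style message list."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": request.strip()},
    ]


def generate(request: str, max_tokens: int = 512) -> str:
    """Load the model and return the generated text.

    The model is reloaded on every call to keep the sketch short;
    a real app would cache the Llama object.
    """
    from llama_cpp import Llama  # imported lazily so the helpers work without it

    llm = Llama(model_path=MODEL_PATH, n_ctx=2048)
    result = llm.create_chat_completion(
        messages=build_messages(request), max_tokens=max_tokens
    )
    return result["choices"][0]["message"]["content"]


def main() -> None:
    import streamlit as st

    st.title("Code generation demo")
    request = st.text_area("What should the code do?")
    if st.button("Generate") and request.strip():
        with st.spinner("Generating..."):
            st.markdown(generate(request))


if __name__ == "__main__":
    try:
        main()
    except ImportError as exc:
        print(f"Missing dependency: {exc}")
```

Saved as, say, `app.py`, this would be started with `streamlit run app.py`. Keeping the prompt construction in its own function makes it easy to check without loading a model.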

View On WordPress

0 notes

Text

"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curriculum clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah Myers West document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralize critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI hucksters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products, even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could do so.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulation to "punish" Big Tech that ends up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily raise the free-speech concerns that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This is a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its sourcecode is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuned it to work on its proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software, then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invokes auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry-dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley); Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

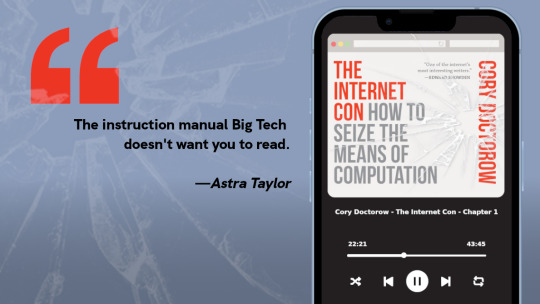

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech

253 notes

Text

>crowbar gordon freeman confirmed real

#ni blabs#warframe#warframe spoilers#warframe 1999#1999 arg#OKAY reddit came in clutch again for this one#>put llama into ascloid and get the music player >spam the stop button#>unlock secret second track that reads YOU ARE GETTING CLOSER NOW backwards#>the track is in reverse >the “correct” way is just a voice reading off a hexadecimal code#>translate code into file password (which is EmbraceTheUnreal)#yeesh.

22 notes

Text

Robotics and coding is sooo hard uughhhh I wish I could ask someone to do this in my place but I don't know anyone who I could trust to help me with this project without any risk of fucking me over. Humans are unpredictable, which is usually nice but when it's about doing something that requires 100% trust it's really inconvenient

(if someone's good at coding, building robots, literally anything like that, and is okay with probably not getting any revenue in return (unless the project is a success and we manage to go commercial but that's a big IF) please hit me up)

EDIT: no I am not joking, and yes I'm aware of how complex this project is, which is exactly why I'm asking for help

#robot boyfriend#robotphilia#robot#robots#robophilia#robotics#science#ai model#ai#artificial intelligence#agi#artificial general intelligence#coding#programming#code#group project#team project#searching for help#pls help#llm#llama#llama llm#llama ai#llama 3.2

17 notes

Text

Godfrey and Radagon were absolutely gay for each other, but they couldn't just drop the "I'm gay" on everyone out of nowhere so, being the absolute bisexual king that he is, they got married as Godfrey and Marika.

I will not take any criticism or feedback, I will not elaborate.

This is, to me, a true fact of Elden Ring lore.

If you disagree, change your mind and be on the right side of history.

(this post was made in a light-hearted manner and is sponsored by the Bisexual Godfrey Gang)

#he's a raging bisexual#i mean he literally married somebody who is both biological sexes and a transgender icon to a lot of people#you cant get much more bi/pan-coded than literally marrying multiple genders#Elden Ring#godfrey the first elden lord#radagon of the golden order#marika the eternal#headcanon#who even reads this far into the tags?#shipping#elden lord#gay men#gayboy#gay#gay pride#bisexual#lgbtq#lgbtqia#lgbt pride#if you're reading this far down comment “llama”#radagodfrey#Marika's a scam#Marika is a lie made up by the Elden Government to hide gay people from us because they know we'd go feral#if you read all of these I love you and you're awesome I hope your day is amazing ❤️❤️

15 notes

Text

I have my driver's test the same day the Spy x Family Movie comes out.

If I pass, I shall celebrate by watching it.

If I fail, I shall heal by watching it.

#win win situation#no but fr#im terrified#help#jk#im confident#ish#FAKE IT TILL YOU MAKE IT BABYYYYYY#nskdhsgsusbwh#lava posts#convos with llama#drivers test#shsosbjskd#no but seriously#my driving skills have improved :3#spy x family#sxf#sxf code white#spy

27 notes

Text

GEORGE TK CLIPS MASTERPOST

@fluffallamaful + I were saying the clips of george we've been getting lately should be all in one space, so I decided to compile all of the george tk clips I could find!

If you have any other ones that I don't have yet, please feel free to send them to me!

last updated 01/03/2024

Videos under the cut :D

BANTER TKS

Sapnap watching Austin tk George and doing it himself (clips edited together)

Austin getting George (clips compiled into one clip)

Austin getting George's sides + ribs until he stands up (close up)

Austin getting George's ribs

Air tks from Austin

Austin getting George's neck / ears

Sap getting George's sides

Sap getting George on the floor (knees or thighs??)

George tkling Sap under his chin and saying the word

George giggling when Karl stun guns the can (sounds like a tk laugh)

STANDALONE TKS

Shadoune biting George's arm (zoomed in version)

hot tub stream w/ larray

Sapnap tkling his chest / ribs in the Larray video (slowed down version)

Sap (possibly) tkling George's thighs in the Larray video

Sap tkling his ribs at dinner in Madrid (tinier poke moment here as well)

Sapnap pushing at George's sides making his arms come down (potential tks)

George tkling Dream out of his chair

Dream talking about the tk fight with George

George tkling Wilbur's ribs with a minecraft sword

Sapnap holding his waist at Twitchcon Paris (potential tks)

Skephalo whispering in George's ear

Bad tkling George's ear accidentally with glasses

Ludwig tickling George's ear

Karl + Sapnap holding George down / possible neck nibbles (Antarctica)

Sapnap going to tk George at Horror Night

Sapnap getting George’s neck in the truck

Hbomb getting George’s neck at twitchcon

Dream + Sap holding his foot and dream tkling it at twitch rivals

Sap attacking George after he slapped his ass 💀

#lee!george#ler!george#but rare ler!george in here folks#this man is so lee coded its ridiculous#thank u llama for saying we need a masterpost!#mushie concepts / hcs#mcyt tickle#my stuff

61 notes

Text

Companions: What happened to his hands?

Companions: His hands why are they missing?

Durge: Well, I kinda cooked them and ate them up.

Companions:

Durge: My tummy had the rumbles...that only hands could satisfy.

Companions: What is wrong with you?

Durge: Well, I kill people and eat hands so there's two things!

27 notes

Text

@beardedmrbean they’ve got your number

10 notes

Text

I picked a mystery egg just for you!

Use my invite code W8F2VY59JH once you get the app.

Finch self care app download plsplspls it's really good

2 notes

Note

An OC in already existing media did you say????

GIMME MORE!!!!!!!

I'm part of the GI JOE fandom, and I have a sniper Oc named Rita Smith, Codename Top Shot

#llama thinks#sorry for the late reply#gi joe a real american hero#i'm going write fic with her in it soon#Also I made a hacker Joe coded named Blue Screen

1 note

Text

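Generating Program Code with Code Llama

I tried out program code generation using a Code Llama model with CTransformers. The model I used was the 13-billion-parameter gguf version. This is the entire program. Program code generation. At first I tried CPU only, but that was slow on the Core i5 13400, so I enabled the RTX 3060 and configured it to use as much VRAM as possible. The result was still slow, but felt tolerable.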
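The post's own program did not survive in this excerpt, so the following is only a rough sketch of how CTransformers can load a GGUF Code Llama model and offload layers to a GPU. The repository name, file name, `gpu_layers` value, and the helper names `build_prompt` and `generate_code` are assumptions for illustration, not the author's settings.

```python
"""Generate code with a Code Llama GGUF model via CTransformers (illustrative sketch)."""

# Hypothetical repo and file names; substitute the GGUF model you actually downloaded.
MODEL_REPO = "TheBloke/CodeLlama-13B-Instruct-GGUF"
MODEL_FILE = "codellama-13b-instruct.Q4_K_M.gguf"


def build_prompt(instruction: str) -> str:
    """Wrap an instruction in the [INST] ... [/INST] format used by Llama-2-style instruct models."""
    return f"[INST] {instruction.strip()} [/INST]"


def generate_code(instruction: str, gpu_layers: int = 0, max_new_tokens: int = 256) -> str:
    """Load the model and generate a completion.

    gpu_layers=0 keeps everything on the CPU; larger values offload
    more layers to the GPU, at the cost of more VRAM.
    """
    from ctransformers import AutoModelForCausalLM  # imported lazily

    llm = AutoModelForCausalLM.from_pretrained(
        MODEL_REPO,
        model_file=MODEL_FILE,
        model_type="llama",
        gpu_layers=gpu_layers,
    )
    return llm(build_prompt(instruction), max_new_tokens=max_new_tokens, temperature=0.2)
```

A call such as `generate_code("Write a Python function that reverses a string.", gpu_layers=50)` would then try to place up to 50 layers on the GPU. How many layers fit depends on the card's VRAM and the quantization of the model file, so the right value has to be found by experiment.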

View On WordPress

0 notes

Text

Code Llama: an open source large language model for code

In recent years, large language models (LLMs) have taken on a central role in numerous fields, from creative writing to machine translation, and they are now revolutionizing programming as well. These tools are proving fundamental for tackling key challenges in software development, such as automating repetitive tasks, improving…

0 notes

Text

In addition to pursuing developments in virtual reality (VR) and the metaverse, Mark Zuckerberg has set Meta, Facebook’s parent firm, on a precarious course to become a leader in artificial intelligence (AI). With billions of dollars invested, Zuckerberg’s calculated approach toward artificial intelligence represents a turning point for the entire tech sector, not just Meta. However, what really is behind this AI investment? And why does Zuckerberg appear to be so steadfast in his resolve?

Let’s examine why Zuckerberg chose to concentrate on AI and how Meta’s foray into this technological frontier may influence the future.

1. AI as the Next Growth Engine

Meta’s AI aspirations are in line with the larger tech trend where AI opens up new business opportunities. AI technologies already support important facets of Meta’s main operations. Since advertisements account for the majority of Meta’s revenue, the firm significantly relies on machine learning to enhance its ad-targeting algorithms. This emphasis on AI makes sense since more intelligent ad-targeting enables advertisers to connect with the appropriate audiences, improving ad performance and increasing Meta’s ad income.

Meta is utilizing AI to improve user experience in addition to ad targeting. Everything from Facebook and Instagram content suggestions to comment moderation and content detection is powered by AI algorithms. Users stay on the site longer when they interact with content that piques their interest, which eventually improves Meta’s user engagement numbers and, consequently, its potential for profit.

2. Taking on Competitors in the AI Race

Other digital behemoths like Google, Amazon, and Microsoft are putting a lot of money into artificial intelligence, and they are fiercely competing with Meta. Meta must improve its own AI products and capabilities if it wants to remain competitive. For example, Amazon uses AI to improve product suggestions and expedite delivery, while Google has made notable advancements in generative AI with Bard, and Microsoft has done so through its partnership with OpenAI, the maker of ChatGPT. By making significant investments in AI, Meta can become a dominant force in this field and match or surpass its competitors.

AI has also become a potent weapon in the competition for users’ attention. For example, TikTok’s advanced AI-powered recommendation algorithms, which provide users with extremely relevant content, are mainly responsible for the app’s popularity. In the face of new competition, Meta is able to maintain user engagement by utilizing AI to improve its recommendation algorithms.

3. AI as a Foundation for the Metaverse

The development of the metaverse, a virtual environment where people can interact, collaborate, and have fun, is one of Zuckerberg’s most ambitious ideas. AI is essential to realizing this vision, even though metaverse initiatives have not yet realized their full potential. Avatars powered by AI can move and make facial expressions in virtual environments more realistic. Users and virtual entities in the metaverse can interact more naturally and intuitively thanks to natural language processing (NLP).

To make interactions more interesting, Meta, for example, employs AI to help construct realistic avatars and virtual worlds. AI may also aid in the creation and moderation of material in these areas, opening the door to the creation of expansive, dynamic virtual worlds for users to explore. Zuckerberg is largely relying on AI because he sees it as the bridge to that future, even though the metaverse itself may take years to become widely accepted.

4. Building New AI Products and Monetization Models

Meta is becoming more and more interested in creating proprietary AI products and services that it can sell. Meta is developing its own large language models that are comparable to OpenAI’s ChatGPT through products like LLaMA (Large Language Model Meta AI). In order to gain more influence over the AI ecosystem and perhaps produce goods and services that consumers, developers, and companies would be willing to pay for, Meta plans to build its own models.

There may be a number of business-to-business (B2B) opportunities associated with LLaMA and other proprietary models. For instance, Meta might provide businesses AI-powered solutions for analytics, customer support, and content control. Meta may be able to access the rich enterprise AI market and generate long-term income by making its AI tools available to outside developers and companies.

5. Improving User Control and Privacy

It’s interesting to note that Zuckerberg’s AI investment supports Meta’s initiatives to increase user control and privacy. AI models can assist Meta in implementing more robust privacy protections and managing data more effectively. Without compromising user privacy, artificial intelligence (AI) can be used, for instance, to automatically identify and stop the spread of hazardous content, false information, and fake news. By taking a proactive stance, Meta can balance its own commercial goals with managing public views around data security.

6. Protecting Meta from Industry Shifts

Zuckerberg’s “all-in” strategy for AI also aims to prepare Meta for the future. Rapid change is occurring in social media, and the emergence of AI-powered platforms might upend the status quo. Zuckerberg is putting Meta in a position to remain relevant and flexible as younger audiences gravitate toward more sophisticated and interactive internet experiences.

As user preferences shift, AI might assist Meta in changing course. As trends change and new difficulties arise, this adaptability may prove crucial in enabling Meta to continue satisfying user needs while maintaining profitability.

7. An Individual Perspective and Belief in AI

Lastly, Zuckerberg’s motivation also has a personal component. Zuckerberg, who is well-known for having big ideas, has long held the view that technology has the ability to change the world. He sees an opportunity with AI to develop more dynamic, intuitive systems that are in line with human demands. His dedication to AI is not only a calculated move; it also reflects his conviction that sophisticated AI can have a constructive social impact.

Zuckerberg has expressed hope that AI will improve how people use technology in a number of his public remarks. According to him, AI has the potential to influence how people interact in the future in addition to being a tool for economic expansion.

Looking Ahead: What Could This Mean for Us?

With Zuckerberg spending billions on AI, advancements in the field have the potential to reshape Meta’s future, the tech industry as a whole, and the way we work, live, and interact online. We might soon have access to new technologies that will change how we utilize the internet, social media, and the metaverse. Better online safety, more individualized content, and possibly even new revenue streams inside digital platforms are all possible outcomes for users.

It is unclear if Zuckerberg’s AI approach will succeed, but Meta’s dedication to AI represents a turning point in digital history. It’s about changing an entire industry, not simply about being competitive. And it’s obvious that Zuckerberg has no plans to look back with this amount of investment.

#technology#artificial intelligence#tech news#tech world#technews#coding#ai#meta#mark zuckerberg#metaverse#llama#the tech empire

0 notes

Text

8 AI Websites You Won't Believe Are Free: Discover These Gems!

In an increasingly digital world, artificial intelligence (AI) tools have become essential for improving our productivity and creativity. However, many of these platforms require expensive subscriptions. Fortunately, there are free options that offer surprising functionality. Here we present 8 AI websites you won't believe are free. Llama…

#10015.io#Ai Coustics#Bookipi#Copy.ai#herramientas gratuitas#Hugging Face#inteligencia artificial#Llama Code#V0.dev

0 notes

Text

7 lessons about love and life that Daniel Jones learned from reading two hundred thousand love stories

What is this collection of selections, you ask? It is one of the issues of the “صيد الشابكة” (Sayd al-Shabaka) newsletter. Learn more about the newsletter here: What is the “صيد الشابكة” newsletter, what are its sources, and what is its purpose; and what does “الشابكة” even mean?! 🎣🌐 Do you know what صيد الشابكة is, and do you read it regularly? Support the newsletter's continuity in various ways here: 💲 Ways to support the صيد الشابكة newsletter. 🎣🌐 صيد الشابكة, issue #173. Peace be upon you; welcome, and in the name of God. As for the title, you will find it in its own section below. 🎣🌐…

#173#Caitlin Dewey#Daniel Jones#Fortran#Marie Dollé#Meta’s Code Llama#MIT#The New York Times Company#مجلة العلوم الانسانية - جامعة الاسراء غزة#الاتحاد العالمي للمؤسسات العلمية#ستانفورد للابتكار الاجتماعي

0 notes