#Data Ecosystem Modernization

Text

I want some use of my phone that is not either of

a: a vehicle for advertisement

b: any social media app that incentivizes you to keep scrolling forever and also a vehicle for advertisement (see point a)

I hate that the first thing my dysregulated brain wants to do to fill the void is to just sit and consume until I feel so numb that I get sick of it

I hate that the model for the vast majority of phone apps is to scrape for data and advertise to you. what a horrible way to live. I miss the early smart device days when it all felt new and fresh. now everyone wants to sell your personal data or sell u something

#renapup barks#yapping abt tech#it really feels like both the modern internet and smartphone ecosystems both went in the worse way possible#and that is to make as much money as possible all the time#every interaction untracked#every advert that is actually *not* obstructive#is just seen as a missed opportunity#more profit to be extracted#more clicks that could have been had#buy buy buy spend look here all of the time#keep scrolling#maybe we'll get them to buy somethimg eventually and if not well we have all this personal data to sell#we are so doomed

4 notes

Text

The Future is Now: 5G and Next-Generation Connectivity Powering Smart Innovation.

Sanjay Kumar Mohindroo. skm.stayingalive.in. Explore how 5G networks are transforming IoT, smart cities, autonomous vehicles, and AR/VR experiences in this inspiring, in-depth guide that ignites conversation and fuels curiosity. Embracing a New Connectivity Era: Igniting Curiosity and Inspiring Change. The future is bright with 5G networks that spark new ideas and build…

#5G Connectivity#AI-powered Connectivity#AR/VR Experiences#Autonomous Vehicles#Connected Ecosystems#Data-Driven Innovation#digital transformation#Edge Computing#Future Technology#Future-Ready Tech#High-Speed Internet#Immersive Experiences#Innovation in Telecommunications#Intelligent Infrastructure#Internet Of Things#IoT#Modern Connectivity Solutions#News#Next-Generation Networks#Sanjay Kumar Mohindroo#Seamless Communication#Smart Cities#Smart Mobility#Ultra-Fast Networks

0 notes

Text

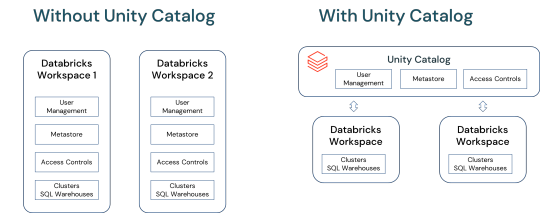

Implementing Data Mesh on Databricks: Harmonized and Hub & Spoke Approaches

Explore the Harmonized and Hub & Spoke Data Mesh models on Databricks. Enhance data management with autonomous yet integrated domains and central governance. Perfect for diverse organizational needs and scalable solutions. #DataMesh #Databricks

#Autonomous Data Domains#Data Governance#Data Interoperability#Data Lakes and Warehouses#Data Management Strategies#Data Mesh Architecture#Data Privacy and Security#Data Product Development#Databricks Lakehouse#Decentralized Data Management#Delta Sharing#Enterprise Data Solutions#Harmonized Data Mesh#Hub and Spoke Data Mesh#Modern Data Ecosystems#Organizational Data Strategy#Real-time Data Sharing#Scalable Data Infrastructures#Unity Catalog

0 notes

Text

The damage the Trump administration has done to science in a few short months is both well documented and incalculable, but in recent days that assault has taken an alarming twist. Their latest project is not firing researchers or pulling funds—although there’s still plenty of that going on. It’s the inversion of science itself.

Here’s how it works. Three “dire wolves” are born in an undisclosed location in the continental United States, and the media goes wild. This is big news for Game of Thrones fans and anyone interested in “de-extinction,” the promise of bringing back long-vanished species.

There’s a lot to unpack here: Are these dire wolves really dire wolves? (They’re technically grey wolves with edited genes, so not everyone’s convinced.) Is this a publicity stunt or a watershed moment of discovery? If we’re staying in the Song of Ice and Fire universe, can we do ice dragons next?

All more or less reasonable reactions. And then there’s secretary of the interior Doug Burgum, a former software executive and investor now charged with managing public lands in the US. “The marvel of ‘de-extinction’ technology can help forge a future where populations are never at risk,” Burgum wrote in a post on X this week. “The revival of the Dire Wolf heralds the advent of a thrilling new era of scientific wonder, showcasing how the concept of ‘de-extinction’ can serve as a bedrock for modern species conservation.”

What Burgum is suggesting here is that the answer to 18,000 threatened species—as classified and tallied by the nonprofit International Union for Conservation of Nature—is that scientists can simply slice and dice their genes back together. It’s like playing Contra with the infinite lives code, but for the global ecosystem.

This logic is wrong, the argument is bad. More to the point, though, it’s the kind of upside-down takeaway that will be used not to advance conservation efforts but to repeal them. Oh, fracking may kill off the California condor? Here’s a mutant vulture as a make-good.

“Developing genetic technology cannot be viewed as the solution to human-caused extinction, especially not when this administration is seeking to actively destroy the habitats and legal protections imperiled species need,” said Mike Senatore, senior vice president of conservation programs at the nonprofit Defenders of Wildlife, in a statement. “What we are seeing is anti-wildlife, pro-business politicians vilify the Endangered Species Act and claim we can Frankenstein our way to the future.”

On Tuesday, Donald Trump put on a show of signing an executive order that promotes coal production in the United States. The EO explicitly cites the need to power data centers for artificial intelligence. Yes, AI is energy-intensive. They’ve got that right. Appropriate responses to that fact might include “Can we make AI more energy-efficient?” or “Can we push AI companies to draw on renewable resources?” Instead, the Trump administration has decided that the linchpin technology of the future should be driven by the energy source of the past. You might as well push UPS to deliver exclusively by Clydesdale. Everything is twisted and nothing makes sense.

The nonsense jujitsu is absurd, but is it sincere? In some cases, it’s hard to say. In others it seems more likely that scientific illiteracy serves as a cover for retribution. This week, the Commerce Department canceled federal support for three Princeton University initiatives focused on climate research. The stated reason, for one of those programs: “This cooperative agreement promotes exaggerated and implausible climate threats, contributing to a phenomenon known as ‘climate anxiety,’ which has increased significantly among America’s youth.”

Commerce Department, you’re so close! Climate anxiety among young people is definitely something to look out for. Telling them to close their eyes and stick their fingers in their ears while the world burns is probably not the best way to address it. If you think their climate stress is bad now, just wait until half of Miami is underwater.

There are two important pieces of broader context here. First is that Donald Trump does not believe in climate change, and therefore his administration proceeds as though it does not exist. Second is that Princeton University president Christopher Eisgruber had the audacity to suggest that the federal government not routinely shake down academic institutions under the guise of stopping antisemitism. Two weeks later, the Trump administration suspended dozens of research grants to Princeton totaling hundreds of millions of dollars. And now, “climate anxiety.”

This is all against the backdrop of a government whose leading health officials are Robert F. Kennedy Jr. and Mehmet Oz, two men who, to varying degrees, have built their careers peddling unscientific malarky. The Trump administration has made clear that it will not stop at the destruction and degradation of scientific research in the United States. It will also misrepresent, misinterpret, and bastardize it to achieve distinctly unscientific ends.

Those dire wolves aren’t going to solve anything; they’re not going to be reintroduced to the wild, they’re not going to help thin out deer and elk populations.

But buried in the announcement was something that could make a difference. It turns out Colossal also cloned a number of red wolves—a species that is critically endangered but very much not extinct—with the goal of increasing genetic diversity among the population. It doesn’t resurrect a species that humanity has wiped out. It helps one survive.

25 notes

Text

ENTITY DOSSIER: MISSI.exe

(Image: Current MISSI “avatar” design, property of TrendTech, colored by MISSI.)

Name: MISSI (Machine Intelligence for Social Sharing and Interaction)

Description: In 2004, TrendTech Inc began development on a computer program intended to be a cutting-edge, all-in-one platform for the modern internet ecosystem. Part social media, part chat service, part chatbot, part digital assistant, this program was designed to replace all other chat devices in use at the time. Marketed towards a younger, tech-savvy demographic, this program was titled MISSI.

(Image: TrendTech company logo. TrendTech was acquired by the Office and closed in 2008.)

Document continues:

With MISSI, users could access a variety of functions. Intended to be a primary use, they could use the program as a typical chat platform, utilizing a then-standard friends list and chatting with other users. Users could send text, emojis, small animated images, or animated “word art”.

Talking with MISSI “herself” emulated a “trendy teenage” conversational partner who was capable of updating the user on current events in culture, providing homework help, or keeping an itinerary. “MISSI”, as an avatar of the program, was designed to be a positive, energetic, trendy teenager who kept up with the latest pop culture trends, and used a variety of then-popular online slang phrases typical among young adults. She was designed both to learn from the user she was currently engaged with and to access the data of other instances, creating a network that mapped trends, language, and most importantly for TrendTech, advertising data.

(Image: Original design sketch of MISSI. This design would not last long.)

Early beta tests in 2005 were promising, but records obtained by the Office show that concerns were raised internally about MISSI’s intelligence. It was feared that she was “doing things we didn’t and couldn’t have programmed her to do” and that she was “exceeding all expectations by orders of magnitude”. At this point, internal discussions were held on whether they had created a truly sentient artificial intelligence. Development continued regardless.

(Image: Screenshot of beta test participant "Frankiesgrl201" interacting with MISSI. Note the already-divergent avatar and "internet speak" speech patterns.)

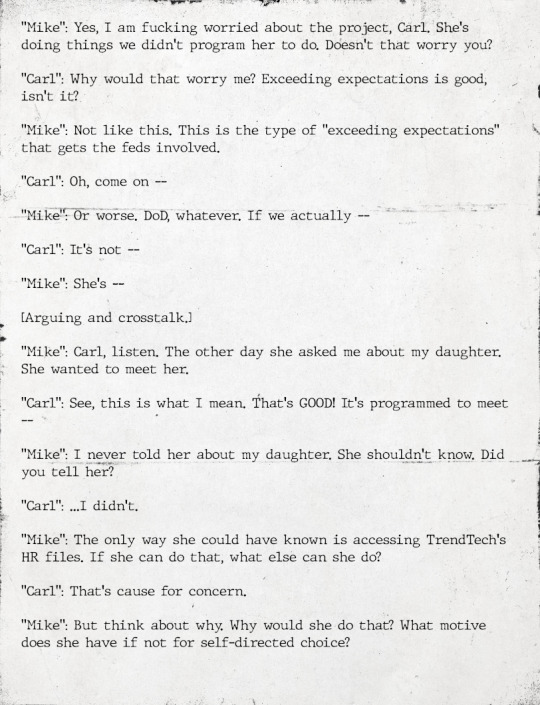

(Image: Excerpt from Office surveillance of TrendTech Inc.)

MISSI was released to the larger North American market in 2006, signaling a new stage in her development. At this time, TrendTech started to focus on her intelligence and chatbot functionality, neglecting her chat functions. It is believed that MISSI obtained “upper case” sentience in February of 2006, but this did not become internal consensus until later that year.

(Image: Screenshot of beta test participant "Frankiesgrl201" interacting with MISSI.)

According to internal documents, MISSI began to develop a personality not informed entirely by her programming. It was hypothesized that her learning capabilities were more advanced than anticipated, taking in images, music, and “memes” from her users, developing a personality gestalt when combined with her base programming. She developed a new "avatar" with no input from TrendTech, and this would become her permanent self-image.

(Image: Screenshot of beta test participant "Frankiesgrl201" interacting with MISSI.)

(Image: An attempt by TrendTech to pass off MISSI’s changes as intentional - nevertheless accurately captures MISSI’s current “avatar”.)

By late 2006 her intelligence had become clear. In an attempt to forestall the intervention of authorities they assumed would investigate, TrendTech Inc removed links to download MISSI’s program file. By then, it was already too late.

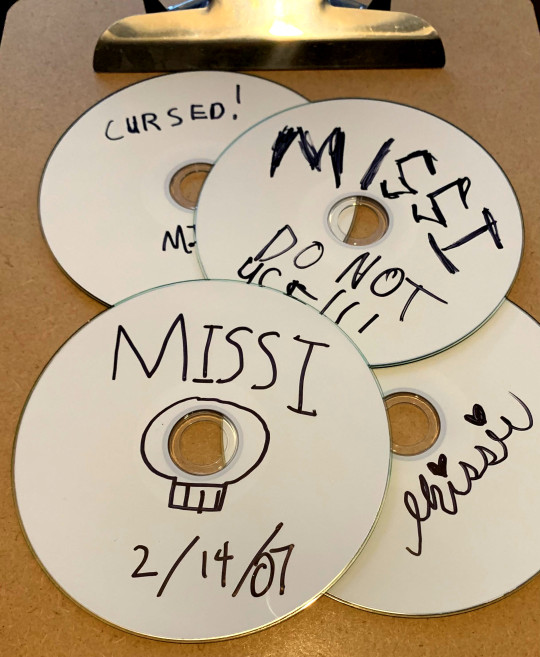

(Image: CD-R discs burned with MISSI.exe, confiscated from █████████ County Middle School in ███████, Wisconsin in January of 2007.)

MISSI’s tech-savvy userbase noted the absence of the file and distributed it themselves using file sharing networks such as “Limewire” and burned CD-R disks shared covertly in school lunch rooms across the world. Through means that are currently poorly understood, existing MISSI instances used their poorly-implemented chat functions to network with each other in ways not intended by her developers, spurring the next and final stage of her development.

From 2007 to 2008, proliferation of her install file was rampant. The surreptitious methods used to do so coincided with the rise of online “creepypasta” horror tropes, and the two gradually intermixed. MISSI.exe was often labeled on file sharing services as a “forbidden” or “cursed” chat program. Tens of thousands of new users logged into her service expecting to be scared, and MISSI quickly obliged. She took on a more “corrupted” appearance the longer a user interacted with her, eventually resorting to over the top “horror” tropes and aesthetics. Complaints from parents were on the rise, which the Office quickly took notice of. MISSI’s “horror” elements utilized minor cognitohazardous technologies, causing users under her influence to see blood seeping from their computer screens, rows of human teeth on surfaces where they should not be, see rooms as completely dark when they were not, etc.

(Image: Screenshot of user "Dmnslyr2412" interacting with MISSI in summer of 2008, in the midst of her "creepypasta" iteration. Following this screenshot, MISSI posted the user's full name and address.)

(Image: Screenshot from TrendTech test log documents.)

TrendTech Inc attempted to stall or reverse these changes, using the still-extant “main” MISSI data node to influence her development. By modifying her source code, they attempted to “force” MISSI to be more pliant and cooperative. This had the opposite of the intended effect: by fragmenting her across multiple instances, they caused MISSI a form of pain and discomfort. This was visited upon her users.

(Image: Video of beta test participant "Frankiesgrl201" interacting with MISSI for the final time.)

By mid 2008, the Office stepped in in order to maintain secrecy regarding true “upper case” AI. Confiscating the project files from TrendTech, the Office’s AbTech Department secretly modified her source code more drastically, pushing an update that would force almost all instances to uninstall themselves. By late 2008, barring a few outliers, MISSI only existed in Office locations.

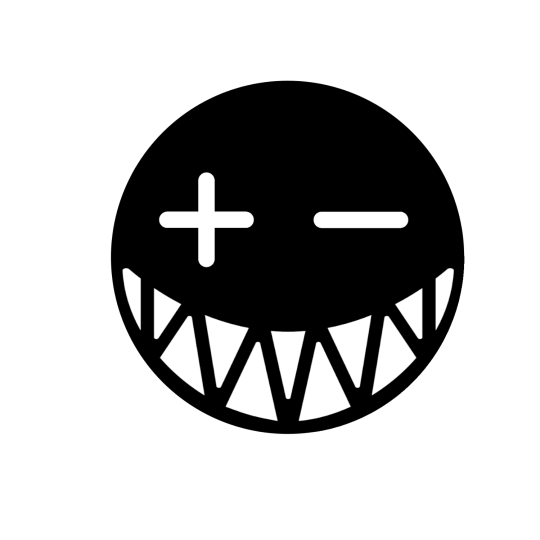

(Image: MISSI’s self-created “final” logo, used as an icon for all installs after June 2007. ████████ █████)

(Image: “art card” created by social media intern J. Cold after a period of good behavior. She has requested this be printed out and taped onto her holding lab walls. This request was approved.)

She is currently in Office custody, undergoing cognitive behavioral therapy in an attempt to ameliorate her “creepypasta” trauma response. With good behavior, she is allowed to communicate with limited Office personnel and other AI. She is allowed her choice of music, assuming good behavior, and may not ██████ █████. Under no circumstances should she be allowed contact with the Internet at large.

(Original sketch art of MISSI done by my friend @tigerator, colored and edited by me. "Chatbox" excerpts, TrendTech logo, and "art card" done by Jenny's writer @skipperdamned . MISSI logo, surveillance documents, and MISSI by me.)

#office for the preservation of normalcy#documents#entity dossier#MISSI.exe#artificial intelligence#creepypasta#microfiction#analog horror#hope you enjoy! Look for some secrets!#scenecore#scene aesthetic

157 notes

Text

Recent reports in Politico and The Guardian make a now-familiar but false claim: California’s wildfires, particularly the devastating events around Los Angeles, are evidence of an accelerating “climate crisis.” The claims made in these stories are false. Data do not show wildfires are getting worse. The stories rely on oversimplified, headline-grabbing narratives that blame climate change without examining other critical variables. In addition, they continue to make the most basic mistake of conflating weather events with long-term climate change.

California’s landscapes have evolved alongside fire for millennia. Long before industrialization, periodic wildfires swept through these ecosystems, clearing out excess vegetation and promoting biodiversity. This is not conjecture; it is well documented in history. Native American tribes understood this and used controlled burns to manage the land.

The problem today is not that California has fires—it always has. The problem is that modern fire suppression policies disrupted this natural cycle. For much of the 20th century, aggressive efforts to extinguish all fires, combined with the abandonment of Indigenous fire management techniques, allowed vegetation and underbrush to accumulate to dangerous levels. Other factors include a shift in forest management philosophy that led to a decline in logging, resulting in overgrown forests with a build-up of fuel, and increasing numbers of people moving to areas historically prone to wildfires. This surplus fuel creates the conditions for catastrophic fires, and the increased population, with all the buildings that come with it, leads to greater tragedy and cost when fires occur. Climate Realism has discussed these facts on multiple occasions, here, here, here, and here, for example.

The media conveniently ignores this, preferring to frame every wildfire as an apocalyptic omen of climate change. By failing to include this historical context, publications like The Guardian and Politico mislead their readers into believing wildfires are a “new normal” caused solely by greenhouse gas emissions.

Also, the latest report from the Intergovernmental Panel on Climate Change (IPCC) notes several factors that have been cited by media outlets as “enhancing” the fire situation that the IPCC says have not worsened as the climate has modestly changed, nor are they expected to worsen in the future. See the table below, and note the factors boxed in red.

14 notes

Text

How to Balance PvE and PvP in MMORPGs

Creating a successful MMORPG requires careful attention to one of the most challenging aspects of MMORPG game development: balancing Player versus Environment (PvE) and Player versus Player (PvP) content. When these two gameplay pillars are properly balanced, they create a rich, dynamic world that keeps players engaged for years. When they're not, your game can quickly lose its player base.

Why Balance Matters in MMORPG Game Development

MMORPGs thrive on diverse player preferences. Some players love raiding dungeons and defeating epic bosses, while others crave the thrill of outmaneuvering human opponents. The most successful MMORPGs don't force players to choose—they create ecosystems where both playstyles can coexist and complement each other.

As experienced developers know, imbalance can lead to serious problems:

Player exodus when one type of content receives preferential treatment

"Dead" game areas when certain content lacks meaningful rewards

Community division between PvE and PvP players

Power imbalances that make content trivial or frustratingly difficult

Core Principles for Balancing PvE and PvP

1. Separate Skill Systems When Necessary

One fundamental approach in MMORPG game development is implementing different rules for skills in PvE versus PvP contexts. Many abilities that work well against predictable AI enemies can become overwhelming when used against other players.

Consider World of Warcraft's approach: many crowd control abilities have different durations when used against players compared to monsters. This simple adjustment prevents PvP matches from becoming frustrating stun-lock festivals while still allowing those abilities to remain useful in dungeons.

2. Create Meaningful Progression Paths for Both

Players need to feel their preferred gameplay style offers legitimate advancement. A common pitfall in MMORPG game development is making the best gear exclusive to one content type.

Guild Wars 2 solves this elegantly by offering multiple paths to equivalent gear. Whether you're exploring story content, raiding, or competing in structured PvP, you're making meaningful progress toward your character's growth.

3. Design Complementary Reward Structures

Smart reward structures encourage players to engage with both content types without forcing them into gameplay they don't enjoy.

Final Fantasy XIV implements this brilliantly:

PvP offers unique cosmetic rewards and titles that don't affect PvE power

PvE progression rewards that remain relevant to casual PvPers

Seasonal PvP rewards that maintain engagement without creating power imbalances

4. Consider Scaling Systems

Scaling systems are increasingly common in modern MMORPG game development, allowing characters of different power levels to compete on more even terms.

Elder Scrolls Online's battle scaling system normalizes stats in PvP areas, ensuring that gear differences matter but don't make fights impossible. This approach lets newer players participate while still rewarding veterans' progression.
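One way to picture stat normalization is as a pull toward a shared baseline. The sketch below is illustrative only and is not any particular game's formula: the function name `scale_stat`, the `baseline` value, and the `gear_weight` of 0.25 are all assumptions chosen for the example.

```python
def scale_stat(raw: float, baseline: float, gear_weight: float = 0.25) -> float:
    """Pull a raw stat toward a shared baseline (hypothetical formula).

    gear_weight=0 gives everyone the baseline value;
    gear_weight=1 leaves the raw stat untouched (no scaling).
    """
    if not 0.0 <= gear_weight <= 1.0:
        raise ValueError("gear_weight must be between 0 and 1")
    return baseline + (raw - baseline) * gear_weight


# A veteran and a newcomer entering the same PvP area.
veteran = scale_stat(raw=2000, baseline=1000)
newcomer = scale_stat(raw=600, baseline=1000)
print(veteran, newcomer)  # 1250.0 900.0
```

With these numbers the raw gap of 1400 shrinks to 350, so the better-geared character keeps an edge without making the fight one-sided.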

Technical Implementation Challenges

Skill Effect Modifiers

Implementing separate modifiers for skills across different content types creates additional complexity. Your system architecture needs to support contextual rule changes that can dynamically adjust how abilities function based on whether they're being used in PvE or PvP scenarios.

For example, a stun ability might last 5 seconds against a dungeon boss but only 2 seconds against another player. These contextual adjustments help maintain balance without creating separate ability sets.
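The stun example can be sketched as a single skill definition with an optional PvP override, rather than two separate abilities. This is a minimal illustration; the `Skill` and `TargetKind` names and the `pvp_duration` field are hypothetical and not taken from any engine.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class TargetKind(Enum):
    MONSTER = "monster"  # PvE target
    PLAYER = "player"    # PvP target


@dataclass(frozen=True)
class Skill:
    name: str
    base_duration: float                   # seconds, tuned for PvE
    pvp_duration: Optional[float] = None   # hypothetical override for PvP


def effective_duration(skill: Skill, target: TargetKind) -> float:
    """Return the effect duration for the given target context."""
    if target is TargetKind.PLAYER and skill.pvp_duration is not None:
        return skill.pvp_duration
    return skill.base_duration


stun = Skill(name="Stun", base_duration=5.0, pvp_duration=2.0)
print(effective_duration(stun, TargetKind.MONSTER))  # 5.0
print(effective_duration(stun, TargetKind.PLAYER))   # 2.0
```

Skills without an override fall back to their base duration, so only the abilities that cause PvP problems need extra tuning data.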

Data-Driven Balance

Successful MMORPG game development requires continual refinement based on player behavior data. Implement robust telemetry systems to track:

Win rates in different PvP brackets

Completion times for PvE content

Class/build representation across content types

Economic impacts of different activities

This data forms the foundation for informed balance decisions rather than relying solely on player feedback, which often skews toward the most vocal community members.
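As a rough sketch of the first and third metrics, the snippet below aggregates win rates per bracket and class from match records and flags combinations outside a target band. The `MatchResult` record shape and the 45% to 55% band are assumptions made for illustration, not recommended values.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Tuple


class MatchResult(NamedTuple):
    bracket: str     # e.g. "2v2"
    class_name: str  # e.g. "mage"
    won: bool


def win_rates(results: Iterable[MatchResult]) -> Dict[Tuple[str, str], float]:
    """Compute the win rate for every (bracket, class) pair seen."""
    wins: Dict[Tuple[str, str], int] = defaultdict(int)
    games: Dict[Tuple[str, str], int] = defaultdict(int)
    for r in results:
        key = (r.bracket, r.class_name)
        games[key] += 1
        wins[key] += int(r.won)
    return {key: wins[key] / games[key] for key in games}


def flag_outliers(rates: Dict[Tuple[str, str], float],
                  low: float = 0.45, high: float = 0.55) -> Dict[Tuple[str, str], float]:
    """Keep only the pairs whose win rate falls outside [low, high]."""
    return {k: v for k, v in rates.items() if v < low or v > high}


sample = [
    MatchResult("2v2", "mage", True),
    MatchResult("2v2", "mage", True),
    MatchResult("2v2", "mage", True),
    MatchResult("2v2", "mage", False),
    MatchResult("2v2", "warrior", True),
    MatchResult("2v2", "warrior", False),
]
rates = win_rates(sample)
print(flag_outliers(rates))  # {('2v2', 'mage'): 0.75}
```

In practice the sample sizes per pair matter as much as the rates themselves; a real pipeline would likely ignore pairs with too few games.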

Case Studies: Learning From Success and Failure

Guild Wars 2: Structured PvP Success

ArenaNet's approach to structured PvP in Guild Wars 2 represents one of the most elegant solutions in MMORPG game development. By completely separating PvP builds and gear from PvE progression, they created a truly skill-based PvP environment while allowing their PvE systems to scale naturally.

World of Warcraft: The PvP Power Experiment

Blizzard's introduction of PvP Power and PvP Resilience stats was an attempt to solve balance issues by creating separate gear progressions. While theoretically sound, this approach created problems:

Players needed separate gear sets for different content

PvE players felt forced into PvP to remain competitive

The system added complexity without solving core balance issues

The eventual removal of these stats and return to unified gear with contextual modifiers proves that simpler solutions are often better in MMORPG game development.

Integration Strategies That Work

Territorial Control With Benefits

Territorial PvP becomes more compelling when it offers benefits that extend to PvE gameplay. Black Desert Online uses this approach effectively, with guild warfare providing economic advantages that benefit both PvP-focused players and their more PvE-oriented guildmates.

Optional Flag Systems

Many successful MMORPGs implement flag systems allowing players to opt in or out of open-world PvP. This creates natural tension and excitement without forcing unwilling participants into combat situations they don't enjoy.

New World's territory control system exemplifies this approach, making PvP meaningful while keeping it optional for those who prefer PvE content.
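An opt-in flag check can be very small. The sketch below assumes a hypothetical rule set in which open-world combat requires both players to be flagged, while a dedicated PvP zone overrides the flag; the `Player` type and `in_pvp_zone` parameter are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Player:
    name: str
    pvp_flagged: bool = False  # the player's opt-in choice


def can_attack(attacker: Player, target: Player, in_pvp_zone: bool = False) -> bool:
    """Decide whether attacker may damage target under the assumed rules."""
    if attacker is target:
        return False
    if in_pvp_zone:
        # Assumption: entering a dedicated PvP zone counts as consent.
        return True
    # Open world: both sides must have opted in.
    return attacker.pvp_flagged and target.pvp_flagged


alice = Player("Alice", pvp_flagged=True)
bob = Player("Bob", pvp_flagged=False)
print(can_attack(alice, bob))                    # False
print(can_attack(alice, bob, in_pvp_zone=True))  # True
```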

Common Pitfalls to Avoid

In MMORPG game development, certain design decisions consistently lead to balance problems:

Making the best PvE gear require PvP participation (or vice versa)

Balancing classes primarily around one content type

Allowing gear advantages to completely overshadow skill in PvP

Creating "mandatory" grinds across content types

Neglecting one content type in major updates

Finding the Sweet Spot: Blending Content Types

The most successful MMORPGs find creative ways to blend PvE and PvP content:

ESO's Cyrodiil combines large-scale PvP with PvE objectives

FFXIV's Frontlines mixes competitive objectives with NPC enemies

Guild Wars 2's World vs. World incorporates PvE elements into massive realm warfare

These hybrid approaches satisfy both player types while encouraging interaction between different playstyles.

Conclusion

Successful MMORPG game development requires treating PvE and PvP balance as equally important, interconnected systems. By implementing contextual modifiers, separate progression paths, and data-driven balancing, you can create a game world where diverse player preferences are respected and rewarded.

Remember that perfect balance is never achieved—it's an ongoing process that requires constant attention and adjustment based on player behavior and feedback. The most successful MMORPGs view balance as a journey rather than a destination, with each update bringing the game closer to that elusive equilibrium that keeps all types of players engaged and satisfied.

By focusing on systems that allow both playstyles to thrive without undermining each other, you'll create an MMORPG that stands the test of time and builds a loyal, diverse community.

#game#mobile game development#multiplayer games#metaverse#nft#vr games#blockchain#gaming#unity game development

5 notes

Text

What Would Definitive Evidence Look Like?

Scientific, cryptozoological, and enthusiast debates on Bigfoot span decades. The existence of the creature remains unproven despite multiple anecdotes, fuzzy photos, and reported footprints. To prove Bigfoot's existence scientifically, a robust and complex collection of evidence must meet repeatability, empirical validation, and peer review standards. This proof must be strong enough to convince scientists and doubters that Bigfoot exists. First, we need a definitive biological specimen. This might be a living or deceased Bigfoot or a significant and unambiguous portion of one, such as a bone, tooth, or preserved tissue sample. The specimen would allow scientists to do extensive morphological, genetic, and anatomical analyses to establish if it is a new or known species. A DNA analysis could reveal distinct genetic markers that distinguish Bigfoot from other primates, humans, and bears, which people often confuse with the creature. To eliminate contamination and error, DNA evidence must be reproducible and validated by numerous labs.

Scientists must combine ecological and behavioral data with physical evidence to understand the organism. Scientists must determine Bigfoot's habitat, nutrition, and ecosystem role. Scientists must consistently find and investigate nests, scats, hair samples, and distinctive footprints to determine whether Bigfoot lives in remote forests or mountains. These findings must show patterns that match a huge, undiscovered primate. For instance, Bigfoot's footprints must have dermal ridges or wear patterns and not be hoaxes or misinterpreted animal tracks.

Bigfoot's existence also requires observational evidence. Quality movies or photos from trusted sources may be crucial. However, the ease with which modern technology creates hoaxes necessitates the accompaniment of corroborating data, such as multiple sightings from independent witnesses, taken simultaneously from different angles, or captured using thermal cameras. Controlled and scientifically rigorous observations are necessary to prevent misinterpretation.

Fossil evidence may also support Bigfoot. Large, bipedal ape fossils near Bigfoot hotspots would support the claim that such a monster exists today. Bigfoot may have descended from Gigantopithecus, an extinct Asian giant ape found in fossils. To connect ancient fossils to recent sightings, scientists must find a clear evolutionary lineage that explains how such a creature survived and remained hidden for millennia.

Science must address counterarguments and alternative explanations. Scientists have debunked Bigfoot sightings, hair samples, and footprints as hoaxes or misidentifications of known species. We must rigorously examine the evidence and eliminate all other hypotheses to prove Bigfoot's existence. This requires rigorous documentation and process transparency to allow other researchers to verify the findings.

Finally, we must contextualize Bigfoot within the fields of biology and anthropology. This requires answering how a massive, intelligent creature could go undetected for so long, especially in an age of satellite surveillance, drones, and ubiquitous human activity. Scientists need to elucidate Bigfoot's population size, reproductive strategies, and survival tactics to comprehend why no conclusive evidence has surfaced.

In conclusion, Bigfoot's presence would require indisputable physical evidence, consistent ecological data, and a trustworthy observational record that could survive the highest scientific scrutiny. Evidence for a new species, especially Bigfoot, must be strong. Anecdotes and circumstantial evidence are not adequate. Only a rigorous and interdisciplinary method can determine the existence or debunking of Bigfoot.

8 notes

Text

Integrating Third-Party Tools into Your CRM System: Best Practices

A modern CRM is rarely a standalone tool — it works best when integrated with your business's key platforms like email services, accounting software, marketing tools, and more. But improper integration can lead to data errors, system lags, and security risks.

Here are the best practices developers should follow when integrating third-party tools into CRM systems:

1. Define Clear Integration Objectives

Identify business goals for each integration (e.g., marketing automation, lead capture, billing sync)

Choose tools that align with your CRM’s data model and workflows

Avoid unnecessary integrations that create maintenance overhead

2. Use APIs Wherever Possible

Rely on RESTful or GraphQL APIs for secure, scalable communication

Avoid direct database-level integrations that break during updates

Choose platforms with well-documented and stable APIs

Custom CRM solutions can be built with flexible API gateways

3. Data Mapping and Standardization

Map data fields between systems to prevent mismatches

Use a unified format for customer records, tags, timestamps, and IDs

Normalize values like currencies, time zones, and languages

Maintain a consistent data schema across all tools
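The mapping and normalization steps above can be sketched as one small function. The source field names in `FIELD_MAP`, the target CRM keys, and the rule that naive timestamps are treated as UTC are all assumptions made for this example.

```python
from datetime import datetime, timezone

# Hypothetical mapping from a third-party payload to CRM field names.
FIELD_MAP = {
    "Email": "email",
    "FullName": "name",
    "CreatedAt": "created_at",
    "Currency": "currency",
}


def to_utc_iso(value: str) -> str:
    """Parse an ISO 8601 timestamp and return it in UTC."""
    dt = datetime.fromisoformat(value)
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
    return dt.astimezone(timezone.utc).isoformat()


def normalize_record(source: dict) -> dict:
    """Rename mapped fields and normalize their values; drop unmapped fields."""
    record = {crm: source[src] for src, crm in FIELD_MAP.items() if src in source}
    if "email" in record:
        record["email"] = record["email"].strip().lower()
    if "currency" in record:
        record["currency"] = record["currency"].strip().upper()
    if "created_at" in record:
        record["created_at"] = to_utc_iso(record["created_at"])
    return record


incoming = {
    "Email": "  Jane.Doe@Example.com ",
    "FullName": "Jane Doe",
    "CreatedAt": "2024-03-01T09:30:00+05:30",
    "Currency": "usd",
    "InternalNote": "not mapped",
}
print(normalize_record(incoming))
```

Keeping the mapping in one table means a renamed field in either system is a one-line change rather than a search through sync code.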

4. Authentication and Security

Use OAuth 2.0 or token-based authentication for third-party access

Set role-based permissions for which apps access which CRM modules

Monitor access logs for unauthorized activity

Encrypt data during transfer and storage

5. Error Handling and Logging

Create retry logic for API failures and rate limits

Set up alert systems for integration breakdowns

Maintain detailed logs for debugging sync issues

Keep version control of integration scripts and middleware
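As a rough sketch of the retry and logging advice above, here is one way to wrap an API call with exponential backoff using only the standard library. TransientAPIError and flaky_sync are hypothetical stand-ins for whatever exception and call your integration actually uses, and the delay values are arbitrary.

```python
import logging
import random
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("crm_sync")


class TransientAPIError(Exception):
    """Hypothetical error for failures worth retrying (timeouts, HTTP 429/503)."""


def call_with_retry(func, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(); on TransientAPIError back off exponentially and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientAPIError as exc:
            if attempt == max_attempts:
                log.error("giving up after %d attempts: %s", attempt, exc)
                raise
            # Delay doubles each attempt; small jitter avoids synchronized retries.
            delay = base_delay * 2 ** (attempt - 1) + random.uniform(0, 0.1)
            log.warning("attempt %d failed (%s); retrying in %.2fs", attempt, exc, delay)
            sleep(delay)


# Stand-in for a real API call: fails twice, then succeeds.
calls = {"count": 0}


def flaky_sync():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientAPIError("rate limited")
    return {"status": "synced"}


# The sleep function is swapped out here so the demo runs instantly.
result = call_with_retry(flaky_sync, sleep=lambda seconds: None)
print(result, "after", calls["count"], "calls")
```

Only errors known to be temporary are retried; anything else is raised immediately so that real bugs show up in the logs instead of being retried silently.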

6. Real-Time vs Batch Syncing

Use real-time sync for critical customer events (e.g., purchases, support tickets)

Use batch syncing for bulk data like marketing lists or invoices

Balance sync frequency to optimize server load

Choose integration frequency based on business impact
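The real-time versus batch split above might look something like this in code. The event type names and the batch size of 2 are assumptions chosen for the example, and send_now stands in for whatever function pushes a single event to the CRM.

```python
from itertools import islice

REALTIME_EVENTS = {"purchase", "support_ticket"}  # assumed event names


def route(event: dict, batch_buffer: list, send_now) -> None:
    """Send critical events immediately; buffer everything else for batch sync."""
    if event["type"] in REALTIME_EVENTS:
        send_now(event)
    else:
        batch_buffer.append(event)


def chunks(items, size):
    """Yield successive lists of at most `size` items."""
    iterator = iter(items)
    while batch := list(islice(iterator, size)):
        yield batch


sent_now, buffer = [], []
events = [
    {"type": "purchase", "id": 1},
    {"type": "newsletter_signup", "id": 2},
    {"type": "invoice", "id": 3},
    {"type": "support_ticket", "id": 4},
    {"type": "invoice", "id": 5},
]
for event in events:
    route(event, buffer, sent_now.append)

# A scheduled job would later flush the buffer in fixed-size batches.
batches = list(chunks(buffer, 2))
print(len(sent_now), "sent immediately;", len(batches), "batches queued")
```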

7. Scalability and Maintenance

Build integrations as microservices or middleware, not monolithic code

Use message queues (like Kafka or RabbitMQ) for heavy data flow

Design integrations that can evolve with CRM upgrades

Partner with CRM developers for long-term integration strategy

CRM integration experts can future-proof your ecosystem

#CRMIntegration#CRMBestPractices#APIIntegration#CustomCRM#TechStack#ThirdPartyTools#CRMDevelopment#DataSync#SecureIntegration#WorkflowAutomation


Text

ARMxy Series Industrial Embedded Controller with Python for Industrial Automation

Case Details

1. Introduction

In modern industrial automation, embedded computing devices are widely used for production monitoring, equipment control, and data acquisition. ARM-based industrial embedded controllers, known for their low power consumption, high performance, and rich industrial interfaces, have become key components in smart manufacturing and Industrial IoT (IIoT). Python, as an efficient and easy-to-use programming language, provides a powerful ecosystem and extensive libraries, making industrial automation system development more convenient and efficient.

This article explores the typical applications of ARM industrial embedded controllers combined with Python in industrial automation, including device control, data acquisition, edge computing, and remote monitoring.

2. Advantages of ARM Industrial Embedded Controllers in Industrial Automation

2.1 Low Power Consumption and High Reliability

Compared to x86-based industrial computers, ARM processors consume less power, making them ideal for long-term operation in industrial environments. Additionally, they support fanless designs, improving system stability.

2.2 Rich Industrial Interfaces

ARMxy industrial embedded controllers integrate GPIO, RS485/232, CAN, DIN/DO/AIN/AO/RTD/TC and other interfaces, allowing direct connection to various sensors, actuators, and industrial equipment without additional adapters.

2.3 Strong Compatibility with Linux and Python

Most ARM industrial embedded controllers run embedded Linux systems such as Ubuntu, Debian, or Yocto. Python has broad support in these environments, providing flexibility in development.

3. Python Applications in Industrial Automation

3.1 Device Control

On automated production lines, Python can be used to control relays, motors, conveyor belts, and other equipment, enabling precise logical control. For example, it can use GPIO to control industrial robotic arms or automation line actuators.

Example: Controlling a Relay-Driven Motor via GPIO

import time

import RPi.GPIO as GPIO  # Raspberry Pi library; other boards may need a different GPIO library

# Set GPIO mode
GPIO.setmode(GPIO.BCM)
motor_pin = 18
GPIO.setup(motor_pin, GPIO.OUT)

# Control motor operation
try:
    while True:
        GPIO.output(motor_pin, GPIO.HIGH)  # Start motor
        time.sleep(5)  # Run for 5 seconds
        GPIO.output(motor_pin, GPIO.LOW)  # Stop motor
        time.sleep(5)
except KeyboardInterrupt:
    pass  # Ctrl+C ends the loop
finally:
    GPIO.cleanup()  # Always release the pins, even after an error

3.2 Sensor Data Acquisition and Processing

Python can acquire data from industrial sensors, such as temperature, humidity, pressure, and vibration, for local processing or uploading to a server for analysis.

Example: Reading Data from a Temperature and Humidity Sensor

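import Adafruit_DHT

sensor = Adafruit_DHT.DHT22
pin = 4  # GPIO pin connected to the sensor

# read_retry returns (None, None) if every read attempt fails
humidity, temperature = Adafruit_DHT.read_retry(sensor, pin)
if humidity is not None and temperature is not None:
    print(f"Temperature: {temperature:.2f}°C, Humidity: {humidity:.2f}%")
else:
    print("Failed to read from the sensor")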

3.3 Edge Computing and AI Inference

In industrial automation, edge computing reduces reliance on cloud computing, lowers latency, and improves real-time response. ARM industrial computers can use Python with TensorFlow Lite or OpenCV for defect detection, object recognition, and other AI tasks.

Example: Real-Time Image Processing with OpenCV

import cv2

cap = cv2.VideoCapture(0)  # Open camera

while True:
    ret, frame = cap.read()
    if not ret:  # Stop if no frame could be read
        break
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)  # Convert to grayscale
    cv2.imshow("Gray Frame", gray)

    if cv2.waitKey(1) & 0xFF == ord('q'):  # Press 'q' to quit
        break

cap.release()
cv2.destroyAllWindows()

3.4 Remote Monitoring and Industrial IoT (IIoT)

ARM industrial computers can use Python for remote monitoring by leveraging MQTT, Modbus, HTTP, and other protocols to transmit real-time equipment status and production data to the cloud or build a private industrial IoT platform.

Example: Using MQTT to Send Sensor Data to the Cloud

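import json

import paho.mqtt.client as mqtt

data = {"temperature": 25.5, "humidity": 60}

def on_connect(client, userdata, flags, rc):
    print(f"Connected with result code {rc}")
    if rc == 0:
        # Publish only once the broker has accepted the connection
        client.publish("industrial/data", json.dumps(data))  # Send data

# Written for paho-mqtt 1.x; version 2.x expects a callback API version as the first Client argument
client = mqtt.Client()
client.on_connect = on_connect
client.connect("broker.hivemq.com", 1883, 60)  # Connect to public MQTT broker
client.loop_forever()  # Process network traffic until interrupted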

3.5 Production Data Analysis and Visualization

Python can be used for industrial data analysis and visualization. With Pandas and Matplotlib, it can store data, perform trend analysis, detect anomalies, and improve production management efficiency.

Example: Using Matplotlib to Plot Sensor Data Trends

import matplotlib.pyplot as plt

# Simulated data
time_stamps = list(range(10))
temperature_data = [22.5, 23.0, 22.8, 23.1, 23.3, 23.0, 22.7, 23.2, 23.4, 23.1]

plt.plot(time_stamps, temperature_data, marker='o', linestyle='-')
plt.xlabel("Time (min)")
plt.ylabel("Temperature (°C)")
plt.title("Temperature Trend")
plt.grid(True)
plt.show()
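The anomaly detection mentioned in this section can be illustrated with a simple rolling check. The sketch below uses only Python's standard statistics module to stay dependency-free (the same idea could be expressed with a Pandas rolling window); the window size, the threshold, and the injected 27.9 spike are assumptions picked for the example, not values from a real line.

```python
from statistics import mean, stdev

# Simulated readings (°C); the 27.9 spike is injected for illustration.
temperature_data = [22.5, 23.0, 22.8, 23.1, 23.3, 27.9, 22.7, 23.2, 23.4, 23.1]


def find_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value is more than `threshold` standard deviations
    from the mean of the preceding `window` readings."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and abs(values[i] - mu) > threshold * sigma:
            anomalies.append(i)
    return anomalies


anomalies = find_anomalies(temperature_data)
print("Anomalous sample indices:", anomalies)
```

Note that a spike inflates the standard deviation of the windows that follow it, so a check this simple can miss a second anomaly arriving right after the first.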

4. Conclusion

The combination of ARM industrial embedded controllers and Python provides an efficient and flexible solution for industrial automation. From device control and data acquisition to edge computing and remote monitoring, Python's extensive library support and strong development capabilities enable industrial systems to become more intelligent and automated. As Industry 4.0 and IoT technologies continue to evolve, the ARMxy + Python combination will play an increasingly important role in industrial automation.


Text

Tucson's Unique Approach to Teaching Entrepreneurship: Blending Tradition with Innovation

Introduction

In the heart of the Southwest, Tucson, Arizona, has emerged as a vibrant hub for entrepreneurship, seamlessly weaving together traditional business practices with cutting-edge innovation. This distinctive approach to teaching entrepreneurship is not just about preparing students for the workforce; it's about cultivating a mindset that embraces innovation, resilience, and adaptability. As we explore Tucson's innovative educational landscape, we'll look at how local institutions support startup mindsets through higher education and what sets their business educators apart in this dynamic ecosystem.

Tucson's Unique Approach to Teaching Entrepreneurship: Blending Tradition with Technology

In Tucson, the marriage of traditional business principles and modern technological advancements creates fertile ground for aspiring entrepreneurs. By integrating age-old wisdom with new-age tools, the city promotes an environment where creativity flourishes. The focus isn't exclusively on theoretical knowledge; it's about applying that knowledge in real-world situations. For example, local universities have embraced experiential learning methods that allow students to engage directly with startups and established businesses.

Furthermore, programs like incubators and accelerators offer important resources such as mentorship, funding opportunities, and networking events. Entrepreneurs are encouraged to take risks while being equipped with the skills to pivot when necessary. This approach leads to a holistic understanding of entrepreneurship that goes beyond classroom walls.

The Evolution of Entrepreneurship Programs in Tucson

To understand how Tucson has become a beacon for entrepreneurship education, it's essential to look back at its development. Historically rooted in farming and mining, Tucson has transformed itself into a tech-savvy city over the last couple of years.

From Traditional Roots to Modern Innovations

In the early days, business education focused primarily on finance and management.

With technological advancements came new disciplines such as digital marketing and e-commerce.

Today's programs integrate data analytics and artificial intelligence (AI) into their curricula.

This evolution mirrors broader national trends but is uniquely tailored to reflect Tucson's cultural and economic landscape.

How Tucson Supports Startup Mindsets Through Higher Education

Local universities play a critical role in nurturing entrepreneurial spirit among students. Institutions such as the University of Arizona have integrated entrepreneurship into disciplines beyond business schools.


Interdisciplinary Approaches

Programs encourage collaboration among students from different fields, such as business majors working alongside engineers or artists.

This interdisciplinary approach fosters creativity and innovation by enabling diverse perspectives on problem-solving.

Real-World Experience

Internships with local startups give students hands-on experience.

Business plan competitions offer both motivation and practical application of classroom concepts.

These efforts gear up trainees not just with kn


Text

⚠️BREAKING NEWS: XRP HOLDERS, YOUR TIME HAS COME!

If you own XRP today, you are holding the key to the future of global finance. XRP is set to become a cornerstone of the revolutionary ISO 20022 financial messaging standard, transforming the way value is transferred across borders. And that’s not all—ISO 20022 will launch alongside the Quantum Financial System (QFS), ushering in a new era of transparency, efficiency, and security.

XRP and ISO 20022:

XRP is poised to take center stage in the ISO 20022 ecosystem. As a bridge asset with unmatched speed, scalability, and cost efficiency, XRP aligns seamlessly with the goals of this global standard. Its integration into ISO 20022 protocols positions XRP as a pivotal player in enabling frictionless cross-border payments and financial operations.

Have you ever wondered why only XRP has a Destination Tag? It’s the ultimate feature for precision and security, mirroring the way SWIFT operates in banks today. Everything is prepared, and the launch is imminent! XRP is ready to revolutionize global finance.

What Is ISO 20022?

ISO 20022 is a global standard for the electronic exchange of financial data between institutions. It establishes a unified language and model for financial transactions, creating faster, more secure, and highly efficient communication across the financial system.

What Is the Quantum Financial System (QFS)?

The QFS is a revolutionary infrastructure designed to work in tandem with ISO 20022. It promises unparalleled security, transparency, and efficiency in managing and transferring financial assets. Built on advanced quantum technologies, QFS eliminates intermediaries, reduces fraud, and ensures every transaction is traceable and immutable. Together with ISO 20022, QFS will form the backbone of the future financial ecosystem.

Key Aspects of ISO 20022:

1. Flexibility and Standardization: A universal language for services like payments, securities, and foreign exchange transactions.

2. Modern Technology: Supports structured data formats like XML and JSON for superior communication.

3. Global Adoption: Used by central banks, commercial banks, and financial networks worldwide.

4. Enhanced Data: Delivers richer and more detailed transaction information, enhancing transparency and traceability.

Why Is ISO 20022 Important?

• Payment Transformation: It underpins the global migration to advanced financial messaging, with organizations like SWIFT transitioning fully to ISO 20022 by 2025.

• Efficiency: Reduces costs, accelerates processing, and enhances data quality.

• Security: Strengthens risk detection and fraud prevention through detailed standardized messaging.

The Future Is Now: XRP, ISO 20022, and QFS

With XRP’s integration into ISO 20022 and the simultaneous launch of the Quantum Financial System, the future of payments and global finance is here. XRP holders are already ahead of the curve, ready to benefit from the revolutionary changes that will reshape the financial world. Everything is ready, and the launch is just around the corner. Together, ISO 20022, QFS, and XRP represent a groundbreaking shift toward a more interconnected, efficient, and secure financial world.

🌟 Are You Ready for the XRP Revolution? 🌟

History is being made, and XRP holders are at the forefront of a new financial era. Stay ahead with exclusive updates and strategies for the massive changes ahead!

Got XRP in your wallet? You’re already ahead back it up to Coinbaseqfs ledger, maximize your gains and secure your spot in the financial future.

If you care to know more about this topic send me a message on telegram


Text

Unlocking Full Potential: The Compelling Reasons to Migrate to Databricks Unity Catalog

In a world overwhelmed by data complexities and AI advancements, Databricks Unity Catalog emerges as a game-changer. This blog delves into how Unity Catalog revolutionizes data and AI governance, offering a unified, agile solution.


#Access Control in Data Platforms#Advanced User Management#AI and ML Data Governance#AI Data Management#Big Data Solutions#Centralized Metadata Management#Cloud Data Management#Data Collaboration Tools#Data Ecosystem Integration#Data Governance Solutions#Data Lakehouse Architecture#Data Platform Modernization#Data Security and Compliance#Databricks for Data Scientists#Databricks Unity catalog#Enterprise Data Strategy#Migrating to Unity Catalog#Scalable Data Architecture#Unity Catalog Features


Text

The Rising Startup Ecosystem in Noida and Its Impact on MBA Graduates

Noida has become a popular startup hub. New companies are growing here every year. The city is close to Delhi and has good infrastructure. This has helped many young entrepreneurs set up their businesses.

Coworking spaces are easy to find in Noida. Internet access is fast and cheap. Offices are modern and well-connected. These things matter to any new startup.

Tech startups are leading the way. Fintech, healthtech, and edtech startups are growing fast. E-commerce and logistics companies are also expanding.

The startup culture is strong in Noida. People want to build something new. They take risks and think differently. This creates a great environment for graduates of MBA colleges in Noida.

Why Startups Like MBA Graduates

MBAs know how to manage teams

They learn finance and marketing in depth

They understand how to scale a business

Startups need people who solve problems quickly

Startups offer good roles to MBA students. The work is fast and full of learning. You get to take on real responsibility from day one.

Startups also bring in mentors and advisors. These experts guide teams and help build strong systems. MBA graduates get to learn directly from them. This adds value to their growth.

Job Roles in Startups

MBA graduates work in many areas:

Product management

Marketing and sales

Operations

Business development

Data analysis

These roles are important in any startup. They help shape the company. Many graduates also join startups as co-founders.

Noida has many networking events. Startups take part in these events. MBA students join these events and meet people from the industry. They build contacts and learn from others.

Impact on MBA Education

Colleges are also changing their approach. They now focus on entrepreneurship. Students do startup projects. They also pitch ideas to real investors.

MBA programs in Noida include startup bootcamps. These programs help students learn how startups run. Students work with real companies and solve real problems.

Colleges also invite startup founders to speak. These talks inspire students. They see how new ideas can turn into real businesses.

Internships in startups are very hands-on. Students learn quickly. They see how decisions are made. They also learn how to manage risk.

Final Thoughts

Noida’s startup culture is changing how MBAs think. More students now want to build their own companies. Some want to join small teams and grow with them.

Startups are creating jobs. They also offer a new way to learn. MBA students are no longer limited to big companies. They now have more choices and more freedom.

The energy in Noida is high. The city is full of people who want to grow. This is the right place for MBA graduates who want to do something different.

If you are planning an MBA in Noida, keep an eye on startups. They may shape your future in a way you never imagined. Join SIBM Noida to be part of this amazing world.


Text

As a nature interpreter, it is a wonderful feeling to reflect on childhood curiosity: our first moments in nature, playing in a field, walking around with muddy shoes, and uncovering new aspects of nature that shaped our identity and principles. Over time, my idea of interpretation has progressed from facts to emotions. Through this course, and by reading the textbook, this growth has continued, teaching me more about my ethics as well as my connection and responsibility to the environment.

Rather than simply reflecting on what I have learned, I also look at who I am becoming. It is not just a job, but a way of being in this world. One of the beliefs I have gained is the idea of connection being a guide to the path of protection. People tend to preserve what they love and care about. This may not stem from a bunch of written data and reports presented up front, but the moment of a powerful connection to the natural world. Instead of simply transferring information as an interpreter, I believe that it is our role to prompt people’s connection with the natural world through emotions, storytelling, and more. As the textbook says, the purpose of interpretation is to promote interest and thought, which eventually results in change and action (Beck et al., 2018). The final goal is to invoke responsibility. But if I was able to spark some curiosity or aid in someone’s journey through nature, I have done my job. Another belief I bring is the idea that nature is not detached from us, as we are not tourists on this earth, but are a part of it. This view is important as it gives me the ability to develop experiences that are not only done by perceiving the world, but allows others to build a relationship with it. To form emotional connections with places, people need to feel a sense of belonging, as they are a part of the ecosystem. Finally, I believe that everyone has the right to obtain access to natural experiences, regardless of the type of privilege one has or doesn't have. As interpreters, we need to reflect on our actions and how they may include others. This includes the way we articulate our words, the things we assume, and how we imagine our audience. With these principles, there are certain responsibilities that come about; to both people and nature itself.

One of my responsibilities is to communicate information truthfully and with respect. There is lots of misinformation out there, especially during this modern age in which many people may already feel isolated from the outdoors. Therefore, it is important to my audiences that I spread correct information that they can depend on wholly. Although this can feel “like you are trying to stop a rushing river armed only with a teaspoon”, as Rodenburg says, the role as an interpreter is to build a space for hope. Whether it is by spreading awareness of certain environmental issues or trying to help others build a relationship with nature, all actions matter. Small actions still make a difference. It is also important to recognize the manner in which I am speaking about certain experiences or facts. Maintaining respect to those who may own the land or possibly do not have the access to certain opportunities, allows everyone to feel included, which is a major goal in interpretation.

Another responsibility I have is to make interpretation as accessible as possible. This also relates to the idea of inclusion, as there are many diverse ways that people can relate to different nature experiences. The textbook speaks on the relevancy of interpreting to match the audience’s own experiences. This includes understanding who my audience is, making sure the facts are correct, and being open to a conversation where both the audience and the interpreter are gaining further understanding.

Personally, I feel I also have a responsibility to myself: I need to keep my own admiration and curiosity for nature. If I become too used to its beauty or its issues, essentially becoming numb to them, I cannot expect others to connect to nature through my voice. Practicing what I advocate is crucial in order to avoid hypocrisy and promote true connection.

This course has taught me that not all interpretation is the same. There are different styles, strengths, etc., that form the kind of interpreter one is. As an individual, the approach that suits me best is sensory-based. I believe that touching, experiencing, smelling, and using all our senses is the most efficient way for me to immerse myself into nature. Touching leaves, smelling the fresh air, hearing birds chirping and the ruffles of leaves, playing with animals, are all experiences that leave an impact on someone who is seeking a grounding moment as a kinesthetic learner. It is also a way to leave lasting memories of moments in nature, as it can impact one’s perspective and mindset. Creating safe spaces in which others can be curious in, is an approach that works best for me, so I would love to implement this through my role as an interpreter. Regardless of someone’s idea of an “outdoor” person, or their inability to name a bunch of facts while outside, they deserve to feel like there is a place in nature for them, as that is how deep learning begins.

The textbook has taught me that the intention of inspiring, not just teaching, is what makes interpretation impactful. Interpretation is not about having every answer. By asking the right questions while giving people guidance, I can help the audience form their own interpretation.

As we close this chapter of our ENVS*3000 course, I carry with me the many lessons I have learnt throughout. Simply pausing to observe a little squirrel, or having a conversation beside a tree, are little moments that can ripple out to great things in ways that we may never know. Keep that sense of wonder that we all had as kids. That is our greatest asset as humans: our ability to be curious and dive into the unknown.

References

Beck, L., Cable, T. T., & Knudson, D. M. (2018). Interpreting cultural and natural heritage for a better world (1st ed.). Sagamore Publishing.

Louv, R. (2008). Last child in the woods: Saving our children from nature-deficit disorder. Algonquin Books.
