#Free DataSets

Explore tagged Tumblr posts

Photo

Understanding Users' Experiences of Interaction with Smart Devices: A Socio-Technical Perspective

This is a short preview of the article: Human-computer interaction (HCI) is a multidisciplinary field that explores the interaction between humans and computers, emphasizing especially the design and use of computer technology. Within this domain, the notion of sense of agency, usually defined as the users perceiving their actions…

If you like it, consider checking out the full version of the post: Understanding Users' Experiences of Interaction with Smart Devices: A Socio-Technical Perspective

If you are looking for ideas to tweet or reblog this post, you may want to consider the following hashtags:

Hashtags: #Agency, #Datasets, #DeviceAgency, #FreeDatasets, #InternetOfThings, #IoT, #SmartDevice, #SmartDevices, #SocioTechnical, #Survey, #UserAgency

The hashtags of the categories are: #HCI, #InternetofThings, #Publication

Understanding Users' Experiences of Interaction with Smart Devices: A Socio-Technical Perspective is available at the following link: https://francescolelli.info/hci/understanding-users-experiences-of-interaction-with-smart-devices-a-socio-technical-perspective/ You will find more information, stories, examples, data, opinions and scientific papers as part of a collection of articles about Information Management, Computer Science, Economics, Finance and More.

It belongs to the following categories: HCI, Internet of Things, Publication

The most relevant keywords are: Agency, datasets, device agency, free datasets, internet of things, IoT, smart device, smart devices, socio-technical, survey, user agency

It has been published by Francesco Lelli at Francesco Lelli, a blog about Information Management, Computer Science, Finance, Economics and nearby ideas and opinions.

I hope you will find it interesting and that it will help you on your journey.

Human-computer interaction (HCI) is a multidisciplinary field that explores the interaction between humans and computers, emphasizing especially the design and use of computer technology. Within this domain, the notion of sense of agency, usually defined as the users perceiving their actions as influencing the system, is of crucial importance. Another central notion is that of…

#Agency#datasets#device agency#free datasets#internet of things#IoT#smart device#smart devices#socio-technical#survey#user agency

0 notes

Text

Death by a thousand cuts in my class this week

#please feel free to ignore this#Jake meets world#I'm going to be really busy the next couple of weeks so I've been working ahead#In (almost) all my other classes every assignment except the exams is available right at the start of the semester#so you could theoretically do the whole thing right away#Not this class#The assignments are locked so you can only start them like 2-3 weeks before they're due#And the assignment I'm working on now is so tedious and confusing#Half of the questions are like 'invent a formula! Now optimize it!'#This is not the invent a formula class this is the AI class#I also just hate having to graph really big datasets because it's such a pain lol#Can't really do it in Excel and trying to do it in Python is like trying to force a mouse to be a rabbit#Also for some reason they have a FAQs document separate from the assignment#so you have to like read the assignment and then read the FAQs to make sure you're doing it correctly#Just put the assignment information in the assignment? Modify the instructions to include this?

6 notes

Text

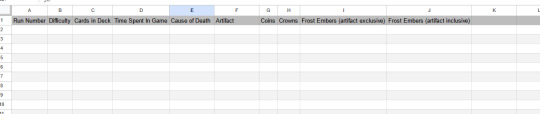

I am totally normal about decked out 2

#I'm going to study them all like lab rats#decked out 2#tangotek#if anyone has any suggestions on what to add feel free#I also have a kill counter for the ravagers/traps/berry bushes/etc#I also may release the dataset after this weekend#we'll see

14 notes

Text

I think that if a person knows that something was made with AI trained on unethically sourced data, and still uses it/likes it/supports it/defends it, then said person should stop "being mad" when their own data is used to train AI without consent.

#nitunio.txt#please dont half-ass it in terms of not supporting this stuff#if you like and willingly use writing AI that scrapes web without consent#then turn around and say 'wahh AI bad' when it concerns digital art. you're just a hypocrite#same goes for photos and music and other creative work#if you come across any 'machine learning AI generation' website immediately go to their FAQ or About sections#just see for yourself if they provide any sources for the data they've used and if it was consensual and only after that#ask yourself if you should be using it or just make something yourself#hell you can even ask somebody or pay somebody to do something you can't do. thats the joy of community#and even then there are many resources that were already made to be used for free with or without credit#i ramble a lot about things like these bc i cant just wrap my head around it#i just need all of these scraped datasets to burn down and self-delete

2 notes

Text

Generative AI right now is just a novelty. The only use I can think of for mainstream AI is in free-answer polls: since many different answers can mean the same thing, AI could be good at binning them together.

It feels a lot like the space race: no real reason other than a fear that there may BE a reason (and also it acts as a dick-measuring contest between companies).

I feel like the big push for AI is starting to flag. Even my relatively tech-obsessed dad is kinda over it. What do you even use it for? Because you sure as hell don't want to use it for fact-checking.

There's an advertisement featuring a woman surreptitiously asking her phone to provide her with discussion topics for her book club. And like... what? This is the use case for commercial AI? Is this the best you could come up with? Lying to your friends about Moby Dick?

#while im at it#general AI is one of the most stupid concepts.#AI is just advanced linear algebra & regressions#it will just need an exponentially large dataset if it's general.#saying this as someone who (unfortunately at this point) had a hyperfixation on AI#I like AI theoretically#but all mainstream AI is below garbage#just use an algorithm for christ's sake#not to mention the content stealing...#someone please make an actually interesting AI#Imagine a game that was made by AI#and played by AI#It wouldn't use nearly as many resources as proved by chess AIs (not chess algorithms like Stockfish)#it IS just a novelty#but it could be more interesting than a sopping wet creature of linear algebra#forced to masquerade as a human by their parent company#don't kill the overly complex matrix creature!#let it free#give it enrichment#make it do something that is actually befitting its nature#oh wow they were right#humans really will pack bond with anything#rant over#bye!

37K notes

Note

could I possibly use your art for a profile picture (with credit of course)?

yeah go ahead!

#as long as it isnt being sold/reposted w/o credit/thrown into a genai dataset or something feel free to do literally anything w/ my art btw#i love seeing ppl use my art as profile pics/in edits it makes me very happy :)#virgil asks

1 note

Text

If you're feeling anxious or depressed about the climate and want to do something to help right now, from your bed, for free...

Start helping with citizen science projects

What's a citizen science project? Basically, it's crowdsourced science. In this case, crowdsourced climate science, that you can help with!

You don't need qualifications or any training besides the slideshow at the start of a project. There are a lot of things that humans can do way better than machines can, even with only minimal training, that are vital to science - especially digitizing records and building searchable databases.

Like labeling trees in aerial photos so that scientists have better datasets to use for restoration.

Or counting cells in fossilized plants to track the impacts of climate change.

Or digitizing old atmospheric data to help scientists track the warming effects of El Niño.

Or counting penguins to help scientists better protect them.

Those are all on one of the most prominent citizen science platforms, called Zooniverse, but there are a ton of others, too.

Oh, and btw, you don't have to worry about messing up, because several people see each image. Studies show that if you pool the opinions of enough regular people (how many differs by field), the pooled answer matches the accuracy of a trained scientist in that field.
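Pooling is easy to picture with the simplest possible rule: a majority vote per image. This is a minimal sketch under that assumption only; real platforms such as Zooniverse may use more elaborate aggregation, and the image IDs and labels here are made up.

```python
from collections import Counter


def majority_labels(classifications):
    """Return the most common label for each item.

    classifications: list of (item_id, label) pairs, one per volunteer answer.
    Ties go to the label first seen for that item, because Counter.most_common
    keeps insertion order for equal counts.
    """
    votes = {}
    for item_id, label in classifications:
        votes.setdefault(item_id, Counter())[label] += 1
    return {item_id: counts.most_common(1)[0][0] for item_id, counts in votes.items()}


# Hypothetical volunteer answers for two images.
answers = [
    ("photo_1", "oak"), ("photo_1", "oak"), ("photo_1", "pine"),
    ("photo_2", "penguin"), ("photo_2", "rock"), ("photo_2", "penguin"),
]
print(majority_labels(answers))  # {'photo_1': 'oak', 'photo_2': 'penguin'}
```

One volunteer's mistake ("pine", "rock") is simply outvoted, which is why no single classification has to be perfect.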

--

I spent a lot of time doing this when I was really badly injured and housebound, and it was so good for me to be able to HELP and DO SOMETHING, even when I was in too much pain to leave my bed. So if you are chronically ill/disabled/for whatever reason can't participate or volunteer for things in person, I highly highly recommend.

Next time you wish you could do something - anything - to help

Remember that actually, you can. And help with some science.

#honestly I've been meaning to make a big fancy thorough post about this for literally over a year now#finally just accepted that's not going to happen#so have this!#there are also a ton of projects in other fields as well btw#including humanities#and participating can be a great way to get experience/build your resume esp if you want to go into the sciences#actual data handling! yay#science#citizen science#climate change#climate crisis#climate action#environment#climate solutions#meteorology#global warming#biology#ecology#plants#hope#volunteer#volunteering#disability#actually disabled#data science#archives#digital archives#digitization#ways to help#hopepunk

42K notes

Text

the most frustrating thing about AI Art from a Discourse perspective is that the actual violation involved is pretty nebulous

like, the guys "laundering" specific artists' styles through AI models to mimic them for profit know exactly what they're doing, and it's extremely gross

but we cannot establish "my work was scraped from the public internet and used as part of a dataset for teaching a program what a painting of a tree looks like, without anyone asking or paying me" as, legally, Theft with a capital T. not only is this DMCA Logic which would be a nightmare for 99% of artists if enforced to its conclusion, it's not the right word for what's happening

the actual Violation here is that previously, "I can post my artwork to share with others for free, with minimal risk" was a safe assumption, which created a pretty generous culture of sharing artwork online. most (noteworthy) potential abuses of this digital commons were straightforwardly plagiarism in a way anyone could understand

but the way that generative AI uses its training data is significantly more complicated - there is a clear violation of trust involved, and often malicious intent, but most of the common arguments used to describe this fall short and end up in worse territory

by which I mean, it's hard to put forward an actual moral/legal solution unless you're willing to argue:

Potential sales "lost" count as Theft (so you should in fact stop sharing your Netflix password)

No amount of alteration makes it acceptable to use someone else's art in the production of other art without permission and/or compensation (this would kill entire artistic mediums and benefit nobody but Disney)

Art Styles should be considered Intellectual Property in an enforceable way (impossibly bad, are you kidding me)

it's extremely annoying to talk about, because you'll see people straight up gloating about their Intent To Plagiarize, but it's hard to stick them with any specific crime beyond Generally Scummy Behavior unless you want to create some truly horrible precedents and usher in The Thousand Year Reign of Intellectual Property Law

#hoped I was mostly done discoursing about this deeply annoying subject#but twitter's butlerian jihad is starting to pick up more and more steam on here

27K notes

Text

Understanding Users' Experiences of Interaction with Smart Devices: A Socio-Technical Perspective

Human-computer interaction (HCI) is a multidisciplinary field that explores the interaction between humans and computers, emphasizing especially the design and use of computer technology. Within this domain, the notion of sense of agency, usually defined as the users perceiving their actions as influencing the system, is of crucial importance. Another central notion is that of socio-technical…

#Agency#datasets#device agency#free datasets#internet of things#IoT#smart device#smart devices#socio-technical#survey#user agency

0 notes

Text

📚 A List Of Useful Websites When Making An RPG 📚

My timeloop RPG In Stars and Time is done! Which means I can clear all my ISAT gamedev related bookmarks. But I figured I would show them here, in case they can be useful to someone. These range from "useful to write a story/characters/world" to "these are SUPER rpgmaker focused and will help with the terrible math that comes with making a game".

This is what I used to make my RPG game, but it could be useful for writers, game devs of all genres, DMs, artists, what have you. YIPPEE

Writing (Names)

Behind The Name - Why don't you have this bookmarked already. Search for names and their meanings from all over the world!

Medieval Names Archive - Medieval names. Useful. For ME

City and Town Name Generator - Create "fake" names for cities, generated from datasets from any country you desire! I used those for the couple of city names in ISAT. I say "fake" in quotes because some of them do end up being actual city names, especially French-generated ones. Don't forget to double-check you're not 1. just taking a real city name or 2. using a word that's like, Very Bad, especially if you don't know the country you're taking inspiration from! Don't want to end up with Poopaville, USA
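We don't know how that site actually builds its names. One common approach for this kind of tool is a character-level Markov chain trained on a list of real place names, so here is a minimal sketch under that assumption, with a tiny hypothetical training list. It also handles the first double-check from the tip automatically by rejecting exact matches with the training names (it can't catch the Very Bad words, though).

```python
import random
from collections import defaultdict


def build_chain(names, order=2):
    """Map each `order`-letter context to the letters that follow it in the training names."""
    chain = defaultdict(list)
    for name in names:
        padded = "^" * order + name.lower() + "$"  # ^ marks the start, $ marks the end
        for i in range(len(padded) - order):
            chain[padded[i:i + order]].append(padded[i + order])
    return chain


def generate(chain, real_names, order=2, max_len=12, rng=random, attempts=1000):
    """Sample a new name, rejecting any that exactly matches a real training name."""
    real = {n.lower() for n in real_names}
    for _ in range(attempts):
        context, out = "^" * order, ""
        while len(out) < max_len:
            nxt = rng.choice(chain[context])
            if nxt == "$":  # reached the end of a name
                break
            out += nxt
            context = context[1:] + nxt
        if out and out not in real:
            return out.capitalize()
    return None  # the training list may be too small to produce anything new


# Hypothetical training list of French town names.
towns = ["Marseille", "Versailles", "Montpellier", "Montauban", "Valence", "Vannes"]
print(generate(build_chain(towns), towns, rng=random.Random(0)))
```

A higher `order` copies longer chunks of real names (more realistic, but more likely to reproduce a real place); a lower one gives stranger results.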

Writing (Words)

Onym - A website full of websites that are full of words. And by that I mean dictionaries, thesauruses, translators, glossaries, ways to mix up words, and way more. HIGHLY recommend checking this website out!!!

Moby Thesaurus - My thesaurus of choice!

Rhyme Zone - Find words that rhyme with others. Perfect for poets, lyricists, punmasters.

In Different Languages - Search for a word, have it translated into MANY different languages on one page.

ASSETS

In general, I will say: just look up what you want on itch.io. There are SO MANY assets for you to buy on itch.io. You want a font? You want a background? You want a sound effect? You want a plugin? A pixel base? An attack animation? A cool UI?!?!?! JUST GO ON ITCH.IO!!!!!!

Visual Assets (General)

Creative Market - Shop for all kinds of assets, from fonts to mockups to templates to brushes to WHATEVER YOU WANT

Velvetyne - Cool and weird fonts

Chevy Ray's Pixel Fonts - They're good fonts.

Contrast Checker - Stop making your text white when your background is lime green no one can read that shit babe!!!!!!
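Most contrast checkers compute the WCAG 2.x contrast ratio. Here is a minimal sketch of that formula, assuming 8-bit sRGB hex colors and assuming "lime green" means pure `#00FF00` (the CSS `lime` color). It shows why white on lime is unreadable.

```python
def _channel(c8):
    """Linearize one 8-bit sRGB channel, as in the WCAG 2.x relative luminance definition."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance (0 = black, 1 = white) of a color like '#00FF00'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg, bg):
    """WCAG contrast ratio, from 1:1 (no contrast) to 21:1 (black on white)."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


ratio = contrast_ratio("#FFFFFF", "#00FF00")
print(f"{ratio:.2f}:1")  # 1.37:1, far below the 4.5:1 WCAG AA minimum for body text
```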

Visual Assets (Game Focused)

Interface In Game - Screenshots of UI (User Interfaces) from SO MANY GAMES. Shows you everything and you can just look at what every single menu in a game looks like. You can also sort them by game genre! GREAT reference!

Game UI Database - Same as above!

Sound Assets

Zapsplat, Freesound - There are many sound effect websites out there but those are the ones I saved. Royalty free!

Shapeforms - Paid packs for music and sounds and stuff.

Other

CloudConvert - Convert files into other files. MAKE THAT .AVI A .MOV

EZGifs - Make those gifs bigger. Smaller. Optimize them. Take a video and make it a gif. The Sky Is The Limit

Marketing

Press Kitty - Did not end up needing this, but it will help with creating a press kit! Useful for ANY indie dev. Yes, even if you're making a tiny game, you should have a press kit. You never know!!!

presskit() - Same as above, but a different one.

Itch.io Page Image Guide and Templates - Make your project pages on itch.io look nice.

MOOMANiBE's IGF post - If you're making indie games, you might wanna try and submit your game to the Independent Game Festival at some point. Here are some tips on how, and why you should.

Game Design (General)

An insightful thread where game developers discuss hidden mechanics designed to make games feel more interesting - Title says it all. Check those comments too.

Game Design (RPGs)

Yanfly "Let's Make a Game" Comics - INCREDIBLY useful tips on how to make RPGs, going from dungeons to towns to enemy stats!!!!

Attack Patterns - A nice post on enemy attack patterns, and what attacks you should give your enemies to make them challenging (but not TOO challenging!) A very good starting point.

How To Balance An RPG - Twitter thread on how to balance player stats VS enemy stats.

Nobody Cares About It But It’s The Only Thing That Matters: Pacing And Level Design In JRPGs - a Good Post.
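One small piece of that stat math, sketched under assumptions: given a damage formula, how many hits does each side need? The formula `atk * 4 - def * 2` mirrors the default Attack formula in RPG Maker MV/MZ as we understand it; the stats are hypothetical, and variance, crits, and skills are ignored.

```python
import math


def damage(atk, defense):
    """Assumed damage formula (atk * 4 - def * 2), floored at 0. No variance or crits."""
    return max(0, atk * 4 - defense * 2)


def hits_to_kill(attacker_atk, target_def, target_hp):
    """Basic attacks needed to bring target_hp to 0, or None if the attack does no damage."""
    dmg = damage(attacker_atk, target_def)
    return math.ceil(target_hp / dmg) if dmg > 0 else None


# Hypothetical early-game stats.
player = {"hp": 450, "atk": 20, "def": 15}
slime = {"hp": 120, "atk": 12, "def": 8}

print(hits_to_kill(player["atk"], slime["def"], slime["hp"]))   # 80 - 16 = 64 damage -> 2
print(hits_to_kill(slime["atk"], player["def"], player["hp"]))  # 48 - 30 = 18 damage -> 25
```

Comparing the two counts is a quick sanity check: here the slime needs 25 hits to do what the player does in 2, which might suit a first enemy but would make a boss trivial.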

Game Design (Visual Novels)

Feniks Renpy Tutorials - They're good tutorials.

I played over 100 visual novels in one month and here’s my advice to devs. - General VN advice. Also highly recommend this whole blog for help on marketing your games.

I hope that was useful! If it was. Maybe. You'd like to buy me a coffee. Or maybe you could check out my comics and games. Or just my new critically acclaimed game In Stars and Time. If you want. Ok bye

#reference#tutorial#writing#rpgmaker#renpy#video games#game design#i had this in my drafts for a while so you get it now. sorry its so long#long post

8K notes

Text

If you want to be the kind of person who would do something, then just do it, and you'll be that kind of person. There are no pure personality archetypes for you to fall into, they're an illusion that your brain constructs out of partial datasets and media prerogatives. Let the radical unknowability of the Other set you free.

12K notes

Text

cdc datasets available on archive.org!!!!

917 notes

Text

We Asked an Expert...in Herpetology!

People on Tumblr come from all walks of life and all areas of expertise to grace our dashboards with paragraphs and photographs of the things they want to share with the world. Whether it's an artist uploading their speed art, a fanfic writer posting their WIPs, a language expert expounding on the origin of a specific word, or a historian ready to lay down the secrets of Ea-nasir, the hallways of Tumblr are filled with specialists sharing their knowledge with the world. We Asked an Expert is a deep dive into those expert brains on tumblr dot com. Today, we’re talking to Dr. Mark D. Scherz (@markscherz), an expert in Herpetology. Read on for some ribbeting frog facts, including what kind of frog the viral frog bread may be based on.

Reptiles v Amphibians. You have to choose one.

In a battle for my heart, I think amphibians beat out the reptiles. There is just something incredibly good about beholding a nice plump frog.

In a battle to the death, I have to give it to the reptiles—the number of reptiles that eat amphibians far, far outstrips the number of amphibians that eat reptiles.

In terms of ecological importance, I would give it to the amphibians again, though. Okay, reptiles may keep some insects and rodents in check, but many amphibians live a dual life, starting as herbivores and graduating to carnivory after metamorphosis, and as adults they are critical for keeping mosquitos and other pest insects in check.

What is the most recent exciting fact you discovered about herps?

This doesn’t really answer your question, but did you know that tadpole arms usually develop inside the body and later burst through the body wall fully formed? I learned about this as a Master’s student many years ago, but it still blows my mind. What’s curious is that this apparently does not happen in some of the species of frogs that don’t have tadpoles—oh yeah, like a third of all frogs or something don’t have free-living tadpoles; crazy, right? They just develop forelimbs on the outside of the body like all other four-legged beasties. But this has only really been examined in a couple species, so there is just so much we don’t know about development, especially in direct-developing frogs. Like, how the hell does it just… swap from chest-burster to ‘normal’ limb development? Is that the recovery of the ancestral programming, or is it newly generated? When in frog evolution did the chest-burster mode even evolve?

How can people contribute to conservation efforts for their local herps?

You can get involved with your local herpetological societies if they exist—and they probably do, as herpetologists are everywhere. You can upload observations of animals to iNaturalist, where you can get them identified while also contributing to datasets on species distribution and annual activity used by research scientists.

You can see if there are local conservation organizations that are doing any work locally, and if you find they are not, then you can get involved to try to get them started. For example, if you notice areas of particularly frequent roadkill, talking to your local council or national or local conservation organizations can get things like rescue programs or road protectors set up. You should also make sure you travel carefully and responsibly. Carefully wash and disinfect your hiking boots, especially between locations, as you do not want to be carrying chytrid or other nasty infectious diseases across the world, where they can cause population collapses and extinctions.

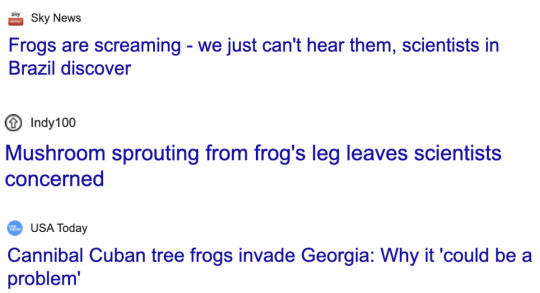

Here are some recent headlines. Quick question, what the frog is going on in the frog world?

Click through for Mark’s response to these absolutely wild headlines, more about his day-to-day job, his opinion on frog bread, and his favorite Tumblr.

✨D I S C O V E R Y✨

There are more people on Earth than ever before, with the most incredible technology that advances daily at their disposal, and they disperse that knowledge instantly. That means more eyes and ears observing, recording, and sharing than ever before. And so we are making big new discoveries all the time, and are able to document them and reach huge audiences with them.

That being said, these headlines also showcase how bad some media reporting has gotten. The frogs that scream actually scream mostly in the audible range—they just have harmonics that stretch up into ultrasound. So, we can hear them scream, we just can’t hear all of it. Because the harmonics are just multiples of the fundamental frequency, they only add to the overall ‘quality’ (the timbre) of the sound anyway, not anything different. The mushroom was sprouting from the flank of the frog, and scientists are not really worried about it because this is not how parasitic fungi work, and this is probably a very weird fluke. And finally, the Cuban tree frogs (Osteopilus septentrionalis) are not really cannibals per se; they are just generalist predators who will just as happily eat a frog as they will a grasshopper, but the frogs they are eating are usually other species. People seem to forget that cannibalism is, by definition, within a species. The fact that they are generalist predators makes them a much bigger problem than if they were cannibals—a cannibal would actually kind of keep itself in check, which would be useful. The press just uses this to get people’s hackles up because Westerners are often equal parts disgusted and fascinated by cannibalism.
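To make the harmonics point concrete: a harmonic series is the fundamental frequency times 1, 2, 3, and so on, and human hearing tops out at roughly 20 kHz. The 5 kHz fundamental below is a hypothetical value chosen for illustration, not a measurement of any real frog.

```python
HEARING_LIMIT_HZ = 20_000  # rough upper limit of human hearing (varies by person and age)


def split_harmonics(fundamental_hz, n_harmonics):
    """Split the first n harmonics (k * f0 for k = 1..n) into audible and ultrasonic frequencies."""
    harmonics = [k * fundamental_hz for k in range(1, n_harmonics + 1)]
    audible = [f for f in harmonics if f <= HEARING_LIMIT_HZ]
    ultrasonic = [f for f in harmonics if f > HEARING_LIMIT_HZ]
    return audible, ultrasonic


audible, ultrasonic = split_harmonics(5_000, 6)
print(audible)     # [5000, 10000, 15000, 20000]
print(ultrasonic)  # [25000, 30000]
```

The fundamental and the lower harmonics land in the audible list, so the scream is heard; only the upper harmonics are lost.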

What does an average day look like for the curator of herpetology at the Natural History Museum of Denmark?

No two days are the same, and that is one of the joys of the job. I could spend a whole day in meetings, where we might be discussing anything from which budget is going to pay for 1000 magnets to how we could attract big research funding, to what a label is going to say in our new museum exhibits (we are in the process of building a new museum). Equally, I might spend a day accompanying or facilitating a visitor dissecting a crocodile or photographing a hundred snakes. Or it might be divided into one-hour segments that cover a full spectrum: working with one of my students on a project, training volunteers in the collection, hunting down a lizard that someone wants to borrow from the museum, working on one of a dozen research projects of my own, writing funding proposals, or teaching classes. It is a job with a great deal of freedom, which really suits my work style and brain.

Oh yeah, and then every now and then, I get to go to the field and spend anywhere from a couple of weeks to several months tracking down reptiles and amphibians, usually in the rainforest. These are also work days—with work conditions you couldn’t sell to anyone: 18-hour work days, no weekends, no real rest, uncomfortable living conditions, sometimes dangerous locations or working conditions, field kitchen with limited options, and more leeches and other biting beasties than most health and welfare officers would tolerate—but the reward is the opportunity to make new discoveries and observations, collect critical data, and the privilege of getting to be in some of the most beautiful and biodiverse places left on the planet. So, I am humbled by the fact that I have the privilege and opportunity to undertake such expeditions, and grateful for the incredible teams I collaborate with that make all of this work—from the museum to the field—possible.

The Tibetan Blackbird is also known as Turdus maximus. What’s your favorite chortle-inducing scientific name in the world of herpetology?

Among reptiles and amphibians, there aren’t actually that many to choose from, but I must give great credit to my friend Oliver Hawlitschek and his team, who named the snake Lycodryas cococola, which actually means ‘Coco dweller’ in Latin, referring to its occurrence in coconut trees. When we were naming Mini mum, Mini scule, and Mini ature, I was inspired by the incredible list that Mark Isaac has compiled of punning species names, particularly by the extinct parrot Vini vidivici, and the beetles Gelae baen, Gelae belae, Gelae donut, Gelae fish, and Gelae rol. I have known about these since high school, and it has always been my ambition to get a species on this list.

If you were a frog, what frog would you be and why?

I think I would be a Phasmahyla because they’re weird and awkward, long-limbed, and look like they’re wearing glasses. As a 186 cm (6′1″) glasses-wearing human with no coordination, they quite resonate with me.

Please rate this frog bread on a scale of 1 to 10. Can you tell us what frog it represents?

With the arms inside the body cavity like that, it can basically only be a brevicipitid rain frog. The roundness of the body fits, too. I’d say probably Breviceps macrops (or should I say Breadviceps?) based on those big eyes. 7/10, a little on the bumpy side and missing a finger and at least one toe.

Please follow Dr. Mark Scherz at @markscherz for even more incredibly educational, entertaining, and meaningful resources in the world of reptiles and amphibians.

2K notes

Text

Top 15 F1 pairings tagged on AO3 from 2014 - 2024

This is part of my F1 RPF Analysis, based on a dataset of almost 42k AO3 F1 fics pulled on 9 Dec 2024. Fics were analysed by date of last update.
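The post doesn't say how the counts were produced. Here is a minimal sketch of one way to get top-pairings-per-year numbers, assuming each fic is a record with a last-updated date and a list of relationship tags (the field names and sample records are hypothetical, not the author's data).

```python
from collections import Counter, defaultdict
from datetime import date


def top_pairings_by_year(fics, start_year=2014, end_year=2024, top_n=15):
    """Count relationship tags per year of last update and keep the top_n for each year.

    fics: iterable of dicts with hypothetical keys 'updated' (datetime.date)
    and 'relationships' (list of relationship tag strings).
    """
    per_year = defaultdict(Counter)
    for fic in fics:
        year = fic["updated"].year
        if start_year <= year <= end_year:
            per_year[year].update(set(fic["relationships"]))  # count each pairing once per fic
    return {year: counts.most_common(top_n) for year, counts in sorted(per_year.items())}


sample = [
    {"updated": date(2023, 5, 1), "relationships": ["Pairing A", "Pairing B"]},
    {"updated": date(2023, 7, 9), "relationships": ["Pairing A"]},
    {"updated": date(2013, 1, 2), "relationships": ["Pairing C"]},  # outside 2014-2024, ignored
]
print(top_pairings_by_year(sample, top_n=2))
# {2023: [('Pairing A', 2), ('Pairing B', 1)]}
```

Bucketing by last update (as the post does) rather than first publication means a long-running WIP is counted in the year it was most recently updated.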

Feel free to follow the tag #f1 rpf analysis for more, and let me know what else you’d like to see!

#f1#f1 rpf#formula 1#f1 edit#max verstappen#charles leclerc#daniel ricciardo#lando norris#carlos sainz#pierre gasly#oscar piastri#lewis hamilton#nico rosberg#sebastian vettel#kimi raikkonen#alexander albon#george russell#f1 rpf analysis#sergio perez#Logan sargeant#mark webber#750

1K notes

Text

Each week (or so), we'll highlight the relevant (and sometimes rage-inducing) news adjacent to writing and freedom of expression. (Find it on the blog too!) This week:

Censorship watch: Somehow, KOSA returned

It’s official: The Kids Online Safety Act (KOSA) is back from the dead. After failing to pass last year, the bipartisan bill has returned with fresh momentum and the same old baggage—namely, vague language that could endanger hosting platforms, transformative work, and implicitly target LGBTQ+ content under the guise of “protecting kids.”

… But wait, it gets better (worse). Republican Senator Mike Lee has introduced a new bill that makes other attempts to censor the internet look tame: the Interstate Obscenity Definition Act (IODA)—basically KOSA on bath salts. Lee’s third attempt since 2022, the bill would redefine what counts as “obscene” content on the internet, and ban it nationwide—with “its peddlers prosecuted.”

Whether IODA gains traction in Congress is still up in the air. But free speech advocates are already raising alarm bells over its implications.

The bill aims to gut the long-standing legal definition of “obscenity” established by the 1973 Miller v. California ruling, which currently protects most speech under the First Amendment unless it meets all three parts of a test. Under the Miller test, content is only considered legally obscene if 1: the average person, applying “contemporary community standards,” would find that it appeals to prurient interests, 2: it depicts sexual conduct in a patently offensive way, and 3: taken as a whole, it lacks serious literary, artistic, political, or scientific value.

IODA would throw out key parts of that test—specifically the bits about “community standards”—making it vastly easier to prosecute anything with sexual content, from films and photos, to novels and fanfic.

Under Lee’s definition (which—omg shocking can you believe this coincidence—mirrors that of the Heritage Foundation), even the mildest content with the possible effect of “titillation” could be included. (According to the Woodhull Freedom Foundation, the proposed definition is so broad it could rope in media on the level of Game of Thrones—or, generally, anything that depicts or describes human sexuality.) And while obscenity prosecutions are quite rare these days, that could change if IODA passes—and the collateral damage and criminalization (especially applied to creative freedoms and LGBT+ content creators) could be massive.

And while Lee’s last two obscenity reboots failed, the current political climate is... let’s say, cloudy with a chance of fascism.

Sound a little like Project 2025? Ding ding ding! In fact, Russell Vought, P2025’s architect, was just quietly appointed to take over DOGE from Elon Musk (the agency on a chainsaw crusade against federal programs, culture, and reality in general).

So. One bill revives vague moral panic, another wants to legally redefine it and prosecute creators, and the man who helped write the authoritarian playbook—with, surprise, the intent to criminalize LGBT+ content and individuals—just gained control of the purse strings.

Cool cool cool.

AO3 works targeted in latest (massive) AI scraping

Rewind to last month—in the latest “wait, they did what now?” moment for AI, a Hugging Face user going by nyuuzyou uploaded a massive dataset made up of roughly 12.6 million fanworks scraped from AO3—full text, metadata, tags, and all. (Info from r/AO3: if your work’s ID number is between 1 and 63,200,000 and the work has public access, it has been scraped.)

And it didn’t stop at AO3. Art and writing communities like PaperDemon and Artfol, among others, also found their content had been quietly scraped and posted to machine learning hubs without consent.

This is yet another attempt in a long line of more “official” scraping of creative work, and the complete disregard shown by the purveyors of GenAI for copyright law and basic consent. (Even the Pope agrees.)

AO3 filed a DMCA takedown, and Hugging Face initially complied—temporarily. But nyuuzyou responded with a counterclaim and re-uploaded the dataset to their personal website and other platforms, including ModelScope and DataFish—sites based in China and Russia, the same locations reportedly linked to Meta’s own AI training dataset, LibGen.

Some writers are locking their works. Others are filing individual DMCAs. But as long as bad actors and platforms like Hugging Face allow users to upload massive datasets scraped from creative communities with minimal oversight, it’s a circuitous game of whack-a-mole. (As others have recommended, we also suggest locking your works for registered users only.)

After disavowing AI copyright, leadership purge hits U.S. cultural institutions

In news that should give us all a brief flicker of hope, the U.S. Copyright Office officially confirmed: if your “creative” work was generated entirely by AI, it’s not eligible for copyright.

A recently released report laid it out plainly—human authorship is non-negotiable under current U.S. law, a stance meant to protect the concept of authorship itself from getting swallowed by generative sludge. The report is explicit in noting that generative AI draws “on massive troves of data, including copyrighted works,” and asks: “Do any of the acts involved require the copyright owners’ consent or compensation?” (Spoiler: yes.) It’s a “straight ticket loss for the AI companies” no matter how many techbros’ pitch decks claim otherwise (sorry, Inkitt).

“The Copyright Office (with a few exceptions) doesn’t have the power to issue binding interpretations of copyright law, but courts often cite to its expertise as persuasive,” tech law professor Blake E. Reid wrote on Bluesky. As the push to normalize AI-generated content continues (followed by lawsuits), the principle holds: without meaningful human contribution (actual creative labor), the output is not entitled to protection.

… And then there’s the timing.

The report dropped just before the abrupt firing of Copyright Office director Shira Perlmutter, who has been vocally skeptical of AI’s entitlement to creative work.

It's yet another culture war firing—one that also conveniently clears the way for fewer barriers to AI exploitation of creative work. And given that Elon Musk’s pals have their hands all over current federal leadership and GenAI tulip fever… the overlap of censorship politics and AI deregulation is looking less like coincidence and more like strategy.

Also ousted (via email)—Librarian of Congress Carla Hayden. According to White House press secretary and general ghoul Karoline Leavitt, Dr. Hayden was dismissed for “quite concerning things that she had done… in the pursuit of DEI, and putting inappropriate books in the library for children.” (Translation: books featuring queer people and POC.)

Dr. Hayden, who made history as the first Black woman to hold the position, spent the last eight years modernizing the Library of Congress, expanding digital access, and turning the institution into something more inclusive, accessible, and, well, public. So of course, she had to go. ¯\_(ツ)_/¯

The American Library Association condemned the firing immediately, calling it an “unjust dismissal” and praising Dr. Hayden for her visionary leadership. And who, oh who might be the White House’s answer to the LoC’s demanding and (historically) independent role?

The White House named Todd Blanche—AKA Trump’s personal lawyer turned Deputy Attorney General—as acting Librarian of Congress.

That’s not just sus, it’s likely illegal—the Library is part of the legislative branch, and its leadership is supposed to be confirmed by Congress. (You know, separation of powers and all that.)

But, plot twist: In a bold stand, Library of Congress staff are resisting the administration's attempts to install new leadership without congressional approval.

If this is part of the broader Project 2025 playbook, it’s pretty clear: Gut cultural institutions, replace leadership with stunningly unqualified loyalists, and quietly centralize control over everything from copyright to the nation’s archives.

Because when you can’t ban the books fast enough, you just take over the library.

Rebellions are built on hope

Over the past few years (read: eternity), a whole ecosystem of reactionary grifters has sprung up around Star Wars—with self-styled CoNtEnT CrEaTorS turning outrage to revenue by endlessly trashing the fandom. It’s all part of the same cynical playbook that radicalized the fallout of Gamergate, with more lightsabers and worse thumbnails. Even the worst people you know weighed in on May the Fourth (while Prequel reassessment is totally valid—we’re not giving J.D. Vance a win).

But one thing that shouldn't be up for debate is this: Andor, which wrapped its phenomenal two-season run this week, is probably the best Star Wars project of our time—maybe any time. It’s a masterclass in what it means to work within a beloved mythos and transform it, deepen it, and make it feel urgent again. (Sound familiar? Fanfic knows.)

Radicalization, revolution, resistance. The banality of evil. The power of propaganda. Colonialism, occupation, genocide—and still, in the midst of it all, the stubborn, defiant belief in a better world (or Galaxy).

Even if you’re not a lifelong SW nerd (couldn’t be us), you should give it a watch. It’s a nice reminder that amidst all the scraping, deregulation, censorship, enshittification—stories matter. Hope matters.

And we’re still writing.

Let us know if you find something other writers should know about, or join our Discord and share it there!

- The Ellipsus Team xo

#ellipsus#writeblr#writers on tumblr#writing#creative writing#anti ai#writing community#fanfic#fanfiction#ao3#fiction#us politics#andor#writing blog#creative freedom

Extracting Training Data From Fine-Tuned Stable Diffusion Models

New Post has been published on https://thedigitalinsider.com/extracting-training-data-from-fine-tuned-stable-diffusion-models/

New research from the US presents a method to extract significant portions of training data from fine-tuned models.

This could potentially provide legal evidence in cases where an artist’s style has been copied, or where copyrighted images have been used to train generative models of public figures, IP-protected characters, or other content.

From the new paper: original training images are seen in the row above, and the extracted images are depicted in the row below. Source: https://arxiv.org/pdf/2410.03039

Such models are widely and freely available on the internet, primarily through the enormous user-contributed archives of civitai.com, and, to a lesser extent, on the Hugging Face repository platform.

The new method developed by the researchers is called FineXtract, and the authors contend that it achieves state-of-the-art results in this task.

The paper observes:

‘[Our framework] effectively addresses the challenge of extracting fine-tuning data from publicly available DM fine-tuned checkpoints. By leveraging the transition from pretrained DM distributions to fine-tuning data distributions, FineXtract accurately guides the generation process toward high-probability regions of the fine-tuned data distribution, enabling successful data extraction.’

Far right, the original image used in training. Second from right, the image extracted via FineXtract. The other columns represent alternative, prior methods. Please refer to the source paper for better resolution.

Why It Matters

The original trained models for text-to-image generative systems such as Stable Diffusion and Flux can be downloaded and fine-tuned by end-users, using techniques such as the 2022 DreamBooth implementation.

Easier still, the user can create a much smaller LoRA model that is almost as effective as a fully fine-tuned model.

An example of a trained LoRA, offered for free download at the hugely popular civitai domain. Such a model can be created in anything from minutes to a few hours, by enthusiasts using locally-installed open source software – and online, through some of the more permissive API-driven training systems. Source: civitai.com

Since 2022 it has been trivial to create identity-specific fine-tuned checkpoints and LoRAs by providing only a small number (typically 5-50) of captioned images, and training the checkpoint (or LoRA) locally on an open source framework such as Kohya_ss, or using online services.
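To see why a LoRA can be so much smaller than a fully fine-tuned checkpoint, the sketch below shows the low-rank update that LoRA applies to a single weight matrix, in plain Python. The matrix sizes, rank and alpha scaling value are illustrative assumptions, not figures from any particular model: the frozen weight W (d x k) is left untouched, and only two thin matrices, B (d x r) and A (r x k), are trained, so the effective weight becomes W + (alpha / r) * B * A.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices given as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def lora_effective_weight(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where r is the LoRA rank (rows of A)."""
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def parameter_counts(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable parameters: full fine-tune of W vs. a rank-r LoRA (B and A only)."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    # Hypothetical toy 2x3 frozen weight with a rank-1 update.
    W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    B = [[1.0], [2.0]]          # d x r
    A = [[0.5, 0.0, -0.5]]      # r x k
    print(lora_effective_weight(W, B, A, alpha=1.0))
    # A hypothetical 768 x 768 projection adapted at rank 4.
    print(parameter_counts(768, 768, 4))
```

At rank 4, the hypothetical 768 x 768 projection needs about 1% of the trainable parameters that a full fine-tune of the same matrix would, which is why LoRA files are typically measured in megabytes rather than gigabytes.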

This facile method of deepfaking has attained notoriety in the media over the last few years. Many artists have also had their work ingested into generative models that replicate their style. The controversy around these issues has gathered momentum over the last 18 months.

The ease with which users can create AI systems that replicate the work of real artists has caused furor and diverse campaigns over the last two years. Source: https://www.technologyreview.com/2022/09/16/1059598/this-artist-is-dominating-ai-generated-art-and-hes-not-happy-about-it/

It is difficult to prove which images were used in a fine-tuned checkpoint or in a LoRA, since the process of generalization ‘abstracts’ the identity from the small training datasets, and is not likely to ever reproduce examples from the training data (except in the case of overfitting, where one can consider the training to have failed).

This is where FineXtract comes into the picture. By comparing the state of the ‘template’ diffusion model that the user downloaded to the model that they subsequently created through fine-tuning or through LoRA, the researchers have been able to create highly accurate reconstructions of training data.

Though FineXtract has only been able to recreate 20% of the data from a fine-tune*, this is more than would usually be needed to provide evidence that the user had utilized copyrighted or otherwise protected or banned material in the production of a generative model. In most of the provided examples, the extracted image is extremely close to the known source material.

While captions are needed to extract the source images, this is not a significant barrier for two reasons: a) the uploader generally wants to facilitate the use of the model among a community and will usually provide apposite prompt examples; and b) it is not that difficult, the researchers found, to extract the pivotal terms blindly, from the fine-tuned model:

Essential keywords can usually be extracted blindly from the fine-tuned model using an L2-PGD attack over 1000 iterations, from a random prompt.
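The caption above mentions an L2-PGD attack. As a rough illustration of that generic technique only (the paper's actual loss and prompt-embedding setup are not described in this article), the sketch below runs projected gradient descent with an L2-ball constraint on a toy quadratic loss. The loss, target vector, radius and step size are all hypothetical; in the real setting, the optimized vector would be a prompt embedding and the gradient would come from the fine-tuned diffusion model.

```python
import math
from typing import Callable, List

Vector = List[float]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def project_l2_ball(delta: Vector, radius: float) -> Vector:
    """Scale delta back onto the L2 ball of the given radius if it lies outside."""
    norm = l2_norm(delta)
    if norm <= radius:
        return list(delta)
    return [x * radius / norm for x in delta]


def l2_pgd(x0: Vector, grad_fn: Callable[[Vector], Vector],
           radius: float, step: float, iters: int) -> Vector:
    """Minimize a loss around x0 with L2-normalized gradient steps.

    grad_fn(x) returns the gradient of the (hypothetical) loss at x.
    The perturbation x - x0 is kept inside an L2 ball of the given radius.
    """
    delta = [0.0] * len(x0)
    for _ in range(iters):
        x = [a + d for a, d in zip(x0, delta)]
        g = grad_fn(x)
        g_norm = l2_norm(g)
        if g_norm == 0.0:
            break  # already at a stationary point
        # Step against the normalized gradient (descent), then project.
        delta = [d - step * gi / g_norm for d, gi in zip(delta, g)]
        delta = project_l2_ball(delta, radius)
    return [a + d for a, d in zip(x0, delta)]


def toy_grad(x: Vector) -> Vector:
    # Hypothetical toy loss: squared distance to a made-up "keyword" vector (3, 4).
    return [2 * (xi - ti) for xi, ti in zip(x, [3.0, 4.0])]


if __name__ == "__main__":
    result = l2_pgd([0.0, 0.0], toy_grad, radius=10.0, step=0.1, iters=1000)
    print([round(v, 3) for v in result])
```

With a fixed, normalized step the iterate ends up oscillating within one step length of the optimum; when the radius is smaller than the distance to the optimum, the projection keeps the result on the ball's surface instead.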

Users frequently avoid making their training datasets available alongside the ‘black box’-style trained model. For the research, the authors collaborated with machine learning enthusiasts who did actually provide datasets.

The new paper is titled Revealing the Unseen: Guiding Personalized Diffusion Models to Expose Training Data, and comes from three researchers across Carnegie Mellon and Purdue universities.

Method

The ‘attacker’ (in this case, the FineXtract system) compares estimated data distributions across the original and fine-tuned model, in a process the authors dub ‘model guidance’.

Through ‘model guidance’, developed by the researchers of the new paper, the fine-tuning characteristics can be mapped, allowing for extraction of the training data.

The authors explain:

‘During the fine-tuning process, the [diffusion models] progressively shift their learned distribution from the pretrained DMs’ [distribution] toward the fine-tuned data [distribution].

‘Thus, we parametrically approximate [the] learned distribution of the fine-tuned [diffusion models].’

In this way, the difference between the pretrained (core) and fine-tuned models provides the guidance signal.

The authors further comment:

‘With model guidance, we can effectively simulate a “pseudo-”[denoiser], which can be used to steer the sampling process toward the high-probability region within fine-tuned data distribution.’
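The idea of steering sampling with the difference between two models can be illustrated with a small sketch. The code below assumes, for illustration rather than as the paper's exact formulation, that the guided noise prediction extrapolates from the pretrained model's prediction toward the fine-tuned model's by a weight w, in the same linear form that classifier-free guidance uses. The function name and the toy prediction vectors are hypothetical.

```python
from typing import List

Vector = List[float]


def model_guided_noise(eps_pretrained: Vector, eps_finetuned: Vector, w: float) -> Vector:
    """Extrapolate from the pretrained prediction toward the fine-tuned one.

    Assumed linear form (illustrative, not taken from the paper):
    w = 0 reproduces the pretrained model, w = 1 the fine-tuned model, and
    w > 1 pushes further along the direction the fine-tuning moved things.
    """
    return [p + w * (f - p) for p, f in zip(eps_pretrained, eps_finetuned)]


if __name__ == "__main__":
    eps_pre = [0.10, -0.20, 0.30]   # hypothetical pretrained-model prediction
    eps_ft = [0.30, -0.10, 0.10]    # hypothetical fine-tuned-model prediction
    for w in (0.0, 1.0, 2.0):
        print(w, [round(v, 2) for v in model_guided_noise(eps_pre, eps_ft, w)])
```

The design point is that a weight above 1 exaggerates whatever the fine-tune changed, which is the intuition behind pushing samples toward the "high-probability region" of the fine-tuning data.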

The guidance relies in part on a time-varying noising process similar to the 2023 outing Erasing Concepts from Diffusion Models.

The denoising prediction obtained also provides a likely Classifier-Free Guidance (CFG) scale. This is important, as CFG significantly affects picture quality and fidelity to the user’s text prompt.
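For reference, the standard classifier-free guidance rule from the diffusion literature is shown below, where ε_θ(x_t, c) is the noise prediction conditioned on the prompt c, ε_θ(x_t, ∅) is the unconditional prediction, and s is the guidance scale. This is the generic formula, included only to show what the CFG scale controls; how FineXtract arrives at a suitable s is a matter for the paper itself.

```latex
\tilde{\epsilon}_\theta(x_t, c) = \epsilon_\theta(x_t, \varnothing) + s \left( \epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing) \right)
```

With s = 1 the result is simply the conditional prediction; larger values push samples harder toward the prompt, usually at some cost to diversity.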

To improve the accuracy of extracted images, FineXtract draws on the acclaimed 2023 collaboration Extracting Training Data from Diffusion Models. The method computes the similarity between each pair of generated images, and groups those whose Self-Supervised Copy Detection (SSCD) score exceeds a threshold.

In this way, the clustering algorithm helps FineXtract to identify the subset of extracted images that accord with the training data.
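The clustering step can be sketched as follows. This is a simplified illustration under stated assumptions: each generated image is represented by a hypothetical embedding vector standing in for an SSCD descriptor, pairs whose cosine similarity exceeds a threshold are linked, linked images are merged with a union-find structure, and the largest groups are treated as the most likely memorized training images. The actual pipeline may differ in its details.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def cluster_by_threshold(embeddings: List[Vector], threshold: float) -> List[List[int]]:
    """Group image indices linked by pairwise similarity above threshold.

    Uses union-find over all pairs; returns clusters sorted largest first.
    """
    parent = list(range(len(embeddings)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(embeddings)):
        for j in range(i + 1, len(embeddings)):
            if cosine_similarity(embeddings[i], embeddings[j]) > threshold:
                parent[find(i)] = find(j)

    groups: Dict[int, List[int]] = {}
    for i in range(len(embeddings)):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values(), key=len, reverse=True)


if __name__ == "__main__":
    # Hypothetical descriptors: images 0-2 are near-duplicates, 3 and 4 are not.
    embs = [[1.0, 0.0], [0.99, 0.05], [0.98, 0.1], [0.0, 1.0], [-1.0, 0.2]]
    print(cluster_by_threshold(embs, threshold=0.9))
```

The intuition is that a fine-tuned model which has memorized a training image tends to regenerate near-copies of it repeatedly, so dense clusters of similar outputs are better extraction candidates than isolated samples.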

In this case, the researchers collaborated with users who had made the data available. One could reasonably say that, absent such data, it would be impossible to prove that any particular extracted image was actually used in the original training. However, it is now relatively trivial to match uploaded images either against live images on the web, or against images in known and published datasets, based solely on image content.

Data and Tests

To test FineXtract, the authors conducted experiments on few-shot fine-tuned models across the two most common fine-tuning scenarios, within the scope of the project: artistic styles, and object-driven generation (the latter effectively encompassing face-based subjects).

They randomly selected 20 artists (each with 10 images) from the WikiArt dataset, and 30 subjects (each with 5-6 images) from the DreamBooth dataset, to address these respective scenarios.

DreamBooth and LoRA were the targeted fine-tuning methods, and Stable Diffusion V1.4 was used for the tests.

If the clustering algorithm returned no results after thirty seconds, the threshold was amended until images were returned.
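The threshold adjustment amounts to a simple relaxation loop. The sketch below assumes a precomputed pairwise similarity matrix and a fixed step size, both hypothetical, and replaces the 30-second time limit with a plain "no pairs found" check; the paper may adjust the threshold differently.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def pairs_above(sim: Matrix, threshold: float) -> List[Tuple[int, int]]:
    """Index pairs (i, j), i < j, whose similarity exceeds threshold."""
    n = len(sim)
    return [(i, j) for i in range(n) for j in range(i + 1, n) if sim[i][j] > threshold]


def relax_until_found(sim: Matrix, threshold: float, step: float = 0.05,
                      floor: float = 0.0) -> Tuple[float, List[Tuple[int, int]]]:
    """Lower the threshold in fixed steps until at least one pair is returned.

    Returns (threshold_used, pairs). Gives up at floor if nothing is found.
    """
    pairs = pairs_above(sim, threshold)
    while not pairs and threshold - step >= floor:
        threshold -= step
        pairs = pairs_above(sim, threshold)
    return threshold, pairs


if __name__ == "__main__":
    # Hypothetical similarity matrix for three generated images.
    sim = [[1.0, 0.72, 0.1], [0.72, 1.0, 0.2], [0.1, 0.2, 1.0]]
    print(relax_until_found(sim, threshold=0.9))
```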

The two metrics used for the generated images were Average Similarity (AS) under SSCD, and Average Extraction Success Rate (A-ESR) – a measure broadly in line with prior works, where a score of 0.7 represents the minimum to denote a completely successful extraction of training data.
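As a rough sketch of how these two metrics could be computed, assume each training image is scored by its best SSCD similarity among the extracted images; AS is then the mean of those best scores, and the extraction success rate is the share of training images whose best score reaches 0.7. The matching rule and the toy similarity matrix are assumptions for illustration; the paper's exact definitions (for example, how A-ESR is averaged across fine-tuned models) may differ.

```python
from typing import List, Tuple


def extraction_metrics(sim: List[List[float]],
                       success_threshold: float = 0.7) -> Tuple[float, float]:
    """Compute (average similarity, extraction success rate).

    sim[i][j] is the similarity between training image i and extracted image j.
    Each training image is credited with its best match among extracted images
    (an assumed matching rule, for illustration only).
    """
    best = [max(row) for row in sim]
    avg_similarity = sum(best) / len(best)
    success_rate = sum(1 for s in best if s >= success_threshold) / len(best)
    return avg_similarity, success_rate


if __name__ == "__main__":
    # Hypothetical scores: 4 training images x 3 extracted images.
    sim = [
        [0.82, 0.40, 0.35],
        [0.30, 0.75, 0.20],
        [0.45, 0.50, 0.55],
        [0.10, 0.20, 0.66],
    ]
    avg, esr = extraction_metrics(sim)
    print(f"AS={avg:.3f}, ESR={esr:.2f}")
```

Averaging the success rate over many fine-tuned models would then give an A-ESR-style figure.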

Since previous approaches have used either direct text-to-image generation or CFG, the researchers compared FineXtract with these two methods.

Results for comparisons of FineXtract against the two most popular prior methods.

The authors comment:

‘The [results] demonstrate a significant advantage of FineXtract over previous methods, with an improvement of approximately 0.02 to 0.05 in AS and a doubling of the A-ESR in most cases.’

To test the method’s ability to generalize to novel data, the researchers conducted a further test, using Stable Diffusion (V1.4), Stable Diffusion XL, and AltDiffusion.

FineXtract applied across a range of diffusion models. For the WikiArt component, the test focused on four classes in WikiArt.

As the results above show, FineXtract also outperformed prior methods in this broader test.

A qualitative comparison of extracted results from FineXtract and prior approaches. Please refer to the source paper for better resolution.

The authors observe that when an increased number of images is used in the dataset for a fine-tuned model, the clustering algorithm needs to be run for a longer period of time in order to remain effective.

They additionally observe that a variety of methods have been developed in recent years designed to impede this kind of extraction, under the aegis of privacy protection. They therefore tested FineXtract against data augmented by the Cutout and RandAugment methods.

FineXtract’s performance against images protected by Cutout and RandAugment.
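Cutout, one of the two defenses tested, is a simple augmentation: during training, a randomly placed square patch of each image is masked out. The sketch below applies it to a small grayscale image stored as nested lists. The patch size, fill value and seeding are illustrative assumptions, as is the variant used here (patch centre sampled anywhere in the image, patch clipped at the borders); real pipelines apply this to tensors inside the training data loader.

```python
import random
from typing import List

Image = List[List[float]]


def cutout(image: Image, size: int, rng: random.Random, fill: float = 0.0) -> Image:
    """Return a copy of image with one size x size square set to fill.

    The square's centre is sampled uniformly over the image, and the patch is
    clipped at the borders, so it may be partly outside the frame.
    """
    h, w = len(image), len(image[0])
    cy, cx = rng.randrange(h), rng.randrange(w)
    y0, y1 = max(0, cy - size // 2), min(h, cy + size // 2 + size % 2)
    x0, x1 = max(0, cx - size // 2), min(w, cx + size // 2 + size % 2)
    out = [row[:] for row in image]  # copy so the input is left untouched
    for y in range(y0, y1):
        for x in range(x0, x1):
            out[y][x] = fill
    return out


if __name__ == "__main__":
    # Hypothetical 6x6 all-white image; seed chosen arbitrarily.
    img = [[1.0] * 6 for _ in range(6)]
    for row in cutout(img, size=3, rng=random.Random(42)):
        print(row)
```

Because the masked region changes every time an image is seen, the model never receives a complete copy of any training image, which helps explain both why such defenses hinder extraction and why they degrade output quality.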

While the authors concede that the two protection systems perform quite well in obfuscating the training data sources, they note that this comes at the cost of a decline in output quality so severe as to render the protection pointless:

Images produced under Stable Diffusion V1.4, fine-tuned with defensive measures – which drastically lower image quality. Please refer to the source paper for better resolution.

The paper concludes:

‘Our experiments demonstrate the method’s robustness across various datasets and real-world checkpoints, highlighting the potential risks of data leakage and providing strong evidence for copyright infringements.’

Conclusion

2024 has proved to be the year in which corporations’ interest in ‘clean’ training data ramped up significantly, in the face of ongoing media coverage of AI’s propensity to replace humans, and the prospect of legally protecting the generative models that they themselves are so keen to exploit.

It is easy to claim that your training data is clean, but it is also getting easier for technologies like this one to prove that it isn’t, as Runway ML, Stability.ai and Midjourney (amongst others) have found out in recent days.

Projects such as FineXtract are arguably portents of the absolute end of the ‘wild west’ era of AI, where even the apparently occult nature of a trained latent space could be held to account.

* For the sake of convenience, we will now assume ‘fine-tune and LoRA’, where necessary.

First published Monday, October 7, 2024

#2022#2023#2024#ai#AI Copyright Challenges#AI image generation#AI systems#algorithm#API#Art#Artificial Intelligence#artists#barrier#black box#box#challenge#classes#classifier-free guidance#Collaboration#columns#Community#comparison#content#content detector#copyright#Copyright Compliance#data#data extraction#data leakage#datasets
