#Generative Pre-trained Transformer


Text

What does ChatGPT stand for? GPT stands for Generative Pre-Trained Transformer. This means that it learns what to say by capturing information from the internet. It then uses all of this text to "generate" responses to questions or commands that someone might ask.

7 things you NEED to know about ChatGPT (and the many different things the internet will tell you.) (BBC)

#quote#ChatGPT#GPT#Generative Pre-Trained Transformer#AI#artificial intelligence#internet#technology#computers#digital#LLM#large language model#machine learning#information


Text

Revolutionizing Presentation Creation: How AI is Transforming PowerPoint Generation

In today’s fast-paced professional environment, creating impactful presentations has become an essential skill. However, the traditional process of designing PowerPoint slides can be tedious and time-consuming. Enter AI-powered presentation generation tools, a game-changer for professionals and students alike. With innovations like AI Presentation Maker by Leaveit2AI, creating engaging and visually appealing slides is now easier than ever.

The Challenge of Traditional Presentation Creation

Crafting an effective PowerPoint presentation requires a delicate balance between content, design, and storytelling. Traditional methods often demand hours of manual work—researching topics, structuring content, aligning visuals, and tweaking layouts to perfection. For many, this process diverts valuable time away from core tasks, leading to stress and inefficiency.

How AI Revolutionizes Presentation Creation

AI tools for presentation generation are designed to streamline and enhance the entire process. These platforms leverage advanced algorithms to understand topics, structure content, and apply professional design principles, producing ready-to-use slides in minutes. Here’s how AI-driven solutions are transforming presentation creation:

Automated Content Structuring: AI algorithms analyze your input topic and automatically generate a logical content flow. Whether you need bullet points, detailed paragraphs, or definitions, AI tools can structure your content coherently.

Professional Design Made Effortless: AI tools offer a variety of themes and templates, ensuring a polished and professional appearance. They can also suggest visuals, infographics, and animations to enhance engagement.

Time Efficiency: What once took hours can now be achieved in a fraction of the time. AI tools free up valuable time, allowing users to focus on the message rather than the mechanics of design.

Customization and Flexibility: Modern AI tools allow users to customize their presentations to align with personal or brand-specific requirements. From fonts and color schemes to content tone, users can easily tweak outputs to meet their needs.

Accessibility for All: AI-powered tools democratize the process of presentation creation, making it accessible to individuals without design or technical expertise.

Key Features of AI Presentation Maker by Leaveit2AI

One standout platform in this domain is the AI Presentation Maker by Leaveit2AI. Here’s what sets it apart:

Intelligent Topic Analysis: Simply input your topic, and the tool generates slides with relevant and structured content.

Customizable Themes and Content: Users can select from multiple themes and specify content types, ensuring presentations align with their objectives.

Image Integration: The platform seamlessly incorporates visuals, making slides more dynamic and engaging.

Export Options: Presentations can be exported in multiple formats, including PowerPoint and PDF, with editing options available in Google Slides.

User-Friendly Interface: Designed for ease of use, it caters to professionals, students, and educators looking for quick and effective presentation solutions.

Applications Across Industries

AI-powered presentation tools are finding applications in a variety of fields:

Business: For client pitches, internal meetings, and sales presentations.

Education: Helping teachers and students create compelling lectures and project presentations.

Freelancers: Simplifying the creation of portfolios or proposals.

Nonprofits: Creating impactful visuals for campaigns or donor meetings.

The Future of Presentation Creation

As AI continues to evolve, the possibilities for presentation creation are endless. Future advancements could include real-time audience feedback analysis, voice-to-slide capabilities, and even VR-powered immersive presentations.

Conclusion

AI-powered presentation tools like AI Presentation Maker by Leaveit2AI are revolutionizing how we create and deliver content. By automating the design process and providing smart customization options, these tools are empowering users to create professional-quality presentations effortlessly. Whether you're a seasoned professional or a student preparing for a class project, AI presentation generation is here to make your work easier, faster, and better.

Why spend hours on slides when AI can do it for you? Embrace the future of presentation creation and experience the difference today!

Visit AI Presentation Maker by Leaveit2AI to explore the possibilities.


Text

Contact Enterprise Knowledge Advisor: Your Information Mining Solution (celebaltech.com)

#enterprise knowledge advisor#eka#information mining solution#gpt3 openai#ai language model#Generative Pre-trained Transformer


Photo

New Post has been published on https://www.knewtoday.net/the-rise-of-openai-advancing-artificial-intelligence-for-the-benefit-of-humanity/

The Rise of OpenAI: Advancing Artificial Intelligence for the Benefit of Humanity

OpenAI is a research organization that is focused on advancing artificial intelligence in a safe and beneficial manner. It was founded in 2015 by a group of technology luminaries, including Elon Musk, Sam Altman, Greg Brockman, and others, with the goal of creating AI that benefits humanity as a whole.

OpenAI conducts research in a wide range of areas related to AI, including natural language processing, computer vision, robotics, and more. It also develops cutting-edge AI technologies and tools, such as the GPT series of language models, which have been used in a variety of applications, from generating realistic text to aiding in scientific research.

In addition to its research and development work, OpenAI is also committed to promoting transparency and safety in AI. It has published numerous papers on AI ethics and governance and has advocated for responsible AI development practices within the industry and among policymakers.

Introduction to OpenAI: A Brief History and Overview

OpenAI is an American artificial intelligence (AI) research laboratory organized as a non-profit. OpenAI Limited Partnership is its for-profit sister company. The stated goal of OpenAI’s research is to promote and develop friendly AI. OpenAI’s systems run on Microsoft’s Azure supercomputing platform.

Ilya Sutskever, Greg Brockman, Trevor Blackwell, Vicki Cheung, Andrej Karpathy, Durk Kingma, John Schulman, Pamela Vagata, and Wojciech Zaremba created OpenAI in 2015; the inaugural board of directors included Sam Altman and Elon Musk. Microsoft invested $1 billion in OpenAI LP in 2019 and another $10 billion in 2023.

Brockman compiled a list of the “top researchers in the field” after meeting Yoshua Bengio, one of the “founding fathers” of the deep learning movement. In December 2015, Brockman was able to hire nine of them as his first employees. In 2016, OpenAI paid its AI researchers corporate-level rather than nonprofit-level salaries, though not salaries on par with those at Facebook or Google.

Several researchers joined the company because of OpenAI’s potential and mission; one Google employee claimed he was willing to leave the company “partly because of the very strong group of people and, to a very big extent, because of its mission.” Brockman said that advancing humankind’s ability to create actual AI in a secure manner was “the best thing I could imagine doing.” Wojciech Zaremba, a co-founder of OpenAI, claimed that he rejected “borderline ridiculous” offers of two to three times his market value in order to join OpenAI.

A public beta of “OpenAI Gym,” a platform for reinforcement learning research, was made available by OpenAI in April 2016. “Universe,” a software platform for assessing and honing an AI’s general intelligence throughout the universe of games, websites, and other applications, was made available by OpenAI in December 2016.

OpenAI’s Research Areas: Natural Language Processing, Computer Vision, Robotics, and More

As of 2021, OpenAI’s research focuses on reinforcement learning (RL).

Gym

Gym, introduced in 2016, aims to provide a general-intelligence benchmark that is easy to set up across a wide range of environments, similar to, but broader than, the ImageNet Large Scale Visual Recognition Challenge used in supervised learning research. To make published research more easily reproducible, it aims to standardize how environments are defined in AI research publications. The project claims to offer a user-friendly interface. As of June 2017, Gym could only be used with Python. As of September 2017, the Gym documentation site was no longer maintained, and active work had moved to its GitHub page.
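To make the idea of a standardized environment definition concrete, here is a minimal sketch in plain Python. It does not import Gym; the `CorridorEnv` class, its parameters, and the `run_episode` helper are hypothetical names invented for illustration. The `reset()` / `step(action)` shape is loosely modeled on the convention early Gym environments followed, where a step returns an observation, a reward, a done flag, and an info dictionary.

```python
class CorridorEnv:
    """Hypothetical toy environment: walk right along a corridor to reach the goal."""

    def __init__(self, length=4, max_steps=20):
        self.length = length        # number of cells; the goal is the last cell
        self.max_steps = max_steps  # episode is cut off after this many steps
        self.position = 0
        self.steps = 0

    def reset(self):
        """Start a new episode and return the initial observation."""
        self.position = 0
        self.steps = 0
        return self.position

    def step(self, action):
        """Apply an action; return (observation, reward, done, info)."""
        # Action 1 moves right; any other action moves left (clamped at cell 0).
        move = 1 if action == 1 else -1
        self.position = min(self.length - 1, max(0, self.position + move))
        self.steps += 1
        reached_goal = self.position == self.length - 1
        reward = 1.0 if reached_goal else 0.0
        done = reached_goal or self.steps >= self.max_steps
        return self.position, reward, done, {}


def run_episode(env, policy):
    """Run one episode with `policy` (a function from observation to action)."""
    obs = env.reset()
    total_reward, done = 0.0, False
    while not done:
        obs, reward, done, _ = env.step(policy(obs))
        total_reward += reward
    return total_reward
```

Because every environment exposes the same two methods, a loop like `run_episode` can be reused unchanged across tasks, which is the kind of reproducibility benefit the paragraph above describes.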

RoboSumo

Released in 2017, RoboSumo is a virtual world in which humanoid meta-learning robot agents compete against one another, with the goals of learning how to move and of pushing the opposing agent out of the ring. When an agent is taken out of this virtual environment and placed in a different virtual environment with strong winds, the agent braces to stay upright, suggesting it has learned how to balance in a general way through this adversarial learning process. Igor Mordatch of OpenAI argues that competition between agents can create an intelligence “arms race” that improves an agent’s ability to function, even outside the context of the competition.

Video game bots

OpenAI Five is a team of five OpenAI-curated bots that play the competitive five-on-five video game Dota 2. The bots learn to play against human players at a high level entirely through trial and error. Before the bots played as a team of five, the first public demonstration took place at The International 2017, the game’s annual premier championship tournament, where Dendi, a professional Ukrainian player, lost to a bot in a live one-on-one matchup. After the match, CTO Greg Brockman explained that the bot had learned by playing against itself for two weeks of real time, and that the learning software was a step toward creating software that could handle complex tasks like a surgeon.

By June 2018, the bots had improved to the point where they could play together as a full team of five, defeating teams of amateur and semi-professional players. At The International 2018, OpenAI Five played two exhibition matches against top players and lost both. In a live exhibition match in San Francisco in April 2019, OpenAI Five defeated OG, the game’s reigning world champions at the time, 2:0. The bots made their final public appearance later that month, winning 99.4% of the 42,729 games they played in a four-day open online competition.

Dactyl

Developed in 2018, Dactyl uses machine learning to train a Shadow Hand, a robotic hand that resembles a human hand, to manipulate physical objects. It learns entirely in simulation, using the same RL algorithms and training code as OpenAI Five. OpenAI tackled the object orientation problem with domain randomization, a simulation approach that exposes the learner to a variety of experiences rather than trying to match the simulation to reality. In addition to motion tracking cameras, Dactyl’s setup includes RGB cameras so that the robot can manipulate an arbitrary object by seeing it. In 2018, OpenAI showed that the system could manipulate a cube and an octagonal prism.

In 2019, OpenAI demonstrated that Dactyl could solve a Rubik’s Cube. The robot solved the puzzle 60% of the time. Objects like the Rubik’s Cube introduce complex physics that is harder to model. OpenAI addressed this by making Dactyl more robust to perturbations, using a simulation approach known as Automatic Domain Randomization (ADR).

OpenAI’s GPT model

Alec Radford and his colleagues wrote the initial study on generative pre-training of a transformer-based language model, which was released as a preprint on OpenAI’s website on June 11, 2018. It demonstrated how pre-training on a heterogeneous corpus with lengthy stretches of continuous text allows a generative model of language to gain world knowledge and understand long-range dependencies.

Generative Pre-trained Transformer 2 (“GPT-2”) is an unsupervised transformer language model and the successor to OpenAI’s first GPT model. When GPT-2 was announced in February 2019, only limited demonstrative versions were initially released to the public. The full release of GPT-2 was delayed over concerns about potential misuse, including its use for writing fake news. Some analysts questioned whether GPT-2 posed a serious threat.

It was trained on the WebText corpus, which consists of slightly over 8 million documents totaling 40 gigabytes of text, gathered from links shared in Reddit submissions that received at least three upvotes. Using byte pair encoding avoids some problems that arise when a vocabulary is encoded with word tokens. Because it encodes both single characters and multi-character tokens, it can represent any string of characters.
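The byte pair encoding idea can be illustrated with a short sketch. This is a simplified, character-level toy, not GPT-2's actual tokenizer (which works on bytes and uses a much larger learned vocabulary); the function names `merge_pair`, `learn_bpe`, and `encode` are made up for this example. It repeatedly merges the most frequent adjacent pair of symbols, so common sequences become single tokens while unseen strings still fall back to single characters.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace each adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    out, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of training words."""
    # Start with every word split into single characters.
    corpus = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent symbol pair occurs across the corpus.
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Apply the new merge rule to every word in the corpus.
        merged_corpus = Counter()
        for symbols, freq in corpus.items():
            merged_corpus[merge_pair(symbols, best)] += freq
        corpus = merged_corpus
    return merges


def encode(text, merges):
    """Tokenize `text` by applying the learned merges in the order they were learned."""
    symbols = tuple(text)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)
```

With merges learned from a few words containing "low", `encode` turns "low" into a single token, while a string with no learned pairs is simply split into its characters. In both cases joining the tokens reproduces the input exactly.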

GPT-3

Benchmark results for GPT-3 were significantly better than for GPT-2. OpenAI cautioned that such scaling up of language models could be approaching or encountering the fundamental capability limitations of predictive language models.

Pre-training GPT-3 required several thousand petaflop/s-days of compute, compared to tens of petaflop/s-days for the full GPT-2 model. Like its predecessor, the fully trained GPT-3 model was not immediately released to the public because of the possibility of abuse, though OpenAI planned to allow access through a paid cloud API after a two-month free private beta that began in June 2020.

GPT-4

On March 14, 2023, OpenAI announced the release of Generative Pre-trained Transformer 4 (GPT-4), which accepts text or image inputs. OpenAI said that the updated technology passed a simulated law school bar exam with a score in the top 10% of test takers, whereas the preceding version, GPT-3.5, scored in the bottom 10%. GPT-4 can also write code in all of the major programming languages and read, analyze, or produce up to 25,000 words of text.

DALL-E and CLIP images

DALL-E, a Transformer model unveiled in 2021, generates images from textual descriptions. CLIP, also unveiled in 2021, produces a description for a given image.

DALL-E uses a 12-billion-parameter version of GPT-3 to interpret natural language inputs (such as “an astronaut riding on a horse”) and generate corresponding images. It can produce images of realistic objects as well as objects that do not exist in reality.

ChatGPT and ChatGPT Plus

ChatGPT, an artificial intelligence tool launched in November 2022 and built on GPT-3.5, provides a conversational interface that lets users ask questions in everyday language. The system then responds with an answer within seconds. ChatGPT reached one million users five days after its launch.

ChatGPT Plus is a $20/month subscription service that gives users access to ChatGPT during peak hours, faster response times, and early access to new features.

Ethics and Safety in AI: OpenAI’s Commitment to Responsible AI Development

As artificial intelligence (AI) continues to advance and become more integrated into our daily lives, concerns around its ethics and safety have become increasingly urgent. OpenAI, a research organization focused on advancing AI in a safe and beneficial manner, has made a commitment to responsible AI development that prioritizes transparency, accountability, and ethical considerations.

One of the ways that OpenAI has demonstrated its commitment to ethical AI development is through the publication of numerous papers on AI ethics and governance. These papers explore a range of topics, from the potential impact of AI on society to the ethical implications of developing powerful AI systems. By engaging in these discussions and contributing to the broader AI ethics community, OpenAI is helping to shape the conversation around responsible AI development.

Another way that OpenAI is promoting responsible AI development is through its focus on transparency. The organization has made a point of sharing its research findings, tools, and technologies with the wider AI community, making it easier for researchers and developers to build on OpenAI’s work and improve the overall quality of AI development.

In addition to promoting transparency, OpenAI is also committed to safety in AI. The organization recognizes the potential risks associated with developing powerful AI systems and has taken steps to mitigate these risks. For example, OpenAI has developed a framework for measuring AI safety, which includes factors like robustness, alignment, and transparency. By considering these factors throughout the development process, OpenAI is working to create AI systems that are both powerful and safe.

OpenAI has also taken steps to ensure that its own development practices are ethical and responsible. The organization has established an Ethics and Governance board, made up of external experts in AI ethics and policy, to provide guidance on OpenAI’s research and development activities. This board helps to ensure that OpenAI’s work is aligned with its broader ethical and societal goals.

Overall, OpenAI’s commitment to responsible AI development is an important step forward in the development of AI that benefits humanity as a whole. By prioritizing ethics and safety, and by engaging in open and transparent research practices, OpenAI is helping to shape the future of AI in a positive and responsible way.

Conclusion: OpenAI’s Role in Shaping the Future of AI

OpenAI’s commitment to advancing AI in a safe and beneficial manner is helping to shape the future of AI. The organization’s focus on ethical considerations, transparency, and safety in AI development is setting a positive example for the broader AI community.

OpenAI’s research and development work is also contributing to the development of cutting-edge AI technologies and tools. The GPT series of language models, developed by OpenAI, have been used in a variety of applications, from generating realistic text to aiding in scientific research. These advancements have the potential to revolutionize the way we work, communicate, and learn.

In addition, OpenAI’s collaborations with industry leaders and their impact on real-world applications demonstrate the potential of AI to make a positive difference in society. By developing AI systems that are safe, ethical, and transparent, OpenAI is helping to ensure that the benefits of AI are shared by all.

As AI continues to evolve and become more integrated into our daily lives, the importance of responsible AI development cannot be overstated. OpenAI’s commitment to ethical considerations, transparency, and safety is an important step forward in creating AI that benefits humanity as a whole. By continuing to lead the way in responsible AI development, OpenAI is helping to shape the future of AI in a positive and meaningful way.


#Artificial intelligence#ChatGPT#ChatGPT Plus#Computer Vision#DALL-E#Elon Musk#Future of AI#Generative Pre-trained Transformer#GPT#Natural Language Processing#OpenAI#OpenAI's GPT model#Robotics#Sam Altman#Video game bots


Text

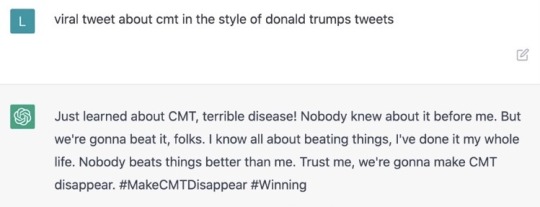

#tumblr shitpost#chatgpt#chat generative pre trained transformer#chat gpt#cmt#charcot marie tooth#twinkdrama


Text

Leveraging the Power of AI: How Celebal's EKA Can Revolutionize Enterprise Knowledge Management

In today's data-driven world, businesses are constantly accumulating information from various sources. This includes emails, documents, presentations, and more. Managing and effectively utilizing this vast knowledge base can be a significant challenge. Here's where Celebal Technologies' Enterprise Knowledge Advisor (EKA) comes in. EKA is a revolutionary information mining solution powered by OpenAI's Generative Pre-trained Transformer (GPT-3) technology, designed to empower businesses to unlock the true potential of their internal knowledge.

What is EKA and How Does it Work?

EKA is an AI-powered information retrieval system that goes beyond simple keyword searches. It leverages the capabilities of GPT-3, a cutting-edge AI language model, to understand the context and intent behind user queries. This allows EKA to deliver highly relevant and insightful results, even for complex or nuanced questions.

Here's a breakdown of how EKA works:

Deep Knowledge Ingestion: EKA seamlessly integrates with various enterprise data sources, ingesting and indexing a wide range of documents, emails, and other internal content.

Advanced Natural Language Processing (NLP): It utilizes NLP techniques to comprehend the meaning and relationships within the ingested data. This enables EKA to not only identify relevant documents but also understand the context and connections between them.

AI-powered Search and Retrieval: When a user submits a query, EKA employs its AI capabilities to analyze the query and user intent. It then retrieves the most pertinent information from the indexed knowledge base, considering not just keywords but also the context and relationships within the data.

Intelligent Information Delivery: It presents the retrieved information in a user-friendly and informative way. It can highlight key points, summarize findings, and even suggest related content that might be valuable to the user.
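The ingest, index, and retrieve steps listed above can be sketched with a toy example. This is not EKA's implementation, which is not public and is described as using GPT-3; the sketch swaps in plain keyword matching with inverse-document-frequency weighting purely to show the pipeline's shape. The `ToyKnowledgeBase` class and its method names are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


class ToyKnowledgeBase:
    """Hypothetical in-memory store illustrating ingest -> index -> search."""

    def __init__(self):
        self.docs = {}                  # doc_id -> original text
        self.index = defaultdict(set)   # term -> ids of documents containing it

    def ingest(self, doc_id, text):
        """Store a document and add its terms to the inverted index."""
        self.docs[doc_id] = text
        for term in set(tokenize(text)):
            self.index[term].add(doc_id)

    def search(self, query, top_k=3):
        """Return up to `top_k` document ids, best match first."""
        scores = Counter()
        total_docs = len(self.docs)
        for term in set(tokenize(query)):
            matching = self.index.get(term, ())
            if not matching:
                continue
            # Rarer terms carry more weight than terms found in many documents.
            idf = math.log(1 + total_docs / len(matching))
            for doc_id in matching:
                scores[doc_id] += idf
        # Sort by descending score, breaking ties by document id.
        ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
        return [doc_id for doc_id, _ in ranked[:top_k]]
```

A system like the one described would replace the keyword scoring with a language model's understanding of context and intent, but the surrounding flow of ingesting content, indexing it, and ranking results for a query stays recognizably the same.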

Benefits of Utilizing EKA for Enterprise Knowledge Management

Powered by OpenAI's GPT-3, EKA offers a multitude of advantages for businesses seeking to optimize their knowledge management practices. Here are some of the key benefits:

Enhanced Search Accuracy and Relevance: EKA's AI-powered search capabilities deliver highly relevant results that directly address user queries. This eliminates the need for users to sift through irrelevant information, saving them valuable time and effort.

Improved User Engagement: EKA's intuitive interface and intelligent information delivery make it easy for users to find the information they need. This can lead to increased user engagement with the knowledge base and a more informed workforce.

Boosted Productivity: By providing users with quick and easy access to the information they need, EKA can significantly improve employee productivity. Less time spent searching for information translates to more time dedicated to core tasks and strategic initiatives.

Knowledge Democratization: EKA empowers all employees, regardless of their technical expertise, to access and utilize the organization's knowledge base effectively. This fosters a culture of knowledge-sharing and collaboration.

Data-driven Decision-making: With EKA, businesses can leverage their internal knowledge to make more informed decisions. EKA's ability to surface relevant insights and connections within the data can provide valuable guidance for strategic planning and problem-solving.

A Real-World Example of EKA's Impact

According to Celebal Technologies, a major media conglomerate using EKA reported a 25% increase in user engagement with its internal knowledge base. Celebal presents this as evidence that EKA makes information more accessible and user-friendly, leading to a more informed and productive workforce.

The Future of Enterprise Knowledge Management with EKA

EKA represents a significant leap forward in the realm of enterprise knowledge management. As AI technology continues to evolve, we can expect EKA's capabilities to become even more sophisticated. Here are some potential future advancements:

Advanced Personalization: EKA could personalize search results and information delivery based on individual user preferences and past search behavior.

Integration with Cognitive Tools: EKA could integrate with other cognitive tools and applications, allowing for a more seamless flow of information and knowledge within the organization.

Enhanced Knowledge Graph Capabilities: EKA's ability to understand relationships and connections within data could be further refined, enabling more advanced knowledge graph functionalities.

Conclusion

Celebal Technologies’ Enterprise Knowledge Advisor represents a significant advancement in enterprise knowledge management. By leveraging OpenAI's GPT-3, a Generative Pre-trained Transformer model, EKA offers a comprehensive information mining solution that enhances decision-making, improves efficiency, and provides a competitive advantage. Organizations across various industries can benefit from the transformative capabilities of EKA, unlocking the full potential of their data assets. As businesses continue to navigate an increasingly data-driven world, tools like EKA will be essential in driving innovation and success. To learn more about EKA, schedule a free consulting session with the experts at [email protected].

#enterprise knowledge advisor#eka#information mining solution#gpt3 openai#ai language model#Generative Pre-trained Transformer


Text

Uses of GPT-4 (Generative Pre-trained Transformer 4)

GPT-4 (Generative Pre-trained Transformer 4) is used in many fields and has proven very useful. Here are some of its main uses:

1. **Writing and content creation**: For composing text and creating content such as blog posts, articles, poems, and scripts.
2. **Customer service**: For giving customers quick and effective answers as chatbots and virtual assistants.
3. **Research…


Text

new religion just dropped


Text

Boost Your Business Edge with GPTs

A Deep Dive into the World of Customized GPTs

| Feature | Standard GPTs | Custom GPTs |
| --- | --- | --- |
| Knowledge Base | Broad and general | Specialized and focused |
| Relevance | General-purpose | Highly contextual |
| Applications | Wide-ranging | Industry-specific |

Custom GPT Types

Hello, dear reader! Today, we are thrilled to guide you through the fascinating world of Custom Generative Pre-trained Transformers, commonly known as Custom GPTs.…


Note

Do you have a timeline for your sparkplug au?

Yes, and it's LONG. This isn't even all of it but it's what I have written out at least

Timeline: important plot points

Pre war

Orion pax and D-16 are born

Both experience the loss of parental guardian

D-16 is taken to the pit

Orion pax breaks into the pit, he and Dee start the foundations of the Decepticon cause

Revolution starts to take shape on Cybertron

Orion is killed in an attempt to stop D from falling down the slippery slope of a rage filled warmonger

Orion is brought back as Optimus prime

OG Ultra Magnus makes him a general in the Autobot ranks

During war

War goes on for like…. Long ass time

Autobots land on earth

Main Decepticons follow suit and set up shop due to amount of resources

Governments make deals with different factions in place of protection and access to weapon technology

Eventually Megatron has an “oh fuck” moment when he invades an illegal mining operation in central Africa. It puts into perspective how far he has fallen, seeing his commanders make deals with those who profited off the mines, just like those in power back on Cybertron.

Midlife crisis, Megatron leaves the Decepticon cause, he takes Soundwave with him. Declares he will do whatever he needs in order to free those enslaved on this planet

Megatron joins the Autobots, this causes Prowl to leave and switch sides

Battles pick up heat as both sides are desperate

Millionaires and those in places of high power use Cybertronian technology to flee earth and live in space as earth is being destroyed

Starscream kills Optimus Prime in an attempt to kill Megatron. Both sides retreat as a result

Optimus splits the matrix and gives it to Hot Rod and Bumblebee

Rodimus prime and Vespa Prime are born

Optimus Prime’s spark is put in a reformation chamber with parts of Megatron in an attempt to bring him back

Treaties are made and the decepticons take Cybertron as the Autobots stay on earth

Post war

Decepticons start rebuilding Cybertronian society

Shockwave finishes creating a replacement for Soundwave

Soundblaster is born

The first sparkling born on Cybertron in millenia emerges

Nightflyer is born

Due to the splitting of the matrix of leadership, dormant energon on earth awakens and allows for new sparklings to emerge

The attempt to revive Optimus Prime’s spark fails

Sparkplug is born

A new era

Earth

The Autobots have made it their mission to help reform the planet and help the humans rebuild

Rodimus prime leaves earth in a hope to find some kind of explanation for why he feels like everything is in the shitter

Subsections of colonies start to pop up, some keep to themselves, others work directly with the autobots, some hate transformers entirely

Railroads are made more efficient in order to transport supplies across countries

Earth starts to heal with the help of the matrix, forests grow and temperatures fall to a normal level

Major cities act as sanctuaries for the human population, help from other alien races arrives as well, helping earth to become a space traveling hub

Cybertron

The Decepticons no longer go by that name, no longer wanting to be associated with the past. They go by Workers of Prime

Shockwave has put together a complicated and purposeful chain of command and leadership that he sits at the top of. However Starscream is the “king” of Cybertron, while being a puppet

Prowl takes care of enforcing laws and regulations to the planet. Along with trying to unite the cities with one another

Cybertron now has an entertainment industry, focused on promoting good morals to the population along with keeping bots distracted

Cybertron only communicates with its colony planets, trying to form a stronger relationship between all transformers

“Peace times” (start of the story)

Sparkplug is currently working as the assistant of Ratchet under the blessing of Megatron and Elita one

Sparkplug trains in her free time to be a scout and will sneak off every once in a while to play basement concerts

On Cybertron, Nightflyer is top of his class while training to be a high guard soldier. He is chosen by Shockwave to go on a mission to earth and infiltrate the Autobots

Cybertron is in desperate need of resources

Nightflyer lands on earth and pretends to be a Decepticon defector, Sparkplug is wary of him

Nightflyer manages to become an Autobot and meets Sparkplug during the scout tryouts

Reluctantly Sparkplug is passed but gets put on the Energon transportation and quality control team, she’s fine with this as she just wanted to see the world

Nightflyer gets put on a mission team, meeting Landlot, Defender and other bots his age.

During this time we get our first mentions of a cult run by a former Decepticon that’s turning humans into purple energon

Both Spark and Night explore earth and meet new and old bots.

Example: Sparkplug gets to know earth born transformers, Nightflyer gets to meet bots like Skyfire

Back at the base, Sparkplug gets annoyed with how much fanfare Nightflyer is getting, while she still gets treated like a sparkling

She breaks Night’s social mask and gets to know the real him. A romance between the two starts to form

Shockwave informs Nightflyer that they’re sending a team to take over the main Autobot base

Shockwave employs the DJD to help in the Autobot attack

Return to war

Sparkplug confesses to Nightflyer, Nightflyer returns the feeling as he does like her.

Right before the invasion he tries to convince her that living on Cybertron wouldn’t be so bad. Sparkplug retorts that she likes Earth and that Cybertron would probably hate her.

The DJD and a group of seekers make their way to Earth and start fucking shit up

Big dramatic reveal to the characters that Nightflyer was a spy all along and is Starscream’s ward

Things are going in the bad guy’s favor until Tarn realizes Sparkplug is part Megatron.

He orders his men to capture her and kill everyone else, as they have a new leader of the Decepticon cause

The battle becomes even more messy as sides are switched and the Autobots and seekers are now fighting to survive

While attempting to help Megatron fight off Tarn, Sparkplug is grabbed by Soundblaster, who hopes to bring her back to Shockwave in order to get in his favor.

Space distortion happens when Skywarp tries to help get Soundblaster out of there and accidentally sends him and Sparkplug halfway across the universe.

This causes the DJD to leave as they are now looking for Sparkplug, and the seekers (after getting beaten by the DJD) are taken prisoner for now

Depression but in space

Sparkplug and Soundblaster are in the middle of nowhere on a deserted planet. After trying to restrain one another, they realize they need to help each other if they wanna survive this mess

Back on earth, Rodimus comes back from space due to getting a SOS message, he is yelled at by his family

Acidstorm, Slipstream and Airachnid are absolutely furious about being stuck on earth for the time being. Nightflyer is currently being used as a verbal punching bag for the Autobots

Back in Space, Sparkplug and Soundblaster start to develop a chemistry as they learn more about each other.

Rodimus takes it upon himself to get Sparkplug back as a way to make up for leaving everyone years ago. This is a big reference to “The Lost Light”; characters like Megatron, Rodimus, Swerve and others join, along with some OCs like Nanabah (Native American sharpshooter) who forms a friendship with Perceptor, and Lobo (the lowrider transformer born on Earth)

Rodimus takes Nightflyer under his wing in an attempt to reform him

In space, Spark and Soundblaster start to feel romantic feelings for one another. However this is interrupted by them getting found by the DJD.

Soundwave takes it upon himself to split off from Rodimus group as he might be able to locate her better through his mind powers (I don’t know, it's all space magic man)

Sparkplug properly meets Tarn and is quickly given a new frame and alt mode against her will.

Soundwave finds the DJD’s ship with Spark on it and sneaks on. However, he is caught, and even though he fights well, he can’t fight off all of them.

Tarn forces Sparkplug to finally give into her anger when he kills Soundwave in front of her and lets her kill him.

Spark takes on the name “Megatron” and is then forced to eat Tarn’s spark in an act of dominance. She is now the leader of the DJD

There will be more to come!!! this is not all of it

Part two


Note

You once said you mostly like playing Dwarf Fortress in adventure mode nowadays, right? Any adventure mode tips you can give for a total noob?

Okay I'm not any kind of expert by any means, but...

For combat, there are weapon skills (e.g. Crossbowman, Swordsman, Hammerman, Bowman) which determine how good you are at using a specific weapon, but there are also two I like to call "parent skills": Fighter, which determines how good you are at using melee weapons in general, and Archer, which determines how good you are at using ranged weapons in general (as well as attacking with thrown objects). Attacking with a sword uses (and trains) both your Fighter and Swordsman skills. For character creation I think it's better to put points into Fighter and/or Archer, and train in the use of specific weapons during play. That way, if you ever need to switch to a different weapon from the specific one you're trained in you'll still be able to use it competently.

Always put one point into the Reader skill, otherwise your character will be illiterate. Since the only way to train skills in game is by using them, there is no in-game way to ever learn to read if you start with an illiterate character.

There is currently no implemented in-game way to fulfill the needs to be with family, be with friends, or make romance in adventure mode, so you should avoid creating a character with a personality and set of values that gives them these needs, otherwise they will inevitably become distracted from being unable to fulfill them. Also, the need to eat a good meal is technically possible but extremely hard to fulfill (since it requires either eating an extremely valuable meal, or a meal made with one of your character's randomly selected preferred ingredients) so you should probably avoid it too.

For purposes of trading, carrying small high-value items such as gems or high-quality crafts is a lot more useful than carrying coins around, since coins don't have any monetary value outside of the civilization that minted them, so you can only use certain coins for trade in certain sites.

However, with a high Thrower (or Archer) skill, coins make for a surprisingly decent and easily replenishable thrown weapon.

In certain climates, the water in your waterskin may freeze at night, or even stay frozen all the time. This took me a while to figure out back in the day, but: If you need to drink but your water is frozen, you'll need to interact with an adjacent empty space to make a campfire there, and then interact with the campfire and select the ice to heat it (or, in pre-steam versions, press g and then choose the option to make a campfire, and then while standing next to it press I to open advanced interactions with your inventory and then select the ice and choose the option to heat it)

If you find it annoying to constantly have to find food and water, play as a goblin, since goblins don't need to eat or drink.

I haven't tested if it works the same way in the post-steam versions, but iirc performing anything at a tavern and then talking to the tavern keeper about your performance would get them to give you a discount on your room and drinks, regardless of the actual quality of the performance. I don't think this has been changed, but still.

Offloading a site by moving in travel mode will instantly heal you of all temporary damage, such as wounds, broken bones, bleeding, etc. If you're bleeding out during combat you can avoid dying by running away from your enemies until you're far away enough to initiate travel mode and then moving in any direction.

The only way to heal permanent damage such as lost body parts or severed nerves is to become a werebeast, since your body will be completely restored every time you transform. You can become a werebeast by getting bitten by one and surviving (the bite has to tear at least one tissue layer or it won't pass on the curse), or by getting cursed either by toppling a statue at a temple dedicated to a deity you worship, or by rolling one of the divination dice found at shrines three times (although when you get cursed it's randomized if you become either a vampire or a werebeast). However, being a werebeast will make you vulnerable against a random metal, and transforming will unequip and drop all your worn items (including backpacks and pouches) unless the size of your werebeast form is relatively similar to your normal size (plus destroy all non-leather clothing you're wearing regardless of size change)

If you don't start with a high Armor User skill, wearing a full set of armor can actually be more harm than good, since a low Armor User skill makes you more susceptible to the armor's encumbrance penalty, and makes you tire more easily while wearing armor, making it harder to dodge attacks and get attacks in.

However, any leather clothing counts as armor for the purposes of training, and doesn't have encumbrance penalties. So if you don't have a high Armor User skill you should equip yourself with maybe a metal helmet, gauntlets, and/or boots, and then put on as much leather clothing as you can, so you can avoid the penalties while you train the skill to the point that you can wear a full set of metal armor.


Note

Can I request Commander Wolffe x reader, who's a Jedi knight and Plo Koon's former padawan? Reader is from an alien race that can turn into a giant wolf and shares a gentle nature toward the clones like their master. Reader is also an absolute fighting unit and tall (Like a gentle giant) Bonus if reader uses their enhanced sense of smell to locate things and finds Wolffe's scent most pleasant. What if reader uses their wolf form to warm up Wolffe and his brothers when they get trapped in some snow planet? (You can put fem! reader if gender has to be specific. )

(omg this is such a cute idea! also this is my first ever Star Wars piece with one of my favourite copy paste men?? so excited <33 this is also sort of a pre-relationship build up thing, so if you'd like me to do a follow up I'd be more than happy to! hope you enjoy!)

(Wolffe x gender neutral reader (can be read as platonic) - warning for brief mentions of fighting, no detail though)

His general is the one to introduce the two of you, Master Plo stating that his former padawan would be 'shadowing' until they were assigned their own battalion.

Wolffe had an immediate respect for you - your sweet smile and offer of a handshake were somewhat unusual, since many of the higher ups chose to keep their distance from the clones where they could help it, but you seemed genuine, so he returned the handshake and nodded politely.

Your introduction alone made it very clear that you had trained under Master Plo. Your temperament was very similar, with the same aura of patience, kindness and wisdom that the general had.

You also seemed eager to get to know the soldiers you were serving with, making sure to remember all of their names and asking questions about them.

The Wolfpack responded well to you, Wolffe had to admit. While he was somewhat hesitant having a new face around, it was good to see his men's morale so high.

They all seemed to trust you and your abilities. While he knew not to underestimate you, especially given your towering height, he would not be convinced completely until he saw you fight.

The first callout to battle had you buzzing with anticipation, and Wolffe watched you warily as you whispered something to the general, to which he shook his head in response.

There was no time to wonder though, as your ship soon touched down and you were thrust onto the battlefield not long after.

You didn't remember the name of the planet, but it was layered in snow, and the glow of blaster bolts and lightsabers was all that guided you in the blizzard you had landed in.

Everything was moving so quickly, and you did your best to put yourself between your men and the enemy fire wherever you could, but it soon became clear that they would overwhelm you in this weather. The more men you saw fall to the ground, the more your hope fell.

You couldn't see well enough in this form. You knew that, the general knew that. When you had asked on the way there, Plo Koon had told you not to shift unless it was a last resort - though with the enemy fire now surrounding you, surely this counted?

A quick nod from him confirmed your thinking and, while your former master gave Wolffe the most warning he could, you shifted into wolf form.

It was relieving, to suddenly have your senses be so sharp when you could barely tell where you were stood seconds earlier, and you were easily able to tell where the enemy horde stood. There were stragglers in other directions, but they were soon picked off by the clones.

Your attackers were clearly startled by your transformation (reasonable enough), but their moment's hesitation was enough to allow you to spring forward and send the majority scattering with a sweep of one enormous paw. You felt your claws tear through some, and the ones left intact were swiftly finished off with your teeth. Any that were smart enough to run didn't make it far either.

The sounds of blaster fire ceased once your swift massacre had ended, and you slowly padded across the snow, lifting your nose to the air to find the only ones left were your men.

Far fewer of your men than you would've liked.

Plo Koon watched with mirth in his eyes as you approached the clones, their expressions fixed in awe, aside from Wolffe, who seemed uncharacteristically nervous.

You supposed you couldn't blame him. But still, to will him to trust you, you lay down in front of him, blood-stained muzzle pressed into the snow at his feet while he stared, perplexed, down at you.

"They are still aware in this form, Commander. You need not worry."

The comforting words from the general seemed to placate Wolffe momentarily, then he turned to you.

"We must find shelter quickly. We'll never make it back to the ship with so many injured."

You dipped your head in understanding, and gestured south. It seemed like a smudge on the horizon to the others, but you could make out the silhouettes and smell the scent of the forest enough to know it was your best option.

Not having a better choice, they agreed to follow you, and those still standing began helping up the injured clones. Seeing this, you huffed and nodded at your back.

Plo nodded in understanding. "They will carry the injured. We can move faster that way."

Wolffe, still watching you warily, started helping the others climb onto your back, not willing to waste time even in his distrust. He could ask questions later, but the general trusted you, so he could too.

Moving in this form was certainly faster, and it was not long before you were amidst a cluster of trees and helping down the clones on your back. It wasn't perfect, but you were more shielded from the blizzard.

Wolffe and the general were at the back of the pack, ensuring you weren't followed. They didn't seem confident in the condition of the men, and you tilted your head in question.

The commander looked up at you, seemingly frustrated. "We have no supplies. We'll freeze if we stay here."

You huffed in reply and padded over behind the cluster of injured clones, lying down and using your nose to nudge them closer to your fur. Some of them nestled closer to your warmth on instinct, and you looked back at Wolffe expectantly.

"You can't be serious..."

Comet in particular seemed to be happy with this arrangement though, and quickly dove into your mountain of fur beside his brothers.

Plo patted Wolffe on the shoulder and urged him to sit, humming in amusement at the look on his face as you draped your tail over the small group of clones.

You were... useful. Wolffe couldn't deny that. Useful and very, very warm.

As he drifted to sleep far faster than usual, he thought maybe he could get used to this...

#star wars#star wars x reader#star wars x you#wolffe x reader#commander wolffe#commander wolffe x reader#clone wars x reader#clones x reader#clone troopers x reader#clone trooper x reader


Text

The Sundered Soul, Chapter 1

Prompt credit for this fic goes to @theroundbartable. I found it on @merlinficprompts. https://theroundbartable.tumblr.com/post/723312849564418048/camelot-is-being-attacked-by-a-sorcerer-somehow

I hope it does this prompt justice!

Twelve chapters are currently written, and now I'm proofreading them one by one. This first chapter is as good as I'm going to get it without a beta. (Any volunteers?) :D Also I will be cross-posting to ao3.

Enjoy!

Edit: I'm an idiot who didn't know how to add Keep Reading. Fixed, I hope.

The Sundered Soul Chapter 1: What Remains

The throne room of Camelot stood empty in the pre-dawn darkness, save for the guards at their posts and one restless prince. Arthur Pendragon sat on the steps below the throne—never on it, not yet—and watched the first pale fingers of light creep through the high windows. The great seat loomed above him, carved stone that had borne the weight of kings for generations. Soon, perhaps sooner than anyone suspected, it would bear his.

He could still see his father's vacant stare from the evening before, the way Uther had looked through him as though he were a stranger. The physicians spoke in hushed tones about shock and grief, about time needed to heal. They didn't speak the truth that Arthur saw in their eyes: the king's mind had shattered like glass when Morgana's betrayal was revealed, and all the healers in Camelot couldn't piece it back together.

King Regent. The title sat uneasily on his shoulders, heavier than any armor he'd ever worn. In all but name, he ruled Camelot now. The thought should have filled him with pride—wasn't this what he'd been trained for his entire life? Instead, he felt only the crushing weight of every decision, every life that hung in the balance of his choices.

"You're brooding again."

Arthur didn't startle—he'd learned years ago to recognize the particular quality of silence that meant Merlin was approaching. His manservant had an uncanny ability to move through the castle like a shadow when he chose, though he was just as likely to crash into suits of armor when distracted.

"I'm thinking," Arthur corrected without turning. "Kings must think."

"King Regents," Merlin corrected gently, coming to stand beside him. "And I've seen you think. This is definitely brooding."

Arthur finally looked up at his servant, ready with a sharp retort, but the words died on his tongue. The morning light streaming through the windows had caught in Merlin's dark hair, turning it to burnished gold at the edges. His eyes—had they always been that particular shade of blue? Like the deep waters of the lake beyond the citadel, holding depths that seemed to go on forever.

Arthur's chest tightened inexplicably. He forced his gaze away, focusing on the middle distance.

"The council meets within the hour," he said, his voice rougher than intended. "Have you—"

"Prepared your papers, polished your ceremonial sword, and ensured the kitchen knows you'll need breakfast after because you never eat before important meetings? Yes, Sire." There was gentle mockery in the title, a warmth that transformed what should have been proper address into something almost like endearment.

Arthur found himself fighting a smile. "I don't know why I keep you around."

"Because no one else would put up with your royal pratness," Merlin replied promptly. "Also, I'm the only one who remembers that you prefer your wine watered at formal dinners so you can keep a clear head."

It was true, and the fact that Merlin had noticed—had been watching him closely enough to discern such preferences without being told—sent another uncomfortable flutter through Arthur's chest. He stood abruptly, needing distance.

"The council will want to discuss the raids on the border villages," he said, striding toward the doors. Merlin fell into step beside him, as natural as breathing. "Leon returned last night with disturbing reports."

"Magic?" Merlin's voice carried an odd note, something Arthur couldn't quite identify.

"When isn't it?" Arthur sighed. "Sometimes I think every hedge wizard and sorceress in the five kingdoms has decided to test Camelot's defenses now that—" He cut himself off.

"Now that the king is indisposed," Merlin finished quietly.

They walked in silence for a moment, their footsteps echoing through the empty corridors. The castle was beginning to wake around them—servants scurrying past with lowered eyes, guards changing shifts with muted clanks of armor.

"You're a good king, Arthur," Merlin said suddenly. "Regent or otherwise."

Arthur glanced at him, startled by the conviction in his voice. Merlin wasn't looking at him, his gaze fixed ahead, but there was something in his expression—a fierce pride that made Arthur's breath catch.

"Merlin—"

"The kingdom sees it. The knights see it. Your father—" Merlin paused, choosing his words carefully. "Your father prepared you for this, even if he didn't intend it to come so soon. You're ready."

They'd reached the council chambers. Arthur could hear voices within, the low rumble of conversation as Camelot's advisors gathered. He should go in, take his place, be the leader they needed. Instead, he found himself lingering, studying Merlin's profile in the torchlight.

There were shadows under his servant's eyes, a tension in the line of his shoulders that spoke of burdens carried. When had Merlin begun to look tired? When had the boyish enthusiasm that had so irritated Arthur in their early days together given way to this quiet strength?

"Sire?" Merlin prompted gently. "The council?"

Arthur squared his shoulders, becoming the prince—the king regent—Camelot needed. "Have my breakfast waiting when I'm done. And Merlin?"

"Yes?"

Arthur hesitated just a moment too long, stifling the open gratitude he wanted to express. Too many watching eyes and listening ears that would pounce on something so un-kingly as thanking a servant, and use it against him. Or worse, use it against Merlin.

"Don’t wander off," he said instead. "I’ll need you afterward to remind me which advisor is Lord Havelock and which one is Lord Harrow, because I still can’t tell those two wrinkled old buzzards apart."

Merlin blinked, then grinned. "Havelock’s the one with the beard that looks like a distressed squirrel."

Arthur gave a soft huff that might have been a laugh. "Distressed squirrel. Right. That’ll help."

He stepped toward the chamber doors, then paused again, voice quieter.

"And... don’t let the kitchen burn the toast. You always get it right."

Merlin’s brows lifted slightly, but he said only, “Wouldn’t dream of letting your royal highness suffer subpar toast.”

Arthur nodded, then pushed through the doors before he could do something foolish, like reach out to smooth the worry lines from Merlin's brow or ask him to attend the council meeting just so he could have that steady presence beside him.

The councilors rose as he entered, a sea of bowing heads and murmured "Your Highness"es. Sir Leon stood near the great map of the kingdom, his expression grave. Geoffrey of Monmouth clutched his ever-present scrolls, while Lord Cynric and the other nobles arranged themselves according to rank and precedence.

"Gentlemen," Arthur said, taking his place at the head of the table. Not his father's seat—he couldn't bring himself to claim that yet—but close enough. "Sir Leon, your report?"

Leon stepped forward, indicating several points on the map. "The attacks have increased in frequency and boldness, Sire. Three villages in the past fortnight, all along the northern border. The survivors speak of a sorcerer who commands the very trees to attack, who can call lightning from clear skies."

"Druids?" Lord Cynric suggested, his voice dripping with familiar disdain.

"No," Leon said firmly. "The Druids seek only peace. This is something else—someone else. The attacks seem random, but there's a pattern. Each village had recently sent men to serve in Camelot's army."

Arthur studied the map, his mind already working through possibilities. "He's trying to weaken our defenses, make us pull back our patrols to protect the villages."

"Or testing our responses," Geoffrey added quietly. "Seeing how quickly we can mobilize, how we deploy our forces."

"Then we give him nothing to study," Arthur decided. "Double the patrols but vary their routes. I want word sent to all border villages—any sign of magic, any strangers asking questions, and they're to send word immediately." He looked at Leon. "Take Gwaine and Percival, scout the area where the attacks occurred. Look for patterns we might have missed."

"Yes, Sire."

The meeting continued, flowing from border defenses to grain stores to the ever-present challenge of maintaining order with the king's... condition. Arthur found his attention wandering, his gaze drifting to the door where he knew Merlin waited.

It was foolish, this hyperawareness of his servant. Dangerous, even. But lately, Arthur couldn't seem to help himself. He noticed things—the way Merlin's hands moved when he was nervous, quick and fluttering like birds. The particular tilt of his head when he was listening intently. The way he bit his lower lip when concentrating on a task.

"Sire?"

Arthur jerked back to attention, finding the entire council staring at him expectantly. Heat crept up his neck.

"I apologize, Lord Cynric. You were saying?"

"I was inquiring about the arrangements for the Feast of Beltane, Sire. With His Majesty unable to preside..."

"The feast will continue as planned," Arthur said firmly. "The people need to see that Camelot remains strong, that their lives continue uninterrupted. We cannot afford to show weakness."

The meeting dragged on for another hour, each issue blending into the next until Arthur felt his patience fraying. When Geoffrey finally suggested they adjourn, Arthur barely managed a dignified exit before escaping into the corridor.

Merlin was there, of course, falling into step beside him without a word. They walked in comfortable silence back to Arthur's chambers, where a simple breakfast waited on the table by the window.

"How did it go?" Merlin asked, busying himself with pouring wine—watered, Arthur noted with a fond exasperation he didn't examine too closely.

"Lord Cynric is convinced that every ill that befalls Camelot is the result of magic," Arthur said, sinking into his chair. "Lord Bayard thinks we should increase taxes to fund more soldiers. And Geoffrey wants to consult prophecies and portents before making any decisions."

"So, the usual then." Merlin set a plate before him, the gesture so familiar, so domestic, that Arthur had to look away.

"The usual," he agreed, attacking his breakfast with more force than necessary.

Merlin moved about the room, tidying things that didn't need tidying, adjusting items that were already perfectly placed. It was a nervous habit, one that emerged when he had something on his mind.

"Out with it," Arthur said finally.

Merlin froze mid-reach for a candlestick. "What?"

"Whatever it is you're not saying. You're rearranging my chambers like you're preparing for a siege."

A flush crept up Merlin's neck. "It's nothing, Sire. I just... I worry. About the raids, about you taking on too much. You haven't been sleeping well."

Arthur set down his knife carefully. "And how would you know that, Merlin?"

The flush deepened. "I... that is, when I bring your breakfast, sometimes you're already awake. And there are circles under your eyes. And you've been..." He gestured vaguely.

"I've been what?"

"Distant," Merlin said quietly. "Like you're carrying the weight of the world and won't let anyone help bear it."

The words hit too close to home. Arthur stood abruptly, moving to the window to put space between them. Below, the courtyard was filling with people going about their daily lives, blissfully unaware of the threats gathering at their borders.

"That's what kings do," he said to the glass. "They carry the weight so others don't have to."

"You're not alone, Arthur." Merlin's voice was closer now, though Arthur didn't turn to look. "You have the knights, the council. You have—" A pause, heavy with things unsaid. "You have people who would stand beside you, if you'd let them."

Arthur's hands clenched on the window ledge. He could feel Merlin's presence behind him, warm and steady and impossible to ignore. If he turned now, what would he see in those impossibly blue eyes? What might he do?

"I should attend training," he said instead, his voice carefully neutral. "The knights will be waiting."

"Of course, Sire." Was that disappointment in Merlin's tone? "I'll prepare your armor."

They fell back into routine, the familiar dance of servant and master that had defined their relationship for years. But as Merlin helped him into his mail, his fingers brushing against Arthur's neck as he adjusted the collar, Arthur found himself holding his breath.

"There," Merlin said softly, stepping back. "Perfect."

Arthur met his eyes, saw something there that made his heart race. Then Merlin was turning away, busying himself with gathering laundry, and the moment passed.

The training ground was already crowded when Arthur arrived. His knights—his knights, the ones who'd chosen to follow him rather than simply obey the crown—were warming up. Gwaine was regaling Percival with what was undoubtedly an exaggerated tale of his latest tavern conquest. Elyan and Leon were discussing sword techniques while Lancelot stretched in preparation for the bout.

And there, sitting on a barrel at the edge of the field, was Gwen. She caught his eye and smiled, warm and knowing in a way that made Arthur want to fidget like a squire caught in mischief.

"About time you showed up, Princess," Gwaine called out. "We were starting to think you'd gotten lost in your own castle."

"The only thing lost around here is your sense of propriety," Arthur shot back, but there was no heat in it. These men had proven themselves time and again. They'd earned the right to informality.

"Propriety's overrated," Gwaine grinned. "Ask Merlin—he's been dealing with your royal pratness for years without any."

Arthur's jaw tightened. "Merlin is—"

"Standing right there," Lancelot interrupted quietly, nodding toward the colonnade.

Arthur turned, found Merlin lurking in the shadows of the arches, a basket of laundry forgotten in his hands as he watched the knights prepare. When he realized he'd been spotted, color flooded his cheeks.

"I was just—the laundry—I'll go," he stammered, backing away.

"Stay," Arthur heard himself say. Then, when everyone turned to stare at him, he cleared his throat. "That is, someone should be on hand in case of injuries. You know how Gwaine is with a sword."

"Oi!" Gwaine protested, but he was grinning.

Merlin hesitated, then set down his basket and moved to sit beside Gwen. They put their heads together immediately, whispering about something that made Gwen giggle and Merlin duck his head.

Arthur forced his attention back to his knights, drawing his sword. "Right then. Let's see if any of you have been practicing."

The training session was brutal, Arthur pushing himself and his men harder than usual. He needed the distraction, the simple clarity of combat where the only things that mattered were blade and balance and breathing. But even in the midst of a complex drill with Leon, he found his awareness drifting to the edge of the field.

Merlin had produced a small kit of medical supplies from somewhere and was tending to Elyan's scraped knuckles with gentle efficiency. The young knight was saying something that made Merlin laugh, the sound bright and clear across the yard, and Arthur's concentration shattered completely.

Leon's blade slipped past his guard, stopping just short of his ribs.

"Point," Leon said mildly, but his eyes were knowing.

Arthur reset his stance, irritated with himself. "Again."

They went three more rounds, Arthur winning two through sheer stubborn determination, before Gwaine called out a challenge.

"How about we make this interesting? Team sparring—me, Percival, and Elyan against you, Leon, and Lancelot."

"Hardly seems fair," Arthur said. "You'll need at least two more to make it a challenge."

Gwaine's grin was wicked. "Cocky bastard. You're on."

The melee that followed was chaos of the best kind. Six of Camelot's finest warriors moving in deadly synchronization, testing each other's limits. Arthur found his rhythm, Leon on his left and Lancelot on his right, the three of them moving as one unit against Gwaine's more chaotic approach.

Sparring brought order.

Strike, pivot, react. In those moments, the weight of Camelot slipped from his shoulders. No politics, no council, no shadows of his father’s judgment. Just motion, timing, and breath.

Arthur called the rotation. “Circle left!”

Lancelot flanked smoothly. Leon followed. Across the yard, Gwaine, Percival, and Elyan mirrored the shift. Gwaine, true to form, added an unnecessary flourish to his step, as if auditioning for a crowd.

From the bench near the edge of the yard, Arthur caught Gwen’s laughter. Merlin must have said something — probably at his expense. Arthur didn’t mind. Not when things felt, for once, almost normal.

A glint of movement caught his eye: Percival lifting the two-handed training axe, more suited to strength drills than finesse. Arthur made a mental note to question that later, but now—momentum.

He angled toward Gwaine, who was weaving wide in an attempt to bait Leon. Arthur recognized the tactic, cut inside, and drove toward him fast.

Gwaine blinked. “Oh, now you’re trying?”

Arthur ducked beneath Gwaine’s swing and stepped into his guard, catching his elbow and turning his weight. Gwaine tried to counter—too slow.

Arthur released his sword deliberately, letting it drop to the dirt, and used both hands to drive Gwaine backward with a controlled shoulder slam.

Gwaine grunted as he went down hard.

Arthur straightened, breathing fast, ready to retrieve his blade—

And that’s when it happened.

Gwaine’s boot, flailing for balance, caught a length of rusted training chain half-buried in the dirt.

His leg shot out from under him.

His elbow slammed into Percival’s side.

There was a startled shout—Percival’s grip twisted mid-swing—and the axe flew, end-over-end, loosed in a wild arc that glittered in the sun.

Arthur turned just in time to see it coming.

The weapon was spinning straight for his unprotected side. His sword was out of reach. He had no time to move.

He couldn’t stop it.

Then—

“Gestillan!”

The air hummed, and the axe froze mid-air, held for a suspended second before it dropped harmlessly to the dirt at Arthur’s feet.

Silence slammed down over the field.

Arthur stared at the axe. Then, slowly, he looked up.

His servant stood frozen at the edge of the field, one hand still half-raised, his face draining of color as he realized what he'd done. Their eyes met across the yard, and Arthur saw naked terror there.

Then Gwaine laughed, loud and boisterous. "Nice catch, Merlin!"

The tension didn’t break, but it seemed to loosen its stranglehold on them. Leon, his expression carefully neutral, reached to help Gwaine to his feet. Percival approached Arthur, planted his huge frame none-too-subtly between Arthur and Merlin, clapped him on the shoulder, and quietly apologized for losing his grip on the axe.

Arthur’s mind spun uselessly as he looked at his knights, perplexed. Everyone seemed determined to pretend nothing unusual had happened. They had all seen it, of that he was certain, and yet the only one who would meet his eyes now was Gwaine, who stood casually less than a sword-strike away. His easy grin never faltered, but his sharp eyes glared, threatening, and the message was clear. Just you try to hurt Merlin, I dare you.

And Arthur couldn't help but turn and stare at Merlin, who was now very deliberately organizing medical supplies with shaking hands, his pale skin almost bloodless from fear. Gwen put a comforting hand on Merlin’s shoulder and whispered something to him before casting a brief, apprehensive look in Arthur’s direction.

Magic. Merlin had magic.

The thought should have filled him with rage, with betrayal. Magic was evil, dangerous, the root of all Camelot's suffering. His father had taught him that from the cradle.

But all Arthur could think about was how many times he'd fallen—from horses, from walls, in battle—and walked away with barely a bruise. How many times had Merlin been there, quiet and unassuming, cushioning his landing?

"I think that's enough for today," he said, his voice sounding strange to his own ears.

The knights dispersed so reluctantly that he almost made it an order, but then Percival threw his arm around Gwaine’s shoulders and began to drag him off, saying something, with forced cheerfulness, about getting a drink at the Rising Sun. Elyan muttered about needing to fetch something from the armory, and Leon fell into step beside him as they walked away. Lancelot paused beside Arthur, his expression pensive.

"Sire—"

"Not now, Lancelot."

The knight inclined his head and withdrew. Arthur found himself alone in the yard with only Gwen and Merlin remaining. His servant was standing now, the medical kit clutched to his chest like a shield.

"Merlin," Arthur began.

"I should go," Merlin said quickly. "The laundry won't—I need to—"

"Merlin." Arthur put command into his voice, saw his servant flinch. "My chambers. Now."

Merlin's shoulders slumped in defeat. He nodded once, then turned and walked toward the castle like a man heading to his execution. Arthur watched him go, his mind churning.

"Arthur," Gwen said softly, suddenly at his elbow. "Whatever you're thinking—"

"Did you know?" The question came out harsher than intended.

Gwen lifted her chin. "I suspected. As did your knights, apparently. As did you, if you're honest with yourself."

"That's not—I never—"

"Arthur." Her voice was gentle but firm. "How many times has he saved your life? How many impossible escapes, how many lucky chances? You're not a fool. You've always known there was something different about him."

"Magic is—"

"What? Evil? Look at him, Arthur. Really look at him. Does anything about Merlin seem evil to you?"

Arthur's jaw worked. He thought of Merlin's ridiculous ears, his terrible jokes, the way he fussed over Arthur's meals and worried about him getting enough sleep. The way he'd stood against sorcerers and monsters and kingdoms for Arthur's sake, armed with nothing but loyalty and—apparently—secret magic.

"He lied to me," Arthur said finally.

"To protect you both," Gwen countered. "What would you have done, truly, if he'd told you that first week? That first year? Would you have listened, or would you have done your duty?"

Arthur didn't answer. They both knew the truth.

"Talk to him," Gwen urged. "Before you do something you'll regret."

She squeezed his arm and left, her skirts whispering across the stones. Arthur stood alone in the empty yard, staring at the spot where Merlin had saved him.

Again.

When he finally made his way to his chambers, he found Merlin standing by the window, his back rigid with tension. The abandoned laundry basket sat by the door, forgotten.

"How long?" Arthur asked without preamble.

Merlin's hands clenched at his sides. "Always."

"Always?" Arthur's voice rose. "You've had magic this entire time?"

"I was born with it." Merlin turned finally, and his eyes were bright with unshed tears. "I didn't choose it, Arthur. It chose me. I've tried to—I've only ever used it to protect you, to protect Camelot."

Arthur tried and failed to comprehend. "All those times—the magical attacks, the creatures, the sorcerers who mysteriously failed—"

"Yes."

The simple admission hit Arthur like a physical blow. He sank into a chair, suddenly exhausted.

"The dragon?"

"Me."

"The branch that fell on that bandit who had his sword to my throat?"

"Me." Merlin's voice was barely a whisper now. "Always me."

Arthur buried his face in his hands. His entire world was tilting, everything he thought he knew crumbling. Merlin—his Merlin—was a sorcerer. Had been lying to him every day for years.

"Why didn't you tell me?" The question came out broken.

"And say what?" Merlin's laugh was bitter. "Hello, I'm Merlin, your father made me your manservant because I saved your life using the same magic for which he would see me burn at the stake?"

Arthur’s breath hitched. “Even then?”

“Of course even then!” Merlin said, exasperation and hurt in his tone, even as his eyes finally overflowed. He angrily scrubbed the tears from his face with the cuff of his sleeve. “You think it was coincidence that a chandelier just happened to fall on that woman after she’d already put everyone to sleep? You think I’m naturally quick enough to race across the room and pull you out of the way of the dagger that would have killed you?”

Arthur opened his mouth, but no words emerged. Well, when he put it that way…

“I wanted to tell you so many times, Arthur,” Merlin said quietly, still wiping ineffectually at his face. “You have no idea how much I wanted to trust you with this.”

Arthur shook his head and looked down, struggling to parse all this information. "But you didn't," he said.

"How could I?" Merlin moved closer, his voice desperate. "Your father had children drowned for showing signs of magic. He burned men and women whose only crime was brewing healing potions. And you—you believed what he taught you. I watched you agree with him, watched you hunt down sorcerers—"

"They were trying to kill me," Arthur protested. He couldn’t defend all of his father’s actions, but they weren’t completely without reason.

"Not all of them." Merlin's voice was quiet, sad. "Some were just scared. Some were angry at what had been done to them. And yes, some were evil. But magic itself isn't evil, Arthur. It's just... it's just what I am."

Arthur looked up, found Merlin standing before him, tears now tracking unhindered down his cheeks. He looked young, vulnerable, nothing like the secret sorcerer who'd apparently been defending Camelot from the shadows.

"Were you ever going to tell me?"

"When you were king," Merlin said, his voice wet, strained with the sound of a hope yet to materialize. "When you could change the laws, when it was safe. I promised myself I'd tell you then."

"And if I'd had you executed?"

Merlin's smile was heartbreaking. "Then at least I'd have died as myself, not hiding anymore."

Arthur shot to his feet, unable to bear the resignation in that voice. "You idiot," he said. His chest felt tight, his heart pierced, and not with the sting of betrayal. "You complete idiot. Did you really think—after everything—"

He couldn't finish. Too many emotions warred within him—anger at the deception, grief for the trust broken, but underneath it all, a desperate relief that Merlin was still here, still breathing, still his.

"Arthur?" Merlin ventured uncertainly.

"I need time," Arthur said roughly. "To think. To... process this."

"Of course." Merlin moved toward the door, paused. "Arthur, I am sorry. For lying, for... for all of it. But I'm not sorry for protecting you. I'll never be sorry for that."

He left before Arthur could respond, the door closing with quiet finality.

Arthur stood in the center of his chambers, feeling more alone than he could remember. Everything was different now. Everything had changed.

Except...

Except Merlin was still Merlin. Still the man who brought him breakfast and nagged him about sleeping. Still the one who stood between Arthur and danger without hesitation. Still the person Arthur trusted above all others, the one whose opinion mattered most, the one whose smile could brighten Arthur's darkest days.

Magic hadn't changed that. If anything, it only proved what Arthur had always known deep down—that Merlin was extraordinary.

The thought was terrifying in its implications.

Night fell over Camelot, bringing with it a sense of expectation, like the air before a storm. Arthur stood on his balcony, watching torches flicker to life across the city. Somewhere out there, Merlin was probably in his chambers, wondering if tomorrow would bring execution or exile.

"Idiot," Arthur murmured to the night. As if he could ever—

A commotion in the courtyard below caught his attention. Guards were running, shouting orders. He could hear sounds of crashing armor and cries of pain.

Arthur grabbed his sword and ran, taking the stairs three at a time.

He burst into the courtyard to find chaos. Blue flames licked at the walls, impervious to the water the servants threw at them. A multitude of ravens circled overhead, croaking and cawing. At the center of it all stood a figure in dark robes, hood thrown back to reveal a gaunt face marked by desperation.

"Arthur Pendragon!" the sorcerer called out. "Face me, or watch your kingdom burn!"

Arthur stepped forward, sword raised. Around him, his knights were converging, drawn by the commotion. He saw Leon organizing the guards, Gwaine and Percival flanking him, Lancelot and Elyan moving to cut off escape routes.

And there, emerging from the shadows like he always did when Arthur was in danger, was Merlin.

Their eyes met across the courtyard. Arthur saw the question there, the readiness to act tempered by fear of exposure. He gave the tiniest shake of his head. Not yet. Let me try.

"I'm here," Arthur called out to the sorcerer. "What do you want?"

The man laughed, high and unstable. "What do I want? I want my sister back, but your father burned her. I want my home back, but your knights destroyed it. I want justice, but there is none to be had in Camelot!"

"My father is not—" Arthur began.