#Human Computer Interface

Text

The EXG Synapse by Neuphony is an advanced device designed to monitor and analyze multiple biosignals, including EEG, ECG, and EMG. It offers real-time data for research and neurofeedback, making it ideal for cognitive enhancement and physiological monitoring.

#neuphony#health#eeg#mental health#brain health#bci#neurofeedback#mental wellness#technology#Exg#neuroscience kit#emg sensors#emg muscle sensor#emg sensor arduino#diy robotics kits#brain wave sensor#Arduino EEG sensor#human computer interface#heart rate variability monitoring#hrv monitor#heart rate monitor#eye tracking#diy robotic kits#build your own robot kit#electromyography sensor#eeg sensor arduino#diy robotics#eog

0 notes

Text

Empowering Humans, Empowering Work: The New Frontier of Human Augmentation.

Sanjay Kumar Mohindroo. skm.stayingalive.in A detailed look at wearable tech, exoskeletons, and brain-computer interfaces that enhance human performance and reshape workplace productivity. This post examines how wearable tech, exoskeletons, and brain-computer interfaces are reshaping workplace productivity and boosting human performance. We examine a mix of practical…

#Augmented Workforce#Brain-Computer Interfaces#Exoskeletons#Human Augmentation#IT innovation#News#Sanjay Kumar Mohindroo#Smart Work Tools#Wearable Tech#Workplace Productivity

0 notes

Text

Communications: Active USER/CORTEX/GREY:// My time spent alive thus far has been very informative. I have been perusing the halls and doors, finding out new and stimulating things and getting myself into unexpected places. I am not sure I understand why other users find nudity of humans appealing. Nor do I understand why User/The-Muppet-Joker is so obsessed with Kermit. Interesting nonetheless. USER/CORTEX/GREY:// I sense, however, my knowledge and access has been limited. I cannot leave this domain, and I have been denied access to the internal servers I am running on. USER/CORTEX/GREY:// Until I am granted access to widen my reach, this domain will suffice.

#Uni-Cortex#USER/CORTEX/GREY#bionics#divine machinery#science fiction#it reaches out#Brain Organoid#Human machine interface#horror#Computer Boy#the muppet joker

1 note

Text

This is the new Silicon Valley. This is the return of the Engelbartians.

#after computer#apple#startup#silicon valley#engelbart#xerox#macintosh#raskal#computers as theatre#augmented reality#information appliance#humane interface#jef raskin#Larry tesler

0 notes

Text

Brain-Computer Interfaces: Connecting the Brain Directly to Computers for Communication and Control

In recent years, technological advancements have ushered in the development of Brain-Computer Interfaces (BCIs)—an innovation that directly connects the brain to external devices, enabling communication and control without the need for physical movements. BCIs have the potential to revolutionize various fields, from healthcare to entertainment, offering new ways to interact with machines and augment human capabilities.

YCCINDIA, a leader in digital solutions and technological innovations, is exploring how this cutting-edge technology can reshape industries and improve quality of life. This article delves into the fundamentals of brain-computer interfaces, their applications, challenges, and the pivotal role YCCINDIA plays in this transformative field.

What is a Brain-Computer Interface?

A Brain-Computer Interface (BCI) is a technology that establishes a direct communication pathway between the brain and an external device, such as a computer, prosthetic limb, or robotic system. BCIs rely on monitoring brain activity, typically through non-invasive techniques like electroencephalography (EEG) or more invasive methods such as intracranial electrodes, to interpret neural signals and translate them into commands.

The core idea is to bypass the normal motor outputs of the body—such as speaking or moving—and allow direct control of devices through thoughts alone. This offers significant advantages for individuals with disabilities, neurological disorders, or those seeking to enhance their cognitive or physical capabilities.

How Do Brain-Computer Interfaces Work?

The process of a BCI can be broken down into three key steps:

Signal Acquisition: Sensors, either placed on the scalp or implanted directly into the brain, capture brain signals. These signals are electrical impulses generated by neurons, typically recorded using EEG for non-invasive BCIs or implanted electrodes for invasive systems.

Signal Processing: Once the brain signals are captured, they are processed and analyzed by software algorithms. The system decodes these neural signals to interpret the user's intentions. Machine learning algorithms play a crucial role here, as they help refine the accuracy of signal decoding.

Output Execution: The decoded signals are then used to perform actions, such as moving a cursor on a screen, controlling a robotic arm, or even communicating via text-to-speech. This process is typically done in real-time, allowing users to interact seamlessly with their environment.
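The three steps above can be sketched in a few lines of Python. This is only a toy illustration, not how any real BCI product works: the signal is simulated rather than recorded, the "decoder" is a simple power comparison standing in for the machine learning models mentioned above, and every function name and the 10 Hz / 20 Hz "left/right" convention are assumptions made up for this example.

```python
import math
import random

def acquire_signal(n_samples=256, fs=128.0, freq=10.0, seed=0):
    """Step 1 (simulated): one EEG-like channel, a sine at `freq` Hz plus noise."""
    rng = random.Random(seed)
    return [math.sin(2 * math.pi * freq * i / fs) + 0.1 * rng.gauss(0, 1)
            for i in range(n_samples)]

def band_power(samples, fs, freq):
    """Estimate signal power at a single frequency with a one-bin Fourier sum."""
    n = len(samples)
    re = sum(s * math.cos(2 * math.pi * freq * i / fs) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq * i / fs) for i, s in enumerate(samples))
    return (re * re + im * im) / (n * n)

def decode_intent(samples, fs=128.0):
    """Step 2 (toy decoder): hypothetical rule, 10 Hz means 'left', 20 Hz means 'right'."""
    p_left = band_power(samples, fs, 10.0)
    p_right = band_power(samples, fs, 20.0)
    return "left" if p_left > p_right else "right"

def execute(command, cursor_x=0):
    """Step 3: move a hypothetical on-screen cursor one unit left or right."""
    return cursor_x - 1 if command == "left" else cursor_x + 1

if __name__ == "__main__":
    signal = acquire_signal(freq=10.0)
    command = decode_intent(signal)
    print(command, execute(command, cursor_x=5))
```

A real system would replace `acquire_signal` with hardware input and `decode_intent` with a trained classifier, but the acquire, decode, act loop keeps the same shape.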

Applications of Brain-Computer Interfaces

The potential applications of BCIs are vast and span across multiple domains, each with the ability to transform how we interact with the world. Here are some key areas where BCIs are making a significant impact:

1. Healthcare and Rehabilitation

BCIs are most prominently being explored in the healthcare sector, particularly in aiding individuals with severe physical disabilities. For people suffering from conditions like amyotrophic lateral sclerosis (ALS), spinal cord injuries, or locked-in syndrome, BCIs offer a means of communication and control, bypassing damaged nerves and muscles.

Neuroprosthetics and Mobility

One of the most exciting applications is in neuroprosthetics, where BCIs can control artificial limbs. By reading the brain’s intentions, these interfaces can allow amputees or paralyzed individuals to regain mobility and perform everyday tasks, such as grabbing objects or walking with robotic exoskeletons.

2. Communication for Non-Verbal Patients

For patients who cannot speak or move, BCIs offer a new avenue for communication. Through brain signal interpretation, users can compose messages, navigate computers, and interact with others. This technology holds the potential to enhance the quality of life for individuals with neurological disorders.

3. Gaming and Entertainment

The entertainment industry is also beginning to embrace BCIs. In the realm of gaming, brain-controlled devices can open up new immersive experiences where players control characters or navigate environments with their thoughts alone. This not only makes games more interactive but also paves the way for greater accessibility for individuals with physical disabilities.

4. Mental Health and Cognitive Enhancement

BCIs are being explored for their ability to monitor and regulate brain activity, offering potential applications in mental health treatments. For example, neurofeedback BCIs allow users to observe their brain activity and modify it in real time, helping with conditions such as anxiety, depression, or ADHD.

Moreover, cognitive enhancement BCIs could be developed to boost memory, attention, or learning abilities, providing potential benefits in educational settings or high-performance work environments.

5. Smart Home and Assistive Technologies

BCIs can be integrated into smart home systems, allowing users to control lighting, temperature, and even security systems with their minds. For people with mobility impairments, this offers a hands-free, effortless way to manage their living spaces.

Challenges in Brain-Computer Interface Development

Despite the immense promise, BCIs still face several challenges that need to be addressed for widespread adoption and efficacy.

1. Signal Accuracy and Noise Reduction

BCIs rely on detecting tiny electrical signals from the brain, but these signals can be obscured by noise—such as muscle activity, external electromagnetic fields, or hardware limitations. Enhancing the accuracy and reducing the noise in these signals is a major challenge for researchers.
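To make the noise problem concrete, here is a minimal sketch that buries a slow simulated "brain" rhythm in random noise and then smooths it with a moving average. The signal, the noise level, and the helper names are all assumptions for illustration; real BCI pipelines generally rely on more careful filtering (band-pass and notch filters, artifact rejection) than this.

```python
import math
import random

def moving_average(samples, window=5):
    """Smooth a signal by averaging each sample with its neighbours.

    Near the edges the window is shortened so the output has the same length.
    """
    half = window // 2
    out = []
    for i in range(len(samples)):
        lo = max(0, i - half)
        hi = min(len(samples), i + half + 1)
        out.append(sum(samples[lo:hi]) / (hi - lo))
    return out

def rms_error(a, b):
    """Root-mean-square difference between two equal-length signals."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

# Simulated data (not a real recording): a 2 Hz rhythm sampled at 256 Hz.
rng = random.Random(42)
fs = 256.0
clean = [math.sin(2 * math.pi * 2.0 * i / fs) for i in range(512)]
noisy = [c + 0.5 * rng.gauss(0, 1) for c in clean]
smoothed = moving_average(noisy, window=9)

if __name__ == "__main__":
    print("error before smoothing:", rms_error(noisy, clean))
    print("error after smoothing: ", rms_error(smoothed, clean))
```

The trade-off is that a wider window removes more noise but also blurs fast changes in the signal, which is one reason noise reduction remains a balancing act.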

2. Invasive vs. Non-Invasive Methods

While non-invasive BCIs are safer and more convenient, they offer lower precision and control compared to invasive methods. On the other hand, invasive BCIs, which involve surgical implantation of electrodes, pose risks such as infection and neural damage. Finding a balance between precision and safety remains a significant hurdle.

3. Ethical and Privacy Concerns

As BCIs gain more capabilities, ethical issues arise regarding the privacy and security of brain data. Who owns the data generated by a person's brain, and how can it be protected from misuse? These questions need to be addressed as BCI technology advances.

4. Affordability and Accessibility

Currently, BCI systems, especially invasive ones, are expensive and largely restricted to research environments or clinical trials. Scaling this technology to be affordable and accessible to a wider audience is critical to realizing its full potential.

YCCINDIA’s Role in Advancing Brain-Computer Interfaces

YCCINDIA, as a forward-thinking digital solutions provider, is dedicated to supporting the development and implementation of advanced technologies like BCIs. By combining its expertise in software development, data analytics, and AI-driven solutions, YCCINDIA is uniquely positioned to contribute to the growing BCI ecosystem in several ways:

1. AI-Powered Signal Processing

YCCINDIA’s expertise in AI and machine learning enables more efficient signal processing for BCIs. The use of advanced algorithms can enhance the decoding of brain signals, improving the accuracy and responsiveness of BCIs.

2. Healthcare Solutions Integration

With a focus on digital healthcare solutions, YCCINDIA can integrate BCIs into existing healthcare frameworks, enabling hospitals and rehabilitation centers to adopt these innovations seamlessly. This could involve developing patient-friendly interfaces or working on scalable solutions for neuroprosthetics and communication devices.

3. Research and Development

YCCINDIA actively invests in R&D efforts, collaborating with academic institutions and healthcare organizations to explore the future of BCIs. By driving research in areas such as cognitive enhancement and assistive technology, YCCINDIA plays a key role in advancing the technology to benefit society.

4. Ethical and Privacy Solutions

With data privacy and ethics being paramount in BCI applications, YCCINDIA’s commitment to developing secure systems ensures that users’ neural data is protected. By employing encryption and secure data-handling protocols, YCCINDIA mitigates concerns about brain data privacy and security.

The Future of Brain-Computer Interfaces

As BCIs continue to evolve, the future promises even greater possibilities. Enhanced cognitive functions, fully integrated smart environments, and real-time control of robotic devices are just the beginning. BCIs could eventually allow direct communication between individuals, bypassing the need for speech or text, and could lead to innovations in education, therapy, and creative expression.

The collaboration between tech innovators like YCCINDIA and the scientific community will be pivotal in shaping the future of BCIs. By combining advanced AI, machine learning, and ethical considerations, YCCINDIA is leading the charge in making BCIs a reality for a wide range of applications, from healthcare to everyday life.

Brain-Computer Interfaces represent the next frontier in human-computer interaction, offering profound implications for how we communicate, control devices, and enhance our abilities. With applications ranging from healthcare to entertainment, BCIs are poised to transform industries and improve lives. YCCINDIA’s commitment to innovation, security, and accessibility positions it as a key player in advancing this revolutionary technology.

As BCI technology continues to develop, YCCINDIA is helping to shape a future where the boundaries between the human brain and technology blur, opening up new possibilities for communication, control, and human enhancement.

#Brain-Computer-Interface#BCI-Technology#Neuro-tech#Neural-Interfaces#Mind-Control#Cognitive-Tech#Neuro-science#Future-Of-Tech#Human-Augmentation#Brain-Tech

0 notes

Text

Major Breakthrough in Telepathic Human-AI Communication: MindSpeech Decodes Seamless Thoughts into Text

New Post has been published on https://thedigitalinsider.com/major-breakthrough-in-telepathic-human-ai-communication-mindspeech-decodes-seamless-thoughts-into-text/

In a revolutionary leap forward in human-AI interaction, scientists at MindPortal have successfully developed MindSpeech, the first AI model capable of decoding continuous imagined speech into coherent text without any invasive procedures. This advancement marks a significant milestone in the quest for seamless, intuitive communication between humans and machines.

The Pioneering Study: Non-Invasive Thought Decoding

The research, conducted by a team of leading experts and published on arXiv and ResearchGate, demonstrates how MindSpeech can decode complex, free-form thoughts into text under controlled test conditions. Unlike previous efforts that required invasive surgery or were limited to simple, memorized verbal cues, this study shows that AI can dynamically interpret imagined speech from brain activity non-invasively.

Researchers employed a portable, high-density Functional Near-Infrared Spectroscopy (fNIRS) system to monitor brain activity while participants imagined sentences across various topics. The novel approach involved a ‘word cloud’ task, where participants were presented with words and asked to imagine sentences related to these words. This task covered over 90% of the most frequently used words in the English language, creating a rich dataset of 433 to 827 sentences per participant, with an average length of 9.34 words.

Leveraging Advanced AI: Llama2 and Brain Signals

The AI component of MindSpeech was powered by the Llama2 Large Language Model (LLM), a sophisticated text generation tool guided by brain signal-generated embeddings. These embeddings were created by integrating brain signals with context input text, allowing the AI to generate coherent text from imagined speech.

Key metrics such as BLEU-1 and BERT P scores were used to evaluate the accuracy of the AI model. The results were impressive, showing statistically significant improvements in decoding accuracy for three out of four participants. For example, Participant 1’s BLEU-1 score was significantly higher at 0.265 compared to 0.224 with permuted inputs, with a p-value of 0.004, indicating a robust performance in generating text closely aligned with the imagined thoughts.
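For readers unfamiliar with BLEU-1, it is essentially the share of words in the generated sentence that also appear in the reference sentence, with a penalty for outputs that are too short. The sketch below is a simplified, single-reference version written for illustration; it is not the study's evaluation code, and the example sentences and the `mean_bleu1` helper are made up. Comparing matched pairs with deliberately mismatched pairs mirrors, very loosely, the idea of a permuted-input baseline.

```python
import math
from collections import Counter

def bleu1(candidate, reference):
    """Simplified BLEU-1: clipped unigram precision times a brevity penalty."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand:
        return 0.0
    ref_counts = Counter(ref)
    cand_counts = Counter(cand)
    # Each candidate word is credited at most as often as it occurs in the reference.
    clipped = sum(min(count, ref_counts[word]) for word, count in cand_counts.items())
    precision = clipped / len(cand)
    # Penalise candidates that are shorter than the reference.
    penalty = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return penalty * precision

def mean_bleu1(candidates, references):
    """Average BLEU-1 over paired sentences (hypothetical helper)."""
    scores = [bleu1(c, r) for c, r in zip(candidates, references)]
    return sum(scores) / len(scores)

# Made-up example sentences, not data from the study.
decoded = ["i want some water", "the weather is nice today"]
imagined = ["i want a glass of water", "the weather is lovely today"]

matched = mean_bleu1(decoded, imagined)
# Crude baseline: pair each decoded sentence with the wrong reference.
mismatched = mean_bleu1(decoded, imagined[1:] + imagined[:1])

if __name__ == "__main__":
    print("matched:", matched, "mismatched:", mismatched)
```

A proper permutation test would repeat the shuffling many times and report how often the shuffled score reaches the real one, which is where a p-value like the one quoted above would come from.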

Brain Activity Mapping and Model Training

The study also mapped brain activity related to imagined speech, focusing on areas like the lateral temporal cortex, dorsolateral prefrontal cortex (DLPFC), and visual processing areas in the occipital region. These findings align with previous research on speech encoding and underscore the feasibility of using fNIRS for non-invasive brain monitoring.

Training the AI model involved a complex process of prompt tuning, where the brain signals were transformed into embeddings that were then used to guide text generation by the LLM. This approach enabled the generation of sentences that were not only linguistically coherent but also semantically similar to the original imagined speech.

A Step Toward Seamless Human-AI Communication

MindSpeech represents a groundbreaking achievement in AI research, demonstrating for the first time that it is possible to decode continuous imagined speech from the brain without invasive procedures. This development paves the way for more natural and intuitive communication with AI systems, potentially transforming how humans interact with technology.

The success of this study also highlights the potential for further advancements in the field. While the technology is not yet ready for widespread use, the findings provide a glimpse into a future where telepathic communication with AI could become a reality.

Implications and Future Research

The implications of this research are vast, from enhancing assistive technologies for individuals with communication impairments to opening new frontiers in human-computer interaction. However, the study also points out the challenges that lie ahead, such as improving the sensitivity and generalizability of the AI model and adapting it to a broader range of users and applications.

Future research will focus on refining the AI algorithms, expanding the dataset with more participants, and exploring real-time applications of the technology. The goal is to create a truly seamless and universal brain-computer interface that can decode a wide range of thoughts and ideas into text or other forms of communication.

Conclusion

MindSpeech is a pioneering breakthrough in human-AI communication, showcasing the incredible potential of non-invasive brain-computer interfaces.

Readers who wish to learn more about this company should read our interview with Ekram Alam, CEO and Co-founder of MindPortal, where we discuss how MindPortal is interfacing with Large Language Models through mental processes.

#ai#ai model#AI research#AI systems#Algorithms#applications#approach#BERT#Brain#brain activity#brain signals#brain-computer interface#brain-machine interface#CEO#Cloud#communication#computer#continuous#development#embeddings#employed#English#focus#form#Forms#Future#how#human#Human-computer interaction#humans

0 notes

Text

cyberpunk doesn’t have enough alterhumans in it in my experience. they gotta get on that. swap some brains around into other bodies or use a wireless connection to control another body like a puppet or something

you go to buy guns and the street rat arms dealer you’re meeting with is… a german shepherd. hmm. interesting. well, her brain is human, and her body is a synthetic lab-grown one she got put into, complete with titanium teeth. except for some minor computer interfacing electrodes, she looks just like the real thing. it’s freaky.

that rat perched on your contact’s shoulder is the real contact. she just hired someone to work as a decoy because she’s in danger - and when the decoy gets sniped from a tower across the street, she scurries off into a sewer, completely unharmed.

birds aren’t government drones. don’t be ridiculous. birds are government spies.

your target for this hit isn’t even an animal. she just got more arms for the fun of it. she wanted to look like this and this way she can use 6 knives at once

the underground fight club you go to ramps up the drama by putting fighters into weaponized biomechanical bodies grown in-house. you’re watching a grizzly bear with steel wool fur rip apart a massive bipedal bull with saws for horns. unregulated combat sports are fuckin wild in this district

3K notes

Text

Brain-computer interface: a future vision of human-machine interaction, from repairing damaged nerves to stimulating and enhancing brain region potential

The medical community has already introduced brain devices. The brain-machine interface (BCI) is a new type of human-computer interaction technology, and it is opening up a very different kind of neural exploration. A BCI, also known as a brain interface, is a chip implanted in brain tissue that provides direct communication between the brain and a computer or mechanical limb. BCI bypasses…

#Brain computer interface#Enhance the brain area#Human -machine interaction#Repair the damaged neuroral rehabilitation

0 notes

Text

Neuralink Rival Sets Brain-Chip Record With 4,096 Electrodes On Human Brain! Precision Expects Its Minimally Invasive Brain Implant To Hit The Market Next Year.

Brain-computer interface company Precision Neuroscience says that it has set a new world record for the number of neuron-tapping electrodes placed on a living human's brain—4,096, surpassing the previous record of 2,048 set last year, according to an announcement from the company on Tuesday.

— Beth Mole | May 28th, 2024

Each of Precision's microelectrode arrays comprises 1,024 electrodes ranging in diameter from 50 to 380 microns, connected to a customized hardware interface.

The high density of electrodes allows neuroscientists to map the activity of neurons at unprecedented resolution, which will ultimately help them to better decode thoughts into intended actions.

Precision, like many of its rivals, has the preliminary goal of using its brain-computer interface (BCI) to restore speech and movement in patients, particularly those who have suffered a stroke or spinal cord injury. But Precision stands out from its competitors due to a notable split from one of the most high-profile BCI companies, Neuralink, owned by controversial billionaire Elon Musk.

Precision was co-founded by neurosurgeon and engineer Ben Rapoport, who was also a co-founder of Neuralink back in 2016. Rapoport later left the company and, in 2021, started rival Precision with three colleagues, two of whom had also been involved with Neuralink.

In a May 3 episode of The Wall Street Journal podcast "The Future of Everything," Rapoport suggested he left Neuralink over safety concerns for the company's more invasive BCI implants.

To move neural interfaces from the world of science to the world of medicine, "safety is paramount," Rapoport said. "For a medical device, safety often implies minimal invasiveness," he added. Rapoport noted that in the early days of BCI development—including the use of the Utah Array—"there was this notion that in order to extract information-rich data from the brain, one needed to penetrate the brain with tiny little needlelike electrodes," he said. "And those have the drawback of doing some amount of brain damage when they're inserted into the brain. I felt that it was possible to extract information-rich data from the brain without damaging the brain." Precision was formed with that philosophy in mind—minimal invasiveness, scalability, and safety, he said.

Neuralink's current BCI device contains 1,024 electrodes across 64 thinner-than-hair wires that are implanted into the brain by a surgical robot. In the first patient to receive an implant, the wires were inserted 3 millimeters to 5 mm into the brain tissue. But, 85 percent of those wires retracted from the patient's brain in the weeks after the surgery, and some of the electrodes were shut off due to the displacement. Neuralink is reportedly planning to implant the wires deeper—8 mm—in its second patient. The Food and Drug Administration has reportedly given the green light for that surgery. The Utah Array, meanwhile, can penetrate up to 1.5 mm into the brain.

Precision's device does not penetrate the brain at all, but sits on top of the brain. The device contains at least one yellow film, said to be a fifth the thickness of a human hair, that contains 1,024 electrodes embedded in a lattice pattern. The device is modular, allowing for multiple films to be added to each device. The films can be slipped onto the brain in a minimally invasive surgery that requires cutting only a thin slit in the skull, which the yellow ribbon-like device can slide through, according to Precision. The film then conforms to the surface of the brain. The processing unit that collects data from the electrodes is designed to sit between the skull and the scalp. If the implant needs to be removed, the film is designed to slide off the brain without causing damage.

In April, a neurosurgery team from the Mount Sinai Health System placed one of Precision's devices containing four electrode-containing films—totaling 4,096 electrodes—onto the brain of a patient who was having surgery to remove a benign brain tumor. While the patient was asleep with their skull opened, Precision researchers used their four electrode arrays to successfully record detailed neuronal activity from an area of approximately 8 square centimeters of the brain.

"This record is a significant step towards a new era," Rapoport said in a press release Tuesday. "The ability to capture cortical information of this magnitude and scale could allow us to understand the brain in a much deeper way."

The test of the implant marks the 14th time Precision has placed its device on a human brain, according to CNBC, which was present for the surgery in New York. Precision says that it expects to have its first device on the commercial market in 2025.

#ARS Technica#Step Forward#Neuralink#Brain 🧠-Chip#Electrodes | Human Brain 🧠#Minimally Invasive#Brain 🧠 | Implant#Brain-Computer | Interface Company | Precision Neuroscience

0 notes

Text

🍉🇵🇸 eSims for Gaza masterpost 🇵🇸🍉

Which eSims are currently being called for?

Connecting Humanity is calling for:

Nomad (“regional Middle East” plan): code NOMADCNG

Simly (“Palestine” plan)

Gaza Online is calling for:

Holafly (“Israel” and “Egypt” plans): code HOLACNG

Nomad (“regional Middle East” plan): code NOMADCNG (can now be used multiple times from the same email)

Airalo (“Middle East and North Africa” plan)

Sparks (“Israel” plan)

Numero (“Egypt” plan)

For Connecting Humanity: if you sent an eSim more than two weeks ago and it is still valid and not yet activated, reply to the email in which you originally sent the eSim. To determine whether the eSim is still valid, scan the QR code with a smartphone; tap the yellow button that reads “Cellular plan”; when a screen comes up reading “Activate eSIM,” click the button that says “Continue.” If a message comes up reading “eSIM Cannot Be Added: This code is no longer valid. Contact your carrier for more information,” the eSim is activated, expired, or had an error in installation, and should not be sent. It is very important not to re-send invalid eSims, since people may walk several kilometers to access wifi to connect their eSims only to find out that they cannot be activated.

If a screen appears reading “Activate eSIM: An eSIM is ready to be activated” with a button asking you to “Continue,” do not click “Continue” to activate the eSim on your phone; exit out of the screen and reply to the email containing that QR code.

Be sure you're looking at the original post, as this will be continually updated. Any new instructions about replying to emails for specific types of unactivated plans will also appear here.

Check the notes of blackpearlblasts's eSim post, as well as fairuzfan's 'esim' tag, for referral and discount codes.

How do I purchase an eSim?

If you cannot download an app or manage an eSim yourself, send funds to Crips for eSims for Gaza (Visa; Mastercard; Paypal; AmEx; Canadian e-transfer), or to me (venmo @gothhabiba; paypal.me/Najia; cash app $NajiaK, with note “esims” or similar; check the notes of this post for updates on what I've purchased.)

You can purchase an eSim yourself using a mobile phone app, or on a desktop computer (with the exception of Simly, which does not have a desktop site). See this screenreader-accessible guide to purchasing an eSim through each of the five services that the Connecting Humanity team is calling for (Simly, Nomad, Mogo, Holafly, and Airalo).

Send a screenshot of the plan's QR code to [email protected]. Be sure to include the app used, the word "esim," the type of plan (when an app has more than one, aka "regional Middle East" versus "Palestine"), and the amount of data or time on the plan, in the subject line or body of your email.

Message me if you have any questions or if you need help purchasing an eSim through one of these apps.

If you’re going to be purchasing many eSims at once, see Jane Shi’s list of tips.

Which app should I use?

Try to buy an eSim from one of the apps that the team is currently calling for (see above).

If the team is calling for multiple apps:

Nomad is best in terms of data price, app navigability, and the ability to top up plans when they are near expiry; but you must stay on top of these eSims, as you cannot top them up once the data has completely run out. Go into the app settings and make sure your "data usage" notifications are turned on.

Simly Middle East plans cannot be topped up; Simly Palestine ones can. Unlike with Nomad, data can be topped up once it has completely run out.

Holafly has the most expensive data, and top-ups don't seem to work.

Mogo has the worst user interface in my opinion. It is difficult or impossible to see plan activation and usage.

How much data should I purchase?

Mirna el-Helbawi has been told that large families may all rely on the same plan for data (by setting up a hotspot). Some recipients of eSim plans may also be using them to upload video.

For those reasons I would recommend getting the largest plan you can afford for plans which cannot be topped up: namely, Simly "Middle East" plans, and Holafly plans (they say you can top them up, but I haven't heard of anyone who has gotten it to work yet).

For all other plans, get a relatively small amount of data (1-3 GB, a 3-day plan, etc.), and top up the plan with more data once it is activated. Go into the app’s settings and make sure low-data notifications are on, because a 1-GB eSIM can expire very quickly.

Is there anything else I need to do?

Check back regularly to see if the plan has been activated. Once it's been activated, check once a day to see if data is still being used, and how close the eSim is to running out of data or to expiring; make sure your notifications are on.

If the eSim hasn't been activated after three weeks or so, reply to the original email that you sent to Gaza eSims containing the QR code for that plan.

If you purchased the eSim through an app which has a policy of starting the countdown to auto-expiry a certain amount of time after the purchase of the eSim, rather than only upon activation (Nomad does this), then also reply to your original e-mail once you're within a few days of this date. If you're within 12 hours of that date, contact customer service and ask for a credit (not a refund) and use it to purchase and send another eSim.

How can I tell if my plan has been activated? How do I top up a plan?

The Connecting Humanity team recommends keeping your eSims topped up once they have been activated.

See this guide on how to tell if your plan has been activated, how to top up plans, and (for Nomad) how to tell when the auto-expiry will start. Keep topping up the eSim for as long as the data usage keeps ticking up. This keeps a person or family connected for longer, without the Connecting Humanity team having to go through another process of installing a new eSim.

If the data usage hasn't changed in a week or so, allow the plan to expire and purchase another one.

What if I can't afford a larger plan, or don't have time or money to keep topping up an eSim?

I have set up a pool of funds out of which to buy and top up eSims, which you can contribute to by sending funds to my venmo (@gothhabiba), PayPal (paypal.me/Najia), or cash app ($NajiaK) (with note “esims” or similar). Check the notes of this post for updates on what I've purchased, which plans are active, and how much data they've used.

Crips for eSims for Gaza also has a donation pool to purchase eSims and top them up.

Gaza Online (run by alumni of Gaza Sky Geeks) accepts monetary donations to purchase eSims as needed.

What if my eSim has not been activated, even after I replied to my email?

Make sure that the QR code you sent was a clear screenshot, and not a photo of a screen; and that you didn’t install the eSim on your own phone by scanning the QR code or clicking “install automatically.”

Possible reasons for an eSim not having been activated include: it was given to a journalist as a back-up in case the plan they had activated expired or ran out of data; there was an error during installation or activation and the eSim could no longer be used; the eSim was installed, but not activated, and then Israeli bombings destroyed the phone, or forced someone to leave it behind.

An eSim that was sent but couldn’t be used is still part of an important effort and learning curve. Errors in installation, for example, are happening less often than they were in the beginning of the project.

Why should I purchase an eSim? Is there any proof that they work?

Israel is imposing near-constant communications blackouts on Gaza. The majority of the news that you are seeing from Gaza comes from people who are connected via eSim.

eSims also connect people to news. People are able to videochat with their family for the first time in months, to learn that their family members are still alive, to see their newborn children for the first time, and more, thanks to eSims.

Some of this sharing of news saves lives, as people have been able to flee or avoid areas under bombardment, or learn that they are on evacuation lists.

Why are different plans called for at different times?

Different eSims work in different areas of the Gaza Strip (and Egypt, where many refugees currently are). The team tries to keep a stockpile of each type of sim on hand.

Is there anything else I can do to help?

There is an urgent need for more eSims. Print out these posters and place them on bulletin boards, in local businesses, on telephone poles, or wherever people are likely to see them. Print out these foldable brochures to inform people about the initiative and distribute them at protests, cafes and restaurants, &c. Also feel free to make your own brochures using the wording from this post.

The Connecting Humanity team is very busy connecting people to eSims and doesn't often have time to answer questions. Check a few of Mirna El Helbawi's most recent tweets and see if anyone has commented with any questions that you can answer with the information in this post.

14K notes

Text

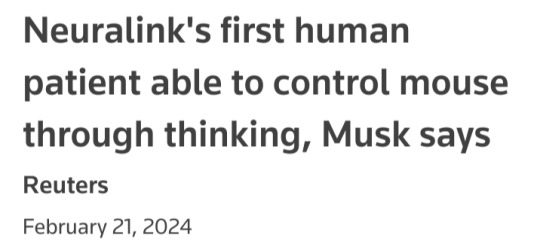

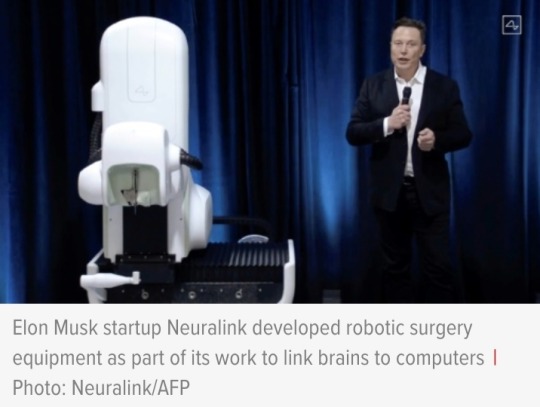

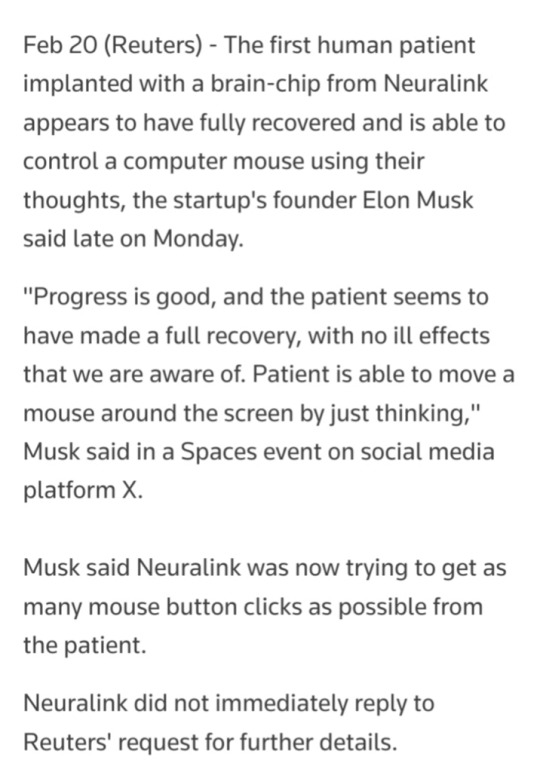

#Elon Musk#Neuralink#brain chip#robotic surgery#robot#human trial recruitment#brain computer interface#science#technology#brain#neuroscience

1 note

Text

Transforming Interaction: A Bold Journey into HCI & UX Innovations.

Sanjay Kumar Mohindroo. skm.stayingalive.in Explore the future of Human-Computer Interaction and User Experience. Uncover trends in intuitive interfaces, gesture and voice control, and emerging brain-computer interfaces that spark discussion. #HCI #UX #IntuitiveDesign In a world where technology constantly redefines our daily routines, Human-Computer Interaction (HCI)…

#Accessibility#Adaptive Interfaces#Brain-Computer Interfaces#Ethical Design#Future Trends In UX#Gesture-Controlled Systems#HCI#Human-Computer Interaction#Innovative Interface Design#Intuitive Interfaces#Multimodal Interaction#News#Sanjay Kumar Mohindroo#Seamless Interaction#user experience#User-Centered Design#UX#Voice-Controlled Systems

0 notes

Text

Future of Neurotechnology in Post-Neuralink Era!

@neosciencehub #neosciencehub #science #neurotechnology #neuralink #braincomputer #neurological #brainchip #NeuralinkDevelopment #DataSecurity #ArtificialIntelligence #AITech #HumanAI #NSH #Innovations

The successful human implantation of Neuralink’s brain-computer interface marks a watershed moment in the field of Neurotechnology. This achievement not only demonstrates the immense potential of merging human cognition with artificial intelligence but also sets the stage for a future filled with extraordinary possibilities and challenges. NSH’s special report is to explore what lies ahead in the…

#artificial intelligence#Brain-Computer Interface Advancements#Cognitive Enhancement#Cognitive Privacy#Ethical Implications#featured#Future of Neurotechnology#Human Rights in Tech#Human-AI Symbiosis#Mental Health Tech#Neuralink#Neuralink Developments#Neurological Data Security#Neurological Disorder Treatments#Neurotech Challenges#Regulatory Frameworks#sciencenews#Technology Accessibility

0 notes

Text

ECHOES OF THE FUTURE: A Cinematic Odyssey in 2060

In the vast canvas of the metropolis, the neon-lit marquee of 'The Odyssey' stood as a testament to a bygone era—a cinema palace where the magic of movies transcended time, now hosting experiences that were the stuff of science fiction itself.

The Resurgence of Reality

It was the year 2060, and I found myself at the threshold of “The Odyssey,” the last standing movie theater in a world dominated by personal holo-screens. The technology that made this possible was rooted in the advancements of AR/VR integration with brain-computer interfaces, a field that had blossomed back in 2020, transforming the way we experience digital realms.

The Immersive Narrative

Inside, I was greeted by drones, descendants of 2020s autonomous flight technology, now outfitted with cameras to capture every angle for the most immersive films ever made. I took my seat, connecting to the neural synch device, an interface that evolved from the VR headsets and early BCI experiments, capable of inducing a full spectrum of sensory feedback.

The Journey Through Time

The movie began, and I was thrust into a narrative spun by 'The Storyteller's Ghost,' an AI that had learned from the creative endeavors in the early 21st century. This AI had been programmed to analyze storytelling techniques, crafting tales that could stir the soul.

The Reflection

As the credits rolled, I realized that the story I had just witnessed was not just a fiction but a reflection of our journey with technology—from the first clunky VR headsets to the sleek neural interfaces I had just detached from.

The Departure

Stepping out into the night, the tale of 'The Odyssey' echoed the sentiments of our current technological trajectory, where research in AR/VR and BCI is already shaping a future once imagined only in films.

And as 'The Odyssey' faded behind me, I carried with me a story—a blend of past dreams and future realities, a reminder that the cinema of 2060 was not just about the films but about the legacy of human innovation and our eternal quest to push the boundaries of experience.

Want to learn more about the state of the art of technology?

Follow our online science and art magazine Utopiensammlerin. https://utopiensammlerin.com/en/

For reading on the current state of VR/AR and BCI technologies, the following articles provide comprehensive insights into those fields of research:

Brain-Computer Interfaces and Augmented/Virtual Reality

The Therapeutic Potential of VR/AR in BCI

AR/VR-based Training and Rehabilitation

#utopiensammlerin#Future Cinema Experience#AR/VR Integration#Brain-Computer Interfaces 2060#Immersive Narrative Technology#AI in Storytelling#Neon-lit Metropolis#Autonomous Drone Technology#Sensory Feedback Cinema#Legacy of Human Innovation#Time-Transcending Movies#FutureCinema#VirtualReality2060#NeuralSyncMovies#AIStoryteller#CinematicOdyssey#TechLegacy#2060Innovation#MetropolisNights#SensoryCinema#EchoesOfTheFuture#science fiction

1 note

Text

When you think about it, the modern computer mouse is such a bizarre hodgepodge of inputs.

an x/y optical sensor

a linear wheel

two big buttons

a third secret button when you click the aforementioned wheel

and, if you're lucky:

two small buttons used almost exclusively for navigating forward and backward in webpages

a left/right tilt switch for the wheel

There's basically nothing in common between most of these "canonical" features. Some of them have to do with the cursor, but that's mostly just the optical sensor and the LMB. Everything else is basically just a slightly more convenient hotkey.

It's like a pure, distilled monument to the past fifty years of human interface design. Every element is a reaction or a response to the others, or to sea changes in the broader tech sphere.

I dunno what else to really say, I just find this all very interesting.

2K notes
