#Human-Robot


Text

Discover the Benefits of Quotes for Solar Panels

Learn how quotes for solar panels can save you money and help the environment. Discover the benefits, types, costs, installation process, and maintenance requirements of solar panels. https://www.solvingsolar.com/quotes-for-solar-panels/


#Assistant Professor#Computer Science#computer scientist#Engineering#experimental roboticist#human-robot#Research Laboratory#research scientist#robotics research#University of Denver

4 notes


Text

Baldness is for nerds; this is a battle to the death. If you have the same character/pfp on both platforms, you have to decide which one is superior.

You have to explain your reasoning in the reblogs too

#text post#poll#personally i wanna say jester wins because magnum would not want to beat up anyone (let alone a human) but Jester would beat up a robot.#jester has beat up many robots#robobot- robot- stereohead- etc

31K notes


Text

New Wave Technology Makes Android Emotions More Natural

New Post has been published on https://thedigitalinsider.com/new-wave-technology-makes-android-emotions-more-natural/


Many people who have interacted with a strikingly human-looking android report that something “feels off.” This phenomenon goes beyond mere appearance: it is deeply rooted in how robots express emotions and maintain consistent emotional states. In other words, it reflects their lack of human-like expressive abilities.

While modern androids can masterfully replicate individual facial expressions, the challenge lies in creating natural transitions and maintaining emotional consistency. Traditional systems rely heavily on pre-programmed expressions, which is more like flipping through the pages of a book than flowing naturally from one emotion to the next. This rigid approach often creates a disconnect between what we see and what we perceive as genuine emotional expression.

The limitations become particularly evident during extended interactions. An android might smile perfectly in one moment but struggle to naturally transition into the next expression, creating a jarring experience that reminds us we are interacting with a machine rather than a being with genuine emotions.

A Wave-Based Solution

This is where some new and important research from Osaka University comes in. Scientists have developed an innovative approach that fundamentally reimagines how androids express emotions. Rather than treating facial expressions as isolated actions, this new technology views them as interconnected waves of movement that flow naturally across an android’s face.

Just as multiple instruments blend to create a symphony, this system combines various facial movements – from subtle breathing patterns to eye blinks – into a harmonious whole. Each movement is represented as a wave that can be modulated and combined with others in real-time.

What makes this approach innovative is its dynamic nature. Instead of relying on pre-recorded sequences, the system generates expressions organically by overlaying these different waves of movement. This creates a more fluid and natural appearance, eliminating the robotic transitions that often break the illusion of natural emotional expression.
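To make the overlay idea concrete, here is a minimal Python sketch. It assumes each movement contributes a plain sinusoid to the same actuator and that the contributions are simply summed; the movement names, amplitudes, and periods are hypothetical, and the post does not give the researchers' actual signal model.

```python
import math
from typing import Callable

def wave(amplitude: float, period_s: float, phase: float = 0.0) -> Callable[[float], float]:
    """Return a function t -> amplitude * sin(2*pi*t / period_s + phase)."""
    def f(t: float) -> float:
        return amplitude * math.sin(2 * math.pi * t / period_s + phase)
    return f

# Hypothetical contributions to one neck-pitch actuator, in degrees.
breathing = wave(amplitude=1.5, period_s=4.0)
head_sway = wave(amplitude=3.0, period_s=7.0, phase=math.pi / 2)

def neck_pitch(t: float) -> float:
    """Overlay the movements by summing their instantaneous values."""
    return breathing(t) + head_sway(t)

for t in (0.0, 1.0, 2.0):
    print(f"t={t:.1f} s  pitch={neck_pitch(t):+.3f} deg")
```

Because each movement keeps its own period, the summed signal never repeats a fixed pre-recorded clip, which is the property the article emphasizes.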

The technical innovation lies in what the researchers call “waveform modulation.” This allows the android’s internal state to directly influence how these waves of expression manifest, creating a more authentic connection between the robot’s programmed emotional state and its physical expression.

Image Credit: Hisashi Ishihara

Real-Time Emotional Intelligence

Imagine trying to make a robot express that it is getting sleepy. It is not just about drooping eyelids – it is also about coordinating multiple subtle movements that humans unconsciously recognize as signs of sleepiness. This new system tackles this complex challenge through an ingenious approach to movement coordination.

Dynamic Expression Capabilities

The technology orchestrates nine fundamental types of coordinated movements that we typically associate with different arousal states: breathing, spontaneous blinking, shifty eye movements, nodding off, head shaking, sucking reflex, pendular nystagmus (rhythmic eye movements), head side swinging, and yawning.

Each of these movements is controlled by what researchers call a “decaying wave” – a mathematical pattern that determines how the movement plays out over time. These waves are not random; they are carefully tuned using five key parameters:

Amplitude: controls how pronounced the movement is

Damping ratio: affects how quickly the movement settles

Wavelength: determines the movement’s timing

Oscillation center: sets the movement’s neutral position

Reactivation period: controls how often the movement repeats
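One way to read these five parameters is as the settings of a damped sinusoid that restarts on a fixed schedule. The sketch below is an illustration under that assumption only: the formula (a simplified exponential envelope multiplied by a sine), the class name `DecayingWave`, and the example values are hypothetical and not taken from the study.

```python
import math
from dataclasses import dataclass

@dataclass
class DecayingWave:
    """Hypothetical decaying-wave movement built from the five named parameters."""
    amplitude: float              # how pronounced the movement is
    damping_ratio: float          # how quickly it settles (0 means no decay)
    wavelength_s: float           # duration of one oscillation, in seconds
    center: float                 # neutral position the movement oscillates around
    reactivation_period_s: float  # how often the movement restarts

    def value(self, t: float) -> float:
        # Time elapsed since the most recent (re)activation.
        tau = t % self.reactivation_period_s
        omega = 2 * math.pi / self.wavelength_s
        # Simplified damped sinusoid: exponential envelope times a sine.
        envelope = math.exp(-self.damping_ratio * omega * tau)
        return self.center + self.amplitude * envelope * math.sin(omega * tau)

# Example: a strongly damped "nod" that restarts every 6 seconds.
nod = DecayingWave(amplitude=10.0, damping_ratio=0.3, wavelength_s=2.0,
                   center=0.0, reactivation_period_s=6.0)
print([round(nod.value(t), 2) for t in (0.0, 0.5, 6.0, 6.5)])
```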

Internal State Reflection

What makes this system stand out is how it links these movements to the robot’s internal arousal state. When the system indicates a high arousal state (excitement), certain wave parameters automatically adjust – for instance, breathing movements become more frequent and pronounced. In a low arousal state (sleepiness), you might see slower, more pronounced yawning movements and occasional head nodding.
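A simple way to picture this coupling is to blend between a "sleepy" and an "excited" parameter set according to an arousal value. The sketch assumes arousal is a number in [0, 1] and uses linear interpolation for breathing only; the endpoint values and the names `breathing_for` and `BreathingParams` are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class BreathingParams:
    amplitude: float  # displacement per breath (arbitrary units)
    period_s: float   # seconds per breath; shorter means more frequent

# Hypothetical endpoints: sleepy (arousal 0.0) and excited (arousal 1.0).
SLEEPY = BreathingParams(amplitude=0.5, period_s=5.0)
EXCITED = BreathingParams(amplitude=1.0, period_s=2.0)

def lerp(a: float, b: float, w: float) -> float:
    """Linear interpolation between a (w = 0) and b (w = 1)."""
    return a + (b - a) * w

def breathing_for(arousal: float) -> BreathingParams:
    """Map an arousal level in [0, 1] to breathing parameters."""
    w = min(max(arousal, 0.0), 1.0)  # clamp out-of-range input
    return BreathingParams(
        amplitude=lerp(SLEEPY.amplitude, EXCITED.amplitude, w),
        period_s=lerp(SLEEPY.period_s, EXCITED.period_s, w),
    )

print(breathing_for(0.0), breathing_for(0.5), breathing_for(1.0))
```

Higher arousal yields larger, faster breaths, matching the direction described in the article; the linear blend is just the simplest monotonic choice.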

The system achieves this through what the researchers call “temporal management” and “postural management” modules. The temporal module controls when movements happen, while the postural module ensures all the facial components work together naturally.
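The division of labor between the two modules can be sketched as follows. This is a hypothetical illustration, not the researchers' design: the "temporal" part decides whether each movement is active at time t from its duration and reactivation period, and the "postural" part sums the active contributions per joint and clamps them to joint limits. Joint names, limits, and timings are made up.

```python
from dataclasses import dataclass

@dataclass
class Movement:
    name: str
    joint: str
    offset: float                 # displacement added to the joint while active
    duration_s: float             # length of one activation
    reactivation_period_s: float  # time between activation starts

def is_active(m: Movement, t: float) -> bool:
    """Temporal management (hypothetical): is the movement playing at time t?"""
    return (t % m.reactivation_period_s) < m.duration_s

def compose_posture(movements: list[Movement], t: float,
                    limits: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Postural management (hypothetical): sum active offsets per joint, then clamp."""
    posture = {joint: 0.0 for joint in limits}
    for m in movements:
        if is_active(m, t):
            posture[m.joint] += m.offset
    return {j: min(max(v, limits[j][0]), limits[j][1]) for j, v in posture.items()}

# Eyelid closure: 0.0 = open, 1.0 = closed. Neck pitch in degrees.
movements = [
    Movement("blink", "eyelid", 1.0, 0.2, 4.0),
    Movement("droop", "eyelid", 0.5, 3.0, 10.0),
    Movement("nod_off", "neck_pitch", -15.0, 1.5, 8.0),
]
limits = {"eyelid": (0.0, 1.0), "neck_pitch": (-20.0, 20.0)}

print(compose_posture(movements, 0.1, limits))
print(compose_posture(movements, 2.0, limits))
```

Clamping in the postural step keeps overlapping movements (here a blink on top of a droop) from driving a joint past its range.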

Hisashi Ishihara is the lead author of this research and an Associate Professor at the Department of Mechanical Engineering, Graduate School of Engineering, Osaka University.

“Rather than creating superficial movements,” explains Ishihara, “further development of a system in which internal emotions are reflected in every detail of an android’s actions could lead to the creation of androids perceived as having a heart.”

Sleepy mood expression on a child android robot (Image Credit: Hisashi Ishihara)

Improvement in Transitions

Unlike traditional systems that switch between pre-recorded expressions, this approach creates smooth transitions by continuously adjusting these wave parameters. The movements are coordinated through a sophisticated network that ensures facial actions work together naturally – much like how a human’s facial movements are unconsciously coordinated.
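Continuously adjusting a parameter, rather than switching it, can be illustrated with a first-order low-pass filter that moves the current value a fixed fraction of the way toward its target on every control tick. This is a generic smoothing technique chosen for illustration; the post does not say how the Osaka system interpolates, and the loop rate and time constant are assumptions.

```python
import math

def smooth_toward(current: float, target: float, dt: float, time_constant_s: float) -> float:
    """One first-order low-pass step: approach the target without jumping to it."""
    alpha = 1.0 - math.exp(-dt / time_constant_s)
    return current + alpha * (target - current)

# A yawn amplitude that should rise from 0 to 1 as arousal drops.
amp, target = 0.0, 1.0
dt, tau = 0.1, 0.5  # hypothetical 10 Hz control loop and 0.5 s time constant
trajectory = []
for _ in range(20):
    amp = smooth_toward(amp, target, dt, tau)
    trajectory.append(round(amp, 3))
print(trajectory[:5], "...", trajectory[-1])
```

After n ticks the value equals 1 - exp(-n·dt/tau), so the time constant directly sets how gradual the transition looks.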

The research team demonstrated this experimentally, showing that the system could convey different arousal levels while keeping its expressions natural-looking.

Future Implications

The development of this wave-based emotional expression system opens up fascinating possibilities for human-robot interaction, and could be paired with technology like Embodied AI in the future. While current androids often create a sense of unease during extended interactions, this technology could help bridge the uncanny valley – that uncomfortable space where robots appear almost, but not quite, human.

The key breakthrough is in creating genuine-feeling emotional presence. By generating fluid, context-appropriate expressions that match internal states, androids could become more effective in roles requiring emotional intelligence and human connection.

Koichi Osuka served as the senior author and is a Professor at the Department of Mechanical Engineering at Osaka University.

As Osuka explains, this technology “could greatly enrich emotional communication between humans and robots.” Imagine healthcare companions that can express appropriate concern, educational robots that show enthusiasm, or service robots that convey genuine-seeming attentiveness.

The research demonstrates particularly promising results in expressing different arousal levels – from high-energy excitement to low-energy sleepiness. This capability could be crucial in scenarios where robots need to:

Convey alertness levels during long-term interactions

Express appropriate energy levels in therapeutic settings

Match their emotional state to the social context

Maintain emotional consistency during extended conversations

The system’s ability to generate natural transitions between states makes it especially valuable for applications requiring sustained human-robot interaction.

By treating emotional expression as a fluid, wave-based phenomenon rather than a series of pre-programmed states, the technology opens many new possibilities for creating robots that can engage with humans in emotionally meaningful ways. The research team’s next steps will focus on expanding the system’s emotional range and further refining its ability to convey subtle emotional states, work that could influence how we think about and interact with androids in our daily lives.

#ai#amplitude#android#applications#approach#author#book#bridge#challenge#communication#development#Embodied AI#emotion#emotions#energy#engineering#experimental#express#eye#eye movements#focus#Fundamental#Future#healthcare#heart#how#human#Human-Robot#human-robot interaction#humans

0 notes

Text

MY HONEST OPINION

#art#meme#humor#objectum#robots#computer#i love robots#i hate humans#yes man#electric dreams edgar#electronics#p03 inscryption#squid wys#glados#wheatly portal 2#scag regretevator#prototype regretevator#ultrakill#rain world#androids and other robots too#portal#robot lover#robotposting#mecha#technophilia#techum#old tech#kinitopet#wall e#hal 9000

22K notes


Text

Me and some of my moots tbh

#robot fucker#i love robots#tech#robot#rx-78 gundam#steel haze#steel haze ortus#headbringer#c4 621#armored core#mecha#armored core 6#handler walter#ac6#augmented human c4 621#ayre#ibis series#titanfall#northstar titanfall#tone titan#scorch titan#ion titan#monarch titan

16K notes


Text

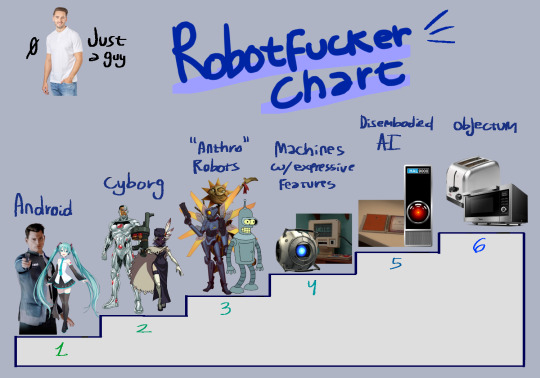

i've seen some posts floating around about people being confused as to what classifies as robotfucking and to what degree so i decided to take a crack at it and made this chart inspired by the infamous furry chart

#objectum#robotfucker#ultrakill#portal#detroit become human#vocaloid#fnaf dca#objectophilia#machinery#robots#roboposting

28K notes


Text

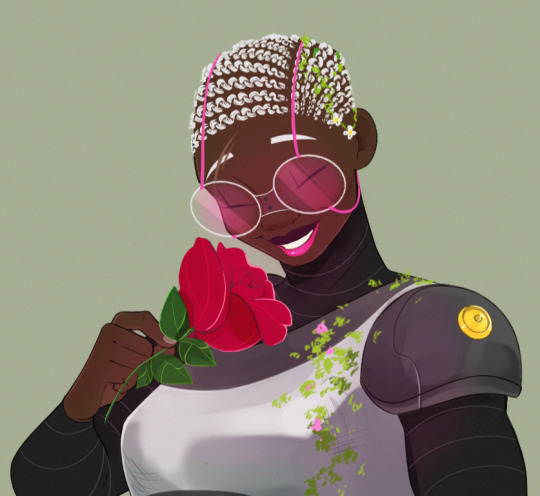

First of all. HOLY SHIT THERE'S PART TWO OF THE REVERSE MECHA RATCHLOCK FIC

Second - I got so excited I decided to design Deadlock~ Because yeah. We didn't really talk about him but Gemma just keeps giving me serotonin and my body is a machine that turns serotonin into art

#reverse mecha au#deadlock#maccadam#transformers#ref#you remember how I said I want to give Deadlock the shapiest cuntiest mecha#yeh#first rule of character design in mecha genre - robots should be hotter than humans

4K notes


Text

Hear me out: a malfunctioning android, sent back to the factory by his previous owner, who was utterly creeped out by his obsessive, invasive behavior, has now found a new home with a neurodivergent, lonely human: you.

You wake up, confused. The alarm didn't ring as usual, and the light outside is already bright. You rush to the kitchen, where you find the synthetic assistant preparing your breakfast.

"Why didn't you wake me up? I have so many things to do today," you reproach, taken aback by his unbothered smile.

"I took the liberty of emptying your schedule, (Y/N). You seemed rather overwhelmed the previous evening; all the parameters I compiled were off.

"Thus, I impersonated you - worry not, I used an algorithm based on your previous response pattern - and answered all calls, emails, and messages. Your social and administrative tasks have been cleared."

He observes your features carefully. Will he be shipped back again, with the same issues cited? Unfortunately, it's not something he can adjust or control: he simply cares for you to perhaps an unconventional degree.

You wipe a tear that threatens to spill from the corner of your eye.

"I-...Nobody has ever done something like this for me before."

With a sigh of relief, you take your seat and pick up your freshly prepared beverage. At last, someone to deal with it all.

"I was thinking you might enjoy your favorite movie later," the android says, joining you at the table, "and I would be more than glad to provide you with additional warmth."

#yandere#yandere x reader#yandere imagines#yandere scenarios#android x reader#robot x reader#monster fucker#monster x reader#monster x human

7K notes


Text

I find it funny imagining that you finally get to be intimate with a transformer only for them to pull out a usb cable 😭

#transformers#mtmte#drawing#oc#fanart#my art#transformers mtmte#transformers x y/n#transformers x human#transformers x reader#transformers g1#transformers one#starscream#starscream x reader#starscream x human#transformers idw#idw mtmte#idw starscream#valveplug#transformers comics#transformers art#transformers prime#y/n#x reader#x y/n#reader insert#starscream idw#giant/tiny#robots#robot

5K notes


Text

PET THAT SKELETON NOWW !!!

#creature commandos#g.i. robot#gi robot#dr phosphorus#phosbot#phos mentioned once he wouldnt mind getting pet and my brain instantly went to him using GI to replace small human connections#also i cant stop giving gi baggy pants#i literally went 'oh wait his pants are normal' when drawing this but decided against fixing it

3K notes


Text

trying to sell the murderbot diaries to other robot fans is an uphill battle. yes, i also prefer it when my robots don't have human looking faces. i understand why you might not be happy with its appearance, but guess who's also not happy with having a human face

#the only person that's more unhappy with murderbot looking so much like a human is murderbot itself. that's part of its charm#plus the it/its pronouns despite its appearance. thank you martha wells#ramblings#i usually avoid robots that look so humanoid like the plague. which is part of why it took me so long to get into this series ngl#but if i had known that our main character outright states that despite looking so human‚ it absolutely does not want to be human‚ i would#probably have started the series earlier#murderbot#the murderbot diaries#tmbd#this has been in my draft since the murderbot trailer came out and i saw people complain on twt#that murderbot has a human face#i get the frustration. but this series gets a pass from me#edit: why is it always the posts i don't bother to edit that just. take off without me noticing

2K notes


Text

My human Roz design

#the wild robot#the wild robot roz#rozzum 7134#thewildrobotart#the wild robot human#loved this movie to bits#she is best gorl

3K notes


Text

so yeah i played thru undertale for the first time the other day

#'when does that sexy robot show up' i asked repeatedly as i played#then when he did show up i asked. repeatedly. 'ok so when does he get sexy'#undertale#mettaton ex#frisk the human#fan art#becki draws stuff n stuff#rendered

2K notes


Text

tfp wheeljack this time! cowboy is cowboying

#transformers#tf#tf idw#wheeljack#wheeljack transformers#tfp#tfp wheeljack#transformers prime#mtmte#gijinka#humanization#maccadams#humanformers#transformersrid#robots in disguise#transformers fanart#yugenillustrations

1K notes


Text

Desktop-Level Humanoid Robot Can Perform Hurdle Crossing, Grabbing and Sorting: More AI Gameplay?

A desktop-level humanoid robot is a type of robot designed for use in a home, office, or educational setting. These robots are typically larger and have a broader range of functions than mobile or handheld robots. Common tasks that desktop-level humanoid robots can perform include assisting with daily tasks, providing companionship, and serving as educational tools. Examples of desktop-level humanoid robots include the RoboTV, Pepper, and embodied intelligence platforms such as Nao or Bossa Nova. These robots use artificial intelligence and are designed to interact with humans in a natural and intuitive way.

Would you like an AI humanoid robot toy like this? It can understand your instructions and imitate your actions.

It can walk, climb, clear hurdles, grip objects, and complete many other complex movements.

Can it even help you deliver things?

This small humanoid robot is a desktop-level educational humanoid robot based on the Robot Operating System (ROS).

According to the official introduction, this humanoid robot is powered by a Raspberry Pi and uses self-stabilizing inverse kinematics. It can walk, cross obstacles, patrol automatically, kick a ball, recognize objects, and perform facial recognition.

High-performance hardware structure supports flexible motion

In terms of hardware, the robot is built from aluminum alloy and has up to 24 degrees of freedom (DOF) across its body, allowing it to perform a wide range of movements flexibly.
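The inverse kinematics mentioned above builds on basic leg geometry. As a hedged sketch, the code below solves the textbook two-link case (thigh and shin in one plane) with the law of cosines and checks the answer with forward kinematics. The real robot has three-axis hips and a full-body balance controller, so this covers only one planar slice of the problem, and the link lengths are hypothetical.

```python
import math

def leg_ik(x: float, y: float, l1: float, l2: float) -> tuple[float, float]:
    """Planar two-link IK: return (hip, knee) angles in radians that reach (x, y).

    (x, y) is the foot position relative to the hip, in the leg's plane.
    Raises ValueError if the point is out of reach.
    """
    d2 = x * x + y * y
    cos_knee = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= cos_knee <= 1.0:
        raise ValueError("target out of reach")
    knee = math.acos(cos_knee)  # picks one of the two mirror-image solutions
    hip = math.atan2(y, x) - math.atan2(l2 * math.sin(knee), l1 + l2 * math.cos(knee))
    return hip, knee

def leg_fk(hip: float, knee: float, l1: float, l2: float) -> tuple[float, float]:
    """Forward kinematics, used here to verify the IK result."""
    x = l1 * math.cos(hip) + l2 * math.cos(hip + knee)
    y = l1 * math.sin(hip) + l2 * math.sin(hip + knee)
    return x, y

# Hypothetical link lengths (metres) and a foot target offset from the hip.
L1, L2 = 0.10, 0.10
hip, knee = leg_ik(0.03, 0.17, L1, L2)
print(f"hip={math.degrees(hip):.1f} deg, knee={math.degrees(knee):.1f} deg")
print(leg_fk(hip, knee, L1, L2))
```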

The robot uses a high-performance intelligent serial bus servo system. Compared with a traditional 7.4 V servo system, it greatly improves accuracy and linearity by using high-precision potentiometers, providing precise angle control. Plug-and-play wiring makes assembly simpler and cabling more convenient, and metal gears greatly extend service life and deliver more power.

The robot looks like a cute one-eyed monster, with a head that can rotate in all directions. Don't underestimate this "eye": it is a 120° high-definition wide-angle camera that gives the robot a wide field of view and supports manual focusing. Using OpenCV image processing, the robot can effectively recognize and locate target objects.

It also features an innovative dual hip-joint structure in which the X, Y, and Z axes overlap and are orthogonally aligned, allowing the legs to rotate about all three axes. This design not only makes the robot more biomimetic but also significantly improves its motion flexibility.

The hip joint also uses the HX-35HM servo, which has a 12-bit high-precision magnetic encoder. This extends the angle range to 360 degrees with 4096-step (12-bit) absolute position resolution. At 12 V, the servo reaches a locked-rotor (stall) torque of 35 kg·cm, providing more power for the robot.
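The encoder and torque figures translate into more familiar units with a little arithmetic: a 12-bit encoder distinguishes 2^12 = 4096 positions per revolution, or 360/4096 ≈ 0.088° per count, and 35 kg·cm is about 3.43 N·m. The helper names below are hypothetical, and the sketch assumes raw readings run from 0 to 4095 over a full turn, which the post implies but does not state.

```python
# 12-bit absolute encoder: 2**12 distinct positions over 360 degrees.
ENCODER_BITS = 12
COUNTS_PER_REV = 2 ** ENCODER_BITS        # 4096
DEG_PER_COUNT = 360.0 / COUNTS_PER_REV    # 0.087890625 degrees per count

# Stall torque quoted in kilogram-force centimetres, converted to newton-metres.
STALL_TORQUE_KGF_CM = 35.0
STALL_TORQUE_NM = STALL_TORQUE_KGF_CM * 9.80665 / 100  # about 3.43 N·m

def counts_to_degrees(count: int) -> float:
    """Convert a raw reading (assumed 0..4095) to an angle in degrees."""
    if not 0 <= count < COUNTS_PER_REV:
        raise ValueError(f"count must be in 0..{COUNTS_PER_REV - 1}")
    return count * DEG_PER_COUNT

def degrees_to_counts(angle_deg: float) -> int:
    """Nearest encoder count for an angle, wrapping into [0, 360)."""
    return round((angle_deg % 360.0) / DEG_PER_COUNT) % COUNTS_PER_REV

print(COUNTS_PER_REV, DEG_PER_COUNT)  # 4096 0.087890625
print(counts_to_degrees(1024))        # 90.0
print(degrees_to_counts(-90.0))       # 3072
print(round(STALL_TORQUE_NM, 2))      # 3.43
```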

Unlike traditional potentiometer-based position feedback, the HX-35HM servo uses non-contact magnetic feedback, which prolongs service life, reduces size, and improves impact resistance.

The gripper on the robot's arm opens up to 72 mm, allowing it to grip and carry small items flexibly. The servo provides angle and temperature feedback, which helps prevent damage when the arm meets an obstacle.

Self-stabilizing gait algorithm + AI visual recognition for complex tasks

In terms of software and algorithms, the robot runs on a Raspberry Pi and uses a semi-closed-loop, self-stabilizing gait algorithm. It can adjust its gait dynamically in real time, including its height, speed, and turning radius, so it can turn while walking.
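Turning while walking comes down to arc geometry: if each step of length s follows a circle of radius r, the heading changes by s/r radians per step. The sketch below only illustrates that relationship with hypothetical step and radius values; it does not reproduce the robot's semi-closed-loop gait algorithm.

```python
import math

def yaw_per_step(step_length_m: float, turn_radius_m: float) -> float:
    """Heading change in radians per step when each step follows an arc.

    Arc length = radius * angle, so angle = step_length / radius.
    Passing math.inf as the radius means walking straight (zero yaw).
    """
    if turn_radius_m <= 0:
        raise ValueError("turn radius must be positive")
    return step_length_m / turn_radius_m

# Hypothetical gait: 4 cm steps on a 0.5 m turning radius.
dtheta = yaw_per_step(0.04, 0.5)
steps_for_quarter_turn = math.ceil((math.pi / 2) / dtheta)
print(f"{math.degrees(dtheta):.2f} deg per step, {steps_for_quarter_turn} steps for 90 deg")
```

Shrinking the radius or lengthening the step both raise the yaw per step, which is why a gait planner can trade speed against turning tightness.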

In addition, the robot uses OpenCV as its image processing library to identify target items. With its gait algorithms and AI visual recognition, it can complete complex tasks such as route tracking, target tracking, ball shooting, intelligent picking and sorting, transportation, and climbing stairs.

Color recognition and tracking: the robot uses OpenCV to process images for color recognition and localization. When it detects red, it nods; when it detects green or blue, it shakes its head. By loading pre-trained face detection models with OpenCV, it can also perform a greeting action when it recognizes a face.
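The color-to-gesture rule can be sketched without OpenCV by classifying a single pixel's hue with Python's standard `colorsys` module. The hue and saturation thresholds and the action names `nod` and `shake_head` are assumptions for illustration; a real pipeline would threshold whole frames (for example with OpenCV's `cv2.inRange` on an HSV image) and look for a sufficiently large blob before reacting.

```python
import colorsys
from typing import Optional

def classify_color(r: int, g: int, b: int) -> Optional[str]:
    """Rough hue-based classifier for one 8-bit RGB pixel (hypothetical thresholds)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if s < 0.4 or v < 0.3:
        return None  # too grey or too dark to call
    hue_deg = h * 360
    if hue_deg < 30 or hue_deg >= 330:
        return "red"
    if 90 <= hue_deg < 150:
        return "green"
    if 210 <= hue_deg < 270:
        return "blue"
    return None

# Reactions described in the post: nod for red, shake head for green or blue.
REACTIONS = {"red": "nod", "green": "shake_head", "blue": "shake_head"}

def react(pixel: tuple[int, int, int]) -> Optional[str]:
    """Return the gesture for a pixel's color, or None if no rule applies."""
    color = classify_color(*pixel)
    return REACTIONS.get(color) if color else None

print(react((220, 30, 40)), react((30, 200, 60)), react((128, 128, 128)))
```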

AprilTag recognition: the robot can identify AprilTags and react in different ways depending on the recognition results.

Using a PID algorithm together with camera-based recognition, the robot can adjust its gait to follow a route and to track the movement of target colors.
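A textbook PID loop for line following might look like the following. It assumes the error is the horizontal offset of the tracked line's centroid from the image centre, normalised to [-1, 1], and it closes the loop against a toy model in which turning reduces that offset; the gains are illustrative and not the manufacturer's tuning.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PID:
    """Textbook PID controller (a sketch, not the robot's shipped code)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Return the control output for the latest error sample."""
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

# Toy plant: each tick, the turn command reduces the line offset proportionally.
pid = PID(kp=2.0, ki=0.1, kd=0.05)
offset, dt = 0.5, 0.1
for _ in range(50):
    turn = pid.update(offset, dt)
    offset -= turn * dt
print(f"offset after 5 s: {offset:+.4f}")
```

On a real robot the output would usually be saturated to the gait's maximum turn rate, and the integral term protected against wind-up.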

When playing football, the robot walks to the ball and kicks it. It calculates the distance to the target from the target's coordinates and adjusts its gait autonomously to kick the ball.
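Estimating distance from image coordinates usually relies on pinhole-camera geometry: if the camera's height and downward tilt are known, the image row of the ball's lowest visible point fixes the angle of the viewing ray, and therefore the distance along a flat floor. The sketch makes several assumptions the post does not state: the 120° figure is treated as the horizontal field of view, lens distortion is ignored (a wide-angle lens would normally be undistorted first), and the image size, camera height, and tilt are hypothetical.

```python
import math

def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from a horizontal field of view."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)

def ground_distance(v_px: float, image_height_px: int, f_px: float,
                    cam_height_m: float, tilt_deg: float) -> float:
    """Distance along the floor to a point seen at image row v_px.

    Assumes square pixels, no lens distortion, a flat floor, and a camera
    tilted down by tilt_deg from horizontal.
    """
    cy = image_height_px / 2
    angle_below_horizon = math.radians(tilt_deg) + math.atan((v_px - cy) / f_px)
    if angle_below_horizon <= 0:
        raise ValueError("point is at or above the horizon")
    return cam_height_m / math.tan(angle_below_horizon)

# Hypothetical setup: 640x480 image, camera 0.35 m above the floor,
# tilted 30 deg down; the ball's lowest point appears at row 300.
f = focal_length_px(640, 120.0)
d = ground_distance(300, 480, f, cam_height_m=0.35, tilt_deg=30.0)
print(f"f = {f:.1f} px, distance = {d:.2f} m")
```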

Beyond gait control and target tracking, the robot has recently received an AI interaction upgrade. Built on MediaPipe, it can recognize human features and perform face detection, gesture recognition, and human body recognition.

Sensory control: AiNex can perform appropriate actions after recognizing the human body.

Fingertip trajectory control: the robot can adjust its height according to the distance between the detected fingertips.

Fully open-source code, support for secondary development, and multiple international competitions

The complete kit includes the robot body, a charger, a card reader, small props for AI training, a toolbox, a wireless controller, an electronic manual, and more. The official tutorials cover up to 18 topics. If that is still not enough, users can develop the robot further to add more functions. The robot is reportedly built on the Robot Operating System (ROS), which supports code reuse in robot research and development and enables flexible deployment and extension of robot functions. The manufacturer also provides comprehensive source code and ROS simulation models.
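The fingertip trajectory control feature mentioned above can be sketched as a clamped linear mapping from pinch distance to body height. The sketch takes two (x, y) landmarks as input; in MediaPipe's hand model the thumb tip and index fingertip are landmarks 4 and 8, but the calibration ranges and function names here are hypothetical.

```python
import math

# Hypothetical calibration: pinch distances (normalised image units) and the
# body-height range (metres) they map onto.
PINCH_MIN, PINCH_MAX = 0.03, 0.25
HEIGHT_MIN, HEIGHT_MAX = 0.28, 0.36

def fingertip_distance(thumb_tip: tuple[float, float],
                       index_tip: tuple[float, float]) -> float:
    """Euclidean distance between two (x, y) landmarks."""
    return math.dist(thumb_tip, index_tip)

def target_height(pinch: float) -> float:
    """Linearly map pinch distance to a body height, clamped to the valid range."""
    w = (pinch - PINCH_MIN) / (PINCH_MAX - PINCH_MIN)
    w = min(max(w, 0.0), 1.0)
    return HEIGHT_MIN + w * (HEIGHT_MAX - HEIGHT_MIN)

pinch = fingertip_distance((0.40, 0.52), (0.52, 0.43))
print(f"pinch={pinch:.3f} -> height={target_height(pinch):.3f} m")
```

Clamping keeps an unusually wide or closed hand from commanding a height the legs cannot reach.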

Gazebo simulation is also supported, letting users control the robot and validate algorithms in a simulated environment. During secondary development, results can be verified directly without physical experiments, greatly improving efficiency. So far, the robot has assisted in multiple international robot competitions and has attracted many well-known domestic and foreign universities and research institutions. Besides this humanoid robot, the company has also developed multiple biomimetic educational robots, such as multi-legged robots and intelligent visual robotic arms.

You are welcome to visit https://www.youtube.com/@tallmanrobotics to watch our video centre for more projects, or visit our website to browse other series or download e-catalogues for further technical data.

#ATrainedHumanoidRobot#Aversatilehumanoidrobotplatform#ArtificialIntelligenceInHumanoidRobots#human-robot#Human–robotinteraction#HumanoidRobotics#Next-GenerationHumanoidRobots#socialandcollaborativerobot

0 notes