#Humanizing Automation


i have chronic pain. i am neurodivergent. i understand - deeply - the allure of a "quick fix" like AI. i also just grew up in a different time. we have been warned about this.

15 entire years ago i heard about this. in my forensics class in high school, we watched a documentary about how AI-based "crime solving" software was inevitably biased against people of color.

my teacher stressed that AI is like a book: when someone writes it, some part of the author will remain within the result. the internet existed but not as loudly at that point - we didn't know that AI would be able to teach itself off already-biased Reddit threads. i googled it: yes, this bias is still happening. yes, it's just as bad if not worse.

i can't actually stop you. if you wanna use ChatGPT to slide through your classes, that's on you. it's your money and it's your time. you will spend none of it thinking, you will learn nothing, and, in college, you will piss away hundreds of thousands of dollars. you will stand at the podium having done nothing, accomplished nothing. a cold and bitter pyrrhic victory.

i'm not even sure students actually read the essays or summaries or emails they have ChatGPT pump out. i think it just flows over them and they use the first answer they get. my brother teaches engineering - he recently got fifty-three copies of almost-the-exact-same lab report. no one had even changed the wording.

and yes: AI itself (as a concept and practice) isn't always evil. there's AI that can help detect cancer, for example. and yet: when i ask my students if they'd be okay with a doctor that learned from AI, many of them balk. it is one thing if they don't read their engineering textbook or if they don't write the critical-thinking essay. it's another when it starts to affect them. they know it's wrong for AI to broad-spectrum deny insurance claims, but they swear their use of AI is different.

there's a strange desire to sort of divorce real-world AI malpractice from "personal use". for example, is it moral to use AI to write your cover letters? cover letters are essentially only templates, and besides: AI is going to be reading your job app, so isn't it kind of fair?

i recently found out that people use AI as a romantic or sexual partner. it seems like teenagers particularly enjoy this connection, and this is one of those "sticky" moments as a teacher. honestly - you can roast me for this - but if it was an actually-safe AI, i think teenagers exploring their sexuality with a fake partner is amazing. it prevents them from making permanent mistakes, it can teach them about their bodies and their desires, and it can help their confidence. but the problem is that it's not safe. there isn't a well-educated, sensitive AI specifically to help teens explore their hormones. it's just an internet-fed cycle. who knows what they're learning. who knows what misinformation they're getting.

the most common pushback i get involves therapy. none of us have access to the therapist of our dreams - it's expensive, elusive, and involves an annoying amount of insurance claims. someone once asked me: are you going to be mad when AI saves someone's life?

therapists are not just trained on the book, they're trained on patient management and helping you see things you don't see yourself. part of it will involve discomfort. i don't know that AI is ever going to be able to analyze the words you feed it and answer with a mind towards the "whole person" writing those words. but also - if it keeps/kept you alive, i'm not a purist. i've done terrible things to myself when i was at rock bottom. in an emergency, we kind of forgive the seatbelt for leaving bruises. it's just that chat shouldn't be your only form of self-care and recovery.

and i worry that the influence chat has is expanding. more and more i see people use chat for the smallest, most easily-navigated situations. and i can't like, make you worry about that in your own life. i often think about how easy it was for social media to take over all my time - how i can't have a tiktok because i spend hours on it. i don't want that to happen with chat. i want to enjoy thinking. i want to enjoy writing. i want to be here. i've already really been struggling to put the phone down. this feels like another way to get you to pick the phone up.

the other day, i was frustrated by a book i was reading. it's far in the series and is about a character i resent. i googled if i had to read it, or if it was one of those "in between" books that don't actually affect the plot (you know, one of those ".5" books). someone said something that really stuck with me - theoretically you're reading this series for enjoyment, so while you don't actually have to read it, one would assume you want to read it.

i am watching a generation of people learn they don't have to read the thing in their hand. and it is kind of a strange sort of doom that comes over me: i read because it's genuinely fun. i learn because even though it's hard, it feels good. i try because it makes me happy to try. and i'm watching a generation of people all lay down and say: but i don't want to try.

#spilled ink#i do also think this issue IS more complicated than it appears#if a teacher uses AI to grade why write the essay for example.#<- while i don't agree (the answer is bc the essay is so YOU learn) i would be RIPSHIT as a student#if i found that out.#but why not give AI your job apps? it's not like a human person SEES your applications#the world IS automating in certain ways - i do actually understand the frustration#some people feel where it's like - i'm doing work here. the work will be eaten by AI. what's the point#but the answer is that we just don't have a balance right now. it just isn't trained in a smart careful way#idk. i am pretty anti AI tho so . much like AI. i'm biased.#(by the way being able to argue the other side tells u i actually understand the situation)#(if u see me arguing "pro-chat'' it's just bc i think a good argument involves a rebuttal lol)#i do not use ai . hard stop.

4K notes


Humanizing Automation: Striking the Right Balance between Technology and Personalization in B2B

In the rapidly evolving landscape of B2B interactions, the integration of automation has become instrumental in streamlining processes, enhancing efficiency, and driving business growth. However, as technology advances, there is an increasing need to strike a delicate balance between leveraging automation and maintaining the crucial human element in B2B relationships. This delicate dance between technology and personalization is a pivotal factor in navigating the complex dynamics of the modern B2B industry.

Embracing the Power of Automation:

Automation in B2B processes has revolutionized how businesses operate. From lead generation and customer relationship management to supply chain optimization, automation technologies have demonstrated their prowess in handling repetitive tasks with unparalleled precision and speed. This not only frees up valuable human resources but also ensures a consistent and error-free execution of routine operations.

The Pitfalls of Over-Automation:

While automation brings undeniable advantages, there is a risk of over-reliance that could inadvertently dehumanize B2B interactions. A purely automated approach can lead to generic and impersonal communication, potentially alienating clients who seek a more nuanced and personalized engagement. B2B relationships thrive on trust, understanding, and human connection, elements that automation alone might struggle to convey.

Maintaining the Human Touch:

Humanizing automation involves a strategic approach to ensure that technology complements, rather than replaces, the personal touch in B2B interactions. It's about integrating automation in a way that enhances efficiency without sacrificing the authenticity and warmth that human connections bring to business relationships.

1. Tailoring Automated Communications:

Customize automated messages to reflect the unique preferences and needs of each client. Utilize data-driven insights to create personalized content that resonates with the specific challenges and goals of individual businesses.

2. Empowering Human-Centric Creativity:

While automation handles repetitive tasks, human creativity remains unmatched. Encourage your team to focus on tasks that require a creative, empathetic, and strategic mindset, leaving routine operations to automation. This ensures that human intelligence is directed toward activities that truly add value to B2B relationships.

3. Personalized Customer Journeys:

Leverage automation to map out personalized customer journeys. From initial contact to post-purchase interactions, ensure that each touch point reflects a deep understanding of the client's unique journey, fostering a sense of being genuinely understood and valued.

4. Real-time Human Intervention:

Embed mechanisms for real-time human intervention within automated processes. Whether it's a prompt for a personalized follow-up after a specific client interaction or an opportunity for human engagement when complex decisions arise, maintaining a connection with your clients ensures that the human touch is always present when it matters most.

The Road Ahead: Balancing Acts in the B2B Sphere

As businesses continue to embrace the possibilities of automation, finding the right equilibrium between technology and personalization is an ongoing journey. Striking this balance requires a thoughtful and adaptive approach where the strengths of automation are harnessed to augment, rather than diminish, the human elements that are essential in fostering meaningful B2B relationships.

In conclusion, humanizing automation in the B2B industry is not just a goal; it's a strategic imperative. It involves leveraging technology to enhance operational efficiency while ensuring that the unique qualities of human connection remain at the forefront of business interactions. The future of successful B2B relationships lies in the harmonious coexistence of cutting-edge automation and the timeless authenticity of human engagement.

#B2B interactions#B2B industry#B2B relationships#Humanizing Automation#Technology and Personalization in B2B#b2bindemand#leadgeneration#b2b lead generation#emailmarketing#b2bmarketing#b2b#b2bsales#b2b services

1 note


AI can’t do your job

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in SAN DIEGO at MYSTERIOUS GALAXY on Mar 24, and in CHICAGO with PETER SAGAL on Apr 2. More tour dates here.

AI can't do your job, but an AI salesman (Elon Musk) can convince your boss (the USA) to fire you and replace you (a federal worker) with a chatbot that can't do your job:

https://www.pcmag.com/news/amid-job-cuts-doge-accelerates-rollout-of-ai-tool-to-automate-government

If you pay attention to the hype, you'd think that all the action on "AI" (an incoherent grab-bag of only marginally related technologies) was in generating text and images. Man, is that ever wrong. The AI hype machine could put every commercial illustrator alive on the breadline and the savings wouldn't pay the kombucha budget for the million-dollar-a-year techies who oversaw Dall-E's training run. The commercial market for automated email summaries is likewise infinitesimal.

The fact that CEOs overestimate the size of this market is easy to understand, since "CEO" is the most laptop job of all laptop jobs. Having a chatbot summarize the boss's email is the 2025 equivalent of the 2000s gag about the boss whose secretary printed out the boss's email and put it in his in-tray so he could go over it with a red pen and then dictate his reply.

The smart AI money is long on "decision support," whereby a statistical inference engine suggests to a human being what decision they should make. There's bots that are supposed to diagnose tumors, bots that are supposed to make neutral bail and parole decisions, bots that are supposed to evaluate student essays, resumes and loan applications.

The narrative around these bots is that they are there to help humans. In this story, the hospital buys a radiology bot that offers a second opinion to the human radiologist. If they disagree, the human radiologist takes another look. In this tale, AI is a way for hospitals to make fewer mistakes by spending more money. An AI-assisted radiologist is less productive (because they re-run some x-rays to resolve disagreements with the bot) but more accurate.

In automation theory jargon, this radiologist is a "centaur" – a human head grafted onto the tireless, ever-vigilant body of a robot.

Of course, no one who invests in an AI company expects this to happen. Instead, they want reverse-centaurs: a human who acts as an assistant to a robot. The real pitch to hospitals is, "Fire all but one of your radiologists and then put that poor bastard to work reviewing the judgments our robot makes at machine scale."

No one seriously thinks that the reverse-centaur radiologist will be able to maintain perfect vigilance over long shifts of supervising automated processes that rarely go wrong, but when they do, the error must be caught:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

The role of this "human in the loop" isn't to prevent errors. That human is there to be blamed for errors:

https://pluralistic.net/2024/10/30/a-neck-in-a-noose/#is-also-a-human-in-the-loop

The human is there to be a "moral crumple zone":

https://estsjournal.org/index.php/ests/article/view/260

The human is there to be an "accountability sink":

https://profilebooks.com/work/the-unaccountability-machine/

But they're not there to be radiologists.

This is bad enough when we're talking about radiology, but it's even worse in government contexts, where the bots are deciding who gets Medicare, who gets food stamps, who gets VA benefits, who gets a visa, who gets indicted, who gets bail, and who gets parole.

That's because statistical inference is intrinsically conservative: an AI predicts the future by looking at its data about the past, and when that prediction is also an automated decision, fed to a Chaplinesque reverse-centaur trying to keep pace with a torrent of machine judgments, the prediction becomes a directive, and thus a self-fulfilling prophecy:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

AIs want the future to be like the past, and AIs make the future like the past. If the training data is full of human bias, then the predictions will also be full of human bias, and then the outcomes will be full of human bias, and when those outcomes are coprophagically fed back into the training data, you get new, highly concentrated human/machine bias:

https://pluralistic.net/2024/03/14/inhuman-centipede/#enshittibottification

By firing skilled human workers and replacing them with spicy autocomplete, Musk is assuming his final form as both the kind of boss who can be conned into replacing you with a defective chatbot and as the fast-talking sales rep who cons your boss. Musk is transforming key government functions into high-speed error-generating machines whose human minders are only on the payroll to take the fall for the coming tsunami of robot fuckups.

This is the equivalent of filling the American government's walls with asbestos, turning agencies into hazmat zones that we can't touch without causing thousands to sicken and die:

https://pluralistic.net/2021/08/19/failure-cascades/#dirty-data

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/18/asbestos-in-the-walls/#government-by-spicy-autocomplete

Image: Krd (modified) https://commons.wikimedia.org/wiki/File:DASA_01.jpg

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

--

Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#reverse centaurs#automation#decision support systems#automation blindness#humans in the loop#doge#ai#elon musk#asbestos in the walls#gsai#moral crumple zones#accountability sinks

277 notes


RK800 #313 248 317 - 00

#thinking about virtual only RK800...#daymn#poor fella to put it lightly#art#fan art#my art#dbh#detroit become human#connor rk800#dbh connor#dbh rk800#rk800#fun fact for the UI I used an actual UI of a program where I do industrial automation and testing

206 notes


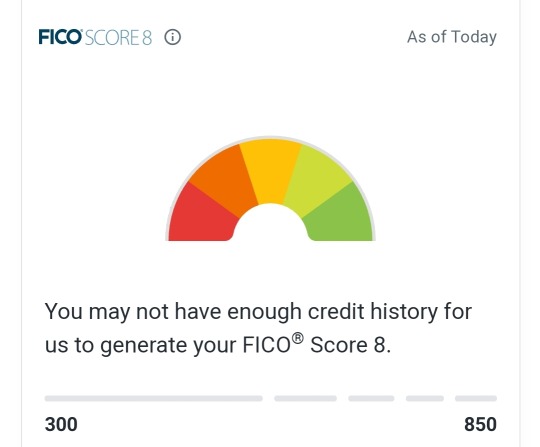

So, I have a big advice post to write, but until I have the spoons, I want to warn everyone now who has legally changed their first name:

Your credit score may be fucked up and you need to:

1. check *now* that all your financial institutions (credit cards, loans, etc) have your current legal name and update where necessary

2. check that all 3 credit bureaus (TransUnion, Equifax, Experian), on their respective sites and not via a feed like Credit Karma, are collecting that info correctly and generating the right score - you might need to monitor them for a few months if you made any changes in #1

I am about to apply for a mortgage and learned that as of 2 weeks ago:

Experian suddenly thinks I am 2 different people - Legal Name and Dead Name (neither of whom has a score)

Equifax has reported my active 25 year old mortgage as closed and deleted one of my older credit cards, hurting my score by 30+ points

Credit scores influence everything from big home/car loans to insurance rates to job and housing applications.

And for whatever reason, the 3 bureaus that have the power to destroy your life are shockingly fragile when it comes to legally changing one's first name.

So, yeah. Once I get this mess cleaned up for myself, I have a big guide in the works if you find yourself in the same predicament. But with the mass trans migration out of oppressive states, odds are there are a lot of newly renamed people who are about to have a nasty shock when applying for new housing.

Take care, folks.

#trans stuff#transgender#credit score#united states#it is wild having an 800 spread between credit scores lol#a human being could identify there is a problem but so much life impacting shit is automated by stupid and biased algorithms

3K notes


my side quest is complete. I wrote these letters for the template with a marker so they're extra round and cute btw.

#val art#oh no I'm automating part of my art making process with a computer instead of putting my innate human soul into each mark sorry

31 notes


a few reminders

-i run this blog alone

-the spreadsheet is edited manually, by me. there is no automation. all submissions are handled by me as and when i have time

-my timezone is GMT and i am most active between the hours of 6pm and midnight

-you do not need to submit anything more than once

thank you!

#admin talkin'#my friend mentioned that people might think this thing is automated. it isnt. i am a flesh and blood human doing this on my own

24 notes


how i feel having to make phone conversations in the year of our lord and savior 2025

#kat talks#let me talk to a real human person!!!#i dont need an AI to try guessing at what i want especially when my questions arent in its script#also if i could use the online automated system i wouldnt be here!!#also mid sentence I got switched to a human representative and i had to immediately shift my tone lmao

49 notes


I cannot tolerate interacting with USA supporters.

Blue maga pisses me off more than red, because red voters are too stupidly brainwashed to be blamed for much other than shitting the bed. They're pathetic, but violent. Their danger isn't coming from them being too bright or clever.

When liberals shit the bed, it's on purpose & with all the data about bed shitting fully at their fingertips. They're deeply fucking dangerous. They praise the FBI every day, now. They demand cops, they insist on responsible surrender to and complicity with rapacious neoliberalism. They are worse. They're the trustees of the prison and they are proud of it. They are more than capable of cleverly serving Trump up as their excuse for No End of massacre, pillage, theft, pure malice.

Liberals can, should, and will fuck all the way off, forever.

32 notes


Randomly ranting about AI.

The thing that’s so fucking frustrating to me when it comes to chat ai bots and the amount of people that use those platforms for whatever goddamn reason, whether it be to engage with the bots or make them, is that they’ll complain that reading/creating fanfic is cringe or they don’t like reader-inserts or roleplaying with others in fandom spaces. Yet the very bots they’re using are mimicking the same methods they complain about as a base to create spaces for people to interact with characters they like. Where do you think the bots learned to respond like that? Why do you think you have to “train” AI to tailor responses you’re more inclined to like? It’s actively ripping off your creativity and ideas. Even if you don’t write, you are taking control of the scenario you want to reenact, the same thing writers do in general.

Some people literally take ideas that they find in fics online, word for word bar for bar, taking from individuals who have the capacity to think with their brains and imagination, and they’ll put it into the damn ai summary, and then put it on a separate platform for others so they can rummage through mediocre responses that lack human emotion and sensuality. Not only are the chat bots a problem, AI being in writing software and platforms too is another thing. AI shouldn’t be anywhere near the arts, because ultimately all it does is copy and mimic other people’s creations under the guise of creating content for consumption. There’s nothing appealing or original or interesting about what AI does, but with how quickly people are getting used to being forced to use AI because it’s being put into everything we use and do, people don’t care enough to do the labor of reading and researching on their own, it’s all through ChatGPT and that’s intentional.

I shouldn’t have to manually turn off AI learning software on my phone or laptop or any device I use, and they make it difficult to do so. I shouldn’t have to code my own damn things just to avoid using it. Like when you really sit down and think about how much AI is in our day to day life, especially when you compare the difference in the frequency of AI usage from 2 years ago to now, it’s actually ridiculous how we can’t escape it, and it’s only causing more problems.

People’s attention spans are deteriorating, their capacity to come up with original ideas and to be invested in storytelling is going down the drain along with their media literacy. It hurts more than anything cause we really didn’t have to go into this direction in society, but of course rich people are more inclined to make sure everybody on the planet are mindless robots and take whatever mechanical slop is fucking thrown at them while repressing everything that has to deal with creativity and passion and human expression.

The frequency of AI and the fact that it’s literally everywhere and you can’t escape it is a symptom of late stage capitalism and ties to the rise of fascism, as the corporations/individuals who create, manage, and distribute these AI systems couldn’t care less about the harmful biases that are fed into these systems. They also don’t care about the fact that the data centers that hold this technology need so much water and energy to manage it that it’s ruining our ecosystems and speeding up climate change that will have us experience climate disasters like with what’s happening in Los Angeles as it burns.

I pray for the downfall and complete shutdown of all ai chat bot apps and websites. It’s not worth it, and the fact that there’s so many people using it without realizing the damage it’s causing is so frustrating.

#I despise AI so damn much I can’t stand it#I try so hard to stay away from using it despite not being able to google something without the ai summary popping up#and now I’m trying to move all of my stuff out from Google cause I refuse to let some unknown ai software scrap my shit#AI is the antithesis to human creation and I wished more knew that#I can go on and on about how much I hate AI#fuck character ai fuck janitor ai fuck all of that bullshit#please support your writers and people in fandom spaces because we are being pushed out by automated systems

23 notes


Have I ever explained this concept I’m obsessed with?

Person who ends up cryogenically frozen for awhile™️ wakes up finally and it’s to a strange world where there’s robots everywhere

With their image

Turns out their best friend didn’t take their disappearance well and kept making replica after replica of them because they couldn’t quite nail the personality down, and after awhile, without having seen the original in forever, the robots have become copies of copies of copies (essentially, very flanderized versions of the Human)

The best friend also made a ton of other robots and essentially became an evil overlord (their just woken up friend is Not Impressed™️) leading to the creation of a resistance group set out to stop them

The Human (who is absolutely confused at the moment and a bit scared) runs into the resistance group and they think they’re a defective bot (because even tho the personalities are off, the appearances aren’t off except for blemishes getting erased) who thinks they’re real

And they’re like “if this robot thinks it’s a Human, who are we to deny it Humanity” while the Human keeps trying to explain they are a Human, and also trying to avoid getting caught and “reprogrammed” by bot versions of themself

(2 other fun things in this: they used to make robots with their friend and after their disappearance their friend used a lot of their stuff to make new robots and so a ton of things have the Human’s failsafes and backup codes so other robotic things are no problem for them, only their bot selves, which were made completely new after they disappeared are a problem, and a few of the first gen robots they made are still around and recognized them immediately and keep trying to track their maker down but the resistance group keeps interfering)

Can never really think of a satisfying ending with the rebellion group because it always just boils down to the Human finally kicking the door down to face their friend and being like “what the fuck did you do in my image????”

(And then my brain tries to make it more convoluted by being like “and then it turns out that’s actually not even their friend, their friend died awhile ago and left behind a robot version of themself which is why they suddenly started acting so weird and supervillain-y evil and they never actually get to see each other again, they both just ended up with funhouse artificial versions of each other, and there is no satisfying ending confrontation, there never will be”)

#no fandom#humans#robots#there’s also a ton of other stuff I can’t articulate well#like the friend became an ‘overlord’ because of corporate greed just wanting to automate everything

132 notes


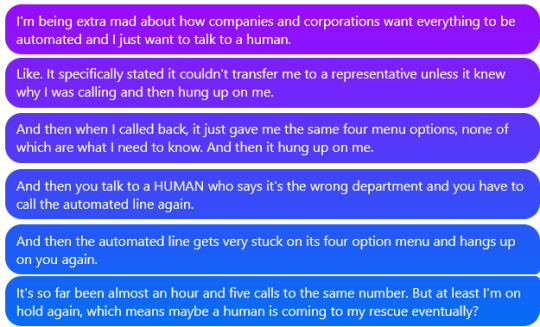

I know there are many people out there who appreciate automated menus and they really are helpful if you just need some quick account information or business hours or have anxiety when talking to a real human. I know automated menus can be very quick and very easy. But I also know that one of the big reasons more and more places are trying to switch to more automation is so they don't have to pay their workers. There's a very specific portion of the population out there who believes government office workers are free-loaders and participating in fraud, waste, and abuse of taxpayer dollars, so they are excited to see government workers fired, even though the services many of them provide make the lives of everyday people remarkably better.

For example, I have a very quick, very simple question regarding the return of a form I was sent in the mail. But I want this question answered by a real human who might indicate if I missed something important, as the whole reason this form was sent to me was because I missed something when I originally submitted it. I want a human to verify that I understood the instructions. A REAL, LIVE HUMAN.

I feel a little bit like the portion of the population who loves the idea of firing "lazy government workers" maybe doesn't realize that firing humans means no one is there to answer a phone call when you have a simple question. It also means that human is now unemployed and they absolutely HATE people who don't have "a real job" because the unemployed are a "drain on the good tax-paying people." But if you ask these people what a "real job" is and how people are supposed to stay employed when work keeps getting overtaken by generative predictive text algorithms ("AI"), they don't have an answer, other than continuous derogatory comments about "lazy free-loaders".

And the increased automation isn't even helpful. It's circular. There's no guarantee it'll give me correct information. There's going to be no accountability if it tells me incorrect information, as someone can easily change what the robot says and there would never be proof I was given incorrect information. Anyway. Maybe I'm alone in my desire to have my tax dollars go to paying real human workers who get good benefits and answer the phone when I have a question. (And yes, in the hour I've been typing this, I'm still on hold).

#this is the timeline from hell#generative text algorithms#customer service#automated menus#rehire humans

7 notes


“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable - errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
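To make "next-word-guessing program" concrete, here is a toy sketch in Python. It is nothing like a production chatbot: it assumes a tiny made-up corpus and only counts which word most often follows another (a bigram model). The function names and the corpus are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def guess_next_word(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, standing in for "billions of documents".
corpus = "the cat sat on the mat . the cat ate . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(guess_next_word(model, "the"))  # most common follower in this corpus
print(guess_next_word(model, "sat"))
```

The guesser always answers with whatever was most frequent in its training text. If the sentence you are actually finishing is about the dog, it will still offer "cat" after "the", because that is the statistically likely continuation, not because it is true.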

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
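One way to take this particular check off the human reviewer's plate is to compare every dependency name against a list of packages someone has actually vetted. The sketch below is an illustration only: the allowlist contents and the function names are hypothetical, and it assumes pip-style requirement lines. The name normalization follows the rule Python packaging uses for comparing project names.

```python
import re

# Hypothetical allowlist of vetted packages. A real project would load this
# from a lockfile or an internal registry rather than hard-coding it.
VETTED_PACKAGES = {"requests", "numpy", "huggingface_hub"}


def normalize(name):
    """Lowercase and collapse runs of '-', '_' and '.' into a single '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unvetted_requirements(requirement_lines, vetted=VETTED_PACKAGES):
    """Return the package names that do not appear in the vetted set."""
    vetted_normalized = {normalize(p) for p in vetted}
    flagged = []
    for line in requirement_lines:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        # Keep only the name, cutting at any version specifier or extras.
        name = re.split(r"[<>=!~\[;\s]", line, maxsplit=1)[0]
        if normalize(name) not in vetted_normalized:
            flagged.append(name)
    return flagged


suggested = ["requests>=2.0", "huggingface-cli", "NumPy==1.26"]
print(unvetted_requirements(suggested))
```

A mechanical check like this doesn't get tired, which is the point: it is the robot backstopping the human, not the other way around. It only catches the one kind of plausible lie it was written for.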

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

857 notes

Text

Another death caused by communism

#Another death caused by communism#cia#central intelligence agency#usa#america#usa is a terrorist state#usa is funding genocide#class war#human rights#anarcho communism#communist#communism memes#fully automated luxury gay space communism#anarchocommunism#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government#eat the rich#eat the fucking rich#eat the 1%#anti imperialism#anti elon musk#anti tesla

12 notes

Text

i like to complain about google translate but actually it & i have become best friends recently. it gives me a little puzzle (a completely bonkers translation) and then i get to spend a few seconds squinting at my screen trying to reverse engineer the inner workings of the crystal ball in google's basement. this is enrichment for me, specifically

#still thinking about an automated translation of a google review for a cemetary. english 'nice' > dutch 'leuk'. which CAN mean nice#but used like this it has a definite leaning towards being interpreted as meaning 'fun'#this is without getting into the perfectly endless supply of thai > english translations that are just Wrong#the computer frequently has no clue where a sentence starts or ends or who anyone is talking about at any given moment#and sometimes neither do i. but what i do have is a human brain. instant huge win on my part#*#that is to say. when a youtube comment reads 'daou [going where]' and google translate spits out 'Where are you going?'#i can very cleverly determine that this translation is missing something. this something being the entirety of daou (person)#many such cases. in a literal statistical way

10 notes

Text

Is AI fr gonna steal our jobs?

Disclaimer: This post is mostly speculative and meant to encourage discussion and different perspectives on the topic.

Some time ago, I, along with many others, thought that AI was mostly going to aid in all the task-centered, administrative, repetitive jobs. Cashiers, factory workers, call center workers: all the jobs that would benefit from automation were being taken over by AI.

When the Ghibli trend came around, it was a visceral shock to many artists.

What used to generate questionable, bad-looking art has developed, in a matter of mere months, into something that generates high-quality pieces, videos, music, animation and whatnot. [Examples of AI tools: Midjourney, DALL-E, ChatGPT]

Art is not simply something pretty to look at. It is the accumulation of the experiences, emotions and essence of humans. Art is their unique expression and the lens through which they see the world.

This blog is not an argument against the use of AI for art, but a call to understand what it really means.

Did most people see it coming when AI mimicked it with precision?

How long before it starts mimicking creativity, intuition, emotion and depth, all of what we thought was deeply and uniquely human?

"AI works by learning from lots of information, recognizing patterns, and using that knowledge to make decisions or do tasks like a human would." - ChatGPT.

Some time ago, the dominant argument was that AI might be able to copy the strokes of a painting or the words of a novel, but it cannot hold the hands of another human and tell them all is well, it cannot feel and experience the real world like us, it cannot connect with humans and it can't innovate and envision new solutions.

If you still believe this, I urge you to go to ChatGPT right now and open up to it like it was your friend. It will provide consolation and advice tailored so well to your individual behaviours that it might feel better than talking to your best friend.

What is a deep neurological, experience-based and emotional reaction to us is simply the analysis and application of data and patterns to AI. And the difference? Not easily distinguishable to the average human.

As long as the end result is not compromised, it doesn't matter to clients and employers whether the process was human or not. Efficiency is often prioritized above substance. And now even substance is being mimicked.

Currently, the prominent discussion online is that in order to improve our job security, we need to master AI tools. Instead of fighting for stability (which is nothing but an illusion now), we need to ride the waves of the new age flooding towards us, and work with AI instead of fighting against the change.

But the paradox is, the more we use AI the quicker it will learn from us, the quicker it will reduce the need for human guidance and supervision, and the quicker it will replace us.

Times are moving fast. We need everyone to be aware of the rate at which the world is changing and the things that are going on beneath the surface. If we simply take information at face value and avoid research, give it a few years or even months, and no one will know what hit us.

"Use of generative AI increased from 33% in 2023 to 71% in 2024. Use of AI by business function for the latest data varied from 36% in IT to 12% in manufacturing. Use of gen AI by business function for the latest data varied from 42% in marketing and sales to 5% in manufacturing." - McKinsey, Mar 12, 2025.

By 2030, 14% of employees will have been forced to change their career because of AI. - McKinsey

Since 2000, automation has resulted in 1.7 million manufacturing jobs being lost. - BuiltIn

There is a radical change gaining momentum right now. It's gonna be humans vs AI, starting with jobs.

It's not a matter of which jobs and skills are AI-proof, but which ones AI is likely to take over last.

What I personally predict is that world leaders are soon going to have to make a transformative choice.

This can either lead to a world where humans are provided with money and resources instead of working to earn them, as AI generates profit, and we can sit back and enjoy the things we love doing.

Or the other option is that we are going to have to live on scraps as small elite groups take over all the resources and tech.

Dystopia or utopia? The line is blurred.

Thankfully, for now, the choice is in human hands.

#ai generated#awareness#AI awareness#Ai#job security#ai job#ai vs humans#ai discussion#ai artwork#ai automation#rant post#ai speculation#ai blog

6 notes
