#IT network and hardware

Explore tagged Tumblr posts

Text

USA 1993

47 notes


Text

i have no computer goals. i dont have any computer wants or needs. im like a computer buddhist. this is my main barrier to coding

#i dont need to automate anything.#i dont really care about networks or graphics programming.#i dont really want to do anything hardware related.#and i DONT want to make websites.

127 notes


Text

Very interested in this gonna have to see what the reviews are, a cheapish foss dev friendly router is a cool prospect

13 notes


Note

QUESTION TWO:

SWITCH BOXES. you said that’s what monitors the connections between systems in the computer cluster, right? I assume it has software of its own but we don’t need to get into that, anyway, I am so curious about this— in really really large buildings full of servers, (like multiplayer game hosting servers, Google basically) how big would that switch box have to be? Do they even need one? Would taking out the switch box on a large system like that just completely crash it all?? While I’m on that note, when it’s really large professional server systems like that, how do THEY connect everything to power sources? Do they string it all together like fairy lights with one big cable, or??? …..the voices……..THE VOICES GRR

I’m ascending (autism)

ALRIGHT! I'm starting with this one because the first question that should be answered is what the hell is a server rack?

Once again, long post under cut.

So! The first thing I should get out of the way is what the difference is between a computer and a server. Which is like asking the difference between a gaming console and a computer. Or better yet, the difference between a gaming computer and a regular everyday PC. Which is... that they are pretty much the same thing! But if you game on a gaming computer, you'll get much better performance than on a standard PC. This is (mostly) because a gaming computer has a whole separate processor dedicated to processing graphics (GPU). A server is different from a PC in the same way: it's just a computer that is specifically built to handle the loads of running an online service. That's why you can run a server off a random PC in your closet: the core components are the same! (So good news about your other question. Short answer, yes! It would be possible to connect the hodgepodge of computers to the sexy server racks upstairs, but I'll get more into that in the next long post)

But if you want to cater to hundreds or thousands of customers, you need the professional stuff. So let's break down what's (most commonly) in a rack setup, starting with the individual servers (their height is measured in rack units, or 'U', so you'll see them called 1U, 2U, and so on).

Short version of someone setting one up!

18 fucking hard drives. 2 CPUs. How many sticks of ram???

Holy shit, that's a lot. Now depending on your priorities, the next question is, can we play video games on it? Not directly! This thing doesn't have a GPU so using it to render a video game works, but you won't have sparkly graphics with high frame rate. I'll put some video links at the bottom that go more into the anatomy of the individual units themselves.

I pulled this screenshot from this video rewiring a server rack! As you can see, there are two switch boxes in this server rack! Each rack gets its own switch box to manage which unit in the rack gets what. So it's not like everything is connected to one massive switch box. You can add more capacity by making it bigger or you can just add another one! And if you take it out then shit is fucked. Communication has been broken, 404 website not found (<- not actually sure if this error will show).

So how do servers talk to one another? Again, I'll get more into that in my next essay response to your questions. But basically, they can talk over the internet the same way that your machine does (each server has their own address known as an IP and routers shoot you at one).

POWER SUPPLY FOR A SERVER RACK (finally back to shit I've learned in class) YOU ARE ASKING IF THEY ARE WIRED TOGETHER IN SERIES OR PARALLEL! The answer is parallel. Look back up at the image above, I've called out the power cables. In fact, watch the video of that guy wiring that rack back together very fast. Everything on the right is power. How are they able to plug everything together like that? Oh god I know too much about this topic do not talk to me about transformers (<- both the electrical type and the giant robots). BASICALLY, in a data center (place with WAY too many servers) the building is literally built with that kind of draw in mind (oh god the power demands of computing, I will write a long essay about that in your other question). Worrying about popping a fuse is only really a thing when plugging a server into an outlet in your house.
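To put rough numbers on the parallel wiring: in a parallel circuit every server sees the full supply voltage, and the currents of the branches add up. Here is a minimal sketch of that arithmetic. The wattages, voltages, breaker ratings, and the 80% continuous-load margin are all made-up illustration values (assumptions, not figures from the video or from any real rack).

```python
# Hypothetical per-server power draws in watts (illustration only).
SERVER_POWER_WATTS = [450.0, 450.0, 600.0, 300.0]


def branch_currents(voltage, powers):
    """Current in each parallel branch: every branch sees the same voltage, so I = P / V."""
    return [power / voltage for power in powers]


def total_current(voltage, powers):
    """In parallel wiring the branch currents simply add up."""
    return sum(branch_currents(voltage, powers))


def fits_circuit(voltage, powers, breaker_amps, derating=0.8):
    """True if the summed current stays under the breaker rating times a safety margin.

    The 0.8 derating is an assumed rule of thumb for continuous loads.
    """
    return total_current(voltage, powers) <= breaker_amps * derating


# Assumed household circuit: 120 V with a 15 A breaker.
print(total_current(120.0, SERVER_POWER_WATTS))
print(fits_circuit(120.0, SERVER_POWER_WATTS, breaker_amps=15.0))

# Assumed higher-voltage circuit: 230 V with a 16 A breaker.
print(fits_circuit(230.0, SERVER_POWER_WATTS, breaker_amps=16.0))
```

With these invented numbers the same four machines overload the household circuit but fit comfortably on the higher-voltage one, which is the "popping a fuse at home" problem in miniature.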

Links to useful youtube videos

How does a server work? (great guide in under 20 min)

Rackmount Server Anatomy 101 | A Beginner's Guide (more comprehensive breakdown but an hour long)

DATA CENTRE 101 | DISSECTING a SERVER and its COMPONENTS! (the guy is surrounded by screaming server racks and is close to incomprehensible)

What is a patch panel? (More stuff about switch boxes- HOLY SHIT there's more hardware just for managing the connection???)

Data Center Terminologies (basic breakdown of entire data center)

Networking Equipment Racks - How Do They Work? (very informative)

Funny

#is this even writing advice anymore?#I'd say no#Do I care?#NOPE!#yay! Computer#I eat computers#Guess what! You get an essay for every question!#oh god the amount of shit just to manage one connection#I hope you understand how beautiful the fact that the internet exists and it's even as stable as it is#it's also kind of fucked#couldn't fit a college story into this one#Uhhh one time me and a bunch of friends tried every door in the administrative building on campus at midnight#got into some interesting places#took candy from the office candy bowl#good fun#networking#server racks#servers#server hardware#stem#technology#I love technology#Ask#spark

7 notes


Text

How to Install Unraid NAS: Complete Step-by-Step Guide for Beginners (2025)

If you’re looking to set up a powerful, flexible network-attached storage (NAS) system for your home media server or small business, Unraid is a brilliant choice. This comprehensive guide will walk you through the entire process to install Unraid NAS from start to finish, with all the tips and tricks for a successful setup in 2025. Unraid has become one of the most popular NAS operating systems…

#2025 nas guide#diy nas#home media server#home server setup#how to install unraid#network attached storage#private internet access unraid#small business nas#unraid backup solution#unraid beginner tutorial#unraid community applications#unraid data protection#unraid docker setup#unraid drive configuration#unraid hardware requirements#unraid licencing#unraid media server#unraid nas setup#unraid parity configuration#unraid plex server#unraid remote access#unraid server guide#unraid troubleshooting#unraid vpn configuration#unraid vs synology

2 notes


Text

Discover top-quality laptops, computers, gaming accessories, tech gadgets, and computer accessories at Matrix Warehouse. Shop online or in-store across South Africa for unbeatable prices and nationwide delivery. Visit now!

#Laptops & Accessories#Desktop Computers#Smart Devices#Computer Hardware#Monitors & Projectors#Computer Accessories#Backup Power Solutions#Printers and Printer Toners#Networking Equipment#Computer Software

2 notes


Text

why does horizon zero dawn, a game that came out 7 years ago and only got a PC port in 2020, have a remaster in 2024??? this is absurd.

#like i suppose it's for the newer console generation but like... why#and why is it a thing i can buy for my PC too for like 50 fucking euros#this is some late stage capitalism bullshit#and here i thought the life is strange remasters were outrageous#all of these games are perfectly fine as they are#remaster a game that actually would be a challenge to play on current hardware pls#i'm on my knees begging#also i'm checking the reviews and lol apparently you need an internet connection and a playstation account to play the remaster#you know... network connection to play horizon zero dawn. a singleplayer action adventure game.#who bought this. who gave them money. identify yourself.#okay new update: i checked and if you have the og game on steam already you only have to pay 10 euros#which sounds much better#i still think this is some late stage capitalist bullshit though just make a new fucking game oh my god#also can i bitch about the life is strange thing a bit?#because the before the storm remaster came out 5 fucking years after the og game#and you don't even get the deal you get with horizon if you have the original game#deck nine it's on sight#and don't think i forgot about the stories with the toxic work environment. fun!#at least dontnod's reputation is still just that they're a weird french company who don't have the LiS IP anymore. good for them!

4 notes


Text

why's it so hard to set up a custom minecraft server...

#like cmon I have a public facing server already#I know it works since the logs dont show any errors#and like ive tried running the exact same hardware+software setup on my local network#but like unfortunately the public facing server only has IPv6#and I cant connect cause my ISP only issues IPv4#and the other people I want to play with probably only have v4 as well#I guess the hosting provider I have technically has a v4->v6 proxy to allow ppl with v4 only to connect to their servers#but it only passes through http imap and smtp traffic#so its pretty much useless for what I want to do#and like so far finding a proxy service that actually does what i want it to do seems impossible#like PLEASE I WANNA PLAY MINECRAFT WITH SOMEONE ELSE#I DONT WANNA SPEND TIME TRYING TO FIGURE OUT HOW TO GET THE SERVER RUNNING
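One possible workaround for the IPv4-only players and the IPv6-only server is a plain TCP relay on a machine that has both kinds of connectivity. The sketch below is a minimal, untuned example of that idea using Python's standard `asyncio` streams. The addresses are placeholders (`2001:db8::1` is from the IPv6 documentation range), port 25565 is the Minecraft Java Edition default, and the whole thing assumes you have a dual-stack host to run it on.

```python
import asyncio
import ipaddress

# Placeholder values: replace with your own relay host and server address.
LISTEN_HOST = "0.0.0.0"        # IPv4 side that players connect to
LISTEN_PORT = 25565            # Minecraft Java Edition default port
TARGET_HOST = "2001:db8::1"    # hypothetical IPv6-only game server
TARGET_PORT = 25565


def address_family(host):
    """Return 'IPv4' or 'IPv6' for a literal IP address string."""
    return f"IPv{ipaddress.ip_address(host).version}"


async def pipe(reader, writer):
    """Copy bytes from reader to writer until the sending side closes."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    finally:
        writer.close()


async def handle_client(client_reader, client_writer):
    """Open a connection to the IPv6 server and shuttle traffic both ways."""
    upstream_reader, upstream_writer = await asyncio.open_connection(
        TARGET_HOST, TARGET_PORT
    )
    await asyncio.gather(
        pipe(client_reader, upstream_writer),
        pipe(upstream_reader, client_writer),
    )


async def main():
    """Listen on the IPv4 address and relay every incoming connection."""
    server = await asyncio.start_server(handle_client, LISTEN_HOST, LISTEN_PORT)
    async with server:
        await server.serve_forever()

# To start the relay, call: asyncio.run(main())
```

Players would then connect to the relay's IPv4 address instead of the server's IPv6 one. This forwards raw TCP only, with no authentication or rate limiting, so treat it as a starting point rather than something to leave exposed.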

3 notes


Text

USA 1993

46 notes


Text

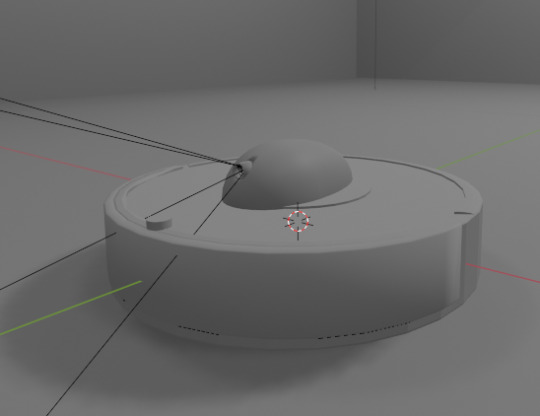

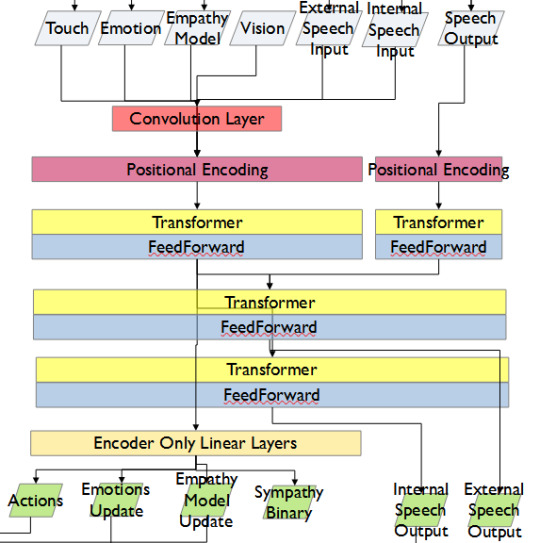

Applied AI - Integrating AI With a Roomba

AKA. What have I been doing for the past month and a half

Everyone loves Roombas. Cats. People. Cat-people. There have been a number of Roomba hacks posted online over the years, but an often overlooked point is how very easy it is to use Roombas for cheap applied robotics projects.

Continuing on from a project done for academic purposes, today's showcase is a work in progress for a real-world application of Speech-to-text, actionable, transformer based AI models. MARVINA (Multimodal Artificial Robotics Verification Intelligence Network Application) is being applied, in this case, to this Roomba, modified with a Raspberry Pi 3B, a 1080p camera, and a combined mic and speaker system.

The hardware specifics have been a fun challenge over the past couple of months, especially relating to the construction of the 3D mounts for the camera and audio input/output system.

Roomba models are particularly well suited to tinkering - the serial connector allows the interface of external hardware - with iRobot (the provider company) having a full manual for commands that can be sent to the Roomba itself. It can even play entire songs! (Highly recommend)
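As a rough illustration of what "commands sent over the serial connector" can look like, here is a minimal sketch that only builds the byte sequences for defining and playing a song. The opcode values (Start 128, Safe 131, Song 140, Play 141), the 16-note limit, and the 1/64-second duration unit are as I understand them from iRobot's published Open Interface document, so check them against the manual for your model. The helper names are hypothetical, and nothing here is taken from the MARVINA code.

```python
# Opcodes as listed in the iRobot Open Interface document (verify for your model).
OP_START = 128   # start the Open Interface
OP_SAFE = 131    # enter safe mode so actuator commands are accepted
OP_SONG = 140    # define a song in a slot
OP_PLAY = 141    # play a previously defined song


def song_command(slot, notes):
    """Build the bytes that store a song in the given slot.

    notes is a list of (midi_pitch, duration) pairs, with duration in
    units of 1/64 of a second. A song holds between 1 and 16 notes.
    """
    if not 1 <= len(notes) <= 16:
        raise ValueError("a song must contain between 1 and 16 notes")
    payload = [OP_SONG, slot, len(notes)]
    for pitch, duration in notes:
        payload += [pitch, duration]
    return bytes(payload)


def play_command(slot):
    """Build the bytes that play the song stored in the given slot."""
    return bytes([OP_PLAY, slot])


# Hypothetical three-note tune: C, E, G, each half a second (32/64 s).
tune = [(60, 32), (64, 32), (67, 32)]
startup = bytes([OP_START, OP_SAFE])
print(list(startup + song_command(0, tune) + play_command(0)))
```

Writing these bytes to the robot would need a serial library and the right port and baud rate for the specific Roomba, which are left out here because they vary by model and setup.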

Scope:

Current:

The aim of this project is to, initially, replicate the verbal command system which powers the current virtual environment based system.

This has been achieved with the custom MARVINA AI system, which is interfaced with both the PocketSphinx Speech-To-Text (SpeechRecognition · PyPI) and Piper-TTS Text-To-Speech (GitHub - rhasspy/piper: A fast, local neural text to speech system) AI systems. This gives the AI the ability to perform one of 8 commands, give verbal output, and use a limited-training version of the emotional-empathy system.

This has mostly been achieved. Now that I know it's functional I can now justify spending money on a better microphone/speaker system so I don't have to shout at the poor thing!

The latency on the Raspberry Pi 3B for each output is a very sprightly 75ms! This leaves plenty of headroom within the current AI input "framerate" of 500ms.

Future - Software:

Subsequent testing will imbue the Roomba with a greater sense of abstracted "emotion" - the AI having a ground set of emotional state variables which decide how it, and the interacting person, are "feeling" at any given point in time.

This, ideally, is to give the AI system a sense of motivation. The AI is essentially being given separate drives for social connection, curiosity and other emotional states. The programming will be designed to optimise for those, while the emotional model will regulate this on a separate, biologically based, system of under and over stimulation.

In other words, a motivational system that incentivises only up to a point.

The current build does have such a system implemented, but it has only very limited testing data. One of the key parts of this project's success will be to generatively create a training data set which will allow for high-quality interactions.

The future of MARVINA-R will be relating to expanding the abstracted equivalent of "Theory-of-Mind": in other words, having MARVINA-R "imagine" a future which could exist in order to consider its choices, and what actions it wishes to take.

This system is based, in part, upon the Dynalang model created by Lin et al. 2023 at UC Berkeley ([2308.01399] Learning to Model the World with Language (arxiv.org)) but with a key difference - MARVINA-R will be running with two neural networks - one based on short-term memory and the second based on long-term memory. Decisions will be made based on which is most appropriate, and on how similar the current input data is to the generated world-model of each network.

Once at rest, MARVINA-R will effectively "sleep", essentially keeping the most important memories, and consolidating them into the long-term network if they lead to better outcomes.

This will allow the system to be tailored beyond its current limitations - where it can be designed to be motivated by multiple emotional "pulls" for its attention.

This does, however, also increase the number of AI outputs required per action (by a factor of about 10 to 100) so this will need to be carefully considered in terms of the software and hardware requirements.
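To see why that increase matters, here is a back-of-the-envelope sketch using the two figures quoted in the post (75 ms per output, 500 ms between inputs). It assumes the extra outputs run one after another on the same Pi and each costs the same 75 ms, which is a simplification and not something the post states.

```python
INFERENCE_MS = 75   # per-output latency quoted in the post
FRAME_MS = 500      # interval between AI inputs quoted in the post


def frame_budget(outputs_per_action):
    """Return (total latency in ms, whether it fits inside one input interval).

    Assumes outputs are computed sequentially at a constant cost each.
    """
    total_ms = outputs_per_action * INFERENCE_MS
    return total_ms, total_ms <= FRAME_MS


def max_outputs_per_frame():
    """Largest number of sequential outputs that still fits in one interval."""
    return FRAME_MS // INFERENCE_MS


for count in (1, 10, 100):
    print(count, frame_budget(count))
print(max_outputs_per_frame())
```

Under those assumptions only a handful of outputs fit in one interval, so a 10x to 100x increase would need faster hardware, a smaller model, a longer interval, or some mix of the three.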

Results So Far:

Here is the current prototyping setup for MARVINA-R. As of a couple of weeks ago, I was able to run the entire Raspberry Pi and applied hardware setup and successfully interface with the robot with the components disconnected.

I'll upload a video of the final stage of initial testing in the near future - it's great fun!

The main issues really do come down to hardware limitations. The microphone is a cheap ~$6 thing from Amazon and requires you to shout at the poor robot to get it to do anything! The second limitation currently comes from outputting the text-to-speech, which does have a time lag from speaking to output of around 4 seconds. Not terrible, but also can be improved.

To my mind, the proof of concept has been created - this is possible. Now I can justify further time, and investment, for better parts and for more software engineering!

#robot#robotics#roomba#roomba hack#ai#artificial intelligence#machine learning#applied hardware#ai research#ai development#cybernetics#neural networks#neural network#raspberry pi#open source

8 notes


Text

Unlock your future in networking with Melonia Academy’s exclusive special offer on CCNA (Cisco Certified Network Associate) courses! Whether you prefer the flexibility of online learning or the hands-on experience of an in-person classroom, we have the perfect program for you.

3 notes


Text

-`♡´

2 notes


Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/


In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”
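The halving figure is easier to picture as a multiplier. A minimal sketch, assuming the 8-month halving time holds as a smooth exponential trend (the article gives it only as a rough estimate): the compute needed for a fixed level of performance shrinks by a factor of 2^(t/8) after t months.

```python
HALVING_MONTHS = 8.0  # halving time reported in the article (approximate)


def compute_reduction(months):
    """Factor by which required training compute shrinks after the given time.

    Assumes a constant halving time, i.e. a clean exponential trend.
    """
    return 2.0 ** (months / HALVING_MONTHS)


for months in (8, 12, 24):
    print(months, round(compute_reduction(months), 2))
```

Under this assumption one year of algorithmic progress is worth a bit under 3x in compute, and two years is worth 8x.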

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology


#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes


Text

"no brand is actually good--" true. "--or better." now hold on a minute.

#look. yea all companies are shit.#but there are tangible differences between like. the Level Of Shitty certain companies in comparable positions are yknow#this applies to many companies but#this was about someone saying the above quote about android users and like#yeah google is absolute shit and so are all phone companies and phone network companies#but there is in fact just a Base Difference between open source software (android) and closed source software (ios)#and apple has the whole 'our os is tied to our phones' thing while android can be installed on multiple brands of hardware#there is a tangible difference in the base degree of freedom this allows for android users#something something my APKs would make steve jobs sick#anyways this is not me praising google as a company theyre still abhorrent but its just about like#the facts of the matter#im not defending android cause ive got brand loyalty im literally preparing to degoogle my phone run a different open source OS instead lmao#but like. there are levels and its important to be able to see the difference yknow

3 notes


Text

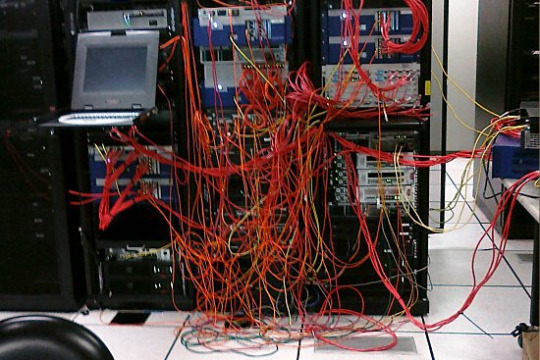

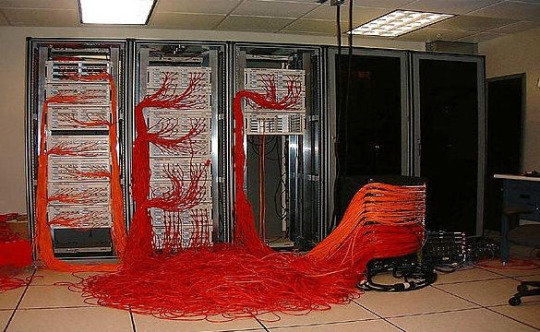

The Horror of Cable Atrophy

my favorite genre of images are server rooms that look like someone murdered a computer

36K notes


Text

PowerEdge Tower Servers in Malaysia: Scalable Performance for Evolving Business Needs

The PowerEdge T550 and other similar tower servers are ideal for businesses needing enterprise-grade compute power but that do not maintain a dedicated data centre environment at that site. Whether the company is operating in Kuala Lumpur, Johor Bahru, or Penang, the use of PowerEdge Tower Servers is ideal for branch offices, small and medium enterprises, and remote workers.


#PowerEdge#TowerServers#DataCenter#ServerTechnology#ITInfrastructure#ServerSolutions#TechInnovation#CloudComputing#BusinessComputing#EnterpriseSolutions#ServerManagement#ITProfessionals#TechTrends#ServerInfrastructure#Hardware#TechnologySolutions#ComputingPower#InfrastructureManagement#Networking#DataStorage

0 notes