#Image to Text | Extract Text from Image | Search using Web

Explore tagged Tumblr posts

Text

Image to Text | Extract Text from Image | Search using Web

Detailed video: https://youtu.be/2R7Nn7GyLdU

#techalert #technical #howto #bing #ai #photo #windows10 #extraction #imagetotext #instagram #fb #trending #reels #youtube



Text

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.
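As one illustration of the file-organization idea, here is a minimal sketch that sorts files into subfolders by extension. The function name `organize_by_extension` and the sample file names are made up for this example, and the demo runs in a temporary directory so no real files are touched.

```python
import shutil
import tempfile
from pathlib import Path


def organize_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into a subfolder named after its extension."""
    moved: dict[str, int] = {}
    # Snapshot the listing first so moving files does not disturb iteration.
    for item in list(folder.iterdir()):
        if not item.is_file():
            continue
        # "report.PDF" -> "pdf"; files without an extension go to "no_extension".
        ext = item.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[ext] = moved.get(ext, 0) + 1
    return moved


# Demo in a throwaway directory (hypothetical file names).
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("notes.txt", "data.csv", "README"):
        (root / name).write_text("example")
    summary = organize_by_extension(root)
    txt_was_moved = (root / "txt" / "notes.txt").exists()
```

A portfolio version would likely add a dry-run flag and handle name collisions, which this sketch ignores.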

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.
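To give a sense of scale, a "simple blockchain" can start as a list of blocks where each block stores the hash of the one before it. The sketch below assumes that minimal design (no proof-of-work, no networking); all names and sample data are illustrative.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """Hash the block's contents; changing any field changes the hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain: list[dict], data: str) -> None:
    """Append a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append(
        {
            "index": index,
            "data": data,
            "prev_hash": prev_hash,
            "hash": block_hash(index, data, prev_hash),
        }
    )


def is_valid(chain: list[dict]) -> bool:
    """Check every link and recompute every hash."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i > 0 else GENESIS_PREV
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
    return True


chain: list[dict] = []
add_block(chain, "genesis")
add_block(chain, "alice pays bob 5")
valid_before = is_valid(chain)

chain[1]["data"] = "alice pays bob 500"  # tamper with a stored block
valid_after = is_valid(chain)
```

Tampering with stored data invalidates the chain because the recomputed hash no longer matches the stored one.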

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.
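Two formulas that such a calculator might start with are compound growth and the fixed monthly payment on an amortized loan. The sketch below uses the standard textbook formulas; the function names and parameters are illustrative choices, not part of any particular library.

```python
def future_value(principal: float, annual_rate: float, years: float,
                 periods_per_year: int = 12) -> float:
    """Compound growth: principal * (1 + r/n) ** (n * t)."""
    return principal * (1 + annual_rate / periods_per_year) ** (periods_per_year * years)


def monthly_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed monthly payment on a fully amortized loan."""
    n = years * 12          # total number of monthly payments
    r = annual_rate / 12    # interest rate per month
    if r == 0:
        return principal / n  # no interest: just split the principal evenly
    growth = (1 + r) ** n
    return principal * r * growth / (growth - 1)


# Example inputs (hypothetical): a 30-year loan of 100,000 at 6% per year.
payment = monthly_payment(100_000, 0.06, 30)
savings = future_value(1_000, 0.12, 1, periods_per_year=1)
```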

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

If you want to know more, click here: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects#top python projects#best python projects#analytics jobs#python#coding#programming#machine learning


Text

Scrape Smarter with the Best Google Image Search APIs

Looking to enrich your applications or datasets with high-quality images from the web? The Real Data API brings you the best Google Image Search scraping solutions—designed for speed, accuracy, and scale.

📌 Key Highlights:

🔍 Extract relevant images based on keywords, filters & advanced queries

⚙️ Integrate seamlessly into AI/ML pipelines and web applications

🧠 Ideal for eCommerce, research, real estate, marketing & visual analytics

💡 Structured output with metadata, image source links, alt-text & more

🚀 Scalable and customizable APIs to suit your unique business use case

From product research to content creation, image scraping plays a pivotal role in automation and insight generation. 📩 Contact us: [email protected]


Text

Voice & Visual Search Optimization: Mastering the Future of Search

Introduction: The Rising Dominance of Alternative Search Methods

The digital search landscape is undergoing a radical transformation, with voice and visual searches becoming increasingly prevalent. Recent data shows that 50% of U.S. adults now use voice search daily, while visual search adoption has grown by 300% since 2020. As consumer behavior shifts toward these more natural, conversational search methods, businesses must adapt their SEO strategies or risk becoming invisible in search results. This comprehensive guide explores cutting-edge optimization techniques for both voice search and Pinterest's visual discovery platform, providing actionable strategies to future-proof your digital presence in 2024.

Part 1: Optimizing for Voice Search in 2024

Understanding Voice Search Behavior

Voice searches differ fundamentally from traditional text queries. When speaking to devices, users tend to use longer, conversational phrases (average 29 words vs. 4.2 words for text searches) and question-based formats. For example, while someone might type "best Italian restaurant NYC," they're more likely to ask their smart speaker, "What's the highest-rated Italian restaurant near me with vegan options that's open now?" This shift requires content that directly answers specific, intent-driven questions in a natural speaking style.

Key Optimization Strategies

Focus on Question-Based Keywords: Structure content around common who/what/when/where/why questions in your niche. Tools like AnswerThePublic and Google's "People Also Ask" sections reveal valuable question-based queries. Create dedicated FAQ pages or incorporate Q&A formats within existing content to capture these opportunities.

Prioritize Local SEO: With 58% of voice searches seeking local business information, ensure your Google Business Profile is complete and optimized. Include natural language phrases like "near me" in your content and metadata. Local schema markup helps search engines understand your location-specific information.

Optimize for Featured Snippets: Voice assistants frequently pull answers from position zero results. Structure content with clear, concise answers (40-60 words) above the fold using bullet points or numbered lists. Use header tags (H2, H3) to organize information hierarchically, making it easier for algorithms to extract relevant answers.

Improve Page Speed & Mobile Experience: Voice search results favor pages that load quickly (under 2 seconds) and provide excellent mobile experiences. Compress images, leverage browser caching, and use responsive design. Google's Core Web Vitals should be a top priority.

Leverage Natural Language Processing: Create content that mimics human conversation patterns. Instead of keyword-stuffed paragraphs, write in complete sentences that flow naturally. Tools like Clearscope or MarketMuse can help analyze and optimize for semantic search relevance.
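The local schema markup mentioned under local SEO is usually embedded as JSON-LD. As a rough sketch, the snippet below builds a schema.org `LocalBusiness` object in Python and wraps it in a script tag; the business details are invented placeholders, and the helper names are illustrative.

```python
import json


def local_business_data(name: str, street: str, city: str, region: str,
                        postal_code: str, phone: str) -> dict:
    """Build a schema.org LocalBusiness description as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
        },
    }


def to_script_tag(data: dict) -> str:
    """Wrap the JSON-LD in the script tag that goes into the page's HTML."""
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(data, indent=2)
        + "\n</script>"
    )


# Placeholder business details, for illustration only.
business = local_business_data(
    "Example Trattoria", "123 Example St", "New York", "NY", "10001", "+1-555-0100"
)
snippet = to_script_tag(business)
```

The resulting `snippet` would be placed in the page's HTML; which additional properties (opening hours, geo coordinates) are worth adding depends on the business.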

Part 2: Mastering Pinterest SEO for Visual Search

The Power of Visual Discovery

Pinterest functions as a visual search engine, with 85% of users coming to the platform to find and shop for products. Unlike traditional search engines, Pinterest's algorithm prioritizes fresh, visually appealing content that sparks inspiration. The platform's Lens technology allows users to search by uploading images or taking photos, making visual optimization crucial for discoverability.

Proven Pinterest Ranking Strategies

Keyword-Rich Image Descriptions: Pinterest's algorithm reads text within images using OCR technology. Include clear, legible text overlay on pins with primary keywords. Write detailed, keyword-rich descriptions (up to the 500-character limit) that accurately describe the visual content while incorporating natural language search terms.

Optimized Pin Formats: Vertical images (2:3 or 4:5 aspect ratio) perform best, with ideal dimensions of 1000x1500 pixels. Use high-contrast colors that stand out in feeds, and maintain consistent branding across all pins. Video pins see 3x more engagement than static images, so create short, captivating clips demonstrating products or processes.

Strategic Keyword Placement: Incorporate target keywords in the following places:

Pin titles (first 30 characters are most visible)

Image file names (use hyphens between words)

Board titles and descriptions

Hashtags (3-5 relevant, specific tags)
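The file-name advice in the list above (hyphens between words) is easy to automate. Here is a small sketch that turns a human-readable description into a hyphenated file name; the function name `seo_filename` and the sample descriptions are illustrative.

```python
import re
import unicodedata


def seo_filename(description: str, extension: str = "jpg") -> str:
    """Turn a description into a lowercase, hyphen-separated file name."""
    # Drop accents so "Café" becomes "Cafe" instead of losing the letter.
    ascii_text = (
        unicodedata.normalize("NFKD", description)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")
    return f"{slug}.{extension}"


pin_file = seo_filename("Rustic Kitchen Shelf Ideas!")
accented_file = seo_filename("Café  Décor_2024", "png")
```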

Fresh Content Strategy: Pinterest rewards consistent posting with new, original visuals. Aim for 5-10 pins daily, varying formats between static images, videos, idea pins, and carousels. Use seasonal trends and Pinterest Predicts to anticipate what users will search for next.

Leverage Rich Pins: Enable product pins (with real-time pricing), recipe pins (with cook times and ingredients), or article pins (with headlines and authors) to provide more context to both users and the algorithm. These earn 30% more engagement than standard pins.

Part 3: Converging Strategies for Voice & Visual Search

Creating Unified Search Experiences

The future belongs to integrated search experiences where users combine voice commands with visual inputs. Optimize for this convergence by:

Structuring Data for Multimodal Search: Implement schema markup for both text and visual content. Product schema should include high-quality image references, while how-to content should have corresponding video schema.

Developing Visual Answer Content: Create infographics and visual guides that answer common voice search questions. For example, a "how to tie a tie" voice query could surface a Pinterest pin with step-by-step illustrations.

Optimizing for Cross-Platform Discovery: Ensure your visual content appears in Google Image search by including descriptive alt text and captions. Conversely, make sure voice-optimized content includes reference images when appropriate.

Conclusion: Future-Proofing Your Search Strategy

As voice and visual search continue their rapid adoption, businesses must evolve beyond traditional SEO tactics. By implementing these 2024 optimization strategies:

✅ Structure content for conversational queries and question-based searches

✅ Optimize visual assets with keyword-rich metadata and descriptions

✅ Maintain technical excellence for fast-loading, mobile-friendly experiences

✅ Leverage schema markup to enhance multimodal discoverability

✅ Create content that satisfies both informational and commercial intent

Brands that master both voice and visual search optimization will gain a significant competitive advantage in the evolving digital landscape. The time to adapt is now—consumers are already searching differently, and your visibility depends on meeting them where (and how) they're looking.

Pro Tip: Conduct quarterly audits of your voice and visual search performance using tools like SEMrush's Position Tracking and Pinterest Analytics to identify new opportunities and refine your approach.

By embracing these next-generation search optimization techniques with Coding Nectar, you'll position your brand at the forefront of the sensory search revolution.


Text

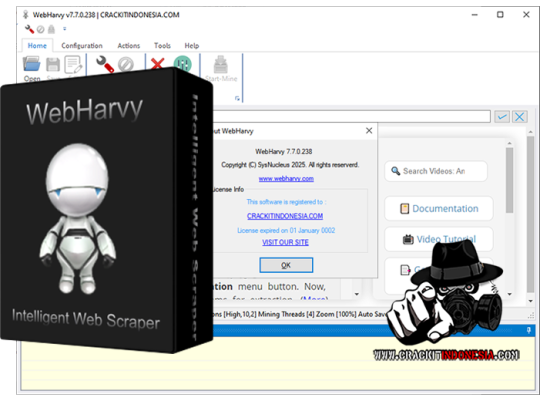

Intuitive Powerful Visual Web Scraper

WebHarvy can automatically scrape Text, Images, URLs & Emails from websites, and save the scraped content in various formats. WebHarvy Web Scraper can be used to scrape data from www.yellowpages.com. Data fields such as name, address, phone number, website URL etc. can be selected for extraction by just clicking on them!

Point and Click Interface

WebHarvy is a visual web scraper. There is absolutely no need to write any scripts or code to scrape data. You will be using WebHarvy's in-built browser to navigate web pages. You can select the data to be scraped with mouse clicks. It is that easy!

Automatic Pattern Detection

WebHarvy automatically identifies patterns of data occurring in web pages. So if you need to scrape a list of items (name, address, email, price etc.) from a web page, you need not do any additional configuration. If data repeats, WebHarvy will scrape it automatically.

Save to File or Database

You can save the data extracted from web pages in a variety of formats. The current version of WebHarvy Web Scraper allows you to export the scraped data as an XML, CSV, JSON or TSV file. You can also export the scraped data to an SQL database.

Scrape from Multiple Pages

Often web pages display data such as product listings in multiple pages. WebHarvy can automatically crawl and extract data from multiple pages. Just point out the 'link to the next page' and WebHarvy Web Scraper will automatically scrape data from all pages.

Keyword based Scraping

Keyword based scraping allows you to capture data from search results pages for a list of input keywords. The configuration which you create will be automatically repeated for all given input keywords while mining data. Any number of input keywords can be specified.

Proxy Servers

To scrape anonymously and to prevent the web scraping software from being blocked by web servers, you have the option to access target websites via proxy servers. Either a single proxy server address or a list of proxy server addresses may be used.

Category Scraping

WebHarvy Web Scraper allows you to scrape data from a list of links which leads to similar pages within a website. This allows you to scrape categories or subsections within websites using a single configuration.

Regular Expressions

WebHarvy allows you to apply Regular Expressions (RegEx) on Text or HTML source of web pages and scrape the matching portion. This powerful technique offers you more flexibility while scraping data.

Technical Support

Once you purchase WebHarvy Web Scraper you will receive free updates and free support from us for a period of 1 year from the date of purchase. Bug fixes are free for lifetime.

WebHarvy 7.7.0238 Released on May 19, 2025

Updated Browser

WebHarvy’s internal browser has been upgraded to the latest available version of Chromium. This improves website compatibility and enhances the ability to bypass anti-scraping measures such as CAPTCHAs and Cloudflare protection.

Improved ‘Follow this link’ functionality

Previously, the ‘Follow this link’ option could be disabled during configuration, requiring manual steps like capturing HTML, capturing more content, and applying a regular expression to enable it. This process is now handled automatically behind the scenes, making configuration much simpler for most websites.

Solved Excel File Export Issues

We have resolved issues where exporting scraped data to an Excel file could result in a corrupted output on certain system environments.

Fixed Issue related to changing pagination type while editing configuration

Previously, when selecting a different pagination method during configuration, both the old and new methods could get saved together in some cases. This issue has now been fixed.

General Security Updates

All internal libraries have been updated to their latest versions to ensure improved security and stability.

Sales Page: https://www.webharvy.com/



Text

How Web Crawlers Collect and Organize Online Information

In the age of digital expansion, the internet contains billions of web pages. But have you ever wondered how search engines like Google manage to find, organize, and rank all that information in milliseconds? The answer lies in a fascinating piece of technology called a web crawler: a software bot used by search engines to systematically browse the internet and collect data from websites.

These bots are essential to how search engines operate. Without web crawlers, search engines wouldn’t know what content exists, where to find it, or how to categorize it. They serve as the eyes and ears of search engines, navigating through online content to build massive databases called indexes.

What Exactly Does a Web Crawler Do?

A web crawler, sometimes referred to as a spider or bot, starts its journey with a list of URLs known as seed URLs. It visits these URLs and scans the content on each page. From there, it identifies and follows hyperlinks embedded within those pages to discover more content. This cycle continues indefinitely, enabling crawlers to map out the web efficiently.

The crawler's job involves:

Visiting web pages

Reading and copying the content

Extracting links from the page

Following those links to new pages

Repeating the process continuously

This automated process allows the crawler to compile a detailed and structured version of the internet's vast content landscape.
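The visit, extract, follow loop described above can be sketched in a few lines of Python. To keep the example self-contained it crawls a tiny in-memory "web" (the `FAKE_WEB` dictionary and the `example.test` URLs are invented) instead of making network requests, and it stops after `max_pages` rather than running continuously.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch, max_pages=100):
    """Breadth-first crawl; `fetch(url)` returns HTML or None if unavailable."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)                  # visit the page
        if html is None:
            continue
        pages[url] = html                  # keep a copy of the content
        parser = LinkExtractor()
        parser.feed(html)                  # extract links
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in seen:       # follow each new link only once
                seen.add(absolute)
                queue.append(absolute)
    return pages


# Invented three-page site standing in for real HTTP requests.
FAKE_WEB = {
    "https://example.test/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.test/a": '<a href="/b">B</a> <a href="/">home</a>',
    "https://example.test/b": '<a href="/missing">gone</a>',
}
crawled = crawl(["https://example.test/"], FAKE_WEB.get)
```

A real crawler would also need politeness delays, robots.txt checks, and error handling, none of which are shown here.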

How Crawlers Organize Data

Crawlers don’t just collect content—they also organize it in a format that search engines can use. Once they fetch a web page, they send the data to an indexing engine. Here's how the process unfolds:

1. Parsing Content

The crawler reads the HTML code of a webpage to identify meaningful content such as headings, keywords, meta tags, and image alt text. This helps determine what the page is about.
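As a rough idea of what this parsing step involves, the sketch below uses Python's built-in `html.parser` to pull the title, meta description, headings, and image alt text out of a sample page. The `PageSummary` class and the sample HTML are illustrative; production crawlers use far more robust parsers.

```python
from html.parser import HTMLParser


class PageSummary(HTMLParser):
    """Collect the title, meta description, headings, and image alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.headings = []
        self.alt_texts = []
        self._current = None  # tag whose text is currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1", "h2", "h3"):
            self._current = tag
            if tag != "title":
                self.headings.append("")  # start a new heading entry
        elif tag == "meta" and attrs.get("name") == "description":
            self.description = attrs.get("content") or ""
        elif tag == "img" and attrs.get("alt"):
            self.alt_texts.append(attrs["alt"])

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current:
            self.headings[-1] += data

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None


SAMPLE_HTML = """<html><head><title>Hiking Boots Guide</title>
<meta name="description" content="How to choose hiking boots.">
</head><body><h1>Choosing Boots</h1>
<img src="boot.jpg" alt="Leather hiking boot">
<h2>Fit</h2><p>Try them on late in the day.</p></body></html>"""

page = PageSummary()
page.feed(SAMPLE_HTML)
```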

2. Storing Metadata

Metadata—such as page title, description, and URL—is stored in an index. This metadata helps search engines show concise, relevant information in search results.

3. Analyzing Links

The crawler maps internal and external links to understand the page’s structure and relevance. Pages with many incoming links from reputable sites are often considered more trustworthy.

4. Handling Duplicate Content

Crawlers detect and avoid indexing duplicate content. If multiple pages have the same information, the engine decides which one to prioritize in search results.
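One simple way to spot exact duplicates is to hash a normalized copy of each page's text and group URLs that share a hash. The sketch below assumes that approach; search engines likely rely on more sophisticated near-duplicate detection, and the URLs and page text here are invented.

```python
import hashlib
import re


def content_fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and ignoring case."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def group_duplicates(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each fingerprint to the list of URLs that share it."""
    groups: dict[str, list[str]] = {}
    for url, text in pages.items():
        groups.setdefault(content_fingerprint(text), []).append(url)
    return groups


# Invented pages: the first two differ only in spacing and letter case.
pages = {
    "https://example.test/shoes": "Red  shoes on SALE",
    "https://example.test/shoes?ref=ad": "red shoes on sale\n",
    "https://example.test/hats": "Blue hats",
}
duplicate_sets = [urls for urls in group_duplicates(pages).values() if len(urls) > 1]
```

Once duplicates are grouped, the engine can pick one URL per group to show in results.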

5. Scheduling Recrawls

Since websites frequently update, crawlers revisit sites regularly. High-authority or frequently updated sites may be crawled more often than static pages.

Why Web Crawlers Matter

Web crawlers are the backbone of how search engines operate. Without them, search engines wouldn’t be able to:

Find new websites or pages

Keep indexed content up to date

Remove outdated or deleted pages from their index

Rank results based on relevance and authority

Whether you're running a personal blog or managing a corporate website, understanding how crawlers work helps you optimize your content for visibility. Properly structured content, clear navigation, and effective use of meta tags all contribute to better crawling and indexing.

How to Optimize Your Website for Crawlers

To ensure your site gets crawled and indexed properly, you can follow these best practices:

1. Use a Sitemap

A sitemap is a file that lists all important URLs on your site. Submitting this to search engines ensures they know what to crawl.
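For a sense of what the file contains, here is a minimal sketch that generates sitemap XML with Python's standard library. It emits only the `<loc>` element for each URL (optional fields such as last-modified dates are left out), and the URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a sitemap document listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs for illustration.
sitemap_xml = build_sitemap(
    ["https://example.test/", "https://example.test/blog?page=1&sort=new"]
)
```

The output would typically be saved as `sitemap.xml` at the site root, with an XML declaration added at the top.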

2. Avoid Broken Links

Broken links lead crawlers to dead ends, affecting your site's crawlability and user experience.

3. Create Fresh, Valuable Content

Crawlers prefer sites that are updated regularly with high-quality content. Blogs, news updates, and resource articles can all help.

4. Use Robots.txt Wisely

This file tells crawlers which pages they can or cannot access. Use it carefully to avoid blocking important pages from being crawled and indexed.
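Before deploying a robots.txt file, it can help to check how a crawler would interpret it. The sketch below uses Python's `urllib.robotparser` on an example rule set; the rules, the `ExampleBot` user agent, and the URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block the admin area, allow everything else.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text, no network needed

# Ask whether a given (hypothetical) crawler may fetch each URL.
can_fetch_blog = parser.can_fetch("ExampleBot", "https://example.test/blog/post-1")
can_fetch_admin = parser.can_fetch("ExampleBot", "https://example.test/admin/login")
```

Running a check like this against your most important URLs is a quick way to catch an overly broad `Disallow` rule.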

5. Ensure Mobile and Page Speed Optimization

Google and other search engines give preference to mobile-friendly and fast-loading websites. These aspects can influence crawl frequency and ranking.

Conclusion

Understanding how web crawlers collect and organize online information gives you a significant advantage when managing a digital presence. Once you clearly understand what a web crawler is, it becomes easier to appreciate the value of SEO practices and structured content. These silent digital workers shape our everyday search experiences and play a crucial role in connecting users with the information they seek.

Whether you're a website owner, marketer, or tech enthusiast, staying informed about crawler behavior is key to achieving online success. The more accessible and optimized your site is, the better your chances of standing out in the vast sea of digital content.

#WebCrawler#SearchEngineOptimization#SEO#DigitalMarketing#CrawlingAndIndexing#TechExplained#SearchEngines#WebsiteOptimization#WebDevelopment#OnlineVisibility#LearnSEO#HowSearchWorks


Text

Content Grabbing

In the digital age, content grabbing has become an essential tool for businesses and individuals alike. Whether it's for research purposes, competitive analysis, or simply staying informed about the latest trends, the ability to efficiently gather and analyze information from various sources is crucial. This article will delve into the world of content grabbing, exploring its benefits, applications, and the tools available to make the process as seamless as possible.

Content grabbing, also known as web scraping or data extraction, refers to the automated process of extracting data from websites. This technique allows users to collect large amounts of information quickly and efficiently, which can then be used for a variety of purposes. For instance, e-commerce companies use content grabbing to monitor prices and product availability across different platforms. Journalists and researchers rely on it to compile data for their work. Marketers use it to track competitors' strategies and adjust their own accordingly.

The process involves using software or scripts to extract specific data points from web pages and convert them into a structured format that can be easily analyzed and utilized. It's particularly useful in industries where up-to-date information is key, such as finance, marketing, and market research. By automating the collection of data, businesses can gain valuable insights that would be time-consuming and labor-intensive to gather manually. Python libraries such as BeautifulSoup and Scrapy, and commercial solutions like ParseHub and Octoparse, have made this task more accessible than ever before. However, it's important to note that while these tools are powerful, they must be used responsibly and ethically, respecting website terms of service and privacy policies.

Two of the most significant advantages of content grabbing are its speed and accuracy. Instead of manually copying and pasting information, content grabbing tools can scrape vast amounts of data in a fraction of the time it would take to do so by hand. These tools can help identify patterns and trends and support informed decisions based on up-to-date data.

Moreover, content grabbing plays a vital role in SEO (Search Engine Optimization) strategies. By gathering data on keywords, backlinks, and other metrics, SEO professionals can stay ahead of the curve and optimize their online presence effectively. Additionally, it aids in content monitoring, enabling organizations to keep tabs on their online reputation and customer feedback. In journalism, it facilitates the aggregation of news articles, social media posts, and other online content, providing a comprehensive overview of what's being said about a brand or topic of interest. It's not just limited to text; images, videos, and even entire web pages can be scraped, offering a wealth of information that can inform strategic decisions. As technology continues to evolve, so too does the sophistication of these scraping tools. They enable businesses to stay competitive by keeping track of industry news, competitor analysis, and consumer behavior insights. With the right approach, one can automate the tracking of mentions, sentiment analysis, and trend spotting, ensuring that they remain relevant and responsive to market changes. Furthermore, it supports personalized content creation by understanding what resonates with audiences and tailoring content accordingly.

It's worth mentioning that there are legal and ethical considerations when engaging in such activities. Always ensure compliance with copyright laws and respect the guidelines set forth by the sites being scraped. As we move into an increasingly data-driven world, understanding how to leverage these technologies responsibly will be critical for staying ahead. That means balancing the use of these capabilities against user privacy and web scraping etiquette.


Text

```markdown

Ruby for SEO

In the ever-evolving landscape of digital marketing, Search Engine Optimization (SEO) has become a cornerstone for businesses aiming to boost their online visibility. While many tools and languages are used in this field, Ruby stands out as a powerful and flexible option that can significantly enhance your SEO efforts. This article will delve into how Ruby can be leveraged for SEO, providing practical insights and tips that you can implement today.

Why Ruby for SEO?

Ruby is a dynamic, reflective, object-oriented programming language known for its simplicity and productivity. It offers a range of libraries and frameworks that make it an excellent choice for web development and data processing tasks. Here are some key reasons why Ruby is particularly well-suited for SEO:

1. Scalability: Ruby applications can handle large datasets efficiently, making it ideal for analyzing extensive keyword lists or scraping large volumes of data from websites.

2. Flexibility: With its rich ecosystem of gems (Ruby’s term for libraries), developers can easily integrate various functionalities, such as web crawling, natural language processing, and more.

3. Community Support: The vibrant Ruby community provides continuous updates and support, ensuring that tools and libraries remain up-to-date with the latest SEO trends and practices.

Practical Applications of Ruby in SEO

1. Web Scraping

One of the most common uses of Ruby in SEO is web scraping. By using gems like Nokogiri or Mechanize, you can extract valuable data from websites, such as backlink profiles, meta tags, and other on-page SEO elements. This data can then be analyzed to identify patterns, improve content strategies, and optimize website structure.

2. Keyword Analysis

Keyword research is crucial for any SEO strategy. Ruby can help automate this process by leveraging APIs from tools like Google Trends or SEMrush. By writing scripts to fetch and analyze keyword data, you can gain deeper insights into search behavior and tailor your content accordingly.

3. Content Automation

Creating high-quality content is essential for SEO success. Ruby can assist in automating repetitive tasks related to content creation, such as generating meta descriptions, alt text for images, and even basic content outlines. This not only saves time but also ensures consistency across your website.

Conclusion

As we’ve seen, Ruby offers a robust set of tools and capabilities that can significantly enhance your SEO efforts. Whether you’re looking to scrape data, analyze keywords, or automate content creation, Ruby provides a flexible and scalable solution. However, it’s important to remember that while technology can streamline processes, human insight and creativity remain invaluable in crafting effective SEO strategies.

What are some specific ways you have used Ruby in your SEO projects? Share your experiences and insights in the comments below!

```


Text

Benefits of Integrating AI Technologies into Mobile Apps

Integrating AI into mobile apps is transforming the way we interact with technology. From improving user experiences to offering personalized services, AI is helping apps become smarter, faster, and more intuitive. Whether it’s through predictive analytics, voice recognition, or enhanced data security, businesses across various industries are leveraging AI to not only streamline operations but also deliver better value to users. This shift isn’t just about keeping up with trends; it’s about providing real-time solutions and creating deeper, more meaningful connections with users. Let’s dive into how AI technologies are making mobile apps more powerful and relevant to modern-day needs.

16 Benefits of Integrating AI Technologies into Mobile Apps Across Industries

1. Better User Interface and User Experience

AI makes apps smarter by analyzing how users interact with them. It can adapt the user interface (UI) to make navigation smoother, display personalized content, and suggest the most relevant features. This helps users find what they need more quickly and easily, creating a better overall experience.

2. Predicting User Behavior

AI analyzes patterns in user data, predicting what users will do next based on their past actions. For example, e-commerce apps can suggest products you might like, while social media apps can recommend content. This helps apps provide more relevant and engaging experiences, keeping users active and satisfied.
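One simple way to picture this kind of prediction is a "viewed together" counter. The sketch below is only an illustration under assumed data: the session lists and the function names `build_co_views` and `recommend` are hypothetical, and production apps typically rely on trained models rather than raw co-occurrence counts.

```python
from collections import Counter
from itertools import combinations


def build_co_views(sessions):
    """Count how often each pair of items appears in the same session."""
    co_views = {}
    for session in sessions:
        # De-duplicate so repeated views within one session count once.
        for a, b in combinations(sorted(set(session)), 2):
            co_views.setdefault(a, Counter())[b] += 1
            co_views.setdefault(b, Counter())[a] += 1
    return co_views


def recommend(co_views, item, k=3):
    """Return up to k items most often seen together with `item`."""
    return [other for other, _ in co_views.get(item, Counter()).most_common(k)]


# Hypothetical browsing sessions from a shopping app.
sessions = [["shoes", "socks"], ["shoes", "socks"], ["shoes", "hat"]]
co_views = build_co_views(sessions)
print(recommend(co_views, "shoes"))
```

Items that were never seen with anything else simply return an empty list, which an app could replace with a generic "popular items" fallback.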

3. Repeated Task Automation

AI can handle repetitive tasks like sorting emails, categorizing images, or managing notifications, without any human intervention. This saves users time and reduces errors, especially for tasks that are tedious or time-consuming. For businesses, it leads to better efficiency and productivity within apps.

4. Enhanced Data Security

AI helps strengthen app security by identifying potential threats such as unauthorized logins or suspicious activity. With machine learning algorithms, apps can detect anomalies in real time, flagging possible breaches before they cause harm, and protecting sensitive data more effectively.
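As a rough illustration of anomaly flagging, the sketch below uses a plain z-score check rather than a trained machine learning model. The hourly failed-login counts, the function name `flag_anomalies`, and the threshold are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def flag_anomalies(counts, threshold=3.0):
    """Return indices of values more than `threshold` standard deviations from the mean."""
    if len(counts) < 2:
        return []
    mu = mean(counts)
    sigma = stdev(counts)
    if sigma == 0:
        # All values identical: nothing stands out.
        return []
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]


# Hypothetical failed-login counts per hour for one account.
hourly_failed_logins = [2, 1, 3, 2, 2, 1, 40, 2, 3, 1]
print(flag_anomalies(hourly_failed_logins, threshold=2.0))
```

With small samples a single spike inflates the standard deviation, so a lower threshold (2.0 here) is used; a real system would likely learn a baseline per user over a longer window.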

5. Voice Recognition

AI powers voice recognition technologies that let users interact with apps through voice commands. Think Siri or Google Assistant—AI enables users to dictate messages, search the web, or even control devices without needing to touch the screen. It improves accessibility and convenience for users on the go.

6. Improved Customer Support with AI Chatbots

AI-powered chatbots are available 24/7 to answer questions, solve problems, and provide product recommendations. They can handle common queries instantly, reducing wait times and freeing up human agents to tackle more complex issues. This means faster support and happier users.

7. Dynamic Pricing Based on User Data

AI can analyze user behavior, market trends, and other data points to adjust prices in real-time. For instance, in travel apps, flight prices may change depending on demand, user location, or time of booking. This allows businesses to optimize prices, offering discounts or premium prices based on individual data.
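To make the idea concrete, here is a minimal rule-based sketch in which price scales linearly with how full a flight is. It is not a learned pricing model; the function name `dynamic_price` and the factor bounds (0.8 to 1.5) are hypothetical values picked for illustration.

```python
def dynamic_price(base_price, seats_sold, seats_total, min_factor=0.8, max_factor=1.5):
    """Scale the base price linearly with demand (share of seats already sold)."""
    if seats_total <= 0:
        raise ValueError("seats_total must be positive")
    # Clamp the load factor to the range 0..1.
    load = min(max(seats_sold / seats_total, 0.0), 1.0)
    factor = min_factor + (max_factor - min_factor) * load
    return round(base_price * factor, 2)


print(dynamic_price(100.0, 50, 100))  # half-full flight
```

A real system would feed in more signals (time to departure, location, competitor prices) and would usually cap how quickly prices may change.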

8. Contextual Advertising and Promotions

AI enables apps to deliver ads and promotions that are directly relevant to the user’s interests and behavior. For example, if you’ve been searching for running shoes, the next time you open a shopping app, it may show you ads for sneakers. This personalized approach increases the chances of user engagement and conversions.

9. Instant Text Summarization and Extraction

AI can quickly analyze long documents, articles, or news reports and summarize the key points. This is especially useful for apps related to news, research, or legal services, where users often need quick insights without reading the entire content. AI reduces reading time and provides concise information on demand.
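A very small extractive summarizer gives a feel for how this can work. The sketch below scores sentences by average word frequency, which is a classic heuristic and not how modern AI summarizers operate; the stopword list and the function name `summarize` are assumptions made for the example.

```python
import re
from collections import Counter

# A tiny, assumed stopword list; real systems use much larger ones.
STOPWORDS = {
    "a", "an", "and", "are", "as", "at", "be", "by", "for", "from", "in",
    "is", "it", "of", "on", "or", "that", "the", "to", "was", "were", "with",
}


def _tokens(text):
    """Lowercase words with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, max_sentences=2):
    """Keep the highest-scoring sentences, in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]
    freq = Counter(_tokens(text))

    def score(sentence):
        words = _tokens(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


article = "AI helps apps. AI apps summarize text. The weather was nice."
print(summarize(article, max_sentences=1))
```

Because it only selects existing sentences, this approach never invents text, but it also cannot paraphrase or merge ideas the way a language model can.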

10. Data-Driven Insights

AI is great at analyzing large volumes of data to uncover hidden patterns and trends that humans might miss. By interpreting this data, AI helps businesses make smarter decisions, such as improving user engagement or optimizing operations. For example, an app can use this data to provide actionable recommendations for the business owner or the user.

11. Real-time Language Translation

AI-powered language translation tools can instantly translate text or speech, enabling apps to serve users across different languages. Whether you're messaging a friend in another country or using an app to travel, real-time translation removes language barriers, making apps accessible to a global audience.

12. Advanced Image and Video Recognition

AI enables apps to recognize and analyze images and videos. For example, social media apps use AI to automatically tag friends in photos or detect inappropriate content. In retail, apps can scan product images to suggest similar items. This adds more functionality and accuracy to the app, improving user experience and engagement.

13. Behavioral Analytics for Targeted Marketing

AI can track and analyze user behavior within an app to create detailed user profiles. These insights allow businesses to deliver highly targeted marketing, such as personalized offers or content that match a user’s preferences. For example, a music app might recommend new songs based on your listening history.

14. AI-Driven Health Monitoring in Fitness Apps

Fitness apps are increasingly using AI to track health data like heart rate, sleep patterns, and steps taken. AI can analyze this data to give users personalized health insights, suggest workouts, or even alert them to potential health issues based on their activity levels. This makes health tracking more accurate and actionable.

15. Emotion Recognition for User Engagement

AI can analyze facial expressions, voice tones, and even text sentiment to gauge a user’s emotions. Apps can then tailor the experience based on these emotional cues. For example, a gaming app might adjust its difficulty or offer motivational messages if it senses a user is frustrated. This makes the app feel more intuitive and in tune with user emotions, fostering deeper engagement.

Challenges of Using AI in Mobile App Development

While AI integration brings immense benefits, there are a few challenges businesses need to consider:

Data Privacy and Security: AI requires large amounts of data to be effective, which can raise concerns about data privacy and security. Ensuring that sensitive user information is protected from breaches and complying with regulations like GDPR can be complex when implementing AI technologies.

High Development Complexity: Developing AI-powered mobile apps can be resource-intensive. It requires specialized knowledge in AI and machine learning, and the integration process might involve dealing with complex algorithms, customizations, and training datasets, which could be time-consuming.

Scalability and Performance Issues: AI models often require a significant amount of computational power. When scaling AI technologies across a larger user base, it can strain server performance and lead to slower app performance, especially if the infrastructure is not properly optimized.

Best Platforms to Develop a Mobile App with AI

When looking to integrate AI into a mobile app, choosing the right platform is crucial for smooth development and scalability. Two great options to consider are:

Amazon Bedrock: Amazon Bedrock offers pre-trained models from top AI providers and enables seamless integration with AWS services, making it a scalable and customizable choice for developing AI-driven mobile apps.

Azure Machine Learning: Azure ML provides comprehensive machine learning services for mobile app development, including tools like AutoML and end-to-end model deployment, ensuring easy and efficient AI integration within the app development process.

The cost of integrating AI into mobile apps can vary based on several factors:

Complexity of AI Features: The more advanced and custom AI features you want to integrate, like predictive analytics or deep learning models, the higher the cost. Simple features like basic chatbots or voice recognition tend to be less expensive.

Development Time and Expertise: AI development requires specialized skills, which might increase development costs. Hiring AI specialists or partnering with a development company can add to the budget, especially for building and training custom models.

Cloud Services and Infrastructure: If you're using platforms like Amazon Bedrock or Azure ML for AI integration, there will be ongoing costs for cloud services, including storage, data processing, and model training. These costs can vary depending on your app’s scale and usage.

Conclusion:

Incorporating AI into mobile apps is no longer a luxury—it's a necessity for businesses aiming to stay competitive and meet the evolving expectations of users. From enhancing user experience to optimizing operations, AI-powered mobile apps offer endless opportunities for growth and innovation.


Text

How to Move Your WordPress Site from Localhost to a Live Server

Developing a WordPress site on localhost is a great way to build and test your website in a controlled environment. However, the real challenge arises when it's time to move the site from your local server to a live hosting environment. If not done correctly, you could encounter broken links, missing images, or even database errors.

In this blog, we'll guide you through a step-by-step process to successfully move your WordPress site from localhost to a live server.

Step 1: Choose the Right Hosting Provider

Your first step is to select a reliable web hosting provider that meets your website’s needs. Look for:

Server Speed: Fast servers for better performance.

Uptime Guarantee: At least 99.9% uptime to ensure availability.

Ease of Use: User-friendly dashboards and tools.

WordPress Support: Hosting optimized for WordPress websites.

Popular options include Bluehost, SiteGround, and WP Engine.

Step 2: Export Your Local WordPress Database

The database is the backbone of your WordPress site. To export it:

Open phpMyAdmin on your local server (e.g., XAMPP or WAMP).

Select your WordPress database.

Click on the Export tab and choose the Quick Export method.

Save the .sql file to your computer.

Step 3: Upload Your WordPress Files to the Live Server

To move your files:

Compress Your WordPress Folder: Zip your local WordPress installation folder.

Access Your Hosting Account: Use a file manager or an FTP client like FileZilla.

Upload the Files: Transfer the zipped folder to your hosting server's root directory (usually public_html).

Unzip the Folder: Extract the files once uploaded.
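If you prefer to script the compression step, the following is a minimal sketch using Python's standard library. The function name `zip_wordpress` and the example paths are hypothetical; adjust them to wherever your local server keeps the site.

```python
import shutil
from pathlib import Path


def zip_wordpress(site_dir, out_base):
    """Zip the contents of site_dir into out_base + '.zip' and return the archive path."""
    site = Path(site_dir)
    if not site.is_dir():
        raise FileNotFoundError(f"WordPress folder not found: {site}")
    # Keep out_base outside site_dir so the archive does not end up inside itself.
    return shutil.make_archive(str(out_base), "zip", root_dir=str(site))


# Example call (assumed XAMPP path, adjust to your setup):
# zip_wordpress("C:/xampp/htdocs/mysite", "C:/backups/mysite")
```

The archive contains the files relative to the site folder, so extracting it inside public_html places wp-config.php and the wp-* directories directly at the web root.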

Step 4: Create a Database on the Live Server

Now, set up a new database on your live hosting server:

Log in to your hosting control panel (e.g., cPanel).

Navigate to the MySQL Databases section.

Create a new database, database user, and password.

Assign the user to the database with full privileges.

Step 5: Import the Database to the Live Server

Open phpMyAdmin in your hosting control panel.

Select the new database you created.

Click the Import tab.

Choose the .sql file you exported from your localhost.

Click Go to import the database.

Step 6: Update the wp-config.php File

To connect your site to the live database:

Locate the wp-config.php file in your WordPress installation.

Open the file in a text editor.

Update the following lines:

define('DB_NAME', 'your_live_database_name');
define('DB_USER', 'your_live_database_user');
define('DB_PASSWORD', 'your_live_database_password');
define('DB_HOST', 'localhost'); // Keep this unless your host specifies otherwise.

Save the file and upload it to your server via FTP.
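For anyone who migrates sites often, the edit to wp-config.php can be scripted. The sketch below rewrites the value of a single define() in the file's text; the helper name `set_wp_define` is hypothetical, and the regular expression assumes simple quoted values without escaped quotes inside them.

```python
import re


def set_wp_define(config_text, name, value):
    """Return config_text with define('NAME', ...) set to the given value."""
    pattern = re.compile(
        r"(define\(\s*['\"]" + re.escape(name) + r"['\"]\s*,\s*)(['\"]).*?\2(\s*\))"
    )
    # Escape backslashes and single quotes for a PHP single-quoted string.
    escaped = value.replace("\\", "\\\\").replace("'", "\\'")
    new_text, count = pattern.subn(
        lambda m: f"{m.group(1)}'{escaped}'{m.group(3)}", config_text
    )
    if count == 0:
        raise KeyError(f"{name} is not defined in the given config text")
    return new_text


sample = "define('DB_NAME', 'local_db');\ndefine('DB_USER', 'root');\n"
print(set_wp_define(sample, "DB_NAME", "your_live_database_name"))
```

Working on the text rather than the file keeps the helper easy to test; read the file, apply the helper once per setting, and write the result back before uploading.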

Step 7: Update URLs in the Database

Your localhost URLs need to be replaced with your live site URLs.

Use a tool like Search Replace DB or run SQL queries in phpMyAdmin.

In phpMyAdmin, run the following queries:

UPDATE wp_options SET option_value = 'http://your-live-site.com' WHERE option_name = 'siteurl';
UPDATE wp_options SET option_value = 'http://your-live-site.com' WHERE option_name = 'home';
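Many installations use a table prefix other than wp_, in which case the table name in the statements above changes. As a hedged convenience, the sketch below generates the two UPDATE statements for a given URL and prefix; the function name `build_url_update_sql` is hypothetical. Note that these statements only change the site address settings; localhost URLs stored inside post content are not touched, which is where a tool like Search Replace DB comes in.

```python
import re


def build_url_update_sql(live_url, table_prefix="wp_"):
    """Build the UPDATE statements that point siteurl and home at live_url."""
    if not re.fullmatch(r"[A-Za-z0-9_]+", table_prefix):
        raise ValueError("table prefix may only contain letters, digits, and underscores")
    # Drop a trailing slash and escape characters that are special in MySQL strings.
    url = live_url.rstrip("/").replace("\\", "\\\\").replace("'", "''")
    table = f"{table_prefix}options"
    return [
        f"UPDATE {table} SET option_value = '{url}' WHERE option_name = '{name}';"
        for name in ("siteurl", "home")
    ]


for statement in build_url_update_sql("https://your-live-site.com/", table_prefix="wp_"):
    print(statement)
```

The printed statements can be pasted into the SQL tab in phpMyAdmin.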

Step 8: Test Your Live Website

Once everything is uploaded and configured, check your website by entering its URL in a browser. Test for:

Broken Links: Fix them using plugins like Broken Link Checker.

Missing Images: Ensure media files were uploaded correctly.

Functionality: Verify forms, buttons, and features work as expected.
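A quick way to spot leftovers from the local environment is to scan a page's HTML for URLs that still point at localhost. The following is a small sketch of that check; the function name `find_local_urls` and the sample HTML are hypothetical, and it only looks for localhost and 127.0.0.1, not custom local hostnames.

```python
import re

# Matches http(s) URLs whose host is localhost or 127.0.0.1, with an optional port.
LOCAL_URL = re.compile(
    r"""https?://(?:localhost|127\.0\.0\.1)(?::\d+)?[^\s"'<>)]*""",
    re.IGNORECASE,
)


def find_local_urls(html):
    """Return the sorted, de-duplicated local URLs found in an HTML string."""
    return sorted(set(LOCAL_URL.findall(html)))


page = (
    '<img src="http://localhost/wp/wp-content/uploads/a.png">'
    '<a href="https://example.com/">Home</a>'
)
print(find_local_urls(page))
```

Saving the live page source from your browser and running it through this check complements a plugin-based link checker, since it catches images and scripts as well as links.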

Step 9: Set Up Permalinks

To ensure proper URL structure:

Log in to your WordPress admin dashboard on the live site.

Go to Settings > Permalinks.

Choose your preferred permalink structure and click Save Changes.

Step 10: Secure Your Live Website

After migrating, secure your site to prevent vulnerabilities:

Install an SSL Certificate: Most hosting providers offer free SSL certificates.

Update Plugins and Themes: Ensure everything is up to date.

Set Up Backups: Use plugins like UpdraftPlus for regular backups.

Conclusion

Moving your WordPress site from localhost to a live server may seem daunting, but by following these steps, you can ensure a smooth and error-free migration. A successful move allows you to showcase your site to the world, engage your audience, and achieve your goals.

Start today and take your WordPress project live with confidence!


Text

Data Entry Services

Data Entry Services in Nagercoil: A Guide to Finding the Right Solutions

Introduction

Data entry services are the backbone of businesses and organizations that need to handle large volumes of information efficiently. By digitizing documents and managing databases, data entry specialists keep business operations running smoothly, handling routine but crucial tasks. If you are in Nagercoil and looking for trustworthy data entry services, this guide covers everything from the benefits of outsourcing data entry to the services available locally.

1. The Growing Need for Data Entry Services

As businesses generate ever larger volumes of data, the need for efficient data management grows with it. An in-house data entry arrangement, however, is expensive and time-consuming, especially for small and medium-sized businesses. This is why more companies are opting for outsourced data entry services.

Focus on Core Activities: Outsourcing data entry allows businesses to focus on core activities like strategy, sales, and customer service, without getting bogged down in administrative tasks.

Cost-Effective Solutions: Maintaining an in-house data entry team can be costly due to salaries, benefits, and infrastructure. Outsourcing provides access to skilled professionals at a fraction of the cost.

Scalability: Data entry services offer scalable solutions, allowing you to expand or reduce your requirements based on demand.

Accuracy and Reliability: Professionals with experience in data entry ensure higher accuracy rates, reducing the risk of errors that could impact business decisions.

2. Types of Data Entry Services Offered in Nagercoil

Nagercoil, being an active business hub, hosts various companies and freelancers offering data entry solutions. The services offered often cover a wide range of tasks:

A. Online Data Entry

Online data entry involves working with data in databases or other online systems, such as updating CRM records. It is useful for e-commerce businesses in Nagercoil, where catalogues, prices, and customer databases may need constant updating.

B. Offline Data Entry

Offline data entry focuses on working with data without the need for internet connectivity. This includes tasks such as entering information into offline spreadsheets or databases and digitizing data from printed documents or handwritten notes. Many local businesses and institutions in Nagercoil prefer offline data entry for specific confidential tasks.

C. Data Processing

Data processing is an extension of data entry, where raw data is analyzed, structured, and transformed into meaningful insights. Companies offering data entry services in Nagercoil can process the results of surveys, transaction data, and customer records to create valuable reports and analytics.

D. Image and Document Data Entry

This category typically covers scanned images of paper-based documents and photos in digital format. Scanned documents, invoices, forms, and files all hold data captured in image format. The services in this category include Optical Character Recognition (OCR), which is used to extract text from images. This service is in heavy demand from hospitals, schools, and legal offices.

E. Data Mining and Web Research

Data mining and web research involve finding specific information from many internet-based sources and compiling it into usable databases. Businesses of all kinds in Nagercoil use data mining for market trend analysis, competitor studies, and lead generation.

F. Medical and Legal Data Entry

Sectors such as healthcare and law, where data accuracy and confidentiality are both critical, require specialized data entry skills. Medical data entry covers patient records, billing, and insurance details, while legal data entry deals with contracts, case data, and compliance documents.

3. Advantages of Choosing Data Entry Services in Nagercoil

Nagercoil hosts numerous data entry service providers, making it easier for local businesses to find affordable and reliable solutions. Here are some specific benefits:

A. Cost-Effective Services

Compared to metropolitan areas, data entry services in Nagercoil are generally more affordable. This allows businesses to maintain a lean budget while still accessing quality services.

B. Skilled Workforce

Many data entry professionals in Nagercoil have the skills and experience to carry out high-volume data entry tasks with precision. They are familiar with software such as MS Excel, Google Sheets, and specialized CRM platforms.

C. Local Understanding

Providers in Nagercoil understand the local market dynamics, regulatory requirements, and cultural aspects, making it easier to manage data that might require regional insight.

D. Quick Turnaround Times

Given the competitive market, local data entry service providers in Nagercoil offer fast and efficient turnaround times to meet client deadlines.

4. Key Industries Benefiting from Data Entry Services in Nagercoil

Several industries in Nagercoil benefit from data entry services, helping them streamline processes and improve operational efficiency. Here are some notable sectors:

A. Retail and E-commerce

Retailers in Nagercoil commonly use data entry services for stock management, order processing, and maintaining customer records. E-commerce businesses use data entry for information such as product descriptions, prices, and availability.

B. Healthcare and Medical Services

Hospitals, clinics, and doctors in Nagercoil use data entry for patient records, insurance claims, and billing. Data management is essential for healthcare organizations because it supports regulatory compliance and helps ensure that patients receive quality care.

C. Education

Schools, colleges, and other educational institutions frequently use data entry for managing student information, admissions, and grades. This ensures that records are easily accessible, secure, and well-organized.

D. Financial and Banking Services

Banks and financial institutions rely on data entry for handling customer data, transactions, and loan records. Efficient data management helps improve service and maintain regulatory compliance.

E. Real Estate

Real estate agencies and brokers in Nagercoil use data entry services to manage property listings, client information, and transaction records, helping them maintain an organized database that supports client interactions.

5. Top Data Entry Providers in Nagercoil

There are several reputable data entry providers and freelance professionals in Nagercoil. Here’s a list of some well-regarded options to consider:

Nagercoil Data Solutions: Specializes in offline and online data entry services with a focus on retail and healthcare industries.

Data Hub Nagercoil: Offers services like image-to-text conversion, web research, and document digitization. Known for their quick turnaround times and competitive pricing.

Precision Data Entry Services: Provides high-accuracy data entry and processing services, including OCR-based tasks for legal and medical sectors.

Elite Business Solutions: Focuses on data entry and data processing for SMEs, helping them streamline operational data and back-office processes.

Reliable Data Works: A freelancer team that offers scalable data entry solutions, especially suited for e-commerce and real estate.

6. What to Look for When Hiring a Data Entry Service Provider

Choosing the right data entry provider can have a significant impact on your business. Here are some factors to consider when selecting a data entry service provider in Nagercoil:

A. Experience and Expertise

Look for providers with experience in handling data for your specific industry. Expertise ensures that they understand the intricacies of your data requirements, minimizing the risk of errors.

B. Technology and Tools Used

Data entry is no longer just manual; it often involves tools for scanning, OCR, and data validation. Ensure the provider uses up-to-date technology for high accuracy and efficiency.

C. Quality Control Processes

Data accuracy is crucial, so inquire about the provider’s quality control processes. Double-entry checks, regular audits, and verification processes are essential to maintaining high data quality.
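A double-entry check can be pictured as follows: two operators key the same records independently, and any field where they disagree is flagged for review. The sketch below is a minimal illustration; the function name `double_entry_mismatches` and the record layout (a list of dictionaries) are assumptions, not a description of any particular provider's process.

```python
def double_entry_mismatches(first_pass, second_pass):
    """Compare two independent keyings of the same records.

    Returns a list of (row_index, field, first_value, second_value) tuples
    for every field where the two passes disagree.
    """
    if len(first_pass) != len(second_pass):
        raise ValueError("both passes must contain the same number of records")
    mismatches = []
    for row, (a, b) in enumerate(zip(first_pass, second_pass)):
        for field in sorted(set(a) | set(b)):
            # Ignore leading and trailing whitespace, a common harmless difference.
            value_a = str(a.get(field, "")).strip()
            value_b = str(b.get(field, "")).strip()
            if value_a != value_b:
                mismatches.append((row, field, value_a, value_b))
    return mismatches


# Hypothetical records keyed by two different operators.
operator_1 = [{"name": "Asha", "phone": "12345"}, {"name": "Ravi", "phone": "67890"}]
operator_2 = [{"name": "Asha", "phone": "12345 "}, {"name": "Ravi", "phone": "67980"}]
print(double_entry_mismatches(operator_1, operator_2))
```

Only the flagged fields need a third look against the source document, which is what makes double entry practical for large batches.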

D. Confidentiality and Security

Data security is a top priority, especially when dealing with sensitive information. Choose a provider who follows strict data protection policies and has secure systems in place.

E. Pricing and Flexibility

Compare pricing models and understand what’s included in the service. Ensure the provider is flexible enough to handle fluctuating data volumes or deadlines, as your business needs may vary.

F. Client Testimonials

Reading testimonials or asking for references can provide insight into the provider’s reliability, turnaround time, and overall service quality.

7. The Future of Data Entry Services in Nagercoil

As technology advances, the landscape of data entry services continues to evolve. Here’s a look at some future trends:

A. Automation and AI Integration

Automation tools and artificial intelligence are increasingly used in data entry to improve accuracy and speed. Many providers in Nagercoil are adopting automation software to streamline data processing tasks and reduce manual errors.

B. Cloud-Based Solutions

Cloud storage and SaaS platforms allow for easier collaboration and access to data from anywhere. Data entry providers in Nagercoil are beginning to offer cloud-based solutions, enabling clients to access data securely and conveniently.

C. Specialization by Industry

Growing demand for data entry services is driving specialization. Providers in Nagercoil are tailoring their services to specific industries such as healthcare, finance, and retail, which leads to higher quality and accuracy.

Conclusion

Data entry services help any business manage its data properly, freeing the core workforce to focus on core activities. Nagercoil has professionals and agencies that are keen on providing reliable data entry solutions across various industries.

Whether you’re a small business looking to organize your customer records or a large enterprise requiring ongoing data processing, Nagercoil’s data entry service providers offer affordable, high-quality options. By partnering with the right provider, you can ensure your data is accurate, secure, and easily accessible, contributing to better decision-making and operational efficiency. For more information, contact Eloiacs.


Text

API-Free: The Secret to Achieving Advanced Features at a Low Cost

As artificial intelligence (AI) continues to drive development in fields ranging from video script generation to geocoding, the digital landscape is changing rapidly. The introduction of models such as Fuyu-8B and Heaven Heart has set new standards for AI capabilities, enabling developers to build AI applications that meet a wide range of needs. Among the supporting technologies, OAuth2 is a foundational authorization framework that protects the API interfaces of platforms such as AI video script generators, which automatically create content with impressive efficiency.

In the field of intelligent chatbots, the proliferation of dedicated APIs such as the Kimi AI API is noteworthy. These APIs let developers build chatbots that hold human-like conversations, adapt dynamically to user input, and provide responses that improve user engagement. Such chatbot APIs build on AI agent architecture, which allows numerous AI components to be integrated seamlessly into a cohesive user experience.

The use of text-generating AI has also grown rapidly. Systems built on it aim to generate coherent, contextually appropriate text, whether for customer support, content development, or other applications. These models can produce large amounts of text that matches the user's intent and resembles human writing closely enough to be hard to distinguish from human-written material.

In computer vision, reverse image search tools are becoming increasingly sophisticated, allowing users to identify and locate images with a single click. These tools are usually backed by AI models that analyze and match images with high accuracy, supporting everything from e-commerce applications to digital asset management. Integrating these tools with other platforms has become more structured, thanks in part to open platform API interfaces that let developers connect different systems and services easily.

The e-invoice interface represents a significant step forward for financial technology in terms of automation and secure transactions. The interface uses AI to ensure that electronic invoices are processed correctly and efficiently, reducing the risk of errors and shortening the settlement cycle. Incorporating AI into such financial systems is about more than efficiency; it also improves the overall accuracy and integrity of financial transactions.

In the field of geographic information, the expansion of artificial intelligence maps is changing the way we interact with spatial information. Tools such as the Geoapify Geocoding API are leading this trend, providing developers with powerful geocoding services that can convert addresses into geographic coordinates and vice versa. This API is particularly useful for applications that require precise location data, such as logistics, travel, and urban planning.
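To show roughly what calling such a geocoding service involves, the sketch below only builds the request URLs and does not send them. The base path and the parameter names (`text`, `lat`, `lon`, `apiKey`) reflect my understanding of Geoapify's public documentation and should be treated as assumptions to verify there; the helper names and `YOUR_API_KEY` are placeholders.

```python
from urllib.parse import urlencode

# Assumed base path for the Geoapify Geocoding API; check the official docs.
GEOAPIFY_BASE = "https://api.geoapify.com/v1/geocode"


def forward_geocode_url(address, api_key):
    """Build a URL that asks for the coordinates of a free-form address."""
    return f"{GEOAPIFY_BASE}/search?" + urlencode({"text": address, "apiKey": api_key})


def reverse_geocode_url(lat, lon, api_key):
    """Build a URL that asks for the address at a latitude/longitude pair."""
    return f"{GEOAPIFY_BASE}/reverse?" + urlencode({"lat": lat, "lon": lon, "apiKey": api_key})


print(forward_geocode_url("1 Main St", "YOUR_API_KEY"))
print(reverse_geocode_url(52.5, 13.4, "YOUR_API_KEY"))
```

Fetching either URL with an HTTP client and a valid key would return the geocoding result; keeping URL construction separate makes it easy to log or test requests without spending API quota.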

At the same time, the Qianfan SDK has made waves in the developer community by providing a comprehensive toolkit for building AI applications. The SDK is designed to be flexible and to integrate different AI frameworks and APIs into one application, which speeds up development. It is particularly useful for developers who wish to integrate advanced AI functions such as natural language processing or computer vision into their applications.

The rise of systems such as the text extraction tool Texttract highlights the growing demand for AI services that can handle unstructured data. Texttract uses AI to extract useful information from various records, making it valuable in fields such as law, healthcare, and finance, where the ability to quickly analyze large amounts of text is crucial.

In the broader context of AI development, AIGC (AI-generated content) and large language models are becoming increasingly intertwined. Large language models, such as those that drive text-generating AI, are the engines behind AIGC, capable of creating content ranging from text to video scripts with minimal human intervention. For anyone involved in AI development, understanding how large models are built is crucial, because these models are defined by their ability to process and generate human-like text.

Free AI APIs are democratizing access to these advanced tools, allowing developers, startups, and hobbyists to experiment with AI without a large capital investment. Platforms offering free API interfaces promote development by lowering the barrier to entry, resulting in a wider range of applications on the market. Combined with API integration management tools, this accessibility means that even complex systems can be set up and published with relative ease.

For example, weather APIs are increasingly integrated into applications in fields ranging from agriculture to event planning. These APIs provide real-time weather data that is crucial for decision-making, and robust API management platforms help integrate them into existing systems.

Similarly, translation APIs are changing the way people and businesses communicate across language barriers. These APIs use AI models to provide accurate real-time translations, making it easier for global teams to cooperate and for businesses to reach larger markets. Using APIs from overseas providers (especially those offering free API services) further extends the reach of such tools.

The API open platform is the core of this ecosystem, providing a central interface where developers can access, manage, and integrate various APIs into their applications. These platforms usually include comprehensive documentation and tools, such as the Postman download portal, which simplify testing and deploying APIs.

As artificial intelligence continues to develop, the use of AI API interfaces is expected to expand, and more and more platforms will open up their AI functions. This open platform approach not only encourages innovation but also ensures that the benefits of AI are spread more widely.

In conclusion, the rapid development of AI technology (as evidenced by the expansion of intelligent chatbot APIs, text generators, reverse image search tools, and more) is reshaping how we interact with digital systems. From the integration of geocoding solutions to the automation of e-invoices, AI is promoting efficiency, precision, and innovation in multiple fields. As developers continue to make use of open platforms and free APIs, the possibilities for new transformative applications are virtually limitless.

Explinks is a leading API integration management platform in China, focusing on providing developers with comprehensive, efficient and easy-to-use API integration solutions.


Text

Office 2024 LTSC is now available

Office 2024 and Office LTSC 2024 bring several new features, including new functions in Excel, improved accessibility, better session recovery in Word, new capabilities in Access, and a more modern design that unifies the Office apps.

The Microsoft Office 2024 retail edition is expected to be released in October 2024. It will be available as a standalone one-time purchase, and the price of each edition is expected to match that of previous Office versions.

You can use the Office Deployment Tool to download Office LTSC 2024 now at www.microsoft.com/en-us/download/details.aspx?id=49117

You can get Office 2024, Office 2021, and Microsoft 365 at Keyingo.com

What's new in Office 2024 LTSC

New default Office theme

Office 2024 has a more natural and consistent experience within and between your Office apps. This new look applies Fluent Design principles to deliver an intuitive, familiar experience across all your applications. It shines on Windows 11, while still enhancing the user experience on Windows 10.

Insert a picture from a mobile device

It used to take several steps to transfer images from your phone to computer, but now you can use your Android device to insert pictures directly into your content in Office LTSC 2024.

Support for OpenDocument Format (ODF) 1.4

We now include support for the OpenDocument format (ODF) 1.4. The ODF 1.4 specification adds support for many new features.

Give a Like reaction to a comment

Quickly identify new comments or new replies with the blue dot and show your support to a comment with a Like reaction.

Dynamic charts with dynamic arrays

In Excel 2024, you can now reference Dynamic Arrays in charts to help visualize datasets of variable length. Charts automatically update to capture all data when the array recalculates, rather than being fixed to a specific number of data points.

Text and array functions

There are now 14 new text and array functions in Excel 2024 that are designed to help you manipulate text and arrays in your worksheets. These functions make it easier to extract and split text strings and enable you to combine, reshape, resize, and select arrays with ease.

New IMAGE function

Now in Excel 2024, you can add pictures to your workbooks using copy and paste or you can use the IMAGE function to pull pictures from the web. You can also easily move, resize, sort, and filter within an Excel table without the image moving around.

Faster workbooks

The speed and stability of Excel 2024 workbooks has been improved, reducing the delays and hang-ups that arise when multiple workbooks with independent calculations are open at the same time.

Present with cameo

With cameo, you can insert your live camera feed directly on a PowerPoint slide. You can then apply the same effects to your camera feed as you can to a picture or other object, including formatting, transitions, and styles.

Create a video in Recording Studio

Record your PowerPoint presentation—or just a single slide—and capture voice, ink gestures, and your video presence. Export your recorded presentation as a video file and play it for your audience.

Embed Microsoft Stream (on SharePoint) videos

Add Microsoft Stream (on SharePoint) videos to the presentation to enhance and enrich your storytelling.

Add closed captions for video and audio

You can now add closed captions or subtitles to videos and audio files in your presentations. Adding closed captions makes your presentation accessible to a larger audience, including people with hearing disabilities and those who speak languages other than the one in your video.

Improved search for email, calendars, and contacts

Search in Outlook 2024 has been improved to boost the relevance of messages, attachments, contacts, and calendar entries, so the most relevant suggestions surface as you type.

More options for meeting creation

Outlook 2024 gives you more options when creating or managing meetings, helping you carve out breaks between calls by automatically shortening meetings depending on their length.

Recover your Word session

When Word 2024 closes unexpectedly before you save your most recent changes, Word automatically opens all the documents you had open when the process closed, allowing you to continue where you left off.

Improved Draw tab and ink features

OneNote LTSC 2024 has many new features and updates to existing tools to make your inking and Draw tab experience more robust and customizable. Now your ink will render instantly when drawn with your Surface pen and look just as good as traditional ink on paper. OneNote LTSC 2024 also now has more color and size options for your drawing tools, as well as better organization of the tools for easier access.

Access Dataverse Connector with Power Platform

Unlock new capabilities organizations need and want like mobile solutions and Microsoft Teams integration. Keep the value and ease of use of Access together with the value of cloud-based storage.

Even more shapes, stencils, and templates

Visio 2024 has even more shapes, stencils, and templates to help you create diagrams. Visio Standard 2024 now brings many new icons, sticky notes to brainstorm, and a plethora of infographics like pictograms and To-Do-Lists to name a few. Visio Professional 2024 includes all the added content from Standard and includes 10+ new Azure stencils, and more network and software content like Kubernetes Shapes and Yourdon-Coad Notations.


Web Scraping 103: Scrape Amazon Product Reviews With Python

Amazon is a well-known e-commerce platform with a large amount of data available in various formats on the web. This data can be invaluable for gaining business insights, particularly by analyzing product reviews to understand the quality of products provided by different vendors.

In this guide we will walk through the web scraping steps needed to extract the Amazon reviews of a particular product and save them in Excel or CSV format. Manually copying information from web pages is tedious, so we will automate it. This hands-on exercise will also strengthen our practical understanding of web scraping techniques.

Before we start, make sure you have Python installed on your system. You can download it from python.org. The process is simple: install it like you would install any other application.

Now that everything is set let’s proceed:

How to Scrape Amazon Reviews Using Python

Install Anaconda using this link: https://www.anaconda.com/download. Be sure to keep the default settings during installation.

We can use various IDEs, but to keep it beginner-friendly, let’s start with Jupyter Notebook in Anaconda. You can watch the video linked above to get familiar with the software.

Steps for Web Scraping Amazon Reviews:

Create a new notebook and save it.

Step 1: Let’s start by importing all the necessary modules using the following code:

import requests
from bs4 import BeautifulSoup
import pandas as pd

Step 2: Define request headers so that the request looks like it comes from a regular browser, which reduces the chance of being blocked. To find your own user agent, search for “my user agent” on Google and replace the "User-agent" value below with it.

custom_headers = {
    "Accept-language": "en-GB,en;q=0.9",
    "User-agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.1 Safari/605.1.15",
}

Step 3: Create a Python function that fetches the webpage, checks for errors, and returns a BeautifulSoup object for further processing.

# Function to fetch the webpage and return a BeautifulSoup object
def fetch_webpage(url):
    # Send the headers defined in Step 2 with the request
    response = requests.get(url, headers=custom_headers)
    if response.status_code != 200:
        print("Error in fetching webpage")
        exit(-1)
    page_soup = BeautifulSoup(response.text, "lxml")
    return page_soup
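fetch_webpage above stops the script on the first non-200 response. A gentler option is to retry a few times with a growing pause between attempts. The sketch below is only an illustration: fetch_with_retries and its parameters are hypothetical names that are not part of the original script, and it works with any callable that raises an exception on failure.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) until it succeeds or the attempts run out.

    `fetch` is any callable that returns page content or raises an exception.
    All names here are hypothetical helpers, not part of the original script.
    """
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch(url)
        except Exception as error:  # broad on purpose for this sketch
            last_error = error
            if attempt < attempts - 1:
                # Wait 1x, 2x, 4x ... the base delay between attempts
                sleep(base_delay * (2 ** attempt))
    raise RuntimeError(f"Giving up on {url} after {attempts} attempts") from last_error
```

To use it with the tutorial's function, fetch_webpage would need to raise an exception (for example by calling response.raise_for_status()) instead of calling exit(-1), because exit() is not caught by `except Exception`.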

Step 4: Use Inspect Element in your browser to find the element and attribute that hold the data we want to extract. Then create another function, extract_reviews, that selects the matching div elements and stores them in a variable. At this point the function only identifies the review-related elements on the page; it doesn’t yet extract the actual review content (review text, ratings, etc.), which the next steps add.

# Function to extract reviews from the webpage
def extract_reviews(page_soup):
    review_blocks = page_soup.select('div[data-hook="review"]')
    reviews_list = []

Step 5: The code below continues the function. It loops over each review element and extracts the customer’s name, rating, review title, review text, review date, and image URL. Any field that is missing from a review is set to None.

    for review in review_blocks:
        author_element = review.select_one('span.a-profile-name')
        customer = author_element.text if author_element else None

        rating_element = review.select_one('i.review-rating')
        customer_rating = rating_element.text.replace("out of 5 stars", "") if rating_element else None

        title_element = review.select_one('a[data-hook="review-title"]')
        review_title = title_element.text.split('stars\n', 1)[-1].strip() if title_element else None

        content_element = review.select_one('span[data-hook="review-body"]')
        review_content = content_element.text.strip() if content_element else None

        date_element = review.select_one('span[data-hook="review-date"]')
        review_date = date_element.text.replace("Reviewed in the United States on ", "").strip() if date_element else None

        image_element = review.select_one('img.review-image-tile')
        image_url = image_element.attrs["src"] if image_element else None

Step 6: Still inside the loop, collect the extracted fields (customer, customer_rating, review_title, review_content, review_date, and image_url) into a dictionary and append it to reviews_list. Once the loop finishes, the function returns the list of processed reviews.

        review_data = {
            "customer": customer,
            "customer_rating": customer_rating,
            "review_title": review_title,
            "review_content": review_content,
            "review_date": review_date,
            "image_url": image_url,
        }
        reviews_list.append(review_data)

    return reviews_list
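extract_reviews keeps the rating and the date as raw strings. If you plan to sort or average them later, it can help to convert them first. The following is a minimal sketch, assuming rating text that contains a number (such as "4.0 out of 5 stars") and English dates shaped like "March 3, 2024"; parse_rating and parse_review_date are hypothetical helper names, and the date parsing assumes an English locale.

```python
import re
from datetime import datetime


def parse_rating(text):
    """Return the first number in a rating string as a float, or None."""
    if not text:
        return None
    match = re.search(r"\d+(?:\.\d+)?", text)
    return float(match.group()) if match else None


def parse_review_date(text):
    """Parse a date such as 'March 3, 2024'; return None if it does not match."""
    if not text:
        return None
    try:
        return datetime.strptime(text.strip(), "%B %d, %Y").date()
    except ValueError:
        return None
```

Both helpers return None for missing or unexpected input, which matches how the scraper already represents missing fields.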

Step 7: Now let’s define main(), initialize a review_page_url variable with an Amazon product review page URL, fetch the page, and extract its reviews.

def main():
    review_page_url = "https://www.amazon.com/BERIBES-Cancelling-Transparent-Soft-Earpads-Charging-Black/product-reviews/B0CDC4X65Q/ref=cm_cr_dp_d_show_all_btm?ie=UTF8&reviewerType=all_reviews"
    page_soup = fetch_webpage(review_page_url)
    scraped_reviews = extract_reviews(page_soup)

Step 8: Now let’s print the scraped review data (stored in the scraped_reviews variable) to the console for verification. This line is still part of main().

    # Print the scraped data to verify
    print("Scraped Data:", scraped_reviews)

Step 9: Next, create a DataFrame from the data, which organizes it into tabular form.

    # Create a DataFrame from the list of review dictionaries
    reviews_df = pd.DataFrame(data=scraped_reviews)

Step 10: Now export the DataFrame to a CSV file in the current working directory.

    # Export the DataFrame to a CSV file
    reviews_df.to_csv("reviews.csv", index=False)
    print("CSV file has been created.")

Step 11: The construct below acts as a protective measure. It ensures that main() runs only when the script is executed directly as a standalone program, not when it is imported as a module by another script.

# Ensuring the script runs only when executed directly
if __name__ == '__main__':
    main()
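The script above fetches a single page of reviews. To collect more, you could build one URL per page and pass each to fetch_webpage. The sketch below assumes that the review pages accept a pageNumber query parameter; that parameter name is an assumption about the site's URL scheme, not something the steps above confirm, and with_page_number is a hypothetical helper.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_page_number(url, page):
    """Return `url` with its pageNumber query parameter set to `page`.

    The parameter name "pageNumber" is an assumption for illustration.
    """
    parts = urlsplit(url)
    # Keep every existing parameter except a previous pageNumber
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "pageNumber"
    ]
    query.append(("pageNumber", str(page)))
    return urlunsplit(parts._replace(query=urlencode(query)))
```

Inside main(), a loop such as `for page in range(1, 4)` could call `fetch_webpage(with_page_number(review_page_url, page))` and extend one combined list with the results of extract_reviews. Pausing between requests keeps the load on the site low.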

Result:

Why Scrape Amazon Product Reviews?

Scraping Amazon product reviews can provide valuable insights for businesses. Here’s why you should consider it:

● Feedback Collection: Every business needs feedback to understand customer requirements and implement changes to improve product quality. Scraping reviews allows businesses to gather large volumes of customer feedback quickly and efficiently.

● Sentiment Analysis: Analyzing the sentiments expressed in reviews can help identify positive and negative aspects of products, leading to informed business decisions.

● Competitor Analysis: Scraping allows businesses to monitor competitors’ pricing and product features, helping to stay competitive in the market.

● Business Expansion Opportunities: By understanding customer needs and preferences, businesses can identify opportunities for expanding their product lines or entering new markets.

Manually copying and pasting content is time-consuming and error-prone. This is where web scraping comes in. Using Python to scrape Amazon reviews can automate the process, reduce manual errors, and provide accurate data.

Benefits of Scraping Amazon Reviews

● Efficiency: Automate data extraction to save time and resources.

● Accuracy: Reduce human errors with automated scripts.

● Large Data Volume: Collect extensive data for comprehensive analysis.

● Informed Decision Making: Use customer feedback to make data-driven business decisions.

I found an amazing, cost-effective service provider that makes scraping easy. Follow this link to learn more.

Conclusion

Now that we’ve covered how to scrape Amazon reviews using Python, you can apply the same techniques to other websites by inspecting their elements. Here are some key points to remember:

● Understanding HTML: Familiarize yourself with HTML structure. Knowing how elements are nested and how to navigate the Document Object Model (DOM) is crucial for finding the data you want to scrape.

● CSS Selectors: Learn how to use CSS selectors to accurately target and extract specific elements from a webpage.

● Python Basics: Understand Python programming, especially how to use libraries like requests for making HTTP requests and BeautifulSoup for parsing HTML content.

● Inspecting Elements: Practice using browser developer tools (right-click on a webpage and select “Inspect” or press Ctrl+Shift+I) to examine the HTML structure. This helps you find the tags and attributes that hold the data you want to scrape.

● Error Handling: Add error handling to your code to deal with possible issues, like network errors or changes in the webpage structure.

● Legal and Ethical Considerations: Always check a website’s robots.txt file and terms of service to ensure compliance with legal and ethical rules of web scraping.

By mastering these areas, you’ll be able to confidently scrape data from various websites, allowing you to gather valuable insights and perform detailed analyses.
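As a small illustration of the robots.txt point above, Python's standard library can tell you whether a given path is allowed for your user agent. This is a minimal sketch that parses robots.txt text you have already downloaded; is_allowed is a hypothetical helper name, and passing this check does not replace reading the site's terms of service.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

For example, with the rules "User-agent: *" and "Disallow: /private/", a URL under /private/ is reported as not allowed, while other paths on the same site are allowed.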


Amazon Titan Text Embeddings V2: Next-Gen Text Embeddings

Amazon Titan FMs

Amazon Titan AI