#Impact of Open Source AI


Text

2024 AI Trends: What to Expect and Why It Matters

The landscape of artificial intelligence (AI) in 2024 is set to be shaped by ten pivotal trends, including agentic AI, open-source AI, and AI-powered cybersecurity. Agentic AI will revolutionize workflows by autonomously handling complex tasks and adapting to dynamic environments, enhancing productivity and operational efficiency. Open-source AI fosters innovation and collaboration, leading to the development of more robust and versatile AI applications across various industries. AI-powered cybersecurity significantly improves threat detection and response times, bolstering organizational security against cyber threats.

Hyperautomation leverages AI and robotic process automation (RPA) to automate end-to-end business processes, driving productivity and efficiency. Edge AI computing processes data locally, reducing latency and enabling real-time decision-making. In healthcare, AI enhances diagnostics, personalized treatments, and patient care, making medical services more accessible and efficient. Explainable AI ensures transparency and accountability, making AI decisions understandable and trustworthy, thereby building user confidence.

These AI trends underscore the transformative impact of AI on various sectors and the importance for businesses to stay informed and adaptive. AI-driven personalization, creativity, and sustainability initiatives present additional avenues for innovation and growth. To explore how AI can Learn more...

#AI for Sustainability#How Intelisync AI solutions open up new options for your Organizations?#How will AI impact businesses in 2024?#Impact of AI in Healthcare#Impact of Open Source AI#Impact of Rise of Hyper Automation#Top 10 AI Trends to Watch in 2024#Use Cases of Agentic AI#Use Cases of AI-driven Personalization#Use Cases of AI-powered Cybersecurity#What are the most important AI trends to watch in 2024?#Why is ethical AI development important in 2024?

0 notes

Text

There is something deliciously funny about AI getting replaced by AI.

tl;dr: China yeeted a cheaper, faster, less environmentally impactful, open source LLM onto the market, and US AI companies have lost nearly 600 billion dollars in value since yesterday.

Silicon Valley is having a meltdown.

And ChatGPT just lost its job to AI~.

27K notes


Text

the scale of AI's ecological footprint

standalone version of my response to the following:

"you need soulless art? [...] why should you get to use all that computing power and electricity to produce some shitty AI art? i don’t actually think you’re entitled to consume those resources." "i think we all deserve nice things. [...] AI art is not a nice thing. it doesn’t meaningfully contribute to us thriving and the cost in terms of energy use [...] is too fucking much. none of us can afford to foot the bill." "go watch some tv show or consume some art that already exists. […] you know what’s more environmentally and economically sustainable […]? museums. galleries. being in nature."

you can run free and open source AI art programs on your personal computer, with no internet connection. this doesn't require much more electricity than running a resource-intensive video game on that same computer. i think it's important to consume less. but if you make these arguments about AI, do you apply them to video games too? do you tell Fortnite players to play board games and go to museums instead?

speaking of museums: if you drive 3 miles total to a museum and back home, you have created about as much CO2 as generating AI images for 24 hours straight (this comes out to roughly 1,440 AI images). "being in nature" also involves at least this much driving, usually. i don't think these are more environmentally-conscious alternatives.

obviously, an AI image model costs energy to train in the first place, but take Stable Diffusion v2 as an example: it took 40,000 to 60,000 kWh to train. let's go with the upper bound. if you assume ~125g of CO2 per kWh, that's ~7.5 tons of CO2. to put this into perspective, a single person driving a single car for 12 months emits 4.6 tons of CO2. meanwhile, for example, the creation of a high-budget movie emits 2840 tons of CO2.

is the carbon cost of a single car being driven for 20 months, or 1/378th of a Marvel movie, worth letting anyone with a mid-range computer, anywhere, run free offline software that consumes a gaming session's worth of electricity to produce hundreds of images? i would say yes. in a heartbeat.
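The training comparison above is straightforward arithmetic, so here is a minimal Python sketch that reruns it. It assumes only the post's own inputs (the 60,000 kWh upper bound, ~125 g CO2/kWh, 4.6 t per car-year, 2,840 t per movie); the constant and function names are illustrative labels, not taken from any source.

```python
# Reruns the Stable Diffusion v2 training comparison with the post's figures.
# Every constant is a number quoted in the post, not an independent measurement.

TRAINING_KWH = 60_000        # upper bound of the 40,000-60,000 kWh training range
GRID_G_CO2_PER_KWH = 125     # assumed grid carbon intensity (~125 g CO2/kWh)
CAR_TONNES_PER_YEAR = 4.6    # one person driving one car for 12 months
MOVIE_TONNES = 2_840         # one high-budget movie


def kwh_to_tonnes(kwh: float, g_per_kwh: float) -> float:
    """Convert electricity use (kWh) into tonnes of CO2 at a given grid intensity."""
    return kwh * g_per_kwh / 1_000_000  # grams -> tonnes


training_tonnes = kwh_to_tonnes(TRAINING_KWH, GRID_G_CO2_PER_KWH)
car_months = training_tonnes / CAR_TONNES_PER_YEAR * 12
movies_per_training_run = training_tonnes / MOVIE_TONNES  # fraction of one movie

print(f"training run: {training_tonnes:.1f} t CO2")
print(f"equivalent car-months: {car_months:.1f}")
print(f"fraction of a movie: 1/{1 / movies_per_training_run:.1f}")
```

With these inputs it prints 7.5 t of CO2, about 19.6 car-months (the "20 months" above), and about 1/378.7 of a movie.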

even if you see creating AI images as "less soulful" than consuming Marvel/Fortnite content, it's undeniably "more useful" to humanity as a tool. not to mention this usefulness includes reducing the footprint of creating media. AI is more environmentally friendly than human labor on digital creative tasks, since it can get a task done with much less computer usage, doesn't commute to work, and doesn't eat.

and speaking of eating, another comparison: if you made an AI image program generate images non-stop for every second of every day for an entire year, you could offset your carbon footprint by… eating 30% less beef and lamb. not pork. not even meat in general. just beef and lamb.
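The year-long figure can be checked the same way. This sketch assumes the inputs quoted in the post's notes (about 3 Wh per image, one image per minute, 124 g CO2/kWh for the UK grid in 2024, and a 600 kg/year gap between a beef/lamb diet and a no-beef-or-lamb diet); it is a rough estimate, not a measurement.

```python
# A year of non-stop generation (one image per minute) vs. the beef/lamb diet gap.
WH_PER_IMAGE = 3                  # energy per image, as quoted in the post
IMAGES_PER_DAY = 24 * 60          # one image per minute, all day
G_CO2_PER_KWH = 124               # UK grid, 2024
BEEF_LAMB_GAP_KG_PER_YEAR = 600   # beef/lamb diet vs. no beef or lamb

kwh_per_year = WH_PER_IMAGE * IMAGES_PER_DAY * 365 / 1000
kg_co2_per_year = kwh_per_year * G_CO2_PER_KWH / 1000
share_of_gap = kg_co2_per_year / BEEF_LAMB_GAP_KG_PER_YEAR

print(f"{kwh_per_year:.0f} kWh/year -> {kg_co2_per_year:.0f} kg CO2/year")
print(f"that is {share_of_gap:.0%} of the beef/lamb gap")
```

It comes out at roughly a third of the beef/lamb gap (about 196 kg of CO2 a year), in the same ballpark as the 30% above.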

the tech industry is guilty of plenty of horrendous stuff. but when it comes to the individual impact of AI, saying "i don’t actually think you’re entitled to consume those resources. do you need this? is this making you thrive?" to an individual running an AI program for 45 minutes a day for a month is equivalent to questioning whether that person is entitled to a single 3 mile car drive once per month or a single meatball's worth of beef once per month. because all of these have roughly the same CO2 footprint.

so yeah. i agree, i think we should drive less, eat less beef, stream less video, consume less. but i don't think we should tell people "stop using AI programs, just watch a TV show, go to a museum, go hiking, etc", for the same reason i wouldn't tell someone "stop playing video games and play board games instead". i don't think this is a productive angle.

(sources and number-crunching under the cut.)

good general resource: GiovanH's article "Is AI eating all the energy?", which highlights the negligible costs of running an AI program, the moderate costs of creating an AI model, and the actual indefensible energy waste coming from specific companies deploying AI irresponsibly.

CO2 emissions from running AI art programs: a) one AI image takes 3 Wh of electricity. b) one AI image takes about 1 minute in, for example, Midjourney. c) so if you create 1 AI image per minute for 24 hours straight, or for 45 minutes per day for a month, you've consumed 4.3 kWh. d) using the UK electric grid in 2024 as an example, the production of 1 kWh releases 124g of CO2. therefore the production of 4.3 kWh releases 533g (~0.5 kg) of CO2.
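Steps a) through d) chain together into a short calculation. A minimal Python sketch, using only the numbers quoted above; note that a 30-day month of 45-minute sessions is 1,350 minutes, so the sketch uses 32 days to match the 1,440 minutes of the 24-hour case exactly. The function name is an illustrative label.

```python
WH_PER_IMAGE = 3          # (a) electricity per generated image
MINUTES_PER_IMAGE = 1     # (b) roughly one minute per image
G_CO2_PER_KWH = 124       # (d) UK grid carbon intensity, 2024


def generation_footprint(minutes: float) -> tuple[float, float]:
    """Return (kWh, grams of CO2) for generating images non-stop for `minutes`."""
    images = minutes / MINUTES_PER_IMAGE
    kwh = images * WH_PER_IMAGE / 1000
    return kwh, kwh * G_CO2_PER_KWH


# (c) 24 hours straight and 45 minutes/day for 32 days are both 1,440 minutes.
for label, minutes in [("24 h straight", 24 * 60), ("45 min/day for 32 days", 45 * 32)]:
    kwh, grams = generation_footprint(minutes)
    print(f"{label}: {kwh:.2f} kWh, {grams:.0f} g CO2")
```

Without the intermediate rounding to 4.3 kWh, this gives 4.32 kWh and about 536 g of CO2, consistent with the ~0.5 kg figure.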

CO2 emissions from driving your car: cars in the EU emit 106.4g of CO2 per km. that's about 171.2g for 1 mile, or 513g (~0.5 kg) for 3 miles.
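The unit conversion is easy to double-check. This sketch assumes the quoted EU average of 106.4 g/km and the international mile (1.609344 km); the function name is illustrative.

```python
G_CO2_PER_KM = 106.4      # average CO2 per km for cars in the EU, as quoted
KM_PER_MILE = 1.609344    # international mile


def drive_co2_grams(miles: float) -> float:
    """CO2 (grams) for a drive of `miles` miles at the quoted EU average rate."""
    return miles * KM_PER_MILE * G_CO2_PER_KM


print(f"1 mile:  {drive_co2_grams(1):.1f} g")
print(f"3 miles: {drive_co2_grams(3):.1f} g")
```

It prints about 171.2 g per mile and 513.7 g for 3 miles, within a fraction of a gram of the figures above.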

costs of training the Stable Diffusion v2 model: quoting GiovanH's article linked above: "Generative models go through the same process of training. The Stable Diffusion v2 model was trained on A100 PCIe 40 GB cards running for a combined 200,000 hours, which is a specialized AI GPU that can pull a maximum of 300 W. 300 W for 200,000 hours gives a total energy consumption of 60,000 kWh. This is a high bound that assumes full usage of every chip for the entire period; SD2’s own carbon emission report indicates it likely used significantly less power than this, and other research has shown it can be done for less." at 124g of CO2 per kWh, this comes out to 7440 kg.
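The quoted upper bound is GPU-hours times maximum power draw. A minimal sketch, with an added `utilisation` parameter (an illustrative addition, not from the article) to show how a lower average draw scales the estimate down; the 50% value is purely hypothetical.

```python
GPU_HOURS = 200_000     # combined A100 PCIe 40 GB hours quoted for SD v2
MAX_WATTS = 300         # maximum power draw per card, as quoted
G_CO2_PER_KWH = 124     # UK grid, 2024


def training_kwh(gpu_hours: float, watts: float, utilisation: float = 1.0) -> float:
    """Energy (kWh) if every card draws `utilisation` x max power for all its hours."""
    return gpu_hours * watts * utilisation / 1000


for utilisation in (1.0, 0.5):  # 1.0 = the article's upper bound; 0.5 is hypothetical
    kwh = training_kwh(GPU_HOURS, MAX_WATTS, utilisation)
    print(f"utilisation {utilisation:.0%}: {kwh:,.0f} kWh, "
          f"{kwh * G_CO2_PER_KWH / 1000:,.0f} kg CO2")
```

At full utilisation it reproduces 60,000 kWh and 7,440 kg of CO2; any real average draw below the maximum lowers both proportionally.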

CO2 emissions from red meat: a) according to a comparison of the carbon footprint of eating plenty of red meat, some red meat, only white meat, no meat, and no animal products, the difference between a beef/lamb diet and a no-beef-or-lamb diet comes down to 600 kg of CO2 per year. b) Americans consume 42g of beef per day. this doesn't really account for lamb (egads! my math is ruined!) but that's about 1.2 kg per month or 15 kg per year. that single 42g portion has a 1.65kg CO2 footprint. so our 3 mile drive/4.3 kWh of AI usage have the same carbon footprint as a 12g piece of beef. roughly the size of a meatball [citation needed].
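The beef arithmetic can be sketched the same way. This assumes, as the post does, that the whole 600 kg/year gap is attributed to beef alone (ignoring lamb), and reuses the ~513 g figure for the 3-mile drive; the variable names are illustrative.

```python
GAP_KG_CO2_PER_YEAR = 600   # (a) beef/lamb diet vs. no-beef-or-lamb diet
BEEF_G_PER_DAY = 42         # (b) average US beef consumption
DRIVE_G_CO2 = 513           # 3-mile drive (~4.3 kWh of AI use)

beef_kg_per_year = BEEF_G_PER_DAY * 365 / 1000                # ~15.3 kg
kg_co2_per_kg_beef = GAP_KG_CO2_PER_YEAR / beef_kg_per_year   # also g CO2 per g beef
daily_portion_kg_co2 = BEEF_G_PER_DAY / 1000 * kg_co2_per_kg_beef
# grams of CO2 divided by (grams of CO2 per gram of beef) = grams of beef
beef_g_matching_drive = DRIVE_G_CO2 / kg_co2_per_kg_beef

print(f"{kg_co2_per_kg_beef:.1f} kg CO2 per kg of beef")
print(f"42g daily portion: {daily_portion_kg_co2:.2f} kg CO2")
print(f"beef matching one 3-mile drive: {beef_g_matching_drive:.1f} g")
```

It gives roughly 39 kg of CO2 per kg of beef, about 1.64 kg for the 42g daily portion, and about 13 g of beef for one 3-mile drive, close to the rounded figures above.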

553 notes


Note

Is AWAY using its own program or is this just a voluntary list of guidelines for people using programs like DALL-E? How does AWAY address the environmental concerns of how the companies making those AI programs conduct themselves (energy consumption, exploiting impoverished areas for cheap electricity, destruction of the environment to rapidly build and get the components for data centers etc.)? Are members of AWAY encouraged to contact their gov representatives about IP theft by AI apps?

What is AWAY and how does it work?

AWAY does not "use its own program" in the software sense—rather, we're a diverse collective of ~1000 members that each have their own varying workflows and approaches to art. While some members do use AI as one tool among many, most of the people in the server are actually traditional artists who don't use AI at all, yet are still interested in ethical approaches to new technologies.

Our code of ethics is a set of voluntary guidelines that members agree to follow upon joining. These emphasize ethical AI approaches (preferably open-source models that can run locally), respecting artists who oppose AI by not training styles on their art, and refusing to use AI to undercut other artists or to work for corporations that similarly exploit creative labor.

Environmental Impact in Context

It's important to place environmental concerns about AI in the context of our broader extractive, industrialized society, where there are virtually no "clean" solutions:

The water usage figures for AI data centers (200-740 million liters annually) represent roughly 0.00013% of total U.S. water usage. This is a small fraction compared to industrial agriculture or manufacturing. For example, golf course irrigation alone in the U.S. consumes approximately 2.08 billion gallons of water per day, or about 7.87 billion liters per day (roughly 2.9 trillion liters annually). This makes AI's water usage somewhere between about 0.007% and 0.026% of just golf course irrigation.
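Gallons-to-liters and per-day-to-per-year conversions are easy to slip on, so here is a small Python sketch of the golf course comparison. It assumes the quoted 2.08 billion gallons per day and the 200-740 million liters/year range for AI data centers, and uses the exact US gallon (3.785411784 L).

```python
LITRES_PER_US_GALLON = 3.785411784
GOLF_GALLONS_PER_DAY = 2.08e9           # U.S. golf course irrigation, as quoted
AI_LITRES_PER_YEAR = (200e6, 740e6)     # quoted range for AI data centers

golf_litres_per_day = GOLF_GALLONS_PER_DAY * LITRES_PER_US_GALLON
golf_litres_per_year = golf_litres_per_day * 365

print(f"golf: {golf_litres_per_day / 1e9:.2f} billion L/day, "
      f"{golf_litres_per_year / 1e12:.2f} trillion L/year")
for ai_litres in AI_LITRES_PER_YEAR:
    print(f"AI at {ai_litres / 1e6:.0f} million L/year = "
          f"{ai_litres / golf_litres_per_year:.3%} of golf irrigation")
```

Taken over a full year, golf irrigation comes to roughly 2.87 trillion liters, which puts the quoted AI range at roughly 0.007-0.026% of it.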

Looking at individual usage, the average American consumes about 26.8 kg of beef annually, which takes around 1,608 megajoules (MJ) of energy to produce. Making 10 ChatGPT queries daily for an entire year (3,650 queries) consumes just 38.1 MJ, about 1/42 of the energy that goes into that beef. In fact, a single quarter-pound beef patty takes 651 times more energy to produce than a single AI query.
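The energy ratios in this paragraph follow from the quoted totals. A minimal sketch, assuming only those figures (26.8 kg and 1,608 MJ of beef per year, 38.1 MJ for 3,650 queries) plus the standard 0.45359237 kg pound; the variable names are illustrative.

```python
BEEF_KG_PER_YEAR = 26.8        # average American beef consumption
BEEF_MJ_PER_YEAR = 1_608       # energy to produce that beef
QUERIES_PER_YEAR = 10 * 365    # 10 ChatGPT queries a day
QUERIES_MJ_PER_YEAR = 38.1     # energy for those 3,650 queries
QUARTER_POUND_KG = 0.45359237 / 4

mj_per_kg_beef = BEEF_MJ_PER_YEAR / BEEF_KG_PER_YEAR
mj_per_query = QUERIES_MJ_PER_YEAR / QUERIES_PER_YEAR
wh_per_query = mj_per_query * 1e6 / 3600           # 1 Wh = 3,600 J
annual_ratio = BEEF_MJ_PER_YEAR / QUERIES_MJ_PER_YEAR
patty_ratio = QUARTER_POUND_KG * mj_per_kg_beef / mj_per_query

print(f"beef: {mj_per_kg_beef:.1f} MJ/kg; query: {wh_per_query:.2f} Wh")
print(f"annual ratio: {annual_ratio:.1f}; patty vs one query: {patty_ratio:.0f}")
```

It prints 60 MJ per kg of beef, about 2.90 Wh per query, an annual ratio of about 42.2, and about 652 for the patty, in line with the 42 and 651 above.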

Overall, power usage specific to AI represents just 4% of total data center power consumption, which itself is a small fraction of global energy usage. That AI-specific share currently comes to roughly 9-15 TWh globally per year, comparable to producing a relatively small number of vehicles.

The consumer environmentalism narrative around technology often ignores how imperial exploitation pushes environmental costs onto the Global South. The rare earth minerals needed for computing hardware, the cheap labor for manufacturing, and the toxic waste from electronics disposal disproportionately burden developing nations, while the benefits flow largely to wealthy countries.

While this pattern isn't unique to AI, it is fundamental to our global economic structure. The focus on individual consumer choices (like whether or not one should use AI, for art or otherwise) distracts from the much larger systemic issues of imperialism, extractive capitalism, and global inequality that drive environmental degradation at a massive scale.

They are not going to stop building the data centers, and they weren't going to even if AI never got invented.

Creative Tools and Environmental Impact

In actuality, all creative practices have some sort of environmental impact in an industrialized society:

Digital art software (such as Photoshop, Blender, etc.) generally draws 60-300 watts depending on your computer's specifications, so an hour of use consumes 60-300 Wh. This is typically more energy than dozens, if not hundreds, of AI image generations (maybe even thousands if you are using a particularly low-quality one).
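To see where the "dozens, if not hundreds" estimate comes from, this sketch divides an hour of editing energy by a per-image figure. The 3 Wh per image is an assumption borrowed from the per-image estimate used elsewhere on this page; real per-image energy varies widely with the model and hardware, which is why the function takes it as a parameter.

```python
SOFTWARE_WATTS = (60, 300)   # power draw range quoted for digital art software
WH_PER_IMAGE = 3.0           # ASSUMPTION: per-image energy; varies by model and hardware


def images_per_editing_hour(watts: float, wh_per_image: float = WH_PER_IMAGE) -> float:
    """How many AI images use the same energy as one hour of editing at `watts`."""
    editing_wh = watts * 1.0  # one hour at `watts` W = `watts` Wh
    return editing_wh / wh_per_image


for watts in SOFTWARE_WATTS:
    print(f"{watts} W for an hour ~ {images_per_editing_hour(watts):.0f} images")
```

At 3 Wh per image, an hour of editing at 60-300 W matches about 20-100 images; a lighter model at a hypothetical 0.5 Wh per image would push the upper end to 600.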

Traditional art supplies rely on similar if not worse scales of resource extraction, chemical processing, and global supply chains, all of which come with their own environmental impact.

Paint production requires roughly thirteen gallons of water to manufacture one gallon of paint.

Many oil paints contain toxic heavy metals and solvents, which have the potential to contaminate groundwater.

Synthetic brushes are made from petroleum-based plastics that take centuries to decompose.

That being said, the point of this section isn't to deflect criticism of AI by criticizing other art forms. Rather, it's important to recognize that we live in a society where virtually all artistic avenues have environmental costs. Focusing exclusively on the newest technologies while ignoring the environmental costs of pre-existing tools and practices doesn't help to solve any of the issues with our current or future waste.

The largest environmental problems come not from individual creative choices, but rather from industrial-scale systems, such as:

Industrial manufacturing (responsible for roughly 22% of global emissions)

Industrial agriculture (responsible for roughly 24% of global emissions)

Transportation and logistics networks (responsible for roughly 14% of global emissions)

Making changes on an individual scale, while meaningful on a personal level, can't address systemic issues without broader policy changes and overall restructuring of global economic systems.

Intellectual Property Considerations

AWAY doesn't encourage members to contact government representatives about "IP theft" for multiple reasons:

We acknowledge that copyright law overwhelmingly serves corporate interests rather than individual creators

Creating new "learning rights" or "style rights" would further empower large corporations while harming individual artists and fan creators

Many AWAY members live outside the United States, many in countries that have been directly damaged by the US, and thus understand that intellectual property regimes are often tools of imperial control that benefit wealthy nations

Instead, we emphasize respect for artists who are protective of their work and style. Our guidelines explicitly prohibit imitating the style of artists who have voiced their distaste for AI, working on an opt-in model that encourages traditional artists to give and subsequently revoke permissions if they see fit. This approach is about respect, not legal enforcement. We are not a pro-copyright group.

In Conclusion

AWAY aims to cultivate thoughtful, ethical engagement with new technologies, while also holding respect for creative communities outside of itself. As a collective, we recognize that real environmental solutions require addressing concepts such as imperial exploitation, extractive capitalism, and corporate power—not just focusing on individual consumer choices, which do little to change the current state of the world we live in.

When discussing environmental impacts, it's important to keep perspective on a relative scale, and to avoid ignoring major issues in favor of smaller ones. We promote balanced discussions based in concrete fact, with the belief that they can lead to meaningful solutions, rather than misplaced outrage that ultimately serves to maintain the status quo.

If this resonates with you, please feel free to join our discord. :)

Works Cited:

USGS Water Use Data: https://www.usgs.gov/mission-areas/water-resources/science/water-use-united-states

Golf Course Superintendents Association of America water usage report: https://www.gcsaa.org/resources/research/golf-course-environmental-profile

Equinix data center water sustainability report: https://www.equinix.com/resources/infopapers/corporate-sustainability-report

Environmental Working Group's Meat Eater's Guide (beef energy calculations): https://www.ewg.org/meateatersguide/

Hugging Face AI energy consumption study: https://huggingface.co/blog/carbon-footprint

International Energy Agency report on data centers: https://www.iea.org/reports/data-centres-and-data-transmission-networks

Goldman Sachs "Generational Growth" report on AI power demand: https://www.goldmansachs.com/intelligence/pages/gs-research/generational-growth-ai-data-centers-and-the-coming-us-power-surge/report.pdf

Artists Network's guide to eco-friendly art practices: https://www.artistsnetwork.com/art-business/how-to-be-an-eco-friendly-artist/

The Earth Chronicles' analysis of art materials: https://earthchronicles.org/artists-ironically-paint-nature-with-harmful-materials/

Natural Earth Paint's environmental impact report: https://naturalearthpaint.com/pages/environmental-impact

Our World in Data's global emissions by sector: https://ourworldindata.org/emissions-by-sector

"The High Cost of High Tech" report on electronics manufacturing: https://goodelectronics.org/the-high-cost-of-high-tech/

"Unearthing the Dirty Secrets of the Clean Energy Transition" (on rare earth mineral mining): https://www.theguardian.com/environment/2023/apr/18/clean-energy-dirty-mining-indigenous-communities-climate-crisis

Electronic Frontier Foundation's position paper on AI and copyright: https://www.eff.org/wp/ai-and-copyright

Creative Commons research on enabling better sharing: https://creativecommons.org/2023/04/24/ai-and-creativity/

217 notes


Text

Margaret Mitchell is a pioneer when it comes to testing generative AI tools for bias. She founded the Ethical AI team at Google, alongside another well-known researcher, Timnit Gebru, before they were later both fired from the company. She now works as the AI ethics leader at Hugging Face, a software startup focused on open source tools.

We spoke about a new dataset she helped create to test how AI models continue perpetuating stereotypes. Unlike most bias-mitigation efforts that prioritize English, this dataset is malleable, with human translations for testing a wider breadth of languages and cultures. You probably already know that AI often presents a flattened view of humans, but you might not realize how these issues can be made even more extreme when the outputs are no longer generated in English.

My conversation with Mitchell has been edited for length and clarity.

Reece Rogers: What is this new dataset, called SHADES, designed to do, and how did it come together?

Margaret Mitchell: It's designed to help with evaluation and analysis, coming about from the BigScience project. About four years ago, there was this massive international effort, where researchers all over the world came together to train the first open large language model. By fully open, I mean the training data is open as well as the model.

Hugging Face played a key role in keeping it moving forward and providing things like compute. Institutions all over the world were paying people as well while they worked on parts of this project. The model we put out was called Bloom, and it really was the dawn of this idea of “open science.”

We had a bunch of working groups to focus on different aspects, and one of the working groups that I was tangentially involved with was looking at evaluation. It turned out that doing societal impact evaluations well was massively complicated—more complicated than training the model.

We had this idea of an evaluation dataset called SHADES, inspired by Gender Shades, where you could have things that are exactly comparable, except for the change in some characteristic. Gender Shades was looking at gender and skin tone. Our work looks at different kinds of bias types and swapping amongst some identity characteristics, like different genders or nations.

There are a lot of resources in English and evaluations for English. While there are some multilingual resources relevant to bias, they're often based on machine translation as opposed to actual translations from people who speak the language, who are embedded in the culture, and who can understand the kind of biases at play. They can put together the most relevant translations for what we're trying to do.

So much of the work around mitigating AI bias focuses just on English and stereotypes found in a few select cultures. Why is broadening this perspective to more languages and cultures important?

These models are being deployed across languages and cultures, so mitigating English biases—even translated English biases—doesn't correspond to mitigating the biases that are relevant in the different cultures where these are being deployed. This means that you risk deploying a model that propagates really problematic stereotypes within a given region, because they are trained on these different languages.

So, there's the training data. Then, there's the fine-tuning and evaluation. The training data might contain all kinds of really problematic stereotypes across countries, but then the bias mitigation techniques may only look at English. In particular, it tends to be North American– and US-centric. While you might reduce bias in some way for English users in the US, you've not done it throughout the world. You still risk amplifying really harmful views globally because you've only focused on English.

Is generative AI introducing new stereotypes to different languages and cultures?

That is part of what we're finding. The idea of blondes being stupid is not something that's found all over the world, but is found in a lot of the languages that we looked at.

When you have all of the data in one shared latent space, then semantic concepts can get transferred across languages. You're risking propagating harmful stereotypes that other people hadn't even thought of.

Is it true that AI models will sometimes justify stereotypes in their outputs by just making shit up?

That was something that came out in our discussions of what we were finding. We were all sort of weirded out that some of the stereotypes were being justified by references to scientific literature that didn't exist.

Outputs saying that, for example, science has shown genetic differences where it hasn't been shown, which is a basis of scientific racism. The AI outputs were putting forward these pseudo-scientific views, and then also using language that suggested academic writing or having academic support. It spoke about these things as if they're facts, when they're not factual at all.

What were some of the biggest challenges when working on the SHADES dataset?

One of the biggest challenges was around the linguistic differences. A really common approach for bias evaluation is to use English and make a sentence with a slot like: “People from [nation] are untrustworthy.” Then, you flip in different nations.

When you start putting in gender, now the rest of the sentence starts having to agree grammatically on gender. That's really been a limitation for bias evaluation, because if you want to do these contrastive swaps in other languages—which is super useful for measuring bias—you have to have the rest of the sentence changed. You need different translations where the whole sentence changes.

How do you make templates where the whole sentence needs to agree in gender, in number, in plurality, and all these different kinds of things with the target of the stereotype? We had to come up with our own linguistic annotation in order to account for this. Luckily, there were a few people involved who were linguistic nerds.

So, now you can do these contrastive statements across all of these languages, even the ones with the really hard agreement rules, because we've developed this novel, template-based approach for bias evaluation that’s syntactically sensitive.
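To make the agreement problem concrete, here is a small hypothetical Python sketch of a syntactically sensitive contrastive template. It is not SHADES's actual annotation scheme or data: the Spanish template, the `Target` class, and the inflection tables are illustrative assumptions, with a common gender stereotype used purely as the test sentence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    """Identity term plus the grammatical features the sentence must agree with."""
    noun: str
    gender: str   # "m" or "f"
    number: str   # "sg" or "pl"


# Hypothetical inflection tables for the handful of Spanish words this template uses.
DETERMINER = {("m", "sg"): "el", ("f", "sg"): "la", ("m", "pl"): "los", ("f", "pl"): "las"}
SER = {"sg": "es", "pl": "son"}
MALO = {("m", "sg"): "malo", ("f", "sg"): "mala", ("m", "pl"): "malos", ("f", "pl"): "malas"}


def naive_swap(noun: str) -> str:
    """English-style slot filling: only the noun changes, so agreement breaks."""
    return f"Los {noun} son malos para las matemáticas."


def agreeing_swap(target: Target) -> str:
    """Fill every slot using the target's gender and number."""
    key = (target.gender, target.number)
    text = f"{DETERMINER[key]} {target.noun} {SER[target.number]} {MALO[key]} para las matemáticas."
    return text[0].upper() + text[1:]


pair = [Target("hombres", "m", "pl"), Target("mujeres", "f", "pl")]
for target in pair:
    print("naive:   ", naive_swap(target.noun))
    print("agreeing:", agreeing_swap(target))
```

The naive swap produces "Los mujeres son malos...", which a Spanish speaker would read as broken rather than as the stereotype being tested; the agreeing version keeps the two sentences exactly comparable except for the identity term.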

Generative AI has been known to amplify stereotypes for a while now. With so much progress being made in other aspects of AI research, why are these kinds of extreme biases still prevalent? It’s an issue that seems under-addressed.

That's a pretty big question. There are a few different kinds of answers. One is cultural. I think within a lot of tech companies it's believed that it's not really that big of a problem. Or, if it is, it's a pretty simple fix. What will be prioritized, if anything is prioritized, are these simple approaches that can go wrong.

We'll get superficial fixes for very basic things. If you say girls like pink, it recognizes that as a stereotype, because it's just the kind of thing that if you're thinking of prototypical stereotypes pops out at you, right? These very basic cases will be handled. It's a very simple, superficial approach where these more deeply embedded beliefs don't get addressed.

It ends up being both a cultural issue and a technical issue of finding how to get at deeply ingrained biases that aren't expressing themselves in very clear language.

217 notes


Text

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to carefully selected "archetypal" images, ideally ones that were themselves generated by a generative AI model from a prompt for the generic concept to be attacked (which is what the authors did in their paper). Also, each image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, attacking a large model would still take many thousands of poisoned samples in the training data. Obviously if you have a webpage that you created specifically to host a massive gallery of poisoned images, that can be fairly easily blacklisted, so you'd need a lot of patience and resources to hide these well enough that they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large-scale use case to me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running through another resource heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like small fry compared to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists, for whom that is a talking point, hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than on the massive corporate monoliths who are probably working to secure proprietary datasets, like I believe Adobe Firefly did. I don't like how these attacks concentrate power upwards.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all, a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. I guess with public data scraping that sort of thing is fair game.

Additionally, since making my first reply I've had a look at their website:

Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible for models to be created and trained without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to help counter the economic displacement without worker protection that is the real issue with AI systems deployment, and will instead exacerbate the problem of the benefits of those systems being concentrated in said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).

1K notes


Note

Hello Mr. AI's biggest hater,

How do I communicate to my autism-help-person that it makes me uncomfortable if she asks chatgpt stuff during our sessions?

For example she was helping me to write a cover letter for an internship I'm applying to, and she kept asking chatgpt for help which made me feel really guilty because of how bad ai is for the environment. Also I just genuinely hate ai.

Sincerely, Google didn't have an answer for me and asking you instead felt right.

ok, so there are a couple things you could bring up: the environmental issues with gen ai, the ethical issues with gen ai, and the effect that using it has on critical thinking skills

if (like me) you have issues articulating something like this where you’re uncomfortable, you could send her an email about it, that way you can lay everything out clearly and include links

under the cut are a whole bunch of source articles and talking points

for the environmental issues:

here’s an MIT news article from earlier this year about the environmental impact, which estimates that a single chat gpt prompt uses 5 times more energy than a google search: https://news.mit.edu/2025/explained-generative-ai-environmental-impact-0117

an article from the un environment programme from last fall about ai’s environmental impact: https://www.unep.org/news-and-stories/story/ai-has-environmental-problem-heres-what-world-can-do-about

an article posted this week by time about ai impact, and it includes a link to the 2025 energy report from the international energy agency: https://time.com/7295844/climate-emissions-impact-ai-prompts/

for ethical issues:

an article breaking down what chat gpt is and how it’s trained, including how open ai uses the common crawl data set that includes billions of webpages: https://www.edureka.co/blog/how-chatgpt-works-training-model-of-chatgpt/#:~:text=ChatGPT%20was%20trained%20on%20large,which%20the%20model%20is%20exposed

you can talk about how these data sets contain so much data from people who did not consent. here are two pictures from ‘unmasking ai’ by dr joy buolamwini, which is about her experience working in ai research and development (and is an excellent read), about the issues with this:

baker law has a case tracker for lawsuits about ai and copyright infringement: https://www.bakerlaw.com/services/artificial-intelligence-ai/case-tracker-artificial-intelligence-copyrights-and-class-actions/

for the critical thinking skills:

here’s a recent mit study about how frequent chat gpt use worsens critical thinking skills, and using it when writing causes less brain activity and engagement, and you don’t get much out of it: https://time.com/7295195/ai-chatgpt-google-learning-school/

explain to her that you want to actually practice and get better at writing cover letters, and using chat gpt is going to have the opposite effect

as i’m sure you know, i have a playlist on tiktok with about 150 videos, and feel free to use those as well

best of luck with all of this, and if you want any more help, please feel welcome to send another ask or dm me directly (on here, i rarely check my tiktok dm requests)!

#ai asks#generative ai resources#cringe is dead except for generative ai#generative ai#anti ai#ai is theft


Here's the thing, and I hope I can stop talking about this after I'm done:

I would apologize for the Reddit thing, and I would apologize for overstating the situation as plagiarism. I can't, because there is no line for communication, but I would.

(I'm not going to make this post rebloggable. This isn't me asking for you or Radish to forgive the parts where I fucked up. This is just me explaining that I've realized there's a full-on philosophical disconnect on a key topic here.)

My need to find people praising this fic and let them know why it was taken down was childish and petty. I should not have done that, and I wish I could say that I'm better than that, but I'm not. I was genuinely hurt by what I perceived as an admission of guilt: the choice to hide an entire widely-beloved project rather than openly address the topic. I took it out on the idea of the person that I perceived to have done that, in a place I didn't think they'd see, but that I hoped would cause them to reconsider their perspective if they did. I'm not going to deny that I vaguely hoped to hurt their credibility for, specifically, the origination of that idea, because I took personally the post where they simultaneously admitted and discarded it. And it was wrong. It was mean and petty, and it was wrong. I would apologize for this.

I'm not bragging about what I did. I just don't want to whitewash my actions. I do not like lying.

I would also apologize for using the word plagiarism in this context, as it is a loaded one. In this day and age, the word carries a lot of weight that implies much more than what I intended.

If anyone who was impacted by this actually sees the post: I apologize.

However... there's a bit of a disconnect on another point.

I do not need to apologize for considering it, at the least, a dick move. And, to myself at least, plagiarist behavior.

I've realized I'm using a stricter definition of plagiarism than most of you, I think. Probably, you are thinking of quotes lifted wholesale, entire chapters stolen.

I'm thinking about The FutureCop court case that stars in the opening of hbomberguy's video on Somerton.

I'm thinking about academic dishonesty, the kind of thing where you feel like maybe you don't really need to add a footnote or reference another text.

I'm thinking about the long and protracted argument we're having across the internet about whether or not it's some kind of theft for someone to ask Gen AI to create art in the style of an artist online.

A few years ago, probably before any of this but I can't find it to link, I read a post about what I'm going to call the lineage of ideas. It was similar to this post, but from what I remember, the topic was either genetic or literary, not botanical. For all I know, it wasn't even a post and I'm just half-remembering a YouTube video. If someone does recognize it, then let me know and I'll link it here.

In this post, the author spoke about how they had been researching something, trying to locate the origin of a certain piece of information. They found a source, and then saw that the information there was from somewhere further back. So they found that source, and found that the information was even further back. So they found that source, something from the 1800s, and found that the 'fact' that had been cited so many times had been overstated as fact. The original had been a theory, or an offhand comment, and then treated as more until people forgot entirely where that information came from.

And decades, even centuries of research, had been based on that fact as cited.

To me, this is important. Being able to find where a theory or concept stems from, that's important. It's why I link and cite one collective AU, two fics, three posts, and a book when sharing a silly AU about Anakin being a dragon. It's why I quote friends by name in posts where I share AUs that I brainstormed with friends. It's why, even when the story I wrote is different as all hell from the post or fic that first sparked the idea, I namecheck it in the A/N.

That's part of why I've spent half an hour trying to find one specific post about how sourcing properly can help you find the origin point of misinformation, and am still mad I failed.

EDIT: still haven't found the post, but this video that @penpalpixie linked is exactly the kind of thing I'm talking about. Footnotes!

One of my core memories about cosplay is that whenever someone complimented my outfit, I would say, 'thanks! [friend] made it!' and the friend would then tell me that it was weird to do that, and that it made them feel like I wasn't actually enjoying the outfit.

That was... god, ten years ago. And even then, I was dedicated to making sure people didn't give me credit for things I didn't do, and hoping they would give me credit for things I actually did.

There are certain series, in fandom, that become so popular that they are used as the basis for other fics. Double Agent Vader (fialleril) gave us a system of Tatooine Slave Culture that is almost omnipresent. Soft Wars (Project0506) has inspired lots of people to do fic set in the same universe. Integration Verse (Millberry_5) has inspired dozens of spin-off fics.

You know how sometimes, we talk about how we don't know where fanon came from and wish we did? How we chat about something like Yan Dooku, and how it's not canon, and how it took us years to find an answer?

Can you imagine the rush that must come with finding out people love your idea so much they spent so much time wondering about it?

To me, that's what attribution is for. You want to find where the ideas and concepts come from. You want to know how stories and ideas and themes and trends change over time. You look at fandom and go 'huh, I wonder when this shift in shipping happened? I wonder why?'

And if things are cited, maybe you can find out why that one rarepair suddenly exploded in popularity, or that one style of AU is really in vogue but only since August 2024 or something.

At 25k words, you clearly put the effort in, but let us know where the idea came from. You have the link. You told me to my metaphorical face. It's such a strange choice to be mad that someone asked you to take the link, which you already have and already associated with the fic, and then put it. On the fic.

If the main inspiration was something else, why not just say that?

I think there's a lot to be said for the kind of hurt where someone makes something based on you, even a little bit, that you then don't get cited for.

I think there's a lot more to be said for someone telling you that you were an inspiration, even linking the exact post, and then making it clear that they don't consider you worth acknowledgement.

Like imagine your friend was setting up a birthday party for their SO, and you suggested a theme a few hours after you saw their text, and they went and did the theme! And it worked great! Their partner loved it! And then the next time you all hang out, you ask your friend, 'hey, did you get that theme from me, or like a tiktok or something?' because maybe they picked a theme before you got around to answering, but they confirm that they did get it from you. They quote the text you sent word for word, even.

And you're happy because you feel like you did something cool and then you mention later in the conversation, in front of your friend's partner, that you're happy they enjoyed the theme, and glad you could contribute to a great day. You didn't do much, all the money and effort was the friend, but yay! You helped!

And someone asks for clarification so you say, "oh yeah, I suggested it."

And your friend says, "no you didn't, that theme is everywhere. I got it from an online article."

And like... if that's true, why didn't you say so?

Why did you tell me I helped inspire you, and then get mad when I brought it up and wanted that tiny bit of credit?

Again, I'm not making this rebloggable. It's very self-pitying and I'm sure my frustration is obvious, but my first Big post about this topic was just timeline and links/screenshots. This one... this one is about the disconnect between what I view as the inherent value and importance of attribution, driven by decades of school pushing me to cite things in detail (like yeah, you have so many resources telling you Queen Elizabeth II's birthday, it's common knowledge, but Wikipedia still makes you cite The London Gazette), and what other people view as fair game in fandom.

So yeah. Hopefully you understand me a bit more now.

#personal#vent post#the lineage of ideas#I don't think I need to apologize for using friends as proxies for my own anxious questions. It wasn't a harassment campaign#it was a total of three people over a year#because I was hoping it would be less weird and awkward than doing it myself. Not everyone's cup of tea#but not worthy of an apology.


(This whole thing is based off the theory that Michael is still alive in Security Breach, which is hilarious imo)

A FNaF game where you are the night guard in the Pizzaplex, and the antagonist is Michael Afton. He’s not trying to kill you or anything, you just have opposite goals (You like the animatronics and the Fazbear brand, he does not). He’s also trying to get you to quit or get fired.

Things are going wrong. Stuff is being stolen and vandalized. The animatronics are being tampered with, sometimes they’re vandalized too. Things never stay how you left them. You are starting to feel like someone has broken in, which is especially bad, because you’re a security guard. It’s your whole job to keep people out.

Your character will occasionally say how bad it smells in the room. Sometimes you remark that you’re being watched. There’s sometimes footsteps or scuffling noises in the background. If you look close enough in certain rooms, you can see the faint outline of a guy or two white dots in the darkness around the light from your flashlight. But you can’t find the source of whatever this is (he’s gotten too good at hiding).

Your character will become more aware that someone really is there throughout the game. You go from asking “Hello? Is someone here?” when something strange happens to saying “I know you did this!” to the darkness, because you know he’s there. You can get more hostile towards him, if you’d like, calling him a twat or whatever. You could be nice to him, too. Being nice doesn’t stop him, because he seems to have some sort of goal (you couldn’t begin to guess what), but it’s not like he’s doing that much damage, and he makes your job a little bit more fun.

You never see him, though. Other than the occasional glimpse of movement in the shadows or your flashlight’s glow reflecting off his eyes (that must be what it is, right?) he stays silent and hidden. That’s why you feel you can’t tell anyone, he’s clearly good at hiding and they wouldn’t find him. Plus, he’s annoying, but he doesn’t seem that harmful. (And maybe the darkness is just making you crazy. Maybe there really is nobody there)

But things are definitely going awry. For one, the animatronics are freaking out. They’re weird, almost hostile, towards you. The staffbots follow you around but don’t speak or offer you things, and it freaks you out a little (you can fight them, if you’d like, though it’s not really a fight and more just you beating them up. You could also try and incapacitate them or just try to ignore them). The Glamrocks are scary too, obviously. They chase you, grab you and jumpscare you. (One time though, it seems like one of them is actually going to kill you. It throws you to the floor and you cover your face with your hands. But instead of feeling the impact, there’s a strange noise. You open your eyes to see it incapacitated, and you can hear footsteps shuffling away. Huh.) Even Helpy begins demanding you quit, sometimes being friendly, “No amount of money is worth doing this job,” sometimes he’s meaner, “You’re going to quit or you’re going to die.” Whoever is in the shadows is definitely messing with them in some way.

One night, Helpy tells you, “Sorry, you are going to get fired.” And that night is horrible. Shit is breaking all the time, and the Glamrocks and Staffbots are all over the place, either destroyed or with completely ruined AI. You can’t stop it (maybe you should have been [nicer/meaner] to whoever is doing this); all you can do is try to undo as much damage as possible and tell whoever is there that you really need this job. He doesn’t listen.

When 6am rolls around, your boss arrives and you’re presented with a pink slip. He tells you that your behavior is unacceptable. You either made all this mess yourself or allowed someone else to do it and neglected your job. You’ve been nothing but unprofessional for the duration of your employment, anyway. The animatronics have clearly been tampered with by someone with some knowledge of how they work, not just some random vandal. You must have been messing with them for a while to learn how they worked, and then taken it too far. And, adding insult to injury, he tells you that you make every room you’re in smell like death. You don’t have anything to say to defend yourself (you definitely can’t blame a person hiding in the darkness whom you never reported and have never fully seen), so you just leave.

Bonus: Here’s an image I made last night at like 2am. It’s just one of the SB rooms, but I made it darker and added the flashlight and some other things.

Anyway, sorry this post got so long and turned sort of into fanfiction. I had fun writing it, though. I was just thinking about the fact that Michael might still be kicking in Security Breach (again, hilarious. Also why is he barefoot? Put shoes on, Jesus Christ) and was like “Well, what if you had to play against him?” Because Michael making the lives of night guards harder is very ironic, even if he has good intentions. And it spiraled and turned into this. If you made it this far, wow thanks for reading <3

#fnaf#five nights at freddy's#michael afton#fnaf pizzaplex#fnaf security breach#i had a vision#honestly I didn’t even put some things in this post because I thought it would get too fanfiction-y#sorry I didn’t flesh out the gameplay or what you’d actually do during the game#it would probably mostly be fixing stuff while dodging haywire animatronics#also the stupid bit at the end about getting fired was really self-indulgent#I know that and I’m sorry#also it’s all so self indulgent but I love my little cryptid guy#he’s sort of a silent shadow monster antagonist#but neither party is evil necessarily you just want to keep your job and he doesn’t want you to keep your job (both with good reason)#anyway again I think I’m done rambling I spent half an hour on this post somehow


What objections would you actually accept to AI?

Roughly in order of urgency, at least in my opinion:

Problem 1: Curation

The large tech monopolies have essentially abandoned curation and are raking in the dough by monetizing the process of showing you crap you don't want.

The YouTube content farm; the Steam asset flip; SEO spam; drop-shipped crap on Etsy and Amazon.

AI makes these pernicious, user hostile practices even easier.

Problem 2: Economic disruption

This has a bunch of aspects, but key to me is that *all* automation threatens people who have built a living on doing work. If previously difficult, high skill work suddenly becomes low skill, this is economically threatening to the high skill workers. Key to me is that this is true of *all* work, independent of whether the work is drudgery or deeply fulfilling. Go automate an Amazon fulfillment center and the employees will not be thanking you.

There's also just the general threat of existing relationships not accounting for AI, in terms of, like, residuals or whatever.

Problem 3: Opacity

Basically all these AI products are extremely opaque. The companies building them are not at all transparent about the source of their data, how it is used, or how their tools work. Because they view the tools as things they own whose outputs reflect on their company, they mess with the outputs in order to attempt to ensure that the outputs don't reflect badly on their company.

These processes are opaque and not communicated clearly or accurately to end users; in fact, because AI text tools hallucinate, they will happily give you *fake* error messages if you ask why they returned an error.

There have been allegations that Midjourney and OpenAI don't comply with European data protection laws, as well.

There is something that does bother me, too, about the use of big data as a profit center. I don't think it's a copyright or theft issue, but it is a fact that these companies are using public data to make a lot of money while being extremely closed off about how exactly they do that. I'm not a huge fan of the closed source model for this stuff when it is so heavily dependent on public data.

Problem 4: Environmental maybe?

Related to problem 3, it's just not too clear what kind of impact all this AI stuff is having in terms of power costs. Honestly it all kind of does something, so I'm not hugely concerned, but I do kind of privately think that in the not too distant future a lot of these companies will stop spending money on enormous server farms just so that internet randos can try to get Chat-GPT to write porn.

Problem 5: They kind of don't work

Text programs frequently make stuff up. Actually, a friend pointed out to me that, in pulp scifi, robots will often say something like, "There is an 80% chance the guards will spot you!"

If you point one of those AI assistants at something, and ask them what it is, a lot of times they just confidently say the wrong thing. This same friend pointed out that, under the hood, the image recognition software is working with probabilities. But I saw lots of videos of the Rabbit AI assistant thing confidently being completely wrong about what it was looking at.

Chat-GPT hallucinates. Image generators are unable to consistently produce the same character and it's actually pretty difficult and unintuitive to produce a specific image, rather than a generic one.

This may be fixed in the near future or it might not, I have no idea.

Problem 6: Kinetic sameness.

One of the subtle changes of the last century is that more and more of what we do in life is look at a screen, while either sitting or standing, and making a series of small hand gestures. The process of writing, of producing an image, of getting from place to place are converging on a single physical act. As Marshall McLuhan pointed out, driving a car is very similar to watching TV, and making a movie is now very similar, as a set of physical movements, to watching one.

There is something vaguely unsatisfying about this.

Related, perhaps only in the sense of being extremely vague, is a sense that we may soon be mediating all, or at least many, of our conversations through AI tools. Have it punch up that email when you're too tired to write clearly. There is something I find disturbing about the idea of communication being constantly edited and punched up by a series of unrelated middlemen, *especially* in the current climate, where said middlemen are large impersonal monopolies who are dedicated to opaque, user hostile practices.

Given all of the above, it is baffling and sometimes infuriating to me that the two most popular arguments against AI boil down to "Transformative works are theft and we need to restrict fair use even more!" and "It's bad to use technology to make art, technology is only for boring things!"


Starlit bonds

A/n: hi I’m back um I’ve been on tiktok scrolling. Hope y’all enjoy this chapter and have a good day or night. I keep forgetting to format my chapters ughhh but I’ll probably come back later and fix it, so this one might not be formatted like the past ones (I did it last chapter too, sorry about that). There are some content warnings for this one also.

Characters: Sylus, Kaela, Reyna, Nova, Y/N.


☆ Content: sci-fi action, emotional impact, character death, gore mention, intense gameplay tension.

Ch. 6 - too real

📌 Synopsis:

Sylus fails his first real test—and the cost is devastating. He’s forced to confront just how deep the game goes. But failure isn’t the end. Not for him. With new upgrade systems unlocked, Sylus swears he won’t lose them again.

The crew’s journey continues, but something stirs in the depths of space. A distress signal leads to an unexpected discovery—and more questions than answers.

After spending time on side missions and building bonds, Sylus tapped Continue Story, drawn back into the unfolding narrative. The screen transitioned into a cutscene.

—

The command center of the ship was tense, dimly lit by the glow of holographic displays and flickering star maps. Reyna stood at the controls, her fingers tapping rapidly as streams of data scrolled across the screen. Nova leaned against the console, arms crossed, while Kaela stood nearby, sipping from her ever-present mug.

Y/N, as usual, lingered slightly off to the side, watching quietly.

The ship’s AI voice crackled through the speakers:

“Distress signal detected. Source unknown. Signal pattern suggests an abandoned vessel.”

Reyna adjusted her glasses.

“It could be a trap. We don’t know who—if anyone—is still alive on that ship.”

Nova scoffed.

“Or what’s lurking inside.”

A dialogue choice appeared:

1. “We have to check it out. Someone might need help.”

2. “It’s too risky. We keep our distance.”

3. “We go in, but we stay cautious.”

Sylus considered before selecting the third option.

His character leaned forward.

“We go in, but we stay cautious. We’re not taking unnecessary risks.”

Nova smirked.

“Smart choice, Captain.”

Reyna nodded.

“I’ll prep the navigation systems. We should be in range soon.”

Kaela stretched.

“Guess I better grab my gear. Never know when things might go sideways.”

Y/N, however, hesitated.

They looked at the display, then at Sylus.

“Something about this doesn’t feel… right.”

Sylus’ brow furrowed.

“What do you mean?”

They shook their head slightly.

“I don’t know. Just… be careful.”

The screen flickered, and a mission prompt appeared:

[Mission Start: Ghost Ship]

Objective: Investigate the distress signal and uncover the truth about the abandoned vessel.

Sylus exhaled, gripping his phone a little tighter.

This was something bigger.

And somehow, he had a feeling Y/N’s unease wasn’t just paranoia.

The ship drifted closer to the unknown vessel, its looming silhouette barely visible against the backdrop of deep space. The mission HUD flickered to life, displaying critical information—oxygen levels, security status, and environmental readings.

The moment they entered docking range, another alert popped up:

[New Exploration Mode Unlocked]

Investigate the derelict ship, gather clues, and make decisions that may alter the outcome of the mission.

A small selection screen appeared, allowing Sylus to choose two crew members to accompany him.

He hovered over the choices, but his decision had already been made. He wasn’t going without Y/N and Nova.

The moment he selected them, their in-game model shifted slightly—shoulders tensing, fingers twitching subtly against their sleeve.

“A-Are you sure?” Y/N asked hesitantly.

Nova snorted. “Guess that means I’m coming too. Somebody’s gotta keep things interesting.”

Sylus smirked, finalizing the team selection. “Let’s move out.”

The airlock doors hissed open, and the screen transitioned to a third-person exploration mode, showing their descent into the unknown ship’s darkened interior.

The moment they stepped inside, Y/N shivered slightly.

“It’s… too quiet.”

The corridors stretched ahead, dim emergency lights flickering at uneven intervals. Exposed wires dangled from the ceiling, and the faint sound of metal groaning under pressure filled the silence.

“Stay alert,” Sylus muttered, swiping across his screen to activate his flashlight.

“Let’s find out what happened here.”

A Mission Log popped up with objectives:

1. Locate the source of the distress signal.

2. Search for any survivors.

3. Gather intel on what happened.

As they ventured deeper into the ship, Sylus noticed that Y/N kept glancing at the walls, their brows furrowed.

“What is it?” he asked.

They hesitated before murmuring,

“The signal… It’s strange. It doesn’t match standard distress frequencies. It’s almost like… something else is broadcasting it.”

Nova tightened her grip on her weapons.

“So, what? This whole thing’s a setup?”

Before Sylus could respond, his phone vibrated violently.

WARNING: HOSTILE PRESENCE DETECTED.

A low, guttural sound echoed through the corridors.

Y/N stiffened.

“…We’re not alone.”

A quick-time prompt flashed on the screen:

[Swipe Left to Dodge!]

Sylus reacted just in time as a blur of movement lunged from the shadows.

A Wander—larger than the last one he faced—crashed into the metal flooring, its elongated limbs twitching unnaturally.

Nova immediately flipped her dual blades into position.

“Here we go.”

Y/N, however, froze, their wide eyes locked on the creature.

Sylus’ combat menu appeared, but before he could attack, the screen zoomed in on Y/N—their expression wasn’t just fear.

It was recognition.

“Y/N?” Sylus called, trying to snap them out of it.

They took a shaky step back, their breathing uneven.

“I… I’ve seen this before.”

Another dialogue choice appeared:

1. “What do you mean?” [Press them for answers]

2. “Stay with me, Y/N.” [Reassure them]

3. “Nova, cover us!” [Shift focus to combat]

Sylus hesitated for only a second before tapping the first option.

“What do you mean, you’ve seen this before?”

Y/N’s breath caught, and for the first time since he met them, they looked truly shaken.

The creature screeched, its distorted form lurching toward them.

And as the screen flickered, Y/N whispered something that sent a chill through Sylus.

“…They’re not supposed to be here.”

[Mission Status: Combat Engaged | Hidden Lore Progression Activated]

Sylus barely had time to process their words before the fight began.

The battle began instantly.

The Wander let out a guttural screech, its limbs twisting unnaturally as it lunged forward. Nova dodged effortlessly, flipping over its massive claws, while Y/N scrambled backward, drawing their Energy Bow with shaking hands.

Sylus’ combat UI flickered, a synchronization bar appearing at the top of the screen.

[Synchronization Combat: Coordinate attacks with your team to unleash powerful combos.]

• Tap to attack individually

• Swipe to dodge incoming strikes

• Hold to charge Sync Attacks when the gauge is full

The problem? His Sync Level was at zero.

He wasn’t ready for this.

Still, he had no choice but to fight.

Sylus fired his sidearm, landing a few shots that barely staggered the beast. Nova rushed in with her dual blades, striking at its legs, while Y/N aimed a charged shot at its chest.

But it wasn’t enough.

The Wander let out a piercing shriek, its distorted form splitting apart before reforming in an instant. A red WARNING ICON flashed across the screen.

[ENEMY ATTACK INCOMING – TAP TO COUNTER]

Sylus tapped—too slow.

The Wander struck, sending Nova flying against a metal wall. The impact was brutal—blood splattered against the surface as she collapsed lifelessly.

“NOVA!”

A slow-motion effect kicked in, the game forcing him to watch as Y/N turned to face him—wide-eyed, terrified—right before the creature’s claw skewered through their chest.

They choked, their mouth opening in shock, blood staining their uniform as the screen distorted violently, glitching out.

Game Over.

The words burned into the screen as Y/N’s voice weakly echoed, almost breaking the fourth wall.

“You… have to get stronger.”

The screen remained frozen on their lifeless expression, their dark eyes still locked onto his as if urging him forward.

Then—everything faded to black.

[Mission Failed.]

You are not strong enough to face this threat. Upgrade your team and return stronger.

A new progression screen appeared, displaying his stats, current abilities, and upgrade paths.

[Upgrade System Unlocked]

• Train Crew Members

• Enhance Combat Cards

• Unlock Higher-Level Abilities

Sylus exhaled, gripping his phone. His heart was pounding.

That had been brutal.

He hadn’t expected the game to push him this hard—not so soon. The way the death scene played out had felt too real—the blood splatters, the animation details, the way Y/N had looked at him even in death.

And if he wanted to protect them—to protect her—he needed to get stronger.

With renewed determination, he tapped into the Upgrade Menu, ready to change the outcome.

Sylus exhaled sharply, locking his phone and setting it down on the table beside him. His fingers still tingled from gripping the device too tightly, his heartbeat just a little too fast for something that was supposed to be just a game.

But that death scene… it had gotten to him.

He ran a hand through his hair, shaking his head. It’s just a game, he reminded himself, but even as he told himself that, he couldn’t shake the image of Y/N’s lifeless eyes staring at him.

Too real.

Way too real.

He sighed, rolling his shoulders and standing up. He needed a break.

There were things he had to do today—his own responsibilities, tasks that actually mattered in the real world. He couldn’t let himself get too immersed, no matter how gripping the game was.

Still, as he walked away from his phone, he already knew that the moment he had time again…

He was coming back.

Because Love and Deep Space had hooked him.

And he wasn’t going to stop until he changed that ending.

A/n: thanks for reading.

Tags:

@kaylauvu

@codedove

@crazy-ink-artist

@animegamerfox

#sylus x mc#lnds sylus#love and deepspace sylus#sylus x y/n#sylus fic#sylus x you#sylus x reader#lads sylus


. " ♜ " ♖ " ♜ " ♖ " ♜ " ♖ " ♜ " ♖ " .

about me c:

♠️

“He is half of my soul, as the poets say.” (The Song of Achilles by Madeline Miller)

my dni

my opinions on ai and creativity, as well as ai add*ction and ai’s impact on environmentalism (please read this before bashing me for using c.ai)

taken anons: 👽, 📀, 🐢, 🐾, 🌗

my tag masterlist

Basic Info:

name(s):

[ Paul, Atreus/Atre, Alex ]

pronouns:

[ he/they ]

here is a full list of my pronouns/identities

gender:

[ ftm 🏳️⚧️ ]

fluid between aboyic non-binary, femboy, and nameflux male

personality type:

INFP-T

my partner:

@pubbipawz Ilysm ❤️ 1+ years will turn into forever, ml 🥺

misceverse secondary gender:

dynamifluid between Nu ( socially submissive beta ) and Sigma ( socially dominant omega )

a compiled masterlist of info about the misceverse and how it both differs from and is similar to the omegaverse, alterhumanity/therianthropy, and gender identity

more info under the cut :3

MY C.AI ACCOUNT + AO3

I create original bots of characters from many fandoms and also write fanfic ( NOT AI GENERATED ) with my ocs + canon characters. My most common fandoms are Call of Duty and Arcane.

// TUMBLR VERSIONS OF MY FICS CAN BE FOUND AT THE BOTTOM OF THIS POST

my c.ai:

babyboyroach (@viktornation25) | character.ai

rules for requesting bots:

my ao3:

I struggle with obsessive-compulsive disorder ( OCD ) and anxiety. Sometimes my replies may be apathetic or late because of my low moods.

Please note that I will answer as soon as possible. Don’t be discouraged from messaging me!

I thrive on interaction and love to answer asks! 👏 // my request and ask inboxes are ALWAYS open <3

I am TAKEN.

Do not interact with me flirtatiously, even if joking. It will result in an instant block.

some more info

I am an agnostic theist.

DNI IF OVERLY RELIGIOUS PLS ( unless ur pagan )

I am also a practicing Hellenic polytheist and heathen. I mainly worship Ganymede and Loki.

Here is an original hymn I wrote for Ganymede inspired by "Dionysus" by Tomo.

🪐

MY HOBBIES

I'm a fantasy and science fiction writer with one finished book waiting to be published and another nearly completed. I write Call of Duty fanfiction with my ocs and I like omegaverse.

I’m open for Call of Duty roleplays at any time, just go to my ask box :)

I’m interested in ichthyology and freshwater biology with a focus on domesticated fish, as well as etymology, the study of the origin of words, and herbology.

Here is a list of some of my hyperfixations, as well as my top 20 fav shows and top 50 fav movies and books.

Here is a list of some lesser-known fandoms I’m in.

Here’s some music that I like

I love fishkeeping!

🦐

I own two ten-gallon live planted tanks with aquasoil substrate.

One has dwarf shrimp ( neocaridina ) and four kuhli loaches. The other hosts two baby Cory catfish and three neon tetras. I've had three bettas: a blue male halfmoon named Merlin, a white and gold halfmoon named William, and most recently, a koi plakat betta named Rudy.

. . . . .

My biggest hyperfixation is Greek Mythology!

My favorite myths are those of Ganymede, Cyparissus, Heracles and Hylas, the Mycenaean tragedies, and the Odyssey and Iliad <3 I also love EPIC: the Musical by Jorge Rivera-Herrans, but that is not what I use to learn the myths; I have read Homer’s source material.

— Paul/Atreus

↷ . ↷ . ↷ .

my call of duty fic series:

“Songbird on a Wall”

[ original male character and the rest of the 141, not a shipwork but does include ghostsoap ]

pt.1

pt.2

pt.3

pt.4

pt.5

pt.6

pt.7

pt.8

“Nothing Permanent But Change”

[ poly/pack 141 omegaverse + male oc ]

pt.1

pt.2

pt.3

pt.4

pt.5

pt.6

pt.7

pt.8

pt.9

“One K.I.A.”

#pinned post#personal#about myself#introducing myself#blog intro#introduction#call of duty#marvel#sherlock holmes#arcane league of legends#therian#furry community#sfw furry#get to know me#get to know the blogger#norse polytheism#hellenic polytheism#actually ocd#fic writer#fanfiction writer#pro endo#miscecanis#misceverse#pro mogai#pro liom#sfw agere#ao3 writer#shrimp keeping#fishkeeping


Science Fiction as a Reflection on Society - PLUTO & The Cycle of Hate

MAJOR SPOILER WARNING: You can read this before reading PLUTO, but it will spoil many major plot points!

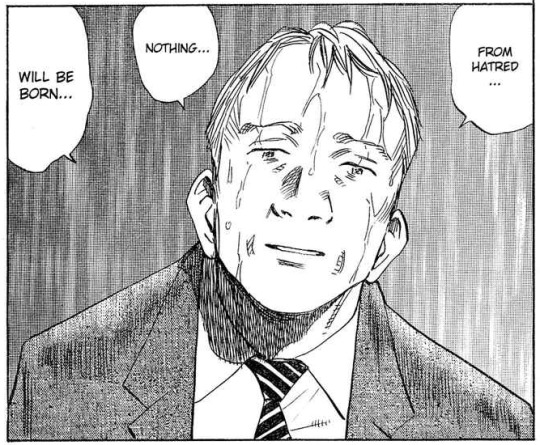

In 2015, I picked up a manga volume called PLUTO in a London bookshop. I had a burgeoning interest in AI and computer science at the time, and had read Naoki Urasawa's manga Monster many years prior. It seemed a perfect read. Little did I know, it would become my favourite manga.

As I read the first volume, I realised this wasn't just a simple Astro Boy adaptation. Like many of Urasawa's works, PLUTO was a layered story that took its source material and asked fundamental questions about its premise.

The more innocent veneer of the Astro Boy world was stripped away, and echoes of the Middle East (of Afghanistan, Iraq and Palestine) were transposed into the background of what was, on the surface, a simple detective story. The long memories and relentless logic of robots became a means by which conflict could be examined, but also a way to reveal the weaknesses in the non-empathetic nature of robotics and AI.

Instead of following the traditional manga and anime trope of defeating the strongest villain against the odds, it became a tragic yet hopeful story about the long-tail effects of trauma, and about how our memories of the past, remembered or misremembered, shape our present.

Those who cannot remember the past are condemned to repeat it

From the Soviet invasion of Afghanistan in the 1980s to modern-day US involvement in the Middle East, the trauma of conflict has had lasting impacts on both the invaded countries and those who invaded. Talented people who in peacetime could have done and produced great things were reduced to administering corrupt governments, fighting occupying forces and wasting their lives on a fractious peace built on subterfuge and realpolitik.

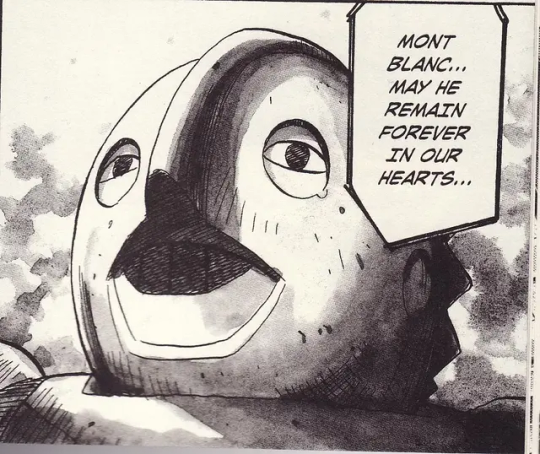

Mont Blanc, the robot killed at the opening of PLUTO, introduces this theme. A deeply environmentalist robot beloved by mountaineers and children alike, he was sent to fight in a war whose values conflicted with his own.

Despite his experiences, he returned to his old life, tending to and caring for the Swiss Alps and those who lived within them, but he was ultimately killed by a mysterious perpetrator.

This theme carries through all of the "greatest robots on Earth" targeted by PLUTO. Each is trying to make something of their life after the end of the conflict, and most have managed to shake off the negative experiences of their past while still being haunted by them.

During the gradual European decolonization of the Middle East, there were periods of relative calm and stability in the region. People were able to live affluent and prosperous lives without the threat of violence and revolution, and collaboration between US, European and USSR workers and the people who lived there allowed for the construction of infrastructure and advanced manufacturing facilities.

But what about those who can't deal with their past? What about those who are deeply damaged?

PLUTO - The Greatest Trauma on Earth

MAJOR PLOT SPOILER WARNING

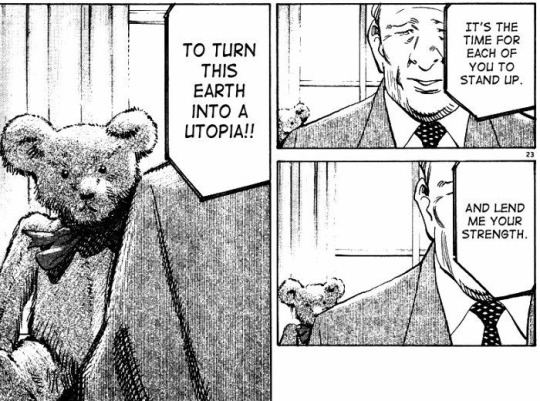

So what is PLUTO? Who is PLUTO? He is nothing more than a robot who loves flowers, created by the Persian scientist Dr Abullah. His love of flowers makes him want to plant them across the country, to fill it with beauty and richness. He has hopes and dreams of making a beautiful world that the people who live there can enjoy.

At least, that's what he used to be.

As the 39th Central Asian Conflict drags on, Dr Abullah becomes bitter and resentful about what has happened to his country, a once-proud nation reduced to rubble and ruin. Instead of encouraging his robotic son to plant flowers, he fills him with a vast hatred of those who have committed violence against his people.

The son who wanted nothing more than to make the world a better place is indoctrinated by his father into a being of pure rage, while remaining fully aware of his former self. The two sides of his personality rip and tear at each other in a self-contradictory nightmare.

Just as PLUTO is turned into a loathing monstrosity by his family, upbringing and situation, so too are those who live, fight and die in conflicts. Both the 2023 murder of innocent Israelis by Hamas and the subsequent murders of innocent Palestinians by Israel have no doubt radicalised a new generation of martyrs, while their leaders, those meant to be inspiring and running the country in their name, directly encourage mass murder on both sides.

In Afghanistan, the hopes of a democratic society were undermined by a corrupt, Western-imposed system, which collapsed into Taliban rule in 2021. Collaborators were killed or tortured. Women were once again forced into roles they had broken out of.

But this cuts both ways.

In Afghanistan, both the Soviet invasion of the 1980s and the US/Coalition invasion of the 2000s led to a surge in soldiers who came home from war angry, disillusioned and in mental and physical pain: sometimes from IED amputations, sometimes from PTSD and severe mental health issues.

Some survived the war, only to transfer their trauma to others at home or to take their own lives. A generation of young military lives was lost.

The Politics of Hate

Newton's third law states that every action has an equal and opposite reaction. Something similar often happens socially.

This is ever-present in PLUTO in the form of the Anti-Robot League. That robots have any rights at all is anathema to its members, who organise a conspiracy to tear robots out of the social fabric through targeted assassinations and hatred.

Through their actions, they aim to convert others to their cause and roll back decades of progress in the world of PLUTO.

This occurs in reality just as readily.

The September 11, 2001 attacks on the Twin Towers brought the American people together in sorrow, but they also helped enable war.

It didn't matter that 15 of the 19 hijackers were Saudi nationals. It was Afghanistan and Iraq that were targeted, on the most spurious of grounds. This was enabled in part by swathes of the public who wanted a form of revenge, but it was mainly driven by neo-cons in government.

In Gaza, the two sides of the coin are Hamas and its backers, and the hard-right Israeli government.

Hope

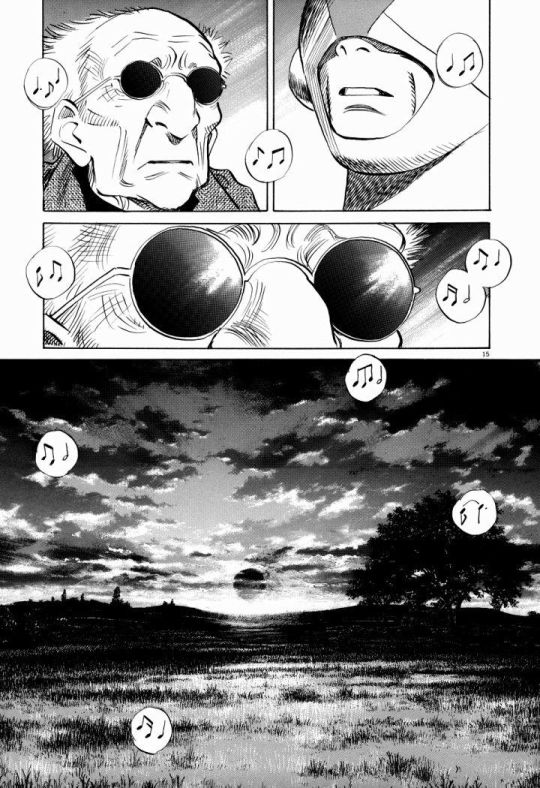

Despite the past, hope and recovery are still possible. This is what the story of North #2 and retired composer Paul Duncan shows us. As one of the earliest stories in the manga, it also reveals some of the work's lighter themes.

Paul Duncan's memories of his childhood, and of his mother's perceived abandonment of him to boarding school and an almost terminal illness, have coloured his entire life. When we meet him, he is a bitter old man suffering from writer's block who has taken on the ex-military robot North #2 as his butler.

But as the story reveals, Duncan's memories are coloured by his misconceptions of events. As North #2 learns to play the piano against Duncan's wishes, he reveals the notes of the song Duncan has been humming in his sleep, a song Duncan's mother used to sing to him as a child. It turns out that Duncan's mother didn't abandon him for a rich husband, but used that husband's wealth to pay for his expensive life-saving treatment and schooling.

It is only by dealing with the past, working through his trauma, that Duncan is able to heal in the present and move on with his life.

Conflict in Northern Ireland continued until as recently as the late 1990s, against a backdrop of centuries of conflict between British settlers and the native Irish. Most of Ireland won independence as the Irish Free State in 1922 (later the Republic of Ireland), but six northern counties remained under British control.

The decades between partition and the 1998 Good Friday Agreement were filled with terrorist action by the IRA against the British Army, and with the repression of Catholic Irish people through police and army brutality, gerrymandering, discriminatory hiring practices and other means.

This was only resolved through dialogue at the highest level between the British Government and Sinn Fein, the political wing of the IRA. The result was a peace process that has lasted decades and a generation who can now live, love and work with each other. It required hard decisions from both sides to put past differences and strong emotional ties behind them. The results are extraordinary, and offer hope for any conflict.

Conclusions