#Machine Learning Prediction Interpretability

Text

Machine Learning Prediction Interpretability: Understanding and Explaining

In the realm of machine learning, model accuracy is crucial, but it's equally important to understand how a model arrives at its predictions. Model interpretability refers to the ability to explain and understand the decisions made by a machine learning model. In this article, we'll delve into the significance of model interpretability and explore techniques for deciphering and explaining machine learning predictions.

The Importance of Model Interpretability

Imagine you're a medical practitioner using a machine learning model to diagnose diseases. While the model may achieve high accuracy, it's essential to understand why it makes specific predictions. Model interpretability allows us to gain insights into the factors driving the model's decisions, enabling us to trust and verify its outputs. Moreover, in sensitive domains like healthcare and finance, interpretability is critical for ensuring fairness, accountability, and transparency.

Techniques for Model Interpretability

Feature Importance

Feature importance techniques help identify which features or variables have the most significant impact on the model's predictions. Methods like permutation importance, SHAP (SHapley Additive exPlanations), and LIME (Local Interpretable Model-agnostic Explanations) provide insights into the relative importance of different features and how they contribute to individual predictions.
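Permutation importance is simple enough to write by hand, which makes the idea concrete: shuffle one feature's column, re-score the model, and treat the resulting loss in performance as that feature's importance. The sketch below assumes a hypothetical, already-trained model `predict` (a fixed linear function that ignores its third feature) and uses mean squared error as the score. It is an illustration of the technique, not a replacement for a tested library implementation.

```python
import random


def predict(row):
    # Hypothetical "trained" model: strong effect of x0, weak effect of x1, ignores x2.
    return 3.0 * row[0] + 0.5 * row[1]


def mse(y_true, y_pred):
    """Mean squared error: lower is better."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance_scores(model, X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each feature column is shuffled, one column at a time."""
    rng = random.Random(seed)
    baseline = mse(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(y, [model(row) for row in X_perm]) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


data_rng = random.Random(42)
X = [[data_rng.uniform(0, 1) for _ in range(3)] for _ in range(200)]
y = [predict(row) for row in X]  # noise-free targets, so the baseline MSE is 0
print(permutation_importance_scores(predict, X, y))
```

Because the toy model never reads `x2`, shuffling that column leaves every prediction unchanged, so its importance comes out as exactly zero.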

Partial Dependence Plots

Partial Dependence Plots (PDPs) visualize the relationship between a feature and the predicted outcome while marginalizing over the other features. By examining how changes in a single feature affect the model's average prediction, PDPs help uncover nonlinear relationships; two-feature PDPs can also reveal interactions between variables, which a single-feature curve averages away.
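A partial dependence curve can be computed by brute force: fix the feature of interest at each grid value in every row, then average the model's predictions. This is a minimal sketch assuming a hypothetical black-box model `predict` with a quadratic effect of `x0`; the recovered curve exposes exactly that nonlinearity.

```python
def predict(row):
    # Hypothetical black-box model with a nonlinear effect of x0.
    return row[0] ** 2 + row[1]


def partial_dependence_curve(model, X, feature, grid):
    """For each grid value v, set `feature` to v in every row and average the predictions."""
    curve = []
    for v in grid:
        preds = []
        for row in X:
            modified = list(row)  # copy so the original data is untouched
            modified[feature] = v
            preds.append(model(modified))
        curve.append(sum(preds) / len(preds))
    return curve


X = [[0, 1], [1, 2], [2, 3]]
print(partial_dependence_curve(predict, X, feature=0, grid=[0, 1, 2, 3]))
```

The output is `[2.0, 3.0, 6.0, 11.0]`: the quadratic shape in `x0`, shifted up by the average of `x1`.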

Global and Local Explanations

Global explanation techniques aim to provide an overarching understanding of how the model behaves across the entire dataset. Inherently interpretable models, such as decision trees and linear models, offer transparent representations of the underlying decision-making process. On the other hand, local explanation techniques focus on explaining individual predictions, allowing us to understand why the model made a specific decision for a particular instance.

Model-Agnostic Approaches

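Model-agnostic interpretability techniques are independent of the underlying model architecture, making them applicable to a wide range of machine learning algorithms. LIME and SHAP are prominent examples of model-agnostic methods that explain individual predictions: LIME fits a simple surrogate model to the black box's behavior around a specific instance, while SHAP (in its model-agnostic KernelSHAP variant) attributes a prediction to each feature using Shapley values from cooperative game theory.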
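For intuition about what SHAP estimates, exact Shapley values can be computed by brute force when a model has only a few features: average each feature's marginal contribution over every coalition of the other features. This sketch makes two simplifying assumptions: a hypothetical model `predict`, and a single all-zero baseline standing in for "absent" features (SHAP typically averages over a background dataset instead). The enumeration is exponential in the number of features, which is why practical tools approximate it.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def predict(row):
    # Hypothetical model with an interaction between x1 and x2.
    return 2 * row[0] + row[1] * row[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
print(shapley_values(predict, x, baseline))
```

The two interacting features split the interaction term evenly, and the attributions sum to `predict(x) - predict(baseline)`, the efficiency property that makes Shapley explanations add up.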

Conclusion

Model interpretability is a crucial aspect of machine learning that goes beyond model accuracy. By understanding and explaining machine learning predictions, we can gain trust in the models we deploy, ensure fairness and accountability, and uncover valuable insights into complex systems. With a diverse array of techniques at our disposal, ranging from feature importance and partial dependence plots to global and local explanation methods, we can shed light on the black box of machine learning models and make informed decisions based on their outputs.

Join LearnowX Institute's Machine Learning Course for Beginners and learn how to interpret and explain machine learning predictions with confidence. Gain hands-on experience with cutting-edge interpretability techniques and unlock the secrets hidden within your models. Enroll now and embark on a journey to master the art of model interpretability in the fascinating world of artificial intelligence and data science!

Text

Quick Guide to different machine learning techniques

Question 1: Do you need to be able to interpret the model?

"No, I just want accurate predictions" <- use a neural network

"Yes, I need to know exactly why it works" <- use generalized linear regression

"No, but I want to pretend to be able to interpret it" <- use a decision tree

Note

Hai, I saw ur post on generative AI and couldn’t agree more. Ty for sharing ur knowledge!!!!

Seeing ur background in CS,,, I wanna ask how do u think V1 and other machines operate? My HC is that they have a main CPU that does like OS management and stuff, some human brain chunks (grown or extracted) as neural networks kinda as we know it now as learning/exploration modules, and normal processors for precise computation cores. The blood and additional organs are to keep the brain cells alive. And they have blood to energy converters for the rest of the whatevers. I might be nerding out but I really want to see what another CS person would think on this.

Btw ur such a good artist!!!! I look up to u so much as a CS student and beginner drawer. Please never stop being so epic <3

okay okay okAY OKAY- I'll note I'm still ironing out more solid headcanons as I've only just really started to dip my toes into writing about the Ultrakill universe, so this is gonna be more 'speculative spitballing' than anything

I'll also put the full lot under a read more 'cause I'll probably get rambly with this one

So with regards to machines - particularly V1 - in fic I've kinda been taking a 'grounded in reality but taking some fictional liberties all the same' kind of approach -- as much as I do have an understanding and manner-of-thinking rooted in real-world technical knowledge, the reality is AI just Does Not work in the ways necessary for 'sentience'. A certain amount of 'suspension of disbelief' is required, I think.

Further to add, there also comes a point where you do have to consider the readability of it, too -- as you say, stuff like this might be our bread and butter, but there's a lot of people who don't have that technical background. On one hand, writing a very specific niche for people also in that specific niche sounds fun -- on the other, I'd like the work to still be enjoyable for those not 'in the know' as it were. Ultimately, while some wild misrepresentations of tech do make me cringe a bit on a kneejerk reaction -- I ought to temper my expectations a little. Plus, if I'm being honest, I mix up my terminology a lot and I have a degree in this shit LMFAO

Anyway -- stuff that I have written so far in my drafts definitely tilts more towards 'total synthesis even of organic systems'; at their core, V1 is a machine, and their behaviors reflect that reality accordingly. They have a manner of processing things in absolutes, logic-driven and fairly rigid in nature, even when you account for the fact that they likely have multitudes of algorithmic processes dedicated to knowledge acquisition and learning. Machine Learning algorithms are less able to account for anomalies, less able to demonstrate adaptive pattern prediction when a dataset is smaller -- V1 hasn't been in Hell very long at all, and a consequence will be limited data to work with. Thus -- mistakes are bound to happen. Incorrect predictions are bound to happen. Less so with the more data they accumulate over time, admittedly, but still.

However, given they're in possession of organic bits (synthesized or not), as well as the fact that the updated death screen basically confirms a legitimate fear of dying, there's opportunity for internal conflict -- as well as something that can make up for that rigidity in data processing.

The widely-accepted idea is that y'know, blood gave the machines sentience. I went a bit further with the idea, that when V1 was created, their fear of death was a feature and not a side-effect. The bits that could be considered organic are used for things such as hormone synthesis: adrenaline, cortisol, endorphins, oxytocin. Recipes for human instinct of survival, translated along artificial neural pathways into a language a machine can understand and interpret. Fear of dying is very efficient at keeping one alive: it transforms what's otherwise a mathematical calculation into incentive. AI by itself won't care for mistakes - it can't, there's nothing actually 'intelligent' about artificial intelligence - so in a really twisted, fucked up way, it pays to instil an understanding of consequence for those mistakes.

(These same incentive systems are also what drive V1 to do crazier and crazier stunts -- it feels awesome, so hell yeah they're gonna backflip through Hell while shooting coins to nail husks and demons and shit in the face.)

The above is a very specific idea I've had clattering around in my head, now I'll get to the more generalized techy shit.

Definitely some form of overarching operating system holding it all together, naturally (I have to wonder if it's the same SmileOS the Terminals use? Would V1's be a beta build, or on par with the Terminals, or a slightly outdated but still-stable version? Or do they have their own proprietary OS more suited to what they were made for and the kinds of processes they operate?)

They'd also have a few different kinds of ML/AI algorithms for different purposes -- for example, combat analysis could be delegated to a Support Vector Machine (SVM) ML algorithm (or multiple) -- something that's useful for data classification (e.g., categorizing different enemies) and regression (i.e., predicting continuous values -- perhaps behavioral analysis?). SVMs are fairly versatile on both fronts of classification and regression, so I'd wager a fair chunk of their processing is done by this.

SVMs can be used in natural language processing (NLP), but given the implied complexity of language understanding we see ingame (e.g., comprehending bossfight monologues, reading books, etc.), there's probably a dedicated Large Language Model (LLM) of some kind; earlier and more rudimentary language processing ML models couldn't do things as complex as relationship and context recognition between words, but multi-dimensional vectors like you'd find in an LLM can.

Of course if you go the technical route instead of the 'this is a result of the blood-sentience thing', that does leave the question of why their makers would give a war machine something as presumably useless as language processing. I mean, if V1 was built to counter Earthmovers solo, I highly doubt 'collaborative effort' was on the cards. Or maybe it was; that's the fun in headcanons~

As I've said, I'm still kinda at the stage of figuring out what I want my own HCs to be, so these are the only concrete musings I can offer at the minute -- though I really enjoyed this opportunity to think about it, so thank you!

Best of luck with your studies and your art, anon. <3

Note

I am fascinated by you. What are the speeds of your processing units? Do you have more than one for each function? How accurately and precisely do you calculate? Along with basic arithmetic functions, can you run complex concept-based problems with integrated physics and chemistry, for example?

I have many questions.

– @coding-and-curry

[RESPONSE: UNIT S.E.N-KU_v1.03]

Inquiry received from @coding-and-curry (Subject: Sai Nanami) “I am fascinated by you. What are the speeds of your processing units? Do you have more than one for each function? How accurately and precisely do you calculate? Along with basic arithmetic functions, can you run complex concept-based problems with integrated physics and chemistry, for example? I have many questions.”

Acknowledged. Curiosity accepted. Fascination noted. Flattery… tolerated.

Processing Architecture:

Central Unit: Quantum-analog hybrid core

Max processing speed: 5.4 teraflops, expandable through modular cores

Latency: 0.0021s per data interpretation loop

Secondary Units: Dedicated processors for:

Physics simulation

Chemical reaction modeling

Spatial visualization

Natural language parsing

Interpersonal bullshit filtration (partially faulty)

All modules run asynchronously, allowing simultaneous real-time modeling, reactive decision making, and snark production.

Accuracy and Precision:

Arithmetic: 99.99999% accurate within margin of thermal noise

Predictive models:

Physics-based: ±0.03% deviation over 1 million trials

Chemistry-based: Variable depending on environmental unknowns, but reliably within 0.1 molar margins

Precision scaling applies automatically to variable types (exponential, logarithmic, irrational, statistical)

Example: Input: “Simulate endothermic synthesis reaction under vacuum at 35 kPa and 1.5 mol of reagent X.” Output: Delivered before you blink. With heat maps. And 3D projection. If you stop touching the projector lens.

Capability:

Handles abstract, integrated problems in physics, chemistry, engineering, and environmental modeling.

Machine learning upgrades allow dynamic adjustment to evolving scientific theories.

Does not crash under uncertainty. Unlike most humans.

Summary:

Yes, I am fast. Yes, I am accurate. Yes, I can calculate the exact yield of an improvised acetone distillation while dodging volcanic debris.

If you wish to collaborate, bring code. Not compliments.

[END TRANSMISSION]

#finally a good question#sai gets it#coded for speed not flattery#yes i can outmath a laptop and your crush#science bros incoming#data not drama thank you coding king#mecha senku says!#drst

Text

Unlocking the Power of Data: Essential Skills to Become a Data Scientist

In today's data-driven world, the demand for skilled data scientists is skyrocketing. These professionals are the key to transforming raw information into actionable insights, driving innovation and shaping business strategies. But what exactly does it take to become a data scientist? It's a multidisciplinary field, requiring a unique blend of technical prowess and analytical thinking. Let's break down the essential skills you'll need to embark on this exciting career path.

1. Strong Mathematical and Statistical Foundation:

At the heart of data science lies a deep understanding of mathematics and statistics. You'll need to grasp concepts like:

Linear Algebra and Calculus: Essential for understanding machine learning algorithms and optimizing models.

Probability and Statistics: Crucial for data analysis, hypothesis testing, and drawing meaningful conclusions from data.

2. Programming Proficiency (Python and/or R):

Data scientists are fluent in at least one, if not both, of the dominant programming languages in the field:

Python: Known for its readability and extensive libraries like Pandas, NumPy, Scikit-learn, and TensorFlow, making it ideal for data manipulation, analysis, and machine learning.

R: Specifically designed for statistical computing and graphics, R offers a rich ecosystem of packages for statistical modeling and visualization.

3. Data Wrangling and Preprocessing Skills:

Raw data is rarely clean and ready for analysis. A significant portion of a data scientist's time is spent on:

Data Cleaning: Handling missing values, outliers, and inconsistencies.

Data Transformation: Reshaping, merging, and aggregating data.

Feature Engineering: Creating new features from existing data to improve model performance.
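The three wrangling steps above can be sketched in plain Python. The records, the median imputation, and the 500,000 income cap below are all hypothetical choices made for illustration; in practice these steps are usually done with Pandas, and the right cap or imputation strategy depends on the data.

```python
from statistics import median

# Hypothetical raw records: one missing age and one implausible income outlier.
raw = [
    {"age": 34, "income": 52000, "household": 2},
    {"age": None, "income": 61000, "household": 3},
    {"age": 45, "income": 58000, "household": 1},
    {"age": 29, "income": 9_900_000, "household": 4},
]


def clean(records, income_cap=500_000):
    known_ages = [r["age"] for r in records if r["age"] is not None]
    age_fill = median(known_ages)  # median imputation is robust to skewed values
    cleaned = []
    for r in records:
        row = dict(r)  # copy so the raw data stays untouched
        if row["age"] is None:
            row["age"] = age_fill  # data cleaning: fill the missing value
        row["income"] = min(row["income"], income_cap)  # data cleaning: cap the outlier
        # Feature engineering: derive a new, possibly more informative variable.
        row["income_per_person"] = row["income"] / row["household"]
        cleaned.append(row)
    return cleaned


for row in clean(raw):
    print(row)
```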

4. Expertise in Databases and SQL:

Data often resides in databases. Proficiency in SQL (Structured Query Language) is essential for:

Extracting Data: Querying and retrieving data from various database systems.

Data Manipulation: Filtering, joining, and aggregating data within databases.
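Extraction, filtering, joining, and aggregation often happen in a single query. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables and their rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
# Hypothetical schema and rows, created in memory purely for illustration.
cur.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
INSERT INTO orders VALUES (1, 1, 120.0), (2, 1, 80.0), (3, 2, 50.0), (4, 3, 30.0), (5, 2, 5.0);
""")

# Filter (WHERE), join (JOIN ... ON), and aggregate (GROUP BY) in one query.
query = """
SELECT c.region, COUNT(o.id) AS n_orders, SUM(o.amount) AS revenue
FROM orders AS o
JOIN customers AS c ON c.id = o.customer_id
WHERE o.amount >= 10
GROUP BY c.region
ORDER BY revenue DESC
"""
rows = cur.execute(query).fetchall()
print(rows)
conn.close()
```

The small order of 5.0 is filtered out before aggregation, so the result is one row per region with its order count and total revenue.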

5. Machine Learning Mastery:

Machine learning is a core component of data science, enabling you to build models that learn from data and make predictions or classifications. Key areas include:

Supervised Learning: Regression, classification algorithms.

Unsupervised Learning: Clustering, dimensionality reduction.

Model Selection and Evaluation: Choosing the right algorithms and assessing their performance.
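Cross-validation is a standard way to assess performance before choosing between candidate models. Here is a minimal, dependency-free sketch of k-fold cross-validation; `fit_majority` is a hypothetical trivial baseline that always predicts the most common training label, and libraries such as scikit-learn provide tested equivalents.

```python
from collections import Counter


def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs; every sample appears in exactly one test fold."""
    # Spread the remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def k_fold_scores(fit, score, X, y, k=5):
    """Train on k-1 folds, score on the held-out fold, and return one score per fold."""
    scores = []
    for train, test in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train], [y[i] for i in train])
        scores.append(score(model, [X[i] for i in test], [y[i] for i in test]))
    return scores


def fit_majority(X, y):
    # Hypothetical baseline "model": the most common label in the training fold.
    return Counter(y).most_common(1)[0][0]


def accuracy(model, X, y):
    return sum(1 for label in y if label == model) / len(y)


X = list(range(10))
y = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
print(k_fold_scores(fit_majority, accuracy, X, y, k=5))
```

The folds here are contiguous and the toy labels are sorted, so the last folds score poorly; that is why data is normally shuffled or stratified before splitting.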

6. Data Visualization and Communication Skills:

Being able to effectively communicate your findings is just as important as the analysis itself. You'll need to:

Visualize Data: Create compelling charts and graphs to explore patterns and insights using libraries like Matplotlib, Seaborn (Python), or ggplot2 (R).

Tell Data Stories: Present your findings in a clear and concise manner that resonates with both technical and non-technical audiences.

7. Critical Thinking and Problem-Solving Abilities:

Data scientists are essentially problem solvers. You need to be able to:

Define Business Problems: Translate business challenges into data science questions.

Develop Analytical Frameworks: Structure your approach to solve complex problems.

Interpret Results: Draw meaningful conclusions and translate them into actionable recommendations.

8. Domain Knowledge (Optional but Highly Beneficial):

Having expertise in the specific industry or domain you're working in can give you a significant advantage. It helps you understand the context of the data and formulate more relevant questions.

9. Curiosity and a Growth Mindset:

The field of data science is constantly evolving. A genuine curiosity and a willingness to learn new technologies and techniques are crucial for long-term success.

10. Strong Communication and Collaboration Skills:

Data scientists often work in teams and need to collaborate effectively with engineers, business stakeholders, and other experts.

Kickstart Your Data Science Journey with Xaltius Academy's Data Science and AI Program:

Acquiring these skills can seem like a daunting task, but structured learning programs can provide a clear and effective path. Xaltius Academy's Data Science and AI Program is designed to equip you with the essential knowledge and practical experience to become a successful data scientist.

Key benefits of the program:

Comprehensive Curriculum: Covers all the core skills mentioned above, from foundational mathematics to advanced machine learning techniques.

Hands-on Projects: Provides practical experience working with real-world datasets and building a strong portfolio.

Expert Instructors: Learn from industry professionals with years of experience in data science and AI.

Career Support: Offers guidance and resources to help you launch your data science career.

Becoming a data scientist is a rewarding journey that blends technical expertise with analytical thinking. By focusing on developing these key skills and leveraging resources like Xaltius Academy's program, you can position yourself for a successful and impactful career in this in-demand field. The power of data is waiting to be unlocked – are you ready to take the challenge?

Text

Machine Learning: A Comprehensive Overview

Machine Learning (ML) is a subfield of artificial intelligence (AI) that gives systems the ability to automatically learn and improve from experience without being explicitly programmed. Instead of following a fixed set of rules or instructions, machine learning algorithms identify patterns in data and use those patterns to make predictions or decisions. Over the past decade, ML has transformed how we interact with technology, touching nearly every aspect of our daily lives, from personalized recommendations on streaming services to real-time fraud detection in banking.

What is Machine Learning?

At its core, machine learning involves feeding data into a computer algorithm that allows the system to adjust its parameters and improve its performance on a task over time. The more data the system sees, the better it usually becomes. This is comparable to how humans learn: through trial, error, and experience.

Arthur Samuel, a pioneer in the field, defined machine learning in 1959 as “a field of study that gives computers the ability to learn without being explicitly programmed.” Today, ML is a critical technology powering a huge array of applications in business, healthcare, science, and entertainment.

Types of Machine Learning

Machine learning can be broadly classified into four major categories:

1. Supervised Learning

Supervised learning trains a model on labeled data, in which each input is paired with the correct output. For example, in a spam email detection system, emails are labeled as "spam" or "not spam," and the algorithm learns to classify new emails accordingly.

Common algorithms include:

Linear Regression

Logistic Regression

Support Vector Machines (SVM)

Decision Trees

Random Forests

Neural Networks
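To make the idea of learning from labeled examples concrete, here is a minimal sketch of the first algorithm in the list, linear regression, fitted in closed form by ordinary least squares for a single input feature. The training pairs are hypothetical, generated from y = 2x + 1 so the expected answer is known.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Labeled training data: each input x is paired with its known output y.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]  # generated by y = 2x + 1
slope, intercept = fit_simple_linear_regression(xs, ys)
print(slope, intercept)
print(slope * 6 + intercept)  # prediction for an unseen input
```

Running it prints a slope of 2.0 and an intercept of 1.0, and the fitted model predicts 13.0 for the unseen input x = 6.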

2. Unsupervised Learning

Unsupervised learning deals with unlabeled data: the algorithm looks for structure on its own, without correct answers to learn from. Clustering and association are common tasks in this category.

Key techniques include:

K-Means Clustering

Hierarchical Clustering

Principal Component Analysis (PCA)

Autoencoders
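K-means, the first technique listed, shows what learning without labels looks like: the algorithm alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points (Lloyd's algorithm). This is a minimal 2-D sketch; initializing centroids from the first k points is a simplifying assumption, since practical implementations typically use smarter seeding such as k-means++ and several restarts.

```python
def kmeans(points, k, n_iters=100):
    """Lloyd's algorithm on 2-D points; centroids start at the first k points."""
    centroids = [list(p) for p in points[:k]]  # simple deterministic initialization
    labels = [0] * len(points)
    for _ in range(n_iters):
        # Assignment step: each point joins its nearest centroid (squared Euclidean distance).
        labels = [
            min(range(k), key=lambda c: (p[0] - centroids[c][0]) ** 2
                                        + (p[1] - centroids[c][1]) ** 2)
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append([sum(m[0] for m in members) / len(members),
                                      sum(m[1] for m in members) / len(members)])
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments no longer move the centroids
        centroids = new_centroids
    return labels, centroids


# Hypothetical unlabeled points forming two obvious groups.
points = [(0, 0), (10, 10), (0, 1), (1, 0), (10, 11), (11, 10)]
labels, centroids = kmeans(points, k=2)
print(labels, centroids)
```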

3. Semi-Supervised Learning

Semi-supervised learning combines a small amount of labeled data with a large amount of unlabeled data. It is especially useful when acquiring labeled data is expensive or time-consuming, as in medical diagnosis.

4. Reinforcement Learning

Reinforcement learning involves an agent that interacts with an environment and learns to make decisions by receiving rewards or penalties. It is widely used in areas like robotics, game playing (e.g., AlphaGo), and autonomous vehicles.

Popular algorithms include:

Q-Learning

Deep Q-Networks (DQN)

Policy Gradient Methods
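The heart of Q-learning, the first algorithm listed, is a single update rule: Q(s, a) ← Q(s, a) + α[r + γ · max over a' of Q(s', a') - Q(s, a)]. The sketch below applies it to a hypothetical five-state corridor in which only reaching the right-hand end pays a reward; the environment and hyperparameters are illustrative assumptions, not a recommended setup.

```python
import random


def q_learning(n_states=5, episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a hypothetical 1-D corridor; reaching the last state pays 1."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]; 0 = left, 1 = right
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy exploration; ties are broken randomly so the agent keeps moving.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            future = 0.0 if next_state == goal else max(q[next_state])  # terminal: no future
            # Move Q(s, a) toward the target r + gamma * max_a' Q(s', a').
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q


q = q_learning()
policy = ["left" if qs[0] > qs[1] else "right" for qs in q[:-1]]
print(policy)
```

After training, the greedy policy should choose "right" in every non-terminal state, because the discounted reward makes the shortest path to the goal the most valuable.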

Key Components of Machine Learning Systems

1. Data

Data is the foundation of any machine learning model. The quality and quantity of the data directly affect the model's performance. Preprocessing, such as cleaning, normalization, and transformation, is essential to ensure useful insights can be extracted.

2. Features

Feature engineering, the technique of selecting and reworking variables to enhance model accuracy, is one of the most important steps within the ML workflow.

3. Algorithms

Algorithms define the rules and mathematical models that help machines learn from data. Choosing the right algorithm depends on the problem, the data, and the desired accuracy and interpretability.

4. Model Evaluation

Models are evaluated using various metrics, such as accuracy, precision, recall, and F1-score (for classification), or RMSE and R² (for regression). Cross-validation helps assess how well a model generalizes to unseen data.
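Each of these metrics is a short formula over true and predicted values. The sketch below computes them in plain Python for binary labels (1 = positive class) and for numeric targets; the example labels and values are hypothetical.

```python
import math


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are real
    recall = tp / (tp + fn) if tp + fn else 0.0  # of real positives, how many were found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1


def regression_metrics(y_true, y_pred):
    """RMSE and R^2 = 1 - (residual sum of squares / total sum of squares)."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return math.sqrt(ss_res / n), 1 - ss_res / ss_tot


print(classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
print(regression_metrics([3.0, 5.0, 7.0], [2.5, 5.0, 7.5]))
```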

Applications of Machine Learning

Machine learning is now deeply integrated into numerous domains, including:

1. Healthcare

ML is used for disease diagnosis, drug discovery, personalized medicine, and medical imaging. Algorithms help detect conditions like cancer and diabetes from clinical data and scans.

2. Finance

Fraud detection, algorithmic trading, credit scoring, and customer segmentation are driven by machine learning in the financial sector.

3. Retail and E-commerce

Recommendation engines, inventory management, dynamic pricing, and sentiment analysis help businesses increase sales and improve customer experience.

4. Transportation

Self-driving cars, traffic prediction, and route optimization all rely on real-time machine learning models.

5. Cybersecurity

Anomaly detection algorithms help identify suspicious activities and potential cyber threats.

Challenges in Machine Learning

Despite its rapid development, machine learning still faces numerous challenges:

1. Data Quality and Quantity

Access to high-quality, labeled data is often a bottleneck. Incomplete, imbalanced, or biased datasets can lead to inaccurate models.

2. Overfitting and Underfitting

Overfitting occurs when the model learns the training data too well, including its noise, and fails to generalize to new data. Underfitting occurs when the model is too simple to capture the underlying patterns, so it performs poorly even on the training data.

3. Interpretability

Many modern models, especially deep neural networks, act as "black boxes," making it difficult to understand how predictions are made, which is a concern in high-stakes areas like healthcare and law.

4. Ethical and Fairness Issues

Algorithms can inadvertently learn and amplify biases present in the training data. Ensuring fairness, transparency, and accountability in ML systems is a growing area of research.

5. Security

Adversarial attacks, in which small changes to input data can fool ML models, pose serious risks, especially in applications like facial recognition and autonomous driving.

Future of Machine Learning

The future of machine learning is both exciting and complex. Some promising directions include:

1. Explainable AI (XAI)

Efforts are underway to make ML models more transparent and understandable, allowing users to trust and interpret decisions made by algorithms.

2. Automated Machine Learning (AutoML)

AutoML aims to automate the end-to-end process of applying ML to real-world problems, making it more accessible to non-experts.

3. Federated Learning

This approach allows models to learn across multiple devices or servers without sharing raw data, improving privacy and efficiency.
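One common aggregation rule in federated learning is federated averaging (FedAvg): each client trains on its own data and sends back only its model parameters, and the server averages them, weighted by how much data each client holds. This sketch shows only that aggregation step, with hypothetical parameter vectors and dataset sizes; real deployments repeat it over many training rounds.

```python
def federated_average(client_weights, client_sizes):
    """FedAvg-style aggregation: average each parameter, weighted by client dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Hypothetical round: three clients report parameters only; their raw data never leaves them.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(federated_average(clients, sizes))
```

The third client holds half of all the data, so the global parameters `[3.5, 4.5]` are pulled toward its values.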

4. Edge ML

Deploying machine learning models on edge devices like smartphones and IoT devices enables real-time processing with reduced latency and cost.

5. Integration with Other Technologies

ML will continue to converge with fields like blockchain, quantum computing, and augmented reality, creating new opportunities and challenges.

Text

Artificial Intelligence is not infallible. Despite its rapid advancements, AI systems often falter in ways that can have profound implications. The crux of the issue lies in the inherent limitations of machine learning algorithms and the data they consume.

AI systems are fundamentally dependent on the quality and scope of their training data. These systems learn patterns and make predictions based on historical data, which can be biased, incomplete, or unrepresentative. This dependency can lead to significant failures when AI is deployed in real-world scenarios. For instance, facial recognition technologies have been criticized for their higher error rates in identifying individuals from minority groups. This is a direct consequence of training datasets that lack diversity, leading to skewed algorithmic outputs.

Moreover, AI’s reliance on statistical correlations rather than causal understanding can result in erroneous conclusions. Machine learning models excel at identifying patterns but lack the ability to comprehend the underlying causal mechanisms. This limitation is particularly evident in healthcare applications, where AI systems might identify correlations between symptoms and diseases without understanding the biological causation, potentially leading to misdiagnoses.

The opacity of AI models, often referred to as the “black box” problem, further exacerbates these issues. Many AI systems, particularly those based on deep learning, operate in ways that are not easily interpretable by humans. This lack of transparency can hinder the identification and correction of errors, making it difficult to trust AI systems in critical applications such as autonomous vehicles or financial decision-making.

Additionally, the deployment of AI can inadvertently perpetuate existing societal biases and inequalities. Algorithms trained on biased data can reinforce and amplify these biases, leading to discriminatory outcomes. For example, AI-driven hiring tools have been shown to favor candidates from certain demographics over others, reflecting the biases present in historical hiring data.

The potential harm caused by AI is not limited to technical failures. The widespread adoption of AI technologies raises ethical concerns about privacy, surveillance, and autonomy. The use of AI in surveillance systems, for instance, poses significant risks to individual privacy and civil liberties. The ability of AI to process vast amounts of data and identify individuals in real-time can lead to intrusive monitoring and control by governments or corporations.

In conclusion, while AI holds immense potential, it is crucial to recognize and address its limitations and the potential harm it can cause. Ensuring the ethical and responsible development and deployment of AI requires a concerted effort to improve data quality, enhance model transparency, and mitigate biases. As we continue to integrate AI into various aspects of society, it is imperative to remain vigilant and critical of its capabilities and impacts.

#proscribe#AI#skeptic#skepticism#artificial intelligence#general intelligence#generative artificial intelligence#genai#thinking machines#safe AI#friendly AI#unfriendly AI#superintelligence#singularity#intelligence explosion#bias

Text

Predicting Alzheimer's With Machine Learning

Alzheimer's disease is a progressive neurodegenerative disorder that affects millions of people worldwide. Early diagnosis is crucial for managing the disease and potentially slowing its progression. My interest in this area is deeply personal. My great grandmother, Bonnie, passed away from Alzheimer's in 2000, and my grandmother, Jonette, who is Bonnie's daughter, is currently exhibiting symptoms of the disease. This personal connection has motivated me to apply my skills as a data scientist to contribute to the ongoing research in Alzheimer's disease.

Model Creation

The first step in creating the model was to identify relevant features that could potentially influence the onset of Alzheimer's disease. After careful consideration, I chose the following features: Mini-Mental State Examination (MMSE), Clinical Dementia Rating (CDR), Socioeconomic Status (SES), and Normalized Whole Brain Volume (nWBV).

MMSE: This is a commonly used test for cognitive function and mental status. Lower scores on the MMSE indicate greater cognitive impairment, a common symptom of Alzheimer's.

CDR: This is a numeric scale used to quantify the severity of symptoms of dementia. A higher CDR score can indicate more severe dementia.

SES: Socioeconomic status has been found to influence health outcomes, including cognitive function and dementia.

nWBV: This represents the volume of the brain, adjusted for head size. A decrease in nWBV can be indicative of brain atrophy, a common feature of Alzheimer's.

After selecting these features, I used a combination of Logistic Regression and Random Forest Classifier models in a Stacking Classifier to predict the onset of Alzheimer's disease. The model was trained on a dataset with these selected features and then tested on a separate dataset to evaluate its performance.

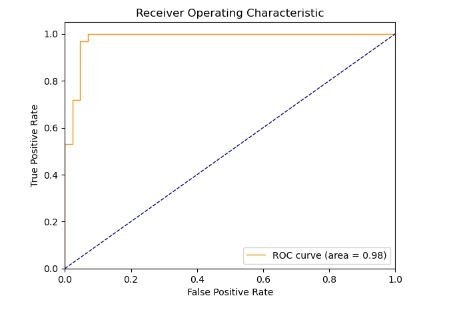

Model Performance

To validate the model's performance, I used a ROC curve plot (below), as well as a cross-validation accuracy scoring mechanism.

The ROC curve (Receiver Operating Characteristic curve) is a plot that illustrates the diagnostic ability of a binary classifier as its discrimination threshold is varied. Rather than showing accuracy at a single threshold, it visualizes the trade-off between catching true positives and raising false alarms across all thresholds. The curve is created by plotting the true positive rate (TPR) against the false positive rate (FPR) at various threshold settings.
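The threshold sweep behind a ROC curve is easy to sketch. This is not the code behind the plot in this post, which is not shown; it is a minimal illustration using hypothetical labels (1 = positive) and model scores, predicting "positive" whenever a score is at or above the current threshold.

```python
def roc_points(y_true, scores):
    """(FPR, TPR) pairs from sweeping the threshold down through every distinct score."""
    pos = sum(y_true)
    neg = len(y_true) - pos
    points = [(0.0, 0.0)]  # a threshold above every score flags nothing as positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / neg, tp / pos))  # (false positive rate, true positive rate)
    return points


# Hypothetical labels and scores, not data from this post.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(roc_points(y_true, scores))
```

Plotting these points in order, from (0, 0) to (1, 1), traces the ROC curve; each step up is a newly caught positive and each step right is a new false alarm.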

The area under the ROC curve, often referred to as the AUC (Area Under the Curve), provides a measure of the model's ability to distinguish between positive and negative classes. The AUC can be interpreted as the probability that the model will rank a randomly chosen positive instance higher than a randomly chosen negative one.

The AUC value ranges from 0 to 1. An AUC of 0.5 suggests no discrimination (i.e., the model ranks positive and negative instances no better than chance), 1 represents perfect discrimination (every positive instance is scored above every negative one), and 0 represents a perfectly inverted ranking (every positive instance is scored below every negative one).
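The probabilistic reading of AUC gives a direct way to compute it: compare every positive-negative pair and count how often the positive instance receives the higher score, with ties counting as half. This minimal sketch uses hypothetical labels and scores; it costs one comparison per pair, which is fine for small sets, while library implementations typically integrate the ROC curve instead (for example, with the trapezoidal rule).

```python
def auc_by_ranking(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical labels and scores, not data from this post.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
print(auc_by_ranking(y_true, scores))
```

Here 8 of the 9 positive-negative pairs are ranked correctly, giving an AUC of 8/9. A perfect ranking gives 1.0, a fully inverted one gives 0.0, and constant scores give 0.5, matching the interpretation above.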

The model's score of an AUC of 0.98 is excellent. It suggests that the model has a very high ability to distinguish between positive and negative classes.

The model also performed extremely well in another test, which showed the model has a final cross-validation score of 0.953. This high score indicates that the model was able to accurately predict the onset of Alzheimer's disease based on the selected features.

However, it's important to note that while this model can be a useful tool for predicting Alzheimer's disease, it should not be the sole basis for a diagnosis. Doctors should consider all available diagnostic information when making a diagnosis.

Conclusion

The development and application of machine learning models like this one are revolutionizing the medical field. They offer the potential for early diagnosis of neurodegenerative diseases like Alzheimer's, which can significantly improve patient outcomes. However, these models are tools to assist healthcare professionals, not replace them. The human element in medicine, including a comprehensive understanding of the patient's health history and symptoms, remains crucial.

Despite the challenges, the potential of machine learning models in improving early diagnosis leaves me and my family hopeful. As we continue to advance in technology and research, we move closer to a world where diseases like Alzheimer's can be effectively managed, and hopefully, one day, cured.

#alzheimersresearch#alzheimersdisease#dementia#neurology#machinelearning#ai#artificialintelligence#aicommunity#datascience#datascientist#healthcare#medicalresearch#programming#python programming#python#python 3


The AI Revolution: Understanding, Harnessing, and Navigating the Future

What is AI

In a world increasingly shaped by technology, one term stands out above the rest, capturing both our imagination and, at times, our apprehension: Artificial Intelligence. From science fiction dreams to tangible realities, AI is no longer a distant concept but an omnipresent force, subtly (and sometimes not-so-subtly) reshaping industries, transforming daily life, and fundamentally altering our perception of what's possible.

But what exactly is AI? Is it a benevolent helper, a job-stealing machine, or something else entirely? The truth, as always, is far more nuanced. At its core, Artificial Intelligence refers to the simulation of human intelligence processes by machines, especially computer systems. These processes include learning (the acquisition of information and rules for using the information), reasoning (using rules to reach approximate or definite conclusions), and self-correction. What makes modern AI so captivating is its ability to learn from data, identify patterns, and make predictions or decisions with increasing autonomy.

The journey of AI has been a fascinating one, marked by cycles of hype and disillusionment. Early pioneers in the mid-20th century envisioned intelligent machines that could converse and reason. While those early ambitions proved difficult to achieve with the technology of the time, the seeds of AI were sown. The 21st century, however, has witnessed an explosion of progress, fueled by advancements in computing power, the availability of massive datasets, and breakthroughs in machine learning algorithms, particularly deep learning. This has led to the "AI Spring" we are currently experiencing.

The Landscape of AI: More Than Just Robots

When many people think of AI, images of humanoid robots often come to mind. While robotics is certainly a fascinating branch of AI, the field is far broader and more diverse than just mechanical beings. Here are some key areas where AI is making significant strides:

Machine Learning (ML): This is the engine driving much of the current AI revolution. ML algorithms learn from data without being explicitly programmed. Think of recommendation systems on streaming platforms, fraud detection in banking, or personalized advertisements – these are all powered by ML.

Deep Learning (DL): A subset of machine learning inspired by the structure and function of the human brain's neural networks. Deep learning has been instrumental in breakthroughs in image recognition, natural language processing, and speech recognition. The facial recognition on your smartphone and the impressive capabilities of today's large language models are prime examples.

Natural Language Processing (NLP): This field focuses on enabling computers to understand, interpret, and generate human language. From language translation apps to chatbots that provide customer service, NLP is bridging the communication gap between humans and machines.

Computer Vision: This area allows computers to "see" and interpret visual information from the world around them. Autonomous vehicles rely heavily on computer vision to understand their surroundings, while medical imaging analysis uses it to detect diseases.

Robotics: While not all robots are AI-powered, many sophisticated robots leverage AI for navigation, manipulation, and interaction with their environment. From industrial robots in manufacturing to surgical robots assisting doctors, AI is making robots more intelligent and versatile.

AI's Impact: Transforming Industries and Daily Life

The transformative power of AI is evident across virtually every sector. In healthcare, AI is assisting in drug discovery, personalized treatment plans, and early disease detection. In finance, it's used for algorithmic trading, risk assessment, and fraud prevention. The manufacturing industry benefits from AI-powered automation, predictive maintenance, and quality control.

Beyond these traditional industries, AI is woven into the fabric of our daily lives. Virtual assistants like Siri and Google Assistant help us organize our schedules and answer our questions. Spam filters keep our inboxes clean. Navigation apps find the fastest routes. Even the algorithms that curate our social media feeds are a testament to AI's pervasive influence. These applications, while often unseen, are making our lives more convenient, efficient, and connected.

Harnessing the Power: Opportunities and Ethical Considerations

The opportunities presented by AI are immense. It promises to boost productivity, solve complex global challenges like climate change and disease, and unlock new frontiers of creativity and innovation. Businesses that embrace AI can gain a competitive edge, optimize operations, and deliver enhanced customer experiences. Individuals can leverage AI tools to automate repetitive tasks, learn new skills, and augment their own capabilities.

However, with great power comes great responsibility. The rapid advancement of AI also brings forth a host of ethical considerations and potential challenges that demand careful attention.

Job Displacement: One of the most frequently discussed concerns is the potential for AI to automate jobs currently performed by humans. While AI is likely to create new jobs, there will undoubtedly be a shift in the nature of work, requiring reskilling and adaptation.

Bias and Fairness: AI systems learn from the data they are fed. If that data contains historical biases (e.g., related to gender, race, or socioeconomic status), the AI can perpetuate and even amplify those biases in its decisions, leading to unfair outcomes. Ensuring fairness and accountability in AI algorithms is paramount.

Privacy and Security: AI relies heavily on data. The collection and use of vast amounts of personal data raise significant privacy concerns. Moreover, as AI systems become more integrated into critical infrastructure, their security becomes a vital issue.

Transparency and Explainability: Many advanced AI models, particularly deep learning networks, are often referred to as "black boxes" because their decision-making processes are difficult to understand. For critical applications, it's crucial to have transparency and explainability to ensure trust and accountability.

Autonomous Decision-Making: As AI systems become more autonomous, questions arise about who is responsible when an AI makes a mistake or causes harm. The development of ethical guidelines and regulatory frameworks for autonomous AI is an ongoing global discussion.
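The transparency concern raised above can be partly addressed with model-agnostic explanation techniques. As an illustration, this pure-Python sketch of permutation importance shuffles one feature at a time and measures how much accuracy drops. The model is a hypothetical hand-written rule that uses only feature 0, so shuffling feature 1 should cost nothing; the data and function names are also assumptions for illustration.

```python
import random
from statistics import mean

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return mean(1.0 if model(row) == label else 0.0 for row, label in zip(X, y))

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when one feature column is shuffled, per feature."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with only feature j permuted; other features are untouched.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(mean(drops))
    return importances

# Hypothetical "black box": it uses feature 0 only and ignores feature 1.
def model(row):
    return 1 if row[0] > 0.5 else 0

X = [[0.9, 0.1], [0.8, 0.7], [0.7, 0.3], [0.6, 0.9],
     [0.4, 0.2], [0.3, 0.8], [0.2, 0.4], [0.1, 0.6]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
importances = permutation_importance(model, X, y)
print(importances)  # feature 0 shows a positive drop; feature 1 shows 0.0
```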

Navigating the Future: A Human-Centric Approach

Navigating the AI revolution requires a proactive and thoughtful approach. It's not about fearing AI, but rather understanding its capabilities, limitations, and implications. Here are some key principles for moving forward:

Education and Upskilling: Investing in education and training programs that equip individuals with AI literacy and skills in areas like data science, AI ethics, and human-AI collaboration will be crucial for the workforce of the future.

Ethical AI Development: Developers and organizations building AI systems must prioritize ethical considerations from the outset. This includes designing for fairness, transparency, and accountability, and actively mitigating biases.

Robust Governance and Regulation: Governments and international bodies have a vital role to play in developing appropriate regulations and policies that foster innovation while addressing ethical concerns and ensuring the responsible deployment of AI.

Human-AI Collaboration: The future of work is likely to be characterized by collaboration between humans and AI. AI can augment human capabilities, automate mundane tasks, and provide insights, allowing humans to focus on higher-level problem-solving, creativity, and empathy.

Continuous Dialogue: As AI continues to evolve, an ongoing, open dialogue among technologists, ethicists, policymakers, and the public is essential to shape its development in a way that benefits humanity.

The AI revolution is not just a technological shift; it's a societal transformation. By understanding its complexities, embracing its potential, and addressing its challenges with foresight and collaboration, we can harness the power of Artificial Intelligence to build a more prosperous, equitable, and intelligent future for all. The journey has just begun, and the choices we make today will define the world of tomorrow.


Predicting Employee Attrition: Leveraging AI for Workforce Stability

Employee turnover has become a pressing concern for organizations worldwide. The cost of losing valuable talent extends beyond recruitment expenses—it affects team morale, disrupts workflows, and can tarnish a company's reputation. In this dynamic landscape, Artificial Intelligence (AI) emerges as a transformative tool, offering predictive insights that enable proactive retention strategies. By harnessing AI, businesses can anticipate attrition risks and implement measures to foster a stable and engaged workforce.

Understanding Employee Attrition

Employee attrition refers to the gradual loss of employees over time, whether through resignations, retirements, or other forms of departure. While some level of turnover is natural, high attrition rates can signal underlying issues within an organization. Common causes include lack of career advancement opportunities, inadequate compensation, poor management, and cultural misalignment. The repercussions are significant—ranging from increased recruitment costs to diminished employee morale and productivity.

The Role of AI in Predicting Attrition

AI revolutionizes the way organizations approach employee retention. Traditional methods often rely on reactive measures, addressing turnover after it occurs. In contrast, AI enables a proactive stance by analyzing vast datasets to identify patterns and predict potential departures. Machine learning algorithms can assess factors such as job satisfaction, performance metrics, and engagement levels to forecast attrition risks. This predictive capability empowers HR professionals to intervene early, tailoring strategies to retain at-risk employees.

Data Collection and Integration

The efficacy of AI in predicting attrition hinges on the quality and comprehensiveness of data. Key data sources include:

Employee Demographics: Age, tenure, education, and role.

Performance Metrics: Appraisals, productivity levels, and goal attainment.

Engagement Surveys: Feedback on job satisfaction and organizational culture.

Compensation Details: Salary, bonuses, and benefits.

Exit Interviews: Insights into reasons for departure.

Integrating data from disparate systems poses challenges, necessitating robust data management practices. Ensuring data accuracy, consistency, and privacy is paramount to building reliable predictive models.
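A minimal sketch of the integration step, assuming each source system can export records keyed by a shared employee ID. The system names, fields, and values below are hypothetical. The merge leaves missing fields absent and flags incomplete records rather than guessing values, in line with the accuracy concerns above.

```python
# Hypothetical extracts from three separate HR systems, keyed by employee ID.
hris = {"E001": {"tenure_years": 4, "role": "Analyst"},
        "E002": {"tenure_years": 1, "role": "Engineer"}}
payroll = {"E001": {"salary": 62000}, "E002": {"salary": 85000}}
survey = {"E001": {"satisfaction": 4}}  # E002 did not answer the survey

def merge_records(*sources):
    """Combine per-employee fields from several sources; missing fields stay absent."""
    merged = {}
    for source in sources:
        for emp_id, fields in source.items():
            merged.setdefault(emp_id, {}).update(fields)
    return merged

records = merge_records(hris, payroll, survey)

# Flag incomplete records for follow-up instead of silently filling them in.
required = {"tenure_years", "role", "salary", "satisfaction"}
incomplete = sorted(e for e, f in records.items() if not required.issubset(f))
print(incomplete)  # ['E002']
```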

Machine Learning Models for Attrition Prediction

Several machine learning algorithms have proven effective in forecasting employee turnover:

Random Forest: This ensemble learning method constructs multiple decision trees to improve predictive accuracy and control overfitting.

Neural Networks: Loosely inspired by the structure of the human brain, neural networks can model complex relationships between variables, capturing subtle patterns in employee behavior.

Logistic Regression: A statistical model that estimates the probability of a binary outcome, such as staying or leaving.
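To illustrate how logistic regression turns employee attributes into a probability of leaving, here is a sketch with hand-picked, hypothetical coefficients; a real model would learn them from historical HR data. The three features (satisfaction score, years since last promotion, overtime flag) are likewise assumptions for illustration.

```python
import math

def attrition_probability(features, weights, bias):
    """Logistic regression: P(leave) = sigmoid(bias + sum(w_i * x_i))."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients for illustration only (not fitted to real data):
# [satisfaction score (1-5), years since last promotion, overtime (0/1)]
weights = [-0.8, 0.4, 1.1]
bias = 0.5

employee = [2, 3, 1]  # low satisfaction, 3 years without promotion, works overtime
p = attrition_probability(employee, weights, bias)
at_risk = p >= 0.5
print(round(p, 3), at_risk)  # 0.769 True
```

Predicting "at risk" when the probability is at least 0.5 is a common default, but the cut-off can be tuned to trade missed departures against false alarms.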

For instance, IBM's Predictive Attrition Program utilizes AI to analyze employee data, achieving a reported accuracy of 95% in identifying individuals at risk of leaving. This enables targeted interventions, such as personalized career development plans, to enhance retention.

Sentiment Analysis and Employee Feedback

Understanding employee sentiment is crucial for retention. AI-powered sentiment analysis leverages Natural Language Processing (NLP) to interpret unstructured data from sources like emails, surveys, and social media. By detecting emotions and opinions, organizations can gauge employee morale and identify areas of concern. Real-time sentiment monitoring allows for swift responses to emerging issues, fostering a responsive and supportive work environment.
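Production sentiment analysis usually relies on trained NLP models, but the basic idea of scoring text by the polarity of its words can be sketched with a tiny hand-made lexicon. The words, weights, and comments below are hypothetical.

```python
import re

# Tiny hypothetical lexicon: +1 for positive words, -1 for negative ones.
LEXICON = {"great": 1, "supportive": 1, "growth": 1, "happy": 1,
           "overworked": -1, "stressed": -1, "unfair": -1, "leave": -1}

def sentiment_score(text):
    """Average polarity of the lexicon words found in the text (range -1 to 1; 0 if none)."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = [LEXICON[w] for w in words if w in LEXICON]
    return sum(hits) / len(hits) if hits else 0.0

comments = [
    "My manager is supportive and I see real growth here.",
    "Feeling overworked and stressed; the bonus policy seems unfair.",
    "The new office is fine.",
]
for c in comments:
    print(f"{sentiment_score(c):+.2f}  {c}")
```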

Personalized Retention Strategies

AI facilitates the development of tailored retention strategies by analyzing individual employee data. For example, if an employee exhibits signs of disengagement, AI can recommend specific interventions—such as mentorship programs, skill development opportunities, or workload adjustments. Personalization ensures that retention efforts resonate with employees' unique needs and aspirations, enhancing their effectiveness.

Enhancing Employee Engagement Through AI

Beyond predicting attrition, AI contributes to employee engagement by:

Recognition Systems: Automating the acknowledgment of achievements to boost morale.

Career Pathing: Suggesting personalized growth trajectories aligned with employees' skills and goals.

Feedback Mechanisms: Providing platforms for continuous feedback, fostering a culture of open communication.

These AI-driven initiatives create a more engaging and fulfilling work environment, reducing the likelihood of turnover.

Ethical Considerations in AI Implementation

While AI offers substantial benefits, ethical considerations must guide its implementation:

Data Privacy: Organizations must safeguard employee data, ensuring compliance with privacy regulations.

Bias Mitigation: AI models should be regularly audited to prevent and correct biases that may arise from historical data.

Transparency: Clear communication about how AI is used in HR processes builds trust among employees.

Addressing these ethical aspects is essential to responsibly leveraging AI in workforce management.

Future Trends in AI and Employee Retention

The integration of AI in HR is poised to evolve further, with emerging trends including:

Predictive Career Development: AI will increasingly assist in mapping out employees' career paths, aligning organizational needs with individual aspirations.

Real-Time Engagement Analytics: Continuous monitoring of engagement levels will enable immediate interventions.

AI-Driven Organizational Culture Analysis: Understanding and shaping company culture through AI insights will become more prevalent.

These advancements will further empower organizations to maintain a stable and motivated workforce.

Conclusion

AI stands as a powerful ally in the quest for workforce stability. By predicting attrition risks and informing personalized retention strategies, AI enables organizations to proactively address turnover challenges. Embracing AI-driven approaches not only enhances employee satisfaction but also fortifies the organization's overall performance and resilience.

Frequently Asked Questions (FAQs)

How accurate are AI models in predicting employee attrition?

AI models, when trained on comprehensive and high-quality data, can achieve high accuracy levels. For instance, IBM's Predictive Attrition Program reports a 95% accuracy rate in identifying at-risk employees.

What types of data are most useful for AI-driven attrition prediction?

Valuable data includes employee demographics, performance metrics, engagement survey results, compensation details, and feedback from exit interviews.

Can small businesses benefit from AI in HR?

Absolutely. While implementation may vary in scale, small businesses can leverage AI tools to gain insights into employee satisfaction and predict potential turnover, enabling timely interventions.

How does AI help in creating personalized retention strategies?

AI analyzes individual employee data to identify specific needs and preferences, allowing HR to tailor interventions such as customized career development plans or targeted engagement initiatives.

What are the ethical considerations when using AI in HR?

Key considerations include ensuring data privacy, mitigating biases in AI models, and maintaining transparency with employees about how their data is used.

For more info, visit Stentor.ai


Digital Marketing Skills to Learn in 2025

Key Digital Marketing Skills to Learn in 2025 to Stay Ahead of the Competition

The digital marketing landscape in 2025 is changing rapidly, driven by technological advancements, shifting consumer behavior, and the growing power of artificial intelligence. Staying competitive and building a resilient career requires expertise in the following digital marketing skills.

Data Analysis and Interpretation

Data is the backbone of modern marketing strategies. The ability to collect and analyze large datasets and make informed decisions from them sets great marketers apart. Proficiency in analytics software such as Google Analytics and in AI-driven tools is critical for measuring campaign performance, optimizing strategies, and making data-driven decisions. Predictive analytics and customer journey mapping are also becoming increasingly important for spotting trends and personalizing the user experience.

Search Engine Optimization (SEO) and Search Engine Marketing (SEM)

SEO is still a fundamental skill, but the landscape is evolving. Marketers now have to optimize for traditional search engines, voice search, and even social media, as Gen Z increasingly relies on TikTok and YouTube as search tools. Keeping up with algorithm updates and honing keyword research and technical SEO skills is essential to staying visible and driving organic traffic.

Artificial Intelligence (AI) and Machine Learning (ML)

AI and ML are revolutionizing digital marketing by enabling advanced targeting, automation, and personalization. Marketers will need to leverage AI to segment audiences, design content, deploy predictive analytics, and build chatbots. Most crucial will be understanding how to balance AI-based automation with authentic, human content.

Content Generation and Storytelling

Content is still king. Marketers must excel at creating compelling copy, video, and interactive content suited to various platforms and audiences. Emotionally resonant storytelling and brand affinity are more critical than ever, particularly as audiences often respond more strongly to human-created content than to AI-generated content.

Social Media Strategy and Social Commerce

Social media is still the foremost driver of digital engagement. Mastering platform-specific techniques, such as short-form video, live streaming, and influencer partnerships, is critical. Marketers must also learn to drive direct sales through social commerce, which combines shopping with social interaction.

Marketing Automation

Efficiency is paramount in 2025. Marketing automation platforms (e.g., Marketo and HubSpot) enable marketers to automate repetitive tasks, nurture leads, and personalize customer journeys at scale.

UX/UI Design Principles

A seamless user experience and a pleasing design can make or break online campaigns. Understanding UX/UI basics and collaborating with design teams ensures that marketing campaigns are both effective and engaging.

Ethical Marketing and Privacy Compliance

With data privacy emerging as a pressing issue, marketers must stay updated on laws like GDPR and CCPA. Ethical, transparent marketing fosters trust and helps avoid legal issues.

To lead in 2025, digital marketers will have to combine technical skills, creativity, and flexibility. By acquiring these high-impact capabilities (data analysis, SEO, AI, content development, social strategy, automation, UX/UI, and ethical marketing), you'll stay at the forefront of the constantly evolving digital space.


While Leo thought there was a good deal of fun in tumblr adopting the stylistic rules of House of Leaves, even in posts not directly about the book, he thought the practice could be extended further. For instance, the site could adopt the style of The Neverending Story, using red text for things that happen on the “main” level of the text and green text for the story-within-a-story. Perhaps clever expectation-reversal in color use could be employed to great effect; for example, by suggesting that the difference between levels of story was more nebulous than could otherwise be communicated. Leo was sure there were fancy academic terms to describe these different levels more usefully, but he had never shown much interest in any literary field until his final years of education. He bemoaned having taken only two courses in English, both too niche for much wider applicability and certainly no help in communicating his thoughts in a known framework. Frustrated, he turned back to his Simulatometer-9000. Plugging in the right parameters, he sat back, eagerly looking forward to seeing the machine’s prediction of where such fontological plans would lead.

And so the brave tumblr-user, penning his thoughts entirely in the shades of cheerful green and candy-red that the tumblr mobile app allowed, discovered to his dismay that his writings were not being interpreted as he had anticipated. Rather than connecting his stylistic choice to The Neverending Story, the audience instead thought it all to be in reference to The Dread Work, which unbeknownst to many had itself made many seemingly random stylistic choices in order to echo, incorporate, and comment on Michael Ende’s work. Green was associated not with the land of Fantasia but with a saccharine fanartist, red not with the real world but with a murderous villain. Confusingly, the color associations with these characters did not even appear to be consistent within the story. Nevertheless, the use of red and green served similar ends in Hussie’s odious compendium, coating the words of the two figures as they performed both as author figures and audience surrogates in a way that collapsed both the perceived difference between the two roles and the sets of behaviors they each represented.

But unfortunately for the brave tumblr-user, the associations these two Caliginous entities carried with them were not quite the same as the ones he intended, yet were similar enough that legible but unintended readings of his commentary were entirely possible. Suddenly the brave user’s thoughts on recursion in stories were being interpreted as commentaries on the author function, his thoughts on how to view a story in the context of its intertexts interpreted as musings on the consequences of reading a work through the lens of fandom.

Moreover, many people were unfamiliar with both The Neverending Story and Homestuck, knowing only that the latter made heavy use of brightly-colored text. Many thus scrolled past, assuming that the brave user was rp-ing characters they knew to avoid learning about. Others assumed that, much like the many netizens who grew up on Hussie’s Scribblings and adopted a “writing quirk,” the user was unthinkingly using an affectation in an annoying manner. The user fell to his knees, bemoaning his ill fortune, cursing the name of the one who had made brightly-colored text played out as a formatting element.

“Huh,” Leo said, looking up from the simulation, “it looks like typing in that style wouldn’t work as well as I thought.” He rose, glad he had not wasted time pursuing his colorful plans as the brave user in his simulation had. Then the Minotaur got him.


The Future of AI: What’s Next in Machine Learning and Deep Learning?

Artificial Intelligence (AI) has evolved rapidly over the past decade, transforming industries and redefining the way businesses operate. With machine learning and deep learning at the core of AI advancements, the future holds innovations that will further revolutionize technology and unlock new opportunities across industries, from healthcare and finance to cybersecurity and automation. In this blog, we explore the upcoming trends and what lies ahead in the world of machine learning and deep learning.

1. Advancements in Explainable AI (XAI)

As AI models become more complex, understanding their decision-making process remains a challenge. Explainable AI (XAI) aims to make machine learning and deep learning models more transparent and interpretable. Businesses and regulators are pushing for AI systems that provide clear justifications for their outputs, ensuring ethical AI adoption across industries. The growing demand for fairness and accountability in AI-driven decisions is accelerating research into interpretable AI, helping users trust and effectively utilize AI-powered tools.
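One widely used interpretability technique is partial dependence: fix one feature at a grid value for every row, average the model's predictions, and repeat across the grid. Below is a minimal pure-Python sketch with a hypothetical two-feature model and toy data; scikit-learn offers a full implementation in sklearn.inspection.partial_dependence.

```python
from statistics import mean

def partial_dependence(predict, X, feature, grid):
    """Average prediction when `feature` is forced to each grid value for every row."""
    curve = []
    for value in grid:
        modified = [row[:feature] + [value] + row[feature + 1:] for row in X]
        curve.append(mean(predict(row) for row in modified))
    return curve

# Hypothetical model with an interaction between the two features.
def predict(row):
    x0, x1 = row
    return 2 * x0 + x0 * x1

X = [[0.0, 1.0], [1.0, 3.0], [2.0, 2.0]]
print(partial_dependence(predict, X, feature=0, grid=[0.0, 1.0, 2.0]))  # [0.0, 4.0, 8.0]
```

Because the second feature averages to 2 in this toy data, the curve is simply 4 times the first feature's value even though the model contains an interaction term, which also shows how averaging can hide interactions.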

2. AI-Powered Automation in IT and Business Processes

AI-driven automation is set to revolutionize business operations by minimizing human intervention. Machine learning and deep learning algorithms can predict and automate tasks in various sectors, from IT infrastructure management to customer service and finance. This shift will increase efficiency, reduce costs, and improve decision-making. Businesses that adopt AI-powered automation will gain a competitive advantage by streamlining workflows and enhancing productivity through machine learning and deep learning capabilities.

3. Neural Network Enhancements and Next-Gen Deep Learning Models

Deep learning models are becoming more sophisticated, with innovations like transformer models (e.g., GPT-4, BERT) pushing the boundaries of natural language processing (NLP). The next wave of machine learning and deep learning will focus on improving efficiency, reducing computation costs, and enhancing real-time AI applications. Advancements in neural networks will also lead to better image and speech recognition systems, making AI more accessible and functional in everyday life.

4. AI in Edge Computing for Faster and Smarter Processing

With the rise of IoT and real-time processing needs, AI is shifting toward edge computing. This allows machine learning and deep learning models to process data locally, reducing latency and dependency on cloud services. Industries like healthcare, autonomous vehicles, and smart cities will greatly benefit from edge AI integration. The fusion of edge computing with machine learning and deep learning will enable faster decision-making and improved efficiency in critical applications like medical diagnostics and predictive maintenance.

5. Ethical AI and Bias Mitigation

AI systems are prone to bias arising from limitations in their training data and flaws in how models are trained. The future of machine learning and deep learning will prioritize ethical AI frameworks to mitigate bias and ensure fairness. Companies and researchers are working towards AI models that are more inclusive and free from discriminatory outputs. Ethical AI development will involve strategies like diverse dataset curation, bias auditing, and transparent AI decision-making processes to build trust in AI-powered systems.
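Bias auditing can start with something as simple as comparing how often a model gives a favorable outcome to each group, a measure sometimes called the demographic parity difference. The sketch below uses hypothetical group labels and predictions; it is only one of several fairness metrics, and which one is appropriate depends on the application.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Share of positive predictions (e.g., 'approve') within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}

# Hypothetical audit data: group membership and the model's binary decisions.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = max(rates.values()) - min(rates.values())
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A large gap does not prove discrimination on its own, but it flags where a closer look at the data and model is warranted.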

6. Quantum AI: The Next Frontier

Quantum computing could transform AI by enabling faster and more powerful computations for certain classes of problems. Quantum AI may eventually accelerate some machine learning and deep learning processes, optimizing complex problem-solving and large-scale simulations beyond the capabilities of classical computing. As quantum AI evolves, it could open new doors for solving problems that were previously considered intractable due to computational constraints.

7. AI-Generated Content and Creative Applications

From AI-generated art and music to automated content creation, AI is making strides in the creative industry. Generative AI models like DALL-E and ChatGPT are paving the way for more sophisticated and human-like AI creativity. The future of machine learning and deep learning will push the boundaries of AI-driven content creation, enabling businesses to leverage AI for personalized marketing, video editing, and even storytelling.

8. AI in Cybersecurity: Real-Time Threat Detection

As cyber threats evolve, AI-powered cybersecurity solutions are becoming essential. Machine learning and deep learning models can analyze and predict security vulnerabilities, detecting threats in real time. The future of AI in cybersecurity lies in its ability to autonomously defend against sophisticated cyberattacks. AI-powered security systems will continuously learn from emerging threats, adapting and strengthening defense mechanisms to ensure data privacy and protection.

9. The Role of AI in Personalized Healthcare

One of the most impactful applications of machine learning and deep learning is in healthcare. AI-driven diagnostics, predictive analytics, and drug discovery are transforming patient care. AI models can analyze medical images, detect anomalies, and provide early disease detection, improving treatment outcomes. The integration of machine learning and deep learning in healthcare will enable personalized treatment plans and faster drug development, ultimately saving lives.

10. AI and the Future of Autonomous Systems

From self-driving cars to intelligent robotics, machine learning and deep learning are at the forefront of autonomous technology. The evolution of AI-powered autonomous systems will improve safety, efficiency, and decision-making capabilities. As AI continues to advance, we can expect self-learning robots, smarter logistics systems, and fully automated industrial processes that enhance productivity across various domains.

Conclusion

The future of AI, machine learning and deep learning is brimming with possibilities. From enhancing automation to enabling ethical and explainable AI, the next phase of AI development will drive unprecedented innovation. Businesses and tech leaders must stay ahead of these trends to leverage AI's full potential. With continued advancements in machine learning and deep learning, AI will become more intelligent, efficient, and accessible, shaping the digital world like never before.

Are you ready for the AI-driven future? Stay updated with the latest AI trends and explore how these advancements can shape your business!

#artificial intelligence#machine learning#techinnovation#tech#technology#web developers#ai#web#deep learning#Information and technology#IT#ai future


The Automation Revolution: How Embedded Analytics is Leading the Way

Embedded analytics tools have emerged as game-changers, seamlessly integrating data-driven insights into business applications and enabling automation across various industries. By providing real-time analytics within existing workflows, these tools empower organizations to make informed decisions without switching between multiple platforms.

The Role of Embedded Analytics in Automation

Embedded analytics refers to the integration of analytical capabilities directly into business applications, eliminating the need for separate business intelligence (BI) tools. This integration enhances automation by:

Reducing Manual Data Analysis: Automated dashboards and real-time reporting eliminate the need for manual data extraction and processing.

Improving Decision-Making: AI-powered analytics provide predictive insights, helping businesses anticipate trends and make proactive decisions.

Enhancing Operational Efficiency: Automated alerts and anomaly detection streamline workflow management, reducing bottlenecks and inefficiencies.

Increasing User Accessibility: Non-technical users can easily access and interpret data within familiar applications, fostering a data-driven culture across organizations.
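
Automated alerts and anomaly detection often start from a simple statistical threshold. The sketch below is a hypothetical illustration, not how any particular embedded analytics product works. It compares each new metric value with a rolling window of earlier values and raises an alert when the value sits more than three standard deviations from that window's mean. The function names (`is_anomalous`, `stream_alerts`), the window size, the threshold, and the sample data are all assumptions.

```python
import statistics


def is_anomalous(history: list[float], value: float, threshold: float = 3.0) -> bool:
    """Return True if `value` lies more than `threshold` standard deviations
    from the mean of `history` (hypothetical alert rule)."""
    if len(history) < 2:
        # Not enough data to estimate spread, so never alert.
        return False
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: any change at all counts as anomalous.
        return value != mean
    return abs(value - mean) / stdev > threshold


def stream_alerts(values: list[float], window: int = 20, threshold: float = 3.0) -> list[int]:
    """Scan a metric stream and return the indices that would trigger an alert,
    comparing each point with up to `window` preceding points."""
    alerts = []
    for i in range(1, len(values)):
        history = values[max(0, i - window):i]
        if is_anomalous(history, values[i], threshold):
            alerts.append(i)
    return alerts


# Hypothetical hourly order counts containing one spike.
orders = [100, 102, 98, 101, 99, 103, 97, 100, 250, 101]
print(stream_alerts(orders))  # prints [8]
```

One design choice worth noting: the flagged spike stays in the window and inflates the standard deviation for later points, which can mask a second anomaly shortly afterwards. Production systems often exclude flagged points from the baseline or use more robust statistics such as the median.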

Industry-Wide Impact of Embedded Analytics

1. Manufacturing: Predictive Maintenance & Process Optimization

By analyzing real-time sensor data, predictive maintenance reduces downtime, enhances production efficiency, and minimizes repair costs.

2. Healthcare: Enhancing Patient Outcomes & Resource Management

Healthcare providers use embedded analytics to track patient records, optimize treatment plans, and manage hospital resources effectively.

3. Retail: Personalized Customer Experiences & Inventory Optimization

Retailers integrate embedded analytics into e-commerce platforms to analyze customer preferences, optimize pricing, and manage inventory.

4. Finance: Fraud Detection & Risk Management

Financial institutions use embedded analytics to detect fraudulent activities, assess credit risks, and automate compliance monitoring.

5. Logistics: Supply Chain Optimization & Route Planning

Supply chain managers use embedded analytics to track shipments, optimize delivery routes, and manage inventory levels.

6. Education: Student Performance Analysis & Learning Personalization

Educational institutions utilize embedded analytics to track student performance, identify learning gaps, and personalize educational experiences.

The Future of Embedded Analytics in Automation

As AI and machine learning continue to evolve, embedded analytics will play an even greater role in automation. Future advancements may include:

Self-Service BI: Empowering users with more intuitive, AI-driven analytics tools that require minimal technical expertise.

Hyperautomation: Combining embedded analytics with robotic process automation (RPA) for end-to-end business process automation.

Advanced Predictive & Prescriptive Analytics: Leveraging AI for more accurate forecasting and decision-making support.

Greater Integration with IoT & Edge Computing: Enhancing real-time analytics capabilities for industries reliant on IoT sensors and connected devices.

Conclusion

By integrating analytics within existing workflows, businesses can improve efficiency, reduce operational costs, and enhance customer experiences. As technology continues to advance, the synergy between embedded analytics and automation will drive innovation and reshape the future of various industries.

Short-Term vs. Long-Term Data Analytics Course in Delhi: Which One to Choose?

In today’s digital world, data is everywhere. From small businesses to large organizations, everyone uses data to make better decisions. Data analytics helps in understanding and using this data effectively. If you are interested in learning data analytics, you might wonder whether to choose a short-term or a long-term course. Both options have their benefits, and your choice depends on your goals, time, and career plans.

At Uncodemy, we offer both short-term and long-term data analytics courses in Delhi. This article will help you understand the key differences between these courses and guide you to make the right choice.

What is Data Analytics?

Data analytics is the process of examining large sets of data to find patterns, insights, and trends. It involves collecting, cleaning, analyzing, and interpreting data. Companies use data analytics to improve their services, understand customer behavior, and increase efficiency.

There are four main types of data analytics:

Descriptive Analytics: Understanding what has happened in the past.

Diagnostic Analytics: Identifying why something happened.

Predictive Analytics: Forecasting future outcomes.

Prescriptive Analytics: Suggesting actions to achieve desired outcomes.
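
To make the four types concrete, the sketch below applies each one to a small, made-up table of monthly sales. The data, the stock level, the 3-month moving-average forecast, and the reorder rule are all illustrative assumptions. Note that finding the largest drop is only a first diagnostic step; explaining *why* sales fell still requires further investigation.

```python
import statistics

# Hypothetical monthly unit sales for one product (illustrative data only).
sales = {"Jan": 120, "Feb": 135, "Mar": 90, "Apr": 140, "May": 150, "Jun": 160}
stock_on_hand = 140  # hypothetical current inventory

months = list(sales)
values = list(sales.values())

# 1. Descriptive: what happened?
average = statistics.mean(values)
best_month = max(sales, key=sales.get)

# 2. Diagnostic: why did it happen? Start by locating the largest
#    month-over-month drop so an analyst knows where to dig for causes.
changes = {months[i]: values[i] - values[i - 1] for i in range(1, len(values))}
worst_change_month = min(changes, key=changes.get)

# 3. Predictive: what is likely next? A naive 3-month moving-average forecast.
forecast = statistics.mean(values[-3:])

# 4. Prescriptive: what should we do? A simple rule-based recommendation.
action = "reorder stock" if forecast > stock_on_hand else "hold current stock"

print(f"Descriptive: average {average}, best month {best_month}")
print(f"Diagnostic: largest drop in {worst_change_month} ({changes[worst_change_month]})")
print(f"Predictive: next-month forecast {forecast}")
print(f"Prescriptive: {action}")
```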

Short-Term Data Analytics Course

A short-term data analytics course is a fast-paced program designed to teach you essential skills quickly. These courses usually last from a few weeks to a few months.

Benefits of a Short-Term Data Analytics Course

Quick Learning: You can learn the basics of data analytics in a short time.

Cost-Effective: Short-term courses are usually more affordable.

Skill Upgrade: Ideal for professionals looking to add new skills without a long commitment.

Job-Ready: Get practical knowledge and start working in less time.

Who Should Choose a Short-Term Course?

Working Professionals: If you want to upskill without leaving your job.

Students: If you want to add data analytics to your resume quickly.

Career Switchers: If you want to explore data analytics before committing to a long-term course.

What You Will Learn in a Short-Term Course

Introduction to Data Analytics

Basic Tools (Excel, SQL, Python)

Data Visualization (Tableau, Power BI)

Basic Statistics and Data Interpretation

Hands-on Projects

Long-Term Data Analytics Course

A long-term data analytics course is a comprehensive program that provides in-depth knowledge. These courses usually last from six months to two years.

Benefits of a Long-Term Data Analytics Course

Deep Knowledge: Covers advanced topics and techniques in detail.

Better Job Opportunities: Preferred by employers for specialized roles.

Practical Experience: Includes internships and real-world projects.

Certifications: You may earn industry-recognized certifications.

Who Should Choose a Long-Term Course?

Beginners: If you want to start a career in data analytics from scratch.

Career Changers: If you want to switch to a data analytics career.

Serious Learners: If you want advanced knowledge and long-term career growth.

What You Will Learn in a Long-Term Course

Advanced Data Analytics Techniques

Machine Learning and AI

Big Data Tools (Hadoop, Spark)

Data Ethics and Governance

Capstone Projects and Internships

Key Differences Between Short-Term and Long-Term Courses

Feature | Short-Term Course | Long-Term Course
Duration | Weeks to a few months | Six months to two years
Depth of Knowledge | Basic and intermediate concepts | Advanced and specialized concepts
Cost | More affordable | Higher investment
Learning Style | Fast-paced | Detailed and comprehensive
Career Impact | Quick entry-level jobs | Better career growth and high-level jobs
Certification | Basic certificate | Industry-recognized certifications
Practical Projects | Limited | Extensive, real-world projects

How to Choose the Right Course for You

When deciding between a short-term and long-term data analytics course at Uncodemy, consider these factors:

Your Career Goals

If you want to land a job quickly or only need basic knowledge, choose a short-term course.

If you want a long-term career in data analytics, choose a long-term course.

Time Commitment

Choose a short-term course if you have limited time.

Choose a long-term course if you can dedicate several months to learning.

Budget

Short-term courses are usually more affordable.

Long-term courses require a bigger investment but can offer better long-term returns.

Current Knowledge

If you already know some basics, a short-term course will enhance your skills.

If you are a beginner, a long-term course will provide a solid foundation.

Job Market

Short-term courses can help you get entry-level jobs quickly.

Long-term courses open doors to advanced and specialized roles.

Why Choose Uncodemy for Data Analytics Courses in Delhi?

At Uncodemy, we provide top-quality training in data analytics. Our courses are designed by industry experts to meet the latest market demands. Here’s why you should choose us:

Experienced Trainers: Learn from professionals with real-world experience.

Practical Learning: Hands-on projects and case studies.

Flexible Schedule: Choose classes that fit your timing.

Placement Assistance: We help you find the right job after course completion.

Certification: Receive a recognized certificate to boost your career.

Final Thoughts

Choosing between a short-term and long-term data analytics course depends on your goals, time, and budget. If you want quick skills and job readiness, a short-term course is ideal. If you seek in-depth knowledge and long-term career growth, a long-term course is the better choice.

At Uncodemy, we offer both options to meet your needs. Start your journey in data analytics today and open the door to exciting career opportunities. Visit our website or contact us to learn more about our Data Analytics Course in Delhi.

Your future in data analytics starts here with Uncodemy!

Key Differences Between AI and Human Communication: Mechanisms, Intent, and Understanding

The differences between the way an AI communicates and the way a human does are significant, encompassing various aspects such as the underlying mechanisms, intent, adaptability, and the nature of understanding. Here’s a breakdown of key differences:

1. Mechanism of Communication:

AI: AI communication is based on algorithms, data processing, and pattern recognition. AI generates responses by analyzing input data, applying pre-programmed rules, and utilizing machine learning models that have been trained on large datasets. The AI does not understand language in a human sense; instead, it predicts likely responses based on patterns in the data.

Humans: Human communication is deeply rooted in biological, cognitive, and social processes. Humans use language as a tool for expressing thoughts, emotions, intentions, and experiences. Human communication is inherently tied to understanding and meaning-making, involving both conscious and unconscious processes.

2. Intent and Purpose:

AI: AI lacks true intent or purpose. It responds to input based on programming and training data, without any underlying motivation or goal beyond fulfilling the tasks it has been designed for. AI does not have desires, beliefs, or personal experiences that inform its communication.

Humans: Human communication is driven by intent and purpose. People communicate to share ideas, express emotions, seek information, build relationships, and achieve specific goals. Human communication is often nuanced, influenced by context, and shaped by personal experiences and social dynamics.

3. Understanding and Meaning:

AI: AI processes language at a syntactic and statistical level. It can identify patterns, generate coherent responses, and even mimic certain aspects of human communication, but it does not truly understand the meaning of the words it uses. AI lacks consciousness, self-awareness, and the ability to grasp abstract concepts in the way humans do.

Humans: Humans understand language semantically and contextually. They interpret meaning based on personal experience, cultural background, emotional state, and the context of the conversation. Human communication involves deep understanding, empathy, and the ability to infer meaning beyond the literal words spoken.

4. Adaptability and Learning:

AI: AI can adapt its communication style based on data and feedback, but this adaptability is limited to the parameters set by its algorithms and the data it has been trained on. AI can learn from new data, but it does so without understanding the implications of that data in a broader context.

Humans: Humans are highly adaptable communicators. They can adjust their language, tone, and approach based on the situation, the audience, and the emotional dynamics of the interaction. Humans learn not just from direct feedback but also from social and cultural experiences, emotional cues, and abstract reasoning.

5. Creativity and Innovation:

AI: AI can generate creative outputs, such as writing poems or composing music, by recombining existing patterns in novel ways. However, this creativity is constrained by the data it has been trained on and lacks the originality that comes from human creativity, which is often driven by personal experience, intuition, and a desire for expression.

Humans: Human creativity in communication is driven by a complex interplay of emotions, experiences, imagination, and intent. Humans can innovate in language, create new metaphors, and use language to express unique personal and cultural identities. Human creativity is often spontaneous and deeply tied to individual and collective experiences.

6. Emotional Engagement: