#Server Configuration Options

NAS vs Server: Pros and Cons for Home Lab

Two devices dominate both SMB and home lab environments: NAS devices and servers. The NAS vs server debate has been going on for quite some time. Both have their unique set of advantages and disadvantages. This post delves into the pros and cons of each, to guide you in making a good decision in the realm of electronic data storage. Table of contents: What is a Network Attached Storage (NAS)…

Comprehensive Guide to Install DHCP Server on Windows Server

The DHCP Server service automates the configuration of TCP/IP on DHCP clients, providing essential information to computers when connecting to an IP network. In this Comprehensive Guide to Install DHCP Server on Windows Server 2022, we will discuss post-installation configuration as well as configuring the DHCP scope. Please see how to create and delete a DHCP reservation in Windows Server 2019 and How to…

Optimizing SQL Server with the Delayed Start

In today’s fast-paced IT environment, optimizing SQL Server performance and startup times is crucial for maintaining system efficiency and ensuring that resources are available when needed. One valuable, yet often overlooked, feature is the SQL Server services delayed start option. This configuration can significantly enhance your server’s operational flexibility, particularly in environments…

RABİSU - PLATİN (2)

In an ever-evolving digital landscape, having a reliable online presence is integral to success. At Rabisu, we specialize in delivering tailored hosting solutions that empower businesses to thrive. Our diverse range of services—spanning VPS in the UK to comprehensive web hosting—ensures that every client can find the perfect fit for their unique needs. With a focus on speed, security, and seamless performance, Rabisu is dedicated to providing you with the infrastructure necessary to scale your operations and engage with your audience effectively.

VPS UK

When it comes to VPS UK hosting, Rabisu stands out for its unmatched performance and reliability. Our virtual private servers are meticulously designed to cater to businesses that require a scalable and secure hosting environment without compromising on speed or uptime.

With Rabisu, you leverage cutting-edge technology that guarantees exceptional performance, allowing your applications to run smoothly even under high traffic conditions. Our VPS solutions come with full root access, enabling you to customize your environment to meet specific needs.

Additionally, Rabisu offers flexible pricing plans that ensure you get the most bang for your buck. Whether you are a start-up or a well-established organization, our plans can be tailored to suit your requirements. We believe in providing our customers with the best value, ensuring your investment drives the desired results for your business.

With 24/7 customer support, you can rest assured knowing our expert team is always available to assist you with any issues or questions you may have. Choosing Rabisu means choosing peace of mind when it comes to managing your digital infrastructure.

Secure your VPS UK hosting today with Rabisu, and take the first step towards a more efficient and scalable online presence. Visit Rabisu to get started now!

Hosting

When it comes to reliable and efficient hosting solutions, Rabisu offers a range of options tailored specifically for your needs. With our cutting-edge VPS UK hosting, you can expect exceptional performance and stability, ensuring that your website remains online and responsive at all times.

Our hosting provides you with dedicated resources, allowing you to customize your server environment according to your unique specifications. This means you have better control over your website's performance, allowing for faster load times and a superior experience for your users.

Rabisu is committed to delivering top-tier security features with our hosting services. We implement advanced security protocols to protect your data, ensuring peace of mind while you focus on growing your business.

Furthermore, our customer support team is always available, ready to assist you 24/7. Whether you're facing a technical challenge or have questions about configuring your server, our experts are just a call or message away, guaranteeing that you are never left in the dark.

In choosing Rabisu for your VPS UK hosting needs, you are opting for reliability, flexibility, and unparalleled support; what more could you ask for? Take your website to new heights with our outstanding hosting solutions today!

How I ditched streaming services and learned to love Linux: A step-by-step guide to building your very own personal media streaming server (V2.0: REVISED AND EXPANDED EDITION)

This is a revised, corrected and expanded version of my tutorial on setting up a personal media server that previously appeared on my old blog (donjuan-auxenfers). I expect that post is still making the rounds (hopefully with my addendum on modifying group share permissions in Ubuntu to circumvent 0x8007003B "Unexpected Network Error" messages in Windows 10/11 when transferring files), but I have no way of checking. Anyway, this new revised version of the tutorial corrects one or two small errors I discovered when rereading what I wrote, adds links to all products mentioned and is just more polished generally. I also expanded it a bit, pointing more adventurous users toward programs such as Sonarr/Radarr/Lidarr and Overseerr, which can be used for automating user requests and media collection.

So then, what is this tutorial? This is a tutorial on how to build and set up your own personal media server using Ubuntu as an operating system and Plex (or Jellyfin) to not only manage your media, but to also stream that media to your devices both at home and abroad anywhere in the world where you have an internet connection. Its intent is to show you how building a personal media server and stuffing it full of films, TV, and music that you acquired through indiscriminate and voracious media piracy various legal methods will free you to completely ditch paid streaming services. No more will you have to pay for Disney+, Netflix, HBO Max, Hulu, Amazon Prime, Peacock, CBS All Access, Paramount+, Crave or any other streaming service that is not named Criterion Channel. Instead, whenever you want to watch your favourite films and television shows, you’ll have your own personal service that only features things that you want to see, with files that you have control over. And for music fans out there, both Jellyfin and Plex support music streaming, meaning you can even ditch music streaming services. Goodbye Spotify, YouTube Music, Tidal and Apple Music, welcome back unreasonably large MP3 (or FLAC) collections.

On the hardware front, I’m going to offer a few options catered towards different budgets and media library sizes. Getting a media server up and running using this guide will cost you anywhere from $450 CAD/$325 USD at the low end to $1500 CAD/$1100 USD at the high end (it could go higher). My server was priced closer to the higher figure, but I went and got a lot more storage than most people need. If that seems like a little much, consider for a moment: do you have a roommate, a close friend, or a family member who would be willing to chip in a few bucks towards your little project provided they get access? Well, that's how I funded my server. It might also be worth thinking about the cost over time, i.e. how much you spend yearly on subscriptions vs. the one-time cost of setting up a server. Additionally there's just the joy of being able to scream "fuck you" at all those show-cancelling, library-deleting, hedge fund vampire CEOs who run the studios by denying them your money. Drive a stake through David Zaslav's heart.
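To put rough numbers on the cost-over-time argument, here is a minimal bash sketch of a break-even calculation. The function name `breakeven_months` and the example figures ($1100 build, $55/month in subscriptions) are hypothetical; plug in your own totals. It works in whole dollars and ignores electricity and future drive purchases.

```bash
#!/usr/bin/env bash
# Rough break-even estimate: how many months of subscription spending
# it takes to cover a one-time server cost. Whole dollars only.
breakeven_months() {
  local server_cost="$1" monthly_subs="$2"
  if [ "$monthly_subs" -le 0 ]; then
    echo "monthly_subs must be positive" >&2
    return 1
  fi
  # Integer ceiling division: a partial month still counts as a month.
  echo $(( (server_cost + monthly_subs - 1) / monthly_subs ))
}

# Hypothetical example: a $1100 build vs. $55/month in streaming subscriptions.
breakeven_months 1100 55
```

With those example numbers the server pays for itself in 20 months; everything after that is money you keep.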

On the software side I will walk you step-by-step through installing Ubuntu as your server's operating system, configuring your storage as a RAIDz array with ZFS, sharing your zpool to Windows with Samba, running a remote connection between your server and your Windows PC, and then a little about getting started with Plex/Jellyfin. Every terminal command you will need to input will be provided, and I even share a custom bash script that will make used vs. available drive space on your server display correctly in Windows.

If you have a different preferred flavour of Linux (Arch, Manjaro, Red Hat, Fedora, Mint, OpenSUSE, CentOS, Slackware etc.) and are aching to tell me off for being basic and using Ubuntu, this tutorial is not for you. The sort of person with a preferred Linux distro is the sort of person who can do this sort of thing in their sleep. Also I don't care. This tutorial is intended for the average home computer user. This is also why we’re not using a more exotic home server solution like running everything through Docker Containers and managing it through a dashboard like Homarr or Heimdall. While such solutions are fantastic and can be very easy to maintain once you have them set up, wrapping your brain around Docker is a whole thing in and of itself. If you do follow this tutorial and have fun putting everything together, then I would encourage you to return in a year’s time, do your research and set up everything with Docker Containers.

Lastly, this is a tutorial aimed at Windows users. Although I was a daily user of OS X for many years (roughly 2008-2023) and I've dabbled quite a bit with various Linux distributions (mostly Ubuntu and Manjaro), my primary OS these days is Windows 11. Many things in this tutorial will still be applicable to Mac users, but others (e.g. setting up shares) you will have to look up for yourself. I doubt it would be difficult to do so.

Nothing in this tutorial will require feats of computing expertise. All you will need is basic computer literacy (i.e. an understanding of what a filesystem and directory are, and a degree of comfort in the settings menu) and a willingness to learn a thing or two. While this guide may look overwhelming at first glance, it is only because I want to be as thorough as possible. I want you to understand exactly what it is you're doing; I don't want you to just blindly follow steps. If you half-way know what you’re doing, you will be much better prepared if you ever need to troubleshoot.

Honestly, once you have all the hardware ready it shouldn't take more than an afternoon or two to get everything up and running.

(This tutorial is just shy of seven thousand words long so the rest is under the cut.)

Step One: Choosing Your Hardware

Linux is a lightweight operating system; depending on the distribution there's close to no bloat. There are recent distributions available at this very moment that will run perfectly fine on a fourteen-year-old i3 with 4GB of RAM. Moreover, running Plex or Jellyfin isn’t resource intensive in 90% of use cases. All this is to say, we don’t require an expensive or powerful computer. This means that there are several options available: 1) use an old computer you already have sitting around but aren't using, 2) buy a used workstation from eBay, or, what I believe to be the best option, 3) order an N100 Mini-PC from AliExpress or Amazon.

Note: If you already have an old PC sitting around that you’ve decided to use, fantastic, move on to the next step.

When weighing your options, keep a few things in mind: the number of people you expect to be streaming simultaneously at any one time, the resolution and bitrate of your media library (4k video takes a lot more processing power than 1080p) and most importantly, how many of those clients are going to be transcoding at any one time. Transcoding is what happens when the playback device does not natively support direct playback of the source file. This can happen for a number of reasons, such as the playback device's native resolution being lower than the file's internal resolution, or because the source file was encoded in a video codec unsupported by the playback device.

Ideally we want any transcoding to be performed by hardware. This means we should be looking for a computer with an Intel processor with Quick Sync. Quick Sync is dedicated video encoding and decoding hardware built into the integrated graphics on the CPU die. This specialized hardware makes for highly efficient transcoding both in terms of processing overhead and power draw. Without Quick Sync, transcoding must be brute forced through software. This takes up much more of a CPU’s processing power and requires much more energy. But not all Quick Sync cores are created equal, and you need to keep this in mind if you've decided either to use an old computer or to shop for a used workstation on eBay.

Any Intel processor from second generation Core (Sandy Bridge circa 2011) onward has Quick Sync cores. It's not until 6th gen (Skylake), however, that the cores support the H.265 HEVC codec, and 10-bit HEVC support arrived with 7th gen (Kaby Lake). Intel’s 10th gen (Comet Lake) processors introduce support for HDR tone mapping, and the recent 12th gen (Alder Lake) processors support hardware AV1 decoding. As an example, while a 6th gen (Skylake) i5-6500 will be able to hardware transcode an 8-bit H.265 encoded file, it will fall back to software transcoding if given a 10-bit H.265 file. If you’ve decided to use that old PC or to look on eBay for an old Dell Optiplex, keep this in mind.
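If you're reusing an old PC, one quick (and admittedly rough) check once Linux is installed is whether the kernel exposes a GPU render node under /dev/dri, which is what Plex and Jellyfin use for hardware transcoding on Linux. The sketch below assumes the usual renderD* naming, and the function name is hypothetical. A render node only tells you the integrated graphics driver loaded, not which codecs it handles; the `vainfo` tool (from Ubuntu's vainfo package) lists the supported decode/encode profiles.

```bash
#!/usr/bin/env bash
# Report whether the kernel exposes a GPU render node (e.g. renderD128).
# No render node usually means no hardware transcoding is available.
has_render_node() {
  local dri_dir="${1:-/dev/dri}"
  local node
  for node in "$dri_dir"/renderD*; do
    if [ -e "$node" ]; then
      echo "render node found: $node"
      return 0
    fi
  done
  echo "no render node under $dri_dir"
  return 1
}

has_render_node || true
```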

Note 1: The price of old workstations varies wildly and fluctuates frequently. If you get lucky and go shopping shortly after a workplace has liquidated a large number of their workstations you can find deals for as low as $100 on a barebones system, but generally an i5-8500 workstation with 16GB RAM will cost you somewhere in the area of $260 CAD/$200 USD.

Note 2: The AMD equivalent to Quick Sync is called Video Core Next, and while it's fine, it's not as efficient and not as mature a technology. It was only introduced with the first generation of Ryzen APUs, and it only got decent with their newest CPUs, and we want something cheap.

Alternatively you could forgo having to keep track of which generation of CPU is equipped with Quick Sync cores that support which codecs, and just buy an N100 mini-PC. For around the same price as a used workstation, or less, you can pick up a mini-PC with an Intel N100 processor. The N100 is a four-core processor based on the 12th gen Alder Lake architecture and comes equipped with the latest revision of the Quick Sync cores. These little processors offer astounding hardware transcoding capabilities for their size and power draw. Otherwise they perform about on par with an i5-6500, which isn't a terrible CPU. A friend of mine uses an N100 machine as a dedicated retro emulation gaming system and it does everything up to 6th generation consoles just fine. The N100 is also a remarkably efficient chip; it sips power. In fact, the difference between running one of these and an old workstation could work out to hundreds of dollars a year in energy bills depending on where you live.
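How much you actually save depends on your hardware's real draw and your local electricity rate. A minimal sketch for estimating the yearly cost of a machine running 24/7: the function name is hypothetical, and the 10W and 60W figures are round numbers for illustration, not measurements of an N100 or any particular workstation. A cheap plug-in power meter will give you real numbers to feed it.

```bash
#!/usr/bin/env bash
# Estimate the yearly electricity cost of a machine running 24/7.
# watts: average draw; price: cost per kWh in your local currency.
yearly_cost() {
  local watts="$1" price="$2"
  awk -v w="$watts" -v p="$price" 'BEGIN { printf "%.2f\n", w * 24 * 365 / 1000 * p }'
}

# Hypothetical figures: two average power draws at the same rate per kWh.
echo "10W machine: $(yearly_cost 10 0.15)"
echo "60W machine: $(yearly_cost 60 0.15)"
```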

You can find these Mini-PCs all over Amazon or for a little cheaper on AliExpress. They range in price from $170 CAD/$125 USD for a no-name N100 with 8GB RAM to $280 CAD/$200 USD for a Beelink S12 Pro with 16GB RAM. The brand doesn't really matter; they're all coming from the same three factories in Shenzhen, so go for whichever one fits your budget or has features you want. 8GB RAM should be enough: Linux is lightweight and Plex only calls for 2GB RAM. 16GB RAM might result in a slightly snappier experience, especially with ZFS. A 256GB SSD is more than enough for what we need as a boot drive, but going for a bigger drive might allow you to get away with things like creating preview thumbnails for Plex. It’s up to you and your budget.

The Mini-PC I wound up buying was a Firebat AK2 Plus with 8GB RAM and a 256GB SSD.

Note: If you decide to order a Mini-PC from AliExpress, be forewarned and check the type of power adapter it ships with. The mini-PC I bought came with an EU power adapter and I had to supply my own North American power supply. Thankfully this is a minor issue, as 30W/12V/2.5A barrel plug power adapters are easy to find and can be had for $10.

Step Two: Choosing Your Storage

Storage is the most important part of our build. It is also the most expensive. Thankfully it’s also the most easily upgradeable down the line.

For people with a smaller media collection (4TB to 8TB), a more limited budget, or who will only ever have two simultaneous streams running, I would say that the most economical course of action would be to buy a USB 3.0 8TB external HDD. Something like this one from Western Digital or this one from Seagate. One of these external drives will cost you in the area of $200 CAD/$140 USD. Down the line you could add a second external drive or replace it with a multi-drive RAIDz set up such as detailed below.

If a single external drive is the path for you, move on to step three.

For people with larger media libraries (12TB+), who prefer media in 4k, or who care about data redundancy, the answer is a RAID array featuring multiple HDDs in an enclosure.

Note: If you are using an old PC or used workstation as your server and have room for at least three 3.5" drives, and as many open SATA ports on your motherboard, you won't need an enclosure; just install the drives into the case. If your old computer is a laptop or doesn’t have room for more internal drives, then I would suggest an enclosure.

The minimum number of drives needed to run a RAIDz array is three, and seeing as RAIDz is what we will be using, you should be looking for an enclosure with three to five bays. I think that four disks make for a good compromise for a home server. Regardless of whether you go for a three, four, or five bay enclosure, be aware that in a RAIDz array the space equivalent of one of the drives will be dedicated to parity, so the usable fraction of the raw capacity is 1 − 1/n for n drives. For example, in a four bay enclosure equipped with four 12TB drives configured as a RAIDz1 array, we would be left with a total of 36TB of usable space (48TB raw size). The reason why we might sacrifice storage space in such a manner will be explained in the next section.
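The capacity arithmetic above can be sketched as a tiny bash function. With n drives of equal size and p parity drives (p = 1 for RAIDz1, 2 for RAIDz2), usable space is roughly (n − p) × drive size. The function name is hypothetical and it only handles whole-TB sizes. Treat the result as an upper bound: a real pool reports somewhat less because of ZFS metadata and reserved space, and because drive makers count TB (10^12 bytes) while many tools report TiB (2^40 bytes).

```bash
#!/usr/bin/env bash
# Rough usable capacity of a single RAIDz vdev: (drives - parity) * drive size.
raidz_usable_tb() {
  local drives="$1" size_tb="$2" parity="$3"
  if [ "$drives" -le "$parity" ]; then
    echo "need more drives than parity drives" >&2
    return 1
  fi
  echo $(( (drives - parity) * size_tb ))
}

raidz_usable_tb 4 12 1   # four 12TB drives in RAIDz1
raidz_usable_tb 5 12 2   # five 12TB drives in RAIDz2
```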

A four bay enclosure will cost somewhere in the area of $200 CAD/$140 USD. You don't need anything fancy: we don't need anything with hardware RAID controllers (RAIDz is done entirely in software) or even USB-C. An enclosure with USB 3.0 will perform perfectly fine. Don’t worry too much about USB speed bottlenecks. A mechanical HDD will be limited by the speed of its mechanism long before it will be limited by the speed of a USB connection. I've seen decent looking enclosures from TerraMaster, Yottamaster, Mediasonic and Sabrent.

When it comes to selecting the drives, as of this writing, the best value (dollar per gigabyte) are those in the range of 12TB to 20TB. I settled on 12TB drives myself. If 12TB to 20TB drives are out of your budget, go with what you can afford, or look into refurbished drives. I'm not sold on the idea of refurbished drives but many people swear by them.

When shopping for hard drives, search for drives designed specifically for NAS use. Drives designed for NAS use typically have better vibration dampening and are designed to be active 24/7. They will also often make use of CMR (conventional magnetic recording) as opposed to SMR (shingled magnetic recording). This nets them a sizable read/write performance bump over typical desktop drives. Seagate IronWolf and Toshiba NAS are both well-regarded lines when it comes to NAS drives. I would avoid Western Digital Red drives at this time. WD Reds were a go-to recommendation up until earlier this year, when it was revealed that they feature firmware that will throw up false SMART warnings telling you to replace the drive at the three year mark, quite often when there is nothing at all wrong with that drive. It will likely even be good for another six, seven, or more years.

Step Three: Installing Linux

For this step you will need a USB thumbdrive of at least 6GB in capacity, an .ISO of Ubuntu, and a way to make that thumbdrive bootable media.

First download a copy of Ubuntu Desktop. (For best performance we could download the Server release, but for new Linux users I would recommend against it. The server release is strictly command line interface only, and having a GUI is very helpful for most people. Not many people are wholly comfortable doing everything through the command line; I'm certainly not one of them, and I grew up with DOS 6.0.) As of this writing, 22.04.3 Jammy Jellyfish is the current Long Term Support (LTS) release, and this is the one to get.

Download the .ISO and then download and install balenaEtcher on your Windows PC. BalenaEtcher is an easy-to-use program for creating bootable media: you simply insert your thumbdrive, select the .ISO you just downloaded, and it will create bootable installation media for you.

Once you've made your bootable media and you've got your Mini-PC (or your old PC/used workstation) in front of you, hook it directly into your router with an ethernet cable, and then plug in the HDD enclosure, a monitor, a mouse and a keyboard. Now turn that sucker on and hit whatever key gets you into the BIOS (typically ESC, DEL or F2). If you’re using a Mini-PC, check to make sure that the P1 and P2 power limits are set correctly; my N100's P1 limit was set at 10W, a full 20W under the chip's power limit. Also make sure that the RAM is running at the advertised speed. My Mini-PC’s RAM was set at 2333MHz out of the box when it should have been 3200MHz. Once you’ve done that, key over to the boot order and place the USB drive first in the boot order. Then save the BIOS settings and restart.

After you restart you’ll be greeted by Ubuntu's installation screen. Installing Ubuntu is really straightforward: select the "minimal" installation option, as we won't need anything on this computer except for a browser (Ubuntu comes preinstalled with Firefox) and Plex Media Server/Jellyfin Media Server. Also remember to delete and reformat that Windows partition! We don't need it.

Step Four: Installing ZFS and Setting Up the RAIDz Array

Note: If you opted for just a single external HDD skip this step and move onto setting up a Samba share.

Once Ubuntu is installed it's time to configure our storage by installing ZFS to build our RAIDz array. ZFS is a "next-gen" file system that is both massively flexible and massively complex. It's capable of snapshot backup and self-healing error correction, and ZFS pools can be configured with drives operating in a supplemental manner alongside the storage vdev (e.g. fast cache, a separate intent log (SLOG), hot spares etc.). It's also a file system very amenable to fine tuning. Block and sector size are adjustable to use case and you're afforded the option of different methods of inline compression. If you'd like a very detailed overview and explanation of its various features and tips on tuning a ZFS array, check out these articles from Ars Technica. For now we're going to ignore all these features and keep it simple: we're going to pool our drives together into a single vdev running RAIDz, which will be the entirety of our zpool, with no fancy cache drive or SLOG.

Open up the terminal and type the following commands:

sudo apt update

then

sudo apt install zfsutils-linux

This will install the ZFS utility. Verify that it's installed with the following command:

zfs --version

Now, it's time to check that the HDDs we have in the enclosure are healthy, running, and recognized. We also want to find out their device IDs and take note of them:

sudo fdisk -l

Note: You might be wondering why some of these commands require "sudo" in front of them while others don't. "Sudo" is short for "super user do”. When and where "sudo" is used has to do with the way permissions are set up in Linux. Only the "root" user has the access level to perform certain tasks in Linux. As a matter of security and safety regular user accounts are kept separate from the "root" user. It's not advised (or even possible) to boot into Linux as "root" with most modern distributions. Instead by using "sudo" our regular user account is temporarily given the power to do otherwise forbidden things. Don't worry about it too much at this stage, but if you want to know more check out this introduction.

If everything is working you should get a list of the various drives detected along with their device IDs which will look like this: /dev/sdc. You can also check the device IDs of the drives by opening the disk utility app. Jot these IDs down as we'll need them for our next step, creating our RAIDz array.
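One caveat worth knowing: /dev/sdX names are handed out at boot and can change, for example if the enclosure's drives are detected in a different order, which is why the OpenZFS documentation recommends building pools from the stable names under /dev/disk/by-id. The sketch below is a hypothetical helper (not a standard tool) that lists the whole-disk entries there, skipping partitions, along with the sdX name each currently points to. The same disk may show up more than once under different prefixes (such as ata- and wwn-).

```bash
#!/usr/bin/env bash
# List whole-disk entries under /dev/disk/by-id (skipping partitions),
# showing which /dev/sdX name each stable ID currently points to.
list_disk_ids() {
  local dir="${1:-/dev/disk/by-id}"
  local link target
  for link in "$dir"/*; do
    [ -L "$link" ] || continue                      # only symlinks
    case "$link" in *-part[0-9]*) continue ;; esac  # skip partitions
    target=$(readlink "$link")                      # e.g. ../../sdb
    printf '%s -> %s\n' "$(basename "$link")" "$(basename "$target")"
  done
}

list_disk_ids
```

You could then use the full /dev/disk/by-id/ path of each drive in place of /dev/sdb and friends in the zpool create commands.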

RAIDz is similar to RAID-5: instead of striping your data over multiple disks, exchanging redundancy for speed and available space (RAID-0), or mirroring your data by writing two copies of every piece (RAID-1), it writes parity blocks across the disks in addition to striping. This provides a balance of speed, redundancy and available space. If a single drive fails, the parity blocks on the working drives can be used to reconstruct the entire array as soon as a replacement drive is added.
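To see why parity lets the array rebuild a lost drive, here is a toy bash illustration using XOR, the same kind of single parity used by RAID-5 and RAIDz1. The three "disks" are just hypothetical single bytes; real RAIDz works on variable-width stripes of blocks, and RAIDz2 adds a second parity calculated differently so it can survive two failures.

```bash
#!/usr/bin/env bash
# Toy model of single-parity protection using XOR.
d1=0x4A; d2=0x7F; d3=0x13     # hypothetical data bytes on three disks
parity=$(( d1 ^ d2 ^ d3 ))    # parity byte stored on a fourth disk

# Suppose the disk holding d2 fails: XOR the survivors with the parity.
rebuilt=$(( d1 ^ d3 ^ parity ))

printf 'parity=0x%02X rebuilt=0x%02X original=0x%02X\n' "$parity" "$rebuilt" "$(( d2 ))"
```

Because x XOR x = 0, XORing everything that survived cancels out the known bytes and leaves exactly the missing one.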

Additionally, RAIDz improves on some of the common RAID-5 flaws. It's more resilient and capable of self-healing, since it automatically checks data against checksums. It's more forgiving in this way, and it's likely that you'll be able to detect when a drive is dying well before it fails. A RAIDz1 array can survive the loss of any one drive.

Note: While RAIDz is indeed resilient, if a second drive fails during the rebuild, you're fucked. Always keep backups of things you can't afford to lose. This tutorial, however, is not about proper data safety.

To create the pool, use the following command:

sudo zpool create "zpoolnamehere" raidz "device IDs of drives we're putting in the pool"

For example, let's creatively name our zpool "mypool". This pool will consist of four drives with the device IDs sdb, sdc, sdd, and sde. The resulting command will look like this:

sudo zpool create mypool raidz /dev/sdb /dev/sdc /dev/sdd /dev/sde

If, as an example, you bought five HDDs and decided you wanted more redundancy by dedicating two drives to parity, we would change "raidz" to "raidz2" and the command would look something like the following:

sudo zpool create mypool raidz2 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf

An array configured like this is known as RAIDz2 and is able to survive two disk failures.
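Because zpool create writes to the drives immediately, it can be worth printing the command and reading it over before running it. The helper below is hypothetical (not part of ZFS), and its minimum-drive check simply extends the tutorial's "three drives for RAIDz" rule to parity + 2 drives, which is an assumption, not a ZFS requirement. zpool create also accepts -n for a dry run that displays the configuration without creating the pool.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check the drive count for a RAIDz level, then print
# (not run) the matching zpool create command so it can be reviewed first.
build_zpool_cmd() {
  local pool="$1" level="$2"
  shift 2
  local parity
  case "$level" in
    raidz|raidz1) parity=1 ;;
    raidz2)       parity=2 ;;
    raidz3)       parity=3 ;;
    *) echo "unsupported level: $level" >&2; return 1 ;;
  esac
  # Assumed rule of thumb: at least two data drives plus the parity drives.
  local min=$(( parity + 2 ))
  if [ "$#" -lt "$min" ]; then
    echo "$level needs at least $min drives, got $#" >&2
    return 1
  fi
  echo "sudo zpool create $pool $level $*"
}

build_zpool_cmd mypool raidz2 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf
```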

Once the zpool has been created, we can check its status with the command:

zpool status

Or more concisely with:

zpool list
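If you'd like something you can run later (or from a scheduled job) to spot trouble, `zpool list -H -o name,health` prints one tab-separated line per pool with no header. Below is a minimal sketch of a function that reads that output and flags any pool that isn't ONLINE (for example DEGRADED after a drive failure); the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Read "name<TAB>health" lines, as printed by `zpool list -H -o name,health`,
# and warn about any pool whose health is not ONLINE.
# Returns 0 if every pool is ONLINE, 1 otherwise.
check_pool_health() {
  local name health bad=0
  while IFS=$'\t' read -r name health; do
    [ -n "$name" ] || continue   # skip blank lines
    if [ "$health" != "ONLINE" ]; then
      echo "WARNING: pool $name is $health"
      bad=1
    fi
  done
  return "$bad"
}

# On the server (requires ZFS):
#   zpool list -H -o name,health | check_pool_health
```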

The nice thing about ZFS as a file system is that a pool is ready to go immediately after creation. If we were to set up a traditional RAID-5 array using mdadm, we'd have to sit through a potentially hours-long process of reformatting and partitioning the drives. Instead we're ready to go right out of the gate.

The zpool should be automatically mounted to the filesystem after creation, check on that with the following:

df -hT | grep zfs

Note: If your computer ever loses power suddenly, say in the event of a power outage, you may have to re-import your pool. In most cases ZFS will automatically import and mount your pool, but if it doesn’t and you can't see your array, simply open the terminal and type sudo zpool import -a.

By default a zpool is mounted at /"zpoolname". Because we created the pool with sudo, its mount point will most likely be owned by root, so let's take ownership of it with the following command:

sudo chown -R "yourlinuxusername" /"zpoolname"
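To confirm the pool is mounted where you expect, sits on ZFS, and now belongs to your user, here is a small sketch using GNU df and stat (both part of Ubuntu's base system). The function name and the /mypool path are hypothetical examples.

```bash
#!/usr/bin/env bash
# Print the filesystem type and owner of a directory, e.g. a pool's
# mount point. Uses GNU df and stat, as shipped with Ubuntu.
describe_mount() {
  local dir="$1"
  if [ ! -d "$dir" ]; then
    echo "$dir is not a directory (is the pool imported and mounted?)" >&2
    return 1
  fi
  local fstype owner
  fstype=$(df --output=fstype "$dir" | tail -n 1 | tr -d ' ')
  owner=$(stat -c '%U' "$dir")
  echo "$dir fstype=$fstype owner=$owner"
}

# Hypothetical pool name from the example above:
#   describe_mount /mypool   # expect fstype=zfs and your own username
```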

Note: Changing file and folder ownership with "chown" and file and folder permissions with "chmod" are essential commands for much of the admin work in Linux, but we won't be dealing with them extensively in this guide. If you'd like a deeper tutorial and explanation you can check out these two guides: chown and chmod.

You can access the zpool file system through the GUI by opening the file manager (the Ubuntu default file manager is called Nautilus) and clicking on "Other Locations" on the sidebar, then entering the Ubuntu file system and looking for a folder with your pool's name. Bookmark the folder on the sidebar for easy access.

Your storage pool is now ready to go. Assuming that we already have some files on our Windows PC we want to copy over, we're going to need to install and configure Samba to make the pool accessible in Windows.

Step Five: Setting Up Samba/Sharing

Samba is what's going to let us share the zpool with Windows and allow us to write to it from our Windows machine. First let's install Samba with the following commands:

sudo apt-get update

then

sudo apt-get install samba

Next create a password for Samba.

sudo smbpasswd -a "yourlinuxusername"

It will then prompt you to create a password. Just reuse your Ubuntu user password for simplicity's sake.

Note: if you're using just a single external drive replace the zpool location in the following commands with wherever it is your external drive is mounted, for more information see this guide on mounting an external drive in Ubuntu.

After you've created a password we're going to create a shareable folder in our pool with this command

mkdir /"zpoolname"/"foldername"

Now we're going to open the smb.conf file and make that folder shareable. Enter the following command.

sudo nano /etc/samba/smb.conf

This will open the .conf file in nano, the terminal text editor program. Now at the end of smb.conf add the following entry:

["foldername"]
path = /"zpoolname"/"foldername"
available = yes
valid users = "yourlinuxusername"
read only = no
writable = yes
browseable = yes
guest ok = no

Ensure that there are no blank lines between the entries and that there's a space on both sides of each equals sign. Our next step is to allow Samba traffic through the firewall:

sudo ufw allow samba

Finally restart the Samba service:

sudo systemctl restart smbd
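If you'd rather not type the share entry by hand, the sketch below prints the same options used above for a given share name, path and user. The function name and the example values (media, /mypool/media, alice) are hypothetical. You could append its output with `share_stanza media /mypool/media alice | sudo tee -a /etc/samba/smb.conf` (running that twice would add the entry twice), then check the file with `testparm -s`, Samba's configuration checker, before restarting smbd.

```bash
#!/usr/bin/env bash
# Print an smb.conf share entry with the options used in this tutorial.
# Arguments: share name, path, Linux user.
share_stanza() {
  local name="$1" path="$2" user="$3"
  cat <<EOF
[$name]
path = $path
available = yes
valid users = $user
read only = no
writable = yes
browseable = yes
guest ok = no
EOF
}

share_stanza media /mypool/media alice
```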

At this point we'll be able to access the pool, browse its contents, and read and write to it from Windows. But there's one more thing left to do: Windows doesn't natively support the ZFS file system and will read the used/available/total space in the pool incorrectly. Windows will read available space as total drive space, and all used space as null. This leads to Windows only displaying a dwindling amount of "available" space as the drives are filled. We can fix this! Functionally this doesn't actually matter, since we can still read and write to and from the disk; it just makes it difficult to tell at a glance the proportion of used/available space. So this is an optional step, but one I recommend (this step is also unnecessary if you're just using a single external drive). What we're going to do is write a little shell script in bash. Open nano from the terminal with the command:

nano

Now insert the following code:

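#!/bin/bash
# Report total and available space (in 1K blocks) for the directory Samba asks about.
CUR_PATH=`pwd`
# zfs reports an error containing "not a ZFS" when the path isn't on ZFS
ZFS_CHECK_OUTPUT=$(zfs get type "$CUR_PATH" 2>&1 > /dev/null)
if [[ $ZFS_CHECK_OUTPUT == *not\ a\ ZFS* ]]
then
    IS_ZFS=false
else
    IS_ZFS=true
fi
if [[ $IS_ZFS = false ]]
then
    # Not ZFS: fall back to df's total and available columns
    df "$CUR_PATH" | tail -1 | awk '{print $2" "$4}'
else
    # ZFS: total = used + available (zfs -p reports bytes, so divide by 1024)
    USED=$((`zfs get -o value -Hp used "$CUR_PATH"` / 1024))
    AVAIL=$((`zfs get -o value -Hp available "$CUR_PATH"` / 1024))
    TOTAL=$(($USED+$AVAIL))
    echo $TOTAL $AVAIL
fi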

Save the script as "dfree.sh" in /home/"yourlinuxusername", then change the file's permissions to make it executable with this command (run it from your home folder):

sudo chmod 774 dfree.sh
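Samba's dfree command is expected to print two numbers: total space and available space, in 1K blocks by default. The ZFS branch of the script gets raw byte counts from `zfs get -Hp`, divides them by 1024, and adds used and available together to get the total. Here is that arithmetic pulled out into a hypothetical `dfree_line` function so you can sanity-check it without a pool. It's a sketch for understanding, not a required part of the setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the ZFS branch of dfree.sh:
# takes used and available space in bytes and prints "TOTAL AVAIL" in 1K blocks.
dfree_line() {
    local used_bytes=$1 avail_bytes=$2
    local used=$(( used_bytes / 1024 ))
    local avail=$(( avail_bytes / 1024 ))
    echo "$(( used + avail )) $avail"
}

# On the server you can also run the real script from inside the share, e.g.
#   cd /"zpoolname"/"foldername" && ~/dfree.sh
# and compare its two numbers against: zfs list -o used,avail
```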

Now open smb.conf with sudo again:

sudo nano /etc/samba/smb.conf

Now add this entry to the top of the configuration file to direct Samba to use the results of our script when Windows asks for a reading on the pool's used/available/total drive space:

[global]
dfree command = /home/"yourlinuxusername"/dfree.sh

Save the changes to smb.conf and then restart Samba again with the terminal:

sudo systemctl restart smbd

Now there’s one more thing we need to do to fully set up the Samba share, and that’s to add your user to the sambashare group. In the terminal window type the following command:

sudo usermod -a -G sambashare "yourlinuxusername"

Then restart Samba again:

sudo systemctl restart smbd

If we don’t do this last step, everything will appear to work fine. You'll be able to see and map the drive from Windows and even begin transferring files, but you'll soon run into a lot of frustration. Every ten minutes or so a file will fail to transfer and you'll get a window announcing “0x8007003B Unexpected Network Error”. This window requires your manual input to continue the transfer with the next file in the queue, and at the end Windows reattempts whichever files failed the first time around. 99% of the time they’ll go through on that second try, but this is still a major pain in the ass, especially if you’ve got a lot of data to transfer or you want to step away from the computer for a while.

It turns out Samba can act a little weirdly with the higher read/write speeds of RAIDz arrays and transfers from Windows, and will intermittently crash and restart itself if this group membership isn’t changed. Running the above command will prevent you from ever seeing that window.

The last thing we're going to do before switching over to our Windows PC is grab the IP address of our Linux machine. Enter the following command:

hostname -I

This will spit out this computer's IP address on the local network (it will look something like 192.168.0.x). Write it down. Once you're done here, it might be a good idea to go into your router settings and reserve that IP for your Linux system in the DHCP settings. Check the manual for your specific router model on how to access its settings. Typically you can open a browser and type http://192.168.0.1 in the address bar, but your router may be different.

Okay, we’re done with our Linux computer for now. Get on over to your Windows PC, open File Explorer, right-click on Network and click "Map network drive". Select Z: as the drive letter (you don't want to map the network drive to a letter you could conceivably be using for other purposes). Then enter the IP of your Linux machine and the name of the share like so: \\"LINUXCOMPUTERLOCALIPADDRESSGOESHERE"\"foldernamegoeshere"\. The share name is the folder name you put in square brackets in smb.conf. Windows will then ask you for your username and password; enter the ones you set earlier in Samba and you're good. If you've done everything right it should look something like this:

You can now start moving media over from Windows to the share folder. It's a good idea to have a wired connection running to all machines. Moving files over Wi-Fi is going to be torturously slow. The only thing that’s going to make the transfer time tolerable (hours instead of days) is a solid wired connection between both machines and your router.

Step Six: Setting Up Remote Desktop Access to Your Server

After the server is up and going, you’ll want to be able to access it remotely from Windows. Barring serious maintenance/updates, this is how you'll access it most of the time. On your Linux system open the terminal and enter:

sudo apt install xrdp

Then:

sudo systemctl enable xrdp

Once it's finished installing, open “Settings” on the sidebar and turn off "automatic login" in the User category. Then log out of your account. Attempting to remotely connect to your Linux computer while you’re logged in will result in a black screen!

Now get back on your Windows PC, open search and look for "RDP". A program called "Remote Desktop Connection" should pop up. Open this program as an administrator by right-clicking and selecting “Run as administrator”. You’ll be greeted with a window. In the field marked “Computer”, type in the IP address of your Linux computer. Press Connect and you'll be greeted with a new window and a prompt asking for your username and password. Enter your Ubuntu username and password here.

If everything went right, you’ll be logged into your Linux computer. If the performance is sluggish, adjust the display options. Lowering the resolution and colour depth does a lot to make the interface feel snappier.

Remote access is how we're going to be using our Linux system from now on, barring edge cases like needing to get into the BIOS or upgrading to a new version of Ubuntu. Everything else, from routine maintenance like a monthly zpool scrub to checking zpool status and updating software, can be done remotely.
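For remote maintenance, `zpool status -x` prints "all pools are healthy" when nothing is wrong. Running `sudo zpool scrub` followed by your pool name starts a scrub. If you'd like a yes/no check you can drop into your own scripts, here is a minimal sketch that reads `zpool status` output and only succeeds when every pool's `state:` line reads ONLINE. The function name is hypothetical, and it only looks at the pool-level state lines, not the per-disk table.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `zpool status` output on stdin and succeed only if
# at least one "state:" line is present and every one of them says ONLINE.
pool_states_online() {
    local line found=0
    local re='^[[:space:]]*state:[[:space:]]*([A-Z]+)'
    while IFS= read -r line || [[ -n $line ]]; do
        if [[ $line =~ $re ]]; then
            found=1
            [[ ${BASH_REMATCH[1]} == ONLINE ]] || return 1
        fi
    done
    (( found ))
}

# Example usage on the server (pool name is a placeholder):
#   zpool status "zpoolname" | pool_states_online && echo "pool OK" || echo "check the pool!"
#   sudo zpool scrub "zpoolname"    # start a scrub; progress appears in zpool status
```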

This is how my server lives its life now, happily humming and chirping away on the floor next to the couch in a corner of the living room.

Step Seven: Plex Media Server/Jellyfin

Okay, we’ve got all the groundwork finished and our server is almost up and running. We’ve got Ubuntu installed, our storage array is primed, we’ve set up remote connections and sharing, and maybe we’ve moved over some of our favourite movies and TV shows.

Now we need to decide on the media server software that will stream our media to us and organize our library. For most people I’d recommend Plex. It just works 99% of the time. That said, Jellyfin has a lot going for it too, even if it is rougher around the edges. Some people run both simultaneously; it’s not that big of an extra strain. I do recommend doing a little of your own research into the features each platform offers, but as a quick rundown, consider some of the following points:

Plex is closed source and is funded through PlexPass purchases, while Jellyfin is open source and entirely user driven. This means a number of things. For one, Plex requires you to purchase a “PlexPass” (a one-time lifetime fee of $159.99 CDN/$120 USD, or a monthly or yearly subscription) to access certain features, like hardware transcoding (and we want hardware transcoding) or automated intro/credits detection and skipping. Jellyfin offers some of these features for free through plugins. Plex supports a lot more devices than Jellyfin and updates more frequently. That said, Jellyfin's Android and iOS apps are completely free, while the Plex Android and iOS apps must be activated for a one-time cost of $6 CDN/$5 USD. But that $6 fee gets you a much more functional mobile app with a unified UI across platforms; the Plex mobile apps are simply a more polished experience. The Jellyfin apps are a bit of a mess, and the iOS and Android versions are very different from each other.

Jellyfin’s actual media player is more fully featured than Plex's, but Jellyfin's UI, library customization and automatic media tagging really pale in comparison to Plex. Streaming your music library is free through both Jellyfin and Plex, but Plex offers the Plexamp app for dedicated music streaming, which boasts a number of fantastic features. Unfortunately, some of those features require a PlexPass. If your internet is down, Jellyfin can still do local streaming, while Plex can fail to play files unless you've got it set up a certain way. Jellyfin has a slew of neat niche features like support for comic book libraries with the .cbz/.cbt file types, but then Plex offers some free ad-supported TV and films. They even have a free channel that plays nothing but classic Doctor Who.

Ultimately it's up to you. I settled on Plex because although some features are paywalled, it just works. It's more reliable and easier to use, and a one-time fee is much easier to swallow than a subscription. I had a pretty easy time getting my boomer parents and tech-illiterate brother introduced to and using Plex, and I don't know if I would've had as easy a time doing that with Jellyfin. I also need to mention that Jellyfin takes a little extra tinkering to get going in Ubuntu (you’ll have to set up process permissions). So if you're more tolerant of tinkering, Jellyfin might be up your alley, and I’ll trust that you can follow their installation and configuration guide. For everyone else, I recommend Plex.

So pick your poison: Plex or Jellyfin.

Note: The easiest way to download and install either of these packages in Ubuntu is through the Snap Store.

After you've installed one (or both), opening either app will launch a browser window into the browser version of the app allowing you to set all the options server side.

The process of creating media libraries is essentially the same in Plex and Jellyfin. You create separate libraries for Television, Movies, and Music and add the folders containing each type of media to its respective library. The only difficult or time-consuming aspect is ensuring that your files and folders follow the appropriate naming conventions:

Plex naming guide for Movies

Plex naming guide for Television

Jellyfin follows the same naming rules, but I find its media scanner to be a lot less accurate and forgiving than Plex's. Once you've selected the folders to be scanned, the service will scan your files, tagging everything and adding metadata. Although I do find Plex more accurate, it can still erroneously tag some things, and you might have to manually clean up some tags in a large library. (When I initially created my library it tagged the 1963-1989 Doctor Who as some Korean soap opera. I needed to manually select the correct match, after which everything was tagged normally.) It can also be a bit testy with anime (especially OVAs), so be sure to check TVDB to ensure that your files and folders are structured and named correctly. If something is not showing up at all, double-check the name.
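Most scanning problems come down to names, so a quick script can flag folders and files that don't follow the pattern. This sketch assumes Plex-style naming: movie folders named `Title (Year)` and episode files that contain a season/episode tag like `s01e05`. The function names and the Movies path are hypothetical. Adjust the patterns if your library uses a different layout.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for spotting names a media scanner may struggle with.
# Assumes movie folders look like "Title (Year)" and episode files contain "sNNeNN".

looks_like_movie_folder() {
    local re='^.+ \([0-9]{4}\)$'
    [[ $1 =~ $re ]]
}

looks_like_episode_file() {
    local re='[sS][0-9]{1,2}[eE][0-9]{1,3}'
    [[ $1 =~ $re ]]
}

# Example: list movie folders that don't follow "Title (Year)" (path is a placeholder)
# for dir in /"zpoolname"/Movies/*/; do
#     name=$(basename "$dir")
#     looks_like_movie_folder "$name" || echo "check: $name"
# done
```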

Once that's done, organizing and customizing your library is easy. You can set up collections, grouping items together to fit a theme or to collect all the entries in a franchise. You can make playlists and add custom artwork to entries. It's fun setting up collections with posters to match, and there are even several websites dedicated to helping you do this, like PosterDB. As an example, below are two collections in my library. One collects all the entries in a franchise; the other follows a theme.

My Star Trek collection, featuring all eleven television series and thirteen films.

My Best of the Worst collection, featuring sixty-nine films previously showcased on RedLetterMedia’s Best of the Worst. They’re all absolutely terrible and I love them.

As for settings, make sure you've got Remote Access going (it should work automatically), and be sure to set your upload speed after running a speed test. In the library settings, set the database cache to 2000MB for a snappier, more responsive browsing experience, and then check that playback quality is set to original/maximum. If you’re severely bandwidth limited on your upload and have remote users, you might want to limit the remote stream bitrate to something more reasonable. As a point of comparison, Netflix’s 1080p bitrate is approximately 5 Mbps, although almost anyone watching through a Chromium-based browser is streaming at 720p and 3 Mbps. Other than that, you should be good to go. For actually playing your files, there's a Plex app for just about every platform imaginable. I mostly watch television and films on my laptop using the Windows Plex app. I also use the Android app, which can cast to the Chromecast connected to the TV in the office, and the Android TV app for our smart TV. They're all fully functional and easy to navigate, and I can also attest to the OS X version being equally functional.
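The bitrate figures above translate into some quick arithmetic. Divide your upload speed by the per-stream bitrate to get a rough ceiling on simultaneous remote streams. Multiply the bitrate by 3600 seconds and divide by 8 to get megabytes per hour. Here is a minimal sketch with hypothetical function names. It assumes whole-number Mbps and ignores protocol overhead and other traffic on your connection.

```bash
#!/usr/bin/env bash
# Rough capacity math for remote streaming (hypothetical helpers, whole-number Mbps only).
# Ignores protocol overhead and other traffic, so treat results as upper bounds.

# How many simultaneous remote streams fit in your upload bandwidth
max_remote_streams() {
    local upload_mbps=$1 stream_mbps=$2
    echo $(( upload_mbps / stream_mbps ))
}

# Data used per hour by one stream, in megabytes (Mbps * 3600 s / 8 bits per byte)
mb_per_hour() {
    local stream_mbps=$1
    echo $(( stream_mbps * 3600 / 8 ))
}

max_remote_streams 20 5   # a 20 Mbps upload fits four 5 Mbps streams
mb_per_hour 5             # a 5 Mbps stream uses 2250 MB per hour
```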

Step Eight: Finding Media

Now, this is not really a piracy tutorial; there are plenty of those out there. But if you’re unaware, BitTorrent is free and pretty easy to use: just pick a client (qBittorrent is the best) and go find some public trackers to peruse. Just know that all the best trackers are private and invite only, and that they can be exceptionally difficult to get into. I’m already on a few, and even then, some of the best ones are wholly out of my reach.

If you decide to take the left-hand path and turn to Usenet, you’ll have to pay. First you’ll need to sign up with a provider like Newshosting or EasyNews for access to Usenet itself, and then to actually find anything you’re going to need to sign up with an indexer like NZBGeek or NZBFinder. There are dozens of indexers, and many people cross-post between them, but for more obscure media it’s worth checking multiple. You’ll also need a binary downloader like SABnzbd. That caveat aside, Usenet is faster, bigger, older, and less traceable than BitTorrent, and altogether slicker. I honestly prefer it, and I'm kicking myself for taking this long to start using it because I was scared off by the price. I’ve found so many things on Usenet that I had sought in vain elsewhere for years, like a 2010 Italian film about a massacre perpetrated by the SS that played the festival circuit but never received a home media release; some absolute hero uploaded a rip of a festival screener DVD to Usenet. Anyway, figure out the rest of this shit on your own, and remember to use protection: get yourself behind a VPN, use a SOCKS5 proxy with your BitTorrent client, etc.

On the legal side of things, if you’re around my age, you (or your family) probably have a big pile of DVDs and Blu-Rays sitting around unwatched and half forgotten. Why not do a bit of amateur media preservation: rip them and upload them to your server for easier access? (Your tools for this are going to be HandBrake to do the ripping and AnyDVD to break any encryption.) I went to the trouble of ripping all my SCTV DVDs (five box sets' worth) because none of it is on streaming, nor could it be found on any pirate source I tried. I’m glad I did; forty years on, it’s still one of the funniest shows to ever be on TV.

Step Nine/Epilogue: Sonarr/Radarr/Lidarr and Overseerr

There are a lot of ways to automate your server for better functionality or to add features you and other users might find useful. Sonarr, Radarr, and Lidarr are part of a suite of “Servarr” services (there’s also Readarr for books and Whisparr for adult content) that allow you to automate the collection of new episodes of TV shows (Sonarr), new movie releases (Radarr) and music releases (Lidarr). They hook into your BitTorrent client or Usenet binary newsgroup downloader and crawl your preferred torrent trackers and Usenet indexers, alerting you to new releases and automatically grabbing them. You can also use these services to manually search for new media, and even replace/upgrade your existing media with better quality uploads. They’re a little tricky to set up on a bare-metal Ubuntu install (ideally you should be running them in Docker containers), and I won’t be providing a step-by-step guide to installing and running them. I’m simply making you aware of their existence.

The other bit of kit I want to make you aware of is Overseerr which is a program that scans your Plex media library and will serve recommendations based on what you like. It also allows you and your users to request specific media. It can even be integrated with Sonarr/Radarr/Lidarr so that fulfilling those requests is fully automated.

And you're done. It really wasn't all that hard. Enjoy your media. Enjoy the control you have over that media. And be safe in the knowledge that no hedge fund CEO motherfucker who hates the movies but who is somehow in control of a major studio will be able to disappear anything in your library as a tax write-off.


How to use DXVK with The Sims 3

Have you seen Criisolate's post about using DXVK, but felt intimidated by the sheer mass of facts and information?

@desiree-uk and I compiled a guide and the configuration file to make your life easier. It focuses on players not using the EA App, but it might work for EA App players just the same. It’s definitely worth a try.

Adding this to your game installation will result in better RAM usage, so your game is less likely to give you Error 12 or crash due to RAM issues. It does NOT give a huge performance boost, but it offers more stability and allows for higher graphics settings in game.

The full guide is behind the cut. Let me know if you would also like it as a PDF.

Happy simming!

Disclaimer and Credits

Desiree and I are no tech experts and just wrote down how we did this. Our ability to help if you run into trouble is limited. So use at your own risk and back up your files!

We are both on Windows 10 and start the game via TS3W.exe, not the EA App, so your experience may differ.

This guide is based on our own experiments and of course criisolate’s post on tumblr: https://www.tumblr.com/criisolate/749374223346286592/ill-explain-what-i-did-below-before-making-any

This guide is brought to you by Desiree-UK and Norn.

Compatibility

Note: This will conflict with other programs that “inject” functionality into your game, so they may stop working. Notably:

Reshade

GShade

Nvidia Experience/Nvidia Inspector/Nvidia Shaders

RivaTuner Statistics Server

It does work seamlessly with LazyDuchess’ Smooth Patch.

LazyDuchess’ Launcher: compatibility unknown

Alder Lake patch: does conflict. One user got it working by starting the game by launching TS3.exe (also with admin rights) instead of TS3W.exe. This seemed to create the cache file for DXVK. After that, the game could be started from TS3W.exe again. That might not work for everyone though.

A word on FPS and V-Sync

With such an old game it’s crucial to cap the frame rate (FPS). This is done in the DXVK.conf file, and the same goes for V-Sync.

You need:

a text editor (easiest to use is Windows Notepad)

to download DXVK version 2.3.1 from here: https://github.com/doitsujin/DXVK/releases/tag/v2.3.1 (extract the archive; you are going to need the file d3d9.dll from the x32 folder)

the configuration file DXVK.conf from here: https://github.com/doitsujin/DXVK/blob/master/DXVK.conf. Optional: download the edited version with the required changes here.

administrator rights on your PC

to know your game’s installation path (bin folder) and where to find the user folder

a tiny bit of patience :)

First Step: Backup

Back up your original Bin folder in your Sims 3 installation path! The DXVK file may overwrite some files! The path should be something like this (for retail): \Program Files (x86)\Electronic Arts\The Sims 3\Game\Bin (This is the folder where GraphicsRules.sgr, TS3W.exe and TS3.exe are also located.)

Back up your options.ini in your game’s user folder! Making the game use the DXVK file will count as a change in GPU driver, so the options.ini will reset once you start your game after installation. The path should be something like this: \Documents\Electronic Arts\The Sims 3 (This is the folder where your Mods folder is located).

Preparations

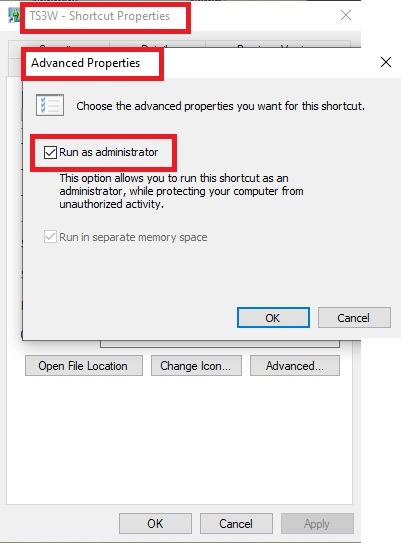

1. Make sure you run the game as administrator. You can check that by right-clicking on the icon that starts your game. Go to Properties > Advanced and check the box “Run as administrator”. Note: This will result in a prompt each time you start your game, asking whether you want to allow this application to make changes to your system. Click “Yes” and the game will load.

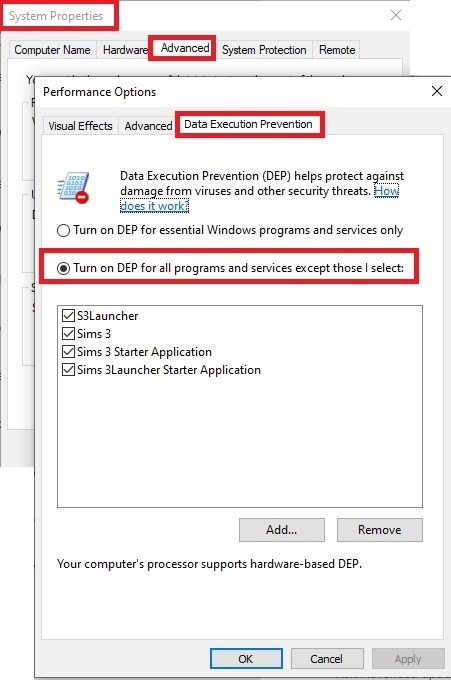

2. Make sure your game is excluded from Windows' Data Execution Prevention (DEP):

Open the Windows Control Panel.

Click System and Security > System > Advanced System Settings.

On the Advanced tab, next to the Performance heading, click Settings.

Click the Data Execution Prevention tab.

Select “Turn on DEP for all programs and services except those I select:”

Click the Add button, and a file explorer window opens. Navigate to your Sims 3 installation folder (the bin folder once again) and add TS3W.exe and TS3.exe.

Click OK. Then you can close all those dialog windows again.

Setting up the DXVK.conf file

Open the file with a text editor and delete everything in it. Then add these values:

d3d9.textureMemory = 1
d3d9.presentInterval = 1
d3d9.maxFrameRate = 60

d3d9.presentInterval enables V-Sync, and d3d9.maxFrameRate sets the frame rate cap. You can edit those values, but never change the first line (d3d9.textureMemory)!

The original DXVK.conf contains many more options in case you would like to add more settings.

A. No ReShade/GShade

Setting up DXVK

Copy the two files d3d9.dll and DXVK.conf into the Bin folder in your Sims 3 installation path. This is the folder where GraphicsRules.sgr, TS3W.exe and TS3.exe are also located. If you are prompted to overwrite files, choose yes (you DID back up your folder, right?)

And that’s basically all that is required to install.

Start your game now and let it run for a short while. Click around, open Buy mode or CAS, move the camera.

Now quit without saving. Once the game is closed fully, open your bin folder again and double check if a file “TS3W.DXVK-cache” was generated. If so – congrats! All done!

Things to note

Heads up, the game options will reset! So it will give you a “vanilla” start screen and options.

Don’t worry if the game seems to be frozen during loading. It may take a few minutes longer to load but it will load eventually.

The TS3W.DXVK-cache file is the actual cache DXVK is using, so don’t delete it! Just ignore it and leave it alone. When someone tells you to clear cache files, this is not one of them!

Update Options.ini

Go to your user folder and open the options.ini file with a text editor like Notepad.

Find the line that starts with “lastdevice =”. It will have several values, separated by semicolons. Copy the last value, the digits after the last semicolon only. Close the file.

Now go to your backup version of the Options.ini file, open it and find that line “lastdevice” again. Replace the last value with the one you just copied. Make sure to only replace those digits!

Save and close the file.

Copy this version of the file into your user folder, replacing the one that is there.
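Notepad is all this step needs. But if you happen to have a Bash shell on Windows (Git Bash or WSL, which is an assumption on our part), the copy-the-last-value step is just a small string operation. In this sketch the function names are hypothetical, and the sample lastdevice lines are invented; your own values will be different.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the options.ini "lastdevice" step.
# The sample lines below are invented; your own values will differ.

# Print the value after the last semicolon
last_device_value() {
    local line=${1%$'\r'}        # drop a Windows carriage return, if present
    echo "${line##*;}"
}

# Swap in a new last value, keeping everything up to the last semicolon
replace_last_device_value() {
    local line=${1%$'\r'}
    local new_value=$2
    echo "${line%;*};$new_value"
}

new_line="lastdevice = 0;4318;1234;8832"   # from the freshly reset options.ini
old_line="lastdevice = 0;4318;1234;5555"   # from your backup
replace_last_device_value "$old_line" "$(last_device_value "$new_line")"
# prints: lastdevice = 0;4318;1234;8832
```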

Things to note:

If your GPU driver is updated, you might have to do these steps again, as it might reset your device ID. It seems that the DXVK ID overrides the GPU ID, though, so this might not happen.

How do I know it’s working?

Open the task manager and look at RAM usage. Remember the game can only use 4 GB of RAM at maximum and starts crashing when usage goes up to somewhere between 3.2 – 3.8 GB (it’s a bit different for everybody).

So if you see values like 2.1456 GB for RAM usage in a large world with an ongoing save, it’s working. Generally, the lower the value, the better for stability.

Also, DXVK will have generated its cache file called TS3W.DXVK-cache in the bin folder. The file size will grow with time as DXVK is adding stuff to it, e.g. from different worlds or savegames. Initially it might be something like 46 KB or 58 KB, so it’s really small.

Optional: changing MemCacheBudgetValue

MemCacheBudgetValue determines the size of the game's VRAM cache. You can edit those values, but the difference might not be noticeable in game. How much you can allow here also depends on your computer’s hardware.

The two lines of seti MemCacheBudgetValue correspond to the high RAM level and low RAM level situations. Therefore, theoretically, the first line MemCacheBudgetValue should be set to a larger value, while the second line should be set to a value less than or equal to the first line.

The original values represent 200MB (209715200) and 160MB (167772160) respectively. They are calculated as 200x1024x1024=209715200 and 160x1024x1024=167772160.
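The byte values in GraphicsRules.sgr are megabytes multiplied by 1024 twice, so you can work out your own values with a one-line calculation. Here is a sketch in Bash (the helper name `mb_to_bytes` is hypothetical; any calculator works just as well):

```bash
#!/usr/bin/env bash
# Convert megabytes to the byte values used for MemCacheBudgetValue
# (1 MB = 1024 x 1024 bytes, matching the calculation above).
mb_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

mb_to_bytes 200    # 209715200  (original first value)
mb_to_bytes 160    # 167772160  (original second value)
mb_to_bytes 1024   # 1073741824 (1 GB)
mb_to_bytes 2048   # 2147483648 (2 GB)
```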

Back up your GraphicsRules.sgr file! If you make a mistake here, your game won’t work anymore.

Go to your bin folder and open your GraphicsRules.sgr with a text editor.

Find the two lines that set the variables for MemCacheBudgetValue.

Modify these two values to larger numbers. Make sure the value in the first line is higher than or equal to the value in the second line. Example values: 1073741824, which means 1 GB, or 2147483648, which means 2 GB. -1 (minus 1) means no limit (but is highly experimental; use at your own risk).

Save and close the file. It might prompt you to save the file to a different place and not allow you to save in the Bin folder. Just save it someplace else in this case and copy/paste it to the Bin folder afterwards. If asked to overwrite the existing file, click yes.

Now start your game and see if it makes a difference in smoothness or texture loading. Make sure to check RAM and VRAM usage to see how it works.

You might need to change the values back and forth to find the “sweet spot” for your game. Mine seems to work best with setting the first value to 2147483648 and the second to 1073741824.

Uninstallation

Delete these files from your bin folder (installation path):

d3d9.dll

DXVK.conf

TS3W.DXVK-cache

And if you have it, also TS3W_d3d9.log

If you also changed the values in your GraphicsRules.sgr file, don’t forget to change them back or to replace the file with your backed-up version.

OR

Delete the bin folder and restore it from your backup.

B. With ReShade/GShade

Follow the steps from Part A (No ReShade/GShade) to set up DXVK.

If you are already using ReShade (RS) or GShade (GS), you will be prompted to overwrite files, so choose YES. RS and GS may stop working, in which case you will need to reinstall them.

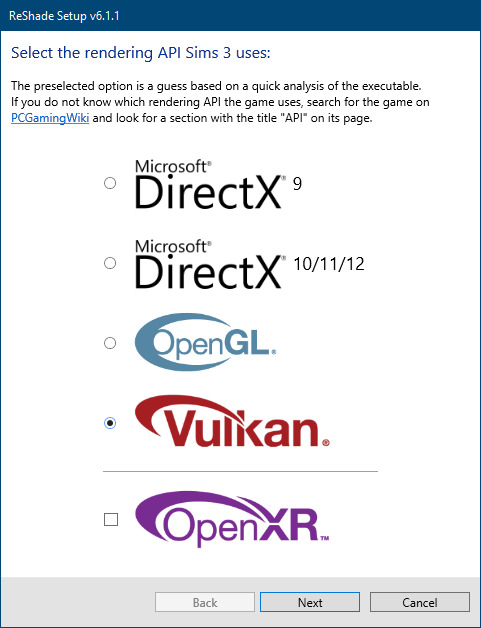

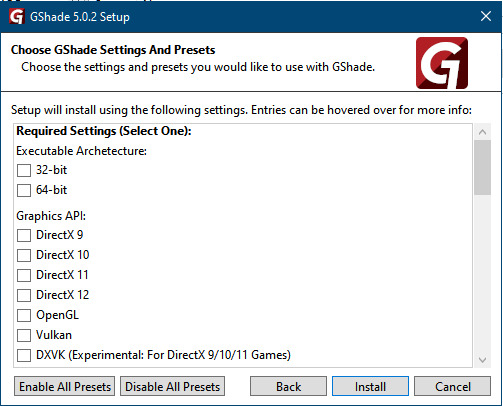

Whatever version you are using, the interface offers a similar choice of APIs (these screenshots are from the latest versions of RS and GS).

Please note:

Each time you install or uninstall DXVK, you switch the game between Vulkan and d3d9. This essentially changes the graphics card ID again, which results in the settings in your options.ini file being reset every time.

ReShade interface

Choose – Vulkan

Click next and choose your preferred shaders.

Hopefully this install method works and it won't install its own d3d9.dll file.

If it doesn't work, choose DirectX9 in RS instead, but you must make sure to replace the d3d9.dll file with DXVK's d3d9.dll (the one from its 32-bit folder; check that its size is 3.86 MB).

GShade interface

Choose –

Executable Architecture: 32bit

Graphics API: DXVK

Hooking: Normal Mode

GShade is very problematic: it won't work straight out of the box and the overlay doesn't show up, which defeats the purpose of using it if you can't add or edit the shaders you want to use.

Check the game's bin folder and make sure d3d9.dll is still there and its size is 3.86 MB; that is DXVK's dll file.

If installing using the DXVK method doesn't work, you can choose the DirectX method, but there is no guarantee it works either.

The game will not run with these files in the folder:

d3d10core.dll

d3d11.dll

dxgi.dll

If you delete them, the game will start but you can't access GShade! It might be better to use ReShade.

Some Vulkan and DirectX information, if you’re interested:

Vulkan is aimed at rather high-end graphics cards but is backward compatible with some older cards. Try this method with ReShade or GShade first.

DirectX is more stable and works best with older cards and systems. Try this method if Vulkan doesn't work with ReShade/GShade in your game, and remember to replace the d3d9.dll with DXVK's d3d9.dll.

For more information on the difference between Vulkan and DirectX, see this article:

https://www.howtogeek.com/884042/vulkan-vs-DirectX-12/


So, things on the archive have been a bit dicey the past month as the Mods have been investigating the ongoing DDoS attack and various options available to us to mitigate both the current attack and future attacks. I’ll lead with the big thing.

Planned Archive Downtime

The archive will be down for a bit as we configure the new security settings on the back end. The downtime could be as little as a few minutes or up to 72 hours and we anticipate some people will get access sooner than others. We don’t like it, but it’s for the best, and will hopefully allow us to resume full accessibility to all members.

When Is the Site Going Offline?

Approximately 9PM EDT, May 13 (Tuesday evening). While you have a couple of days, you should start preparing immediately.

Prepare? What Do You Mean By That?

Mostly, just be forewarned that the downtime is coming. Don’t panic if you can’t access the site or receive an error message.

You might want to take the time to download any ePubs for fics you are reading or plan to read soon. Likewise, while we don’t anticipate this will impact the site’s content, it’s never a bad idea to back up important things, such as story comments.

What’s the Security Solution You’re Implementing?

A very common, highly trusted, and broadly used security solution called Cloudflare. It’s the same solution AO3 implemented following their DDoS attack. We have completed troubleshooting this solution on our test site.

How Did You Troubleshoot?

Once we got Cloudflare up and running, the Mods went to the test site and tried to break it. We wanted to replicate the errors we initially experienced, like the Forbidden/Forbidden 403 error message. A solution that works for only some people isn’t a viable long-term solution.

This is an imperfect process, but we were able to post stories, comments, news items, images, emojis, and all other normal archive activity without incident, while maintaining the strictest security settings available on the back-end.

What Happens if I Still Experience an Error Once Everything Is Back Up?

All members should continue reporting any unusual errors, messages, and so forth as they occur to [email protected].

How Do I Stay Updated While The Site Is Down?

Important updates will be made on all of EF’s active social platforms: Instagram, Bluesky, Threads, and Facebook. You may also join our Discord server. Please note that only EF members are allowed in the Discord server, and your server name must match your EF username.

Why Was EF Targeted?

No clue, and we’re not going to speculate on who is behind it or their motives. Doesn’t matter — Spuffy always walks through the fire and emerges stronger. So will we.


How to Watch IPTV on Your Phone?

How to Watch IPTV on Your Phone: Full Step-by-Step Guide

In today’s fast-paced world, the ability to stream your favorite live TV channels, sports, and movies directly from your smartphone is more convenient than ever. Thanks to IPTV (Internet Protocol Television), you can now watch high-quality content on the go, right from your Android or iOS phone.

This complete guide will show you how to watch IPTV on your phone, step by step, with real examples from IPTV providers like Streamview IPTV and Digitalizard. Whether you use Android or iPhone, this tutorial will help you get started easily.

What is IPTV?

IPTV (Internet Protocol Television) delivers live TV channels and on-demand video content through internet connections, rather than traditional cable or satellite. With IPTV, all you need is:

A reliable IPTV subscription (e.g., Streamview IPTV or Digitalizard),

An IPTV player app,

A good internet connection.

Requirements to Watch IPTV on Your Phone

To watch IPTV on your smartphone, you need:

📶 Stable internet connection (minimum 10 Mbps recommended)

📲 A compatible IPTV app for Android or iOS

🔐 Your IPTV credentials (M3U playlist link or Xtream Codes)

🔄 An updated Android or iOS device

How to Watch IPTV on an Android Phone: Step-by-Step Guide

Step 1: Get Your IPTV Subscription

Sign up with a reliable IPTV provider like:

🔹 Streamview IPTV – Offers M3U and Xtream Code login, with 24/7 channels and VOD content.

🔹 Digitalizard – Known for HD quality and global channels, compatible with many IPTV players.

Once you register, the provider will email you:

M3U Playlist URL

Or Xtream Codes (Username, Password, and Server URL)

Step 2: Download a Reliable IPTV Player App

Some of the best IPTV apps for Android:

IPTV Smarters Pro

TiviMate IPTV Player

XCIPTV Player

GSE Smart IPTV

Go to Google Play Store, search for one of these apps, and install it.

Step 3: Load IPTV Playlist

Open the IPTV app (e.g., IPTV Smarters Pro).

Choose how you want to login:

Load Your Playlist or File URL (M3U)

Login with Xtream Codes API

Enter the details you received from Streamview IPTV or Digitalizard.

Tap Add User and wait for the channels to load.

Start streaming your favorite live TV channels, sports, or movies.

How to Watch IPTV on an iPhone (iOS): Step-by-Step Guide

Step 1: Subscribe to IPTV Service

Choose from verified providers like:

Streamview IPTV – Offers multi-device support including iOS.

Digitalizard – Offers fast delivery of login details and mobile-compatible links.

Step 2: Download an IPTV Player App for iOS

Top IPTV apps for iPhone:

IPTV Smarters – Player

GSE Smart IPTV

iPlayTV

Smarters Player Lite

Go to the App Store, search for one of these apps, and install it.

Step 3: Configure the App

Open the IPTV app on your iPhone.

Select either the Xtream Codes login or the M3U playlist option.

Enter the details provided by Streamview IPTV or Digitalizard:

Server URL

Username

Password

Tap Login and wait for the channel list to load.

Enjoy streaming HD content directly on your iPhone.

Key Features You’ll Enjoy

When using services like Streamview IPTV or Digitalizard, here’s what you typically get:

✅ 10,000+ Live TV Channels

✅ Video On Demand (Movies, TV Shows)

✅ 24/7 Sports & PPV Channels

✅ EPG (Electronic Program Guide)

✅ Catch-up & Recording Options (depends on player)

✅ Anti-freeze Technology

Use a VPN for Secure Streaming

To protect your privacy and avoid ISP throttling, it’s recommended to use a VPN while streaming IPTV on your phone. Apps like NordVPN, ExpressVPN, or Surfshark work great with mobile devices.

Troubleshooting Tips

Buffering? Switch to a lower-quality stream or use a VPN.

Can’t login? Double-check M3U/Xtream details or contact support.

App not loading? Clear the app’s cache or reinstall the IPTV player.

Final Thoughts

Watching IPTV on your phone is one of the easiest ways to enjoy live TV, sports, movies, and shows wherever you go. Whether you're using Android or iPhone, all you need is a trusted IPTV provider like Streamview IPTV or Digitalizard, and a reliable IPTV player app.

With a simple setup and internet access, you’ll have 24/7 entertainment right in your pocket.

FAQs

Can I watch IPTV on multiple devices?

Yes, both Streamview IPTV and Digitalizard support multi-device use. Check your plan for simultaneous connections.

Is it legal to use IPTV on my phone?

Using licensed IPTV services is legal. Avoid using pirated or unverified sources.

Do I need a VPN for mobile IPTV?

A VPN is not mandatory but is highly recommended for security and privacy.

Can I record IPTV on my phone?

Some apps, such as XCIPTV, support recording on Android. Options on iOS may be limited.



Mandalorians playing a game in the covert on Nevarro. Concept art by Anton Grandert. Image from StarWars.com

NOTE: This is a retelling of an existing story from my collection of Mandalorian Tales. It has been edited and updated to reflect some canon-specific changes (Djarin vs. Din as the Mandalorian's first name) as well as minor story edits. Enjoy.

Grogu makes a friend Part 1 / At the Cin Vhetin

The tavern was quiet. Almost too quiet given the time of day. The Mandalorian and his foundling didn’t like it. They were waiting for Ahsoka Tano to meet them and it was well past the time for her to be there. Din motioned to the server to come over. He would just pay the tab and leave. Obviously something had gone sideways.

The server came over and the Mandalorian could swear they had met before, but he couldn’t recall where. You rarely saw a human female with such strange scars. They were almost like tattoos, but they didn’t look like they had been made purposefully. Whatever accident had produced them, it must have been painful.

“Take Grogu out the back way, Mandalorian.”

The woman spoke quietly as she slipped a lightsaber and a metal baton out of her voluminous robes and readied herself for an unseen assailant.

“Who are you?”

Din Djarin was on his feet and Grogu had already hopped into his carry bag.

“A friend. Grogu, make him leave.”

The human female smiled at them briefly and then walked toward the tavern’s entryway.

Djarin looked around and saw that the few other patrons had already disappeared, as had the Iktotchi bartender. What did they know that he hadn’t noticed?

Grogu began to flood the bounty hunter’s mind with images of the back rooms of the bar, showing him a safe way for them to leave. Djarin resisted the urge to swat those images away and then considered his options. Given the fact that other people had already abandoned the place, he decided that discretion was appropriate. He and Grogu reached the partially hidden door to the back rooms just as a small flood of battle droids tried to enter the tavern.

The human woman waited until the doorway was jammed with the droids, then ignited her lightsaber. The Mandalorian had never seen anything like it. In his experience, limited though it was, most lightsabers were a single uniform color. This one was iridescent and seemed to shift colors at the holder’s whim. The battle droids took the lightsaber to be a threat and began to fire their blasters at its wielder.

He couldn’t help himself. He watched with fascination as she deflected each blast back into the droids. Their configuration at the door hampered them considerably and she was slicing them into a pile of smoking parts before they had even managed to get all the way into the building. Who was she?

“I told you to take Grogu out of here. Your window of escape to your ship is closing, Mandalorian! I can deal with this, now go!”

This time she emphasized her words by directing the next blaster bolt, from a second wave of droids, to the floor by his foot.

Djarin finally turned and he and Grogu left the tavern. He had no idea who their benefactor was and wondered if he’d ever learn her name. It certainly wasn’t Ahsoka Tano.

As he wound his way out of the maze of alleys behind the Cin Vhetin, Djarin had a few questions for his foundling, who was quiet but still looking smug about something.

“Grogu, do you know her? That woman at the tavern?”

Grogu cooed and burbled a response.

“I see. You know of her. What ‘of’ her do you know?”

This really wasn’t the moment for Grogu to hold his peace. Usually he’d be lecturing Djarin about everything he had already told the Mandalorian, knowing his dad had ignored it.

Instead, Grogu began to explain everything, just as they came to a halt at a roadway they would have to cross. It provided no cover, and they could both hear the sounds of the fight they had just escaped.

“She’s not a Jedi? That’s the important part? She’s a friend of Ahsoka’s. Okay. That’s good to know. What else do you know?”

Grogu sighed.

“What do you mean you can’t tell me? I think I should know considering the circumstances.”

Djarin peered down the road from the side of the building and saw nothing remarkable. The sound of the fighting had stopped. He hoped that was a good sign and decided to risk crossing the roadway.

Grogu continued his modified lecture.

“Brethren? Great, another cult.” The Mandalorian trotted across the road, acutely aware of how exposed he and Grogu really were. They made it across without incident and continued down the path to the landing zone and their ship.

They were almost at the entryway when a bright red blaster bolt blazed through the air and connected with the Mandalorian’s partially protected right knee. He grunted at the pain, but didn’t falter, pulling his sidearm as he looked for his assailant and continued to make his way into the landing area.

He saw the human female from the tavern physically lift his presumed attacker, who was still brandishing a blaster, and throw them onto the roadway he had just crossed. With a swift strike of her baton, she shattered the blaster along with the hand that had held it. Djarin saw her glance over at them just afterward, and without hesitation she ran over to join him and Grogu.

“I thought I told you to get to your ship.”

The woman snapped as she took a look at the Mandalorian’s injury. To Djarin’s surprise she didn’t even seem winded from her activities.

She chuckled, as if she had heard his thoughts.

“Lucky for you I like Mandalorians, Din Djarin. Come on. I will heal you on the ship.”

She put an arm around his waist and helped him hobble through the dock and to the spot that his ship should have occupied. Grogu babbled at her the whole way.

“Dank Farrik!”

“I thought this might happen. Come on. My ship is over here.”

She turned and nodded toward another path.

“Who are you?”

Din Djarin didn’t believe in this kind of luck and he certainly didn’t believe it could hold out any longer. He refused to move.

“Grogu, you could have told him my name.”

She spoke to the small foundling, who hadn’t cared for the Mandalorian’s tone of voice at all.

“I am called Mira Ost here. But my name is Ta’lan Bet. Ahsoka Tano asked me to keep an eye on you two. You’ve had a busy day today.”

She turned to face him more squarely as she spoke. She held his gaze, and that was unusual in his experience. Most people didn’t like being stared at by any Mandalorian, let alone one that was injured and angry.

Then he considered her words for a moment. Andui was not a large city, but it was the largest city on Takodana. Maybe he had seen her somewhere else that day?

“Where is Ahsoka Tano? I came here to meet her.”

Djarin had to ask the obvious question. He was not happy about this at all, no matter how content Grogu seemed.

“She was detained. And no, I do not know where she is. She contacted me and asked for a favor and I agreed. It is that simple. Now, if you’ll walk with me, I will take you to my ship and heal that wound.”

He looked at the woman again and tried to gauge whether or not she could be trusted. This might just be a trick. But how would Grogu know who she was unless she was able to connect to him through the Force? He sighed.

“Fine. Let’s make it quick.”

He relented.

“As you wish.”

She set a reasonable pace and was silent, which he counted as a blessing, given how much grief Grogu was now giving him.

When they reached a YT-1300 light freighter she stopped and turned her attention to both of them.

“Welcome to the Bard. We should be safe enough on her while I work out what happened to draw so much attention to the two of you. And heal you of course.”

The Mandalorian watched a ramp lower and saw two forms exit the ship. One was an astromech. The other was an HK-series assassin droid. What! He reached for his sidearm, but felt her hand on his before he could complete the motion.

“That is HK. It does not fulfill its original programming. It’s my co-pilot for this vessel. It will do nothing to harm you, unless you think being called a ‘meat bag’ is harm. We never worked out how to fix that. Right this way.”


What are you using to organize and browse your photos on the server?

I'm running Memories, a NextCloud app.

So far it's pretty good! I just dumped over 700GB of images on it and it indexed them all in a couple hours. Previews might take longer to generate, and it is a little slow when you hit a batch of images that haven't got previews yet, but I imagine that in steady state it'll work very well.

It's basically a Google Photos clone: you get a unified timeline of all your photos, plus albums. There are plugins to enable facial recognition and even object recognition so that you can search by those, and you can configure the matching smartphone app to sync your photos as you go.

There are other options I've seen people recommend, mainly PhotoPrism and Immich. I might try those out, and it shouldn't be too hard to run multiple image organizers simultaneously for comparison, since my photos are currently stored in an External Storage mount for NextCloud. That's mostly so that TrueNAS can make it available as an NFS share, which is a much faster and more reliable way to copy 700GB of photos than uploading through the NextCloud interface.


Polludrone