#Smart Software Testing


http://www.gqattech.com/

https://www.instagram.com/gqattech/

https://x.com/GQATTECH

#seo#seo services#aeo#digital marketing#blog#AITesting#QualityAssurance#SoftwareTesting#TestAutomation#GQATTech#IntelligentQA#BugFreeSoftware#MLinQA#AgileTesting#STLC#AI Testing Services#Artificial Intelligence in QA#AI-Powered Software Testing#AI Automation in Testing#Machine Learning for QA#Intelligent Test Automation#Smart Software Testing#Predictive Bug Detection#AI Regression Testing#NLP in QA Testing#Software Testing Services#Quality Assurance Experts#End-to-End QA Solutions#Test Case Automation#Software QA Company

1 note


#I know this has been posted but I wanted to include the part about him helping with developing software for F2 and F3 sims#our smart boi our superstar our world champion right there!#max verstappen#f1#testing

243 notes


A summary of the Chinese AI situation, for the uninitiated.

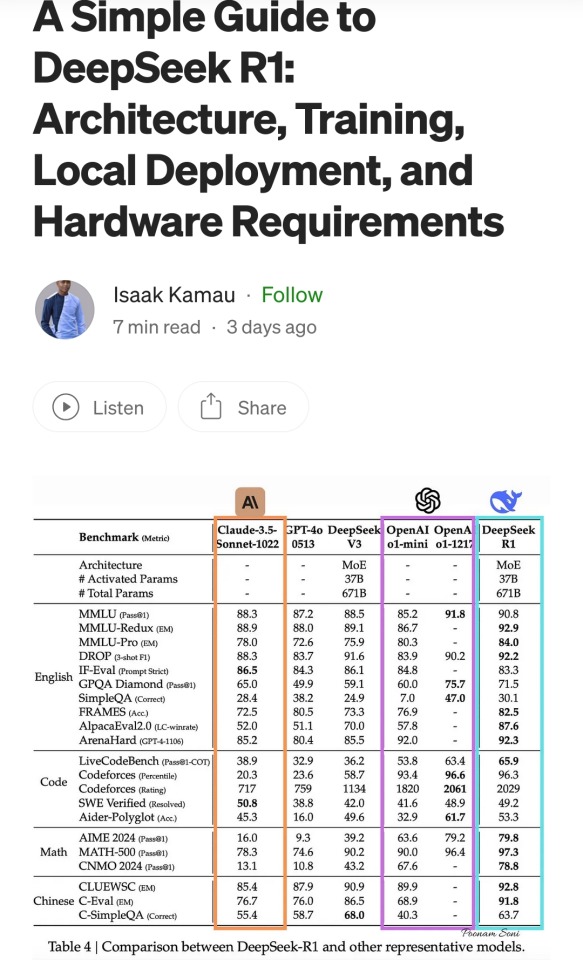

These are scores on different tests that are designed to see how accurate a Large Language Model is in different areas of knowledge. As you know, OpenAI is partnered with Microsoft, so these are the scores for ChatGPT and Copilot. DeepSeek is the Chinese model that got released a week ago. The rest are open source models, which means everyone is free to use them as they please, including the average Tumblr user. You can run them from the servers of the companies that made them for a subscription, or you can download them to install locally on your own computer. However, the computer requirements so far are so high that only a few people currently have the machines at home required to run them.

Yes, this is why AI uses so much electricity. As with any technology, the early models are highly inefficient. Think how a Ford T needed a long chimney to get rid of a ton of black smoke, which was unused petrol. Over the next hundred years combustion engines have become much more efficient, but they still waste a lot of energy, which is why we need to move towards renewable electricity and sustainable battery technology. But that's a topic for another day.

As you can see from the scores, the models are all around the same accuracy. These tests are in constant evolution as well: as soon as they start becoming obsolete, new ones are released to adjust for a more complicated benchmark. The new models are trained using different machine learning techniques, and in theory, the goal is to make them faster and more efficient so they can operate with less power, much like modern cars use way less energy and produce far less pollution than the Ford T.

However, computing power requirements kept scaling up, so you're either tied to the subscription or forced to pay for a latest gen PC, which is why NVIDIA, AMD, Intel and all the other chip companies were investing heavily in much more powerful GPUs and NPUs. For now all we need to know about those is that they're expensive, use a lot of electricity, and are required to operate the bots at superhuman speed (literally, all those clickbait posts about how AI was secretly 150 Indian men in a trenchcoat were nonsense).

Because the chip companies have been working hard on making big, bulky, powerful chips with massive fans that are up to the task, their stock value was skyrocketing, and because of that, everyone started to use AI as a marketing trend. See, marketing people are not smart, and they don't understand computers. Furthermore, marketing people think you're stupid, and because of their biased frame of reference, they think you're two snores short of brain-dead. The entire point of their existence is to turn tall tales into capital. So they don't know or care about what AI is or what it's useful for. They just saw Number Go Up for the AI companies and decided "AI is a magic cow we can milk forever". Sometimes it's not even AI, they just use old software and rebrand it, much like convection ovens became air fryers.

Well, now we're up to date. So what did DeepSeek release that did a 9/11 on NVIDIA stock prices and popped the AI bubble?

Oh, I would not want to be an OpenAI investor right now either. A token is basically a small chunk of text, usually a word or a piece of a word (it's more complicated than that but you can google that on your own time). That cost means you could input the entire works of Stephen King for under a dollar. Yes, including electricity costs. DeepSeek has jumped from a Ford T to a Subaru in terms of pollution and water use.

The issue here is not only input cost, though; all that data needs to be available live, in the RAM; this is why you need powerful, expensive chips in order to-

Holy shit.

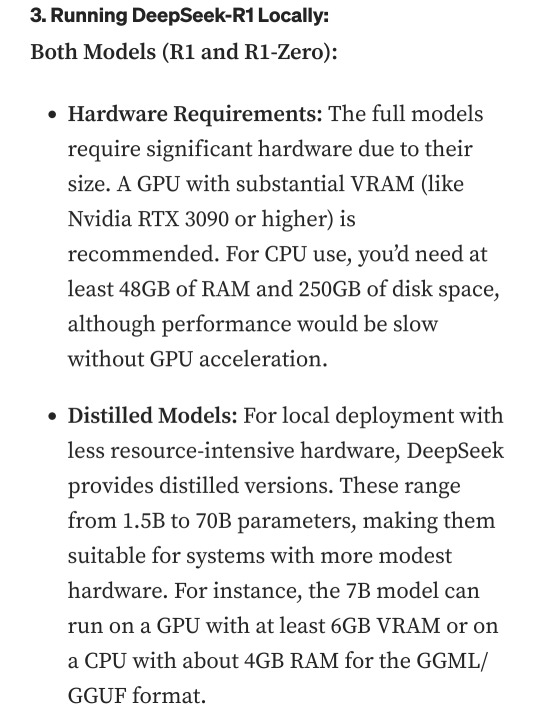

I'm not going to detail all the numbers but I'm going to focus on the chip required: an RTX 3090. This is a gaming GPU that came out as the top of the line, the stuff South Korean LoL players buy…

Or they did, in September 2020. We're currently two generations ahead, on the RTX 5090.

What this is telling all those people who just sold their high-end gaming rig to be able to afford a machine that can run the latest ChatGPT locally, is that the person who bought it from them can run something basically just as powerful on their old one.

Which means that all those GPUs and NPUs that are being made, and all those deals Microsoft signed to have control of the AI market, have just lost a lot of their pulling power.

Well, I mean, the ChatGPT subscription is 20 bucks a month, surely the Chinese are charging a fortune for-

Oh. So it's free for everyone and you can use it or modify it however you want, no subscription, no unpayable electric bill, no handing Microsoft all of your private data, you can just run it on a relatively inexpensive PC. You could probably even run it on a phone in a couple years.

Oh, if only China had massive phone manufacturers that have a foot in the market everywhere except the US because the president had a tantrum eight years ago.

So… yeah, China just destabilised the global economy with a torrent file.

#valid ai criticism#ai#llms#DeepSeek#ai bubble#ChatGPT#google gemini#claude ai#this is gonna be the dotcom bubble again#hope you don't have stock on anything tech related#computer literacy#tech literacy

433 notes


not to be such a boomer, but I think chatgpt is fucking this generation over, at least in terms of critical thinking and creative skills.

I get that it's easy to use and I probably would've used it if I was in school when it came out.

but damn.

y'all can't just write a fucking email?

also people using it to write essays ... i mean what is the point then?

are you gaming the educational system in pursuit of survival, or are you just unwilling to engage critically with anyone or anything?

is this why media literacy is so fucking ass right now?

learning how to write is learning how to express yourself and communicate with others.

you might not be great at it, but writing can help you rearrange the ideas in your brain. the more you try to articulate yourself, the more you understand yourself. all skills can be honed with time, and the value is not in the product. it's in the process.

it's in humans expressing their thoughts to others, in an attempt to improve how we do things, by building upon foundations and evolving old ideas into innovation.

scraping together a mush of ideas from a software that pulls specific, generic phrases from data made by actual humans... what is that going to teach you or anyone else?

it's just old ideas being recycled by a new generation.

a generation I am seriously concerned about, because digital tests have made it very easy to cheat, which means people aren't just throwing away their critical thinking and problem solving abilities, but foundational knowledge too.

like what the hell is anyone going to know in the future? you don't want to make art, you don't want to understand how the world works, you don't want to know about the history of us?

is it because we all know it's ending soon anyway, or is it just because it's difficult, and we don't want to bother with difficult?

maybe it's both.

but. you know what? on that note, maybe it's whatever.

fuck it, right, let's just have an AI generate "therefore" "in conclusion" and "in addition" statements followed by simplistic ideas copy pasted from a kid who actually wrote a paper thirty years ago.

if climate change is killing us all anyway, maybe generative ai is a good thing.

maybe it'll be a digital archive of who we used to be, a shambling corpse that remains long after the consequences of our decisions catch up with us.

maybe it'll be smart enough to talk to itself when there's no one left to talk to.

it'll talk to itself in phrases we once valued, it'll make art derived from people who used to be alive and breathing and feeling, it'll regurgitate our best ideas in an earnest but hollow approximation of our species.

and it'll be the best thing we ever made. the last thing too.

I don't really believe in fate or destiny, I think all of this was a spectacular bit of luck, but that's a poetic end for us.

chatgpt does poetry.

187 notes


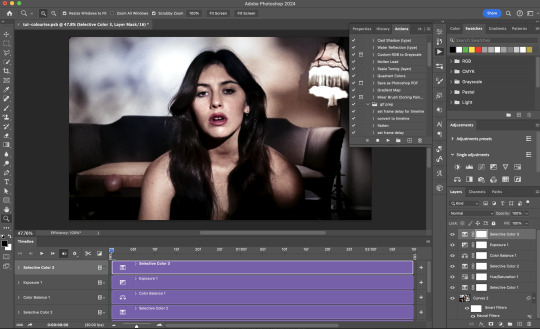

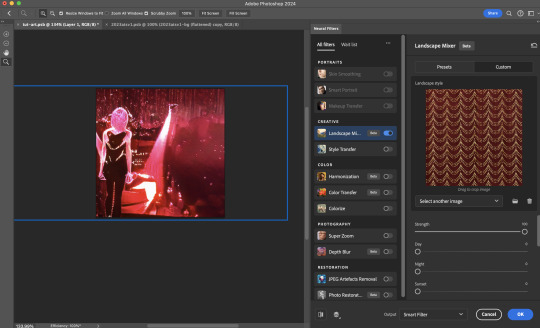

Neural Filters Tutorial for Gifmakers by @antoniosvivaldi

Hi everyone! In light of my blog’s 10th birthday, I’m delighted to reveal my highly anticipated gifmaking tutorial using Neural Filters - a very powerful collection of filters that really broadened my scope in gifmaking over the past 12 months.

Before I get into this tutorial, I want to thank @laurabenanti, @maines, @cobbbvanth, and @cal-kestis for their unconditional support over the course of my journey of investigating the Neural Filters & their valuable input on the rendering performance!

In this tutorial, I will outline what the Photoshop Neural Filters do and how I use them in my workflow - multiple examples will be provided for better clarity. Finally, I will talk about some known performance issues with the filters & some feasible workarounds.

Tutorial Structure:

Meet the Neural Filters: What they are and what they do

Why I use Neural Filters? How I use Neural Filters in my giffing workflow

Getting started: The giffing workflow in a nutshell and installing the Neural Filters

Applying Neural Filters onto your gif: Making use of the Neural Filters settings; with multiple examples

Testing your system: recommended if you’re using Neural Filters for the first time

Rendering performance: Common Neural Filters performance issues & workarounds

For quick reference, here are the examples that I will show in this tutorial:

Example 1: Image Enhancement | improving the image quality of gifs prepared from highly compressed video files

Example 2: Facial Enhancement | enhancing an individual's facial features

Example 3: Colour Manipulation | colourising B&W gifs for a colourful gifset

Example 4: Artistic effects | transforming landscapes & adding artistic effects onto your gifs

Example 5: Putting it all together | my usual giffing workflow using Neural Filters

What you need & need to know:

Software: Photoshop 2021 or later (recommended: 2023 or later)*

Hardware: 8GB of RAM; having a supported GPU is highly recommended*

Difficulty: Advanced (requires a lot of patience); knowledge of gifmaking and the video timeline is assumed

Key concepts: Smart Layer / Smart Filters

Benchmarking your system: Neural Filters test files**

Supplementary materials: Tutorial Resources / Detailed findings on rendering gifs with Neural Filters + known issues***

*I primarily gif on an M2 Max MacBook Pro that's running Photoshop 2024, but I also have experience gifmaking on a few other Mac models from 2012 to 2023.

**Using Neural Filters can be resource intensive, so it’s helpful to run the test files yourself. I’ll outline some known performance issues with Neural Filters and workarounds later in the tutorial.

***This supplementary page contains additional Neural Filters benchmark tests and instructions, as well as more information on the rendering performance (for Apple Silicon-based devices) when subject to heavy Neural Filters gifmaking workflows

Tutorial under the cut. Like / Reblog this post if you find this tutorial helpful. Linking this post as an inspo link will also be greatly appreciated!

1. Meet the Neural Filters!

Neural Filters are powered by Adobe's machine learning engine known as Adobe Sensei. They offer a non-destructive way to streamline workflows that would've been difficult and/or tedious to do manually.

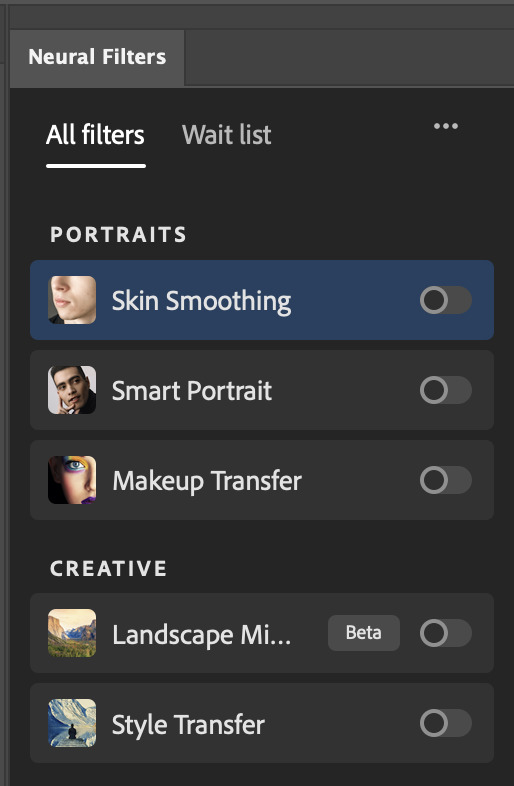

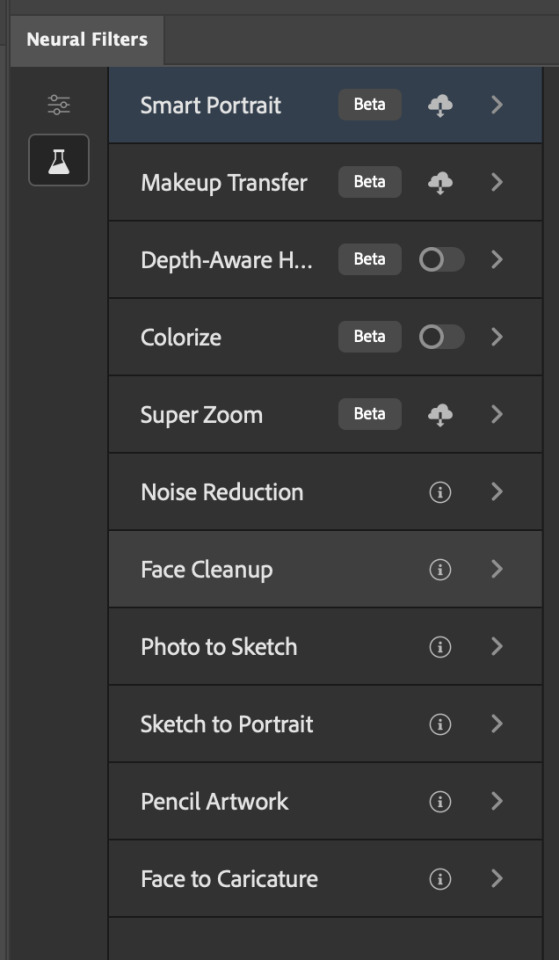

Here are the Neural Filters available in Photoshop 2024:

Skin Smoothing: Removes blemishes on the skin

Smart Portrait: This is a cloud-based filter that allows you to change the mood, facial age, hair, etc using the sliders+

Makeup Transfer: Applies the makeup (from a reference image) to the eyes & mouth area of your image

Landscape Mixer: Transforms the landscape of your image (e.g. seasons & time of the day, etc), based on the landscape features of a reference image

Style Transfer: Applies artistic styles e.g. texturings (from a reference image) onto your image

Harmonisation: Adjusts the colour balance of your image based on the lighting of the background image+

Colour Transfer: Applies the colour scheme (of a reference image) onto your image

Colourise: Adds colours onto a B&W image

Super Zoom: Zoom / crop an image without losing resolution+

Depth Blur: Blurs the background of the image

JPEG Artefacts Removal: Removes artefacts caused by JPEG compression

Photo Restoration: Enhances image quality & facial details

+These three filters aren't used in my giffing workflow. The cloud-based nature of Smart Portrait leads to disjointed looking frames. For Harmonisation, applying this on a gif causes a Neural Filters timeout error. Finally, Super Zoom does not currently support output as a Smart Filter.

If you're running Photoshop 2021 or an earlier version of Photoshop 2022, you will see a smaller selection of Neural Filters:

Things to be aware of:

You can apply up to six Neural Filters at the same time

Filters where you can use your own reference images: Makeup Transfer (portraits only), Landscape Mixer, Style Transfer (not available in Photoshop 2021), and Colour Transfer

Later iterations of Photoshop 2023 & newer: The first three default presets for Landscape Mixer and Colour Transfer are currently broken.

2. Why I use Neural Filters?

Here are my four main Neural Filters use cases in my gifmaking process. In each use case I'll list out the filters that I use:

Enhancing Image Quality:

Common wisdom is to find the highest quality video to gif from for a media release & avoid YouTube whenever possible. However for smaller / niche media (e.g. new & upcoming musical artists), prepping gifs from highly compressed YouTube videos is inevitable.

So how do I get around this? I have found Neural Filters pretty handy when it comes to both correcting issues from video compression & enhancing details in gifs prepared from these highly compressed video files.

Filters used: JPEG Artefacts Removal / Photo Restoration

Facial Enhancement:

When I prepare gifs from highly compressed videos, something I like to do is to enhance the facial features. This is again useful when I make gifsets from compressed videos & want to fill up my final panel with a close-up shot.

Filters used: Skin Smoothing / Makeup Transfer / Photo Restoration (Facial Enhancement slider)

Colour Manipulation:

Neural Filters is a powerful way to do advanced colour manipulation - whether I want to quickly transform the colour scheme of a gif or transform a B&W clip into something colourful.

Filters used: Colourise / Colour Transfer

Artistic Effects:

This is one of my favourite things to do with Neural Filters! I enjoy using the filters to create artistic effects by feeding textures that I've downloaded as reference images. I also enjoy using these filters to transform the overall atmosphere of my composite gifs. The gifsets where I've leveraged Neural Filters for artistic effects can be found under this tag on usergif.

Filters used: Landscape Mixer / Style Transfer / Depth Blur

How I use Neural Filters over different stages of my gifmaking workflow:

I want to outline how I use different Neural Filters throughout my gifmaking process. This can be roughly divided into two stages:

Stage I: Enhancement and/or Colourising | Takes place early in my gifmaking process. I process a large amount of component gifs by applying Neural Filters for enhancement purposes and adding some base colourings.++

Stage II: Artistic Effects & more Colour Manipulation | Takes place when I'm assembling my component gifs in the big PSD / PSB composition file that will be my final gif panel.

I will walk through this in more detail later in the tutorial.

++I personally like to keep the size of the component gifs in their original resolution (a mixture of 1080p & 4K), to get best possible results from the Neural Filters and have more flexibility later on in my workflow. I resize & sharpen these gifs after they're placed into my final PSD composition files in Tumblr dimensions.

3. Getting started

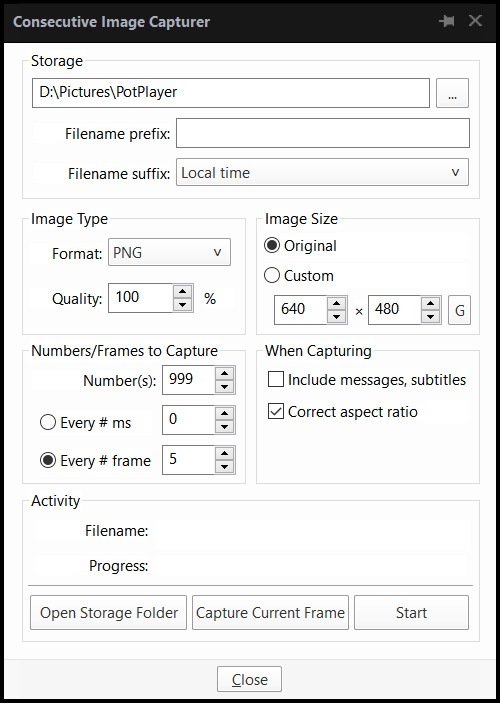

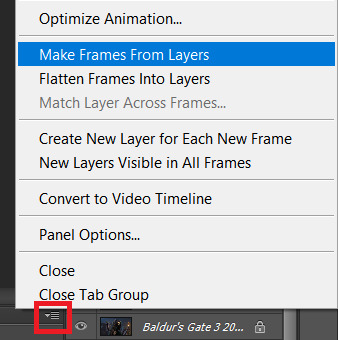

The essence is to output Neural Filters as a Smart Filter on the smart object when working with the Video Timeline interface. Your workflow will contain the following steps:

Prepare your gif

In the frame animation interface, set the frame delay to 0.03s and convert your gif to the Video Timeline

In the Video Timeline interface, go to Filter > Neural Filters and output to a Smart Filter

Flatten or render your gif (either approach is fine). To flatten your gif, play the "flatten" action from the gif prep action pack. To render your gif as a .mov file, go to File > Export > Render Video & use the following settings.

Setting up:

o.) To get started, prepare your gifs the usual way - whether you screencap or clip videos. You should see your prepared gif in the frame animation interface as follows:

Note: As mentioned earlier, I keep the gifs in their original resolution right now because working with a larger dimension document allows more flexibility later on in my workflow. I have also found that I get higher quality results working with more pixels. I eventually do my final sharpening & resizing when I fit all of my component gifs to a main PSD composition file (that's of Tumblr dimension).

i.) To use Smart Filters, convert your gif to a Smart Video Layer.

As an aside, I like to work with everything in 0.03s until I finish everything (then correct the frame delay to 0.05s when I upload my panels onto Tumblr).

For convenience, I use my own action pack to first set the frame delay to 0.03s (highlighted in yellow) and then convert to timeline (highlighted in red) to access the Video Timeline interface. To play an action, press the play button highlighted in green.

Once you've converted this gif to a Smart Video Layer, you'll see the Video Timeline interface as follows:

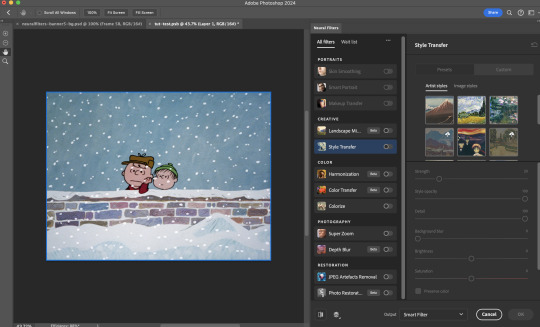

ii.) Select your gif (now as a Smart Layer) and go to Filter > Neural Filters
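For a feel of what the two frame delays mentioned above (0.03s while working, 0.05s for the Tumblr upload) mean for playback, here is a minimal Python sketch. The function name `playback_stats` and the 60-frame gif are hypothetical and only there for illustration; the arithmetic is just frames per second = 1 / delay and duration = frames × delay.

```python
def playback_stats(frame_count: int, frame_delay_s: float) -> tuple[float, float]:
    """Return (frames per second, total duration in seconds) for a gif."""
    if frame_count <= 0 or frame_delay_s <= 0:
        raise ValueError("frame_count and frame_delay_s must be positive")
    fps = 1.0 / frame_delay_s                  # how many frames are shown per second
    duration_s = frame_count * frame_delay_s   # how long one loop of the gif lasts
    return fps, duration_s


# Hypothetical 60-frame gif at the working delay and the upload delay.
for delay in (0.03, 0.05):
    fps, duration = playback_stats(60, delay)
    print(f"delay {delay:.2f}s: {fps:.1f} fps, {duration:.1f}s per loop")
```

The same frames simply play slower at 0.05s, which is why the delay can be corrected at the very end without touching the frames themselves.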

Installing Neural Filters:

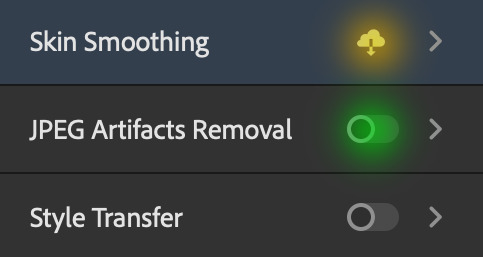

Install the individual Neural Filters that you want to use. If the filter isn't installed, it will show a cloud symbol (highlighted in yellow). If the filter is already installed, it will show a toggle button (highlighted in green)

When you toggle this button, the Neural Filters preview window will look like this (where the toggle button next to the filter that you use turns blue)

4. Using Neural Filters

Once you have installed the Neural Filters that you want to use in your gif, you can toggle on a filter and play around with the sliders until you're satisfied. Here I'll walk through multiple concrete examples of how I use Neural Filters in my giffing process.

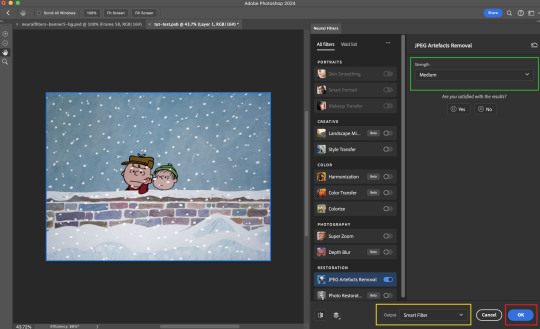

Example 1: Image enhancement | sample gifset

This is my typical Stage I Neural Filters gifmaking workflow. When giffing older or more niche media releases, my main concern is the video compression that leads to a lot of artefacts in the screencapped / video clipped gifs.

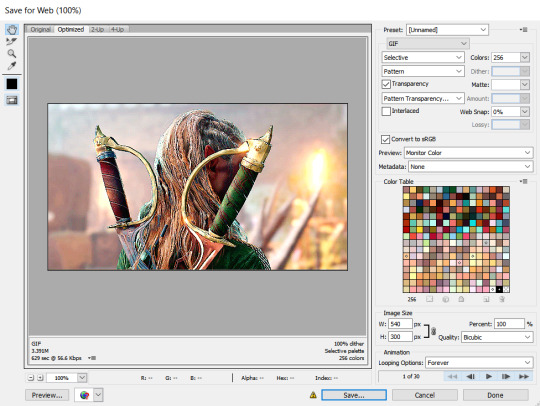

To fix the artefacts from compression, I go to Filter > Neural Filters, and toggle JPEG Artefacts Removal filter. Then I choose the strength of the filter (boxed in green), output this as a Smart Filter (boxed in yellow), and press OK (boxed in red).

Note: The filter has to be fully processed before you can press the OK button!

After applying the Neural Filters, you'll see "Neural Filters" under the Smart Filters property of the smart layer

Flatten / render your gif

Example 2: Facial enhancement | sample gifset

This is my routine use case during my Stage I Neural Filters gifmaking workflow. For musical artists (e.g. Maisie Peters), YouTube is often the only place where I'm able to find some videos to prepare gifs from. However even the highest resolution video available on YouTube is highly compressed.

Go to Filter > Neural Filters and toggle on Photo Restoration. If Photoshop recognises faces in the image, there will be a "Facial Enhancement" slider under the filter settings.

Play around with the Photo Enhancement & Facial Enhancement sliders. You can also expand the "Adjustment" menu to make additional adjustments, e.g. removing noise and reducing different types of artefacts.

Once you're happy with the results, press OK and then flatten / render your gif.

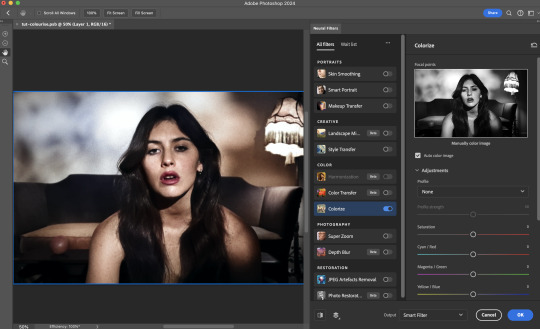

Example 3: Colour Manipulation | sample gifset

Want to make a colourful gifset but the source video is in B&W? This is where Colourise from Neural Filters comes in handy! This same colourising approach is also very helpful for colouring poorly lit scenes as detailed in this tutorial.

Here's a B&W gif that we want to colourise:

Highly recommended: add some adjustment layers onto the B&W gif to improve the contrast & depth. This will give you higher quality results when you colourise your gif.

Go to Filter > Neural Filters and toggle on Colourise.

Make sure "Auto colour image" is enabled.

Play around with further adjustments e.g. colour balance, until you're satisfied then press OK.

Important: When you colourise a gif, you need to double check that the resulting skin tone is accurate to real life. I personally go to Google Images and search up photoshoots of the individual / character that I'm giffing for quick reference.

Add additional adjustment layers until you're happy with the colouring of the skin tone.

Once you're happy with the additional adjustments, flatten / render your gif. And voila!

Note: For Colour Manipulation, I use Colourise in my Stage I workflow and Colour Transfer in my Stage II workflow to do other types of colour manipulations (e.g. transforming the colour scheme of the component gifs)

Example 4: Artistic Effects | sample gifset

This is where I use Neural Filters for the bulk of my Stage II workflow: the most enjoyable stage in my editing process!

Normally I would be working with my big composition files with multiple component gifs inside it. To begin the fun, drag a component gif (in PSD file) to the main PSD composition file.

Resize this gif in the composition file until you're happy with the placement

Duplicate this gif. Sharpen the bottom layer (highlighted in yellow), and then select the top layer (highlighted in green) & go to Filter > Neural Filters

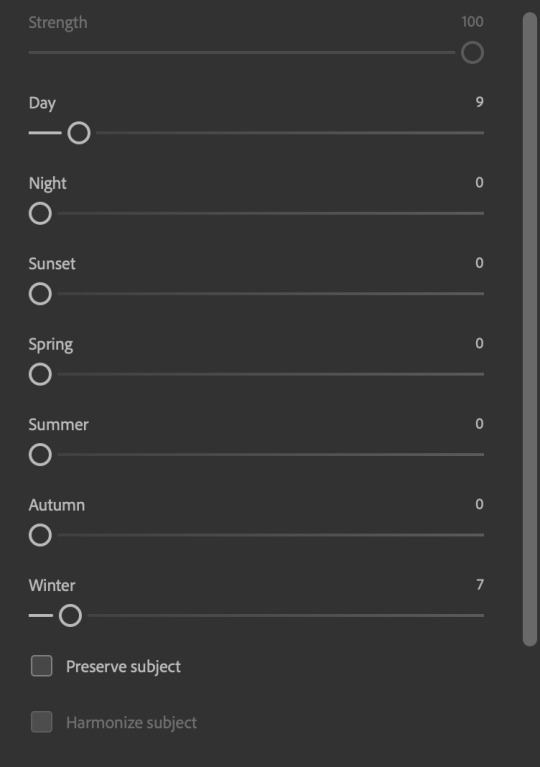

I like to use Style Transfer and Landscape Mixer to create artistic effects from Neural Filters. In this particular example, I've chosen Landscape Mixer

Select a preset or feed a custom image to the filter (here I chose a texture that I have on my computer)

Play around with the different sliders e.g. time of the day / seasons

Important: uncheck "Harmonise Subject" & "Preserve Subject" - these two settings are known to cause performance issues when you render a multiframe smart object (e.g. for a gif)

Once you're happy with the artistic effect, press OK

To ensure you preserve the actual subject you want to gif (bc Preserve Subject is unchecked), add a layer mask onto the top layer (with Neural Filters) and mask out the facial region. You might need to play around with the Layer Mask Position keyframes or Rotoscope your subject in the process.

After you're happy with the masking, flatten / render this composition file and voila!

Example 5: Putting it all together | sample gifset

Let's recap on the Neural Filters gifmaking workflow and where Stage I and Stage II fit in my gifmaking process:

i. Preparing & enhancing the component gifs

Prepare all component gifs and convert them to smart layers

Stage I: Add base colourings & apply Photo Restoration / JPEG Artefacts Removal to enhance the gif's image quality

Flatten all of these component gifs and convert them back to Smart Video Layers (this process can take a lot of time)

Some of these enhanced gifs will be Rotoscoped so this is done before adding the gifs to the big PSD composition file

ii. Setting up the big PSD composition file

Make a separate PSD composition file (Ctrl / Cmd + N) that's of Tumblr dimension (e.g. 540px in width)

Drag all of the component gifs used into this PSD composition file

Enable Video Timeline and trim the work area

In the composition file, resize / move the component gifs until you're happy with the placement & sharpen these gifs if you haven't already done so

Duplicate the layers that you want to use Neural Filters on
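To see how much the component gifs shrink when they go from their original resolution into a 540px-wide composition, here is a minimal Python sketch. It assumes the gif is scaled to fill the full 540px width with its aspect ratio preserved, which will not hold for every layout; the function name `fit_to_width` is hypothetical.

```python
def fit_to_width(src_width: int, src_height: int, target_width: int = 540) -> tuple[int, int]:
    """Scale (src_width, src_height) to target_width, keeping the aspect ratio."""
    if min(src_width, src_height, target_width) <= 0:
        raise ValueError("all dimensions must be positive")
    scale = target_width / src_width
    # Round the height to the nearest whole pixel.
    return target_width, round(src_height * scale)


# The two source resolutions mentioned in the tutorial: 1080p and 4K.
for width, height in ((1920, 1080), (3840, 2160)):
    print(f"{width}x{height} ->", fit_to_width(width, height))
```

Both sources end up at the same panel size, so the extra pixels in the originals only matter while the Neural Filters are being applied.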

iii. Working with Neural Filters in the PSD composition file

Stage II: Neural Filters to create artistic effects / more colour manipulations!

Mask the smart layers with Neural Filters to both preserve the subject and avoid colouring issues from the filters

Flatten / render the PSD composition file: the more component gifs in your composition file, the longer the exporting will take. (I prefer to render the composition file into a .mov clip to prevent overwriting a file that I've spent effort putting together.)

Note: In some of my layout gifsets (where I've heavily used Neural Filters in Stage II), the rendering time for the panel took more than 20 minutes. This is one of the rare instances where I was maxing out my computer's memory.

Useful things to take note of:

Important: If you're using Neural Filters for Colour Manipulation or Artistic Effects, you need to take a lot of care ensuring that the skin tone of nonwhite characters / individuals is accurately coloured

Use the Facial Enhancement slider from Photo Restoration in moderation: if you max out the slider value, you risk oversharpening your gif later on in your gifmaking workflow

You will get higher quality results from Neural Filters by working with larger image dimensions: This gives Neural Filters more pixels to work with. You also get better quality results by feeding higher resolution reference images to the Neural Filters.

Makeup Transfer is more stable when the person / character has minimal motion in your gif

You might get unexpected results from Landscape Mixer if you feed a reference image that doesn't feature a distinctive landscape. This is not always a bad thing: for instance, I have used this texture as a reference image for Landscape Mixer, to create the shimmery effects as seen in this gifset

5. Testing your system

If this is the first time you're applying Neural Filters directly onto a gif, it will be helpful to test out your system yourself. This will help:

Gauge the expected rendering time that you'll need to wait for your gif to export, given specific Neural Filters that you've used

Identify potential performance issues when you render the gif: this is important and will determine whether you will need to play back your gif in full before flattening / rendering the file.

Understand how your system's resources are being utilised: Inputs from Windows PC users & Mac users alike are welcome!

About the Neural Filters test files:

Contains six distinct files, each using different Neural Filters

Two sizes of test files: one copy in full HD (1080p) and another copy downsized to 540px

One folder containing the flattened / rendered test files

How to use the Neural Filters test files:

What you need:

Photoshop 2022 or newer (recommended: 2023 or later)

Install the following Neural Filters: Landscape Mixer / Style Transfer / Colour Transfer / Colourise / Photo Restoration / Depth Blur

Recommended for some Apple Silicon-based MacBook Pro models: Enable High Power Mode

How to use the test files:

For optimal performance, close all background apps

Open a test file

Flatten the test file into frames (load this action pack & play the “flatten” action)

Take note of the time it takes until you’re directed to the frame animation interface

Compare the rendered frames to the expected results in this folder: check that all of the frames look the same. If they don't, you will need to play back the test file in full before flattening the file.†

Re-run the test file without the Neural Filters and take note of how long it takes before you're directed to the frame animation interface

Recommended: Take note of how your system is utilised during the rendering process (more info here for MacOS users)

†This is a performance issue known as flickering that I will discuss in the next section. If you come across this, you'll have to play back a gif where you've used Neural Filters (on the video timeline) in full, prior to flattening / rendering it.
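Once you have both timings from the steps above (with and without Neural Filters), a few lines of Python can turn them into an overhead figure. This is only a sketch: the function name `neural_filter_overhead` and the example timings are hypothetical, not measurements from any real system.

```python
def neural_filter_overhead(with_filters_s: float, without_filters_s: float) -> tuple[float, float]:
    """Return (extra seconds, slowdown factor) caused by the Neural Filters."""
    if with_filters_s < 0 or without_filters_s <= 0:
        raise ValueError("timings must be positive")
    extra_s = with_filters_s - without_filters_s
    slowdown = with_filters_s / without_filters_s
    return extra_s, slowdown


# Hypothetical stopwatch readings for one test file, in seconds.
extra, factor = neural_filter_overhead(with_filters_s=95.0, without_filters_s=12.5)
print(f"Neural Filters added {extra:.1f}s ({factor:.1f}x slower)")
```

Recording this per test file makes it easier to compare filters against each other on the same machine.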

Factors that could affect the rendering performance / time (more info):

The number of frames, dimension, and colour bit depth of your gif

If you use Neural Filters with facial recognition features, the rendering time will be affected by the number of characters / individuals in your gif

Most resource intensive filters (powered by largest machine learning models): Landscape Mixer / Photo Restoration (with Facial Enhancement) / JPEG Artefacts Removal

Least resource intensive filters (smallest machine learning models): Colour Transfer / Colourise

The number of Neural Filters that you apply at once / The number of component gifs with Neural Filters in your PSD file

Your system: system memory, the GPU, and the architecture of the system's CPU+++

+++ Rendering a gif with Neural Filters demands a lot of system memory & GPU horsepower. Rendering will be faster & more reliable on newer computers, as these systems have CPUs & GPUs with more modern instruction sets that are geared towards machine learning-based tasks.

Additionally, the unified memory architecture of Apple Silicon M-series chips is found to be quite efficient at processing Neural Filters.
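To get a rough sense of why the number of frames, the dimensions, and the colour bit depth all matter, here is a minimal Python sketch that estimates the size of the uncompressed pixel data in a gif. It assumes three colour channels and is only a lower bound on the raw frame data; it is not a measurement of what Photoshop or the Neural Filters models actually allocate. The function name `raw_frame_data_bytes` and the 60-frame count are hypothetical.

```python
def raw_frame_data_bytes(frames: int, width: int, height: int,
                         bits_per_channel: int = 8, channels: int = 3) -> int:
    """Uncompressed pixel data for all frames, in bytes (assumes 3 colour channels)."""
    if min(frames, width, height, channels) <= 0 or bits_per_channel not in (8, 16, 32):
        raise ValueError("invalid frame description")
    bytes_per_channel = bits_per_channel // 8
    return frames * width * height * channels * bytes_per_channel


# Hypothetical 60-frame gif at 1080p vs. 540px wide, in 8-bit and 16-bit.
for label, (w, h) in (("1080p", (1920, 1080)), ("540px", (540, 304))):
    for depth in (8, 16):
        size_mb = raw_frame_data_bytes(60, w, h, depth) / (1024 ** 2)
        print(f"{label}, {depth}-bit: about {size_mb:.0f} MB of raw pixels")
```

Going from 8-bit to 16-bit doubles the raw data, while the frame dimensions scale it with the pixel count, so all three factors multiply together.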

6. Performance issues & workarounds

Common Performance issues:

I will discuss several common issues related to rendering or exporting a multi-frame smart object (e.g. your composite gif) that uses Neural Filters below. These are commonly caused by insufficient system memory and/or an underpowered GPU.

Flickering frames: in the flattened / rendered file, Neural Filters aren't applied to some of the frames+-+

Scrambled frames: the frames in the flattened / rendered file aren't in order

Neural Filters exceeded the timeout limit error: this is normally a software related issue

Long export / rendering time: long rendering time is expected in heavy workflows

Laggy Photoshop / system interface: having to wait quite a long time to preview the next frame on the timeline

Issues with Landscape Mixer: Using the filter gives ill-defined results (common in older systems)--

Workarounds:

Workarounds that could reduce unreliable rendering performance & long rendering time:

Close other apps running in the background

Work with smaller colour bit depth (i.e. 8-bit rather than 16-bit)

Downsize your gif before converting to the video timeline-+-

Try to keep the number of frames as low as possible

Avoid stacking multiple Neural Filters at once. Try applying & rendering the filters that you want one by one

Specific workarounds for specific issues:

How to resolve flickering frames: If you come across flickering, you will need to play back your gif on the video timeline in full to find the frames where the filter isn't applied. You will then need to select all of the frames to allow Photoshop to reprocess them before you render your gif.+-+

What to do if you come across a Neural Filters timeout error? This is caused by several incompatible Neural Filters e.g. Harmonisation (both the filter itself and as a setting in Landscape Mixer), Scratch Reduction in Photo Restoration, and trying to stack multiple Neural Filters with facial recognition features.

If the timeout error is caused by stacking multiple filters, a feasible workaround is to apply the Neural Filters that you want to use one by one over multiple rendering sessions, rather than all of them in one go.

+-+This is a very common issue for Apple Silicon-based Macs. Flickering happens when a gif with Neural Filters is rendered without being previously played back in the timeline.

This issue is likely related to the memory bandwidth & the GPU cores of the chips, because not all Apple Silicon-based Macs exhibit this behaviour (i.e. devices equipped with Max / Ultra M-series chips are mostly unaffected).

-- As mentioned in the supplementary page, Landscape Mixer requires a lot of GPU horsepower to be fully rendered. For older systems (pre-2017 builds), there are no workarounds other than to avoid using this filter.

-+- For smaller dimensions, the size of the machine learning models powering the filters plays an outsized role in the rendering time (i.e. there is only a marginal reduction in rendering time when downsizing a 1080p file to Tumblr dimensions). If you use filters powered by larger models e.g. Landscape Mixer and Photo Restoration, you will need to be very patient when exporting your gif.

7. More useful resources on using Neural Filters

Creating animations with Neural Filters effects | Max Novak

Using Neural Filters to colour correct by @edteachs

I hope this is helpful! If you have any questions or need any help related to the tutorial, feel free to send me an ask 💖

#photoshop tutorial#gif tutorial#dearindies#usernik#useryoshi#usershreyu#userisaiah#userroza#userrobin#userraffa#usercats#userriel#useralien#userjoeys#usertj#alielook#swearphil#*#my resources#my tutorials

538 notes

Text

YES!!! I am saved! removed the other mouse from the picture entirely (including the usb dongle, which I'd left in last time) and that seems to have resolved the issue completely. Halleluja it wasn't a windows issue but a mouse button issue...

I think. here comes the weird part:

I am only pressing the left mouse button on my trackpad here, with the usb dongle in. The middle mouse button does not register as pressed in when I am not also pressing left mouse, on my trackpad.

Where on earth is the middle mouse button press coming from there?? I'm fully prepared to believe the scroll wheel is busted but that doesn't explain why it only activates on pressing a physically entirely unrelated left mouse button.

Always nice when an issue you run into on average a couple times per hour has several threads on the forum with no solutions found that have all existed since at least 2023. Windows what the hell are you doing

#computer-smart people help me out here because I am completely lost#actually I lie I'm pretty sure I have a vague idea of what's going on (mouse borken) I just don't entirely get#how mouse borken results in such a wierdly intermittent issue that is fixed upon pressing Ctrl/alt/del#Does the software store a button press somehow??#update: based on some testing I've concluded that my middle mouse button hangs after I press it and then remains pressed#until I use either the left or right mouse button#at which point windows treats the middle button as “released” until I press L mouse or R mouse again#at which points it briefly registers the middle mouse button as pressed again#so anyway I need a new mouse#why control alt delete fixes this cueing issue remains weird though because it's not like that physically un-presses my middle mouse button

3 notes

Text

100 Inventions by Women

LIFE-SAVING/MEDICAL/GLOBAL IMPACT:

Artificial Heart Valve – Nina Starr Braunwald

Stem Cell Isolation from Bone Marrow – Ann Tsukamoto

Chemotherapy Drug Research – Gertrude Elion

Antifungal Antibiotic (Nystatin) – Rachel Fuller Brown & Elizabeth Lee Hazen

Apgar Score (Newborn Health Assessment) – Virginia Apgar

Vaccination Distribution Logistics – Sara Josephine Baker

Hand-Held Laser Device for Cataracts – Patricia Bath

Portable Life-Saving Heart Monitor – Dr. Helen Brooke Taussig

Medical Mask Design – Ellen Ochoa

Dental Filling Techniques – Lucy Hobbs Taylor

Radiation Treatment Research – Cécile Vogt

Ultrasound Advancements – Denise Grey

Biodegradable Sanitary Pads – Arunachalam Muruganantham (with women-led testing teams)

First Computer Algorithm – Ada Lovelace

COBOL Programming Language – Grace Hopper

Computer Compiler – Grace Hopper

FORTRAN/FORMAC Language Development – Jean E. Sammet

Caller ID and Call Waiting – Dr. Shirley Ann Jackson

Voice over Internet Protocol (VoIP) – Marian Croak

Wireless Transmission Technology – Hedy Lamarr

Polaroid Camera Chemistry / Digital Projection Optics – Edith Clarke

Jet Propulsion Systems Work – Yvonne Brill

Infrared Astronomy Tech – Nancy Roman

Astronomical Data Archiving – Henrietta Swan Leavitt

Nuclear Physics Research Tools – Chien-Shiung Wu

Protein Folding Software – Eleanor Dodson

Global Network for Earthquake Detection – Inge Lehmann

Earthquake Resistant Structures – Edith Clarke

Water Distillation Device – Maria Telkes

Portable Water Filtration Devices – Theresa Dankovich

Solar Thermal Storage System – Maria Telkes

Solar-Powered House – Mária Telkes

Solar Cooker Advancements – Barbara Kerr

Microbiome Research – Maria Gloria Dominguez-Bello

Marine Navigation System – Ida Hyde

Anti-Malarial Drug Work – Tu Youyou

Digital Payment Security Algorithms – Radia Perlman

Wireless Transmitters for Aviation – Harriet Quimby

Contributions to Touchscreen Tech – Dr. Annette V. Simmonds

Robotic Surgery Systems – Paula Hammond

Battery-Powered Baby Stroller – Ann Moore

Smart Textile Sensor Fabric – Leah Buechley

Voice-Activated Devices – Kimberly Bryant

Artificial Limb Enhancements – Aimee Mullins

Crash Test Dummies for Women – Astrid Linder

Shark Repellent – Julia Child

3D Illusionary Display Tech – Valerie Thomas

Biodegradable Plastics – Julia F. Carney

Ink Chemistry for Inkjet Printers – Margaret Wu

Computerised Telephone Switching – Erna Hoover

Word Processor Innovations – Evelyn Berezin

Braille Printer Software – Carol Shaw

⸻

HOUSEHOLD & SAFETY INNOVATIONS:

Home Security System – Marie Van Brittan Brown

Fire Escape – Anna Connelly

Life Raft – Maria Beasley

Windshield Wiper – Mary Anderson

Car Heater – Margaret Wilcox

Toilet Paper Holder – Mary Beatrice Davidson Kenner

Foot-Pedal Trash Can – Lillian Moller Gilbreth

Retractable Dog Leash – Mary A. Delaney

Disposable Diaper Cover – Marion Donovan

Disposable Glove Design – Kathryn Croft

Ice Cream Maker – Nancy Johnson

Electric Refrigerator Improvements – Florence Parpart

Fold-Out Bed – Sarah E. Goode

Flat-Bottomed Paper Bag Machine – Margaret Knight

Square-Bottomed Paper Bag – Margaret Knight

Street-Cleaning Machine – Florence Parpart

Improved Ironing Board – Sarah Boone

Underwater Telescope – Sarah Mather

Clothes Wringer – Ellene Alice Bailey

Coffee Filter – Melitta Bentz

Scotchgard (Fabric Protector) – Patsy Sherman

Liquid Paper (Correction Fluid) – Bette Nesmith Graham

Leak-Proof Diapers – Valerie Hunter Gordon

FOOD/CONVENIENCE/CULTURAL IMPACT:

Chocolate Chip Cookie – Ruth Graves Wakefield

Monopoly (The Landlord’s Game) – Elizabeth Magie

Snugli Baby Carrier – Ann Moore

Barrel-Style Curling Iron – Theora Stephens

Natural Hair Product Line – Madame C.J. Walker

Virtual Reality Journalism – Nonny de la Peña

Digital Camera Sensor Contributions – Edith Clarke

Textile Color Processing – Beulah Henry

Ice Cream Freezer – Nancy Johnson

Spray-On Skin (ReCell) – Fiona Wood

Langmuir-Blodgett Film – Katharine Burr Blodgett

Fish & Marine Signal Flares – Martha Coston

Windshield Washer System – Charlotte Bridgwood

Smart Clothing / Sensor Integration – Leah Buechley

Fibre Optic Pressure Sensors – Mary Lou Jepsen

#women#inventions#technology#world#history#invented#creations#healthcare#home#education#science#feminism#feminist

48 notes

Text

Women pulling Lever on a Drilling Machine, 1978 Lee, Howl & Company Ltd., Tipton, Staffordshire, England photograph by Nick Hedges image credit: Nick Hedges Photography

* * * *

Tim Boudreau

About the whole DOGE-will-rewrite Social Security's COBOL code in some new language thing, since this is a subject I have a whole lot of expertise in, a few anecdotes and thoughts.

Some time in the early 2000s I was doing some work with the real-time Java team at Sun, and there was a huge defense contractor with a peculiar query: Could we document how much memory an instance of every object type in the JDK uses? And could we guarantee that that number would never change, and definitely never grow, in any future Java version?

I remember discussing this with a few colleagues in a pub after work, and talking it through, and we all arrived at the conclusion that the only appropriate answer to this question was "Hell no." and that it was actually kind of idiotic.

Say you've written the code, in Java 5 or whatever, that launches nuclear missiles. You've tested it thoroughly, it's been reviewed six ways to Sunday because you do that with code like this (or you really, really, really should). It launches missiles and it works.

A new version of Java comes out. Do you upgrade? No, of course you don't upgrade. It works. Upgrading buys you nothing but risk. Why on earth would you? Because you could blow up the world 10 milliseconds sooner after someone pushes the button?

It launches fucking missiles. Of COURSE you don't do that.

There is zero reason to ever do that, and to anyone managing such a project who's a grownup, that's obvious. You don't fuck with things that work just to be one of the cool kids. Especially not when the thing that works is life-or-death (well, in this case, just death).

Another case: In the mid 2000s I trained some developers at Boeing. They had all this Fortran materials analysis code from the 70s - really fussy stuff, so you could do calculations like, if you have a sheet of composite material that is 2mm of this grade of aluminum bonded to that variety of fiberglass with this type of resin, and you drill a 1/2" hole in it, what is the effect on the strength of that airplane wing part when this amount of torque is applied at this angle. Really fussy, hard-to-do but when-it's-right-it's-right-forever stuff.

They were taking a very sane, smart approach to it: Leave the Fortran code as-is - it works, don't fuck with it - just build a nice, friendly graphical UI in Java on top of it that *calls* the code as-is.

We are used to broken software. The public has been trained to expect low quality as a fact of life - and the industry is rife with "agile" methodologies *designed* to churn out crappy software, because crappy guarantees a permanent ongoing revenue stream. It's an article of faith that everything is buggy (and if it isn't, we've got a process or two to sell you that will make it that way).

It's ironic. Every other form of engineering involves moving parts and things that wear and decay and break. Software has no moving parts. Done well, it should need *vastly* less maintenance than your car or the bridges it drives on. Software can actually be *finished* - it is heresy to say it, but given a well-defined problem, it is possible to actually *solve* it and move on, and not need to babysit or revisit it. In fact, most of our modern technological world is possible because of such solved problems. But we're trained to ignore that.

Yeah, COBOL is really long-in-the-tooth, and few people on earth want to code in it. But they have a working system with decades invested in addressing bugs and corner-cases.

Rewriting stuff - especially things that are life-and-death - in a fit of pique, or because of an emotional reaction to the technology used, or because you want to use the toys all the cool kids use - is idiotic. It's immaturity on display to the world.

Doing it with AI that's going to read COBOL code and churn something out in another language - so now you have code no human has read, written or understands - is simply insane. And the best software translators plus AI out there are going to get things wrong - grievously wrong. And the odds of anyone figuring out what or where before it leads to disaster are low, never mind tracing that back to the original code and figuring out what that was supposed to do.

They probably should find their way off COBOL simply because people who know it and want to endure using it are hard to find and expensive. But you do that gradually, walling off parts of the system that work already and calling them from your language-du-jour, not building any new parts of the system in COBOL, and when you do need to make a change in one of those walled off sections, you migrate just that part.
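A minimal sketch of that gradual approach, in Python for brevity. Everything here is hypothetical: `legacy_routine` stands in for a call into the walled-off existing code, `migrated_routine` for its rewrite, and the 40% arithmetic is a made-up placeholder rather than any real benefit rule. The point is only the shape: each routine is switched over individually, only after it matches the old behaviour on sample inputs, and it can be put back at any time.

```python
from typing import Callable, Dict, Iterable, Set

Routine = Callable[[int], int]


def legacy_routine(amount_cents: int) -> int:
    """Hypothetical stand-in for a call into the existing legacy code."""
    return amount_cents * 40 // 100


def migrated_routine(amount_cents: int) -> int:
    """Hypothetical rewrite of the same routine in the new language."""
    return (amount_cents * 2) // 5


class MigrationFacade:
    """Routes each named routine to legacy or migrated code, one at a time."""

    def __init__(self) -> None:
        self._legacy: Dict[str, Routine] = {}
        self._migrated: Dict[str, Routine] = {}
        self._switched: Set[str] = set()

    def register(self, name: str, legacy: Routine) -> None:
        # Every routine starts out served by the legacy code.
        self._legacy[name] = legacy

    def add_migrated(self, name: str, migrated: Routine) -> None:
        # Adding a rewrite does not switch traffic to it.
        self._migrated[name] = migrated

    def matches_legacy(self, name: str, samples: Iterable[int]) -> bool:
        # Compare old and new results on the given sample inputs.
        legacy, migrated = self._legacy[name], self._migrated[name]
        return all(legacy(s) == migrated(s) for s in samples)

    def switch(self, name: str, samples: Iterable[int]) -> bool:
        # Switch only if the rewrite agrees with legacy on every sample.
        if self.matches_legacy(name, samples):
            self._switched.add(name)
            return True
        return False

    def roll_back(self, name: str) -> None:
        # Put the old piece back if the new version fails.
        self._switched.discard(name)

    def call(self, name: str, amount_cents: int) -> int:
        if name in self._switched:
            return self._migrated[name](amount_cents)
        return self._legacy[name](amount_cents)
```

Callers only ever go through `call`, so they never need to know which side of the wall a given routine currently lives on.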

We're basically talking about something like replacing the engine of a plane while it's flying. Now, do you do that a part-at-a-time with the ability to put back any piece where the new version fails? Or does it sound like a fine idea to vaporize the existing engine and beam in an object which a next-word-prediction software *says* is a contraption that does all the things the old engine did, and hope you don't crash?

The people involved in this have ZERO technical judgement.

#tech#software engineering#reality check#DOGE#computer madness#common sense#sanity#The gang that couldn't shoot straight#COBOL#Nick Hedges#machine world

43 notes

Text

Tech Tuesday: Curtis Everett

Summary: Curtis decides to take the next step and ask if you're willing to meet offline.

A/N: Reader is female. No physical descriptors used.

Warnings: Meeting someone from online, Mentions of past bad experiences. Please let me know if I missed any.

Previous

Series Masterlist

"So, what all has been going on with your computer? Did you bring it with you?" Curtis tries to keep his tone calm. He's been eager to meet you in person for months but has worked hard to make sure you feel safe to do so and that means not pushing you into it. He tries to keep his excited fidgeting to a minimum but he's worried it just makes him look even more suspicious. He's so used to keeping his features schooled into a scowl, but that's the last thing he wants to do to you.

"Basically it'll go for a while but then start freezing, stuttering and I have to force it to shut down before I can do anything else," you answer while sipping your coffee. You can't believe his eyes are as blue as the photo he sent. "I tried to see if it was because I was running some heavy duty games and maybe I was using too much RAM. I cleaned up so many programs, uninstalled a bunch of games I don't play anymore, but it just keeps happening."

Curtis rubs his beard as he thinks. "It might be a hardware issue. It's not my strong suit, but I can still take a look. At the very least I can ask my buddy, Mace, for help. He's a whiz with the hardware and could probably get you a good deal if any upgrades are needed."

"Oh yeah, you've talked about him before," you reassure yourself. Having him mention another stranger worried you but Mace has been brought up several times before, especially when Curtis mentioned getting upgraded tech.

"You definitely don't have to meet him," Curtis affirms. "He can be a real grump. And coming from me, that's saying something." He gives a light chuckle as he sips his coffee.

"Snow, I work at a hospital," you counter. "Ain't no kind of grump I haven't had to deal with before." You give Curtis a look that says 'I'm stronger than you'.

Curtis smiles fully, "absolutely fair, Heart." He struggles a little bit to not turn shy. That look, that strength, that self-assured smile has him weak in the knees. "Still," he coughs, trying to regain control of himself. "Still, uh, when...did you bring the computer with you? Would this be an okay time to take a look at it?"

"Friend of mine at the library is keeping an eye on it for me." Best to let him know you've got allies nearby. He hasn't really given anything to make you worry about, but his reactions to your safety protocols could be telling. Of course, if you were expecting him to look scared or upset at this news, Curtis happily defied those expectations as his eyes lit up.

"Oh, that's really smart! We can borrow one of their laptops to run some tests. That way my laptop's settings won't mess with your computer."

You smile, genuinely, for the first time all day. Curtis is very different and you couldn't be happier about it.

"Don't you need your diagnostic software?"

"If it's called for, sure. The basic versions are free to download and they'll help me pick up if it's actually a software issue. Plus, the libraries regularly wipe downloads after use, right?"

"Okay, everything's plugged in so let's get this running and see what happens."

After looking over the hardware Curtis had found no obvious reasons for the failure so you had gone to your friend and borrowed one of the laptops. Curtis's computer bag had an impressive collection of tools, wires and connectors. You always appreciate when a person is prepared.

He works in relative silence and it gives you the chance to really look at him. He's definitely handsome, as Cassandra pointed out when she loaned you the laptop. You weren't normally one for lip piercings but it seemed to work well on him, even though it was partially hidden by his beard.

The quiet between you two isn't so uncomfortable. It's how you spent a lot of time when you started gaming together. Talking over comms almost always resulted in a bunch of idiots either hitting on you, calling you a 'fake gamer,' lobbing insults at you, or all three at the same time. Snowpiercer was one of the few gamers who didn't care that you were a girl gamer. For him it was about how well you worked together. Given that the two of you could get high scores without having to talk over comms, it was no wonder the two of you started playing together more and more.

Even after you'd started voice chatting while playing, there was always a level of respect. Something you hadn't gotten from Chase.

Curtis gets out of the chair and gets a closer look at each of the fans, stopping at the one closest to the heat sink. "There it is," he intones. "This fan is dying. It's easy to miss because, by the time you know something's wrong, the whole computer's shut down and all the fans are stopped."

"Well, at least it's an easy fix," you comment. "Thanks for taking a look at it."

"Not a problem at all," he assures. "I'm happy to help."

You smile, "are you always this nice?"

"No," he shakes his head. "Definitely not. I'm not an ass, but I'm definitely not this nice to everyone."

"I'm honored."

He chuckles at that. "Like I said, I'm happy to help you out. You're the best teammate I've ever had and it just isn't as fun playing without you."

"I appreciate that, Curtis. So, what do I owe you?"

"Nothing."

"Don't do that to me, Snow. You know how I feel about owing people." It was a wound from Chase that had yet to heal. His use of favors as manipulation made you wary of anyone who said you don't owe anything.

Curtis sighs and nods. "Tell you what, there are some pastries at that coffee shop we were at that I avoided because I didn't want you to see me covered in crumbs. Wouldn't make for a good first impression." You smile at the thought. "Just get me a couple of those while I shut everything down here and return the laptop?"

"Deal." You stand to get moving but turn to him before you leave, "and thank you, again. Not just for the computer fix, but for...for respecting the boundaries."

He looks at you with those gorgeous blue eyes, "not a problem."

Next

Tech Tuesday Masterlist

Tagging @alicedopey; @delicatebarness; @ellethespaceunicorn; @icefrozendeadlyqueen; @jaqui-has-a-conspiracy-theory;

@late-to-the-party-81; @lokislady82 ; @peyton-warren @ronearoundblindly; @stellar-solar-flare

#tech tuesday#tech tuesday: curtis everett#curtis everett x reader#curtis everett x nurse!reader#curtis everett x female!reader

55 notes

Note

oooo okay omg imagine lyla knows miguel likes you. he won’t admit it but he does. and she purposely downloads this ‘love meter’ software. and whenever miguel talks to you at hq, the meter goes like “warning: you have reached maximum love limit, love overflowing” and red lights start flashing and everybody starts panicking 😭😭😭

OMG this reminds me of “operation: true love” on webtoon 🤭🤭!!

lyla is known to be silly and eccentric at times always knowing how to rile miguel up or use things she knows about him against him just for the fun of it. we know that lyla likes to take pictures of miguel with filters + recording him at his most embarrassing moments.

lyla is smart, obviously an AI, who you would think wouldn’t have a personality or feel emotions but she does. she has a mind of her own. miguel had confided in her that he had a crush on you. “oh my god! no way miguel~ who knew you were capable of such feelings!” she said snickering while she had secretly recorded their conversation maybe to one day show you proof that miguel liked you back. lyla is the confidant of the both of you. she knows you like him but miguel doesn’t know that you like him back. so she had devised this plan in hopes miguel and you got the memo.

she had installed a software called “love meter” secretly behind miguel’s back. one day she wanted to do a test run on the software as you were present, chatting with miguel in the control room. miles, gwen, pavitr, hobie, and margo were messing around in the background while letting you and miguel talk.

“warning: you have reached maximum love limit, love overflowing” it appeared on all the screens of the control room, which indicated miguel’s heart beat was rising by just being near you. the little love meter was at 100. the alarm then resounded throughout the HQ, as red flashing lights flickered throughout the entire multi-sectioned building.

everyone’s heads quickly turned towards miguel and you. miguel was about to speak but was soon cut off by the gang’s comments and laughter. you couldn’t help but stare at miguel, feeling your cheeks heat up and feeling a tad bit shy. you were genuinely shocked by the turn of events.

“i knew he had a crush on y/n!” gwen laughed while pointing a finger at miguel. hobie would definitely chime in as well, “who knew the big boss man is whipped for you,” directing his voice at you. miles and margo, laughed, literally everyone did while pav went up to miguel and you saying he had sensed the romantic tension from a mile away.

“LYLA! what did you do!?” miguel said embarrassed while he was BEET RED. he was really flustered. he was caught red handed and he wasn’t sure if you were about to reject him on the spot or not.

“sorry pal! i didn’t know it would do that…” lyla nervously giggled while she glanced at you then at miguel. you couldn’t help but laugh at the whole situation because of the way the other spider people came bustling in the control room thinking it was a problem. of course, it wasn’t it was just very hilarious to you that you found out that miguel has a crush on you TOO.

“guys-um, it isn’t an emergency, just go back to whatever you were doing.” he said as he stumbled over his words and told everyone to leave. he wasn’t necessarily mad but he was more so embarrassed. everyone was dismissed, even your favorite teenagers had all left but they respected your privacy. miguel would shake his head as he ran his hands through his hair, directing his attention to you but not being able to hold eye contact. quickly, lyla vanished into thin air but she could still hear everything.

“i’m sorry about what lyla did.” he came close to you as he placed an apologetic hand on your shoulder with a gentle touch. you could tell he was blushing. you could tell he was into you in the way his eyes would casually go to your lips then to your eyes. he did it a couple times.

“it’s fine miguel,” you inched closer to him, closing the distance between the two of you, “i wanted to tell you that i like you too!” you giggled while you made an effort to maintain eye contact with him. you were a very shy person but you weren’t one to stumble on your words. miguel was dumbfounded but at the same time very relieved that you liked him back.

“you like me too??” he gently stroked your cheek, “you like someone like me?” your heart ached a bit whenever he would put himself down like that, as if he felt he was incapable of being loved. you gently placed a hand on top of the hand he was caressing your cheek with as you leaned into his touch.

“miguel, of course. you’re capable of being cared for, thought of, and loved.” you embraced him as you wrapped your arms around his waist. he was stunned a bit not knowing what to do since he hasn’t hugged someone in such a long time. but slowly and carefully he wrapped his arms around your upper shoulders as he placed his chin on top of your head. with one hand he carded your hair lovingly, “i promise to be the best man i can be for you, if you allow me to.”

“yes of course.” everyone in the HQ was so happy for you and they were also happy that miguel’s behavior improved drastically for the better, all thanks to you.

#spiderman atsv#miguel o'hara#spider man: across the spider verse#miguel o’hara x reader#miguel spiderman#spider person#spidersona#atsv x reader#miguel o’hara x y/n#ao3 works#miguel o’hara fanfiction#miguel x reader#atsv miguel#atsv#spiderman 2099#spider woman#atsv headcanons#🌱 lin writes

478 notes

Text

FO4 Companions React to a Blind!Sole

Sole being blind Pre-war (TW for Ableism)

I've never written one of these before, but my most recent Sole is blind so I thought I'd put my idea of how I think the companions would react.

Cait: Doesn't believe that Sole is blind at first. They're too good of a shot, too aware of what's going on to be blind. But she notices that Sole does struggle with things those with sight wouldn't even bat an eye at. They often face the wrong way when being told to read something, they also are terrible at keeping watch. She at first is mad that she has to "pick up the slack" but she quickly gets over it after Sole treats her with kindness and understanding. Doesn't take long for her to stand up for Sole in every situation.

Codsworth: Sole's blindness was something that he got used to not long after being purchased. As a Mr. Handy, he has software that allows him to assist those who are disabled. It never affected how Sole treated him, nor his perception of them. In the post war setting, with the world being so different from what Sole remembers, he tries to assist in even more ways than he used to. Going with Sole to Concord for example, or at least wanting to when they denied his request. He doesn't treat Sole any differently from before, and tries not to handle them with kid gloves knowing how much they hate that.

Curie: Sees Sole as a curiosity. At first she often tries to run little tests to figure out the severity of their blindness and the extent of their capabilities. She's a doctor, she wants to know the extent of their blindness and what she can do to potentially assist. That being said, after being called out she knocks it off and switches her focus on listening to Sole and what they want. She wants to be helpful, unaware of how condescending she can be at times.

Deacon: Thinks Sole is extremely interesting from the moment he sees them the first time. Their ability to get around without much trouble and handle the wasteland without sight is fascinating. He appreciates that Sole can't see him following them around, and when Sole comes around to get the courser chip decoded he still vouches for them. Still, due to the nature of the Railroad and the fact they use road signs and need to keep an eye out he worries Sole won't be a good agent. He doesn't want to risk any synth getting caught or hurt because Sole didn't notice the danger. Overall he respects Sole, but thinks Sole is best on Railroad jobs with him or Glory by their side. He does get annoyed that their PipBoy has voice to text on, as it gives them away when sneaking but he can look past that.

Danse: The fact that a citizen came in guns blazing to save him and his squad got his respect, but once he noticed their blindness he instantly feels like Sole is a liability. People being blind wasn't the most uncommon thing in the brotherhood, but those who were blind often weren't allowed on the field anymore. More often than not they were asked to more or less retire to not put their teammates at risk. He tries to tactfully get Sole to leave, but he knows he needs the extra firepower to get the transmitter. He appreciates the help but is wary about sponsoring them even if they proved their capabilities. After becoming his newest charge, he acts like they are the same as any recruit. That being said, it takes him a long time to not handle Sole like a helpless child. He knows Sole can handle themselves, but he can't help but treat them a little differently. It takes being pulled aside and called out before he realizes he's even doing that, and he apologizes and tries his hardest to knock it off.

Dogmeat: Confused at first but he's a good smart boy. Happy to be Sole's eyes instead.

Hancock: At first, he thought Sole was just a cold, badass bitch. No reaction to a man being stabbed in front of them, AND no reaction to him being a ghoul? But the realization hit him quickly after, and he didn't know how to feel. Sole was clearly street smart enough to not be taken advantage of, but that didn't mean people didn't suck. Honestly, if anyone belonged in the town full of misfits who look out for each other, it was Sole. He treats them like they treat him, like a person that is just like everyone else. He is guilty of subtly moving things out of Sole's way or tapping them so they face the right direction, but it's never really noticeable. It's similar to moving something out of someone's way when they are distracted or tapping someone's shoulder to get their attention.

Maccready: He assumed that he was being hired to be a bodyguard. After all, being blind in the wastes was not easy and they'd need protection. Didn't take long for him to realize Sole is a damn good shot and is able to take care of themself. He deduces that Sole hired him not as a bodyguard, but as a companion, someone who was company on the road so they had someone to talk to. And if he just so happened to notice someone trying to rip Sole off, he'd be able to call them out. He doesn't treat Sole any differently, before or after his quest. Before, they're his employer and he's not saying shit; after, they're his friend and he's not going to risk that relationship.

Nick: He knew something was up the moment Sole didn't even slightly react to his appearance. Didn't panic seeing him, nor asked what his deal was. The fact they were able to get him out without being able to see, using a terminal and all got his respect. But he was still nervous that a blind person was using a gun, or he was until by some miracle Sole landed at least 6 head-shots in a row. Once being free, the interview to try and find Shaun was difficult. No physical characteristics to go on, the guess that it was Kellogg was honestly just lucky. Regardless, Sole treated Nick like a person from the moment they met, and he'd be lying that it wasn't nice to just be seen as normal. It's only fair he returns the favor.

Piper: Sole was already extremely interesting to Piper, but add on the fact they can't see and she's instantly more interested. What's life in the Commonwealth like if you can't see it? What was life like before the bombs? How do you use your weapon so effectively? She overcompensates for Sole's lack of sight by being reckless, especially when they first travel together. She probably forgets often that Sole is blind, often being like "Hey did you see that Blue?" And then remembering and apologizing. She does get better with time, and definitely publishes papers calling out people/businesses who try to take advantage of Sole. Any papers in the Publick that Sole is in she reads out loud to them.

Preston: He's just relieved that someone came to help them in the Museum. He couldn’t give a shit that Sole is blind, he cares that they're willing to help. The only hiccup is getting the fusion core in the basement since Sturges can't get it and Sole can't get it either. They offer to try and are lucky enough to get it first try and grab the fusion core. After meeting back at Sanctuary he opts to not mention it as it's none of his business. By the time he feels comfortable enough around Sole to ask about it, they're friends and he doesn't want them to be uncomfortable so he never brings it up. He's the type to gently point Sole in the right direction if they're looking the wrong way in conversations, or to move stuff out of the way if they might trip on it. He also makes sure that Sanctuary and the Castle are as consistent as possible so that Sole has an easier time navigating them.

Strong: Does not appreciate the puny human not being able to see. How can they help him find the milk of human kindness if they can't even see it? He sees Sole as a weakling and refuses to travel with them.

X6: Doesn't care that Sole is Father's parent, Sole is a liability and cannot handle themself as well as a person with sight can. He doesn't like being paired with Sole.

Old Longfellow: Honestly he kind of envies them. They never have to see the horrors of the fog like he has. But he's aware that one day he might lose his sight too. Besides, with the fog as thick as it is, even having sight isn't much of an advantage. He helps Sole the same way he helps all travelers to Acadia, with the added bonus that Sole can defend themselves.

Gage: Helps Sole at first because he can't tell they can't see, but instantly turns on them after they become Overboss, since he sees blindness as a weakness.

#fo4#fo4 companions#hancock fo4#deacon fo4#codsworth#fallout 4#danse#curie#piper#Nick valentine#robert maccready#sole survivor#old longfellow#fo4 strong#x6 88#preston garvey#dogmeat#cait#paladin danse#fo4 danse#fo4 piper#fo4 nick valentine#fo4 codsworth#deacon#fo4 curie#fo4 companions react#trigger warning ableism#tw ableism#blind sole survivor

100 notes

·

View notes

Text

Happy birthday to Kalmiya!

Today is her -512th birthday!

Kalmiya was a "smart" AI created by Dr. Catherine Halsey. Her primary purpose was to test prototypes of cyberwarfare and infiltration technology. Once tested and refined, the software and subroutines would then be incorporated into Cortana, giving her capabilities far beyond the rest of her kind. In this way, Kalmiya can be considered Cortana's predecessor.

Halsey extended Kalmiya's functionality by making subtle--and illegal--changes to her over time, which were so small that only Halsey noticed. UNSC protocol dictated that Kalmiya was to be decommissioned once her testing was complete, which Halsey outright disobeyed. Halsey kept Kalmiya until the fall of Reach, where she would have to either be sent away with the Forerunner data she was researching under CASTLE base, or destroyed to keep her out of the Covenant's hands. Ultimately, Halsey chose to let Cortana be the custodian of the data, believing that Kalmiya was the more unstable choice due to her longevity.

Halsey activated a fail-safe destruction protocol in Kalmiya, having previously lied to her about the existence of such a subroutine in her code. Despite this, Kalmiya was understanding and seemingly accepted her termination.

Seven years later, Blue Team returned to Reach to access Halsey's lab. Her lab contained a cryo-vault with additional clones she'd made of herself, whose brains could be extracted and used to make artificial intelligences like Cortana. The hope was that the brains could be used to make an artificial intelligence that would functionally be a clone of Cortana, which they could use to stop her after she began subjugating the galaxy. One of these clones would be used to create the Weapon.

When John and Kelly arrived at the lab, they were surprised to find a damaged Kalmiya keeping watch over the vault. They surmised that Kalmiya created a fragment of herself before her destruction to ensure the survival of the samples. After bypassing her with a security code, they left as the ghostly fragment watched them.

Does that count as alive?? I guess she was never "alive". I don't know man I just throw birthday parties here.

In canon (~2560), she is turning 23!

#kalmiya as the sacrificial older sister i'm going to frow up#guarded her sisters to the bitter bitter end!!!!!!!!!!!!!#also imagining a 45 year-old halsey spending her birthday activating her#theres a lot to unpack here#kalmiya#artificial intelligence

73 notes

·

View notes

Note

A couple questions for you! There’s been so many backstories and childhoods for Riddler, which ones are your favorite?

Do you abide by Eddie cheating at puzzles as a child, or being envied and abused for his intelligence and high test scores?

Where do you like to see him work before he was a criminal? I know there’s been a bunch, like the carnival, the GCPD, Wayne Enterprises, a software designer for Mockridge, etc…

— Zee (@riddle-us-this)

I believe that an important aspect of Edward's character is how much he craves attention and validation, and more often than not issues with such things emerge in childhood (at least for me 🙂↕️) so while i prefer to think that Eddie was always naturally smart and in honors and gifted programs and what not, i have a feeling that if he realized he would not succeed at something, he has never been opposed to 'bending the rules'. One line of his from Arkham Knight i really like is where he says: "I dissemble! I artfully obfuscate! BUT I DO NOT CHEAT" (i also acknowledge that in this same continuity is where the cheating as a kid comes from anyways but erm whatever)

So in simpler words, I see him being the highest in his classes and one of, if not the smartest during his educational years and receiving nothing but abuse and isolation from his peers and others alike through it all. That no matter how much he tried and how successful he was, no one cared enough to acknowledge him.

As for your second question, i usually see him as either an employee at Wayne Enterprises or at the GCPD, i think both offer something unique for his character. And as much as i also love BTAS riddler and how they showed his backstory with Mockridge, keeping that same sort of rejection and abandonment the second he wanted recognition; I find that him working in the GCPD (specifically like how he did in the Gotham show) is usually how i imagine him. Despite how i draw and see him being mostly inspired by Zero Year Riddler, I think the GCPD offers him more advantages character wise, it also explains in a way why he prefers dealing with Batman rather than cops, and i like the idea of him being a total prick whenever he's in custody because he knows most of these people and how corrupt they are. I am also biased because the GCPD route gives him another connection to Two-Face and his quest to destroy corruption in courts and all that. It also can help his later reformation as a private detective, if i ever do anything with that.

#thank you for the questions i love talking my heart out#answering asks#woah#the riddler#zero year riddler#gotham riddler#btas riddler#arkhamverse riddler#batman au#weirdez

7 notes

·

View notes

Text

Report: Voting Machines Were Altered Before the 2024 Election. Did Kamala Harris Actually Win?

The Quiet Move

In 2024, a federally accredited lab named Pro V&V conducted a wave of hardware and software changes to ES&S voting machines. These were major changes: new ballot scanners, printer adjustments, updated firmware, and a new Electionware reporting system. But they were passed off as “de minimis” tweaks, a label meant for minor changes that don’t require full public review or testing.

However, as the Dissent in Bloom Substack noted, the changes were anything but minor.

SMART Elections immediately flagged the move. But by then, it was too late. The machines had already been used in the election. And Pro V&V? The lab responsible for certifying them? It all but disappeared. Their once-public website became a hollow page. No logs. No documentation. Just a phone number and a generic email address.

This is the lab that signs off on voting systems in Pennsylvania, Florida, New Jersey, California—and countless other places. And when people started asking questions, they vanished.

Something Was Off With the Votes

In Rockland County, New York, voters noticed their ballots didn’t seem to count. People swore under oath that they voted for Senate candidate Diane Sare. But in district after district, the machines didn’t reflect it. In one case, nine voters said they picked her. Only five votes showed up. In another, five claimed to vote for her, but only three were recorded.

It wasn’t just third-party candidates. Kamala Harris’s name was missing entirely from the top of the ballot in several heavily Democratic districts. In areas that overwhelmingly backed Democrat Kirsten Gillibrand for Senate, somehow, Harris got zero votes. Zero.

Meanwhile, Donald Trump received 750,000 more votes than Republican Senate candidates in those same districts. That’s not just voter preference. That’s a statistical impossibility.

As Dissent in Bloom reported: “That’s not split-ticket voting. That’s a mathematical anomaly.”

7 notes

·

View notes

Text

Why don't cars have a dash board warning light alerting you when a taillight or headlight is out? You normally don't know until you get pulled over by a cop. Even if you check them before each trip they sometimes burn out while on the trip.

The absence of universal dashboard warnings for burnt-out exterior lights (headlights, taillights, etc.) is a mix of cost, design priorities, and technological limitations—but solutions exist. Here’s why most cars lack this feature and what drivers can do:

Why Most Cars Don’t Warn You

Cost-Cutting by Manufacturers:

Adding a monitoring system for every bulb requires additional wiring, sensors, and software, which automakers often omit to keep prices low, especially in budget vehicles.

Example: A $20 circuit to detect bulb failures adds up to millions in production costs across a car model’s lifespan.

Simpler Electrical Systems:

Older cars use basic circuits where a burnt bulb doesn’t disrupt the electrical flow (unlike a blown fuse). Modern CAN bus systems can detect failures but aren’t always programmed to alert drivers.

Assumption of Manual Checks:

Manufacturers assume drivers will notice via:

Reflections (e.g., light bouncing off garage walls).

Dashboard icons for specific systems (e.g., brake light warnings tied to the brake pedal circuit).

Regulatory Gaps:

The U.S. doesn’t mandate bulb-out warnings, though the EU requires rear light failure alerts in newer cars (via ECE Regulation 48).
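To put the "adds up to millions" cost example in numbers, here is a minimal sketch. The $20 per-car figure comes from the example above; the production volumes are hypothetical and only there to show the scale.

```python
def fleet_cost(per_unit_cost: float, units_built: int) -> float:
    """Total added cost of fitting one extra component to every car built."""
    return per_unit_cost * units_built


# $20 per car is the figure from the example; the volumes are made-up assumptions.
for units in (100_000, 500_000, 2_000_000):
    print(f"{units:>9,} cars -> ${fleet_cost(20.0, units):,.0f}")
```

At an assumed 500,000 cars over a model's lifespan, a $20 part comes to $10 million, which is the kind of line item that tends to get cut on budget models.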

Cars That Do Have Warnings

Luxury/Modern Vehicles: Brands like BMW, Mercedes, and Tesla include bulb monitoring systems in higher trims.

LED Lighting: Many EVs and hybrids with full LED setups (e.g., Ford Mustang Mach-E) self-diagnose faults since LEDs rarely fail abruptly.

Aftermarket Kits: Products like LightGuardian ($50–$100) plug into taillight circuits and trigger an alarm if a bulb dies.
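One common way a monitor can tell that a filament bulb has died is by watching how much current the circuit draws: an open filament draws close to nothing. The sketch below is an illustration of that idea only, not how any particular car or kit works. The 21 W rating, the nominal 12 V supply, and the 50% threshold are all assumed values.

```python
def expected_current(rated_watts: float, supply_volts: float = 12.0) -> float:
    """Current a healthy bulb should draw, from P = V * I."""
    return rated_watts / supply_volts


def bulb_is_out(measured_amps: float, rated_watts: float,
                supply_volts: float = 12.0, threshold: float = 0.5) -> bool:
    """Flag the bulb as failed when it draws less than `threshold` of its expected current."""
    return measured_amps < threshold * expected_current(rated_watts, supply_volts)


print(expected_current(21.0))   # 1.75
print(bulb_is_out(0.02, 21.0))  # True: almost no current, so the filament is likely open
print(bulb_is_out(1.70, 21.0))  # False: close to the expected draw
```

A fixed threshold like this is also why swapping in low-power LED replacements can trigger false bulb-out warnings on some cars: the LED is working, but it draws far less current than the monitor expects.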

Why Bulbs Burn Out Mid-Trip

Halogen Bulbs: Prone to sudden failure due to filament vibration or temperature swings.

Voltage Spikes: Poor alternator regulation can surge power, killing bulbs.

Moisture/Corrosion: Water ingress in housings causes shorts over time.

Practical Solutions for Drivers

Retrofit Your Car:

Install LED bulbs with built-in failure alerts (e.g., Philips X-tremeUltinon).

Use Bluetooth-enabled bulb holders (e.g., Lumilinks) that notify your phone.

Routine Checks:

Nightly Reflection Test: Park facing a wall and check light patterns.

Monthly Buddy Check: Have someone press brakes/turn signals while you inspect.

Legal Workarounds:

In regions requiring annual inspections (e.g., EU, Japan), mechanics flag dead bulbs.

Use dual-filament bulbs for redundancy (e.g., a brake light that still works as a taillight if one filament fails).

Why It’s Likely to Improve

LED Adoption: Longer-lasting LEDs (25,000+ hours) reduce failure rates.

Smart Lighting: New cars with matrix LED or laser lights often self-diagnose.

Consumer Demand: Aftermarket alerts (e.g., $30 Wireless Car Light Monitor) are gaining traction.
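For a sense of what a 25,000-hour rating means in practice, here is a rough conversion into years of driving. The hours-per-day values are assumptions for illustration, and a rated lifetime is not a guarantee: drivers, connectors, and housings can fail long before the LED itself does.

```python
def years_of_service(rated_hours: float, hours_per_day: float) -> float:
    """Years a light lasts if it runs `hours_per_day` every day until its rated hours are used up."""
    return rated_hours / (hours_per_day * 365)


# 25,000 hours is the figure quoted above; the daily usage values are hypothetical.
for hours_per_day in (0.5, 1.0, 2.0):
    years = years_of_service(25_000, hours_per_day)
    print(f"{hours_per_day} h/day -> about {years:.0f} years")
```

Even at two hours of lights-on driving a day, the rated hours work out to decades, which is longer than most cars stay on the road.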

Bottom Line

While universal bulb-out warnings aren’t standard yet, technology and regulations are catching up. Until then, proactive checks and affordable aftermarket gadgets can save you from a traffic stop. 🔧💡