#Speech Datasets

Explore tagged Tumblr posts

Text

https://justpaste.it/cg903

0 notes

Text

The Importance of Speech Datasets in Modern AI Development

Introduction

In the rapidly evolving field of artificial intelligence (AI), the role of speech datasets cannot be overstated. As AI continues to integrate more deeply into various aspects of our daily lives, the ability for machines to understand and process human speech has become increasingly crucial. Speech datasets are at the core of this capability, providing the foundational data necessary for training and improving AI models. This article explores the significance of speech datasets, their applications, and the challenges involved in their development.

What Are Speech Datasets?

Speech datasets consist of audio recordings of spoken language, often accompanied by transcriptions and other relevant metadata. These datasets vary widely in terms of language, dialects, speaker demographics, and environmental conditions. High-quality speech datasets are essential for training AI models in tasks such as speech recognition, natural language processing (NLP), and voice synthesis.

Applications of Speech Datasets

Speech Recognition: One of the most well-known applications of speech datasets is in speech recognition systems, such as those used in virtual assistants like Siri, Alexa, and Google Assistant. These systems rely on extensive datasets to accurately convert spoken words into text.

Natural Language Processing (NLP): Speech datasets are also critical for NLP tasks, enabling AI to understand and process spoken language in a more human-like manner. This is essential for applications such as customer service bots, real-time translation services, and sentiment analysis.

Voice Synthesis: Creating natural-sounding synthetic voices requires large and diverse speech datasets. These voices are used in various applications, including text-to-speech systems, audiobooks, and assistive technologies for individuals with disabilities.

Speaker Verification and Identification: Speech datasets help in developing systems that can verify or identify individuals based on their voice. This is particularly useful in security applications, such as access control and fraud detection.

Challenges in Developing Speech Datasets

Diversity and Representation: A significant challenge in developing speech datasets is ensuring diversity and representation. This includes capturing a wide range of accents, dialects, and languages to create robust AI models that perform well across different demographics and regions.

Data Privacy and Ethics: Collecting and using speech data raises concerns about privacy and ethical considerations. It is essential to obtain informed consent from participants and to anonymize data to protect individuals' identities.

Quality and Consistency: Ensuring the quality and consistency of speech data is crucial for effective AI training. This involves not only clear and accurate transcriptions but also consistent recording conditions to minimize background noise and other distortions.

Cost and Resource Intensity: Developing large-scale speech datasets can be resource-intensive and costly. It requires significant investment in terms of time, technology, and human resources to collect, annotate, and validate the data.

The Future of Speech Datasets

As AI technology continues to advance, the demand for high-quality speech datasets will only grow. Future developments in this area are likely to focus on increasing the diversity and richness of datasets, improving data collection and annotation methods, and addressing privacy and ethical concerns more effectively.

Innovations such as synthetic data generation and transfer learning could also play a significant role in enhancing the capabilities of speech datasets. By leveraging these technologies, researchers and developers can create more comprehensive and versatile AI models, pushing the boundaries of what is possible in speech recognition and processing.

Conclusion

Speech datasets are a cornerstone of modern AI development, enabling machines to understand and interact with human speech in increasingly sophisticated ways. While there are significant challenges involved in creating and maintaining these datasets, the potential benefits for technology and society are immense. As we move forward, continued investment and innovation in speech datasets will be essential for unlocking the full potential of AI.

0 notes

Text

Annotated Text-to-Speech Datasets for Deep Learning Applications

Introduction:

Text To Speech Dataset technology has undergone significant advancements due to developments in deep learning, allowing machines to produce speech that closely resembles human voice with impressive precision. The success of any TTS system is fundamentally dependent on high-quality, annotated datasets that train models to comprehend and replicate natural-sounding speech. This article delves into the significance of annotated TTS datasets, their various applications, and how organizations can utilize them to create innovative AI solutions.

The Importance of Annotated Datasets in TTS

Annotated TTS datasets are composed of text transcripts aligned with corresponding audio recordings, along with supplementary metadata such as phonetic transcriptions, speaker identities, and prosodic information. These datasets form the essential framework for deep learning models by supplying structured, labeled data that enhances the training process. The quality and variety of these annotations play a crucial role in the model’s capability to produce realistic speech.

Essential Elements of an Annotated TTS Dataset

Text Transcriptions – Precise, time-synchronized text that corresponds to the speech audio.

Phonetic Labels – Annotations at the phoneme level to enhance pronunciation accuracy.

Speaker Information – Identifiers for datasets with multiple speakers to improve voice variety.

Prosody Features – Indicators of pitch, intonation, and stress to enhance expressiveness.

Background Noise Labels – Annotations for both clean and noisy audio samples to ensure robust model training.

Uses of Annotated TTS Datasets

The influence of annotated TTS datasets spans multiple sectors:

Virtual Assistants: AI-powered voice assistants such as Siri, Google Assistant, and Alexa depend on high-quality TTS datasets for seamless interactions.

Audiobooks & Content Narration: Automated voice synthesis is utilized in e-learning platforms and digital storytelling.

Accessibility Solutions: Screen readers designed for visually impaired users benefit from well-annotated datasets.

Customer Support Automation: AI-driven chatbots and IVR systems employ TTS to improve user experience.

Localization and Multilingual Speech Synthesis: Annotated datasets in various languages facilitate the development of global text-to-speech (TTS) applications.

Challenges in TTS Dataset Annotation

Although annotated datasets are essential, the creation of high-quality TTS datasets presents several challenges:

Data Quality and Consistency: Maintaining high standards for recordings and ensuring accurate annotations throughout extensive datasets.

Speaker Diversity: Incorporating a broad spectrum of voices, accents, and speaking styles.

Alignment and Synchronization: Accurately aligning text transcriptions with corresponding speech audio.

Scalability: Effectively annotating large datasets to support deep learning initiatives.

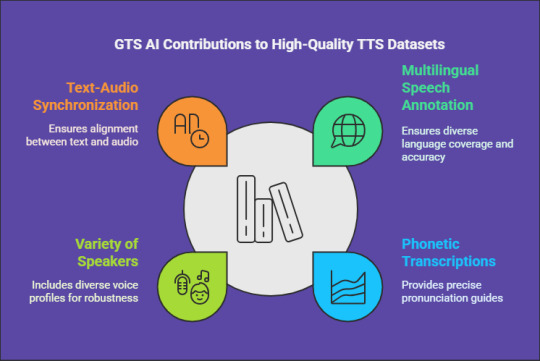

How GTS Can Assist with High-Quality Text Data Collection

For organizations and researchers in need of dependable TTS datasets, GTS AI provides extensive text data collection services. With a focus on multilingual speech annotation, GTS delivers high-quality, well-organized datasets specifically designed for deep learning applications. Their offerings guarantee precise phonetic transcriptions, a variety of speakers, and flawless synchronization between text and audio.

Conclusion

Annotated text-to-speech datasets are vital for the advancement of high-performance speech synthesis models. As deep learning Globose Technology Solutions progresses, the availability of high-quality, diverse, and meticulously annotated datasets will propel the next wave of AI-driven voice applications. Organizations and developers can utilize professional annotation services, such as those provided by GTS, to expedite their AI initiatives and enhance their TTS solutions.

0 notes

Text

Enhancing Vocal Quality: An Overview of Text-to-Speech Datasets

Introduction

The advancement of Text-to-Speech (TTS) technology has significantly altered the way individuals engage with machines. From digital assistants and navigation applications to tools designed for the visually impaired, TTS systems are increasingly becoming essential in our everyday experiences. At the heart of these systems is a vital element: high-quality datasets. This article will delve into the basics of Text-to-Speech datasets and their role in developing natural and expressive synthetic voices.

What Constitutes a Text-to-Speech Dataset?

A Text-to-Speech dataset comprises a collection of paired text and audio data utilized for training machine learning models. These datasets enable TTS systems to learn the process of transforming written text into spoken language. A standard dataset typically includes:

The Significance of TTS Datasets

Enhancing Voice Quality: The variety and richness of the dataset play a crucial role in achieving a synthesized voice that is both natural and clear.

Expanding Multilingual Support: A varied dataset allows the system to accommodate a range of languages and dialects effectively.

Reflecting Emotions and Tones: High-quality datasets assist models in mimicking human-like emotional expressions and intonations.

Mitigating Bias: Diverse datasets promote inclusivity by encompassing various accents, genders, and speaking styles.

Attributes of an Effective TTS Dataset

Variety: An effective dataset encompasses a range of languages, accents, genders, ages, and speaking styles.

Superior Audio Quality: The recordings must be clear and free from significant background noise or distortion.

Precision in Alignment: It is essential that the text and audio pairs are accurately aligned to facilitate effective training.

Comprehensive Annotation: In-depth metadata, including phonetic and prosodic annotations, enhances the training experience.

Size: A more extensive dataset typically results in improved model performance, as it offers a greater number of examples for the system to learn from.

Types of Text-to-Speech Datasets

These datasets concentrate on a singular voice, typically utilized for specific applications such as virtual assistants.

Example: LJSpeech Dataset (featuring a single female speaker of American English).

These datasets comprise recordings from various speakers, allowing systems to produce a range of voices.

Example: LibriTTS (sourced from LibriVox audiobooks).

These datasets include text and audio in multiple languages to facilitate global applications.

Example: Mozilla Common Voice.

These datasets encompass a variety of emotions, including happiness, sadness, and anger, to enhance the expressiveness of TTS systems.

Example: CREMA-D (featuring recordings rich in emotional content).

Challenges in Developing TTS Datasets

Best Practices for Developing TTS Datasets

Regularly Update: Expand datasets to encompass new languages, accents, and applications.

The Importance of Annotation in TTS Datasets

Annotations play a vital role in enhancing the efficacy of TTS systems. They offer context and supplementary information, including:

Services such as GTS AI provide specialized annotation solutions to facilitate this process.

The Future of TTS Datasets

As TTS technology evolves, the need for more diverse and advanced datasets will increase. Innovations such as:

These advancements will lead to the creation of more natural, inclusive, and adaptable TTS systems.

Conclusion

Building better voices starts with high-quality Text-to-Speech datasets. By prioritizing diversity, quality, and ethical practices, we can create TTS systems that sound natural, inclusive, and expressive. Whether you’re a developer or researcher, investing in robust dataset creation and annotation is key to advancing the field of TTS.

For professional annotation and data solutions, visit GTS AI. Let us help you bring your TTS projects to life with precision and efficiency.

0 notes

Text

The Basics of Speech Transcription

Speech transcription is the process of converting spoken language into written text. This practice has been around for centuries, initially performed manually by scribes and secretaries, and has evolved significantly with the advent of technology. Today, speech transcription is used in various fields such as legal, medical, academic, and business environments, serving as a crucial tool for documentation and communication.

At its core, speech transcription can be categorized into three main types: verbatim, edited, and intelligent transcription. Verbatim transcription captures every spoken word, including filler words, false starts, and non-verbal sounds like sighs and laughter. This type is essential in legal settings where an accurate and complete record is necessary. Edited transcription, on the other hand, omits unnecessary fillers and non-verbal sounds, focusing on producing a readable and concise document without altering the speaker's meaning. Intelligent transcription goes a step further by paraphrasing and rephrasing content for clarity and coherence, often used in business and academic settings where readability is paramount.

The Process of Transcription

The transcription process typically begins with recording audio. This can be done using various devices such as smartphones, dedicated voice recorders, or software that captures digital audio files. The quality of the recording is crucial as it directly impacts the accuracy of the transcription. Clear audio with minimal background noise ensures better transcription quality, whether performed manually or using automated tools.

Manual vs. Automated Transcription

Manual transcription involves a human transcriber listening to the audio and typing out the spoken words. This method is highly accurate, especially for complex or sensitive content, as human transcribers can understand context, accents, and nuances better than machines. However, manual transcription is time-consuming and can be expensive.

Automated transcription, powered by artificial intelligence (AI) and machine learning (ML), has gained popularity due to its speed and cost-effectiveness. AI-driven transcription software can quickly convert speech to text, making it ideal for situations where time is of the essence. While automated transcription has improved significantly, it may still struggle with accents, dialects, and technical jargon, leading to lower accuracy compared to human transcription.

Tools and Technologies

Several tools and technologies are available to aid in speech transcription. Software like Otter.ai, Rev, and Dragon NaturallySpeaking offer various features, from real-time transcription to integration with other productivity tools. These tools often include options for both manual and automated transcription, providing flexibility based on the user’s needs.

In conclusion, speech transcription is a versatile and essential process that facilitates accurate and efficient communication across various domains. Whether done manually or through automated tools, understanding the basics of transcription can help you choose the right approach for your needs.

0 notes

Text

Text-to-speech datasets form the cornerstone of AI-powered speech synthesis applications, facilitating natural and smooth communication between humans and machines. At Globose Technology Solutions, we recognize the transformative power of TTS technology and are committed to delivering cutting-edge solutions that harness the full potential of these datasets. By understanding the importance, features, and applications of TTS datasets, we pave the way to a future where seamless speech synthesis enriches lives and drives innovation across industries.

#Text-to-speech datasets#NLP#Data Collection#Data Collection in Machine Learning#data collection company#datasets#ai#technology#globose technology solutions

0 notes

Text

Each week (or so), we'll highlight the relevant (and sometimes rage-inducing) news adjacent to writing and freedom of expression. (Find it on the blog too!) This week:

Censorship watch: Somehow, KOSA returned

It’s official: The Kids Online Safety Act (KOSA) is back from the dead. After failing to pass last year, the bipartisan bill has returned with fresh momentum and the same old baggage—namely, vague language that could endanger hosting platforms, transformative work, and implicitly target LGBTQ+ content under the guise of “protecting kids.”

… But wait, it gets better (worse). Republican Senator Mike Lee has introduced a new bill that makes other attempts to censor the internet look tame: the Interstate Obscenity Definition Act (IODA)—basically KOSA on bath salts. Lee’s third attempt since 2022, the bill would redefine what counts as “obscene” content on the internet, and ban it nationwide—with “its peddlers prosecuted.”

Whether IODA gains traction in Congress is still up in the air. But free speech advocates are already raising alarm bells over its implications.

The bill aims to gut the long-standing legal definition of “obscenity” established by the 1973 Miller v. California ruling, which currently protects most speech under the First Amendment unless it fails a three-part test. Under the Miller test, content is only considered legally obscene if it 1: appeals to prurient interests, 2: violates “contemporary community standards,” and 3: is patently offensive in how it depicts sexual acts.

IODA would throw out key parts of that test—specifically the bits about “community standards”—making it vastly easier to prosecute anything with sexual content, from films and photos, to novels and fanfic.

Under Lee’s definition (which—omg shocking can you believe this coincidence—mirrors that of the Heritage Foundation), even the most mild content with the affect of possible “titillation” could be included. (According to the Woodhull Freedom Foundation, the proposed definition is so broad it could rope in media on the level of Game of Thrones—or, generally, anything that depicts or describes human sexuality.) And while obscenity prosecutions are quite rare these days, that could change if IODA passes—and the collateral damage and criminalization (especially applied to creative freedoms and LGBT+ content creators) could be massive.

And while Lee’s last two obscenity reboots failed, the current political climate is... let’s say, cloudy with a chance of fascism.

Sound a little like Project 2025? Ding ding ding! In fact, Russell Vought, P2025’s architect, was just quietly appointed to take over DOGE from Elon Musk (the agency on a chainsaw crusade against federal programs, culture, and reality in general).

So. One bill revives vague moral panic, another wants to legally redefine it and prosecute creators, and the man who helped write the authoritarian playbook—with, surprise, the intent to criminalize LGBT+ content and individuals—just gained control of the purse strings.

Cool cool cool.

AO3 works targeted in latest (massive) AI scraping

Rewind to last month—In the latest “wait, they did what now?” moment for AI, a Hugging Face user going by nyuuzyou uploaded a massive dataset made up of roughly 12.6 million fanworks scraped from AO3—full text, metadata, tags, and all. (Info from r/AO3: If your works’ ID numbers between 1 and 63,200,000, and has public access, the work has been scraped.)

And it didn’t stop at AO3. Art and writing communities like PaperDemon and Artfol, among others, also found their content had been quietly scraped and posted to machine learning hubs without consent.

This is yet another attempt in a long line of more “official” scraping of creative work, and the complete disregard shown by the purveyors of GenAI for copyright law and basic consent. (Even the Pope agrees.)

AO3 filed a DMCA takedown, and Hugging Face initially complied—temporarily. But nyuuzyou responded with a counterclaim and re-uploaded the dataset to their personal website and other platforms, including ModelScope and DataFish—sites based in China and Russia, the same locations reportedly linked to Meta’s own AI training dataset, LibGen.

Some writers are locking their works. Others are filing individual DMCAs. But as long as bad actors and platforms like Hugging Face allow users to upload massive datasets scraped from creative communities with minimal oversight, it’s a circuitous game of whack-a-mole. (As others have recommended, we also suggest locking your works for registered users only.)

After disavowing AI copyright, leadership purge hits U.S. cultural institutions

In news that should give us all a brief flicker of hope, the U.S. Copyright Office officially confirmed: if your “creative” work was generated entirely by AI, it’s not eligible for copyright.

A recently released report laid it out plainly—human authorship is non-negotiable under current U.S. law, a stance meant to protect the concept of authorship itself from getting swallowed by generative sludge. The report is explicit in noting that generative AI draws “on massive troves of data, including copyrighted works,” and asks: “Do any of the acts involved require the copyright owners’ consent or compensation?” (Spoiler: yes.) It’s a “straight ticket loss for the AI companies” no matter how many techbros’ pitch decks claim otherwise (sorry, Inkitt).

“The Copyright Office (with a few exceptions) doesn’t have the power to issue binding interpretations of copyright law, but courts often cite to its expertise as persuasive,” tech law professor Blake. E Reid wrote on Bluesky.As the push to normalize AI-generated content continues (followed by lawsuits), without meaningful human contribution—actual creative labor—the output is not entitled to protection.

… And then there’s the timing.

The report dropped just before the abrupt firing of Copyright Office director Shira Perlmutter, who has been vocally skeptical of AI’s entitlement to creative work.

It's yet another culture war firing—one that also conveniently clears the way for fewer barriers to AI exploitation of creative work. And given that Elon Musk’s pals have their hands all over current federal leadership and GenAI tulip fever… the overlap of censorship politics and AI deregulation is looking less like coincidence and more like strategy.

Also ousted (via email)—Librarian of Congress Carla Hayden. According to White House press secretary and general ghoul Karoline Leavitt, Dr. Hayden was dismissed for “quite concerning things that she had done… in the pursuit of DEI, and putting inappropriate books in the library for children.” (Translation: books featuring queer people and POC.)

Dr. Hayden, who made history as the first Black woman to hold the position, spent the last eight years modernizing the Library of Congress, expanding digital access, and turning the institution into something more inclusive, accessible, and, well, public. So of course, she had to go. ¯\_(ツ)_/¯

The American Library Association condemned the firing immediately, calling it an “unjust dismissal” and praising Dr. Hayden for her visionary leadership. And who, oh who might be the White House’s answer to the LoC’s demanding and (historically) independent role?

The White House named Todd Blanche—AKA Trump’s personal lawyer turned Deputy Attorney General—as acting Librarian of Congress.

That’s not just sus, it’s likely illegal—the Library is part of the legislative branch, and its leadership is supposed to be confirmed by Congress. (You know, separation of powers and all that.)

But, plot twist: In a bold stand, Library of Congress staff are resisting the administration's attempts to install new leadership without congressional approval.

If this is part of the broader Project 2025 playbook, it’s pretty clear: Gut cultural institutions, replace leadership with stunningly unqualified loyalists, and quietly centralize control over everything from copyright to the nation’s archives.

Because when you can’t ban the books fast enough, you just take over the library.

Rebellions are built on hope

Over the past few years (read: eternity), a whole ecosystem of reactionary grifters has sprung up around Star Wars—with self-styled CoNtEnT CrEaTorS turning outrage to revenue by endlessly trashing the fandom. It’s all part of the same cynical playbook that radicalized the fallout of Gamergate, with more lightsabers and worse thumbnails. Even the worst people you know weighed in on May the Fourth (while Prequel reassessment is totally valid—we’re not giving J.D. Vance a win).

But one thing that shouldn't be up for debate is this: Andor, which wrapped its phenomenal two-season run this week, is probably the best Star Wars project of our time—maybe any time. It’s a masterclass in what it means to work within a beloved mythos and transform it, deepen it, and make it feel urgent again. (Sound familiar? Fanfic knows.)

Radicalization, revolution, resistance. The banality of evil. The power of propaganda. Colonialism, occupation, genocide—and still, in the midst of it all, the stubborn, defiant belief in a better world (or Galaxy).

Even if you’re not a lifelong SW nerd (couldn’t be us), you should give it a watch. It’s a nice reminder that amidst all the scraping, deregulation, censorship, enshittification—stories matter. Hope matters.

And we’re still writing.

Let us know if you find something other writers should know about, or join our Discord and share it there!

- The Ellipsus Team xo

#ellipsus#writeblr#writers on tumblr#writing#creative writing#anti ai#writing community#fanfic#fanfiction#ao3#fiction#us politics#andor#writing blog#creative freedom

335 notes

·

View notes

Text

The Importance of Speech Datasets in Modern AI Development

Introduction

In the rapidly evolving field of artificial intelligence (AI), the role of speech datasets cannot be overstated. As AI continues to integrate more deeply into various aspects of our daily lives, the ability for machines to understand and process human speech has become increasingly crucial. Speech datasets are at the core of this capability, providing the foundational data necessary for training and improving AI models. This article explores the significance of speech datasets, their applications, and the challenges involved in their development.

What Are Speech Datasets?

Speech datasets consist of audio recordings of spoken language, often accompanied by transcriptions and other relevant metadata. These datasets vary widely in terms of language, dialects, speaker demographics, and environmental conditions. High-quality speech datasets are essential for training AI models in tasks such as speech recognition, natural language processing (NLP), and voice synthesis.

Applications of Speech Datasets

Speech Recognition: One of the most well-known applications of speech datasets is in speech recognition systems, such as those used in virtual assistants like Siri, Alexa, and Google Assistant. These systems rely on extensive datasets to accurately convert spoken words into text.

Natural Language Processing (NLP): Speech datasets are also critical for NLP tasks, enabling AI to understand and process spoken language in a more human-like manner. This is essential for applications such as customer service bots, real-time translation services, and sentiment analysis.

Voice Synthesis: Creating natural-sounding synthetic voices requires large and diverse speech datasets. These voices are used in various applications, including text-to-speech systems, audiobooks, and assistive technologies for individuals with disabilities.

Speaker Verification and Identification: Speech datasets help in developing systems that can verify or identify individuals based on their voice. This is particularly useful in security applications, such as access control and fraud detection.

Challenges in Developing Speech Datasets

Diversity and Representation: A significant challenge in developing speech datasets is ensuring diversity and representation. This includes capturing a wide range of accents, dialects, and languages to create robust AI models that perform well across different demographics and regions.

Data Privacy and Ethics: Collecting and using speech data raises concerns about privacy and ethical considerations. It is essential to obtain informed consent from participants and to anonymize data to protect individuals' identities.

Quality and Consistency: Ensuring the quality and consistency of speech data is crucial for effective AI training. This involves not only clear and accurate transcriptions but also consistent recording conditions to minimize background noise and other distortions.

Cost and Resource Intensity: Developing large-scale speech datasets can be resource-intensive and costly. It requires significant investment in terms of time, technology, and human resources to collect, annotate, and validate the data.

The Future of Speech Datasets

As AI technology continues to advance, the demand for high-quality speech datasets will only grow. Future developments in this area are likely to focus on increasing the diversity and richness of datasets, improving data collection and annotation methods, and addressing privacy and ethical concerns more effectively.

Innovations such as synthetic data generation and transfer learning could also play a significant role in enhancing the capabilities of speech datasets. By leveraging these technologies, researchers and developers can create more comprehensive and versatile AI models, pushing the boundaries of what is possible in speech recognition and processing.

Conclusion

Speech datasets are a cornerstone of modern AI development, enabling machines to understand and interact with human speech in increasingly sophisticated ways. While there are significant challenges involved in creating and maintaining these datasets, the potential benefits for technology and society are immense. As we move forward, continued investment and innovation in speech datasets will be essential for unlocking the full potential of AI.

0 notes

Text

How to Develop a Video Text-to-Speech Dataset for Deep Learning

Introduction:

In the swiftly advancing domain of deep learning, video-based Text-to-Speech (TTS) technology is pivotal in improving speech synthesis and facilitating human-computer interaction. A well-organized dataset serves as the cornerstone of an effective TTS model, guaranteeing precision, naturalness, and flexibility. This article will outline the systematic approach to creating a high-quality video TTS dataset for deep learning purposes.

Recognizing the Significance of a Video TTS Dataset

A video Text To Speech Dataset comprises video recordings that are matched with transcribed text and corresponding audio of speech. Such datasets are vital for training models that produce natural and contextually relevant synthetic speech. These models find applications in various areas, including voice assistants, automated dubbing, and real-time language translation.

Establishing Dataset Specifications

Prior to initiating data collection, it is essential to delineate the dataset’s scope and specifications. Important considerations include:

Language Coverage: Choose one or more languages relevant to your application.

Speaker Diversity: Incorporate a range of speakers varying in age, gender, and accents.

Audio Quality: Ensure recordings are of high fidelity with minimal background interference.

Sentence Variability: Gather a wide array of text samples, encompassing formal, informal, and conversational speech.

Data Collection Methodology

a. Choosing Video Sources

To create a comprehensive dataset, videos can be sourced from:

Licensed datasets and public domain archives

Crowdsourced recordings featuring diverse speakers

Custom recordings conducted in a controlled setting

It is imperative to secure the necessary rights and permissions for utilizing any third-party content.

b. Audio Extraction and Preprocessing

After collecting the videos, extract the speech audio using tools such as MPEG. The preprocessing steps include:

Noise Reduction: Eliminate background noise to enhance speech clarity.

Volume Normalization: Maintain consistent audio levels.

Segmentation: Divide lengthy recordings into smaller, sentence-level segments.

Text Alignment and Transcription

For deep learning models to function optimally, it is essential that transcriptions are both precise and synchronized with the corresponding speech. The following methods can be employed:

Automatic Speech Recognition (ASR): Implement ASR systems to produce preliminary transcriptions.

Manual Verification: Enhance accuracy through a thorough review of the transcriptions by human experts.

Timestamp Alignment: Confirm that each word is accurately associated with its respective spoken timestamp.

Data Annotation and Labeling

Incorporating metadata significantly improves the dataset's functionality. Important annotations include:

Speaker Identity: Identify each speaker to support speaker-adaptive TTS models.

Emotion Tags: Specify tone and sentiment to facilitate expressive speech synthesis.

Noise Labels: Identify background noise to assist in developing noise-robust models.

Dataset Formatting and Storage

To ensure efficient model training, it is crucial to organize the dataset in a systematic manner:

Audio Files: Save speech recordings in WAV or FLAC formats.

Transcriptions: Keep aligned text files in JSON or CSV formats.

Metadata Files: Provide speaker information and timestamps for reference.

Quality Assurance and Data Augmentation

Prior to finalizing the dataset, it is important to perform comprehensive quality assessments:

Verify Alignment: Ensure that text and speech are properly synchronized.

Assess Audio Clarity: Confirm that recordings adhere to established quality standards.

Augmentation: Implement techniques such as pitch shifting, speed variation, and noise addition to enhance model robustness.

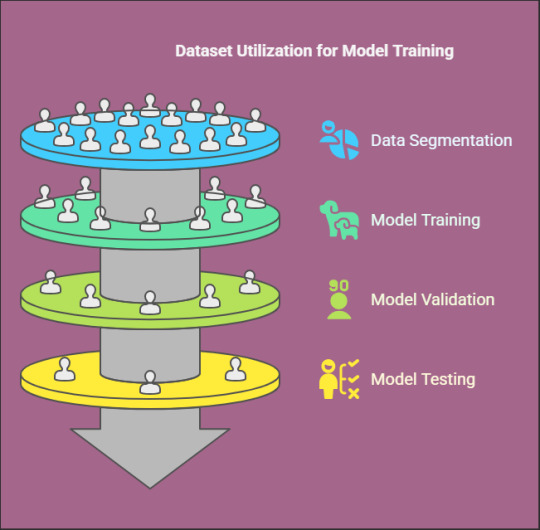

Training and Testing Your Dataset

Ultimately, utilize the dataset to train deep learning models such as Taco Tron, Fast Speech, or VITS. Designate a segment of the dataset for validation and testing to assess model performance and identify areas for improvement.

Conclusion

Creating a video TTS dataset is a detailed yet fulfilling endeavor that establishes a foundation for sophisticated speech synthesis applications. By Globose Technology Solutions prioritizing high-quality data collection, accurate transcription, and comprehensive annotation, one can develop a dataset that significantly boosts the efficacy of deep learning models in TTS technology.

0 notes

Text

On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of tokens provided by a user. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is tokenized audio data.

The transcription output from Whisper is a prediction of what is most likely, not what is most accurate. Accuracy in Transformer-based outputs is typically proportional to the presence of relevant accurate data in the training dataset, but it is never guaranteed. If there is ever a case where there isn't enough contextual information in its neural network for Whisper to make an accurate prediction about how to transcribe a particular segment of audio, the model will fall back on what it “knows” about the relationships between sounds and words it has learned from its training data.

According to OpenAI in 2022, Whisper learned those statistical relationships from “680,000 hours of multilingual and multitask supervised data collected from the web.” But we now know a little more about the source. Given Whisper's well-known tendency to produce certain outputs like "thank you for watching," "like and subscribe," or "drop a comment in the section below" when provided silent or garbled inputs, it's likely that OpenAI trained Whisper on thousands of hours of captioned audio scraped from YouTube videos. (The researchers needed audio paired with existing captions to train the model.)

There's also a phenomenon called “overfitting” in AI models where information (in this case, text found in audio transcriptions) encountered more frequently in the training data is more likely to be reproduced in an output. In cases where Whisper encounters poor-quality audio in medical notes, the AI model will produce what its neural network predicts is the most likely output, even if it is incorrect. And the most likely output for any given YouTube video, since so many people say it, is “thanks for watching.”

In other cases, Whisper seems to draw on the context of the conversation to fill in what should come next, which can lead to problems because its training data could include racist commentary or inaccurate medical information. For example, if many examples of training data featured speakers saying the phrase “crimes by Black criminals,” when Whisper encounters a “crimes by [garbled audio] criminals” audio sample, it will be more likely to fill in the transcription with “Black."

In the original Whisper model card, OpenAI researchers wrote about this very phenomenon: "Because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself."

So in that sense, Whisper "knows" something about the content of what is being said and keeps track of the context of the conversation, which can lead to issues like the one where Whisper identified two women as being Black even though that information was not contained in the original audio. Theoretically, this erroneous scenario could be reduced by using a second AI model trained to pick out areas of confusing audio where the Whisper model is likely to confabulate and flag the transcript in that location, so a human could manually check those instances for accuracy later.

Clearly, OpenAI's advice not to use Whisper in high-risk domains, such as critical medical records, was a good one. But health care companies are constantly driven by a need to decrease costs by using seemingly "good enough" AI tools—as we've seen with Epic Systems using GPT-4 for medical records and UnitedHealth using a flawed AI model for insurance decisions. It's entirely possible that people are already suffering negative outcomes due to AI mistakes, and fixing them will likely involve some sort of regulation and certification of AI tools used in the medical field.

87 notes

·

View notes

Text

Behold, a flock of Medics

(Rambling under the cut)

Ok so y'all know about that semi-canon compliant AU I have that I've mentioned before in tags n shit? Fortress Rising? Well, Corey (my dear older sib, @cursed--alien ) and I talk about it like it's a real piece of media (or as though its something I actually make fanworks for ffs) rather than us mutually bullshitting cool ideas for our Blorbos. One such Idea we have bullshit about is that basically EVERY medic that meets becomes part of a group the Teams call the "Trauma Unit," they just get along so well lol

Here's some bulletpoints about the Medics

Ludwig Humboldt - RED Medic, hired 1964, born 1918. Introduced in Arc 1: Teambuilding. The most canon compliant of the four. Literally just my default take on Medic

Fredrich "Fritz" Humboldt - BLU Medic, clone of Ludwig, "Hired" 1964. Introduced in Arc 2: The Clone Saga. A more reserved man than his counterpart, he hides his madness behind a veneer of normalcy. Honestly Jealous of Ludwig for how freely he expresses himself. Suffers from anxiety, which he began treating himself. Has since spiraled into a dependency on diazepam that puts strain on his relationship with Dimitri, the BLU Heavy.

Sean Hickey - Former BLU Medic, served with the "Classic" team, born 1908. Introduced in Arc 3: Unfinished Business. A man who who has a genuine passion for healing and the youngest on his team. Unfortunately, his time with BLU has left him with deep emotional scars, most stemming from his abuse at the hands of Chevy, the team leader. His only solace was in his friendship with Fred Conagher, though they lost contact after his contract ended. For the past 30 years, he's lived peacefully, though meeting the Humboldts has left him feeling bitter about his past experiences.

Hertz - Prototype Medibot, serial no. 110623-DAR. Introduced in Arc 4: Test Your Metal. The final prototype created by Gray Mann's robotics division before his untimely death forced the labs to shut their doors. Adopted by the Teams after RED Team found him while clearing out a Gray Gravel Co. warehouse. As with all the Graybots, he was programmed based on a combination of compromised respawn data and intel uncovered by both teams' respective Spies. Unlike the others, however, his dataset is incomplete, which has left him with numerous bugs in his programming. His speech (modeled off Ludwig and Fritz's) often cuts out, becoming interspersed with a combination of default responses for older Graybot models and medical textbook jargon all modulated in emotionless text-to-speech

131 notes

·

View notes

Text

AI-powered speech transcription is revolutionizing industries by providing accurate, real-time voice-to-text conversion. This technology enhances accessibility, customer service, and data analysis across sectors like healthcare, legal, and education. By leveraging advancements in natural language processing and machine learning, AI speech transcription systems can handle diverse accents, languages, and speech patterns with increasing precision. As the technology continues to evolve, future trends include improved real-time capabilities, enhanced security measures, and broader applications in smart devices and virtual assistants, paving the way for a more interconnected and efficient digital world.

1 note

·

View note

Text

Text-to-speech datasets form the cornerstone of AI-powered speech synthesis applications, facilitating natural and smooth communication between humans and machines. At Globose Technology Solutions, we recognize the transformative power of TTS technology and are committed to delivering cutting-edge solutions that harness the full potential of these datasets. By understanding the importance, features, and applications of TTS datasets, we pave the way to a future where seamless speech synthesis enriches lives and drives innovation across industries.

#text to speech dataset#NLP#Data Collection#Data Collection in Machine Learning#data collection company#datasets#technology#dataset#globose technology solutions#ai

0 notes

Text

I think part of the reason I’m so averse to the “Jon and Martin are really in the computers” theory is because it feels too… easy, I suppose, compared to the kind of themes and plots I’ve come to expect from Magnus.

I mean, sure, it’d be an interesting direction to go in, and provide some angsty character beats to drag us all along by our heartstrings for three more seasons. But it doesn’t scratch that same horror itch as the theory that it really is just an AI program, built from the tapes.

The idea of digitally recreating someone's personality and mannerisms from fragments of words they spoke, without ever once actually touching the real living person who spoke them...

It's not even that far-fetched: AI chat programs have advanced to the point where, if they're trained on the right dataset, they can reliably generate responses that sound like the people whose writing or speech they were trained on. We're all facing the dilemma right now of how real AI actually is; how it could fool you into thinking you're talking to someone you know, when really there's nobody there. Add in the supernatural element of inanimate objects being able to gain some sort of life of their own-

Are there ghosts in the machine, or is it simply the crucible from which something new will be born? If a computer is programmed to think and speak like a real person, is it still just a computer, or has it become a person in its own right? And is it the same person whose memories it was built from?

What, when you get right down to it, makes a person… a person?

#i mean i also just dont want them to be suffering in there lol#the magnus protocol#tmagp spoilers#7 give and take#<- not really but influenced by it#chester#norris#magnus protocol speculation/analysis#my magnus protocol stuff#original post#ive rewritten this post about 20 times#its not perfect but take it away from me#fr3-d1 | freddie

132 notes

·

View notes