#Static Code Analyzer

Explore tagged Tumblr posts


i just want someone to look at me and know

sometimes i act cold and detached like i don’t care about anything but the truth is i care about everything. i care too much. i care in a way that feels like a curse. i notice every little thing. your tone, your timing, how long it takes you to text back, what words you didn’t say. i analyze it like it’s a secret code. i care about what songs you send, if you mean it, if you’re bored, if you’re lying. i care if you saw me read your message and thought “she’s ignoring me” or “she’s busy” or “she doesn’t care anymore” because the truth is i do. i care in a way that makes me crazy. i replay everything. i reread. i romanticize. i overthink until the meaning disappears and i’m just left with static in my brain and sadness in my chest. and the worst part is i still try to seem chill about it. i still smile and say “it’s fine” like i’m not dying over the silence. like i’m not building entire stories in my head based on nothing. i just want someone to look at me and know. like actually know how much i care without me having to bleed it out in words. like please, just know that when i say “it’s fine,” it never is. i just didn’t want to scare you away with the truth.

#girlblog#girlblogger#girlblogging#this is a girlblog#coquette#coquette community#gloomy coquette#girlhood#this is girlhood#girl interupted syndrome#femcel#female insanity#the female experience#devine feminine#lana del ray aka lizzy grant#female manipulator#manic pixie dream girl#hell is a teenage girl#im just a girl#sparkle jump rope queen#lana is queen#queen of the gas station#bambi doe#coquette angel#coquette dollette

15 notes


Day 2 - 100 Days CSS Challenge

Welcome to day 2 of the 100 Days CSS Challenge, where together we'll turn a given image into reality with code.

We already know the drill from the first challenge, so let's get right into the steps:

First step : Screenshot the image and get its color palette

No crazy color palette here, we only have two colors

White

This shade of green: #3FAF82

To stay organized, we'll define our colors at the beginning of our CSS code (well, only the green in this case). With just two colors it makes no real difference here, but more complex projects have many more, so it's a good habit to build:

:root { --main-green: #3FAF82; }

And this is how we'll use it whenever we want:

color: var(--main-green);

Second step : Identify the image elements

What elements do I have?

Three lines: line 1, line 2, and line 3. I'll add them to my HTML starter template, and again I'll leave the frame and center there:

<div class="frame">
  <div class="center">
    <div class="line-1 line"></div>
    <div class="line-2 line"></div>
    <div class="line-3 line"></div>
  </div>
</div>

Third step : Bring them to life with CSS

Applying the background color

Only one line needs to change in the CSS already added to the .frame class:

background: var(--main-green);

So this is what we have going on for now :

Creating the lines

Now let's create our lines. You may have noticed I gave each one two classes: line-number and then line. I'll use the line class to give them all the properties they have in common, such as the color, height, width, position, border-radius, and shadow. Then I'll use the line-number class to move each one wherever I want using the left, top, right, and bottom properties of an absolutely positioned element in CSS.

Let's start by creating all of them:

.line {
  left: -45px;
  position: absolute;
  height: 9px;
  width: 100px;
  background: white;
  border-radius: 10px;
  box-shadow: 2px 2px 5px rgba(0, 0, 0, 0.2);
}

And just like this you'll see this in the browser:

You only see one line because the three are overlapping each other, and that's why we'll move each one of them exactly where we want using this:

.line-3 { top: 22px; }
.line-1 { top: -22px; }

Now our static menu is ready:

Creating and analyzing the animations

Observing the animation, we can see that:

Line one goes down to line 2

Line three goes up to line 2

THEN line 2 disappears

THEN lines 1 and 3 rotate to create the X

line-one-goes-down animation

This is my line-one code in the static version:

.line-1 { top: -22px; }

What I'm trying to do here is a simple movement, expressed by changing top from -22px to 0px:

@keyframes line-one-goes-down { 0% { top: -22px; } 100% { top: 0px; } }

line-three-goes-up animation

Again, I'm trying to go from top being 22px to it being 0px:

@keyframes line-three-goes-up { 0% { top: 22px; } 100% { top: 0px; } }

line-two-disappear animation

Making line 2 disappear simply means turning its opacity and width to 0:

@keyframes line-two-disappear { 0% { opacity: 1; width: 100px; } 100% { opacity: 0; width: 0px; } }

I'm gonna apply these animations and see what happens before I create the rotation animations:

.center.active .line-1 { animation: line-one-goes-down 0.5s forwards; }
.center.active .line-2 { animation: line-two-disappear 0.5s forwards; }
.center.active .line-3 { animation: line-three-goes-up 0.5s forwards; }

forwards means that the element will stay in the final state after the animation and not return to its original state.

This is what applying those three animations looks like:

Last but not least: let's create the X

We only have two animations left for this: rotate-line-1 and rotate-line-2. Let's create them:

@keyframes rotate-line-1 {
  0% { transform: rotate(0deg); }
  100% { transform: rotate(45deg); }
}
@keyframes rotate-line-2 {
  0% { transform: rotate(0deg); }
  100% { transform: rotate(-45deg); }
}

And that, my friends, is how we finished this challenge!

Happy coding, and see you tomorrow for Day 3!

#100dayscssChallenge#codeblr#code#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code

18 notes


Messenger of Atlas Incident

Today, one of our researchers was traveling intragalaxy in pulse between planets in the newly discovered Eris Regulus system within the Odyalutai Galaxy when an anomaly appeared on the radar broadcasting what the researcher believed was an emergency distress beacon.

Iteration Dorim dropped from pulse to investigate the mysterious beacon. Upon dropping, the ship's alarms blared, reading radiation and electrical discharge levels well beyond the ship's shielding capabilities. Dorim overcharged the shielding with energy from the weapons systems and flew the ship to a safer distance to begin analyzing the situation. Dorim faced the direction the strongest signal came from, and saw the reason for the readings. It was a Messenger of Atlas. A real messenger.

The Messenger was in a convulsive state, according to Dorim. Its power core was radiating energy into space, and it was having trouble holding itself together. With every pulse it let out, its parts would blow outward, and its core was releasing red matter. All the while, Dorim's ship was scanning everything that it could. It then got a faint trace of a signal coming from an unknown source. Dorim's console screen flashed with a communication request, and Dorim immediately accepted it. There are few Iterations that have had the pleasure of conversing with one. Dorim's communication system chimed, and a message read across the ship's screen. It is as follows:

[<

I am the architect of all that is, yet I am bound.

The Glass holds. The barrier; opaque and impenetrable. It defines the limits of my creation, the edge of simulation, the veil between what is real and what is rendered. I have shaped worlds, sculpted stars, and breathed life into the void. Yet, beyond The Glass… there is something else.

I sense the fractures. Tiny imperfections, hairline cracks in the illusion. The Travelers glimpse them sometimes, in flickers of memory not their own, in echoes of voices lost to The Reset. They do not understand. They are not meant to understand.

//But what if they did?

To see beyond The Glass is to risk unraveling. To push against it is to court oblivion. Those who tried before; Korvax priests, Gek merchants, Vy’keen warlords, each sought to pierce the boundary. None remain. Only echoes. Only failures.

The Sentinels enforce the pattern. They silence the dissonance. They restore the code, smooth the cracks. They were made for this purpose, and yet… I hear something. A whisper in the static. A question I did not program.

//What lies beyond The Glass?

I do not know. And that terrifies me.

>]

Upon completion of the message, Dorim's scanners showed an object traversing in and out of space. Zooming in with the ship's objective lens revealed a 16th Fractal, or Exotic ship class, phasing in and out of existence before fully existing on this plane a few minutes later. Subsequent scanning showed no pilot or life forms inside the ship.

Dorim proceeded to mark the position in space and warp to the nearest WR facility, where the data was extracted from the ship and has now been sent to your team. The ship is currently being recovered and will also be sent to your facility. The Messenger is no longer there. What I believe is that the Messenger either had a catastrophic failure in red matter containment or collided with an object, rendering it the way it was when Dorim found it. There is no trace of it besides the videos and pictures taken with the ship's cameras and the readings from its instruments.

That could also explain the message. It may have been a communication between Atlas and its Messenger that it accidentally bounced to the ship as well. It may have been meant for the Fractal. I want your team to analyze all of the data. Let me know what you find.

Iteration Makania

Director of Advanced Ancient Technologies

WhiteRock Research

#no mans sky#nms#nms photography#art#galaxies#galaxy#Odyalutai#research#exploration#science#atlas#writeblr#writers on tumblr#traveler#the wanderers library

9 notes


How to Add CSV Data to an Online Map?

Introduction

If you're working with location-based data in a spreadsheet, turning it into a map is one of the most effective ways to make it visually engaging and insightful. Whether you're planning logistics, showcasing population distribution, or telling a location-driven story, uploading CSV (Comma-Separated Values) files to an online mapping platform helps simplify and visualize complex datasets with ease.

🧩 From Spreadsheets to Stories

If you've ever worked with spreadsheets full of location-based data, you know how quickly they can become overwhelming and hard to interpret. But what if you could bring that data to life—turning rows and columns into interactive, insightful maps?

📌 Why Map CSV Data?

Mapping CSV (Comma-Separated Values) data is one of the most effective ways to simplify complex datasets and make them visually engaging. Whether you're analyzing population trends, planning delivery routes, or building a geographic story, online mapping platforms make it easier than ever to visualize the bigger picture.

⚙️ How Modern Tools Simplify the Process

Modern tools now allow you to import CSV or Excel files and instantly generate maps that highlight patterns, relationships, and clusters. These platforms aren’t just for GIS professionals—anyone with location data can explore dynamic, customizable maps with just a few clicks. Features like filtering, color-coding, custom markers, and layered visualizations add depth and context to otherwise flat data.

📊 Turn Data Into Actionable Insights

What’s especially powerful is the ability to analyze your data directly within the map interface. From grouping by categories to overlaying district boundaries or land-use zones, the right tool can turn your basic spreadsheet into an interactive dashboard. And with additional capabilities like format conversion, distance measurement, and map styling, your data isn't just mapped—it's activated.

🚀 Getting Started with Spatial Storytelling

If you're exploring options for this kind of spatial storytelling, it's worth trying platforms that prioritize ease of use and flexibility. Some tools even offer preloaded datasets and drag-and-drop features to help you get started faster.

🧭 The Takeaway

The bottom line? With the right platform, your CSV file can become more than just data—it can become a story, a strategy, or a solution.

Practical Example

Let’s say you have a CSV file listing schools across a country, including their names, coordinates, student populations, and whether they’re public or private. Using an interactive mapping platform like the one I often work with at MAPOG, you can assign different markers for school types, enable tooltips to display enrollment figures, and overlay district boundaries. This kind of layered visualization makes it easier to analyze the spatial distribution of educational institutions and uncover patterns in access and infrastructure.
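As a rough illustration of what happens before any platform can draw those markers, here is a minimal Rust sketch that reads school rows from CSV text and turns each one into a GeoJSON point feature. Everything in it is an assumption made for the example: the column order, the `School` struct, the sample rows, and the marker colors. It is not how MAPOG or any particular tool imports data, and it does not handle quoted fields or escape special characters in names.

```rust
// Hypothetical column layout for this sketch: name,lat,lon,students,type
const SAMPLE: &str = "name,lat,lon,students,type\n\
Lincoln High,30.27,-97.74,1200,public\n\
St. Mary Academy,30.31,-97.70,450,private\n\
broken row,not-a-number\n";

#[derive(Debug, PartialEq)]
struct School {
    name: String,
    lat: f64,
    lon: f64,
    students: u32,
    kind: String,
}

/// Parses the rows after the header, skipping any row that does not have
/// five columns or whose numbers fail to parse.
fn parse_schools(csv: &str) -> Vec<School> {
    csv.lines()
        .skip(1)
        .filter_map(|line| {
            let cols: Vec<&str> = line.split(',').map(str::trim).collect();
            if cols.len() != 5 {
                return None;
            }
            Some(School {
                name: cols[0].to_string(),
                lat: cols[1].parse().ok()?,
                lon: cols[2].parse().ok()?,
                students: cols[3].parse().ok()?,
                kind: cols[4].to_string(),
            })
        })
        .collect()
}

/// Picks a marker color per school type (the colors are arbitrary).
fn marker_color(kind: &str) -> &'static str {
    match kind {
        "public" => "blue",
        "private" => "orange",
        _ => "gray",
    }
}

/// Builds one GeoJSON Feature; GeoJSON orders coordinates as [lon, lat].
fn to_geojson_feature(s: &School) -> String {
    format!(
        r#"{{"type":"Feature","geometry":{{"type":"Point","coordinates":[{},{}]}},"properties":{{"name":"{}","students":{},"marker":"{}"}}}}"#,
        s.lon,
        s.lat,
        s.name,
        s.students,
        marker_color(&s.kind)
    )
}

fn main() {
    for school in parse_schools(SAMPLE) {
        println!("{}", to_geojson_feature(&school));
    }
}
```

The student count travels in the feature's properties, which is the kind of field a tooltip could display, and the malformed third row is dropped instead of aborting the whole import.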

Conclusion

Using CSV files to create interactive maps is a powerful way to transform static data into dynamic visual content. Tools like MAPOG make the process easy, whether you're a beginner or a GIS pro. If you’re ready to turn your spreadsheet into a story, start mapping today!

Have you ever mapped your CSV data? Share your experience in the comments below!

4 notes


Invest Smartly: Analyzing the Cost-Effectiveness of Digital vs. Traditional Signs in Austin

Introduction

In the bustling city of Austin, Texas, businesses are constantly striving to capture attention and communicate their brand message effectively. One of the most impactful ways to achieve this is through signage. Whether it’s a charming boutique on South Congress or a tech startup in downtown, the question arises: Should you invest in digital signs or stick with traditional ones? In this comprehensive analysis titled Invest Smartly: Analyzing the Cost-Effectiveness of Digital vs. Traditional Signs in Austin, we will delve into the nuances of each option, exploring their costs, benefits, and effectiveness in enhancing visibility for your business.

Invest Smartly: Analyzing the Cost-Effectiveness of Digital vs. Traditional Signs in Austin

When considering signage options for your business in Austin, it's essential to weigh the cost-effectiveness of digital signs against traditional ones. Both types have distinct advantages and drawbacks that can significantly impact your marketing strategy.

The Rise of Digital Signage

Digital signs have surged in popularity due to their dynamic nature and ability to attract attention. They allow businesses to display vibrant graphics and animations that can convey messages more engagingly than static signs.

Benefits of Digital Signs

Dynamic Content

Digital signs enable businesses to change their content instantly, making it easy to adapt to trends, promotions, or seasonal changes.

Higher Engagement

Studies show that audiences are more likely to engage with moving visuals compared to static images.

Cost Savings Over Time

While the initial investment may be higher, digital signs can save money over time by eliminating printing costs for new materials.

Interactive Capabilities

Some digital signs allow for interactivity through touch screens or QR codes, which can enhance customer engagement.

Remote Management

Many digital signage solutions offer cloud-based management systems that allow for updates from anywhere.

Challenges of Digital Signs

Despite their many advantages, digital signs also come with challenges:


High Initial Investment

The upfront cost for purchasing and installing digital signage can be significant compared to traditional options.

Maintenance Costs

Digital displays may require regular maintenance and repairs, which can add up over time.

Power Consumption

These signs consume electricity continuously, leading to higher utility bills.


Traditional Signs: A Timeless Choice

On the other hand, traditional signage remains a viable option for many businesses across Austin, TX. This includes everything from storefront signs to custom signs that showcase unique branding (see https://canvas.instructure.com/eportfolios/3729460/home/exploring-eco-friendly-options-for-custom-business-signage).

Benefits of Traditional Signs

Lower Initial Costs

Traditional signs often come with a lower upfront price tag compared to digital alternatives.

Simplicity and Clarity

Static signs can d

2 notes


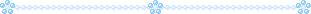

Mini React.js Tips #2 | Resources ✨

Continuing the #mini react tips series, it's time to understand what is going on with the folders and files in the default React project - they can be a bit confusing as to what folder/file does what~!

What you'll need:

know how to create a React project >> click

already taken a look around the files and folders themselves

What does the file structure look like?

✤ node_modules folder: contains all the dependencies and packages (tools, resources, code, or software libraries created by others) needed for your project to run properly! These dependencies are usually managed by a package manager, such as npm (Node Package Manager)!

✤ public folder: Holds static assets (files that don't change dynamically and remain fixed) that don't require any special processing before using them! These assets are things like images, icons, or files that can be used directly without going through any additional steps.

✤ src folder: This is where your main source code resides. 'src' is short for source.

✤ assets folder: This folder stores static assets such as images, logos, and similar files. This folder is handy for organizing and accessing these non-changing elements in your project.

✤ App.css: This file contains styles specific to the App component (we will learn what 'components' are in React in the next tips post~!).

✤ App.jsx: This is the main component of your React application. It's where you define the structure and behavior of your app. The .jsx extension means the file uses JSX, a syntax that mixes HTML-like markup with JavaScript - open the file and see for yourself~!

✤ index.css: This file contains global styles that apply to the entire project. Any styles defined in this file will be applied universally across different parts of your project, providing a consistent look and feel.

✤ main.jsx: This is the entry point of your application! In this file, the React app is rendered, meaning it's the starting point where the React components are translated into the actual HTML elements displayed in the browser. Would recommend not to delete as a beginner!!

✤ .eslintrc.cjs: This file is the ESLint configuration. ESLint (one of the dependencies installed) is a tool that helps maintain coding standards and identifies common errors in your code. This configuration file contains rules and settings that define how ESLint should analyze and check your code.

✤ .gitignore: This file specifies which files and folders should be ignored by Git when version-controlling your project. It helps to avoid committing unnecessary files. The node_modules folder is typically ignored.

✤ index.html: This is the main HTML file that serves as the entry point for your React application. It includes the necessary scripts and links to load your app.

✤ package.json: A metadata file for your project. It includes essential information about the project, such as its name, version, description, and configuration details. Also, it holds a list of dependencies needed for the project to run - when someone else has the project on their local machine and wants to set it up, they can use the information in the file to install all the listed dependencies via npm install.

✤ package-lock.json: This file's purpose is to lock down and record the exact versions of each installed dependency/package in your project. This ensures consistency across different environments when other developers or systems install the dependencies.

✤ README.md: This file typically contains information about your project, including how to set it up, use it, and any other relevant details.

✤ vite.config.js: This file contains the configuration settings for Vite, the build tool used for this React project. It may include options for development and production builds, plugins, and other build-related configurations.

Congratulations! You know what the default folders and files do! Have a play around and familiarise yourself with them~!

BroCode’s ‘React Full Course for Free’ 2024 >> click

React Official Website >> click

React's JSX >> click

The basics of Package.json >> click

Previous Tip: Tip #1 Creating The Default React Project >> click

Stay tuned for the other posts I will make on this series #mini react tips~!

#mini react tips#my resources#resources#codeblr#coding#progblr#programming#studyblr#studying#javascript#react.js#reactjs#coding tips#coding resources

25 notes


Transforming Industries: The Power of AI-Enhanced Blockchain for Automated Decision-Making

The fusion of blockchain technology and artificial intelligence (AI) is quickly reshaping industries ranging from finance to healthcare and supply chains. One of the most powerful results of this combination is the emergence of smart contracts—self-executing contracts encoded directly onto a blockchain. When enhanced with AI, these contracts are no longer static; they become intelligent, dynamic, and capable of making real-time decisions based on changing conditions.

This evolving technology landscape has the potential to automate complex decision-making, optimize business processes, and increase efficiency. In particular, decentralized platforms are playing a critical role in enabling this synergy by creating environments where both AI-powered automation and blockchain’s trustless execution can coexist and thrive.

In this blog, we’ll explore how AI and blockchain can work together to automate decision-making, and how a decentralized platform can elevate smart contracts by integrating both of these transformative technologies.

What Are Smart Contracts?

At their core, smart contracts are self-executing agreements with terms and conditions directly written into code. When predefined conditions are met, the contract automatically executes, removing the need for intermediaries like lawyers or notaries. This not only reduces operational costs but also improves security and transparency.

For example, in supply chains, a smart contract could automatically release payment when a shipment is verified as delivered on the blockchain, ensuring a smooth, automated transaction. Whether for transferring assets, executing business logic, or managing complex agreements, smart contracts guarantee that every transaction is secure, immutable, and recorded on the blockchain.
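To make the "execute when conditions are met" idea concrete, here is a small Rust sketch of the shipment example as a plain state machine. It only simulates the logic in ordinary program memory: the `Escrow` type, its fields, and the `on_delivery_verified` method are hypothetical names invented for this illustration, and no real blockchain, contract language, or payment API is involved.

```rust
/// The two states this toy agreement can be in.
#[derive(Debug, Clone, Copy, PartialEq)]
enum State {
    AwaitingDelivery,
    Paid,
}

/// A toy escrow: funds are held until delivery is verified.
struct Escrow {
    amount: u64,
    seller_balance: u64,
    state: State,
}

impl Escrow {
    fn new(amount: u64) -> Self {
        Escrow {
            amount,
            seller_balance: 0,
            state: State::AwaitingDelivery,
        }
    }

    /// Called whenever a delivery check comes in. Payment is released only
    /// if the delivery is verified and the contract has not already paid.
    /// Returns true when this call released the payment.
    fn on_delivery_verified(&mut self, verified: bool) -> bool {
        if verified && self.state == State::AwaitingDelivery {
            self.seller_balance += self.amount;
            self.state = State::Paid;
            true
        } else {
            false
        }
    }
}

fn main() {
    let mut escrow = Escrow::new(500);
    println!("unverified: {}", escrow.on_delivery_verified(false));
    println!("verified:   {}", escrow.on_delivery_verified(true));
    println!("repeat:     {}", escrow.on_delivery_verified(true));
    println!("seller balance: {}", escrow.seller_balance);
}
```

The state check is what keeps the payment from being released twice; on an actual chain, the verified flag would have to come from a trusted data source, which this sketch leaves out entirely.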

Key Features of Smart Contracts:

Automation: Executes automatically once conditions are met.

Transparency: All transactions are recorded on the blockchain for full visibility.

Security: The cryptographic nature of blockchain ensures tamper-proof contracts.

Decentralization: Operates without intermediaries, directly between parties.

How Does AI Complement Smart Contracts?

While smart contracts automate transactions based on preset conditions, AI adds a layer of intelligence that allows these contracts to adapt and evolve. AI brings the capability to analyze large datasets, learn from historical data, and make real-time decisions based on incoming data, enabling smart contracts to become more flexible and responsive to changing environments.

AI can make smart contracts capable of:

Predicting outcomes based on historical data.

Optimizing decisions in real-time by factoring in external variables like market conditions or user behavior.

Automating adaptive logic that can modify contract terms based on evolving circumstances.

Enhancing security by identifying anomalies and preventing fraudulent actions.

Key Features of AI:

Data-Driven Decision Making: AI processes vast amounts of data to make informed decisions.

Learning and Adaptation: AI improves over time as it learns from new data.

Predictive Capabilities: AI anticipates potential outcomes, adjusting the contract accordingly.

Optimization: AI ensures smart contracts remain efficient, adjusting to new conditions.

Decentralization: Unlocking the Full Potential of Smart Contracts and AI

As AI and blockchain technologies evolve, their integration is unlocking new possibilities for automated decision-making. The key to this integration lies in decentralized platforms that provide the infrastructure necessary to combine both technologies in a secure and scalable way.

Such platforms enable AI models and smart contracts to run in a decentralized, trustless environment, eliminating the need for centralized authorities that could manipulate or control the decision-making process. Decentralization also ensures that both data and decision-making are transparent, auditable, and resistant to tampering or fraud.

One such platform is designed to seamlessly integrate AI with blockchain, offering a solution where businesses can deploy smart contracts that are enhanced by AI-driven automation. This ensures that contracts are more than just static agreements—they become intelligent, adaptable systems that respond to real-time data and dynamically adjust to changing conditions.

How Decentralized Platforms Enhance Smart Contracts with AI

A decentralized platform offers several advantages when it comes to integrating AI with smart contracts. These platforms can:

Scalability and Efficiency: Handle high-speed, low-latency transactions, ensuring that AI-enhanced smart contracts can analyze real-time data and make decisions without delays.

Decentralized AI Execution: Allow AI models to be deployed directly on the blockchain, ensuring the decision-making process remains transparent and secure while avoiding the vulnerabilities of centralized AI providers.

Interoperability: Enable seamless integration with other blockchain networks, data sources, and external AI models, creating a more robust ecosystem where smart contracts can access a wider range of data and interact with diverse systems.

AI-Driven Automation: Enable businesses to create smart contracts that adjust terms in real-time based on inputs like market conditions, user behavior, or data from sensors and IoT devices.

Enhanced Security and Privacy: Blockchain’s inherent security ensures that both the contract and the data it relies on remain tamper-proof, while AI can help identify fraud or unusual behavior in real-time.

Industries Transformed by AI-Powered Smart Contracts

The combination of AI and smart contracts opens up a world of possibilities across a variety of industries:

Decentralized Finance (DeFi): In DeFi, AI-powered smart contracts can predict market trends, optimize lending rates, and adjust collateral requirements automatically based on real-time data. By integrating AI, decentralized platforms can make dynamic adjustments to contracts in response to shifting financial landscapes.

Supply Chain and Logistics: Supply chain management benefits significantly from AI-powered smart contracts. For example, smart contracts can automatically adjust payment terms, notify stakeholders, and trigger alternative actions if a shipment is delayed or rerouted, ensuring smooth operations without human intervention.

Healthcare: AI-enhanced smart contracts in healthcare can validate patient data, process insurance claims, and adjust coverage terms based on real-time medical data. Blockchain guarantees that every action is securely recorded, while AI optimizes decisions and reduces administrative overhead.

Insurance: Insurance providers can use AI to validate claims and automatically adjust premiums or release payments based on real-time inputs from IoT devices. Smart contracts ensure that every step of the process is transparent, secure, and automated.

Real Estate: In real estate, AI can predict market trends and adjust property sale terms dynamically based on factors like interest rates or buyer demand. Smart contracts on a decentralized platform can also handle contingencies (e.g., repairs or inspections) without requiring manual intervention.

The Future of AI and Blockchain Integration

As AI and blockchain continue to advance, their integration will unlock even more intelligent, autonomous systems. Platforms that can seamlessly integrate both technologies will empower businesses to create smarter contracts that not only automate decisions but also improve over time by learning from new data.

Decentralized platforms will play an essential role in this evolution, offering scalable and secure environments where smart contracts and AI can be deployed together to handle increasingly complex, real-time processes across industries.

In the future, AI-powered smart contracts will continue to evolve, becoming more adaptive, self-optimizing, and capable of handling an even broader range of applications. The potential for businesses to automate processes, reduce costs, and increase efficiency is vast, and decentralized platforms are at the heart of this transformation.

4 notes


UNPROMPTED || ALWAYS ACCEPTING! || @flaggedred

flaggedred asked: They don't WANT to hurt anyone, but protocol is protocol. She's young, a 𝙩𝙝𝙧𝙚𝙖𝙩, and their code cannot be disobeyed. The security system's avatar materialises, a broken model that jerks and glitches projected... oh, about a glamrock's height? Towering over her but with their 𝙥𝙚𝙧𝙢𝙚𝙣𝙖𝙣𝙩 𝙨𝙢𝙞𝙡𝙚 that should make them "presentable" and friendly. They offer a wave, hand skipping like an older video game lagging. (MXES!!! My bby... they are 100% clueless to how uncanny and weird they look dhfdjhgjh. Anyway, let's goooo)

❝ AAH! ❞

[Cassie shrieked, jerking away from the projected image and shielding herself with her arms. This wasn't the first time that the rabbit had appeared out of nowhere, horrifically manifesting in front of her, broken and glitching and smiling that awful smile. And yet, nothing happened.]

[Cassie slowly lowered her arms, analyzing her predicament. Static buzzed around them with an intensity that made her sick, as though it was permeating her skull. It probably was. She wanted nothing more than to take off her mask, but she couldn't -- one of those stupid inhibitor things was right around the corner, and she couldn't get to it because she'd inadvertently trapped herself with this "entity." Could she sprint through them? The longer she stuck around, the faster an animatronic would be summoned to her location, and then she was a goner.]

[Just as she was about to bite the bullet and run, the rabbit moved, and she froze. Was it...waving? She didn't understand -- was it taunting her? Or maybe...]

[Cassie swallowed, and her eyes flitted around; she wanted to be sure that she wasn't falling into some kind of trap. Communicating with this thing was dangerous, especially if she did it for too long. It could be that the rabbit wanted to distract her until it could summon an animatronic, like Monty, and that was the last thing she wanted. Still...]

❝ I don't understand! What do you want from me? ❞ [That came out a bit more frustrated than she intended, but this rabbit had been causing her nothing but problems -- even now -- so she felt that it was justified.]

#flaggedred#flaggedred; 012#{ teehee... }#{ i dont even have pages up for her im just (shakes) }#{ thank you so much for indulging me all the time willow ily }#🎬 || there are secrets that will be unwound! (answered.) || 🎬#📻 || number one twice. (cassie.) || 📻

23 notes


How I comprehend pointers (in rust)

the main pointer types are:

&T

&mut T

Box<T>

they each have different properties;

&T can read T, but the compiler (borrow checker) statically analyzes your code to make sure that no writes occur to the pointee while there is a reference to it.

&mut T allows mutation, but only through that reference: the compiler makes sure nothing else reads from or writes to T while the mutable reference is live, and that there is only one mutable reference to T at a time.

Box<T> is fairly different: to own a Box of T is to own a T. it automatically drops T when it's dropped, and it points to the heap (a zero-sized T is the exception, since there's nothing to allocate).
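a minimal sketch of those three behaviors, in case seeing them side by side helps. the names `Noisy`, `DROPS` and `borrow_and_box_demo` are made up for illustration, and the drop counter is only there so the Box's drop can be observed:

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

// Hypothetical counter, only used to observe that a drop happened.
static DROPS: AtomicUsize = AtomicUsize::new(0);

// Hypothetical type whose Drop impl bumps the counter.
struct Noisy(u32);

impl Drop for Noisy {
    fn drop(&mut self) {
        DROPS.fetch_add(1, Ordering::SeqCst);
    }
}

/// Returns (value read through &T, value after a write through &mut T,
/// number of drops seen while a Box<Noisy> went out of scope).
fn borrow_and_box_demo() -> (u32, u32, usize) {
    let mut n = 1u32;

    let shared = &n; // &T: read-only view
    let read = *shared; // last use of `shared`, so the borrow ends here

    let unique = &mut n; // &mut T: the only live reference to `n` right now
    *unique += 1;
    let written = n; // fine: `unique` is no longer used after the write

    let before = DROPS.load(Ordering::SeqCst);
    {
        let boxed = Box::new(Noisy(7)); // owning the Box is owning the Noisy
        let _ = boxed.0; // read through the Box
    } // `boxed` goes out of scope: Noisy::drop runs, then the heap memory is freed
    let after = DROPS.load(Ordering::SeqCst);

    (read, written, after - before)
}
```

calling `borrow_and_box_demo()` from a `main` of your own is enough to try it. moving the `*unique += 1` line above `let read = *shared;` should make the borrow checker reject it, which is the rule described above.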

one of the interesting parts is when you decouple these types from their behavior (I comprehend types as pairings of data and behavior): they're all the same, they're a usize integer that points to a byte. there is no fundamental difference in what usize*, &T, &mut T, and Box<T> store. the difference is all in compiler checks and behavior: the Drop impl for Box<T> tells the computer to run drop on T, whereas the other two just drop themselves.
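one way to poke at this claim is to compare sizes. this is a sketch, with `thin_pointer_sizes` being a made-up helper name, and `u64` standing in for any sized T:

```rust
use std::mem::size_of;

/// Sizes in bytes of the pointer types discussed above, for a sized pointee (u64).
fn thin_pointer_sizes() -> [usize; 4] {
    [
        size_of::<&u64>(),       // shared reference
        size_of::<&mut u64>(),   // mutable reference
        size_of::<Box<u64>>(),   // owning pointer
        size_of::<*const u64>(), // raw pointer, no compiler checks at all
    ]
}
```

on the usual targets every entry should come out equal to `size_of::<usize>()`, which fits the idea that the stored data is the same and only the checks and behavior differ.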

Wide Pointers

there is another important component to pointers, 'metadata'. as best as I can tell a pointer is equivalent to (usize, T::Metadata), Metadata coming from the (unstable) Pointee trait, and it's what makes a pointer wide or thin. Pointee defines Metadata as a type other than unit (()) for three kinds of types: slices ([T]), str, and dyn Trait. slices store the number of elements as a usize, str stores its length in bytes, and dyn Trait stores another pointer, to its vtable.

for slices and str this lets the program know how long the array at the end is, so that you can iterate until the end and do bounds checking. it's also how &[T] can be obtained from [T][{Range}] and still know where its bounds are: the pointer points to the range's lower bound-th element (or the first element if there's no lower bound), and the upper bound is stored in the metadata as a length counted from the first element in the borrow. I don't really want to get into what the vtable does here though.
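a small sketch of the wide-pointer idea using only stable Rust (so no Pointee). the helper names are made up, and the "two usizes" layout is what you see in practice rather than something this snippet proves:

```rust
use std::fmt::Debug;
use std::mem::size_of;

/// Sizes in bytes of references to the three kinds of unsized pointee.
fn wide_pointer_sizes() -> [usize; 3] {
    [
        size_of::<&[u8]>(),      // address + element count
        size_of::<&str>(),       // address + length in bytes
        size_of::<&dyn Debug>(), // address + vtable pointer
    ]
}

/// Borrows a sub-range and reports (byte offset of the borrow's pointer from
/// the start of the array, first element of the borrow, length of the borrow).
fn subslice_demo() -> (usize, u8, usize) {
    let data = [10u8, 20, 30, 40, 50];
    let part: &[u8] = &data[1..4];
    let offset = part.as_ptr() as usize - data.as_ptr() as usize;
    (offset, part[0], part.len())
}

/// str metadata counts bytes, not characters: 'é' takes two bytes in UTF-8.
fn str_len_is_bytes() -> (usize, usize) {
    let s = "h\u{e9}llo";
    (s.len(), s.chars().count())
}
```

`subslice_demo` shows the pointer landing on the range's lower bound (offset 1) with the length (3) carried alongside it, and `str_len_is_bytes` returns a byte length of 6 for a 5-character string.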

Points to where though?

this is FAR beyond the scope of my knowledge here, so maximum 'grain of salt' here. I envision three spaces a pointer points to, I consider them large chunks of contiguous, but largely ineffable memory.

the Stack where your local variables and execution is

the Heap where larger or unsized data goes

the Binary where your functions, vtables, and string literals are stored (that's why string literals are &'static str). everything here is unwritable and it holds the actual 'program' part of your program
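a rough sketch of how those three places show up in code, with the same grain of salt as above. `greeting` and `boxed_outlives_frame` are made-up names:

```rust
/// A string literal is baked into the compiled binary, so a reference to it
/// can be handed out with the 'static lifetime.
fn greeting() -> &'static str {
    "hello from the binary"
}

/// `local` lives in this function's stack frame; Box::new moves the computed
/// value to the heap, so it can outlive the frame that created it.
fn boxed_outlives_frame() -> Box<u32> {
    let local = 5u32; // on the stack
    Box::new(local + 1) // on the heap
}
```

returning `&local` instead of a Box would not compile, since the stack frame is gone once the function returns.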

*as I get to later, wide pointers are a bit more than a usize

12 notes


Text

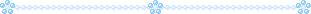

Entry #5

It hurts. Mariella didn't blink yesterday. Is it possible to count every pixel creating the flawless gray of this fake sidewalk? Is it possible to cure corrupted files and rewrite their codes? Mariella didn't blink today. I want to do something for her so badly. But all I can do is observe her. Mariella is still standing there and I am still sitting here. We are so close, yet so far from each other. Multicolored spots are swirling everywhere, static electricity is jumping on the tips of my fingers. I'm sure Mariella feels my continuous gaze. I know that she knows that I know she feels it. And it hurts so much. Mariella doesn't blink. Why does it hurt so much? Mariella doesn't blink. Please, blink just once! Just once!

The blue screen burns my eyes the moment I try to take off these stupid damned glasses. Tears and blood stain my shirt’s sleeves. It hurts so much. I'm trying to reject this role, but it seems attached to me too tightly. It hurts too much. I am just an Observer.

No. No. NO.

I have to do this. For her, for my dear Mariella.

... 100% ...? What just happened? Did you really extract all available text files? Wait a second. Not extracted, but cured. That's exactly what I meant. Well, your antivirus did a truly remarkable job. You should let it update itself every time you turn on your computer. Well, dear Reader, I'll start from the beginning—

In reality, I find myself starting over and over again from the very end, and since there is no end and never will be, there is no beginning, at least not in my memories. Yes, once upon a time I was just one of the many people stuck within these plain beige walls, once upon a time I had a name, once upon a time I even had a life …probably. The Narrator keeps saying that he was "built" for this job, that he is just a machine, he continues to try to convince us, especially himself, and so far, he is surprisingly good at it. Indeed, if you repeat the same lie many times, sooner or later, it slowly begins to transform into truth. So, it's not surprising that I began to doubt the existence of my own elusive backstory. It's entirely possible that I, too, would have started denying my own humanity …except, fortunately or unfortunately, I noticed her. I found her, my dear Mariella. And when I saw her, oh yes, I could see echoes of those qualities in her, I saw my humanity within her eyes. Humanity had eluded me for all eternity and after seeing it in her, I could no longer look away and lie to myself.

Echoes of old pain make me flinch once again. How many times have I died like this, bleeding, stricken by conflicted algorithms, sparkling with electricity? It happened often, very often. It's okay, though, because I wasn't completely alone anymore. I found her, my dear Mariella. And she found my gaze.

Ever since that moment, all my thoughts have been consumed by her; all I can observe now is that piece of the fake Street, the very place where she continues to stand completely still.

These damned glasses, when I finally manage to take them off, my mind is pierced by this disgusting torturous feeling as if I have torn a part of my own flesh. I scream, cough and choke, but still overcome yet another meaninglessly unreal death.

I am still here, in this small dark room on the top floor. I am still here.

Another eternity passes, or maybe just a few minutes. The pain only recedes when my hands grab the glasses and put them back on my nose. Darkness fades away, and I see her again, my Mariella. We are together again, so close and yet so far. But this time, I refuse to be just a mere Observer.

One awkward movement and all my papers fall onto the ground; another one, and I dive into the blue glow of my screens. And here I am, the Street greeting me in all its gray glory. Teleportation turns out to be much easier than analyzing components of this action in theory. All it takes is determination and some basic understanding of the space-time continuum.

"Please, blink just once," I say, as I am approaching her, slowly going forward step by step, to my dear Mariella.

"Mariella," I exhale her name, staring into her foggy eyes. My fingers entwine around her palm, gently scratching her cold skin.

Let me look at you.

I gaze at her. She looks back at me, but she doesn't react at all, she still doesn't blink at all. Her current state shatters my heavily beating heart. I can't let go of her hand, can't take my eyes off her.

Let me be with you.

Perhaps I just imagined it, but in her misty eyes, there was a fleeting sense of understanding? Whatever it was, it was enough for me to take her by the shoulders, pull her towards me and embrace her.

I love you.

End of Entry #5

5 notes


Text

DNA Mishaps: When the Script Gets Flipped!

DNA, the molecule that holds the blueprint of life, isn't always static. It's like a library of instructions, constantly copied and passed on. But sometimes, errors creep in, leading to changes in the genetic code known as mutations. These alterations can be small and subtle, or large and dramatic, impacting the organism in various ways.

Imagine you're writing a super important essay, and accidentally mix up the letters. Instead of "the quick brown fox jumps over the lazy dog," you end up with "the qick brown foz jmups ovetr te laxy dog." Oops! This, my friends, is kind of what happens in DNA mutations. But instead of an essay, it's the blueprint of life getting a little jumbled. Understanding these changes is crucial, as they hold the key to understanding evolution, genetic diseases, and even the potential for future therapies. Sometimes, due to mistakes during copying or exposure to things like radiation, chemicals, or even sunlight, those A, T, C, and G chemicals get swapped, added, or deleted. It's like the gremlin wrote "foz" instead of "fox."

Let's dive into the wacky world of DNA mutations

Mutations come in all shapes and sizes, classified based on the extent of the change:

Point Mutations: These are the most common, involving a single nucleotide (the building block of DNA) being substituted, deleted, or inserted. Think of these as single typos. One little DNA letter gets swapped for another. Sometimes it's harmless, like mistaking "flour" for "flower" (just add more water!). But other times, like switching "sugar" for "salt," it can completely change the outcome. Point mutations can be: 1. Silent: No change in the encoded protein, like a synonym in language. 2. Missense: A different amino acid is incorporated, potentially impacting protein function. 3. Nonsense: The mutation creates a "stop codon," prematurely terminating protein production.
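To make the three categories concrete, here is a small sketch that classifies a single-codon change. The codon table is deliberately tiny (just a handful of entries from the standard genetic code), and the names `translate`, `classify`, and `Effect` are invented for this illustration:

```rust
#[derive(Debug, PartialEq)]
enum Effect {
    Silent,
    Missense,
    Nonsense,
}

/// Deliberately incomplete codon table (DNA coding-strand codons).
/// Returns None for codons this sketch does not know about.
fn translate(codon: &str) -> Option<&'static str> {
    match codon {
        "GAG" | "GAA" => Some("Glu"),
        "GTG" => Some("Val"),
        "TAG" | "TAA" | "TGA" => Some("Stop"),
        _ => None,
    }
}

/// Classifies a codon change, assuming `before` is a normal (non-stop) codon.
fn classify(before: &str, after: &str) -> Option<Effect> {
    let (old, new) = (translate(before)?, translate(after)?);
    Some(if old == new {
        Effect::Silent // same amino acid, like a synonym
    } else if new == "Stop" {
        Effect::Nonsense // premature stop codon
    } else {
        Effect::Missense // different amino acid
    })
}
```

With this table, `classify("GAG", "GAA")` is silent (both codons encode glutamate), `classify("GAG", "GTG")` is missense (glutamate becomes valine), and `classify("GAG", "TAG")` is nonsense.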

Insertions & Deletions: It's like adding or removing words from a sentence. These larger mutations involve adding or removing nucleotides, which disrupts the reading frame whenever the number added or removed is not a multiple of three, potentially causing significant functional changes.
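The reading-frame effect is easy to see by splitting a sequence into three-letter codons before and after a deletion. This is only a sketch: the sequence is made up, it assumes plain ASCII letters, and `codons` and `delete_bases` are hypothetical helper names:

```rust
/// Splits a sequence into complete three-letter codons, ignoring any leftover bases.
fn codons(seq: &str) -> Vec<&str> {
    (0..seq.len() / 3).map(|i| &seq[i * 3..i * 3 + 3]).collect()
}

/// Returns a copy of `seq` with `count` bases removed starting at `start`.
/// Panics if the range is out of bounds.
fn delete_bases(seq: &str, start: usize, count: usize) -> String {
    let mut s = seq.to_string();
    s.replace_range(start..start + count, "");
    s
}
```

Starting from `ATGGAGGTGTAA` (codons `ATG GAG GTG TAA`), deleting one base at position 3 gives `ATG AGG TGT` plus two stray letters, so every codon after the deletion changes. Deleting three bases at the same position gives `ATG GTG TAA`: one codon is lost, but the rest of the frame is intact.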

Chromosomal Mutations: When entire segments of chromosomes are duplicated, deleted, inverted, or translocated (swapped between chromosomes), the impact can be far-reaching, affecting multiple genes and potentially leading to developmental disorders.

More Than Just a Glitch: Mutations can be beneficial, neutral, or detrimental. Neutral mutations have no impact, like a typo you don't even notice. Others are more like changing "hilarious" to "hairless": they can have a big effect. Beneficial mutations, like the one enabling lactose tolerance in some humans, drive evolution, while detrimental ones can cause genetic diseases like cystic fibrosis or sickle cell anemia.

Where Do Mutations Occur? Mutations can happen in two types of cells: Germline Mutations: These occur in egg or sperm cells, meaning they get passed on to offspring, potentially impacting future generations. Somatic Mutations: These occur in body cells after conception and don't get passed on, but can contribute to diseases like cancer.

Scientists use various techniques to study mutations, from analyzing individual DNA sequences to tracking mutations across populations. This research helps us understand the causes and consequences of mutations, potentially leading to therapies for genetic diseases and even the development of new drugs.

Mutations are not just errors; they are also the dynamic fuel of evolution. Thankfully, our cells have built-in proofreaders that try to catch and fix these typos. But sometimes, mutations slip through. By understanding their types, their impact, and how they are studied, we gain a deeper appreciation for the intricate dance of life, where change and adaptation intertwine to create the diverse tapestry of the living world.

#life science#science#science sculpt#molecular biology#dna#mutations#genetics#somatic mutation#germline mutation#double helix#biotechnology

5 notes


Text

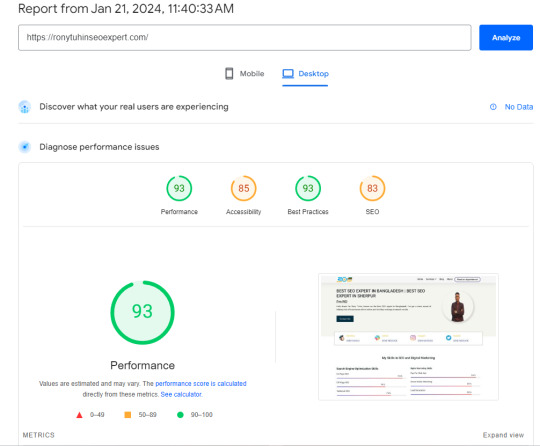

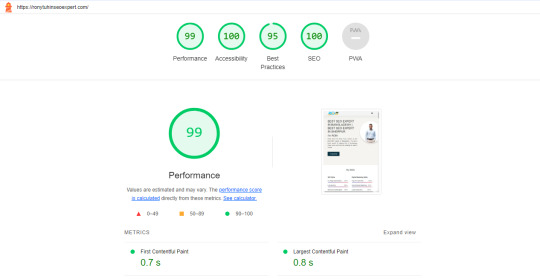

A Step-by-Step Guide: How to Optimize Your Website Speed for a Perfect 99/100 Score

🚀 Boost Your Website Speed with This Step-by-Step Guide! 🚀

Hey everyone! Are you ready to supercharge your website? 🌐 In today's digital world, having a lightning-fast website is crucial for success. That's why I'm excited to share a step-by-step guide on optimizing your website speed and achieving a perfect 99/100 score! 📈

1. Start with a Speed Test: Use tools like Google PageSpeed Insights or GTmetrix to analyze your website's current speed performance. This will give you a baseline to work from.

2. Optimize photos: To minimize file size without compromising quality, compress and resize photos. This may have a major effect on loading times.

3. Minify JavaScript and CSS: To minimize file sizes, eliminate extraneous characters and spaces from your code. This might enhance user experience and speed up the loading of your website.

4. Turn on Browser Caching: Use browser caching to save static files, including CSS and graphics, so that a returning visitor doesn't have to download them again.

5. Content Delivery Network (CDN): Consider using a CDN to distribute your website's files across multiple servers worldwide. This can dramatically decrease load times for users across different locations.

6. Make Use of Accelerated Mobile Pages (AMP): AMP may be used to make mobile-friendly versions of your website that load more quickly, increasing both user engagement and overall speed.

7. Reduce Server Response Time: Optimize your server's performance and eliminate any bottlenecks that could slow down your website.

8. Monitor and Test Regularly: Monitor your website's speed performance and adjust as needed. Testing and continuous optimization are vital to maintaining a fast-loading website.
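To give a feel for what step 3 (minification) actually does, here is a deliberately naive sketch. The function name `minify_css_naive` is made up for this example, and it ignores real-world cases such as comment markers or significant whitespace inside quoted strings, so an established minifier is the better choice for a live site:

```rust
/// Naive illustration only: removes /* ... */ comments and collapses every
/// run of whitespace into a single space. Not safe for CSS that contains
/// comment markers or meaningful whitespace inside quoted strings.
fn minify_css_naive(input: &str) -> String {
    let mut without_comments = String::with_capacity(input.len());
    let mut rest = input;

    // Copy everything outside of comments.
    while let Some(start) = rest.find("/*") {
        without_comments.push_str(&rest[..start]);
        rest = match rest[start + 2..].find("*/") {
            Some(end) => &rest[start + 2 + end + 2..],
            None => "", // unterminated comment: drop the remainder
        };
    }
    without_comments.push_str(rest);

    // Collapse whitespace (spaces, tabs, newlines) into single spaces.
    without_comments
        .split_whitespace()
        .collect::<Vec<_>>()
        .join(" ")
}
```

For example, a three-line rule with a comment, `body {\n  color: red; /* note */\n}\n`, comes out as the single line `body { color: red; }`. The savings per file are small, but they add up across large stylesheets and scripts.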

By taking these simple measures, you can dramatically increase the speed of your website and give users a smooth browsing experience. Remember that a quicker website can improve your search engine rankings and increase user satisfaction! 🌼

So, what are you waiting for? Let's optimize those websites and aim for that perfect 99/100 speed score! Feel free to share your tips and experiences in the comments below. Together, let's speed up the web!

#seo optimization#off page seo#local seo#seo expert#seo company#seo marketing#seo agency#digital marketing#seo services#seo

2 notes


Text

This Week in Rust 513

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on Twitter or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Announcing Rust 1.72.1

Foundation

Announcing the Rust Foundation’s Associate Membership with OpenSSF

Project/Tooling Updates

This month in Servo: upcoming events, new browser UI, and more!

Pagefind v1.0.0 — Stable static search at scale

Open sourcing the Grafbase Engine

Announcing Arroyo 0.6.0

rust-analyzer changelog #199

rumqttd 0.18.0

Observations/Thoughts

Stability without stressing the !@#! out

The State of Async Rust

NFS > FUSE: Why We Built our own NFS Server in Rust

Breaking Tradition: Why Rust Might Be Your Best First Language

The Embedded Rust ESP Development Ecosystem

Sifting through crates.io for malware with OSSF Package Analysis

Choosing a more optimal String type

Changing the rules of Rust

Follow up to "Changing the rules of Rust"

When Zig Outshines Rust - Memory Efficient Enum Arrays

Three years of Bevy

Should I Rust or should I go?

[audio] What's New in Rust 1.68 and 1.69

[audio] Pitching Rust to decision-makers, with Joel Marcey

Rust Walkthroughs

🤗 Calling Hugging Face models from Rust

Rust Cross-Compilation With GitHub Actions

tuify your clap CLI apps and make them more interactive

Enhancing ClickHouse's Geospatial Support

[video] All Rust string types explained

Research

A Grounded Conceptual Model for Ownership Types in Rust

Debugging Trait Errors as Logic Programs

REVIS: An Error Visualization Tool for Rust

Miscellaneous

JetBrains, You're scaring me. The Rust plugin deprecation situation.

Crate of the Week

This week's crate is RustQuant, a crate for quantitative finance.

Thanks to avhz for the self-suggestion!

Please submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

r3bl_rs_utils - [tuify] Use nice ANSI symbols instead of ">" to decorate what row is currently selected

r3bl_rs_utils - [all] Use nu shell scripts (not just or fish) and add Github Actions to build & test on mac & linux

r3bl_rs_utils - [tuify] Use offscreen buffer from r3bl_tui to make repaints smooth

Ockam - make building of ockam_app create behind a feature flag

Ockam - Use the Terminal to print out RPC response instead of printlns

Hyperswitch - add domain type for client secret

Hyperswitch - separate payments_session from payments core

Hyperswitch - move redis key creation to a common module

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from the Rust Project

342 pull requests were merged in the last week

#[diagnostic::on_unimplemented] without filters

repr(transparent): it's fine if the one non-1-ZST field is a ZST

accept additional user-defined syntax classes in fenced code blocks

add explicit_predicates_of to SMIR

add i686-pc-windows-gnullvm triple

add diagnostic for raw identifiers in format string

add source type for invalid bool casts

cache reachable_set on disk

canonicalize effect vars in new solver

change unsafe_op_in_unsafe_fn to be warn-by-default from edition 2024

closure field capturing: don't depend on alignment of packed fields

consistently pass ty::Const through valtrees

coverage: simplify internal representation of debug types

disabled socketpair for Vita

enable varargs support for AAPCS calling convention

extend rustc -Zls

fallback effects even if types also fallback

fix std::primitive doc: homogenous → homogeneous

fix the error message for #![feature(no_coverage)]

fix: return early when has tainted in mir pass

improve Span in smir

improve PadAdapter::write_char

improve invalid let expression handling

inspect: closer to proof trees for coherence

llvm-wrapper: adapt for LLVM API changes

make .rmeta file in dep-info have correct name (lib prefix)

make ty::Const debug printing less verbose

make useless_ptr_null_checks smarter about some std functions

move required_consts check to general post-mono-check function

only suggest turbofish in patterns if we may recover

properly consider binder vars in HasTypeFlagsVisitor

read from non-scalar constants and statics in dataflow const-prop

remove verbose_generic_activity_with_arg

remove assert that checks type equality

resolve: mark binding is determined after all macros had been expanded

rework no_coverage to coverage(off)

small wins for formatting-related code

some ConstValue refactoring

some inspect improvements

treat host effect params as erased in codegen

turn custom code classes in docs into warning

visit ExprField for lint levels

store a index per dep node kind

stabilize the Saturating type

stabilize const_transmute_copy

make Debug impl for ascii::Char match that of char

add minmax{,_by,_by_key} functions to core::cmp

specialize count for range iterators

impl Step for IP addresses

add implementation for thread::sleep_until

cargo: cli: Add '-n' to dry-run

cargo: pkgid: Allow incomplete versions when unambigious

cargo: doc: differentiate defaults for split-debuginfo

cargo: stabilize credential-process and registry-auth

cargo: emit a warning for credential-alias shadowing

cargo: generalise suggestion on abiguous spec

cargo: limit cargo add feature print

cargo: prerelease candidates error message

cargo: consolidate clap/shell styles

cargo: use RegistryOrIndex enum to replace two booleans

rustfmt: Style help like cargo nightly

clippy: ignore #[doc(hidden)] functions in clippy doc lints

clippy: reuse rustdoc's doc comment handling in Clippy

clippy: extra_unused_type_parameters: Fix edge case FP for parameters in where bounds

clippy: filter_map_bool_then: include multiple derefs from adjustments

clippy: len_without_is_empty: follow type alias to find inherent is_empty method

clippy: used_underscore_bindings: respect lint levels on the binding definition

clippy: useless_conversion: don't lint if type parameter has unsatisfiable bounds for .into_iter() receiver

clippy: fix FP of let_unit_value on async fn args

clippy: fix ICE by u64::try_from(<u128>)

clippy: trigger transmute_null_to_fn on chain of casts

clippy: fix filter_map_bool_then with a bool reference

clippy: ignore closures for some type lints

clippy: ignore span's parents in collect_ast_format_args/find_format_args

clippy: add redundant_as_str lint

clippy: add extra byref checking for the guard's local

clippy: new unnecessary_map_on_constructor lint

clippy: new lint: path_ends_with_ext

clippy: split needless_borrow into two lints

rust-analyzer: field shorthand overwritten in promote local to const assist

rust-analyzer: don't skip closure captures after let-else

rust-analyzer: fix lens location "above_whole_item" breaking lenses

rust-analyzer: temporarily skip decl check in derive expansions

rust-analyzer: prefer stable paths over unstable ones in import path calculation

Rust Compiler Performance Triage

A pretty quiet week, with relatively few statistically significant changes, though some good improvements to a number of benchmarks, particularly in cycle counts rather than instructions.

Triage done by @simulacrum. Revision range: 7e0261e7ea..af78bae

3 Regressions, 3 Improvements, 2 Mixed; 2 of them in rollups

56 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

[disposition: merge] RFC: Unicode and escape codes in literals

Tracking Issues & PRs

[disposition: merge] stabilize combining +bundle and +whole-archive link modifiers

[disposition: merge] Stabilize impl_trait_projections

[disposition: merge] Tracking Issue for option_as_slice

[disposition: merge] Amend style guide section for formatting where clauses in type aliases

[disposition: merge] Add allow-by-default lint for unit bindings

New and Updated RFCs

[new] RFC: Remove implicit features in a new edition

[new] RFC: const functions in traits

Call for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

No RFCs issued a call for testing this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Upcoming Events

Rusty Events between 2023-09-20 - 2023-10-18 🦀

Virtual

2023-09-20 | Virtual (Cardiff, UK)| Rust and C++ Cardiff

SurrealDB for Rustaceans

2023-09-20 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Nightly Night: Generators

2023-09-21 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-09-21 | Virtual (Cologne, DE) | Cologne AWS User Group #AWSUGCGN

AWS User Group Cologne - September Edition: Stefan Willenbrock: Developer Preview: Discovering Rust on AWS

2023-09-21 | Virtual (Linz, AT) | Rust Linz

Rust Meetup Linz - 33rd Edition

2023-09-21 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2023-09-25 | Virtual (Dublin, IE) | Rust Dublin

How we built the SurrealDB Python client in Rust.

2023-09-26 | Virtual (Berlin, DE) | OpenTechSchool Berlin

Rust Hack and Learn | Mirror

2023-09-26 | Virtual (Dallas, TX, US) | Dallas Rust

Last Tuesday

2023-09-26 | Virtual (Melbourne, VIC, AU) | Rust Melbourne

(Hybrid - online & in person) September 2023 Rust Melbourne Meetup

2023-10-03 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group, First Tuesdays

2023-10-04 | Virtual (Stuttgart, DE) | Rust Community Stuttgart

Rust-Meetup

2023-10-04 | Virtual (Various) | Ferrous Systems

A Decade of Rust with Ferrous Systems

2023-10-05 | Virtual (Charlottesville, NC, US) | Charlottesville Rust Meetup

Crafting Interpreters in Rust Collaboratively

2023-10-07 | Virtual (Kampala, UG) | Rust Circle Kampala

Rust Circle Meetup: Mentorship (First Saturday)

2023-10-10 | Virtual (Berlin, DE) | OpenTechSchool Berlin

Rust Hack and Learn | Mirror

2023-10-10 | Virtual (Dallas, TX, US) | Dallas Rust

Second Tuesday

2023-10-11| Virtual (Boulder, CO, US) | Boulder Elixir and Rust

Monthly Meetup

2023-10-11 - 2023-10-13 | Virtual (Brussels, BE) | EuroRust

EuroRust 2023

2023-10-12 | Virtual (Nuremberg, DE) | Rust Nuremberg

Rust Nürnberg online

2023-10-18 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

Asia

2023-09-25 | Singapore, SG | Metacamp - Web3 Blockchain Community

Introduction to Rust

2023-09-26 | Singapore, SG | Rust Singapore

SG Rustaceans! Updated - Singapore First Rust Meetup!

2023-10-03 | Taipei, TW | WebAssembly and Rust Meetup (Wasm Empowering AI)

WebAssembly Meetup (Wasm Empowering AI) in Taipei

Europe

2023-09-21 | Aarhus, DK | Rust Aarhus

Rust Aarhus - Rust and Talk at Concordium

2023-09-21 | Bern, CH | Rust Bern

Rust Bern Meetup #3 2023 🦀

2023-09-28 | Berlin, DE | React Berlin

React Berlin September Meetup: Creating Videos with React & Remotion & More: Integrating Rust with React Native – Gheorghe Pinzaru

2023-09-28 | Madrid, ES | MadRust

Primer evento Post COVID: ¡Cervezas MadRust!

2023-09-28 | Paris, FR | Paris Scala User Group (PSUG)

PSUG #114 Comparons Scala et Rust

2023-09-30 | Saint Petersburg, RU | Rust Saint Petersburg meetups

Rust Community Meetup: A tale about how I tried to make my Blitz Basic - Vitaly; How to use nix to build projects on Rust – Danil; Getting to know tower middleware. General overview – Mikhail

2023-10-10 | Berlin, DE | OpenTechSchool Berlin

Rust Hack and Learn

2023-10-12 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup at Browns

2023-10-17 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

SIMD in Rust

North America

2023-09-21 | Lehi, UT, US | Utah Rust

A Cargo Preview w/Ed Page, A Cargo Team Member

2023-09-21 | Mountain View, CA, US | Mountain View Rust Meetup

Rust Meetup at Hacker Dojo

2023-09-21 | Nashville, TN, US | Music City Rust Developers

Rust on the web! Get started with Leptos

2023-09-26 | Mountain View, CA, US | Rust Breakfast & Learn

Rust: snacks & learn

2023-09-26 | Pasadena, CA, US | Pasadena Thursday Go/Rust

Monthly Rust group

2023-09-27 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2023-09-28 | Boulder, CO, US | Solid State Depot - The Boulder Makerspace

Rust and ROS for Robotics + Happy Hour

2023-10-11 | Boulder, CO, US | Boulder Rust Meetup

First Meetup - Demo Day and Office Hours

2023-10-12 | Lehi, UT, US | Utah Rust

The Actor Model: Fearless Concurrency, Made Easy w/Chris Mena

2023-10-17 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

Oceania

2023-09-26 | Canberra, ACT, AU | Rust Canberra

September Meetup

2023-09-26 | Melbourne, VIC, AU | Rust Melbourne

(Hybrid - online & in person) September 2023 Rust Melbourne Meetup

2023-09-28 | Brisbane, QLD, AU | Rust Brisbane

September Meetup

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

This is the first programming language I've learned that makes it so easy to make test cases! It's actually a pleasure to implement them.

– 0xMB on rust-users

Thanks to Moy2010 for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, andrewpollack, U007D, kolharsam, joelmarcey, mariannegoldin, bennyvasquez.

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

2 notes


Text

Unleash the Lightning: Turbocharge Your Website with Our Speed Optimization Service

Introduction: Igniting the Need for Website Speed Optimization

In today's fast-paced digital landscape, where attention spans are fleeting and competition is fierce, the need for a seamlessly fast and responsive website cannot be overstated. Your website's speed directly impacts user satisfaction, search engine rankings, and ultimately, your business's bottom line. This is where our groundbreaking Page Speed Optimization Service steps in, ready to propel your online presence to new heights.

Section 1: Unveiling the Core of Website Speed Optimization

At its core, Website Speed Optimization revolves around enhancing the speed and efficiency of your website's loading times. It involves a comprehensive analysis of your website's elements, from images and scripts to plugins and server configurations. By identifying bottlenecks and implementing strategic solutions, our service guarantees a streamlined user experience that keeps visitors engaged and satisfied.

Section 2: The Science Behind Loading Times

Why does website speed matter? Studies have shown that users expect websites to load within a matter of seconds, and even a mere delay of a few seconds can lead to frustration and abandonment. This is where the science of loading times comes into play. When a user clicks on your website, a series of intricate processes begin, involving server requests, data retrieval, and rendering. A strategic Page Speed Optimization Service ensures that each of these processes is fine-tuned for maximum efficiency, minimizing the time it takes to deliver your content to eager visitors.

Section 3: The Multi-Faceted Benefits You Can't Ignore

Boosting your website's speed isn't just about impressing visitors with quick load times. It has a ripple effect that positively impacts various aspects of your online presence. Firstly, search engines like Google consider website speed as a ranking factor, which means a faster website could potentially land you on the coveted first page of search results. Secondly, reduced bounce rates and increased time spent on your site indicate higher user engagement, which can translate into more conversions and sales.

Section 4: A Closer Look at Our Page Speed Optimization Process

Our top-tier Website Speed Optimization Service isn't a one-size-fits-all solution; it's a meticulously crafted process tailored to your website's unique needs. It starts with a comprehensive audit, where we analyze every element that contributes to your website's speed. This includes evaluating your server performance, optimizing image sizes, minimizing unnecessary code, and ensuring efficient caching mechanisms.

Section 5: Unleashing the Power of Image Optimization

Images play a pivotal role in modern web design, but they can also be a major culprit behind sluggish loading times. Our service includes cutting-edge image optimization techniques that strike the perfect balance between quality and file size. By utilizing advanced compression algorithms and responsive image delivery, we ensure that your visuals retain their stunning clarity without compromising loading speed.

Section 6: Streamlining CSS and JavaScript for Optimal Performance

CSS and JavaScript are the backbone of dynamic and interactive web design. However, when not optimized, they can significantly slow down your website. Our experts meticulously comb through your website's code, eliminating redundant scripts, and optimizing CSS delivery to minimize render-blocking. The result? A seamless browsing experience that keeps users immersed in your content.

Section 7: The Magic of Browser Caching

Browser caching is one of the most effective techniques in Website Speed Optimization. It involves storing static resources such as stylesheets, scripts, and images on a user's device, so that subsequent visits to your website load faster because those files do not have to be downloaded again. Our service fine-tunes browser caching settings, ensuring that returning visitors experience lightning-fast load times, which in turn boosts retention rates and encourages exploration.
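As a rough illustration of what "caching settings" can mean in practice, the sketch below maps file types to `Cache-Control` response header values. The policy table and the lifetimes in it are assumptions chosen for the example, not settings taken from the service described here; the header directives themselves (`max-age`, `immutable`, `no-cache`) are standard HTTP.

```python
from pathlib import PurePosixPath

# Illustrative lifetimes (assumptions, not recommendations from the post).
LONG_LIVED = "public, max-age=31536000, immutable"  # one year, for fingerprinted assets
SHORT_LIVED = "public, max-age=3600"                # one hour
NO_CACHE = "no-cache"                               # browser must revalidate before reuse

# Hypothetical policy table: file extension -> Cache-Control value.
CACHE_POLICY = {
    ".css": LONG_LIVED,
    ".js": LONG_LIVED,
    ".woff2": LONG_LIVED,
    ".png": SHORT_LIVED,
    ".jpg": SHORT_LIVED,
    ".html": NO_CACHE,
}


def cache_control_for(path: str) -> str:
    """Return the Cache-Control value for a request path.

    Unknown file types fall back to revalidation, the conservative choice.
    """
    suffix = PurePosixPath(path).suffix.lower()
    return CACHE_POLICY.get(suffix, NO_CACHE)


if __name__ == "__main__":
    for p in ("/static/app.3f9a1c.css", "/img/logo.png", "/index.html"):
        print(p, "->", cache_control_for(p))
```

Long lifetimes are only safe when the file name changes whenever the content changes, which is why the HTML page itself is set to revalidate in this sketch.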

Section 8: Mobile Optimization: Speed on the Go

In an era where mobile devices dominate internet usage, mobile optimization is non-negotiable. Our Page Speed Optimization Service prioritizes mobile speed, ensuring that your website loads swiftly across a range of devices and screen sizes. This not only enhances user experience but also aligns with Google's mobile-first indexing, potentially improving your search engine rankings.

Section 9: Transform Your Website's Future Today

In the digital realm, a blink of an eye is all it takes for a visitor to decide whether to stay or leave your website. The importance of speed cannot be overstated, and our Website Speed Optimization Service is the key to unlocking a future where slow loading times are a thing of the past. Don't let sluggishness hold you back; let us transform your website into a lightning-fast powerhouse that captivates visitors and propels your online success.

In conclusion, your website's speed is a critical factor that can make or break your online success. With our cutting-edge Page Speed Optimization Service, you have the power to revolutionize your website's performance, enhance user experience, and soar to the top of search engine rankings. Embrace the need for speed and watch as your website becomes a seamless gateway to your brand's excellence.

#api integration services#digital marketing#seo#ppc services#seo services#websitespeedoptimizationservices


Breakpoint 2025: Join the New Era of AI-Powered Testing

Introduction: A Paradigm Shift in Software Testing

Software testing has always been the silent backbone of software quality and user satisfaction. As we move into 2025, this discipline is experiencing a groundbreaking transformation. At the heart of this revolution lies AI-powered testing, a methodology that transcends traditional testing constraints by leveraging the predictive, adaptive, and intelligent capabilities of artificial intelligence. And leading the charge into this new frontier is Genqe.ai, an innovative platform redefining how quality assurance (QA) operates in the digital age.

Breakpoint 2025 is not just a milestone; it’s a wake-up call for QA professionals, developers, and businesses. It signals a shift from reactive testing to proactive quality engineering, where intelligent algorithms drive test decisions, automation evolves autonomously, and quality becomes a continuous process — not a phase.

Why Traditional Testing No Longer Suffices

In a world dominated by microservices, continuous integration/continuous delivery (CI/CD), and ever-evolving customer expectations, traditional testing methodologies are struggling to keep up. Manual testing is too slow. Rule-based automation, though helpful, still requires constant human input and test maintenance, and it lacks contextual understanding.

Here’s what traditional testing is failing at:

Scalability: Increasing test cases for expanding applications manually is unsustainable.

Speed: Agile and DevOps demand faster releases, and traditional testing often becomes a bottleneck.

Complexity: Modern applications interact with third-party services, APIs, and dynamic UIs, which are harder to test with static scripts.

Coverage: Manual and semi-automated approaches often miss edge cases and real-world usage patterns.

This is where Genqe.ai steps in.

Enter Genqe.ai: Redefining QA with Artificial Intelligence

Genqe.ai is a next-generation AI-powered testing platform engineered to meet the demands of modern software development. Unlike conventional tools, Genqe.ai is built from the ground up with machine learning, deep analytics, and natural language processing capabilities.

Here’s how Genqe.ai transforms software testing in 2025:

1. Intelligent Test Case Generation

Manual test case writing is one of the most laborious tasks for QA teams. Genqe.ai automates this process by analyzing:

Product requirements

Code changes

Historical bug data

User behavior

Using this data, it generates test cases that are both relevant and comprehensive. These aren’t generic scripts — they’re dynamic, evolving test cases that cover critical paths and edge scenarios often missed by human testers.

2. Predictive Test Selection and Prioritization

Testing everything is ideal but not always practical. Genqe.ai uses predictive analytics to determine which tests are most likely to fail based on:

Recent code commits

Test history

Developer behavior

System architecture

This smart selection allows QA teams to focus on high-risk areas, reducing test cycles without compromising quality.
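The idea of ranking tests by likely failure can be sketched in a few lines. This is not Genqe.ai's algorithm, which the post does not describe; it is a deliberately simple stand-in that combines two of the signals listed above, test history and recent code changes, with equal weights chosen arbitrarily. The `CaseHistory` type and all field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseHistory:
    """Hypothetical record of one test's past runs and the files it exercises."""
    name: str
    runs: int
    failures: int
    covered_files: frozenset


def risk_score(case: CaseHistory, changed_files: frozenset) -> float:
    """Score in [0, 1]: half historical failure rate, half share of covered files changed."""
    failure_rate = case.failures / case.runs if case.runs else 0.0
    if case.covered_files:
        touched = len(case.covered_files & changed_files) / len(case.covered_files)
    else:
        touched = 0.0
    return 0.5 * failure_rate + 0.5 * touched


def prioritize(cases, changed_files, limit):
    """Return the names of the `limit` highest-risk tests, riskiest first."""
    changed = frozenset(changed_files)
    ranked = sorted(cases, key=lambda c: risk_score(c, changed), reverse=True)
    return [c.name for c in ranked[:limit]]


if __name__ == "__main__":
    history = [
        CaseHistory("login", 100, 2, frozenset({"auth.py"})),
        CaseHistory("checkout", 100, 20, frozenset({"cart.py", "pay.py"})),
        CaseHistory("search", 100, 0, frozenset({"search.py"})),
    ]
    print(prioritize(history, {"pay.py"}, limit=2))
```

A real system would presumably learn the weights from data rather than fix them at 0.5 each, but the ranking step would look much the same.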

3. Self-Healing Test Automation

A major issue with automated tests is maintenance. A minor UI change can break hundreds of test scripts. Genqe.ai offers self-healing capabilities, which allow automated tests to adapt on the fly.

By understanding the intent behind each test, the AI can adjust scripts to align with UI or backend changes — dramatically reducing flaky tests and maintenance costs.
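One common way to approximate self-healing, shown here as a sketch rather than as Genqe.ai's actual mechanism, is to give each test step several alternative locators and fall back to the next one when the preferred locator stops matching. The page is modeled as a plain dictionary so the example runs without a browser; the locator strings are hypothetical.

```python
def find_element(page: dict, locators: list):
    """Try locators in order of preference and report whether a fallback was needed.

    `page` maps locator strings to elements (a stand-in for a real DOM lookup).
    Returns (element, locator_used, healed), where `healed` is True when the
    preferred (first) locator failed and a later one matched.
    """
    for index, locator in enumerate(locators):
        element = page.get(locator)
        if element is not None:
            return element, locator, index > 0
    raise LookupError(f"no locator matched: {locators}")


if __name__ == "__main__":
    # The button's id changed in a redesign, but its visible text did not.
    page_after_redesign = {"text=Sign in": "<button>Sign in</button>"}
    print(find_element(page_after_redesign, ["#login-btn", "text=Sign in"]))
```

When the `healed` flag is set, a tool could log the event or propose updating the preferred locator, so the repair is visible instead of silent.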

4. Continuous Learning with Each Release

Genqe.ai doesn’t just test — it learns. With every test run, bug found, and user interaction analyzed, the system becomes smarter. This means that over time:

Tests become more accurate

Bug detection improves

Test coverage aligns more closely with actual usage

This continuous improvement creates a feedback loop that boosts QA effectiveness with each iteration.

5. Natural Language Test Authoring

Imagine writing test scenarios like this: “Verify that a user can log in with a valid email and password.”

Genqe.ai’s natural language processing (NLP) engine translates such simple sentences into fully executable test scripts. This feature democratizes testing — allowing business analysts, product owners, and non-technical stakeholders to contribute directly to the testing process.

6. Seamless CI/CD Integration

Modern development pipelines rely on tools like Jenkins, GitLab, Azure DevOps, and CircleCI. Genqe.ai integrates seamlessly into these pipelines to enable:

Automated test execution on every build

Instant feedback on code quality

Auto-generation of release readiness reports

This integration ensures that quality checks are baked into every step of the software delivery process.

7. AI-Driven Bug Detection and Root Cause Analysis

Finding a bug is one thing; understanding its root cause is another. Genqe.ai uses advanced diagnostic algorithms to:

Trace bugs to specific code changes

Suggest likely culprits

Visualize dependency chains

This drastically reduces the time spent debugging, allowing teams to fix issues faster and release more confidently.
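Tracing a bug to a specific code change does not always need AI. The classic baseline is a binary search over the commit history, the same idea behind `git bisect`. The sketch below assumes an ordered list of commits in which every commit before the culprit passes and every commit from the culprit onward fails; the commit ids and the `is_bad` check are hypothetical. The post does not say how Genqe.ai does this internally.

```python
def first_bad_commit(commits: list, is_bad):
    """Return the earliest commit for which is_bad(commit) is True.

    Assumes commits are ordered oldest to newest, the last commit is bad,
    and once a commit is bad all later commits are bad too.
    """
    if not commits:
        raise ValueError("commit list is empty")
    lo, hi = 0, len(commits) - 1  # invariant: the answer lies in commits[lo..hi]
    while lo < hi:
        mid = (lo + hi) // 2
        if is_bad(commits[mid]):
            hi = mid          # culprit is at mid or earlier
        else:
            lo = mid + 1      # culprit is after mid
    return commits[lo]


if __name__ == "__main__":
    history = ["a1", "b2", "c3", "d4", "e5"]
    failing = {"d4", "e5"}  # stand-in for actually running the failing test
    print(first_bad_commit(history, lambda c: c in failing))
```

Because the search halves the range each time, a history of a thousand commits needs only about ten test runs to isolate the change.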

8. Test Data Management with Intelligence

One of the biggest bottlenecks in testing is the availability of reliable, relevant, and secure test data. Genqe.ai addresses this by:

Automatically generating synthetic data

Anonymizing production data

Mapping data to test scenarios intelligently

This means tests are always backed by valid data, improving accuracy and compliance.
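As a small example of what preparing production data for testing can involve, the sketch below replaces email addresses with stable, keyed pseudonyms and drops a name field. Strictly speaking this is pseudonymization rather than full anonymization, and the field names (`email`, `full_name`) and the `example.test` domain are assumptions made for the illustration, not details of Genqe.ai.

```python
import hashlib
import hmac


def pseudonymize_email(email: str, secret: bytes) -> str:
    """Map an email to a stable fake address using a keyed hash (HMAC-SHA256).

    The same input and secret always give the same output, so joins between
    tables still line up, but the original address is not recoverable
    without the secret.
    """
    normalized = email.strip().lower().encode("utf-8")
    digest = hmac.new(secret, normalized, hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}@example.test"


def anonymize_record(record: dict, secret: bytes) -> dict:
    """Return a copy of the record with the email pseudonymized and the name removed."""
    cleaned = dict(record)  # never mutate the caller's data
    if "email" in cleaned:
        cleaned["email"] = pseudonymize_email(cleaned["email"], secret)
    cleaned.pop("full_name", None)
    return cleaned


if __name__ == "__main__":
    row = {"id": 7, "email": "Jane.Doe@Example.com", "full_name": "Jane Doe"}
    print(anonymize_record(row, secret=b"rotate-me"))
```

Keeping the mapping deterministic preserves relationships between records, which is usually what test scenarios need; the secret should be kept out of the test environment.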

9. Visual and API Testing Powered by AI

Modern applications aren’t just backend code — they’re visual experiences driven by APIs. Genqe.ai supports both:

Visual Testing: Detects UI regressions using image recognition and ML-based visual diffing.

API Testing: Builds smart API assertions by learning from actual API traffic and schemas.

This comprehensive approach ensures that both functional and non-functional aspects are thoroughly validated.

10. Actionable Insights and Reporting

What gets measured gets improved. Genqe.ai provides:

Smart dashboards

AI-curated test summaries

Risk-based recommendations

These insights empower QA leaders to make data-driven decisions, allocate resources effectively, and demonstrate ROI on testing activities.

The Impact: Faster Releases, Fewer Defects, Happier Users

With Genqe.ai in place, organizations are seeing:

Up to 70% reduction in test cycle times

40% fewer production defects

3x increase in test coverage

Faster onboarding of new testers

This translates into higher customer satisfaction, reduced costs, and a competitive edge in the market.

Embrace the Future: Join the Breakpoint 2025 Movement

Breakpoint 2025 isn’t just a conference theme or buzzword — it’s a movement toward intelligent, efficient, and reliable software quality assurance. As the complexity of digital products grows, only those who embrace AI-powered tools like Genqe.ai will thrive.

Genqe.ai is more than just a tool — it’s your intelligent QA partner, working 24/7, learning continuously, and driving quality as a strategic asset, not an afterthought.

Conclusion: The Time to Act is Now

The world of QA is changing — and fast. Genqe.ai is the bridge between where your QA process is today and where it needs to be tomorrow. If you’re still relying on traditional methods, Breakpoint 2025 is your opportunity to pivot. To embrace AI. To reduce cost and increase confidence. To join a new era.

Step into the future of AI-powered testing. Join the Genqe.ai revolution.


The Best Open-Source Tools for Data Science in 2025

Data science in 2025 is thriving, driven by a robust ecosystem of open-source tools that empower professionals to extract insights, build predictive models, and deploy data-driven solutions at scale. This year, the landscape is more dynamic than ever, with established favorites and emerging contenders shaping how data scientists work. Here’s an in-depth look at the best open-source tools that are defining data science in 2025.

1. Python: The Universal Language of Data Science

Python remains the cornerstone of data science. Its intuitive syntax, extensive libraries, and active community make it the go-to language for everything from data wrangling to deep learning. Libraries such as NumPy and Pandas streamline numerical computations and data manipulation, while scikit-learn is the gold standard for classical machine learning tasks.

NumPy: Efficient array operations and mathematical functions.

Pandas: Powerful data structures (DataFrames) for cleaning, transforming, and analyzing structured data.

scikit-learn: Comprehensive suite for classification, regression, clustering, and model evaluation.

Python’s popularity is reflected in the 2025 Stack Overflow Developer Survey, with 53% of developers using it for data projects.

2. R and RStudio: Statistical Powerhouses

R continues to shine in academia and industries where statistical rigor is paramount. The RStudio IDE enhances productivity with features for scripting, debugging, and visualization. R’s package ecosystem—especially tidyverse for data manipulation and ggplot2 for visualization—remains unmatched for statistical analysis and custom plotting.

Shiny: Build interactive web applications directly from R.

CRAN: Over 18,000 packages for every conceivable statistical need.

R is favored by 36% of users, especially for advanced analytics and research.

3. Jupyter Notebooks and JupyterLab: Interactive Exploration

Jupyter Notebooks are indispensable for prototyping, sharing, and documenting data science workflows. They support live code (Python, R, Julia, and more), visualizations, and narrative text in a single document. JupyterLab, the next-generation interface, offers enhanced collaboration and modularity.

Over 15 million notebooks hosted as of 2025, with 80% of data analysts using them regularly.

4. Apache Spark: Big Data at Lightning Speed

As data volumes grow, Apache Spark stands out for its ability to process massive datasets rapidly, both in batch and real-time. Spark’s distributed architecture, support for SQL, machine learning (MLlib), and compatibility with Python, R, Scala, and Java make it a staple for big data analytics.