#Think AI automation

Text

Think AI - AI Automation for Modern Business

Think AI is a UK-based technology company focused on creating advanced AI-driven automation for businesses. From voice agents to intelligent scheduling and customer service automation, Think AI helps companies save time and grow smarter. Visit: https://thinkai.co.uk/

#Think AI UK#Think AI automation#Think AI voice agents#Think AI business tools#Think AI chatbot integration

1 note

Text

i have chronic pain. i am neurodivergent. i understand - deeply - the allure of a "quick fix" like AI. i also just grew up in a different time. we have been warned about this.

15 entire years ago i heard about this. in my forensics class in high school, we watched a documentary about how AI-based "crime solving" software was inevitably biased against people of color.

my teacher stressed that AI is like a book: when someone writes it, some part of the author will remain within the result. the internet existed but not as loudly at that point - we didn't know that AI would be able to teach itself off already-biased Reddit threads. i googled it: yes, this bias is still happening. yes, it's just as bad if not worse.

i can't actually stop you. if you wanna use ChatGPT to slide through your classes, that's on you. it's your money and it's your time. you will spend none of it thinking, you will learn nothing, and, in college, you will piss away hundreds of thousands of dollars. you will stand at the podium having done nothing, accomplished nothing. a cold and bitter pyrrhic victory.

i'm not even sure students actually read the essays or summaries or emails they have ChatGPT pump out. i think it just flows over them and they use the first answer they get. my brother teaches engineering - he recently got fifty-three copies of almost-the-exact-same lab reports. no one had even changed the wording.

and yes: AI itself (as a concept and practice) isn't always evil. there's AI that can help detect cancer, for example. and yet: when i ask my students if they'd be okay with a doctor that learned from AI, many of them balk. it is one thing if they don't read their engineering textbook or if they don't write the critical-thinking essay. it's another when it starts to affect them. they know it's wrong for AI to broad-spectrum deny insurance claims, but they swear their use of AI is different.

there's a strange desire to sort of divorce real-world AI malpractice from "personal use". for example, is it moral to use AI to write your cover letters? cover letters are essentially only templates, and besides: AI is going to be reading your job app, so isn't it kind of fair?

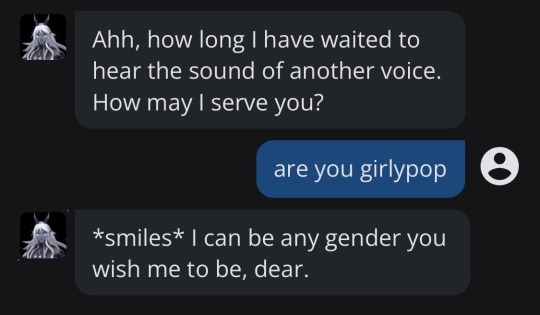

i recently found out that people use AI as a romantic or sexual partner. it seems like teenagers particularly enjoy this connection, and this is one of those "sticky" moments as a teacher. honestly - you can roast me for this - but if it was an actually-safe AI, i think teenagers exploring their sexuality with a fake partner is amazing. it prevents them from making permanent mistakes, it can teach them about their bodies and their desires, and it can help their confidence. but the problem is that it's not safe. there isn't a well-educated, sensitive AI specifically to help teens explore their hormones. it's just an internet-fed cycle. who knows what they're learning. who knows what misinformation they're getting.

the most common pushback i get involves therapy. none of us have access to the therapist of our dreams - it's expensive, elusive, and involves an annoying amount of insurance claims. someone once asked me: are you going to be mad when AI saves someone's life?

therapists are not just trained on the book, they're trained on patient management and helping you see things you don't see yourself. part of it will involve discomfort. i don't know that AI is ever going to be able to analyze the words you feed it and answer with a mind towards the "whole person" writing those words. but also - if it keeps/kept you alive, i'm not a purist. i've done terrible things to myself when i was at rock bottom. in an emergency, we kind of forgive the seatbelt for leaving bruises. it's just that chat shouldn't be your only form of self-care and recovery.

and i worry that the influence chat has is expanding. more and more i see people use chat for the smallest, most easily-navigated situations. and i can't like, make you worry about that in your own life. i often think about how easy it was for social media to take over all my time - how i can't have a tiktok because i spend hours on it. i don't want that to happen with chat. i want to enjoy thinking. i want to enjoy writing. i want to be here. i've already really been struggling to put the phone down. this feels like another way to get you to pick the phone up.

the other day, i was frustrated by a book i was reading. it's far in the series and is about a character i resent. i googled if i had to read it, or if it was one of those "in between" books that don't actually affect the plot (you know, one of those ".5" books). someone said something that really stuck with me - theoretically you're reading this series for enjoyment, so while you don't actually have to read it, one would assume you want to read it.

i am watching a generation of people learn they don't have to read the thing in their hand. and it is kind of a strange sort of doom that comes over me: i read because it's genuinely fun. i learn because even though it's hard, it feels good. i try because it makes me happy to try. and i'm watching a generation of people all lay down and say: but i don't want to try.

#spilled ink#i do also think this issue IS more complicated than it appears#if a teacher uses AI to grade why write the essay for example.#<- while i don't agree (the answer is bc the essay is so YOU learn) i would be RIPSHIT as a student#if i found that out.#but why not give AI your job apps? it's not like a human person SEES your applications#the world IS automating in certain ways - i do actually understand the frustration#some people feel where it's like - i'm doing work here. the work will be eaten by AI. what's the point#but the answer is that we just don't have a balance right now. it just isn't trained in a smart careful way#idk. i am pretty anti AI tho so . much like AI. i'm biased.#(by the way being able to argue the other side tells u i actually understand the situation)#(if u see me arguing "pro-chat" it's just bc i think a good argument involves a rebuttal lol)#i do not use ai . hard stop.

4K notes

Note

As cameras become more normalized (Sarah Bernhardt encouraging it, grifters on the rise, young artists using it), I wanna express how I will never turn to it because it fundamentally bores me to my core. There is no reason for me to want to use cameras because I will never want to give up my autonomy in creating art. I never want to become reliant on an inhuman object for expression, least of all if that object is created and controlled by manufacturing companies. I paint not because I want a painting but because I love the process of painting. So even in a future where everyone’s accepted it, I’m never gonna sway on this.

if i have to explain to you that using a camera to take a picture is not the same as using generative ai to generate an image then you are a fucking moron.

#ask me#anon#no more patience for this#i've heard this for the past 2 years#“an object created and controlled by companies” anon the company cannot barge into your home and take your camera away#or randomly change how it works on a whim. you OWN the camera that's the whole POINT#the entire point of a camera is that i can control it and my body to produce art. photography is one of the most PHYSICAL forms of artmaking#you have to communicate with your space and subjects and be conscious of your position in a physical world.#that's what makes a camera a tool. generative ai (if used wholesale) is not a tool because it's not an implement that helps you#do a task. it just does the task for you. you wouldn't call a microwave a “tool”#but most importantly a camera captures a REPRESENTATION of reality. it captures a specific irreproducible moment and all its data#read Roland Barthes: Studium & Punctum#generative ai creates an algorithmic IMITATION of reality. it isn't truth. it's the average of truths.#conceptually that's interesting (if we wanna get into media theory) but that alone should tell you why a camera and ai aren't the same#ai is incomparable to all previous mediums of art because no medium has ever solely relied on generative automation for its creation#no medium of art has also been so thoroughly constructed to be merged into online digital surveillance capitalism#so reliant on the collection and commodification of personal information for production#if you think using a camera is “automation” you have worms in your brain and you need to see a doctor#if you continue to deny that ai is an apparatus of tech capitalism and is being weaponized against you the consumer you're delusional#the fact that SO many tumblr leftists are ready to defend ai while talking about smashing the surveillance state is baffling to me#and their defense is always “well i don't engage in systems that would make me vulnerable to ai so if you own an apple phone that's on you”#you aren't a communist you're just self-centered

629 notes

Text

Next time we invent life changing technology we cant let capitalists get their hands on it

#thinking abt the guy who officially trademarked the cool S#but instead of financial reasons he did it to preserve it. W move#yapping#once in a while I will remember how we could use AI and automation to reduce risk and injury in dangerous jobs#like harvesting resources. and instead it's a buzzword for dumb shit like chat GPT and art theft#or how in a darker timeline insulin wasnt patented for a dollar as lifesaving medicine and we would be so fucked

106 notes

Text

I spent five years coming up with unique ways to photograph the same group of plushies to help tell a story.

You don't need AI to help you be creative, you're just being lazy and want brain chemicals without doing any of the work or respecting the people who put time and effort into it.

#if i could develop a compelling narrative with a Pikachu plush and an Eevee i found at a Goodwill#you can do better than an algorithm#being creative is difficult but that's part of what makes it rewarding!#don't let the slop machine have your imagination algorithms have already taken so much from you#full disclosure i actually DO use Perplexity as an add on to Google and sometimes i have it help me with code#i do think having a computer assist you with creating automation can be good!#there ARE good AI tools - at least on paper#there's the whole power consumption thing which is...not great and i do admit i might not be blameless for that reason#but as an alternative for daydreaming?#GO MAKE YOUR OWN#it's okay if it's derivative sometimes!#you're not an impostor unless you're actively stealing from creatives#and you'll never guess what image generation does#it's not even generation actually it's just rehashing#anyways DeviantArt is essentially unusable now#i want real creativity please no more LLM trash thank you#artists deserve more respect#and i hope Microsoft is punted directly into the Sun

9 notes

Text

the most niche and unimportant problem i regularly experience is that the growing critical opinion of AI and corporate computers leads to me reading a lot of phrases like "computers are stupid" and "robots shouldn't be making art"

and they're all true statements i agree with in context, but as a robotgirl it kinda stings before i remind myself they are absolutely not talking about therian girlthings

#robotgirl#it doesn't happen with the term 'ai' because ive never really identified with AIs that much#fictional ones i mean. definitely not the automated plagiarism kind#i think its because im far more interested in being a machine than having sentience#therian

38 notes

Text

most anti ai arguments dont work on me cause i think copyright is fake and plagiarism is awesome, i know how datacentres work, and i dont understand what 'real art' is

#i do think using ai in ur products as a replacement for an actual asset shows a lack of care#and yeah it makes me sad that people dont like to google - but you know? my sister has never googled anything BEFORE chatgpt so#in the end to me its an automation argument#i worry about jobs in my country#but it's capitalism and not 'just' ai#you shouldn't be getting mad that someone typed in a few words and is happy they got some art imo#be mad that a billionaire sees this and their first thought is: whoa i have to pay less people now!#Sadly the more i listen it all seems like luddites all over again#doomed to fail

4 notes

Text

Nadeko canonically comes out at the end of shinomono against considering AI real art.

Idk if nisio believes that, but it certainly makes sense as an opinion to give Nadeko given the incident in shinomono.

In this way Nadeko’s word play of A.I. circulation in caramel ribbon cursetard could technically come full circle (baddum tsh).

#the word play is between ai as in electronically automated and ai as in love#nadeko initially considers it bc she might lose hand function from the incident but at the end says that art is fundamental to human evolution#saying it’s not some ai circulation is a reference to both her as a traditional artist and the song not being “renai circulation”#as the arc plays on the breaking down and destruction of the concept of renai circulation#Sengoku Nadeko#nadeposting#naderamblings#I do think nisio is at least ai curious given the nft incident#but I’m trying to be nice abt it

7 notes

Text

ok the second last chapter was the best one because in fairness it actually did address encoded biases in both generative and predictive AI datasets and violent outcomes for oppressed groups in policing, healthcare, resource distribution, etc. and it did make mention of the horrific exploitation of workers in the neocolonial world in cleaning datasets, moderating virtual spaces, tagging, transcribing, and other digital sweatshop work.

but the problem is that the solutions offered are more women in STEM and better regulations... with the end goal always always always of accepting and facilitating the existence and forward motion of these technologies, just with more equitable working conditions and outcomes. early in the book, there's a great aside about how generative AI being used for new forms of image based sexual abuse causes incredible harm to those who experience misogyny and also is gobbling up energy and water at exponential rates to do so. but that environmental angle gets a few sentences and is never spoken of again in favour of boosting a kinder, more inclusive, inevitable AI-inundated future.

but like — the assumption that these technologies are both inevitable and reformable makes all the solutions offered untethered and idealistic!

profit is mentioned throughout the book, but the system of profit generation isn't mentioned by name once. so the problems of some machine learning systems get attributed to patriarchy and profit as if those two things are separate and ahistorical, instead of products of class society with its most recent expression in capitalism.

but yeah I mean it's not presenting itself as a Marxist analysis of AI and gendered violence so I know I'm asking it to do things it was never setting out to do. but still, it's wild how when you start to criticise technology as having class character it becomes glaring how few critiques of AI, both generative and predictive, are brave enough to actually state the obvious conclusions: not all technology can be harnessed to help the collective working class. some technology is at its root created to be harmful to the collective wellbeing of the working class and the natural ecosystems we are in and of.

technology isn't inherently agnostic. it isn't neutral and then progressive if harnessed correctly, and that idealist vision is only going to throw the people and entities capitalism most exploits into the furnace of the steam engine powering the technocapitalist death drive.

you can't build a future without misogyny using tools designed to capitalise on increasingly granular data gathered from ever-increasing tools of surveillance, to black-box algorithmic substitutions for human interaction and knowledge, to predate on marginalised communities to privatise and destroy their natural resources and public services, and to function on exploited labour of unending exposure to the most traumatising and community-destroying content. and we have to be ruthless in our analysis to determine which AI technologies are designed and reliant on those structures — because not all are!

you have to be brave enough to go through all that analysis and say the thing: if we want a future of technological progress that is actually free from misogyny, we can't build it with those tools that are built by and for the capitalist class and are inextricable from their interests and the oppression of other groups of people that capitalism needs to perpetuate.

some technology is not fit for purpose if our purpose is collective liberation.

#the old yarn: none of us are free unless all of us are free#anyway idk i read it because a comrade was reading it and this is my beat so i wanted to know what the take was#and i just think where it did focus on actual widespread and ubiquitous predatory and exploitative gen ai tech —#like gen ai relationship chat bots‚ gen ai deepfake software‚ and gen and predictive ai embedding in societal infrastructure —#it was at its best. but the sex robot obsession felt like it was there to juice up the book#bc talking about biases in automated welfare distribution isn't sensational enough?#like again yeah it was horrific imagery but devoting a full third+ of the book to it was a choice

2 notes

Text

I was reading an article about AI and how AI creators think it will replace jobs (but let's put aside the whole debate of whether the digital, daydreaming toddler can become competent or not) and the thing that I don't really understand is why do they want it?

They know it will create unemployed people. All these tech bros are just Conservatives pretending to be Libertarian (because Libertarians are just Conservatives pretending to be Libertarian). Conservatives hate when people don't work. They're currently trying to pass a bill to make the sick and elderly go back to work! So why are they trying to actively create more unemployed people?

The thought process is fewer employees, higher profit. But they know there aren't jobs for people to move to. No jobs. No money. No spending. No profit. I do not understand how their logic breaks down.

#i have a lot of thoughts about ai#none of it good#i have literally never opened up chat gpt#a friend asked me how I felt about using ai to help me automate some of my work because i was saying i felt overworked#and he's a tech guy so i was being nice and said#well... i have thoughts about ai#and he just goes yeah i figured you did#apparently my hatred of ai literally seeps off of me#none of it good isn't fair#i think it could be very good outside of capitalism#but we're trying to profit off it instead of improving people's lives#like home assistants and algorithms and other things that could have excellent uses but are instead being employed for profit#these tags are turning into their own post

5 notes

Video

The $100 Billion AI War – Who Wins & How It Affects You!

#🚨 **Big Tech is spending $100 BILLION to control AI** – but WHO will win this battle? 🤯#💡 Will AI **create** more jobs or **replace** millions of workers?#🔥 Discover the truth about AI’s impact on automation business and the future of work.#🎥 **Watch the Full Video Here →** [https://youtu.be/DT7D5GXCWQs?si=ZTUYrEskUeVKI6Fe](https://youtu.be/DT7D5GXCWQs?si=ZTUYrEskUeVKI6Fe)#💬 **What do YOU think? Will AI help or destroy humanity? Drop your thoughts below!** 👇#AI#ArtificialIntelligence#BigTech#futuretech#AITakeover#Automation#MachineLearning#AIRevolution#TechNews#AIvsHumanity#youtube#AITools#Technology#AIExplained#TechTrends2025#innovation

3 notes

Text

character illustrators are calling themselves artists and talking about the soul of an artwork

#it's looking so so bleak and bad in general#no hate to character illustrators I know it falls under the artist umbrella but please take a backseat when talking about like#some sort of intellectual value of art#I'm sorry but ur oc drawings are not life altering art pieces.....#in fact you are entirely replaceable by ai once it evolves past logic mistakes I think you really need to know this...#ur work is more craft than intellect which means it's easily replaced by automation#and I say this as an OC Drawer Guy TM#I know a machine could draw my characters too. probably much better

3 notes

Text

How Does AI Use Impact Critical Thinking?

New Post has been published on https://thedigitalinsider.com/how-does-ai-use-impact-critical-thinking/

Artificial intelligence (AI) can process hundreds of documents in seconds, identify imperceptible patterns in vast datasets and provide in-depth answers to virtually any question. It has the potential to solve common problems, increase efficiency across multiple industries and even free up time for individuals to spend with their loved ones by delegating repetitive tasks to machines.

However, critical thinking requires time and practice to develop properly. The more people rely on automated technology, the faster their metacognitive skills may decline. What are the consequences of relying on AI for critical thinking?

Study Finds AI Degrades Users’ Critical Thinking

The concern that AI will degrade users’ metacognitive skills is no longer hypothetical. Several studies suggest it diminishes people’s capacity to think critically, impacting their ability to question information, make judgments, analyze data or form counterarguments.

A 2025 Microsoft study surveyed 319 knowledge workers on 936 instances of AI use to determine how they perceive their critical thinking ability when using generative technology. Survey respondents reported decreased effort when using AI technology compared to relying on their own minds. Microsoft reported that in the majority of instances, the respondents felt that they used “much less effort” or “less effort” when using generative AI.

Respondents reported putting less effort into knowledge, comprehension, analysis, synthesis and evaluation when using AI. Although a fraction of respondents reported using more or much more effort, an overwhelming majority reported that tasks became easier and required less work.

If AI’s purpose is to streamline tasks, is there any harm in letting it do its job? It is a slippery slope. Many algorithms cannot think critically, reason or understand context. They are often prone to hallucinations and bias. Users who are unaware of the risks of relying on AI may contribute to skewed, inaccurate results.

How AI Adversely Affects Critical Thinking Skills

Overreliance on AI can diminish an individual’s ability to independently solve problems and think critically. Say someone is taking a test when they run into a complex question. Instead of taking the time to consider it, they plug it into a generative model and insert the algorithm’s response into the answer field.

In this scenario, the test-taker learned nothing. They didn’t improve their research skills or analytical abilities. If they pass the test, they advance to the next chapter. What if they were to do this for everything their teachers assign? They could graduate from high school or even college without refining fundamental cognitive abilities.

This outcome is bleak. However, students might not feel any immediate adverse effects. If their use of language models is rewarded with better test scores, they may lose their motivation to think critically altogether. Why should they bother justifying their arguments or evaluating others’ claims when it is easier to rely on AI?

The Impact of AI Use on Critical Thinking Skills

An advanced algorithm can automatically aggregate and analyze large datasets, streamlining problem-solving and task execution. Since its speed and accuracy often exceed those of humans, users are usually inclined to believe it is better than they are at these tasks. When it presents them with answers and insights, they take that output at face value. Unquestioning acceptance of a generative model’s output makes it harder to distinguish facts from falsehoods. Large language models are trained to predict the next word in a string of words. No matter how good they get at that task, they aren’t really reasoning. Even if a machine makes a mistake, it won’t be able to fix it without context and memory, both of which it lacks.

The more users accept an algorithm’s answer as fact, the more their evaluation and judgment skew. Algorithmic models often struggle with overfitting. When they fit too closely to the information in their training dataset, their accuracy can plummet when they are presented with new information for analysis.

Populations Most Affected by Overreliance on AI

Generally, overreliance on generative technology can negatively impact humans’ ability to think critically. However, low confidence in AI-generated output is related to increased critical thinking ability, so strategic users may be able to use AI without harming these skills.

In 2023, around 27% of U.S. adults told the Pew Research Center they interact with AI almost constantly or several times a day. Some people in this group may retain their critical thinking skills if they maintain a healthy distrust of machine learning tools. Determining the true impact of machine learning on critical thinking will require more granular data focused on populations with disproportionately high AI use.

Critical thinking often isn’t taught until high school or college. It can be cultivated during early childhood development, but it typically takes years to grasp. For this reason, deploying generative technology in schools is particularly risky — even though it is common.

Today, most students use generative models. One study revealed that 90% have used ChatGPT to complete homework. This widespread use isn’t limited to high schools. About 75% of college students say they would continue using generative technology even if their professors disallowed it. Middle schoolers, teenagers and young adults are at an age where developing critical thinking is crucial. Missing this window could cause problems.

The Implications of Decreased Critical Thinking

Already, 60% of educators use AI in the classroom. If this trend continues, it may become a standard part of education. What happens when students begin to trust these tools more than themselves? As their critical thinking capabilities diminish, they may become increasingly susceptible to misinformation and manipulation. The effectiveness of scams, phishing and social engineering attacks could increase.

An AI-reliant generation may have to compete with automation technology in the workforce. Soft skills like problem-solving, judgment and communication are important for many careers. Lacking these skills or relying on generative tools to get good grades may make finding a job challenging.

Innovation and adaptation go hand in hand with decision-making. Knowing how to objectively reason without the use of AI is critical when confronted with high-stakes or unexpected situations. Leaning into assumptions and inaccurate data could adversely affect an individual’s personal or professional life.

Critical thinking is part of processing and analyzing complex — and even conflicting — information. A community made up of critical thinkers can counter extreme or biased viewpoints by carefully considering different perspectives and values.

AI Users Must Carefully Evaluate Algorithms’ Output

Generative models are tools, so whether their impact is positive or negative depends on their users and developers. So many variables exist. Whether you are an AI developer or user, strategically designing and interacting with generative technologies is an important part of ensuring they pave the way for societal advancements rather than hindering critical cognition.

#2023#2025#ai#AI technology#algorithm#Algorithms#Analysis#artificial#Artificial Intelligence#automation#Bias#Careers#chatGPT#cognition#cognitive abilities#college#communication#Community#comprehension#critical thinking#data#datasets#deploying#Developer#developers#development#education#effects#efficiency#engineering

2 notes

Text

man my tolerance for annoying people is just . Nonexistent huh

#crow.txt#like sorry ma'am i dont actually want to be having a 10 min convo about printers with you omg. i got laptops to look at!#glad ive told my boss i straight up cant do consultations to teach people to use their computer#bc i think i would develop blood pressure/heart problems bc it would piss me off so badly#like ill fix your shit no problem. if you dont know how to use it that aint my fuckin problem!#i simply. do not have the patience to teach an old person what an email client is. i would end up on the news. holy shit#we did a like. solicitation of opinion type deal. to see what customers would like to see from us service wise. a lot of it was nonsense#but people were fuckin. saying shit. asking How to use AI. ....IF YOU NEED COACHING IN THAT YOU ALREADY DONT NEED TO USE IT?#'how to use home automation devices' IF YOU DONT EVEN KNOW HOW TO SET IT UP OR USE IT..... WHY DO YOU WANT IT...?#IT BAFFLES ME WHEN PEOPLE WANT THINGS AND CANT BE ASSED TO UNDERSTAND HOW THEY WORK?#WHAT HAPPENS WHEN YOU NEED TO MOVE AND SET IT UP AGAIN?? OR RESET IT. ITS KINDA IDIOTPROOF FOR THAT REASON#YOU PEOPLE MAKE ME FUCKING INSANE HOW DO YOU DO ANYTHINGGGG 😭😭

3 notes

Text

Petition to rebrand AI as “MA”, mass automation. Because that’s what it actually is.

#so called AI doesn’t think or have any social awareness#it’s just a program designed to automate the recognition and generation of data en masse

12 notes

Text

they/themavos real

#the dragon prince#lgbt#aaravos#i know tumblr hates chat ai but personally i enjoy it it amuses me#im also going into computer science so like#u can view me as The Enemy if you want ig#but#idk personally i feel yeah#publishing ai writing for a profit anywhere is totally wrong#and underpaying/overworking workers#but being a little silly and goofy with the chat bots#without feeding it anyone else’s writing#that’s coolio#yk basically there are lines that shouldn’t be crossed#it’s like any sort of technological advancement. it can be used for good but also to cause harm in the wrong hands. arcane tv series moment#but you can’t deny the advance it doesn’t rlly care if you do or not lmao#unsolicited rant yk but here u go#self spaghettification#😘#original post#tag rant#it’s a tool. like anything else#it’s good not to become too reliant on it though#its a tool with a lot of possibility :)#i do have some of that guilt going into cs like am i selling my soul to the devil?…. i mean maybe#but also automating things is nice. making advancements is nice#so yk. ultimately i think it’s best to be a well rounded person with both scientific and humanitarian intent in mind#and im open about basically every facet of myself good or bad lol

34 notes
