#ai shit

Text

chinese room 2

So there’s this guy, right? He sits in a room by himself, with a computer and a keyboard full of Chinese characters. He doesn’t know Chinese, though, in fact he doesn’t even realise that Chinese is a language. He just thinks it’s a bunch of odd symbols. Anyway, the computer prints out a paragraph of Chinese, and he thinks, whoa, cool shapes. And then a message is displayed on the computer monitor: which character comes next?

This guy has no idea how the hell he’s meant to know that, so he just presses a random character on the keyboard. And then the computer goes BZZZT, wrong! The correct character was THIS one, and it flashes a character on the screen. And the guy thinks, augh, dammit! I hope I get it right next time. And sure enough, computer prints out another paragraph of Chinese, and then it asks the guy, what comes next?

He guesses again, and he gets it wrong again, and he goes augh again, and this carries on for a while. But eventually, he presses the button and it goes DING! You got it right this time! And he is so happy, you have no idea. This is the best day of his life. He is going to do everything in his power to make that machine go DING again. So he starts paying attention. He looks at the paragraph of Chinese printed out by the machine, and cross-compares it against all the other paragraphs he’s gotten. And, recall, this guy doesn’t even know that this is a language, it’s just a sequence of weird symbols to him. But it’s a sequence that forms patterns. He notices that if a particular symbol is displayed, then the next symbol is more likely to be this one. He notices some symbols are more common in general. Bit by bit, he starts to draw statistical inferences about the symbols, he analyses the printouts every way he can, he writes extensive notes to himself on how to recognise the patterns.

Over time, his guesses begin to get more and more accurate. He hears those lovely DING sounds that indicate his prediction was correct more and more often, and he manages to use that to condition his instincts better and better, picking up on cues consciously and subconsciously to get better and better at pressing the right button on the keyboard. Eventually, his accuracy is like 70% or something -- pretty damn good for a guy who doesn’t even know Chinese is a language.

* * *

One day, something odd happens.

He gets a printout, the machine asks what character comes next, and he presses a button on the keyboard and-- silence. No sound at all. Instead, the machine prints out the exact same sequence again, but with one small change. The character he input on the keyboard has been added to the end of the sequence.

Which character comes next?

This weirds the guy out, but he thinks, well. This is clearly a test of my prediction abilities. So I’m not going to treat this printout any differently to any other printout made by the machine -- shit, I’ll pretend that last printout I got? Never even happened. I’m just going to keep acting like this is a normal day on the job, and I’m going to predict the next symbol in this sequence as if it was one of the thousands of printouts I’ve seen before. And that’s what he does! He presses the symbol he thinks comes next, and then another printout comes out with that symbol added to the end, and then he presses what he thinks will be the next symbol in that sequence. And then, eventually, he thinks, “hm. I don’t think there’s any symbol after this one. I think this is the end of the sequence.” And so he presses the “END” button on his keyboard, and sits back, satisfied.

Unbeknownst to him, the sequence of characters he input wasn’t just some meaningless string of symbols. See, the printouts he was getting, they were always grammatically correct Chinese. And that first printout he’d gotten that day in particular? It was a question: “How do I open a door?” The string of characters he had just input, what he had determined to be the most likely string of symbols to come next, formed a comprehensible response that read, “You turn the handle and push”.

* * *

One day you decide to visit this guy’s office. You’ve heard he’s learning Chinese, and for whatever reason you decide to test his progress. So you ask him, “Hey, which character means dog?”

He looks at you like you’ve got two heads. You may as well have asked him which of his shoes means “dog”, or which of the hairs on the back of his arm. There’s no connection in his mind at all between language and his little symbol prediction game, indeed, he thinks of it as an advanced form of mathematics rather than anything to do with linguistics. He hadn’t even conceived of the idea that what he was doing could be considered a kind of communication any more than algebra is. He says to you, “Buddy, they’re just funny symbols. No need to get all philosophical about it.”

Suddenly, another printout comes out of the machine. He stares at it, puzzles over it, but you can tell he doesn’t know what it says. You do, though. You’re fluent in the language. You can see that it says the words, “Do you actually speak Chinese, or are you just a guy in a room doing statistics and shit?”

The guy leans over to you, and says confidently, “I know it looks like a jumble of completely random characters. But it’s actually a very sophisticated mathematical sequence,” and then he presses a button on the keyboard. And another, and another, and another, and slowly but surely he composes a sequence of characters that, unbeknownst to him, reads “Yes, I know Chinese fluently! If I didn’t I would not be able to speak with you.”

That is how ChatGPT works.
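For anyone who wants to see the guy's symbol game as code, here is a toy sketch in Python. It is nowhere near how ChatGPT is actually built: it only counts which symbol tends to follow which (the "if this symbol is displayed, then the next symbol is more likely to be this one" part), then feeds its own guesses back in until it decides the sequence is over. The names `train`, `predict_next`, `respond` and the `END` marker are all made up for this illustration.

```python
from collections import Counter, defaultdict

END = "<END>"  # hypothetical marker standing in for the "END" button


def train(printouts):
    """Count, for each symbol, which symbols followed it in the printouts."""
    follows = defaultdict(Counter)
    for text in printouts:
        symbols = list(text) + [END]
        for current, nxt in zip(symbols, symbols[1:]):
            follows[current][nxt] += 1
    return follows


def predict_next(follows, sequence):
    """Guess the symbol that most often followed the last symbol seen."""
    if not sequence or sequence[-1] not in follows:
        return END  # nothing to go on, so call it the end of the sequence
    return follows[sequence[-1]].most_common(1)[0][0]


def respond(follows, prompt, max_len=20):
    """Keep appending guesses to the sequence until END (or max_len) is hit."""
    sequence = list(prompt)
    reply = []
    while len(reply) < max_len:
        guess = predict_next(follows, sequence)
        if guess == END:
            break
        reply.append(guess)
        sequence.append(guess)  # the guess becomes part of the next printout
    return "".join(reply)
```

Train it on a pile of strings with `train([...])` and call `respond(follows, "some prompt")`. At no point does the code know what any symbol means; it only ever looks at counts.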

#txt#ai shit#it’s not a perfect analogy#chatgpt doesn't think the symbols have no meaning#rather it doesn't think at all#all it does is the maths#but still#effortpost

48K notes


An important message to college students: Why you shouldn't use ChatGPT or other "AI" to write papers.

Here's the thing: Unlike plagiarism, where I can always find the exact source a student used, it's difficult to impossible to prove that a student used ChatGPT to write their paper. Which means I have to grade it as though the student wrote it.

So if your professor can't prove it, why shouldn't you use it?

Well, first off, it doesn't write good papers. Grading them as if the students wrote them themselves, so far I've given GPT-enhanced papers two Ds and an F.

If you're unlucky enough to get a professor like me, they've designed their assignments to be hard to plagiarize, which means they'll also be hard to get "AI" to write well. To get a good paper out of ChatGPT for my class, you'd have to write a prompt that's so long, with so many specifics, that you might as well just write the paper yourself.

ChatGPT absolutely loves to make broad, vague statements about, for example, what topics a book covers. Sadly for my students, I ask for specific examples from the book, and it's not so good at that. Nor is it good at explaining exactly why that example is connected to a concept from class. To get a good paper out of it, you'd have to have already identified the concepts you want to discuss and the relevant examples, and quite honestly if you can do that it'll be easier to write your own paper than to coax ChatGPT to write a decent paper.

The second reason you shouldn't do it?

IT WILL PUT YOUR PROFESSOR IN A REALLY FUCKING BAD MOOD. WHEN I'M IN A BAD MOOD I AM NOT GOING TO BE GENEROUS WITH MY GRADING.

I can't prove it's written by ChatGPT, but I can tell. It does not write like a college freshman. It writes like a professional copywriter churning out articles for a content farm. And much like a large language model, the more papers written by it I see, the better I get at identifying it, because it turns out there are certain phrases it really, really likes using.

Once I think you're using ChatGPT I will be extremely annoyed while I grade your paper. I will grade it as if you wrote it, but I will not grade it generously. I will not give you the benefit of the doubt if I'm not sure whether you understood a concept or not. I will not squint and try to understand how you thought two things are connected that I do not think are connected.

Moreover, I will continue to not feel generous when calculating your final grade for the class. Usually, if someone has been coming to class regularly all semester, turned things in on time, etc, then I might be willing to give them a tiny bit of help - round a 79.3% up to a B-, say. If you get a 79.3%, you will get your C+ and you'd better be thankful for it, because if you try to complain or claim you weren't using AI, I'll be letting the college's academic disciplinary committee decide what grade you should get.

Eventually my school will probably write actual guidelines for me to follow when I suspect use of AI, but for now, it's the wild west and it is in your best interest to avoid a showdown with me.

12K notes


It's hilarious how nanowrimo fails at understanding what their event is about. Thinking that writing is about filling pages with a certain number of words is a misconception about what writing actually is, one you usually only see from techbros.

But here they are, the people behind an event about writing a story in 30 days, telling you that you can just prompt a generative AI and let it spit out 50k in a few seconds and that is somehow just like writing 🤦‍♀️

2K notes


Trying to make sense of the Nanowrimo statement to the best of my abilities and fuck, man. It's hard.

It's hard because it seems to me that, first and foremost, the organization itself has forgotten the fucking point.

Nanowrimo was never about the words themselves. It was never about having fifty thousand marketable words to sell to publishing companies and then to the masses. It was a challenge, and it was hard, and it is hard, and it's supposed to be. The point is that it's hard. It's hard to sit down and carve out time and create a world and create characters and turn these things into a coherent plot with themes and emotional impact and an ending that's satisfying. It's hard to go back and make changes and edit those into something likable, something that feels worth reading. It's hard to find a beautifully-written scene in your document and have to make the decision that it's beautiful but it doesn't work in the broader context. It's fucking hard.

Writing and editing are skills. You build them and you hone them. Writing the way the challenge initially encouraged--don't listen to that voice in your head that's nitpicking every word on the page, put off the criticism for a later date, for now just let go and get your thoughts out--is even a different skill from writing in general. Some people don't particularly care about refining that skill to some end goal or another, and simply want to play. Some people sit down and try to improve and improve and improve because that is meaningful to them. Some are in a weird in-between where they don't really know what they want, and some have always liked the idea of writing and wanted a place to start. The challenge was a good place for this--sit down, put your butt in a chair, open a blank document, and by the end of the month, try to put fifty thousand words in that document.

How does it make you feel to try? Your wrists ache and you don't feel like any of the words were any good, but didn't you learn something about the process? Re-reading it, don't you think it sounds better if you swap these two sentences, if you replace this word, if you take out this comma? Maybe you didn't hit 50k words. Maybe you only wrote 10k. But isn't it cool, that you wrote ten thousand words? Doesn't it feel nice that you did something? We can try again. We can keep getting better, or just throwing ourselves into it for fun or whatever, and we can do it again and again.

I guess I don't completely know where I'm going with this post. If you've followed me or many tumblr users for any amount of time, you've probably already heard a thousand times about how generative AI hurts the environment so many of us have been so desperately trying to save, about how generative AI is again and again used to exploit big authors, little authors, up-and-coming authors, first time authors, people posting on Ao3 as a hobby, people self-publishing e-books on Amazon, traditionally published authors, and everyone in between. You've probably seen the statements from developers of these "tools", things like how being required to obtain permission for everything in the database used to train the language model would destroy the tool entirely. You've seen posts about new AI tools scraping Ao3 so they can make money off someone else's hobby and putting the legality of the site itself at risk. For an organization that used to dedicate itself to making writing more accessible for people and for creating a community of writers, Nanowrimo has spent the past several years systematically cracking that community to bits, and now, it's made an official statement claiming that the exploitation of writers in its community is okay, because otherwise, someone might find it too hard to complete a challenge that's meant to be hard to begin with.

I couldn't thank Nanowrimo enough for what it did for me when I started out. I don't know how to find community in the same way. But you can bet that I've deleted my account, and I'll be finding my own path forward without it. Thanks for the fucking memories, I guess.

429 notes


hate when you're looking up writing stuff and people are like "use this ai" like i'm not putting my stories into the fucking mediocrity machine

150 notes


At the end of the day I will always create, even if the world treats art as unnecessary and like it doesn’t need a human behind it who deserves to be compensated. I would really love to make my living off art, sure, it’s the only thing I love this much and the only thing I can do realistically given my situation. But I’ll keep living and I’ll keep making and sharing. There’s nothing that can take that away from me, and I refuse to do it in spite, I do it in love.

126 notes


after learning that ai scrapers took tons of data from ao3 a while back, it's kind of funny that everyone's clutching pearls about this current round. they didn't have "permission" or pay any money to anyone for that, and just... took stuff that was posted on the internet for anyone to see (or scrape for their theft blender).

Like... am i wrong about this? has anything on the internet been safe at any time from this sort of thing?

is there any more safety in, say, hosting your art/writing/videos/gifs, etc. on a personal blog that's still accessible to the public? where any passing bot could potentially scan and scrape it without you ever knowing?

#ai shit#genuinely asking because i see lots of people talking about getting a personal website instead#and like... is that really any different at this point? other than making it harder for people who want to see and enjoy your stuff#to actually find you and keep up with you on a consistent basis?#if i had to go to 100 different websites every day to see what my friends were up to i probably... wouldn't do that as often...

56 notes


Stanford era

#supernatural#sam winchester#dean winchester#j2#samdean#spn#jensen ackles#jared padalecki#stanford university#ai artwork#ai generated#ai art#ai#ai image#ai shit

85 notes


I hate that nowadays, any time I see some really wonderful gothic, Baroque-inspired art, my first thought is usually "This is AI, isn't it?" and 9 times out of 10 I'm proven right.

I am genuinely begging people to stop posting AI shit in goth tags. Like, I know this is falling on deaf ears among those of y'all who spam twenty thousand aesthetic tags on pics reposted a thousand times over on Pinterest and wholly divorced from all credit, but for those of y'all that are genuinely trying to share great art? If you really love it and care about the artistry? Look into your sources. Get proper credit. And if it says "AI" in there? Don't share that shit. Don't let it proliferate. I don't give a fuck if it's "vibes" or "mood" or whatever the fuck, it's fake and it's stealing from thousands of artists who are actually out there making art you would fucking love if people would just actually take the time to share them properly.

29 notes


YouTube stop recommending AI generated "films" challenge. No, I don't care what your program thinks a 1950 Technicolor Mulan would look like, now piss off.

Also please stop suggesting me "Top ten most beautiful [animal]" lists in which the cover image is CLEARLY an AI fake.

I see an AI image I click "don't recommend channel" and block.

22 notes


AI-generated content crap, ChatGPT and algorithm bullshit are an insult to AI!

Sure it’s artificial.

But it’s not remotely intelligent.

It’s not even on par with a dumb AI.

It’s glorified disinformation generation and digital plagiarism.

Needless, useless, polluting electronic waste and digital garbage.

#dougie rambles#personal stuff#vent post#anti ai#ai bullshit#ai shit#bullshit#useless#garbage#digital waste#pollution#digital pollution#wasteful#ai generated#ai content#chatgpt#algorithm

21 notes


#well this sucks#AI shit#hang on deleting all my queued posts of yuumei#how disappointing#I thought they were so talented#inspiration#art

56 notes


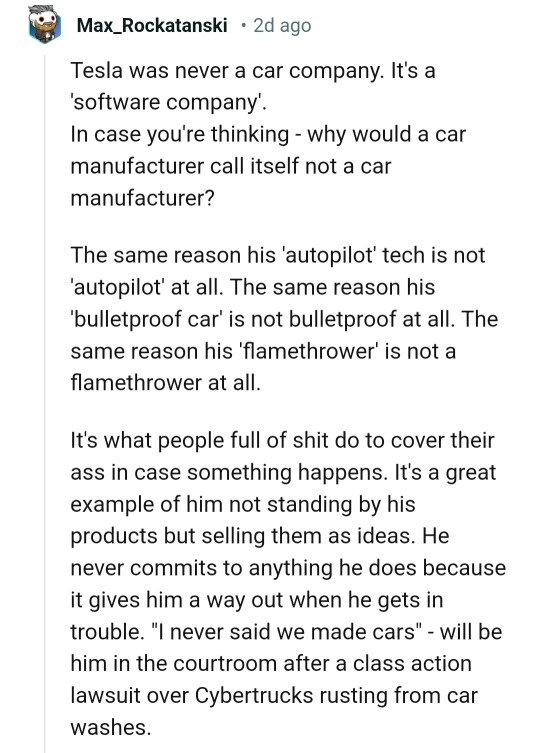

https://finance.yahoo.com/news/elon-musk-insists-tesla-isnt-a-car-company-as-sales-falter-150937418.html?guccounter=1

32 notes


Photo

This AI Generated Pikachu coaster I found at a small fair in the UK.

I woulda told the kind ladies but my mother was there and she was already glaring at me when I was squinting at the tail fading through the bricks 😭🙏

Even weirder, nothing else was AI Generated at their table lol

Just this one random Pikachu coaster-

250 notes


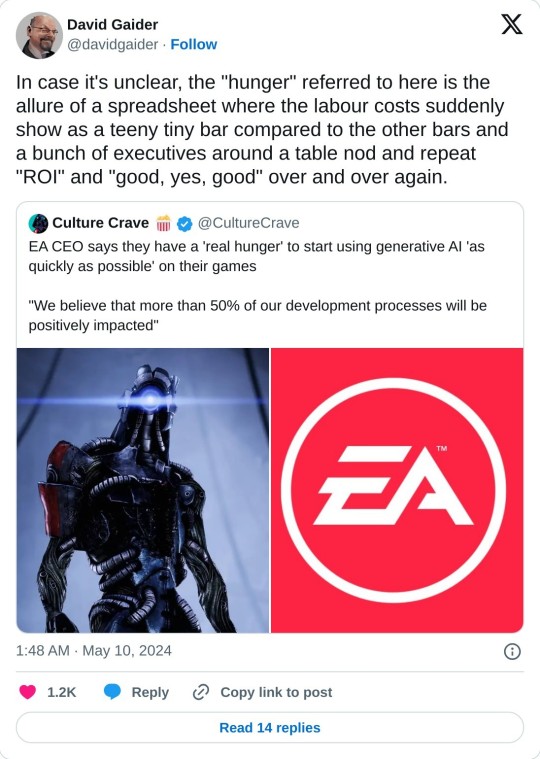

Fuck this! I won't be touching any game exploiting the developers with AI.

33 notes
