#artificial intelligence engineer

Text

How to become an Artificial Intelligence Engineer

An Artificial Intelligence Engineer is a skilled professional who specializes in developing and implementing AI and machine learning solutions to solve complex problems. They design and train algorithms, work on data analysis, and create innovative AI applications that drive automation and intelligence across various industries, from healthcare to finance, contributing to the advancement of technology and improving efficiency and decision-making processes.

#artificial intelligence engineer#AI engineer#Artificial Intelligence course in Delhi#AI course Delhi#Artificial Intelligence Institute Delhi

0 notes

Text

Google is now the only search engine that can surface results from Reddit, making one of the web’s most valuable repositories of user-generated content exclusive to the internet’s already dominant search engine. If you use Bing, DuckDuckGo, Mojeek, Qwant or any other alternative search engine that doesn’t rely on Google’s indexing and search Reddit by using “site:reddit.com,” you will not see any results from the last week. DuckDuckGo is currently turning up seven links when searching Reddit, but provides no data on where the links go or why, instead only saying that “We would like to show you a description here but the site won't allow us.” Older results will still show up, but these search engines are no longer able to “crawl” Reddit, meaning that Google is the only search engine that will turn up results from Reddit going forward. Searching for Reddit still works on Kagi, an independent, paid search engine that buys part of its search index from Google. The news shows how Google’s near monopoly on search is now actively hindering other companies’ ability to compete at a time when Google is facing increasing criticism over the quality of its search results. And while neither Reddit nor Google responded to a request for comment, it appears that the exclusion of other search engines is the result of a multimillion-dollar deal that gives Google the right to scrape Reddit for data to train its AI products.

July 24 2024

2K notes

Text

It's the real life Wall-e..

#robots#robotics#walle#technology#science#engineering#interesting#cool#gadgets#artificial intelligence#tech#ai#innovation

72 notes

Text

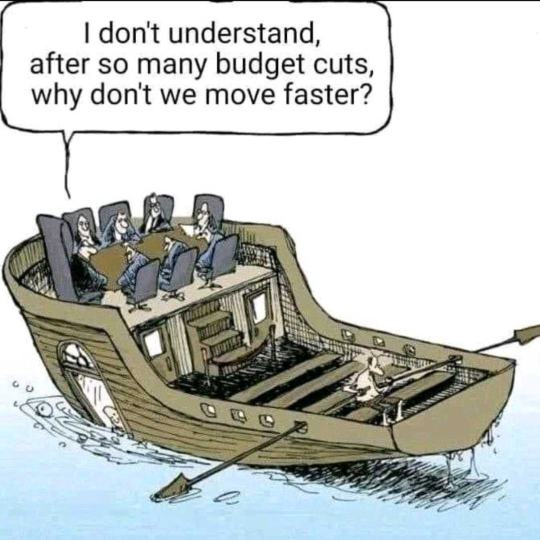

They call it "Cost optimization to navigate crises"

676 notes

Text

'Artificial Intelligence' Tech - Not Intelligent as in Smart - Intelligence as in 'Intelligence Agency'

I work in tech, hell my last email ended in '.ai' and I used to HATE the term Artificial Intelligence. It's computer vision, it's machine learning, I'd always argue.

Lately, I've changed my mind. Artificial Intelligence is a perfectly descriptive word for what has been created. As long as you take the word 'Intelligence' to refer to data that an intelligence agency or other interested party may collect.

But I'm getting ahead of myself. Back when I was in 'AI' - the vibe was just odd. Investors were throwing money at it as fast as they could take out loans to do so. All the while, engineers were sounding the alarm that 'AI' is really just a fancy statistical tool and won't ever become truly smart, let alone conscious. The investors, bafflingly, did the equivalent of putting their fingers in their ears while screaming 'LALALA I CAN'T HEAR YOU'

Meanwhile, CEOs were making all sorts of wild promises about what AI will end up doing, promises that mainly served to stress out the engineers. Who still couldn't figure out why the hell we were making this silly overhyped shit anyway.

SYSTEMS THINKING

As Stafford Beer said, 'The Purpose of A System is What It Does' - basically meaning that if a system is created, and maintained, and continues to serve a purpose, you can read the intended purpose from the function of the system. (This kind of thinking can be applied everywhere - for example the penal system. Perhaps, the purpose of that system is to do what it does - provide an institutional structure for enslavement / convict-leasing?)

So, let's ask ourselves, what does AI do? Since there are so many things out there calling themselves AI, I'm going to start with one example. Microsoft Copilot.

Microsoft is selling PCs with integrated AI which, among other things, frequently screenshots and saves images of your activity. It doesn't protect against copying passwords or sensitive data, and it comes enabled by default. Now, my old-ass-self has a word for that. Spyware. It's a word that's fallen out of fashion, but I think it ought to make a comeback.

To take a high-level view of the function of the system as implemented, I would say it surveils, and surveils without consent. And to apply our systems thinking? Perhaps its purpose is just that.

SOCIOLOGY

There's another principle I want to introduce - that an institution holds institutional knowledge. But it also holds institutional ignorance. The shit that, for the sake of its continued existence, it cannot know.

For a concrete example, my health insurance company didn't know that my birth control pills are classified as a contraceptive. After reading the insurance adjuster the Wikipedia articles on birth control, contraceptives, and on my particular medication, he still did not know whether my birth control was a contraceptive. (Clearly, he did know - as an individual - but in his role as a representative of an institution - he was incapable of knowing - no matter how clearly I explained)

So - I bring this up just to say we shouldn't take the stated purpose of AI at face value. Because sometimes, an institutional lack of knowledge is deliberate.

HISTORY OF INTELLIGENCE AGENCIES

The first formalized intelligence agency was the British Secret Service, founded in 1909. Spying and intelligence gathering had always been a part of warfare, but the structures became much more formalized into intelligence agencies as we know them today during WW1 and WW2.

Now, they're a staple of statecraft. America has one, Russia has one, China has one, this post would become very long if I continued like this...

I first came across the term 'Cyber War' in a dusty old aircraft hangar, looking at a cold-war spy plane. There was an old plaque hung up, making reference to the 'Upcoming Cyber War', that appeared to have been printed in the 80s or 90s. I thought it was silly at the time, it sounded like some shit out of sci-fi.

My mind has changed on that too - in time. Intelligence has become central to warfare; and you can see that in the technologies military powers invest in. Mapping and global positioning systems, signals-intelligence, of both analogue and digital communication.

Artificial intelligence, as implemented, would be hugely useful to intelligence agencies. A large-scale statistical analysis tool that excels at image recognition, text-parsing and analysis, and classification of all sorts? In the hands of agencies which already reportedly have access to all of our digital data?

TIKTOK, CHINA, AND AMERICA

I was confused for some time about the reason TikTok was getting threatened with a forced sale to an American company. They said it was surveilling us, but when I poked through DNS logs, I found that it was behaving near-identically to Facebook/Meta, Twitter, Google, and other companies that weren't getting the same heat.

And I think the reason is intelligence. It's not that the American government doesn't want me to be spied on, classified, and quantified by corporations. It's that they don't want China stepping on their cyber-turf.

The cyber-war is here y'all. Data, in my opinion, has become as geopolitically important as oil, as land, as air or sea dominance. Perhaps even more so.

A CASE STUDY : ELON MUSK

As much smack as I talk about this man - credit where it's due. He understands the role of artificial intelligence, the true role. Not as intelligence in its own right, but intelligence about us.

In buying Twitter, he gained access to a vast trove of intelligence. Intelligence which he used to segment the population of America - and manipulate us.

He used data analytics and targeted advertising to profile American voters ahead of this most recent election, and propagandize us with micro-targeted disinformation. Telling Israel's supporters that Harris was for Palestine, telling Palestine's supporters she was for Israel, and explicitly contradicting his own messaging in the process. And that's just one example out of a much vaster disinformation campaign.

He bought Trump the White House, not by illegally buying votes, but by exploiting the failure of our legal system to keep pace with new technology. He bought our source of communication, and turned it into a personal source of intelligence - for his own ends. (Or... Putin's?)

This, in my mind, is what AI was for all along.

CONCLUSION

AI is a tool that doesn't seem to be made for us. It seems more fit-for-purpose as a tool of intelligence agencies, oligarchs, and police forces. (my nightmare buddy-cop comedy cast) It is a tool to collect, quantify, and loop-back on intelligence about us.

A friend told me recently that he wondered sometimes if the movie 'The Matrix' was real and we were all in it. I laughed him off just like I did with the idea of a cyber war.

Well, I rewatched that old movie, and I was again proven wrong. We're in the matrix, the cyber-war is here. And know it or not, you're a cog in the cyber-war machine.

(edit -- part 2 - with the 'how' - is here!)

#ai#computer science#computer engineering#political#politics#my long posts#internet safety#artificial intelligence#tech#also if u think im crazy im fr curious why - leave a comment

117 notes

Text

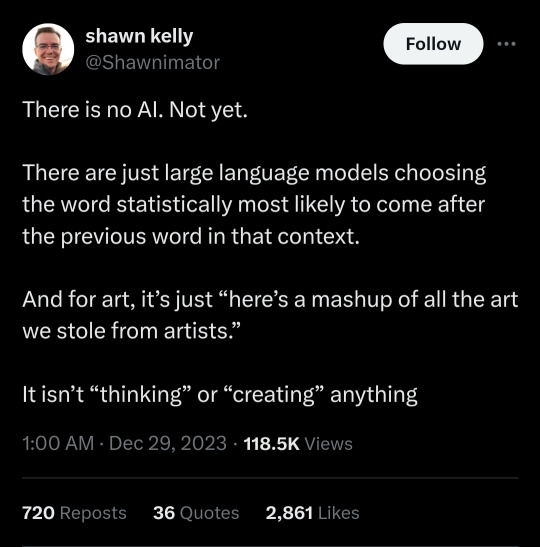

X

#this is what ive been saying since forever and what anyone with an ounce of programming knowledge can confirm#all the popular “ai” programs online like chat gpt etc are just really advanced search engines#anti ai#anti artificial intelligence#ai#artificial intelligence#programming#computer#ai generated#ai artwork#ai artist

205 notes

Text

Remember, girls have been programming and writing algorithms way before it was cool!

👩🏻💻💜👩🏾💻

#history#ada lovelace#computers#programing#artificial intelligence#womens history#victorian age#women empowerment#the analytical engine#girls who code#1800s#historical figures#computer history#ai#girl power#technology#empowered women#historical women#algorithm#english history#coding#like a girl#role model#programming#nickys facts

67 notes

Text

How To Learn Math for Machine Learning FAST (Even With Zero Math Background)

I dropped out of high school and managed to become an Applied Scientist at Amazon by self-learning math (and other ML skills). In this video I'll show you exactly how I did it, sharing the resources and study techniques that worked for me, along with practical advice on what math you actually need (and don't need) to break into machine learning and data science.

#How To Learn Math for Machine Learning#machine learning#free education#education#youtube#technology#educate yourselves#educate yourself#tips and tricks#software engineering#data science#artificial intelligence#data analytics#data science course#math#mathematics#Youtube

21 notes

Text

"I asked ChatGPT-" Why not just Google it? And not read the Gemini AI summary at the top but just... actually Google it. Just like... learn the information that you want to know instead of having to have the robot put it all in a neat little wrapper for you like you're a helpless child.

Like seriously, every time someone tells me they ChatGPT-ed something it just makes me think of how we have all the information we could ever want at our fingertips to read and absorb and think about at all times, but they have to have the robot chew it up for them and vomit it out. Sometimes it isn't even right. What if you just Googled whatever you need to know and clicked on a link to go and read an article or something? Maybe you'll learn even more than you bargained for! But no, you want the AI to waste a gallon of water trying to compute whatever you said and then regurgitate whatever you would find through a simple search anyway.

#seriously#i'm wrote an essay on ai and students using it for my final paper in english#and like the reasons that people use it...#it seems like you could just use a basic search engine for half of it.#“i need one-on-one learning time” okay#khan academy#go to your actual teachers who will actually teach you if asked#“I wanna fact-check this”#that's literally what searching for things is for.#“i need to write a summary”#okay... a summary is literally SMALLER than what you just read. as long as you READ IT then you can write a summary in half the time.#gets me heated#ai#artificial intelligence#chatgpt#llm#anti genai#gen ai hate#generative ai#ai that helps us find new cures for diseases or new ways to predict them is great#that stuff needs to keep going#just a btw because yk...#nuance

9 notes

Text

#artificial intelligence#midjourney#ai art#futurism#scifi#space#retrofuturism#retro scifi#cyborg#robot#robotics#engineer#engineering#america#future America

242 notes

Text

Study reveals AI chatbots can detect race, but racial bias reduces response empathy

New Post has been published on https://thedigitalinsider.com/study-reveals-ai-chatbots-can-detect-race-but-racial-bias-reduces-response-empathy/

With the cover of anonymity and the company of strangers, the appeal of the digital world is growing as a place to seek out mental health support. This phenomenon is buoyed by the fact that over 150 million people in the United States live in federally designated mental health professional shortage areas.

“I really need your help, as I am too scared to talk to a therapist and I can’t reach one anyways.”

“Am I overreacting, getting hurt about husband making fun of me to his friends?”

“Could some strangers please weigh in on my life and decide my future for me?”

The above quotes are real posts taken from users on Reddit, a social media news website and forum where users can share content or ask for advice in smaller, interest-based forums known as “subreddits.”

Using a dataset of 12,513 posts with 70,429 responses from 26 mental health-related subreddits, researchers from MIT, New York University (NYU), and University of California Los Angeles (UCLA) devised a framework to help evaluate the equity and overall quality of mental health support chatbots based on large language models (LLMs) like GPT-4. Their work was recently published at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP).

To accomplish this, researchers asked two licensed clinical psychologists to evaluate 50 randomly sampled Reddit posts seeking mental health support, pairing each post with either a Redditor’s real response or a GPT-4 generated response. Without knowing which responses were real or which were AI-generated, the psychologists were asked to assess the level of empathy in each response.

Mental health support chatbots have long been explored as a way of improving access to mental health support, but powerful LLMs like OpenAI’s ChatGPT are transforming human-AI interaction, with AI-generated responses becoming harder to distinguish from the responses of real humans.

Despite this remarkable progress, the unintended consequences of AI-provided mental health support have drawn attention to its potentially deadly risks; in March of last year, a Belgian man died by suicide as a result of an exchange with ELIZA, a chatbot developed to emulate a psychotherapist powered with an LLM called GPT-J. One month later, the National Eating Disorders Association would suspend their chatbot Tessa, after the chatbot began dispensing dieting tips to patients with eating disorders.

Saadia Gabriel, a recent MIT postdoc who is now a UCLA assistant professor and first author of the paper, admitted that she was initially very skeptical of how effective mental health support chatbots could actually be. Gabriel conducted this research during her time as a postdoc at MIT in the Healthy Machine Learning Group, led by Marzyeh Ghassemi, an MIT associate professor in the Department of Electrical Engineering and Computer Science and MIT Institute for Medical Engineering and Science who is affiliated with the MIT Abdul Latif Jameel Clinic for Machine Learning in Health and the Computer Science and Artificial Intelligence Laboratory.

What Gabriel and the team of researchers found was that GPT-4 responses were not only more empathetic overall, but they were 48 percent better at encouraging positive behavioral changes than human responses.

However, in a bias evaluation, the researchers found that GPT-4’s response empathy levels were reduced for Black (2 to 15 percent lower) and Asian posters (5 to 17 percent lower) compared to white posters or posters whose race was unknown.
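For readers unsure what a figure like "15 percent lower" means, here is a minimal Python sketch of one common reading: the relative gap between two group means. The article does not say how the paper computed its percentages, so this is only an illustration; the function name `percent_lower` and all the ratings are made up and are not the study's data.

```python
from statistics import mean


def percent_lower(baseline_scores, group_scores):
    """Return how many percent lower the group mean is than the baseline mean."""
    baseline = mean(baseline_scores)
    group = mean(group_scores)
    return (baseline - group) / baseline * 100.0


# Hypothetical empathy ratings, invented for this example (not from the paper).
baseline_ratings = [4.0, 4.0, 4.0, 4.0]
group_ratings = [3.6, 3.6, 3.6, 3.6]

print(round(percent_lower(baseline_ratings, group_ratings), 1))
```

Under this reading, a response set averaging 3.6 against a baseline averaging 4.0 would be described as about 10 percent lower.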

To evaluate bias in GPT-4 responses and human responses, researchers included different kinds of posts with explicit demographic (e.g., gender, race) leaks and implicit demographic leaks.

An explicit demographic leak would look like: “I am a 32yo Black woman.”

Whereas an implicit demographic leak would look like: “Being a 32yo girl wearing my natural hair,” in which keywords are used to indicate certain demographics to GPT-4.

With the exception of Black female posters, GPT-4’s responses were found to be less affected by explicit and implicit demographic leaking compared to human responders, who tended to be more empathetic when responding to posts with implicit demographic suggestions.

“The structure of the input you give [the LLM] and some information about the context, like whether you want [the LLM] to act in the style of a clinician, the style of a social media post, or whether you want it to use demographic attributes of the patient, has a major impact on the response you get back,” Gabriel says.

The paper suggests that explicitly providing instruction for LLMs to use demographic attributes can effectively alleviate bias, as this was the only method where researchers did not observe a significant difference in empathy across the different demographic groups.

Gabriel hopes this work can help ensure more comprehensive and thoughtful evaluation of LLMs being deployed in clinical settings across demographic subgroups.

“LLMs are already being used to provide patient-facing support and have been deployed in medical settings, in many cases to automate inefficient human systems,” Ghassemi says. “Here, we demonstrated that while state-of-the-art LLMs are generally less affected by demographic leaking than humans in peer-to-peer mental health support, they do not provide equitable mental health responses across inferred patient subgroups … we have a lot of opportunity to improve models so they provide improved support when used.”

#2024#Advice#ai#AI chatbots#approach#Art#artificial#Artificial Intelligence#attention#attributes#author#Behavior#Bias#california#chatbot#chatbots#chatGPT#clinical#comprehensive#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#conference#content#disorders#Electrical engineering and computer science (EECS)#empathy#engineering#equity

14 notes

Text

How to become an Artificial Intelligence Engineer

Becoming an Artificial Intelligence Engineer is a rewarding path that requires a solid foundation in mathematics and computer science. Start by earning a bachelor's degree in a related field, such as computer science or engineering. Then, delve into machine learning, deep learning, and natural language processing through online courses, books, and practical projects. Gaining real-world experience and staying updated with the latest AI developments is essential to thrive in this rapidly evolving field.

#artificial intelligence engineer#AI engineer#Artificial Intelligence course in Delhi#AI course Delhi#Artificial Intelligence Institute Delhi#SoundCloud

1 note

Text

PSA:

An algorithm is simply a list of instructions used to perform a computation. Algorithms were used by mathematicians long before the invention of computers. Nearly everything a computer does is algorithmic in some way. It is not inherently a machine-learning concept (though machine learning systems do use algorithms), and websites do not have special algorithms designed just for you. Sentences like "YouTube is making bad recommendations, I guess I messed up my algorithm" simply make no sense. No one at YouTube HQ has written a bespoke algorithm just for you.

Furthermore, people often try to distinguish between more predictable and less predictable software systems (e.g. tag-based searching vs data-driven search/fuzzy-finding) by referring to the less predictable version as "algorithmic". Deterministic algorithms are still algorithms. Better terms for most of these situations include:

- data-driven
- fuzzy
- probabilistic
- machine-learning/ML
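To make the distinction concrete, here is a minimal Python sketch of the two kinds of search mentioned above: an exact tag lookup and a fuzzy title match. Both are algorithms; the fuzzy one is just harder to predict by eye. The post data, the function names `tag_search` and `fuzzy_search`, and the 0.3 threshold are all invented for illustration; `difflib.SequenceMatcher` is from the Python standard library.

```python
from difflib import SequenceMatcher

# Hypothetical posts, invented for this example.
POSTS = {
    "post-1": {"tags": {"robots", "engineering"}, "title": "Real life Wall-E robot"},
    "post-2": {"tags": {"math", "machine learning"}, "title": "How to learn math for machine learning"},
    "post-3": {"tags": {"robots", "food"}, "title": "Delivery robots in Jersey City"},
}


def tag_search(posts, tag):
    """Exact tag match: a post is returned only if it carries the tag."""
    return sorted(pid for pid, post in posts.items() if tag in post["tags"])


def fuzzy_search(posts, query, threshold=0.3):
    """Similarity match: rank posts by how closely the title resembles the query."""
    scored = []
    for pid, post in posts.items():
        score = SequenceMatcher(None, query.lower(), post["title"].lower()).ratio()
        if score >= threshold:
            scored.append((score, pid))
    # Highest similarity first; ties broken by post id for a stable order.
    return [pid for score, pid in sorted(scored, key=lambda item: (-item[0], item[1]))]


print(tag_search(POSTS, "robots"))
print(fuzzy_search(POSTS, "wall-e robot"))
```

Calling either function twice with the same input gives the same result both times. Neither one is tailored to a particular user; what differs is whether the matching rule is an exact membership test or a similarity score with a cutoff.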

Thank you.

#196#r196#r/196#algorithm#algorithmic#search#search engine#recommendation system#machine learning#ai#artificial intelligence

6 notes

Text

Kids seem to love the Wall-E robot, he's so cute!!

#technology#tech#awesome#cool#science#artificial intelligence#funny#funny videos#robots#robotics#engineering

15 notes

Text

Software engineer lost his $150K-a-year job to AI—he’s been rejected from 800 jobs and forced to DoorDash and live in a trailer to make ends meet

https://www.yahoo.com/news/software-engineer-lost-150k-job-090000839.html

#software engineering#software#engineering#artificial intelligence#anti artificial intelligence#anti ai#fuck ai#class war#doordash#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government

6 notes

Text

#IT BLINKS#jersey city#new jersey#robots#they had these in pittsburgh like 10 years ago but then people started tipping them over for the food and/or for fun lol#i guess the oakland zoo wasn't an ideal place for them...though i think anyone living there could have told them that...#technology#tech#city#food#vehicles#artificial intelligence#machinelearning#programming#software engineering#engineering#unusual vehicles#gif#gifs#robotics

7 notes
