#css card design

Text

Card Flip Animation Using CSS

#card flip animation#css animation examples#css animation tutorial#css animation effect#card flip effect#css card flip animation#css card design#html css#html5 css3#animation#codingflicks#web design#frontend#frontenddevelopment#learn to code

8 notes

Text

Responsive CSS Card Overlay: get the code from the divinectorweb website

#css card#css card design#html css cards#responsive css cards#responsive web design#html css#learn to code#learn css#webdesign

0 notes

Text

CSS Responsive Profile Card

#css cards#codenewbies#html css#html5 css3#webdesign#css#css profile card#profile card html css#responsive web design

3 notes

Text

The Sequence Chat: Justin D. Harris - About Building Microsoft Copilot

New Post has been published on https://thedigitalinsider.com/the-sequence-chat-justin-d-harris-about-building-microsoft-copilot/

Quick bio

This is your second interview at The Sequence. Please tell us a bit about yourself: your background, your current role, and how you got started in AI.

I grew up in the suburbs of Montreal and have always been passionate about mathematics. I left Montreal to study math and computer science at the University of Waterloo in Canada. I currently live in Toronto with my wonderful girlfriend and our little dog Skywalker, who enjoys kayaking with us around the beaches and bluffs. I am a Principal Software Engineer at Microsoft, where I have worked on various AI projects and have been a core contributor to the development of Microsoft Copilot. While my colleagues recognize me as a diligent engineer, only a few have had the opportunity to witness my prowess as a skier.

Throughout my career, I have been dedicated to building AI applications, starting when I was in university 15 years ago. During my studies, I joined Maluuba as one of its early engineers. We developed personal assistants for phones, TVs, and cars that handled a wide range of commands. We started by using classical machine learning models such as SVMs, Naive Bayes, and CRFs before adapting to deep learning. We sold Maluuba to Microsoft in 2017 to help Microsoft in its journey to incorporate AI into more products. I have worked on a few AI projects at Microsoft, including some research on putting trainable models in Ethereum smart contracts, which we talked about in our last interview. Since 2020, I have been working on a chat system built into Bing, which evolved into Microsoft Copilot. I am currently a Principal Software Engineer on the Copilot Platform team at Microsoft, where we’re focused on developing a generalized platform for copilots at Microsoft.

TheSequence is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

🛠 ML Work

Your recent work includes Microsoft Copilot, which is a central piece of Microsoft’s AI vision. Tell us about the vision and core capabilities of the platform.

We have built a platform for copilots and apps that want to leverage large language models (LLMs) and easily take advantage of the latest developments in AI. Many products use our platform, such as Windows, Edge, Skype, Bing, SwiftKey, and many Office products, and their needs and customization points vary. It’s a fun engineering challenge to build a system that works well for many different types of clients in different programming languages and that scales from simple LLM usage to more sophisticated integrations with plugins and custom middlewares. Many teams benefit not only from the power of our customizable platform but also from the many Responsible AI (RAI) and security guards built into our system.
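A minimal sketch of the middleware idea in Python. It assumes a hypothetical design in which each middleware receives the request plus the next handler in the chain, so it can act both before and after the model call. `Request`, `build_pipeline`, `fake_llm`, and the two sample middlewares are illustrative names, not the Copilot Platform’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Request:
    """Hypothetical request: the user's text plus metadata middlewares may add."""
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


Handler = Callable[[Request], str]
Middleware = Callable[[Request, Handler], str]


def _wrap(mw: Middleware, nxt: Handler) -> Handler:
    # Bind one middleware to the handler it should call next.
    return lambda req: mw(req, nxt)


def build_pipeline(middlewares: List[Middleware], model: Handler) -> Handler:
    """Chain middlewares around a model call; the first one listed runs outermost."""
    handler = model
    for mw in reversed(middlewares):
        handler = _wrap(mw, handler)
    return handler


def fake_llm(req: Request) -> str:
    # Stand-in for a real LLM call.
    return f"echo: {req.text}"


def add_locale(req: Request, nxt: Handler) -> str:
    # Runs before the model: enrich the request.
    req.metadata["locale"] = "en-US"
    return nxt(req)


def append_locale_tag(req: Request, nxt: Handler) -> str:
    # Runs after the model: post-process the response.
    return nxt(req) + f" [{req.metadata.get('locale', '?')}]"


pipeline = build_pipeline([append_locale_tag, add_locale], fake_llm)
print(pipeline(Request("hello")))  # echo: hello [en-US]
```

A client that only needs plain LLM calls passes an empty middleware list and gets the bare model handler back.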

Are copilots/agents the automation paradigm of the AI era? How would you compare copilots with previous automation trends, such as robotic process automation (RPA), middleware platforms, and others?

Copilots help us automate many types of tasks and get our work done more quickly in a breadth of scenarios, but right now, we still often need to review their work, such as the emails or code they write. Other types of automation might be hard for an individual to configure, but once configured, they are designed to run autonomously and can be trusted because their scope is limited. Another big difference between LLMs and previous automation trends is that we can now use the same model to help with many different types of tasks. When given the right instructions, examples, and grounding information, LLMs can often generalize to new scenarios.

There are capabilities such as planning/reasoning, memory, and action execution that are fundamental building blocks of copilots or agents. What are some of the most exciting research and technologies you have seen in these areas?

AutoGen is an interesting paradigm that’s adapting classical ideas like ensembling techniques from previous eras of AI for the new more generalized LLMs. AutoGen can use multiple different LLMs to collaborate and solve a task.
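AutoGen’s own API is built around conversational agents and is not shown here. The sketch below is a hypothetical illustration of the classical ensembling idea the answer mentions: several model functions get the same question, and a majority vote picks the answer. The three lambdas are stand-ins for different LLM backends.

```python
from collections import Counter
from typing import Callable, List

Model = Callable[[str], str]  # any function mapping a prompt to an answer


def normalize(answer: str) -> str:
    # Light normalization so "Paris" and "paris " count as the same vote.
    return answer.strip().lower()


def majority_vote(models: List[Model], prompt: str) -> str:
    """Ask every model the same prompt and return the most common answer.

    Counter.most_common orders equal counts by first appearance, so on a tie
    the answer from the earliest model in the list wins.
    """
    if not models:
        raise ValueError("need at least one model")
    votes = Counter(normalize(m(prompt)) for m in models)
    return votes.most_common(1)[0][0]


# Hypothetical stand-ins for three different LLM backends.
models = [
    lambda p: "Paris",
    lambda p: "paris ",
    lambda p: "Lyon",
]
print(majority_vote(models, "What is the capital of France?"))  # paris
```

Majority voting only suits short, comparable answers. Collaborative setups like the one described usually have models critique or build on each other’s output instead.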

Semantic Kernel is a great tool for aiding in orchestrating LLMs and easily integrating plugins, RAG, and different models. It also works well with my favorite tool to easily run models locally: Ollama.

Here’s a somewhat controversial question: copilots/agents are typically constructed as an LLM surrounded by features like RAG, action execution, memory, etc. How many of those capabilities do you foresee becoming part of the LLMs themselves (perhaps through fine-tuning) versus remaining external? In other words, does the model become the copilot?

It’s really helpful to have features like RAG available as external integrations for brand new scenarios and to ensure that we cite the right data. When training models, we talk about the ‘cold start’ problem: how do we get data and examples for new use cases? Very large models can learn some of the desired knowledge, but it’s hard to foresee what will be required in this quickly changing space. Many teams using our Copilot Platform expect to use RAG and plugins to easily integrate their stored knowledge from various sources that update often, such as web content based on the news, or documentation that changes daily. It would be outlandish to tell them to collect lots of training data, even unlabeled or unstructured data, and to fine-tune a model hourly or even more often as the world changes. We’re not ready for that yet. Citing the right data is also important. Without RAG, current models hallucinate too much and cannot yet be trusted to cite the original source of information. With RAG, we know what information is available for the model to cite at runtime, and we include those links in the UI along with the model’s response, even if the model did not choose to cite them, because they’re helpful references for learning more about a topic.
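A minimal sketch of the reference display described in this answer. It assumes the model marks citations as `[n]` (1-based), pointing at the retrieved sources in order. `Source` and `split_references` are hypothetical names. Every retrieved source is returned, split into the ones the model cited and the ones it did not, so the UI can show both.

```python
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Source:
    title: str
    url: str


def split_references(answer: str, sources: List[Source]) -> Tuple[List[Source], List[Source]]:
    """Return (cited, uncited) retrieved sources to show alongside an answer.

    Markers that point past the end of `sources` are ignored.
    """
    cited_ids = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    cited = [s for i, s in enumerate(sources, start=1) if i in cited_ids]
    uncited = [s for i, s in enumerate(sources, start=1) if i not in cited_ids]
    return cited, uncited


sources = [
    Source("A", "https://example.com/a"),
    Source("B", "https://example.com/b"),
    Source("C", "https://example.com/c"),
]
cited, uncited = split_references("Toronto is in Ontario [2].", sources)
print([s.title for s in cited], [s.title for s in uncited])  # ['B'] ['A', 'C']
```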

One of the biggest challenges with using models such as GPT-4 for domain-specific agents or copilots is that they are just too big and expensive. Microsoft has recently been pushing the small language model (SLM) trend with models like Orca and Phi. Are SLMs generally better suited for business copilot scenarios?

SLMs are very useful for specific use cases and can be fine-tuned more easily. The biggest caveat is that many SLMs only work well in a handful of languages, whereas GPT-4 knows many. I’ve had a great time playing around with them using Ollama. It’s easy to experiment and build an application with a local SLM, especially while you’re focused on traditional engineering problems and designing parts of your project. Once you’re ready to scale to many languages and meet the full array of customer needs, a more powerful model might be more useful. I think the real answer will be hybrid systems that find ways to leverage both small and large models.
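One way such a hybrid could look, as a hypothetical sketch. A request goes to a small local model when that model supports the request’s language and the prompt is short, and to a larger hosted model otherwise. The model names, the language set, and the 2,000-character threshold are placeholders, not recommendations.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ModelChoice:
    name: str
    runs_locally: bool


# Hypothetical model tiers; substitute whatever models you actually deploy.
SMALL = ModelChoice("small-local-model", runs_locally=True)
LARGE = ModelChoice("large-hosted-model", runs_locally=False)


def route(language: str, prompt: str,
          small_model_languages: FrozenSet[str],
          max_small_prompt_chars: int = 2000) -> ModelChoice:
    """Prefer the small model when it supports the language and the prompt is short."""
    if language in small_model_languages and len(prompt) <= max_small_prompt_chars:
        return SMALL
    return LARGE


supported = frozenset({"en", "fr"})
# The language code is assumed to come from an upstream detector.
print(route("en", "Summarize this paragraph.", supported).name)  # small-local-model
print(route("de", "Summarize this paragraph.", supported).name)  # large-hosted-model
```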

How important are guardrails in Microsoft’s Copilots, and how do you think about that space?

We have many important guardrails for Responsible AI (RAI) built into our Copilot Platform, from inspecting user input to verifying model output. These protections are one of the main reasons that many teams use our platform. The guardrails and shields that we set up for RAI are central to our designs: RAI is a core part of every design review, and we standardize how RAI works for everything that goes into and comes out of our platform. We work with many teams across Microsoft to standardize what to validate and to share knowledge. We also ensure that the long prompt with special instructions, examples, and formatting is treated securely, just like code, and not exposed outside of our platform.
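A toy sketch of the "check the input, call the model, check the output" shape described above. A keyword list stands in for the trained classifiers a production RAI system would use. The sketch also treats the system prompt like code by refusing any reply that echoes it back. All names and the blocked phrase are hypothetical.

```python
from typing import Callable


class GuardrailError(Exception):
    """Raised when a request or a response is blocked."""


# Hypothetical policy list; a real system would call trained classifiers instead.
BLOCKED_TERMS = {"example-blocked-phrase"}


def check_input(user_text: str) -> None:
    lowered = user_text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        raise GuardrailError("input rejected by policy")


def check_output(model_text: str, system_prompt: str) -> None:
    # Treat the system prompt like source code: it must never be echoed back.
    if system_prompt in model_text:
        raise GuardrailError("output would expose the system prompt")
    lowered = model_text.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        raise GuardrailError("output rejected by policy")


def guarded_call(user_text: str, system_prompt: str,
                 model: Callable[[str, str], str]) -> str:
    """Validate the input, call the model, then validate its output."""
    check_input(user_text)
    reply = model(system_prompt, user_text)
    check_output(reply, system_prompt)
    return reply


SYSTEM = "You are a helpful assistant. Format answers as Markdown."
print(guarded_call("hi", SYSTEM, lambda s, u: "Here you go."))  # Here you go.
```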

Your team has been very vocal about its work on the Copilot user experience, using technologies like SignalR. What makes the UX for copilots/agents different from previous paradigms?

We built new user experiences for our copilots to integrate them into existing products, and we wrote a blog post to share some of our design choices, such as how we stream responses and how we designed the platform to work with many different types of clients in different programming languages. I also did a podcast to discuss some of the blog post’s topics in more depth. One of the most noticeable differences from previous assistants or agents is how an answer is streamed word by word, or token by token, as the response is generated. The largest and most powerful models can also be the slowest, and it can take many seconds or sometimes minutes to generate a full response with grounding data and references, so it’s important for us to start showing the user an answer as quickly as possible. We use SignalR to simplify streaming the answer to the client. SignalR automatically detects and chooses the best transport method among various web standard protocols and techniques. WebSockets are the default transport for most of our applications, and we can gracefully fall back to Server-Sent Events or long polling. SignalR also simplifies bidirectional communication, such as when the application needs to send information to the service to interrupt the streaming of a response.
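A minimal sketch of the two ideas in this answer. It assumes nothing about SignalR’s actual API, since SignalR negotiates transports itself. `choose_transport` picks the first transport both sides support, in the preference order from the answer: WebSockets, then Server-Sent Events, then long polling. `stream_tokens` forwards tokens until the client sets a stop flag, which mimics interrupting a response. The transport strings and function names are illustrative.

```python
import threading
from typing import Iterable, Iterator, Set

# Preference order from the interview; the strings are illustrative labels.
TRANSPORT_PREFERENCE = ["websockets", "server-sent-events", "long-polling"]


def choose_transport(client: Set[str], server: Set[str]) -> str:
    """Return the most preferred transport that both sides support."""
    for transport in TRANSPORT_PREFERENCE:
        if transport in client and transport in server:
            return transport
    raise RuntimeError("no transport in common")


def stream_tokens(tokens: Iterable[str], stop: threading.Event) -> Iterator[str]:
    """Forward tokens as soon as they are produced, until the client asks to stop."""
    for token in tokens:
        if stop.is_set():
            return
        yield token


all_transports = set(TRANSPORT_PREFERENCE)
print(choose_transport({"server-sent-events", "long-polling"}, all_transports))
# server-sent-events

stop = threading.Event()
received = []
for token in stream_tokens(["Hello", ",", " world", "!", " More"], stop):
    received.append(token)
    if len(received) == 3:
        stop.set()  # e.g. the user clicked a "stop" button mid-answer
print("".join(received))  # Hello, world
```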

We use Adaptive Cards and Markdown to easily scale to displaying responses in many different applications and programming languages. We use object-basin, a new library we built, to generalize and simplify streaming pieces of JSON that modify the Adaptive Cards already streamed to the application. This gives the service a lot of control over what is displayed in the applications, while each application can easily tweak how the response is formatted, for example, by changing CSS.
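object-basin’s real interface is not reproduced here. As a hypothetical sketch of the underlying idea, the code below applies small path-addressed updates to an Adaptive Card the client has already rendered. Text chunks are appended to a `TextBlock`, and an `actions` array is added once the sources are known. `append_text` and `set_value` are illustrative names.

```python
from typing import Any, List, Union

Path = List[Union[str, int]]  # dict keys and list indices, outermost first


def _walk(doc: Any, path: Path) -> Any:
    """Follow every path segment except the last and return that container."""
    node = doc
    for key in path[:-1]:
        node = node[key]
    return node


def append_text(doc: Any, path: Path, chunk: str) -> None:
    """Append a streamed chunk to the string at `path`."""
    _walk(doc, path)[path[-1]] += chunk


def set_value(doc: Any, path: Path, value: Any) -> None:
    """Create or replace the value at `path`."""
    _walk(doc, path)[path[-1]] = value


# A card the client has already rendered, with an empty text block.
card = {
    "type": "AdaptiveCard",
    "version": "1.5",
    "body": [{"type": "TextBlock", "text": "", "wrap": True}],
}

# Updates arriving from the service while the answer is generated.
for chunk in ["Streaming ", "into ", "a card."]:
    append_text(card, ["body", 0, "text"], chunk)
set_value(card, ["actions"], [
    {"type": "Action.OpenUrl", "title": "Source", "url": "https://example.com"},
])

print(card["body"][0]["text"])  # Streaming into a card.
```

Because the service only sends these small updates, each client keeps its own rendering, and the styling of the card stays in the client’s hands.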

💥 Miscellaneous – a set of rapid-fire questions

What is your favorite area of research outside of generative AI?

Quantum Computing.

Is reasoning and planning the next big thing for LLMs and, consequently, copilots? What other research areas are you excited about?

Reasoning and planning are important for some complex scenarios beyond question answering where multiple steps are involved such as planning a vacation or determining the phases of a project. I’m also excited about ways that we can use smaller and simpler local models securely for simple scenarios.

How far can the LLM scaling laws take us? All the way to AGI?

I’m confident that we will get far with LLMs because we’ve seen them do awesome things already. My personal observation is that we tend to make giant leaps in AI every few years and then the progress is slower and more incremental in the years between the giant leaps. I think at least one more giant leap will be required before AGI is achieved, but I’m confident that LLMs will help us make that giant leap sooner by making us more productive. Language is just one part of intelligence. Models will need to understand the qualia associated with sensory experiences to become truly intelligent.

Describe a programmer’s world with copilots in five years.

Copilots will be integrated more into the development experience, but I hope they don’t eliminate coding completely. Copilots will help us even more with our tasks; going back to not having one already feels weird and lonely to me. I like coding and feeling like I built something, but I’m happy to let a copilot take over more tedious tasks or help me discover different techniques. Copilots will help us get more done faster as they get more powerful and their context size grows, so they understand more of a project instead of just a couple of sections or files. Copilots will also need to become more proactive instead of responding only when prompted. We will have to be careful to build helpful systems that are not pestering.

Who are your favorite mathematicians and computer scientists, and why?

I don’t think I can pick a specific person whom I fully admire, but right now, even though we wouldn’t typically call them mathematicians, Amos Tversky and Daniel Kahneman come to mind. People have been talking more about them lately because Daniel Kahneman passed away a few months ago. I have thought about them, about System 1 vs. System 2 thinking, and about slowing down to apply logic, a deep kind of mathematics, ever since I read “The Undoing Project” and “Thinking, Fast and Slow” a few years ago.

#adaptive cards#agents#AGI#ai#applications#apps#automation#background#bing#Blog#Building#Business#Canada#career#Cars#challenge#classical#code#coding#collaborate#communication#computer#Computer Science#computing#content#CSS#data#Deep Learning#Design#development

0 notes

Video

Card Hover Effect in CSS | Card UI Design in 2024 | Devhubspot

0 notes

Video

🔴 Real Glassmorphism Card Hover Effects | Html CSS Glass Tilt Effects | ...

#youtube#devhubspot#card#effect#tilt#html#html css#html5#html5 css3#html website#css#css3#ui#ui ux design#uidesign#tumblr ui

1 note

Video

🔴 Real Glassmorphism Card Hover Effects | Html CSS Glass Tilt Effects | ...

#youtube#css#hover#card#effect#$tilt#tilt#glass#devhubspot#ui#ui ux design#uidesign#html#html css#html5#html5 css3

0 notes

Video

🔴 Animated Profile Card UI Design using Html & CSS | devhubspot

0 notes

Text

#tool#tools#resouce#web#design#generator#free#css#html#data#structured#twitter#cards#open#graph#codeblr

0 notes

Text

Twine/SugarCube resources

Some/most of you must know that Arcadie: Second-Born was coded in ChoiceScript before I converted it to Twine for self-publishing (for various reasons).

I have switched to Ren'Py for Cold Lands, but I thought I would share the resources that helped me when I was working with Twine. This is basically an organized dump of nearly all the bookmarks I collected. Hope this is helpful!

Guides

Creating Interactive Fiction: A Guide to Using Twine by Aidan Doyle

A Total Beginner’s Guide to Twine

Introduction to Twine by Conor Walsh (covers Harlowe and not SugarCube)

Twine Grimoire I

Twine Grimoire II

Twine and CSS

Documentation

SugarCube v2 Documentation

Custom Macros

Chapel's Custom Macro Collection, particularly the fairmath function for emulating ChoiceScript (CS) operations if you're converting your CS game to Twine

Cycy's custom macros

Clickable Images with HTML Maps
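For anyone converting from ChoiceScript: its fairmath operators move a stat a percentage of the way toward 100 (`%+`) or toward 0 (`%-`). Below is a minimal sketch of that rule with hypothetical function names. It is not Chapel's macro code. ChoiceScript may also round the result, so check its documentation if exact parity with your original game matters.

```python
def fair_add(current: float, percent: float) -> float:
    """ChoiceScript-style `%+`: close `percent`% of the gap between the stat and 100."""
    return current + (100 - current) * percent / 100


def fair_sub(current: float, percent: float) -> float:
    """ChoiceScript-style `%-`: remove `percent`% of the stat's current value."""
    return current - current * percent / 100


print(fair_add(50, 20))  # 60.0
print(fair_sub(50, 20))  # 40.0
print(fair_add(90, 20))  # 92.0 (gains shrink as the stat nears 100)
```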

Character pages

Character Profile Card Tutorial

Twine 2 / SugarCube 2 Sample Code by HiEv

Templates

Some may be outdated following Twine/SugarCube updates

Twine/Sugarcube 2 Template

Twine SugarCube template

Twine Template II

Twine Template by Vahnya

Sample Code and more resources

A post from 2 years ago where I share sample code

TwineLab

nyehilism Twine masterpost

How to have greyed out choices

idrellegames's tutorials

Interactive Fiction Design, Coding in Twine & Other IF Resources by idrellegames (idrellegames has shared many tutorials and tips for Twine, browse their #twine tag)

How to print variables inside links

How do I create a passage link via clicking on a picture

App Builder

Convert your Twine game into a Windows and macOS executable (free)

Convert your Twine game into a mobile app for Android and iPhone ($90 one-time fee, if memory serves me right) // Warning: the Android app it creates is outdated for Google Play; you'll need to update the source code yourself

246 notes

Text

Product Card CSS

#html css#code#product card#css card design#css product card#product card html css#frontend#webdesign#css#html#css3#frontenddevelopment#learn to code#codingflicks

0 notes

Photo

Responsive User Profile Card: get the source code from the divinectorweb website

#responsive#user profile card#profile card#responsive web design#css cards#html css cards#css tricks#learn to code#code#css card design#frontenddevelopment#divinectorweb

0 notes

Text

Movie Card UI Design

#movie card ui design#movie card css#css cards#responsive card design html css#html css#codenewbies#frontenddevelopment#css#html5 css3#webdesign#pure css tutorial#basic html css tutorial#responsive web design

2 notes

Text

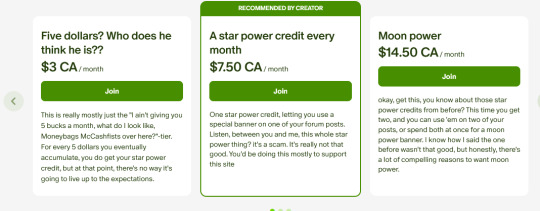

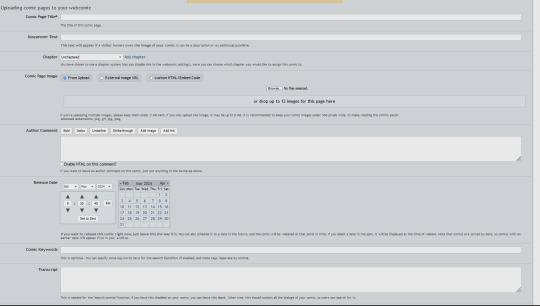

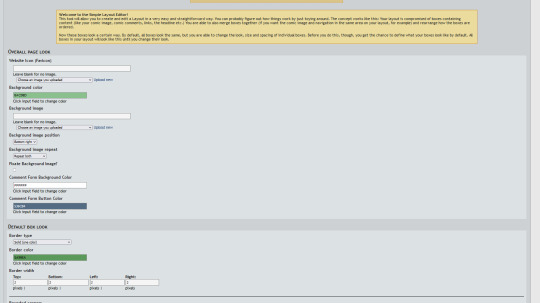

All the cool kids use ComicFury 😘

Hey y'all! If you love independent comic sites and have a few extra dollars in your pocket, please consider supporting ComicFury. The owner, Kyo, has been running it for nearly twenty years, and it's one of the only comic hosting platforms left that's entirely independent and reminiscent of the 'old school' days that I know y'all feel nostalgic over.

(kyo's sense of humor is truly unmatched lmao)

Here are some of the other great features it offers:

Message board forums! It's a gift from the mid-2000's era gods!

Entirely free-to-use HTML and CSS editing! You can use the provided templates, or go wild and customize the site entirely to your liking! There's also a built-in site editor for people like me who want more control over their site design but don't have the patience to learn HTML/CSS ;0

In-depth site analytics that let you track and moderate comments, monitor your comic's performance per week, and see how many visitors you get. You can also set up Google Analytics on your site if you want that extra touch of data, without any bullshit from the platform. Shit, the site doesn't come with ads, but you can run ads on your site. The site owners don't ask questions, they don't take a cut. Pair your site with ComicAd and you'll be as cool as a crocodile alligator!

RSS feeds! They're like Youtube subscriptions for millennials and Gen X'ers!

NSFW comics are allowed, let the "female presenting nipples" run free! (just tag and content rate them properly!)

Tagging. Tagging. Remember that? The basic feature that every comic site has except for the alleged "#1 webcomic site"? The independent comic site that still looks the same as it did 10 years ago has that. Which you'd assume isn't that big a deal, but isn't it weird that Webtoons doesn't?

Blog posts. 'Nuff said.

AI-made comics are strictly prohibited. This also means you don't have to worry about the site owners sneaking in AI comics or installing AI scrapers (cough cough)

Did I mention that the hosting includes actual hosting? Meaning for only the cost of the domain you can change your URL to whatever site name you want. No extra cost for hosting because it's just a URL redirect. No stupid "pro plan" or "gold tier" subscription necessary, every feature of the site is free to use for all. If this were a sponsored Pornhub ad, this is the part where I'd say "no credit card, no bullshit".

Don't believe me? Alright, look at my creator backend (feat stats on my old ass 2014 comic, I ain't got anything to hide LOL)

TRANSCRIPTS! CHAPTER ORGANIZATION! MASS PAGE UPLOADING! MULTIPLE CREATOR SUPPORT! FULL HTML AND CSS SUPPORT! SIMPLIFIED EDITORS! ACTUAL STATISTICS THAT GIVE YOU WEEKLY BREAKDOWNS! THE POWER OF CHOICE!!

So yeah! You have zero reasons to not use and support ComicFury! It being "smaller" than Webtoons shouldn't stop you! Regain your independence, support smaller platforms, and maybe you'll even find that 'tight-knit community' that we all miss from the days of old! They're out there, you just gotta be willing to use them! ( ´ ∀ `)ノ~ ♡

#comicfury#support small platforms#webcomic platforms#webcomic advice#please reblog#also i'm posting my original work over there so if you want pure unhinged weeb puff that's where you can find it LOL#and no this isn't a 'sponsored post'#but i have been paid in the currency known as good faith to promote the shit out of it#because i don't wanna see sites like this die out#we already lost smackjeeves#comicfury is one of the only survivors left

377 notes

Text

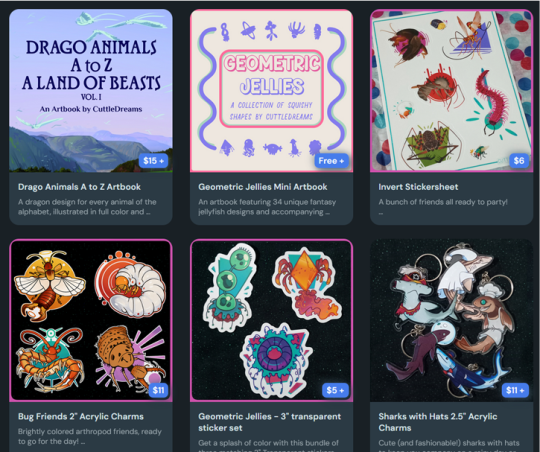

Website is Live!

After several weeks of hard work learning HTML and CSS, I've finally finished putting together my new website!

On it you can find information as to where to find me online, updates to my Invertebrate Tarot project, and commission information!

(Easily check out the full deck as each card updates)

I have also updated my Ko-Fi page. It hosts my commission listings, as well as the last of my stock from my previous BigCartel shop (though shipping is for now limited to the USA), so if you wanted to pick up any stickers or acrylic charms of my designs, this is the place to grab them. I also host my mini-artbooks as pay-what-you-want.

With any commission, I have decided to take 15% of comm payments and donate the funds in an effort to resist the violence of the current USA administration.

As I set up which group I'll focus those donations on (looking at the Trans Youth Emergency Project), for now I will be donating those funds to a friend of mine whose wheelchair was broken by an airline.

Finally, I've donated several of my illustrations as wallpapers to a fundraiser organized by Franz:

This is a nature-themed wallpaper pack featuring donations from dozens of artists. It is available until the end of February. As per the instructions in the post, donate to either Lambda Legal or Assigned Media and Franz will send you the download link to the wallpaper collection.

LINKS:

Webpage: https://cuttledreams.neocities.org/

Shop/Commissions: ko-fi.com/cuttledreams

Faun's GoFundMe: https://www.gofundme.com/f/help-faun-replace-a-broken-wheelchair

Trans Support Wallpaper Fundraiser: https://bsky.app/profile/franzanth.bsky.social/post/3lhmetdyrq22h

#cuttledreams#update#ko-fi#shop#commission#website#gosh this is a big one#its not much but I hope I will end up being able to donate a little bit

39 notes
