#docker build

Text

Nothing encapsulates my misgivings with Docker as much as this recent story. I wanted to deploy a PyGame-CE game as a static executable, which means compiling CPython and PyGame-CE statically and then linking the two together. To compile PyGame-CE statically, I need to link it statically against SDL2, but thanks to SDL2's dynamic API feature, the SDL2 code can still be replaced with a different version at runtime.
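SDL2 documents this runtime replacement as its "dynamic API": a build that links SDL2 statically can be told, through the `SDL_DYNAMIC_API` environment variable, to forward every SDL call to a shared library instead. A minimal Python launcher sketch, assuming SDL2 was built with the dynamic API enabled (the usual default on desktop platforms); the executable and library paths are hypothetical:

```python
import os
import subprocess


def sdl_override_env(sdl_library: str, base_env=None) -> dict:
    """Return a copy of the environment that asks a statically linked SDL2
    to forward its calls to the shared library at `sdl_library`."""
    env = dict(os.environ if base_env is None else base_env)
    env["SDL_DYNAMIC_API"] = sdl_library
    return env


def launch_game(executable: str, sdl_library: str) -> int:
    """Run the (hypothetical) game executable with the SDL2 override set."""
    result = subprocess.run([executable], env=sdl_override_env(sdl_library))
    return result.returncode


# Example call (both paths are placeholders for illustration only):
# launch_game("./game", "/usr/lib/x86_64-linux-gnu/libSDL2-2.0.so.0")
```

The caller's environment is copied rather than mutated, so the override only applies to the launched process.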

I tried, and failed, to do this. I could compile a certain version of CPython, but some of the dependencies of the latest CPython gave me trouble. I could compile PyGame with a simple makefile, but it was more difficult with meson.

Instead of doing this by hand, I started to write a Dockerfile. It's just too easy to get this wrong otherwise, or at least not get it right in a reproducible fashion. Although everything I was doing was just statically compiled, and it should all have worked with a shell script, it didn't work with a shell script in practice, because cmake, meson, and autotools all leak bits and pieces of my host system into the final product. Some things, like libGL, should never be linked into or distributed with my executable.

I also thought that, if I was already working with static compilation, I could just link PyGame-CE against cosmopolitan libc, and have the SDL2 pieces replaced with a dynamically linked libSDL2 for the target platform.

I ran into some trouble. I asked for help online.

The first answer I got was "You should just use PyInstaller for deployment"

The second answer was "You should use Docker for application deployment. Just start with

FROM python:3.11

and go from there"

The others agreed. I couldn't get through to them.

It's the perfect example of Docker users seeing Docker as the solution for everything, even when I was already using Docker (actually Podman).

I think in the long run, Docker has already caused, and will continue to cause, these problems:

Over-reliance on containerisation is slowly making build processes, dependencies, and deployment more brittle than necessary, because "it still works in Docker"

Over-reliance on containerisation is making the actual build process outside of a container or even in a container based on a different image more painful, as well as multi-stage build processes when dependencies want to be built in their own containers

Container specifications usually don't even take advantage of a known static build environment, for example by hard-coding a makefile, negating the savings in complexity

5 notes

Text

I don't always want to think about dev infrastructure but I do naturally hyperfocus on it every so often

#I now understand the basic concept of a docker build layer and dockerfile syntax.#also I didn't get like any sleep#next up monorepos for real this time

1 note

Text

Fixing Docker Run Issues Blocking GitHub Action PRs

github.com/All-Hands-AI/OpenHands/pull/8661

When a failing Docker command prevents a GitHub Action from completing on a pull request, check the command syntax (for example, --rm rather than the typo --rn, and a properly formatted -v host:container mount), review the GitHub Action logs for error details, test the command locally, enable debug logging, and verify all dependencies. Getting the setup right helps prevent workflow disruptions.
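One way to avoid typos like --rn is to build the `docker run` arguments in a single place and check the `-v` spec before running anything. A minimal Python sketch; the image name and paths are hypothetical, and this is not the fix from the linked PR:

```python
import shlex


def docker_run_args(image: str, host_dir: str, container_dir: str, command=None) -> list:
    """Build a `docker run` argument list with an auto-removed container and one bind mount."""
    # -v uses ':' to separate host path, container path and options,
    # so a stray ':' inside either path produces a malformed mount spec.
    if ":" in host_dir or ":" in container_dir:
        raise ValueError("paths must not contain ':' because -v uses it as a separator")
    args = ["docker", "run", "--rm", "-v", f"{host_dir}:{container_dir}", image]
    if command:
        args.extend(command)
    return args


# Print a shell-quoted command that can be tested locally before it goes into a workflow step.
print(shlex.join(docker_run_args("python:3.11", "/repo", "/workspace", ["ls", "/workspace"])))
```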

#actions#automation#build failures#CI/CD#container#de ops#debugging#deployment#docker#GitHub#pull request issues#troubleshooting#workflow

0 notes

Text

code repositories

#admins took away my access to the work git months ago and i still havent gotten it back#'you dont need access to the code youre just a qa' how do you expect me to qa if i cant build the project to run it. bastards#'you can use docker' 1. i didnt pay attention to those trainings so idk how that works 2. the code for that is also in the repository!!!!#so for now i have to rely on a dev to put a build on a test server which is annoying and slower than just building the fucking thing myself#thank god most of the projects are in the other repository that i do have access to. like at least just give me read-only permissions

0 notes

Text

📝 Guest Post: Local Agentic RAG with LangGraph and Llama 3*

New Post has been published on https://thedigitalinsider.com/guest-post-local-agentic-rag-with-langgraph-and-llama-3/

In this guest post, Stephen Batifol from Zilliz discusses how to build agents capable of tool-calling using LangGraph with Llama 3 and Milvus. Let’s dive in.

LLM agents use planning, memory, and tools to accomplish tasks. Here, we show how to build agents capable of tool-calling using LangGraph with Llama 3 and Milvus.

Agents can empower Llama 3 with important new capabilities. In particular, we will show how to give Llama 3 the ability to perform a web search and to call custom user-defined functions.

Tool-calling agents with LangGraph use two nodes: an LLM node decides which tool to invoke based on the user input. It outputs the tool name and tool arguments based on the input. The tool name and arguments are passed to a tool node, which calls the tool with the specified arguments and returns the result to the LLM.
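The two-node pattern can be sketched in plain Python without LangGraph. This is an illustration only: the tool names are hypothetical, and a keyword rule stands in for the LLM node so the sketch runs offline.

```python
from typing import Callable, Dict, Tuple

# Hypothetical tools; a real agent would call a search API or user code here.
TOOLS: Dict[str, Callable[..., str]] = {
    "web_search": lambda query: f"results for {query!r}",
    "add": lambda a, b: str(a + b),
}


def llm_node(user_input: str) -> Tuple[str, dict]:
    """Stand-in for the LLM node: choose a tool name and its arguments.

    A real LLM node would emit these as structured (e.g. JSON) output."""
    if user_input.startswith("add "):
        a, b = map(int, user_input.split()[1:3])
        return "add", {"a": a, "b": b}
    return "web_search", {"query": user_input}


def tool_node(tool_name: str, tool_args: dict) -> str:
    """Call the named tool with the given keyword arguments and return its result."""
    return TOOLS[tool_name](**tool_args)


def run_once(user_input: str) -> str:
    name, args = llm_node(user_input)
    # In LangGraph, this result would be passed back to the LLM node.
    return tool_node(name, args)
```

For example, `run_once("add 2 3")` routes to the `add` tool and returns `"5"`.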

Milvus Lite allows you to use Milvus locally without using Docker or Kubernetes. It will store the vectors you generate from the different websites we will navigate to.

Introduction to Agentic RAG

Language models can’t take actions themselves; they just output text. Agents are systems that use LLMs as reasoning engines to determine which actions to take and which inputs to pass to them. After the actions are executed, the results can be fed back into the LLM to determine whether more actions are needed or whether it is okay to finish.

They can be used to perform actions such as searching the web, browsing your emails, correcting RAG by adding self-reflection or self-grading of retrieved documents, and much more.

Setting things up

LangGraph – An extension of LangChain aimed at building robust and stateful multi-actor applications with LLMs by modeling steps as edges and nodes in a graph.

Ollama & Llama 3 – With Ollama you can run open-source large language models locally, such as Llama 3. This allows you to work with these models on your own terms, without the need for constant internet connectivity or reliance on external servers.

Milvus Lite – A local version of Milvus that can run on your laptop, in a Jupyter Notebook, or in Google Colab. We use this vector database to store and retrieve data efficiently.

Using LangGraph and Milvus

We use LangGraph to build a custom local Llama 3-powered RAG agent that combines several approaches, each implemented as a control flow in LangGraph:

Routing (Adaptive RAG) – Allows the agent to intelligently route user queries to the most suitable retrieval method based on the question itself. The LLM node analyzes the query, and based on keywords or question structure, it can route it to specific retrieval nodes.
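A minimal routing sketch, assuming hypothetical node names `retrieve` and `generate`. In the post the routing decision comes from the LLM node; the keyword check below is only a stand-in that makes the control flow visible.

```python
# Question openings treated as "factual" by this toy router (an assumption, not from the post).
FACTUAL_CUES = ("who", "what", "when", "where", "which", "how many")


def route_question(question: str) -> str:
    """Return the name of the next node in the graph.

    'retrieve' sends factual questions to the Milvus-backed retrieval node;
    'generate' sends open-ended prompts straight to the LLM."""
    q = question.strip().lower()
    if q.startswith(FACTUAL_CUES):
        return "retrieve"
    return "generate"
```

In LangGraph, a function like this would be attached as a conditional edge, with its return value mapped to the next node.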

Example 1: Questions requiring factual answers might be routed to a document retrieval node searching a pre-indexed knowledge base (powered by Milvus).

Example 2: Open-ended, creative prompts might be directed to the LLM for generation tasks.

Fallback (Corrective RAG) – Ensures the agent has a backup plan if its initial retrieval methods fail to provide relevant results. Suppose the initial retrieval nodes (e.g., document retrieval from the knowledge base) don’t return satisfactory answers (based on relevance score or confidence thresholds). In that case, the agent falls back to a web search node.

The web search node can utilize external search APIs.
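The fallback decision can be sketched as a threshold over relevance scores. The 0.5 cut-off and node names are assumptions for illustration; the post's graph uses an LLM grader rather than a numeric score.

```python
from typing import List, Tuple

RELEVANCE_THRESHOLD = 0.5  # assumed cut-off; tune for your retriever


def decide_after_retrieval(scored_docs: List[Tuple[str, float]],
                           threshold: float = RELEVANCE_THRESHOLD) -> Tuple[str, List[str]]:
    """Keep documents whose relevance score clears the threshold.

    Returns ('generate', kept_docs) if anything relevant survived,
    otherwise ('web_search', []) so the graph falls back to web search."""
    kept = [doc for doc, score in scored_docs if score >= threshold]
    if kept:
        return "generate", kept
    return "web_search", []
```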

Self-correction (Self-RAG) – Enables the agent to identify and fix its own errors or misleading outputs. The LLM node generates an answer, and then it’s routed to another node for evaluation. This evaluation node can use various techniques:

Reflection: The agent can check its answer against the original query to see if it addresses all aspects.

Confidence Score Analysis: The LLM can assign a confidence score to its answer. If the score is below a certain threshold, the answer is routed back to the LLM for revision.
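The confidence-score loop can be sketched as follows. `generate` is a hypothetical stand-in for the LLM node that returns an answer and a self-reported confidence; the threshold and attempt limit are assumptions.

```python
from typing import Callable, Tuple


def answer_with_revision(question: str,
                         generate: Callable[[str, int], Tuple[str, float]],
                         threshold: float = 0.7,
                         max_attempts: int = 3) -> Tuple[str, float, int]:
    """Regenerate until the self-reported confidence reaches `threshold`.

    `generate(question, attempt)` returns (answer, confidence).
    max_attempts bounds the loop so a model that never becomes
    confident cannot cycle forever."""
    answer, confidence = generate(question, 0)
    attempt = 1
    while confidence < threshold and attempt < max_attempts:
        answer, confidence = generate(question, attempt)
        attempt += 1
    return answer, confidence, attempt
```

Bounding the number of attempts matters in a graph with a loop back to the LLM node: without it, a low-confidence answer could be revised indefinitely.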

General ideas for Agents

Reflection – The self-correction mechanism is a form of reflection where the LangGraph agent reflects on its retrieval and generations. It loops information back for evaluation and allows the agent to exhibit a form of rudimentary reflection, improving its output quality over time.

Planning – The control flow laid out in the graph is a form of planning: the agent doesn’t just react to the query; it lays out a step-by-step process to retrieve or generate the best answer.

Tool use – The LangGraph agent’s control flow incorporates specific nodes for various tools. These can include retrieval nodes for the knowledge base (e.g., Milvus), demonstrating its ability to tap into a vast pool of information, and web search nodes for external information.

Examples of Agents

To showcase the capabilities of our LLM agents, let’s look into two key components: the Hallucination Grader and the Answer Grader. While the full code is available at the bottom of this post, these snippets will provide a better understanding of how these agents work within the LangChain framework.

Hallucination Grader

The Hallucination Grader tries to fix a common challenge with LLMs: hallucinations, where the model generates answers that sound plausible but lack factual grounding. This agent acts as a fact-checker, assessing if the LLM’s answer aligns with a provided set of documents retrieved from Milvus.

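```

### Hallucination Grader

from langchain_community.chat_models import ChatOllama
from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import PromptTemplate

local_llm = "llama3"  # assumption: the Ollama model tag set earlier in the full code

# LLM
llm = ChatOllama(model=local_llm, format="json", temperature=0)

# Prompt: {documents} and {generation} are filled in at invoke time
prompt = PromptTemplate(
    template="""You are a grader assessing whether
    an answer is grounded in / supported by a set of facts. Give a binary 'yes' or 'no' score to indicate
    whether the answer is grounded in / supported by a set of facts. Provide the binary score as a JSON with a
    single key 'score' and no preamble or explanation.
    Here are the facts:
    {documents}
    Here is the answer:
    {generation}
    """,
    input_variables=["generation", "documents"],
)

hallucination_grader = prompt | llm | JsonOutputParser()

# `docs` and `generation` come from the retrieval and generation steps in the full code
hallucination_grader.invoke({"documents": docs, "generation": generation})

```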

Answer Grader

Following the Hallucination Grader, another agent steps in. This agent checks another crucial aspect: ensuring the LLM’s answer directly addresses the user’s original question. It utilizes the same LLM but with a different prompt, specifically designed to evaluate the answer’s relevance to the question.

```

def grade_generation_v_documents_and_question(state):
    """
    Determines whether the generation is grounded in the documents and answers the question.

    Args:
        state (dict): The current graph state

    Returns:
        str: Decision for next node to call
    """
    print("---CHECK HALLUCINATIONS---")
    question = state["question"]
    documents = state["documents"]
    generation = state["generation"]

    score = hallucination_grader.invoke({"documents": documents, "generation": generation})
    grade = score["score"]

    # Check hallucination
    if grade == "yes":
        print("---DECISION: GENERATION IS GROUNDED IN DOCUMENTS---")
        # Check question-answering (answer_grader is built like hallucination_grader, with its own prompt)
        print("---GRADE GENERATION vs QUESTION---")
        score = answer_grader.invoke({"question": question, "generation": generation})
        grade = score["score"]
        if grade == "yes":
            print("---DECISION: GENERATION ADDRESSES QUESTION---")
            return "useful"
        else:
            print("---DECISION: GENERATION DOES NOT ADDRESS QUESTION---")
            return "not useful"
    else:
        print("---DECISION: GENERATION IS NOT GROUNDED IN DOCUMENTS, RE-TRY---")
        return "not supported"

```

In the code above, the LLM acts as a binary classifier: the function checks its 'yes'/'no' predictions and returns the name of the next step for the graph.

Compiling the LangGraph graph

This will compile all the agents that we defined and will make it possible to use different tools for your RAG system.

```

# Compile (`workflow` is the StateGraph whose nodes and edges are defined in the full code)
app = workflow.compile()

# Test
from pprint import pprint

inputs = {"question": "Who are the Bears expected to draft first in the NFL draft?"}
for output in app.stream(inputs):
    for key, value in output.items():
        pprint(f"Finished running: {key}:")
pprint(value["generation"])

```

Conclusion

In this blog post, we showed how to build a RAG system using agents with LangChain/LangGraph, Llama 3, and Milvus. These agents give LLMs planning, memory, and tool-use capabilities, which can lead to more robust and informative responses.

Feel free to check out the code available in the Milvus Bootcamp repository.

If you enjoyed this blog post, consider giving us a star on GitHub, and share your experiences with the community by joining our Discord.

This post is inspired by Meta's GitHub repository of recipes for using Llama 3.

*This post was written by Stephen Batifol and originally published on Zilliz.com here. We thank Zilliz for their insights and ongoing support of TheSequence.

#ADD#agent#agents#amp#Analysis#APIs#app#applications#approach#backup#bears#binary#Blog#bootcamp#Building#challenge#code#CoLab#Community#connectivity#data#Database#Docker#emails#engines#explanation#extension#Facts#form#framework

0 notes

Text

Docker Multi-Stage Builds

Multi-stage builds in Docker allow you to create a Dockerfile with multiple build stages, enabling you to build and optimize your final image more efficiently. It’s particularly useful for reducing the size of the final image by separating the build environment from the runtime environment. Here’s an example of a Dockerfile utilizing multi-stage builds for a Node.js application using…
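The post's own example is cut off above. As a hedged illustration only, the Python snippet below holds a hypothetical two-stage Dockerfile for a Node.js app (image tags, paths, and the `build` script are assumptions) and lists its stages. The key idea is that the final `runtime` stage installs only production dependencies and copies just the built output out of the `build` stage with `COPY --from`.

```python
import re

# Hypothetical two-stage Dockerfile: the toolchain and dev dependencies stay in
# the `build` stage; only the compiled output reaches the final image.
DOCKERFILE = """\
FROM node:20 AS build
WORKDIR /app
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

FROM node:20-slim AS runtime
WORKDIR /app
COPY package*.json ./
RUN npm ci --omit=dev
COPY --from=build /app/dist ./dist
CMD ["node", "dist/index.js"]
"""


def stages(dockerfile: str) -> list:
    """Return the stage names declared with `FROM <image> AS <name>`, in order."""
    return re.findall(r"^FROM\s+\S+\s+AS\s+(\S+)", dockerfile, flags=re.MULTILINE | re.IGNORECASE)


def copied_from(dockerfile: str) -> set:
    """Return the stages that later stages copy artifacts out of."""
    return set(re.findall(r"^COPY\s+--from=(\S+)", dockerfile, flags=re.MULTILINE))
```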

0 notes

Text

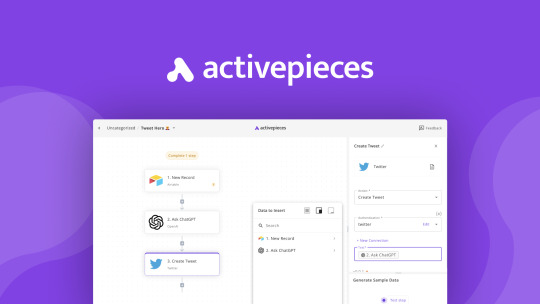

Activepieces: 10 Best Reasons to Choose This No-Code Wonder

Activepieces is a no-code alternative to Zapier. It helps you automate tasks and create content without writing any code, and it makes your work more efficient. Try it right now! Are you sick of running your business like it's rocket science? You probably feel bad when you need someone who knows how to code to fix your computers. Hiring someone with complete knowledge of coding for your website or business is a…

#activepieces#activepieces 10 best reasons choose this no code wonder#activepieces best appsumo lifetime deal#activepieces create automations 100 apps#activepieces docker#activepieces funding#activepieces github#activepieces open source no code#activepieces price#activepieces pricing plans#activepieces review#building workflow activepieces#connecting with multiple apps activepieces#integration Chatgpt activepieces#why should I choose activepieces?

0 notes

Text

I need a damn raise

#My current task forces me to work on a shitty laptop our client provides us and not my own#And it's just so fucking infuriating to work on#I'm a software dev and I work to develop THEIR software and they give me a shitty 10gb ram laptop from fucking 2010#That can BARELY run docker and uses fucking decades to load anything#You run the project and it spends 10 minutes to tell you there's an error#You fix the error and it spends 10mins building to tell you it didn't fix it#Debugging is a fucking NIGHTMARE#Hate this fucking laptop it's fucking impossible to work on and I want nothing more than to throw it out the goddamn windows#Window*#Been good w my job for a while bc I haven't had to use it#But now I'm back here and I need more money if I'm gonna deal with this piece of trash laptop#Sorry just venting I have a lot of feelings about this

0 notes

Note

Is the belief at all valid that ultimately there is nothing much we in the imperial core can do for the global south (i.e palestine) and that liberation is largely in their hands only? Was there any time historically where that wasn't the case?

Maybe I am just doom and glooming but it really doesn't feel like there is much we can affect (though I still attend protest and do whatever my party tells me to, I don't air out these thoughts because I don't think they are productive)

Primarily, I feel like building a base here for when shit goes south is the only thing we can do

My friend, we can't forget that, while imperialism is committed outside of our reach, it is fueled, supported, and justified in our countries. National liberation movements fight in their own frontlines, and we fight in the rearguard. If you have the impression that any real progress is impossible from our position, that is a product of the very limited development of the subjective conditions in your country. You and I have seen a myriad of protests and encampments this last year, which have had overwhelmingly no material effects on the genocide, but this is not inescapable.

In Greece, where the KKE is a legitimate communist party in the eyes of a significant portion of the Greek working class, their organization in and out of the workplace is very capable. On the 17th of October, co-organizing with the relevant union and other entities, they blocked a shipment of bullets to Israel (a small note, because when this happened some Tumblr users misspoke: this action would have been impossible without the help and involvement of the KKE; take a look at the US to see what trade unions do without communist influence):

And merely a week ago, they blocked another shipment of ammunition meant to further fuel the imperialist war in Ukraine:

The differentiating factor in Greece, arguably not present anywhere else in Europe or North America, is their strong and established communist party; even its mere presence exerts an indirect influence on the broader working class, communist or not.

So are the rest of us meant to sit in our milquetoast protests and watch on with envy at the Greeks? No, because these are subjective conditions, and we have control over them. Even if most actions we do don't achieve anything materially, we gain experience, and the base for a proper organization of our class is built up. It's not just building that base for when something goes wrong in our countries, it's building a better base for the very next mobilization, the next action, the next imperialist aggression. The student movement of the imperial core is better off now in terms of lessons to be learned after the encampments than if they hadn't done anything (and the utility of the encampments wasn't completely null anyway, some unis in Spain have ceased all economic and academic relations with Israel).

204 notes

Text

At the harbour there’s noise everywhere — hurried rushes of footsteps, snatches of conversation, the voices of street-sellers rising above the everyday din with cries of “Fresh whelks! Fresh whel—”, “Apples and pears! Fresh today!”, “Roses, sir, roses for your Mis—!”. Along the quayside cargo masters bark instructions to their men, and crates clatter earthward from the decks or are borne aloft on the shoulders of brawny dockers. Beneath it all is the sound of the shipyard, a constant beat of hammers that Kit can feel in his chest.

Kit pushes on through the crowds, buffeted along by the busy current of fellow humanity. He wishes dearly for the open fields or leafy avenues of Brindleton. There the air is sweet, not thick with the salty seaweed taste, the people don’t rush, don’t crowd together, shout, or jostle.

A journey of bumping shoulders and muttered apologies washes him up on the doorstep of The Lermond’s Cove company, as the modest brass plate beside the door proclaims. The building is smaller than the grand shipping offices, tucked on the end of the harbour frontage, but it’s smart enough, and offers welcome shelter from the bustle outside. A small bell rings above the door as Kit makes his way inside.

“Hello, sir.” The young woman greeting him sits behind a solitary desk, a large ledger arrayed in front of her. The frugality of the outside of the building is continued on the inside, with the only ornaments to the small room besides its occupant being a few framed charts and maps. The whole arrangement gives the impression of being newly established. “How can I help you?”

“I, er, have an appointment with Mr Allen,” Kit says, suddenly abashed.

After checking an entry in the ledger, the young woman gestures down the hallway.

“It’s the first door on the left, sir.”

Making his way to the indicated door, Kit hesitates a second before knocking. He can hardly turn back now, with the secretary watching in the entryway.

His knock is answered by a curt “Enter.”

The man behind the desk rises to greet Kit, extending a hand over the tabletop. He’s smartly dressed, in a well-made suit of the latest fashion. The clothes look new — too new, perhaps. The thick callouses beneath Kit’s hand betray the lifetime of hard work that the suit tries hard to erase.

“Fred Allen,” the man says, by way of introduction. Releasing Kit’s hand, he gestures to the chair on the opposite side of the desk. “You must be Calloway.”

“That’s right, sir. As I said in my letter, Mr Miller up in Brindleton heard you might have opportunities going for someone willing to sell their crop.”

“Well, he heard correctly, I guess, though I have to say I wasn’t expecting anyone round here so soon. How’s about you tell me what setup you’ve got going, and then I’ll think about it?” says Allen.

“I’ve got about two-hundred acres just outside Brindleton, wheat and potatoes mainly. Only took over two years ago, but the last two harvests have done well.” Kit picks at a loose thread at the edge of his jacket, wishing he hadn’t done his collar up so tightly.

“You got any hands, or is it a one man show?” Allen asks as he sifts through a stack of papers, running a finger down a column of figures.

“Just me at the moment, sir, but some of the local lads help out around harvest. There’s room for expansion, though, if we come to an agreement.”

“Hm.” Allen seems to be considering, rubbing a large hand across his coarse chin. The more Kit looks at him, the more he struggles to see the businessman through the farmer — or is it sailor? At any rate, Allen’s tanned skin and deep crow’s feet speak of a life that, until recently, was spent working out of doors. The tailored clothes seem almost like a costume. It’s reassuring, perhaps, to know that Allen would understand something of the toil put into producing the crop.

Eventually Allen reaches the end of his deliberations with a great sigh.

“Look, son, I won’t pretend this isn’t somewhat of a cowboy venture, and that I haven’t got as much capital to be free with as certain larger companies. But I think we understand each other, and on account of your being the first to come and see me, I’m willing to give you an offer. I’ll take half your next wheat harvest, and I’ll give you two dollars a bushel if you’re willing to shake on it now.”

“I’m more than willing, sir, thank you,” Kit says. There’s a weight that’s lifted from his shoulders with Allen’s words, the anxious knot in his stomach loosening a little. Somehow, he’s managed to grab hold of the life ring thrown to him, and for a minute the hard work of hauling to shore can be forgotten.

Arriving home that night, dusty from the road, Kit feels lighter than he has done in months. For once he looks at the farm and sees it as something beautiful, rather than a never-ending source of work. There’s a little moonlight dappling through the trees, outlining the farmhouse against the night sky behind it.

For a moment, he leans against the fence of the cow-pen, taking slow lungfuls of the cool night air. Then he turns towards the house, and the faint glow behind the front door that draws his weary feet over the threshold.

Meg’s standing at the kitchen table, placing the finishing touches on a freshly baked cake. From the untidy tendrils of hair she keeps trying to blow from her face and the flour down her apron, it’s been a hard-fought battle with the sponge. The weak firelight from the stove behind her casts her in a rosy glow, and oh, it’s enough to knock the air from Kit’s chest.

“You’re up late,” he murmurs, giving in to the urge to take her in his arms. Her body is warm against his, and she smells slightly of strawberry jam.

“I had to remake the sponge,” Meg sighs, finally pushing the finished cake away and leaning into his touch. “And I split the cream. It’s all a horrible mess.”

“Well hang the cake then, because I’ve got something that’ll cheer you up.” Gently Kit spins her round to face him, pulling her close.

“I take it your meeting went well?” She smiles.

“I think so. He’ll take half of next year’s wheat, and for a good price as well.”

“Oh, you wonderful man,” Meg says softly.

Kit’s reply is to lean down and kiss her. Even though he’s only been gone a day, it feels like he’s waited months for that kiss, for Meg’s hands on his shoulders and lips on his. Without thinking, he lifts her onto the table, hands finding her waist and hair.

“Christopher James Calloway, if you want to carry on with this nonsense then you will unhand me and let me clear up before we go upstairs!” Meg pulls away, trying to sound cross, but the barely concealed laughter rather ruins the effect. “I love you very much, but I will not ruin this cake for you.”

“Consider me told,” Kit laughs.

#ts4 decades challenge#decades challenge#historical simblr#ts4 historical#ts4#sims 4#simblr#sims story#ts4 legacy#calloways#calloways 1890s#kit calloway#fred allen#meg calloway#we back baby!#(sporadically because uni is busy but it's something)#being busy is certainly helping me to be less of a perfectionist#and just accept that more often than not the post won't come out exactly how it is in my head

63 notes

Text

Pinned Post*

Layover Linux is my hobby project, where I'm building a packaging ecosystem from first principles and making a distro out of it. It's going slow thanks to a full time job (and... other reasons), but the design is solid, so I suppose if I plug away at this long enough, I'll eventually have something I can dogfood in my own life, for everything from gaming to web services to low-power "Linux but no package manager" devices.

Layover is the package manager at the heart of Layover Linux. It's designed around hermetic builds, atomically replacing the running system, cross compilation, and swearing at the Rust compiler. It's happy to run entirely in single-user mode on other distros, and is based around configuration, not mutation.

We all deserve reproducible builds. We all deserve configuration-based operating systems. We all deserve the simple safety of atomic updates. And gosh darn it, we deserve those things to be easy. I'm making the OS that I want to use, and I hope you'll want to use it too.

*if you want to get pinned and live in the PNW, I'm accepting DMs but have limited availability. Thanks 😊💜

Thanks to @docker-official for persuading me to pull the trigger on making an official blog. Much love to @jennihurtz, the supreme and shining jewel in my life and the heart beating in twin with mine. I also owe @k-simplex thanks for her support, which has carried me a few times in the last couple years.

44 notes

Text

Man goes to the doctor. Says he's frustrated. Says his Python experience seems complicated and confusing. Says he feels there are too many environment and package management system options and he doesn't know what to do.

Doctor says, "Treatment is simple. Just use Poetry + pyenv, which combines the benefits of conda, venv, pip, and virtualenv. But remember, after setting up your environment, you'll need to install build essentials, which aren't included out-of-the-box. So, upgrade pip, setuptools, and wheel immediately. Then, you'll want to manage your dependencies with a pyproject.toml file.

"Of course, Poetry handles dependencies, but you may need to adjust your PATH and activate pyenv every time you start a new session. And don't forget about locking your versions to avoid conflicts! And for data science, you might still need conda for some specific packages.

"Also, make sure to use pipx for installing CLI tools globally, but isolate them from your project's environment. And if you're deploying, Dockerize your app to ensure consistency across different machines. Just be cautious about Docker’s compatibility with M1 chips.

"Oh, and when working with Jupyter Notebooks, remember to install ipykernel within your virtual environment to register your kernel. But for automated testing, you should...

76 notes

Text

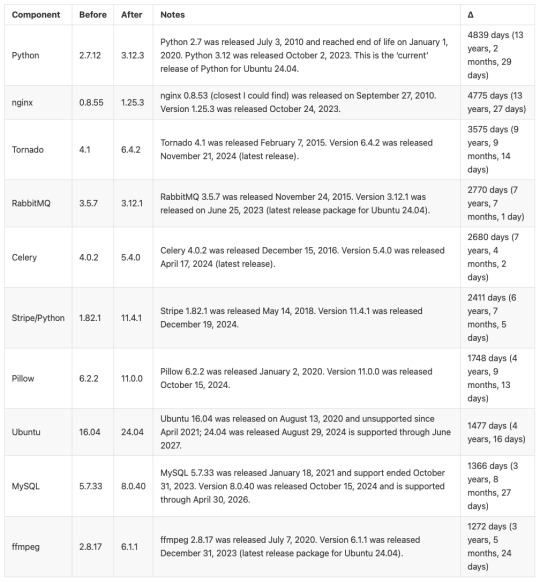

BRB... just upgrading Python

CW: nerdy, technical details.

Originally, MLTSHP (well, MLKSHK back then) was developed for Python 2. That was fine for 2010, but 15 years later, Python 2 is pretty ancient and unsupported. January 1st, 2020 was the official sunset for Python 2, and 5 years later, we’re still running things with it. It’s served us well, but we have to transition to Python 3.

Well, I bit the bullet and started working on that in earnest in 2023. The end of that work resulted in a working version of MLTSHP on Python 3. So, just ship it, right? Well, the upgrade process basically required upgrading all Python dependencies as well. And some (flyingcow, torndb, in particular) were never really official, public packages, so those had to be adopted into MLTSHP and upgraded as well. With all those changes, it required some special handling. Namely, setting up an additional web server that could be tested against the production database (unit tests can only go so far).

Here’s what that change comprised: 148 files changed, 1923 insertions, 1725 deletions. Most of those changes were part of the first commit for this branch, made on July 9, 2023 (118 files changed).

But by the end of that July, I took a break from this task - I could tell it wasn’t something I could tackle in my spare time at that time.

Time passes…

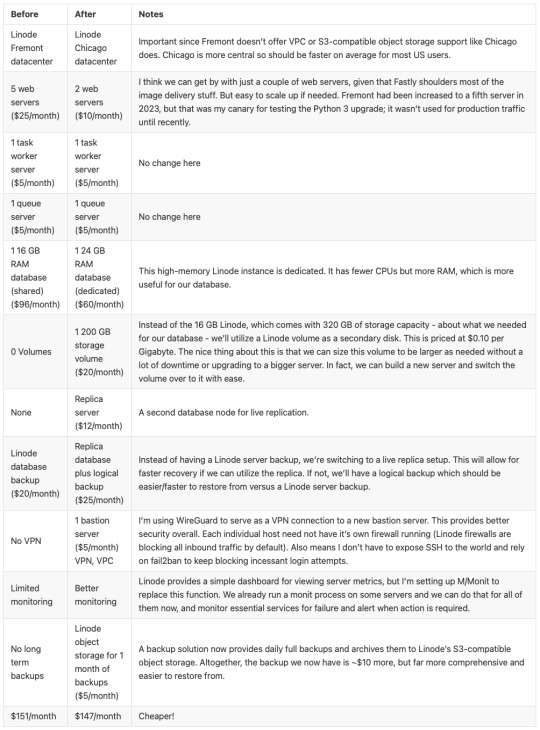

Fast forward to late 2024, and I take some time to revisit the Python 3 release work. Making a production web server for the new Python 3 instance was another big update, since I wanted the Docker container OS to be on the latest LTS edition of Ubuntu. For 2023, that was 20.04, but in 2025, it’s 24.04. I also wanted others to be able to test the server, which means the CDN layer would have to be updated to direct traffic to the test server (without affecting general traffic); I went with a client-side cookie that could target the Python 3 canary instance.
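In the real setup this decision lives at the CDN edge (Fastly), not in Python; the sketch below only illustrates the cookie-based canary logic. The cookie name, its value, and the backend names are hypothetical, since the post does not give them.

```python
from http.cookies import SimpleCookie

# Hypothetical names: the post does not say what the cookie or backends are called.
CANARY_COOKIE = "py3_canary"
BACKENDS = {"default": "web-py2", "canary": "web-py3"}


def pick_backend(cookie_header: str) -> str:
    """Send requests carrying the opt-in cookie to the canary; everyone else to default."""
    cookies = SimpleCookie()
    cookies.load(cookie_header or "")
    if CANARY_COOKIE in cookies and cookies[CANARY_COOKIE].value == "1":
        return BACKENDS["canary"]
    return BACKENDS["default"]
```

Because the cookie is opt-in, general traffic keeps hitting the existing servers while testers see the Python 3 instance.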

In addition to these upgrades, there were others to consider — MySQL, for one. We’ve been running MySQL 5, but version 9 is out. We settled on version 8 for now, but could also upgrade to 8.4… 8.0 is just the version you get for Ubuntu 24.04. RabbitMQ was another server component that was getting behind (3.5.7), so upgrading it to 3.12.1 (latest version for Ubuntu 24.04) seemed proper.

One more thing - our datacenter. We’ve been using Linode’s Fremont region since 2017. It’s been fine, but there are some emerging Linode features that I’ve been wanting. VPC support, for one. And object storage (basically the same as Amazon’s S3, but local, so no egress cost to-from Linode servers). Both were unavailable in Fremont, so I decided to go with their Chicago region for the upgrade.

Now we’re talking… this is no longer just a “push a button” release, but a full-fledged, build-everything-up-and-tear-everything-down kind of release that might actually have some downtime (while trying to keep it short)!

I built a release plan document and worked through it. The key to the smooth upgrade I wanted was making the cutover as seamless as possible. Picture it: once everything is set up for the new service in Chicago (new database host, new web servers and all), what do we need to do to make the switch almost instant? It’s Fastly, our CDN service.

All traffic to our service runs through Fastly. A request to the site comes in, Fastly routes it to the appropriate host, which in turn speaks to the appropriate database. So, to transition from one datacenter to the other, we basically need to change the hosts Fastly speaks to. Those hosts will already be set to talk to the new database. But that’s a key wrinkle - the new database…

The new database needs the data from the old database. And for a seamless transition, it needs to stay in step with the old database, up to the second. To do that, we have to take a copy of the production data and get it running on the new database. Then we need some process that copies over any data written since the last sync. This sounded a lot like replication to me, but the more I looked at doing it that way, the less confident I was that I could set it up without bringing the production server down. That's because any replica needs to start in a synchronized state, and that's hard to achieve with a live database. So instead, I created my own sync process that would copy new data periodically as it came in.
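The post doesn't describe how the custom sync process works, but the "copy whatever is new since the last sync" idea is often done with a high-water mark. The sketch below assumes each table has an auto-increment integer `id` and that rows are only inserted, never updated or deleted. It uses `sqlite3` connections as stand-ins for the old and new MySQL servers so it runs on its own; the function name is hypothetical.

```python
import sqlite3


def sync_new_rows(source: sqlite3.Connection, target: sqlite3.Connection, table: str) -> int:
    """Copy rows whose id is above the target's current maximum id; return how many were copied.

    Assumptions: `table` has an auto-increment integer primary key `id`, rows are
    insert-only, and `table` is a trusted name (it is interpolated into the SQL directly).
    """
    # The highest id already present on the target is the high-water mark.
    (high_water,) = target.execute(f"SELECT COALESCE(MAX(id), 0) FROM {table}").fetchone()
    rows = source.execute(
        f"SELECT * FROM {table} WHERE id > ? ORDER BY id", (high_water,)
    ).fetchall()
    if rows:
        placeholders = ", ".join("?" for _ in rows[0])
        target.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
        target.commit()
    return len(rows)
```

Run on a short interval, each pass only moves the rows written since the previous one, so the final sync just before cutover is small and fast. The trade-off is that updates and deletes to existing rows are invisible to this approach; those would need timestamps, triggers, or a final full comparison.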

Beyond this, we needed proper replication running in the new datacenter. If the database server goes away unexpectedly, a replica allows for faster recovery and some peace of mind. Logical backups can be made from the replica and stored in Linode's object storage in case something really disastrous happens (like tables getting deleted by an intruder or a bad data migration).

I wanted better monitoring, too. We've been using Linode's Longview service, which is okay and free, but it doesn't act on anything that might be going wrong. I decided to license M/Monit for this. M/Monit is lightweight and nice to work with, and it pairs with Monit running on each server to keep track of every service we need to keep things running. Monit can be given instructions on how to self-heal certain things, and it also sends alerts if something needs manual attention.
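The "self-heal first, alert a human if that fails" behavior can be modeled as a tiny state machine. This is not Monit's configuration language or its internals, just the decision logic expressed in Python; the class name and the restart threshold of 3 are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServiceWatch:
    """Hypothetical model of a Monit-style policy for one service."""

    name: str
    max_restarts: int = 3  # assumption: escalate after this many restarts in a row
    restarts: int = 0

    def on_check(self, healthy: bool) -> str:
        """Return the action for one monitoring cycle: "ok", "restart", or "alert"."""
        if healthy:
            self.restarts = 0  # a healthy check resets the escalation counter
            return "ok"
        if self.restarts < self.max_restarts:
            self.restarts += 1
            return "restart"  # try to self-heal
        return "alert"  # restarts aren't helping; needs manual attention
```

The counter is what separates a service that briefly crashed (one restart, back to "ok") from one that is genuinely broken and should page someone.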

And finally, Linode's Chicago region supports a proper VPC setup, which keeps all the connectivity between our servers private to their own subnet. It also meant I could set up an additional small Linode instance to serve as a bastion host: a server used as the secure entry point for reaching the other servers on the private subnet. This is a lot more secure than before. We've never had a breach (at least, not to my knowledge), and this makes one even less likely going forward. Remote SSH access now has to go through the bastion server, so we don't have to expose our other servers to potential future SSH vulnerabilities.
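With OpenSSH, a common way to reach hosts behind a bastion is the `ProxyJump` client option, so that `ssh db1` transparently hops through the bastion first. The post doesn't say how access is configured, so this is only a sketch: it generates an `~/.ssh/config` snippet, and the function name, host aliases, user, and private IPs are all hypothetical.

```python
def ssh_config(bastion_host: str, user: str, private_hosts: dict) -> str:
    """Build an ssh_config snippet where every private host is reached via the bastion.

    `private_hosts` maps a hypothetical alias (e.g. "db1") to its private subnet address.
    """
    lines = [
        "Host bastion",
        f"    HostName {bastion_host}",
        f"    User {user}",
        "",
    ]
    for alias, private_ip in private_hosts.items():
        lines += [
            f"Host {alias}",
            f"    HostName {private_ip}",
            f"    User {user}",
            "    ProxyJump bastion",  # hop through the bastion; only it is publicly reachable
            "",
        ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(ssh_config("bastion.example.com", "deploy", {"db1": "10.0.0.5", "web1": "10.0.0.10"}))
```

Only the bastion needs a public SSH port; everything else listens on the private subnet.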

So, to summarize: the MLTSHP Python 3 upgrade grew from a code release to a full stack upgrade, involving touching just about every layer of the backend of MLTSHP.

Here’s a before / after picture of some of the bigger software updates applied (apologies for using images for these tables, but Tumblr doesn’t do tables):

And a summary of infrastructure updates:

I'm pretty happy with how this has turned out, and I learned a lot. I'm a full-stack developer, so I'm familiar with a lot of devops concepts, but actually doing that role is newish to me. I got to learn how to set up a proper private subnet for our set of hosts, making them more secure than before. I learned more about Fastly configuration, about WireGuard, about MySQL replication, and about deploying a large update to a live site with little to no downtime. A lot of that is due to meticulous release planning and careful execution. The secret to that is to think through each and every step, no matter how small. Document it, and consider the side effects of each step. And for every step that could affect the public service, work out the rollback process, just in case it's needed.

At this time, the server migration is complete and things are running smoothly. Hopefully we won’t need to do everything at once again, but we have a recipe if it comes to that.


June 2025

I'm Promoting Myself to Senior Developer

I maintain a website. As far as I know, I'm part of the third generation of maintainers. And at least between the first group and the second, there was practically no handover. In other words: the website's code is a huge mess.

Now I was assigned to implement a fairly large new feature that is supposed to reuse almost all of the website's more complex systems. Just imagining it was no fun. The reality was even worse.

At first, while implementing the feature, I did a large share of the changes and the explaining with Gemini 2.5 Flash. I copied files or sections of the code directly into the LLM and then asked questions about them or tried to understand how all the components fit together. That only worked moderately well.

Earlier this year (February 2025), I heard about a trend called vibe coding and the development environment that goes with it, Cursor. The idea is that you no longer touch a single line of code and simply tell the AI what to do. Out of low motivation and sheer defiance, I decided to try it on the website too. And I'm glad I did.

Cursor is a development environment that lets a large language model make changes to a codebase locally on your machine. In its Agent Mode, where the AI is allowed to carry out several actions in a row, I implemented one feature after another.

The feature I had painstakingly implemented by hand in about 9 hours of work, it was able to replicate in about 10 minutes without much guidance. Although "without guidance" is a bit of a lie, because by then I already knew which files you have to touch to implement the feature. That was very impressive. But what topped it was the ability to give the LLM access to the console.

The website has a build script that you have to run to build the Docker container that then runs the website. I explained to the LLM how to use the script and then gave it permission to run commands on the command line without asking. As a result, the LLM runs the build script on its own whenever it thinks it has finished implementing everything.

The workflow then looked like this: I gave it a task, and the LLM tried to implement the feature, triggered the build, noticed that what it had written threw errors, fixed the errors, and triggered the build again, repeating until either the soft limit of 25 consecutive actions was reached or the build succeeded. All I did was look in the browser to see how it turned out, describe the next change, and kick off the whole thing again.
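The build-fix cycle described above can be modeled as a simple loop with an action budget. This is a rough sketch of the workflow, not how Cursor is actually implemented: `run_build` and `attempt_fix` are hypothetical callables, and counting each build and each fix as one action is an assumption. Only the 25-action soft limit comes from the post.

```python
from typing import Callable, List

SOFT_LIMIT = 25  # the soft limit on consecutive actions mentioned in the post


def agent_loop(
    run_build: Callable[[], List[str]],
    attempt_fix: Callable[[List[str]], None],
    limit: int = SOFT_LIMIT,
) -> bool:
    """Alternate build and fix steps until a build is clean or the action budget runs out.

    Each build and each fix counts as one action. Returns True if a build succeeded.
    """
    actions = 0
    while actions < limit:
        errors = run_build()  # e.g. run the site's build script and collect its errors
        actions += 1
        if not errors:
            return True
        if actions >= limit:
            break
        attempt_fix(errors)  # the model edits the code based on the error output
        actions += 1
    return False
```

When the loop returns, the human's job is the part the post highlights: look at the result, read the code, and decide what to ask for next.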

What I found most interesting overall, though, is that I suddenly no longer had the role of a junior developer, but rather the one that falls to senior developers: reading code, understanding it, and then critiquing it.

(Konstantin Passig)


bold what applies to your muse; italicize what sometimes applies; strike what never applies.

► AESTHETIC – dark colors. bright colors. neon colors. soft colors. blood. forests. space. mansions. ghosts. asylums. wastelands. fire. injuries. hands. dolls. fog. storms. galaxies. snow. dawn. midnight. cold. animals. sharp teeth. neck. shoulders. bruises. freckles. legs. feminine. masculine. burns. weapons. colorful hair. witchcraft. lips. webs. fields. corn fields. tears. sweat. glitter. flowers. plants. magic. fear. pain. murder. guns. scars. missing posters. old paintings. strange eyes. explosions. creatures. lingerie. kissing. playfulness. metal. diamonds. rust. iron. stealth. running away. steel. glass. wood. porcelain. paper. fur. lace. leather. synthetics. robots. droids. monsters. childhood fears. cigarettes. alcohol. cameras. video cameras. polaroid cameras. phones. computers. war. peace. angels. demons. decay. sadness. red lipstick. powder puffs. abandoned cars. skeletons. strangling. overcoats. puppets. torture. ptsd. insomnia. old cottages. loyalty. hospitals. syringes. bared teeth. scary basements. butterflies. prosthetic limbs. cats. dogs. dreams. burned-out buildings. armor.

► APPEARANCE – thick waist. narrow waist. narrow hips. average hips. wide hips. curvy frame. muscular frame. chubby frame. petite frame. lanky frame. voluptuous frame. lean frame. skinny. long legs. stumpy. average legs. thick thighs. muscular thighs. toned thighs. slender thighs. beer belly. toned stomach. flat stomach. feminine frame. masculine frame. six pack. harsh facial features. baby face. shaved face. soft features. angular features. square jaw. beard. five o'clock shadow. freckles. scars. moles. dimples. braces. tattoos. piercings. pigtails. messy hair. pixie cut. bald. long hair. shaved head. ponytail. clipped-back fringe. shoulder length. bob cut. old-fashioned hairstyle. dreadlocks. bun. braids. shaved side. mohawk. buzz cut. afro. asymmetric. crown braid. wavy. curly. short. cotton buns. fade. comb over. side part. other.

► WARDROBE – tight pants. denim jeans. cargo pants. fatigues. chinos. khakis. dress slacks. slim-fit. dockers. pajama bottoms. shorts. short-shorts. jean shorts. dungarees. skirt-overalls. pencil skirt. long skirt. mini skirt. tutu. leggings. sports bra. yoga pants. basketball shorts. joggers. sweats. sweater. sweater vest. vest. t-shirt. tank undershirt. long-sleeve. tight shirts. polo shirt. athletic shirt. cardigan. button-up shirt. v-neck. henley. flannels. plaid. crop top. tank top. blouse. racerback shirts. boob tube. sundress. 1-shoulder dress. strapless. jumper dress. apron dress. dress shirt. ball gown. nightgown. hoodies. army jacket. mechanic coveralls. trench coat. bomber jacket. sport coat. leather jacket. lots of layers. uniform. dress uniform. armor. bare feet. high heels. ballet shoes. jelly shoes. flip-flops. sandals. rain boots. sneakers. pumps. flats. thigh-high boots. cowboy boots. timberland boots. doc martens. slip-ons. slippers. motorcycle boots. chukkas. loafers. dress boots. knee boots. riding boots. knee-high socks. socks. hose. stockings. beanies. top hat. sunhat. newsboy cap. fedora. baseball cap. belt. tool / utility belt. gloves.

► HAS YOUR MUSE EVER… broken a bone. had a near death experience. killed someone (and succeeded). saved a life. self-harmed. attempted suicide. had surgery. kissed the same gender/sex. had sex. had sex and regretted it. lost a loved one. had a pet. gotten arrested. gotten married. divorced. cheated. gotten shot. been stabbed. witnessed death. taken drugs. gotten drunk. kept a promise you regretted. played with an ouija board. seen a ghost. been in a car accident. gotten stitches. suffered from amnesia. survived a natural disaster. survived an assassination attempt. survived a plane / ship crash. been framed. gone undercover. faked death. assumed a fake identity. led a double life. invented something. had something slipped in their food / drink. been kidnapped. been taken hostage. been sexually assaulted. been sexually harassed. been bullied. bullied someone. had a stalker. been betrayed. been a traitor. been blackmailed. been abused. gotten away with crime. killed someone (and failed).

tagged by: none. stole it. tagging: @azmenka @musecraft @lvscinvs @greenbled @sunfyred @wulfmaed @worthyheir @loreforged @herdragcnfire @drachnslayer


I finally figured it out and it wasn't even that hard!

just used docker remote contexts over ssh + docker compose + git submodules + justfiles and it works perfectly!

I would love to document my process in more detail at some point (and also maybe switch to docker stacks instead of compose so it works with docker swarm for horizontal scaling) but I'm lazy so that probably won't ever happen lmao
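For anyone wanting to try the same thing, the core of it is two standard Docker CLI commands: register the remote engine as a context reached over SSH, then point `docker compose` at that context. The post doesn't share its justfile or compose setup, so this Python wrapper is only a sketch, and the context name `prod` and the SSH target are hypothetical. The context only needs to be created once.

```python
import subprocess
from typing import List


def remote_deploy_commands(context: str, ssh_target: str) -> List[List[str]]:
    """Return the docker CLI invocations for a compose deploy to a remote engine over SSH."""
    return [
        # One-time setup: a named context whose engine is reached via ssh://user@host.
        ["docker", "context", "create", context, "--docker", f"host=ssh://{ssh_target}"],
        # Build images on the remote engine and (re)start the compose project there.
        ["docker", "--context", context, "compose", "up", "-d", "--build"],
    ]


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command, and actually execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(remote_deploy_commands("prod", "deploy@myserver.example"))
```

Because `--build` runs on the remote engine, the images are built natively for the remote machine's architecture, which is how an x86 laptop can deploy to an ARM server without cross-compiling locally.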

i fucking hate modern devops or whatever buzzword you use for this shit

i just wanna take my code, shove it in a goddamn docker image and deploy it to my own goddamn hardware

no PaaS bullshit, no yaml files, none of that bullshit

just

code -> build -> deploy

please 😭

#docker remote contexts are like the best thing ever#you can even build local code on a remote machine with a completely different architecture! (x86 local and ARM remote in my case)#(that unfortunately depends on whether your code is multi-arch, but i use golang so that isn't an issue for me)
