#hadoop Ecosystem

Text

As a dev, can confirm.

I've used plenty of dev- and sysadmin-oriented software without understanding what it was for, just because some other part of the system needed it.

#still not sure what zookeeper does#it's supposedly a vital part of the hadoop ecosystem#we used it for kafka#other things i took a while to get:#mongodb#noSQL#document-based DBs#map-reduce#and so much networking stuff

Text

🚀 Exploring Kafka: Scenario-Based Questions 📊

Dear community, as Kafka continues to shape modern data architectures, it's crucial for professionals to delve into scenario-based questions to deepen their understanding and application. Whether you're a seasoned Kafka developer or just starting out, here are some key scenarios to ponder:

1️⃣ **Scaling Challenges**: How would you design a Kafka cluster to handle a sudden surge in incoming data without compromising latency?

2️⃣ **Fault Tolerance**: Describe the steps you would take to ensure high availability in a Kafka setup, considering both hardware and software failures.

3️⃣ **Performance Tuning**: What metrics would you monitor to optimize Kafka producer and consumer performance in a high-throughput environment?

4️⃣ **Security Measures**: How do you secure Kafka clusters against unauthorized access and data breaches? What are some best practices?

5️⃣ **Integration with Ecosystem**: Discuss a real-world scenario where Kafka is integrated with other technologies like Spark, Hadoop, or Elasticsearch. What challenges did you face and how did you overcome them?

Follow: https://algo2ace.com/kafka-stream-scenario-based-interview-questions/
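For question 3, one metric commonly watched on the consumer side is consumer lag: for each partition, the latest (log-end) offset minus the offset the consumer group has committed. Below is a minimal sketch of that arithmetic in Python. The offsets are hypothetical numbers; fetching real ones from a broker (through a Kafka client or the kafka-consumer-groups.sh tool) is outside its scope.

```python
def consumer_lag(log_end_offsets: dict[int, int],
                 committed_offsets: dict[int, int]) -> dict[int, int]:
    """Return per-partition lag: log-end offset minus committed offset.

    A partition with no committed offset is assumed to start at 0,
    which is a simplification for this sketch.
    """
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in log_end_offsets.items()
    }


if __name__ == "__main__":
    # Hypothetical offsets for a three-partition topic.
    end = {0: 1500, 1: 980, 2: 2040}
    committed = {0: 1500, 1: 900, 2: 1200}
    lag = consumer_lag(end, committed)
    print(lag, "total:", sum(lag.values()))
```

A lag that keeps growing on one partition while the others stay near zero often points to a slow consumer or a skewed partition key, which ties questions 1 and 3 together.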

#Kafka #BigData #DataEngineering #TechQuestions #ApacheKafka #Interview

Text

Essential Skills for Aspiring Data Scientists in 2024

Welcome to another edition of Tech Insights! Today, we're diving into the essential skills that aspiring data scientists need to master in 2024. As the field of data science continues to evolve, staying updated with the latest skills and tools is crucial for success. Here are the key areas to focus on:

1. Programming Proficiency

Proficiency in programming languages like Python and R is foundational. Python, in particular, is widely used for data manipulation, analysis, and building machine learning models thanks to its rich ecosystem of libraries such as Pandas, NumPy, and Scikit-learn.

2. Statistical Analysis

A strong understanding of statistics is essential for data analysis and interpretation. Key concepts include probability distributions, hypothesis testing, and regression analysis, which help in making informed decisions based on data.
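As a small illustration of regression analysis, the sketch below fits a straight line, y = slope * x + intercept, by ordinary least squares using only Python's standard library. The function name fit_line and the practice-hours data are hypothetical and chosen purely for illustration.

```python
from statistics import fmean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = fmean(xs), fmean(ys)
    # Sum of squared deviations of x, and the cross-deviations of x and y.
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical data: hours of practice vs. test score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]
slope, intercept = fit_line(hours, scores)
print(f"score ~ {slope:.2f} * hours + {intercept:.2f}")
```

On Python 3.10 and later, statistics.linear_regression performs the same simple fit; libraries such as NumPy and scikit-learn extend it to multiple predictors.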

3. Machine Learning Mastery

Knowledge of machine learning algorithms and frameworks like TensorFlow, Keras, and PyTorch is critical. Understanding supervised and unsupervised learning, neural networks, and deep learning will set you apart in the field.

4. Data Wrangling Skills

The ability to clean, process, and transform data is crucial. Skills in libraries like Pandas, and in SQL for querying and managing databases, are highly valuable for preparing data for analysis.
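To make the wrangling step concrete, here is a minimal sketch using Python's built-in sqlite3 module. It loads a few hypothetical, messy order records, trims and normalizes text, drops rows with a missing amount, removes an exact duplicate, and then aggregates revenue per region in SQL. The table names, columns, and data are invented for illustration; the same steps map naturally onto Pandas (str.strip, dropna, drop_duplicates, groupby).

```python
import sqlite3

# Hypothetical raw records with typical problems: stray whitespace,
# inconsistent casing, a missing amount, and an exact duplicate row.
RAW_ORDERS = [
    (1, " alice ", "EU", 120.0),
    (2, "Bob", "us", 80.0),
    (2, "Bob", "us", 80.0),    # duplicate
    (3, "carol", "US", None),  # missing amount
    (4, "Dave", "eu", 45.5),
]


def clean_and_summarize(rows):
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE raw_orders (id INTEGER, customer TEXT, region TEXT, amount REAL)"
        )
        conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?, ?)", rows)
        # Clean: trim and normalize text, drop rows without an amount, deduplicate.
        conn.execute("""
            CREATE TABLE orders AS
            SELECT DISTINCT id,
                   TRIM(customer) AS customer,
                   UPPER(region)  AS region,
                   amount
            FROM raw_orders
            WHERE amount IS NOT NULL
        """)
        # Transform: order count and revenue per region.
        return conn.execute(
            "SELECT region, COUNT(*), SUM(amount) FROM orders "
            "GROUP BY region ORDER BY region"
        ).fetchall()
    finally:
        conn.close()


print(clean_and_summarize(RAW_ORDERS))
```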

5. Data Visualization

Effective communication of your findings through data visualization is important. Tools like Tableau, Power BI, and libraries like Matplotlib and Seaborn in Python can help you create impactful visualizations.

6. Big Data Technologies

Familiarity with big data tools like Hadoop, Spark, and NoSQL databases is beneficial, especially for handling large datasets. These tools help in processing and analyzing big data efficiently.

7. Domain Knowledge

Understanding the specific domain you are working in (e.g., finance, healthcare, e-commerce) can significantly enhance your analytical insights and make your solutions more relevant and impactful.

8. Soft Skills

Strong communication skills, problem-solving abilities, and teamwork are essential for collaborating with stakeholders and effectively conveying your findings.

Final Thoughts

The field of data science is ever-changing, and staying ahead requires continuous learning and adaptation. By focusing on these key skills, you'll be well-equipped to navigate the challenges and opportunities that 2024 brings.

If you're looking for more in-depth resources, tips, and articles on data science and machine learning, be sure to follow Tech Insights for regular updates. Let's continue to explore the fascinating world of technology together!

#artificial intelligence#programming#coding#python#success#economy#career#education#employment#opportunity#working#jobs

Text

Java's Lasting Impact: A Deep Dive into Its Wide Range of Applications

Java programming stands as a towering pillar in the world of software development, known for its versatility, robustness, and extensive range of applications. Since its inception, Java has played a pivotal role in shaping the technology landscape. In this comprehensive guide, we will delve into the multifaceted world of Java programming, examining its wide-ranging applications, discussing its significance, and highlighting how ACTE Technologies can be your guiding light in mastering this dynamic language.

The Versatility of Java Programming:

Java programming is synonymous with adaptability. It's a language that transcends boundaries and finds applications across diverse domains. Here are some of the key areas where Java's versatility shines:

1. Web Development: Java has long been a favorite choice for web developers. Robust and scalable, it powers dynamic web applications, allowing developers to create interactive and feature-rich websites. Java-based web frameworks like Spring and JavaServer Faces (JSF) simplify the development of complex web applications.

2. Mobile App Development: Android, the most widely used mobile operating system in the world, has historically relied on Java for app development (Kotlin is now Google's preferred language, and the two interoperate). Android apps compile to bytecode that runs on the Android Runtime, so the same app can run on a wide range of Android devices.

3. Desktop Applications: Java's Swing and JavaFX libraries enable developers to craft cross-platform desktop applications with sophisticated graphical user interfaces (GUIs). This cross-platform compatibility ensures that your applications work on Windows, macOS, and Linux.

4. Enterprise Software: Java's strengths in scalability, security, and performance make it a preferred choice for developing enterprise-level applications. Customer Relationship Management (CRM) systems, Enterprise Resource Planning (ERP) software, and supply chain management solutions often rely on Java to deliver reliability and efficiency.

5. Game Development: Java isn't limited to business applications; it's also a contender in the world of gaming. Game developers use Java, along with libraries like LibGDX, to create both 2D and 3D games. The language's versatility allows game developers to target various platforms.

6. Big Data and Analytics: Java plays a significant role in the big data ecosystem. Apache Hadoop is written in Java, and Apache Spark (written mainly in Scala) runs on the Java Virtual Machine and offers a Java API for processing and analyzing massive datasets. The JVM's performance makes Java a natural fit for data-intensive tasks.

7. Internet of Things (IoT): Java's ability to run on embedded devices positions it well for IoT development. It is used to build applications for smart homes, wearable devices, and industrial automation systems, connecting the physical world to the digital realm.

8. Scientific and Research Applications: In scientific computing and research projects, Java's performance and libraries for data analysis make it a valuable tool. Researchers leverage Java to process and analyze data, simulate complex systems, and conduct experiments.

9. Cloud Computing: Java is a popular choice for building cloud-native applications and microservices. It is compatible with cloud platforms such as AWS, Azure, and Google Cloud, making it integral to cloud computing's growth.

Why Java Programming Matters:

Java programming's enduring significance in the tech industry can be attributed to several compelling reasons:

Platform Independence: Java's "write once, run anywhere" philosophy allows code to be executed on different platforms without modification. This portability enhances its versatility and cost-effectiveness.

Strong Ecosystem: Java boasts a rich ecosystem of libraries, frameworks, and tools that expedite development and provide solutions to a wide range of challenges. Developers can leverage these resources to streamline their projects.

Security: Java places a strong emphasis on security. Features like sandboxing and automatic memory management enhance the language's security profile, making it a reliable choice for building secure applications.

Community Support: Java enjoys the support of a vibrant and dedicated community of developers. This community actively contributes to its growth, ensuring that Java remains relevant, up-to-date, and in line with industry trends.

Job Opportunities: Proficiency in Java programming opens doors to a myriad of job opportunities in software development. It's a skill that is in high demand, making it a valuable asset in the tech job market.

Java programming is a dynamic and versatile language that finds applications in web and mobile development, enterprise software, IoT, big data, cloud computing, and much more. Its enduring relevance and the multitude of opportunities it offers in the tech industry make it a valuable asset in a developer's toolkit.

As you embark on your journey to master Java programming, consider ACTE Technologies as your trusted partner. Their comprehensive training programs, expert guidance, and hands-on experiences will equip you with the skills and knowledge needed to excel in the world of Java development.

Unlock the full potential of Java programming and propel your career to new heights with ACTE Technologies. Whether you're a novice or an experienced developer, there's always more to discover in the world of Java. Start your training journey today and be at the forefront of innovation and technology with Java programming.

Text

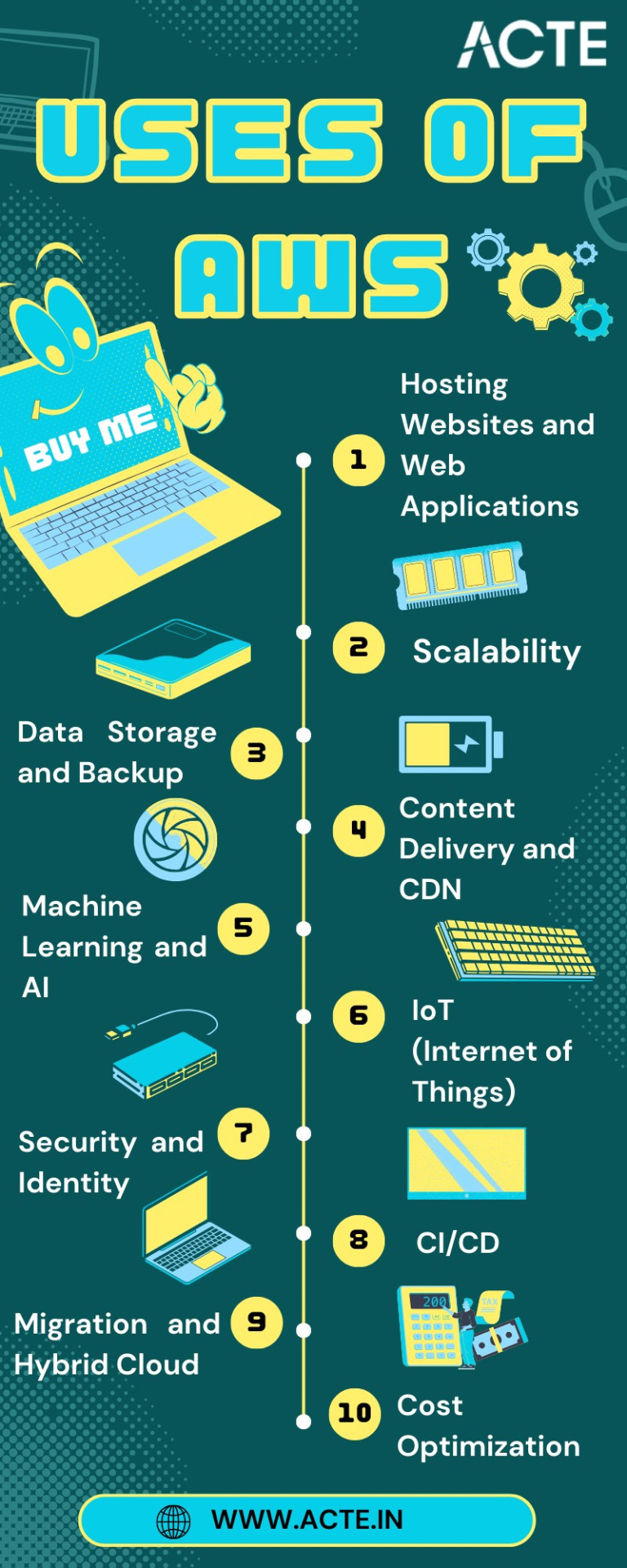

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the boundless potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this comprehensive resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable place for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 empower businesses to deploy and manage their online presence with unwavering reliability and high performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including the highly acclaimed Amazon S3 and Amazon EBS. These services cater to a wide spectrum of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, distributes content globally from edge locations close to users, reducing latency and speeding up data transfer. This improves the user experience regardless of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: At the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners are not merely equipped with knowledge; they are forged into skilled professionals ready to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.

Text

Unlock the World of Data Analysis: Programming Languages for Success!

💡 When it comes to data analysis, choosing the right programming language can make all the difference. Here are some popular languages that empower professionals in this exciting field:

https://www.clinicalbiostats.com/

🐍 Python: Known for its versatility, Python offers a robust ecosystem of libraries like Pandas, NumPy, and Matplotlib. It's beginner-friendly and widely used for data manipulation, visualization, and machine learning.

📈 R: Built specifically for statistical analysis, R provides an extensive collection of packages like dplyr, ggplot2, and caret. It excels in data exploration, visualization, and advanced statistical modeling.

🔢 SQL: Structured Query Language (SQL) is essential for working with databases. It allows you to extract, manipulate, and analyze large datasets efficiently, making it a go-to language for data retrieval and management.

💻 Java: Widely used in enterprise-level applications, Java is the language Apache Hadoop is written in, and it has a first-class API for Apache Spark, making it a solid choice for big data processing. It provides scalability and performance for complex data analysis tasks.

📊 MATLAB: Renowned for its mathematical and numerical computing capabilities, MATLAB is favored in academic and research settings. It excels in data visualization, signal processing, and algorithm development.

🔬 Julia: Known for its speed and ease of use, Julia is gaining popularity in scientific computing and data analysis. Its syntax resembles mathematical notation, making it intuitive for scientists and statisticians.

🌐 Scala: Scala, with its seamless integration with Apache Spark, is a valuable language for distributed data processing and big data analytics. It combines object-oriented and functional programming paradigms.

💪 The key is to choose a language that aligns with your specific goals and preferences. Embrace the power of programming and unleash your potential in the dynamic field of data analysis! 💻📈

#DataAnalysis#ProgrammingLanguages#Python#RStats#SQL#Java#MATLAB#JuliaLang#Scala#DataScience#BigData#CareerOpportunities#biostatistics#onlinelearning#lifesciences#epidemiology#genetics#pythonprogramming#clinicalbiostatistics#datavisualization#clinicaltrials

Text

Big Data Technologies: Hadoop, Spark, and Beyond

In an era where every click, transaction, or sensor reading adds to a massive flow of information, the term "Big Data" has gone past being a mere buzzword: it is both an inherent challenge and an enormous opportunity. Big Data refers to datasets so large, so complex, and so fast-growing that traditional data-processing applications cannot handle them. This ocean of information needs specialized tools, and at the forefront of that revolution are Big Data technologies: Hadoop, Spark, and beyond.

Whether you are an aspiring data professional or a business intent on extracting actionable insights from massive data stores, you need to be familiar with these technologies to make sense of today's digital world.

What is Big Data and Why Do We Need Special Technologies?

Big Data is commonly characterized by the "three Vs":

Volume: Enormous amounts of data (terabytes, petabytes, exabytes).

Velocity: Data generated and processed at incredibly high speeds (e.g., real-time stock trades, IoT sensor data).

Variety: Data coming in diverse formats (structured, semi-structured, unstructured – text, images, videos, logs).

Traditional relational databases and processing tools were not built to handle this scale, speed, or diversity. They would crash, take too long, or simply fail to process such immense volumes. This led to the emergence of distributed computing frameworks designed specifically for Big Data.

Hadoop: The Pioneer of Big Data Processing

Apache Hadoop was a breakthrough in its time, transforming how data is stored and processed at large scale. It provides a framework for distributed storage and processing of datasets too large to be handled by a single machine.

Key Components:

HDFS (Hadoop Distributed File System): A distributed file system that splits files into blocks and stores replicated copies of those blocks across multiple machines, which makes storage fault-tolerant and highly scalable.

MapReduce: A programming model for processing large data sets with a parallel, distributed algorithm on a cluster. It subdivides a large problem into smaller ones that can be solved independently in parallel.
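The classic way to see the MapReduce model is a word count. The sketch below mimics the three phases (map, shuffle, reduce) in plain Python on a single machine. It is a conceptual illustration, not Hadoop's Java API, and the input splits are made up.

```python
from collections import defaultdict
from itertools import chain


def map_phase(split: str):
    """Map: emit a (word, 1) pair for every word in one input split."""
    for word in split.lower().split():
        yield word, 1


def shuffle_phase(pairs):
    """Shuffle: group all values emitted for the same key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine each key's values into a single result."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(splits: list[str]) -> dict[str, int]:
    # On a cluster each split would be mapped on a different node;
    # here the map tasks simply run one after another.
    mapped = chain.from_iterable(map_phase(split) for split in splits)
    return reduce_phase(shuffle_phase(mapped))


print(word_count(["big data big ideas", "data moves fast"]))
```

In a real Hadoop job, map tasks run in parallel on the nodes holding each input block, and the framework performs the shuffle across the network before the reducers run.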

What made it revolutionary was the fact that Hadoop enabled organizations to store and process data they previously could not, hence democratizing access to massive datasets.
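To make the HDFS storage model described above concrete, here is a minimal sketch of how a file is cut into fixed-size blocks, each stored on several machines. The 128 MB block size and replication factor of 3 are the HDFS defaults (dfs.blocksize, dfs.replication) in Hadoop 2.x and later. The node names are hypothetical, and the round-robin placement is a simplification: real HDFS uses a rack-aware placement policy.

```python
import math

BLOCK_SIZE_MB = 128  # HDFS default block size in Hadoop 2.x and later
REPLICATION = 3      # HDFS default replication factor


def plan_blocks(file_size_mb: float, nodes: list[str],
                block_size_mb: int = BLOCK_SIZE_MB,
                replication: int = REPLICATION) -> list[dict]:
    """Split a file into blocks and assign each block to `replication`
    distinct nodes using simple round-robin (not HDFS's rack-aware policy)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    n_blocks = math.ceil(file_size_mb / block_size_mb)
    plan = []
    for i in range(n_blocks):
        # The final block holds only the remaining data.
        size = min(block_size_mb, file_size_mb - i * block_size_mb)
        replicas = [nodes[(i + r) % len(nodes)] for r in range(replication)]
        plan.append({"block": i, "size_mb": size, "nodes": replicas})
    return plan


for block in plan_blocks(300, ["node-a", "node-b", "node-c", "node-d"]):
    print(block)
```

Because every block in this plan lives on three different nodes, losing any single node still leaves at least two copies of each block, which is the kind of fault tolerance the HDFS description refers to.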

Spark: The Speed Demon of Big Data Analytics

While Hadoop MapReduce is a formidable tool, its disk-based processing is slow for iterative algorithms and real-time analytics, because intermediate results are written to disk between steps. Enter Apache Spark: a generation ahead in speed and versatility.

Key Advantages over Hadoop MapReduce:

In-Memory Processing: Spark keeps working data in memory, which can make it 10 to 100 times faster than MapReduce for some workloads, especially iterative algorithms (machine learning is a prime example).

Versatility: Several libraries exist on top of Spark's core engine:

Spark SQL: Structured data processing using SQL.

Spark Streaming: Real-time data processing.

MLlib: Machine Learning library.

GraphX: Graph processing.

Why it matters: Spark is a tool of choice for real-time analytics, complex data transformations, and machine learning on Big Data.
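The in-memory advantage is easiest to see in an iterative job. The sketch below is a toy simulation, not Spark code: a hypothetical CountingSource records how many times the input is read from "disk". The MapReduce-style loop re-reads its input on every iteration, while the Spark-style loop loads once and reuses the in-memory data, roughly what calling cache() or persist() on a Spark DataFrame or RDD achieves.

```python
class CountingSource:
    """Hypothetical data source that counts how often it is read from 'disk'."""

    def __init__(self, records):
        self._records = list(records)
        self.reads = 0

    def load(self):
        self.reads += 1
        return list(self._records)


def update(estimate, data):
    """Toy iterative step: move the estimate halfway toward the data mean."""
    return (estimate + sum(data) / len(data)) / 2


def iterate_without_cache(source, iterations):
    # MapReduce-style: every iteration is a separate pass that re-reads its input.
    estimate = 0.0
    for _ in range(iterations):
        estimate = update(estimate, source.load())
    return estimate


def iterate_with_cache(source, iterations):
    # Spark-style: read once, keep the working set in memory across iterations.
    data = source.load()
    estimate = 0.0
    for _ in range(iterations):
        estimate = update(estimate, data)
    return estimate


disk_source = CountingSource(range(1, 101))
cached_source = CountingSource(range(1, 101))
reread_result = iterate_without_cache(disk_source, 10)
cached_result = iterate_with_cache(cached_source, 10)
print(disk_source.reads, cached_source.reads, reread_result == cached_result)
```

Both loops compute the same estimate, but the first reads the source 10 times and the second reads it once; on a real cluster, avoided disk reads like these account for much of Spark's speedup on iterative workloads.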

And Beyond: Evolving Big Data Technologies

The Big Data ecosystem keeps growing. While Hadoop and Spark are at the heart of the Big Data paradigm, many other technologies complement and extend their capabilities:

NoSQL Databases: (e.g., MongoDB, Cassandra, HBase) – Designed to handle massive volumes of unstructured or semi-structured data, with greater scalability and flexibility than traditional relational databases.

Stream Processing Frameworks: (e.g., Apache Kafka, Apache Flink) – These process data as soon as it arrives (in real time), which is crucial for fraud detection, IoT analytics, and real-time dashboards.

Data Warehouses & Data Lakes: Cloud-native solutions (e.g., Amazon Redshift, Snowflake, Google BigQuery, Azure Synapse Analytics) provide scalable, managed environments for storing and analyzing large volumes of data, often with seamless integration with Spark.

Cloud Big Data Services: Fully managed Big Data processing services from major cloud providers (e.g., AWS EMR, Google Dataproc, Azure HDInsight) remove much of the deployment and management overhead.

Data Governance & Security Tools: As data grows, the need to manage its quality, privacy, and security becomes paramount.
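Much of the real-time work done by the stream processing frameworks listed above boils down to windowed aggregation. The sketch below groups hypothetical card-transaction events into fixed, non-overlapping (tumbling) 60-second windows and flags any card with more than three transactions in one window, a crude fraud-detection rule. The window length, threshold, and event format are assumptions. It processes a finite list for clarity, whereas Flink or Kafka Streams would update windows incrementally and handle late-arriving events.

```python
from collections import Counter, defaultdict

WINDOW_SECONDS = 60      # hypothetical tumbling-window length
MAX_TXNS_PER_WINDOW = 3  # hypothetical alerting threshold


def flag_bursts(events, window=WINDOW_SECONDS, limit=MAX_TXNS_PER_WINDOW):
    """Group (timestamp_seconds, card_id) events into tumbling windows and
    return (window_start, card_id, count) for cards exceeding `limit`."""
    counts = defaultdict(Counter)
    for ts, card in events:
        window_start = ts - ts % window  # align the event to its window
        counts[window_start][card] += 1
    return [
        (start, card, n)
        for start in sorted(counts)
        for card, n in sorted(counts[start].items())
        if n > limit
    ]


stream = [(0, "c1"), (5, "c2"), (12, "c1"), (30, "c1"),
          (45, "c1"), (61, "c1"), (70, "c2")]
print(flag_bursts(stream))
```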

Career Opportunities in Big Data

Mastering Big Data technologies opens doors to highly sought-after roles such as:

Big Data Engineer

Data Architect

Data Scientist (often uses Spark/Hadoop for data preparation)

Business Intelligence Developer

Cloud Data Engineer

Many institutes now offer specialized Big Data courses in Ahmedabad that provide hands-on training in Hadoop, Spark, and related ecosystems, preparing you for these exciting careers.

The journey into Big Data technologies is a deep dive into the engine room of the modern digital economy. By understanding and mastering tools like Hadoop, Spark, and the array of complementary technologies, you're not just learning to code; you're learning to unlock the immense power of information, shaping the future of industries worldwide.

Contact us

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

Text

Beyond the Pipeline: Choosing the Right Data Engineering Service Providers for Long-Term Scalability

Introduction: Why Choosing the Right Data Engineering Service Provider is More Critical Than Ever

In an age where data is more valuable than oil, simply having pipelines isn’t enough. You need refineries, infrastructure, governance, and agility. Choosing the right data engineering service providers can make or break your enterprise’s ability to extract meaningful insights from data at scale. In fact, Gartner predicts that by 2025, 80% of data initiatives will fail due to poor data engineering practices or provider mismatches.

If you're already familiar with the basics of data engineering, this article dives deeper into why selecting the right partner isn't just a technical decision—it’s a strategic one. With rising data volumes, regulatory changes like GDPR and CCPA, and cloud-native transformations, companies can no longer afford to treat data engineering service providers as simple vendors. They are strategic enablers of business agility and innovation.

In this post, we’ll explore how to identify the most capable data engineering service providers, what advanced value propositions you should expect from them, and how to build a long-term partnership that adapts with your business.

Section 1: The Evolving Role of Data Engineering Service Providers in 2025 and Beyond

What you needed from a provider in 2020 is outdated today. The landscape has changed:

📌 Real-time data pipelines are replacing batch processes

📌 Cloud-native platforms like Snowflake, Databricks, and Redshift are dominating

📌 Machine learning and AI integration are table stakes

📌 Regulatory compliance and data governance have become core priorities

Modern data engineering service providers are not just builders—they are data architects, compliance consultants, and even AI strategists. You should look for:

📌 End-to-end capabilities: From ingestion to analytics

📌 Expertise in multi-cloud and hybrid data ecosystems

📌 Proficiency with data mesh, lakehouse, and decentralized architectures

📌 Support for DataOps, MLOps, and automation pipelines
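Automation pipelines are usually expressed as a directed acyclic graph (DAG) of tasks; Apache Airflow, for example, models each pipeline as a DAG. The sketch below uses Python's standard graphlib module (3.9+) on a hypothetical pipeline to work out which tasks can run together once their dependencies finish. It is not Airflow's API, and the task names are invented.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join": {"clean_orders", "extract_customers"},
    "load_warehouse": {"join"},
    "refresh_dashboard": {"load_warehouse"},
}


def execution_waves(dag):
    """Group tasks into waves; every task in a wave has its dependencies
    satisfied, so the tasks in one wave could run in parallel."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in the wave succeeded
    return waves


for i, wave in enumerate(execution_waves(PIPELINE)):
    print(i, wave)
```

Here the two extract tasks form the first wave, followed by clean_orders, join, load_warehouse, and refresh_dashboard. A dependency cycle would make prepare() fail, which is one reason orchestrators insist on acyclic graphs.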

Real-world example: A Fortune 500 retailer moved from Hadoop-based systems to a cloud-native lakehouse model with the help of a modern provider, reducing their ETL costs by 40% and speeding up analytics delivery by 60%.

Section 2: What to Look for When Vetting Data Engineering Service Providers

Before you even begin consultations, define your objectives. Are you aiming for cost efficiency, performance, real-time analytics, compliance, or all of the above?

Here’s a checklist when evaluating providers:

📌 Do they offer strategic consulting or just hands-on coding?

📌 Can they support data scaling as your organization grows?

📌 Do they have domain expertise (e.g., healthcare, finance, retail)?

📌 How do they approach data governance and privacy?

📌 What automation tools and accelerators do they provide?

📌 Can they deliver under tight deadlines without compromising quality?

Quote to consider: "We don't just need engineers. We need architects who think two years ahead." – Head of Data, FinTech company

Avoid the mistake of over-indexing on cost or credentials alone. A cheaper provider might lack scalability planning, leading to massive rework costs later.

Section 3: Red Flags That Signal Poor Fit with Data Engineering Service Providers

Not all providers are created equal. Some red flags include:

📌 One-size-fits-all data pipeline solutions

📌 Poor documentation and handover practices

📌 Lack of DevOps/DataOps maturity

📌 No visibility into data lineage or quality monitoring

📌 Heavy reliance on legacy tools

A real scenario: A manufacturing firm spent over $500k on a provider that delivered rigid ETL scripts. When the data source changed, the whole system collapsed.

Avoid this by asking your provider to walk you through previous projects, particularly how they handled pivots, scaling, and changing data regulations.

Section 4: Building a Long-Term Partnership with Data Engineering Service Providers

Think beyond the first project. Great data engineering service providers work iteratively and evolve with your business.

Steps to build strong relationships:

📌 Start with a proof-of-concept that solves a real pain point

📌 Use agile methodologies for faster, collaborative execution

📌 Schedule quarterly strategic reviews—not just performance updates

📌 Establish shared KPIs tied to business outcomes, not just delivery milestones

📌 Encourage co-innovation and sandbox testing for new data products

Real-world story: A healthcare analytics company co-developed an internal patient insights platform with their provider, eventually spinning it into a commercial SaaS product.

Section 5: Trends and Technologies the Best Data Engineering Service Providers Are Already Embracing

Stay ahead by partnering with forward-looking providers who are ahead of the curve:

📌 Data contracts and schema enforcement in streaming pipelines

📌 Use of code-first workflow orchestration (e.g., Apache Airflow, Prefect)

📌 Serverless data engineering with tools like AWS Glue and Azure Data Factory

📌 Graph analytics and complex entity resolution

📌 Synthetic data generation for model training under privacy laws

Case in point: A financial institution cut model training costs by 30% by using synthetic data generated by its engineering provider, enabling robust yet compliant ML workflows.
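The first trend in the list, data contracts with schema enforcement, can be sketched in a few lines: each incoming record is checked against an agreed set of fields and types before it enters the pipeline, and failing records are set aside with a reason instead of breaking downstream jobs. The contract, field names, and the choice to reject unexpected fields are all hypothetical; production systems typically use a schema registry with Avro, Protobuf, or JSON Schema.

```python
from dataclasses import dataclass

# Hypothetical data contract for an "orders" stream: field name -> expected type.
ORDER_CONTRACT = {"order_id": str, "amount": float, "currency": str}


@dataclass
class ValidationResult:
    accepted: list
    rejected: list  # (record, reason) pairs, e.g. for a dead-letter queue


def enforce_contract(records, contract=ORDER_CONTRACT):
    accepted, rejected = [], []
    for record in records:
        missing = [f for f in contract if f not in record]
        wrong_type = [f for f, t in contract.items()
                      if f in record and not isinstance(record[f], t)]
        unexpected = [f for f in record if f not in contract]
        if missing or wrong_type or unexpected:
            reason = {"missing": missing, "wrong_type": wrong_type,
                      "unexpected": unexpected}
            rejected.append((record, reason))
        else:
            accepted.append(record)
    return ValidationResult(accepted, rejected)


sample = [
    {"order_id": "A1", "amount": 19.99, "currency": "EUR"},
    {"order_id": "A2", "amount": "19.99", "currency": "EUR"},  # amount is a string
    {"order_id": "A3", "amount": 5.0},                         # currency missing
]
result = enforce_contract(sample)
print(len(result.accepted), "accepted,", len(result.rejected), "rejected")
```

Rejecting unknown fields is the strictest option; many teams allow additive fields so producers can evolve a schema without breaking consumers.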

Conclusion: Making the Right Choice for Long-Term Data Success

The right data engineering service providers are not just technical executioners—they’re transformation partners. They enable scalable analytics, data democratization, and even new business models.

To recap:

📌 Define goals and pain points clearly

📌 Vet for strategy, scalability, and domain expertise

📌 Watch out for rigidity, legacy tools, and shallow implementations

📌 Build agile, iterative relationships

📌 Choose providers embracing the future

Your next provider shouldn’t just deliver pipelines—they should future-proof your data ecosystem. Take a step back, ask the right questions, and choose wisely. The next few quarters of your business could depend on it.

#DataEngineering#DataEngineeringServices#DataStrategy#BigDataSolutions#ModernDataStack#CloudDataEngineering#DataPipeline#MLOps#DataOps#DataGovernance#DigitalTransformation#TechConsulting#EnterpriseData#AIandAnalytics#InnovationStrategy#FutureOfData#SmartDataDecisions#ScaleWithData#AnalyticsLeadership#DataDrivenInnovation

Text

Big Data Market 2032: Will Enterprises Unlock the Real Power Behind the Numbers?

The Big Data Market was valued at USD 325.4 Billion in 2023 and is expected to reach USD 1035.2 Billion by 2032, growing at a CAGR of 13.74% from 2024-2032.
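These growth figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1/years) - 1. The sketch below assumes 9 annual compounding periods (from the 2023 base year through 2032) and uses only the numbers quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


implied = cagr(325.4, 1035.2, 2032 - 2023)  # USD billions, 2023 -> 2032
print(f"implied CAGR: {implied:.2%}")
print(f"2032 value at 13.74%: {project(325.4, 0.1374, 9):.1f}")
```

With these inputs the implied rate comes out at roughly 13.7%, consistent with the reported 13.74% once rounding is taken into account.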

The Big Data Market is witnessing a significant surge as organizations increasingly harness data to drive decision-making, optimize operations, and deliver personalized customer experiences. Across sectors like finance, healthcare, manufacturing, and retail, big data is revolutionizing how insights are generated and applied. Advancements in AI, cloud storage, and analytics tools are further accelerating adoption.

U.S. leads global adoption with strong investment in big data infrastructure and innovation

The Big Data Market continues to expand as enterprises shift from traditional databases to scalable, intelligent data platforms. With growing data volumes and demand for real-time processing, companies are integrating big data technologies to enhance agility and remain competitive in a data-centric economy.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/2817

Market Key Players:

IBM

Microsoft

Oracle

SAP

Amazon Web Services (AWS)

Google

Cloudera

Teradata

Hadoop

Splunk

SAS

Snowflake

Market Analysis

The Big Data Market is shaped by exponential data growth and the rising complexity of digital ecosystems. Businesses are seeking solutions that not only store massive datasets but also extract actionable intelligence. Big data tools, combined with machine learning, are enabling predictive analytics, anomaly detection, and smarter automation. The U.S. market is at the forefront, with Europe close behind, driven by regulatory compliance and advanced analytics adoption.

Market Trends

Rapid integration of AI and machine learning with data platforms

Growth in cloud-native data lakes and hybrid storage models

Surge in real-time analytics and streaming data processing

Increased demand for data governance and compliance tools

Rising use of big data in fraud detection and risk management

Data-as-a-Service (DaaS) models gaining traction

Industry-specific analytics solutions becoming more prevalent

Market Scope

Big data’s footprint spans nearly every industry, with expanding use cases that enhance efficiency and innovation. The scope continues to grow with digital transformation and IoT connectivity.

Healthcare: Patient analytics, disease tracking, and personalized care

Finance: Risk modeling, compliance, and trading analytics

Retail: Consumer behavior prediction and inventory optimization

Manufacturing: Predictive maintenance and process automation

Government: Smart city planning and public service optimization

Marketing: Customer segmentation and campaign effectiveness

Forecast Outlook

The Big Data Market is on a strong growth trajectory as data becomes a core enterprise asset. Future success hinges on scalable infrastructure, robust security frameworks, and the ability to translate raw data into strategic value. Organizations investing in modern data architectures and AI integration are best positioned to lead in this evolving landscape.

Access Complete Report: https://www.snsinsider.com/reports/big-data-market-2817

Conclusion

In an increasingly digital world, the Big Data Market is not just a technology trend—it’s a critical engine of innovation. From New York to Berlin, enterprises are transforming raw data into competitive advantage. As the market matures, the focus shifts from volume to value, rewarding those who can extract insights with speed, precision, and responsibility.

About Us:

SNS Insider is a leading global market research and consulting agency. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.


Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Mail us: [email protected]


How Data Science Powers Ride-Sharing Apps Like Uber

Booking a ride through apps like Uber or Ola feels effortless. You tap a button, get matched with a nearby driver, track your ride in real time, and pay digitally. But behind this seamless experience is a powerful engine of data science, working 24/7 to optimize every part of your journey.

From estimating arrival times to setting dynamic prices, ride-sharing platforms rely heavily on data to deliver fast, efficient, and safe rides. Let’s take a look at how data science powers this complex ecosystem behind the scenes.

1. Matching Riders and Drivers – In Real Time

The first challenge for any ride-sharing platform is matching passengers with the nearest available drivers. This isn’t just about distance—algorithms consider:

Traffic conditions

Driver acceptance history

Ride cancellation rates

Estimated time to pickup

Driver ratings

Data science models use all this information to ensure the best match. Machine learning continuously refines this process by learning from past trips and user behavior.
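The sketch below shows one minimal way such factors might be combined into a single ranking score. The `Driver` fields mirror the factors listed above. The weights, the linear scoring rule, and all names are hypothetical, and this is not Uber's actual algorithm. Real dispatch systems typically optimize assignments across many riders at once rather than greedily, one request at a time.

```python
from dataclasses import dataclass


@dataclass
class Driver:
    driver_id: str
    eta_minutes: float        # estimated time to pickup, already traffic-adjusted
    acceptance_rate: float    # share of past requests accepted, 0..1
    cancellation_rate: float  # share of accepted trips later cancelled, 0..1
    rating: float             # average rider rating, 1..5


# Hypothetical weights for illustration; a production system would learn these from past trips.
WEIGHTS = {"eta": -1.0, "accept": 3.0, "cancel": -4.0, "rating": 1.5}


def match_score(d):
    """Higher is better: short pickups and reliable, well-rated drivers score well."""
    return (
        WEIGHTS["eta"] * d.eta_minutes
        + WEIGHTS["accept"] * d.acceptance_rate
        + WEIGHTS["cancel"] * d.cancellation_rate
        + WEIGHTS["rating"] * d.rating
    )


def best_match(candidates):
    """Greedily pick the highest-scoring candidate for a single ride request."""
    if not candidates:
        raise ValueError("no drivers available")
    return max(candidates, key=match_score)


drivers = [
    Driver("A", eta_minutes=3, acceptance_rate=0.60, cancellation_rate=0.20, rating=4.6),
    Driver("B", eta_minutes=4, acceptance_rate=0.95, cancellation_rate=0.02, rating=4.9),
    Driver("C", eta_minutes=9, acceptance_rate=0.99, cancellation_rate=0.01, rating=5.0),
]
print(best_match(drivers).driver_id)
```

With these example weights driver B wins. C is the most reliable but too far away, while A is closest but cancels often.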

2. Route Optimization and Navigation

Once a ride is accepted, the app provides the most efficient route to the driver and rider. Data science helps in:

Predicting traffic congestion

Identifying road closures

Estimating arrival and drop-off times accurately

Ride-sharing companies integrate GPS data, historical traffic trends, and real-time updates to offer smart navigation—sometimes even beating popular map apps in accuracy.
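Route planning is classically a shortest-path problem. The sketch below uses Dijkstra's algorithm with edge weights that stand for expected travel minutes. Congestion or a road closure can be modelled by raising an edge's weight (or removing the edge) and re-running the search. The road graph and its weights are hypothetical. Production routing engines often add speed-up techniques such as A* search or contraction hierarchies.

```python
import heapq


def fastest_route(graph, start, goal):
    """Dijkstra's algorithm where edge weights are expected travel times in minutes.

    graph: dict mapping node -> list of (neighbor, minutes).
    Returns (total_minutes, path), or (float("inf"), []) if goal is unreachable.
    """
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        minutes, node = heapq.heappop(queue)
        if node == goal:
            # Walk the predecessor links back to the start to rebuild the path.
            path = [node]
            while node in previous:
                node = previous[node]
                path.append(node)
            return minutes, path[::-1]
        if minutes > best.get(node, float("inf")):
            continue  # stale queue entry; a faster way to this node was already found
        for neighbor, cost in graph.get(node, []):
            candidate = minutes + cost
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(queue, (candidate, neighbor))
    return float("inf"), []


# Hypothetical road network; weights would be refreshed from live traffic data.
roads = {
    "pickup": [("A", 4), ("B", 2)],
    "A": [("dropoff", 3)],
    "B": [("A", 1), ("dropoff", 8)],
}
print(fastest_route(roads, "pickup", "dropoff"))
```

Here the direct road from pickup to A is slower than detouring via B, so the fastest route is pickup, B, A, dropoff, at 6 minutes.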

3. Dynamic Pricing with Surge Algorithms

If you’ve ever paid extra during peak hours, you’ve experienced surge pricing. This is one of the most sophisticated use cases of data science in ride-sharing.

Algorithms analyze:

Demand vs. supply in real time

Events (concerts, sports matches, holidays)

Weather conditions

Traffic and accident reports

Based on this, prices adjust dynamically to ensure more drivers are incentivized to operate during busy times, balancing supply and demand efficiently.
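The basic idea can be expressed as a toy rule. There is no surge while drivers outnumber requests. Beyond that point, a multiplier grows with the demand-to-supply ratio, up to a cap. The 0.5 slope, the 3.0x cap, and the `event_boost` term (a stand-in for events, weather, or traffic signals) are illustrative assumptions. This is not any platform's published formula.

```python
def surge_multiplier(open_requests, available_drivers, event_boost=0.0, cap=3.0):
    """Toy surge rule: 1.0x while supply covers demand, rising with the excess ratio, capped."""
    if available_drivers <= 0:
        return cap  # no supply at all: use the maximum allowed multiplier
    ratio = open_requests / available_drivers
    # Hypothetical slope: each extra request per available driver adds 0.5x.
    multiplier = 1.0 + 0.5 * max(0.0, ratio - 1.0) + event_boost
    return round(min(cap, max(1.0, multiplier)), 2)


print(surge_multiplier(40, 50))                   # demand below supply
print(surge_multiplier(90, 50))                   # 1.8 requests per driver
print(surge_multiplier(90, 50, event_boost=0.3))  # same, plus a stadium event
print(surge_multiplier(500, 50))                  # extreme demand hits the cap
```

The cap reflects a common design concern: prices should rise enough to attract drivers without becoming unbounded during extreme spikes.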

4. Predictive Demand Forecasting

Data scientists at companies like Uber use predictive models to forecast where and when ride demand will increase. These models draw on:

Past ride data

Time of day

Day of the week

Local events and weather

Using these forecasts, platforms can proactively position drivers in high-demand areas, reducing wait times and improving overall customer satisfaction.
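A common starting point for this kind of forecast is a seasonal baseline. It takes the average number of trips seen in the same weekday-and-hour slot and scales it up or down for known events or weather. The function names, the sample history, and the single `event_multiplier` below are assumptions for illustration. Real forecasting models usually learn these effects from many more features.

```python
from collections import defaultdict
from datetime import datetime


def build_baseline(trip_starts, weeks_of_history):
    """Average trips per (weekday, hour) slot over the given number of weeks."""
    counts = defaultdict(int)
    for ts in trip_starts:
        counts[(ts.weekday(), ts.hour)] += 1
    return {slot: n / weeks_of_history for slot, n in counts.items()}


def forecast(baseline, when, event_multiplier=1.0):
    """Expected trips in the hour starting at `when`.

    event_multiplier is a hypothetical stand-in for local events or weather.
    """
    return baseline.get((when.weekday(), when.hour), 0.0) * event_multiplier


# Two weeks of hypothetical history: Friday evenings (weekday 4) are busy.
history = (
    [datetime(2024, 5, 3, 18, m) for m in (5, 20, 40)]
    + [datetime(2024, 5, 10, 18, m) for m in (0, 10, 15, 30, 50)]
    + [datetime(2024, 5, 10, 9, 0)]
)
baseline = build_baseline(history, weeks_of_history=2)

# A concert next Friday evening: assume 50% more demand than usual.
print(forecast(baseline, datetime(2024, 5, 17, 18, 30), event_multiplier=1.5))
```
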

5. Driver Incentive and Retention Models

Driver retention is key to the success of ride-sharing platforms. Data science helps create personalized incentive programs, offering bonuses based on:

Ride frequency

Location coverage

Customer ratings

Peak hour availability

By analyzing individual driver patterns and preferences, companies can customize rewards to keep their best drivers motivated and on the road.

6. Fraud Detection and Safety

Security and trust are critical. Machine learning models continuously monitor rides for signs of fraud or unsafe behavior. These include:

Unexpected route deviations

Rapid cancellation patterns

Payment fraud indicators

GPS spoofing

AI-powered systems flag suspicious activity instantly, protecting both riders and drivers.
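One simple check for GPS spoofing is physical plausibility. If two consecutive location pings imply a speed no car could reach, the later ping is suspicious. The sketch below computes great-circle distance with the haversine formula. The 200 km/h threshold and the ping format `(unix_seconds, lat, lon)` are assumptions. It covers only spoofing, not route deviation or payment fraud.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    earth_radius_km = 6371.0  # mean Earth radius
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def flag_impossible_jumps(pings, max_kmh=200.0):
    """Indices of pings whose implied speed from the previous ping exceeds max_kmh.

    pings: list of (unix_seconds, lat, lon) tuples sorted by time.
    """
    flagged = []
    for i in range(1, len(pings)):
        t0, lat0, lon0 = pings[i - 1]
        t1, lat1, lon1 = pings[i]
        hours = (t1 - t0) / 3600
        if hours <= 0:
            flagged.append(i)  # a repeated or out-of-order timestamp is itself suspicious
            continue
        if haversine_km(lat0, lon0, lat1, lon1) / hours > max_kmh:
            flagged.append(i)
    return flagged


# Hypothetical trip: normal movement in Pune, then a one-minute "jump" to Mumbai.
pings = [
    (0, 18.5204, 73.8567),
    (60, 18.5214, 73.8577),
    (120, 19.0760, 72.8777),
    (180, 19.0765, 72.8780),
]
print(flag_impossible_jumps(pings))
```

Only the ping after the jump is flagged. The movement within Mumbai afterwards is again at a plausible speed.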

7. Customer Experience and Feedback Loops

After every ride, passengers and drivers rate each other. These ratings feed into reputation systems built with data science. Natural language processing (NLP) is used to analyze written reviews, identify trends, and prioritize customer support.

Feedback loops help improve:

Driver behavior through coaching or deactivation

App features and interface

Wait time reduction strategies

Real-World Tools Behind the Scenes

Companies like Uber use a combination of technologies:

Big Data Tools: Hadoop, Spark

Machine Learning Libraries: TensorFlow, XGBoost

Geospatial Analysis: GIS, OpenStreetMap, Mapbox

Cloud Platforms: AWS, Google Cloud

These tools process millions of data points per minute to keep the system running smoothly.

Conclusion:

Ride-sharing apps may look simple on the surface, but they’re powered by an intricate web of algorithms, data pipelines, and real-time analytics. Data science is the backbone of this digital transportation revolution—making rides faster, safer, and smarter.

Every time you book a ride, you’re not just traveling—you’re experiencing the power of data science in motion.

#datascience#ridesharing#uber#aiintransportation#machinelearning#bigdata#realtimetechnology#transportationtech#appdevelopment#smartmobility#nschool academy#analytics


Hadoop Meets NoSQL: How HBase Enables High-Speed Big Data Processing

In today's data-driven world, businesses and organisations are inundated with huge amounts of information that must be processed and analysed quickly to make informed decisions. Traditional relational databases often struggle to handle this scale and speed. That’s where modern data architectures like Hadoop and NoSQL databases come into play. Among the powerful tools within this ecosystem, HBase stands out for enabling high-speed big data processing. This blog explores how Hadoop and HBase work together to handle large-scale data efficiently and why this integration is essential in the modern data landscape.

Understanding Hadoop and the Rise of Big Data

Hadoop is an open-source framework developed by the Apache Software Foundation. It allows for the distributed storage and processing of huge datasets across clusters of computers using simple programming models. What makes Hadoop unique is its ability to scale from a single server to thousands of machines, each offering local storage and computation.

As more industries—finance, healthcare, e-commerce, and education—generate massive volumes of data, the limitations of traditional databases become evident. The rigid structure and limited scalability of relational databases are often incompatible with the dynamic and unstructured nature of big data. This need for flexibility and performance led to the rise of NoSQL databases.

What is NoSQL and Why HBase Matters

NoSQL stands for "Not Only SQL," referring to a range of database technologies that can handle non-relational, semi-structured, or unstructured data. These databases offer high performance, scalability, and flexibility, making them ideal for big data applications.

HBase, modelled after Google's Bigtable, is a column-oriented NoSQL database that runs on top of the Hadoop Distributed File System (HDFS). It is designed to provide fast, random read/write access to large volumes of sparse data. HDFS on its own is optimised for large sequential batch reads, but HBase supports real-time data access while still benefiting from Hadoop’s batch processing capabilities.
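To make the data model concrete, here is a small in-memory illustration of how HBase organises data. Rows are sorted by row key, columns are addressed as `family:qualifier`, and each cell holds several timestamped versions. The class and method names are hypothetical. This is not the HBase client API: real applications use HBase's Java client, or Python libraries such as happybase over the Thrift gateway. The sketch only mirrors the shape of the model.

```python
import bisect
import time


class ToyWideColumnTable:
    """In-memory illustration of HBase's data model; NOT the HBase client API.

    rows: row key -> {"family:qualifier": {timestamp: value}}.
    Only cells that were written exist, so sparse rows cost nothing extra.
    """

    def __init__(self, families, max_versions=3):
        self.families = set(families)  # column families are declared up front
        self.max_versions = max_versions
        self.rows = {}
        self.sorted_keys = []  # row keys kept in lexicographic order, as in HBase

    def put(self, row_key, column, value, ts=None):
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        if row_key not in self.rows:
            bisect.insort(self.sorted_keys, row_key)
            self.rows[row_key] = {}
        versions = self.rows[row_key].setdefault(column, {})
        versions[ts if ts is not None else time.time_ns()] = value
        # Keep only the newest max_versions versions of this cell.
        for old in sorted(versions)[:-self.max_versions]:
            del versions[old]

    def get(self, row_key, column):
        """Latest value of a cell, or None if it was never written."""
        versions = self.rows.get(row_key, {}).get(column)
        return versions[max(versions)] if versions else None

    def scan(self, start_row, stop_row):
        """Row keys in [start_row, stop_row), served from the sorted key list."""
        lo = bisect.bisect_left(self.sorted_keys, start_row)
        hi = bisect.bisect_left(self.sorted_keys, stop_row)
        return self.sorted_keys[lo:hi]


t = ToyWideColumnTable(["profile", "activity"], max_versions=2)
t.put("user#0001", "profile:name", "Asha", ts=1)
t.put("user#0001", "activity:last_login", "2024-05-01", ts=1)
t.put("user#0001", "activity:last_login", "2024-05-02", ts=2)
t.put("user#0001", "activity:last_login", "2024-05-03", ts=3)
t.put("user#0002", "profile:name", "Ravi", ts=1)  # no activity columns: a sparse row
print(t.get("user#0001", "activity:last_login"))
print(t.scan("user#0001", "user#0002"))
```

Column families are fixed when the table is created, but new qualifiers can appear in any row at write time. This is where HBase's schema flexibility comes from.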

How HBase Enables High-Speed Big Data Processing

HBase’s architecture is designed for performance. Here’s how it enables high-speed big data processing:

Real-Time Read/Write Operations: Unlike Hadoop’s MapReduce, which is primarily batch-oriented, HBase allows real-time access to data. This is crucial for applications where speed is essential, like fraud detection or recommendation engines.

Horizontal Scalability: HBase scales out by adding more RegionServers (nodes) to the cluster, which allows deployments to grow to petabytes of data.

Automatic Sharding: HBase divides each table into regions (contiguous ranges of row keys), splits regions automatically as they grow, and distributes them across the RegionServers in the cluster, balancing load and keeping lookups fast.

Integration with Hadoop Ecosystem: HBase integrates seamlessly with other tools like Apache Hive, Pig, and Spark, providing powerful analytics capabilities on top of real-time data storage.

Fault Tolerance: Thanks to HDFS, HBase benefits from robust fault tolerance, ensuring data is not lost even if individual nodes fail.
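Range-based sharding can be sketched in a few lines. Each region covers a half-open range of row keys, and finding the region for a key is a binary search over the split points. In HBase, the split point is chosen automatically when a region grows past a configured size, and the master assigns regions to RegionServers. Here the caller supplies both, and the class and server names are hypothetical.

```python
import bisect


class ToyRegionMap:
    """Range partitioning of row keys into regions (a conceptual sketch, not HBase internals).

    With sorted split points [s0, s1, ...], region 0 covers keys < s0,
    region 1 covers [s0, s1), and so on; the last region is open-ended.
    """

    def __init__(self, split_points, servers):
        self.split_points = sorted(split_points)
        if len(servers) != len(self.split_points) + 1:
            raise ValueError("need exactly one server per region")
        self.servers = list(servers)

    def region_index(self, row_key):
        # bisect_right places a key equal to a split point in the region that starts there.
        return bisect.bisect_right(self.split_points, row_key)

    def server_for(self, row_key):
        return self.servers[self.region_index(row_key)]

    def split(self, split_key, new_server):
        """Split the region containing split_key.

        Keys from split_key up to that region's old upper bound move to new_server.
        """
        idx = self.region_index(split_key)
        if idx > 0 and self.split_points[idx - 1] == split_key:
            raise ValueError("split_key is already a region boundary")
        self.split_points.insert(idx, split_key)
        self.servers.insert(idx + 1, new_server)


regions = ToyRegionMap(split_points=["g", "p"], servers=["rs1", "rs2", "rs3"])
print(regions.server_for("apple"), regions.server_for("grape"), regions.server_for("zebra"))

# The middle region ["g", "p") has grown hot; split it at "k" onto a new server.
regions.split("k", "rs4")
print(regions.server_for("hazel"), regions.server_for("melon"))
```

In HBase, both daughter regions typically open on the parent's RegionServer first, and the balancer may move one later. This sketch collapses those steps into a single call.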

Real-World Applications of Hadoop and HBase

Organisations across various sectors are leveraging Hadoop and HBase for impactful use cases:

Telecommunications: Managing call detail records and customer data in real-time for billing and service improvements.

Social Media: Storing and retrieving user interactions at a massive scale to generate insights and targeted content.

Healthcare: Analysing patient records and sensor data to offer timely and personalised care.

E-commerce: Powering recommendation engines and customer profiling for better user experiences.

For those interested in diving deeper into these technologies, a data science course in Pune can offer hands-on experience with Hadoop and NoSQL databases like HBase. Courses often cover practical applications, enabling learners to tackle real-world data problems effectively.

HBase vs. Traditional Databases

While traditional databases like MySQL and Oracle are still widely used, they are not always suitable for big data scenarios. Here’s how HBase compares:

Schema Flexibility: HBase only requires column families to be defined up front; individual columns can be added per row at write time, which makes it easier to adapt to evolving data needs.

Speed: HBase is optimised for high-throughput and low-latency access, which is crucial for modern data-intensive applications.

Data Volume: It can efficiently store and retrieve billions of rows and millions of columns, far beyond the capacity of most traditional databases.

These capabilities make HBase a go-to solution for big data projects, especially when integrated within the Hadoop ecosystem.

The Learning Path to Big Data Mastery

As data continues to grow in size and importance, understanding the synergy between Hadoop and HBase is becoming essential for aspiring data professionals. Enrolling in data science training can be a strategic step toward mastering these technologies. These programs are often designed to cover everything from foundational concepts to advanced tools, helping learners build career-ready skills.

Whether you're an IT professional looking to upgrade or a fresh graduate exploring career paths, a structured course can provide the guidance and practical experience needed to succeed in the big data domain.

Conclusion

The integration of Hadoop and HBase represents a powerful solution for processing and managing big data at speed and scale. While Hadoop handles distributed storage and batch processing, HBase adds real-time data access capabilities, making the duo ideal for a range of modern applications. As industries continue to embrace data-driven strategies, professionals equipped with these skills will be in high demand. Exploring educational paths such as a data science course can be your gateway to thriving in this evolving landscape.

By understanding how HBase enhances Hadoop's capabilities, you're better prepared to navigate the complexities of big data—and transform that data into meaningful insights.

Contact Us:

Name: Data Science, Data Analyst and Business Analyst Course in Pune

Address: Spacelance Office Solutions Pvt. Ltd. 204 Sapphire Chambers, First Floor, Baner Road, Baner, Pune, Maharashtra 411045

Phone: 095132 59011


Data Engineering vs Data Science: Which Course Should You Take Abroad?

In today’s data-driven world, careers in tech and analytics are booming. Two of the most sought-after fields that international students often explore are Data Engineering and Data Science. Both these disciplines play critical roles in helping businesses make informed decisions. However, they are not the same, and if you're planning to pursue a course abroad, understanding the difference between the two is crucial to making the right career move.

In this comprehensive guide, we’ll explore:

What is Data Engineering?

What is Data Science?

Key differences between the two fields

Skills and tools required

Job opportunities and career paths

Best countries to study each course

Top universities offering these programs

Which course is better for you?

What is Data Engineering?

Data Engineering is the backbone of the data science ecosystem. It focuses on the design, development, and maintenance of systems that collect, store, and transform data into usable formats. Data engineers build and optimize the architecture (pipelines, databases, and large-scale processing systems) that data scientists use to perform analysis.

Key Responsibilities:

Developing, constructing, testing, and maintaining data architectures

Building data pipelines to streamline data flow

Managing and organizing raw data

Ensuring data quality and integrity

Collaborating with data analysts and scientists
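The extract-transform-load (ETL) pattern behind many of these pipelines can be shown at small scale with Python's standard library. This sketch assumes a CSV source and an SQLite target purely for illustration. The column names and data-quality rules are made up, and production pipelines would typically run on tools such as Spark under a scheduler.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,customer,amount,currency
1001,alice,25.50,usd
1002,bob,,usd
1001,alice,25.50,usd
1003,carol,40.00,eur
"""


def extract(text):
    """Read raw rows from a CSV source (a string here; a file or API in practice)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Drop rows with missing amounts, normalise fields, and de-duplicate by order_id."""
    seen, clean = set(), []
    for row in rows:
        if not row["amount"]:
            continue  # data-quality rule: amount is required
        order_id = int(row["order_id"])
        if order_id in seen:
            continue  # duplicate record from the source
        seen.add(order_id)
        clean.append((order_id, row["customer"].title(), float(row["amount"]), row["currency"].upper()))
    return clean


def load(conn, records):
    """Write cleaned records to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

The load step uses `INSERT OR REPLACE` keyed on `order_id`, so re-running the pipeline does not create duplicate rows. This idempotence matters when jobs are retried.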

Popular Tools:

Apache Hadoop

Apache Spark

SQL/NoSQL databases (PostgreSQL, MongoDB)

Python, Scala, Java

AWS, Azure, Google Cloud

What is Data Science?

Data Science, on the other hand, is more analytical. It involves extracting insights from data using algorithms, statistical models, and machine learning. Data scientists interpret complex datasets to identify patterns, forecast trends, and support decision-making.

Key Responsibilities:

Analyzing large datasets to extract actionable insights

Using machine learning and predictive modeling

Communicating findings to stakeholders through visualization

A/B testing and hypothesis validation

Data storytelling
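For the A/B testing responsibility above, a standard tool is the two-proportion z-test. It asks whether the difference in conversion rates between two variants is larger than chance would explain. The sketch uses the pooled standard error and Python's `statistics.NormalDist`, and the conversion counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled standard error)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups have a 0% or 100% rate; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical experiment: variant B changes a checkout button.
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

With these counts, z comes out at roughly 2.9 and p is well below 0.05, so the uplift would be judged significant at the 5% level.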

Popular Tools:

Python, R

TensorFlow, Keras, PyTorch

Tableau, Power BI

SQL

Jupyter Notebook

Career Paths and Opportunities

Data Engineering Careers:

Data Engineer

Big Data Engineer

Data Architect

ETL Developer

Cloud Data Engineer

Average Salary (US): $100,000–$140,000/year

Job Growth: High demand due to an increase in big data applications and cloud platforms.

Data Science Careers:

Data Scientist

Machine Learning Engineer

Data Analyst

AI Specialist

Business Intelligence Analyst

Average Salary (US): $95,000–$135,000/year

Job Growth: Strong demand across sectors like healthcare, finance, and e-commerce.

Best Countries to Study These Courses Abroad

1. United States

The US is a leader in tech innovation and offers top-ranked universities for both fields.

Top Universities:

Massachusetts Institute of Technology (MIT)

Stanford University

Carnegie Mellon University

UC Berkeley

Highlights:

Access to Silicon Valley

Industry collaborations

Internship and job opportunities

2. United Kingdom

UK institutions provide flexible and industry-relevant postgraduate programs.

Top Universities:

University of Oxford

Imperial College London

University of Edinburgh

University of Manchester

Highlights:

1-year master’s programs

Strong research culture

Scholarships for international students

3. Germany

Known for engineering excellence and affordability.

Top Universities:

Technical University of Munich (TUM)

RWTH Aachen University

University of Freiburg

Highlights:

Low or no tuition fees

High-quality public education

Opportunities in tech startups and industries

4. Canada

Popular for its friendly immigration policies and growing tech sector.

Top Universities:

University of Toronto

University of British Columbia

McGill University

Highlights:

Co-op programs

Pathway to Permanent Residency

Tech innovation hubs in Toronto and Vancouver

5. Australia

Ideal for students looking for industry-aligned and practical courses.

Top Universities:

University of Melbourne

Australian National University

University of Sydney

Highlights:

Focus on employability

Vibrant student community

Post-study work visa options

6. France

Emerging as a strong tech education destination.

Top Universities:

HEC Paris (Data Science for Business)

École Polytechnique

Grenoble Ecole de Management

Highlights:

English-taught master’s programs

Government-funded scholarships

Growth of AI and data-focused startups

Course Curriculum: What Will You Study?

Data Engineering Courses Abroad Typically Include:

Data Structures and Algorithms

Database Systems

Big Data Analytics

Cloud Computing

Data Warehousing

ETL Pipeline Development

Programming in Python, Java, and Scala

Data Science Courses Abroad Typically Include:

Statistical Analysis

Machine Learning and AI

Data Visualization

Natural Language Processing (NLP)

Predictive Analytics

Deep Learning

Business Intelligence Tools

Which Course Should You Choose?

Choosing between Data Engineering and Data Science depends on your interests, career goals, and skillset.

Go for Data Engineering if:

You enjoy backend systems and architecture

You like coding and building tools

You are comfortable working with databases and cloud systems

You want to work behind the scenes, ensuring data flow and integrity

Go for Data Science if:

You love analyzing data to uncover patterns

You have a strong foundation in statistics and math

You want to work with machine learning and AI

You prefer creating visual stories and communicating insights

Scholarships and Financial Support

Many universities abroad offer scholarships for international students in tech disciplines. Here are a few to consider:

DAAD Scholarships (Germany): Fully-funded programs for STEM students

Commonwealth Scholarships (UK): Tuition and living costs covered

Fulbright Program (USA): Graduate-level funding for international students

Vanier Canada Graduate Scholarships: For doctoral (PhD) students in Canada

Eiffel Scholarships (France): Offered by the French Ministry for Europe and Foreign Affairs

Final Thoughts: Make a Smart Decision

Both Data Engineering and Data Science are rewarding and in-demand careers. Neither is better or worse—they simply cater to different strengths and interests.

If you're analytical, creative, and enjoy experimenting with models, Data Science is likely your path.

If you're system-oriented, logical, and love building infrastructure, Data Engineering is the way to go.

When considering studying abroad, research the university's curriculum, available electives, internship opportunities, and career support services. Choose a program that aligns with your long-term career aspirations.

By understanding the core differences and assessing your strengths, you can confidently decide which course is the right fit for you.

Need Help Choosing the Right Program Abroad?

At Cliftons Study Abroad, we help students like you choose the best universities and courses based on your interests and future goals. From counselling to application assistance and visa support, we’ve got your journey covered.

Contact us today to start your journey in Data Science or Data Engineering abroad!

#study abroad#study in uk#study abroad consultants#study in australia#study in germany#study in ireland#study blog


Upgrade Your Career with the Best Data Analytics Courses in Noida

In a world overflowing with digital information, data is the new oil—and those who can extract, refine, and analyze it are in high demand. The ability to understand and leverage data is now a key competitive advantage in every industry, from IT and finance to healthcare and e-commerce. As a result, data analytics courses have gained massive popularity among job seekers, professionals, and students alike.

If you're located in Delhi-NCR or looking for quality tech education in a thriving urban setting, enrolling in data analytics courses in Noida can give your career the boost it needs.

What is Data Analytics?

Data analytics refers to the science of analyzing raw data to extract meaningful insights that drive decision-making. It involves a range of techniques, tools, and technologies used to discover patterns, predict trends, and identify valuable information.

There are several types of data analytics, including:

Descriptive Analytics: What happened?

Diagnostic Analytics: Why did it happen?

Predictive Analytics: What could happen?

Prescriptive Analytics: What should be done?
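The example below illustrates three of these four types on one hypothetical series of monthly sales. It computes a summary statistic (descriptive), fits a straight-line trend by ordinary least squares and extrapolates one month ahead (predictive), and applies a simple reorder rule (prescriptive). The data, the linear model, and the stock level are assumptions chosen to keep the example small.

```python
from statistics import mean

# Hypothetical monthly sales (units) for the last six months.
sales = [120, 135, 128, 150, 162, 171]

# Descriptive: what happened?
print("average:", round(mean(sales), 1), "| last change:", sales[-1] - sales[-2])

# Predictive: fit y = a + b*x by ordinary least squares and extrapolate one month.
xs = list(range(len(sales)))
x_bar, y_bar = mean(xs), mean(sales)
b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, sales)) / sum((x - x_bar) ** 2 for x in xs)
a = y_bar - b * x_bar
next_month = a + b * len(sales)
print("forecast for next month:", round(next_month, 1))

# Prescriptive (a deliberately simple rule): what should be done?
stock_on_hand = 150
if next_month > stock_on_hand:
    print("reorder", round(next_month - stock_on_hand), "units")
```
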

Professionals who master these techniques typically work as Data Analysts, Data Scientists, and Business Intelligence Experts.

Why Should You Learn Data Analytics?

Whether you’re a tech enthusiast or someone from a non-technical background, learning data analytics opens up a wealth of opportunities. Here’s why data analytics courses are worth investing in:

📊 High Demand, Low Supply: There is a massive talent gap in data analytics. Skilled professionals are rare and highly paid.

💼 Diverse Career Options: You can work in finance, IT, marketing, retail, sports, government, and more.

🌍 Global Opportunities: Data analytics skills are in demand worldwide, offering chances to work remotely or abroad.

💰 Attractive Salary Packages: Entry-level data analysts can expect starting salaries of around ₹4–6 LPA, which can grow quickly with experience.

📈 Future-Proof Career: As long as businesses generate data, analysts will be needed to make sense of it.

Why Choose Data Analytics Courses in Noida?

Noida, part of the Delhi-NCR region, is a fast-growing tech and education hub. Home to top companies and training institutes, Noida offers the perfect ecosystem for tech learners.

Here are compelling reasons to choose data analytics courses in Noida:

🏢 Proximity to IT & MNC Hubs: Noida houses leading firms like HCL, TCS, Infosys, Adobe, Paytm, and many startups.

🧑🏫 Expert Trainers: Courses are conducted by professionals with real-world experience in analytics, machine learning, and AI.

🖥️ Practical Approach: Institutes focus on hands-on learning through real datasets, live projects, and capstone assignments.

🎯 Placement Assistance: Many data analytics institutes in Noida offer dedicated job support, resume writing, and interview prep.

🕒 Flexible Batches: Choose from online, offline, weekend, or evening classes to suit your schedule.

Core Modules Covered in Data Analytics Courses

A comprehensive data analytics course typically includes:

Fundamentals of Data Analytics

Excel for Data Analysis

Statistics & Probability

SQL for Data Querying

Python or R Programming

Data Visualization (Power BI/Tableau)

Machine Learning Basics

Big Data Technologies (Hadoop, Spark)

Business Intelligence Tools

Capstone Project/Internship

Who Should Join Data Analytics Courses?

These courses are suitable for a wide audience:

✅ Fresh graduates (B.Sc, BCA, B.Tech, BBA, MBA)

✅ IT professionals seeking a domain change

✅ Non-IT professionals like sales, marketing, and finance executives

✅ Entrepreneurs aiming to make data-backed decisions

✅ Students planning higher education in data science or AI

Career Opportunities After Completing Data Analytics Courses

After completing a data analytics course, learners can pursue roles such as:

Data Analyst

Business Analyst

Data Scientist

Data Engineer

Data Consultant

Operations Analyst

Marketing Analyst

Financial Analyst

BI Developer

Risk Analyst

Top Recruiters Hiring Data Analytics Professionals

Companies in Noida and across India actively seek data professionals. Some top recruiters include:

HCL Technologies

TCS

Infosys

Accenture

Cognizant

Paytm

Genpact

Capgemini

EY (Ernst & Young)

ZS Associates

Startups in fintech, health tech, and e-commerce

Tips to Choose the Right Data Analytics Course in Noida

When selecting a training program, consider the following:

🔍 Course Content: Does it cover the latest tools and techniques?

🧑🏫 Trainer Background: Are trainers experienced and industry-certified?

🛠️ Hands-On Practice: Does the course include real-time projects?

📜 Certification: Is it recognized by companies and institutions?

💬 Reviews and Ratings: What do past students say about the course?

🎓 Post-Course Support: Is job placement or internship assistance available?

Certifications That Add Value

A good institute will prepare you for globally recognized certifications such as:

Microsoft Certified: Power BI Data Analyst Associate

Google Data Analytics Professional Certificate

IBM Data Analyst Certificate

Tableau Desktop Specialist

Certified Analytics Professional (CAP)

These certifications can boost your credibility and help you stand out in job applications.

Final Thoughts: Your Future Starts Here

In this competitive digital era, data is everywhere, but professionals who can understand and use it effectively are still rare. Taking the right data analytics course in Noida is not just a step toward upskilling; it’s an investment in your future.

Whether you're aiming for a job switch, career growth, or knowledge enhancement, data analytics courses offer a versatile, high-growth pathway. With industry-relevant skills, real-time projects, and expert guidance, you’ll be prepared to take on the most in-demand roles of the decade.


Advanced Data Science Program in Kerala

Unlock Your Future with an Advanced Data Science Program in Kerala

In today’s technology-driven world, data is the new oil. Businesses across industries — from healthcare to finance to e-commerce — are relying on data to make smarter decisions, optimize operations, and better understand their customers. This growing dependence on data has created an enormous demand for professionals skilled in data science. If you’re in Kerala and looking to build a future-proof career, enrolling in an Advanced Data Science Program in Kerala could be the smartest move you make.

Why Choose Data Science?

Data science combines programming, mathematics, and domain expertise to extract valuable insights from large datasets. A data scientist is not just a number cruncher — they are problem-solvers who turn raw data into actionable insights. With job roles like Data Analyst, Machine Learning Engineer, Business Intelligence Developer, and AI Specialist becoming more prominent, it’s clear that data science is not just a trend but a long-term career path.

Kerala: A Growing Tech Hub

An Advanced Data Science Program in Kerala offers learners the chance to tap into the state's growing tech ecosystem. With access to reputed institutes, experienced faculty, and real-world project opportunities, Kerala provides the ideal environment to learn and thrive in the field of data science.

What to Expect from an Advanced Data Science Program

When you sign up for an advanced program in data science, you’re not just learning the basics. These programs are designed for those who want to go beyond foundational knowledge and dive deeper into specialized areas such as:

Machine Learning and Deep Learning

Big Data Technologies (Hadoop, Spark)

Natural Language Processing

Computer Vision

Data Visualization and Storytelling

Advanced Statistical Techniques

Most programs also include hands-on projects, case studies, and capstone projects that simulate real-world challenges. These elements help learners not only understand the theory but also apply their skills in practical settings.

Career Opportunities After Completion

Completing an advanced data science program opens the door to a wide range of career opportunities. As companies continue to invest in AI and analytics, data science professionals are in high demand. Here are some of the roles you can pursue:

Data Scientist

Machine Learning Engineer

Data Engineer

Business Intelligence Analyst

Research Scientist

AI/ML Product Manager

What makes these roles attractive is not just the salary potential but also the versatility. Whether you want to work in healthcare, fintech, retail, or even government sectors, data science skills are universally in demand.

Final Thoughts

An Advanced Data Science Program in Kerala can be your gateway to a high-paying, future-ready career. With the right training, mentorship, and project experience, you can transform your passion for data into a rewarding profession. Whether you’re a recent graduate, an IT professional, or someone looking to switch careers, now is the perfect time to take the leap.

Amal P K: Digital Marketing Specialist and best freelance digital marketer in Kerala, with up-to-date knowledge of current trends and of marketing strategies based on the latest Google algorithms. As a business owner, you need to grow your business, and I can be a great asset in doing so. Website: https://amaldigiworld.com/


Unveiling Java's Multifaceted Utility: A Deep Dive into Its Applications

In software development, Java stands out as a versatile and ubiquitous programming language with many applications across diverse industries. From empowering enterprise-grade solutions to driving innovation in mobile app development and big data analytics, Java's flexibility and robustness have solidified its status as a cornerstone of modern technology.

Let's embark on a journey to explore the multifaceted utility of Java and its impact across various domains.

Powering Enterprise Solutions

Java is the backbone for developing robust and scalable enterprise applications, facilitating critical operations such as CRM, ERP, and HRM systems. Its resilience and platform independence make it a preferred choice for organizations seeking to build mission-critical applications capable of seamlessly handling extensive data and transactions.

Shaping the Web Development Landscape

Java is pivotal in web development, enabling dynamic and interactive web applications. With frameworks like Spring and Hibernate, developers can streamline the development process and build feature-rich, scalable web solutions. Java's compatibility with diverse web servers and databases further enhances its appeal in web development.

Driving Innovation in Mobile App Development

Java was the original language for Android app development and remains widely used there, alongside Kotlin, which Google now recommends for new Android projects. With Android Studio, developers use Java to build high-performance, user-friendly mobile applications for a global audience, contributing to the ever-evolving landscape of mobile technology.

Enabling Robust Desktop Solutions

Java's cross-platform compatibility and extensive library support make it a strong choice for desktop applications. With GUI toolkits such as Swing and JavaFX, developers can build graphical user interfaces (GUIs) for desktop software, ranging from simple utilities to complex enterprise-grade solutions.

Revolutionizing Big Data Analytics

In big data analytics, Java underpins many frameworks and tools for processing and analyzing massive datasets. Apache Hadoop is written largely in Java, while Apache Spark and Apache Flink run on the Java Virtual Machine and provide Java APIs, helping organizations extract insights from vast amounts of data and make data-driven decisions.

Fostering Innovation in Scientific Research

Java's versatility extends to scientific computing and research, where it is utilized to develop simulations, modeling tools, and data analysis software. Its performance and extensive library support make it an invaluable asset in bioinformatics, physics, and engineering, driving innovation and advancements in scientific research.

Empowering Embedded Systems

Because Java code compiles to bytecode that runs on the Java Virtual Machine (JVM), including compact JVM implementations designed for constrained hardware, Java is also used in embedded systems development. From IoT devices to industrial automation systems, its portability and reliability make it a common choice for embedded solutions that must run across diverse hardware platforms.

In summary, Java's multifaceted utility and robustness make it an indispensable tool in the arsenal of modern software developers. Whether powering enterprise solutions, driving innovation in mobile app development, or revolutionizing big data analytics, Java continues to shape the technological landscape and drive advancements across various industries. As a versatile and enduring programming language, Java remains at the forefront of innovation, paving the way for a future powered by cutting-edge software solutions.

Understanding Data Science: The Backbone of Modern Decision-Making

Data science is the multidisciplinary field that blends statistical analysis, programming, and domain knowledge to extract actionable insights from complex datasets. It plays a critical role in everything from predicting customer behavior to detecting fraud, personalizing healthcare, and optimizing supply chains.

What is Data Science?

At its core, data science is about turning data into knowledge. It combines tools and techniques from statistics, computer science, and mathematics to analyze large volumes of data and solve real-world problems.

A data scientist’s job is to:

Ask the right questions

Collect and clean data

Analyze and interpret trends

Build models and algorithms

Present results in an understandable way

It’s not just about numbers; it’s about finding patterns and making smarter decisions based on them.

Why is Data Science Important?

Data is often called the new oil, but just like oil, it needs to be refined before it becomes valuable. That’s where data science comes in.

Here’s why it matters:

Business Growth: Data science helps businesses forecast trends, improve customer experience, and create targeted marketing strategies.

Automation: It enables automation of repetitive tasks through machine learning and AI, saving time and resources.

Risk Management: Financial institutions use data science to detect fraud and manage investment risks.

Innovation: From healthcare to agriculture, data science drives innovation by providing insights that lead to better decision-making.

Key Components of Data Science

To truly understand data science, it’s important to know its main components:

Data Collection: Gathering raw data from various sources such as databases, APIs, sensors, or user behavior logs.

Data Cleaning and Preprocessing: Raw data is messy. Cleaning involves handling missing values, correcting errors, and formatting the data for analysis.

Exploratory Data Analysis (EDA): Identifying patterns, correlations, and anomalies using visualizations and statistical summaries.

Machine Learning & Predictive Modeling: Building algorithms that learn from data and make predictions, such as spam filters or recommendation engines.

Data Visualization: Communicating findings through charts, dashboards, or storytelling tools to help stakeholders make informed decisions.

Deployment & Monitoring: Integrating models into real-world systems and continuously monitoring their performance.
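The cleaning, exploration, and modeling steps above can be sketched end to end in a few lines of plain Python. This is a minimal illustration, not a production workflow: the dataset of (ad_spend, sales) pairs is made up, dropping incomplete rows is just one possible cleaning strategy, and the helper names `fit_line` and `predict` are hypothetical. It uses only the standard library rather than Pandas or Scikit-learn so that it runs anywhere.

```python
from statistics import mean, median, stdev

# Hypothetical raw data: (ad_spend, sales) pairs; None marks a missing value.
raw = [
    (10.0, 25.0),
    (20.0, 45.0),
    (None, 50.0),
    (30.0, 65.0),
    (40.0, None),
    (50.0, 105.0),
]

# 1. Data cleaning: drop any row that contains a missing value.
clean = [(x, y) for x, y in raw if x is not None and y is not None]

# 2. Exploratory data analysis: simple summary statistics per column.
xs = [x for x, _ in clean]
ys = [y for _, y in clean]
summary = {
    "rows_kept": len(clean),
    "spend_mean": mean(xs),
    "sales_median": median(ys),
    "sales_stdev": stdev(ys),  # sample standard deviation
}

# 3. Predictive modeling: ordinary least-squares fit of sales = a + b * spend.
def fit_line(xs, ys):
    x_bar, y_bar = mean(xs), mean(ys)
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    a = y_bar - b * x_bar
    return a, b

a, b = fit_line(xs, ys)

def predict(spend):
    # Apply the fitted line to a new ad_spend value.
    return a + b * spend

print(summary)
print(f"sales = {a:.2f} + {b:.2f} * spend")
print(predict(60.0))
```

Dropping incomplete rows is the simplest choice but throws data away; imputing (for example, filling gaps with the column median) keeps more rows. On Python 3.10+, `statistics.linear_regression(xs, ys)` returns the same slope and intercept, and libraries such as Scikit-learn take over once datasets and models grow.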

Popular Tools & Languages in Data Science

A data scientist’s toolbox includes several powerful tools:

Languages: Python, R, SQL

Libraries: Pandas, NumPy, Matplotlib, Scikit-learn, TensorFlow

Visualization Tools: Tableau, Power BI, Seaborn

Big Data Platforms: Hadoop, Spark

Databases: MySQL, PostgreSQL, MongoDB

Python remains the most popular of these languages, thanks to its simplicity and vast library ecosystem.

Applications of Data Science

Data science isn’t limited to tech companies. Here’s how it’s applied across different industries:

Healthcare: Predict disease outbreaks, personalize treatments, manage patient data.

Retail: Track customer behavior, manage inventory, and enhance recommendations.

Finance: Detect fraud, automate trading, and assess credit risk.

Marketing: Segment audiences, optimize campaigns, and analyze consumer sentiment.

Manufacturing: Improve supply chain efficiency and predict equipment failures.

Careers in Data Science

Demand for data professionals is skyrocketing. Some popular roles include:

Data Scientist: Builds models and interprets complex data.

Data Analyst: Creates reports and visualizations from structured data.

Machine Learning Engineer: Designs and deploys AI models.

Data Engineer: Focuses on infrastructure and pipelines for collecting and processing data.

Business Intelligence Analyst: Turns data into actionable business insights.

According to LinkedIn and Glassdoor, data science is one of the most in-demand and well-paying careers globally.

How to Get Started in Data Science

You don’t need a Ph.D. to begin your journey. Start with the basics:

Learn Python or R: Focus on data structures, loops, and libraries like Pandas and NumPy.

Study Statistics and Math: Understanding probability, distributions, and linear algebra is crucial.

Work on Projects: Real-world datasets from platforms like Kaggle or the UCI Machine Learning Repository can help you build your portfolio.

Stay Curious: Read blogs, follow industry news, and never stop experimenting with data.
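As a first exercise in the "data structures and loops" step above, here is a minimal sketch of computing an average per category with nothing but a list of dictionaries and a loop. The transaction records are hypothetical. In Pandas, the same result would come from `pd.DataFrame(transactions).groupby("category")["amount"].mean()`; writing it by hand first shows what that one-liner is doing.

```python
from collections import defaultdict

# Hypothetical transactions: a list of dictionaries, one per record.
transactions = [
    {"category": "books", "amount": 12.0},
    {"category": "games", "amount": 30.0},
    {"category": "books", "amount": 18.0},
    {"category": "games", "amount": 50.0},
    {"category": "music", "amount": 9.0},
]

# Accumulate a running total and a count per category with a plain loop.
totals = defaultdict(float)
counts = defaultdict(int)
for row in transactions:
    totals[row["category"]] += row["amount"]
    counts[row["category"]] += 1

# Average amount per category: total divided by number of records.
averages = {cat: totals[cat] / counts[cat] for cat in totals}
print(averages)
```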

Final Thoughts

Data science is more than a buzzword; it’s a revolution in how we understand the world around us. Whether you're a student, professional, or entrepreneur, learning data science opens the door to endless possibilities.

In a future driven by data, the question is not whether you can afford to invest in data science but whether you can afford not to.
