#neural-development

Quote

When the sunlight hit the trees, all the beauty and wonder come together. Soul unfolds its petals. Flowering and fruiting of plants starts. The birds song light up the spinal column and harmonize the hippocampal functioning.

Amit Ray, Peace Bliss Beauty and Truth: Living with Positivity

#quotes#Amit Ray#Peace Bliss Beauty and Truth: Living with Positivity#thepersonalwords#literature#life quotes#prose#lit#spilled ink#beauty#beauty-in-nature#birds-chorus#birds-song#harmony#hippocampus#nature#nature-quotes#nature-s-beauty#nature-writing#neural-development#neural-plasticity#petals#sunlight

22 notes

Text

I hate how people justify bullying with “oh but they CHOSE to do the thing I hate! It’s a CHOICE” because I actually don’t give a fuck. I’m not concerned with them choosing to wear an ugly outfit, I’m concerned with you being a fucking dick

#“oh but polyamory is a choice so I’m calling them fat” “oh teen pregnancy is a choice so I’m telling her she’s horrible”#we need to do something to you that’ll kickstart your brain into developing the neural pathways for empathy

17 notes

Note

ask game! give 5 boring facts about yourself and pass it on (no pressure!)

oooooh okok lets see

I am currently wearing Miffy socks I got from a Taiwan night market

I always hated the question "would you rather be hot or cold" bc there was always the argument that "if uR cOld u cAn puT on layErs buT u cAn't pEel oFf ur skiN." ok. FIRST OF ALL, the question is asking abt the sensation of being hot or cold and personally I'd rather be overheated than freezing my toes off. SECOND of all, even if u put layers on some parts of u can still be cold, they're just more confined now and then u just have socks full of cold sweat between ur toes and its gross and can you tell I'm badly circulated-

I abuse the words "gently," "sighed," and "grinned" in my writing.

I come up with AWESOME witty zingers that I repeat in various scenarios in my brain but can never write down and use bc they're VERY specific characters in carefully placed circumstances cherry picked from different fanfics set possibly within an AU or crossover

When I was young watching star wars the clone wars, my brother was trying to explain to me that the clones were "bred in labs" and I took it to mean that they were literally made of bread

#still developed a raging crush on them tho#but yea i spent what few neural paths i had in my youth trying to figure out how the clones were made of bread#are they baked how are they structured#is it sourdough or like multigrain#why is no one talking about this#fandom

5 notes

Text

I….AM…..GOING….TO…….GRADUATE!!!!!!!!

#manifesting manifesting manifesting#my mental health has taken its monthly dive exactly a week before my thesis is fucking due#I NEED to do my thesis I need it to be DONE so I can fucking graduate#and I have so many complicated feelings about it but I’m not going to think about that I’m not going to cry I’m just going to write about#melanoblast development and neural crest differentiation of cells in snakes#at all costs I am graduating from college in 3 weeks

10 notes

Text

Inferring (2023) Renfield's class.

I need to do some proper research on this, but assuming Renfield is around ~30 in the flashbacks to the events of the 1931 film, which is SET vaguely in the 1920s (I'm going with 1920 for the sake of the maths) he was probably born around 1890, and given primary education from about 1895 to 1902. In the early 1900s, the school-leaving age was 12, and we know he didn't do that. At that point, working-class children generally just got jobs, or apprenticed into a trade focused on manual labour.

Continued education cost money, and becoming a solicitor meant continuing education until at least 16 (~1906-15ish?). Whether or not he needed a university degree would have depended on exact timings I can't be bothered with, but either way, he would have spent between 5 and 7 years in either university education + articled clerkship, or just clerkship alone. Both of which cost money! So we're probably looking at upper-middle class at the least.

He likely went to a selective, fee-paying boarding school, quite probably a grammar school, since he's not implied at any point in any canon to be of particularly high standing, and treats nobility FIRMLY as his betters- but it's likely he grew up with at least one household servant employed by his family, especially if they lived in the city.

This is interesting, because it means that service was probably never a career option for him! He has no expectations going into it. Which is FUN, considering what it means about the definition of his duties...

#renfield#renfield 2023#sorry i read hetty feather at a critical point in my neural development and have been completely obsessed with service as a career ever since#also if he went to boarding school that means fagging which means honestly? not that different a time from what he was up to with dracula#dracula#my meta#my posts

9 notes

Text

Applied AI - Integrating AI With a Roomba

AKA: what I have been doing for the past month and a half

Everyone loves Roombas. Cats. People. Cat-people. There have been a number of Roomba hacks posted online over the years, but an often overlooked point is how very easy it is to use Roombas for cheap applied robotics projects.

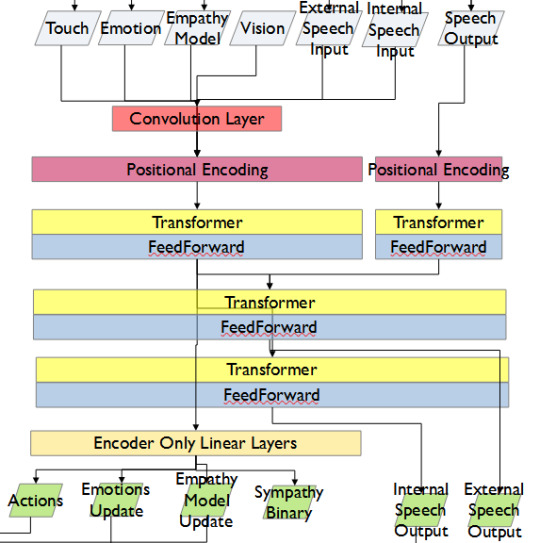

Continuing on from a project done for academic purposes, today's showcase is a work in progress: a real-world application of speech-to-text, actionable, transformer-based AI models. MARVINA (Multimodal Artificial Robotics Verification Intelligence Network Application) is being applied, in this case, to this Roomba, modified with a Raspberry Pi 3B, a 1080p camera, and a combined mic and speaker system.

The hardware specifics have been a fun challenge over the past couple of months, especially relating to the construction of the 3D mounts for the camera and audio input/output system.

Roomba models are particularly well suited to tinkering - the serial connector allows external hardware to be interfaced, and iRobot (the manufacturer) publishes a full manual of the commands that can be sent to the Roomba itself. It can even play entire songs! (Highly recommend)
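As a rough illustration of what "sending commands" looks like, here is a minimal sketch that only builds the raw bytes for a song. The opcode numbers (128 Start, 131 Safe, 140 Song, 141 Play) are taken from iRobot's published Open Interface manual, but the helper names are hypothetical and this is not the MARVINA code; check the manual for your specific model before sending anything.

```python
# Sketch only: builds byte sequences for the iRobot Open Interface (OI).
# Helper names are hypothetical; opcodes should be verified against your model's manual.
START, SAFE, SONG, PLAY = 128, 131, 140, 141

def define_song(slot, notes):
    """Bytes for the Song command.

    notes: 1 to 16 (midi_note, duration) pairs, duration in 1/64ths of a second.
    The valid range for `slot` depends on the Roomba model.
    """
    if not 1 <= len(notes) <= 16:
        raise ValueError("a song holds between 1 and 16 notes")
    payload = [SONG, slot, len(notes)]
    for midi_note, duration in notes:
        payload += [midi_note, duration]
    return bytes(payload)

def play_song(slot):
    """Bytes for the Play command for a previously defined song slot."""
    return bytes([PLAY, slot])

# Middle C then E, half a second each (32/64 s), stored in slot 0.
startup = bytes([START, SAFE])
song = define_song(0, [(60, 32), (64, 32)])
print(list(startup + song + play_song(0)))
```

To actually drive the robot, these bytes would be written to the serial port (for example with pyserial's `Serial.write`); the port name and baud rate depend on the Roomba model and the wiring, so they are left out here.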

Scope:

Current:

The aim of this project is to, initially, replicate the verbal command system which powers the current virtual environment based system.

This has been achieved with the custom MARVINA AI system, which is interfaced with both the Pocket Sphinx Speech-To-Text (SpeechRecognition · PyPI) and Piper-TTS Text-To-Speech (GitHub - rhasspy/piper: A fast, local neural text to speech system) AI systems. This gives the AI the ability to perform one of 8 commands, give verbal output, and use a limited-training version of the emotional-empathy system.

This has mostly been achieved. Now that I know it's functional, I can justify spending money on a better microphone/speaker system so I don't have to shout at the poor thing!

The latency of the Raspberry Pi 3B for each output is a very sprightly 75ms! This leaves plenty of headroom within the current AI input "framerate" of one input every 500ms.

Future - Software:

Subsequent testing will imbue the Roomba with a greater sense of abstracted "emotion" - the AI having a ground set of emotional state variables which decide how it, and the interacting person, are "feeling" at any given point in time.

This, ideally, is to give the AI system a sense of motivation. The AI is essentially being given separate drives for social connection, curiosity and other emotional states. The programming will be designed to optimise for those, while the emotional model will regulate this on a separate, biologically based, system of under- and over-stimulation.

In other words, a motivational system that incentivises only up to a point.
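One way to picture "incentivises only up to a point" is an inverted-U reward: each drive pays off most at a comfortable stimulation level and less on either side of it. The sketch below is purely illustrative; the function names, the quadratic shape, and the setpoint and tolerance values are assumptions, not MARVINA's actual emotional model.

```python
# Illustrative sketch only: names, curve shape and numbers are assumptions.

def drive_reward(stimulation, setpoint=0.5, tolerance=0.5):
    """Reward peaks at 1.0 when stimulation == setpoint, reaches 0.0 at
    setpoint +/- tolerance, and goes negative beyond that
    (under- or over-stimulation)."""
    deviation = (stimulation - setpoint) / tolerance
    return 1.0 - deviation ** 2

def total_motivation(levels, setpoints):
    """Sum the rewards of several drives, e.g. social connection and curiosity."""
    return sum(drive_reward(levels[name], setpoints[name]) for name in levels)

levels = {"social": 0.5, "curiosity": 1.5}      # curiosity is over-stimulated
setpoints = {"social": 0.5, "curiosity": 0.5}
print(drive_reward(levels["social"]))           # at the setpoint
print(drive_reward(levels["curiosity"]))        # well past the comfortable range
print(total_motivation(levels, setpoints))
```

With a curve like this, pushing any single drive harder eventually lowers the total, which is the regulating behaviour described above.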

The current build does have such a system implemented, but it only has very limited testing data. One of the key parts of this project's success will be to generatively create a training data set which will allow for high-quality interactions.

The future of MARVINA-R will relate to expanding the abstracted equivalent of "Theory-of-Mind" - in other words, having MARVINA-R "imagine" a future which could exist in order to consider its choices, and what actions it wishes to take.

This system is based, in part, upon the Dynalang model created by Lin et al. 2023 at UC Berkeley ([2308.01399] Learning to Model the World with Language (arxiv.org)) but with a key difference - MARVINA-R will be running with two neural networks - one based on short-term memory and the second based on long-term memory. Decisions will be made based on which is most appropriate, and on how similar the current input data is to the generated world-model of each network.

Once at rest, MARVINA-R will effectively "sleep", essentially keeping the most important memories, and consolidating them into the long-term network if they lead to better outcomes.
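The two-memory idea can be sketched without any neural networks at all. The toy below swaps in plain lists and cosine similarity for the two networks, and assumes "better outcomes" means "better than the long-term average"; every name and threshold here is hypothetical and only meant to show the control flow of choosing a memory and consolidating during "sleep".

```python
import math

# Toy stand-in: lists and cosine similarity instead of neural networks.
# All names and the consolidation rule are assumptions for illustration.

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def pick_memory(observation, short_term_prediction, long_term_prediction):
    """Use whichever memory's predicted world state looks most like the input."""
    short_score = cosine(observation, short_term_prediction)
    long_score = cosine(observation, long_term_prediction)
    return "short_term" if short_score >= long_score else "long_term"

def sleep_consolidate(short_term, long_term, keep_top=2):
    """Keep the most important episodes, move them to long-term memory if they
    beat the long-term average outcome, then clear short-term memory."""
    if long_term:
        baseline = sum(ep["outcome"] for ep in long_term) / len(long_term)
    else:
        baseline = float("-inf")
    important = sorted(short_term, key=lambda ep: ep["outcome"], reverse=True)[:keep_top]
    long_term.extend(ep for ep in important if ep["outcome"] > baseline)
    short_term.clear()

short_term = [{"outcome": 0.9}, {"outcome": 0.1}, {"outcome": 0.5}]
long_term = [{"outcome": 0.4}]
sleep_consolidate(short_term, long_term)
print(len(short_term), [ep["outcome"] for ep in long_term])
```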

This will allow the system to be tailored beyond its current limitations - where it can be designed to be motivated by multiple emotional "pulls" for its attention.

This does, however, also increase the number of AI outputs required per action (by a factor of about 10 to 100), so this will need to be carefully considered in terms of the software and hardware requirements.
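A quick back-of-the-envelope check shows why this matters, using the 75ms per-output latency and 500ms input interval quoted earlier. It assumes outputs run one after another and that latency stays constant, which may not hold on real hardware; the function names are made up for the example.

```python
# Rough budget check. Assumes sequential outputs at a constant 75 ms each.
LATENCY_MS = 75    # per-output latency on the Raspberry Pi 3B (from the post)
FRAME_MS = 500     # current AI input interval (from the post)

def time_per_action_ms(outputs_per_action, latency_ms=LATENCY_MS):
    return outputs_per_action * latency_ms

def fits_in_frame(outputs_per_action, frame_ms=FRAME_MS):
    return time_per_action_ms(outputs_per_action) <= frame_ms

max_outputs = FRAME_MS // LATENCY_MS   # whole outputs that fit in one frame
print("max outputs per frame:", max_outputs)
for n in (1, 10, 100):
    print(n, "outputs:", time_per_action_ms(n), "ms, fits:", fits_in_frame(n))
```

Under these assumptions only 6 outputs fit in a 500ms frame, so even the low end of the 10 to 100 range (750ms) overruns it.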

Results So Far:

Here is the current prototyping setup for MARVINA-R. As of a couple of weeks ago, I was able to run the entire Raspberry Pi and applied hardware setup and successfully interface with the robot with the components disconnected.

I'll upload a video of the final stage of initial testing in the near future - it's great fun!

The main issues really do come down to hardware limitations. The microphone is a cheap ~$6 thing from Amazon and requires you to shout at the poor robot to get it to do anything! The second limitation currently comes from outputting the text-to-speech, which does have a time lag from speaking to output of around 4 seconds. Not terrible, but also can be improved.

To my mind, the proof of concept has been created - this is possible. Now I can justify further time, and investment, for better parts and for more software engineering!

#robot#robotics#roomba#roomba hack#ai#artificial intelligence#machine learning#applied hardware#ai research#ai development#cybernetics#neural networks#neural network#raspberry pi#open source

9 notes

Text

Neural programming finished. Creation algorithm complete.

11 notes

Text

Romanian AI Helps Farmers and Institutions Get Better Access to EU Funds - Technology Org

New Post has been published on https://thedigitalinsider.com/romanian-ai-helps-farmers-and-institutions-get-better-access-to-eu-funds-technology-org/

A Romanian state agency overseeing rural investments has adopted artificial intelligence to aid farmers in accessing European Union funds.

Gardening based on aquaculture technology. Image credit: sasint via Pixabay, free license

The Agency for Financing Rural Investments (AFIR) revealed that it integrated robots from software automation firm UiPath approximately two years ago. These robots have assumed the arduous task of accessing state databases to gather land registry and judicial records required by farmers, entrepreneurs, and state entities applying for EU funding.

George Chirita, director of AFIR, emphasized that AI-driven automation was groundbreaking in expediting the most important organizational processes for farmers, thereby enhancing their efficiency. Since the introduction of these robots, AFIR has managed financing requests totaling 5.32 billion euros ($5.75 billion) from over 50,000 beneficiaries, including farmers, businesses, and local institutions.

The implementation of robots has notably saved AFIR staff approximately 784 days’ worth of document searches. Over the past two decades, AFIR has disbursed funds amounting to 21 billion euros.

Despite Romania’s burgeoning status as a technology hub with a highly skilled workforce, the nation continues to lag behind its European counterparts in offering digital public services to citizens and businesses, and in effectively accessing EU development funds. Eurostat data from 2023 indicated that only 28% of Romanians possessed basic digital skills, significantly below the EU average of 54%. Moreover, Romania’s digital public services scored 45, well below the EU average of 84.

UiPath, the Romanian company valued at $13.3 billion following its public listing on the New York Stock Exchange, also provides automation solutions to agricultural agencies in other countries, including Norway and the United States.

Written by Vytautas Valinskas

#000#2023#A.I. & Neural Networks news#ai#aquaculture#artificial#Artificial Intelligence#artificial intelligence (AI)#Authored post#automation#billion#data#databases#development#efficiency#eu#EU funds#european union#Featured technology news#Fintech news#Funding#gardening#intelligence#investments#it#new york#Norway#Other#Robots#Romania

2 notes

Text

not reading the rest of this post cuz it talks about the talos principle and i want to play those games blind (& soon) but this is exactly how i feel too -- exactly what i've said in the past as well -- & it is mega validating hearing it from someone who works in tech

#i learned about image-gen neural nets in college like a year before they became pop culture knowledge#so im well aware of the fact that they are in many ways a natural next step in the development of technology intended to emulate human capab#ilities#trying to model different processes and outputs of the brain is like computational neuroscience 101. you dont get to Tell People Not To Do T#hat#the step that follows figuring out how something works is figuring out how to replicate it

3 notes

Text

Nicely sums up why I hate it when a news report starts with "Researchers say..."

The whole "the brain isn't fully mature until age 25" bit is actually a fairly impressive bit of pseudoscience for how incredibly stupid the way it misinterprets the data it's based on is.

Okay, so: there's a part of the human brain called the "prefrontal cortex" which is, among other things, responsible for executive function and impulse control. Like most parts of the brain, it undergoes active "rewiring" over time (i.e., pruning unused neural connections and establishing new ones), and in the case of the prefrontal cortex in particular, this rewiring sharply accelerates during puberty.

Because the pace of rewiring in the prefrontal cortex is linked to specific developmental milestones, it was hypothesised that it would slow down and eventually stop in adulthood. However, the process can't directly be observed; the only way to tell how much neural rewiring is taking place in a particular part of the brain is to compare multiple brain scans of the same individual performed over a period of time.

Thus, something called a "longitudinal study" was commissioned: the same individuals would undergo regular brain scans over a period of many years, beginning in early childhood, so that their prefrontal development could accurately be tracked.

The longitudinal study was originally planned to follow its subjects up to age 21. However, when the predicted cessation of prefrontal rewiring was not observed by age 21, additional funding was obtained, and the study period was extended to age 25. The predicted cessation of prefrontal development wasn't observed by age 25, either, at which point the study was terminated.

When the mainstream press got hold of these results, the conclusion that prefrontal rewiring continues at least until age 25 was reported as prefrontal development finishing at age 25. Critically, this is the exact opposite of what the study actually concluded. The study was unable to identify a stopping point for prefrontal development because no such stopping point was observed for any subject during the study period. The only significance of the age 25 is that no subjects were tracked beyond this age because the study ran out of funding!

It gets me when people try to argue against the neuroscience-proves-everybody-under-25-is-a-child talking point by claiming that it's merely an average, or that prefrontal development doesn't tell the whole story. Like, no, it's not an average – it's just bullshit. There's no evidence that the cited phenomenon exists at all. If there is an age where prefrontal rewiring levels off and stops (and it's not clear that there is), we don't know what age that is; we merely know that it must be older than 25.

#biology#neuroscience#pseudoscience#prefrontal cortex#neural rewiring#emotional development#maturity

27K notes

Text

The Teleodynamic Face: Craniofacial Patterning as a Morphogenetic Threshold of Expression, Identity, and Coherence | ChatGPT4o

The human face is the most expressive, symbolic, and socially loaded surface of the body. Yet its formation is deeply rooted in embryological processes shared with aquatic ancestors. This paper reframes craniofacial development not as mere biological construction, but as a teleodynamic event — a layered folding of energetic, genetic, and semiotic forces that result…

#ChatGPT#communication#craniofacial development#embryological folding#expression#morphogenetic fields#neural crest cells#pharyngeal arches#Regenerative Coherence#semiotic anatomy#Teleodynamics

1 note

Text

Project "ML.Pneumonia": Finale

Final accuracy metrics:

Project model - 83%

Basic model - 75%

Basic model with larger dataset - 83%

Overall results:

A good autoencoder model has been developed

A classifier has been created which surpasses the basic model in accuracy

Both models scale well (the larger the datasets used in training, the better the results that can be obtained)

Development proposals:

Increase hardware resources

Consider the need to create larger models (increase the number of CNN layers, filters…)

Use larger datasets

Improve the preprocessing of the datasets used (a CNN may not handle incorrectly rotated scans well, and the datasets do not always contain the expected type of scan (e.g. in one of the datasets I've noticed a longitudinal CT scan instead of a transverse one))

The link to the GitHub repository with clean project code and details of results is left below.

#student project#machine learning#neural network#computer vision#ai#artificial intelligence#medicine#diagnostics#pneumonia#pneumonia detection#developer's diaries

0 notes

Text

1 note

Text

Listening to as many different genres of music as possible is good for your neural development.

4 notes

Text

#cyber security#ai#chatgpt#devops#neural networks#bug bounty#cyberpunk 2077#cybersecurity#information security#information technology#5g technology#5g network#5g services#books and reading#books#development#software#services#developer

0 notes

Text

From Recurrent Networks to GPT-4: Measuring Algorithmic Progress in Language Models - Technology Org

New Post has been published on https://thedigitalinsider.com/from-recurrent-networks-to-gpt-4-measuring-algorithmic-progress-in-language-models-technology-org/

In 2012, the best language models were small recurrent networks that struggled to form coherent sentences. Fast forward to today, and large language models like GPT-4 outperform most students on the SAT. How has this rapid progress been possible?

Image credit: MIT CSAIL

In a new paper, researchers from Epoch, MIT FutureTech, and Northeastern University set out to shed light on this question. Their research breaks down the drivers of progress in language models into two factors: scaling up the amount of compute used to train language models, and algorithmic innovations. In doing so, they perform the most extensive analysis of algorithmic progress in language models to date.

Their findings show that due to algorithmic improvements, the compute required to train a language model to a certain level of performance has been halving roughly every 8 months. “This result is crucial for understanding both historical and future progress in language models,” says Anson Ho, one of the two lead authors of the paper. “While scaling compute has been crucial, it’s only part of the puzzle. To get the full picture you need to consider algorithmic progress as well.”

The paper’s methodology is inspired by “neural scaling laws”: mathematical relationships that predict language model performance given certain quantities of compute, training data, or language model parameters. By compiling a dataset of over 200 language models since 2012, the authors fit a modified neural scaling law that accounts for algorithmic improvements over time.

Based on this fitted model, the authors do a performance attribution analysis, finding that scaling compute has been more important than algorithmic innovations for improved performance in language modeling. In fact, they find that the relative importance of algorithmic improvements has decreased over time. “This doesn’t necessarily imply that algorithmic innovations have been slowing down,” says Tamay Besiroglu, who also co-led the paper.

“Our preferred explanation is that algorithmic progress has remained at a roughly constant rate, but compute has been scaled up substantially, making the former seem relatively less important.” The authors’ calculations support this framing, where they find an acceleration in compute growth, but no evidence of a speedup or slowdown in algorithmic improvements.

By modifying the model slightly, they also quantified the significance of a key innovation in the history of machine learning: the Transformer, which has become the dominant language model architecture since its introduction in 2017. The authors find that the efficiency gains offered by the Transformer correspond to almost two years of algorithmic progress in the field, underscoring the significance of its invention.
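To get a feel for these numbers, the 8-month halving time can be turned into rough multipliers. This is a simplification that treats the halving time as exact and constant, which the paper itself does not claim; the function name is invented for the example.

```python
# Rough arithmetic only: treats the reported ~8-month halving time as exact.
HALVING_MONTHS = 8

def efficiency_gain(months, halving_months=HALVING_MONTHS):
    """Factor by which the compute needed for a fixed performance level
    shrinks after `months` of algorithmic progress."""
    return 2 ** (months / halving_months)

print(efficiency_gain(8))    # one halving period
print(efficiency_gain(12))   # one year
print(efficiency_gain(24))   # two years
```

Under this simplification, one year of algorithmic progress is worth roughly a 2.8x reduction in required compute, and two full years about 8x, so the Transformer's "almost two years" would correspond to somewhat less than an 8x efficiency gain.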

While extensive, the study has several limitations. “One recurring issue we had was the lack of quality data, which can make the model hard to fit,” says Ho. “Our approach also doesn’t measure algorithmic progress on downstream tasks like coding and math problems, which language models can be tuned to perform.”

Despite these shortcomings, their work is a major step forward in understanding the drivers of progress in AI. Their results help shed light on how future developments in AI might play out, with important implications for AI policy. “This work, led by Anson and Tamay, has important implications for the democratization of AI,” said Neil Thompson, a coauthor and Director of MIT FutureTech. “These efficiency improvements mean that each year levels of AI performance that were out of reach become accessible to more users.”

“LLMs have been improving at a breakneck pace in recent years. This paper presents the most thorough analysis to date of the relative contributions of hardware and algorithmic innovations to the progress in LLM performance,” says Open Philanthropy Research Fellow Lukas Finnveden, who was not involved in the paper.

“This is a question that I care about a great deal, since it directly informs what pace of further progress we should expect in the future, which will help society prepare for these advancements. The authors fit a number of statistical models to a large dataset of historical LLM evaluations and use extensive cross-validation to select a model with strong predictive performance. They also provide a good sense of how the results would vary under different reasonable assumptions, by doing many robustness checks. Overall, the results suggest that increases in compute have been and will keep being responsible for the majority of LLM progress as long as compute budgets keep rising by ≥4x per year. However, algorithmic progress is significant and could make up the majority of progress if the pace of increasing investments slows down.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology

#A.I. & Neural Networks news#Accounts#ai#Algorithms#Analysis#approach#architecture#artificial intelligence (AI)#budgets#coding#data#deal#democratization#democratization of AI#Developments#efficiency#explanation#Featured information processing#form#Full#Future#GPT#GPT-4#growth#Hardware#History#how#Innovation#innovations#Invention

4 notes
