#parametric modeling

Explore tagged Tumblr posts

Text

youtube

In this Grasshopper exercise for beginners, you'll learn about many components and techniques that will help create many forms besides the one in the video. At the end of this tutorial, I will leave you with 2 small exercises that you can do on your own, basically minor adjustments to this exercise.

#mcneel grasshopper#grasshopper3d#grasshopper#grasshopper tutorials#parametric3d#parametric modeling#parametric design#grasshopper modeling#learn grasshopper#rhino grasshopper#mcneel rhino#rhino 3d#parametric tutorials#grasshopper tips and tricks#Youtube

2 notes

Text

Explore Parametric Modeling in 3D Design | BeeGraphy

Learn how parametric modeling is revolutionizing 3D design and architecture. Discover trends, techniques, and applications in BeeGraphy’s latest blog post.

0 notes

Text

Have you guys ever heard about this game "Slay The Princess"?

I sure have... It's been constantly spinning in my mind for a couple of months...

So I've tried to make something in Hero Forge about it... Here are my attempts at slaying modeling Chapter 2 Princesses...

Bonus: Hero Forge doesn't allow more than two figures on one base, so there is no way I could model The Stranger... So here is five of her fragments:

And also Tower lifting the chin of a raven that was used to determine Her hand position... He was so charming to me, I've decided to show you... Not Long, but a Little Quiet...

All models are free to use in your DnD campaigns, and will be available through links in my Google Doc... Once I finish compiling it... I also need to acknowledge, that I'm not the first person doing this... That honor, as far as I'm aware, goes to @imafuckingnerdineveryway and their two sets of models: Long Quiet and Shifting Mound Adversary and Damsel Which were partial inspiration for starting this project... Check them out! More people should engage with Hero Forge as an art program!

#slay the princess#stp#slay the princess fanart#stp fanart#stp princess#stp damsel#stp razor#stp witch#stp adversary#stp tower#stp beast#stp prisoner#stp nightmare#stp spectre#stp stranger#Hero Forge#Beast didn't have cool reference pose... Since she's always hiding... She is posed like a pouncing cougar in this one...#I've also gotten very used to modeling skirts from posable tails... Maybe Razor should have one as well...#Also all of them were modeled starting from the same base Princess... Just contorting her parametres in different ways...#At some point I'd like to model ch3 as well...#If anyone cares...#Boy do I look forward to modeling Networked Wild... Or Mutually Assured Destruction...#Wish me luck...

153 notes

Text

experiencing The Problems

#specifically the Problem of#either i need to reduce the set of syntactic parameters to exclusively independent items in order to use it with a bayesian model#(just Not how parametric syntactic theory works)#or i need to find a non-Bayesian way to detect horizontal transmission in phylogenetic trees#(not available to the best of my knowledge)#... or i need to change up my research aims#(NOT what i want to do)

4 notes

Text

liveblogging my descent into madness

#okay okay okay okay okay okay okay#my supervisor set a new deadline for Now. tonight#bc he wants to meet tomorrow 2 with more draft to talk about#rn im on 4 full pages and trying to figure out what the hell my analysis would practically look like step by step#which is hard when im not that good at stats and this is actually one of the things he should be helping me with#and he evaded questions when I did ask him abt#but! getting annoyed doesn’t help me now#I am putting together bullet point steps to help me get my head round it bc it’s midnight and I’m having trouble like#keeping how exactly the methods work straight in my head#generalised linear mixed models! woo!! I don’t know whether they substitute for finding an association between two factors first or are like#subsequent step to that. more refined. gives amount of variance in x due to y that can be explained by z factor#if I had more time I’d be able to figure this out and I will want to ask about this so maybe that’s worth leaving for now as long as I know#roughly what outputs I’m expecting and what things I’ll need to separate for each hypothesis#ohhhhhhh wait I’m describing summary statistics. Im saying I’ll do summary statistics for each factor first before I do a glmm#eg for spatial effect I need to see the correlation between distance and occupancy in individual sites#and whether there’s a difference in the average distance between my two groups#wait so that’s not a correlation it’s comparing two categories and seeing whether their distributions differ which. anova? non parametric?#dude i have no idea at this point I think this is smth I have to ask about#okay. so I haven’t touched my extension section and I want to have something there that he can give feedback on#so for each of my objectives I’ll detail an experiment I couldn’t do that would advance the objective somehow#in the first two that’ll be quantification#or do I do that? 
what did he say last week#okay im going now I got shit to do#deeply sorry to anyone who is still reading these science is hard and I’m TIRED#luke.txt

1 note

Text

HyperTransformer: G Additional Tables and Figures

#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes

Text

For the last several months I've been resisting the siren call of machining while enjoying the new-to-me channel Pask Makes, with its woodworking and tool production.

But yesterday I watched two videos from Adam Savage in a row, with all their semi-chaotic plotting, layout work, and winging it. I now desperately need access to a machine shop and I'm being so brave about it.

That said, I have just downloaded FreeCAD to get as close as I can digitally to that thought process without the metal shavings, blue stained fingertips, and sulfuric lubricant smell. Or at least as close as I can for free.

#started writing this post and had to pause for about an hour to search desperately for the name of my Intro to Machining Technology teacher#i'd thought of him and gotten to the point of being *pretty* sure he'd vanished from linkedin before#i confirmed he's definitely not there (or at least not the account that connected with mine)#and another person with his first name overwrote my memory of his last BUT this time i managed to find the right search terms#that pulled up his spot in the school's whitepages directory#so i emailed his school account knowing full well he probably doesn't have access anymore as an old adjunct#i certainly don't - it was almost ten years ago#but if he does or there's some email forwarding possible he's gotten a thank you message#because that was one of the classes i loved the most from my community college and tbh my whole school experience#anyway this isn't just impulsive yearning to machine#if I'm doing it right the project after next will have a lot of assets that parametric modeling would help#including vehicles if I'm brave which i need to be if I'm really using it as an environment artist portfolio piece#specifically they might require nurbs which would need me to download and practice with the silk add-on#which i think common sense dictates should happen after i understand how to use the vanilla toolsets#so gaining that familiarity might be what we're up to some stream soon#ramblings#tag you're writ

1 note

Text

Inventor and SolidWorks are similar 3D modeling packages, and either is a solid choice. Both are industry standards, and both let you run extensive simulations before a design becomes reality.

Keep in mind that Inventor is cheaper, especially for initial setup. In the end, you can't go wrong with either program.

Inventor

Creator was Autodesk's 3D parametric tool before it became Inventor. Developed in 1999, it is a direct competitor of SolidWorks. The software combines 2D and 3D mechanical design and simulation tools, as well as documentation tools.

The geometry of an Inventor model is parametric, which means that specific parameters determine and modify the geometry.
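To make "parameters determine the geometry" concrete, here is a minimal Python sketch. It is not Inventor's API: the `PlateWithHole` class, its fields, and the dimensions are all hypothetical, chosen only to show that the shape is derived from a handful of named parameters, so changing one parameter regenerates everything that depends on it.

```python
from dataclasses import dataclass, replace
import math


@dataclass(frozen=True)
class PlateWithHole:
    """Hypothetical parametric part: a rectangular plate with one through-hole.

    Illustration only; this does not use or mimic any real CAD API.
    """
    width: float          # mm
    height: float         # mm
    thickness: float      # mm
    hole_diameter: float  # mm

    def volume(self) -> float:
        # The geometry is never stored directly; it is recomputed from the parameters.
        plate = self.width * self.height * self.thickness
        hole = math.pi * (self.hole_diameter / 2) ** 2 * self.thickness
        return plate - hole


part = PlateWithHole(width=100.0, height=50.0, thickness=5.0, hole_diameter=10.0)
wider = replace(part, width=120.0)  # edit one parameter, the derived geometry follows
```

Calling `wider.volume()` reflects the new width without any manual re-modeling, which is the core idea behind a parametric feature tree.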

Benefits of Inventor

1. Direct editing and free-form modeling are available as alternatives to parametric design's predictive approach.

2. Inventor loads a design's graphic parts separately from its material and geometric components. Because the latter are the heaviest data consumers in most designs, this makes it noticeably faster.

3. Inventor can test a 3D design under real-life conditions. In its Dynamic Simulation module, you can put pressure on key points such as joints by applying torque. The burst tool is a favorite among many: with it, you can simulate an emergency scenario in which a weld gives way.

4. Mechanical parts, such as blades or support wires, can also be calculated automatically behind the scenes. A large design can be viewed without getting bogged down in small details.

Read more

0 notes

Note

Are there any primitives or operations you wished parametric CAD software had?

This is tricky, because parametric CAD is what I learned to design on so its feature set feels "natural".

I don't really think so! Most of the obvious innovations are already covered, SolidWorks can take a model back and forth between parametric and primitives modelling in its own weird way, Inventor has really great design for manufacture features, from what I've seen SolidEdge has done some clever stuff with the solver to help you design parts that are customizable as you go down the chain. Who knows what's going on in NX these days, not me. There's definitely some holes in the sense of individual packages lacking features, but almost anything you can ask has been implemented somewhere, by someone.

Good quality design for manufacture tools really do help, I remember doing sheet metal stuff in Inventor back before they cut off free Inventor access and being able to see your generated sheet and bend allowances so clearly was great, and now even OnShape has pretty solid design helpers.

A thing small shops and hobbyists would probably like is better handling of point clouds and photogrammetry for matching parts, since you're much more likely to be working with parts and projects where you didn't do all the design, I've spent many hours trying to accurately model a mating feature, but even that's like. Pretty good these days, importing 3D scans into an editor is pretty standard and the good CAD packages will even let you pick up holes and clean up point clouds directly from the scan.

I'm not that much of a mech eng, and never really was, my CAD is mostly self taught for simple tasks, real mechanical designers no doubt have better opinions on this, @literallymechanical probably has thoughts on T-splines.

37 notes

Text

youtube

In this third part of the Grasshopper series on attractors for beginners, we will learn about another component called Curve Closest Point, an alternative way to have attractor points affect your shape. I recommend you also watch the first two videos in the series to better understand the attractors' logic, even though this video offers a good explanation on its own. In the video description, you can find the links for the other two videos, along with a link to download the demo file from the second part that I used as a starting point for this tutorial.

#rhino grasshopper#grasshopper 3d#grasshopper tutorials#mcneel grasshopper#parametric 3d#parametric tutorials#grasshopper beginners#parametric beginners#parametric modeling#learn grasshopper#mcneel#learn parametric#grasshopper attractors#Youtube

0 notes

Text

Old-school planning vs new-school learning is a false dichotomy

I wanted to follow up on this discussion I was having with @metamatar, because this was getting away from the original point and justified its own thread. In particular, I want to dig into this point:

rule based planners, old school search and control still outperform learning in many domains with guarantees because end to end learning is fragile and dependent on training distribution. Lydia Kavraki's lab recently did SIMD vectorisation to RRT based search and saw like a several hundred times magnitude jump for performance on robot arms – suddenly severely hurting the case for doing end to end learning if you can do requerying in ms. It needs no signal except robot start, goal configuration and collisions. Meanwhile RL in my lab needs retraining and swings wildly in performance when using a slightly different end effector.

In general, the more I learn about machine learning and robotics, the less I believe that the dichotomies we learn early on actually hold up to close scrutiny. Early on we learn about how support vector machines are non-parametric kernel methods, while neural nets are parametric methods that update their parameters by gradient descent. And this is true, until you realize that kernel methods can be made more efficient by making them parametric, and large neural networks generalize because they approximate non-parametric kernel methods with stationary parameters. Early on we learn that model-based RL learns a model that it uses for planning, while model-free methods just learn the policy. Except that it's possible to learn what future states a policy will visit and use this to plan without learning an explicit transition function, using the TD learning update normally used in model-free RL. And similar ideas by the same authors are the current state-of-the-art in offline RL and imitation learning for manipulation. Is this model-free? Model-based? Both? Neither? Does it matter?
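A tiny tabular sketch of that last idea, with the caveat that it is my simplification and not the method from the linked papers: the successor representation M[s][j] estimates the discounted number of future visits to state j when starting from s, and it can be learned with an ordinary TD update from observed (state, next state) pairs alone. The function name `learn_sr`, the two-state cycle, and the step size are all assumptions made for illustration.

```python
def learn_sr(transitions, n_states, alpha=0.1, gamma=0.9):
    """Learn a tabular successor representation with TD(0).

    transitions: iterable of (s, s_next) pairs observed while following a policy.
    No transition function is ever estimated; only visit predictions are updated.
    """
    M = [[0.0] * n_states for _ in range(n_states)]
    for s, s_next in transitions:
        for j in range(n_states):
            # TD target: "I am in j right now" plus discounted future visits to j.
            target = (1.0 if j == s else 0.0) + gamma * M[s_next][j]
            M[s][j] += alpha * (target - M[s][j])
    return M


# Hypothetical environment: a deterministic two-state cycle 0 -> 1 -> 0 -> ...
cycle = [(0, 1), (1, 0)] * 3000
M = learn_sr(cycle, n_states=2)
```

For this cycle the exact answer is M[0][0] = 1 / (1 - gamma^2) and M[0][1] = gamma / (1 - gamma^2), and the learned table converges to those values. Given any reward vector r, the value of state s is then just the dot product of M[s] with r, which is where the "planning without a model" flavor comes from.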

In my physics education, one thing that came up a lot is duality, the idea that there are typically two or more equivalent representations of a problem. One based on forces, newtonian dynamics, etc, and one as a minimization* problem. You can find the path that light will take by knowing that the incoming angle is always the same as the outgoing angle, or you can use the fact that light always follows the fastest* path between two points.
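Here is a quick numerical check of that duality for a mirror, as a sketch under assumed geometry: light leaves a point at height a above a flat mirror, bounces somewhere along it, and arrives at a point at height b a horizontal distance d away. The function names and the ternary-search minimizer are my own choices; nothing here comes from a physics library.

```python
import math


def path_length(x, a, b, d):
    """Total path length from (0, a) to the mirror point (x, 0) to (d, b)."""
    return math.hypot(x, a) + math.hypot(d - x, b)


def fastest_reflection_point(a, b, d, iters=200):
    """Minimize the path length over the bounce point with ternary search.

    The path length is convex in x, so ternary search finds its minimum.
    """
    lo, hi = 0.0, d
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if path_length(m1, a, b, d) < path_length(m2, a, b, d):
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2


a, b, d = 1.0, 2.0, 3.0
x = fastest_reflection_point(a, b, d)
angle_in = math.atan2(x, a)        # angle of incidence, measured from the normal
angle_out = math.atan2(d - x, b)   # angle of reflection, measured from the normal
```

The minimizer knows nothing about angles, yet `angle_in` and `angle_out` come out equal up to numerical tolerance: the "local rule" and the "optimization" descriptions pick the same path.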

I'd like to argue that there's a similar but underappreciated analog in AI research. Almost all problems come down to optimization. And in this regard, there are two things that matter -- what you're trying to optimize, and how you're trying to optimize it. And different methods that optimize approximately the same objective see approximately similar performance, unless one is much better than the other at doing that optimization. A lot of classical planners can be seen as approximately performing optimization on a specific objective.

Let me take a specific example: MCTS and policy optimization. You can show that the Upper Confidence Bound algorithm used by MCTS is approximately equal to regularized policy optimization. You can choose to guide the tree search with UCB (a classical bandit algorithm) or policy optimization (a reinforcement learning algorithm), but the choice doesn't matter much because they're optimizing basically the same thing. Similarly, you can add a state occupancy measure regularization to MCTS. If you do, MCTS reduces to RRT in the case with no rewards. And if you do this, then the state-regularized MCTS searches much more like a sampling-based motion planner instead of like the traditional UCB-based MCTS planner. What matters is really the objective that the planner was trying to optimize, not the specific way it was trying to optimize it.
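For reference, this is roughly what the UCB side of that comparison looks like in code. It is the textbook UCB1 bandit rule, not the exact variant from the paper, and the function name `ucb1_select` and the exploration constant are assumptions for the sketch.

```python
import math


def ucb1_select(counts, values, c=math.sqrt(2)):
    """Pick which child to visit next using the UCB1 rule.

    counts[i]: number of times child i has been visited.
    values[i]: mean reward observed for child i.
    """
    # Visit every child once before trusting any estimate.
    for i, n in enumerate(counts):
        if n == 0:
            return i
    total = sum(counts)
    # Mean reward plus an exploration bonus that shrinks as a child is visited more.
    scores = [v + c * math.sqrt(math.log(total) / n) for v, n in zip(values, counts)]
    return max(range(len(scores)), key=scores.__getitem__)
```

With equal visit counts the rule just picks the best mean, e.g. `ucb1_select([10, 10], [0.2, 0.8])` picks child 1. With very unequal counts the bonus dominates, so `ucb1_select([1000, 1], [0.6, 0.5])` also picks child 1 even though its mean is lower. That bonus term is the piece that plays the role of the regularizer in the policy-optimization view.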

For robotics, the punchline is that I don't think it's really the distinction of new RL method vs old planner that matters. RL methods that attempt to optimize the same objective as the planner will perform similarly to the planner. RL methods that attempt to optimize different objectives will perform differently from each other, and planners that attempt to optimize different objectives will perform differently from each other. So I'd argue that the brittleness and unpredictability of RL in your lab isn't because it's RL per se, but because standard RL algorithms don't have a long-horizon exploration term in their loss functions that would make them behave similarly to RRT. If we find a way to minimize the state occupancy measure loss described in the above paper and other theory papers, I think we'll see the same performance and stability as RRT, but for a much more general set of problems. This is one of the big breakthroughs I'm expecting to see in the next 10 years in RL.

*okay yes technically not always minimization, the physical path can also be an inflection point or local maximum, but cmon, we still call it the Principle of Least Action.

#note: this is of course a speculative opinion piece outlining potentially fruitful research directions#not a hard and fast “this will happen” prediction or guide to achieving practical performance

29 notes

Text

Preserved in our archive

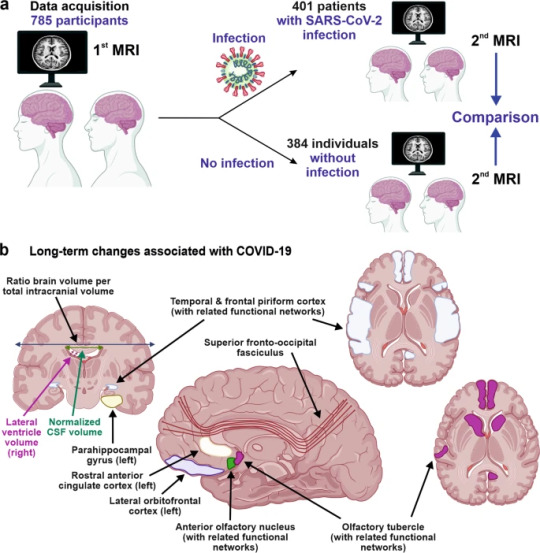

A research letter from 2022 highlighting the effects of even "mild" covid on the brain.

Dear Editor,

A recent study published in Nature by Douaud and colleagues¹ shows that SARS-CoV-2 infection is associated with longitudinal effects, particularly on brain structures linked to the olfactory cortex, modestly accelerated reduction in global brain volume, and enhanced cognitive decline. Thus, even mild COVID-19 can be associated with long-lasting deleterious effects on brain structure and function.

Loss of smell and taste are amongst the earliest and most common effects of SARS-CoV-2 infection. In addition, headaches, memory problems, confusion, or loss of speech and motility occur in some individuals.² While important progress has been made in understanding SARS-CoV-2-associated neurological manifestations, the underlying mechanisms are under debate and most knowledge stems from analyses of hospitalized patients with severe COVID-19.² Most infected individuals, however, develop mild to moderate disease and recover without hospitalization. Whether or not mild COVID-19 is associated with long-term neurological manifestations and structural changes indicative of brain damage remained largely unknown.

Douaud and co-workers examined 785 participants of the UK Biobank (www.ukbiobank.ac.uk) who underwent magnetic resonance imaging (MRI) twice with an average inter-scan interval of 3.2 years, and 401 individuals testing positive for SARS-CoV-2 infection between MRI acquisitions (Fig. 1a). Strengths of the study are the large number of samples, the availability of scans obtained before and after infection, and the multi-parametric quantitative analyses of serial MRI acquisitions.¹ These comprehensive and automated analyses with a non-infected control group allowed the authors to dissect consistent brain changes caused by SARS-CoV-2 infection from pre-existing conditions. Altogether, the MRI scan processing pipeline used extracted more than 2,000 features, named imaging-derived phenotypes (IDPs), from each participant’s imaging data. Initially, the authors focused on IDPs involved in the olfactory system. In agreement with the frequent impairment of smell and taste in COVID-19, they found greater atrophy and indicators of increased tissue damage in the anterior cingulate cortex, orbitofrontal cortex and insula, as well as in the ventral striatum, amygdala, hippocampus and para-hippocampal gyrus, which are connected to the primary olfactory cortex (Fig. 1b). Taking advantage of computational models allowing to differentiate changes related to SARS-CoV-2 infection from physiological age-related brain changes (e.g. decreases of brain volume with aging),³ they also explored IDPs covering the entire brain. Although most individuals experienced only mild symptoms of COVID-19, the authors detected an accelerated reduction in whole-brain volume and more pronounced cognitive declines associated with increased atrophy of a cognitive lobule of the cerebellum (crus II) in individuals with SARS-CoV-2 infection compared to the control group. These differences remained significant when 15 people who required hospitalization were excluded.
Most brain changes for IDPs were moderate (average differences between the two groups of 0.2–2.0%, largest for volume of parahippocampal gyrus and entorhinal cortex) and accelerated brain volume loss was “only” observed in 56–62% of infected participants. Nonetheless, these results strongly suggest that even clinically mild COVID-19 might induce long-term structural alterations of the brain and cognitive impairment.

The study provides unique insights into COVID-19-associated changes in brain structure. The authors took great care in appropriately matching the case and control groups, making it unlikely that observed differences are due to confounding factors, although this possibility can never be entirely excluded. The mechanisms underlying these infection-associated changes, however, remain to be clarified. Viral neurotropism and direct infection of cells of the olfactory system, neuroinflammation and lack of sensory input have been suggested as reasons for the degenerative events in olfactory-related brain structures and neurological complications.⁴ These mechanisms are not mutually exclusive and may synergize in causing neurodegenerative disorders as a consequence of COVID-19.

The study participants became infected between March 2020 and April 2021, before the emergence of the Omicron variant of concern (VOC) that currently dominates the COVID-19 pandemic. During that time period, the Alpha and Beta VOCs dominated in the UK and all results were obtained from individuals between 51 and 81 years of age. It will be of great interest to clarify whether Omicron, which seems to be less pathogenic than other SARS-CoV-2 variants, also causes long-term brain damage. The vaccination status of the participants was not available in the study¹ and it will be important to clarify whether long-term changes in brain structure also occur in vaccinated and/or younger individuals. Other important questions are whether these structural changes are reversible or permanent and may even enhance the frequency of neurodegenerative diseases that are usually age-related, such as Alzheimer’s, Parkinson’s or Huntington’s disease. Previous findings suggest that cognitive disorders improve over time after severe COVID-19;⁵ yet it remains to be determined whether the described brain changes will translate into symptoms later in life such as dementia. Douaud and colleagues report that none of the top 10 IDPs correlated significantly with the time interval between SARS-CoV-2 infection and the 2nd MRI acquisition, suggesting that the observed abnormalities might be very long-lasting.

Currently, many restrictions and protective measures are relaxed because Omicron is highly transmissible but usually causes mild to moderate acute disease. This raises hope that SARS-CoV-2 may evolve towards reduced pathogenicity and become similar to circulating coronaviruses causing mild respiratory infections. More work needs to be done to clarify whether the current Omicron and future variants of SARS-CoV-2 may also cause lasting brain abnormalities and whether these can be prevented by vaccination or therapy. However, the finding by Douaud and colleagues¹ that SARS-CoV-2 causes structural changes in the brain that may be permanent and could relate to neurological decline is of concern and illustrates that the pathogenesis of this virus is markedly different from that of circulating human coronaviruses. Further studies to elucidate the mechanisms underlying COVID-19-associated neurological abnormalities and how to prevent or reverse them are urgently needed.

REFERENCES (Follow link)

#public health#wear a mask#covid 19#pandemic#covid#wear a respirator#mask up#still coviding#coronavirus#sars cov 2#long covid#covid conscious#covid is airborne

27 notes

Text

i have to make a 10-part thing for my parametric modeling class but idk what i wanna do

#my first thought is maybe a way for me to hold a pen that would make it easier on my tendonitis cus ive got ideas already#buuuuut. i dunno if i could stretch that into 10 individual parts.

12 notes

Text

[ 21st may, 2024 • DAY 100/145 ]

don't like the maths but the parametric surfaces are so cute??? they're just adorable, ok?? they're giving me happiness. wish i could have a couple as pets <33

-> Comp minitest (20/20)

-> finished my part of the CG project (aka modelled 4 parametric surfaces - picked some really cool ones too!!) + made a list of stuff we still need to fix before the deadline

-> studied CG (read everything + notes - really need a good grade.. i don't want to do the exam!)

-> played Sea of Stars (it's a small addiction in the making but that's okaaaaayy)

-> watched Marriage Story (2019)

#stargazerbibi#bless whoever made these available for me to see 🙈#study#studyblr#100 dop#100 days of productivity#studyspo#studygram#stem#uni#student#studyspiration#studystudystudy#aesthetic#productivity#student life#studying#studies#study blog#study motivation#college#uni life#university#stem studyblr#stemblr#stem girls

38 notes

Text

John Cage, "Imaginary Landscape no. 4"

1951

Musical composition

Imaginary Landscape No. 4 explores indeterminacy, mass media, and highly structured experience in a piece that changes with each time and place it is performed. Two performers are stationed at each radio: one is responsible for dialing the radio station, the second for controlling the amplitude and timbre of the signal. Using conventional notation (notes on a five-line staff), performers adjust the radio according to a 2-1-3 rhythmic structure. Since the 'music' of the piece emerges from whatever happens to be on the airwaves, it is impossible to predict what it will sound like in any given performance, which was Cage's intended effect. Employing a parametric compositional model and aleatory chance methods, Cage used the I Ching, or Book of Changes, an ancient Chinese book of wisdom and prophecy, to determine changes in the tuning, amplitude, and timbre of each radio.

3 notes

Text

HyperTransformer: A Example of a Self-Attention Mechanism For Supervised Learning

#conventional-machine-learning#convolutional-neural-network#few-shot-learning#hypertransformer#parametric-model#small-target-cnn-architectures#supervised-model-generation#task-independent-embedding

0 notes