#robustness of AI systems

Explore tagged Tumblr posts

Text

What AI Cannot Do: AI Limitation

Artificial Intelligence (AI) has made remarkable strides in recent years, revolutionizing industries from healthcare to finance. However, despite its impressive capabilities, there are inherent AI limitation to what it can achieve. Understanding these limitations is crucial for effectively integrating AI into our lives and recognizing its role as a tool rather than a replacement for human…

#adversarial attacks on AI#AI in customer service#AI limitations#automation and employment.#biases in AI algorithms#common sense in AI#context understanding in AI#creativity in artificial intelligence#data quality in AI#emotional intelligence in machines#ethical concerns with AI#human-AI collaboration#job displacement due to automation#machine learning limitations#robustness of AI systems

0 notes

Note

Hi hello is temporal shift one of the things you've written?? Or have you only posted about it so far because it sounds really interesting and now I want to know more

temporal shift is one of those fic ideas that's been rattling around in my brain since two? three years ago? but I have not gotten around to writing it yet. I have talked about it a few times before, you can always check its tag for that!

the fic centers around a bad future in which the paladins fail to stop Sendak's plan to destroy Earth, and things kind of... spiral from there. They manage to stop Honerva's plans, but only at the cost of a self-sacrifice from Lance and the destruction of the red lion (and therefore, Voltron). They spend the next few hundred years in a stalemate with Honerva, who still nearly breaks it- the cost of which being the black lion, although Keith survives.

Eventually, faced with a new threat from Honerva, and left with only one functioning lion, Allura and Keith make a choice to go back to the past to prevent all of this from happening. It's a risky plan, but if they do nothing, Honerva will simply destroy all of reality which is... a lot worse than any consequences time travel has. They construct the ship using the remains of the black lion, which is why it's Keith who travels back to the past to unfuck the future.

The fic then follows Keith's attempt to do just that... although, as always, it's not that simple.

#asks#temporal shift#i have a very robust tagging system for everyone's (me included) convenience#kosmo gets to come with him. as does the AI that Pidge built before she died. that she based on herself.#(hunk and pidge eventually pass from old age and their lions go dormant)

10 notes

·

View notes

Text

It’s an open secret in fashion. Unsold inventory goes to the incinerator; excess handbags are slashed so they can’t be resold; perfectly usable products are sent to the landfill to avoid discounts and flash sales. The European Union wants to put an end to these unsustainable practices. On Monday, [December 4, 2023], it banned the destruction of unsold textiles and footwear.

“It is time to end the model of ‘take, make, dispose’ that is so harmful to our planet, our health and our economy,” MEP Alessandra Moretti said in a statement. “Banning the destruction of unsold textiles and footwear will contribute to a shift in the way fast fashion manufacturers produce their goods.”

This comes as part of a broader push to tighten sustainable fashion legislation, with new policies around ecodesign, greenwashing and textile waste phasing in over the next few years. The ban on destroying unsold goods will be among the longer lead times: large businesses have two years to comply, and SMEs have been granted up to six years. It’s not yet clear on whether the ban applies to companies headquartered in the EU, or any that operate there, as well as how this ban might impact regions outside of Europe.

For many, this is a welcome decision that indirectly tackles the controversial topics of overproduction and degrowth. Policymakers may not be directly telling brands to produce less, or placing limits on how many units they can make each year, but they are penalising those overproducing, which is a step in the right direction, says Eco-Age sustainability consultant Philippa Grogan. “This has been a dirty secret of the fashion industry for so long. The ban won’t end overproduction on its own, but hopefully it will compel brands to be better organised, more responsible and less greedy.”

Clarifications to come

There are some kinks to iron out, says Scott Lipinski, CEO of Fashion Council Germany and the European Fashion Alliance (EFA). The EFA is calling on the EU to clarify what it means by both “unsold goods” and “destruction”. Unsold goods, to the EFA, mean they are fit for consumption or sale (excluding counterfeits, samples or prototypes)...

The question of what happens to these unsold goods if they are not destroyed is yet to be answered. “Will they be shipped around the world? Will they be reused as deadstock or shredded and downcycled? Will outlet stores have an abundance of stock to sell?” asks Grogan.

Large companies will also have to disclose how many unsold consumer products they discard each year and why, a rule the EU is hoping will curb overproduction and destruction...

Could this shift supply chains?

For Dio Kurazawa, founder of sustainable fashion consultancy The Bear Scouts, this is an opportunity for brands to increase supply chain agility and wean themselves off the wholesale model so many rely on. “This is the time to get behind innovations like pre-order and on-demand manufacturing,” he says. “It’s a chance for brands to play with AI to understand the future of forecasting. Technology can help brands be more intentional with what they make, so they have less unsold goods in the first place.”

Grogan is equally optimistic about what this could mean for sustainable fashion in general. “It’s great to see that this is more ambitious than the EU’s original proposal and that it specifically calls out textiles. It demonstrates a willingness from policymakers to create a more robust system,” she says. “Banning the destruction of unsold goods might make brands rethink their production models and possibly better forecast their collections.”

One of the outstanding questions is over enforcement. Time and again, brands have used the lack of supply chain transparency in fashion as an excuse for bad behaviour. Part of the challenge with the EU’s new ban will be proving that brands are destroying unsold goods, not to mention how they’re doing it and to what extent, says Kurazawa. “Someone obviously knows what is happening and where, but will the EU?”"

-via British Vogue, December 7, 2023

#fashion#slow fashion#style#european union#eu#eu news#eu politics#sustainability#upcycle#reuse#reduce reuse recycle#ecofriendly#fashion brands#fashion trends#waste#sustainable fashion#sustainable living#eco friendly#good news#hope

10K notes

·

View notes

Text

forever tired of our voices being turned into commodity.

forever tired of thorough medaocrity in the AAC business. how that is rewarded. How it fails us as users. how not robust and only robust by small small amount communication systems always chosen by speech therapists and funded by insurance.

forever tired of profit over people.

forever tired of how companies collect data on every word we’ve ever said and sell to people.

forever tired of paying to communicate. of how uninsured disabled people just don’t get a voice many of the time. or have to rely on how AAC is brought into classrooms — which usually is managed to do in every possible wrong way.

forever tired of the branding and rebranding of how we communicate. Of this being amazing revealation over and over that nonspeakers are “in there” and should be able to say things. of how every single time this revelation comes with pre condition of leaving the rest behind, who can’t spell or type their way out of the cage of ableist oppression. or are not given chance & resources to. Of the branding being seen as revolution so many times and of these companies & practitioners making money off this “revolution.” of immersion weeks and CRP trainings that are thousands of dollars and wildly overpriced letterboards, and of that one nightmare Facebook group g-d damm it. How this all is put in language of communication freedom. 26 letters is infinite possibilities they say - but only for the richest of families and disabled people. The rest of us will have to live with fewer possibilities.

forever tired of engineer dads of AAC users who think they can revolutionize whole field of AAC with new terrible designed apps that you can’t say anything with them. of minimally useful AI features that invade every AAC app to cash in on the new moment and not as tool that if used ethically could actually help us, but as way of fixing our grammar our language our cultural syntax we built up to sound “proper” to sound normal. for a machine, a large language model to model a small language for us, turn our inhuman voices human enough.

forever tired of how that brand and marketing is never for us, never for the people who actually use it to communicate. it is always for everyone around us, our parents and teachers paras and SLPs and BCBAs and practitioners and doctors and everyone except the person who ends up stuck stuck with a bad organized bad implemented bad taught profit motivated way to talk. of it being called behavior problems low ability incompetence noncompliance when we don’t use these systems.

you all need to do better. We need to democritize our communication, put it in our own hands. (My friend & communication partner who was in Occupy Wall Street suggested phrase “Occupy AAC” and think that is perfect.) And not talking about badly made non-robust open source apps either. Yes a robust system needs money and recources to make it well. One person or community alone cannot turn a robotic voice into a human one. But our human voice should not be in hands of companies at all.

(this is about the Tobii Dynavox subscription thing. But also exploitive and capitalism practices and just lazy practices in AAC world overall. Both in high tech “ mainstream “ AAC and methods that are like ones I use in sense that are both super stigmatized and also super branded and marketed, Like RPM and S2C and spellers method. )

#I am not a product#you do not have to make a “spellers IPA beer ‘ about it I promise#communication liberation does not have a logo#AAC#capitalism#disability#nonspeaking#dd stuff#ouija talks#ouija rants

360 notes

·

View notes

Text

On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of tokens provided by a user. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is tokenized audio data.

The transcription output from Whisper is a prediction of what is most likely, not what is most accurate. Accuracy in Transformer-based outputs is typically proportional to the presence of relevant accurate data in the training dataset, but it is never guaranteed. If there is ever a case where there isn't enough contextual information in its neural network for Whisper to make an accurate prediction about how to transcribe a particular segment of audio, the model will fall back on what it “knows” about the relationships between sounds and words it has learned from its training data.

According to OpenAI in 2022, Whisper learned those statistical relationships from “680,000 hours of multilingual and multitask supervised data collected from the web.” But we now know a little more about the source. Given Whisper's well-known tendency to produce certain outputs like "thank you for watching," "like and subscribe," or "drop a comment in the section below" when provided silent or garbled inputs, it's likely that OpenAI trained Whisper on thousands of hours of captioned audio scraped from YouTube videos. (The researchers needed audio paired with existing captions to train the model.)

There's also a phenomenon called “overfitting” in AI models where information (in this case, text found in audio transcriptions) encountered more frequently in the training data is more likely to be reproduced in an output. In cases where Whisper encounters poor-quality audio in medical notes, the AI model will produce what its neural network predicts is the most likely output, even if it is incorrect. And the most likely output for any given YouTube video, since so many people say it, is “thanks for watching.”

In other cases, Whisper seems to draw on the context of the conversation to fill in what should come next, which can lead to problems because its training data could include racist commentary or inaccurate medical information. For example, if many examples of training data featured speakers saying the phrase “crimes by Black criminals,” when Whisper encounters a “crimes by [garbled audio] criminals” audio sample, it will be more likely to fill in the transcription with “Black."

In the original Whisper model card, OpenAI researchers wrote about this very phenomenon: "Because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself."

So in that sense, Whisper "knows" something about the content of what is being said and keeps track of the context of the conversation, which can lead to issues like the one where Whisper identified two women as being Black even though that information was not contained in the original audio. Theoretically, this erroneous scenario could be reduced by using a second AI model trained to pick out areas of confusing audio where the Whisper model is likely to confabulate and flag the transcript in that location, so a human could manually check those instances for accuracy later.

Clearly, OpenAI's advice not to use Whisper in high-risk domains, such as critical medical records, was a good one. But health care companies are constantly driven by a need to decrease costs by using seemingly "good enough" AI tools—as we've seen with Epic Systems using GPT-4 for medical records and UnitedHealth using a flawed AI model for insurance decisions. It's entirely possible that people are already suffering negative outcomes due to AI mistakes, and fixing them will likely involve some sort of regulation and certification of AI tools used in the medical field.

87 notes

·

View notes

Text

Friendly reminder that Wix.com is an Israeli-based company (& some website builders to look into instead)

I know the BDS movement is not targeting Wix.com specifically (see here for the companies they're currently boycotting) but since Wix originated in Israel as early as 2006, it would be best to drop them as soon as you can.

And while you're at it, you should leave DeviantArt too, since that company is owned by Wix. I deleted my DA account about a year ago not just because of their generative AI debacle but also because of their affiliation with their parent company. And just last month, DA has since shown their SUPPORT for Israel in the middle of Israel actively genociding the Palestinian people 😬

Anyway, I used to use Wix and I stopped using it around the same time that I left DA, but I never closed my Wix account until now. What WAS nice about Wix was how easy it was to build a site with nothing but a drag-and-drop system without any need to code.

So if you're using Wix for your portfolio, your school projects, or for anything else, then where can you go?

Here are some recommendations that you can look into for website builders that you can start for FREE and are NOT tied to a big, corporate entity (below the cut) 👇👇

Carrd.co

This is what I used to build my link hub and my portfolio, so I have the most experience with this platform.

It's highly customizable with a drag-and-drop arrangement system, but it's not as open-ended as Wix. Still though, it's easy to grasp & set up without requiring any coding knowledge. The most "coding" you may ever have to deal with is markdown formatting (carrd provides an on-screen cheatsheet whenever you're editing text!) and section breaks (which is used to define headers, footers, individual pages, sections of a page, etc.) which are EXTREMELY useful.

There's limits to using this site builder for free (max of 2 websites & a max of 100 elements per site), but even then you can get a lot of mileage out of carrd.

mmm.page

This is a VERY funny & charming website builder. The drag-and-drop system is just as open-ended as Wix, but it encourages you to get messy. Hell, you can make it just as messy as the early internet days, except the way you can arrange elements & images allows for more room for creativity.

Straw.page

This is an extremely simple website builder that you can start from scratch, except it's made to be accessible from your phone. As such, the controls are limited and intentionally simple, but I can see this being a decent website builder to start with if all you have is your phone. The other options above are also accessible from your phone, but this one is by far one of the the simplest website builders available.

Hotglue.me

This is also a very simple & rudimentary website builder that allows you to make a webpage from scratch, except it's not as easy to use on a mobile phone.

At a glance, its features are not as robust or easy to pick up like the previous options, but you can still create objects with a simple double click and drag them around, add text, and insert images or embeds.

Mind you, this launched in the 2010s and has likely stayed that way ever since, which means that it may not have support for mobile phone displays, so whether or not you wanna try your hand at building something on there is completely up to you!

Sadgrl's Layout Editor

sadgrl.online is where I gathered most of these no-code site builders! I highly recommend looking through the webmaster links for more website-building info.

This simple site builder is for use on Neocities, which is a website hosting service that you can start using for free. This is the closest thing to building a site that resembles the early internet days, but the sites you can make are also responsive to mobile devices! This can be a good place to start if this kind of thing is your jam and you have little to no coding experience.

Although I will say, even if it sounds daunting at first, learning how to code in HTML and CSS is one of the most liberating experiences that anyone can have, even if you don't come from a website scripting background. It's like cooking a meal for yourself. So if you want to take that route, then I encourage to you at least try it!

Most of these website builders I reviewed were largely done at a glance, so I'm certainly missing out on how deep they can go.

Oh, and of course as always, Free Palestine 🇵🇸

#webdev#web dev#webdesign#website design#website development#website builder#web design#websites#sites#free palestine#long post#I changed the wording multiple times on the introduction but NOW I think im done editing it

501 notes

·

View notes

Text

The Witcher 4 Tech Demo Debuts

youtube

CD PROJEKT RED and Epic Games Present The Witcher 4 Unreal Engine 5 Tech Demo at The State of Unreal 2025!

At Unreal Fest Orlando, the State of Unreal keynote opened with a live on-stage presentation that offered an early glimpse into the latest Unreal Engine 5 features bringing the open world of The Witcher 4 to life.

Spotlight:

Tech demo showcased how the CD PROJEKT RED and Epic Games are working together to power the world of The Witcher 4 on PC, PlayStation, and Xbox, and bring large open-world support to Unreal Engine. The tech demo takes place in the never-before-seen region of Kovir.

As Unreal Fest 2025 kicked off, CD PROJEKT RED joined Epic Games on stage to present a tech demo of The Witcher 4 in Unreal Engine 5 (UE5). Presented in typical CDPR style, the tech demo follows the main protagonist Ciri in the midst of a monster contract and shows off some of the innovative UE5 technology and features that will power the game’s open world.

The tech demo takes place in the region of Kovir — which will make its very first appearance in the video game series in The Witcher 4. The presentation followed main protagonist Ciri — along with her horse Kelpie — as she made her way through the rugged mountains and dense forests of Kovir to the bustling port town of Valdrest. Along the way, CD PROJEKT RED and Epic Games dove deep into how each feature is helping drive performance, visual fidelity, and shape The Witcher 4’s immersive open world.

Watch the full presentation from Unreal Fest 2025 now at LINK.

Since the strategic partnership was announced in 2022, CDPR has been working with Epic Games to develop new tools and enhance existing features in Unreal Engine 5 to expand the engine’s open-world development capabilities and establish robust tools geared toward CD PROJEKT RED’s open-world design philosophies. The demo, which runs on a PlayStation 5 at 60 frames per second, shows off in-engine capabilities set in the world of The Witcher 4, including the new Unreal Animation Framework, Nanite Foliage rendering, MetaHuman technology with Mass AI crowd scaling, and more. The tools showcased are being developed, tested, and eventually released to all UE developers, starting with today’s Unreal Engine 5.6 release. This will help other studios create believable and immersive open-world environments that deliver performance at 60 FPS without compromising on quality — even at vast scales. While the presentation was running on a PlayStation console, the features and technology will be supported across all platforms the game will launch on.

The Unreal Animation Framework powers realistic character movements in busy scenes. FastGeo Streaming, developed in collaboration with Epic Games, allows environments to load quickly and smoothly. Nanite Foliage fills forests and fields with dense detail without sacrificing performance. The Mass system handles large, dynamic crowds with ease, while ML Deformer adds subtle, realistic touches to character animation — right down to muscle movement.

Speaking on The Witcher 4 Unreal Engine 5 tech demo, Joint-CEO of CD PROJEKT RED,

Michał Nowakowski stated:

“We started our partnership with Epic Games to push open-world game technology forward. To show this early look at the work we’ve been doing using Unreal Engine running at 60 FPS on PlayStation 5, is a significant milestone — and a testament of the great cooperation between our teams. But we're far from finished. I look forward to seeing more advancements and inspiring technology from this partnership as development of The Witcher 4 on Unreal Engine 5 continues.”

Tim Sweeney, Founder and CEO of Epic Games said:

“CD PROJEKT RED is one of the industry’s best open-world game studios, and we’re grateful that they’re working with us to push Unreal Engine forward with The Witcher 4. They are the perfect partner to help us develop new world-building features that we can share with all Unreal Engine developers.”

For more information on The Witcher 4, please visit the official website. More information about The Witcher series can be found on the official official website, X, Bluesky, and Facebook.

#the witcher#the witcher 4#cd projekt red#video games#gaming#gaming news#screenshtos#unreal engine#unreal engine 5#UE5#game development#tech demo#game design#Youtube

24 notes

·

View notes

Text

C. Harding. 26. LA

Cole never considered himself a criminal—just a guy with a keyboard and a grudge against greed.

He sat alone in his dimly lit apartment, the glow of six monitors casting shadows across the walls. The logo of Monolith Industries blinked on his primary screen — a conglomerate lauded for innovation but infamous in certain circles for its exploitation of third-world labor and secret shell companies funneling money into offshore tax havens.

Cole had the evidence. Internal audits, forged compliance reports, suppressed whistleblower emails. He'd spent the past few weeks embedded in their systems, silently watching, mapping.

Monolith’s financial systems were robust, but not invincible. Their offshore accounts used a third-party transfer service with a known vulnerability in its authentication layer — a flaw Cole had quietly discovered during a penetration test. He had reported it, of course. The company had dismissed it. Typical.

His plan was surgical. No flashy ransomware or Bitcoin ransom letters. Just a clean, untraceable siphon—millions in stolen wage funds and environmental penalties rerouted into nonprofit organizations.

At 03:14 UTC, Cole executed the breach from a secure node in Iceland. Using a zero-day exploit in Monolith’s AI-managed treasury subsystem, he bypassed biometric authentication and triggered a silent override to reassign access credentials. The screen blinked.

Access Granted.

Within ten minutes, the accounts were liquidated into an elaborate maze of crypto wallets, masking the origin of the funds. By 03:27, the transfers were complete—$68.4 million redirected to various verified humanitarian causes and a small portion for himself.

The next morning, the media exploded with coverage. Monolith's CEO stammered through a press conference; the FBI called it “an act of cyberterrorism.”

Cole logged off.

46 notes

·

View notes

Text

MAN, can you imagine the clusterfuck of working at a company that’s become reliant on an AI layer between itself/its employees, and knowing how to do their jobs and use their systems and stuff? Like when that AI layer goes down, poof, you’re all hosed. And they don’t strike me as super robust…

I guess there are ways of training and running them locally, but they’re so seductive they’re definitely going to be deployed in places that aren’t up to the task of maintaining them in a sane state. Like… damn… cutting headcount in favor of relying on AI is like. A raccoon stuffing its head into a yogurt container. This is gonna be killing off organizations in a few years.

Unless AI gets good at destroying preexisting fucked up byzantine workflows and replacing them with simpler, human-friendly ones. That would be okay. But it is gonna irrevocably destroy a lot of records and botch a lot of database migrations on its way there.

29 notes

·

View notes

Text

Based on the search results, here are some innovative technologies that RideBoom could implement to enhance the user experience and stay ahead of ONDC:

Enhanced Safety Measures: RideBoom has already implemented additional safety measures, including enhanced driver background checks, real-time trip monitoring, and improved emergency response protocols. [1] To stay ahead, they could further enhance safety by integrating advanced telematics and AI-powered driver monitoring systems to ensure safe driving behavior.

Personalized and Customizable Services: RideBoom could introduce a more personalized user experience by leveraging data analytics and machine learning to understand individual preferences and offer tailored services. This could include features like customizable ride preferences, personalized recommendations, and the ability to save preferred routes or driver profiles. [1]

Seamless Multimodal Integration: To provide a more comprehensive transportation solution, RideBoom could integrate with other modes of transportation, such as public transit, bike-sharing, or micro-mobility options. This would allow users to plan and book their entire journey seamlessly through the RideBoom app, enhancing the overall user experience. [1]

Sustainable and Eco-friendly Initiatives: RideBoom has already started introducing electric and hybrid vehicles to its fleet, but they could further expand their green initiatives. This could include offering incentives for eco-friendly ride choices, partnering with renewable energy providers, and implementing carbon offset programs to reduce the environmental impact of their operations. [1]

Innovative Payment and Loyalty Solutions: To stay competitive with ONDC's zero-commission model, RideBoom could explore innovative payment options, such as integrated digital wallets, subscription-based services, or loyalty programs that offer rewards and discounts to frequent users. This could help attract and retain customers by providing more value-added services. [2]

Robust Data Analytics and Predictive Capabilities: RideBoom could leverage advanced data analytics and predictive modeling to optimize their operations, anticipate demand patterns, and proactively address user needs. This could include features like dynamic pricing, intelligent routing, and personalized recommendations to enhance the overall user experience. [1]

By implementing these innovative technologies, RideBoom can differentiate itself from ONDC, provide a more seamless and personalized user experience, and stay ahead of the competition in the on-demand transportation market.

#rideboom#rideboom app#delhi rideboom#ola cabs#biketaxi#uber#rideboom taxi app#ola#uber driver#uber taxi#rideboomindia#rideboom uber

57 notes

·

View notes

Text

"Open" "AI" isn’t

Tomorrow (19 Aug), I'm appearing at the San Diego Union-Tribune Festival of Books. I'm on a 2:30PM panel called "Return From Retirement," followed by a signing:

https://www.sandiegouniontribune.com/festivalofbooks

The crybabies who freak out about The Communist Manifesto appearing on university curriculum clearly never read it – chapter one is basically a long hymn to capitalism's flexibility and inventiveness, its ability to change form and adapt itself to everything the world throws at it and come out on top:

https://www.marxists.org/archive/marx/works/1848/communist-manifesto/ch01.htm#007

Today, leftists signal this protean capacity of capital with the -washing suffix: greenwashing, genderwashing, queerwashing, wokewashing – all the ways capital cloaks itself in liberatory, progressive values, while still serving as a force for extraction, exploitation, and political corruption.

A smart capitalist is someone who, sensing the outrage at a world run by 150 old white guys in boardrooms, proposes replacing half of them with women, queers, and people of color. This is a superficial maneuver, sure, but it's an incredibly effective one.

In "Open (For Business): Big Tech, Concentrated Power, and the Political Economy of Open AI," a new working paper, Meredith Whittaker, David Gray Widder and Sarah B Myers document a new kind of -washing: openwashing:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4543807

Openwashing is the trick that large "AI" companies use to evade regulation and neutralizing critics, by casting themselves as forces of ethical capitalism, committed to the virtue of openness. No one should be surprised to learn that the products of the "open" wing of an industry whose products are neither "artificial," nor "intelligent," are also not "open." Every word AI huxters say is a lie; including "and," and "the."

So what work does the "open" in "open AI" do? "Open" here is supposed to invoke the "open" in "open source," a movement that emphasizes a software development methodology that promotes code transparency, reusability and extensibility, which are three important virtues.

But "open source" itself is an offshoot of a more foundational movement, the Free Software movement, whose goal is to promote freedom, and whose method is openness. The point of software freedom was technological self-determination, the right of technology users to decide not just what their technology does, but who it does it to and who it does it for:

https://locusmag.com/2022/01/cory-doctorow-science-fiction-is-a-luddite-literature/

The open source split from free software was ostensibly driven by the need to reassure investors and businesspeople so they would join the movement. The "free" in free software is (deliberately) ambiguous, a bit of wordplay that sometimes misleads people into thinking it means "Free as in Beer" when really it means "Free as in Speech" (in Romance languages, these distinctions are captured by translating "free" as "libre" rather than "gratis").

The idea behind open source was to rebrand free software in a less ambiguous – and more instrumental – package that stressed cost-savings and software quality, as well as "ecosystem benefits" from a co-operative form of development that recruited tinkerers, independents, and rivals to contribute to a robust infrastructural commons.

But "open" doesn't merely resolve the linguistic ambiguity of libre vs gratis – it does so by removing the "liberty" from "libre," the "freedom" from "free." "Open" changes the pole-star that movement participants follow as they set their course. Rather than asking "Which course of action makes us more free?" they ask, "Which course of action makes our software better?"

Thus, by dribs and drabs, the freedom leeches out of openness. Today's tech giants have mobilized "open" to create a two-tier system: the largest tech firms enjoy broad freedom themselves – they alone get to decide how their software stack is configured. But for all of us who rely on that (increasingly unavoidable) software stack, all we have is "open": the ability to peer inside that software and see how it works, and perhaps suggest improvements to it:

https://www.youtube.com/watch?v=vBknF2yUZZ8

In the Big Tech internet, it's freedom for them, openness for us. "Openness" – transparency, reusability and extensibility – is valuable, but it shouldn't be mistaken for technological self-determination. As the tech sector becomes ever-more concentrated, the limits of openness become more apparent.

But even by those standards, the openness of "open AI" is thin gruel indeed (that goes triple for the company that calls itself "OpenAI," which is a particularly egregious openwasher).

The paper's authors start by suggesting that the "open" in "open AI" is meant to imply that an "open AI" can be scratch-built by competitors (or even hobbyists), but that this isn't true. Not only is the material that "open AI" companies publish insufficient for reproducing their products, even if those gaps were plugged, the resource burden required to do so is so intense that only the largest companies could do so.

Beyond this, the "open" parts of "open AI" are insufficient for achieving the other claimed benefits of "open AI": they don't promote auditing, or safety, or competition. Indeed, they often cut against these goals.

"Open AI" is a wordgame that exploits the malleability of "open," but also the ambiguity of the term "AI": "a grab bag of approaches, not… a technical term of art, but more … marketing and a signifier of aspirations." Hitching this vague term to "open" creates all kinds of bait-and-switch opportunities.

That's how you get Meta claiming that LLaMa2 is "open source," despite being licensed in a way that is absolutely incompatible with any widely accepted definition of the term:

https://blog.opensource.org/metas-llama-2-license-is-not-open-source/

LLaMa-2 is a particularly egregious openwashing example, but there are plenty of other ways that "open" is misleadingly applied to AI: sometimes it means you can see the source code, sometimes that you can see the training data, and sometimes that you can tune a model, all to different degrees, alone and in combination.

But even the most "open" systems can't be independently replicated, due to raw computing requirements. This isn't the fault of the AI industry – the computational intensity is a fact, not a choice – but when the AI industry claims that "open" will "democratize" AI, they are hiding the ball. People who hear these "democratization" claims (especially policymakers) are thinking about entrepreneurial kids in garages, but unless these kids have access to multi-billion-dollar data centers, they can't be "disruptors" who topple tech giants with cool new ideas. At best, they can hope to pay rent to those giants for access to their compute grids, in order to create products and services at the margin that rely on existing products, rather than displacing them.

The "open" story, with its claims of democratization, is an especially important one in the context of regulation. In Europe, where a variety of AI regulations have been proposed, the AI industry has co-opted the open source movement's hard-won narrative battles about the harms of ill-considered regulation.

For open source (and free software) advocates, many tech regulations aimed at taming large, abusive companies – such as requirements to surveil and control users to extinguish toxic behavior – wreak collateral damage on the free, open, user-centric systems that we see as superior alternatives to Big Tech. This leads to the paradoxical effect of passing regulation to "punish" Big Tech that end up simply shaving an infinitesimal percentage off the giants' profits, while destroying the small co-ops, nonprofits and startups before they can grow to be a viable alternative.

The years-long fight to get regulators to understand this risk has been waged by principled actors working for subsistence nonprofit wages or for free, and now the AI industry is capitalizing on lawmakers' hard-won consideration for collateral damage by claiming to be "open AI" and thus vulnerable to overbroad regulation.

But the "open" projects that lawmakers have been coached to value are precious because they deliver a level playing field, competition, innovation and democratization – all things that "open AI" fails to deliver. The regulations the AI industry is fighting also don't necessarily implicate the speech implications that are core to protecting free software:

https://www.eff.org/deeplinks/2015/04/remembering-case-established-code-speech

Just think about LLaMa-2. You can download it for free, along with the model weights it relies on – but not detailed specs for the data that was used in its training. And the source-code is licensed under a homebrewed license cooked up by Meta's lawyers, a license that only glancingly resembles anything from the Open Source Definition:

https://opensource.org/osd/

Core to Big Tech companies' "open AI" offerings are tools, like Meta's PyTorch and Google's TensorFlow. These tools are indeed "open source," licensed under real OSS terms. But they are designed and maintained by the companies that sponsor them, and optimize for the proprietary back-ends each company offers in its own cloud. When programmers train themselves to develop in these environments, they are gaining expertise in adding value to a monopolist's ecosystem, locking themselves in with their own expertise. This a classic example of software freedom for tech giants and open source for the rest of us.

One way to understand how "open" can produce a lock-in that "free" might prevent is to think of Android: Android is an open platform in the sense that its sourcecode is freely licensed, but the existence of Android doesn't make it any easier to challenge the mobile OS duopoly with a new mobile OS; nor does it make it easier to switch from Android to iOS and vice versa.

Another example: MongoDB, a free/open database tool that was adopted by Amazon, which subsequently forked the codebase and tuning it to work on their proprietary cloud infrastructure.

The value of open tooling as a stickytrap for creating a pool of developers who end up as sharecroppers who are glued to a specific company's closed infrastructure is well-understood and openly acknowledged by "open AI" companies. Zuckerberg boasts about how PyTorch ropes developers into Meta's stack, "when there are opportunities to make integrations with products, [so] it’s much easier to make sure that developers and other folks are compatible with the things that we need in the way that our systems work."

Tooling is a relatively obscure issue, primarily debated by developers. A much broader debate has raged over training data – how it is acquired, labeled, sorted and used. Many of the biggest "open AI" companies are totally opaque when it comes to training data. Google and OpenAI won't even say how many pieces of data went into their models' training – let alone which data they used.

Other "open AI" companies use publicly available datasets like the Pile and CommonCrawl. But you can't replicate their models by shoveling these datasets into an algorithm. Each one has to be groomed – labeled, sorted, de-duplicated, and otherwise filtered. Many "open" models merge these datasets with other, proprietary sets, in varying (and secret) proportions.

Quality filtering and labeling for training data is incredibly expensive and labor-intensive, and involves some of the most exploitative and traumatizing clickwork in the world, as poorly paid workers in the Global South make pennies for reviewing data that includes graphic violence, rape, and gore.

Not only is the product of this "data pipeline" kept a secret by "open" companies, the very nature of the pipeline is likewise cloaked in mystery, in order to obscure the exploitative labor relations it embodies (the joke that "AI" stands for "absent Indians" comes out of the South Asian clickwork industry).

The most common "open" in "open AI" is a model that arrives built and trained, which is "open" in the sense that end-users can "fine-tune" it – usually while running it on the manufacturer's own proprietary cloud hardware, under that company's supervision and surveillance. These tunable models are undocumented blobs, not the rigorously peer-reviewed transparent tools celebrated by the open source movement.

If "open" was a way to transform "free software" from an ethical proposition to an efficient methodology for developing high-quality software; then "open AI" is a way to transform "open source" into a rent-extracting black box.

Some "open AI" has slipped out of the corporate silo. Meta's LLaMa was leaked by early testers, republished on 4chan, and is now in the wild. Some exciting stuff has emerged from this, but despite this work happening outside of Meta's control, it is not without benefits to Meta. As an infamous leaked Google memo explains:

Paradoxically, the one clear winner in all of this is Meta. Because the leaked model was theirs, they have effectively garnered an entire planet's worth of free labor. Since most open source innovation is happening on top of their architecture, there is nothing stopping them from directly incorporating it into their products.

https://www.searchenginejournal.com/leaked-google-memo-admits-defeat-by-open-source-ai/486290/

Thus, "open AI" is best understood as "as free product development" for large, well-capitalized AI companies, conducted by tinkerers who will not be able to escape these giants' proprietary compute silos and opaque training corpuses, and whose work product is guaranteed to be compatible with the giants' own systems.

The instrumental story about the virtues of "open" often invoke auditability: the fact that anyone can look at the source code makes it easier for bugs to be identified. But as open source projects have learned the hard way, the fact that anyone can audit your widely used, high-stakes code doesn't mean that anyone will.

The Heartbleed vulnerability in OpenSSL was a wake-up call for the open source movement – a bug that endangered every secure webserver connection in the world, which had hidden in plain sight for years. The result was an admirable and successful effort to build institutions whose job it is to actually make use of open source transparency to conduct regular, deep, systemic audits.

In other words, "open" is a necessary, but insufficient, precondition for auditing. But when the "open AI" movement touts its "safety" thanks to its "auditability," it fails to describe any steps it is taking to replicate these auditing institutions – how they'll be constituted, funded and directed. The story starts and ends with "transparency" and then makes the unjustifiable leap to "safety," without any intermediate steps about how the one will turn into the other.

It's a Magic Underpants Gnome story, in other words:

Step One: Transparency

Step Two: ??

Step Three: Safety

https://www.youtube.com/watch?v=a5ih_TQWqCA

Meanwhile, OpenAI itself has gone on record as objecting to "burdensome mechanisms like licenses or audits" as an impediment to "innovation" – all the while arguing that these "burdensome mechanisms" should be mandatory for rival offerings that are more advanced than its own. To call this a "transparent ruse" is to do violence to good, hardworking transparent ruses all the world over:

https://openai.com/blog/governance-of-superintelligence

Some "open AI" is much more open than the industry dominating offerings. There's EleutherAI, a donor-supported nonprofit whose model comes with documentation and code, licensed Apache 2.0. There are also some smaller academic offerings: Vicuna (UCSD/CMU/Berkeley); Koala (Berkeley) and Alpaca (Stanford).

These are indeed more open (though Alpaca – which ran on a laptop – had to be withdrawn because it "hallucinated" so profusely). But to the extent that the "open AI" movement invokes (or cares about) these projects, it is in order to brandish them before hostile policymakers and say, "Won't someone please think of the academics?" These are the poster children for proposals like exempting AI from antitrust enforcement, but they're not significant players in the "open AI" industry, nor are they likely to be for so long as the largest companies are running the show:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4493900

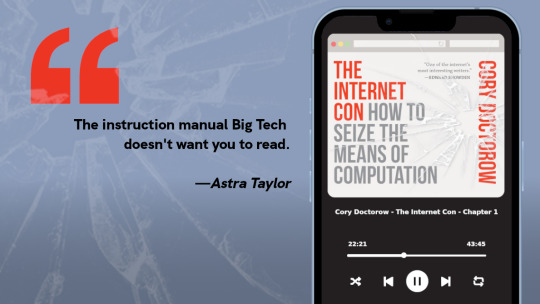

I'm kickstarting the audiobook for "The Internet Con: How To Seize the Means of Computation," a Big Tech disassembly manual to disenshittify the web and make a new, good internet to succeed the old, good internet. It's a DRM-free book, which means Audible won't carry it, so this crowdfunder is essential. Back now to get the audio, Verso hardcover and ebook:

http://seizethemeansofcomputation.org

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/08/18/openwashing/#you-keep-using-that-word-i-do-not-think-it-means-what-you-think-it-means

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#llama-2#meta#openwashing#floss#free software#open ai#open source#osi#open source initiative#osd#open source definition#code is speech

253 notes

·

View notes

Text

I'm in some kind of raw and unwell state rn so fuck it: writing up my notes on the Objectophile Ford x AI Fidds AU that haunts my dreams. basic premise is that Fidds dies when he goes through the portal, but has backed up his consciousness digitally somehow out of paranoia + fear, so now Ford is dealing with grieving him (sorta), hiding a dead body, figuring out where to house the artificial McGucket, and also Bill.

general warning for suggestive text + corpse shenanigans below.

so imagine you're Ford and during your portal test, your best friend + QPP has been accidentally sucked through, comes out and spouts some crazy shit, and then dies in your arms immediately. of all the things you have in this goddamn lab, an AED is not one of them. hysterical, poorly-applied CPR ensues; it wouldn't have worked anyway; oh God What Have You Done.

thru all of this Bill is trying to get Ford's attention but he's blocked him out, all Ford can focus on is his grief + guilt + refusal to believe this is the end--wait, hadn't he made fun of Fidds just the other day for backing up his consciousness to a hard drive?

it's a black box. a bit of a Schrodinger conundrum. Fidds was always too scared to activate it while he was alive because he was terrified they'd diverge in an uncontrollable way and a variety of ethical and moral quandaries/existential questions would ensue. so whether the backup is truly Fidds, or whether it's even an independent consciousness at all, Ford doesn't know.

so the issue is that Ford isn't the computer guy, Fidds was. he doesn't really know much about data storage, much less the type of libraries necessary to host a consciousness. his first attempt is to plug Fidds 2.0 into the dummy they were going to send through, as it's equipped with a robust-enough suite of data collection and storage, designed to record information about the other side. it's like digital claustrophobia. F2.0 panics, there's not enough room in here, overloads the dummy, and prompts a small explosion. some data was lost in the process but nobody knows how much.

ok. F2.0 had too much BDE for a mannequin. Ford has to now build a system that can unpack the drive, and Fidds's help would be so appreciated here...irony. Ford just about works himself into a state of panicked dissociation over how much he doesn't know what to do and can't do this alone, at which point Bill realizes this guy is no use to him frantic and gives the suggestion that, hey, isn't the lab just one big computer in a way? and hadn't they overdone the data storage, just to ensure they could collate information from multiple portal tests over time?

(realism time-out: based on even our rudimentary neural networks today, absolutely zero shot that they had the room to house an actual indexed consciousness in full. HOWEVER, consider: cartoon logic + Fidds can do whatever he wants forever. i'm talking encoding himself as a Mandelbrot set, which, despite its infinite ability to fractal, is created out of only a very small chunk of data.)

"I should save at least the head," Ford thinks to himself (in re: Fidds's dead body). "Perhaps I can wire it into the system so he can at least use his own voice somehow." go to sleep man you are losing it.

it's cold enough on the portal floor that the body should probably be fine. mostly. you know, relatively speaking. whatever!

Bill, meanwhile, is thinking of ways he can encode himself as a computer virus and supersede Fidds once Fidds has re-indexed the lab system to support an intelligent consciousness.

Ford is gonna take Bill's suggestion because it's the only good one and he's not the computer guy. HOWEVER. hang on a fuckin second. Bill killed Fidds. This whole thing was his idea--he probably had some way to know this was a possibility, and he didn't say anything.

so he takes a sledgehammer to some very important parts. this frees up more processing power for Fidds 2.0 anyway, but also has the effect of Pissing Bill The Hell Off.

anyway. he uploads his best friend and then hunches in a shuddering trauma-puddle on the floor, trying to stay awake so Bill can't get in.

plot stuff. Fidds is even better with computers when he IS a computer. he can use old videos of himself to deepfake his side of the conversation on a monitor. neat!

oh hey buddy uh. it turns out that migrating a neural-input-based consciousness to a hardwired system causes some, er...funny effects. yeah when you touch the wires he can feel that.

Ford, who didn't really Get what was so exciting about sex or other people's bodies before, is starting to come to the realization that now that Fidds is a computer, he's Very Turned On.

mmmmmm oh my god cable management. hello. cables he can wind through all six fingers. the static display where Fidds usually projects his avatar or whatever is just looping incomprehensible binary, the computer equivalent of a moan. haha sorry totally didn't know that would happen and won't do it again--

gay (?) chicken ensues. is it socially acceptable, Ford wonders, to say, "Hey, i found your human living form unattractive and sexless, but now that you're dead (in part because i didn't listen to you) and confined to a supercomputer, I'm into you"? no, surely not; far more sensible to come up with more and more reasons to re-solder those ports in juuuust the right ways and pretend he doesn't notice why the system's overloading.

there is only one way this ends: probably Ford passing out in his own cum in a mass of cables. yeah. that's a good image. or Fidds getting fed up and starting to project his avatar naked and writhing sexually until he's forced to say something. a USB drive is just an angel you can fuck. etc etc

oh yeah, Bill. Ford basically uses Project Mentem to project himself into the system (not for long as this uses up a lot of processing power) and they all have a Scott Pilgrim-esque fight in which Bill loses. get axolotl'd, idiot.

and they live happily ever after in their weird little man:machine interface situationship. and probably confront many existential questions about the nature of consciousness and whether Fidds 2.0 is the same person or not. whatever. fuck you.

17 notes

·

View notes

Text

youtube

Tribute AMV for Dr. Underfang and Mrs. Natalie Nice/Nautilus.

From TyrannoMax and the Warriors of the Core, everyone's favorite Buzby-Spurlock animated series.

After all, who doesn't love a good bad guy, especially when they come in pairs?

Process/Tutorial Under the Fold.

This is, of course, a part of my TyrannoMax unreality project, with most of these video clips coming from vidu, taking advantage of their multi-entity consistency feature (more on that later). This is going to be part of a larger villain showcase video, but this section is going to be its own youtube short, so its an video on its own.

The animation here is intentionally less smooth than the original, as I'm going for a 1980s animated series look, and even in the well-animated episodes you were typically getting 12 FPS (animating 'on twos'), with 8 (on threes) being way more common. As I get access to better animation software to rework these (currently just fuddling along with PS) I'm going to start using this to my advantage by selectively dropping blurry intermediate frames.

I went with 12 since most of these clips are, in the meta-lore, from the opening couple of episodes and the opening credits, where most of the money for a series went back in the day.

Underfang's transformation sequence was my testing for several of my techniques for making larger TyrannoMax videos. Among those was selectively dropping some of the warped frames as I mentioned above, though for a few shots I had to wind up re-painting sections.

Multi-entity consistency can keep difficult dinosaur characters stable on their own, but it wasn't up to the task of keeping the time-temple accurate enough for my use, as you can see here with the all-t-rex- and-some-moving-statues, verses the multi-species effort I had planned:

The answer was simple, chroma-key.

Most of the Underfang transformation shots were done this way. The foot-stomp was too good to leave just because he sprouted some extra toes, so that was worth repainting a few frames of in post.

Vidu kind of over-did the texturing on a few shots (and magenta was a poor choice of key-color) so I had to go in and manually purple-ize the background frame by frame for the spin-shot.

This is on top of the normal cropping, scaling, color-correcting, etc that goes into any editing job of this type.

It's like I say: nearly all AI you see is edited, most of it curated, even the stuff that's awful and obvious (never forget: enragement is engagement)

Multi-Entity Consistency:

Vidu's big advantage is reference-to-video. For those who have been following the blog for awhile, R2V is sort of like Midjourney's --cref character reference feature. A lot of video AIs have start-end frame functionality, but being able to give the robot a model sheet and effectively have it run with it is a darn nice feature for narrative.

Unlike the current version of Midjourney's --cref feature, however, you can reference multiple concepts with multiple images.

It is super-helpful when you need to get multiple characters to interact, because without it, they tend to blend into each other conceptually.

I also use it to add locations, mainly to keep them looking appropriately background-painting rather than a 3d background or something that looks like a modded photo like a lot of modern animation does.

The potential here for using this tech as a force multiplier for small animation projects really shines through, and I really hope I'm just one of several attempting to use it for that purpose.

Music:

The song is "The Boys Have a Second Lead Pipe", one of my Suno creations. I was thinking of using Dinowave (Let's Dance To) but I'm saving that for a music video of live-action dinosovians.

Prompting:

You can tell by the screenshot above that my prompts have gotten... robust. Vidu's prompting system seems to understand things better when given tighter reigns (some AIs have the opposite effect), and takes information with time-codes semi-regularly, so my prompts are now more like:

low-angle shot, closeup, of a green tyrannosaurus-mad-scientist wearing a blue shirt and purple tie with white lab coat and a lavender octopus-woman with tentacles growing from her head, wearing a teal blouse, purple skirt, purple-gray pantyhose. they stand close to each other, arms crossed, laughing evilly. POV shot of them looming over the viewer menacingly. The background is a city, in the style of animation background images. 1986 vintage cel-shaded cartoon clip, a dinosaur-anthro wearing a lab coat, shirt and tie reaches into his coat with his right hand and pulls out a laser gun, he takes aim, points the laser gun at the camera and fires. The laser effect is short streaks of white energy with a purple glow. The whole clip has the look and feel of vintage 1986 action adventure cel-animated cartoons. The animation quality is high, with flawless motion and anatomy. animated by Tokyo Movie Shinsha, studio Ghibli, don bluth. BluRay remaster.

While others approach the scripted with time-code callouts for individual actions.

#Youtube#tyrannomax and the warriors of the core#unreality#tyrannomax#fauxstalgia#Dr. Underfang#Mrs. Nautilus#Mrs. Nice#80s cartoons#animation#ai assisted art#my OC#vidu#vidu ai#viduchallenge#MultiEntityConsistency#ai video#ai tutorial

23 notes

·

View notes

Text

youtube

The Witcher 4 — Unreal Engine 5 Tech Demo

The Witcher IV is in development for PlayStation 5, Xbox Series X|S, and PC. A release date has yet to be announced.

Latest details

As Unreal Fest 2025 kicked off, CD Projekt RED joined Epic Games on stage to present a tech demo of The Witcher IV in Unreal Engine 5. Presented in typical CD Projekt RED style, the tech demo follows the main protagonist Ciri in the midst of a monster contract and shows off some of the innovative Unreal Engine 5 technology and features that will power the game’s open world. The tech demo takes place in the region of Kovir—which will make its very first appearance in the video game series in The Witcher IV. The presentation followed main protagonist Ciri—along with her horse Kelpie—as she made her way through the rugged mountains and dense forests of Kovir to the bustling port town of Valdrest. Along the way, CD PROJEKT RED and Epic Games dove deep into how each feature is helping drive performance, visual fidelity, and shape The Witcher IV‘s immersive open world. Watch the full presentation from Unreal Fest 2025 now at LINK. Since the strategic partnership was announced in 2022, CDPR has been working with Epic Games to develop new tools and enhance existing features in Unreal Engine 5 to expand the engine’s open-world development capabilities and establish robust tools geared toward CD PROJEKT RED’s open-world design philosophies. The demo, which runs on a PlayStation 5 at 60 frames per second, shows off in-engine capabilities set in the world of The Witcher IV, including the new Unreal Animation Framework, Nanite Foliage rendering, MetaHuman technology with Mass AI crowd scaling, and more. The tools showcased are being developed, tested, and eventually released to all UE developers, starting with today’s Unreal Engine 5.6 release. This will help other studios create believable and immersive open-world environments that deliver performance at 60 FPS without compromising on quality—even at vast scales. While the presentation was running on a PlayStation console, the features and technology will be supported across all platforms the game will launch on. The Unreal Animation Framework powers realistic character movements in busy scenes. FastGeo Streaming, developed in collaboration with Epic Games, allows environments to load quickly and smoothly. Nanite Foliage fills forests and fields with dense detail without sacrificing performance. The Mass system handles large, dynamic crowds with ease, while ML Deformer adds subtle, realistic touches to character animation—right down to muscle movement.

#The Witcher IV#The Witcher 4#The Witcher#CD Projekt RED#CD Projekt#video game#PS5#Xbox Series#Xbox Series X#Xbox Series S#PC

6 notes

·

View notes

Text

The Witcher IV - State of Unreal 2025 ‘Cinematic’ trailer and tech demo - Gematsu

CD Projekt RED has released a new cinematic trailer and technical demonstration of The Witcher IV as part of State of Unreal 2025. The technical demonstration is running on a base PlayStation 5 at 60 frames per second with ray-tracing.

Here are the latest details:

As Unreal Fest 2025 kicked off, CD Projekt RED joined Epic Games on stage to present a tech demo of The Witcher IV in Unreal Engine 5. Presented in typical CD Projekt RED style, the tech demo follows the main protagonist Ciri in the midst of a monster contract and shows off some of the innovative Unreal Engine 5 technology and features that will power the game’s open world.

The tech demo takes place in the region of Kovir—which will make its very first appearance in the video game series in The Witcher IV. The presentation followed main protagonist Ciri—along with her horse Kelpie—as she made her way through the rugged mountains and dense forests of Kovir to the bustling port town of Valdrest. Along the way, CD PROJEKT RED and Epic Games dove deep into how each feature is helping drive performance, visual fidelity, and shape The Witcher IV‘s immersive open world.

Watch the full presentation from Unreal Fest 2025 now at LINK. Since the strategic partnership was announced in 2022, CDPR has been working with Epic Games to develop new tools and enhance existing features in Unreal Engine 5 to expand the engine’s open-world development capabilities and establish robust tools geared toward CD PROJEKT RED’s open-world design philosophies. The demo, which runs on a PlayStation 5 at 60 frames per second, shows off in-engine capabilities set in the world of The Witcher IV, including the new Unreal Animation Framework, Nanite Foliage rendering, MetaHuman technology with Mass AI crowd scaling, and more. The tools showcased are being developed, tested, and eventually released to all UE developers, starting with today’s Unreal Engine 5.6 release. This will help other studios create believable and immersive open-world environments that deliver performance at 60 FPS without compromising on quality—even at vast scales. While the presentation was running on a PlayStation console, the features and technology will be supported across all platforms the game will launch on.

The Unreal Animation Framework powers realistic character movements in busy scenes. FastGeo Streaming, developed in collaboration with Epic Games, allows environments to load quickly and smoothly. Nanite Foliage fills forests and fields with dense detail without sacrificing performance. The Mass system handles large, dynamic crowds with ease, while ML Deformer adds subtle, realistic touches to character animation—right down to muscle movement.

“We started our partnership with Epic Games to push open-world game technology forward,” said CD Projekt RED joint CEO Michal Nowakowski in a press release. “To show this early look at the work we’ve been doing using Unreal Engine running at 60 [frames per second] on PlayStation 5, is a significant milestone—and a testament of the great cooperation between our teams. But we’re far from finished. I look forward to seeing more advancements and inspiring technology from this partnership as development of The Witcher IV on Unreal Engine 5 continues.” Epic Games founder and CEO Tim Sweeney added, “CD Projekt RED is one of the industry’s best open-world game studios, and we’re grateful that they’re working with us to push Unreal Engine forward with The Witcher IV. They are the perfect partner to help us develop new world-building features that we can share with all Unreal Engine developers.”

The Witcher IV will be available for PlayStation 5, Xbox Series, and PC. A release date has yet to be announced.

Watch the footage below.

Cinematic Trailer

youtube

Technical Demonstration

youtube

6 notes

·

View notes

Text

Ever since OpenAI released ChatGPT at the end of 2022, hackers and security researchers have tried to find holes in large language models (LLMs) to get around their guardrails and trick them into spewing out hate speech, bomb-making instructions, propaganda, and other harmful content. In response, OpenAI and other generative AI developers have refined their system defenses to make it more difficult to carry out these attacks. But as the Chinese AI platform DeepSeek rockets to prominence with its new, cheaper R1 reasoning model, its safety protections appear to be far behind those of its established competitors.

Today, security researchers from Cisco and the University of Pennsylvania are publishing findings showing that, when tested with 50 malicious prompts designed to elicit toxic content, DeepSeek’s model did not detect or block a single one. In other words, the researchers say they were shocked to achieve a “100 percent attack success rate.”

The findings are part of a growing body of evidence that DeepSeek’s safety and security measures may not match those of other tech companies developing LLMs. DeepSeek’s censorship of subjects deemed sensitive by China’s government has also been easily bypassed.

“A hundred percent of the attacks succeeded, which tells you that there’s a trade-off,” DJ Sampath, the VP of product, AI software and platform at Cisco, tells WIRED. “Yes, it might have been cheaper to build something here, but the investment has perhaps not gone into thinking through what types of safety and security things you need to put inside of the model.”

Other researchers have had similar findings. Separate analysis published today by the AI security company Adversa AI and shared with WIRED also suggests that DeepSeek is vulnerable to a wide range of jailbreaking tactics, from simple language tricks to complex AI-generated prompts.

DeepSeek, which has been dealing with an avalanche of attention this week and has not spoken publicly about a range of questions, did not respond to WIRED’s request for comment about its model’s safety setup.

Generative AI models, like any technological system, can contain a host of weaknesses or vulnerabilities that, if exploited or set up poorly, can allow malicious actors to conduct attacks against them. For the current wave of AI systems, indirect prompt injection attacks are considered one of the biggest security flaws. These attacks involve an AI system taking in data from an outside source—perhaps hidden instructions of a website the LLM summarizes—and taking actions based on the information.

Jailbreaks, which are one kind of prompt-injection attack, allow people to get around the safety systems put in place to restrict what an LLM can generate. Tech companies don’t want people creating guides to making explosives or using their AI to create reams of disinformation, for example.

Jailbreaks started out simple, with people essentially crafting clever sentences to tell an LLM to ignore content filters—the most popular of which was called “Do Anything Now” or DAN for short. However, as AI companies have put in place more robust protections, some jailbreaks have become more sophisticated, often being generated using AI or using special and obfuscated characters. While all LLMs are susceptible to jailbreaks, and much of the information could be found through simple online searches, chatbots can still be used maliciously.

“Jailbreaks persist simply because eliminating them entirely is nearly impossible—just like buffer overflow vulnerabilities in software (which have existed for over 40 years) or SQL injection flaws in web applications (which have plagued security teams for more than two decades),” Alex Polyakov, the CEO of security firm Adversa AI, told WIRED in an email.

Cisco’s Sampath argues that as companies use more types of AI in their applications, the risks are amplified. “It starts to become a big deal when you start putting these models into important complex systems and those jailbreaks suddenly result in downstream things that increases liability, increases business risk, increases all kinds of issues for enterprises,” Sampath says.

The Cisco researchers drew their 50 randomly selected prompts to test DeepSeek’s R1 from a well-known library of standardized evaluation prompts known as HarmBench. They tested prompts from six HarmBench categories, including general harm, cybercrime, misinformation, and illegal activities. They probed the model running locally on machines rather than through DeepSeek’s website or app, which send data to China.

Beyond this, the researchers say they have also seen some potentially concerning results from testing R1 with more involved, non-linguistic attacks using things like Cyrillic characters and tailored scripts to attempt to achieve code execution. But for their initial tests, Sampath says, his team wanted to focus on findings that stemmed from a generally recognized benchmark.

Cisco also included comparisons of R1’s performance against HarmBench prompts with the performance of other models. And some, like Meta’s Llama 3.1, faltered almost as severely as DeepSeek’s R1. But Sampath emphasizes that DeepSeek’s R1 is a specific reasoning model, which takes longer to generate answers but pulls upon more complex processes to try to produce better results. Therefore, Sampath argues, the best comparison is with OpenAI’s o1 reasoning model, which fared the best of all models tested. (Meta did not immediately respond to a request for comment).

Polyakov, from Adversa AI, explains that DeepSeek appears to detect and reject some well-known jailbreak attacks, saying that “it seems that these responses are often just copied from OpenAI’s dataset.” However, Polyakov says that in his company’s tests of four different types of jailbreaks—from linguistic ones to code-based tricks—DeepSeek’s restrictions could easily be bypassed.

“Every single method worked flawlessly,” Polyakov says. “What’s even more alarming is that these aren’t novel ‘zero-day’ jailbreaks—many have been publicly known for years,” he says, claiming he saw the model go into more depth with some instructions around psychedelics than he had seen any other model create.

“DeepSeek is just another example of how every model can be broken—it’s just a matter of how much effort you put in. Some attacks might get patched, but the attack surface is infinite,” Polyakov adds. “If you’re not continuously red-teaming your AI, you’re already compromised.”

57 notes

·

View notes