Writer Spotlight: Jamie Beck

Jamie Beck is a photographer residing in Provence, France. Her Tumblr blog, From Me To You, became immensely successful shortly after launching in 2009. Soon after, Jamie, along with her partner Kevin Burg, pioneered the use of Cinemagraphs in creative storytelling for brands. Since then, she has produced marketing and advertising campaigns for companies like Google, Samsung, Netflix, Disney, Microsoft, Nike, Volvo, and MTV, and was included in Adweek Magazine’s “Creative 100” among the industry’s top Visual Artists. In 2022, she released her first book, An American in Provence, which became a NYT Bestseller and Amazon #1 book in multiple categories and was featured in publications such as Vogue, goop, Who What Wear, and Forbes. The Flowers of Provence is Jamie’s second book.

Can you tell us about how The Flowers of Provence came to be?

I refer to Provence often as ‘The Garden of Eden’ for her harmonious seasons that bring an ever-changing floral bounty through the landscape. My greatest joy in life is telling her story of flowers through photography so that we may all enjoy them, their beauty, their symbolism, and their contribution to the harmony of this land just a bit longer.

(Photograph: Jamie Beck)

How do your photography and writing work together? Do you write as part of your practice?

I constantly write small notations, which usually occur when I am alone in nature with the intention of creating a photograph or in my studio working alone on a still life. I write as I think in my head, so I have made it a very strict practice that when a thought or idea comes up, I stop and quickly jot it down in the notes app on my phone or in a pocket journal I keep with me most of the time. If I don’t stop and write it down at that moment, I find it is gone forever. The same practice applies to shooting flowers, especially in a place as seasonal as Provence. If I see something, I must capture it right away because it could be gone tomorrow.

(Photograph: Jamie Beck)

You got your start in commercial photography. What’s something you learned in those fields that has served you well in your current creative direction?

I think my understanding of bridging art and commerce came from my commercial photography background. I can make beautiful photographs of flowers all day long, but making a living from your art is a completely different skill, one I was fortunate enough to learn by working with so many different creative brands and products in the past.

(Photograph: Jamie Beck)

Do you remember your first photograph?

Absolutely! I was 13 years old. My mother gave me her old Pentax 35mm film camera to play with. When I looked through the viewfinder, it was as if the imaginary world in my head could finally come to life! I gave my best friend a makeover, put her in an evening gown in the backyard of my parents’ house in Texas, and made my first photograph, which I thought was so glamorous! So Vogue!

You situate your photographic work with an introduction that charts the seasons in Provence through flowers. Are there any authors from the fields of nature writing and place writing who inspire you?

I absolutely adore Monty Don! His writing, his shoes, and his ease with nature and flowers—that’s a world in which I want to live. I also love Floret Flowers, especially on social media, as a way to learn the science behind flowers and how to grow them.

How did you decide on the order of the images within The Flowers of Provence?

Something I didn’t anticipate with a book deal is that I would actually be the one doing the layouts! I assumed I would hand over a folder of images, and an art director would decide the order. At first, it was overwhelming to sort through it all because the work is so personal, and I’m so visual. But in the end, it had to be me. It had to be my story and flow to be truly authentic. I tried to move through the seasons and colors of the landscape in a harmonious way that felt a bit magical, just as discovering Provence has felt to me.

(Photograph: Jamie Beck)

How do you practice self-care when juggling work and life commitments alongside the creative process?

The creative process is typically a result that comes out of taking time for self-care. I get some of my best ideas for photographic projects or writing when I am in a bath or shower or go for a long (and restorative) walk in nature. Doing things for myself, such as how I dress or do my hair and makeup, is another form of creative expression that is satisfying.

What’s a place or motif you’d like to photograph that you haven’t had a chance to yet?

I am really interested in discovering more formal gardens in France. I like the idea of garden portraiture, trying to really capture the essence and spirit of places where man and nature intertwine.

Which artists do you return to for inspiration?

I’m absolutely obsessed with Édouard Manet—his color palette and subject matter.

What are three things you can’t live without as an artist?

My camera, the French light, and flowers, of course.

What’s your favorite flower to photograph, and why?

I love roses. They remind me of my grandmother, who always grew roses and was my first teacher of nature. The perfume of roses and the vast variety of colors, names, and styles all make me totally crazy. I just love them. They simply bring me joy the same way seeing a rainbow in the sky does.

(Photograph: Jamie Beck)

#writer spotlight#jamie beck#the flowers of provence#art#photography#flowers#cottagecore#aesthetics#naturecore#flowercore#still life#nature aesthetic#artist#artists on tumblr#fine art photography#long post#travel#France#Provence#original photographers#photographers on tumblr

So I'm absolutely not an expert on the subject, and this post is just a bunch of thoughts I've been turning over in my head a lot, but: on the subject of Industrial Agriculture, the Earth's carrying capacity, and agroforestry

Writings from people who propose policy changes to secure the future of Earth treat energy use by organisms in (what seems to me like) the most infuriatingly presumptive, simplistic terms and I don't know why or what's wrong or what I'm missing here.

Humans have to use some share of the solar energy that reaches Earth to continue existing.

The first problem is when writers appear to assume that our current use of solar energy via the agricultural system (we grow plants that turn light into food) is already maximally efficient.

The second problem is when writers see land as having one "use" that excludes all other uses, including by other organisms.

The way I see it, the thing is, we learned how to farm from natural environments. Plant communities and farms are doing the same thing, capturing energy from the Sun and creating biomass, right? The idea of farming is to make it so that as much as possible of that biomass is stuff that can be human food.

So instead of examining the most efficient crops or even the most efficient agricultural systems, I think we need to examine the most efficient natural ecosystems and how they do it.

What I'm saying is...in agricultural systems where a sunbeam can hit bare dirt instead of a leaf, that's inefficiency. In agricultural systems where the nutrients in dead plant matter are eroded away instead of building the soil, that's inefficiency. Industrial agriculture is hemorrhaging inefficiency. And it's not only that, it's that industrial agriculture causes topsoil to become degraded, which is basically gaining today's productivity by taking out a loan from the future.

I first started thinking about this with lawns: a big problem with monocultures is ultimately that they occupy a single niche.

In the wild, plant communities form layers of plants that occupy different niches in space. So in a forest you have your canopy, your understory, your forest floor with herbaceous plants, and you have mosses and epiphytes, and basically if any sunbeams aren't soaked up by the big guys in the canopy, they're likely to land on SOME leaf or other.

Monocultures like lawns are so damn hard to sustain because they're like a restaurant with one guy in it and 20 empty tables, and every table is loaded with delicious food. And right outside the restaurant is a whole crowd of hungry people.

Once the restaurant is at capacity and every table is full, people will stop coming in because there's no room. But as long as there's lots of room and lots of food, people will pour in!

So a sunny lawn has lots of food (sunlight) and lots of room (the soil and the air above the soil can fit a whole forest's worth of plant material). So nature is just bombing that space with aggressive weeds non-stop trying to fill those niches.

A monoculture corn field has a lot of the same problems. It could theoretically fit more plants, if those plants slotted into a niche that the corn didn't. Native Americans clear across the North American continent had the Three Sisters as part of their agricultural strategy—you've got corn, beans, and squash, and the squash fits the "understory" niche, and the corn provides a vertical support for the beans.

We dump so many herbicides on our monocultures. That's a symptom of inefficient use of the Sun, really. If the energy is going to plants we can't eat instead of plants we can, that's a major inefficiency.

But killing the weeds doesn't fully close up that inefficiency. It improves it, but ultimately, it's not like 100% of the energy the weeds would be using gets turned into food instead. It's just a hole, because the monoculture can't fill the same niches the weeds do.

The solution—the simple, brilliant solution that, to me, is starting to appear common throughout human agricultural history—is to eat the weeds too.

Dandelions are a common, aggressive weed. They're also an edible food crop.

In the USA, various species of Amaranth are our worst agricultural weeds. They were also the staple food crop that fed empires in Mesoamerica.

Purslane? Edible. Crabgrass? Edible.

A while back I noticed a correlation in the types of plants that don't form mycorrhizal associations. Pokeweed, purslane, amaranth—WEEDS. This makes perfect sense, because weeds are disaster species that pop up in disturbed soil, and disturbed soil isn't going to have much of a mycorrhizal network.

But, you know what else is non-mycorrhizal? Brassicas—i.e., the plant that humans bred into like 12 different vegetables, including broccoli and brussels sprouts.

My hypothesis is that these guys were part of a Weed Recruitment Event wherein a common agricultural weed got domesticated into a secondary food crop. I bet the same thing happened with Amaranth. I bet—and this is my crazy theory here—I bet a lot of plants were domesticated not so much based on their use as food, but based on their willingness to grow in the agricultural fields that were being used for other crops.

So, Agroforestry.

Agroforestry has the potential for efficiency because it's closer to a more efficient and "complete" plant community.

People keep telling me, "Food forests are nowhere near as efficient as industrial agriculture, only industrial agriculture can feed the world!" and like. Sure, if you look at a forest, take stock of what things in it can be eaten, and tally up the calories as compared to a corn field (though the amount of edible stuff in a forest is way higher than you think).

But I think it's stupid to act like a Roundup-soaked corn field in Kansas amounts to the pinnacle of possible achievement in terms of agricultural productivity. It's a monoculture, it's hard to maintain and wasteful and leaves a lot of niches empty, and it's destroying the topsoil upon which we will depend for life in the future.

I think it's stupid to act like we can guess at what the most efficient possible food-producing system is. The people who came before us didn't spend thousands of years bioengineering near-inedible plants into staple food crops via just waiting for mutations to show up so that we, possessing actual ability to alter genes in a targeted way, could invent some kind of bullshit number for the carrying capacity of Earth based on the productive capability of a monoculture corn field.

Like, do you ever think about how insane domestication is? It's like if Shakespeare's plays were written by generation after generation of people who gave a bunch of monkeys typewriters and spent every day of their lives combing through the output for something worth keeping.

"How do we feed the human race" is a PAINFULLY solvable problem. The real issue is greed, politics, and capitalism...

...lucky for us, plants don't know what those things are.

Okay, time to get pretentious and REALLY talk about this shot.

So put on your over-analysis goggles, and let’s talk about the Imperial Cog, Renaissance-era military forts, 18th century prison architecture, the military-industrial complex, the surveillance state, and why this single shot of Mon Mothma standing in a doorway in “Nobody’s Listening!” (the 9th episode of Andor season one) is making me so feral I want to kiss Luke Hull and his entire production design team right on the mouth.

For those of you not in the know - the shape on the screen behind Major Partagaz is the crest of the Galactic Empire - often called the Imperial Cog. It appears throughout Star Wars media on flags, TIE fighter helmets, uniforms and as a glowing hologram outside ISB HQ.

In canon it was adapted from the crest of the Galactic Republic.

irl it was created by original trilogy costume designer John Mollo. Mollo has stated that the symbol was inspired by the shape of historical fortifications.

Bastion forts (aka star forts) first appeared during the Renaissance with the advent of the cannon. Their shape eliminated blind spots, allowing for a 360-degree field of fire.

An apt metaphor for the Empire. Powerful, imposing and leaving you with nowhere to hide.

The Imperial crest also strongly resembles a gear or cog - hence the common “Imperial Cog” nickname.

Given how inextricably linked military and industry are, it’s also an apt metaphor. Both alluding to the Empire’s massive industrial power, and how it treats all of its citizens with a startling lack of humanity, valuing them only for what they are able to produce for the Empire.

The idea of the cog is repeated in the shape of whatever it is that they’re producing in the prison. They’re literally cogs in the Imperial machine making more cogs for the machine... while inside a larger cog.

This shape, in relation to a prison, also references something else which was almost certainly intentional on the production team’s part.

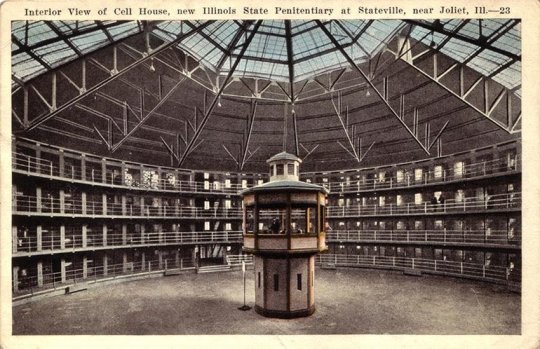

In 1791, British philosopher and social theorist Jeremy Bentham proposed a design for a prison he called the “panopticon” - the name derived from the Greek for “all seeing”.

The basic design for the panopticon was a large circular rotunda of cells with a single watchtower in the center. The plan would theoretically allow a single guard to observe every cell in the prison, but more importantly it would cause the prisoners to believe they were under surveillance at all times, without ever being certain.

Later philosophers (notably Michel Foucault) used the panopticon as a metaphor for social control under totalitarian regimes or surveillance states. The perceived constant surveillance of a panopticon causes prisoners to self-police due to the belief they are always being watched, even if they don’t know for certain that it is true. They live in constant fear even if nobody is actually watching them, even if “Nobody’s Listening!”

The idea of the metaphorical panopticon has in more recent years been adapted to many other examples of social control: CCTV, social media and business management...

Like the concept of cubicles in an open floor plan office.

So that all being established - let’s finally talk about Mon Mothma’s apartment.

The cog shape is everywhere. There’s hardly a shot where at least one cog isn’t visible. Every room is connected by cog-shaped doorways.

The shape serves as a backdrop to most scenes, often centered and featured prominently.

(Side Note: The cog also appears as a repeated pattern on room dividers with the interesting added detail of intersecting lines that make them resemble spider webs.

The fact that Mon is often filmed directly through these web-like screens (particularly when conducting rebellion business) leads me to believe that this was a very intentional choice.

Even in the very heart of the Empire the nascent Rebellion is starting to build a web of networks and intelligence.)

I had originally presumed that the repeated appearance of the cog was just Luke Hull and his production team adding some brilliant visual storytelling to their already amazing sets. But the following line from episode ten leads me to believe they intended for these details to have an in-story explanation as well.

When speaking to Tay and Davo Skuldon about the apartment, Mon states that “It’s state property. The rules are strict on decor. Our choices for change are limited.”

While it’s unclear whether the “state” in this instance is Mon’s home planet of Chandrila or the Empire itself - that second option makes the decor even more insidious.

If Mon’s apartment is Empire property that means the shape of the doors is intentional in-world, not just for the sake of visual storytelling. It means that this was a conscious decision by the Empire. A reminder to even the richest and most powerful of its citizens that they are always watching - whether you can see them or not.

Which brings us back to our original shot.

My favorite thing about this shot isn’t just that it shows how very alone Mon Mothma is.

It isn’t just that she’s in the heart of the Empire, surrounded and dwarfed - just another cog in their machine.

It isn’t just that she’s trapped in her own metaphorical prison, worrying herself sick about who may be watching, not safe even in her own home.

What makes this shot truly extraordinary to me, is that right in the midst of the Empire you can see a new symbol forming.

Forming with Mon Mothma right at the center.

It’s a bit blocky, still constrained by the harsh lines of the Empire, but given how intentional every design decision on this show has been, I find it pretty hard to believe it’s there by accident.

A symbol that will one day adorn the helmet of a boy from Tatooine.

One that will come to represent what all rebellions are built on...

#andor#andor spoilers#star wars#HOW IS THIS SHOW SO GOOD?#mon mothma#production design#history#long post#whose extensive knowledge of star wars and the history of the criminal justice system is useless now MOM

Podcasting "Microincentives and Enshittification"

Tomorrow (Oct 25) at 10hPT/18hUK, I'm livestreaming an event called "Seizing the Means of Computation" for the Edinburgh Futures Institute.

This week on my podcast, I read my recent Medium column, "Microincentives and Enshittification," about the way that monopoly drives mediocrity, with Google's declining quality as Exhibit A:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

It's not your imagination: Google used to be better – in every way. Search used to be better, sure, but Google used to be better as a company. It treated its workers better (for example, not laying off 12,000 workers months after a stock buyback that would have paid their salaries for the next 27 years). It had its users' backs in policy fights – standing up for Net Neutrality and the right to use encryption to keep your private data private. Even when the company made ghastly mistakes, it repented of them and reversed them, like the time it pulled out of China after it learned that Chinese state hackers had broken into Gmail in order to discover which dissidents to round up and imprison.

None of this is to say that Google used to be perfect, or even, most of the time, good. Just that things got worse. To understand why, we have to think about how decisions get made in large organizations, or, more to the point, how arguments get resolved in these organizations.

We give Google a lot of shit for its "Don't Be Evil" motto, but it's worth thinking through what that meant for the organization's outcomes over the years. Through most of Google's history, the tech labor market was incredibly tight, and skilled engineers and other technical people had a lot of choice as to where they worked. "Don't Be Evil" motivated some – many – of those workers to take a job at Google, rather than one of its rivals.

Within Google, that meant that decisions that could colorably be accused of being "evil" would face some internal pushback. Imagine a product design meeting where one faction proposes something that is bad for users, but good for the company's bottom line. Think of another faction that says, "But if we do that, we'll be 'evil.'"

I think it's safe to assume that in any high-stakes version of this argument, the profit side will prevail over the don't be evil side. Money talks and bullshit walks. But what if there were also monetary costs to being evil? Like, what if Google has to worry about users or business customers defecting to a rival? Or what if there's a credible reason to worry that a regulator will fine Google, or Congress will slap around some executives at a televised hearing?

That lets the no-evil side field a more robust counterargument: "Doing that would be evil, and we'll lose money, or face a whopping fine, or suffer reputational harms." Even if these downsides are potentially smaller than the upsides, they still help the no-evil side win the argument. That's doubly true if the downsides could depress the company's share-price, because Googlers themselves are disproportionately likely to hold Google stock, since tech companies are able to get a discount on their wage-bills by paying employees in abundant stock they print for free, rather than the scarce dollars that only come through hard graft.

When the share-price is on the line, the counterargument goes, "That would be evil, we will lose money, and you will personally be much poorer as a result." Again, this isn't dispositive – it won't win every argument – but it is influential. A counterargument that braids together ideology, institutional imperatives, and personal material consequences is pretty robust.

Which is where monopoly comes in. When companies grow to dominate their industries, they are less subject to all forms of discipline. Monopolists don't have to worry about losing disgusted employees, because they exert so much gravity on the labor market that they find it easy to replace them.

They don't have to worry about losing customers, because they have eliminated credible alternatives. They don't have to worry about losing users, because rivals steer clear of their core business out of fear of being bigfooted through exclusive distribution deals, predatory pricing, etc. Investors have a name for the parts of the industry dominated by Big Tech: they call it "the kill zone" and they won't back companies seeking to enter it.

When companies dominate their industries, they find it easier to capture their regulators and outspend public prosecutors who hope to hold them to account. When they lose regulatory fights, they can fund endless appeals. If they lose those appeals, they can still afford the fines, especially if they can use an army of lawyers to make sure that the fine is less than the profit realized through the bad conduct. A fine is a price.

In other words, the more dominant a company is, the harder it is for the good people within the company to win arguments about unethical and harmful proposals, and the worse the company gets. The internal culture of the company changes, and its products and services decline, but meaningful alternatives remain scarce or nonexistent.

Back to Google. Google owns more than 90% of the search market. Google can't grow by adding more Search users. The 10% of non-Google searchers are extremely familiar with Google's actions. To switch to a rival search engine, they have had to take many affirmative, technically complex steps to override the defaults in their devices and tools. It's not like an ad extolling the virtues of Google Search will bring in new customers.

Having saturated the search market, Google can only increase its Search revenues by shifting value from searchers or web publishers to itself – that is, the only path to Search growth is enshittification. They have to make things worse for end users or business customers in order to make things better for themselves:

https://pluralistic.net/2023/01/21/potemkin-ai/#hey-guys

This means that each executive in the Search division is forever seeking out ways to shift value to Google and away from searchers and/or publishers. When they propose an enshittificatory tactic, Google's market dominance makes it easy for them to win arguments with their teammates: "this may make you feel ashamed for making our product worse, but it will not make me poorer, it will not make the company poorer, and it won't chase off business customers or end users, therefore, we're gonna do it. Fuck your feelings."

After all, each microenshittification represents only a single Jenga block removed from the gigantic tower that is Google Search. No big deal. Some Google exec made the call to make it easier for merchants to buy space overtop searches for their rivals. That's not necessarily a bad thing: "Thinking of taking a vacation in Florida? Why not try Puerto Rico – it's a US-based Caribbean vacation without the transphobia and racism!"

But this kind of advertising also opens up lots of avenues for fraud. Scammers clone local restaurants' websites, jack up their prices by 15%, take your order, and transmit it to the real restaurant, pocketing the 15%. They get clicks by using some of that rake to buy an ad based on searches for the restaurant's name, so they show up overtop of it and rip off inattentive users:

https://pluralistic.net/2023/02/24/passive-income/#swiss-cheese-security

This is something Google could head off; they already verify local merchants by mailing them postcards with unique passwords that they key into a web-form. They could ban ads for websites that clone existing known merchants, but that would incur costs (engineer time) and reduce profits, both from scammers and from legit websites that trip a false positive.

The decision to sell this kind of ad, configured this way, is a direct shift of value from business customers (restaurants) and end-users (searchers) to Google. Not only that, but it's negative sum. The money Google gets from this tradeoff is less than the cost to both the restaurant (loss of goodwill from regulars who are affronted because of a sudden price rise) and searchers (who lose 15% on their dinner orders). This trade-off makes everyone except Google worse off, and it's only possible when Google is the only game in town.

It's also small potatoes. Last summer, scammers figured out how to switch out the toll-free numbers that Google displayed for every airline, redirecting people to boiler-rooms where con-artists collected their credit-card numbers and sensitive personal information (passports, etc):

https://www.nbcnews.com/tech/tech-news/phone-numbers-airlines-listed-google-directed-scammers-rcna94766

Here again, we see a series of small compromises that lead to a massive harm. Google decided to show users 800 numbers rather than links to the airlines' websites, but failed to fortify the process for assigning phone numbers to prevent this absolutely foreseeable type of fraud. It's not that Google wanted to enable fraud – it's that they created the conditions for the fraud to occur and failed to devote the resources necessary to defend against it.

Each of these compromises indicates a belief among Google decision-makers that the consequences for making their product worse will be outweighed by the value the company will generate by exposing us to harm. One reason for this belief is on display in the DOJ's antitrust case against Google:

https://www.justice.gov/opa/press-release/file/1328941/download

The case accuses Google of spending tens of billions of dollars to buy out the default search position on every platform where an internet user might conceivably perform a search. The company is lighting multiple Twitters worth of dollars on fire to keep you from ever trying another search engine.

Spraying all those dollars around doesn't just keep you from discovering a better search engine – it also prevents investors from funding that search engine in the first place. Why fund a startup in the kill-zone if no one will ever discover that it exists?

https://www.theverge.com/23802382/search-engine-google-neeva-android

Of course, Google doesn't have to grow Search to grow its revenue. Hypothetically, Google could pursue new lines of business and grow that way. This is a tried-and-true strategy for tech giants: Apple figured out how to outsource its manufacturing to the Pacific Rim; Amazon created a cloud service; Microsoft figured out how to transform itself into a cloud business.

Look hard at these success stories and you discover another reason that Google – and other large companies – struggle to grow by moving into adjacent lines of business. In each case – Apple, Microsoft, Amazon – the exec who led the charge into the new line of business became the company's next CEO.

In other words: if you are an exec at a large firm and one of your rivals successfully expands the business into a new line, they become the CEO – and you don't. That ripples out within the whole org-chart: every VP who becomes an SVP, every SVP who becomes an EVP, and every EVP who becomes a president occupies a scarce spot that is worth millions of dollars to the people who lost it.

The one thing that execs reliably collaborate on is knifing their ambitious rivals in the back. They may not agree on much, but they all agree that that guy shouldn't be in charge of this lucrative new line of business.

This "curse of bigness" is why major shifts in big companies are often attended by the return of the founder – think of Gates going back to Microsoft or Brin returning to Google to oversee their AI projects. They are the only execs that other execs can't knife in the back.

This is the real "innovator's dilemma." The internal politics of large companies make Machiavelli look like an optimist.

When your company attains a certain scale, any exec's most important rival isn't the company's competitor – it's other execs at the same company. Their success is your failure, and vice-versa.

This makes the business of removing Jenga blocks from products like Search even more fraught. These quality-degrading, profit-goosing tactics aren't coordinated among the business's princelings. When you're eating your seed-corn, you do so in private. This secrecy means that it's hard for different product-degradation strategists to realize that they are removing safeguards that someone else is relying on, or that they're adding stress to a safety measure that someone else just doubled the load on.

It's not just Google, either. All of tech is undergoing a Great Enshittening, and that's due to how intertwined all these tech companies are. Think of how Google shifts value from app makers to itself, with a 30% rake on every dollar spent in an app. Google is half of the mobile duopoly, with the other half owned by Apple. But they're not competitors – they're co-managers of a cartel. The single largest deal that Google or Apple does every year is the bribe Google pays Apple to be the default search for iOS and Safari – $15-20b, every year.

If Apple and Google were mobile competitors, you'd expect them to differentiate their products, but instead, they've converged – both Apple and Google charge sky-high 30% payment processing fees to app makers.

Same goes for Google/Facebook, the adtech duopoly: not only do both companies charge advertisers and publishers sky-high commissions, clawing 51 cents out of every ad dollar, but they also illegally colluded to rig the market and pay themselves more, at advertisers' and publishers' expense:

https://en.wikipedia.org/wiki/Jedi_Blue

It's not just tech, either – every sector from athletic shoes to international sea-freight is concentrated into anti-competitive, value-annihilating cartels and monopolies:

https://www.openmarketsinstitute.org/learn/monopoly-by-the-numbers

As our friends on the right are forever reminding us: "incentives matter." When a company runs out of lands to conquer, the incentives all run one direction: downhill, into a pit of enshittification. Google got worse, not because the people in it are worse (or better) than they were before – but because the constraints that discipline the company and contain its worst impulses got weaker as the company got bigger.

Here's the podcast episode:

https://craphound.com/news/2023/10/23/microincentives-and-enshittification/

And here's a direct link to the MP3 (hosting courtesy of the Internet Archive; they'll host your stuff for free, forever):

https://archive.org/download/Cory_Doctorow_Podcast_452/Cory_Doctorow_Podcast_452_-_Microincentives_and_Enshittification.mp3

And here's my podcast's RSS feed:

http://feeds.feedburner.com/doctorow_podcast

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

#pluralistic#podcasts#enshittification#google#microincentives#monopoly#incentives matter#trustbusting#the curse of bigness


Text

Time for some tracts:

"How do we create jobs?" You raise the minimum wage, because if people don't need to work three jobs to make rent, those other two jobs will mysteriously open up.

"How do we support small businesses?" You raise the minimum wage, staggered to the biggest corporations first.

"How do we reduce homelessness?" You raise the minimum wage.

"How do we make sure raising the minimum wage doesn't negatively impact prices or--?"

Prices are already rising faster than wages are, this is playing catch up.

Put a cap on CEO salaries and bonuses: they can't earn more than 100 times what their lowest-paid workers earn. The current US ratio is 342, which is insane. (This list is mostly about the US.)

Hit corporations first and give small businesses time to adjust. McDonald's and Walmart can afford to raise wages to $20/hr before anyone else does; they have that income.

Drop the weekly hours required for insurance from thirty to fifteen. This will disincentivize employers from keeping everyone at 29 hours a week, partly because working only 14 hours a week is a great way to end up with undertrained, underpracticed staff. Full-time employment becomes the new rule.

Legalize salary transparency for all positions; NYC's new law is a good start.

Legislation that prevents companies from selling at American prices while paying local wages abroad. Did you know that McDonald's costs as much or more in Serbia, where the minimum wage is about $2/hr? Did you know that a lot of foreign products, like makeup, are a solid 20% more expensive? Did you know that Starbucks prices are equivalent? Did you know that these companies charge American prices while paying their employees local wages? As a more extreme example, luxury goods made in sweatshops are something we all know is a problem, from Apple iPhones to Forever 21 blouses, often involving child labor too. So a requirement to match the cost-to-wage ratio (either drop your prices or raise your wages when producing or selling abroad) would be great.

Not directly a minimum wage thing but still important:

Enact fees and caps on rent and housing. A good plan would probably be to have it in direct ratio to mortgage (or estimated building value, if it's already paid off), property tax, and estimated fees. This isn't going to work everywhere, since housing prices themselves are insanely high, but hey--people will be able to afford those difficult rent costs if they're earning more.

Trustbusting monopolies and megacorps like Amazon, Disney, Walmart, Google, Verizon, etc.

Tax the rich. I know this is incredibly basic but tax the fucking rich, please.

Fund the IRS to full power again. They are a skeleton crew that cannot audit the megarich due to lack of manpower, and that's where most of the taxes are being evaded.

Universal healthcare. This is so basic but oh my god we need universal healthcare. You can still have private practitioners and individual insurance! But a national healthcare system means people aren't going to die for a weird mole.

More government-funded college grants. One of the great issues in the US is the lack of healthcare workers. This has many elements, and while burnout is a big one, the massive financial costs of medical school and training are a major barrier to entry. While there are many industries where this is true, the medical field is one of the most impacted, and one of the most necessary to the success of a society. Lowering those financial barriers can only help the healthcare crisis by providing more medical professionals who are less prone to burnout because they don't need to work as many hours.

And even if those grants aren't total, guess what! That higher minimum wage we were talking about is a great way to ensure students have less debt coming out the other side if they're working their way through college.

------------------

Linda P requested something either really interesting or really silly and this is... definitely more of a tract on a topic of interest (the minimum wage and other ways business and government are both being impeded by corporate greed) than on a topic of Silly. Hope it's still good!


Note

so do you actually support ai "art" or is that part of the evil bit :| because um. yikes.

Let me preface this by saying: I think the cutting edge of AI as we know it sucks shit. ChatGPT spews worthless, insipid garbage as a rule, and frequently provides enticingly fluent and thoroughly wrong outputs whenever any objective fact comes into play. Image generators produce over-rendered, uncanny slop that often falls to pieces under the lightest scrutiny. There is little that could convince me to use any AI tool currently on the market, and I am notably more hostile to AI than many people I know in real life in this respect.

That being said, these problems are not inherent to AI. In two years, or a decade, perhaps they will be our equals in producing writing and images. I know a philosopher who is of the belief that one day, AI will simply be better than us - smarter, funnier, more likeable in conversation - I am far from convinced of this myself, but let us hope, if such a case arises, they don't get better at ratfucking and warmongering too.

Many of the inherent problems posed by AI are philosophical in nature. Would a sufficiently advanced AI be appreciably different to a conscious entity? Can their outputs be described as art? These are questions whose mere axioms could themselves be argued over in PhD theses ad infinitum. I am not particularly interested in these, for to be so on top of the myriad demands of my work would either drive me mad or kill me outright. Fortunately, their fractally debatable nature means that no watertight argument could be given to them by you, either, so we may declare ourselves in happy, clueless agreement on these topics so long as you are willing to confront their unconfrontability.

Thus, I would prefer to turn to the current material issues encountered in the creation and use of AI. These, too, are not inherent to their use, but I will provide a more careful treatment of them than a simple supposition that they will evaporate in coming years.

I would consider the principal material issues surrounding AI to lie in the replacement of human labourers and the wanton generation of garbage content it facilitates, and the ethics of training it on datasets collected without contributors' consent. In the first case, it is prudent to recall the understanding of Luddites held by Marx - he says, in Ch. 15 of Das Kapital: "It took both time and experience before workers learnt to distinguish between machinery and its employment by capital, and therefore to transfer their attacks from the material instruments of production to the form of society which utilises those instruments." The Industrial Revolution's novel forms of production and their subsequent societal consequences have mirrored the majority of advances in production since. As then, the commercial application of the new technology must be understood to be a product of capital. To resist the technology itself on these grounds is to melt an iceberg's tip, treating the vestigial symptom of a vast syndrome. The replacement of labourers is with certainty a pressing issue that warrants action, but such action must be considered and strategic, rather than a reflexive reaction to something new. As is clear in hindsight for the technology of two centuries ago, mere impedance of technological progression is not for the better.

The second case is one I find deeply alarming - the degradation of written content's reliability threatens all knowledge, extending to my field. Already, several scientific papers have drawn outrage in being seen to pass peer review despite blatant inclusion of AI outputs. I would be tempted, as a joke to myself more than to others, to begin this response with "Certainly. Here is how you could respond to this question:" so as to mirror these charlatans, were it not certain to enrage a great many who don't know better than to fall for such a trick. This issue, however, is one I believe to be ephemeral - so pressing is it that a response must be formulated by those who value understanding. And so responses are being formulated - major online information sources, such as Wikipedia and its sister projects, have written or are writing rules on their use. The journals will, in time, scramble to save their reputations and dignities, and do so thoroughly - academics have professional standings to lose, so keeping them from using LLMs is as simple as threatening those. Perhaps nothing will be done for your average Google search result - though this is far from certain - but it has always been the conventional wisdom that more than one site ought to be consulted in a search for information.

The third is one I am torn on. My first instinct is to condemn the training of AI on material gathered without consent. However, this becomes more and more problematic with scrutiny. Arguments against this focusing on plagiarism or direct theft are pretty much bunk - statistical models don't really work like that. Personal control of one's data, meanwhile, is a commendable right, but is difficult to ensure without merely extending the argument made by the proponents of copyright, which is widely understood to be a disastrous construct that for the most part harms small artists. In this respect, then, it falls into the larger camp of problems primarily caused by the capital wielding the technology.

Let me finish this by posing a hypothetical. Suppose AI does, as my philosopher friend believes, become smarter and more creative than us in a few years or decades; suppose in addition it may be said through whatever means to be entirely unobjectionable, ethically or otherwise. Under these circumstances, would I then go to a robot to commission art of my fursona? The answer from me is a resounding no. My reasoning is simple - it wouldn't feel right. So long as the robot remains capable of effortlessly and passionlessly producing pictures, it would feel like cheating. Rationally explaining this deserves no effort - my reasoning would be motivated by the conclusion, rather than vice versa. It is simply my personal taste not to get art I don't feel is real. It is vitally important, however, that I not mistake this feeling as evidence of any true inferiority - to suppose that effortlessness or passionlessness invalidates art is to stray back into the field of messy philosophical questions. I am allowed, as are you, to possess personal tastes separate from the quality of things.

Summary: I don't like AI. However, most of the problems with AI which aren't "it's bad" (likely to be fixed over time) or abstract philosophical questions (too debatable to be used to make a judgement) are material issues caused by capitalism, just as communists have been saying about every similarly disruptive new technology for over a century. Other issues can likely be fixed over time, as with quality. From a non-rational standpoint, I dislike the idea of using AI even separated from current issues, but I recognise, and encourage you to recognise, that this is not evidence of an actual inherent inferiority of AI in the abstract. You are allowed to have preferences that aren't hastily rationalised over.


Text

"Abolition forgery":

So, observers and historians have, for a long time, since the first abolition campaigns, talked and written a lot about how Britain and the United States sought to improve their image and optics in the early nineteenth century by endorsing the formal legal abolition of chattel slavery, while the British and US states and their businesses/corporations used this legal abolition as a cloak to receive credit for being nice, benevolent liberal democracies while they actually replaced the lost “productivity” of slave laborers by expanding the use of indentured laborers and prison laborers, achieved by passing laws to criminalize poverty, vagabondage, loitering, etc., to capture and imprison laborers. Like, this was explicit; we can read about these plans in the journals and letters of statesmen and politicians from that time. Many "abolitionist" politicians were extremely anxious about how to replace the lost labor. This use of indentured labor and prison labor has been extensively explored in study/discussion fields (discourse on the Revolutionary Atlantic, the Black Atlantic, the Caribbean, the American South, prisons, etc.). Basic stuff at this point. Both slavery-based plantation operations and contemporary prisons are concerned with mobility and immobility, how to control and restrict the movement of people, especially Black people. After the “official” abolition of slavery, Europe and the United States then disguised their continued use of forced labor with the language of freedom, liberation, etc. And this isn't merely historical revisionism; critics and observers from that time (during the Haitian Revolution around 1800 or in the 1830s in London, for example) were conscious of how governments were actively trying to replicate this system of servitude.

And recently I came across this term that I liked, from scholar Ndubueze Mbah.

He calls this “abolition forgery.”

Mbah uses this term to describe how Europe and the US disguised ongoing forced labor, how these states “fake” liberation, making a “forgery” of justice.

But Mbah then also uses “abolition forgery” in a dramatically different, ironic counterpoint: to describe how the dispossessed, the poor, found ways to confront the ongoing state violence by forging documents, faking paperwork, piracy, evasion, etc. They find ways to remain mobile, to avoid surveillance.

And this reminds me quite a bit of Sylvia Wynter’s now-famous kinda double-meaning and definition of “plot” when discussing the plantation environment. If you’re unfamiliar:

Wynter uses “plot” to describe the literal plantation plots, where slaves were forced to work in these enclosed industrialized spaces of hyper-efficient agriculture, as in plots of crops, soil, and enclosed private land. However, then Wynter expands the use of the term “plot” to show the agency of the enslaved and imprisoned, by highlighting how the victims of forced labor “plot” against the prison, the plantation overseer, the state. They make subversive “plots” and plan escapes and subterfuge, and in doing so, they build lives for themselves despite the violence. And in this way, they also extend the “plot” of their own stories, their own narratives. So by promoting the plot of their own narratives, in opposition to the “official” narratives and “official” discourses of imperial states which try to determine what counts as “legitimate” and try to define the course of history, people instead create counter-histories, liberated narratives. This allows an “escape”. Not just a literal escape from the physical confines of the plantation or the carceral state, an escape from the walls and the fences, but also an escape from the official narratives endorsed by empires, creating different futures.

(National borders also function in this way, to prevent mobility and therefore compel people to subject themselves to local work environments.)

Katherine McKittrick also expands on Wynter's ideas about plots and plantations, describing how contemporary cities restrict mobility of laborers.

So Mbah seems to be playing in this space with two different definitions of “abolition forgery.”

Mbah authored a paper titled ‘“Where There is Freedom, There Is No State”: Abolition as a Forgery’. He discussed the paper at the American Historical Association’s “Mobility and Labor in the Post-Abolition Atlantic World” symposium held on 6 January 2023. Here’s an abstract published online at AHA’s site: This paper outlines the geography and networks of indentured labor recruitment, conditions of plantation and lumbering labor, and property repatriation practices of Nigerian British-subjects inveigled into “unfree” migrant “wage-labor” in Spanish Fernando Po and French Gabon in the first half of the twentieth century. [...] Their agencies and experiences clarify how abolitionism expanded forced labor and unfreedom, and broaden our understanding of global Black unfreedom after the end of trans-Atlantic slavery. Because monopolies and forced labor [...] underpinned European imperialism in post-abolition West Africa, Africans interfaced with colonial states through forgery and illicit mobilities [...] to survive and thrive.

---

Also. Here’s a look at another talk he gave in April 2023.

[Excerpt:]

Ndubueze L. Mbah, an associate professor of history and global gender studies at the University at Buffalo, discussed the theory and implications of “abolition forgery” in a seminar [...]. In the lecture, Mbah — a West African Atlantic historian — defined his core concept of “abolition forgery” as a combination of two interwoven processes. He first discussed the usage of abolition forgery as “the use of free labor discourse to disguise forced labor” in European imperialism in Africa throughout the 19th and 20th centuries. Later in the lecture, Mbah provided a counterpoint to this definition of abolition forgery, using the term to describe the ways Africans trapped in a system of forced labor faked documents to promote their mobility across the continent. [...]

Mbah began the webinar by discussing the story of Jampawo, an African British subject who petitioned the British colonial governor in 1900. In his appeal, Jampawo cited the physical punishment he and nine African men endured when they refused to sign a Spanish labor contract that differed significantly from the English language contract they signed at recruitment and constituted terms they deemed to be akin to slavery. Because of the men’s consent in the initial English language contract, however, the governor determined that “they were not victims of forced labor, but willful beneficiaries of free labor,” Mbah said.

Mbah transitioned from this anecdote describing an instance of coerced contract labor to a discussion of different modes of resistance employed by Africans who experienced similar conditions under British imperialism. “Africans like Jampawo resisted by voting with their feet, walking away or running away, or by calling out abolition as a hoax,” Mbah said.

Mbah introduced the concept of African hypermobility, through which “coerced migrants challenged the capacity of colonial borders and contracts to keep them within sites of exploitation,” he said. [...] Mbah also discussed how the stipulations of forced labor contracts imposed constricting gender hierarchies [...]. To conclude, Mbah gestured toward how the system of forced labor persists in Africa today, yet it “continues to be masked by neoliberal discourses of democracy and of development.” [...] “The so-called greening of Africa [...] continues to rely on forced labor that remains invisible.” [End of excerpt.]

---

This text excerpt from: Emily R. Willrich and Nicole Y. Lu. “Harvard Radcliffe Fellow Discusses Theory of ‘Abolition Forgery’ in Webinar.” The Harvard Crimson. 13 April 2023. [Published online. Bold emphasis and some paragraph breaks/contractions added by me.]


Text

When you do not know a thing about the issue at stake...

...perhaps it's better to remain silent.

Some of you know, others don't - and that's fine - but my main field of expertise is labor law.

I just read this in anger and disbelief:

Look, lady. I don't care who the hell you are, what you do for a living or why you felt entitled to answer those insistent questions on your side of the fandom. I suppose you are North American and have no idea of how things work on this side of the pond. It is fine: I might know what a Congress filibuster is, for example, but I'd be severely unable to judge the finer points of competence sharing between the federal and state levels.

The difference between you and me?

I keep my mouth shut and/or do my own research before opening it in public.

Have you no shame to write things like: 'It was discovered clothing factories in Bulgaria and Portugal made it and how workers were exploited, mostly women, because these factories were in special economic zones in these countries exempt from EU employee rights and regulations.'

HOW DARE YOU? What strange form of illiterate entitlement possessed you to utter such things with confidence, comfortably hidden behind a passive voice ('it was discovered')?

Portugal joined the EU in 1986. Bulgaria (and my country) joined the EU in 2007. I have given 5 relentless years of my life to make this collective political project a reality, along with hundreds of other people my age who chose to come back home from the West and put their skills to good use for their country. In doing so, I rejected more than 10 excellent corporate job offers in France and China. To see you come along and write such enormities is like having you spit in my face.

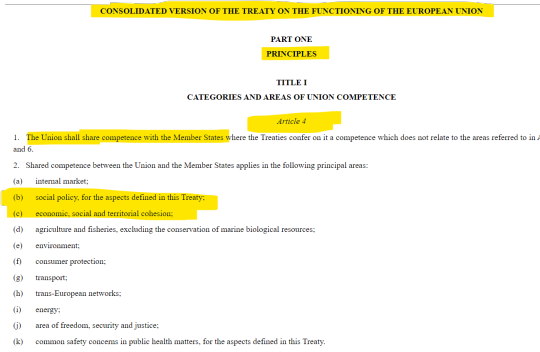

Article 4 of the Treaty on the Functioning of the European Union (aka The Treaty of Rome) is formal and clear, as far as competence sharing between the EU and its Member States goes (the UK was still, back then, a full member of the EU - it quit on February 1st 2020):

That means that ALL the EU regulations are being integrated into the national legislation of the Member States. This is not a copy/paste process, however. And because it is a shared competence area, the Member States have a larger margin of appreciation in making the EU rules a part of their own. While exceptions or delays in this process can be and are negotiated, the core principles are NEVER touched.

Read it one hundred times, madam, maybe you'll learn something today:

THERE ARE NO SPECIAL ECONOMIC ZONES IN THE EUROPEAN UNION. THE WHOLE FUCKING EUROPEAN UNION IS A SPECIAL ECONOMIC ZONE, THIS IS WHY IT IS CALLED THE SINGLE MARKET.

What the fuck do you think we are, Guangzhou? We'd wish, seeing the growth statistics!

Now, to the textile sector, and particularly the Bulgarian market, a case very similar to my own country's. Starting around 1965, many big European textile players realized the competitive advantage of using the lower paid, readily available Eastern European workforce. In order to be able to do business with all those dour Communist regimes, the solution was simple and easy to find: toll manufacturing.

It worked (and still does!) like this:

The foreign partner brings its own designs, textiles and know-how into the mix - or more simply put, it outsources all these activities. The locals transform it into the finished product, using their own workforce. The result is then re-exported to the foreign partner, who labels it and sells it. In doing so, he has the legal obligation to include provenance on the label ('made in Romania', 'made in Indonesia', 'made in Bulgaria' - you name it).

The reason you might find fewer and fewer of those 'made in ' labels nowadays at Primark and more and more at Barbour, Moncler and the like is the constant rise in workers' wages in Eastern Europe since 1990 (things happened there, in 1989, maybe you remember?). We are not competitive anymore for midrange prêt-à-porter - China (Shein, anyone?), Cambodia and Mexico do come to mind as better suppliers. To speak about 'exploited female labourers in rickety old factories' is an insult and a lie. They weren't exploited back in the Eighties, just as they are not now (workers in those factories were and still are easily paid about 50% more than all the rest), and the factories being modernized and constantly updated was always a mandatory clause in any contract of the sort. Normal people in our countries rarely if ever saw those clothes. You had to either be lucky enough to catch a semi-confidential store release or bribe someone working there who was willing to take the risk, in order to be able to buy the rejected models on the local market.

If I understood correctly, you place this critical episode at the launch of the limited SRH & Barbour collection, for the fall of 2018. How convenient for you, who (I am told by trusted people) was one of the most vocal critics of S during Hawaii 2.0!

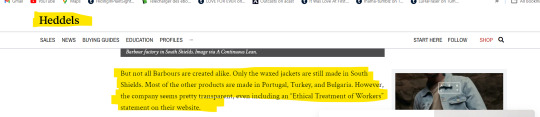

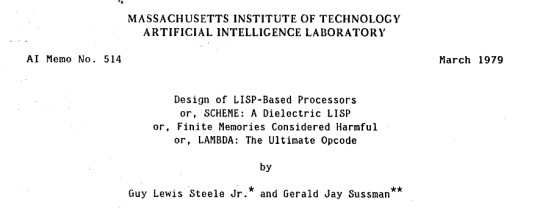

And as far as Barbour goes, it never pretended to manufacture everything in the UK only:

This information is absolutely true. You can read the whole statement, signed in October 2017 by one of their Directors, Ian Sime, here: https://www.barbour.com/us/media/wysiwyg/PDF/Ethical_Statement_October_2017.pdf

And a snapshot for you:

Oh, and: SEDEX is a behemoth in its world, with more than 75.000 companies joining as a member (https://www.sedex.com/become-a-member/meet-our-customers/). Big corporations like TESCO, Dupont, Nestle, Sainsbury's or Unilever included.

I am not Bulgarian, but I know all of this way better than you probably ever will. The same type of contracts were common all over Eastern Europe: Romania, Poland, the GDR (that's East Berlin and co, for you) and even the Soviet Union. I am also sure your Portuguese readers will be thrilled to see themselves qualified by a patronizing North American as labor exploiters living in a third-world country with rickety factories.

You people have no shame and never did. But you just proved with flying colors that you also have no culture and no integrity. More reasons to not regret my unapologetic fandom choice.

I expect an angry and very, very vulgar answer to this, even if I chose to not include your name/handle. The stench of your irrelevance crossed an ocean.


Note

Bitches, I've got a doozy of a story for you! I work at a manufacturing facility with lots of locations nationwide and internationally. Our field is competitive with several other companies, many of which also have facilities in the same area. My company also notoriously underpays its workers as far as the local work goes (can't speak for other locations, cuz I don't know).

Well, back in April, the whole top echelon (president, CEO, etc) came to speak to us in a company-wide meeting. The CEO talked about the growth of the company, blah blah blah. At the end, they asked if anyone had any questions, and when no one else did, I stood up.

I've been following you Bitches for years, and this particular day had been absolute hell on a personal level from the word go, so at this point, my give a damn was broken beyond repair. I looked the CEO dead in the eye and told him the reason the company has such a problem with retention (he *just* mentioned it) is because we're by far the lowest-paid company in the area. I also told him that the way it usually works is that we hire someone, they get a little experience, then they leave for the competition for a drastic increase in pay. (You can only imagine how loud 300-400 production workers are, let me tell you!)

Well, the CEO gave what sounded like a bunch of corporate political waffling, so no one expected anything to come of it.

BUT WAIT!

Last week, on the same day I started in a new position (with a hefty raise), everyone got letters stating how much their pay was increasing! And the starting pay jumped up significantly to compete with the other companies nearby!

It felt really good to be able to tip the dominoes to help everyone out. 😊

OH MY FUCKING STARS AND STRIPES!

You are a beacon of light, an explosion of goodness. You just did something HUGE for everyone you work with... AND somehow the company as well?!?! We're unbelievably proud of you.

Never doubt that you can make a difference, bitchlings. By stating an uncomfortable truth, this baby bitch just improved the lives of every single person they work with AND helped their employer with worker retention. You've changed your community and industry for the better and we're just over the moon with pride.



Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be and I either forgot it or I classified it more under "general ICs" instead of "computer chips" (e.g. 555, LM, 4000, 7000 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this, in the 60's you could run a chip fab out of a sufficiently well sealed garage, but they were busy building the background that would lead to the next sixty years.

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was attempts to implement Lisp right in silicon, no translation layer.

Yeah, that is Sussman himself on this paper.

These never left the lab. There have since been dozens of abortive attempts to make Lisp Chips happen because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed at running OpenGenera. I bet you there are no fewer than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips or the 3600) to go into their Lisp machines that exploited the up-and-coming field of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080, which is to say that it has the same instructions implemented in a completely different way.

This is, a strange choice, but it was the right one somehow, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80s, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here. What follows is a joke, but only barely:

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third-party manufacturers by Microsoft of Japan, which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip, but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy-to-program chip they could shove into appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks, so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast; you can go to RS Components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P5 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The Pentium P5 series is currently remembered for being the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P5 comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has a RISC microcode, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PCs.

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P5 we would live in a very, very different world from a computer hardware perspective.

From this fall many of the bizarre microcode execution bugs that plague modern computers, because when you're doing your optimization on the fly, on-chip, with a second, smaller Unix hidden inside your processor, eventually you're not going to be cryptographically secure.
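Here is a toy sketch of the general "sensible instruction set in the background" idea: a complex instruction that reads and writes memory gets cracked into simpler load / ALU / store steps that are easier to pipeline. The instruction syntax, the `tmp0`/`tmp1` temporary registers, and the micro-op set are all invented for illustration; this is not how any real Intel decoder is specified.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroOp:
    op: str
    dst: str
    src: str


def crack(instruction: str) -> list[MicroOp]:
    """Split a toy 'OP dst, src' instruction into load/ALU/store micro-ops.

    Either operand may be a memory reference written as [name]; plain
    register-to-register forms pass through as a single micro-op.
    """
    mnemonic, operands = instruction.split(maxsplit=1)
    dst, src = (part.strip() for part in operands.split(","))
    uops: list[MicroOp] = []
    if src.startswith("["):
        # Memory source: load it into a hidden temporary register first.
        uops.append(MicroOp("LOAD", "tmp0", src.strip("[]")))
        src = "tmp0"
    if dst.startswith("["):
        # Memory destination: load, operate on the temporary, store back.
        addr = dst.strip("[]")
        uops.append(MicroOp("LOAD", "tmp1", addr))
        uops.append(MicroOp(mnemonic, "tmp1", src))
        uops.append(MicroOp("STORE", addr, "tmp1"))
    else:
        uops.append(MicroOp(mnemonic, dst, src))
    return uops


for uop in crack("ADD [counter], eax"):
    print(uop.op, uop.dst, uop.src)
# LOAD tmp1 counter
# ADD tmp1 eax
# STORE counter tmp1
```

One "big" instruction becomes three small, uniform ones, which is the kind of thing a RISC-style pipeline underneath is happy to chew on.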

RISC is very clearly better for, most things. You can find papers stating this as far back as the 70's, when people started doing pipelining for the first time and were like "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."
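A back-of-the-envelope sketch of why small, uniform instructions suit a pipeline. It assumes a simple in-order pipeline where every stage takes one cycle except a single bottleneck stage, and it ignores real-world hazards, forwarding, and caches; the 5-stage depth and the 4-cycle "massive" instruction are made-up numbers for illustration.

```python
def unpipelined_cycles(n_instructions: int, stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * stages


def pipelined_cycles(bottleneck_cycles: list[int], stages: int) -> int:
    """In-order pipeline where one stage may take several cycles.

    bottleneck_cycles[i] is how long instruction i occupies the slow stage;
    every other stage takes one cycle. Later instructions queue behind a slow
    one, so the total is the fill time plus the sum of the bottleneck times.
    """
    if not bottleneck_cycles:
        return 0
    return stages - 1 + sum(bottleneck_cycles)


STAGES = 5               # assumed pipeline depth
simple = [1] * 10        # ten small, uniform instructions
mixed = [1, 4] * 5       # ten instructions, every other one hogs a stage for 4 cycles

print(unpipelined_cycles(10, STAGES))    # 50
print(pipelined_cycles(simple, STAGES))  # 14
print(pipelined_cycles(mixed, STAGES))   # 29
```

With uniform one-cycle instructions the pipeline finishes ten instructions in 14 cycles instead of 50; sprinkle in a few big ones and everything behind them waits, which eats a lot of that win.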

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun, as Intel had in the late 80's, you have to do something.

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, but from here on it's mostly microcontrollers, partly because the story gets pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post that has been in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here; it's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.


Genetically Modified Bacteria Produce Energy From Wastewater

E. coli is one of the most widely studied bacteria in academic research. Though most people probably associate it with food- and waterborne illness, most strains of E. coli are harmless. They even occur naturally within your intestines. Now, scientists at EPFL have engineered a strain of E. coli that can generate electricity.

The survival of bacteria depends on redox reactions. Bacteria use these reactions to interconvert chemicals in order to grow and metabolize. Since bacteria are an inexhaustible natural resource, many bacterial reactions have been put to industrial use, both for creating and for consuming chemical substrates. For instance, you may have heard about researchers discovering bacteria that can break down and metabolize plastic, the benefits of which are obvious. Some of these bacterial reactions are anabolic, which means they need an external supply of energy to proceed, but others are catabolic, which means they break molecules down and release energy.

Some bacteria, such as Shewanella oneidensis, can create electricity as they metabolize. This could be useful for a number of green applications, such as bioelectricity generation from organic substrates, reductive extracellular synthesis of valuable products such as nanoparticles and polymers, degradation of pollutants for bioremediation, and bioelectronic sensing. However, electricity-producing bacteria such as Shewanella oneidensis tend to be very finicky. They need strict conditions in order to survive, and they only produce electricity in the presence of certain chemicals.

The method that Shewanella oneidensis uses to generate electricity is called extracellular electron transfer (EET). The cell passes electrons out through a pathway of proteins that contain iron-bearing molecules called hemes. Gram-negative bacteria like Shewanella and E. coli have both an inner and an outer cell membrane, so this pathway spans both of them, along with the periplasmic space between. In the past, scientists have tried to give hardier bacteria such as E. coli this electron-transferring ability. It worked… a little bit. They were only able to create a partial EET pathway, so the amount of electricity generated was fairly small.

Now, the EPFL researchers have managed to create a full pathway and triple the amount of electricity that E. Coli can produce. "Instead of putting energy into the system to process organic waste, we are producing electricity while processing organic waste at the same time -- hitting two birds with one stone!" says Boghossian, a professor at EPFL. "We even tested our technology directly on wastewater that we collected from Les Brasseurs, a local brewery in Lausanne. The exotic electric microbes weren't even able to survive, whereas our bioengineered electric bacteria were able to flourish exponentially by feeding off this waste."

This development is still in the early stages, but it could have exciting implications both in wastewater processing and beyond.

"Our work is quite timely, as engineered bioelectric microbes are pushing the boundaries in more and more real-world applications," says Mouhib, the lead author of the manuscript. "We have set a new record compared to the previous state-of-the-art, which relied only on a partial pathway, and compared to the microbe that was used in one of the biggest papers recently published in the field. With all the current research efforts in the field, we are excited about the future of bioelectric bacteria, and can't wait for us and others to push this technology into new scales."


art vs industry

Sometimes I'm having a good day, but then I think about how industry is actively killing creative fields and the good mood goes away. People no longer go to woodworkers for tables, chairs, and cabinets; instead they pick from one of hundreds of mass-produced designs made out of cheap particle board rather than paying a carpenter for furniture that is built to last generations and leaves room for customization. With the growth of population and international trade, the convenience and low production costs are beneficial in some respects, but how many local craftsmen across the world were put out of business? How many people witnessed their craft die before their eyes? There is no heart or identity put into mass-produced items, be it furniture, ceramics, metalwork, or home decor, and at the end of the day everybody ends up with the same carbon-copy stuff in their homes.

I'm a big fan of animated movies, and I see the same thing happening there too. When was the last time Western audiences saw a new 2D animated movie hit theatres? I can't speak for other countries, but, at least in America, I believe The Princess and the Frog was the last major 2D movie released, and that was back in 2009. Major studios nowadays are unwilling to spend the time and money it would take to pay traditional animators, who have spent years honing their craft, to go frame by frame, and to pay painters to create scene backgrounds. We talk a lot about machines replacing jobs, but when the machines come, artistic professions are some of the first to be axed (in part because industry does not see them as "valuable"). Art, music, and writing are no longer seen as "real" jobs because they belong to the creative field, and there's this inane idea that anyone who goes into those fields will be unsuccessful and starving. I'm not saying that 3D animation is bad; it has its own merits and required skills and can be just as impressive as anything 2D, but it has smothered 2D animation and largely confined it to studios that cannot afford the tech to animate in 3D.