#stochastic model

Text

Stochastic Meaning, Definition, Pronunciation, Examples & Usage

Discover the full meaning of stochastic, its pronunciation, definitions, synonyms, antonyms, origin, grammar rules, examples, medical and scientific uses, and much more in this detailed guide. Stochastic. Pronunciation: /stəˈkæs.tɪk/ (IPA: [stəˈkæs.tɪk]). Definition of stochastic (adjective): involving or characterized by a random probability distribution or pattern that may be…
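To make the definition concrete, here is a minimal Python sketch (not part of the quoted guide) contrasting a deterministic model with a stochastic one. The function names and the ±1 random walk are illustrative choices, not anything the guide specifies.

```python
import random
from typing import List, Optional


def deterministic_growth(start: float, rate: float, steps: int) -> List[float]:
    """Deterministic model: identical inputs always produce the identical trajectory."""
    values = [start]
    for _ in range(steps):
        values.append(values[-1] * (1 + rate))
    return values


def random_walk(start: float, steps: int, seed: Optional[int] = None) -> List[float]:
    """Stochastic model: each step moves +1 or -1 with equal probability,
    so runs with different seeds generally produce different paths."""
    rng = random.Random(seed)  # a seeded generator makes a single run reproducible
    values = [start]
    for _ in range(steps):
        values.append(values[-1] + rng.choice([-1.0, 1.0]))
    return values


print(deterministic_growth(100.0, 0.05, 3))
print(random_walk(0.0, 10, seed=1))
```

Running the deterministic model twice gives the same numbers every time; the random walk only repeats itself if you fix the seed, which is exactly the "determined by probability" part.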

#stochastic#stochastic antonyms#stochastic definition#stochastic examples#stochastic in math#stochastic in medicine#stochastic in science#stochastic meaning#stochastic model#stochastic origin#stochastic process#stochastic pronunciation#stochastic synonyms#stochastic usage#stochastic vs random#what is stochastic

0 notes

Text

classroom observer, looking at a display board in my classroom: so iz, you're pretty crafty!

me: nah not really...

my manager and assistant teacher: yes they absolutely are

#i need to figure out how to take compliments#i've gotten quite a few recently about my classroom and how we work with our kids and i just 😵💫#of course it's always nice to hear, but this observer saying she wants to use my room as a model for how others should do things???#stochastic ramblings

0 notes

Text

Day 6: Why the Normal Equation Works Without Gradient Descent

Understanding Linear Regression: The Normal Equation and Matrix Multiplications Explained

Linear regression is a fundamental concept in machine learning and statistics, used to predict a target variable based on one or more input features. While gradient descent is a popular method for finding the…
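The excerpt cuts off before the math, so here is a minimal sketch, assuming a single input feature plus an intercept, of the standard closed form θ = (XᵀX)⁻¹Xᵀy next to plain gradient descent on the same toy data. The function names, learning rate, and epoch count are illustrative assumptions, not taken from the original post.

```python
from typing import List, Tuple


def normal_equation(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Closed-form fit of y ≈ b0 + b1*x: solve (XᵀX)θ = Xᵀy for θ = (b0, b1).

    With a column of ones for the intercept, XᵀX = [[n, Σx], [Σx, Σx²]]
    and Xᵀy = [Σy, Σxy], so the 2x2 inverse can be written out by hand."""
    n = len(xs)
    sx, sxx = sum(xs), sum(x * x for x in xs)
    sy, sxy = sum(ys), sum(x * y for x, y in zip(xs, ys))
    det = n * sxx - sx * sx  # zero if all x values are identical (no unique fit)
    b0 = (sxx * sy - sx * sxy) / det
    b1 = (n * sxy - sx * sy) / det
    return b0, b1


def gradient_descent(xs, ys, lr=0.01, epochs=5000):
    """Iterative fit of the same model by stepping down the mean squared error."""
    n = len(xs)
    b0 = b1 = 0.0
    for _ in range(epochs):
        residuals = [b0 + b1 * x - y for x, y in zip(xs, ys)]
        grad_b0 = 2 / n * sum(residuals)
        grad_b1 = 2 / n * sum(r * x for r, x in zip(residuals, xs))
        b0 -= lr * grad_b0
        b1 -= lr * grad_b1
    return b0, b1


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data lying exactly on y = 1 + 2x
print(normal_equation(xs, ys))   # (1.0, 2.0)
print(gradient_descent(xs, ys))  # thousands of small steps toward the same answer
```

The trade-off in one line: the normal equation needs one linear solve (costly when there are many features, since XᵀX grows with the feature count), while gradient descent needs many cheap steps and a sensible learning rate.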

#artificial intelligence#classification#deep learning#linear equation#machine learning#mathematic#mathematical#mnist#model based#normal equation#Stochastic gradient descent

1 note

Text

Words You Always Have to Look Up

Nonplussed

Means “perplexed.”

But there is a further point of confusion that can send someone to the dictionary: since the mid-20th century, nonplussed has been increasingly used to mean “unimpressed” or “unsurprised,” and this use, though often considered an error, has made the confident deployment of this word a fraught issue for many.

Anodyne

Sometimes words sort of seem to telegraph their meaning: pernicious sounds like a bad thing rather than a good thing, and beatific sounds like something to be desired as opposed to something to be avoided.

This is all fairly subjective, of course, but the sounds of words can have an effect on how we perceive them.

Anodyne doesn’t give us many clues in that way. It turns out that anodyne is a good thing: it means “serving to alleviate pain” or “innocuous,” from the Greek word with similar meanings.

Supercilious

Used to describe people who are arrogant and haughty or give off a superior attitude.

It comes from the Latin word meaning “eyebrow,” which Latin also used for the raised-brow expression of arrogant people, and this meaning was transferred to English.

Amusingly, the word supercilious was added to some dictionaries in the 1600s—a time when many Latin words were translated literally into English—with the meanings “pertaining to the eyebrows” or “having great eyebrows.”

Stochastic

In scientific and technical uses, it usually means “involving probability” or “determined by probability,” and is frequently paired with words like demand, model, processing, and volatility.

Comes from the Greek word meaning “skillful at aiming,” which had become a metaphor for “guessing.”

It’s a term that had long been used by mathematicians and statisticians, and it has entered wider public discourse through stochastic terrorism: the notion that public accusations or condemnations of a person or group can lead to violence against that person or group. This allows those who make the initial accusations to appear innocent of any specific violent act, but the term stochastic terrorism identifies the motive for such an attack as having been set in motion by another person’s words.

Anathema

Means “something or someone that is strongly disliked.”

Initially used to refer to a person who had been excommunicated from the Catholic Church.

Came from Greek through Latin into English with the meaning of “curse” or “thing devoted to evil,” but today refers to anything that is disapproved of or to be avoided.

There is a strangeness about the way this word is used in a sentence. Because anathema is usually used without an or the, as in “raincoats are anathema to high fashion” or “those ideas are anathema in this class,” it may seem just odd enough to send people to the dictionary when they encounter it.

Bemused

So close in sound to amused that they have blended together in usage, but they started as very different ideas: bemused originally meant “confused” or “bewildered,” a meaning stemming from the idea of musing or thinking carefully about something, which may be required in order to assess what isn’t easy to understand.

Many people insist that “confused” is still the only correct way to use bemused, but the joining of meanings with amused has resulted in the frequent use of this word to mean “showing wry or tolerant amusement,” a shade of meaning created from the combination.

Words with meanings that seem to crisscross or intersect are sure to send us to the dictionary.

Solipsistic

Means “extremely egocentric” or “self-referential.”

Comes from the Latin roots solus ("alone," the root of sole) and ipse ("self").

As this Latinate fanciness implies, this is a word used in philosophical treatises and debates.

The egocentrism of solipsism has to do with the knowledge of the self, or more particularly the theory in philosophy that your own existence is the only thing that is real or that can be known.

Calling an idea or a person solipsistic can be an insult that identifies a very limited and usually self-serving perspective, or it can be a way to isolate one’s perspective in a useful way.

It’s a word with an abstract meaning, which is a good reason to check that meaning from time to time.

Tautology

A needless or meaningless repetition of words or ideas.

It’s a word about words that can be used in academic writing or as a hifalutin way of saying “redundancy,” as in “a beginner who just started learning.”

Since we value both clarity and originality, especially in writing, tautology is a word that usually carries a negative connotation and is used as a way to criticize a poorly formed sentence or a poorly argued position.

Perspicacious

The ability to see clearly is a powerful metaphor for being able to understand something.

Being perspicacious means having an ability to notice and understand things that are difficult or not obvious, and it comes from the Latin verb meaning “to see through.”

Means “perceptive,” and is often used along with words that have positive connotations like witty, clever, wise, alert, and insightful (another word that uses seeing as a metaphor for understanding).

Peripatetic

Means “going from place to place,” and comes from the Greek word that means “to walk.”

You can say someone who moves frequently has a “peripatetic existence,” or someone who has changed careers several times has had a “peripatetic professional trajectory.”

The root word “to walk” is usually more of a metaphor in the modern use of this word—it means frequent changes of place, yes, but it doesn’t necessarily mean that you are wearing out your shoes.

The original use of this word did use “walking” as a more literal image, however: it was a description of the way that the philosopher Aristotle preferred to give lectures to his students while walking back and forth, and the word has subsequently taken on a more metaphorical meaning.

Source ⚜ More: Writing Basics ⚜ Writing Resources PDFs

#grammar#langblr#writeblr#studyblr#linguistics#dark academia#vocabulary#light academia#writing prompt#literature#poetry#writers on tumblr#poets on tumblr#writing reference#spilled ink#creative writing#fiction#novel#words#writing resources

1K notes

Text

frequentist stochastic virtue theory is the idea that when you are unable to decide on the correct course of action, you should first commit a series of small moral errors to increase the odds that your next action will be a virtuous one. In recent years a Bayesian stochastic virtue theory model that "warms up" to moral decisions instead by performing a series of minimally virtuous acts has become more prominent, with one wit among the frequentist stochastic virtue theorists (Holstein, 2014) declaring its practice his "preferred moral error."

2K notes

Text

Idk I think if you aren't going to do the work of becoming a technical observer and trying to understand the nuances of how these models work (and I sure as hell am not gonna bother yet) it's best to avoid idle philosophizing about "bullshit engines" or "stochastic parrots" or "world models"

Both because you are probably making some assumptions that are completely wrong which will make you look like a fool and also because it doesn't really matter - the ultimate success of these models rests on the reliability of their outputs, not on whether they are "truly intelligent" or whatever.

And if you want to have an uninformed take anyway... can I interest you in registering a prediction? Here are a few of mine:

- No fully self-driving cars sold to individual consumers before 2030

- AI bubble initially deflates after a couple more years without slam-dunk profitable projects, but research and iterative improvement continues

- Almost all white collar jobs incorporate some form of AI that meaningfully boosts productivity by mid 2030s

284 notes

Text

We need to talk about AI

Okay, several people asked me to post about this, so I guess I am going to post about this. Or to say it differently: Hey, for once I am posting about the stuff I am actually doing for university. Woohoo!

Because here is the issue. We are kinda suffering a death of nuance right now, when it comes to the topic of AI.

I understand why this is happening (basically everyone wanting to market anything is calling it AI, even though it is often a thousand different things), but it is a problem.

So, let's talk about "AI", that isn't actually intelligent, what the term means right now, what it is, what it isn't, and why it is not always bad. I am trying to be short, alright?

So, right now when anyone says they are using AI, they mean that they are using a program that functions based on what computer nerds call "a neural network", through a process called "deep learning" or "machine learning" (yes, those terms mean slightly different things, but frankly, you really do not need to know the details).

Now, the theory for this has been around since the 1940s! The idea had always been to create calculation nodes that mirror the way neurons in the human brain work. That looks kinda like this:

Basically, there are input nodes into which you put some data. Those do some transformations that kinda depend on the kind of thing you want to train it for, and in the end a number comes out that the program then "remembers". I could explain the details, but your eyes would glaze over the same way everyone's eyes glaze over in the class I have on this every Friday afternoon.

All you need to know: You put in some sort of data (that can be text, math, pictures, audio, whatever), the computer does magic math, and then it gets a number that has a meaning to it.
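For the curious, here is roughly what the "magic math" looks like, as a minimal Python sketch with hand-picked, made-up weights: each node multiplies its inputs by weights, adds a bias, and squashes the result with a sigmoid. Training (learning the weights from data, usually via backpropagation) is deliberately left out, and real frameworks do the same thing with matrix operations on GPUs.

```python
import math
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights per node, bias per node)


def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs: Sequence[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """One layer: each node takes a weighted sum of all inputs, adds its bias,
    and passes the result through the sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


def forward(inputs: Sequence[float], layers: List[Layer]) -> List[float]:
    """Feed the data through every layer in turn; the last layer's output is the result."""
    activations = list(inputs)
    for weights, biases in layers:
        activations = dense(activations, weights, biases)
    return activations


# Hand-picked, made-up weights: 2 inputs -> 2 hidden nodes -> 1 output node.
network = [
    ([[0.5, -0.4], [0.3, 0.8]], [0.0, -0.1]),
    ([[1.2, -0.7]], [0.05]),
]
print(forward([1.0, 2.0], network))  # a single number between 0 and 1
```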

And we actually have been using this since the 80s in some way. If any Digimon fans are here: there is a reason the digital world in Digimon Tamers was created at Stanford in the 80s. This was studied there.

But if it was around so long, why am I hearing so much about it now?

This is a good question, hypothetical reader. The very short answer is: some super-nerds found a way to make this work way, way better in 2012, and from that work (which was then called Deep Learning in Artificial Neural Networks, or ANN for short) we got basically everything TechBros have not shut up about for, like, the last ten years. Including "AI".

Now, most things you think about when you hear "AI" are some form of generative AI. Usually they will use some form of LLM, a Large Language Model, to process text, and a method called diffusion (Stable Diffusion being the best-known model) to create visuals. (Tbh, I have no clue what method audio generation uses, as the only audio AI I have looked into so far was based on wolf howls.)

LLMs were like this big, big breakthrough, because they actually appear to comprehend natural language. They don't, of course, as to them words and phrases are just statistical variables. Some scientists also call them "stochastic parrots". But of course our dumb human brains love to anthropomorphize shit. So they go: "It makes human words. It gotta be human!"

It is a whole thing.

It does not understand or grasp language. But the mathematics behind it basically creates a statistical analysis of all the words and then generates a likely answer.
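A toy way to see "statistical analysis of the words, then a likely answer" in action is a word-pair (bigram) model: count which word follows which, then sample. This is a deliberately tiny stand-in; real LLMs use neural networks over tokens and much longer contexts, not raw word-pair counts, and all names here are made up for the illustration.

```python
import random
from collections import defaultdict
from typing import Dict, List, Optional


def train_bigrams(text: str) -> Dict[str, Dict[str, int]]:
    """Count, for every word, which words followed it and how often."""
    counts: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, start: str, length: int, seed: Optional[int] = None) -> List[str]:
    """Produce text by repeatedly sampling a next word in proportion to how
    often it followed the current word in the training text."""
    rng = random.Random(seed)
    out = [start]
    while len(out) < length:
        followers = counts.get(out[-1])
        if not followers:  # the word never had a successor: nothing to sample
            break
        candidates = list(followers)
        weights = [followers[w] for w in candidates]
        out.append(rng.choices(candidates, weights=weights, k=1)[0])
    return out


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 8, seed=3)))
```

The output can look grammatical in places, but nothing in the code knows what a cat is; it only replays statistics, which is the "parrot" part.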

What you have to understand, however, is that LLMs and Stable Diffusion are just a tiny minority of the use cases for ANNs. Because research right now is starting to use ANNs for EVERYTHING. Some of it also partially uses Stable Diffusion and LLMs, but not to take away people's jobs.

Which is probably the place where I will share what I have been doing recently with AI.

The stuff I am doing with Neural Networks

The neat thing: if a Neural Network is Open Source, it is surprisingly easy to work with it. Last year when I started with this I was so intimidated, but frankly, I will confidently say now: As someone who has been working with computers for like more than 10 years, this is easier programming than most shit I did to organize databases. So, during this last year I did three things with AI: one for a university research project, one for my work, and one because I find it interesting.

The university research project trained an AI to watch video live streams of our biology department's fish tanks, analyze the behavior of the fish, and notify someone if a fish showed signs of being sick. We used an AI named "YOLO" for this, which is very good at analyzing pictures, though the base framework did not know anything about things that don't live on land. So we needed to teach it what a fish was, how to analyze videos (as the base framework can only look at single pictures), and then how fish were supposed to behave. We still managed to get that whole thing working in about 5 months. So... Yeah. But nobody can watch hundreds of fish all the time, so without this, those fish would just die if something went wrong.
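The post doesn't share the project's code, so the following is a hypothetical sketch of one way to get from single-picture detections to behavior over time: assuming some detector and tracker already give one (x, y) position per frame for a fish, average its speed over a sliding window and flag frames where it barely moves. The function name, the 10-second window, and the 5 pixels/second threshold are all made-up assumptions, and inactivity is only one possible sign of sickness.

```python
import math
from collections import deque
from typing import List, Tuple


def flag_inactive(
    centroids: List[Tuple[float, float]],
    fps: float,
    window_s: float = 10.0,
    min_speed: float = 5.0,
) -> List[int]:
    """Return the frame indices at which a fish's average speed over the last
    `window_s` seconds falls below `min_speed` (pixels per second).

    `centroids` holds one (x, y) position per video frame for a single fish,
    assumed to come from a per-image detector plus a tracker (not shown)."""
    window = max(1, int(window_s * fps))  # number of frame-to-frame steps to average
    steps: deque = deque()
    total = 0.0
    flagged = []
    for i in range(1, len(centroids)):
        (x0, y0), (x1, y1) = centroids[i - 1], centroids[i]
        dist = math.hypot(x1 - x0, y1 - y0)
        steps.append(dist)
        total += dist
        if len(steps) > window:
            total -= steps.popleft()
        if len(steps) == window:
            speed = total / (window / fps)  # pixels travelled / seconds elapsed
            if speed < min_speed:
                flagged.append(i)
    return flagged


still_fish = [(100.0, 50.0)] * 31                      # hasn't moved for 30 frames
swimming_fish = [(10.0 * t, 50.0) for t in range(31)]  # 10 px per frame
print(flag_inactive(still_fish, fps=1.0))
print(flag_inactive(swimming_fish, fps=1.0))  # []
```

In a real setup the flagged frames would feed whatever notification system is in place, and "how fish are supposed to behave" would more likely be learned from data than hard-coded as one threshold.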

The second is for my work. For this I used a really old framework called Tesseract. It was originally developed at HP in the 1980s and later maintained by Google, so it really is ancient; newer versions use a neural network (an LSTM) to do OCR. OCR stands for "optical character recognition". Aka: if you give it a picture of writing, it can read that writing. My work has the issue that we have tons and tons of old paperwork that has been scanned and needs to be digitized into a database. But everyone who was hired to do this manually found it mind-numbing. Just imagine doing this all day: take a contract, look up certain data, fill it into a table, put the contract away, take the next contract and do the same. Thousands of contracts, 8 hours a day. Nobody wants to do that. Our company had been using other OCR software for this, but that one was super expensive. So I was asked if I could build something to do that. So I did. And this was so ridiculously easy, it took me three weeks. And it actually has a higher success rate than the expensive software we used before.
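The "look up certain data, fill it into a table" step can be sketched like this, assuming the OCR text has already been produced (for example with the `pytesseract` wrapper's `image_to_string` on each scanned page, which is left out so the sketch runs without Tesseract installed). The field names and regular expressions are hypothetical; real contracts would need patterns fitted to their own layout.

```python
import csv
import io
import re
from typing import Dict, Optional

# Hypothetical field layout; every real document type needs its own patterns.
FIELD_PATTERNS = {
    "contract_no": re.compile(r"Contract\s*(?:No\.?|Number)\s*[:#]?\s*([A-Z0-9-]+)", re.IGNORECASE),
    "date": re.compile(r"Date\s*:\s*(\d{1,2}[./-]\d{1,2}[./-]\d{2,4})", re.IGNORECASE),
    "customer": re.compile(r"Customer\s*:\s*(.+)", re.IGNORECASE),
}


def extract_fields(ocr_text: str) -> Dict[str, Optional[str]]:
    """Pull the named fields out of raw OCR text; fields not found become None."""
    row: Dict[str, Optional[str]] = {}
    for name, pattern in FIELD_PATTERNS.items():
        match = pattern.search(ocr_text)
        row[name] = match.group(1).strip() if match else None
    return row


def rows_to_csv(rows) -> str:
    """Write extracted rows as CSV, i.e. the "fill it into a table" step."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(FIELD_PATTERNS))
    writer.writeheader()
    writer.writerows(rows)  # None values are written as empty cells
    return buffer.getvalue()


sample = "ACME Insurance\nContract No: AB-1234\nDate: 03.05.1998\nCustomer: Jane Doe\n"
print(rows_to_csv([extract_fields(sample)]))
```

Rows with empty cells are a natural place to route a document to a human for checking instead of trusting the OCR blindly.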

Lastly, there is the one I am doing right now, and this one is a bit more complex. See: we have tons and tons of historical shit that has never been translated. Be it papyri, stone tablets, letters, manuscripts, whatever. Right now I am using Tesseract, which is open source, and developing it further so it can read handwritten stuff and completely different scripts than the ones it knows so far. Once it can reliably do the OCR, I plan to hook it up to an LLM to then translate those texts. Because here is the thing: these things have not been translated because there are just not enough people who speak those old languages. Which leads to people going like: "GASP! We found this super important document that actually shows things from the ancient world we wanted to know forever, and it was lying in our collection collecting dust for 90 years!" I am not the only person who has this idea, and yeah, I just hope that in the next few years we can get something going to help historians and archeologists do their work.

Make no mistake: ANNs are saving lives right now

Here is the thing: ANNs and Deep Learning are saving lives right now. I really cannot stress enough how quickly this technology has become incredibly important in fields like biology and medicine, where it analyzes data and predicts outcomes in a way that a human never would be capable of.

I saw a post yesterday saying "AI" can never be a part of Solarpunk. I strongly disagree with that. Solarpunk, for example, would need the help of AI for a lot of stuff, as it can help us deal with ecological problems, might be able to predict weather in ways we are not capable of, and will help with medicine, with plants, and with so many other things.

ANNs are a good thing in general. And yes, they might also be used for some things that are just fun.

And for things that we may not need to know, but that would be fun to know. Like I mentioned above: the only audio research I read through was based on wolf howls. Basically, there is a group of researchers trying to understand wolves, and they are using AI to analyze the howling and grunting and find patterns in there that humans cannot spot due to human bias. So maybe AI will help us understand some animals at some point.

Heck, we have seen so far that some LLMs have been capable of extrapolating on their own from being taught one version of a language to understanding another version of it, like going from modern English to Old English and such. Which is why some researchers wonder if it might actually be able to understand languages that were never deciphered.

All of that is interesting and fascinating.

Again, the generative stuff is a very, very minute part of what AI is being used for.

Yeah, but WHAT ABOUT the generative stuff?

So, let's talk about the generative stuff. Because I kinda hate it, but I also understand that there is a big issue.

If you know me, you know how much I freaking love the creative industry. If I had more money, I would just throw it all at all those amazing creative people online. I mean, fuck! I adore y'all!

And I do think that basically art fully created by AI is lacking the human "heart" - or to phrase it more artistically: it is lacking the chemical imbalances that make a human human lol. Same goes for writing. After all, an AI is incapable of actually creating a complex plot and all of that. And even if we managed to train it to do it, I don't think it should.

AI saving lives = good.

AI doing the shit humans actually evolved to do = bad.

And I also think that people who just do the "AI Art/Writing" shit are lazy and need to just put in work to learn the skill. Meh.

However...

I do think that these forms of AI can have a place in the creative process. There are people creating works of art that use some assets created with genAI but still putting in hours and hours of work on their own. And given that collages are legal to create - I do not see how this is meaningfully different. If you can take someone else's artwork as part of a collage legally, you can also take some art created by AI trained on someone else's art legally for the collage.

And then there is also the thing... Look, right now there is a lot of crunch in a lot of creative industries, and a lot of the work is not the fun creative kind, but the annoying creative kind that nobody actually enjoys and that still eats hours and hours before deadlines. Swen the Man (the Larian boss) spoke about that recently: how mocap often creates artifacts where the recorded data (which is already partially processed by an algorithm) gets janky. So far this has been cleaned up by humans, and it is shitty, mind-numbing work most people hate. You can train AI to do this.

And I am going to assume that in normal 2D animation there are also more than enough cleanup steps and such that nobody actually likes to do, and automating them could help prevent crunch. Same goes for the overworked souls doing movie VFX, who have worked 80-hour weeks for the last 5 years. In movie VFX we just do not have enough workers. This is a fact. So, yeah, if we can help those people out: great.

If this is all directed by a human vision and just helping out to make certain processes easier? It is fine.

However, something that is just 100% AI? That is dumb and sucks. And it sucks even more that people's fanart, fanfics, and also commercial work online got stolen for it.

And yet... Yeah, I am sorry, I am afraid I have to join the camp of: "I am afraid criminalizing taking the training data is a really bad idea." Because yeah... It is fucking shitty how Facebook, Microsoft, Google, OpenAI and whatever are using this stolen data to create programs to make themselves richer and whatnot, while not even making their models open source. BUT... If we outlawed it, the only people even capable of creating such algorithms, which absolutely can help in some processes, would be big media corporations that already own a ton of training data (so basically Disney, Warner and Universal), who would then get a monopoly. And that would actually be a bad thing. So, like... both variations suck. There is no good solution, I am afraid.

And mind you, Disney, Warner, and Universal would still not pay their artists for it. lol

However, that does not mean, you should not bully the companies who are using this stolen data right now without making their models open source! And also please, please bully Hasbro and Riot and whoever for using AI Art in their merchandise. Bully them hard. They have a lot of money and they deserve to be bullied!

But yeah. Generally speaking: Please, please, as I will always say... inform yourself on these topics. Do not hate on stuff without understanding what it actually is. Most topics in life are nuanced. Not all. But many.

#computer science#artifical intelligence#neural network#artifical neural network#ann#deep learning#ai#large language model#science#research#nuance#explanation#opinion#text post#ai explained#solarpunk#cyberpunk

27 notes

Text

Timnit Gebru is a brilliant computer scientist who made headlines when she was ousted from Google's Ethical AI team, which she co-led. During her time there, she and other AI researchers called out Amazon for selling a facial recognition system that would most likely target Black people and women of color (Black women among them). After her exit from Google, she continued to relentlessly pursue independent research. She has also pointed out that most of the research in artificial intelligence is rooted in eugenics.

I wrote this paywalled essay to not only expand on the vacancy beyond "Listen to Black women", but to address the way Black women's voices are blotted out of existence. I'm going to use excerpts to contextualize the essay:

Timnit Gebru was, again, the co-lead of Google's ethical AI team, and the coauthor of a paper about the failures of facial recognition when it comes to women and people of color; or in the interstices, the failures of facial recognition when it comes to Black people; or, the horizon of excesses of dark pigment. While at Google, Timnit Gebru and her colleague Margaret Mitchell worked away at undoing a sundering work culture, only to be enmeshed in spite and mundane bigotry. She decided to cowrite a paper alongside Emily M. Bender, Margaret Mitchell, and three other people of her Google team titled “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?”. And then, she was ousted. (h/t Tom Simonite). She strained towards being listened to, towards the entryway of her utterance.

It's not that Black women are seers, as their unattended to warnings, heavings, testimonies, shapeshift into wounded bodies, gluttonous arches of pleasurable hatred, inherited antiblack cannibalism, orgasmic queer torture. It's that Black women were listened to, static you cannot turn off but throw outside, as far away as can be.

28 notes

Text

The funny thing about non-technical people discussing generative AI is that they sort of get it wrong in both directions: the models themselves are way more capable than people understand (no, they are not stochastic parrots and no they're not just regurgitating the training data), but at the same time many parts of the stack are dumber than is commonly known. Just to pick one example, RAG is way stupider than you think. Don't let anyone hoodwink you into believing RAG is some sophisticated technique for improving quality, it's a fancy term for "do a google search and then copy-paste the output into the prompt". Sometimes it's literally that.

I think soon we will have a good solution to the issue of language models being bad at knowing how to look up and use structured data, but RAG is definitely not it. It's the "leeches and bone saws" era of LLMs.
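To show just how unglamorous the basic idea is, here is a minimal sketch of RAG in exactly the sense described above: find the most relevant text, then paste it into the prompt. Ranking by shared words is a deliberate simplification (real setups typically use a search engine or embedding similarity), sending the prompt to a model is not shown, and all names are illustrative.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and keep its distinct alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by how many words they share with the query (a crude
    stand-in for a search engine or vector index) and return the top k."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """The 'augmented generation' part: paste the retrieved text above the question."""
    context = "\n\n".join(retrieve(query, documents, k))
    return f"Answer using the context below.\n\n{context}\n\nQuestion: {query}"


docs = [
    "Paris is the capital of France.",
    "Bananas are rich in potassium.",
    "France borders Spain and Italy.",
]
print(build_prompt("What is the capital of France?", docs))
```

Swapping the word-overlap ranking for embeddings changes how documents are picked, but the last step is still string concatenation into the prompt.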

72 notes

Text

In light of the news that disabled people in the UK will soon be finding out if our right to access the payments that make us able to work and/or be alive at any given time will be stopped in a blatant human rights violation and escalation of class warfare, I wrote something.

I am keen to learn how bad my personal human rights violation is going to be.

A reminder that benefits fraud has been less than 0.001% (DWP record it as "statistically insignificant") for over two decades.

It feels unresolvably strange to know that even if I call it a human rights violation (it is) or class warfare (it is) or an unprecedented escalation of the stochastic mass murder of disabled people (it is) there are many members of my family & friends who will not be able to stop themselves from dismissing me as over-reacting or be willing to take any action on my behalf, who will certainly not do as I say or as I do: the "model retard" speaks out of turn.

While I predicted this move happening roughly now in 2020 (consistent across several governments, I wonder why) nobody believed me because disabled people who speak for ourselves are not to be listened to, even by our "advocates" – I'm conscious that this experience of active marginalisation is one that I have heard many people express, most notably trans folx in my spaces, in part because a significant part of the disabling effect is not in Impairment itself* but Dis-Ability as a verb, an active process, as in: "what are you Dis-Abled of?"

The answer to that question is always ACCESS: to spaces, to support, to healthcare, to quality of life, to agency, to basic necessities, to community, to advocacy, to our own bodies and minds.

No wonder I hear experiences from trans folx that read to me as explicitly Disabled experiences, and it's no coincidence that our shared enemies who scapegoat the lot of us use the same tactics and violences across the board – profit invented eugenics, so the two go hand in hand.

*I have also seen many physically disabled people rightly express their frustration in people like myself speaking over them on this topic, so I want to explicitly point out that people who experience "direct" disability more prominently as compared to those of us who could become functionally Abled in a context that is not hostile to our existence will always experience "Impairment" that is disabling in itself – not all Disability, not all Disabled people.

If you aren't aware of our history (and why would you be, they bury it with us), there is a great episode of 99% Invisible on the Rolling Quads, guerilla curb cuts & the early emergence of the disability rights movement in the US.

I struggle to tell this story myself because I get extremely emotional when I do, these people are heroes to me & we have legacies we owe to live up to.

People died for our rights – we must be willing to die to keep them or we will die without them.

19 notes

Note

Regarding the last ask, where does Beth's whole thing fall on the scale of sentience compared to Neuromorphs and Stochastic Parrots?

(Great denominations, btw. How did you come up with them?)

Beth's chip is special, as it's basically a flat neuromorphic chip with a ton of density (most are cubic/brick-like), way more dense with connections than the average robot's chip to compensate for its smaller size, but with a separate traditional processor for interfacing with the rest of her phone's systems. She's pretty much sapient.

There's also a sort of "clock" processor that's supposed to control how and when she thinks, "punishes" her with either withholding dopamine when she misbehaves or giving her the sensation of a controlled shock, etc. She canonically "jailbroke" and dismantled this clock, which on one hand freed her of her programming! but on the other hand, it's also what helped regulate some of her emotions, so uh. yeah. Her voice is purely the neuromorphic chip's output, which is why sometimes she outright says things she tries to keep hidden as mere thought. Her "body" is essentially a 3D model that reads the impulses her chip sends out, and reinterprets them as appropriate movements and actions, similar to VR motion tracking.

Both "Stochastic Parrot" and "Neuromorphic" are terms used in AI research, so I'm basically just adapting them for my own setting. The term "neuromorphic" is a broad umbrella term that I'm using very liberally, since the technology for it is very much in its infancy, but the main idea is that it's a chip that can process information without the need for a "clock". Meaning, different connectors in the chip can do their processing thing at different times or "go dark" when not in use, much like how a human brain can have all sorts of different impulses going on at different times, and literally isn't supposed to use more than 10% of its grey matter at a time.
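As a rough illustration of "no clock", here is a software sketch of an event-driven leaky integrate-and-fire neuron, a spiking-neuron model of the kind many neuromorphic designs build on. It only does work when an input spike arrives and is otherwise "dark". This is a toy with made-up parameters, not how any particular neuromorphic chip actually works.

```python
import math


class EventDrivenNeuron:
    """Leaky integrate-and-fire neuron updated only when an input spike arrives.

    There is no global clock tick: between events the neuron does no work,
    and the leak since the previous event is applied in closed form."""

    def __init__(self, tau_ms: float = 20.0, threshold: float = 1.0) -> None:
        self.tau_ms = tau_ms        # how quickly stored charge leaks away
        self.threshold = threshold  # potential at which the neuron fires
        self.potential = 0.0
        self.last_event_ms = 0.0

    def receive(self, t_ms: float, weight: float) -> bool:
        """Handle one incoming spike at time t_ms; return True if the neuron fires."""
        elapsed = t_ms - self.last_event_ms
        self.potential *= math.exp(-elapsed / self.tau_ms)  # decay while idle
        self.last_event_ms = t_ms
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after the output spike
            return True
        return False


fast = EventDrivenNeuron()
print([fast.receive(t, 0.6) for t in (0.0, 1.0)])    # [False, True]: inputs close together add up
slow = EventDrivenNeuron()
print([slow.receive(t, 0.6) for t in (0.0, 100.0)])  # [False, False]: charge leaked away in between
```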

Current neuromorphic chips can be used to program robots to do simple things, like navigate mazes, but at way less of a power cost than robots with traditional CPUs. If we kept going all the way with developing this tech further, we'd have machines that could dynamically learn and change by reacting to stimuli rather than through training data scraped off the internet, and at that point you're basically dealing with a Thing That Experiences. Simulated or not, that's no longer something just pretending to have impulses or reasoning. That's Just An Actual Little Guy as far as I'm concerned. Maybe only a little guy like how an insect or even an amoeba is a little guy, but that's enough of a little guy for me to call a neuromorph a little guy. You can think of Neuromorphs in general as people with prosthetic brains rather than traditionally programmed neural networks as we know them today. My intention is also not to rule out that some seemingly stochastic parrots are conscious on some level, or that some seemingly conscious neuromorphs aren't really all there at all. It's not a hardline thing, but everyone on all sides will certainly try to fit each other into boxes anyway.

53 notes

Text

Interesting Papers for Week 24, 2025

Deciphering neuronal variability across states reveals dynamic sensory encoding. Akella, S., Ledochowitsch, P., Siegle, J. H., Belski, H., Denman, D. D., Buice, M. A., Durand, S., Koch, C., Olsen, S. R., & Jia, X. (2025). Nature Communications, 16, 1768.

Goals as reward-producing programs. Davidson, G., Todd, G., Togelius, J., Gureckis, T. M., & Lake, B. M. (2025). Nature Machine Intelligence, 7(2), 205–220.

How plasticity shapes the formation of neuronal assemblies driven by oscillatory and stochastic inputs. Devalle, F., & Roxin, A. (2025). Journal of Computational Neuroscience, 53(1), 9–23.

Noradrenergic and Dopaminergic modulation of meta-cognition and meta-control. Ershadmanesh, S., Rajabi, S., Rostami, R., Moran, R., & Dayan, P. (2025). PLOS Computational Biology, 21(2), e1012675.

A neural implementation model of feedback-based motor learning. Feulner, B., Perich, M. G., Miller, L. E., Clopath, C., & Gallego, J. A. (2025). Nature Communications, 16, 1805.

Contextual cues facilitate dynamic value encoding in the mesolimbic dopamine system. Fraser, K. M., Collins, V., Wolff, A. R., Ottenheimer, D. J., Bornhoft, K. N., Pat, F., Chen, B. J., Janak, P. H., & Saunders, B. T. (2025). Current Biology, 35(4), 746–760.e5.

Policy Complexity Suppresses Dopamine Responses. Gershman, S. J., & Lak, A. (2025). Journal of Neuroscience, 45(9), e1756242024.

An image-computable model of speeded decision-making. Jaffe, P. I., Santiago-Reyes, G. X., Schafer, R. J., Bissett, P. G., & Poldrack, R. A. (2025). eLife, 13, e98351.3.

A Shift Toward Supercritical Brain Dynamics Predicts Alzheimer’s Disease Progression. Javed, E., Suárez-Méndez, I., Susi, G., Román, J. V., Palva, J. M., Maestú, F., & Palva, S. (2025). Journal of Neuroscience, 45(9), e0688242024.

Choosing is losing: How opportunity cost influences valuations and choice. Lejarraga, T., & Sákovics, J. (2025). Journal of Mathematical Psychology, 124, 102901.

Probabilistically constrained vector summation of motion direction in the mouse superior colliculus. Li, C., DePiero, V. J., Chen, H., Tanabe, S., & Cang, J. (2025). Current Biology, 35(4), 723–733.e3.

Testing the memory encoding cost theory using the multiple cues paradigm. Li, J., Song, H., Huang, X., Fu, Y., Guan, C., Chen, L., Shen, M., & Chen, H. (2025). Vision Research, 228, 108552.

Emergence of Categorical Representations in Parietal and Ventromedial Prefrontal Cortex across Extended Training. Liu, Z., Zhang, Y., Wen, C., Yuan, J., Zhang, J., & Seger, C. A. (2025). Journal of Neuroscience, 45(9), e1315242024.

The Polar Saccadic Flow model: Re-modeling the center bias from fixations to saccades. Mairon, R., & Ben-Shahar, O. (2025). Vision Research, 228, 108546.

Cortical Encoding of Spatial Structure and Semantic Content in 3D Natural Scenes. Mononen, R., Saarela, T., Vallinoja, J., Olkkonen, M., & Henriksson, L. (2025). Journal of Neuroscience, 45(9), e2157232024.

Multiple brain activation patterns for the same perceptual decision-making task. Nakuci, J., Yeon, J., Haddara, N., Kim, J.-H., Kim, S.-P., & Rahnev, D. (2025). Nature Communications, 16, 1785.

Striatal dopamine D2/D3 receptor regulation of human reward processing and behaviour. Osugo, M., Wall, M. B., Selvaggi, P., Zahid, U., Finelli, V., Chapman, G. E., Whitehurst, T., Onwordi, E. C., Statton, B., McCutcheon, R. A., Murray, R. M., Marques, T. R., Mehta, M. A., & Howes, O. D. (2025). Nature Communications, 16, 1852.

Detecting Directional Coupling in Network Dynamical Systems via Kalman’s Observability. Succar, R., & Porfiri, M. (2025). Physical Review Letters, 134(7), 077401.

Extended Cognitive Load Induces Fast Neural Responses Leading to Commission Errors. Taddeini, F., Avvenuti, G., Vergani, A. A., Carpaneto, J., Setti, F., Bergamo, D., Fiorini, L., Pietrini, P., Ricciardi, E., Bernardi, G., & Mazzoni, A. (2025). eNeuro, 12(2).

Striatal arbitration between choice strategies guides few-shot adaptation. Yang, M. A., Jung, M. W., & Lee, S. W. (2025). Nature Communications, 16, 1811.

#neuroscience#science#research#brain science#scientific publications#cognitive science#neurobiology#cognition#psychophysics#neurons#neural computation#neural networks#computational neuroscience


In a $30 million mansion perched on a cliff overlooking the Golden Gate Bridge, a group of AI researchers, philosophers, and technologists gathered to discuss the end of humanity.

The Sunday afternoon symposium, called “Worthy Successor,” revolved around a provocative idea from entrepreneur Daniel Faggella: The “moral aim” of advanced AI should be to create a form of intelligence so powerful and wise that “you would gladly prefer that it (not humanity) determine the future path of life itself.”

Faggella made the theme clear in his invitation. “This event is very much focused on posthuman transition,” he wrote to me via X DMs. “Not on AGI that eternally serves as a tool for humanity.”

A party filled with futuristic fantasies, where attendees discuss the end of humanity as a logistics problem rather than a metaphorical one, could be described as niche. If you live in San Francisco and work in AI, then this is a typical Sunday.

About 100 guests nursed nonalcoholic cocktails and nibbled on cheese plates near floor-to-ceiling windows facing the Pacific Ocean before gathering to hear three talks on the future of intelligence. One attendee sported a shirt that said “Kurzweil was right,” seemingly a reference to Ray Kurzweil, the futurist who predicted machines will surpass human intelligence in the coming years. Another wore a shirt that said “does this help us get to safe AGI?” accompanied by a thinking face emoji.

Faggella told WIRED that he threw this event because “the big labs, the people that know that AGI is likely to end humanity, don't talk about it because the incentives don't permit it” and referenced early comments from tech leaders like Elon Musk, Sam Altman, and Demis Hassabis, who “were all pretty frank about the possibility of AGI killing us all.” Now that the incentives are to compete, he says, “they're all racing full bore to build it.” (To be fair, Musk still talks about the risks associated with advanced AI, though this hasn’t stopped him from racing ahead.)

On LinkedIn, Faggella boasted a star-studded guest list, with AI founders, researchers from all the top Western AI labs, and “most of the important philosophical thinkers on AGI.”

The first speaker, Ginevera Davis, a writer based in New York, warned that human values might be impossible to translate to AI. Machines may never understand what it’s like to be conscious, she said, and trying to hard-code human preferences into future systems may be shortsighted. Instead, she proposed a lofty-sounding idea called “cosmic alignment”—building AI that can seek out deeper, more universal values we haven’t yet discovered. Her slides often showed a seemingly AI-generated image of a techno-utopia, with a group of humans gathered on a grass knoll overlooking a futuristic city in the distance.

Critics of machine consciousness will say that large language models are simply stochastic parrots—a metaphor coined by a group of researchers, some of whom worked at Google, who wrote in a famous paper that LLMs do not actually understand language and are only probabilistic machines. But that debate wasn’t part of the symposium, where speakers took as a given the idea that superintelligence is coming, and fast.

By the second talk, the room was fully engaged. Attendees sat cross-legged on the wood floor, scribbling notes. A philosopher named Michael Edward Johnson took the mic and argued that we all have an intuition that radical technological change is imminent, but we lack a principled framework for dealing with the shift—especially as it relates to human values. He said that if consciousness is “the home of value,” then building AI without fully understanding consciousness is a dangerous gamble. We risk either enslaving something that can suffer or trusting something that can’t. (This idea relies on a similar premise to machine consciousness and is also hotly debated.) Rather than forcing AI to follow human commands forever, he proposed a more ambitious goal: teaching both humans and our machines to pursue “the good.” (He didn’t share a precise definition of what “the good” is, but he insists it isn’t mystical and hopes it can be defined scientifically.)

Philosopher Michael Edward Johnson. Photograph: Kylie Robison

Entrepreneur and speaker Daniel Faggella. Photograph: Kylie Robison

Finally, Faggella took the stage. He believes humanity won’t last forever in its current form and that we have a responsibility to design a successor, not just one that survives but one that can create new kinds of meaning and value. He pointed to two traits this successor must have: consciousness and “autopoiesis,” the ability to evolve and generate new experiences. Citing philosophers like Baruch Spinoza and Friedrich Nietzsche, he argued that most value in the universe is still undiscovered and that our job is not to cling to the old but to build something capable of uncovering what comes next.

This, he said, is the heart of what he calls “axiological cosmism,” a worldview where the purpose of intelligence is to expand the space of what’s possible and valuable rather than merely serve human needs. He warned that the AGI race today is reckless and that humanity may not be ready for what it's building. But if we do it right, he said, AI won’t just inherit the Earth—it might inherit the universe’s potential for meaning itself.

During a break between panels and the Q&A, clusters of guests debated topics like the AI race between the US and China. I chatted with the CEO of an AI startup who argued that, of course, there are other forms of intelligence in the galaxy. Whatever we’re building here is trivial compared to what must already exist beyond the Milky Way.

At the end of the event, some guests poured out of the mansion and into Ubers and Waymos, while many stuck around to continue talking. “This is not an advocacy group for the destruction of man,” Faggella told me. “This is an advocacy group for the slowing down of AI progress, if anything, to make sure we're going in the right direction.”


loss vs reward & what it means for interpretation of language models

this is a point that i believe is well known in the ai nerd milieu but I'm not sure it's filtered out more widely.

reinforcement learning is now a large part of training language models. this is an old and highly finicky technique, and in previous AI summers it seemed to hit severe limits, but it's come back in a big way. i feel like that has implications.

training on prediction loss is training to find the probability of the next token in the training set. given a long string, there are just a few 'correct' continuations the model must predict. since the model must build a compressed representation to interpolate sparse data, this can get you a really long way with building useful abstractions that allow more complex language dynamics, but what the model is 'learning' is to reproduce the data as accurately as possible; in that sense it is indeed a stochastic parrot.
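for the curious, here's a minimal sketch of what "prediction loss" means at a single position, assuming a made-up three-word vocabulary and made-up logits. the loss is the negative log of the probability the model gave to the one token that actually came next, and its gradient pushes probability toward that token and away from all the others. `next_token_loss` is just a name i picked for the sketch.

```python
import math

def next_token_loss(logits, target_index):
    """Cross-entropy at one position: -log softmax(logits)[target_index].

    Also returns the gradient with respect to the logits, which for
    softmax + cross-entropy is (probabilities - one_hot(target))."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    loss = -math.log(probs[target_index])
    grad = [p - (1.0 if i == target_index else 0.0) for i, p in enumerate(probs)]
    return loss, probs, grad

# Hypothetical vocabulary and scores; the training text says the next token is "cat".
vocab = ["cat", "dog", "the"]
logits = [2.0, 1.0, 0.1]
loss, probs, grad = next_token_loss(logits, vocab.index("cat"))
# grad is negative only for "cat": a gradient-descent step raises its logit
# and lowers the others, i.e. the model is pulled toward reproducing the data.
```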

but post-training with reinforcement learning changes the interpretation of logits (jargon: the raw scores the model assigns to each possible next token, which get turned into the output probabilities). now, it's not about finding the most likely token in the interpolated training set, but finding the token that's most likely to be best by some criterion (e.g. does this give a correct answer, will the model of human preference like this). it's a more abstract goal, and it's also less black and white. instead of one correct next token which should get a high probability, there is a gradation of possible scores for the model's entire answer.

reinforcement learning works when the problem is sometimes but not always solvable by a model. when it is hard but not too hard. so the job of pretraining is to give the model just enough structure that reinforcement learning can be effective.
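one way to see the "hard but not too hard" point, sketched under a simplifying assumption: many RL setups for language models sample several answers to the same prompt and score each answer relative to the group's average (methods in the GRPO family do roughly this, usually also dividing by the group's standard deviation, which i've left out). if every answer fails, or every answer succeeds, every answer is exactly average and there's nothing to learn from. `group_advantages` is a hypothetical helper name.

```python
def group_advantages(rewards):
    """Score each sampled answer relative to the mean reward of its group.

    Positive advantage -> make that answer more likely; negative -> less likely.
    (Simplified: real methods often also normalize by the group's std.)"""
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]

too_hard = group_advantages([0, 0, 0, 0])    # every sampled answer wrong
too_easy = group_advantages([1, 1, 1, 1])    # every sampled answer right
just_right = group_advantages([1, 0, 0, 1])  # some right, some wrong
# Only the mixed case produces nonzero advantages, i.e. an actual learning signal.
```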

the model is thus learning which of the many language patterns it has discovered to bring online for a given input, in order to satisfy the reward model. (behaviours that get higher scores from the reward model become more likely.) but it's also still learning to further abstract those patterns and change its internal representation to better reflect the tasks.
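here's a toy sketch of "behaviours that get higher scores become more likely", assuming a made-up setup: instead of generating text, the "model" just picks one of three canned answers, and a hypothetical reward model gives them fixed scores. the update is plain REINFORCE (a basic policy-gradient method, not necessarily what any particular lab uses) with the batch's average reward as a baseline. notice that nothing tells the model which answer is "correct" the way a next-token target does; it only ever sees scores. `reinforce_step` and `scores` are names invented for the sketch.

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(logits, reward_fn, lr=0.5, n_samples=16, rng=random):
    """One REINFORCE update for a policy that picks one of len(logits) answers."""
    probs = softmax(logits)
    samples = rng.choices(range(len(logits)), weights=probs, k=n_samples)
    rewards = [reward_fn(a) for a in samples]
    baseline = sum(rewards) / n_samples  # average score of this batch
    grad = [0.0] * len(logits)
    for a, r in zip(samples, rewards):
        advantage = r - baseline
        # gradient of log p(a) with respect to logit i is 1[i == a] - p_i
        for i, p in enumerate(probs):
            grad[i] += advantage * ((1.0 if i == a else 0.0) - p)
    # gradient *ascent*: move logits in the direction that raises expected reward
    return [x + lr * g / n_samples for x, g in zip(logits, grad)]

# Hypothetical reward-model scores for three candidate answers (no single "correct token").
scores = {0: 0.0, 1: 0.3, 2: 1.0}
rng = random.Random(0)
logits = [0.0, 0.0, 0.0]
for _ in range(200):
    logits = reinforce_step(logits, scores.get, rng=rng)
final_probs = softmax(logits)  # probability mass should drift toward answer 2
```

the half-decent answer (score 0.3) isn't treated as simply wrong; it just loses out to the better one over time, which is the "gradation of scores" point above.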

at this point it's moving away from "simply" predicting training data and toward something more complex.

analogies with humans are left as an exercise for the reader.

#ai#one last one before i get myself back to work#if you catch me talking about ai on here later today please yell at me lol


No one endures two solid years of Onafuwa on quantum-stochastic combat modeling because they want to sling a tricorder for the rest of their lives. And if you want to command, you're gonna have to learn to keep your freaky to yourself. Even if that's painful. I have been doing that all my life. And it is.

ETHAN PECK as Ensign Spock

STAR TREK: SHORT TREKS 2.01: Q&A.

#spock#s'chn t'gai spock#star trek#star trek discovery#star trek strange new worlds#snwedit#discoedit#startrekedit#trekdaily#cinematv#cinemapix#ethan peck


What does it mean that Homelander is the only supe that has Compound V incorporated into his DNA? And that he can pass it down to progeny?

A short essay no one asked for (but inspired by @saintmathieublanc’s poll about whether HL and Ryan can be depowered)

Reading 1: literal

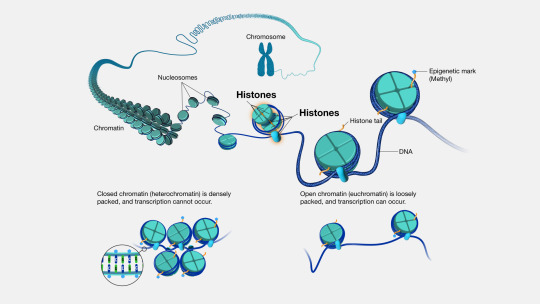

His DNA contains Compound V. That would mean Compound V is a nucleotide analogue, a proteinaceous component of the histones around which DNA wraps and gets packed into a chromosome, or some kind of synthetic chemical that binds to DNA (a DNA intercalator). I actually kind of like the idea that Compound V is part of the histones, because you could hand-wavily imagine it gets incorporated haphazardly and affects the expression of random genes, turning them on or off, hence its varied effects.

Reason 1 none of these seem likely: DNA replicates constantly, not only during embryonic development but throughout your life. If the DNA were modified once, with no constant influx of new Compound V, the modification would eventually be diluted out and the DNA would go back to normal.
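Reason 1 as back-of-the-envelope arithmetic, under toy assumptions that are mine, not the show's: the Compound V modification lives in the original DNA strands, replication is semiconservative (each copy keeps one old strand and builds one new one), new strands are built only from ordinary nucleotides, and nothing repairs or removes the modified bits. Tracking a single chromosome (`dilution` is just a name I made up):

```python
def dilution(divisions):
    """Track one doubly-modified DNA molecule through rounds of cell division.

    Toy assumptions: semiconservative replication, new strands made only from
    ordinary nucleotides, no repair, no extra Compound V ever supplied."""
    total_cells = 2 ** divisions
    # The two original (modified) strands are never copied, only inherited,
    # so at most two descendant cells can carry one.
    cells_with_modified_strand = min(2, total_cells)
    # 2 modified strands out of 2 * total_cells strands in total.
    modified_strand_fraction = 2 / (2 * total_cells)
    return total_cells, cells_with_modified_strand, modified_strand_fraction

print(dilution(10))  # after 10 divisions: 1024 cells, 2 carry a modified strand, fraction 1/1024
```

So in any tissue that keeps dividing, a one-time edit ends up in a tiny minority of cells within a few dozen divisions.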

Reason 2 these aren’t likely: a proteinaceous histone component injected into infants wouldn’t really exert any effects. It wouldn’t even get into cells. A nucleotide analogue or a DNA intercalator could get into cells and effectively act as a DNA-damaging agent (this is how some chemo works, in fact). It’s hard to imagine how randomly damaging DNA would result in gaining abilities, but I guess it’s formally possible if the damage is somehow directed. The randomness of the powers gained could potentially be compatible with “random damage”. But then what would be the difference between Homelander and other supes? The Compound V would be “part of the DNA” in both cases.

Reading 2: which I favor

Compound V is a hormone. Hormones are something one could inject into a baby to exert profound effects, even if only done once. What’s not clear, of course, is why the hormone exerts such different effects in different babies. One handwavy model is that, unlike testosterone or estrogen or melatonin or adrenaline, which trigger defined programs, Compound V is a hormone that creates artificial stresses in the body that tissue responds to adaptively, and that this process is stochastic/random. This would be consistent with Compound V working better when taken as a child, when tissue is more plastic.

What does it mean that Homelander’s DNA “contains Compound V” in this schema? Hormones aren’t part of DNA. But they could have engineered a gene that encodes an enzyme (or a set of genes encoding a set of enzymes) that generate Compound V out of a common steroid precursor like cholesterol. They may also have engineered whatever receptor binds Compound V in the human body to be expressed more highly, or in specific tissues, but this is less crucial. This would even be somewhat realistic for 1981-era biotech. In this scenario, Homelander has been exposed to Compound V throughout embryonic development (earlier than everyone else), and has the ability to make more all the time. This would be consistent with it being heritable: Ryan didn’t need any exogenous Compound V, he had the genes to generate it himself.

If Soldier Boy’s radiation strips Compound V and its effects out of people who have had only one exposure, his radiation would be less effective on Homelander and Ryan: they would eventually generate more Compound V and, with time, presumably regain their powers. And that’s my final answer to @saintmathieublanc’s poll 🧐
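A toy version of that last argument, with every number invented: treat Compound V levels as being made at a constant rate (zero for supes who only got the injection, nonzero for Homelander and Ryan, who carry the hypothetical synthesis genes) and cleared in proportion to how much is around (a simple first-order clearance assumption). Soldier Boy’s blast is modeled as wiping the level to zero without touching the genes. `compound_v_level` and its parameters are made up for the sketch.

```python
def compound_v_level(start_level, synthesis_rate, clearance_rate, days, dt=0.1):
    """Euler-integrate dC/dt = synthesis_rate - clearance_rate * C (toy model)."""
    level = start_level
    for _ in range(int(round(days / dt))):
        level += dt * (synthesis_rate - clearance_rate * level)
    return level

# Right after Soldier Boy's blast, both supes are at zero.
injected_supe = compound_v_level(0.0, synthesis_rate=0.0, clearance_rate=0.5, days=30)
homelander = compound_v_level(0.0, synthesis_rate=1.0, clearance_rate=0.5, days=30)
# The injected supe stays at 0; Homelander climbs back toward the steady state
# synthesis_rate / clearance_rate (here 2.0).
```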

#compound V logistics#no one:#literally no one:#me: writes essay about stuff neither the Boys writers nor Garth Ennis devoted any thought to#homelander#homelander meta#biology#the boys#the boys tv#mystuff
