#DataMapping

Text

Top AI Tools to Skyrocket Your Website Rankings

In the ever-evolving digital world, staying ahead in search rankings is more competitive than ever. But what if you could work smarter, not harder? That’s where AI-powered SEO tools come into play. These tools help automate tasks, analyze data more effectively, and provide actionable insights that can elevate your website’s visibility in search engines.

Let’s explore the top AI tools that can truly skyrocket your website rankings.

1. Surfer SEO

Best for: Content Optimization

Surfer SEO is a powerful AI tool that helps you create content designed to rank. It compares your content against the top-ranking pages for a keyword and gives real-time optimization suggestions—like ideal word count, keyword usage, and NLP recommendations.

🔍 Key Features:

Content Editor with real-time scoring

SERP Analyzer for competitive analysis

Keyword Surfer Chrome Extension

2. Jasper AI

Best for: Writing SEO-Friendly Content

Jasper AI (formerly Jarvis) is an AI writing assistant that can generate high-quality blog posts, product descriptions, and meta tags. It’s great for marketers who want to scale content production without sacrificing quality.

✍️ Key Features:

Long-form content creation

SEO mode (integrated with Surfer SEO)

50+ content templates

3. Frase.io

Best for: Content Briefs & Answering Search Queries

Frase helps you create content that directly answers user search intent. It analyzes the top results and builds a brief so you can structure your content with maximum SEO impact.

📈 Key Features:

AI-generated content briefs

Question research from Google’s “People Also Ask”

Content scoring system

4. MarketMuse

Best for: Content Strategy & Optimization

MarketMuse uses AI to identify content gaps and helps you create topic-rich, optimized pages. It’s ideal for large sites or agencies working on complex content strategies.

🧠 Key Features:

AI-driven content plans

SERP and competitor analysis

Optimization suggestions with content scoring

5. RankIQ

Best for: Bloggers & Beginners

RankIQ offers a simplified interface and easy-to-use tools that make SEO content creation accessible for bloggers and small business owners. It provides keyword suggestions and outlines for ranking on Google’s first page.

✅ Key Features:

Low-competition keyword library

Content optimizer

Title and SEO score analysis

6. Clearscope

Best for: Enterprise SEO Optimization

Clearscope is trusted by large brands and agencies to refine their content for better performance. It provides a content grade, readability score, and keyword suggestions based on top-ranking pages.

📊 Key Features:

Real-time optimization guidance

Deep keyword and SERP analysis

Integrations with Google Docs & WordPress

7. ChatGPT (OpenAI)

Best for: Idea Generation & SEO Support

ChatGPT isn’t a traditional SEO tool but can be used creatively for keyword ideas, blog outlines, SEO copywriting, and even analyzing competitors if prompted correctly.

💡 Key Features:

Fast brainstorming and outline generation

Can summarize competitor content

Helps write SEO-optimized meta tags and product descriptions

Final Thoughts

AI is no longer the future of SEO—it’s the present. Whether you're a solo blogger or managing enterprise-level websites, the right AI tools can significantly enhance your SEO efforts. By integrating tools like Surfer SEO, Jasper, and Frase, you’ll not only save time but also produce data-driven content that ranks.

Ready to skyrocket your website rankings? Start leveraging AI and let technology take your SEO game to the next level.

#aitools #ai #chatgpt #artificialintelligence #gpt #digitalmarketing #machinelearning #technology #socialmediamarketing #openai #business #tech #o #productivity #aiart #newtech #datamapping #geospatialtechnology #gis #digitaltwins #dmapping #geospatial #marketing #marketingtips #midjourney #lidar #aitechnology #maps #web #entrepreneurship

0 notes

Text

Effortless Transition from Google Workspace to Microsoft 365

0 notes

Text

🎯 Unlock ArcGIS Pro Skills – BIG DISCOUNT!

🚀 Become a GIS expert with this easy-to-follow ArcGIS Pro course on Udemy! Learn essential skills and apply them in real-world projects.

🔥 GET 48% OFF (ONLY $12.99 FROM $19.99) – LIMITED TIME, ENROLL NOW!

#GISFundamentals #ArcGISProLearning #MappingSkills #GeospatialAnalysis #GISBeginner #ArcGISProCourse #DataMapping #SpatialAnalysis #OpenSourceGIS #UdemyPromo

0 notes

Text

#Greetings from Ashra Technologies#we are hiring#ashra#ashratechnologies#jobs#hiring#jobalert#jobsearch#jobhunt#recruiting#recruitingpost#maximo#ibm#java#automation#dataloading#datamapping#dataextraction#upgrade#patching#installation#integrity#chennai#bangalore#pune#mumbai#linkedin#linkedinprofessionals#linkedinlearning#linkedinads

0 notes

Text

Transforming Data into Actionable Insights with Domo

In today's data-driven world, organizations face the challenge of managing vast amounts of data from various sources and deriving meaningful insights from it. Domo, a powerful cloud-based platform, has emerged as a game-changer in the realm of business intelligence and data analytics. In this blog post, we will explore the capabilities of Domo and how it enables businesses to harness the full potential of their data.

What is Domo?

Domo is a cloud-based business intelligence and data analytics platform that lets organizations connect, prepare, visualize, and analyze their data in real time. It offers a comprehensive suite of tools and features designed to streamline data operations and facilitate data-driven decision-making.

Key Features and Benefits:

Data Integration: Domo enables seamless integration with a wide range of data sources, including databases, spreadsheets, cloud services, and more. It simplifies the process of consolidating data from disparate sources, allowing users to gain a holistic view of their organization's data.

Data Preparation: With Domo, data preparation becomes a breeze. It offers intuitive data transformation capabilities, such as data cleansing, aggregation, and enrichment, without the need for complex coding. Users can easily manipulate and shape their data to suit their analysis requirements.

Data Visualization: Domo provides powerful visualization tools that allow users to create interactive dashboards, reports, and charts. It offers a rich library of visualization options and customization features, enabling users to present their data in a visually appealing and easily understandable manner.

Collaboration and Sharing: Domo fosters collaboration within organizations by providing a centralized platform for data sharing and collaboration. Users can share reports, dashboards, and insights with team members, fostering a data-driven culture and enabling timely decision-making across departments.

AI-Powered Insights: Domo leverages artificial intelligence and machine learning algorithms to uncover hidden patterns, trends, and anomalies in data. It provides automated insights and alerts, empowering users to proactively identify opportunities and mitigate risks.

Use Cases:

Sales and Marketing Analytics: Domo helps businesses analyze sales data, track marketing campaigns, and measure ROI. It provides real-time visibility into key sales metrics, customer segmentation, and campaign performance, enabling organizations to optimize their sales and marketing strategies.

Operations and Supply Chain Management: Domo enables organizations to gain actionable insights into their operations and supply chain. It helps identify bottlenecks, monitor inventory levels, track production metrics, and streamline processes for improved efficiency and cost savings.

Financial Analysis: Domo facilitates financial reporting and analysis by integrating data from various financial systems. It allows CFOs and finance teams to monitor key financial metrics, track budget vs. actuals, and perform advanced financial modeling to drive strategic decision-making.

Human Resources Analytics: Domo can be leveraged to analyze HR data, including employee performance, retention, and engagement. It provides HR professionals with valuable insights for talent management, workforce planning, and improving overall employee satisfaction.

Success Stories: Several organizations have witnessed significant benefits from adopting Domo. For example, a global retail chain utilized Domo to consolidate and analyze data from multiple stores, resulting in improved inventory management and optimized product placement. A technology startup leveraged Domo to analyze customer behavior and enhance its product offerings, leading to increased customer satisfaction and higher revenue.

Domo offers a powerful and user-friendly platform for organizations to unlock the full potential of their data. By providing seamless data integration, robust analytics capabilities, and collaboration features, Domo empowers businesses to make data-driven decisions and gain a competitive edge in today's fast-paced business landscape. Whether it's sales, marketing, operations, finance, or HR, Domo can revolutionize the way organizations leverage data to drive growth and innovation.

#DataCleaning #DataNormalization #DataIntegration #DataWrangling #DataReshaping #DataAggregation #DataPivoting #DataJoining #DataSplitting #DataFormatting #DataMapping #DataConversion #DataFiltering #DataSampling #DataImputation #DataScaling #DataEncoding #DataDeduplication #DataRestructuring #DataReformatting

0 notes

Text

Data Integrity & Mapping Analyst - Nashville, TN (Onsite). Apply for SohanIT Inc jobs on #JobsHorn: https://jobshorn.com/job/data-integrity-mapping-analyst/7823 12+ Months | 7+ Yrs | C2C/W2 | Interview Type: Skype or Phone

JD: Primary focus will be on data mapping and integrity assessment within the LARS application.

Contact: [email protected] |+1 470-410-5352 EXT:111

#dataintergrity #datamapping #tenneessee

1 note

Text

Better Understand DataMaps – A Google Maps Analogy

The shift from physical maps to dynamic digital applications has transformed how we navigate the world, both physically and digitally. Paper maps, like those by Rand McNally, were essential for decades, but they had inherent limitations. Static and fixed in time, they required constant updates to remain useful. Changes to roads and terrain after printing meant extra work (and time) from drivers. Visualizing the bigger picture, such as understanding multiple routes or zooming into specific locations, demanded several resources. Similarly, early DataMaps offered static representations of data. These could be lists of system inventories, isolated database schemas, or sporadic architecture diagrams, providing snapshots of information without integration, interaction, or real-time updates.

With the advent of digital mapping tools like Google Maps and Apple Maps, navigation became intuitive, accessible, and dynamic. These tools incorporate static data, like street maps and landmarks, with real-time information like traffic conditions, construction updates, and weather influences. This evolution from static to dynamic mapping tools offers a direct analogy for understanding the transformation of DataMaps in organizations.

DataMaps represent the organization’s data ecosystem, detailing where data resides, how it flows between systems, and where it interacts with internal and external entities. Early DataMaps, much like paper maps, often existed as disconnected resources. A list of databases here, a flowchart there, or maybe a spreadsheet outlining access permissions. These were useful but far from comprehensive or user-friendly. Modern DataMaps, on the other hand, integrate diverse data sources, reflect real-time updates, and provide a clear visual interface that adapts to user needs.

Consider Google Maps. Beyond navigation, it allows users to explore surroundings, find services, and even navigate indoors. Similarly, modern DataMaps should enable stakeholders to drill down into specific data pipelines, understand connections, and monitor data integrity and security in real time.
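To make "drilling down" concrete, here is a minimal sketch that treats a DataMap as a directed graph of systems and walks it breadth-first to list everything downstream of one system. The system names and the `DATA_FLOWS` structure are hypothetical, invented for illustration; a real DataMap would carry much more metadata per flow.

```python
from collections import deque

# Hypothetical DataMap: each system maps to the systems it sends data to.
DATA_FLOWS = {
    "web_forms": ["crm"],
    "crm": ["data_warehouse", "email_vendor"],
    "data_warehouse": ["bi_dashboard"],
    "email_vendor": [],
    "bi_dashboard": [],
}


def downstream_systems(flows, start):
    """Return every system reachable from `start`, in breadth-first order."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in flows.get(current, []):
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


print(downstream_systems(DATA_FLOWS, "crm"))
# ['data_warehouse', 'email_vendor', 'bi_dashboard']
```

Asking the same question of `"web_forms"` would also surface the CRM and everything behind it, which is the kind of "where does this data end up?" view the analogy describes.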

Building a DataMap manually is akin to creating a map of the world by hand: technically possible, but time-intensive and prone to errors. Automation revolutionizes this process, much as satellite imagery and AI have advanced digital cartography. Modern DataMaps leverage automation to ingest and reconcile data from disparate sources. This ensures accuracy, reduces manual effort, and allows organizations to focus on interpreting and acting upon insights rather than gathering basic information.

Yet automation alone isn’t enough. Just as mapping tools allow user corrections, like reporting a closed road or a new business location, DataMaps require user input for fine-tuning. This symbiotic relationship between automation and manual refinement ensures that the DataMap remains accurate, relevant, and useful across diverse use cases.

Much like navigation apps, which continuously evolve to incorporate new technologies, modern DataMaps are not static tools. Once implemented, they often expand beyond their original purpose. Initially created to map data flows for compliance or operational oversight, they can quickly become invaluable for risk management, strategic planning, and even innovation.

For instance, in a healthcare organization, a DataMap might start as a tool to track patient data flows for regulatory compliance. Over time, it could evolve to optimize data sharing across departments, support research initiatives by identifying valuable datasets, or even enhance patient care through predictive analytics.

This adaptability is key. Organizations that adopt DataMaps often discover new applications, from identifying inefficiencies in data transfer to supporting AI model training by ensuring clean, well-structured data pipelines.

The success of apps like Google Maps offers key lessons for DataMaps:

Data Integration: Digital maps combine multiple data layers (like geographic, traffic, and business location data) into a cohesive user experience. Similarly, effective DataMaps must integrate diverse data sources, from cloud storage to on-premise systems, providing a unified view.

User-Friendly Interfaces: A key strength of mapping apps is their intuitive design, enabling users to navigate effortlessly. DataMaps should aim for the same simplicity, offering clear visualizations that allow stakeholders to explore data relationships without needing technical expertise.

Continuous Improvement: Mapping tools constantly evolve, adding features like real-time traffic updates or indoor navigation. DataMaps should similarly grow, incorporating new datasets, improving analytics capabilities, and adapting to organizational needs.

Just as the mapping world has expanded to include augmented reality (AR) for visualizing complex datasets in real-world environments, the future of DataMaps lies in integrating advanced technologies like AI. Unlike AR, which enhances spatial visualization, AI enables DataMaps to predict potential bottlenecks or vulnerabilities proactively. By leveraging machine learning algorithms, these tools can provide automated recommendations to optimize workflows or secure sensitive information, offering predictive and prescriptive insights beyond traditional data mapping.

As organizations increasingly rely on data to drive decisions, the demand for robust, dynamic DataMaps will only grow. These tools will not just document the data ecosystem but actively shape it, providing the insights and foresight needed to stay ahead in a competitive landscape. DataMaps provide foundational insights into how information flows within an organization, unlocking multiple applications across different tasks. They can be used to identify and address potential data bottlenecks, ensure compliance with privacy regulations like GDPR or HIPAA, optimize resource allocation, and enhance data security by pinpointing vulnerabilities. Additionally, DataMaps are invaluable for streamlining workflows in complex systems, supporting audits, and enabling better decision-making by providing a clear visual representation of interconnected data processes. This versatility demonstrates their importance across industries and operational needs.

The analogy between digital maps and DataMaps highlights a fundamental truth: tools that integrate diverse data sources, provide dynamic updates, and prioritize user experience are transformative. Just as Google Maps redefined navigation, DataMaps are redefining how organizations understand and leverage their data. By embracing automation, fostering adaptability, and prioritizing ease of use, DataMaps empower organizations to navigate their data ecosystems with clarity and confidence. In a world driven by data, the journey is just as important as the destination—and a well-designed DataMap ensures you reach it effectively.

0 notes

Text

"Guardians of Privacy: Privacy Management Software Market 2025–2033 🔐🌐"

The Privacy Management Software market is rapidly expanding, fueled by growing regulatory requirements and heightened consumer awareness around data privacy. These solutions enable organizations to manage personal data effectively, ensuring compliance with global privacy laws and fostering trust through transparency. Key features include data mapping, consent management, incident response, and privacy impact assessments.

To request a sample report: https://www.globalinsightservices.com/request-sample/?id=GIS31522&utm_source=SnehaPatil&utm_medium=Article

As businesses face complex privacy challenges, the market has seen substantial growth. The data discovery and mapping sub-segment leads, playing a critical role in identifying sensitive data across IT ecosystems. Consent management follows as a high-performing segment, driven by an emphasis on user consent and ethical data practices.

Regionally, North America dominates, supported by advanced privacy laws like the California Consumer Privacy Act (CCPA) and a mature tech infrastructure. Europe, propelled by the General Data Protection Regulation (GDPR), ranks as the second-largest market, with Germany leading in adoption due to its strong commitment to privacy rights.

In 2023, the market reached an estimated 350 million units, with projections to grow to 600 million units by 2033. The data protection segment commands the largest share at 45%, followed by consent management (30%) and risk assessment tools (25%). Leading players such as OneTrust, TrustArc, and BigID continue to innovate, leveraging AI, machine learning, and blockchain technologies to stay competitive.

While the market offers promising growth opportunities, particularly in emerging markets and sectors like healthcare and finance, challenges such as evolving regulatory landscapes and cybersecurity threats persist. Despite these hurdles, a 15% annual growth rate is anticipated, driven by investments in AI-powered analytics and cloud-based solutions.
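As a quick arithmetic aid, the compound annual growth rate implied by two endpoint figures can be computed directly. The sketch below plugs in the 2023 and 2033 unit figures quoted in this post and assumes a ten-year span; the function name `implied_cagr` is hypothetical.

```python
def implied_cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the post: 350 million units (2023), 600 million units (2033).
rate = implied_cagr(350, 600, 2033 - 2023)
print(f"Implied annual growth: {rate:.1%}")
```

With these inputs the implied rate is roughly 5.5% per year, so the 15% figure quoted in the post is presumably measured on a different basis (for example revenue rather than units).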

#PrivacyManagement #DataProtection #GDPRCompliance #CCPA #PrivacySoftware #AIinPrivacy #DataMapping #ConsentManagement #RiskAssessment #DataPrivacy #CyberSecurity #TechInnovation #CloudPrivacy #DigitalCompliance #TrustInTech

0 notes

Link

Within four weeks, the data management professionals at B2B Sales Arrow developed and sub-divided nearly 50,000 data records and delivered uncompromising data quality to the client.

#marketresearch #datamining #datadrivenmarketing #deepdataresearch #demandgeneration #datavalidation #datamapping #databasepositioning #csuiteleadgeneration #b2bservices #leadgeneration #databaseservices

1 note

Photo

REFLECTIVE JOURNALISM | DATA MAPPING OBJECTS

THE BEGINNING

The data object brief was my first assignment for ICT, so for me it was about getting used to the course structure and the process of how a project would unfold. It was also my first group assignment for the course, so it was just a matter of going with the flow and seeing what happened. Ricardo pitched the brief in the morning lecture with some amazing examples of how data could be translated into a product, and of how these products could help you understand an issue or be a constant reminder of a problem, either as a simple stand-alone form or through human interaction. It seemed like an amazing assignment to work on, especially because the brief had something to do with product design, which is something I love. But as I went along, one of the biggest hurdles was finding a dataset. The problem was that I was reverse engineering the whole process: I was trying to imagine the product I wanted to make and then trying to find data that would help me achieve it. This made the process very slow and tedious, and by the end of the week there was only a bunch of collected links, with no grounding in what, how and where I wanted to go.

Week two. The first week was a slow week for most students, who had similar problems, and there wasn’t much progress being made, so the teachers thought it was a good idea to put us in groups. We did this by putting post-it notes with our issue on a wall alongside everyone else’s notes; groups were then formed from people with similar projects. Mine was an environmental issue, looking at global energy consumption and the usage of energy sources.

GOING INTO GROUPS

Once we were in groups we faced a similar problem: trying to figure out what we wanted to make before settling on a dataset from the links. Most of us thought that in order for the project to be good we had to choose a complex issue and use multiple datasets, and this really set us back. We were so stuck on finding that ideal dataset that another week went by, and we had a collection of links and plenty of ideas for a product but none to act on.

We’d set up a Trello account to manage and assign tasks, but we barely used it due to the casualness of the whole process. We thought we’d assign tasks once we had a dataset, but because we didn’t get around to doing this until the first week of the holidays, there were no deadlines or tasks assigned. Towards the end of the first week working as a group we had kind of decided on a product (Wind-mill), and in the second week, with Sangeeta’s help, we were hustled towards choosing a dataset (global renewable energy usage from 1965 to 2015). This was great: for the first time we felt that we were getting somewhere and there was a sense of achievement. The girls worked on simplifying the data onto a chart and converting it to cm, and the boys worked on researching wind-chimes (design, style, etc.). We noticed that the numbers in the data were very small, so the impact on the product wouldn’t be that strong, so we just took the beginning (1965) and the end (2015), cutting out everything in between, and based our product on that. We created a couple of prototypes with existing materials in the classroom: pens, paper and ice-block sticks. The following day we went to Look Sharp and bought mini toothpick wind-chimes to create our second prototype, and the day after we cut open plastic bottles and thick zip-lock bags and used them to create an even bigger free-standing model. With all these prototypes we didn’t have a specific goal in mind; we were just getting the ideas out of our heads by exploring.

While progress was being made, there was still a lack of interest within our group of actually going ahead with our current data-set and product.

Square 1

We had created 3-4 prototypes by now but it still didn’t feel exciting. I guess we were still subconsciously exploring possibilities of what to do, and actually choosing a dataset and working with it made us realise that it wasn’t something we wanted to do. We had previously brought up deforestation as an option, so we eventually gravitated back to that. It was two days before mid-semester break, and the time basically went into gathering new links for deforestation datasets. We came up with a few new ideas; one of the products we thought of was a tea coaster. These coasters would be cut from a fallen tree branch at different thicknesses and different angles. The idea was that the damage would be mapped onto the angle at which the wood slab was cut: the bigger the damage, the steeper the angle, making it difficult to keep a drink steady when a cup was placed on it (the tipping point). The other methods would be the size and thickness of the slab, along with heat-sensitive paint applied in proportion to the dataset (e.g. in order to represent 80% on the surface of the tea coaster, you’d make a design that covers 80% of the surface).

THE PRODUCTIVE MEET UP

We managed to pick a day and organise a meetup over the first week of our holidays, which was probably our most productive time together yet. We chose a dataset from our various links, which was to do with threats to birds and bird habitats in Canada, America and Mexico. After drinking a couple of rounds of cider, one of us suggested using the bottles to map the dataset by creating different sounds. We quickly latched on to this idea and started blowing on the bottles and banging them with a stick while they were filled with different levels of water. This experiment became the basis of our final ideas, the wind-chime and the flute. For our project we wanted to use materials that were organic, naturally grown, sustainable, abundant and related to sound, given the topic of the issue. Bamboo was the one that immediately came up; we managed to find plenty of pre-cut, semi-dried bamboo where we were and started making use of it. The wind-chime would be made up of two datasets, “Threats: Species at great risk of extinction” and “Habitat: Tropical residents”, showing decline in the USA, Canada and Mexico over the course of 40 years. Our second product, “The flute”, which came later and was intended to be a fun project, used a global dataset, ‘Global risk for bird extinction’.

Reflection

There were multiple ups and downs throughout the project: the excitement when a new idea arose, and a dull, sunken feeling when it seemed we weren’t moving forward. For me it was my first project/assignment for this course, so it was all about getting a feel for the mood of the group and understanding the people around me: how they think, how ideas are shared and how we collaborate effectively.

Dataset links / Resources.

http://www.savingoursharedbirds.org/loss-of-abundance

https://www.theguardian.com/environment/2018/apr/23/one-in-eight-birds-is-threatened-with-extinction-global-study-finds

1 note

Text

#aitools #ai #artificialintelligence #digitaltwin #indoormapping #newtech #geospatialdata #datamapping #gis #mapping #geospatialtechnology #gismapping #dmapping #geospatial #dmap #digitaltwins #machinelearning #lidar #onpassiveai #onpassive #assetmapping #maps #fieldmapping #aitechnology #dataextraction #earth #voxelmaps #utilitymapping #onpassiveaitools #dmodeling

0 notes

Text

Third-Party Governance: Ensuring hygienic vendor data handling practices

When we collect, store and use data on a daily basis, there are a number of regulatory requirements that we are meant to comply with. These include:

Ensuring that informed consent is obtained from users.

Keeping privacy notices on websites up to date and easy to understand.

Putting appropriate security measures in place for the data collected.

Handling Data Subject Access Requests (DSARs) efficiently and in a timely manner.

Complying with these requirements isn’t always straightforward. Since most companies deal with multiple third parties (which can be service providers, vendors, contractors, suppliers, partners, and other external entities) we are required, by law, to ensure that these third parties are also compliant with the applicable regulatory requirements.

Third-party, vendor and service provider governance is a crucial component of a strong and sustainable privacy program. In August 2024, the Dutch Data Protection Authority imposed a €290 million fine on Uber for failing to have appropriate transfer mechanisms for personal data that it was transferring to third countries, including to its headquarters in the U.S. Under Article 44 of the EU General Data Protection Regulation (GDPR), data controllers and processors must comply with the data transfer provisions laid out in Chapter V of the GDPR when transferring personal data to a recipient based outside of the EEA. This includes the provisions of Article 46, which requires data controllers and processors to implement appropriate safeguards where transfers are to a country that has not been given an adequacy decision by the European Commission. In the U.S., the Federal Trade Commission (FTC) brought action against General Motors (GM) and OnStar (owned by GM) for collecting sensitive information and sharing it with third parties without consumers’ consent.

These are just examples of companies knowingly selling and sharing data with third parties. In some cases, data is collected by third parties through Software Development Kits (SDKs) and pixels embedded on company websites, without the full and proper knowledge of the first-party companies. Some websites are built using third-party service providers, and these third parties also collect data from website visitors without the knowledge of the first party. The AdTech ecosystem in general is a complex environment; data changes hands among so many parties that it's difficult to understand how the data gets used and which third parties are actually involved.

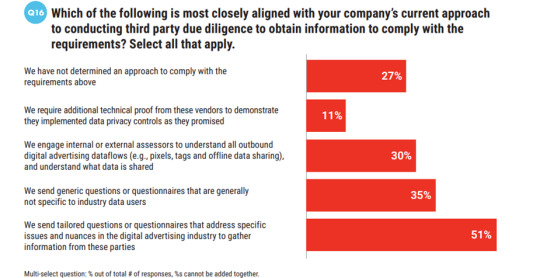

The CCPA and other state regulations require that businesses conduct due diligence of their service providers and third parties to avoid potential liability for acts of non-compliance on the part of these third parties. However, the Interactive Advertising Bureau’s (IAB) recent survey report provided interesting statistics, with 27% of the companies reporting that they had not yet finalized an approach to meet these third-party due diligence requirements.

In an already complex and evolving legal landscape, how do we ensure that our third-party governance is adequate?

This is where DataMapping comes in. A comprehensive and effective DataMap provides clear insights into what data is collected, its source, its storage location, the security measures in place, which third parties the data is shared with and how, the points of contact, and the security measures applied during data transfers. A relation map within a DataMap provides an overview of the different systems within the organisation and the third parties, showing what data passes between them, the frequency of the transfers, the security measures, etc. All this information is key when sharing data with third parties.

It’s important to be aware of the complexities around DataMapping when it involves third parties. Often, the contracts and documentation provided by third parties will allocate a lot of responsibility to the business to ensure the data is collected and managed in a privacy-compliant way. Further, when Privacy Impact Assessments (PIAs) are carried out, the business owners are sometimes unable to provide accurate and complete information, as they themselves might not understand all the nuances of the data collected and processed by the third party.
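One way to picture such a DataMap entry is as a small record per data flow. The sketch below is an assumption-laden illustration, not a description of any particular product: the `DataFlow` class, its field names, and the sample vendors are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DataFlow:
    """One DataMap entry; every field name here is illustrative."""
    data_category: str          # what data is collected
    source: str                 # where it comes from
    storage_location: str       # where it is stored
    recipient: str              # third party it is shared with
    transfer_method: str        # how it is shared
    transfer_frequency: str     # how often it is shared
    safeguards: list = field(default_factory=list)  # security measures in transit
    contact: str = ""           # point of contact at the recipient


def recipients_of(flows, data_category):
    """Return the sorted, de-duplicated third parties that receive a data category."""
    return sorted({f.recipient for f in flows if f.data_category == data_category})


def flows_missing_safeguards(flows):
    """Return entries that record no security measure for the transfer."""
    return [f for f in flows if not f.safeguards]


# Made-up sample entries for demonstration only.
flows = [
    DataFlow("email address", "signup form", "crm", "Email Vendor A",
             "API", "daily", ["TLS"], "privacy@vendor-a.example"),
    DataFlow("email address", "signup form", "crm", "Ad Partner B",
             "pixel", "real time"),
    DataFlow("device id", "mobile SDK", "analytics db", "Ad Partner B",
             "SDK", "real time", ["pseudonymization"]),
]

print(recipients_of(flows, "email address"))
# ['Ad Partner B', 'Email Vendor A']
print([f.recipient for f in flows_missing_safeguards(flows)])
# ['Ad Partner B']
```

Even a flat list like this answers two of the questions raised above: who receives a given category of data, and which transfers have no recorded safeguard.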

There is a need for automation and audits to capture detailed information about the data collected by third parties.

This information is also vital when ensuring that third parties handle Data Subject Access Requests (DSARs) and opt-out requests in a timely and efficient manner, which is a regulatory requirement. Depending on the requirement, sometimes passthrough requests are made in the case of service providers and processors, whereas sometimes we are required to disclose the third party and provide contact information. The vendor can be the same in both cases.

When there is a clear understanding of what data has been shared with which third party, DSARs and opt-out requests can be handled effectively. Whenever an opt-out request is received, or a signal is detected, the company should have the capability to automatically communicate the information of the request to the third parties involved so that they honor the request as well. A DSAR or an opt-out request is not effectively and completely honored until the third parties involved are also in compliance with the requirements of the request.
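The propagation step described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the sharing log is a plain list of `(user_id, recipient)` pairs, and `send` is a hypothetical callback that delivers the request to one recipient and returns `True` when that recipient acknowledges it. A real integration would depend on each vendor's own interface.

```python
from __future__ import annotations

from typing import Callable, Iterable


def propagate_opt_out(
    user_id: str,
    shares: Iterable[tuple[str, str]],
    send: Callable[[str, str], bool],
) -> tuple[bool, list[str]]:
    """Forward an opt-out to every recipient that received this user's data.

    Returns (honored, pending): the request counts as fully honored only
    when no recipient is left pending.
    """
    # Recipients that received this user's data, deduplicated and in a stable order.
    recipients = sorted({recipient for uid, recipient in shares if uid == user_id})
    # Recipients that did not acknowledge the forwarded request.
    pending = [r for r in recipients if not send(r, user_id)]
    return (not pending, pending)


# Illustrative sharing log; vendor names are made up.
shares = [("u1", "AdsCo"), ("u1", "MailCo"), ("u2", "AdsCo")]


def flaky_send(recipient: str, user_id: str) -> bool:
    # Stand-in for a real delivery mechanism: here MailCo never acknowledges.
    return recipient != "MailCo"


honored, pending = propagate_opt_out("u1", shares, flaky_send)
print(honored, pending)
```

Tracking the pending list makes the last point above concrete: the request stays open until every recipient involved has acknowledged it.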

The Interactive Advertising Bureau (IAB) has provided a solution to reach out to hundreds of third parties in the AdTech ecosystem. Companies can register with the IAB and set up the IAB Global Privacy Platform (GPP), which informs the third parties affiliated with the IAB of the users’ preferences.

In the case of SDKs, which are used in mobile apps and smart devices, maintaining a privacy-compliant app environment is vital. Periodic auditing is a best practice for keeping track of SDKs, as they might change their policies on privacy and data collection, especially during upgrades. The responsibility falls on developers to ensure that any SDKs to be integrated are privacy-compliant and to thoroughly study the documentation provided, as the specifications for maintaining compliance are generally included there.
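One simple way to support periodic SDK audits is to compare the SDK versions currently bundled in an app against the versions that were last reviewed. The sketch below assumes both inventories are available as plain name-to-version dictionaries; the function name and the SDK names are hypothetical.

```python
from __future__ import annotations


def sdks_needing_review(installed: dict[str, str], last_audited: dict[str, str]) -> list[str]:
    """Return SDKs that are new or whose version changed since the last audit."""
    return sorted(
        name
        for name, version in installed.items()
        if last_audited.get(name) != version
    )


# Illustrative inventories; SDK names and versions are made up.
installed = {"analytics-sdk": "4.2.0", "ads-sdk": "7.1.3", "crash-sdk": "1.0.0"}
last_audited = {"analytics-sdk": "4.2.0", "ads-sdk": "7.0.0"}

print(sdks_needing_review(installed, last_audited))
```

A version change does not by itself mean a policy change, so the output is only a list of SDKs whose documentation should be read again.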

Appropriate security measures are also necessary when data is being transferred. Privacy-enhancing technologies (PETs) can be used to anonymize or pseudonymize data so that it is less exposed to data breaches and bad actors during the transfer. Differential privacy, a privacy-enhancing technology used in data analytics, can also be utilized. The National Institute of Standards and Technology (NIST) recently published its guidelines for evaluating differential privacy.
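To make the two techniques mentioned above more concrete, here is a minimal sketch using only the Python standard library. It assumes a keyed hash (HMAC-SHA256) as the pseudonymization method and the Laplace mechanism as the differential privacy method for a simple count; both are common choices, not the only ones, and the function names are hypothetical. The `random` module is used purely for illustration and is not suitable for production-grade differential privacy.

```python
import hashlib
import hmac
import random


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash before it leaves the organisation.

    The same identifier and key always give the same token, so records can
    still be joined, but the token cannot be recomputed without the key.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise (sensitivity 1, scale 1/epsilon)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    # The difference of two independent exponentials with rate epsilon
    # follows a Laplace distribution with mean 0 and scale 1/epsilon.
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Illustrative values only; a real key would come from a secrets manager.
token = pseudonymize("user@example.invalid", key=b"demo-key-not-for-production")
noisy = dp_count(true_count=100, epsilon=1.0, rng=random.Random())
print(token[:16], round(noisy, 2))
```

Note that a keyed hash is pseudonymization rather than anonymization: whoever holds the key can link tokens back to identifiers, so the key itself has to be protected. A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy.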

Finally, third parties and service providers need to be audited and assessed on a regular basis. Often, third-party data handling processes are overlooked in order to focus on other matters. However, third parties need to be audited to ensure that they are complying with the requirements of their contracts and that Service Level Agreements (SLAs) and Master Service Agreements (MSAs) are met. In fact, third-party, service provider, and vendor contracts first need to be assessed and audited themselves to ensure they meet industry standards and compliance requirements.

In an environment where different companies interact and work very closely with one another, whether to build websites, use SDKs, serve AdTech purposes, or obtain additional tech support, the risk of facing regulatory heat for noncompliance is high. When substantial efforts are being made to guarantee that our own data handling practices are compliant with regulations, we should ensure that we don’t face heat for the data handling practices of the third parties we interact with. In fact, it is safe to say that a privacy program is not complete or sustainable until third-party governance is also strong. However, there is often a lack of technical talent and expertise to handle the demands of third-party governance in the complex AdTech ecosystem. As the IAB survey report found, 30% of the companies require internal and external assessors to fully understand the scope of what data is shared. Sustainable solutions require deep technical knowledge and skill in multiple areas, which are often not easy to find.

Text

Dirty data can lead to lost opportunities and revenue. Don't let your business suffer due to inaccurate or incomplete data. Our data cleansing services can help you clean and standardize your data, so you can make informed decisions and achieve your business goals. Contact us today to learn more about how we can help you keep your data clean and sparkling!

For more information, visit https://hirinfotech.com/data-cleansing/ or contact us at [email protected]

#data cleansing services#dataquality#data#hirinfotech#dataset#data transfer#datavalidation#data processing#datamapping#problem solving#business

Text

Data Mapping Helps Create a Better NHS

Data Mapping Protects Patient Records

Press release February 22, 2019

Gainesville, FL, February 22, 2019 (swampstratcomm-sp19.tumblr.com) -- Recently, Healthcare Global has focused on ways to innovate healthcare. One article reviews the top healthcare innovations for 2019, discussing new technologies such as artificial intelligence and new tools for storing patient records. Another article focuses on how healthcare is becoming more digitized and on a new smartphone application released by the NHS. A third article, Fit for Purpose: Introducing Data Mapping for a Healthier NHS, focuses on how the NHS can use data mapping to help keep patients’ information more secure.

The NHS has been storing patient data for over 70 years, so it holds a large amount of data, and that volume presents a significant threat to the security of patient records. During 2017, the global WannaCry attack resulted in the cancellation of 20,000 appointments and a large loss in revenue. The NHS is more prone to attack by cybercriminals because it uses outdated technology and operating systems. If the NHS suffers another cyber attack, it is possible that it would not be able to recover from the damage. Even though there is much skepticism surrounding data security, people still trust the NHS with their records. This makes it important that the NHS has a way to store data and keep it secure. One way to do this is through data mapping. “Data mapping is about creating a visual overview of all the data collected and stored by an organisation, providing an insight into the potential risks associated with each data type and location.” Data mapping does not rely on data being strictly online, so it is compatible with the NHS documents that are still kept on paper. The NHS must decide how all current audio, online, and paper data must be stored. It must also implement new policies for how data is controlled and create new guidelines for who controls the data. Once the NHS takes these steps, it can begin to chart the data flow. After the NHS understands the data that is being stored, it can ask a series of questions to determine how the data is being processed and collected.

Data mapping is a technology that any company can use to make sure people’s information is safe. It is especially important when it comes to people’s medical records. Streamlining how data is collected, stored, and transferred through data mapping will keep people’s information safer in the long run.

Healthcare Global highlights innovation and untraditional ways of handling patient care to continually move healthcare forward.

Contact:

Kionistorm

Text

The Importance of Privacy Assessments & Privacy Management | DataBench

Looking for a Privacy Assessment? DataBench offers a range of services to help you manage your privacy. We can help you assess your current privacy practices, develop a privacy management plan, and more. Contact us today to learn more.
