#Free AI Detection Tools

Text

Free AI Content Detection Tools Are Misleading Guest Bloggers | Proven Reasons They Can’t Be Trusted

“I wrote an article for a new guest blogging website using my own experience. However, it was flagged as AI-generated by a guest blogger.” – Based on the experience of a senior content contributor. Do you know the reason behind this? He checked the article using a random free AI detection tool and claimed that I wrote it with GPT. Still not believing? See this – A leading and popular company…

#AI Content Detection Tools#AI Detection Tools#Content Detection Tools#Free AI Content Detection Tools#Free AI Detection Tools

0 notes

Text

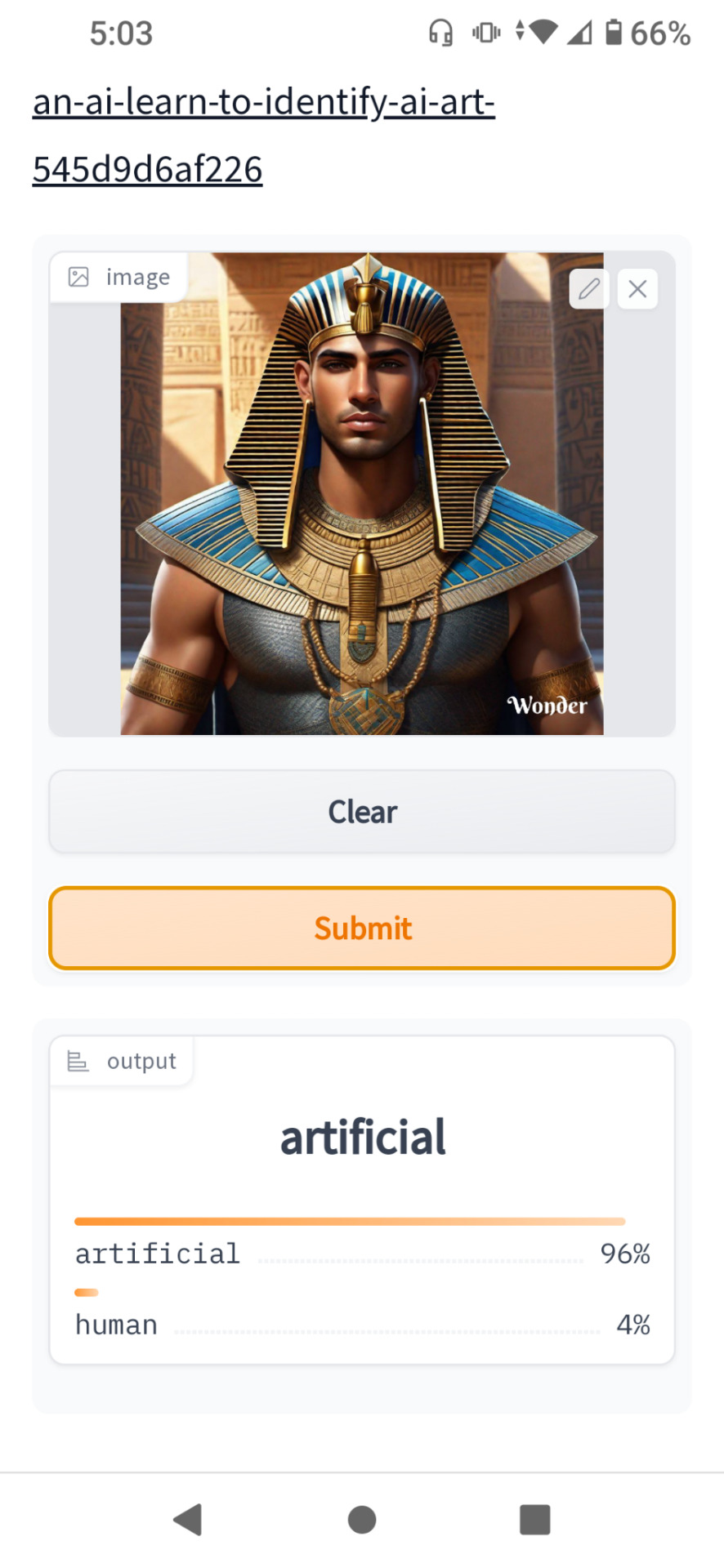

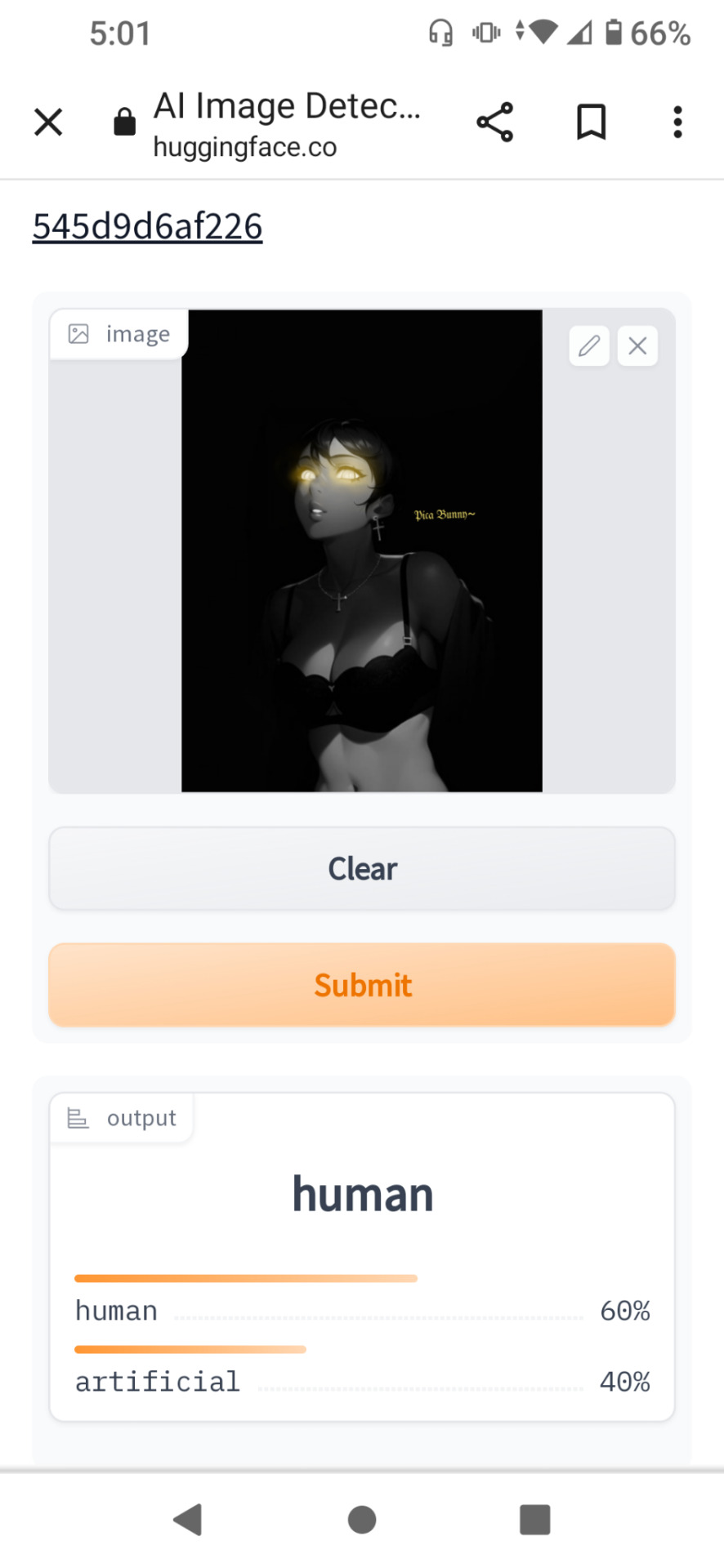

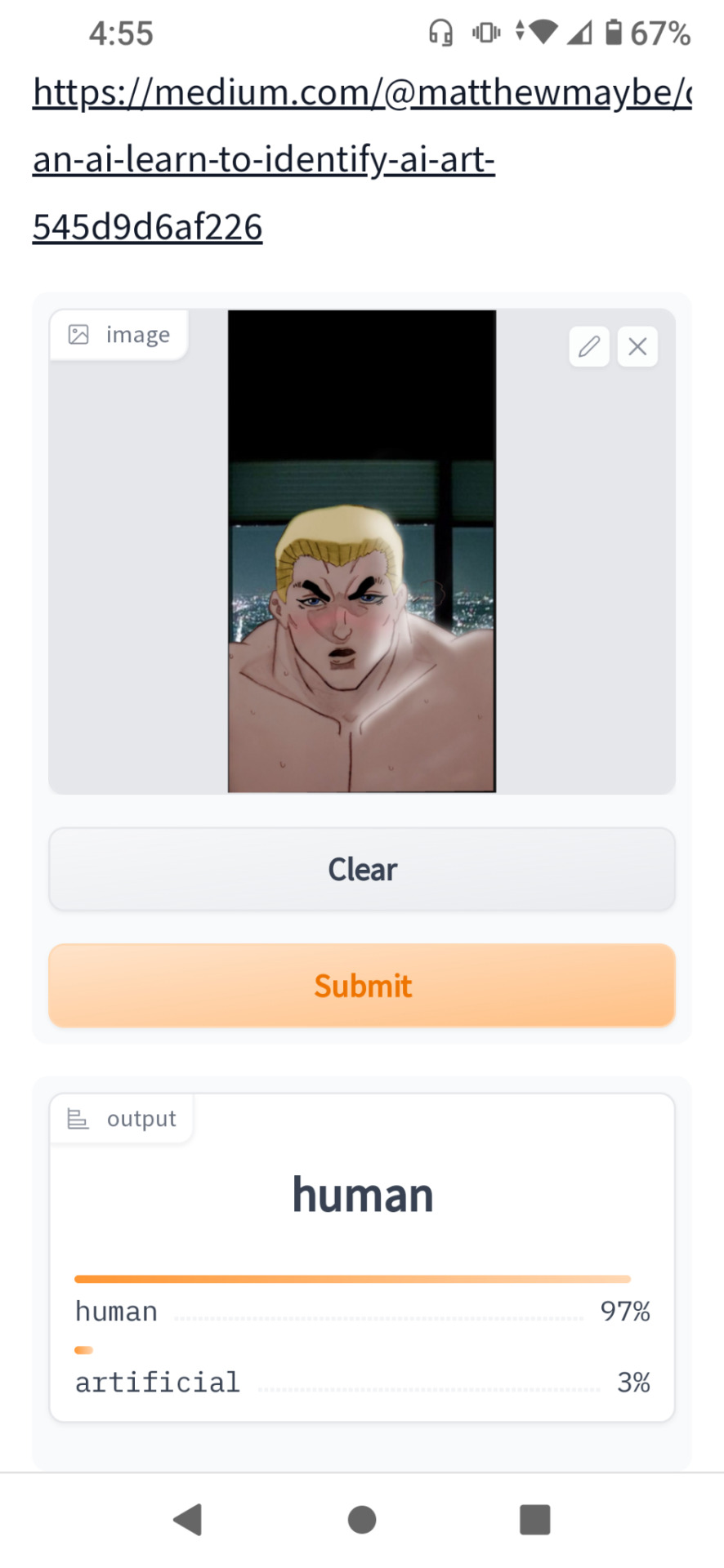

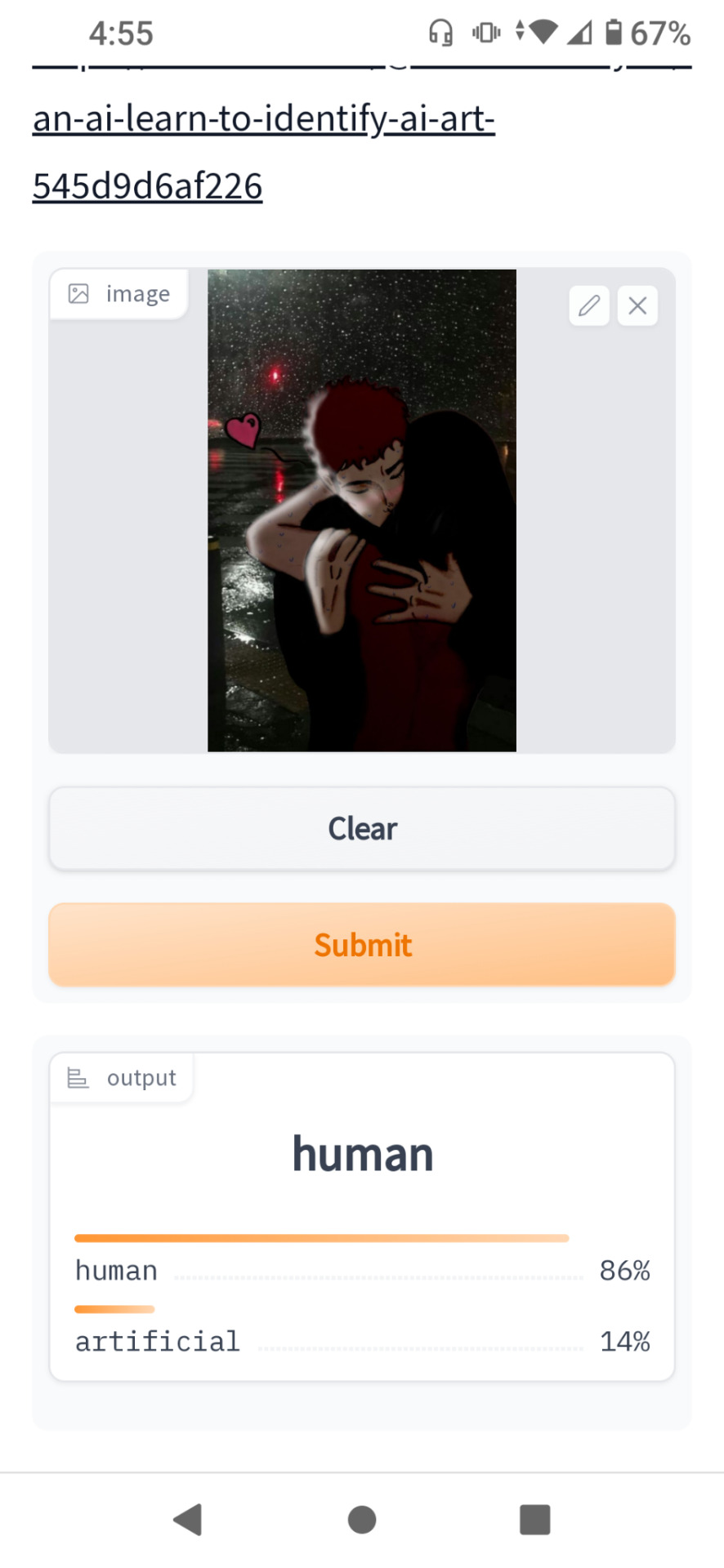

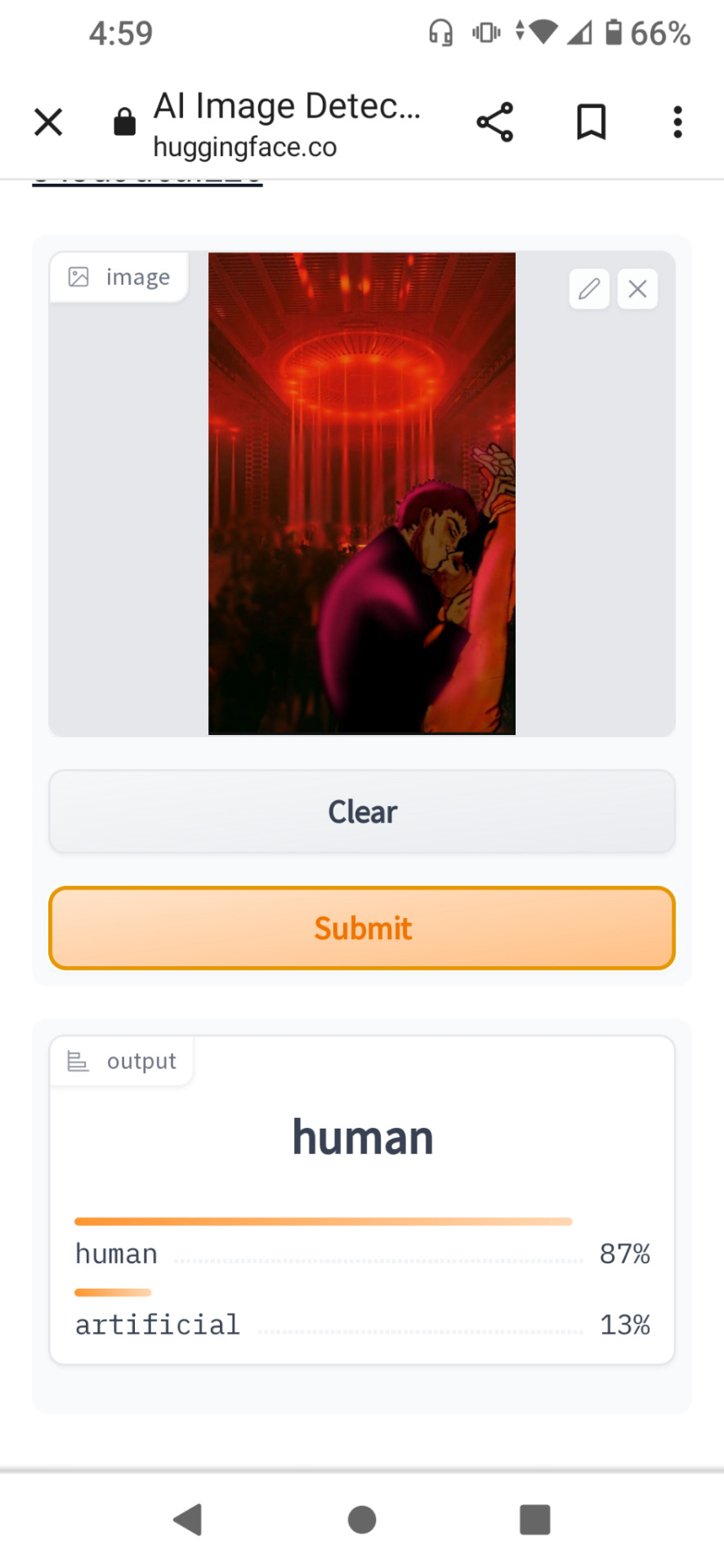

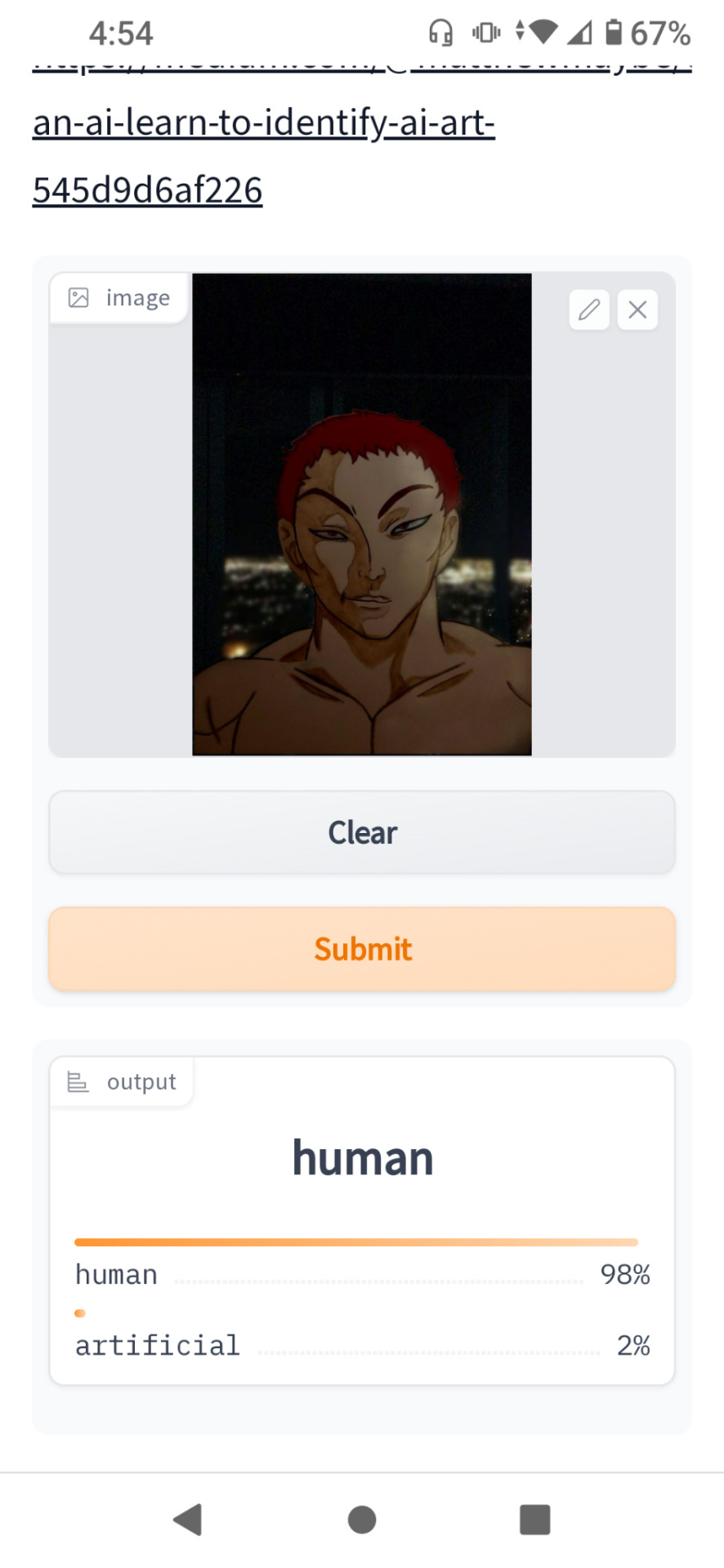

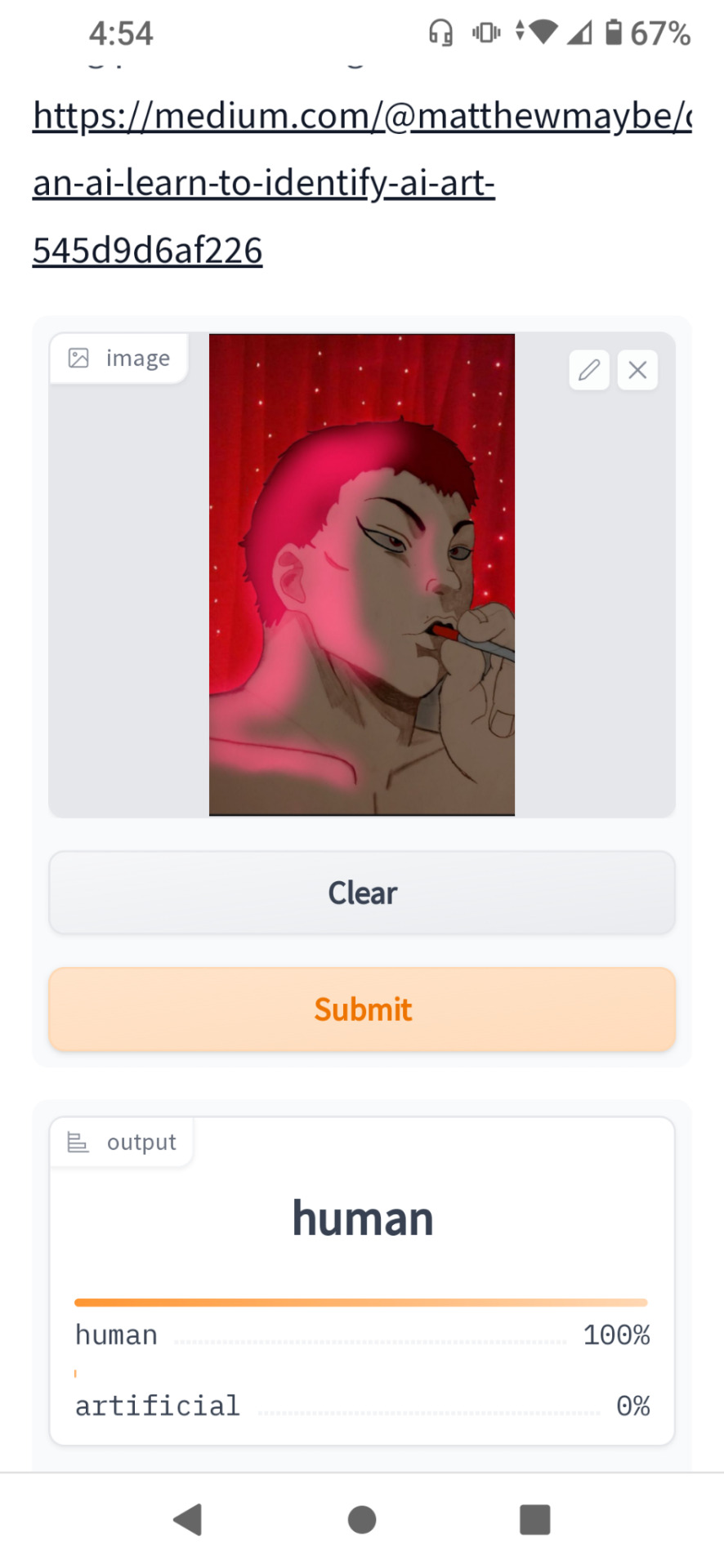

Well guys, so... I finally found an app/website that can track whether a piece of artwork is AI or not. Now, to test this, I screenshotted an AI artist (one that specifically states they use AI in their description) and put it through the website. This was the result:

And Now I put some of my own Artwork through the tester:

So far it looks like it works pretty well. I'm going to leave the link here in case anyone wants to use it. With the AI manhunts going on right now I figure this will help a lot of people.

Website here:

1 note

Text

Data Protection: Legal Safeguards for Your Business

In today’s digital age, data is the lifeblood of most businesses. Customer information, financial records, and intellectual property – all this valuable data resides within your systems. However, with this digital wealth comes a significant responsibility: protecting it from unauthorized access, misuse, or loss. Data breaches can have devastating consequences, damaging your reputation, incurring…

#affordable data protection insurance options for small businesses#AI-powered tools for data breach detection and prevention#Are there any data protection exemptions for specific industries#Are there any government grants available to help businesses with data security compliance?#benefits of outsourcing data security compliance for startups#Can I be fined for non-compliance with data protection regulations#Can I outsource data security compliance tasks for my business#Can I use a cloud-based service for storing customer data securely#CCPA compliance for businesses offering loyalty programs with rewards#CCPA compliance for California businesses#cloud storage solutions with strong data residency guarantees#consumer data consent management for businesses#cost comparison of data encryption solutions for businesses#customer data consent management platform for e-commerce businesses#data anonymization techniques for businesses#data anonymization techniques for customer purchase history data#data breach compliance for businesses#data breach notification requirements for businesses#data encryption solutions for businesses#data protection impact assessment (DPIA) for businesses#data protection insurance for businesses#data residency requirements for businesses#data security best practices for businesses#Do I need a data privacy lawyer for my business#Do I need to train employees on data privacy practices#Does my California business need to comply with CCPA regulations#employee data privacy training for businesses#free data breach compliance checklist for small businesses#GDPR compliance for businesses processing employee data from the EU#GDPR compliance for international businesses

0 notes

Text

Our AI detector tool uses DeepAnalyse™ Technology to identify the origin of your text. Our experiments are still ongoing, and our aim is to analyze more articles and text.

#ai content detector#ai checker free#ai detection tool free#ai detector free#ai essay detector#chat gpt 0

0 notes

Text

So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free-to-use video game development tool, offering a basic version for individuals who want to learn how to create games or create independently, alongside paid versions for corporations or people who want more features. It's decent enough at this job; it has issues, but for the price point I can't complain, and it's the ideal entry point into creating in this medium. It's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was the COO of EA, he advocated for using what out and out sounds like emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced, greedy moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always online runtime function to determine whether a game is downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ, which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game already purchased from their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.
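To make the gap between purchases and installs concrete, here is a toy tally. The event records and their layout are invented purely for illustration; this is not how Unity collects its data, only a sketch of the counting rule described above.

```python
# Each hypothetical event: (account, device, how the copy was obtained).
events = [
    ("alice", "pc-1", "purchase"),
    ("alice", "pc-1", "reinstall"),
    ("alice", "pc-2", "new device"),
    ("mallory", "pc-9", "pirated"),
]

# Only one of these events brought the studio any money...
purchases = sum(1 for _, _, kind in events if kind == "purchase")
# ...but under the described rule every initialization counts as an install.
installs = len(events)

print(purchases, installs)  # 1 4
```

One sale, four billable installs, which is exactly the mismatch people were objecting to.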

Q: How are you going to collect installs?

A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses?

A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs?

A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games?

A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only levy this fee on games which surpass a certain threshold of downloads and yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee:

Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs.

Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
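As a rough sketch of the eligibility rule quoted above, where both conditions have to hold at once: the names `THRESHOLDS` and `qualifies_for_runtime_fee` are made up for this example, and this only mirrors the quoted numbers, not whatever Unity's billing system actually does.

```python
# Thresholds as quoted in the announcement:
# plan -> (minimum revenue in the last 12 months in USD, minimum lifetime installs).
# The dictionary layout and function name are illustrative assumptions.
THRESHOLDS = {
    "Personal": (200_000, 200_000),
    "Plus": (200_000, 200_000),
    "Pro": (1_000_000, 1_000_000),
    "Enterprise": (1_000_000, 1_000_000),
}


def qualifies_for_runtime_fee(plan, revenue_last_12_months, lifetime_installs):
    """Return True only when BOTH the revenue and the install threshold are met."""
    min_revenue, min_installs = THRESHOLDS[plan]
    return revenue_last_12_months >= min_revenue and lifetime_installs >= min_installs


# A game with huge install counts but modest revenue does not qualify...
print(qualifies_for_runtime_fee("Personal", 150_000, 5_000_000))  # False
# ...but one that just clears both bars does.
print(qualifies_for_runtime_fee("Personal", 200_000, 200_000))    # True
```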

They don't say how they're going to collect information on a game's revenue. Likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact and Honkai: Star Rail, Fate Grand Order, Among Us, and Fall Guys) and not every 2 dollar puzzle platformer that drops on Steam. But these larger products also have the easiest time porting off of Unity and the most incentive to do so, meaning that realistically those most heavily impacted are going to be the ones who just barely meet this threshold, most of them indie developers.

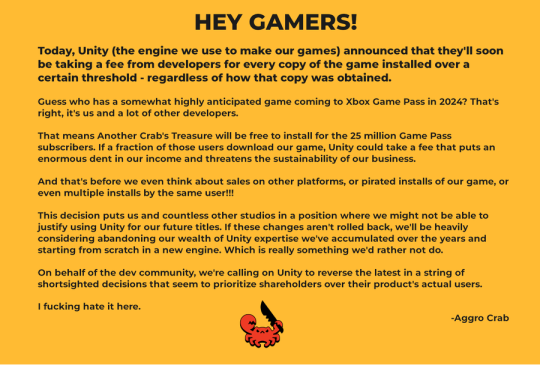

Aggro Crab Games, one of the first to properly break this story, points out that systems like the Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate their "lifetime game installs", meaning that developers who even just skim the threshold of that 200k revenue will be asked to pay a fee per install, not a percentage of said revenue.

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that if a game comes out for $1.99, they will be paying, at maximum, 10% of their revenue to Unity, whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially any company in financial desperation, which a sudden anchor on all your revenue is going to create, is going to choose the latter.
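The arithmetic behind those two percentages, as a minimal sketch. It assumes exactly one install per sale and the top rate of $0.20 from the fee table; `fee_share_of_price` is just a name picked for this example, and reinstalls would push the real share higher.

```python
def fee_share_of_price(price, fee_per_install=0.20):
    """Fraction of one sale's price eaten by a single per-install fee."""
    return fee_per_install / price


for price in (1.99, 59.99):
    print(f"${price:.2f}: {fee_share_of_price(price):.1%}")
# $1.99: 10.1%
# $59.99: 0.3%
```

Because the fee is flat, the share shrinks as the price goes up, which is the incentive problem described above.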

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

While I'm looking at this page as it exists now, it currently says

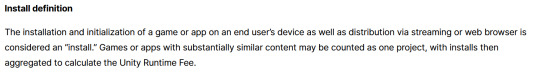

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and utilizing the Wayback Machine we can see that this phrasing was changed at some point in the few hours since this announcement went up. Instead, it reads:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games?

A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024?

Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones.

A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

That would involve billing companies for using their software before telling them of the existence of a bill. Holding their actions to a contract that they performed before the contract existed!

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post. Unfortunately, it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, that it was literally only made for the CEO to short his own goddamn company, because I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has given rise to the visibility of free, open source alternative Godot, which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole, fucking, environment where creatives are treated as an endless mill of free profits that's going to be continuously ratcheted up and up to drive unsustainable infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that, I find having these big posts that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes

Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here's a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans can not, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there's no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let their residents inside? oh, they didn't train their model with anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men work that role than women (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes

Text

AI, Plagiarism, and CYA

Shout-out to all the students gearing up to go back to school in increasingly frustrating times when dealing with all this AI bullshit. As you've probably noticed, lots of institutions have adopted anti-plagiarism software that incorporates AI detectors that - surprise - aren't that great. Many students are catching flak for getting dinged on work that isn't AI generated, and schools are struggling to catch up and craft policies that uphold academic rigor. It sucks for everyone involved!

As a student, it can really feel like you're in a bind, especially if you didn't do anything wrong. Your instructor isn't likely to be as tech-savvy as some, and frankly, you might not be as tech-savvy as you think either. The best thing to do, no matter how your school is handling things, is to Cover Your Ass.

Pay attention to the academic policy. Look, I know you probably skimmed the syllabus. Primus knows I did too, but the policy there is the policy the instructor must stick with. If the policy sets down a strong 'don't touch ChatGPT with a ten-foot pole' standard, stick to it. If you get flagged for something you thought was okay because you didn't read the policy carefully, you don't have ground to stand on if you get called out.

Turn off Autosave and save multiple (named) drafts. If you're using Microsoft Word because your school gives you a free license, the handy Autosave feature may be shooting you in the foot when it comes to proving you did the work. I know this seems counter-intuitive, but I've seen this bite enough people in the ass to recommend students go old-school. Keep those "draft 1234" in a file just in case.

Maintaining timestamped, clearly different drafts of a paper can really help you in the long run. Google Docs also does a much better job of tracking changes to a document, and may be something to consider. However, with all this AI shit, I'm hesitant to recommend Google. Your best bet, overall, is to keep multiple distinctive drafts that prove how your paragraphs evolved from first to final.

Avoid Grammarly, ProWritingAid, etc. All that handy 'writing tools' software that claims to help shore up your writing isn't doing you any favors. Grammarly, ProWritingAid, and other software throw up immediate flags in AI-detection software. You may have only used it to clean up the grammar and punctuation, but if the AI-detection software says otherwise, you might be screwed. They're not worth using over the basic spelling and grammar check that both Word and Google Docs can already do.

Cite all citations and save your sources! This is basic paper-writing, but people using ChatGPT for research often neglect to check to make sure it isn't making shit up, and that made up shit is starting to appear on other parts of the internet. Be sure to click through and confirm what you're using for your paper is true. Get your sources and research material from somewhere other than generative language models, which are known for making shit up. Yes, Wikipedia is a fine place to start and has rigorously maintained sources.

Work with the support your school has available. My biggest mistake in college was not reaching out when I felt like I was drowning, and I know how easy it is to get in your head and not know where to turn when you need more help. But I've since met a great deal of awesome librarians, tutors, and student aid staff that love nothing more than to devote their time to student success. Don't wait until the last moment, when they're swamped - you can and will succeed if you reach out early and often.

I, frankly, can't wait for all this AI bullshit to melt down in a catastrophic collapse, but in the meantime, take steps to protect yourself.

#school#AI Bullshit#frankly AI-checkers are just as bad as AI#you gotta take steps to document what you're doing

462 notes

Text

AI DISTURBANCE "OVERLAYS" DO NOT WORK!

To all the artists and folks who want to protect their art against AI mimicry: all the "AI disturbance" overlays that are circulating online lately DON'T WORK!

Glaze's disturbance (and now the Ibis Paint premium feature, apparently. Not sure.) modifies the image on a code level. It's not just an overlaid effect; it actually affects the image's data so AI can't really detect and interpret the code within the image. From the Glaze website:

Can't you just apply some filter, compression, blurring, or add some noise to the image to destroy image cloaks? As counterintuitive as this may be, the high level answer is that no simple tools work to destroy the perturbation of these image cloaks. To make sense of this, it helps to first understand that cloaking does not use high-intensity pixels, or rely on bright patterns to distort the image. It is a precisely computed combination of a number of pixels that do not easily stand out to the human eye, but can produce distortion in the AI's “eye.” In our work, we have performed extensive tests showing how robust cloaking is to things like image compression and distortion/noise/masking injection. Another way to think about this is that the cloak is not some brittle watermark that is either seen or not seen. It is a transformation of the image in a dimension that humans do not perceive, but very much in the dimensions that the deep learning model perceive these images. So transformations that rotate, blur, change resolution, crop, etc, do not affect the cloak, just like the same way those operations would not change your perception of what makes a Van Gogh painting "Van Gogh."

Anyone can request a WebGlaze account for FREE: just send an email or a DM to the official Glaze Project accounts on X and Instagram, and they reply within a few days. Be sure to provide a link to your art acc (anywhere) so they know you're an artist.

Please don't be fooled by those colorful and bright overlays to just download and put on your art: it won't work against AI training. Protect your art with REAL Glaze please 🙏🏻 WebGlaze is SUPER FAST, you upload the artwork and they send it back to you within five minutes, and the effect is barely visible!

Official Glaze Project website | Glaze FAQs | about WebGlaze

#no ai#no ai art#ai disturbance#anti ai#anti ai art#artists#artists on tumblr#artists against ai#glaze#webglaze#ibispaint#noai#artists supporting artists#art information#art resources

813 notes

Text

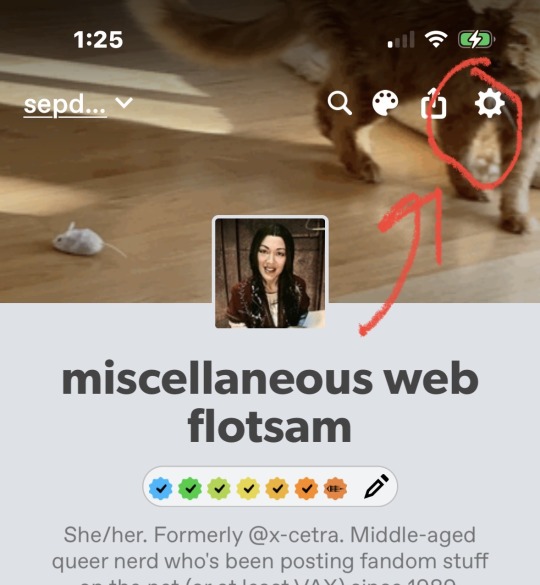

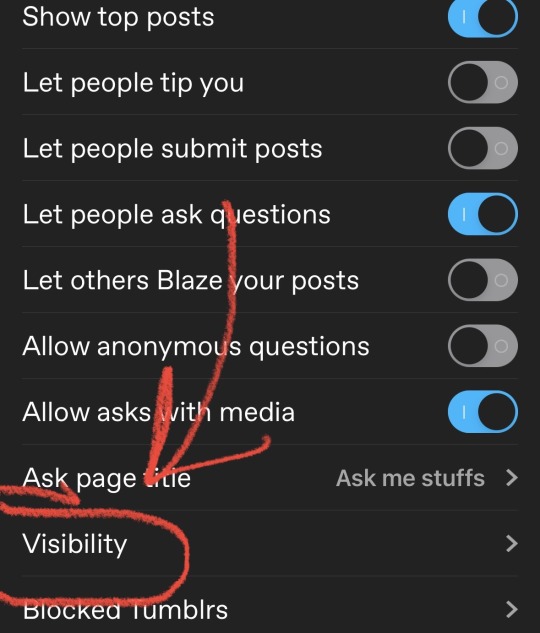

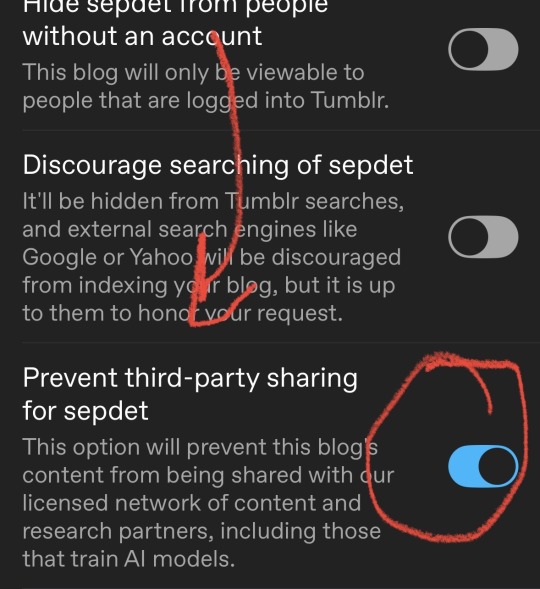

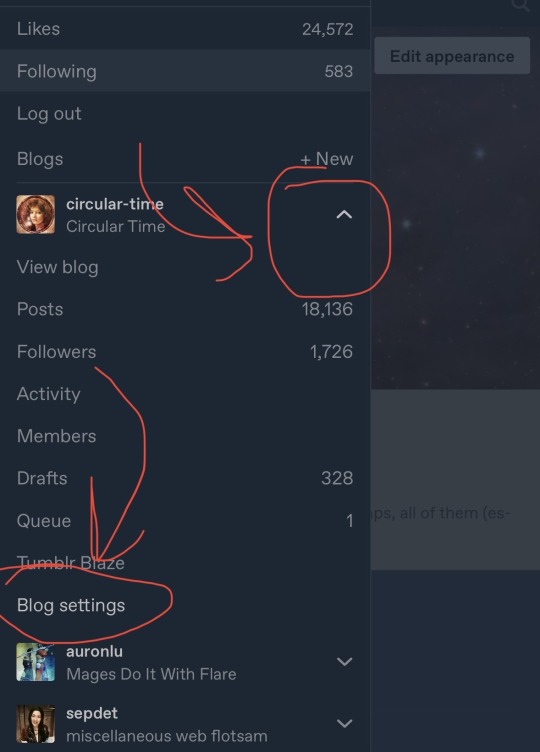

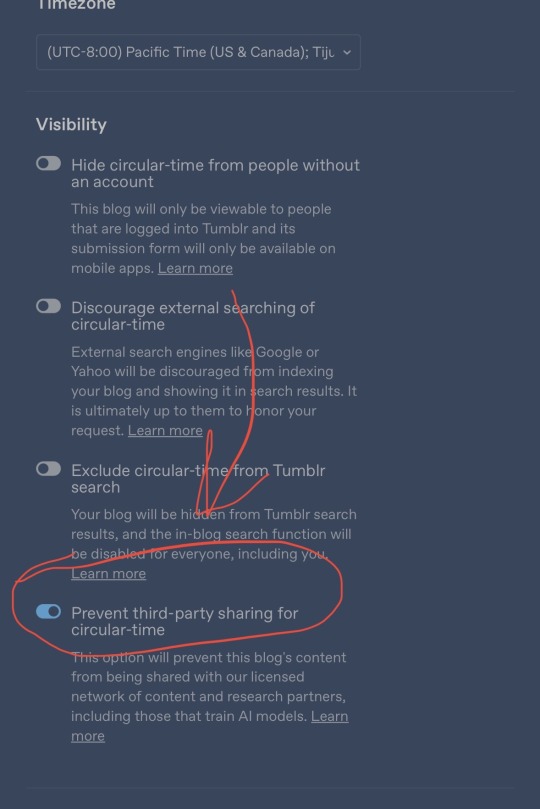

How to disable AI scraping your tumblr blogs

TL;DR: Blog Settings > Visibility > Turn off third-party sharing

Mobile:

Desktop:

Here's the Official Tumblr Announcement

UChicago's free tool Nightshade poisons AI image generators with disruptive data

Glaze blocks AI from detecting/mimicking an artist's distinctive style

Fawkes disrupts facial recognition scans

908 notes

Text

DpxDC Prompt: Danny Overshadows the Batmobile

... Danny while visiting Gotham saves Batman by possessing the batmobile- unfortunately he gets stuck.

Imma copy and paste my thoughts on how I would take this from discord LOL

Bruce knows there is something wrong with the batmobile and runs tests to see if he got hacked. But at the same time he's conflicted, because whoever hacked his vehicle just saved his life.

Also can see Fenton driving skills put to use plus with Danny's ability to phase through. Definitely makes car chases easier if Bruce can jack the runaway vans from the inside.

But Danny freaking out- using the radio or gps to try and speak after he realizes he needs help to get out of the car… and that Batman won't be as upset as he thinks.

Oo meanwhile Fentons are all over Gotham looking for their missing son… having no idea Danny overshadowed a car.

Danny figuring out how to send tuck a message to send to jazz…ends up being tracked by the bats who go investigate thinking tuck's the hacker.

Tucker trying to cover for Danny

Ooo imagine if they try to chase down the Fentons because of them driving crazy (and maybe they're attacking the batmobile because they can detect a ghost) and it's the only car Danny can't phase through, and he's even getting damaged by it.

So he tries to plead in the radio to batman.

And then Bruce wonders if it actually was the Fentons but things still don't make sense… until the team that's investigating Tuck brings in more evidence and probably Tuck.

Then it clicks.. Danny isnt ai/bot used to hack the car but Danny Fenton the missing child.

Tuck still the key to figure out how Danny got stuck. Apparently a certain part is made from materials similar to the thermos.

But catch is they need tools from Fentons to get him out so they have to bait them and have Tuck and another bat probably Tim help gather the materials.

Maybe batman confronts them, raising his arms as Fentons accuse batman being a filthy ghost that stole their child. While the others steal what they need.

When it looks like the Fentons are not going to cooperate and blast batman (batman ready to go on offensive ) Danny uses a shield to send blasts back at his parents beeping for batman to get back in.

They go on another chase where Danny drives the batmobile off a cliff and into water only to safely fly them back to the cave. Exhausted and powering down as soon as they're on land letting Bruce take the wheel again.

When Tim n Tuck finally get Danny free they all jump for joy, then are quickly reminded Danny is still in the Batcave. And he's like oh right shit… they know what I am >>'

But Danny already impressed the bats so i can see them offering to help Danny out further.

Tim n Tuck become friends and soon Danny gets the support of heroes. He goes back to his family, who's so happy to see him safe… Danny putting in a good word about Batman but it falls on deaf ears.

Pfft, be funny if this is the catalyst for the Fentons moving to Gotham to hunt down Batman.

Bruce investing in the Fentons just so he can work on their tech and modify it to not work on Danny, and then Danny haunting the car every now and then for old times' sake.

Thought this was just fun idea XD

#dp x dc#danny fenton#batman#danny phantom#dpxdc#dp crossover#dpxdc prompt#batmobile#bruce wayne#fanfic idea#dcxdp

398 notes


Text

a different fandom i'm in has recently had a scandal where two separate """"writers""""" have been caught using AI to produce their fanfiction. And it is something concerning to me because recent history has shown that audiences DO seemingly respond well to predictable, easy-to-consume slop over works that legitimately have a lot of thought and effort put into them. the huge commercial success of authors like colleen hoover when many actually talented writers struggle to publish is proof of that. I'm worried that we might begin seeing AI used to produce low quality highly marketable fiction (and art in general) in the near future.

obviously publishing generally has much higher standards of quality than fanfiction, as it should, but it's still beholden to economic interests, it's still an industry and therefore I think AI is a huge concern for the future of fiction in general. ultimately, if it's demonstrated that it sells better AND it's cheaper/faster to produce, it's very possible that we could see AI being used to churn out fiction in the near future. AI is generally detectable at the moment, but for how long will it be detectable? AI leaking into fanworks is a bad bad sign and I think we seriously need to be on the lookout for it.

I'm not sure to what extent this might be happening in other fandoms, although I'd be prepared to bet it is and I think will increasingly happen. obviously I'm not at all in tune with what's going on in more mainstream marauders/hp fandom but my sense is that a lot of people in that part of fandom are younger, and therefore both more likely to be driven by a need for attention or public recognition, and more willing to take shortcuts like AI. But to do so would be a slap in the face to writers, of any age or ability level, in any corner of fandom, who actually put in hours of work to improve and to create something for free that people will enjoy.

it's okay to not be a brilliant writer. nobody is great at anything when they start out, and like any craft it takes many many hours to improve. in my opinion fanfiction is a great way to practise writing, especially when viewed as a tool through which to analyse fiction, because being able to analyse fiction is a necessary step to being able to create your own. but increasing lack of media literacy in younger generations is a worrying trend. losing sight of why fiction matters and what matters about it in the first place, viewing it purely in terms of entertainment value is what leads people to think that taking shortcuts like this is acceptable.

the hp fandom faced a similar issue once, decades ago, with the infamous cassandra claire plagiarism debacle. And as we know, she was rewarded with massive popularity and eventually a book deal that led to a film and tv series. it's easy to see the appeal of taking shortcuts like plagiarism and AI when it's been demonstrated on multiple occasions in recent history that having a popular fanfiction can lead directly to publishing opportunities.

just like plagiarism, using AI to do your writing for you is intellectually dishonest, lazy and immoral. it's an insult on so many levels, there's absolutely no excuse for it. and horrifyingly I do think we need to be on the lookout for it in fandom spaces

#it reminds me of a dialogue in buffy#where spike tells dawn that the adults at school respond well to the buffybot because as a robot she's predictable#anyway sorry for this rant lol ive just been thinking about this a lot the past few days#obviously i have no idea if this is actually happening in the hp/marauders fandom#but given its popularity i wouldn't be surprised if it was

61 notes


Text

Reposting this for the anon who is clearly too obsessed and doesn't have a life outside of Tumblr. + Added a new statement too.

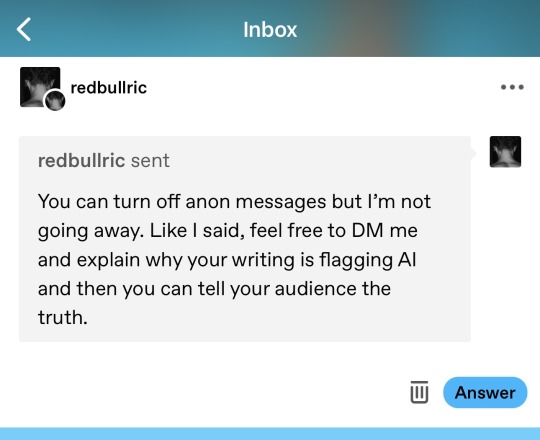

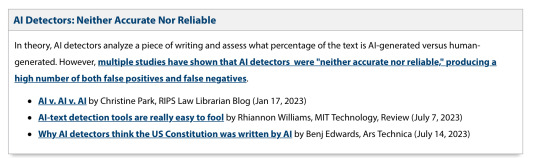

I deleted this post because I was under the impression the anon had already seen it—since they love to stalk my blog very in-depth. Luckily, I had written it on Google Docs, like I do with everything I post here, before posting it the first time. And now I’m posting it again because apparently, they didn’t get the memo and love to create fake accounts:

For the anon who’s too cowardly to use their real account and clearly doesn’t have a life:

I was going to ignore the first ask, but then you had the time, energy, and weird obsession to create a fake account just to send me another ask—and then a private message. So let me be clear:

This is the first and last time I address this. Any further messages or asks about this will be deleted and blocked immediately. Tumblr is my safe space—stress and drama free—and I will block anyone who disturbs that for me. You really came onto my blog and did what—threatened me? You ran my writing through an unreliable AI checker and then had the audacity to message me about it? Do you really feel like it's your place to question how people write fanfiction? Why do you feel so entitled to an explanation from someone you don’t even know? To quote you: “DM me and explain why” — WHO are you? And where is this entitlement coming from?

Let me ask you this: Do you not have a life outside of Tumblr? Who takes time out of their day to check if what a stranger posted is “AI” or not? I saw another account getting the same kind of asks recently—was that you too? Are you going blog to blog checking F1 fics like a fanfic detective? If so: get a life, get a job, get a hobby, or better yet—touch grass.

And the audacity to make a fake account just to send another message? Coward behavior. I’ve blocked the first anon ask and now your little fake blog too. I’ll keep blocking every single one if you continue harassing me.

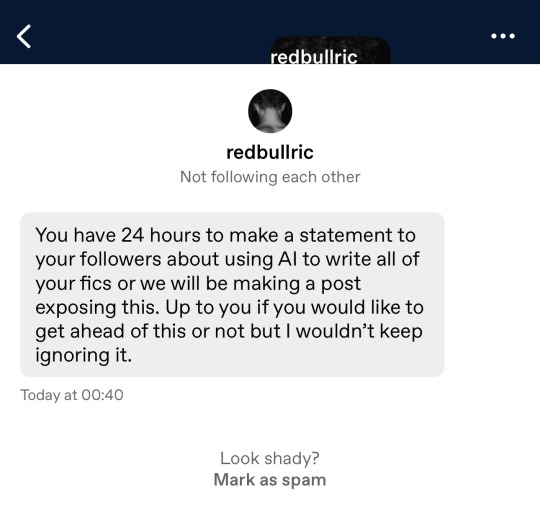

Don’t like what I post? Scroll past it. Block me. Ignore me. I truly do not care. I use Google Docs for all my fics—outline ideas, drafts, requests order. Since that seems hard to believe, here’s one example straight from my docs.

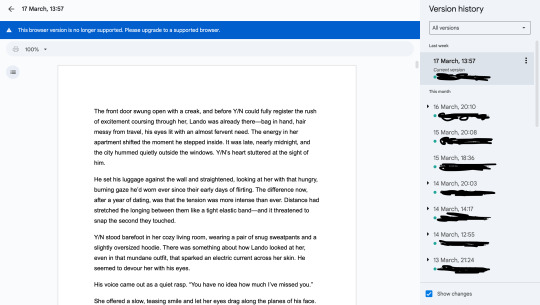

And since you clearly have free time, here are actual credible sources that prove AI checkers are not reliable and should never be used as evidence of anything:

Source

Source

Source

Source

This is especially relevant to me personally, because English is not my native language. I've studied it for over 15 years, I'm currently studying English at university, and I don't live in an English-speaking country. I didn't grow up in an English-speaking country, and I've worked hard to develop my vocabulary, grammar, and writing style. So if my writing sounds "too repetitive" or "too perfect to be written by a human" and gets flagged by some AI detector—that's not proof I used AI. It means I've worked hard to get to this level, even though my English might not always be perfect.

Source

AI that claims to create undetectable AI content or "human AI"

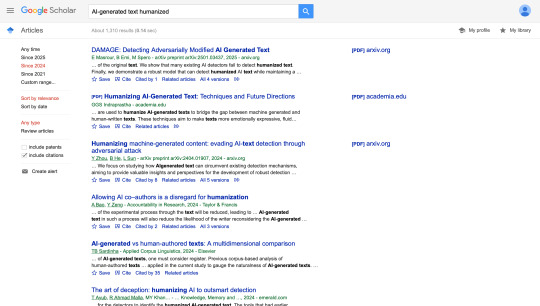

Or maybe you want to read more on Google Scholar:

There are so many sources to inform yourself—you just need to know how to use them.

And this is what really gets me: someone could use AI, lightly edit the output, or run it through one of those "humanize AI" generators and pass every detector with flying colors. Meanwhile, people like me get flagged and questioned for no reason.

Also, if I were actually using AI, I would've used one of those humanizing tools too—so people like you wouldn't harass me over what I post.

These days, it seems you don't even need facts—just a fake account and a superiority complex.

That's all I had to say. Goodbye, and good luck finding a personality.

April 7

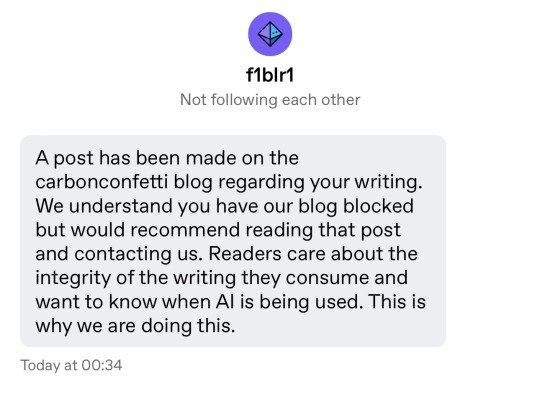

A few days after I posted the above post, you went on someone’s blog — someone who had sent me an ask without using the anon option — and sent them an ask about me, as if I had committed a crime. Less than 24 hours ago, you created yet another fake account just to message me (as seen below) and tell me about one of your other accounts (also fake), despite my explicit statement that I would no longer entertain this obsessive behavior.

Let me be extremely clear: I do not owe strangers on the internet an explanation for my writing process — especially not those who appoint themselves as investigators and issue condescending ultimatums. I will not “contact you privately.” I will not “own up” to a false narrative you've built around flawed tools and obsessive pattern-tracking. You do not get to demand private confessions like you're running a tribunal.

I already said everything I had to say when I made that original post, but clearly it didn’t register, and you continue to target me. I looked at the account you mentioned in your message. To quote: “Some members of the group of us working on this project have gone through PhD programs or work in education and understand the inaccuracies and limitations of AI detection tools.”

So you're adults — or so you claim — with PhDs, yet you seem to be unemployed based on the amount of free time you have to analyze what strangers are posting on the internet. Especially posts that are over 2k words long.

Seriously, who has time to do this much? Because I highly doubt someone with an actual job and a life has this much time on their hands.

And as I said in my first post: block me if you don’t like my blog or what I post. It is really that simple.

LEAVE. ME. ALONE.

24 notes


Text

Detect AI-generated text for free, simply and with high accuracy. AI content check, AI content detection tool, AI essay detector for teachers.

#check gpt#detect ai generated text#free ai detector#open ai detector#ai checker text#ai content detection tool#ai detection free#ai detect#ai detector chatgpt#check ai writing

0 notes

Text

"Major technology companies signed a pact on Friday to voluntarily adopt "reasonable precautions" to prevent artificial intelligence (AI) tools from being used to disrupt democratic elections around the world.

Executives from Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, and TikTok gathered at the Munich Security Conference to announce a new framework for how they respond to AI-generated deepfakes that deliberately trick voters.

Twelve other companies - including Elon Musk's X - are also signing on to the accord...

The accord is largely symbolic, but targets increasingly realistic AI-generated images, audio, and video "that deceptively fake or alter the appearance, voice, or actions of political candidates, election officials, and other key stakeholders in a democratic election, or that provide false information to voters about when, where, and how they can lawfully vote".

The companies aren't committing to ban or remove deepfakes. Instead, the accord outlines methods they will use to try to detect and label deceptive AI content when it is created or distributed on their platforms.

It notes the companies will share best practices and provide "swift and proportionate responses" when that content starts to spread.

Lack of binding requirements

The vagueness of the commitments and lack of any binding requirements likely helped win over a diverse swath of companies, but disappointed advocates were looking for stronger assurances.

"The language isn't quite as strong as one might have expected," said Rachel Orey, senior associate director of the Elections Project at the Bipartisan Policy Center.

"I think we should give credit where credit is due, and acknowledge that the companies do have a vested interest in their tools not being used to undermine free and fair elections. That said, it is voluntary, and we'll be keeping an eye on whether they follow through." ...

Several political leaders from Europe and the US also joined Friday’s announcement. European Commission Vice President Vera Jourova said while such an agreement can’t be comprehensive, "it contains very impactful and positive elements". ...

[The Accord and Where We're At]

The accord calls on platforms to "pay attention to context and in particular to safeguarding educational, documentary, artistic, satirical, and political expression".

It said the companies will focus on transparency to users about their policies and work to educate the public about how they can avoid falling for AI fakes.

Most companies have previously said they’re putting safeguards on their own generative AI tools that can manipulate images and sound, while also working to identify and label AI-generated content so that social media users know if what they’re seeing is real. But most of those proposed solutions haven't yet rolled out and the companies have faced pressure to do more.

That pressure is heightened in the US, where Congress has yet to pass laws regulating AI in politics, leaving companies to largely govern themselves.

The Federal Communications Commission recently confirmed AI-generated audio clips in robocalls are against the law [in the US], but that doesn't cover audio deepfakes when they circulate on social media or in campaign advertisements.

Many social media companies already have policies in place to deter deceptive posts about electoral processes - AI-generated or not...

[Signatories Include]

In addition to the companies that helped broker Friday's agreement, other signatories include chatbot developers Anthropic and Inflection AI; voice-clone startup ElevenLabs; chip designer Arm Holdings; security companies McAfee and TrendMicro; and Stability AI, known for making the image-generator Stable Diffusion.

Notably absent is another popular AI image-generator, Midjourney. The San Francisco-based startup didn't immediately respond to a request for comment on Friday.

The inclusion of X - not mentioned in an earlier announcement about the pending accord - was one of the surprises of Friday's agreement."

-via EuroNews, February 17, 2024

--

Note: No idea whether this will actually do much of anything (would love to hear from people with experience in this area on how significant this is), but I'll definitely take it. Some of these companies may even mean it! (X/Twitter almost definitely doesn't, though).

Still, like I said, I'll take it. Any significant move toward tech companies self-regulating AI is a good sign, as far as I'm concerned, especially a large-scale and international effort. Even if it's a "mostly symbolic" accord, the scale and prominence of this accord is encouraging, and it sets a precedent for further regulation to build on.

#ai#anti ai#deepfake#ai generated#elections#election interference#tech companies#big tech#good news#hope

148 notes


Text

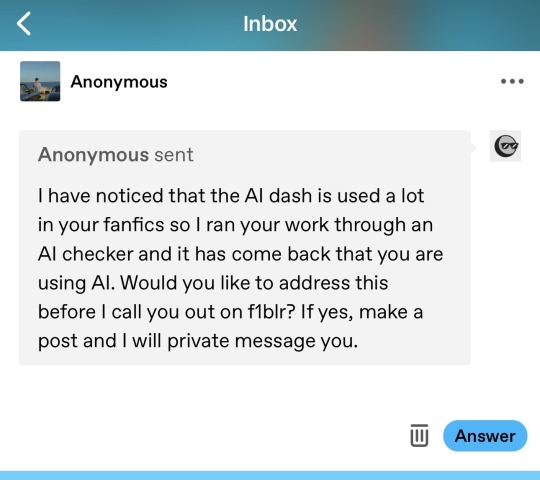

a personal post/reflection on ai use

some of you reading this may have seen the message i received accusing me of using ai to write my work. i wanted to take a moment to talk about how it made me feel and, more importantly, the impact that accusations like this can have on writers in general.

I won’t lie: seeing that message in my inbox, being told that the stories i spend hours writing aren't “real”, that my effort and creativity don't belong to me, was really disheartening. then i had to defend my own writing against these accusations and that wasn’t exactly fun. and while i know i shouldn’t let it get to me, the truth is that it does, because I'm a real person!

It’s made me overthink everything i write. I already reread my fics multiple times before posting, checking for flow, consistency, and coherence, but now, i find myself second-guessing every sentence. Does this sound too robotic? Is my phrasing too formal or too stiff? Or maybe it’s not polished enough? Maybe it's too polished. What if i accidentally repeat a word or structure a sentence in a way that someone deems “ai-like”? Will i be accused of this again?

I want to be clear also that this isn’t about seeking sympathy. I just feel it's important to remind people that fanfic writers are real people with real emotions. We write because we love it, because we want to share stories for others to enjoy for free. And yet, there are people out there who treat “spotting ai” like some kind of witch hunt, who feel entitled to send accusations to complete strangers without any basis for it.

And I don't say this to be elitist, but for some context, I have a master’s degree in computer science. I work in tech every day. I specialize in machine learning. When I say there is no reliable way to tell whether a passage of text was written by ai or a human, i'm not just making shit up. ai detection tools are completely unreliable. they give false positives all the time, and they are, quite frankly, complete bullshit.

And I get that there are legitimate concerns about ai-generated work in creative spaces, especially when it comes to art, writing, and other forms of expression that people put their hearts into. I have taken ethics courses in ai for this reason. I understand why people are wary, and i’m not saying that those concerns aren’t valid. But this is exactly why we should be mindful of how we engage with content. If you don’t like something, if you suspect it was ai-generated and that bothers you, the best thing you can do is simply not engage. don’t read it, don’t share it, don’t support it.

But going out of your way to harass people, to send accusations without evidence, to act like you’re some kind of ai-detecting authority is not just absurd, but it’s harmful to real people because you will inevitably get it wrong!!!

At the end of the day, this is fan fiction. no one is paying for this. no one is being scammed. so why do people act like they need to police something that’s supposed to be fun, creative, and freely shared? if you love stories written by real people, support those writers. but please, stop making this space even more stressful for the people who are already here, giving their time and creativity to share something they love.

And if you still think making accusations about people using AI for their writing is the correct and virtuous thing to do, I invite you to read this online thread of freelance writers discussing the legitimate harm that has come to their livelihood due to the false positives of ai detection tools and false accusations.

22 notes


Note

The usage of ChatGPT is crazyyy…

hello anon, i can assure you that i write all of my fics on my own, without the use of any generative ai. while i am aware that there are people who use ai to write or assist, please do not go around accusing people (on anon, as well) of utilizing ai to do something that they enjoy doing during their free time. real writing takes time and effort, and you are free to ask my friends @gojover or @admiringlove to vouch for me, as they have both seen my absolute mess of a notebook in which i research all sorts of topics for my fics.

i don't trust any online ai detection tools, as my friend and i have both put our work through multiple websites to see (prompted by this ask), and some of them say our work is almost entirely ai-generated, while others claim it is completely human, so. take it with a grain of salt.

18 notes
